Abstract

Prominent models of face perception posit that the encoding of social categories begins after initial structural encoding has completed. In contrast, we hypothesized that social category encoding may occur simultaneously with structural encoding. While event-related potentials were recorded, participants categorized the sex of sex-typical and sex-atypical faces. Results indicated that the face-sensitive right N170, a component involved in structural encoding, was larger for sex-typical relative to sex-atypical faces. Moreover, its amplitude predicted the efficiency of sex-category judgments. The right P1 component also peaked earlier for sex-typical faces. These findings show that social category encoding and the extraction of lower-level face information operate in parallel, suggesting that they may be accomplished by a single dynamic process rather than two separate mechanisms.

Keywords: face perception, N170, P1, person perception, social categorization, visual encoding

Introduction

People readily and rapidly glean a variety of information from the faces of others [1]. The perception of three categories in particular (sex, race, and age) has long been described as obligatory. The encoding of these categories is important because, once perceived, they trigger a variety of cognitive, affective, and behavioral consequences that shape our everyday interactions with others [1].

For decades, face perception research has been largely guided by the cognitive architecture outlined by Bruce and Young [2]. This model assumes that a structural encoding mechanism initially constructs a representation of the face's features and configuration. The outputs of structural encoding are then sent down two functionally independent pathways. One pathway identifies the target through recognition mechanisms, whereas a separate pathway processes facial expression, speech, and other 'visually derived semantic information', such as social categories.

Various aspects of this model have been supported by neural evidence. For instance, electrophysiological studies have identified a negative event-related potential (ERP) component that is highly sensitive to faces, peaks between 150 and 190 ms, and is distributed maximally over occipito-temporal cortex: the N170. Much of this research implicates the N170 in initial structural face encoding [3,4] but finds that it is insensitive to facial identity and familiarity [5], a dissociation predicted by Bruce and Young's model [2]. Surprisingly, however, little is known about the neural encoding of social category information. The assumption has been that this information is encoded only after representations from structural encoding have been built, and by a separate mechanism: the 'directed-visual encoding' module [2]. Thus, social category encoding should presumably take place after the N170 and be reflected by a qualitatively different process.

There are several reasons why we believe this assumption may not be warranted. Single-unit recordings in the macaque show that the transient response of face-sensitive neurons in temporal cortex initially reflects a rough, global discrimination of a visual stimulus as a face (rather than some other object or shape). Subsequent firing of this same neuronal population, however, appears to sharpen gradually over time as it comes to represent finer facial information, including identity and emotional expression [6]. This initial rough distinction between face and non-face stimuli in macaque temporal cortex likely corresponds to the early face-detection mechanism in humans that peaks approximately 100 ms after face presentation [7], roughly 70 ms before the structural encoding operations reflected by the N170 [3–5]. That the same neuronal population gradually transitions from processing global characteristics (e.g. face vs. non-face) to processing more complex information, such as identity and expression, is consistent with other evidence showing that representations in face-responsive neurons continuously sharpen across processing [8]. These findings open up the possibility that initial structural face encoding (indexed by the N170) and the encoding of finer, more complex information, such as social category cues, may actually be accomplished by a single temporally dynamic process [9–11], rather than by two functionally independent modules working in stages, as classically theorized [2]. Such a process would accumulate structural information and social category information in parallel rather than in sequential stages. Parallel encoding of this kind is consistent with recent accounts of face perception, which posit that multiple codes (e.g. sex, race, identity, expression) are extracted simultaneously in a common multidimensional face-coding system. In this system, the dissociation between codes emerges not from separate neural modules [2], but from statistical regularities inherent in the visual input itself, which are processed in parallel [11].

An earlier study found that the N170 was modulated by a face's race, with enlarged left N170s in response to White, relative to Black, faces [12]. However, this study and another [13] found no differential N170 responses to male versus female faces. Although these studies are valuable for understanding how the N170 responds to differences in low-level physical features, they are less informative about the N170's role in social category encoding (see Ref. [14]). That is, larger N170s in response to White versus Black faces could reflect visual differences intrinsic to the stimuli (e.g. skin tone and other race-specifying features) rather than true encoding of race-category information. Likewise, had N170 modulation been found for male versus female faces, it could also have reflected low-level physical properties of the stimuli (e.g. the coloring of male vs. female faces). Such physical confounds could easily influence the N170 or a temporally overlapping component [14].

In this study, we use a facial morphing technique to isolate sexually dimorphic content (the visual information that cues perception of sex category [15]) and unconfound it from all other physical information. ERPs involved in encoding sex category should be sensitive to the amount of sex-category content depicted in a face. Regardless of whether faces are male or female, those with more sex-category content should receive more sex-category processing. If the N170 indexes this processing, this would suggest that social category encoding could take place alongside initial structural face encoding.

Methods

Participants

Twenty-three healthy, right-handed volunteers participated in exchange for $20.

Stimuli

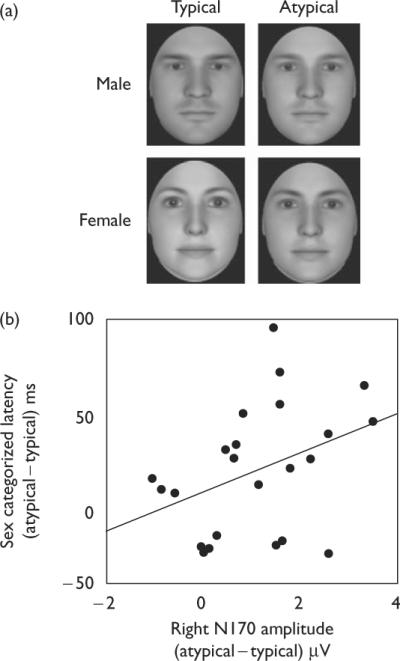

To morph faces along the sex dimension, we used three-dimensional face modeling software (FaceGen Modeler 3.1; Singular Inversions, Toronto, Ontario, Canada), which enables the semi-randomized generation of highly realistic face stimuli. This permitted manipulation of gendered face content while all other perceptual information was held constant. Fifty unique male faces were generated at the anthropometric male mean and 50 unique female faces at the anthropometric female mean; together, these composed the typical condition. Each typical face was then morphed to be approximately 25% less gendered (more androgynous), composing the atypical condition. Faces were shown in frontal view and cropped so as to preserve only the internal face (for sample stimuli, see Fig. 1a). This morphing technique also allowed us to control for low-level visual differences (e.g. brightness, intensity). For instance, male faces are on average darker than female faces, and this difference was reflected in our morphing algorithm [16]. Therefore, when a male face was made atypical, it became lighter; when a female face was made atypical, it became darker. Because the typical and atypical conditions each contained equal numbers of male and female faces, these opposing effects cancelled each other out. As such, the typical and atypical conditions were tightly controlled against extraneous low-level visual-evoked responses.
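The cancellation argument can be illustrated with a toy calculation. The sketch below is a minimal illustration, not FaceGen's algorithm: it assumes a hypothetical one-dimensional "sex axis" (+1 at the male mean, -1 at the female mean, 0 for an androgynous face) and a hypothetical linear link between that coordinate and mean luminance. Moving each face 25% of the way toward 0 lightens male faces and darkens female faces, yet the pooled condition means stay equal because both conditions contain 50 faces of each sex.

```python
from statistics import fmean

# Hypothetical 1-D "sex axis" (an illustrative assumption, not FaceGen's internal model):
# +1 = anthropometric male mean, -1 = anthropometric female mean, 0 = androgynous.
MALE_MEAN, FEMALE_MEAN, ANDROGYNOUS = 1.0, -1.0, 0.0


def morph_toward_androgyny(coord, fraction=0.25):
    """Move a sex-axis coordinate `fraction` of the way toward the androgynous point."""
    return coord + fraction * (ANDROGYNOUS - coord)


def luminance(coord, base=0.5, slope=0.05):
    """Hypothetical linear link in which more male-typical faces are darker."""
    return base - slope * coord


# 50 male and 50 female faces at their sex means; identity varies on other, unmodeled axes.
typical = [MALE_MEAN] * 50 + [FEMALE_MEAN] * 50
atypical = [morph_toward_androgyny(c) for c in typical]

lum_typ = [luminance(c) for c in typical]
lum_atyp = [luminance(c) for c in atypical]

print(fmean(lum_atyp[:50]) > fmean(lum_typ[:50]))      # male faces became lighter: True
print(fmean(lum_atyp[50:]) < fmean(lum_typ[50:]))      # female faces became darker: True
print(abs(fmean(lum_typ) - fmean(lum_atyp)) < 1e-9)    # pooled condition means match: True
```

The same cancellation holds for any attribute that varies linearly along the assumed axis; it would not be guaranteed if the condition contained unequal numbers of male and female faces.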

Fig. 1.

(a) Sample stimuli. (b) Each participant's difference score (atypical – typical) in sex-categorization latency plotted against his or her difference score (atypical – typical) in N170 amplitude. The more the N170 was enlarged for sex-typical (relative to sex-atypical) faces, the more efficiently sex-typical faces were categorized (relative to sex-atypical faces).

Behavioral procedure

Each of the 200 face stimuli was presented once, in one of four randomized orders, on a black background on a computer monitor placed in front of the participant. Participants were instructed to categorize faces by sex as quickly and accurately as possible using left versus right hand button presses (the assignment of male/female to left/right was counterbalanced). Participants were also told not to blink during the trial and to blink only when a message appeared instructing them to do so. Each trial began with a fixation cross presented for 800 ms, followed by a 500 ms interstimulus interval (ISI) of a blank screen. The face then appeared for 600 ms, followed by a 1000 ms ISI. Finally, a message prompting participants to blink appeared for 2000 ms. After a 300 ms ISI, the next trial began. Participants completed several practice trials before the experimental trials.
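The trial timing can be tallied with simple arithmetic. This sketch assumes that every event has the fixed duration listed (none is response-terminated) and ignores the practice trials; the event labels and the `schedule` helper are illustrative.

```python
# (event, duration in ms) in presentation order, as listed in the procedure.
TRIAL_EVENTS = [
    ("fixation cross", 800),
    ("blank ISI", 500),
    ("face", 600),
    ("blank ISI", 1000),
    ("blink prompt", 2000),
    ("blank ISI", 300),
]


def schedule(events):
    """Return (event, onset_ms, offset_ms) tuples relative to trial start."""
    out, t = [], 0
    for name, duration in events:
        out.append((name, t, t + duration))
        t += duration
    return out


timeline = schedule(TRIAL_EVENTS)
face_onset = next(on for name, on, _ in timeline if name == "face")
trial_ms = timeline[-1][2]
print(face_onset, trial_ms)               # 1300 5200
print(round(200 * trial_ms / 60000, 1))   # 17.3 (minutes for 200 experimental trials)
```

Under these assumptions, each face appears 1300 ms after trial onset, each trial lasts 5.2 s, and the 200 experimental trials take a little over 17 minutes.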

Electrophysiological recording and analysis

Participants were seated in a comfortable chair in a sound-attenuated, darkened room. An electro-cap with tin electrodes was used to record continuous EEG from 29 sites on the scalp, including sites over left and right fronto-polar (FP1/FP2), frontal (F3/F4, F7/F8), frontal-central (FC1/FC2, FC5/FC6), central (C3/C4), temporal (T5/T6, T3/T4), central-parietal (CP1/CP2, CP5/CP6), parietal (P3/P4), and occipital (O1/O2) areas, and five midline sites over the frontal pole (FPz), frontal (Fz), central (Cz), parietal (Pz), and occipital (Oz) areas. In addition, five electrodes were attached to the face and neck area: one below the left eye (to monitor vertical eye movements and blinks), one to the right of the right eye (to monitor horizontal eye movements), one each over the left and right mastoids, and one on the nose (reference). Electrode impedances were kept below 5 kΩ for scalp electrodes, 10 kΩ for eye and nose electrodes, and 2 kΩ for mastoid electrodes. The EEG was amplified by an SA Bioamplifier (SA Instruments, San Diego, California, USA) with a bandpass of 0.01–40 Hz and continuously sampled at 250 Hz. Averaged ERPs were calculated offline from trials free of ocular and muscular artifact, identified with an automatic detection algorithm: a trial was rejected if either the difference in activity between the electrodes directly below and above the left eye or the activity at the electrode directly to the right of the right eye exceeded 50 μV. The overall rejection rate was 17%. ERPs were time-locked to the onset of face stimuli and calculated relative to a 100 ms prestimulus baseline.
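The rejection and baseline steps can be sketched for a single channel in plain Python. Several details are assumptions rather than reported parameters: the epoch is taken to begin exactly 100 ms before face onset, the 50 μV criterion is applied to the absolute value at any sample (not peak-to-peak), and the helper names (`is_artifact`, `baseline_correct`, `average_erp`) are hypothetical.

```python
# Recording parameters from the text; the epoch layout is an assumption.
FS = 250           # sampling rate (Hz)
BASELINE_MS = 100  # prestimulus baseline (ms); epoch assumed to start here
THRESH_UV = 50.0   # ocular rejection threshold (μV)


def is_artifact(veog_below, veog_above, heog, thresh=THRESH_UV):
    """Assumed criterion: reject if |below - above| (vertical EOG) or |HEOG|
    exceeds thresh at any sample of the epoch."""
    veog = [b - a for b, a in zip(veog_below, veog_above)]
    return max(map(abs, veog)) > thresh or max(map(abs, heog)) > thresh


def baseline_correct(trace, fs=FS, baseline_ms=BASELINE_MS):
    """Subtract the mean of the prestimulus samples from every sample."""
    n_base = int(baseline_ms * fs / 1000)  # 25 samples at 250 Hz
    offset = sum(trace[:n_base]) / n_base
    return [x - offset for x in trace]


def average_erp(trials):
    """trials: list of (eeg, veog_below, veog_above, heog) sample lists for one
    channel and condition. Returns (baseline-corrected average, rejection rate)."""
    clean = [baseline_correct(eeg) for eeg, vb, va, ho in trials
             if not is_artifact(vb, va, ho)]
    if not clean:
        raise ValueError("every trial was rejected")
    erp = [sum(samples) / len(clean) for samples in zip(*clean)]
    return erp, (len(trials) - len(clean)) / len(trials)


# Synthetic example: 10 identical trials, one contaminated by a horizontal eye movement.
flat = [0.0] * 150
eeg = [2.0] * 25 + [5.0] * 125          # 2 μV before onset, 5 μV after
trials = [(eeg, flat, flat, flat) for _ in range(9)]
trials.append((eeg, flat, flat, [0.0] * 70 + [80.0] + [0.0] * 79))
erp, rate = average_erp(trials)
print(rate, erp[0], erp[100])           # 0.1 0.0 3.0
```

Subtracting each trial's baseline before averaging gives the same result as baseline-correcting the average, because both operations are linear; correcting per trial simply keeps individual epochs inspectable.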

Results

Behavioral data

Participants made fewer categorization errors for typical targets (mean = 3.3%) than for atypical targets (mean = 5.0%), a marginally significant difference [t(22) = 1.75, P = 0.09]. Incorrect trials were discarded from analysis. As expected, participants were also significantly faster to categorize the sex of typical targets (mean = 573 ms) than atypical targets (mean = 592 ms) [t(22) = 2.61, P < 0.05].
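A paired (within-participant) t-test on 23 participants has 22 degrees of freedom. The sketch below computes two-tailed p-values from the Student t density by Simpson's-rule integration, using only the Python standard library, so reported statistics can be checked; the numerical method is our choice for illustration, not the analysis software used in the study.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_coef = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    return math.exp(log_coef) / math.sqrt(df * math.pi) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t_value, df, steps=2000):
    """1 - 2 * integral of the t density over [0, |t|], via Simpson's rule
    (steps must be even)."""
    b = abs(t_value)
    h = b / steps
    s = t_pdf(0.0, df) + t_pdf(b, df)
    s += sum((4 if i % 2 else 2) * t_pdf(i * h, df) for i in range(1, steps))
    return 1 - 2 * s * h / 3


n_participants = 23
df = n_participants - 1                   # paired design: n - 1 df
print(round(two_tailed_p(1.75, 2), 3))    # 0.222
print(round(two_tailed_p(1.75, df), 3))   # between 0.09 and 0.10
```

For t = 1.75, the p-value falls between 0.09 and 0.10 with 22 df, matching the reported P = 0.09; with only 2 df it would be 2/9, or about 0.22.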

Event-related potential data

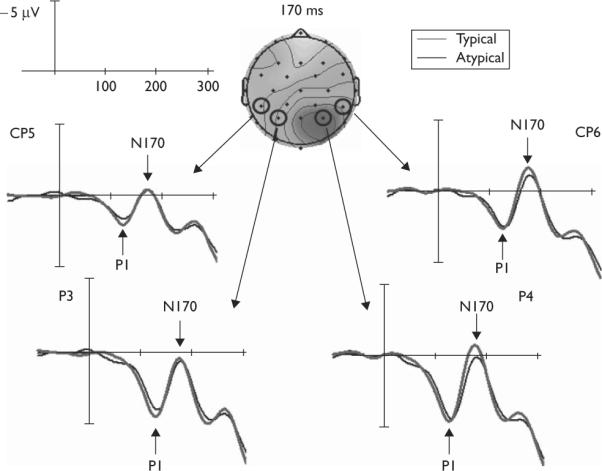

Given our a priori hypothesis of N170 involvement in social category encoding and the component's localization to occipito-temporal sites [3–5], we measured mean amplitudes in a 150–190 ms poststimulus window, averaged across left occipito-temporal sites (CP5/P3) and, separately, across right occipito-temporal sites (CP6/P4). The left-hemisphere N170 did not reliably differ between sex-typical (mean = 0.97 μV) and sex-atypical (mean = 1.09 μV) faces [t(22) = 0.29, P = 0.78]. The right-hemisphere N170, however, was significantly enlarged for sex-typical (mean = −0.81 μV) relative to sex-atypical faces (mean = 0.21 μV) [t(22) = 8.25, P < 0.000001]. Figure 2 depicts the grand-average waveforms for sex-typical and sex-atypical faces, along with a voltage map of the difference in ERPs between the two conditions. Voltage maps of the separate grand-average ERPs for sex-typical and sex-atypical faces (Fig. 3) show the canonical N170 topography reported in earlier face perception studies [3–5]. Peak latencies in this time window did not differ between sex-typical and sex-atypical faces [left: t(22) = 0.76, P = 0.45; right: t(22) < 0.01, P > 0.99].
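The window-mean measure can be sketched as follows. At 250 Hz each sample spans 4 ms, so with an epoch assumed to start at -100 ms the 150–190 ms window contains the ten samples from 152 to 188 ms. The dictionary-of-sites layout and the function names are hypothetical; `paired_t` is the standard paired t statistic on per-participant differences. Because the N170 is negative-going, "enlarged" corresponds to a more negative window mean.

```python
import math
from statistics import fmean, stdev

FS, EPOCH_START_MS = 250, -100  # assumed: epoch begins at the start of the 100 ms baseline


def sample_times(n_samples, fs=FS, start_ms=EPOCH_START_MS):
    """Latency (ms) of each sample relative to face onset."""
    return [start_ms + i * 1000 / fs for i in range(n_samples)]


def window_mean(erp, lo_ms, hi_ms):
    """Mean amplitude of one ERP trace over samples with lo_ms <= t <= hi_ms."""
    return fmean(v for t, v in zip(sample_times(len(erp)), erp) if lo_ms <= t <= hi_ms)


def cluster_mean(erps_by_site, sites, lo_ms=150, hi_ms=190):
    """Window mean averaged over a site cluster, e.g. ("CP6", "P4")."""
    return fmean(window_mean(erps_by_site[s], lo_ms, hi_ms) for s in sites)


def paired_t(cond_a, cond_b):
    """Paired t statistic and df from per-participant values in two conditions."""
    d = [a - b for a, b in zip(cond_a, cond_b)]
    return fmean(d) / (stdev(d) / math.sqrt(len(d))), len(d) - 1


# Synthetic check: a -2 μV deflection confined to the N170 window at CP6, flat at P4.
times = sample_times(150)
cp6 = [-2.0 if 150 <= t <= 190 else 0.0 for t in times]
print(cluster_mean({"CP6": cp6, "P4": [0.0] * 150}, ("CP6", "P4")))   # -1.0
```

In a full analysis, `cluster_mean` would be computed for each participant and condition, and the resulting typical and atypical lists passed to `paired_t`.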

Fig. 2.

Grand-average waveforms for typical and atypical faces at left and right occipito-temporal sites. Negative is plotted upward. A voltage map of normalized difference waves (typical – atypical) at 170 ms is also shown; darker coloring indicates greater negativity for typical faces.

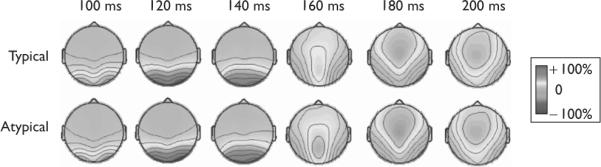

Fig. 3.

Voltage maps showing normalized grand-average event-related potential waves separately for typical and atypical targets. Note the canonical P1 (positivity between 100 and 140 ms) and N170 (negativity between 160 and 200 ms) topographies over bilateral occipito-temporal cortex in both conditions, and that the N170 is slightly stronger in the typical condition, but only in the right hemisphere.

If the N170's enlargement for sex-typical faces truly reflects encoding of sex-category content, its amplitude should be associated with sex-categorization latencies, because encoding should facilitate categorization. Specifically, more enlarged N170s in response to sex-typical (relative to sex-atypical) faces should be accompanied by faster categorizations of sex-typical (relative to sex-atypical) faces. For each participant, we calculated difference scores (atypical – typical) for right N170 amplitude (at site P4) and for response latency. As predicted, more enlarged N170s for sex-typical (relative to sex-atypical) faces were associated with more efficient sex categorizations of sex-typical (relative to sex-atypical) faces [r(21) = 0.37, P < 0.05 (one-tailed for directional hypothesis)], as shown in Fig. 1b.
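For the brain-behavior correlation, each participant contributes one pair of difference scores, which are correlated with Pearson's r. Under the null hypothesis of zero correlation, the sample r from n pairs has a density proportional to (1 − r²)^((n − 4)/2), so a one-tailed p-value can be obtained by integrating that density from the observed r to 1. The sketch below does this with Simpson's rule; it is an illustration under the usual bivariate-normal assumption, and the function names are hypothetical.

```python
import math
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_null_density(r, n):
    """Density of sample r from n pairs when the true correlation is 0 (n >= 4)."""
    log_beta = math.lgamma(0.5) + math.lgamma((n - 2) / 2) - math.lgamma((n - 1) / 2)
    return (1 - r * r) ** ((n - 4) / 2) / math.exp(log_beta)


def one_tailed_p(r, n, steps=2000):
    """P(R >= r) under the null, by Simpson's rule over [r, 1] (steps even)."""
    if n < 4:
        raise ValueError("need at least 4 pairs")
    h = (1 - r) / steps
    s = r_null_density(r, n) + r_null_density(1.0, n)
    s += sum((4 if i % 2 else 2) * r_null_density(r + i * h, n) for i in range(1, steps))
    return s * h / 3


# Difference scores (atypical - typical) for right N170 amplitude and for RT would be
# computed per participant and passed to pearson_r; here we only check the reported r.
print(round(one_tailed_p(0.37, 23), 3))   # about 0.04
```

With r = 0.37 and n = 23 (21 df), the one-tailed p-value is about 0.04, consistent with the reported P < 0.05; the corresponding two-tailed value would be roughly twice that.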

We also explored whether the earlier P1 component was modulated by sex typicality at occipito-temporal sites. Beyond its established role in low-level visuoperceptual processing, the P1 has recently been shown to play a role in global configural processing of the face, as its latency is modulated by configural transformations such as face inversion [17]. If social category encoding runs in parallel with the extraction of lower-level face information, as we argue, the P1 may also be sensitive to sex typicality. Mean amplitudes and peak latencies in a 100–140 ms poststimulus window were averaged across left occipito-temporal sites (CP5/P3) and, separately, across right occipito-temporal sites (CP6/P4). In the left hemisphere, neither P1 amplitude [t(22) = 1.00, P = 0.33] nor P1 latency [t(22) = 1.41, P = 0.17] was modulated by sex typicality. In the right hemisphere, P1 amplitude was also not modulated by sex typicality [t(22) = 0.93, P = 0.36]. However, the right-hemisphere P1 peaked earlier for typical faces (mean = 118 ms) than for atypical faces (mean = 126 ms) [t(22) = 2.40, P < 0.05].
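Peak latency can be sketched as the time of the most extreme sample of the expected polarity inside the measurement window. At 250 Hz the latency of any single waveform is quantized to 4 ms, so the 8 ms difference between the reported right-hemisphere P1 means spans two sample periods. The epoch start (-100 ms), the tie-breaking rule, and the function name are assumptions for illustration.

```python
def peak_latency(erp, lo_ms, hi_ms, polarity, fs=250, start_ms=-100):
    """Latency (ms) of the most positive (polarity=+1, e.g. P1) or most negative
    (polarity=-1, e.g. N170) sample with lo_ms <= t <= hi_ms; ties keep the earliest."""
    best_t, best_v = None, None
    for i, v in enumerate(erp):
        t = start_ms + i * 1000 / fs
        if lo_ms <= t <= hi_ms and (best_v is None or polarity * v > polarity * best_v):
            best_t, best_v = t, v
    if best_t is None:
        raise ValueError("measurement window lies outside the epoch")
    return best_t


# Synthetic waveform: a positive peak at 120 ms and a negative peak at 168 ms.
erp = [0.0] * 150
erp[55] = 3.0    # sample 55 -> -100 + 55 * 4 = 120 ms
erp[67] = -4.0   # sample 67 -> -100 + 67 * 4 = 168 ms
print(peak_latency(erp, 100, 140, +1))   # 120.0
print(peak_latency(erp, 150, 190, -1))   # 168.0
```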

Discussion

The right N170 was enlarged for faces containing more, relative to less, sex-category content, and its amplitude predicted sex-categorization efficiency. These results suggest that, beyond its established role in structural encoding [4], the N170 is also involved in encoding social category information. That social category processing spatially and temporally coincided with an index of early structural encoding (the N170) implies that processing from initial structural encoding does not feed forward to a subsequent social category encoding mechanism, as classically outlined by the Bruce and Young model [2]. Rather, the encoding of social categories may begin in parallel with structural encoding. These results raise the possibility that structural encoding and social category encoding are performed by a single dynamic process [9–11] rather than by two separate mechanisms. This would be consistent with the perspective that the face perceptual system is structured as a common multidimensional face space, in which various aspects of the face could be encoded simultaneously (e.g. [11,18]).

In addition to the right N170, the right occipito-temporal P1 peaked earlier for sex-typical relative to sex-atypical faces. This agrees with recent work showing that right P1 latency is modulated by configural face transformations (e.g. [17]). Because the P1 is thought to play a role in global configural face encoding [17], its sensitivity to sex category provides further support for the notion that social category encoding is part of a temporally dynamic process in which sex-category and lower-level face information are extracted across time and in parallel [9–11].

That sex-category encoding was lateralized to the right hemisphere, even though the N170 and P1 components themselves were present over both hemispheres (Figs 2 and 3), agrees with a large body of neuroimaging and psychophysiological work showing that face processing tends to be right-lateralized [19]. The present results point to the importance of neural processing in right occipito-temporal cortex between 100 and 190 ms for extracting social category information from the face.

Conclusion

In summary, we found that the right N170 and right P1 were sensitive to sex-category facial content, and that N170 amplitude predicted the efficiency of sex categorizations. This shows that social category encoding and the extraction of lower-level face information operate in parallel rather than in sequential stages.

Acknowledgements

This research was funded by a National Science Foundation research grant (BCS-0435547) and a National Institute of Child Health and Human Development research grant (HD25889).

References

1. Macrae CN, Bodenhausen GV. Social cognition: thinking categorically about others. Annu Rev Psychol. 2000;51:93–120. doi: 10.1146/annurev.psych.51.1.93.

2. Bruce V, Young AW. A theoretical perspective for understanding face recognition. Br J Psychol. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

3. Eimer M. Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clin Neurophysiol. 2000;111:694–705. doi: 10.1016/s1388-2457(99)00285-0.

4. Sagiv N, Bentin S. Structural encoding of human and schematic faces: holistic and part-based processes. J Cogn Neurosci. 2001;13:937–951. doi: 10.1162/089892901753165854.

5. Bentin S, Deouell LY. Structural encoding and identification in face processing: ERP evidence for separate mechanisms. Cogn Neuropsychol. 2000;17:35–54. doi: 10.1080/026432900380472.

6. Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

7. Liu J, Harris A, Kanwisher N. Stages of processing in face perception: an MEG study. Nat Neurosci. 2002;5:910–916. doi: 10.1038/nn909.

8. Rolls ET, Tovee MJ. Sparseness of the neuronal representation of stimuli in the primate temporal visual cortex. J Neurophysiol. 1995;73:713–726. doi: 10.1152/jn.1995.73.2.713.

9. Freeman JB, Ambady N, Rule NO, Johnson KL. Will a category cue attract you? Motor output reveals dynamic competition across person construal. J Exp Psychol Gen. 2008;137:673–690. doi: 10.1037/a0013875.

10. Valentin D, Abdi H, O'Toole AJ, Cottrell GW. Connectionist models of face processing: a survey. Pattern Recognit. 1994;27:1209–1230.

11. Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724.

12. Ito TA, Urland GR. The influence of processing objectives on the perception of faces: an ERP study of race and gender perception. Cogn Affect Behav Neurosci. 2005;5:21–36. doi: 10.3758/cabn.5.1.21.

13. Mouchetant-Rostaing Y, Giard MH, Bentin S, Aguera P, Pernier J. Neurophysiological correlates of face gender processing in humans. Eur J Neurosci. 2000;12:303–310. doi: 10.1046/j.1460-9568.2000.00888.x.

14. VanRullen R, Thorpe SJ. The time course of visual processing: from early perception to decision-making. J Cogn Neurosci. 2001;13:454–461. doi: 10.1162/08989290152001880.

15. Goshen-Gottstein Y, Ganel T. Repetition priming for familiar and unfamiliar faces in a sex-judgment task: evidence for a common route for the processing of sex and identity. J Exp Psychol Learn Mem Cogn. 2000;26:1198–1214. doi: 10.1037//0278-7393.26.5.1198.

16. Blanz V, Vetter T. A morphable model for the synthesis of 3D faces. SIGGRAPH '99. Los Angeles, California, USA: Association for Computing Machinery; 1999. pp. 187–194.

17. Boutsen L, Humphreys GW, Praamstra P, Warbrick T. Comparing neural correlates of configural processing in faces and objects: an ERP study of the Thatcher illusion. Neuroimage. 2006;32:352–367. doi: 10.1016/j.neuroimage.2006.03.023.

18. Tanaka J, Giles M, Kremen S, Simon V. Mapping attractor fields in face space: the atypicality bias in face recognition. Cognition. 1998;68:199–220. doi: 10.1016/s0010-0277(98)00048-1.

19. Mercure E, Dick F, Halit H, Kaufman J, Johnson MH. Differential lateralization for words and faces: category or psychophysics? J Cogn Neurosci. 2008;20:2070–2087. doi: 10.1162/jocn.2008.20137.

- 19.Mercure E, Dick F, Halit H, Kaufman J, Johnson MH. Differential lateralization for words and faces: category or psychophysics? J Cogn Neurosci. 2008;20:2070–2087. doi: 10.1162/jocn.2008.20137. [DOI] [PubMed] [Google Scholar]