Abstract

Objective. To develop, implement, and review a competence-assessment program to identify students at risk of underperforming at advanced pharmacy practice experience (APPE) sites and to facilitate remediation before they assume responsibility for patient care.

Design. As part of the standardized client program, pharmacy students were examined in realistic live client-encounter simulations. Authentic scenarios were developed, and actors were recruited and trained to portray clients so students could be examined solving multiple pharmacy problems. Evaluations of students were conducted in the broad areas of knowledge and live performance.

Assessment. Measurements included student-experience survey instruments used to evaluate case realism and challenge; videos used to determine the fidelity of standardized clients; and clerkship performance predictions used to identify students who required individual attention and improvement prior to clerkship courses.

Conclusions. The assessment program showed promise as a means of discriminating between students who are prepared for APPEs and those at risk for underperforming.

Keywords: assessment, performance, patient, advanced pharmacy practice experience, standardized client

INTRODUCTION

In 2007, the University of Kansas School of Pharmacy (KU) developed a live competence-assessment program using unabridged whole cases to assess the practice competence of students. It was developed to better address new Accreditation Council for Pharmacy Education (ACPE) standards, which were adopted in December 2006.1 Whereas knowledge of pharmaceutical science had long been the core of assessment plans in colleges and schools of pharmacy, the new standards emphasized translation into patient care. The assessment goal was to determine student competence in translating knowledge into practice and to identify students at risk of underperforming in clerkships. To accomplish these objectives, the program placed students in a series of authentic simulated live clinical encounters with standardized clients representing a broad spectrum of pharmacy care and measured their actions directly. A standardized client is a live actor trained to perform a specific client role repeatedly. The term client is used because many significant encounters are with people other than patients, such as physicians, nurses, and family members.

In-class role-plays, laboratory simulations, and objective structured clinical examinations (OSCEs) are among several methods for assessing clinical competence during performance, but these are more remote from actual practice than are whole-case live encounters. Epstein and Hundert suggest that the best examination settings for assessing competence would attempt to emulate real practice.2 In current literature, use of the term OSCE fails to distinguish between examinations using whole or discrete skill-based cases and does not require live performance. Schuwirth and colleagues concluded that competence decisions made using only discrete-skill OSCEs have limited psychometric value to assess competence in solving practice problems.3,4 This difference is analogous to assessing a student taking a sphygmomanometer blood pressure measurement on a partner in a laboratory exercise compared with assessing a student conducting an evaluation of a hypertensive patient who is fearful of the results in a medication therapy management clinic.5,6 Moreover, other researchers suggest that validity is increased when students are tested in realistic conditions.4,7 Variability and unpredictability of live examinations and intermittent availability of patients are limitations of testing with patients in real settings. These limits have given rise to the development of assessment methods in medical education using stand-in performers.8,9

Authentic whole-case live encounters using performers have proliferated in medical schools and licensing examinations since first being introduced in 1964.7,10-12 Colleges and schools of pharmacy have adapted and refined the innovation for use in performance-based assessment of pharmacy students.13,14 The more inclusive term standardized client was chosen for the Kansas program to accommodate a broader set of assessment scenarios. By the early 2000s, US and Canadian medical and Canadian pharmacy license examinations required candidates to complete simulated live clinical encounters.15-17 Advantages of whole-case live assessments are highlighted by 2 general bodies of evidence in educational research. First, clinical experience suggests that competence should be measured while candidates are attempting to solve clinical problems, not only when demonstrating discrete skills.2,18 Second, in higher education, whole cases provide valid measures of competency and also positively impact student learning.19-21 Messick and others suggest that learning is a consequence of assessment, and that an assessment program can be designed not only to measure competence but also to generate desirable outcomes.22-24 Whereas OSCE-style task assessments have been used historically to determine if specific skills have been mastered in KU pharmacy teaching laboratories, adding a live, whole-case standardized client program has afforded evaluation of problem solving, which is a higher-level composite set of these skills.

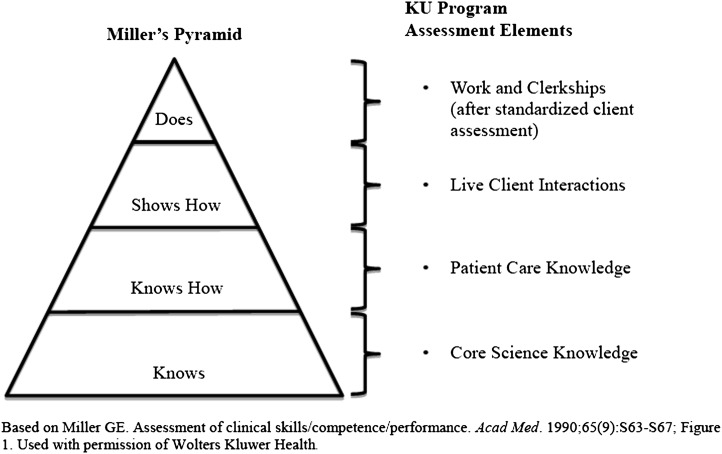

Assessments of both pharmacy knowledge and actions are combined in the KU program, which is illustrated with Miller’s Pyramid (Figure 1).25 Starting from the pyramid base, “knows” and “knows how” are represented by computer-based examinations of case-specific knowledge of the drugs, disease states, and practice knowledge required in each case given immediately before and after a live encounter. Next, “shows how” is represented by actual case-specific student performance. Standardized client observations are documented immediately after the encounter to assess the student’s pharmacy actions and communication. The entire examination up to this point is constructed to represent the most realistic, valid, and reliable encounters achievable within the school environment where the assessment can be adequately controlled. “Does” is later assessed in actual practice settings. In-school performance examinations supplement and expand the school’s pharmacy practice experience assessments and sample competence in all students on a more equal and reliable basis across a spectrum representative of common practice. Such evaluations have been demonstrated to achieve reliability sufficient to be used for high-stakes decisions on medical student progression.26 By assessing students’ competence in live cases early in their school career, there can be time and resources for remediation.2

Figure 1.

Miller’s Pyramid applied to the Kansas School of Pharmacy Standardized Client Program.

DESIGN

The University of Kansas School of Pharmacy provides rigorous professional training founded on pharmaceutical disciplines. Whereas assessment activity has focused on traditional multiple-choice assessments of knowledge, which are well-established as a measure of important pharmacy school outcomes, adding live performance examinations required the expansion of assessment activity. Van der Vleuten and colleagues report strong documentation in the performance-assessment literature that competence is specific, not generic, and thus, must be assessed in significant contexts.27

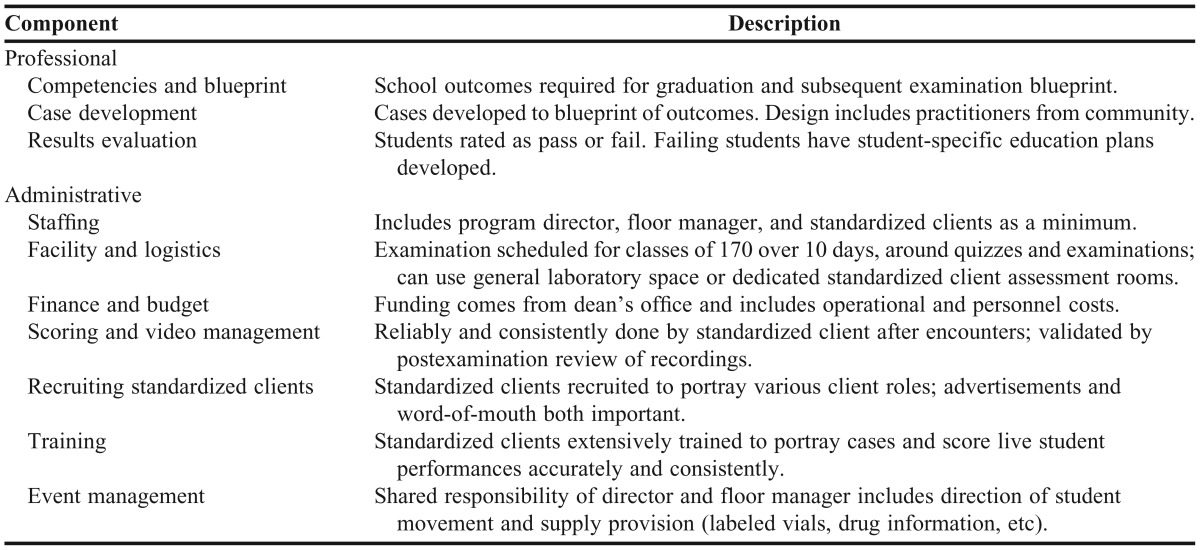

The University of Kansas School of Pharmacy created a program with 10 discrete critical components that can be seen as separate parts of work, each of which is subject to review and adjustment. There are 3 professional components requiring experienced pharmacists and pharmacy faculty members, and 7 administrative components requiring a standardized-client methodologist (Table 1).

Table 1.

Ten Essential Components of Standardized Client Program Development and Delivery

Professional Components

The school’s educational outcomes, within ACPE accreditation standards, were used as the examination framework, which was known as a blueprint.26,28 The KU blueprint was developed to ensure that performance would be sampled in the school’s significant outcome domains and that it would represent practice settings in proportion to historical graduate placement (75% community:25% health system) and practice activity classifications.29,30 For the pilot examination in 2007, 2 cases were designed by staff members along with 2 faculty members, and used for the class of 2008 (N=103). Students counseled a distracted mother (community setting) and resolved an overlooked allergy with a “grumpy” doctor (institutional setting). Four cases were used for each examination in subsequent years.

Cases were designed to place students in specific and standard situations in which successful resolution of pharmacy problems depended on the display of professional competence at the student’s current training level. Each case consisted of a scenario and an assessment. The scenario captured pharmacy problems so they could be represented in encounters between the student-as-pharmacist and the standardized client. The assessment used checklists to capture data on the specific pharmacist actions and quality of communication needed to resolve the case. For example, in the 2 pilot cases, the standardized client documented after each encounter whether the student “asked the mother to demonstrate understanding of antibiotic prescription counseling” or “identified the glucose control issue to the physician before suggesting a solution.” Actions were to be objectively and precisely stated so they could be reliably observed and accurately recorded by nonpharmacist standardized clients.31 A communication scale common to all cases was adapted to assess communication32,33; standardized clients have been shown to rate student communication reliably.34,35 The pilot cases required an inordinate amount of time, highlighting the need for additional help in framing more cases.

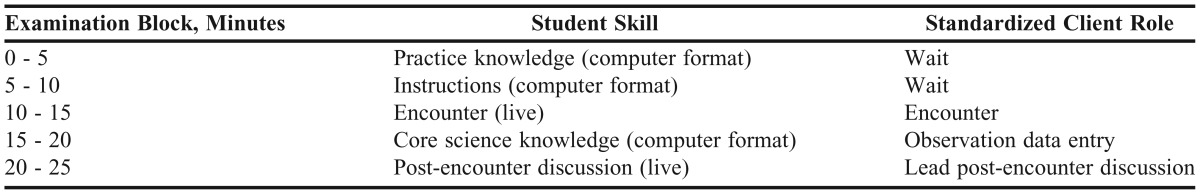

Four hundred fifty-seven of the school’s preceptors were invited to volunteer for single-day collaborative workgroup sessions. Over a 4-year period, the 26 (6%) preceptors who were able to attend 1 or more of the 10 scheduled workgroups per year developed 24 cases. Each case was framed in 5 segments: (1) problems and scenario; (2) pharmacist and standardized client actions; (3) review and assessment; (4) item standards; and (5) case standards. Because student performance in standardized client cases might be limited by knowledge of the science and appropriate practice actions to resolve the problems, case-specific measurement of knowledge was also developed as part of each case. Practice knowledge and core science knowledge questions specific to each case were written by school faculty members and included before and after the standardized client encounter (Table 1).

Knowledge-based results from core science and practice-knowledge domains, and performance-based results from action and communication domains were reported from each examination. The minimal competence cut point was the average of each of the student’s case scores by domain. Cut points were set as part of case development, with linear regression statistics on judgments made about whole student performances using defensible methods based on those described by Boulet and colleagues.36,37 The judgments came from case framers who classified representative checklists of student performances as “qualified” or “unqualified” at the student’s grade level. Judging for a communication cut point was made by standardized clients during the examination using the same statistics.
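The cut-point logic described above can be illustrated with a minimal sketch. It assumes one simple examinee-centered variant: judges' binary classifications (1 = qualified, 0 = unqualified) are regressed on checklist scores by ordinary least squares, and the cut point is taken as the score at which the fitted line crosses 0.5. The function names, the 0.5 crossing rule, and the sample data are hypothetical illustrations and are not the procedure or data used in the KU program.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def cut_point(scores, judgments, threshold=0.5):
    """Score at which the fitted judgment line crosses `threshold`.

    The 0.5 crossing rule is an assumption made for this sketch.
    """
    intercept, slope = fit_line(scores, judgments)
    return (threshold - intercept) / slope


def is_qualified(case_scores, cut):
    """Compare a student's average case score in one domain with the cut point."""
    return mean(case_scores) >= cut


# Hypothetical judged performances: percentage score and judge classification.
scores = [40, 50, 60, 70, 80, 90]
judgments = [0, 0, 0, 1, 1, 1]  # 1 = "qualified", 0 = "unqualified"

cut = cut_point(scores, judgments)
print(round(cut, 1))                         # 65.0
print(is_qualified([70, 60, 75, 65], cut))   # True  (average 67.5)
print(is_qualified([50, 60, 70, 55], cut))   # False (average 58.75)
```

In this sketch a student's 4 case scores in a domain are averaged and compared with a single domain cut point, which mirrors the per-domain reporting described in the text.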

By 2 weeks after the examination, an individual report was sent to each student with the percentage of all points achieved in each domain, the class average, and whether there was a qualified demonstration. Students who failed in 1 domain were asked to create an individual educational plan with the associate dean for academic affairs for improvement based on their practice opportunities. Individual results were also reported to the school’s assessment committee. Composite results from each student’s 3 examinations across 3 years served as a threshold of readiness to begin advanced pharmacy practice experiences (APPEs) and were used to inform the school’s curriculum committee.

Administrative Components

Management of the new standardized client program was determined to be best provided by a director without other substantial responsibilities.38 One additional part-time staff member was added to the KU program after the pilot to manage student flow and place and remove case-specific documents and props during examinations. Standardized clients were considered temporary employees of the university but could be recruited to fill recurring roles.

Dedicated space that was configured so examinees did not overhear each other was a requirement on examination days. The space could be either program-managed or shared. Examinations for the 3 grade levels at KU were spread out to maximize use of space, staff, and ideal times for students. After the pilot year, each examination consisted of 4 cases for each student administered simultaneously to a wave of 4 students. This required forty-three 105-minute waves for the class of 170 students. Given that 4 waves took one 8-hour workday, the entire examination required 11 days. The first 9 examinations in the KU program were staged in shared pharmacy skills laboratory space and moved to a program-dedicated newly constructed space that opened in 2010. Program staff salary made up the majority of the program’s expense after initial fixed costs for supplies and equipment, such as cameras and storage media. Program financing came from a central administrative source (dean’s office) since the examination measures programmatic student outcomes.
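The scheduling figures above follow from simple ceiling arithmetic, sketched below. The function name and parameter names are hypothetical; the inputs (170 students, 4 students per wave, 4 waves per workday, 105 minutes per wave) are taken from the text.

```python
import math


def exam_schedule(students, students_per_wave, waves_per_day):
    """Return (waves, days) needed to examine every student once."""
    waves = math.ceil(students / students_per_wave)
    days = math.ceil(waves / waves_per_day)
    return waves, days


waves, days = exam_schedule(students=170, students_per_wave=4, waves_per_day=4)
minutes_per_day = 4 * 105  # 4 waves of 105 minutes each

print(waves)            # 43
print(days)             # 11
print(minutes_per_day)  # 420 minutes, which fits within an 8-hour workday
```

Because 170 is not a multiple of 4, the last wave is only partly filled, which is why 43 rather than 42 waves are needed.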

Before each encounter, students completed the computer-aided knowledge assessment; after each encounter, students completed the core science assessment and the standardized client entered observations of pharmacist actions and communication responses (Table 2). Scores were expressed as percentages of possible points. Data for these scores were retained long-term in a Web-based database developed within the school and were used to create the reports previously described. Quality assurance studies were conducted on each standardized client in each examination. A sample of video recordings was observed and used to assess the quality of case presentation and student scoring by standardized clients and to train standardized clients for future uses of the same case. Video data were also retained as a long-term record of examination results.

Table 2.

Five Blocks of the Standardized Client Examination

The standardized client program needed to find people who could faithfully represent the assigned client profile while observing student performance accurately, lead student discussions, and be willing to work only 2 weeks annually. The program sought qualified people who were interested in this educational work and had control over their occupational life and personal time. Standardized clients serve well as recruiters because they often know people who have similar interests. To begin recruitment for our new program, an advertisement was placed in a news section of the local newspaper seeking “itinerant educators,” which yielded an abundant supply of qualified applicants who were satisfied to work once a year. Occasional advertisements of this type along with a recruitment pool from the university’s human resources Web site produced over 30 qualified standardized clients in 3 years.

Training for standardized clients involved teaching them to realistically and consistently represent their respective cases, simultaneously observe and reliably document student performance, and lead post-encounter discussions. They were trained at the school using video recordings from previous examinations and repetitious practice with staff members who role-played students for new cases. The typical training time for a standardized client was 10 hours over 4 sessions; less training time was needed for returning standardized clients. A floor manager was needed during examinations to direct students and to stage case-specific details, such as placement and removal of prescriptions, vials, and other dated medication records for as many as 100 encounters in a workday. The director provided floor management for the pilot examination but later transitioned this responsibility to an administrative support staff person, while remaining available as a backup. Other management duties for this support person included scheduling students for the examination, labeling their video files, and role-playing students in standardized client training.

EVALUATION AND ASSESSMENT

Fifteen assessment examinations covering students in their first through third years of the doctor of pharmacy (PharmD) program have been conducted to date, producing more than 1,300 student assessments and 5,000 discrete case encounters. The program was evaluated using 4 metrics. For the first metric (student satisfaction), over 90% of students in all 15 examinations agreed that the “case portrayals were realistic” and “the exam challenged me to think critically.” For the second metric (case performance fidelity), a 10% sample of video recordings of each standardized client’s work was reviewed to confirm that case portrayal was consistent across students; over 90% of the case-specific standardized client performance targets were performed in the sampled recordings.

Another metric was to assess how accurately each standardized client had observed and documented what students did in the encounters. In the first examination, the standardized clients averaged 83% accuracy and in the 14 subsequent examinations, they averaged 95% when yes/no responses were compared for agreement with independent observers in the sample review of video recordings. In the final metric, student scores were used to identify individual students at risk of performing poorly in APPEs. Students who scored below passing on each examination domain were compared with students whose performance raised academic concerns during the experiences. Academic concern was defined as any issue requiring a pharmacy practice site intervention by the APPE director after student performance or behavior had been questioned by preceptors. Five out of 10 students in the class of 2012 who experienced notable difficulties in APPEs had also failed to establish competence in 1 or more domains in the last of their 3 examinations administered in the month before they started their APPEs. Moreover, 4 of those 5 had also been identified 2 years earlier in their first examination.
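The two calculations described above, observation accuracy and the share of students with APPE difficulties who had been flagged beforehand, can be sketched as follows. The function names, checklist responses, and student identifiers are hypothetical; only the 5-of-10 proportion comes from the text.

```python
def percent_agreement(client_marks, observer_marks):
    """Percentage of checklist items on which two raters gave the same response."""
    if len(client_marks) != len(observer_marks):
        raise ValueError("both raters must score the same items")
    if not client_marks:
        raise ValueError("at least one item is required")
    matches = sum(c == o for c, o in zip(client_marks, observer_marks))
    return 100.0 * matches / len(client_marks)


def share_flagged(difficulty_ids, flagged_ids):
    """Fraction of students with APPE difficulties who were flagged by the examination."""
    difficulty_ids = set(difficulty_ids)
    return len(difficulty_ids & set(flagged_ids)) / len(difficulty_ids)


# Hypothetical yes/no checklist responses for 20 items in one video sample.
client = ["yes"] * 20
observer = ["yes"] * 19 + ["no"]
print(percent_agreement(client, observer))  # 95.0

# Hypothetical identifiers: 10 students with APPE difficulties, 5 of them flagged.
difficulties = {f"s{i:02d}" for i in range(1, 11)}
flagged = {"s01", "s02", "s03", "s04", "s05", "s21", "s22"}
print(share_flagged(difficulties, flagged))  # 0.5
```

Simple percent agreement does not correct for agreement expected by chance; a chance-corrected statistic would be a different design choice from the one reported in the text.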

DISCUSSION

Student reports of high satisfaction with case realism and challenge following their participation in the KU program suggest that the students experienced meaningful simulation of the practice environment. Students may have taken the simulations seriously because they were being assessed, because the scenarios closely approximated professional encounters seen at clerk/technician sites, and because the simulation examinations had been described in practice classes and discussed by peers. Validity of the examination used to assess competence is also supported by high measures of standardized client case performance fidelity and observation accuracy. For fidelity, a case was required to have been presented in essentially the same way to each student to enable a fair comparison of resultant scores with an a priori standard, the cut point. Potential variation in how the cases were portrayed was quantified and reported so it could be determined if students were assessed with “the same case.” For accuracy, documentation of what students did during encounters relied on high levels of agreement between the observations of the standardized clients and independent observers. Finally, validity was supported by the identification of students with low APPE performance: 5 of the 10 students in the class of 2012 who experienced notable difficulties in APPEs had failed 1 or more examination domains beforehand. Identifying at-risk students was attempted only anecdotally prior to the assessment of performance in this program. The Associate Dean for Academic Affairs historically had to wait for APPE grades to identify at-risk experiential students, which was too late for remediation prior to on-time graduation. However, now with earlier standardized client examination data, improvement plans can be implemented prior to APPEs to minimize failures and contribute to maintaining and improving preceptor relations.

Standardized clients are a resource of exceptional value to the University of Kansas School of Pharmacy because they assess skills previously unevaluated during the professional training program. As an added value, appropriately trained standardized clients can also serve as capable educators in reflective discussions after the encounters. This dual purpose creates value for both students and faculty members. One examination in which standardized clients observe, perform, and lead students in discussion can be replicated for multiple students with minimal additional time investment. Early identification of the students who might encounter difficulties later in APPEs leaves room for educational remediation that can be delivered efficiently and individually for the few who need it.

Consistent financing is a key to the longevity of any program. Colleges and schools at which examinations develop within a department because of the passion of a single faculty member may struggle for ongoing resources. Central school financing fits well with central authority for assigning high stakes to the examination. Although tempting from a budget perspective, attempting to use faculty for program direction risks creating role conflicts with teaching, service, and scholarship. The director of the KU program came from a standardized patient program in a medical school. The authors suggest that an experienced standardized client methodologist working as dedicated staff or with an outside training consultant is a requirement for success in starting such a broad program.

A drawback in the present program is that it establishes only a pass/fail threshold and is not designed or powered to determine levels of excellence. It is not well-suited for the calculation of scores that translate to letter grades but instead is designed to determine who has reached the minimum threshold of preparation to begin practice experiences. Another possible limitation is that the design and operation of the program require significant effort, dedicated personnel, and a substantial commitment of resources. At KU, the prediction of APPE failure was considered important enough to justify the administration initiating and continuing to invest in the program. The time commitment required will continue to be reviewed and streamlined to ensure extended viability and success.

SUMMARY

By using independent, highly trained standardized clients and a careful case-development process involving varied stakeholders, the program can be used to assess students performing as pharmacists in practice settings similar to those they are likely to experience in their careers. We were able to predict a substantial percentage of the students likely to perform poorly in practice experiences. The authors believe that this program will ultimately improve the quality of pharmacy care provided by our graduates by identifying and improving students not progressing adequately. The KU School of Pharmacy standardized client assessment program was designed to be portable to other professional schools and is being considered for use by others.

ACKNOWLEDGEMENTS

This program was funded in part by a generous gift from Wyeth Pharmaceuticals.

REFERENCES

- 1. Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree. Effective July 1, 2007. http://www.acpe-accredit.org/deans/standards.asp. Accessed October 25, 2011.

- 2. Epstein RM, Hundert EM. Defining and assessing professional competence. J Am Med Assoc. 2002;287(2):226–235. doi: 10.1001/jama.287.2.226.

- 3. Schuwirth LWT, van der Vleuten CPM. A plea for new psychometric models in educational assessment. Med Educ. 2006;40(4):296–300. doi: 10.1111/j.1365-2929.2006.02405.x.

- 4. Frederiksen JR, White BY. Designing assessments for instruction and accountability: an application of validity theory to assessing scientific inquiry. Yearb Natl Soc Study Educ. 2004;103(2):74–104.

- 5. Harden RM, Gleeson FA. Assessment of clinical competence using an objective structured clinical examination (OSCE). Med Educ. 1979;13(1):41–54.

- 6. Newble D. Techniques for measuring clinical competence: objective structured clinical examinations. Med Educ. 2004;38(2):199–203. doi: 10.1111/j.1365-2923.2004.01755.x.

- 7. Frederiksen N. The real test bias: influences of testing on teaching and learning. Am Psychol. 1984;39(3):193–202.

- 8. Epstein RM. Assessment in medical education. N Engl J Med. 2007;356(4):387–396. doi: 10.1056/NEJMra054784.

- 9. Schuwirth LWT, van der Vleuten CPM. The use of clinical simulations in assessment. Med Educ. 2003;37(Suppl. 1):65–71. doi: 10.1046/j.1365-2923.37.s1.8.x.

- 10. Barrows HS, Abrahamson S. The programmed patient: a technique for appraising student performance in clinical neurology. J Med Educ. 1964;39(8):802–805.

- 11. Barrows HS. An overview of the uses of standardized patients for teaching and evaluating clinical skills. Acad Med. 1993;68(6):443–451. doi: 10.1097/00001888-199306000-00002.

- 12. Petrusa ER. Current challenges and future opportunities for simulation in high-stakes assessment. Simul Healthc. 2009;4(1):3–5. doi: 10.1097/SIH.0b013e3181992077.

- 13. Austin Z, O’Byrne C, Pugsley J, Munoz LQ. Development and validation processes for an objective structured clinical examination (OSCE) for entry-to-practice certification in pharmacy: the Canadian Experience. Am J Pharm Educ. 2003;67(3):Article 76.

- 14. Sturpe DA. Objective structured clinical examinations in doctor of pharmacy programs in the United States. Am J Pharm Educ. 2010;74(8):Article 148. doi: 10.5688/aj7408148.

- 15. United States Medical Licensing Examination. Step 2 Clinical Skills Bulletin of Information. 2010. http://www.usmle.org/pdfs/bulletin/2012bulletin.pdf. Accessed October 25, 2011.

- 16. The Pharmacy Examining Board of Canada. General information. http://www.pebc.ca/index.php/ci_id/3146/la_id/1.htm. Accessed October 25, 2011.

- 17. Medical Council of Canada. Qualifying examination Part II. www.mcc.ca/en/exams/qe2. Accessed October 25, 2011.

- 18. Hodges B. Medical education and the maintenance of incompetence. Med Teach. 2006;28(8):690–696. doi: 10.1080/01421590601102964.

- 19. Baylor AL, Kitsantas A. A comparative analysis and validation of instructivist and constructivist self-reflective tools (IPSRT and CPSRT) for novice instructional planners. J Technol Teach Educ. 2005;13(3):433–457.

- 20. Black P, Wiliam D. Inside the black box. Phi Delta Kappan. 1998;80(2):139–144.

- 21. Yorke M. The management of assessment in higher education. Assess Eval Higher Educ. 1998;23(2):101–116.

- 22. Messick S. The interplay of evidence and consequences in the validation of performance assessments. Educ Res. 1994;23(2):13–23.

- 23. van der Vleuten C. Validity of final examinations in undergraduate medical training. Br Med J. 2000;321(7270):1217–1219. doi: 10.1136/bmj.321.7270.1217.

- 24. Crooks TJ. The impact of classroom evaluation practices on students. Rev Educ Res. 1988;58(4):438–481.

- 25. Miller GE. Assessment of clinical skills/competence/performance. Acad Med. 1990;65(9):S63–S67. doi: 10.1097/00001888-199009000-00045.

- 26. Wass V, van der Vleuten CPM, Shatzer J, Jones R. Assessment of clinical competence. Lancet. 2001;357(9260):945–949. doi: 10.1016/S0140-6736(00)04221-5.

- 27. Van der Vleuten CPM, Schuwirth LWT, Scheele E, Driessen EW, Hodges B. The assessment of professional competence: building blocks for theory development. Best Pract Res Clin Obstet Gynaecol. 2010;24(6):703–719. doi: 10.1016/j.bpobgyn.2010.04.001.

- 28. Van der Vleuten CPM. The assessment of professional competence: developments, research and practical implications. Adv Health Sci Educ. 1996;1(1):41–67. doi: 10.1007/BF00596229.

- 29. The American Pharmacist Association. Pharmacy Practice activity classification Version 1.0. 1998. www.pharmacist.com/sites/default/files/pharmacy_practice_activity_classification.pdf Accessed December 13, 2012.

- 30. Council on Credentialing in Pharmacy. Scope of contemporary pharmacy practice: roles, responsibilities, and functions of pharmacists and pharmacy technicians. J Am Pharm Assoc. 2010;50(2):e35–e69. doi: 10.1331/JAPhA.2010.10510.

- 31. Gorter S, Rethans J, Scherpbier A, et al. Developing case-specific checklists for standardized patient-based assessments in internal medicine: a review of the literature. Acad Med. 2000;75(11):1130–1137. doi: 10.1097/00001888-200011000-00022.

- 32. Hodges B, McIlroy JH. Analytic global OSCE ratings are sensitive to level of training. Med Educ. 2003;37(11):1012–1016. doi: 10.1046/j.1365-2923.2003.01674.x.

- 33. Austin Z, Dolovich L, Lau E, Tabak D, Sellors C, Marini A, Kennie N. Teaching and assessing primary care skills: the family practice simulator model. Am J Pharm Educ. 2005;69(4):Article 68.

- 34. Feely TH, Manyon AT, Servoss TS, Panzarella KJ. Toward validation of an assessment tool designed to measure medical students’ integration of scientific knowledge and clinical communication skills. Eval Health Prof. 2003;26(2):222–233. doi: 10.1177/0163278703026002006.

- 35. McLaughlin K, Gregor L, Jones A, et al. Can standardized patients replace physicians as OSCE examiners? BMC Med Educ. 2006;6(12):1–5. doi: 10.1186/1472-6920-6-12.

- 36. Boulet JR, Murray D, Kras J, Woodhouse J. Setting performance standards for mannequin-based acute care scenarios: an examinee-centered approach. Simul Healthc. 2008;3(2):72–81. doi: 10.1097/SIH.0b013e31816e39e2.

- 37. Boulet JR, DeChamplain AF, Mckinley DW. Setting defensible performance standards on OSCEs and standardized patient examinations. Med Teach. 2003;25(3):245–249. doi: 10.1080/0142159031000100274.

- 38. Adamo G. Simulated and standardized patients in OSCEs: achievements and challenges 1992-2003. Med Teach. 2003;25(3):262–270. doi: 10.1080/0142159031000100300.