Abstract

Introduction

The CTSA Community Engagement Consultative Service (CECS) is a national partnership designed to improve community engaged research (CEnR) through expert consultation. This report assesses the feasibility of CECS and presents findings from 2008 to 2009.

Methodology

A coordinating center and five regional coordinating sites managed the service. CTSAs identified a primary previsit CE best practice for consultants to address and completed self‐assessments, postvisit evaluations, and action plans. Feasibility was assessed as the percent of CTSAs participating and completing evaluations. Frequencies were calculated for evaluation responses.

Results

Of the 38 CTSAs, 36 (95%) completed a self‐assessment. Of these 36 sites, 83%, 53%, and 44% completed a consultant visit, evaluation, and action plan, respectively, and 56% of the consultants completed an evaluation. The most common best practice identified previsit was improvement in CEnR (addressing outcomes that matter); however, relationship building with communities was most commonly addressed during consulting visits. Although 90% of the consultants were very confident sites could develop an action plan, only 35% were very confident in the CTSAs’ abilities to implement one.

Conclusions

Academic medical centers interested in collaborating with communities and translating research to improve health need to further develop their capacity for CE and CEnR within their institutions. Clin Trans Sci 2013; Volume 6: 34–39

Keywords: prevention, translational research, ethics

Introduction

Skills and knowledge relating to community engagement (CE) and community engaged research (CEnR), including Community Based Participatory Research (CBPR), are increasingly in demand at U.S. academic medical centers (AMCs). Institutions within the Clinical and Translational Science Award (CTSA) Consortium, a key National Institutes of Health Roadmap initiative, recognize CE and CEnR as essential components of translational medicine.1 Institutions conducting research collaboratively with communities demonstrate improved success in identifying relevant problems and formulating adaptable, practical solutions.2 This report describes the work of the Community Engagement Consultative Service (CECS), a CTSA‐funded project designed to provide expert CE consultations for AMCs within the Consortium. An evaluation assessed the implementation process, participation rates, service process measures, service satisfaction, and lessons learned. This evaluation contributes to the national effort to develop AMCs’ CE and CEnR capacity.

Evolution of the CECS concept

CE is “a process of inclusive participation that supports mutual respect of values, strategies, and actions for authentic partnership of people affiliated with or self‐identified by geographic proximity, special interest, or similar situations to address issues affecting the well‐being of the community of focus.”3 CE depends on authentic, mutually beneficial partnerships to enhance and improve the research process. It makes translational research possible by helping researchers better understand community priorities.4, 5 CEnR involves many types of research with the common goal to strengthen the capacity to solve health challenges and address health disparities. The CECS project was funded to help researchers and institutions develop the knowledge, skills, and attitudes to successfully engage with internal and external groups and communities.2, 6, 7

In 2008, the Consortium’s CE Key Function Committee (KFC), in conjunction with the Association for Prevention Teaching and Research, convened a series of regional workshops to explore CE Best Practices. A 2009 monograph, “Researchers and Their Communities: The Challenge of Meaningful Community Engagement,” summarized each workshop and articulated a series of “Best Practices.”8 Overall, participating AMCs found the workshops and monograph useful and thought‐provoking, with researchers expressing a need to act on the ideas and develop new capacities and expertise for CE. The CTSA’s Steering Committee established the CECS to help sites share CE expertise and further develop CE capacity and its contribution to translational medicine.

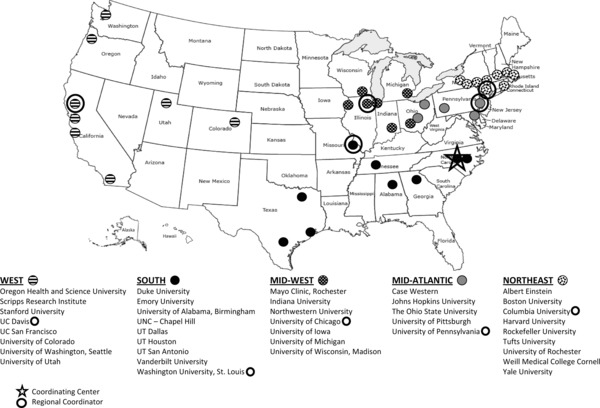

The service sought to address the expressed needs of CTSA researchers to: (1) identify experts willing to share their CE experiences; (2) promote site self‐assessment of capacity for CE and identification of areas for development; and (3) match sites with CE consultants and provide consultation funds. In the first year of this service (the period reported in this paper) the Consortium included 38 CTSA sites across five regions (Figure 1). Duke University served as the national coordinating site and five other universities assisted as regional coordinating sites (South: Washington University St. Louis; West: University of California, Davis; Mid‐West: University of Chicago; Mid‐Atlantic: University of Pennsylvania; and Northeast: Columbia University).

Figure 1.

CTSA sites and regions (n = 38), 2008–2009.

Duke University built and hosted a Consortium‐supported website that provided: a list of experts to serve as CE consultants; instructions for requesting a consultant; literature on CBPR and CEnR; and a list of 21 “Best Practices” in CE summarized from the 2009 monograph.8 A directory of experts was compiled by asking Consortium members to submit names of consultants and their area(s) of CE expertise. This list served as a reference for sites. The website also included online forms to submit pre‐ and postconsultant visit assessments. These assessments enabled sites to begin evaluating their own capacity to support CE.

Methods

The process

The regional coordinating sites and coordinating center announced the CECS and followed up with monthly reminders, during CE KFC conference calls and by email, inviting sites to use the service. Sites could access the CECS Website to gain further information, and all sites were encouraged to complete a site self‐assessment of CE, regardless of whether they planned to use the service. After a site completed the assessment, a regional coordinating site or the coordinating center offered, on request, to help the site identify an appropriate CE expert based on the CE need it expressed. Sites made arrangements directly with the consultant for the visit and reported this information to the coordinating center. After the consulting visit, sites and consultants were asked to complete visit evaluations. In addition, sites were encouraged to develop a CE action plan for their institution, or enhance an existing one, based on information gained from the visit.

Sites were asked to identify only one Best Practice of interest on the site self‐assessment form. Thus, site interest in more than one Best Practice was not captured. Rather, the approach was to encourage institutions to focus on strengthening one Best Practice and to provide consultants ample opportunity to address one primary CE or CEnR need during the visit. The postvisit evaluation was designed to inquire about what best practice(s) were actually covered in some capacity during the visit.

Assessment forms

Four different assessments were conducted. All surveys were completed online; responses were confidential, but not anonymous. The “site self‐assessment,” a six‐item survey, sought to capture: sites’ preconsultant visit CE and CEnR activities; perceived preparedness for CE and CEnR; the primary CE best practice of interest for the consultation; and specific issues the site intended to address. The “site evaluation” was a five‐item survey designed for sites to reflect on the consultant visit. This included the number and types of CE Best Practices addressed during the consultant visit; perceived helpfulness of the consultant; the most and least liked aspects of the visit; and issues the site would like to cover if the service were offered in the future. The “consultant evaluation of the site” was a nine‐item tool assessing the consultant’s perceived objective of the visit; perceived visit productivity; postvisit contact with the site; and perceived confidence in the site’s ability to develop and implement an action plan. The “site action plan” assessed site perceptions of how the consultant visit helped shape or expand thinking about community partnerships and collaborations, and about intra‐ and interinstitutional partnerships. The evaluations also helped capture new CE activities, ideas, and milestones. Sites could receive an electronic version of their completed forms.

Data collection and analyses

Data were collected from online assessment forms accessed via the CECS Website (http://www.dtmi.duke.edu/dccr/cecs). Sites completed and submitted the forms, which were subsequently downloaded as reports and stored by the coordinating center. Responses were coded and entered into a master database. Percent frequencies were calculated to summarize completion rates. Assessments were made for the aggregate sample of sites and consultants. A “planned” Best Practice was defined as the one reported in the previsit site self‐assessment, and an “addressed” Best Practice as one reported in the postvisit evaluation as covered during the consultant visit. To assess whether a site’s planned Best Practice was among those addressed during the consultant visit, the frequency of matched responses was calculated for the sample of sites that completed both the previsit site self‐assessment and the postvisit evaluation forms.
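The matched‐response calculation described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' analysis code: the `SiteRecord` structure, its field names, and the example site identifiers and Best Practice labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Set, List, Tuple


@dataclass
class SiteRecord:
    """One site's responses (hypothetical structure, for illustration only)."""
    site_id: str
    # Single Best Practice named in the previsit self-assessment, if completed.
    planned: Optional[str] = None
    # Best Practices reported as addressed in the postvisit evaluation, if completed.
    addressed: Optional[Set[str]] = None


def match_frequency(records: List[SiteRecord]) -> Tuple[int, int, float]:
    """Return (matched sites, eligible sites, percent matched).

    A site is eligible only if it completed both forms; it counts as
    matched when its planned Best Practice is among those addressed.
    """
    eligible = [r for r in records
                if r.planned is not None and r.addressed is not None]
    matched = sum(1 for r in eligible if r.planned in r.addressed)
    n = len(eligible)
    pct = 100.0 * matched / n if n else 0.0
    return matched, n, pct


# Made-up example data; these are not values from the study.
example = [
    SiteRecord("site_a", planned="build trust", addressed={"build trust", "share funds"}),
    SiteRecord("site_b", planned="evaluation", addressed={"build trust"}),
    SiteRecord("site_c", planned="evaluation"),  # no postvisit form: excluded
]
matched, n, pct = match_frequency(example)
```

Restricting the denominator to sites with both forms mirrors the sampling rule stated in the text; sites missing either form are excluded rather than counted as unmatched.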

Results

Of the 38 sites in the Consortium during the offering of the service, 36, or 95%, completed a self‐assessment. Of those 36 sites, 30 (83%) completed a consultant visit, 19 (53%) completed an evaluation of their visit and 16 (44%) reported preparing an action plan as a result of the visit. Twenty (56%) consultants completed a visit evaluation.
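As an arithmetic check, the reported percentages can be reproduced from the counts above. The sketch assumes, consistent with the abstract, that the 36 sites completing a self‐assessment form the denominator for every rate after the first; the helper name `percent` and the dictionary keys are illustrative.

```python
def percent(count: int, denominator: int) -> int:
    """Percent frequency rounded to the nearest whole number."""
    return round(100 * count / denominator)


TOTAL_SITES = 38     # CTSA sites in the Consortium during the service
SELF_ASSESSED = 36   # sites that completed a self-assessment

# Counts taken from the Results text; the keys are illustrative labels.
counts = {
    "consultant_visit": 30,
    "site_evaluation": 19,
    "action_plan": 16,
    "consultant_evaluation": 20,
}

rates = {"self_assessment": percent(SELF_ASSESSED, TOTAL_SITES)}
rates.update({name: percent(c, SELF_ASSESSED) for name, c in counts.items()})

for name, value in rates.items():
    print(f"{name}: {value}%")
```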

The 21 Best Practices identified in the monograph8 were organized into five domains to capture the core, overarching elements of the CE and CEnR process: building/strengthening relationships with communities; collaboratively strengthening research agendas with communities; strengthening research methods; building and sharing resources; and engaging in outreach and dissemination. Three additional Best Practices reported by two or more sites in their self‐assessments (how to build partnerships with other CTSA sites; how to support, train, and/or engage faculty in CEnR; and how to conduct multisite CE activities with multiple communities) were added to the list (Table 1).

Table 1.

Community engagement list of best practices by domain

| Domain | Best practice |
| --- | --- |
| Building/Strengthening Relationships with Communities | How to think broadly about how to define community and identify community partners |
| | How to be culturally smart when approaching and working with communities |
| | How to structure long‐term relationships with community partners |
| | How to build trust with partners |
| Collaboratively Strengthening Research Agendas with Communities | How to refocus the research agenda to include primary care and prevention |
| | How to design flexible research projects that incorporate the community |
| | How to include community partners in the earliest stages of research planning |
| | How to work with community partners to collectively set the research agenda |
| | How to build larger partnerships through small pilot projects |
| | How to build partnerships with other CTSA sites |
| | How to conduct multisite community engagement activities with multiple communities |
| Strengthening Research Methods | How to use nontraditional, culturally sensitive, effective methods and strategies to recruit within communities |
| | How to successfully use both high‐technological and low‐technological methods of engagement |
| | How to demonstrate that community engagement has improved your population's health: outcomes that matter (evaluation) |
| | How to support, train, and/or engage faculty in community engaged research |
| Building and Sharing Resources | How to link community engagement and policy making support |
| | How to successfully leverage existing resources, information, and tools which have been effective in community engagement |
| | How to identify ways to compensate community workers (pay and compensation) |
| | How to change expectations of data acquisition and sharing |
| | How to identify ways to share funds with community partners |
| Engaging in Outreach and Dissemination | How to reach out to provide care: providing medical care outside of the walls of the academic medical center |
| | How to successfully use innovative methods and strategies for disseminating information |
| | How to identify the best training models for different audiences (medical students, researchers, community partners, etc.) |
| | How to understand how to work with an entire practice staff, not just the providers |

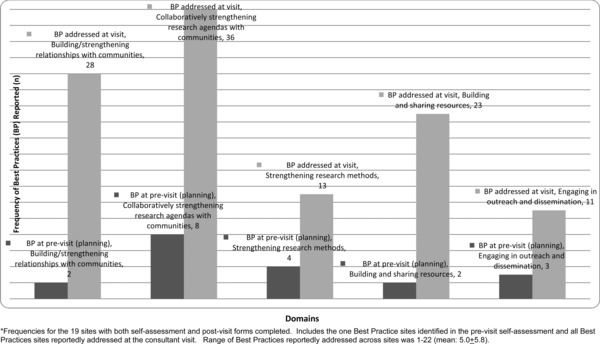

Prior to consultant visits, the three most common Best Practices reported were in the domains of “Collaboratively Strengthening Research Agendas,” “Strengthening Research Methods,” and “Engaging in Outreach and Dissemination.”

After the consulting visit, sites were asked to list all of the Best Practices that were addressed or discussed during the visit. A total of 111 instances of Best Practices were discussed during the consultant visits across the 19 postvisit responding sites. The number addressed varied across the sites (mean (SD): 5.8 (±5.0); range: 1–22). In terms of helpfulness of the visit, 13 reported “Very Helpful,” 4 reported “Helpful,” and 2 reported “Somewhat Helpful.” Virtually all sites reporting stated that the aspect they liked most about the visit was the breadth of CE and CEnR knowledge and experience of the consultant. Some also reported that they liked the opportunity for future collaboration with the consultant or other Consortium sites. What sites liked least was the short amount of time with the consultant (typically a one‐day or half‐day visit). Most indicated that such a service requires more time to process information and advice shared by the consultant.

Among the 19 sites that reported both a planned and an addressed Best Practice (Figure 2), 8 (42%) listed their planned Best Practice among those discussed during the consultant visit, indicating that they addressed what they had planned to address. The most common Best Practices addressed during the visit were in the domains of “Collaboratively Strengthening Research Agendas,” followed by “Building/Strengthening Relationships with Communities” and “Building and Sharing Resources.” When consultants were asked whether the visits were productive, 16 reported they were “Very Productive” and 2 reported they were “Somewhat Productive.” Although 90% of the consultants were very confident sites could develop an action plan, only 35% were very confident in the sites’ abilities to implement one. Of the 16 sites that reported an action plan, many reported they were thinking strategically about how to implement the consultant’s recommendations. Interestingly, although the Best Practices discussed emphasized research collaboration and relationship building with communities, the action plans focused more on improving intra‐ and interinstitutional research relationships and collaborative activities to address CE.

Figure 2.

Planned and addressed best practice (BP) domains, all sites reporting (n = 19).

Discussion

Overall, CECS was feasible, with sites and consultants reporting it helpful and productive. Although a variety of CE Best Practices were referenced, the domains identified before the consultant visit related to research, whereas the domains addressed during the visit emphasized relationship building, reflecting the recognition that fundamental CE and CEnR processes must be built over time for collaboratively planned and executed research.5 Most sites in the Consortium may have perceived themselves as competent in CE principles while being unaware of the necessity of the often time‐consuming steps of community relationship building. However, based on the action plans submitted and the modest confidence expressed by the consultants in sites’ abilities to implement CE and CEnR, increased efforts seem necessary to change institutional infrastructures to accomplish such research.

Three overlapping, practical lessons were learned from developing and implementing this service. First, sites need adequate time to organize and host a consultant visit. Budget constraints (amount allotted per site for the visit), consultant availability (sites seeking the same consultant or inability to confirm a visit within the project period), institutional level of engagement and community participation, and the need for extended time with the consultant are factors to consider in organizing such visits. Second, a broad menu of consultants should be made available. The CECS website directory, which was not exhaustive and was infrequently used, did not include consultants sought by sites and contained few community members. A broader consultant list, including researcher‐community member consultant pairs, may be a useful strategy. Third, complete feedback is needed from participating sites. Although this was a CTSA Consortium service, only about half of the sites completed postvisit assessment forms. Efforts are necessary to increase response rates for representative and detailed evaluations.

For future CECS project activities, we recommend that the CTSA Consortium:

Assess baseline CTSA site‐specific characteristics and CE and CEnR activities. Such evaluations will provide useful site, regional, and national output that can be used to help foster collaborations and accelerate dissemination of effective CE Best Practices. In addition, content analyses of qualitative data collected can provide contextual information to help AMCs tailor CE activities to their specific needs. Evaluation efforts will require multisite Institutional Review Board (IRB) approvals, which should be supported by the Consortium to streamline reporting and further support interinstitutional collaborations.

Implement a researcher‐community member teamed approach. Findings indicate a need for AMCs to focus more on understanding and building rapport with communities, despite apparent interest in improving how to conduct CEnR within institutions. Providing clear examples of established partnerships as consultant pairs, and offering sites the option of consultant pair visits to gain the contextual benefit of their experiences in their own environment would strengthen the service.

Explore a common set of measures to assess CE and CEnR institutional adoption. During the time of this service, AMC researchers at many CTSAs had limited experience with and infrastructural support for CEnR. This is supported by previous literature in this journal citing few NIH‐funded studies reporting CE activities,9 the lack of extensive CEnR experience at some institutions (thereby potentially underestimating the importance and complexities of building community relationships), and the need for increased institutional CEnR capacity.4, 10, 11, 12, 13 Measures should address development in three relationship categories—engagement, partnership, and collaboration (Eder M, Carter‐Edwards L, Hurd TC, Rumala BB, Wallerstein N, unpublished manuscript), which could include AMC leadership infrastructural and financial support, and intrainstitutional network development (including research activities with nontraditional departments and community organizations).

Conduct follow‐up evaluations to determine the impact of the service. Given CE and CEnR are time‐consuming processes, multiple measures of the impact of the CECS service would be needed to evaluate incremental changes.

The CECS project was designed as an administrative service to CTSA sites and was the initial step as a Consortium‐led activity to provide specific guidance in CE and CEnR to promote and improve translational medicine. As such, this report contains aggregate observations rather than site‐specific claims. Subsequent annual evaluation of the impact of the consultant visits on the CE cores was beyond the scope of this service. Evaluation metrics would need to account for the range of CE experience and expertise, the CE Core funding level at each institution, each institution’s primary CE goals, and change over time, as relationships are established and institutions move forward to develop, implement, and improve research agendas.

Conclusion

AMCs interested in collaborating with communities on health research and its translation need to understand and further develop their capacity for CE and CEnR. The process of identifying Best Practices in CE and CEnR and advancing those practices through an in‐person consultation service was reported by those who received a consultant visit as both useful and productive. More research is needed to build on this preliminary evaluation and to develop metrics and a methodology to assess CE capacity, identify needs, and measure growth in capacity among AMCs across the nation.

This study was declared exempt by the Institutional Review Board of the Duke Medical School (Pro00035011).

Acknowledgments

The authors would like to thank J. Lloyd Michener and Michelle Lyn for their contribution to the concept and design of the service, and Julie McKeel for her supervision in the development of the CECS website. The authors also thank the project’s regional coordinators: Linda Ziegahn (UC Davis); Linda Cottler (Washington University, St. Louis); Bernard Ewigman (University of Chicago); Chanita Hughes‐Halbert (University of Pennsylvania); and Bernadette Boden‐Albala (Columbia University). This project has been funded in whole or in part with Federal funds from the National Center for Research Resources (NCRR), National Institutes of Health (NIH), through the Clinical and Translational Science Awards Program (CTSA), part of the Roadmap Initiative, Re‐Engineering the Clinical Research Enterprise. The manuscript was approved by the CTSA Consortium Publications Committee.

Note: At the time this work was conducted, Lori Carter‐Edwards, Jennifer Cook, and Mary Anne McDonald were with the Division of Community Health, Department of Community and Family Medicine, Duke School of Medicine, Durham, NC.

This work was funded by grants through the National Center for Research Resources (NCRR), National Institutes of Health (NIH), Duke Translational Medicine Institute Grant Number 3UL1RR024128–03S2 and 3UL1RR024128–04S3. Institute for Translational Medicine, University of Chicago, Grant #UL1RR024999.

This paper was also supported in part by Cooperative Agreement Number U48‐DP001944 from the Centers for Disease Control and Prevention. The findings and conclusions in this paper are those of the author(s) and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

References

- 1. National Center for Research Resources. NCRR Fact Sheet: Clinical Translational Science Awards. 2010; http://www.ctsaweb.org/docs/CTSA_FactSheet.pdf. Accessed December 31, 2010.

- 2. Israel BA, Coombe CM, Cheezum RR, Schulz AJ, McGranaghan RJ, Lichtenstein R, Reyes AG, Clement J, Burris A. Community‐based participatory research: a capacity‐building approach for policy advocacy aimed at eliminating health disparities. Am J Public Health 2010; 100(11): 2094–2102.

- 3. Ahmed SM, Palermo A‐GS. Community engagement in research: frameworks for education and peer review. Am J Public Health 2010; 100(8): 1380–1387.

- 4. Halbert CH, Weathers B, Delmoor E. Developing an academic‐community partnership for research in prostate cancer. J Cancer Edu 2006; 21(2): 99–103.

- 5. Suarez‐Balcazar Y, Harper GW, Lewis R. An interactive and contextual model of community‐university collaborations for research and action. Health Educ Behav 2005; 32(1): 84–101.

- 6. Schulz AJ, Israel BA, Parker EA, Lockett M, Hill Y, Wills R. The east side village health worker partnership: integrating research with action to reduce health disparities. Public Health Rep 2001; 116(6): 548–557.

- 7. Wallerstein N, Duran B. Using community‐based participatory research to address health disparities. Health Promot Pract 2006; 7(3): 312–323.

- 8. CTSA Community Engagement Key Function Committee and the CTSA Community Engagement Workshop Planning Committee. Researchers and Their Communities: The Challenge of Meaningful Community Engagement. Bethesda, MD: National Center for Research Resources; 2009.

- 9. Hood NE, Brewer T, Jackson R, Wewers ME. Survey of community engagement in NIH‐funded research. Clin Transl Sci 2010; 3(1): 19–22.

- 10. Ahmed SM, Beck B, Maurana CA, Newton G. Overcoming barriers to effective community‐based participatory research in US medical schools. Educ Health 2004; 17(2): 141–151.

- 11. Israel BA, Lichtenstein R, Lantz P, McGranaghan R, Allen A, Guzman JR, Softley D, Maciak B. The Detroit Community‐Academic Urban Research Center: development, implementation, and evaluation. J Public Health Manage Pract 2001; 7(5): 1–19.

- 12. Michener L, Scutchfield FD, Aguilar‐Gaxiola S, Cook J, Strelnick AH, Ziegahn L, Deyo RA, Cottler LB, McDonald MA. Clinical and Translational Science Awards and Community Engagement: now is the time to mainstream prevention into the Nation’s Health Research Agenda. Am J Prevent Med 2009; 37(5): 464–467.

- 13. Allen ML, Culhane‐Pera KA, Pergament S, Thiede K. A capacity building program to promote CBPR partnerships between academic researchers and community members. Clin Transl Sci 2011; 4(6): 428–433.