Abstract

Long regarded as a nuisance to be filtered out, biochemical noise has recently been shown to play a complex and often fully functional role in biomolecular networks. The influence of intrinsic and extrinsic noise on biomolecular networks has been intensively investigated over the last ten years, though contributions on the co-presence of both are sparse. Extrinsic noise is usually modeled as an unbounded white or colored Gaussian stochastic process, even though realistic stochastic perturbations are clearly bounded. In this paper we consider Gillespie-like stochastic models of nonlinear networks, i.e. the intrinsic noise, where the model jump rates are affected by colored bounded extrinsic noises synthesized by a suitable biochemical state-dependent Langevin system. These systems are described by a master equation, and a simulation algorithm to analyze them is derived. This new modeling paradigm should enlarge the class of systems amenable to modeling. We investigated the influence of both the amplitude and the autocorrelation time of an extrinsic Sine-Wiener noise on: (i) the Michaelis-Menten approximation of noisy enzymatic reactions, which we show to be applicable also in the co-presence of both intrinsic and extrinsic noise, (ii) a model of an enzymatic futile cycle and (iii) a genetic toggle switch. In (ii) and (iii) we show that the presence of a bounded extrinsic noise induces qualitative modifications in the probability densities of the involved chemicals, where new modes emerge, thus suggesting a possible functional role of bounded noises.

Introduction

Cellular functions and decisions are implemented through the coordinated interactions of a very large number of molecular species. The central unit of these processes is DNA, a polymer that is in part segmented into subunits, called genes, which control the production of the key cellular molecules, the proteins, via the mechanism of transcription. Some relevant proteins, called transcription factors, in turn interact with genes to modulate either the production of other proteins or their own production.

Given this rough outline of the intracellular machinery, it is not surprising that two modeling tools, originally born in other application domains, have proved to be of the utmost relevance in molecular biology. They are the inter-related concepts of feedback [1], [2] and of network [3]–[6], with their mathematical backbones: dynamical systems theory and graph theory, respectively. From the interplay and integration of these two theories with molecular biology, a new scientific field has emerged: Systems Biology [3]–[5].

Mimicking general chemistry, bipartite graphs were initially introduced in cellular biochemistry simply to formalize the informal diagrams representing biomolecular reactions [8]. Afterwards, and especially after the deciphering of genomes, it became clear that higher-level concepts of network theory could naturally reveal fundamental biological properties that were not previously understood. We briefly mention here the concepts of hub gene and of biomolecular motif [3]–[7].

Note that the concept of network was also historically important in the early phases of Systems Biology. Indeed, the first dynamical models in molecular biology were particular finite automata (graph-like structures) called Boolean networks [16]. These pioneering investigations on the dynamics of biomolecular networks stressed two concepts that have since become hallmarks of Systems Biology.

The first key concept is that biomolecular networks are multistable [12]–[15]. Indeed, it was quite soon understood, both experimentally and theoretically, that multiple locally stable equilibria allow for the presence of multiple functionalities, even in small groups of interplaying proteins [7], [17]–[25].

The second key concept is that the dynamic behavior of a network is never totally deterministic [9]–[11], but exhibits more or less strong stochastic fluctuations due to its interplay with many other, mostly unknown, networks, as well as with various random signals coming from the extracellular world. For a long time the stochastic effects due to these two classes of interactions were interpreted as a disturbance inducing undesired jumps between states or, with a marginally functional role, as an external initial input directing the network under study towards one of its possible final states. In any case, in the important scenario of deterministically monostable networks, the stochastic behavior under the action of extrinsic noises was seen as unimodal. In other words, external stochastic effects were seen as they are in radiophysics, namely as a disturbance more or less obfuscating the real signal, to be controlled by those pathways working as a low-pass analog filter [26], [27]. For these reasons, a number of theoretical and experimental investigations focused on the existence of noise-reducing sub-networks [26], [28], [29]. However, the existence of fundamental limits on filtering noise has recently been shown [30].

Moreover, if noises were only pure nuisances, there would be an interesting consequence. Indeed, in such a case a monostable network in the presence of noise should exhibit more or less large fluctuations around the unique deterministic equilibrium. In probabilistic terms this means that the probability distribution of the total signal (noise plus deterministic signal) should be a sort of “bell” centered more or less at the deterministic equilibrium, i.e. the probability distribution should be unimodal. However, at the end of the seventies it became clear in statistical physics that the real stochastic scenario is far more complex, and the above-outlined correspondence between deterministic monostability and stochastic monomodality in the presence of external noise was seriously challenged [31]. Indeed, it was shown that many systems that are monostable in the absence of external stochastic noises have, in the presence of random Gaussian disturbances, multimodal equilibrium probability densities. This counter-intuitive phenomenon was termed noise-induced transition [31], and it has been shown to be relevant also in genetic networks [32], [33].

Above we mainly focused on external random perturbations acting on genetic and other biomolecular networks. In the meantime, experimental studies revealed the other and equally important role of stochastic effects in biochemical networks by showing that many important transcription factors, as well as other proteins and mRNA, are present in cells with very low concentrations, i.e. with a small number of molecules [34]–[36]. Moreover, it was shown that RNA production is not continuous, but instead it has the characteristics of stochastic bursts [37]. Thus, a number of investigations has focused on this internal stochastic effect, the “intrinsic noise” as some authors term it [39], [40]. In particular, it was shown – both theoretically and experimentally – that also the intrinsic noise may induce multimodality in the discrete probability distribution of proteins [33], [41]. However, the fact that intrinsically stochastic systems may exhibit behaviors similar to systems affected by extrinsic Gaussian noises was very well known in statistical and chemical physics, where this was theoretically demonstrated by approximating the exact Chemical Master Equations with an appropriate Fokker-Planck equation [42]–[44], an approach leading to the Chemical Langevin Equation [45].

Thus, whereas for some time noise was mostly seen as a nuisance, it has more recently been appreciated that the above-mentioned and other noise-related phenomena may in many cases have a constructive, functional role (see [46], [47] and references therein). For example, noise-induced multimodality allows a transcription network to reach states that would not be accessible if the noise were absent [33], [46], [47]. Phenotype variability in cellular populations is probably the most important macroscopic effect of intracellular noise-induced multimodality [46].

In Systems Biology, from the modeling point of view, Swain and coworkers [35] were among the first to study the co-presence of both intrinsic and extrinsic randomness, stressing their synergistic role in modifying the velocity and average in the context of the basic network for the production and consumption of a single protein, in the absence of feedback. These and other important effects were shown, although nonlinear phenomena such as multimodality were absent. The above study is also remarkable since: (i) it stressed the role of the autocorrelation time of the external noise and, differently from other investigations, (ii) it stressed that modeling the external noise by means of a Gaussian noise, either white or colored, may induce artifacts. In fact, since the perturbed parameters may become negative, the authors employed a lognormal positive noise to model the extrinsic perturbations. In particular, in [35] a noise obtained by exponentiating the classical Ornstein-Uhlenbeck noise was used [31].

From the data analysis point of view, You and collaborators [48] and Hilfinger and Paulsson [49] recently proposed interesting methodologies to infer by convolution the contributions of extrinsic noise also in some nonlinear networks, including a synthetic toggle switch [48].

Our aim here is to provide mathematical tools, and motivating biological examples, for the computational investigation of the co-presence of extrinsic and intrinsic randomness in nonlinear genetic (or other biomolecular) networks, in the important case of external perturbations that are not only non-Gaussian but also bounded. We stress that, to the best of our knowledge, this has never been analyzed before. Indeed, by imposing a bounded extrinsic noise we increase the degree of realism of a model, since the external perturbations must not only preserve the positiveness of reaction rates, but must also be bounded. Moreover, it has also been shown in other contexts such as mathematical oncology [50]–[52] and statistical physics [50], [53]–[55] that: (i) bounded noises deeply impact the transitions from unimodal to multimodal probability distributions of state variables [51]–[55] and (ii) the dynamics of a system under bounded noise may be substantially different from that of systems perturbed by other kinds of noise; for example, the behavior may depend on the initial conditions [51].

Here we address the two most fundamental steps of this novel line of research.

The first step is to identify a suitable mathematical framework to represent mass-action biochemical networks perturbed by bounded (or simply left-bounded) noises, which in turn can depend on the state of the system. To this end, in the first part of this work we derive a master equation for these kinds of systems in terms of the differential Chapman-Kolmogorov equation (DCKE) [42], [56] and propose a combination of Gillespie's Stochastic Simulation Algorithm (SSA) [38], [39] with a state-dependent Langevin system, affecting the model jump rates, to simulate these systems.

The second step relates to the possibility of extending, in this “doubly stochastic” context, the Michaelis-Menten Quasi Steady State approximation (QSSA) for enzymatic reactions [57]. We examine the validity of the QSSA in the presence of both types of noise in the second part of this work, where we numerically investigate the classical Enzyme-Substrate-Product network. The application of the QSSA to this network has recently been investigated by Gillespie and coworkers in the absence of extrinsic noise [58]. Based on our results, we propose extending the above structure, via a stochastic QSSA, to networks more general than those ruled by the rigorous mass-action law.

Finally, we stress that the interplay between the extrinsic and intrinsic noises affecting a biomolecular network might impact the dynamics of the involved molecules in many different and complex ways. As such, in our opinion this topic cannot be exhausted in a single work. For this reason, we provide three examples of biological interest and of quite different natures. One is the above-mentioned Michaelis-Menten reaction; the other two are illustrated in the third part of this work, and are the following: (i) a futile cycle [33] and (ii) a genetic toggle switch [18], which is a fundamental motif for cellular differentiation and for other switching functions. As expected, the co-presence of both intrinsic stochasticity and bounded extrinsic random perturbations suggests the presence of possibly unknown functional roles for noise in both networks. The described noise-induced phenomena are shown to be strongly related to physical characteristics of the extrinsic noise such as the noise amplitude and its autocorrelation time.

Methods

Noise-free stochastic chemically reacting systems

We start by recalling the Chemical Master Equation and the Stochastic Simulation Algorithm (SSA) by Doob and Gillespie [38], [39]. Systems where the jump rates are time-constant are hereby referred to as stochastic noise-free systems. We consider a well-stirred system of molecules belonging to $N$ chemical species $\{S_1, \ldots, S_N\}$ interacting through $M$ chemical reactions $\{R_1, \ldots, R_M\}$. We represent the (discrete) state of the target system with an $N$-dimensional integer-valued vector $\mathbf{X}(t) = (X_1(t), \ldots, X_N(t))$, where $X_i(t)$ is the number of molecules of species $S_i$ at time $t$. To each reaction $R_j$ is associated its stoichiometric vector $\nu_j = (\nu_{1j}, \ldots, \nu_{Nj})$, where $\nu_{ij}$ is the change in $X_i$ due to one $R_j$ reaction. The stoichiometric vectors form the $N \times M$ stoichiometry matrix $[\nu_1 \cdots \nu_M]$. Thus, given $\mathbf{X}(t) = \mathbf{x}$, the firing of reaction $R_j$ yields the new state $\mathbf{x} + \nu_j$. A propensity function $a_j(\mathbf{x})$ [38], [39] is associated to each $R_j$ so that $a_j(\mathbf{x})\,dt$, given $\mathbf{X}(t) = \mathbf{x}$, is the probability of reaction $R_j$ to fire in state $\mathbf{x}$ in the infinitesimal interval $[t, t+dt)$. Table 1 summarizes the analytical form of such functions [38]. For more generic forms of the propensity functions (e.g. Michaelis-Menten, Hill kinetics) we refer to [62].

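Table 1. Gillespie propensity functions. Analytical form of the propensity functions [38].

| Order | Reaction | Propensity |
| --- | --- | --- |
| 0-th | $\emptyset \xrightarrow{k} \cdot$ | $k$ |
| 1-st | $S_i \xrightarrow{k} \cdot$ | $k X_i(t)$ |
| 2-nd | $2 S_i \xrightarrow{k} \cdot$ | $k X_i(t)(X_i(t)-1)/2$ |
| 2-nd | $S_i + S_{i'} \xrightarrow{k} \cdot$ | $k X_i(t) X_{i'}(t)$ |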
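As an illustration of Table 1, the following minimal Python sketch evaluates a mass-action propensity from a rate constant and a list of reactant indices. The function name `propensity` and the encoding of a reaction as a list of reactant indices are assumptions made for this example only; they are not part of the original formulation.

```python
from typing import Sequence


def propensity(k: float, reactants: Sequence[int], x: Sequence[int]) -> float:
    """Mass-action propensity of a reaction of order 0, 1 or 2 (Table 1).

    `reactants` lists the indices of the reactant species (hypothetical
    encoding): [] for order 0, [i] for order 1, [i, i] for 2 S_i and
    [i, i2] for S_i + S_i2. `x` holds the current molecule counts.
    """
    if len(reactants) == 0:
        return k
    if len(reactants) == 1:
        return k * x[reactants[0]]
    if len(reactants) == 2:
        i, i2 = reactants
        if i == i2:
            # Number of distinct unordered pairs of S_i molecules.
            return k * x[i] * (x[i] - 1) / 2
        return k * x[i] * x[i2]
    raise ValueError("only reactions of order 0, 1 or 2 are supported")
```

For instance, with four molecules of a single species, `propensity(1.0, [0, 0], [4])` counts the six distinct pairs that can react.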

We recall the definition of the Chemical Master Equation (CME) [38], [39], [60], [61], which describes the time-evolution of the probability of a system to occupy each one of a set of states. We study the time-evolution of $\mathbf{X}(t)$, assuming that the system was initially in some state $\mathbf{x}_0$ at time $t_0$, i.e. $\mathbf{X}(t_0) = \mathbf{x}_0$. We denote with $P(\mathbf{x}, t \mid \mathbf{x}_0, t_0)$ the probability that, given $\mathbf{X}(t_0) = \mathbf{x}_0$, at time $t$ it is $\mathbf{X}(t) = \mathbf{x}$. From the usual hypothesis that at most one reaction fires in the infinitesimal interval $[t, t+dt)$, it follows that the time-evolution of $P(\mathbf{x}, t \mid \mathbf{x}_0, t_0)$ is given by the following partial differential equation termed “master equation”

| $\dfrac{\partial P(\mathbf{x}, t \mid \mathbf{x}_0, t_0)}{\partial t} = \sum_{j=1}^{M} \left[ a_j(\mathbf{x} - \nu_j)\, P(\mathbf{x} - \nu_j, t \mid \mathbf{x}_0, t_0) - a_j(\mathbf{x})\, P(\mathbf{x}, t \mid \mathbf{x}_0, t_0) \right]$ | (1) |

The CME is a special case of the more general Kolmogorov Equations [63], i.e. the differential equations corresponding to the time-evolution of stochastic Markov jump processes. As is well known, the CME can be solved analytically only for very few simple systems, and normalization techniques are sometimes adopted to provide approximate solutions [64]. However, algorithmic realizations of the process associated with the CME are possible by using the Doob-Gillespie Stochastic Simulation Algorithm (SSA) [38], [39], [60], [61], summarized as Algorithm 1 (Table 2). The SSA is reliable since it generates an exact trajectory of the underlying process. Although equivalent formulations exist [38], [39], [65], as well as some approximations [62], [66], [67], here we consider its Direct Method formulation without loss of generality.

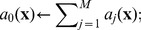

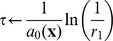

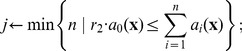

Table 2. Algorithm 1 Gillespie Stochastic Simulation Algorithm [38], [39].

| 1: Input: initial time t 0, state x 0 and final time T; |

| 2: set x←x 0 and t←t 0; |

| 3: while t<T do |

4: define

|

5: let  , r

2∼U [0,1]; , r

2∼U [0,1]; |

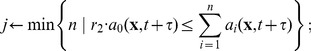

6: determine next jump as  ; ; |

7: determine next reaction as

|

| 8: set x←x+νj and t←t+τ; |

| 9: end while |

The SSA is a dynamic Monte-Carlo method describing a statistically correct trajectory of a discrete non-linear Markov process, whose probability density function is the solution of equation (1) [68]. The SSA computes a single realization of the process  , starting from state

, starting from state  at time

at time  and up to time

and up to time  . Given

. Given  the putative time

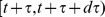

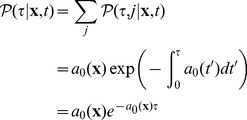

the putative time  for the next reaction to fire is chosen by sampling an exponentially distributed random variable, i.e.

for the next reaction to fire is chosen by sampling an exponentially distributed random variable, i.e.  where

where  and

and  denotes the equality in law between random variables. The reaction to fire

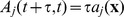

denotes the equality in law between random variables. The reaction to fire  is chosen with weighted probability

is chosen with weighted probability  , and the system state is updated accordingly.

, and the system state is updated accordingly.
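The Direct Method described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `ssa_direct`, the representation of propensities as a list of callables and of stoichiometric vectors as a list of lists are assumptions made for this example, not part of the original algorithm statement.

```python
import math
import random


def ssa_direct(x0, nu, propensities, t0, t_end, rng=None):
    """One realization of the noise-free jump process (Direct Method).

    x0: initial molecule counts; nu[j]: stoichiometric vector of reaction j;
    propensities[j]: callable mapping the state to a_j(x) (assumed encoding).
    Returns the list of (time, state) pairs visited by the trajectory.
    """
    rng = rng or random.Random()
    x, t = list(x0), t0
    trajectory = [(t, tuple(x))]
    while t < t_end:
        a = [f(x) for f in propensities]
        a0 = sum(a)
        if a0 <= 0.0:
            break  # no reaction can fire any more
        r1 = 1.0 - rng.random()  # uniform in (0, 1], avoids log(1/0)
        r2 = rng.random()
        tau = math.log(1.0 / r1) / a0  # exponential waiting time
        if t + tau > t_end:
            break
        # Smallest index j whose cumulative propensity exceeds r2 * a0.
        threshold, cumulative, j = r2 * a0, 0.0, 0
        for j, aj in enumerate(a):
            cumulative += aj
            if cumulative > threshold:
                break
        x = [xi + d for xi, d in zip(x, nu[j])]
        t += tau
        trajectory.append((t, tuple(x)))
    return trajectory
```

A pure degradation reaction, e.g. `ssa_direct([10], [[-1]], [lambda x: 1.0 * x[0]], 0.0, 50.0)`, gives a decreasing staircase of molecule counts; averaging many such runs approximates the solution of equation (1).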

The correctness of the SSA comes from the relation between the jump process and the CME [38], [68]. In fact, the probability, given $\mathbf{X}(t) = \mathbf{x}$, that the next reaction in the system occurs in the infinitesimal time interval $[t+\tau, t+\tau+d\tau)$, denoted $P(\tau \mid \mathbf{x}, t)\,d\tau$, follows

| $P(\tau \mid \mathbf{x}, t)\,d\tau = a_0(t+\tau) \exp\left( -\int_t^{t+\tau} a_0(t')\,dt' \right) d\tau, \qquad a_0(t) = \sum_{j=1}^{M} a_j(t)$ | (2) |

since $a_j(t+\tau) \exp\left( -\int_t^{t+\tau} a_j(t')\,dt' \right)$ is the probability density of the putative time for the next firing of $R_j$, and the formula follows from the independence of the reaction firings. Notice that in equation (2) $a_j(t)$ represents the propensity functions evaluated in the system state at time $t$, i.e. as if they were time-dependent functions. In the case of noise-free systems that term evaluates as $a_j(\mathbf{x})$ for any $t$, i.e. it is indeed time-homogeneous, whereas in more general cases it may not be, as we shall discuss later. Finally, the probability of the reaction to fire at $t+\tau$ to be $R_j$ follows by conditioning on $\tau$, that is

| $P(j \mid \tau; \mathbf{x}, t) = \dfrac{a_j(t+\tau)}{\sum_{i=1}^{M} a_i(t+\tau)}$ | (3) |

Noisy stochastic chemically reacting systems

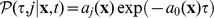

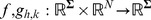

We now introduce a theory of stochastic chemically reacting systems with bounded noises in the jump rates by combining Stochastic Differential Equations and the SSA. Here we consider a system where each propensity function may be affected by a extrinsic noise term. In general, such a term can be either a time or state-dependent function, and the propensity function for reaction  reads now as

reads now as

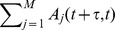

| (4) |

where  is a propensity function of a type listed in Table 1. The noisy perturbation term

is a propensity function of a type listed in Table 1. The noisy perturbation term  is positive and bounded by some

is positive and bounded by some  , i.e.

, i.e.

| (5) |

so we are actually considering both bounded and right-unbounded noises, i.e.  . In the former case we say that the

. In the former case we say that the  -th extrinsic noise is bounded, in the latter that it is left-bounded.

-th extrinsic noise is bounded, in the latter that it is left-bounded.

Note that in applications we shall mainly consider unitary mean perturbations, that is

We consider here that the extrinsic noisy disturbance  is a function of a more generic

is a function of a more generic  -dimensional noise

-dimensional noise  with

with  so we write

so we write  and equation (4) reads as

and equation (4) reads as

| (6) |

Notice that the use of a vector in equation (6) provides the important case of multiple reactions sharing the same noise term, i.e. the reactions may be affected in the same way by a unique noise source.

In equation (6)  is a continuous functions

is a continuous functions  and

and  is a colored and, in general, non-gaussian noise that may depend on the state

is a colored and, in general, non-gaussian noise that may depend on the state  of the chemical system. The dynamics of

of the chemical system. The dynamics of  is described by a

is described by a  -dimensional Langevin system

-dimensional Langevin system

| (7) |

Here,  is a

is a  -dimensional vector of uncorrelated white noises of unitary intensities,

-dimensional vector of uncorrelated white noises of unitary intensities,  is a

is a  matrix which we shall mainly consider the be diagonal and

matrix which we shall mainly consider the be diagonal and  .

.

When  does not directly depend on

does not directly depend on  , i.e. the extrinsic noise depends on an external source, which is the kind of noise we mainly consider, equation (7) reduces to

, i.e. the extrinsic noise depends on an external source, which is the kind of noise we mainly consider, equation (7) reduces to

| (8) |

We stress that the “complete” Langevin system in equation (7) is not a mere analytical exercise, but it has the aim of phenomenologically modeling extrinsic noises that are not totally independent of the process in study.
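To make the notion of a bounded, state-independent extrinsic noise concrete, the sketch below samples a Sine-Wiener noise, the bounded noise named in the abstract, on a fixed time grid and uses it to perturb a base propensity. The definition used here, $\xi(t) = B \sin(\sqrt{2/\tau_c}\, W(t))$ with $W$ a standard Wiener process, amplitude $B$ and autocorrelation time $\tau_c$, is the usual one from the bounded-noise literature and is assumed rather than taken from the text above; likewise the perturbation $1 + \xi(t)$ with $B < 1$ and all function names are illustrative assumptions.

```python
import math
import random


def sine_wiener_path(amplitude, tau_c, dt, n_steps, rng=None):
    """Sample xi(t) = amplitude * sin(sqrt(2 / tau_c) * W(t)) on a grid.

    W is a standard Wiener process simulated with independent Gaussian
    increments of variance dt. Every value lies in [-amplitude, amplitude].
    """
    rng = rng or random.Random()
    scale = math.sqrt(2.0 / tau_c)
    w = 0.0
    path = [0.0]  # W(0) = 0, hence xi(0) = 0
    for _ in range(n_steps):
        w += math.sqrt(dt) * rng.gauss(0.0, 1.0)
        path.append(amplitude * math.sin(scale * w))
    return path


def noisy_propensity(base_value, noise_value):
    """Perturbed propensity alpha_j(x) * (1 + xi); positive when |xi| < 1."""
    return base_value * (1.0 + noise_value)
```

With `amplitude` strictly below 1 the factor `1 + xi` stays positive and bounded, which is the property required of the perturbation term in equation (5).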

The Chapman-Kolmogorov Forward Equation

When a discrete-state jump process such as those described in the previous section is coupled with a continuous noise, the state of the stochastic process is the vector

| $\mathbf{z}(t) = (\mathbf{X}(t), \xi(t))$ | (9) |

and the state space of the process is now $\mathbb{N}^N \times \mathbb{R}^K$. Our total process can be considered as a particular case of the general Markov process where diffusion, drift and discrete finite jumps are all co-present for all state variables [42], [56]. For this very general family of stochastic processes the dynamics of the probability of being in some state $\mathbf{z}$ at time $t$, given an initial state $\mathbf{z}_0$ at time $t_0$, shortly denoted as $p(\mathbf{z}, t \mid \mathbf{z}_0, t_0)$, is described by the differential Chapman-Kolmogorov equation (DCKE) [42], [56], whose generic form is

| $\dfrac{\partial p(\mathbf{z}, t \mid \mathbf{z}_0, t_0)}{\partial t} = -\sum_{i} \dfrac{\partial}{\partial z_i} \left[ A_i(\mathbf{z}, t)\, p(\mathbf{z}, t \mid \mathbf{z}_0, t_0) \right] + \dfrac{1}{2} \sum_{i,j} \dfrac{\partial^2}{\partial z_i \partial z_j} \left[ B_{ij}(\mathbf{z}, t)\, p(\mathbf{z}, t \mid \mathbf{z}_0, t_0) \right] + \int \left[ W(\mathbf{z} \mid \mathbf{h}, t)\, p(\mathbf{h}, t \mid \mathbf{z}_0, t_0) - W(\mathbf{h} \mid \mathbf{z}, t)\, p(\mathbf{z}, t \mid \mathbf{z}_0, t_0) \right] d\mathbf{h}$ | (10) |

Here $A_i(\mathbf{z}, t)$ forms the drift vector for $\mathbf{z}$, $B_{ij}(\mathbf{z}, t)$ the diffusion matrix and $W$ the jump probability. For an elegant derivation of the DCKE from the integral Chapman-Kolmogorov equation [63] we refer to [56]. This equation describes various systems; in fact, we recall that (i) the Fokker-Planck equation is a particular case of the DCKE without jumps (i.e. $W = 0$), (ii) the CME in equation (1) is the DCKE without Brownian motion and drift (i.e. $A = 0$ and $B = 0$), (iii) the Liouville equation is the DCKE without Brownian motion and jumps (i.e. $B = 0$ and $W = 0$) and (iv) the ODE with jumps corresponds to the case where only diffusion is absent (i.e. $B = 0$).

We stress that, at the best of our knowledge, this is the first time where a master equation for stochastic chemically reacting systems combined with bounded noises is considered. Let

| (11) |

be the probability that at time  it is

it is  and

and  , given

, given  and

and  . The time-evolution of

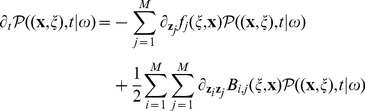

. The time-evolution of  is equation (10) where drift and diffusion are given by the Langevin equation (7), that is

is equation (10) where drift and diffusion are given by the Langevin equation (7), that is

| (12) |

with  the standard vector multiplication and

the standard vector multiplication and  the transpose of

the transpose of  . Moreover, since only finite jumps are possible, then the jump functions and diffusion satisfy

. Moreover, since only finite jumps are possible, then the jump functions and diffusion satisfy

| (13) |

for any  , and noise

, and noise  . Summarizing, for the systems we consider the DCKE in equation (10) reads as

. Summarizing, for the systems we consider the DCKE in equation (10) reads as

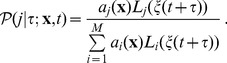

|

(14) |

|

This equation is the natural generalization of the CME in equation (1), and completely characterize noisy systems. As such, however, its realization can be prohibitively difficult and is hence convenient to define algorithms to perform the simulation of noisy systems.

The SSA with Bounded Noise

We now define the Stochastic Simulation Algorithm with Bounded Noise (SSAn). The algorithm performs a realization of the stochastic process underlying the system where a (generic) realization of the noise is assumed. As for the CME and the SSA, this corresponds to computing a realization of a process satisfying equation (14). This implies that, as for the SSA, the SSAn is reliable since the generated trajectory is exact. In the future, this will allow the SSAn to be used as a basis for defining approximate simulation schemes to sample from equation (14), as is done for the SSA and the CME [62], [66], [67]. The SSAn takes inspiration from the (generic) SSA with time-dependent propensity functions [69] as well as from the SSA for hybrid deterministic/stochastic systems [70]–[73], thus generalizing the jump equation (2) to a time-inhomogeneous distribution, which we discuss in the following.

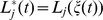

For a system with  reactions the time evolution equation for

reactions the time evolution equation for  ) is

) is

| (15) |

where  is the stochastic process counting the number of times that

is the stochastic process counting the number of times that  occurs in

occurs in  with initial condition

with initial condition  . For Markov processes

. For Markov processes  is an inhomogeneous Poisson process satisfying

is an inhomogeneous Poisson process satisfying

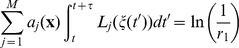

| (16) |

when  . In hybrid systems this is is a doubly stochastic Poisson process with time-dependent intensity, in our case this is a Cox process [74], [75] since the intensity itself is a stochastic process, i.e. it depends on the stochastic noise. More simply, in noise-free systems, this equation evaluates as

. In hybrid systems this is is a doubly stochastic Poisson process with time-dependent intensity, in our case this is a Cox process [74], [75] since the intensity itself is a stochastic process, i.e. it depends on the stochastic noise. More simply, in noise-free systems, this equation evaluates as  , thus denoting a time homogeneous Poisson process. As in [70], [72], [73], [76], [77] such a process ca be transformed in a time homogenous Poisson process with parameter

, thus denoting a time homogeneous Poisson process. As in [70], [72], [73], [76], [77] such a process ca be transformed in a time homogenous Poisson process with parameter  , and a simulation algorithm can be exploited. Let us denote with

, and a simulation algorithm can be exploited. Let us denote with  the time at next occurrence of reaction

the time at next occurrence of reaction  after time

after time  , then

, then

| (17) |

follows by equation (16), and higher order terms vanish by the usual hypothesis that the reaction firings are locally independent, as in the derivation of equation (1). Given the system to be in state $\mathbf{x}$ at time $t$, the transformation

| $A_j(t, \tau) = \int_t^{t+\tau} a_j(\mathbf{x}, t')\,dt'$ | (18) |

which is a monotonic (increasing) function of $\tau$, is used to determine the putative time for $R_j$ to fire. Given a sequence $\{E_j^k\}$ of independent exponential random variables with mean $1$ for $j = 1, \ldots, M$ and $k = 1, 2, \ldots$, equation (16) implies that

| (19) |

This provides that, if the systems is in state  , then the next time for the next reaction firing of

, then the next time for the next reaction firing of  is the smallest time

is the smallest time  such that

such that

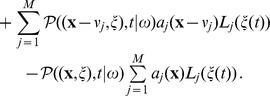

| (20) |

with  , and thus the next jump of the overall system is taken as the minimum among all possible times, that is by solving equality

| (21) |

with  . This holds because  is still exponential with parameter  and the jumps are independent. We remark that for a noise-free reaction  , thus suggesting that the combination of noisy and noise-free reactions is straightforward. The index of the reaction to fire is instead a random variable following

| (22) |

The SSAn is Algorithm 2 (Table 3); its skeleton is similar to Gillespie's SSA, so the algorithm simulates the firing of  reactions in a (discrete) state  tracking molecule counts. In addition to what the SSA does, this algorithm also tracks the (continuous) state storing the noises.

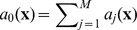

Table 3. Algorithm 2 Stochastic Simulation Algorithm with Bounded Noises (SSAn).

| 1: Input: initial time t0, state x0 and final time T; |

| 2: set x←x0 and t←t0; |

| 3: while t<T do |

| 4: let r1, r2∼U[0,1]; |

| 5: find next jump by solving equation (21), that is  , while generating noise ξ(t′) for t′∈[t, t+τ]; |

| 6: determine next reaction as  ; |

| 7: set x←x+νj and t←t+τ; |

| 8: end while |

As for the SSA, jumps are determining by using two uniform numbers  and

and  . Step

. Step  is the (joint) solution of both equation (21) and Langevin system (7), i.e.

is the (joint) solution of both equation (21) and Langevin system (7), i.e.  in

in  . This allows to both

. This allows to both  determine the putative time for the next reaction to fire, i.e. the

determine the putative time for the next reaction to fire, i.e. the  solving equation (21), and to

solving equation (21), and to  update noise realization, i.e. system (7). This step is the computational bottleneck of this algorithm since it can not be analytical, unless for simple cases, as instead was for the SSA (step

update noise realization, i.e. system (7). This step is the computational bottleneck of this algorithm since it can not be analytical, unless for simple cases, as instead was for the SSA (step  had an exact solution for

had an exact solution for  ). We remark that this does not affect the exactness of the SSAn with respect to the trajectory of the underlying stochastic process. Being non-analytical an iterative method, e.g. the Newton-Raphson, has to be embedded in the SSAn implementation. Furthermore, noise integration is also non-analytical thus inducing a further numerical approximation issue. To this extent, the integral in equation (21), i.e. a conventional Lebesgue integral since the perturbation

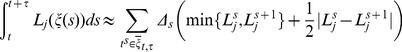

). We remark that this does not affect the exactness of the SSAn with respect to the trajectory of the underlying stochastic process. Being non-analytical an iterative method, e.g. the Newton-Raphson, has to be embedded in the SSAn implementation. Furthermore, noise integration is also non-analytical thus inducing a further numerical approximation issue. To this extent, the integral in equation (21), i.e. a conventional Lebesgue integral since the perturbation  is a colored stochastic process [78], can be solved by adopting an interpolation scheme. An example linear scheme is

is a colored stochastic process [78], can be solved by adopting an interpolation scheme. An example linear scheme is

| (23) |

where

| (24) |

is a single trajectory of the vectorial noise process in  ,  for  and  the noise granularity. We remark that this is a discretization of a continuous noise, thus inducing an approximation, but it is in general the only possible approach. To reduce approximation errors in the SSAn, the maximum size of the jump in the noise realization, i.e. the noise granularity  , should be much smaller than the minimum autocorrelation time of the perturbing stochastic processes  .

Once the jump time τ has been determined, sample values for  are determined according to equation (22) in step 6, as is done in the SSA. This sampling is again numerical, and arbitrary precision can be obtained by properly generating the noise.

Both equations, as well as the numerical method to solve equation (21), are implemented in the SSAn implementation available in the free NoisySIM library [59], as discussed in the Results section.
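The two numerical ingredients of steps 5 and 6 can be illustrated with a minimal Python sketch. It is not the NoisySIM code: the function names, the argument layout and the choice of a closed-form solve inside each grid cell (instead of a Newton-Raphson iteration) are assumptions made for illustration. The total propensity is assumed to be sampled on a grid whose spacing `dt` plays the role of the noise granularity, and to be linearly interpolated in between, so that the trapezoid rule integrates it exactly.

```python
import math


def next_jump_time(intensity, dt, target):
    """Smallest tau such that the integral of the total propensity over
    [0, tau] equals `target` (in an SSAn-like loop, target = ln(1/r1)).

    `intensity` holds the total propensity sampled at 0, dt, 2*dt, ...
    and is linearly interpolated between samples (illustrative assumption).
    Returns None if the target is not reached on the sampled window.
    """
    area = 0.0
    for i in range(len(intensity) - 1):
        a, b = intensity[i], intensity[i + 1]
        cell = 0.5 * (a + b) * dt  # trapezoid: exact for a linear interpolant
        if area + cell >= target:
            rest = target - area  # area still missing inside this cell
            slope = (b - a) / dt
            # Solve a*s + slope*s**2/2 = rest for s in [0, dt], using the
            # cancellation-free form of the quadratic root.
            disc = max(a * a + 2.0 * slope * rest, 0.0)
            return i * dt + 2.0 * rest / (a + math.sqrt(disc))
        area += cell
    return None


def pick_reaction(propensities, r2):
    """Index of the firing reaction: smallest j whose cumulative
    propensity exceeds r2 times the total, evaluated at the jump time."""
    total = sum(propensities)
    acc = 0.0
    for j, a in enumerate(propensities):
        acc += a
        if acc > r2 * total:
            return j
    return len(propensities) - 1
```

With a constant total propensity the first function reduces to the familiar SSA formula, e.g. `next_jump_time([2.0] * 11, 0.1, 0.5)` gives a waiting time of about 0.25. In a full simulation the propensity samples would have to be regenerated after every jump, since the discrete state changes.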

Extension to non mass-action nonlinear kinetic laws

Large networks with large chemical concentrations, i.e. characterized by deterministic behaviors, are amenable to significant simplifications by means of the well-known Quasi Steady State Approximation (QSSA) [7], [57], [58], [79]. The validity conditions underlying these assumptions are well understood in the context of deterministic models [57], whereas much less is known for the corresponding stochastic models. Recently, Gillespie and coworkers [58] showed that, in the classical Michaelis-Menten Enzyme-Substrate-Product network, a kind of Stochastic QSSA (SQSSA) may be applied as well, and that its limitations are identical to those of the deterministic QSSA. Thus, it is of interest to consider SQSSAs also in our “doubly stochastic” setting, even though pitfalls may arise due to the presence of the extrinsic noises. As an example, in the Results section we present numerical experiments similar to those of [58], with the purpose of validating the SQSSA for noisy Michaelis-Menten enzymatic reactions.

Of course, in a SQSSA not only may the propensities be nonlinear functions of the state variables, but they may also depend nonlinearly on the perturbations, so that instead of the elementary perturbed propensities we shall have generalized perturbed propensities of the form

where  is a vector with elements  for  . This makes it possible, within the above-outlined limitations on the applicability of the SQSSA, to write a DCKE for these systems as

| (25) |

As far as the simulation algorithm is concerned, it remains quite close to Algorithm 2 (Table 3) provided that the jump times are sampled according to the following distribution

| (26) |

Results

We performed SSAn-based analyses of some simple biological networks, which are present in most complex realistic networks. We start by studying the legitimacy of the stochastic Michaelis-Menten approximation when noise affects enzyme kinetics [58]. Then we study the role of the co-presence of intrinsic and extrinsic bounded noises in a model of enzymatic futile cycle [33] and, finally, in a bistable “toggle switch” model of gene expression [24], [86]. All the simulations have been performed with a Java implementation of the SSAn, currently available within the free NoisySIM library [59].

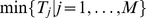

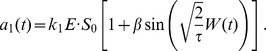

The Sine-Wiener noise [53]

The bounded noise  that we use in our simulations is obtained by applying a bounded continuous function  to a random walk  , i.e.  with  a white noise. We have

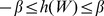

so that for some  it holds  . The effect of the truncation of the tails induced by the approach here illustrated is that, due to this “compression”, the stationary probability densities of this class of processes satisfy

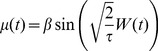

Probably the best studied bounded stochastic process obtained by using this approach is the so-called Sine-Wiener noise [53], that is

| (27) |

where  is the noise intensity and  is the autocorrelation time. The average and the variance of this noise are

and its autocorrelation is such that [53]

Note that, since we mean to use noises of the form  , i.e. the unitary-mean perturbations in equation (6), then the noise amplitude must be such that  .

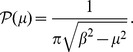

For this noise, the probability density is the following [89]

|

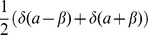

By these properties, this noise can be considered a realistic extension of the well-known symmetric dichotomous Markov noise  , whose stationary density is

, whose stationary density is  , for

, for  and

and  the Dirac delta function [80]. Finally, we remark that the white-noise process

the Dirac delta function [80]. Finally, we remark that the white-noise process  is generated at times

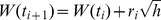

is generated at times  according to the recursive schema

according to the recursive schema  with initial condition

with initial condition  . Here

. Here  and

and  for

for  is its discretization step; it has to satisfy

is its discretization step; it has to satisfy  so we typically chose

so we typically chose  . Notice that the noise autocorrelation is expected to deeply impact on the simulation times.

. Notice that the noise autocorrelation is expected to deeply impact on the simulation times.
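As an illustration of the recursive generation just described, the following Python sketch produces one discretized Sine-Wiener trajectory. It assumes the commonly quoted form of this noise, B·sin(√(2/τ)·W(t)), with W a Wiener process advanced by independent Gaussian increments whose variance equals the step. The names `amplitude`, `tau` and `dt` are illustrative and are not taken from NoisySIM; the step is assumed to be small compared to the autocorrelation time.

```python
import math
import random


def sine_wiener_path(amplitude, tau, dt, n_steps, rng):
    """Sample amplitude*sin(sqrt(2/tau)*W(t)) at t = 0, dt, ..., n_steps*dt.

    W is advanced recursively as W(t+dt) = W(t) + sqrt(dt)*N(0,1),
    starting from W(0) = 0 (assumed initial condition).
    """
    scale = math.sqrt(2.0 / tau)
    w = 0.0
    path = [amplitude * math.sin(scale * w)]
    for _ in range(n_steps):
        w += math.sqrt(dt) * rng.gauss(0.0, 1.0)  # Wiener increment
        path.append(amplitude * math.sin(scale * w))
    return path


# Example: amplitude 0.5, autocorrelation time 1, step 0.01 (hypothetical values).
trajectory = sine_wiener_path(0.5, 1.0, 0.01, 1000, random.Random(0))
```

Whatever the realization of the underlying random walk, every sample stays within plus or minus the amplitude, which is what keeps a perturbed kinetic constant of the form "one plus noise" non-negative when the amplitude does not exceed one.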

Enzyme kinetics

Enzyme-catalyzed reactions are fundamental for life, and in deterministic chemical kinetics theories they are often conveniently represented in an approximated non mass-action form, the well-known Michaelis-Menten kinetics [7], [57], [58]. Such an approximation of the exact mass-action model is based on a Quasi Steady-State Assumption (QSSA) [57], [79], valid under some well-known conditions. The legitimacy of the Michaelis-Menten approximation of the Enzyme-Substrate-Product stochastic reaction kinetics is studied in [58]. Most importantly, it is shown there that such a stochastic approximation, i.e. the SQSSA of the previous section, obeys the same validity conditions as in the deterministic regime. This suggests that, in the case of low numbers of molecules, the Gillespie algorithm can legitimately be used not only for simulating mass-action kinetics but, more generally, for simulating more complex rate laws, once a simple conversion of deterministic Michaelis-Menten models is performed and provided, of course, that the SQSSA validity conditions are fulfilled.

In this section we investigate numerically whether the Michaelis-Menten approximation and the stochastic results obtained in [58] still hold when bounded stochastic noises perturb the kinetic constants of the propensities of the exact mass-action Enzyme-Substrate-Product system. Let E be an enzyme, S a substrate and P a product; the exact mass-action model of enzymatic reactions comprises the following three reactions

where  ,  and  are the kinetic constants. The network describes the transformation of substrate S into product P, as driven by the reversible formation of the enzyme-substrate complex ES.

The deterministic version of such reactions is

| (28) |

where we write  to distinguish the multiplication of E and S from complex ES. By the relations

| (29) |

a QSSA reduces the number of involved equations to one. Indeed, since ES is in quasi-steady-state, i.e.  , then

| (30) |

Here  is termed the Michaelis-Menten constant. In practice, the QSSA allows the three-reaction model to be reduced to the single-reaction model

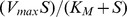

with non mass-action nonlinear rate  . In [58] the condition

| (31) |

is used to determine a region of the parameters space guaranteeing the legitimacy of the Michaelis-Menten approximation. When condition (31) holds, a separation exists between the fast pre-steady-state and the slower steady-state timescales [79] and the solution of the Michaelis-Menten approximation closely tracks the solution of the exact model on the slow timescale.
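The comparison between the exact and the reduced model can be sketched with a plain (noise-free) Gillespie simulation in Python. This is only an illustration: the rate constants and copy numbers below are hypothetical, not those of [58] or of this paper, and the names `K1`, `KM1`, `K2` follow the conventional textbook notation, in which the Michaelis-Menten constant is (k₋₁ + k₂)/k₁ and the reduced propensity is k₂·E_T·S/(K_M + S), with E_T the total enzyme count.

```python
import math
import random

# Hypothetical, dimensionless rate constants (not the values used in the paper).
K1, KM1, K2 = 0.01, 1.0, 1.0
E_TOTAL = 10
K_M = (KM1 + K2) / K1  # Michaelis-Menten constant

# Exact model, state [E, S, ES, P]: binding, unbinding, catalysis.
EXACT_STOICH = [[-1, -1, 1, 0], [1, 1, -1, 0], [1, 0, -1, 1]]
# Reduced model, state [S, P]: a single S -> P reaction.
MM_STOICH = [[-1, 1]]


def exact_propensities(x):
    e, s, es, _ = x
    return [K1 * e * s, KM1 * es, K2 * es]


def mm_propensities(x):
    s = x[0]
    return [K2 * E_TOTAL * s / (K_M + s)]


def gillespie(x0, stoich, propensities, t_end, rng):
    """Direct-method SSA; returns the state at time t_end (or at extinction)."""
    t, x = 0.0, list(x0)
    while True:
        a = propensities(x)
        a0 = 0.0
        for aj in a:
            a0 += aj
        if a0 <= 0.0:
            return x  # no reaction can fire any more
        t += -math.log(1.0 - rng.random()) / a0  # exponential waiting time
        if t > t_end:
            return x
        threshold, acc, j = rng.random() * a0, 0.0, 0
        for i, ai in enumerate(a):
            if ai > 0.0:
                j = i  # only reactions with positive propensity are eligible
                acc += ai
                if threshold < acc:
                    break
        x = [xi + d for xi, d in zip(x, stoich[j])]


rng = random.Random(1)
exact_state = gillespie([E_TOTAL, 100, 0, 0], EXACT_STOICH, exact_propensities, 5.0, rng)
reduced_state = gillespie([100, 0], MM_STOICH, mm_propensities, 5.0, rng)
```

Averaging the product count over many such runs of both models, at several time points, gives the kind of comparison discussed in this section. The two conservation laws (total enzyme and total substrate) offer a quick sanity check on the exact model.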

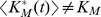

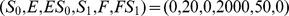

Here we show that the same condition is sufficient to legitimate the Michaelis-Menten approximation with bounded noises arbitrarily applied to any of the involved reactions. We start by recalling the result in [58] about the noise-free models given in Table 4. We considered two initial conditions:  one with

one with  copies of substrate,

copies of substrate,  enzyme and

enzyme and  complexes and products, and

complexes and products, and  one with

one with  copies of substrate,

copies of substrate,  enzyme and

enzyme and  complexes and products. As in [58] we set

complexes and products. As in [58] we set  and

and  ; notice that the parameters are dimensionless and, more important, in

; notice that the parameters are dimensionless and, more important, in  they satisfy condition (31) since

they satisfy condition (31) since  and

and  , in

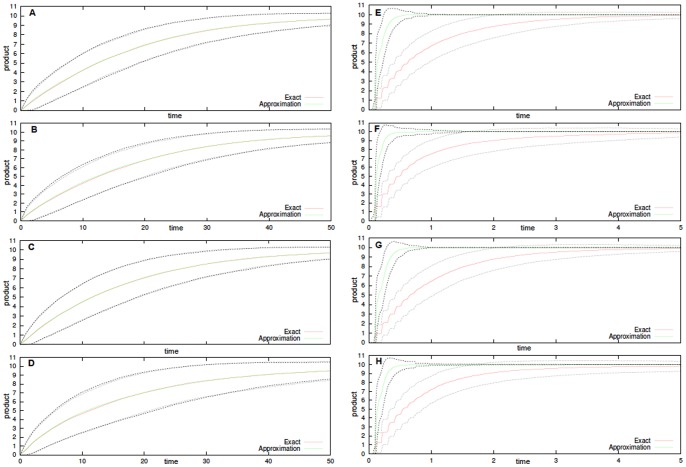

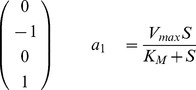

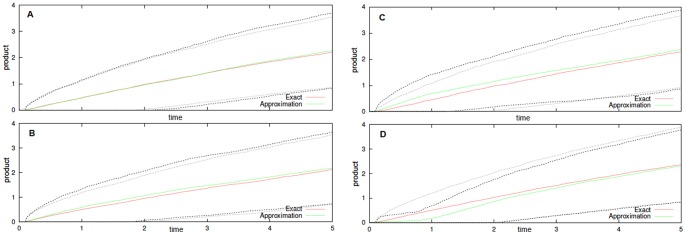

, in  no. In Figure 1 we reproduced the results in [58] for

no. In Figure 1 we reproduced the results in [58] for  in right panel and

in right panel and  in left. As expected, in

in left. As expected, in  the approximation is valid on the slow time-scale, and not valid in the fast, i.e. for

the approximation is valid on the slow time-scale, and not valid in the fast, i.e. for  , in

, in  it is not valid also in the slow time-scale.

it is not valid also in the slow time-scale.

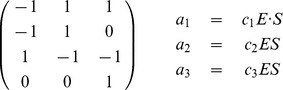

Table 4. Enzyme-Substrate-Product model.

|

|

Exact model (left) and Michaelis-Menten approximation (right) of enzymatic reactions: the stoichiometry matrices (rows in order  ,  ,  ,  ) and the propensity functions.

Figure 1. Noise-free Enzyme-Substrate-Product system.

Product formation (averages of  simulations, plotted with dotted standard deviation) for both exact and approximated Michaelis-Menten kinetics. We have set  and  ; the initial configuration is  in A and  in B.

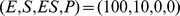

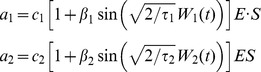

If noises are considered, the models in Table 4 change accordingly. For instance, when independent Sine-Wiener noises are applied to each reaction, the exact model becomes

|

and the Michaelis-Menten constant becomes the time-dependent function

|

Notice that the nonlinear approximated propensity  is now time-dependent and, moreover, it depends nonlinearly on the noises affecting the system.

Thus condition (31) becomes time-dependent and we rephrase it to be

| (32) |

Note that if  then  , whereas if  then  .

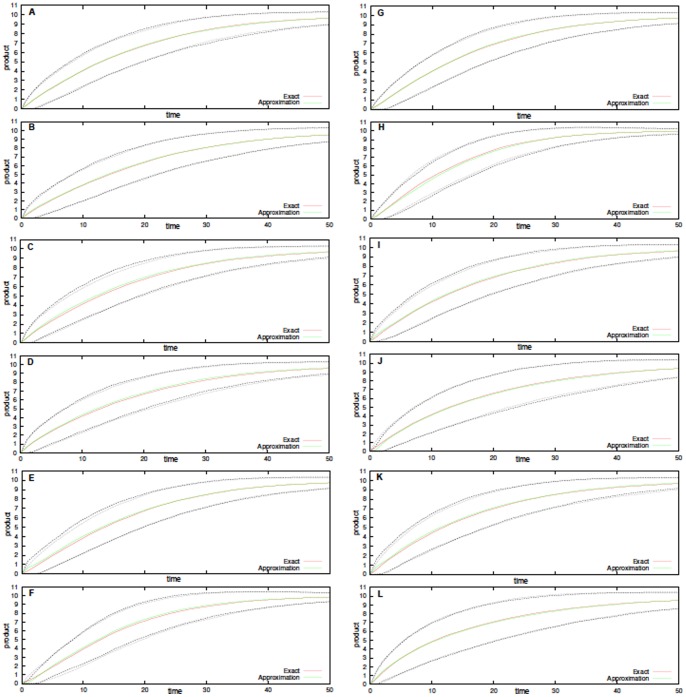

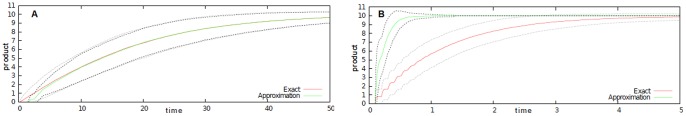

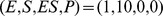

Each of the shown figures is the result of  simulations per model configuration; the simulation times, which range from a few seconds to a few minutes, depend on the noise correlation. When the same system of Figure 1 is extended with these noises the approximation is still valid, as shown in the top panels of Figure 2. In addition, the approximation is not valid when condition (32) does not hold, as shown in the bottom panels of Figure 2, as was the case in Figure 1. Notice that there we use two different noise correlations, i.e.  in the left and  for  in the right column panels, thus mimicking noise sources with quite different characteristic kinetics. Also, we set two different noise intensities, i.e.  in top panels and  (maximum intensity) in bottom panels, whereas all the other parameters are as in Figure 1. Summarizing, we get complete agreement between enzymatic reactions with and without noise, independently of the noise characteristics, when the noise affects all of the reactions.

Figure 2. Stochastically perturbed Enzyme-Substrate-Product system.

Product formation (averages of  simulations, plotted with dotted standard deviation) for both exact and approximated Michaelis-Menten kinetics. In A, B, C and D the initial configuration is  , in all other panels it is  . Independent Sine-Wiener noises are present in all the reactions. For  ,  in A, B, E and F, and  in all other panels. Also,  in A, C, E and G, and  in all other panels.

To strengthen this conclusion it becomes important to investigate whether it still holds when noises affect only a portion of the network and, also, whether it holds on the fast time-scale.

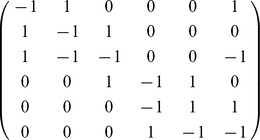

As far as the number of noises is concerned, we investigated various single-noise configurations in Figure 3. There we used a single noise, i.e. two out of the three noises have zero intensity, with both low and high intensities, i.e.  and  . Also, in that figure we vary the noise correlation time as  . As hoped, the simulations show that the approximation is legitimate on the slow time-scale for all the various parameter configurations, thus independently of the presence of single or multiple noises.

Figure 3. Stochastically perturbed Enzyme-Substrate-Product system.

Product formation (averages of  simulations, plotted with dotted standard deviation) for both exact and approximated Michaelis-Menten kinetics. In all panels the initial configuration is  . Here single Sine-Wiener noises of various intensities and autocorrelations are used. In A  and  , in B  and  , in C  and  , in D  and  , in E  and  , in F  and  , in G  and  , in H  and  , in I  and  , in J  and  , in K  and  and in L  and  . All other parameters are  .

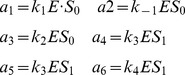

Finally, as far as the legitimacy of the approximation on the fast time-scale is concerned, i.e.  , our simulations show a result of interest: if the noise correlation is small compared to the reference fast time-scale and if single noises are considered, the noisy Michaelis-Menten approximation performs well also on the fast time-scale. We remark that this was not the case for the analogous noise-free scenario in Figure 1. In support of this we plot in Figure 4 the fast time-scale for  and  for the single-noise model with a noise in the enzyme-substrate complex formation, i.e.  . Similar evidence was found in the configurations plotted in Figure 3 (not shown).

Figure 4. Stochastically perturbed Enzyme-Substrate-Product system.

Product formation (averages of  simulations, plotted with dotted standard deviation) for both exact and approximated Michaelis-Menten kinetics in the fast time-scale  . In all panels the initial configuration is  . Here a single Sine-Wiener noise affects complex formation. In A  and  , in B  and  , in C  and  , in D  and  .

Futile cycles

In this section we consider a model of futile cycle, such as the one computationally studied in [33]. The model consists of the following mass-action reactions

where  and  are enzymes,  and  substrate molecules, and  and  the enzyme-substrate complexes. Futile cycles are a ubiquitous class of biochemical reactions, acting as a motif in many signal transduction pathways [81].

Experimental evidence has related the presence of enzymatic cycles to bimodalities in stochastic chemical activities [82]. As already seen in the previous section, Michaelis-Menten kinetics is not sufficient to describe such complex behaviors, and further enzymatic processes are often introduced to induce them. For instance, in deterministic models of enzymatic reactions feedbacks are necessary to induce bifurcations and oscillations. Instead, in [33] it is shown that, although the deterministic version of the model has a unique and attractive equilibrium state, stochastic fluctuations in the total number of  molecules may induce a transition from a unimodal to a bimodal behavior of the chemicals. This phenomenon was shown both by the analytical study of a continuous SDE model, where the random fluctuations in the total number of enzyme  (both free and as a complex with  ) are modeled by means of a white Gaussian noise, and in a totally stochastic setting. In the latter case the presence of a third molecule  interacting with enzyme  was assumed, according to the following reactions

By using  the stochastic model turns out to be both quantitatively and qualitatively different from the deterministic equivalent. These differences serve to confer additional functional modalities on the enzymatic futile cycle mechanism that include stochastic amplification and signaling, the characteristics of which depend on the noise.

Our aim here is to investigate whether bounded noises affecting the kinetic constants, and thus not modifying the topology of the futile cycle network, may as well induce a transition to bimodality in the system behavior. To this aim, we analyze three model configurations: (i) the noise-free futile cycle, namely only the first six reactions, (ii) the futile cycle with the external noise as given by  and (iii) the futile cycle with a bounded noise on the binding of  and  , i.e. the formation of  , where  is absent.

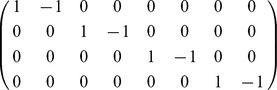

In Table 5 the noise-free futile cycle is given as a stoichiometry matrix and  mass-action reactions. The model simulated in [33] is obtained by extending the model in the table with a stoichiometry matrix containing

mass-action reactions. The model simulated in [33] is obtained by extending the model in the table with a stoichiometry matrix containing  and four more mass-action reactions. For the sake of shortening the presentation we omit to show them here. The model with a bounded noise in

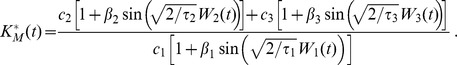

and four more mass-action reactions. For the sake of shortening the presentation we omit to show them here. The model with a bounded noise in  is obtained by defining

is obtained by defining

Table 5. Futile cycle model.

|

|

The noise-free enzymatic futile cycle [33]: the stoichiometry matrix (rows in order  ,  ,  ,  ,  ,  ) and the propensity functions.

|

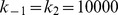

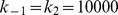

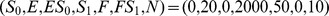

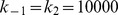

We simulated the above three models according to the initial condition used in [33]

which is extended to account for  initial molecules of  , when necessary. The kinetic parameters are dimensionless and defined as  ,  ,  ,  ,  for the noise-free and the bounded noise case, and  ,  and  when the unimodal noise is considered [33]. Furthermore, when the bounded noise is considered the autocorrelation is chosen as  according to the highest rate of the reactions generating the unimodal noise.

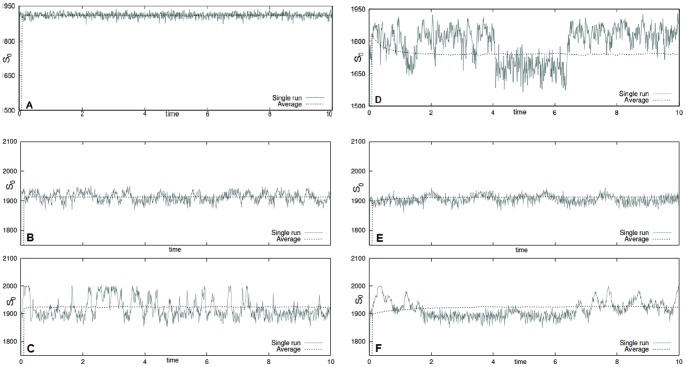

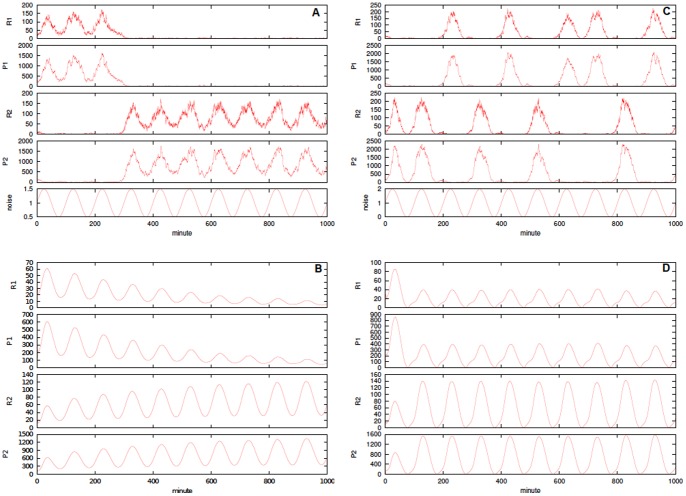

In Figure 5 a single run and averages of  simulations for the futile cycle models are shown. In this case the simulation times span in range from

simulations for the futile cycle models are shown. In this case the simulation times span in range from  to

to  , thus making the choice of good parameters more crucial than in the other cases. In Figure 5 the substrate

, thus making the choice of good parameters more crucial than in the other cases. In Figure 5 the substrate  is plotted, and

is plotted, and  behaves complementarily. In top panels the noise-free (top) and the cycle unimodal noise as

behaves complementarily. In top panels the noise-free (top) and the cycle unimodal noise as  (bottom). In bottom panels the cycle with bounded noise and autocorrelation

(bottom). In bottom panels the cycle with bounded noise and autocorrelation  in (left) and

in (left) and  in (right). In both cases in the top panel the noise intensity is

in (right). In both cases in the top panel the noise intensity is  (top) and

(top) and  (bottom). The initial configuration is always

(bottom). The initial configuration is always  and the kinetic parameters are

and the kinetic parameters are  ,

,  ,

,  ,

,  ,

,  for the noise-free and the bounded noise case, and

for the noise-free and the bounded noise case, and  ,

,  and

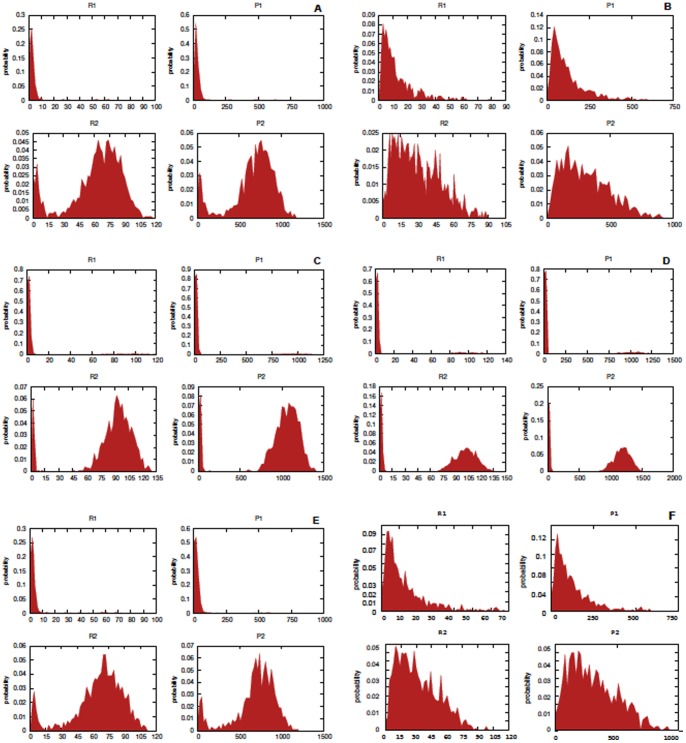

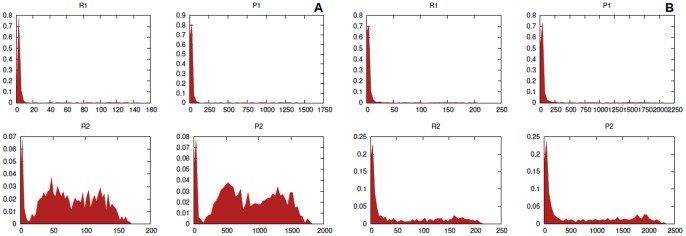

and  [33]. We also show in Figure 6 the empirical probability density function for the concentration of

[33]. We also show in Figure 6 the empirical probability density function for the concentration of  , i.e.

, i.e.  given the considered initial configuration, at

given the considered initial configuration, at  after

after  simulations for the futile cycle models with the parameter configurations considered in Figure 5. The analysis of such distributions outline that for the noise-free system the distributions are clearly unimodal, whereas for noisy futile cycle, in both cases, they are bi-modal. Moreover, it is important to notice that the smallest peak of the distribution, i.e. the rightmost, has a bigger variance when

simulations for the futile cycle models with the parameter configurations considered in Figure 5. The analysis of such distributions outline that for the noise-free system the distributions are clearly unimodal, whereas for noisy futile cycle, in both cases, they are bi-modal. Moreover, it is important to notice that the smallest peak of the distribution, i.e. the rightmost, has a bigger variance when  is considered, rather than when a bounded noise is considered.

is considered, rather than when a bounded noise is considered.

Figure 5. Stochastic models of futile cycles.

Single run and averages of  simulations for substrate  of the futile cycle models. In panel A the noise-free futile cycle and in panel D the extended noise-free model including the additional species  . In bottom plots the cycle affected by bounded Sine-Wiener noise with: in B  and  , in C  and  , in E  and  , in F  and  . The initial configuration is always  ; the kinetic parameters are  ,  ,  ,  and  (noise-free and the bounded noise case), and  ,  and  [33].

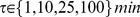

Figure 6. Stochastic models of futile cycles.

Empirical probability density function for  at  after  simulations for the futile cycle models with the parameter configurations considered in Figure 5. In panel A the noise-free cycle, in B the cycle affected by sine-Wiener noise with  and  , in C the noise-free modified cycle including the additional species  . In bottom panels the cycle affected by sine-Wiener noise with: in D  and  , in E  and  and in F  and  .

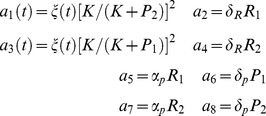

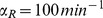

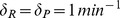

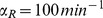

Bistable kinetics of gene expression

Let us consider a model by Zhdanov [24], [86] where two genes  and  , two RNAs  and  and two proteins  and  are considered. In such a model synthesis and degradation correspond to

Such a reaction scheme is a genetic toggle switch if the formation of  and  is suppressed by  and  , respectively [18], [25], [83]–[85]. Zhdanov further simplifies the scheme by considering kinetically equivalent genes, and by assuming that the mRNA synthesis occurs only if  regulatory sites of either  or  are free. The deterministic model of the simplified switch when synthesis is perturbed is

| (33) |

where the perturbation is

Here  ,  ,  and  are the rate constants of the reactions involved, the term  is the probability that  regulatory sites are free and  is the association constant for protein  . Notice that here perturbations are given in terms of a time-dependent kinetic function for synthesis, rather than a stochastic differential equation. Before introducing a realistic noise in place of the perturbation we perform some analysis of this model. As in [86], we recast model (33) in a stochastic framework by defining the reactions described in Table 6. Notice that two of these reactions, i.e.  and  , which model synthesis, have a time-dependent propensity function.

Table 6. Toggle switch model.

The bistable model of gene expression in [86]: the stoichiometry matrix (rows in order  ,  ,  ,  ) and the propensity functions.

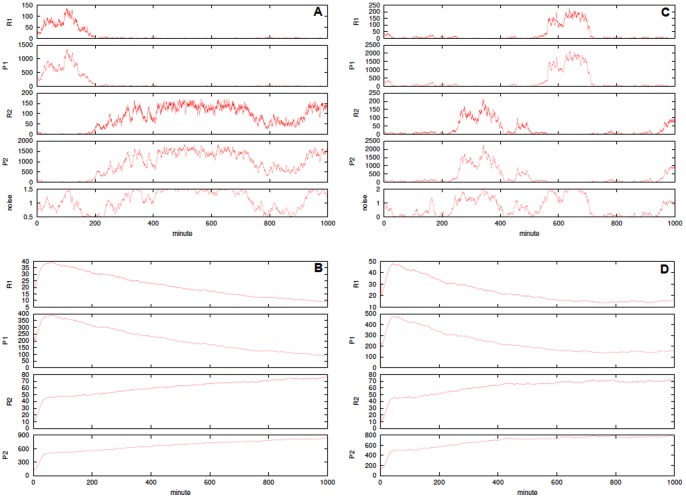

In the top panels of Figure 7 we show single runs for the Zhdanov model, where simulations are performed with the exact SSA with time-dependent propensity functions. In [86] an exact SSA [39] is used to simulate the model under the assumption that variations in the propensity functions are slow between two stochastic jumps. This is true for  as in [86], but not true in general for small values of  . We considered an initial configuration with only  RNAs  . As in [86] we set  ,  ,  ,  and  ; notice that these parameters are realistic since, for instance, protein and mRNA degradation usually occur on the minute time-scale [87]. We considered two possible noise intensities, i.e.  on the left and  on the right and, as expected, when  increases the number of switches increases. To investigate this model in more depth we performed  simulations for both configurations. In the bottom panels of Figure 7 the averages of the simulations are shown. The average of our simulations shows a higher expression of protein  than of  , for both values of  , with damped oscillations for  and almost persistent oscillations for  .
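When a propensity depends explicitly on time, one generic way to sample jump times without assuming slow variation between jumps is thinning: candidate events are drawn at a constant rate that bounds the total propensity, and each candidate is accepted as a reaction with probability proportional to the actual propensities at the candidate time. The sketch below applies this idea to a toy birth-death process with sinusoidally modulated synthesis. It is neither the Zhdanov model nor the algorithm used in this paper, and the function name and all parameter values are hypothetical.

```python
import math
import random


def simulate_birth_death(k0, a, period, d, n0, t_end, seed=0):
    """Thinning-based simulation of a birth-death process.

    Synthesis propensity: k0 * (1 + a * sin(2*pi*t/period)), with |a| <= 1.
    Degradation propensity: d * n.
    Returns the jump times and the copy number after each jump.
    """
    rng = random.Random(seed)
    t, n = 0.0, n0
    times, counts = [0.0], [n0]
    k_max = k0 * (1.0 + abs(a))  # upper bound on the synthesis propensity
    while True:
        bound = k_max + d * n  # bounds the total propensity while n is unchanged
        t += rng.expovariate(bound)  # candidate event time
        if t >= t_end:
            break
        birth = k0 * (1.0 + a * math.sin(2.0 * math.pi * t / period))
        death = d * n
        u = rng.random() * bound
        if u < birth:
            n += 1
        elif u < birth + death:
            n -= 1
        else:
            continue  # rejected candidate: the state does not change
        times.append(t)
        counts.append(n)
    return times, counts


times, counts = simulate_birth_death(
    k0=10.0, a=0.5, period=5.0, d=0.1, n0=0, t_end=50.0, seed=1
)
```

The price of exactness is the rejected candidates: the tighter the bound on the time-dependent propensity, the fewer random numbers are wasted.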

Figure 7. Periodically perturbed toggle switch.

In the top panels a single run for Zhdanov model (33) with  (A) and  (C). In bottom plots averages of  simulations are shown with  (B) and  (D). In all cases  ,  ,  ,  and  and the initial configuration is  . The noise realization is plotted for the single runs.

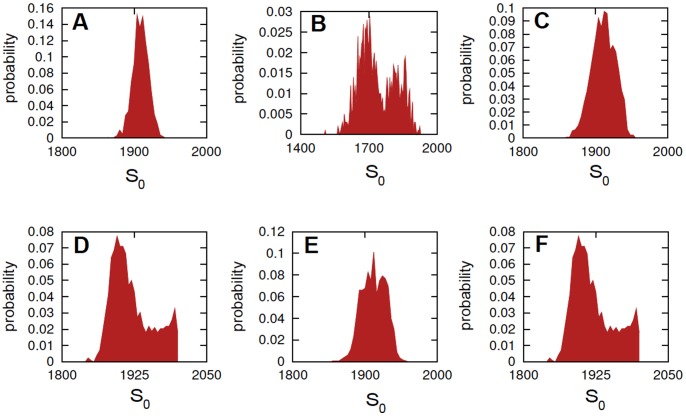

In Figure 8 we plot the empirical probability density function of the species concentrations, i.e.  given the considered initial configuration, at  as obtained by  simulations. Interestingly, these bi-modal probability distributions immediately show the presence of stochastic bifurcations in the more expressed populations  and  . In addition, the distributions for the protein seem to oscillate with a period of about  , i.e. for  they are unimodal at  and bi-modal at  .

Figure 8. Periodically perturbed toggle switch.

Empirical probability density function at various times, after  simulations for Zhdanov model with the parameter configurations considered in Figure 7. In A  and  , in B  and  , in C  and  , in D  and  , in E  and  and in F  and  .

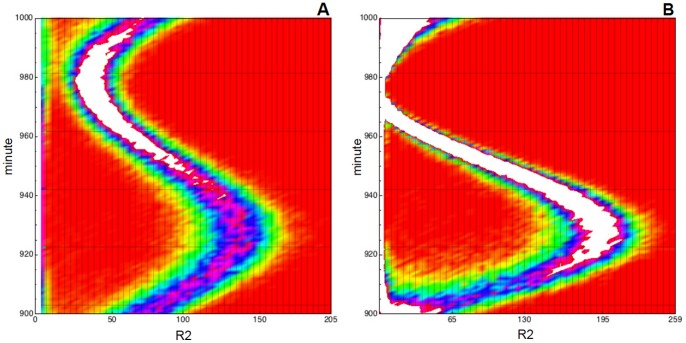

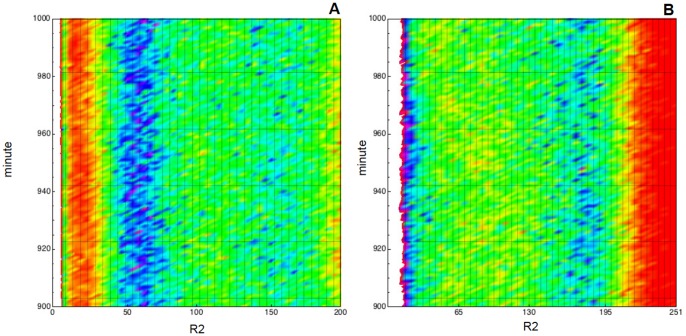

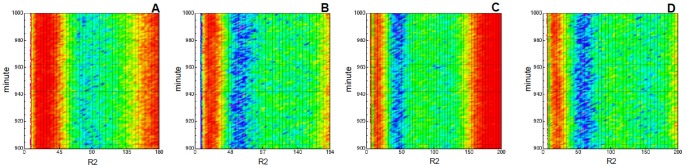

To confirm this hypothesis, in Figure 9 the probability density function of  is plotted against time, i.e. the probability of being in state  at time  , for any reachable state  and time  . There we plot a heatmap with time on the  -axis and protein concentration on the  -axis; in the figure the lighter gradient denotes higher probability values. Clearly, this figure shows the oscillatory behavior of the probability distributions for both values of  and, more importantly, explains the uni-modality of the distribution at  and  with  , i.e. the higher variance of the rightmost peak at  makes the two modes collapse. Finally, although we do not show it, as one should expect the oscillations of the probability distribution, which are caused by the presence of a sinusoidal perturbation in the parameters, are present and periodic over the whole time window  .
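A time-resolved distribution of this kind can be assembled by repeating the fixed-time histogram on a grid of times. The sketch below is only an illustration under stated assumptions: runs are stored as piecewise-constant paths (sorted jump times plus the state after each jump), states are small non-negative integers, and the function name `distribution_over_time` and the toy data are hypothetical.

```python
from bisect import bisect_right


def distribution_over_time(runs, t_grid, n_states):
    """Return P with P[x][j] = fraction of runs in state x at time t_grid[j]."""
    P = [[0.0] * len(t_grid) for _ in range(n_states)]
    weight = 1.0 / len(runs)
    for times, states in runs:
        for j, t in enumerate(t_grid):
            x = states[bisect_right(times, t) - 1]  # state held at time t
            P[x][j] += weight
    return P


# Toy ensemble (hypothetical data): each run is (jump times, state after each jump).
runs = [
    ([0.0, 1.0, 2.5], [0, 1, 2]),
    ([0.0, 0.5], [0, 1]),
    ([0.0, 3.0], [0, 1]),
]
t_grid = [0.0, 1.0, 2.0, 3.0]
P = distribution_over_time(runs, t_grid, n_states=3)
```

Each column of `P` is the empirical distribution at one time point, so plotting `P` as an image gives a heatmap in which oscillations of the distribution appear as periodic bands.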

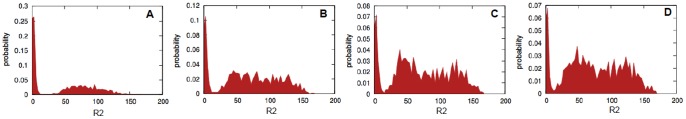

Figure 9. Periodically perturbed toggle switch.

Empirical probability density function for  plotted against time, i.e. the probability of being in any reachable state  for  . Lighter gradient denotes higher probability values. We used data collected with  simulations of model (33) where  and two perturbation intensities are used,  in A and  in B. On the  -axis the species amount is represented, on the  -axis the time (in minutes) is given.

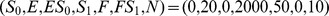

Bounded noises

We investigated the effect of a Sine-Wiener noise affecting protein synthesis rather than a perturbation, i.e. a new  is considered

|

with  a Wiener process. On the one hand, we compared the periodic perturbation proposed by Zhdanov with the sine-Wiener noise because they share three important features: (i) the finite amplitude of the perturbation, (ii) a well-defined time-scale (the period for the sinusoidal perturbation, and the autocorrelation time for the bounded noise), (iii) the sinusoidal nature (in one case the sine is applied to a linear function of time, in the other case it is applied to a random walk). On the other hand, especially in control and radio engineering, sinusoidal perturbations are a classical means to represent external bounded disturbances.
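To make the "sine applied to a random walk" construction concrete, the following minimal sketch samples a sine-Wiener path on a regular time grid. It assumes the usual definition from the bounded-noise literature, `xi(t) = B * sin(sqrt(2 / tau) * W(t))`, with `B` the amplitude, `tau` the autocorrelation time and `W` a standard Wiener process; the symbol names, the function name `sine_wiener_path` and the parameter values are chosen here for illustration only.

```python
import math
import random


def sine_wiener_path(B, tau, dt, n_steps, seed=0):
    """Sample xi(t) = B * sin(sqrt(2/tau) * W(t)) at times 0, dt, 2*dt, ..."""
    rng = random.Random(seed)
    scale = math.sqrt(2.0 / tau)
    w = 0.0  # Wiener process, W(0) = 0
    xi = [0.0]  # xi(0) = B * sin(0) = 0
    for _ in range(n_steps):
        w += rng.gauss(0.0, math.sqrt(dt))  # independent increment with variance dt
        xi.append(B * math.sin(scale * w))
    return xi


xi = sine_wiener_path(B=0.5, tau=10.0, dt=0.01, n_steps=1000, seed=42)
```

By construction every sample lies in `[-B, B]`, which is the boundedness property that distinguishes this noise from a Gaussian one; larger values of `tau` make the path vary more slowly.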

Here simulations are performed by using the SSAn where the reactions in Table 6 are left unchanged, and the propensity functions  and  are modified to

To compare the simulations with those in Figures 7, 8 and 9, we used the same initial condition and the same values for  ,  ,  ,  and  . To make the comparison between a realistic noise and the original perturbation meaningful, we simulated the system with the same values, i.e. the noise intensity  on the left and  on the right of the top panels in Figure 10, and in both cases  . As expected, in this case the trajectories are more scattered than those in Figure 7, and the switches are still present. However, for maximum noise intensity  time-slots emerge where the stochastic system predicts a more complex outcome of the interaction. In fact, for  neither protein  nor