Abstract

Previous studies have shown that early posterior components of event-related potentials (ERPs) are modulated by facial expressions. The goal of the current study was to investigate individual differences in the recognition of facial expressions by examining the relationship between ERP components and the discrimination of facial expressions. Pictures of 3 facial expressions (angry, happy, and neutral) were presented to 36 young adults during ERP recording. Participants were asked to respond with a button press as soon as they recognized the expression depicted. Multiple regression analyses, with ERP components as predictor variables, were conducted with hits and reaction times in response to the facial expressions as dependent variables. The N170 amplitudes significantly predicted the accuracy for angry and happy expressions, and the N170 latencies predicted the accuracy for neutral expressions. The P2 amplitudes significantly predicted reaction times. The P2 latencies significantly predicted reaction times only for neutral faces. These results suggest that individual differences in the recognition of facial expressions emerge from early components in visual processing.

Introduction

The goal of the current study was to investigate individual differences in neural activity related to recognition of facial expressions. Some people are good at recognizing facial expressions, but others are not. Previous studies have suggested that difficulty in recognizing facial expressions is not limited to individuals with brain damage [1] or schizophrenia [2]; recognition also differs among healthy adults. For example, age [3], gender [4], and personality and mental states [5] affect recognition of facial expressions. However, the neural basis of these individual differences has not been clarified. The current study examined the role of individual differences in neural activity in the recognition of facial expressions.

Face perception differs from the perception of other objects and has been investigated extensively [6]. One functional magnetic resonance imaging (fMRI) study found that an area within the fusiform gyrus, implicated in face perception, responds more to faces than to other objects [7]. fMRI has been used extensively to examine differences in the localization of activation to face and nonface stimuli [8]. An integrative study using fMRI, electroencephalography and magnetoencephalography showed similar activation to face stimuli [9]. Event-related potential (ERP) studies have investigated a signal that is sensitive to face processing, known as N170. N170 is a large, posterior negative deflection that follows the visual presentation of a picture of a face, peaking at right occipitotemporal sites at around 170 ms [10]. Previous studies have shown that N170 is modulated by various factors, including inversion [11], contrast [12], and emotional expressions [13], [14]. Past studies showed that N170 is also modulated by certain disease states [15]–[17]. For example, individuals with schizophrenia are impaired in their ability to accurately perceive facial expressions, and they display significantly smaller N170 amplitudes [18].

P2, a component that peaks at occipital sites at around 220 ms, is thought to reflect deeper processing of stimuli [19]. One previous study reported that, compared to healthy adults, individuals with schizophrenia also had increased P2 amplitudes [18].

There is also evidence that both N170 and P2 are modulated by expert object learning [19]. For example, an enhanced N170 was observed when bird and dog experts categorized objects in their domain of expertise relative to when they categorized objects outside their domain of expertise [20].

Given that N170 and P2 are modulated by expertise and learning, these components should be indices of individual differences in the recognition of facial expressions. Some studies reported correlations between behavioral measures and ERPs elicited by face stimuli [21]–[23]. However, the neural basis of individual differences in recognizing facial expressions has not been clarified. The aim of the current study was to investigate individual differences in neural activity related to the recognition of facial expressions. We examined whether facial expression discrimination was associated with early ERP components: N170 and P2.

Method

Ethics Statement: Written informed consent was obtained from each participant prior to the experiment. The ethics committee of The University of Tokyo approved this study.

Participants: Participants were recruited from local Japanese universities. Thirty-three healthy, right-handed paid volunteers (17 females and 16 males, aged 18–31 years, mean age = 22.3 years) participated in the experiment.

Stimuli: Stimuli consisted of photographs of facial expressions (2 females and 2 males posing angry, happy and neutral expressions) taken from the ATR Facial Expression Image Database (DB99). The database contains 4 female and 6 male models, with 3 pictures of each model posing each facial expression. The database also contains the results of an unpublished preliminary experiment conducted to confirm the validity of the database (see Supporting Information, Text S1). All stimuli were gray-scale pictures.

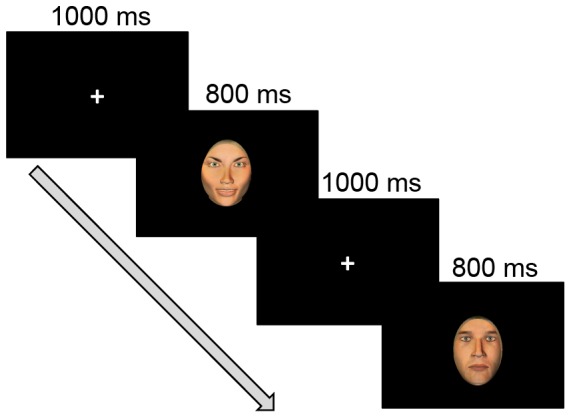

Procedure: The protocol consisted of four blocks. Each block contained 120 facial stimuli, 40 photographs of each expression. The stimuli were presented in random order. All photographs were 5.5×8 cm. The mean luminance was equal across stimuli. Stimuli were presented on a black background on a 17″ CRT computer screen (EIZO FlexScan F520) at a viewing distance of 80 cm for 800 ms with an ISI of 1000 ms (see Fig. 1). Participants were told that they would be shown a series of photographs and were asked to respond to each facial expression by pressing certain keys. Before the actual experiment, participants practiced the task with a short training block that included stimulus examples not present during the experimental blocks. It took about 30 minutes to complete all tasks including short breaks.

Figure 1. Illustration of the stimulus-presentation procedure used in the current experiment.

Each trial started with a fixation followed by a facial expression. The images of faces shown here do not depict the actual stimuli but are intended only as examples.

EEG recording and data analysis: EEG was recorded from 65 electrodes with a Geodesic Sensor Net [24], sampled at 250 Hz with a 100 Hz low-pass filter. Electrode impedance was below 100 kΩ. The EEG recording system uses a high-input-impedance amplifier. The original study using the system [24] shows that with electrode impedance around 80 kΩ, the EEG data are still clear. Before recording, the experimenter carefully checked the waveform on a screen. All recordings were initially referenced to the vertex and, later, digitally re-referenced to the average reference. In the off-line analysis, a 0.1–30 Hz band-pass filter was reapplied. All data were segmented into 800 ms epochs, including a 100 ms pre-stimulus baseline period, based on time markers for stimulus onset. Segments free of eye movements and blinks, and with amplitudes below 75 µV in every channel, were baseline-corrected and analyzed. Each component was measured at the area where the amplitude was maximal (see Fig. 2). N170 was measured at electrodes P7 and P8 [10], [13]. P2 was measured at electrodes O1 and O2 [18], [19], [25]. Latencies were taken where the amplitude was maximal over each hemisphere, and amplitudes were measured at this latency. Peak amplitudes for each component were analyzed to compare the current results directly with relevant previous studies [15], [17], [19], [22]. A Greenhouse-Geisser adjustment of degrees of freedom, as well as a Bonferroni correction, was used when necessary.
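The peak measurement described above (latency taken where the amplitude is maximal, amplitude read at that latency) can be sketched as follows. This is only an illustration on a synthetic waveform: the function name `peak_in_window` and the search windows (130–200 ms for N170, 180–280 ms for P2) are assumptions, since the text does not state the windows that were used.

```python
import numpy as np

def peak_in_window(erp, times_ms, window_ms, polarity):
    """Return (latency_ms, amplitude) of the most extreme sample in a window.

    erp       : 1-D baseline-corrected average waveform at one electrode
    times_ms  : 1-D array of sample times in ms, same length as erp
    window_ms : (start, end) search window in ms, inclusive (assumed values)
    polarity  : -1 for a negative component (N170), +1 for a positive one (P2)
    """
    erp = np.asarray(erp, dtype=float)
    times_ms = np.asarray(times_ms, dtype=float)
    mask = (times_ms >= window_ms[0]) & (times_ms <= window_ms[1])
    if not mask.any():
        raise ValueError("window contains no samples")
    idx = np.argmax(polarity * erp[mask])  # largest deflection of the given sign
    return times_ms[mask][idx], erp[mask][idx]

# 250 Hz sampling gives one sample every 4 ms; the 800 ms epoch starts at -100 ms.
times = np.arange(-100, 700, 4.0)

# Synthetic waveform (not real data): a negative peak near 168 ms, a positive one near 220 ms.
wave = (-5.0 * np.exp(-((times - 168) / 15) ** 2)
        + 4.0 * np.exp(-((times - 220) / 20) ** 2))
wave = wave - wave[times < 0].mean()  # baseline correction on the pre-stimulus interval

n170_latency, n170_amplitude = peak_in_window(wave, times, (130, 200), polarity=-1)
p2_latency, p2_amplitude = peak_in_window(wave, times, (180, 280), polarity=+1)
print(n170_latency, n170_amplitude, p2_latency, p2_amplitude)
```

In practice the function would be applied to each participant's average waveform for each expression and electrode (P7/P8 for N170, O1/O2 for P2).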

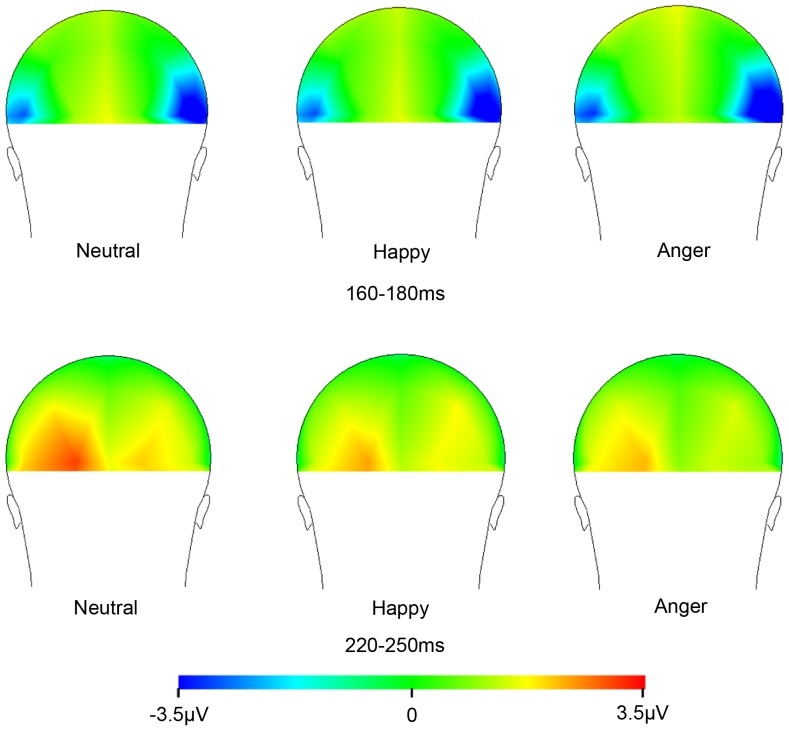

Figure 2. Topographical maps of N170 and P2 window for facial stimuli.

Maps from a back view perspective. Negativity is shown in blue.

Effect size was computed because it is recommended to include some index of effect size so that the reader fully understands the importance of the result [26], [27].
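The effect size reported in the Results is partial eta squared (ηp²). As a quick check, it can be recovered from an F value and its degrees of freedom through the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The helper name below is hypothetical, and the inputs are F statistics reported in the Results.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Hemisphere effect on N170 amplitude: F(1, 35) = 13.5
hemisphere_effect = round(partial_eta_squared(13.5, 1, 35), 2)
# Emotion effect on P2 latency: F(2, 70) = 3.5
p2_latency_effect = round(partial_eta_squared(3.5, 2, 70), 2)
print(hemisphere_effect, p2_latency_effect)  # 0.28 0.09
```

Both values agree with the ηp² figures given alongside those F statistics.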

Results

Behavioral: Trials with reaction times more than 2 SD from the mean were excluded from the analyses. Participants showed greater accuracy in recognizing angry faces (85%) than happy faces (80%; F (1.5, 52.8) = 4.9, p = .018, ηp² = .12; MS = 72.2, df = 49.8, p<.001). Other comparisons were not significant (neutral and angry faces, p = 1.0; happy and neutral faces, p = .062). No significant effects were observed regarding reaction time (angry = 586 ms, happy = 597 ms, and neutral = 592 ms; F (2, 68) = 2.3, p = .106, ηp² = .06; MS = 543.7, df = 68; neutral and angry faces, p = 1.0; happy and neutral faces, p = .344; happy and angry faces, p = .058).
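The reaction-time exclusion rule can be illustrated with a short sketch. It assumes that the mean and SD are computed over the set of trials passed in (for example, one participant's trials); the text does not say at which level the rule was applied, and the function name and the reaction times below are made up for illustration.

```python
import numpy as np

def trim_reaction_times(rts_ms, n_sd=2.0):
    """Keep only reaction times within n_sd sample SDs of the mean."""
    rts = np.asarray(rts_ms, dtype=float)
    mean, sd = rts.mean(), rts.std(ddof=1)
    keep = np.abs(rts - mean) <= n_sd * sd
    return rts[keep]

# Hypothetical reaction times (ms) containing one very slow response
example_rts = [560, 575, 590, 600, 610, 585, 595, 1400]
trimmed_rts = trim_reaction_times(example_rts)
print(len(example_rts), len(trimmed_rts))  # 8 7
```

Here only the 1400 ms trial lies more than 2 SD from the mean and is dropped.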

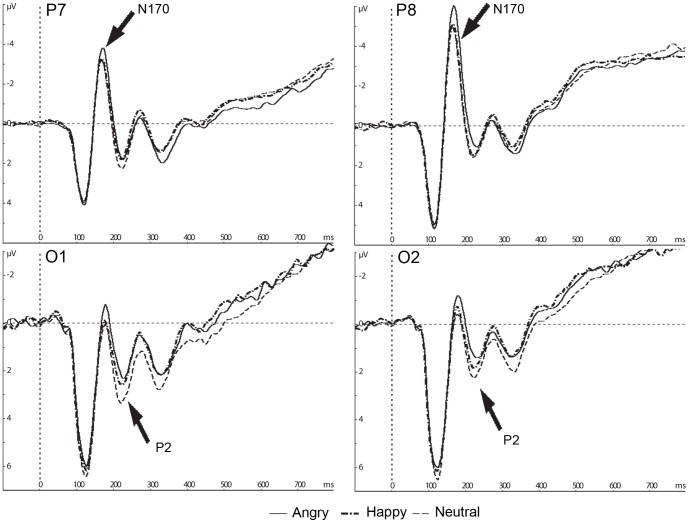

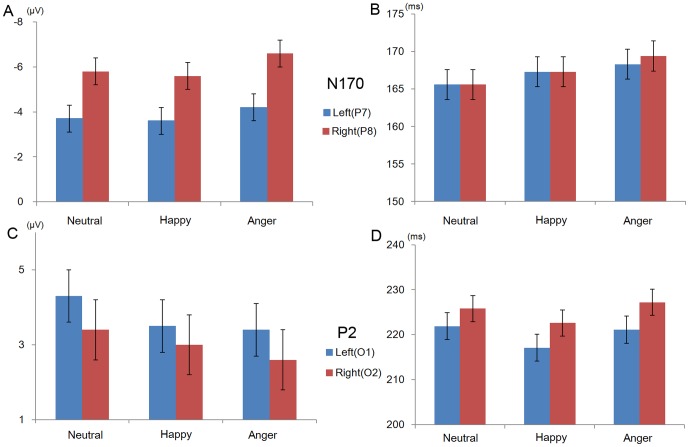

ERP: Grand averaged waveforms and topography of ERPs are illustrated in Figures 2 and 3. Figure 4 and Table 1 show means and standard errors of ERPs. The average number of noise-free trials included in the grand average per participant was 407 (136, 135 and 136 trials for angry, happy and neutral expressions, respectively). N170 amplitudes varied with emotion (F (1.6, 56.7) = 13.6, p<.001, ηp² = .28) and hemisphere (F (1, 35) = 13.5, p<.001, ηp² = .28). N170 amplitudes for angry faces were larger than those for happy and neutral faces (MS = 1.2, df = 56.68, p<.001; p<.001, respectively). A comparison between happy and neutral faces was not significant (p = .34). N170 amplitudes were also larger over the right hemisphere than over the left hemisphere.

Figure 3. Grand average ERP waveforms of N170 and P2 amplitudes.

Electrode sites P7 and P8 for N170, and O1 and O2 for P2, are displayed. Negative amplitudes are plotted upward.

Figure 4. Mean and standard error of ERP components elicited by facial expressions.

(A) Amplitudes of N170. Negative amplitudes are plotted upward. (B) Latencies of N170. (C) Amplitudes of P2. Positive amplitudes are plotted upward. (D) Latencies of P2.

Table 1. Mean and standard error of ERP components elicited by facial expressions.

Values are means, with standard errors in parentheses.

| Expression | N170 amplitude (µV), left (P7) | N170 amplitude (µV), right (P8) | N170 latency (ms), left (P7) | N170 latency (ms), right (P8) | P2 amplitude (µV), left (O1) | P2 amplitude (µV), right (O2) | P2 latency (ms), left (O1) | P2 latency (ms), right (O2) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Neutral | −3.6 (0.6) | −5.6 (0.6) | 165.6 (2.1) | 165.6 (1.9) | 4.3 (0.7) | 3.4 (0.8) | 221.9 (2.8) | 225.8 (2.8) |
| Happy | −3.7 (0.6) | −5.8 (0.6) | 167.2 (2.2) | 167.3 (2.0) | 3.5 (0.7) | 3.0 (0.8) | 217.1 (3.0) | 222.6 (2.9) |
| Angry | −4.2 (0.6) | −6.6 (0.7) | 168.3 (1.6) | 169.4 (2.0) | 3.4 (0.8) | 2.6 (0.8) | 221.1 (3.0) | 227.2 (3.2) |

An effect of emotion was seen on N170 latencies (F (2, 70) = 11.3, p<.001, ηp² = .24). N170 latencies for neutral faces were shorter than for angry faces (MS = 17.67, df = 70, p<.001). Other comparisons were not significant (happy and angry faces, p = .12; happy and neutral faces, p = .12).

For P2 amplitudes, there was an effect of emotion (F (2, 70) = 12.1, p<.001, ηp² = .26), with larger amplitudes elicited by neutral faces than by happy and angry faces (MS = 1.02, df = 70, p<.001; p<.001, respectively). A comparison between happy and angry faces was not significant (p = .72).

P2 latency data also showed an effect of emotion (F (2, 70) = 3.5, p = .035, ηp² = .09); however, post-hoc analyses did not reveal significant differences between any two emotions (happy and angry faces, MS = 119.1, df = 70, p = .07; happy and neutral faces, p = .177; angry and neutral faces, p = 1.0).

To examine the relationship between neural activity and recognition of facial expressions, stepwise multiple regression analyses were conducted. For each of six dependent measures (accuracy rate and reaction time for each facial expression), the amplitudes and latencies of ERPs were entered as predictors. This analysis allows unbiased, hypothesis-free comparisons of task-related activation patterns [28].
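A stepwise procedure of this kind can be sketched as forward selection over the ERP predictors. The entry criterion used in the study is not reported, so the sketch below substitutes a simple assumption: a predictor enters only if it raises R² by at least `min_gain`. The function names, the threshold, and the synthetic data are all illustrative and do not reproduce the actual analysis.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an ordinary least-squares fit of y on the columns of X plus an intercept."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ coef
    return 1.0 - (residuals @ residuals) / ((y - y.mean()) ** 2).sum()

def forward_select(X, y, names, min_gain=0.05):
    """Add, one at a time, the predictor giving the largest R^2 gain.

    Stops when the best remaining gain falls below min_gain (an assumed
    criterion standing in for the unreported entry rule).
    """
    selected, best_r2 = [], 0.0
    remaining = list(range(X.shape[1]))
    while remaining:
        gains = {j: r_squared(X[:, selected + [j]], y) - best_r2 for j in remaining}
        j_best = max(gains, key=gains.get)
        if gains[j_best] < min_gain:
            break
        selected.append(j_best)
        remaining.remove(j_best)
        best_r2 += gains[j_best]
    return [names[j] for j in selected], best_r2

# Synthetic data: 36 rows, four ERP measures; accuracy depends on the first one only.
rng = np.random.default_rng(0)
names = ["N170 amplitude", "N170 latency", "P2 amplitude", "P2 latency"]
X = rng.normal(size=(36, 4))
accuracy = 80.0 - 1.5 * X[:, 0] + rng.normal(scale=0.1, size=36)

selected, r2 = forward_select(X, accuracy, names)
print(selected, round(r2, 3))
```

Statistical packages typically use an F-to-enter or p-value rule rather than a fixed R² gain; the structure of the loop is the same.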

For two reasons, only N170 components over the right hemisphere were analyzed. First, consistent with previous studies [10], [12], N170 amplitudes were larger over right hemisphere sites than over left hemisphere sites. One fMRI study found greater activation in the right than in the left fusiform gyrus when viewing facial expressions [7]. Past studies showed that face stimuli evoked a larger N170 over the right hemisphere [10], [12], [19], [23], [29], although a report of no significant hemispheric difference also exists [30]. Second, there was no clear difference in the coefficient of determination between a model that included both hemispheres and a model that only included the right hemisphere. In contrast to N170, there was no effect of hemisphere on P2 components, so we analyzed P2 over both hemispheres.

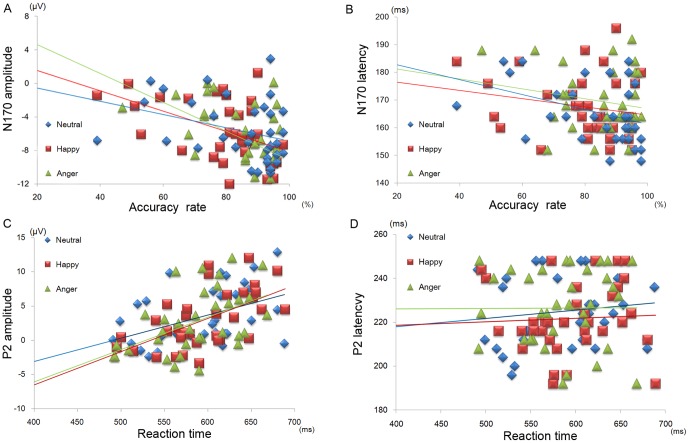

Figure 5 shows the association between ERP components and the behavioral data. The N170 amplitudes were significant predictors of accuracy for angry and happy expressions, and N170 latencies were predictive of accuracy for neutral expressions (see Fig. 5a; angry, happy, and neutral, respectively; β = −1.41, 95% confidence interval (CI), −2.32 to −0.49, R² = .22, p = .004; β = −1.89, 95% CI, −3.14 to −0.63, R² = .22, p = .004; β = −.53, 95% CI, −0.99 to −0.08, R² = .14, p = .022; each β is unstandardized).

Figure 5. Scatter plot of the behavioral and ERP data.

(A) Relationship between accuracy rate and N170 components: N170 amplitudes for angry and happy faces, and N170 latencies for neutral faces. (B) Relationship between reaction times and P2 components. Each point represents one participant, and each line depicts the regression line for one facial expression.

P2 amplitudes significantly predicted reaction times (see Fig. 5b; angry, happy and neutral expressions, respectively; β = 5.44, 95% CI, 2.27 to 8.61, R² = .26, p = .001; β = 6.71, 95% CI, 3.34 to 10.08, R² = .33, p<.001; β = 4.38, 95% CI, 0.26 to 8.5, R² = .33, p = .038; each β is unstandardized). P2 latencies significantly predicted reaction times only for neutral faces (β = 1.25, 95% CI, 0.17 to 2.33, R² = .26, p = .025; β is unstandardized).

To detect violations of the model assumptions (multicollinearity among the predictors), Pearson correlation coefficients were computed between the predictors in each model. No correlation exceeded 0.70, which supported the validity of the models.
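The multicollinearity screen amounts to inspecting the largest absolute pairwise Pearson correlation among the predictors and comparing it with the 0.70 cutoff. A minimal sketch follows; the function name and the toy predictor matrices are illustrative only.

```python
import numpy as np

def max_abs_predictor_correlation(X):
    """Largest absolute off-diagonal Pearson correlation among the columns of X."""
    corr = np.corrcoef(X, rowvar=False)
    off_diagonal = corr[~np.eye(corr.shape[0], dtype=bool)]
    return np.abs(off_diagonal).max()

# Two uncorrelated toy predictors: passes the 0.70 screen.
independent = np.array([[1, 1], [-1, 1], [1, -1], [-1, -1]], dtype=float)
# Second column is a linear function of the first: fails the screen.
a = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
collinear = np.column_stack([a, 2 * a + 1])

r_independent = max_abs_predictor_correlation(independent)
r_collinear = max_abs_predictor_correlation(collinear)
print(r_independent <= 0.70, r_collinear <= 0.70)  # True False
```

Pairwise correlations only detect collinearity between two predictors at a time; variance inflation factors are a common complementary check.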

Discussion

The current study investigated the relationship between individual differences in the recognition of facial expressions and neural activity among healthy adults. Results showed that the N170 and P2 components were related to recognition of facial expressions. N170 significantly predicted recognition accuracy; a larger N170 predicted higher accuracy rates. Some studies have suggested that N170 is a good neurophysiological index of face perception processes [10], [31]. Moreover, an enhanced N170 was found when bird and dog experts categorized objects in their domain of expertise [20]. Given these findings, the association between N170 and accuracy rates may reflect individual differences in the ability to discriminate facial expressions. Early emotional processing in young children has been shown to differ from that in adolescents, whose processing approached the adult pattern [32]. It would be interesting for future studies to examine whether the same pattern of individual differences is observed in children.

P2 was predictive of reaction times. Given that P2 had no direct link with accuracy rates, it is reasonable to assume that the P2 component did not directly reflect the categorization of facial expressions. A functional model of face cognition suggested the importance of distinguishing between speed and accuracy of face cognition [33]. P2 may reflect the activity related to speed of face cognition. As discussed above, P2 is also thought to reflect deeper processing of stimuli [19]. Therefore, our results indicate that variance in P2 is an index of individual differences in a deeper process such as second-order configural processing [22] and speed of face cognition that follows the categorization of facial expressions.

Many factors, such as repetition [34]–[37] and familiarity [38] of the facial stimuli used in a task, affect face processing. It is reasonable to assume that these factors had little effect on the current results. First, all stimuli were unfamiliar to participants. Second, the repetition effect diminishes when different identities are presented continuously [39]. Facial stimuli used in the current study were taken from 4 different adults and presented in a random order. Moreover, all participants performed the same task. Even if there was a repetition effect, all participants would have been affected in the same way. Nevertheless, the present study showed individual differences in the recognition of facial expressions.

Our results are compatible with several clinical studies. Individuals with schizophrenia, who have great difficulty in recognizing facial expressions, have shown a specific reduction in N170 components and an increase in P2 components in response to faces, relative to healthy controls [18], [40]. The current study found that a low accuracy rate was linked to a reduction of N170 and that longer reaction times were associated with increases in P2 among healthy adults. These findings suggest that individual differences between clinical and non-clinical samples in the recognition of facial expressions may be continuous rather than discrete. To address this possibility, future studies should employ the same experimental procedure with clinical and non-clinical samples to examine potential discrepancies in facial processing and recognition.

Responses to neutral faces had a unique association with both N170 and P2. A previous study revealed significant differences between emotional faces and neutral faces only during an expression discrimination task. Its results indicated that the difference was not due to the physical features of the stimuli but depended on cognitive aspects [25]. Differences between emotional and neutral faces may be elicited by different levels of arousal and not by the emotional content [14]. Our current findings may also reflect the unique cognitive processing of neutral faces.

Negative faces elicited larger N170 amplitudes than positive faces. This result is consistent with past studies [13], [14], though other studies reported that N170 amplitudes were larger for positive than for negative faces [16], [41]–[44]. The tasks and stimuli used in these previous studies differ from one another. Therefore, it is difficult to compare the effects directly, and the result may be inconclusive. Because the focus of interest in the present study was on individual differences in the recognition of facial expressions, the present data do not deal explicitly with this question.

There are some limitations of the present study. One limitation relates to our stimuli. We employed three categories of emotional faces in order to avoid response conflicts among our participants. We are unsure whether a similar association would have been observed had we used other facial expressions. Since our interest was in recognition of facial expressions, all of the stimuli were faces. This raises the question as to whether our findings are specific to facial expressions or whether they can be generalized to other objects. Generalization to other objects is unlikely, given that previous studies suggest a form of neural processing that is unique to face recognition in comparison to other objects [7], [10], [13]. However, future studies should confirm the current findings with other types of stimuli in addition to faces.

Another limitation is the robustness of the current findings. A previous study emphasized the importance of considering outliers in data [45]. In the current study, the effects of outliers were likely small enough not to affect the results. First, for the behavioral data, we excluded trials with reaction times more than 2 SD from the mean. Second, for the EEG data, strict artifact rejection was conducted and more than 100 trials per condition were included in the grand average. However, the number of participants might be modest for a regression analysis. Future studies should address these issues.

The aim of the current study was to investigate the relationship between individual differences in the recognition of facial expressions and neural activity. Therefore, we focused on the direct relationship between them and did not include any personal variables. Though the multiple regression models were significant, this does not mean that the models completely predict the recognition of facial expressions. Given that the cortical processing of facial emotional expression is modulated by many factors, such as executive function [46], social cognition skills [43] and disease [17], [18], [40], [41], further studies should build a more general model of recognition of facial expressions.

The present data shed new light on individual differences in the neural activity associated with recognition of facial expressions. Models for face processing have been built [6], [47], [48], and previous studies have investigated the neural basis [7] and time course [10], [13], [22]. Our findings suggest that individual differences in an important social skill, the recognition of facial expressions, emerge during an early stage of information processing, and they have important implications for those models. Some people have difficulty recognizing facial expressions (e.g., individuals with brain damage [1], schizophrenia [18], neurodegenerative disease [49], [50], ADHD [41] and autism [51]). The current findings are an important first step toward providing potential explanations as to the mechanism(s) underlying facial emotion recognition impairments.

Supporting Information

Text S1. Psychological evaluation of the ATR Facial Expression Image Database and the number of facial stimuli used in the current study.

(DOC)

Funding Statement

Dr. Hiraki is supported by a Grant-in-Aid for Scientific Research (A) (22240026) from the Ministry of Education, Culture, Sports, Science, and Technology of Japan, and by the project “Pedagogical Machine: Developmental cognitive science approach to create teaching/teachable machines” of the Core Research for Evolutional Science and Technology (CREST), Japan Science and Technology Agency. Mr. Tamamiya has no disclosures. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Philippi CL, Mehta S, Grabowski T, Adolphs R, Rudrauf D (2009) Damage to association fiber tracts impairs recognition of the facial expression of emotion. J Neurosci 29: 15089–15099.

- 2. Taylor SF, Kang J, Brege IS, Tso IF, Hosanagar A, et al. (2012) Meta-analysis of functional neuroimaging studies of emotion perception and experience in schizophrenia. Biol Psychiatry 71: 136–145.

- 3. Isaacowitz DM, Löckenhoff CE, Lane RD, Wright R, Sechrest L, et al. (2007) Age differences in recognition of emotion in lexical stimuli and facial expressions. Psychol Aging 22: 147–159.

- 4. Hall JA, Matsumoto D (2004) Gender differences in judgments of multiple emotions from facial expressions. Emotion 4: 201–206.

- 5. Kahler CW, McHugh RK, Leventhal AM, Colby SM, Gwaltney CJ, et al. (2012) High hostility among smokers predicts slower recognition of positive facial emotion. Pers Individ Dif 52: 444–448.

- 6. Haxby J, Hoffman E, Gobbini M (2000) The distributed human neural system for face perception. Trends Cogn Sci 4: 223–233.

- 7. Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17: 4302–4311.

- 8. Haxby JV, Ungerleider LG, Clark VP, Schouten JL, Hoffman EA, et al. (1999) The effect of face inversion on activity in human neural systems for face and object perception. Neuron 22: 189–199.

- 9. Halgren E, Raij T, Marinkovic K, Jousmäki V, Hari R (2000) Cognitive response profile of the human fusiform face area as determined by MEG. Cerebral cortex 10: 69–81.

- 10. Bentin S, Allison T, Puce A, Perez E, McCarthy G (1996) Electrophysiological studies of face perception in humans. J Cogn Neurosci 8: 551–565.

- 11. James MS, Johnstone SJ, Hayward WG (2001) Event-related potentials, configural encoding, and feature-based encoding in face recognition. J Psychophysiol 15: 275–285.

- 12. Itier RJ, Taylor MJ (2002) Inversion and contrast polarity reversal affect both encoding and recognition processes of unfamiliar faces: a repetition study using ERPs. NeuroImage 15: 353–372.

- 13. Batty M, Taylor MJ (2003) Early processing of the six basic facial emotional expressions. Cogn Brain Res 17: 613–620.

- 14. Krombholz A, Schaefer F, Boucsein W (2007) Modification of N170 by different emotional expression of schematic faces. Biol Psychol 76: 156–162.

- 15. Batty M, Meaux E, Wittemeyer K, Rogé B, Taylor MJ (2011) Early processing of emotional faces in children with autism: An event-related potential study. Journal of experimental child psychology 109: 430–444.

- 16. Ibanez A, Cetkovich M, Petroni A, Urquina H, Baez S, et al. (2012) The neural basis of decision-making and reward processing in adults with euthymic bipolar disorder or attention-deficit/hyperactivity disorder (ADHD). PloS one 7: e37306.

- 17. Schefter M, Werheid K, Almkvist O, Lönnqvist-Akenine U, Kathmann N, et al. (2012) Recognition memory for emotional faces in amnestic mild cognitive impairment: An event-related potential study. Neuropsychology, development, and cognition Section B, Aging, neuropsychology and cognition 37–41.

- 18. Herrmann MJ, Ellgring H, Fallgatter AJ (2004) Early-stage face processing dysfunction in patients with schizophrenia. Am J Psychiatry 161: 915–917.

- 19. Latinus M, Taylor MJ (2005) Holistic processing of faces: learning effects with Mooney faces. J Cogn Neurosci 17: 1316–1327.

- 20. Tanaka JW, Curran T (2001) A neural basis for expert object recognition. Psychol Sci 12: 43–47.

- 21. Herzmann G, Kunina O, Sommer W, Wilhelm O (2010) Individual differences in face cognition: brain-behavior relationships. J Cogn Neurosci 22: 571–589.

- 22. Latinus M, Taylor MJ (2006) Face processing stages: impact of difficulty and the separation of effects. Brain research 1123: 179–187.

- 23. Vizioli L, Foreman K, Rousselet GA, Caldara R (2010) Inverting faces elicits sensitivity to race on the N170 component: A cross-cultural study. J Vis 10:15: 1–23.

- 24. Tucker DM (1993) Spatial sampling of head electrical fields: the geodesic sensor net. Electroencephalogr Clin Neurophysiol 87: 154–163.

- 25. Krolak-Salmon P, Fischer C, Vighetto A, Mauguière F (2001) Processing of facial emotional expression: spatio-temporal data as assessed by scalp event-related potentials. Eur J Neurosci 13: 987–994.

- 26. American Psychological Association (2010) Publication Manual of the American Psychological Association. Sixth Edition. Washington, DC: American Psychological Association.

- 27. Pierce CA, Block RA, Aguinis H (2004) Cautionary Note on Reporting Eta-Squared Values from Multifactor ANOVA Designs. Educational and Psychological Measurement 64: 916–924.

- 28. Gordon EM, Stollstorff M, Vaidya CJ (2012) Using spatial multiple regression to identify intrinsic connectivity networks involved in working memory performance. Human brain mapping 33: 1536–1552.

- 29. Rossion B, Gauthier I, Tarr MJ, Despland P, Bruyer R, et al. (2000) The N170 occipito-temporal component is delayed and enhanced to inverted faces but not to inverted objects: an electrophysiological account of face-specific processes in the human brain. Neuroreport 11: 69–74.

- 30. Rossion B, Joyce CA, Cottrell GW, Tarr MJ (2003) Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. NeuroImage 20: 1609–1624.

- 31. Sagiv N, Bentin S (2001) Structural encoding of human and schematic faces: holistic and part-based processes. J Cogn Neurosci 13: 937–951.

- 32. Batty M, Taylor MJ (2006) The development of emotional face processing during childhood. Dev Sci 9: 207–220.

- 33. Wilhelm O, Herzmann G, Kunina O, Danthiir V, Schacht A, et al. (2010) Individual differences in perceiving and recognizing faces-One element of social cognition. J Pers Soc Psychol 99: 530–548.

- 34. Galli G, Feurra M, Viggiano MP (2006) “Did you see him in the newspaper?” Electrophysiological correlates of context and valence in face processing. Brain research 1119: 190–202.

- 35. Itier RJ, Taylor MJ (2004) Effects of repetition learning on upright, inverted and contrast-reversed face processing using ERPs. NeuroImage 21: 1518–1532.

- 36. Kaufmann JM, Schweinberger SR, Burton AM (2009) N250 ERP correlates of the acquisition of face representations across different images. J Cogn Neurosci 21: 625–641.

- 37. Maurer U, Rossion B, McCandliss BD (2008) Category specificity in early perception: face and word N170 responses differ in both lateralization and habituation properties. Frontiers in human neuroscience 2: 18.

- 38. Caharel S, Fiori N, Bernard C, Lalonde R, Rebaï M (2006) The effects of inversion and eye displacements of familiar and unknown faces on early and late-stage ERPs. International journal of psychophysiology: official journal of the International Organization of Psychophysiology 62: 141–151.

- 39. Campanella S, Hanoteau C, Dépy D, Rossion B, Bruyer R, et al. (2000) Right N170 modulation in a face discrimination task: an account for categorical perception of familiar faces. Psychophysiology 37: 796–806.

- 40. Ibáñez A, Riveros R, Hurtado E, Gleichgerrcht E, Urquina H, et al. (2012) The face and its emotion: Right N170 deficits in structural processing and early emotional discrimination in schizophrenic patients and relatives. Psychiatry Res 195: 18–26.

- 41. Ibáñez A, Petroni A, Urquina H, Torralva T, Blenkmann A, et al. (2011) Cortical deficits in emotion processing for faces in adults with ADHD: Its relation to social cognition and executive functioning. Social Neuroscience 6: 464–481.

- 42. Ibáñez A, Hurtado E, Riveros R, Urquina H, Cardona JF, et al. (2011) Facial and semantic emotional interference: a pilot study on the behavioral and cortical responses to the Dual Valence Association Task. Behavioral and brain functions: BBF 7: 8.

- 43. Petroni A, Canales-Johnson A, Urquina H, Guex R, Hurtado E, et al. (2011) The cortical processing of facial emotional expression is associated with social cognition skills and executive functioning: a preliminary study. Neuroscience letters 505: 41–46.

- 44. Schacht A, Sommer W (2009) Emotions in word and face processing: Early and late cortical responses. Brain Cogn 69: 538–550.

- 45. Rousselet GA, Pernet CR (2012) Improving standards in brain-behavior correlation analyses. Frontiers in human neuroscience 6: 119.

- 46. Pessoa L (2009) How do emotion and motivation direct executive control? Trends Cogn Sci 13: 160–166.

- 47. Bruce V, Young A (1986) Understanding face recognition. Brit J Psychol 77: 305–327.

- 48. Vuilleumier P, Pourtois G (2007) Distributed and interactive brain mechanisms during emotion face perception: Evidence from functional neuroimaging. Neuropsychologia 45: 174–194.

- 49. Hargrave R, Maddock RJ, Stone V (2002) Impaired recognition of facial expressions of emotions in Alzheimer's disease. J Neuropsych Clin Neurosci 14: 64–71.

- 50. Clark US, Neargarder S, Cronin-Golomb A (2008) Specific impairments in the recognition of emotional facial expressions in Parkinson's disease. Neuropsychologia 46: 2300–2309.

- 51. McIntosh DN, Reichmann-Decker A, Winkielman P, Wilbarger JL (2006) When the social mirror breaks: deficits in automatic, but not voluntary, mimicry of emotional facial expressions in autism. Dev Sci 9: 295–302.
