Abstract

Purpose: Optoacoustic tomography (OAT) is inherently a three-dimensional (3D) inverse problem. However, most studies of OAT image reconstruction still employ two-dimensional imaging models. One important reason is because 3D image reconstruction is computationally burdensome. The aim of this work is to accelerate existing image reconstruction algorithms for 3D OAT by use of parallel programming techniques.

Methods: Parallelization strategies are proposed to accelerate a filtered backprojection (FBP) algorithm and two different pairs of projection/backprojection operations that correspond to two different numerical imaging models. The algorithms are designed to fully exploit the parallel computing power of graphics processing units (GPUs). In order to evaluate the parallelization strategies for the projection/backprojection pairs, an iterative image reconstruction algorithm is implemented. Computer simulation and experimental studies are conducted to investigate the computational efficiency and numerical accuracy of the developed algorithms.

Results: The GPU implementations improve the computational efficiency by factors of 1000, 125, and 250 for the FBP algorithm and the two pairs of projection/backprojection operators, respectively. Accurate images are reconstructed by use of the FBP and iterative image reconstruction algorithms from both computer-simulated and experimental data.

Conclusions: Parallelization strategies for 3D OAT image reconstruction are proposed for the first time. These GPU-based implementations significantly reduce the computational time for 3D image reconstruction, complementing our earlier work on 3D OAT iterative image reconstruction.

Keywords: Optoacoustic tomography, photoacoustic tomography, thermoacoustic tomography, graphics processing unit (GPU), compute unified device architecture (CUDA)

INTRODUCTION

Optoacoustic tomography (OAT), also known as photoacoustic computed tomography, is an emerging imaging modality that has great potential for a wide range of biomedical imaging applications.1, 2, 3, 4 In OAT, a short laser pulse is employed to irradiate biological tissues. When the biological tissues absorb the optical energy, acoustic wave fields can be generated via the thermoacoustic effect. The acoustic wave fields propagate outward in three-dimensional (3D) space and are measured by use of ultrasonic transducers that are distributed outside the object. The goal of OAT is to obtain an estimate of the absorbed energy density map within the object from the measured acoustic signals. To accomplish this, an image reconstruction algorithm is required.

A variety of analytic image reconstruction algorithms have been proposed.5, 6, 7, 8 These algorithms generally assume an idealized transducer model and an acoustically homogeneous medium. Also, since they are based on discretization of continuous reconstruction formulae, these algorithms require the acoustic pressure to be densely sampled over a surface that encloses the object to obtain an accurate reconstruction. To overcome these limitations, iterative image reconstruction algorithms have been proposed.9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24 Although the optoacoustic wave intrinsically propagates in 3D space, when applying to experimental data, most studies have employed two-dimensional (2D) imaging models by making certain assumptions on the transducer responses and/or the object structures.10, 13, 15, 16, 19, 25 An important reason is because the computation required for 3D OAT image reconstruction is excessively burdensome. Therefore, acceleration of 3D image reconstruction will facilitate algorithm development and many applications including real-time 3D PACT.26, 27

A graphics processing unit (GPU) card is a specialized device specifically designed for parallel computations.28 Compute unified device architecture (CUDA) is an extension of the C/FORTRAN language that provides a convenient programming platform to exploit the parallel computational power of GPUs.29 The CUDA-based parallel programming technique has been successfully applied to accelerate image reconstruction in mature imaging modalities such as x-ray computed tomography (CT)30, 31, 32 and magnetic resonance imaging (MRI).33 In OAT, however, only a few works on utilization of GPUs to accelerate image reconstruction have been reported.20, 34 For example, the k-wave toolbox employs the NVIDIA CUDA Fast Fourier Transform library (cuFFT) to accelerate the computation of 3D FFT.34 Also a GPU-based sparse matrix-vector multiplication strategy has been applied to 3D OAT image reconstruction for the case that the system matrix is sparse and can be stored in memory.20 However, there remains an important need to develop efficient implementations of OAT reconstruction algorithms for general applications in which the system matrix is too large to be stored.

In this work, we propose parallelization strategies, for use with GPUs, to accelerate 3D image reconstruction in OAT. Both filtered backprojection (FBP) and iterative image reconstruction algorithms are investigated. For use with iterative image reconstruction algorithms, we focus on the parallelization of projection and backprojection operators. Specifically, we develop two pairs of projection/backprojection operators that correspond to two distinct discrete-to-discrete (D-D) imaging models employed in OAT, namely, the interpolation-based and the spherical-voxel-based D-D imaging models. Note that our implementations of the backprojection operators compute the exact adjoint of the forward operators, and therefore the projector pairs are “matched.”35

The remainder of the paper is organized as follows. In Sec. 2, we briefly review OAT imaging models in their continuous and discrete forms. We propose GPU-based parallelization strategies in Sec. 3. Numerical studies and results are described in Secs. 4, 5, respectively. Finally, a brief discussion and summary of the proposed algorithms are provided in Sec. 6.

BACKGROUND

Continuous-to-continuous (C-C) imaging models and analytic image reconstruction algorithms

A C-C OAT imaging model neglects sampling effects and provides a mapping from the absorbed energy density function A(r) to the induced acoustic pressure function p(rs, t). Here, t is the temporal coordinate, r ∈ V and rs ∈ S denote the locations within the object support V and on the measurement surface S, respectively. A canonical OAT C-C imaging model can be expressed as1, 14, 36

| (1) |

where δ(t) is the Dirac delta function, β, c0, and Cp denote the thermal coefficient of volume expansion, (constant) speed-of-sound, and the specific heat capacity of the medium at constant pressure, respectively. We introduce an operator notation to denote this C-C mapping.

Alternatively, Eq. 1 can be reformulated as the well-known spherical Radon transform (SRT)12, 37

| (2) |

where the function g(rs, t) is related to p(rs, t) as

| (3) |

The SRT model provides an intuitive interpretation of each value of g(rs, t) as a surface integral of A(r) over a sphere centered at rs with radius tc0.

Based on C-C imaging models, a variety of analytic image reconstruction algorithms have been developed.5, 6, 7, 8 For the case of a spherical measurement geometry, a FBP algorithm in its continuous form is given by6

| (4) |

where Rs denotes the radius of the measurement surface S.

Discrete-to-discrete imaging models and iterative image reconstruction algorithms

When sampling effects are considered, an OAT system is properly described as a continuous-to-discrete (C-D) imaging model14, 22, 35, 38, 39

| (5) |

where Q and K denote the total numbers of transducers (indexed by q) and the time samples (indexed by k) respectively. Sq is the surface area of the qth transducer, which is assumed to be a subset of S; he(t) denotes the acousto-electric impulse response (EIR) of each transducer that, without loss of generality, is assumed to be identical for all transducers; “*t” denotes a linear convolution with respect to time coordinate; and Δt is the temporal sampling interval. The vector u represents the lexicographically ordered measured voltage signals whose (qK + k)th element is denoted by [u]qK + k.

In order to apply iterative image reconstruction algorithms, a D-D imaging model is required, which necessitates the discretization of A(r). The following N-dimensional representation of the object function can be employed35, 38

| (6) |

where α is a coefficient vector whose nth element is denoted by [α]n and ψn(r) is the expansion function. On substitution from Eq. 6 into Eq. 5, where p(rs, t) is defined by Eq. 1, one obtains a D-D mapping from α to u, expressed as

| (7) |

where each element of the matrix H is defined as

| (8) |

Here, H is the D-D imaging operator also known as system matrix or projection operator. Note that the “≈” in Eq. 7 is due to the use of the finite-dimensional representation of the object function [i.e., Eq. 6]. No additional approximations have been introduced.

Below we describe two types of D-D imaging models that have been employed in OAT:14, 22, 39 the interpolation-based imaging model and the spherical-voxel-based imaging model. The quantities u, H, and α (or ψn) in the two models will be distinguished by the subscripts (or superscripts) “int” and “sph,” respectively.

Interpolation-based D-D imaging model

The interpolation-based D-D imaging model defines the coefficient vector as samples of the object function on the nodes of a uniform Cartesian grid

| (9) |

where rn = (xn, yn, zn)T specifies the location of the nth node of the uniform Cartesian grid. The definition of the expansion function depends on the choice of interpolation method.19 If a trilinear interpolation method is employed, the expansion function can be expressed as40

| (10) |

where Δs is the distance between two neighboring grid points.

In principle, the interpolation-based D-D imaging model can be constructed by substitution from Eqs. 9, 10 to Eq. 8. In practice, however, implementation of the surface integral over Sq is difficult for the choice of expansion functions in Eq. 10. Also, implementations of the temporal convolution and usually require extra discretization procedures. Therefore, utilization of the interpolation-based D-D model commonly assumes the transducers to be point-like. In this case, the implementation of Hint is decomposed as a three-step operation

| (11) |

where G, D, and He are discrete approximations of the SRT [Eq. 2], the differential operator [Eq. 3], and the operator that implements a temporal convolution with EIR, respectively. We implemented G in a way12, 41, 42 that is similar to the “ray-driven” implementation of Radon transform in x-ray CT,40 i.e, for each data sample, we accumulated the contributions from the voxels that resided on the spherical shell specified by the data sample. By use of Eqs. 2, 6, 9, 10, one obtains

| (12) |

where with specifying the location of the qth point-like transducer, and Ni and Nj denote the numbers of divisions over the two angular coordinates of a local spherical coordinate system shown in Fig. 1b. A derivation of Eq. 12 is provided in the Appendix. The differential operator in Eq. 3 is approximated as

| (13) |

where . Finally, the continuous temporal convolution is approximated by a discrete linear convolution as43

| (14) |

where .

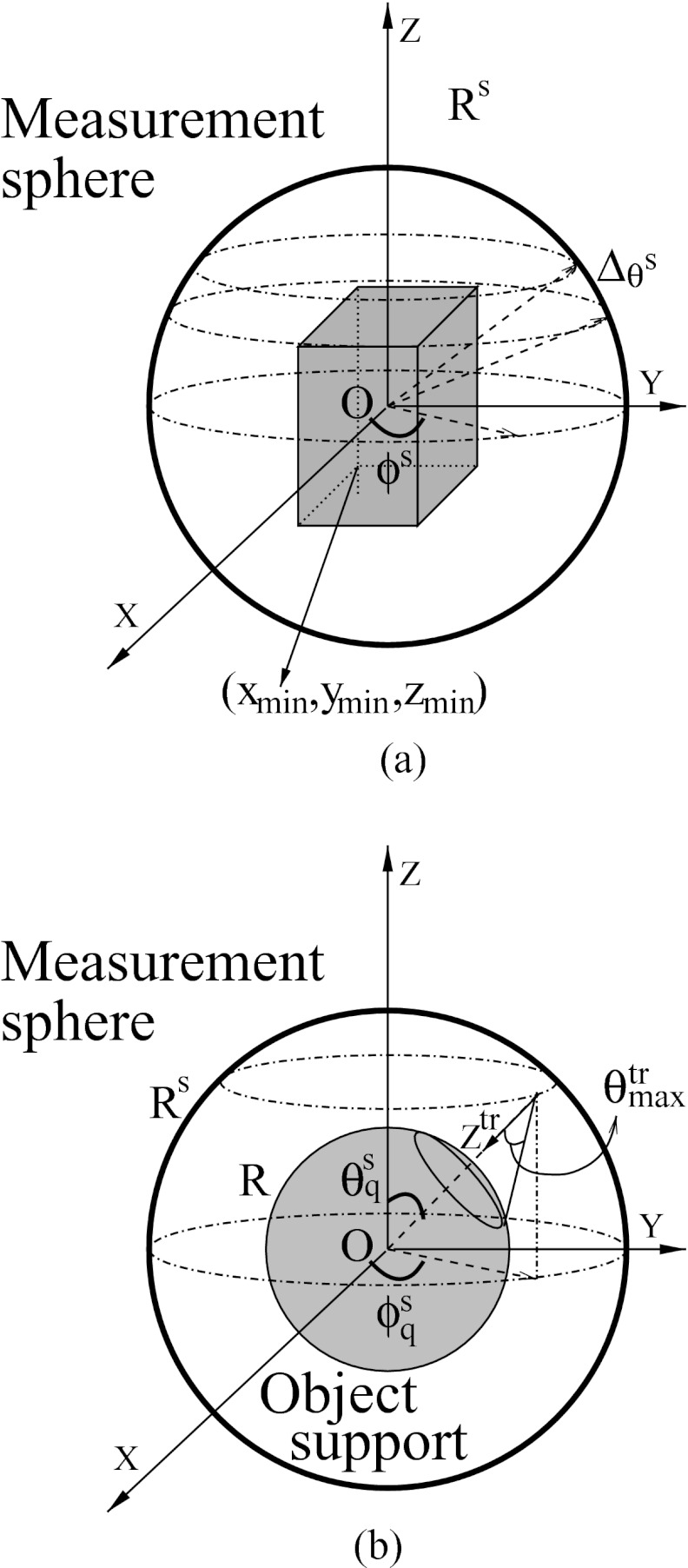

Figure 1.

(a) Schematic of the 3D OAT scanning geometry. (b) Schematic of the local coordinate system for the implementation of interpolation-based D-D imaging model.

Spherical-voxel-based D-D imaging model

The spherical-voxel-based imaging model is also widely employed in OAT.9, 11, 14, 22, 44 It employs the expansion functions

| (15) |

where rn is defined as in Eq. 9. The nth expansion function is a uniform sphere that is inscribed by the nth cuboid of a Cartesian grid. The nth component of the coefficient vector αsph is defined as

| (16) |

where Vcube and Vsph are the volumes of a cubic voxel of dimension Δs and of a spherical voxel of radius Δs/2, respectively.

Unlike the interpolation-based imaging model, by use of the expansion functions defined in Eq. 15, the surface integral over Sq and in Eq. 8 can be converted to a temporal convolution and calculated analytically.14, 22 To avoid utilizing excessively high sampling rates to mitigate aliasing, the spherical-voxel-based imaging model can be conveniently implemented in the temporal frequency domain as22

| (17) |

where Δf is the frequency sampling interval, and L denotes the total number of temporal-frequency samples indexed by l. A derivation of Eq. 17 can be found in Ref. 22. The function represents the temporal Fourier transform of the spatial impulse response (SIR) of the qth transducer for the source located at rn, expressed as

| (18) |

Also, is defined as

| (19) |

where is the EIR in temporal-frequency domain. In summary, the imaging model can be expressed in matrix form as

| (20) |

Adjoints of the system matrices

Iterative image reconstruction algorithms employ numerical implementations of the projection operator, i.e., the system matrix H, as well as its adjoint, denoted by H†.45 The adjoint is also referred to as the backprojection operator. Note that for most practical applications, H and H† are too large to be stored in the random access memory of currently available computers. Therefore, in practice, the actions of H and H† are almost always calculated on the fly. The same strategy was adopted in this work.

According to the definition of the adjoint operator,35, 43, where

| (21) |

| (22) |

and

| (23) |

It can also be verified that the adjoint operator is given by

| (24) |

where the superscript “*” denotes the complex conjugate. Unlike the unmatched backprojection operators46 that are obtained by discretization of the continuous adjoint operator, utilization of the exact adjoint operator facilitates the convergence of iterative image reconstruction algorithms.

GPU architecture and CUDA programming

The key features of GPU architecture and the basics of CUDA programming are briefly summarized in this section. We refer the readers to Refs. 28, 29 for additional details.

A GPU card contains multiple streaming multiprocessors. Each streaming multiprocessor is configured with multiple processor cores. For example, the Tesla C1060 possesses 30 streaming multiprocessors with 8 processor cores on each; and the Tesla C2050 possesses 14 streaming multiprocessors with 32 processor cores on each.28 The processor cores in each multiprocessor execute the same instruction on different pieces of data, which is referred to as “single instruction, multiple data” (SIMD) model of parallel programming. In order to fully exploit the computing power of GPUs, one of the major challenges is to design a parallelization strategy fitting in the SIMD framework such that the largest number of processor cores can execute the computation simultaneously.29

A GPU card has six types of memory that have varying capacities and different access rules and efficiencies: (1) Registers are assigned for each thread and have the fastest access. (2) Shared memory is assigned for each block and can be efficiently accessed by all threads in the block if designed appropriately. (3) Constant memory is read-only and can be accessed by all threads efficiently. (4) Texture memory is also read-only and is optimized for interpolation operations. (5) Global memory has the slowest access that takes hundred times more clock cycles than does the computation of basic arithmetic operations. (6) Local memory is assigned for each thread but has a slow access as does the global memory. Therefore, an efficient GPU-based implementation in general requires a limited number of global and local memory access.

CUDA is a platform and programming model developed by NVIDIA that includes a collection of functions and keywords to exploit the parallel computing power of GPUs.29 A CUDA parallel program is composed of a host program and kernels. The host program is executed by central processing units (CPUs) and launches the kernels, which are custom-designed functions executed by GPUs. A general parallel programming strategy is to launch multiple instances of a kernel and to run the multiple instances concurrently on GPUs. In CUDA, each instance of the kernel is named as a thread and processes only a portion of the data. A hierarchy of threads is employed: Threads are grouped into blocks, and blocks are grouped into a grid. Therefore, each thread is specified by a multi-index containing a block index and a thread index within the block.

GPU-ACCELERATED RECONSTRUCTION ALGORITHMS

In this section, we propose GPU-based parallelization strategies for the FBP algorithm and the projection/backprojection operations corresponding to the interpolation-based and the spherical-voxel-based D-D imaging models.

Measurement geometry

We employed a spherical measurement geometry shown in Fig. 1a. The measurement sphere was of radius Rs centered at the origin of the Cartesian coordinate system (or the equivalent spherical coordinate system). The polar angle θs ∈ [0, π] was equally divided with interval , starting from . At each polar angle, a ring on the sphere that was parallel to the plane z = 0 can be specified, resulting Nr rings. On each ring, Nv ultrasonic transducers were assumed to be uniformly distributed with azimuth angle interval . Hereafter, each azimuth angle will be referred to as a tomographic view. At each view, we assumed that Nt temporal samples were acquired and the first sample corresponded to time instance tmin. For implementations in temporal-frequency domain, we assumed that Nf temporal-frequency samples were available and the first sample corresponded to fmin. The region to be reconstructed was a rectangular cuboid whose edges were parallel to the axes of the coordinate system and the left-bottom-back vertex was located at (xmin, ymin, zmin). The numbers of voxels along the three coordinates will be denoted by Nx, Ny, and Nz, respectively, totally N = NxNyNz voxels. We also assumed the cuboid was contained in another sphere of radius R that was concentric with the measurement sphere shown in Fig. 1b.

Implementation of the FBP algorithm

Central processing unit-based implementations of continuous FBP formulae have been described in Refs. 5, 6, 7, 8. Though the discretization methods vary, in general, three approximations have to be employed. First, the first-order derivative term ∂p(rs, t)/∂t has to be approximated by a difference scheme up to certain order.47 Second, the measurement sphere has to be divided into small patches, and the surface integral has to be approximated by a summation of the area of every patch weighted by the effective value of the integrand on the patch. Finally, the value of the integrand at an arbitrary time instance t = |rs − r|/c0 has to be approximated by certain interpolation method.

In this study, we approximated the surface integral by use of the trapezoidal rule. As described earlier, the spherical surface was divided into NrNv patches. For the transducer indexed by q that was located at , the area of the patch was approximated by . The value at time instance was approximated by the linear interpolation from its two neighboring samples as

| (25) |

where , and k is the integer part of . Here, p is a vector of lexicographically ordered samples of the pressure function p(rs, t), which is estimated from the measured voltage data vector u. Also, the first-order derivative term was approximated by

| (26) |

By use of these three numerical approximations, the discretized FBP formula was expressed as

| (27) |

Unlike the implementations of FBP formulas in x-ray cone beam CT,31, 32 we combined the filter and the linear interpolation. This reduced the number of visits to the global memory in the GPU implementation described below.

We implemented the FBP formula in a way that is similar to the “pixel-driven” implementation in x-ray CT,32 i.e., we assigned each thread to execute the two accumulative summations in Eq. 27 for each voxel. We bound the pressure data p to texture memory because it is cached and has a faster accessing rate. Therefore, our implementation only requires access to texture memory twice and to global memory once. The pseudocodes are provided in Algorithms 1 and 2 for the host part and the device part, respectively. Note that the pseudocodes do not intend to be always optimal because the performance of the codes could depend on the dimensions of p and . For example, we set the block size to be (Nz, 1, 1) because for our applications, Nz was bigger than Nx and Ny and smaller than the limit number of threads that a block can support (i.e., 1024 for the NVIDIA Tesla C2050). If the values of Nx, Ny, and Nz change, we may need to redesign the dimensions of the grid and blocks. However, the general SIMD parallelization strategy remains.

Table .

Algorithm I. Implementation of the FBP algorithm (on host).

| Input:p |

| Output: |

| 1: {Precalculate the common coefficient} |

| 2: {Bound data to texture memory} |

| 3: K_fbp ⟨⟨⟨ (Ny, Nx), (Nz, 1, 1) ⟩⟩⟩ (ω, ) |

| 4: {Copy data from global memory to host} |

Table .

Algorithm II. Implementation of kernel K_fbp ⟨⟨⟨ (Ny, Nx), (Nz, 1, 1) ⟩⟩⟩.

| Input: ω, , |

| Output: |

| 1: x = (blockIdx.y)Δs + xmin; y = (blockIdx.x)Δs + ymin; z = (threadIdx.x)Δs + zmin |

| 2: Σ = 0 |

| 3: fornr = 0 toNr − 1 do |

| 4: ; zs = Rscos θs; rs = Rssin θs; w′ = wsin θs |

| 5: fornv = 0 toNv − 1 do |

| 6: ; xs = rscos ϕs; ys = rssin ϕs |

| 7: |

| 8: ; nt = floor(tn) |

| 9: {Fetch data from texture memory} |

| 10: endfor |

| 11: endfor |

| 12: |

Implementation of Hint and

The forward projection operation Hintαint is composed of three consecutive operations g = Gαint, pint = Dg, and uint = Hepint that are defined in Eqs. 12, 13, 14, respectively. Both the difference operator D and the one-dimensional (1D) convolution He have low computational complexities while the SRT operator G is computationally burdensome. Hence, we developed the GPU-based implementation of G while leaving D and He to be implemented by CPUs.

The SRT in OAT shares many features with the Radon transform in x-ray CT. Thus, our GPU-based implementation is closely related to the implementations of Radon transform that have been optimized for x-ray CT.30, 31, 32 The surface integral was approximated according to the trapezoidal rule. First, the integral surface was divided into small patches, which is described in the Appendix. Second, each patch was assigned an effective value of the object function by trilinear interpolation. The trilinear interpolation was calculated by use of the texture memory of GPUs that is specifically designed for interpolation. Finally, GPU threads accumulated the areas of patches weighted by the effective values of the object function and wrote the final results to global memory. The pseudocodes for implementation of G are provided in Algorithms 3 and 4 for the host part and the device part, respectively. Note that we employed the “one-level”-strategy,32 i.e., each thread calculates one data sample. Higher level strategies have been proposed to improve the performance by assigning each block to calculate multiple data samples,32 which, however, caused many thread idles in OAT mainly because the amount of computation required to calculate a data sample varies largely among samples for SRT.

Table .

Algorithm III. Implementation of g = Gαint (on host)

| Input:αint |

| Output:g |

| 1: {Bound data to texture memory} |

| 2: K_srt ⟨⟨⟨ (Nv, Nt), (Nr, 1, 1) ⟩⟩⟩ () |

| 3: {Copy data from global memory to host} |

Table .

Algorithm IV. Implementation of kernel K_srt ⟨⟨⟨ (Nv, Nt), (Nr, 1, 1) ⟩⟩⟩.

| Input:, |

| Output: |

| 1: ; ; |

| 2: |

| 3: Σ = 0; |

| 4: while θ′ > 0 do |

| 5: ; ; ϕ′ = 0 |

| 6: while ϕ′ < 2π do |

| 7: x′ = r′cos ϕ′; y′ = r′sin ϕ′ |

| 8: x = −x′sin θ′ − (z′ − Rs)cos θ′; y = y′; z = x′cos θ′ − (z′ − Rs)sin θ′ {Convert to global coordinate system} |

| 9: xn = (x − xmin)/Δs; yn = (y − ymin)/Δs; zn = (z − zmin)/Δs |

| 10: Σ + = tex3D(xn, yn, zn) {Tri-linear interpolation} |

| 11: ϕ′ = ϕ′ + Δs/r′ |

| 12: endwhile |

| 13: |

| 14: endwhile |

| 15: |

Implementation of the backprojection operator was very similar to the implementation of Hint. The operators D† and He† were calculated on CPUs while G† was calculated by use of GPUs. The pseudocodes are provided in Algorithms 5 and 6. We made use of the CUDA function “atomicAdd” to add weights to global memory from each thread.

Table .

Algorithm V. Implementation of (on host).

| Input:g′ |

| Output: |

| 1: {Bound data to texture memory} |

| 2: K_srtT ⟨⟨⟨ (Nv, Nt), (Nr, 1, 1) ⟩⟩⟩ () |

| 3: {Copy data from global memory to host} |

Table .

Algorithm VI. Implementation of kernel K_srtT ⟨⟨⟨ (Nv, Nt), (Nr, 1, 1) ⟩⟩⟩.

| Input:, |

| Output: |

| 1: ; ; |

| 2: ; |

| 3: while θ′ > 0 do |

| 4: ; ; ϕ′ = 0 |

| 5: while ϕ′ < 2π do |

| 6: x′ = r′cos ϕ′; y′ = r′sin ϕ′ |

| 7: x = −x′sin θ − (z′ − R′)cos θ; y = y′; z = x′cos θ − (z′ − R′)sin θ {Convert to global coordinate system} |

| 8: xn = (x − xmin)/Δs; yn = (y − ymin)/Δs; zn = (z − zmin)/Δs |

| 9: nx = floor(xn); ny = floor(yn); nz = floor(zn) |

| 10: {Add weights to one of |

| the eight neighboring nodes by use of ‘atomicAdd'; Repeat this operation for all other seven neighboring nodes} |

| 11: ϕ′ = ϕ′ + Δs/r′ |

| 12: endwhile |

| 13: |

| 14: endwhile |

Implementation of Hsph and

Implementation of the forward projection operation for the spherical-voxel-based imaging model is distinct from that of the interpolation-based model. The major difference is that calculation of each element of the data vector for the spherical-voxel-based imaging model requires the accumulation of the contributions from all voxels because the model is expressed in the temporal frequency domain. Because of this, the amount of computation required to calculate each data sample in the spherical-voxel-based imaging model is almost identical, simplifying the parallelization strategy.

We proposed a parallelization strategy that was inspired by one applied in advanced MRI reconstruction33 and is summarized as follows. Discrete samples of defined in Eq. 19 were precalculated and stored as a vector in constant memory. Because the size of the input vector αsph is often too large to fit in the constant memory, we divided αsph into subvectors that matched the capacity of the constant memory. We employed a CPU loop to copy every subvector sequentially to the constant memory and call the GPU kernel function to accumulate a partial summation. The major advantage of this design is that the total number of global memory visits to calculate one data sample is reduced to the number of subvectors.

Implementation of the projection operator for the spherical-voxel-based imaging model generally involves more arithmetic operations than does the interpolation-based imaging model. Moreover, the spherical-voxel-based imaging model has been employed to compensate for the finite aperture size effect of transducers,14, 22 which makes the computation even more burdensome. Because of this, we further developed an implementation that employed multiple GPUs. The pseudocodes of the projection operation are provided in Algorithms 7, 8, 9. We created Npth pthreads on CPUs by use of the “pthread.h” library. Here, we denote the threads on CPUs by “pthread” to distinguish from threads on GPUs. We divided the input vector αsph into Npth subvectors (denoted by αpth's) of equal size and declared an output vector of dimension NpthNrNvNf. By calling the pthread function “fwd_pthread,” Nsph pthreads simultaneously calculated the projection. Each pthread projected an αpth to a partial voltage data vector that filled in the larger vector . Once all pthreads finished filling their into , the projection data were obtained by a summation of the Npth's.

Table .

Algorithm VI. Implementation of (on host).

| Input:αsph, |

| Output: |

| 1: fornpth = 0 toNpth − 1 do |

| 2: parm_fwdarg[npth].npth = npth |

| 3: parm_fwdarg[npth]. = & |

| 4: parm_fwdarg[npth].αpth=&αsph[npthNxNyNz/Npth] |

| 5: parm_fwdarg[npth].= {Pass addresses |

| of arrays to each pthread} |

| 6: pthread_create(&pthreads[npth], NULL, fwd_pthread, (void *) |

| (parm_fwdarg+npth)) {Call function fwd_pthread} |

| 7: endfor |

| 8: fornpth = 0 toNpth − 1 do |

| 9: forn = 0 toNrNvNfdo |

| 10: |

| 11: endfor |

| 12: endfor |

Table .

Algorithm VIII. Implementation of function fwd_pthread (on host).

| Input:npth, , αpth, |

| Output: |

| 1: {Copy from host to constant memory} |

| 2: fornx = 0 toNx/Npth − 1 do |

| 3: x = (nx + npthNx/Npth)Δs + xmin |

| 4: forny = 0 toNy − 1 do |

| 5: y = nyΔs + ymin |

| 6: {Copy from host to constant |

| memory} |

| 7: K_fwdsph ⟨⟨⟨ (Nv, Nr), (Nf, 1, 1) ⟩⟩⟩ (x, y, D_) |

| 8: endfor |

| 9: endfor |

| 10: {Copy from global memory to host} |

Table .

Algorithm IX. Implementation of Kernel K_fwdsph ⟨⟨⟨ (Nv, Nr), (Nf, 1, 1) ⟩⟩⟩.

| Input:x, y, D_, C_αpth, C_ |

| Output: D_ |

| 1: ; ; f = (threadIdx.x)Δf + fmin |

| 2: zs = Rscos θs; xs = Rssin θscos ϕs; ys = Rssin θssin ϕs {Calculate locations of transducers} |

| 3: Σr = 0; Σi = 0 {Initiate the partial summation including the real and imaginary parts} |

| 4: fornz = 0 toNz − 1 do |

| 5: z = nzΔs + zmin |

| 6: d = ((x − xs)2 + (y − ys)2 + (z − zs)2)1/2 |

| 7: ; {Calculate SIR; Example here assumes point-like transducers} |

| 8: |

| 9: |

| 10: endfor |

| 11: |

| 12: |

Implementation of the backprojection operator was similar except the dividing and looping were over the vector instead of αsph. The pseudocodes for the backprojection operation are provided in Algorithms 10, 11, 12.

Table .

Algorithm X. Implementation of (on host).

| Input:, |

| Output: |

| 1: fornpth = 0 toNpth − 1 do |

| 2: parm_bwdarg[npth].npth = npth |

| 3: parm_bwdarg[npth]. = & |

| 4: parm_bwdarg[npth].=& |

| 5: parm_bwdarg[npth].= |

| {Pass addresses of arrays to each pthread} |

| 6: pthread_create(&pthreads[npth], NULL, bwd_pthread, |

| (void *)(parm_bwdarg+npth)) {Call function bwd_pthread} |

| 7: endfor |

| 8: fornpth = 0 toNpth − 1 do |

| 9: forn = 0 toNxNyNzdo |

| 10: |

| 11: endfor |

| 12: endfor |

Table .

Algorithm XI. Implementation of function bwd_pthread (on host).

| Input:npth, , , |

| Output: |

| 1: {Copy from host to constant memory} |

| 2: fornr = 0 toNr/Npth − 1 do |

| 3: ; zs = Rscos θs; rs = Rssin θs |

| 4: fornv = 0 toNv − 1 do |

| 5: ; xs = rscos ϕs; ys = rssin ϕs |

| 6: {Copy from host to constant memory} |

| 7: K_bwdsph ⟨⟨⟨ (Ny, Nx), (Nz, 1, 1) ⟩⟩⟩ (xs, ys, zs D_) |

| 8: endfor |

| 9: endfor |

| 10: {Copy from global memory to host} |

Table .

Algorithm XII. Implementation of Kernel K_bwdsph ⟨⟨⟨ (Ny, Nx), (Nz, 1, 1) ⟩⟩⟩.

| Input:xs, ys, zs, D_, C_, C_ |

| Output: D_ |

| 1: x = (blockIdx.y)Δs + xmin; y = (blockIdx.x)Δs + ymin; z = (threadIdx.x)Δs + zmin |

| 2: d = ((x − xs)2 + (y − ys)2 + (z − zs)2)1/2; Σ = 0 {Initiate the partial summation} |

| 3: fornf = 0 toNf − 1 do |

| 4: f = nfΔf + fmin |

| 5: ; {Calculate SIR; Example here assumes point-like transducers} |

| 6: |

| 7: endfor |

| 8: |

DESCRIPTIONS OF COMPUTER SIMULATION AND EXPERIMENTAL STUDIES

The computational efficiency and accuracy of the proposed GPU-based implementations of the FBP algorithm and projection/backprojection operators for use with iterative image reconstruction algorithms were quantified in computer simulation and experimental OAT imaging studies.

Computer-simulation studies

Numerical phantom

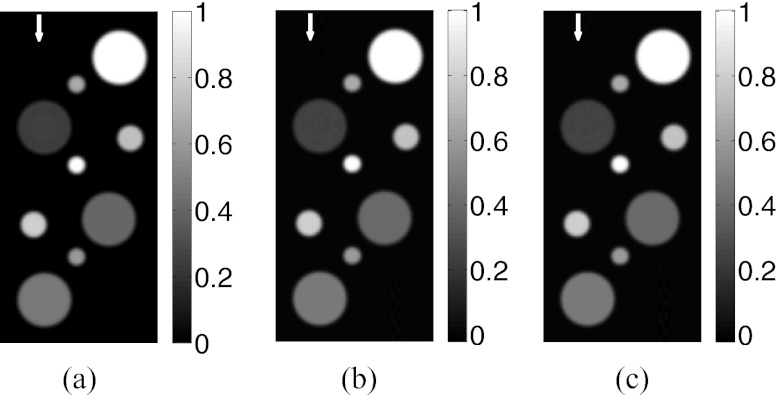

The numerical phantom consisted of nine uniform spheres that were blurred by a 3D Gaussian kernel possessing a full width at half maximum (FWHM) of 0.77 mm. The phantom was contained within a cuboid of size 29.4 × 29.4 × 61.6 mm3. A 2D image corresponding to the plane y = 0 through the phantom is shown in Fig. 2a.

Figure 2.

Slices corresponding to the plane y = 0 of (a) the phantom and the images reconstructed by use of (b) the CPU-based and (c) the GPU-based implementations of the FBP algorithm from the “128 × 90”-data.

Simulated projection data

The measurement surface was a sphere of radius Rs = 65 mm corresponding to an existing OAT imaging system.22, 48 As described in Sec. 3, ideal point-like transducers were uniformly distributed over 128 rings and 90 tomographic views. The 128 rings covered the full π polar angle, i.e., , while the 90 views covered the full 2π azimuth angle. The speed of sound was set at c0 = 1.54 mm/μs. We selected the Grüneisen coefficient as of arbitrary units (a.u.). For each transducer, we analytically calculated 1022 temporal samples of the pressure function at the sampling rate of fsam = 20 MHz by use of Eq. 1. Because we employed a smooth object function, the pressure data were calculated by the following two steps: First, we calculated temporal samples of pressure function pus(rs, t) that corresponds to the nine uniform spheres by1, 36

| (28) |

where ri, Ri, and Ai denote the center location, the radius, and the absorbed energy density of the ith sphere, respectively. Subsequently, we convolved pus(rs, t) with a 1D Gaussian kernel with FWHM = 0.5 μs (Ref. 49) to produce the pressure data. From the simulated pressure data, we calculated the temporal-frequency spectrum by use of FFT, from which we created an alternative data vector that contained 511 frequency components occupying (0, 5] MHz for each transducer. The simulated projection data in either the time domain or the temporal frequency domain will hereafter be referred to as “128 × 90”-data. By undersampling the “128 × 90”-data uniformly over rings and tomographic views, we created three subsets that contained varying number of transducers. These data sets will be referred to as “64 × 90”-data, “64 × 45”-data, and “32 × 45”-data, where the two numbers specify the number of rings and the number of tomographic views, respectively.

Reconstruction algorithms

The GPU accelerated FBP algorithm was employed to reconstruct the object function sampled on a 3D Cartesian grid with spacing Δs = 0.14 mm. The dimension of the reconstructed images was 210 × 210 × 440.

We employed an iterative image reconstruction algorithm that sought to minimize a penalized least-squares (PLS) objective.45, 50 Two versions of the reconstruction algorithm were developed that utilized the interpolation-based imaging model and the spherical-voxel-based imaging model, respectively. The two versions sought to solve the optimization problems by use of the linear conjugate gradient (CG) method51, 52

| (29) |

and

| (30) |

respectively, where R(α) is a regularizing penalty term whose impact is controlled by the regularization parameter μ. The penalty term was employed only when processing the experimental data as described in Sec. 4B. The reconstruction algorithms required computation of one projection and one backprojection operation at each iteration. Hereafter, the two reconstruction algorithms will be referred to as PLS-Int and PLS-Sph algorithms, respectively. We set Δs = 0.14 mm. Therefore, both the dimensions of and were 210 × 210 × 440.

Performance assessment

We compared the computational times of 3D image reconstruction corresponding to the GPU- and CPU-based implementations. The CPU-based implementations of the PLS-Int and PLS-Sph algorithms take several days to complete a single iteration even for the “32 × 45”-data. Therefore, we only recorded the computational time for the CPU-based implementations to complete a single iteration when the data vector contained a single transducer. We assumed that the computational times were linearly proportional to the number of transducers in the data sets because the CPU-based implementations are sequential.

The GPU-based implementations employed the single-precision floating-point format rather than the conventional double-precision utilized by CPU-based implementations. In order to quantify how the single-precision floating-point format would degrade the image accuracy, we calculated the root-mean-square-error (RMSE) between the reconstructed image and the phantom defined by

| (31) |

where α and are the samples of the phantom and the coefficients of the reconstructed images, respectively.

Hardware specifications

All implementations were tested on the platform consisted of dual quad-core Intel(R) Xeon (R) CPUs with a clock speed 2.40 GHz. The GPU-based implementations of the FBP and the PLS-Int algorithms were tested on a single Tesla C2050 GPU, while the PLS-Sph algorithm was tested on 8 Tesla C1060 GPUs.

Experimental studies

The FBP, PLS-Int, and PLS-Sph algorithms were investigated by use of an existing data set corresponding to a live mouse.22, 48 The scanning geometry and dimensions were the same as those employed in the computer-simulation studies except that only 64 rings were uniformly distributed over the polar angle ranging from 14° to 83°. The transducers were of size 2 × 2 mm2. The raw data were acquired at 180 tomographic views, which are referred to as “full data.” We undersampled the “full data” uniformly over the tomographic views, constructing a subset containing 45 tomographic views. The subset will be referred to as “quarter data.”

Unlike in the idealized computer-simulation studies, the transducer response has to be compensated for when processing the experimental data. When implementing the FBP algorithm, the EIR was compensated for by a direct Fourier deconvolution, expressed in temporal frequency domain as3

| (32) |

where is a window function for noise suppression. In this study, we adopted the Hann window function defined as

| (33) |

where the cutoff frequency was chosen as fc = 5 MHz. When applying iterative image reconstruction algorithms, the transducer effects were implicitly compensated for during iteration by employing imaging models that incorporates the transducer characteristics.14, 22 We incorporated the EIR into the interpolation-based imaging model while incorporating both the EIR and the SIR into the spherical-voxel-based imaging model.

For both PLS-Int and PLS-Sph algorithms, we employed a quadratic smoothness penalty to mitigate measurement noise50

| (34) |

where nx, ny, and nz were the indices of the neighboring voxels before the nth voxel along the three Cartesian axes, respectively.

RESULTS

Computational efficiency

As shown in Table 1, the GPU-based implementations took less than 0.1%, 0.8%, and 0.4% of the computational times required by corresponding CPU-based implementations for the FBP, the PLS-Int, and the PLS-Sph algorithms, respectively. The relative computational times for the GPU-based implementations are nearly linearly proportional to the amount of data. Note that the “64 × 90”-data and the “quarter data” are of the same size. However, the computational times of the “quarter data” are more than 1.8 times those of the “64 × 90”-data. This is because the calculation of the SIR increases the computational complexity of the reconstruction algorithm.

Table 1.

Computational times of the 3D image reconstructions by use of the CPU- and GPU-based implementations.

| Data sets | FBP [s] |

PLS-Int [min/iteration] |

PLS-Sph [min/iteration] |

|||

|---|---|---|---|---|---|---|

| CPU | GPU | CPU | GPU | CPU | GPU | |

| “32 × 45” | 6189 | 6 | 2448 | 20 | 7961 | 22 |

| “64 × 45” | 12 975 | 12 | 4896 | 35 | 15 923 | 43 |

| “64 × 90” | 26 190 | 23 | 9792 | 68 | 31 845 | 86 |

| “128 × 90” | 53 441 | 46 | … | … | … | … |

| “Quarter data” | 12 975 | 12 | 4896 | 35 | 19 776 | 78 |

| “Full data” | 53 441 | 46 | 19 968 | 137 | 79 177 | 313 |

Computational accuracy

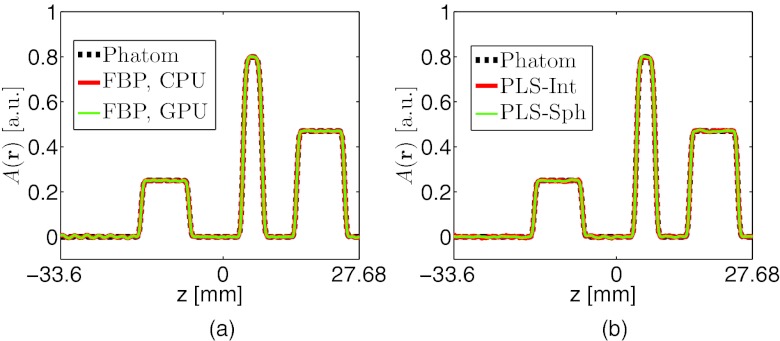

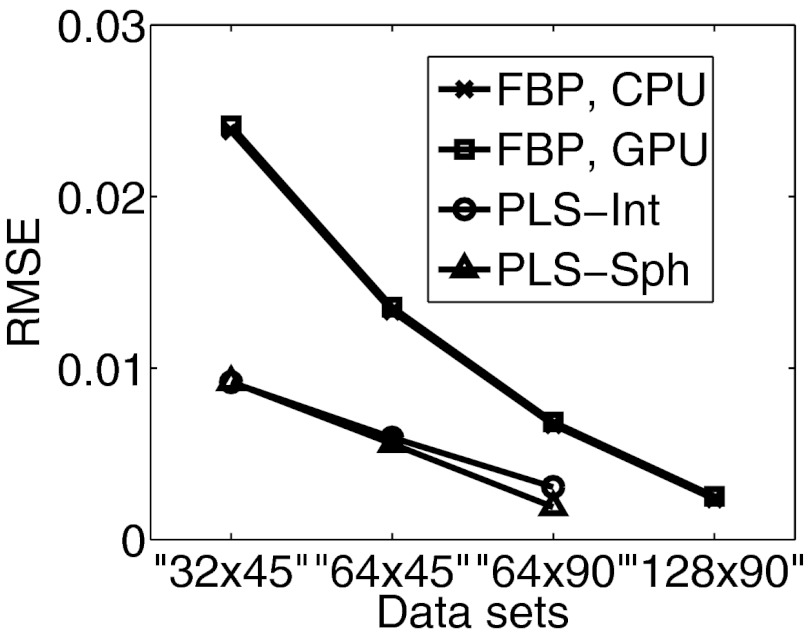

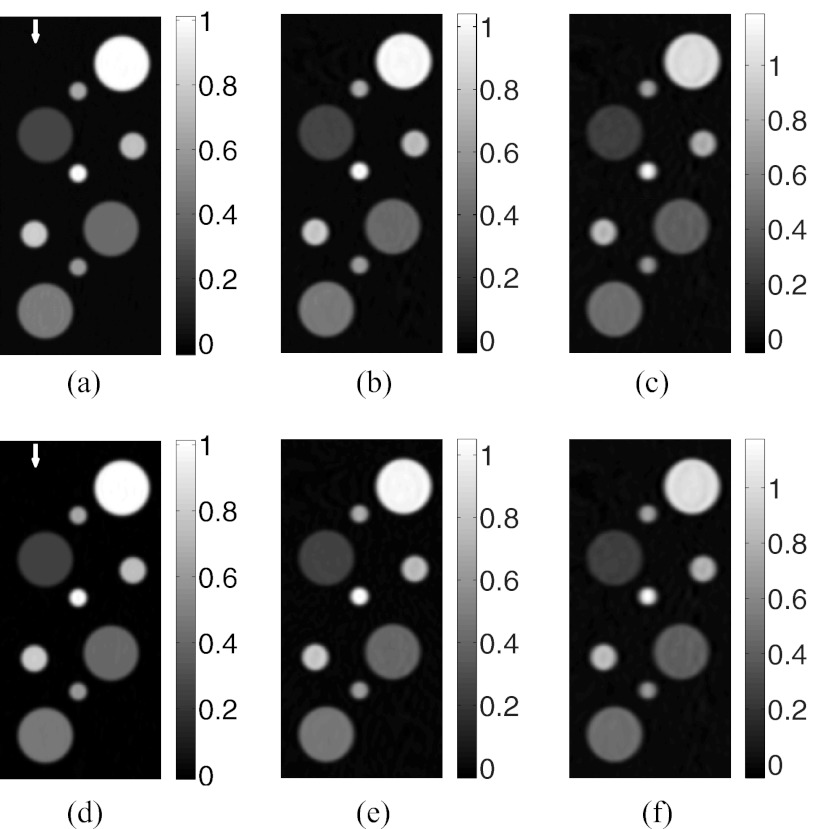

Images reconstructed by use of the CPU- and GPU-based implementations of the FBP algorithm are almost identical. From the “128 × 90”-data, in which case, transducers were densely distributed over the measurement surface, both implementations reconstructed accurate images, as shown in Figs. 2b, 2c. The profiles along the three arrows in Fig. 2 are plotted in Fig. 5a, suggesting a nearly exact reconstruction. As expected, when the amount of measurement data are reduced, the reconstructed images contain more artifacts as shown in Fig. 3. However, the images reconstructed by use of GPU- and CPU-based implementations remain indistinguishable. The plots of the RMSE versus the amount of measurement data employed in Fig. 6 overlap, also suggesting the single-precision floating-point format employed by the GPU-based implementation has little impact on the computational accuracy.

Figure 5.

Profiles along the line (x, y) = (−6.58, 0) mm of the images reconstructed by use of (a) the CPU- and GPU-based implementations of the FBP algorithm from the “128 × 90”-data, and (b) the GPU-based implementations of the PLS-Int and the PLS-Sph algorithms from the “64 × 90”-data.

Figure 3.

Slices corresponding to the plane y = 0 of the images reconstructed by use of the FBP algorithm with (a) the CPU-based implementation from the “64 × 90”-data, (b) the CPU-based implementation from the “64 × 45”-data, (c) the CPU-based implementation from the “32 × 45”-data, (d) the GPU-based implementation from the “64 × 90”-data, (e) the GPU-based implementation from the “64 × 45”-data, and (f) the GPU-based implementation from the “32 × 45”-data.

Figure 6.

Plots of the RMSE against the amount of data by use of the FBP, the PLS-Int and the PLS-Sph algorithms.

The GPU-based implementations of the PLS-Int and PLS-Sph algorithms both reconstructed accurate images as displayed in Fig. 4. As expected, the images reconstructed by use of both iterative algorithms contain fewer artifacts than those reconstructed by use of the FBP algorithm from the same amount of data. Unlike the images reconstructed by use of the FBP algorithm from the “64 × 90”-data [Figs. 3a or 3d], the images reconstructed by use of both iterative algorithms [Figs. 4a, 4d] appear to be identical to the numerical phantom. The profiles along the two arrows in Figs. 4a, 4d are plotted in Fig. 5b, further confirming the computational accuracy of iterative image reconstruction algorithms. The plots of the RMSE versus the amount of measurement data employed in Fig. 6 suggest the iterative image reconstruction algorithms in general outperform the FBP algorithm from the same amount of data.

Figure 4.

Slices corresponding to the plane y = 0 of the images reconstructed by use of the GPU-based implementations of (a) the PLS-Int algorithm from the “64 × 90”-data, (b) the PLS-Int algorithm from the “64 × 45”-data, (c) the PLS-Int algorithm from the “32 × 45”-data, (d) the PLS-Sph algorithm from the “64 × 90”-data, (e) the PLS-Sph algorithm from the “64 × 45”-data, and (f) the PLS-Sph algorithm from the “32 × 45”-data.

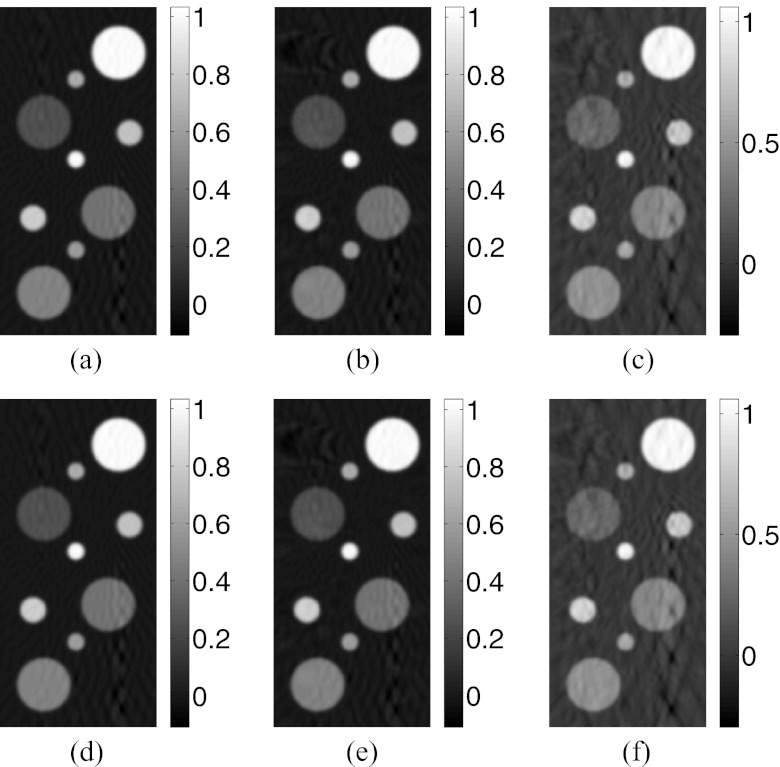

Experimental results

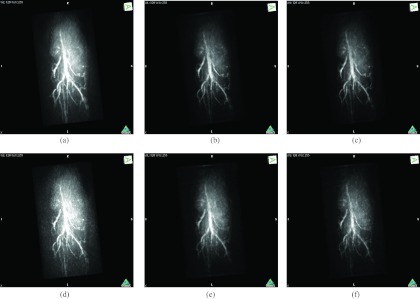

The maximum intensity projection (MIP) of the 3D mouse images reconstructed by use of the GPU-based implementations reveal the mouse body vasculature as shown in Fig. 7. Images reconstructed by use of both the PLS-Int and the PLS-Sph algorithms appear to have cleaner background than the images reconstructed by use of the FBP algorithm from the same amount of data. All images reconstructed by iterative algorithms were obtained by 20-iterations starting with uniform zeros as the initial guess. The PLS-Int algorithm took approximately a half day and 2 days to process the “quarter data” and the “full data,” respectively. The PLS-Sph algorithm took approximately 1 day and 4 days to process the “quarter data” and the “full data,” respectively. Alternatively, if the CPU-based implementations were utilized, the PLS-Int algorithm would take an estimated 68 days and 277 days to process the “quarter data” and the “full data,” respectively. The PLS-Sph algorithm would take an estimated 275 days and 1100 days to process the “quarter data” and the “full data,” respectively.

Figure 7.

MIP renderings of the 3D images of the mouse body reconstructed by use of the GPU-based implementations of (a) the FBP algorithm from the “full data,” (b) the PLS-Int algorithm from the “full data” with μ = 1.0 × 104, (c) the PLS-Sph algorithm from the “full data” with μ = 1.0 × 104, (d) the FBP algorithm from the “quarter data,” (e) the PLS-Int algorithm from the “quarter data” with μ = 1.0 × 103, and (f) the PLS-Sph algorithm from the “quarter data” with μ = 1.0 × 103. The grayscale window is [0,12.0].

DISCUSSION AND CONCLUSION

In this study, we developed and investigated GPU-based implementations of the FBP algorithm and two pairs of projection/backprojection operators for 3D OAT. Our implementation of the FBP algorithm improved the computational efficiency over 1000 times compared to the CPU-based implementation. This work complements our earlier studies that demonstrated the feasibility of 3D iterative image reconstruction in practice.21, 22

Our current implementations of the iterative image reconstruction algorithms still require several days to process the densely sampled data set, which, however, can be further improved. First, the amount of measurement data required for accurate image reconstruction can be further reduced by developing advanced image reconstruction methods.13, 15, 22, 53 Second, the number of iterations required can be reduced by developing fast-converging optimization algorithms.22, 54, 55

The proposed parallelization strategies by use of GPUs are of general interest. The implementation of the FBP algorithm6 can be adapted to other analytic image reconstruction algorithms, including those described in Refs. 5, 7, 56, 57, 58. We demonstrated the feasibility of PLS algorithm that utilized the proposed GPU-based implementations of the projection/backprojection operators. By use of these implementations, many advanced image reconstruction algorithms may also be feasible in practice.22 Though we described our parallelization strategies for the projection/backprojection operators that utilized two discrete-to-discrete imaging models, these strategies can also be applied to other D-D imaging models.9, 11, 20, 59 Therefore, the proposed algorithms will facilitate the further investigation and application of advanced image reconstruction algorithms in 3D OAT.

ACKNOWLEDGMENT

This research was supported in part by the National Institutes of Health (NIH) Award Nos. EB010049 and CA167446.

APPENDIX: DISCRETIZATION OF SRT

Derivation of Eq. 12

The integrated data function g(rs, t) in Eq. 2, evaluated at the qth transducer and the kth time instance, can be expressed as

| (A1) |

where denotes the location of the qth point-like transducer. We defined a local coordinate system, distinguished by a superscript “tr,” centered at the qth transducer with the ztr-axis pointing to the origin of the global coordinate system as shown in Fig. 1b. Assuming the object function A(r) is compactly supported in a sphere of radius R, the integral surface is symmetric about the ztr-axis. Thus, the orientations of the xtr- and ytr-axes can be arbitrary within the ztr = 0 plane. Representing the right-hand side of Eq. A1 in the local spherical coordinate system, one obtains

| (A2) |

where is half of the apex angle of the cone that corresponds to the intersectional spherical cap as shown in Fig. 1b. The polar angle θtr and the azimuth angle ϕtr were discretized with intervals and that satisfied

| (A3) |

Therefore, Eq. A2 can be approximated by

| (A4) |

where , , , and . We denoted by rk, i, j the location in the global coordinate system corresponding to the location vector in the local coordinate system in Eq. A4. On substitution from the finite-dimensional representation Eq. 6 into Eq. A4 with α and ψn(r) defined by Eqs. 9, 10, respectively, we obtained

| (A5) |

References

- Oraevsky A. A. and Karabutov A. A., “Optoacoustic tomography,” in Biomedical Photonics Handbook, edited by Vo-Dinh T. (CRC, Boca Raton, FL, 2003), Chap. 34. [Google Scholar]

- Wang L. V., “Tutorial on photoacoustic microscopy and computed tomography,” IEEE J. Sel. Top. Quantum Electron. 14, 171–179 (2008). 10.1109/JSTQE.2007.913398 [DOI] [Google Scholar]

- Kruger R., Reinecke D., and Kruger G., “Thermoacoustic computed tomography: Technical considerations,” Med. Phys. 26, 1832–1837 (1999). 10.1118/1.598688 [DOI] [PubMed] [Google Scholar]

- Cox B. T., Arridge S. R., Köstli K. P., and Beard P. C., “Two-dimensional quantitative photoacoustic image reconstruction of absorption distributions in scattering media by use of a simple iterative method,” Appl. Opt. 45, 1866–1875 (2006). 10.1364/AO.45.001866 [DOI] [PubMed] [Google Scholar]

- Kunyansky L. A., “Explicit inversion formulae for the spherical mean Radon transform,” Inverse Probl. 23, 373–383 (2007). 10.1088/0266-5611/23/1/021 [DOI] [Google Scholar]

- Finch D., Patch S., and Rakesh, “Determining a function from its mean values over a family of spheres,” SIAM J. Math. Anal. 35, 1213–1240 (2004). 10.1137/S0036141002417814 [DOI] [Google Scholar]

- Xu M. and Wang L. V., “Universal back-projection algorithm for photoacoustic computed tomography,” Phys. Rev. E 71, 016706 (2005). 10.1103/PhysRevE.71.016706 [DOI] [PubMed] [Google Scholar]

- Xu Y., Feng D., and Wang L. V., “Exact frequency-domain reconstruction for thermoacoustic tomography: I. Planar geometry,” IEEE Trans. Med. Imaging 21, 823–828 (2002). 10.1109/TMI.2002.801172 [DOI] [PubMed] [Google Scholar]

- Paltauf G., Viator J. A., Prahl S. A., and Jacques S. L., “Iterative reconstruction algorithm for optoacoustic imaging,” J. Acoust. Soc. Am. 112, 1536–1544 (2002). 10.1121/1.1501898 [DOI] [PubMed] [Google Scholar]

- Yuan Z. and Jiang H., “Three-dimensional finite-element-based photoacoustic tomography: Reconstruction algorithm and simulations,” Med. Phys. 34, 538–546 (2007). 10.1118/1.2409234 [DOI] [PubMed] [Google Scholar]

- Ephrat P., Keenliside L., Seabrook A., Prato F. S., and Carson J. J. L., “Three-dimensional photoacoustic imaging by sparse-array detection and iterative image reconstruction,” J. Biomed. Opt. 13, 054052 (2008). 10.1117/1.2992131 [DOI] [PubMed] [Google Scholar]

- Zhang J., Anastasio M., La Riviere P., and Wang L., “Effects of different imaging models on least-squares image reconstruction accuracy in photoacoustic tomography,” IEEE Trans. Med. Imaging 28, 1781–1790 (2009). 10.1109/TMI.2009.2024082 [DOI] [PubMed] [Google Scholar]

- Provost J. and Lesage F., “The application of compressed sensing for photo-acoustic tomography,” IEEE Trans. Med. Imaging 28, 585–594 (2009). 10.1109/TMI.2008.2007825 [DOI] [PubMed] [Google Scholar]

- Wang K., Ermilov S. A., Su R., Brecht H.-P., Oraevsky A. A., and Anastasio M. A., “An imaging model incorporating ultrasonic transducer properties for three-dimensional optoacoustic tomography,” IEEE Trans. Med. Imaging 30, 203–214 (2011). 10.1109/TMI.2010.2072514 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Guo Z., Li C., Song L., and Wang L. V., “Compressed sensing in photoacoustic tomography in vivo,” J. Biomed. Opt. 15, 021311 (2010). 10.1117/1.3381187 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang C., Oraevsky A. A., and Anastasio M. A., “Investigation of limited-view image reconstruction in optoacoustic tomography employing a priori structural information,” Proc. SPIE 7800, 780004 (2010). 10.1117/12.861005 [DOI] [Google Scholar]

- Xu Z., Li C., and Wang L. V., “Photoacoustic tomography of water in phantoms and tissue,” J. Biomed. Opt. 15, 036019 (2010). 10.1117/1.3443793 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Xu Z., Zhu Q., and Wang L. V., “In vivo photoacoustic tomography of mouse cerebral edema induced by cold injury,” J. Biomed. Opt. 16, 066020 (2011). 10.1117/1.3584847 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buehler A., Rosenthal A., Jetzfellner T., Dima A., Razansky D., and Ntziachristos V., “Model-based optoacoustic inversions with incomplete projection data,” Med. Phys. 38, 1694–1704 (2011). 10.1118/1.3556916 [DOI] [PubMed] [Google Scholar]

- Bu S., Liu Z., Shiina T., Kondo K., Yamakawa M., Fukutani K., Someda Y., and Asao Y., “Model-based reconstruction integrated with fluence compensation for photoacoustic tomography,” IEEE Trans. Biomed. Eng. 59, 1354–1363 (2012). 10.1109/TBME.2012.2187649 [DOI] [PubMed] [Google Scholar]

- Wang K., Su R., Oraevsky A. A., and Anastasio M. A., “Investigation of iterative image reconstruction in optoacoustic tomography,” Proc. SPIE 8223, 82231Y (2012). 10.1117/12.909610 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang K., Su R., Oraevsky A. A., and Anastasio M. A., “Investigation of iterative image reconstruction in three-dimensional optoacoustic tomography,” Phys. Med. Biol. 57, 5399 (2012). 10.1088/0031-9155/57/17/5399 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Huang C., Nie L., Schoonover R. W., Guo Z., Schirra C. O., Anastasio M. A., and Wang L. V., “Aberration correction for transcranial photoacoustic tomography of primates employing adjunct image data,” J. Biomed. Opt. 17, 066016 (2012). 10.1117/1.JBO.17.6.066016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dean-Ben X., Buehler A., Ntziachristos V., and Razansky D., “Accurate model-based reconstruction algorithm for three-dimensional optoacoustic tomography,” IEEE Trans. Med. Imaging 31, 1922–1928 (2012). 10.1109/TMI.2012.2208471 [DOI] [PubMed] [Google Scholar]

- Huang C., Nie L., Schoonover R. W., Wang L. V., and Anastasio M. A., “Photoacoustic computed tomography correcting for heterogeneity and attenuation,” J. Biomed. Opt. 17, 061211 (2012). 10.1117/1.JBO.17.6.061211 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wang B., Xiang L., Jiang M. S., Yang J., Zhang Q., Carney P. R., and Jiang H., “Photoacoustic tomography system for noninvasive real-time three-dimensional imaging of epilepsy,” Biomed. Opt. Express 3, 1427–1432 (2012). 10.1364/BOE.3.001427 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Buehler A., Deán-Ben X. L., Claussen J., Ntziachristos V., and Razansky D., “Three-dimensional optoacoustic tomography at video rate,” Opt. Express 20, 22712–22719 (2012). 10.1364/OE.20.022712 [DOI] [PubMed] [Google Scholar]

- Lindholm E., Nickolls J., Oberman S., and Montrym J., “Nvidia tesla: A unified graphics and computing architecture,” IEEE MICRO 28, 39–55 (2008). 10.1109/MM.2008.31 [DOI] [Google Scholar]

- NVIDIA CUDA Programming Guide 2.0 NVIDIA, 2008.

- Zhao X., Hu J.-J., and Zhang P., “GPU-based 3D cone-beam CT image reconstruction for large data volume,” J. Biomed. Imaging 2009(8), 1–8 (2009). 10.1155/2009/149079 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Okitsu Y., Ino F., and Hagihara K., “High-performance cone beam reconstruction using CUDA compatible GPUs,” Parallel Comput. 36, 129–141 (2010). 10.1016/j.parco.2010.01.004 [DOI] [Google Scholar]

- Chou C.-Y., Chuo Y.-Y., Hung Y., and Wang W., “A fast forward projection using multithreads for multirays on GPUs in medical image reconstruction,” Med. Phys. 38, 4052–4065 (2011). 10.1118/1.3591994 [DOI] [PubMed] [Google Scholar]

- Stone S., Haldar J., Tsao S., Hwu W.-M. W., Sutton B., and Liang Z.-P., “Accelerating advanced MRI reconstructions on GPUs,” J. Parallel Distrib. Comput. 68, 1307–1318 (2008). 10.1016/j.jpdc.2008.05.013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Treeby B. E. and Cox B. T., “k-wave: plbibsc-matlab toolbox for the simulation and reconstruction of photoacoustic wave fields,” J. Biomed. Opt. 15, 021314 (2010). 10.1117/1.3360308 [DOI] [PubMed] [Google Scholar]

- Barrett H. and Myers K., Foundations of Image Science, Wiley Series in Pure and Applied Optics (John Wiley & Son, Hoboken, NJ, 2004). [Google Scholar]

- Wang L. V. and Wu H.-I., Biomedical Optics, Principles and Imaging (Wiley, Hoboken, NJ, 2007). [Google Scholar]

- Xu M. and Wang L. V., “Photoacoustic imaging in biomedicine,” Rev. Sci. Instrum. 77, 041101–041122 (2006). 10.1063/1.2195024 [DOI] [Google Scholar]

- Wang K., and Anastasio M. A., “Photoacoustic and thermoacoustic tomography: Image formation principles,” in Handbook of Mathematical Methods in Imaging, edited by Scherzer O. (Springer, New York, NY, 2011), Chap. 18. [Google Scholar]

- Rosenthal A., Ntziachristos V., and Razansky D., “Optoacoustic methods for frequency calibration of ultrasonic sensors,” IEEE Trans. Ultrason. Ferroelectr. Freq. Control 58, 316–326 (2011). 10.1109/TUFFC.2011.1809 [DOI] [PubMed] [Google Scholar]

- Kak A. C. and Slaney M., Principles of Computerized Tomographic Imaging (IEEE, New York, NY, 1988). [Google Scholar]

- Anastasio M. A., Zhang J., Pan X., Zou Y., Keng G., and Wang L. V., “Half-time image reconstruction in thermoacoustic tomography,” IEEE Trans. Med. Imaging 24, 199–210 (2005). 10.1109/TMI.2004.839682 [DOI] [PubMed] [Google Scholar]

- Anastasio M., Zhang J., Sidky E., Zou Y., Xia D., and Pan X., “Feasibility of half-data image reconstruction in 3-D reflectivity tomography with a spherical aperture,” IEEE Trans. Med. Imaging 24, 1100–1112 (2005). 10.1109/TMI.2005.852055 [DOI] [PubMed] [Google Scholar]

- Claerbout J. F., Earth Sounding Analysis: Processing Versus Inversion (Blackwell Scientific, Cambridge, MA, 1992). [Google Scholar]

- Khokhlova T. D., Pelivanov I. M., Kozhushko V. V., Zharinov A. N., Solomatin V. S., and Karabutov A. A., “Optoacoustic imaging of absorbing objects in a turbid medium: Ultimate sensitivity and application to breast cancer diagnostics,” Appl. Opt. 46, 262–272 (2007). 10.1364/AO.46.000262 [DOI] [PubMed] [Google Scholar]

- Aarsvold J. N., Emission Tomography: The Fundamentals of PET and SPECT (Elsevier Academic, San Diego, CA, 2004). [Google Scholar]

- Zeng G. and Gullberg G., “Unmatched projector/backprojector pairs in an iterative reconstruction algorithm,” IEEE Trans. Med. Imaging 19, 548–555 (2000). 10.1109/42.870265 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morton K. W. and Mayers D. F., Numerical Solution of Partial Differential Equations: An Introduction (Cambridge University Press, New York, NY, 2005). [Google Scholar]

- Brecht H.-P., Su R., Fronheiser M., Ermilov S. A., Conjusteau A., and Oraevsky A. A., “Whole-body three-dimensional optoacoustic tomography system for small animals,” J. Biomed. Opt. 14, 064007 (2009). 10.1117/1.3259361 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Anastasio M. A., Zhang J., Modgil D., and La Riviere P., “Application of inverse source concepts to photoacoustic tomography,” Inverse Probl. 23, S21–S35 (2007). 10.1088/0266-5611/23/6/S03 [DOI] [Google Scholar]

- Fessler J. A., “Penalized weighted least-squares reconstruction for positron emission tomography,” IEEE Trans. Med. Imaging 13, 290–300 (1994). 10.1109/42.293921 [DOI] [PubMed] [Google Scholar]

- Shewchuk J. R., “An introduction to the conjugate gradient method without the agonizing pain,” Technical Report No. CMU-CS-94-125 (Carnegie Mellon University, Pittsburgh, PA, 1994).

- Fessler J. and Booth S., “Conjugate-gradient preconditioning methods for shift-variant PET image reconstruction,” IEEE Trans. Image Process. 8, 688–699 (1999). 10.1109/83.760336 [DOI] [PubMed] [Google Scholar]

- Meng J., Wang L. V., Ying L., Liang D., and Song L., “Compressed-sensing photoacoustic computed tomography in vivo with partially known support,” Opt. Express 20, 16510–16523 (2012). 10.1364/OE.20.016510 [DOI] [Google Scholar]

- Beck A. and Teboulle M., “Fast gradient-based algorithms for constrained total variation image denoising and deblurring problems,” IEEE Trans. Image Process. 18, 2419–2434 (2009). 10.1109/TIP.2009.2028250 [DOI] [PubMed] [Google Scholar]

- Boyd S., Parikh N., Chu E., Peleato B., and Eckstein J., “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Found. Trends Mach. Learn. 3, 1–122 (2011). 10.1561/2200000016 [DOI] [Google Scholar]

- Xu M. and Wang L. V., “Time-domain reconstruction for thermoacoustic tomography in a spherical geometry,” IEEE Trans. Med. Imaging 21, 814–822 (2002). 10.1109/TMI.2002.801176 [DOI] [PubMed] [Google Scholar]

- Finch D., Haltmeier M., and Rakesh, “Inversion of spherical means and the wave equation in even dimensions,” SIAM J. Appl. Math. 68, 392–412 (2007). 10.1137/070682137 [DOI] [Google Scholar]

- Elbau P., Scherzer O., and Schulze R., “Reconstruction formulas for photoacoustic sectional imaging,” Inverse Probl. 28, 045004 (2012). 10.1088/0266-5611/28/4/045004 [DOI] [Google Scholar]

- Rosenthal A., Razansky D., and Ntziachristos V., “Fast semi-analytical model-based acoustic inversion for quantitative optoacoustic tomography,” IEEE Trans. Med. Imaging 29, 1275–1285 (2010). 10.1109/TMI.2010.2044584 [DOI] [PubMed] [Google Scholar]