Abstract

The interactive capacity of the Internet offers benefits that are intimately linked with contemporary research innovation in natural resource and environmental studies. However, e-research methodologies, such as the e-Delphi technique, have yet to undergo critical review. This study advances methodological discourse on the e-Delphi technique by critically assessing an e-Delphi case study. The analysis suggests that the benefits of using an e-Delphi are noteworthy, but that researchers are likely to face challenges that could compromise research validity and reliability. To ensure that these issues are sufficiently considered when planning and designing an e-Delphi, important facets of the technique are discussed, and recommendations are offered to help environmental researchers avoid potential pitfalls in coordinating e-Delphi research.

Keywords: Delphi technique, e-research, Internet, Natural resources, Environmental management, Social values, Coastal zone

Introduction

The availability and adoption of Internet technology have increased phenomenally since its introduction in the late 1980s, transforming the management and decision making that surround natural resources and the environment. The United Nations International Telecommunication Union (ITU) reports that the number of Internet users surpassed 2 billion in 2010, with projections indicating that half of the world’s population will have broadband access by 2015 (ITU News 2011; UN 2010). While the United States has historically had the most users on a per capita basis, growth is not limited to developed nations. The spatial reach, or “penetration rate,” of the Internet continues to grow within developing countries as well. China, for example, overtook the United States in 2006 as the country with the most Internet users (160 million), as a result of its rapidly improving infrastructure and growing economy (China Internet Network Information Centre 2007; ITU News 2011). In the realm of natural resource and environmental management (NREM), these technological advances have given rise to online collaborative communities: virtual spaces containing a wide range of new tools and services that facilitate local, regional, and global interactions between and among relevant stakeholders around the world. Within NREM, decision making involves a complex web of biophysical elements and trade-offs among social interests, and, as a process, it relies on robust cooperation between stakeholders. The ecosystem of communication and data sharing offered by the online digital age is profoundly shifting how stakeholders are engaged, how policy processes are conducted, and how management initiatives are carried out, advances to which NREM researchers (and practitioners) must adapt.

Suffice it to say, the Internet has changed the way that environmental data and information are shared, which has clear implications for the evolution of NREM research methodology. The Internet offers the potential for larger and more diverse samples, reduced administration costs and time investments, innovative data management and analysis tools, and a number of other appealing benefits (Donohoe and Needham 2009). In the NREM context, Dalcanale and others (2011) and Mahler and Regan (2011) report that advances in environmental management have paralleled the evolution of information technology, most notably the prevalence and integration of geographic information technologies in research and decision making. Greater public availability of information through digital media has driven a shift away from centralized decision making toward participatory processes (Dalcanale and others 2011). Increased hardware and software complexity, along with the development of the World Wide Web, “has resulted in a change from centralized mainframes, to the wide popularity of personal computers, collaborative sites, and online, social and professional networks” (Dalcanale and others 2011, p. 443), but these gains have come at a cost. Benfield and Szlemko (2006) warn that the apparent growth in Internet-based research methods, techniques, and tools is misleading, because e-research remains marginal in size and scope compared with the wealth of traditional research (e.g., paper-and-pencil surveys, face-to-face interviews, focus groups) published over similar time periods.

Internet-based NREM research has yet to undergo critical methodological review, and procedural and analytical practice therefore remains underdeveloped. The majority of peer-reviewed articles identified in Benfield and Szlemko’s (2006) meta-analysis of Internet-based data collection methods report little evidence supporting the appropriateness of the Internet as a tool for scientific inquiry. Dalcanale and others (2011) agree, arguing that despite efforts to capitalize on the potential of information technology for NREM research and practice, inadequate attention has been paid to how newly emerging e-research methods are being used. In the absence of such discussion, Roztocki (2001, p. 1) argues that “it is likely that the resulting academic research will be flawed or compromised in some manner.” There is therefore a need to discuss how to address the unique methodological issues confronting researchers who operate in an increasingly electronic and interconnected world. Understanding how e-research methods have been applied in NREM studies may offer scholars insights into how to incorporate these burgeoning techniques into future studies. In that vein, this study contributes a critical examination of the e-Delphi technique, one of a growing list of online research methodologies. First, the character of the Delphi as a consensus-building process, particularly its fit within NREM research and practice, is outlined. Next, we offer guidance for NREM researchers on its use through a case analysis.

The Delphi Technique

The Delphi technique is one traditional research method now being operationalized via the Internet. The Delphi is used to systematically combine expert opinion in order to arrive at an informed group consensus on a complex problem (Linstone and Turoff 1975). In principle, the Delphi is a group method administered by a researcher or research team who assembles a panel of experts, poses questions, synthesizes feedback, and guides the group toward its goal: consensus. Unlike traditional survey methods in any form (online, in-person, mail, etc.), where the goal is to estimate means and generalize to a given population, the Delphi is an iterative process, more akin to an extended series of focus groups, that leads an identified group of experts toward consensus. Claims of generalizability are rarely made in Delphi applications; the validity of results rests on the iterative process of expert opinion development and consensus building rather than on statistical significance. The Delphi is a technique for organizing conflicting values and judgments by facilitating the incorporation of multiple opinions into a consensus (Powell 2003). The payoff of a Delphi study is typically expert concurrence in an area where none existed previously (Sackman 1975). This is achieved through iterative rounds of sequential surveys interspersed with controlled feedback reports and the interpretation of experts’ opinions. Iteration with feedback continues until the convergence of opinion reaches a point of diminishing returns, which suggests agreement. Although consensus is the general goal of Delphi methodologies, survey iteration can also conclude without agreement, a possibility that researchers need to be aware of and sensitive to.
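One common way to build the controlled feedback report between rounds is to summarize each item's ratings with a measure of central tendency and a measure of spread, then return that summary to the panel. The following Python sketch assumes numeric ratings (for example, on a 1 to 5 scale) and uses the median and interquartile range; these statistics, the data layout, and the function name `feedback_report` are illustrative assumptions, not the procedure used in the study.

```python
from statistics import median, quantiles


def feedback_report(ratings):
    """Summarize one Delphi round for controlled feedback.

    ratings: hypothetical layout {item: [score, score, ...]}, with at least
    two scores per item (required by statistics.quantiles).
    Returns {item: (median, interquartile_range)}.
    """
    report = {}
    for item, scores in ratings.items():
        # quantiles(n=4) returns the three quartile cut points Q1, Q2, Q3.
        q1, _, q3 = quantiles(scores, n=4, method="inclusive")
        report[item] = (median(scores), q3 - q1)
    return report


# Usage with a hypothetical item name: five panelists rate one item.
print(feedback_report({"recreation": [4, 5, 3, 4, 4]}))
# prints {'recreation': (4, 0.0)}
```

A narrow interquartile range signals that most panelists already agree on an item, which tells the panel where further discussion is, and is not, needed in the next round.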

Delphi toolkits and meta-analyses are available in a broad range of fields, including health education and behavior (de Meyrick 2003), nursing and health services (Cantrill and others 1996; Powell 2003; Keeney and others 2011), tourism management (Donohoe 2011; Green and others 1990), information and management systems (Okoli and Pawlowski 2004), and business administration (Day and Bobeva 2005; Duboff 2007; Hayes 2007). In the NREM domain, the technique has been used to investigate a variety of research topics and issues. Representative examples published in Environmental Management include mediation of environmental disputes (Miller and Cuff 1986), citizen participation in decision making (Judge and Podgor 1983), environmental initiative prioritization (Gokhale 2001), exploration of diversity in contemporary ecological thinking (Moore and others 2009), and adaptation options in the face of climate change (Lemieux and Scott 2011).

The Delphi is well suited to NREM research for two reasons. First, it is designed to explore issues and topics where minimal information or agreement exists, a relatively common situation in NREM across contexts and disciplines. Second, it allows for the introduction and integration of viewpoints, opinions, and insights from a wide array of stakeholders, including managers, citizens, public officials, and advocacy groups. This is a valuable asset in NREM, which typically seeks diverse input yet often relies on institutional, disciplinary expertise. For example, emergent NREM issues that arise out of ever-changing policy require new and innovative thinking; relevant baseline knowledge may not exist, which often necessitates drawing on diverse experts. This need for assorted proficiencies, to provide a variety of opinions and assessments at the outset of issue brainstorming, stems from the growing understanding of ecosystem complexity (i.e., systems thinking within NREM).

It is important to note that the particular value of the Delphi in NREM lies in building consensus around a topic where little agreement exists, not in defining a problem or deciding on a course of action. Pragmatically, then, in NREM contexts the Delphi is best suited to providing baseline information (perceptions, opinions, and insights) on a given topic, where consensus can serve as a springboard for further discussion and development within relevant resource management structures. NREM contexts are generally legally bound and politically driven to the point that issues of participant selection and consensus standards would be problematic if the Delphi were applied as a decision-making tool. Instead, Delphi findings in these settings are most beneficial for agenda insight and development and for determining what is important across stakeholder interests regarding a given topic.

The technique’s advantages and benefits to NREM researchers are summarized in Table 1 (adapted from Donohoe and Needham 2009); however, like other research tools, the Delphi is not without limitations. In addition to ensuring an e-Delphi’s legal and political amenability (i.e., making sure the method fits the questions and works within relevant rules, regulations, and guidelines), NREM researchers should be aware of pragmatic issues crucial to implementation. The Delphi is highly sensitive to design and administrative decisions (e.g., panel design and survey architecture), which can affect the research outcome (Donohoe and Needham 2009). For example, the risk of specious consensus, with participants simply conforming to the median judgment for compliance’s sake, is real and must be addressed; it is a common limitation of group techniques generally and mirrors the drawbacks of the silo-oriented disciplinary expertise prevalent in NREM. There is also debate about how to determine “sufficient consensus,” along with concomitant questions about the appropriate point at which to terminate the consensus-building exercise. Empirically, consensus has been determined by measuring the variance in Delphi panelists’ responses over rounds, with a reduction in variance indicating greater consensus. Variance reduction is so typical a Delphi measure that increased “consensus” no longer appears to be an issue of experimental interest (Rowe and Wright 1996).
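The variance-reduction convention described above can be expressed in a few lines. This Python sketch assumes that each round's ratings for a single item are stored as a list of numbers and uses population variance; the function names are hypothetical. As the discussion of specious consensus cautions, a drop in variance alone cannot distinguish genuine agreement from conformity to the median.

```python
from statistics import pvariance


def variance_by_round(rounds):
    """Population variance of one item's ratings in each survey round.

    rounds: list of rating lists, ordered from the first round to the last.
    """
    return [pvariance(scores) for scores in rounds]


def variance_reduction(rounds):
    """Proportional drop in variance from the first round to the last.

    Positive values mean responses converged (read as greater consensus
    under the convention in the text); negative values mean they diverged.
    """
    variances = variance_by_round(rounds)
    first, last = variances[0], variances[-1]
    if first == 0:
        return 0.0  # no initial dispersion, so there is nothing to reduce
    return (first - last) / first


# Usage: three rounds of ratings for one hypothetical item.
print(variance_reduction([[1, 3, 5], [2, 3, 4], [3, 3, 3]]))
```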

Table 1.

Delphi advantages and benefits for natural resource and environmental management researchers

| Advantages | Benefits for natural resource and environmental management researchers |
|---|---|
| 1. It is well-suited for forecasting uncertain factors | It is well-suited for forecasting uncertain factors that may affect natural resource and environmental management |
| 2. It is anonymous | It provides opportunity for participants to express their opinions without being influenced by others and without fear of “losing face” among their peers |
| 3. It is dependent on expert judgment | It is particularly compatible with natural resource and environmental management experts as they are often in a position to be affected by or to operationalize the research product. Thus, they may be more willing to participate |
| 4. It is not limited by narrow expert definitions | It facilitates capacity building for expert interaction in an industry characterized by fragmentation and limited opportunities for interaction and knowledge exchange (i.e., increases interdisciplinary interaction and understanding) |
| 5. It is effective and efficient | It is a low-cost technique that is easily administered by natural resource and environmental management researchers and/or practitioners |
| 6. It is reliable and outcomes can be generalized | It is a legitimate technique for natural resource and environmental management research. The generalizability of research outcomes is well-suited for management forecasting |
| 7. It is non-linear by design | It offers an alternative research approach for addressing emergent and/or complex environmental management problems that do not benefit from the traditional linear approach |
| 8. It is insightful | It facilitates progress. Through iterative feedback, participants are part of the process, and the sum is much more than its parts. This is particularly complementary to natural resource and environmental management research where participants (managers, local stakeholders, etc.) are increasingly key/mandatory contributors to the research process and outcomes. Community-based natural resource research and collaborative stakeholder environmental management research are just a few examples |

Adapted from: Donohoe and Needham 2009, p. 420

Difficulty determining adequate consensus aside, the considerable time commitment required is identified as perhaps the most significant limitation for both the researcher and the participant (Wagner 1997). For the researcher, the time required to develop an expert panel, deliver communications, administer multiple survey rounds, and prepare interim reports is substantial and often underestimated. Traditional Delphi studies are paper-based, with communications, surveys, and reports distributed by regular postal mail (Green and others 1990). MacEachren and others (2006) reported that a significant managerial burden is placed on the traditional Delphi administrator, who must devote a substantial amount of time to gathering, organizing, compiling, and synthesizing participant responses. For Delphi participants, the iterative, paper-based survey exercise is demanding by nature and can deter people from agreeing to participate and from continuing once they have begun. The time required to complete several rounds of surveys is compounded by the traditionally long waits between rounds, which can produce waning interest and frustration (Donohoe and Needham 2009). For these reasons, high attrition has been widely reported in the literature (Sinha and others 2011).

Given these limitations and others, Donohoe and Needham (2009) recognized that using the Internet for Delphi research presents a new and exciting research frontier. They suggest that the e-Delphi could provide a promising alternative that may reduce time, costs, communication difficulties, consensus monitoring challenges, and participant attrition. They caution, however, that Internet-based technology is a relatively new research opportunity and that “challenges common to Internet-based communications are present” (Donohoe and Needham 2009, p. 423). Researchers should be aware that gaps in methodological discourse put the design, implementation, success, and evolution of e-Delphi research at risk. Therefore, through a critical analysis of an e-Delphi case study, this paper contributes to the development of best-practice methods in e-Delphi research and provides practical guidance for NREM researchers using the e-Delphi.

Delphi Within Natural Resource and Environmental Management

Ranging from air pollution (de Steiguer and others 1990) to environmental agency effectiveness (Yasamis 2006), the Delphi method has been used extensively in NREM research. Bunting (2010) describes the Delphi method as particularly useful for facilitating the interactive participation of the varied, potentially hierarchical, and often antagonistic stakeholder groups inherent to NREM processes. Issues in NREM can be distorted by dominant interests with specific agendas and relationships to the relevant resources; the Delphi’s overarching goal of combining disparate viewpoints into a consensus addresses this explicitly. Delphi processes provide information not accessible through the conventional questionnaire or interview methodologies common in NREM assessment (Egan and others 1995) and can contribute to a more refined joint analysis and consensus in complex social and institutional settings where minimal knowledge of, or agreement about, an issue exists. These attributes are especially valuable in coastal zone management and planning, as the case analysis that follows shows, where the multiple demands of stakeholders must be reconciled in developing co-management strategies and protecting land, wetland, and marine ecosystems. In addition, as noted in the previous section, the Delphi technique applies best in situations of uncertainty and a lack of general consensus regarding a topic; in our case, that topic is human impacts on and perceptions of the coastal zone environment, specifically the social values of the ecosystem services that exist there.

Natural resource management regimes in the United States, both generally and in coastal management and planning specifically, are well rooted in traditional ways of doing business [i.e., command-and-control management (Holling and Meffe 1996)]. The Delphi offers stakeholders, whether managers or members of the public, the chance to step outside status quo considerations and understandings of natural resource use and to explore alternatives that may not fit within existing institutions (i.e., agency mandates, laws, regulations, etc.). The scientific and public policy literature is replete with examples of the disparity between managers’ conceptions of natural resource management and those of the public they purport to serve; the Delphi gives these disparate parties a way to reach consensus on issues that cut across that divide. A baseline for considering NREM issues that is responsive to public interests is often absent, or is set solely by managers and in-house officials without external input. The Delphi addresses this by providing a process of consensus building around a conceptual foundation that can, ideally, act as a catalyst for further development. Again, the Delphi is best used in situations where no clear consensus exists on the variables of interest, acting not as a decision-making tool per se but as a precursor to issue development and management mitigation actions.

The anonymity offered by the Delphi method frees expert participants to challenge entrenched disciplinary assumptions that are often inherent to agencies, stakeholder groups, or even accepted scientific understandings (Donohoe and Needham 2009), and to work toward a starting-point consensus on a given issue that represents a wide range of interests. If NREM is viewed broadly as the responsible stewardship of public goods, specifically the natural ecosystem processes that sustain us, then it follows that preliminary agenda setting should be responsive and accountable to the public interest it serves. The Delphi can support this responsibility by representing disparate viewpoints when the agenda is established at the front end, in contrast with many NREM processes that rely on limited disciplinary assumptions followed by token public input.

Two issues specific to NREM research contexts must be considered when deciding whether the Delphi, conducted electronically or not, is a best-fit method. The first is how stakeholders should be identified and included (i.e., who makes up the expert panel), given the political and legal realities of NREM contexts. The second, under those same circumstances, is how to determine adequate consensus. On the first question, as noted above, the choice to conduct a Delphi hinges on the premise that the question being asked (in our case, the need to establish a typology of social values of ecosystem services in the coastal zone) has no generally accepted answer or lacks accord on the baseline variables needed for further inquiry. The Delphi, in that regard, should be used to establish a foundation, or starting points, from which strategic planning and management efforts can proceed, not as an action-decision tool. When used in this way, front-end conceptual agendas can be rooted in consensus among disparate, and theoretically representative, viewpoints rather than in disciplinary or institutional, and theoretically limited, perspectives.

Therefore, when the Delphi is used as a general baseline-generating process detached from any particular planning or management scenario, decisions about whom to include as expert panelists avoid many of the political and legal requirements that NREM decision making imposes in specific situations. The focus of panel selection is then on gathering a wide array of viewpoints, as determined by the researcher given the question being asked, rather than on the representativeness demanded by political and legal requirements. For example, in the case presented below, the goal was not to decide on a typology of social values as action items in a particular environmental planning or management scenario, but to create a collective listing of relevant social values for consideration within NREM initiatives in the coastal zone generally.

By contrast, if and when the Delphi is used in a specific strategic planning scenario, expert panel composition must adhere more closely to requirements of input representativeness, given that situation’s political and legal realities, which are likely to differ every time. We suggest that researchers targeting action-decision scenarios (i.e., strategic planning and management) look elsewhere for consensus building (e.g., focus groups) or, at a minimum, tread lightly when deciding whom to include as expert panelists if the Delphi method is chosen. In that type of case, the e-Delphi loses one of its major advantages, the capacity to bring together geographically dispersed individuals (as this would not necessarily be required), but it still offers the advantage of bringing together disparate viewpoints while avoiding the bias that can arise in face-to-face meetings, through the anonymity it provides over the course of iterative consensus building around a given topic.

The related question of determining when consensus has been reached again hinges on the method’s appropriateness for a particular inquiry. Much like panel selection, these determinations are likely context specific, and their political and legal complications are avoided if the Delphi is applied in appropriate settings. A determination of consensus, like the iterative process that occurs throughout the Delphi exercise, is generally arrived at as a collective decision between the expert panelists and the researcher (or research team). Most often, as in the example that follows, consensus is indicated when mean scores for study variables stabilize and standard deviations decrease over the iterative survey rounds. Once that occurs, the consensus determination is confirmed by offering the expert panel a summary of results accompanied by a request for comment on the findings. Any additional comments can then be taken into account and a decision made on whether to resume the consensus-building process with additional iterative rounds. At the conclusion of the case presented here, for example, round three saw a stabilization of grand mean scores regarding the relevance of the social value typology and a reduction in standard deviations, indicating consensus. When panelists were asked for any additional comments on the determination that consensus had been reached, none responded, indicating to the researchers that the consensus decision was appropriate.
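The two indicators and the confirmation step described above can be combined into a simple check. In this Python sketch, "stabilized" is operationalized as a change in the mean of no more than a tolerance between the last two rounds; that tolerance (0.1 here), the use of population standard deviation, and the function names are assumptions for illustration, since the study does not report a numeric threshold.

```python
from statistics import mean, pstdev


def consensus_indicated(rounds, mean_tolerance=0.1):
    """Check the two consensus indicators across the last two rounds.

    rounds: list of rating lists, one per survey round.
    mean_tolerance: hypothetical threshold for treating the mean as stable.
    """
    if len(rounds) < 2:
        return False  # stability cannot be judged from a single round
    prev, curr = rounds[-2], rounds[-1]
    mean_stable = abs(mean(curr) - mean(prev)) <= mean_tolerance
    spread_decreased = pstdev(curr) < pstdev(prev)
    return mean_stable and spread_decreased


def confirm_consensus(rounds, panel_comments, mean_tolerance=0.1):
    """Final step: after the panel sees a summary of results, consensus
    stands only if the indicators hold and no further comments are raised."""
    return consensus_indicated(rounds, mean_tolerance) and not panel_comments


# Usage: three rounds of ratings for one hypothetical item.
rounds = [[1, 5, 3, 3], [2, 4, 3, 5], [3, 4, 3, 4]]
print(confirm_consensus(rounds, panel_comments=[]))
```

If any comment comes back, the function returns False, mirroring the text's option to resume iterative rounds.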

An e-Delphi Example

An e-Delphi was initiated among international experts in coastal and marine management to develop a typology of social values of ecosystem services and a listing of important resource uses in coastal environments. This research addressed a critical gap in NREM research: the lack of consensus regarding the theory and measurable variables of social values derived from ecosystem services, particularly in the coastal zone. A widely accepted typology of social values that contributes to ecosystem services discourse and management guidelines is important for describing the foundational elements of the human-environment relationship. Such a typology also helps identify facilitators of and obstacles to establishing those connections more readily at a global scale. The development of a unique typology was judged to be the fundamental basis, or starting point, for a paradigm shift in an NREM practice that is defined by ecosystem-specific parameters but plagued by non-inclusive knowledge and management systems. While much work has been done to establish such a typology in terrestrial environments (see Rolston and Coufal 1991; Reed and Brown 2003; Brown and Reed 2000; Brown and others 2004), the effort outlined here sought to extend and modify this existing typology to fit coastal and marine ecosystems. To propose a unified vision of the social values of ecosystem services in coastal environments, the Delphi technique was selected over more traditional methods (i.e., surveys, group interviews, and focus groups) because it was judged a “best fit” on the basis of its attributes and benefits (Table 1) and its congruence with the study objectives above. As a method uniquely suited to topics with minimal agreement on defining variables, in this case the social values of ecosystem services in the coastal zone, the Delphi was deemed the most effective fit.

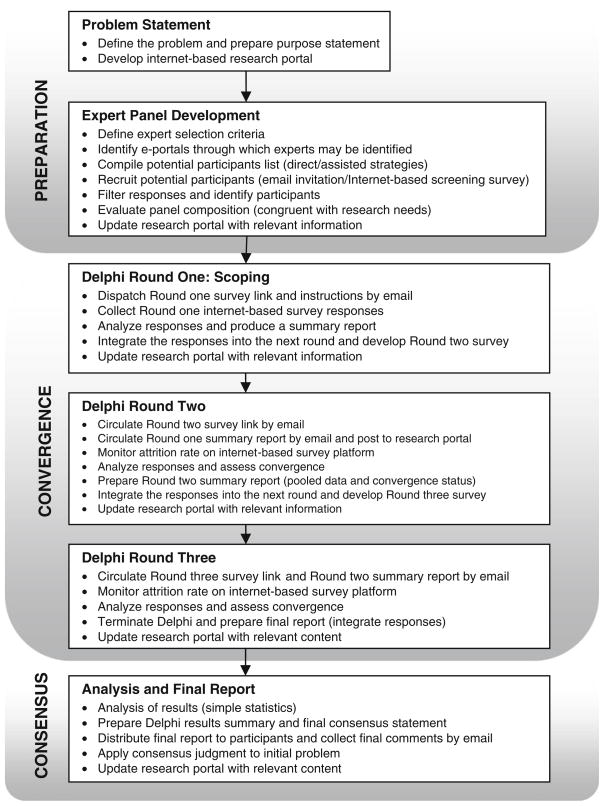

A three-stage Delphi exercise was adapted from Day and Bobeva’s (2005) Generic Delphi Toolkit (Fig. 1). In the preparation stage, a panel of international experts was assembled. The panel comprised two relevant expert groups: professionals from government, private industry, and non-governmental organizations; and academics engaged in related research and education. The inclusion of both professional and academic experts is supported by Briedenhann and Butts (2006) and Sunstein (2006) as a means of balancing differing perspectives on “knowledge” and of bridging the existing divide between research and professional communities with regard to knowledge sharing, communications, priorities, epistemology, etc. A geographically diverse expert panel was also essential for capturing a diversity of perspectives on the issue at hand, yielding a more inclusive and relevant consensus. Through a review of peer-reviewed publications and investigation of several membership-based international companies and organizations, an initial set of 1,025 potential participants was identified.

Fig. 1.

Three-stage e-Delphi approach

Given the global nature of the study and the conventional constraints of Delphi methodology, using the Internet for communications and data collection (i.e., an e-Delphi) was appealing because of the time and cost savings it offered (Hung and Law 2011). To pilot test the utility of the Web technique, the Internet served as the primary communication medium throughout the preparation stage. Before potential participants were contacted, an Internet-based research portal was created to share information with research participants, supporters, and other stakeholders. A research problem statement was prepared, and a research description, including human subjects protections (e.g., a description of the study purpose, informed consent, etc.), was posted to the research portal. A profile of the researchers, including contact and research institution information, was also posted. Once the primary e-portal was established, Delphi email invitations were sent to potential participants. The invitation contained a description of the research, including ethics compliance statements, a link to the e-portal for more information, and a link to an expert screening e-survey. The survey asked potential participants to identify which, if any, of the predetermined selection criteria they satisfied. Inclusion criteria required that study participants possess a minimum of five years of experience in the public, governmental, or private sectors, and/or exhibit evidence of professional productivity in a specific domain in terms of peer-reviewed or professional publications and research, participation in academic or industry symposia, and/or a related teaching portfolio.
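The inclusion rule described above can be written as a small screening function. This Python sketch assumes hypothetical field names for the screening-survey answers and reads the criteria's "and/or" as a logical OR, with any one of the listed productivity indicators counting as evidence; the study's own coding of responses may have differed.

```python
from dataclasses import dataclass

MIN_YEARS = 5  # minimum sector experience stated in the inclusion criteria


@dataclass
class ScreeningResponse:
    """One screening-survey response (hypothetical field names)."""
    years_experience: int         # public, governmental, or private sectors
    has_publications: bool        # peer-reviewed or professional publications/research
    attended_symposia: bool       # academic or industry symposia
    has_teaching_portfolio: bool  # related teaching portfolio


def meets_criteria(r: ScreeningResponse) -> bool:
    """Apply the inclusion criteria, reading "and/or" as a logical OR."""
    experienced = r.years_experience >= MIN_YEARS
    productive = r.has_publications or r.attended_symposia or r.has_teaching_portfolio
    return experienced or productive


def split_responses(responses):
    """Separate qualifying respondents from those offered a chance to clarify."""
    qualified = [r for r in responses if meets_criteria(r)]
    to_clarify = [r for r in responses if not meets_criteria(r)]
    return qualified, to_clarify
```

Keeping the non-qualifying group, rather than discarding it, matches the follow-up step in which excluded respondents were invited to clarify their answers.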

The survey was administered using Qualtrics™, an Internet-based survey provider that offers a user-friendly interface for survey design and administration as well as for survey completion. This service provider was selected over others because little to no training was required of users and, owing to the researchers’ institutional access, the subscription involved no cost or obligation. Once the screening survey was developed, a unique URL was created and included in the emailed Delphi invitation. Clicking the link took the potential participant to the initial survey. Qualtrics™ tracks unique user IP addresses to ensure that each respondent completes only one survey (for security and experimental control purposes). The screening survey proved simple to design and administer, with only one technological difficulty reported (the survey could not be completed if the link was received in a forwarded email), which was corrected within half an hour of sending the initial invitation email. Of the 1,024 unique addresses that received the initial email invitation, 154 potential participants completed the survey (response rate = 15.04%), and a review of the responses revealed a broad geographical distribution (14 countries were represented) as well as a range of expertise across the desired spectrum (academics and professionals).
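Qualtrics™ handles the one-response-per-respondent check itself, so no code is needed in practice; the following Python sketch only illustrates the idea of keeping the first submission from each IP address in an exported response list. The export format and function name are hypothetical and not part of the Qualtrics product.

```python
def deduplicate_by_ip(responses):
    """Keep only the first response received from each IP address.

    responses: iterable of (ip_address, answers) pairs in submission order
    (hypothetical export format).
    """
    seen = set()
    kept = []
    for ip, answers in responses:
        if ip not in seen:  # later submissions from the same IP are dropped
            seen.add(ip)
            kept.append((ip, answers))
    return kept


# Usage with documentation-range IP addresses.
responses = [("192.0.2.1", "first"), ("192.0.2.7", "other"), ("192.0.2.1", "repeat")]
print(deduplicate_by_ip(responses))
```

One trade-off of keying on IP address is that distinct respondents sharing a network address, such as colleagues behind one institutional gateway, would be collapsed into a single response.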

Those satisfying the selection criteria (127) were sent an email message requesting their participation for the duration of the e-Delphi exercise. The 27 individuals who did not satisfy the expert criteria were given an additional opportunity to clarify their answers to the scoping questionnaire. Two of these initially excluded respondents replied with clarification of their qualifications and were subsequently chosen as panel participants, bringing the expert panel total to 129 individuals. At this point, an interactive Internet-based map was created to share information about the "virtual laboratory." The research participants' locations (city and country) and professional backgrounds (academic, commercial/private, government agency, non-governmental agency, or a combination) were marked on a Google map (excluding all personal identifiers), and a link to the map was posted on the e-portal. Although e-portal visits were not tracked, several research participants reported via email that they returned to the website frequently during the preparation stage and found it to be an important source of information about the research project and its participants. In light of this relative administrative success, the Internet was judged to be a "best fit" for the study, as it allowed the research team to: (a) access a geographically diverse expert panel; (b) communicate and share information conveniently and securely; and (c) collect data efficiently, in a timely manner, and at low cost.
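The recruitment figures reported above follow from simple arithmetic. The sketch below reproduces them, using all 1,024 invited addresses as the denominator, as the article does; excluding the roughly 25 invitations that later bounced would raise the rate slightly. The `response_rate` helper is a hypothetical name introduced for illustration.

```python
def response_rate(completed: int, delivered: int) -> float:
    """Percentage of delivered invitations (or surveys) that were completed."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    return 100.0 * completed / delivered


invited = 1024    # unique addresses in the initial email invitation
completed = 154   # screening surveys completed
qualified = 127   # respondents meeting the selection criteria
reinstated = 2    # excluded at first, admitted after clarifying qualifications

not_qualified = completed - qualified  # offered a chance to clarify answers
panel_size = qualified + reinstated    # expert panel invited to Round one

print(f"{response_rate(completed, invited):.2f} %")  # 15.04 %
print(not_qualified, panel_size)                      # 27 129
```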

In the convergence stage, the 129 experts were invited to complete the Round one survey. Again, an email invitation with instructions and a link to the e-survey was sent. The survey was purposefully designed as a scoping exercise to encourage participants to elaborate on the research problem and the task at hand (i.e., developing a typology of social values of ecosystem services and identifying priority management issues in the coastal zone). An initial working definition of social values of ecosystem services, based on a comprehensive review of the literature, was provided to the panelists; it was purposefully broad and loosely structured so as to allow the group to inform the shape and content of the typology over the course of the e-Delphi exercise. Participants were given two weeks to respond, and a response rate of 45 % was achieved (n = 58). As expected, Round one yielded hundreds of valuable comments, suggestions, and critiques. When conducting a Delphi, it is important to start the iterative consensus-building process with an open opportunity for expert panelists to share opinions and set the parameters for the study moving forward. Because Delphi methods are most often (and most constructively) applied to research situations in which the variables of interest lack broad-based agreement, an open-ended first round that establishes those variables, rather than asking panelists to respond to predetermined ones, is vital if the process is to include all expert viewpoints from the outset.

In Round two, a Round one summary report containing pooled data and representative commentary was circulated to all respondents by email and posted on the research portal for download. The expert contributions and Round one results were used as an inclusive baseline, and a second survey was circulated to solicit feedback on the appropriateness of the initial typology and listing of resource uses, along with observations and interpretations about the working definition of "social values of ecosystem services." Again, participants were given two weeks to respond, and several reminder emails were dispatched as the deadline approached. The same procedure was followed for Round three. To assess the level of convergence between rounds, simple mean scores and associated standard deviations were calculated across all three rounds. Table 2 describes the e-Delphi panel composition, response rates, and convergence measures over the three e-survey rounds. Between Rounds two and three, enhancements to the typology were minimal and no significant change in the mean scores was observed, but the increase in convergence (a reduction in the standard deviation) was noteworthy. To represent this convergence, the mean reported in Table 2 is a grand mean of the expert panelists' ratings of relevance (on a 5-point Likert-type scale) for each recorded social value of ecosystem services, beginning with the values that coalesced from the open-ended Round one responses (19 original values) and continuing through their refinement in Rounds two and three (16 final values). Scheibe and others (1975) state that measuring the stability of participant responses across successive iterations is a reliable method of assessing consensus; in Table 2, this stability is represented by consistent mean scores and a decreasing standard deviation between Rounds two and three.
In addition, the dispersion measures in the present study satisfied the guidelines for establishing consensus in the Generic Delphi Toolkit and the Delphi literature (Day and Bobeva 2005; Donohoe and Needham 2009). Thus, it was decided that optimal consensus had been reached and that further rounds would not produce additional convergence of opinion.
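The stability criterion attributed to Scheibe and others (1975) can be sketched as a comparison of pooled summary statistics between consecutive rounds. This is a minimal illustration, assuming that the grand mean and standard deviation are computed over all pooled 1 to 5 ratings in a round. The ratings, the helper names, and the 0.2 mean tolerance are hypothetical, and the specific dispersion thresholds from Day and Bobeva (2005) are not reproduced here.

```python
from statistics import mean, stdev


def round_summary(ratings: dict[str, list[int]]) -> tuple[float, float]:
    """Pool every 1-5 relevance rating given to every typology value in one
    round and return (grand mean, sample standard deviation)."""
    pooled = [score for scores in ratings.values() for score in scores]
    return float(mean(pooled)), stdev(pooled)


def is_stable(prev: tuple[float, float], curr: tuple[float, float],
              mean_tolerance: float = 0.2) -> bool:
    """Hypothetical stopping rule: the grand mean moves by no more than
    mean_tolerance and the standard deviation does not increase."""
    (mean_prev, sd_prev), (mean_curr, sd_curr) = prev, curr
    return abs(mean_curr - mean_prev) <= mean_tolerance and sd_curr <= sd_prev


# Hypothetical ratings from four panelists for two typology values (not study data).
round2 = {"Access": [5, 4, 3, 5], "Recreation": [4, 5, 3, 4]}
round3 = {"Access": [5, 4, 4, 5], "Recreation": [4, 5, 3, 4]}

r2, r3 = round_summary(round2), round_summary(round3)
print(r2, r3, is_stable(r2, r3))
```

Applied to the summary values in Table 2, `is_stable((4.3, 0.85), (4.4, 0.71))` returns `True` under this hypothetical tolerance, consistent with the decision to stop after Round three.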

Table 2.

e-Delphi panel composition, response rates, and convergence measures

| | Round 1 | Round 2 | Round 3 |
|---|---|---|---|
| Surveys delivered | 129 | 58 | 38 |
| Surveys completed | 58 | 38 | 37 |
| Government panelists | 36 | 25 | 19 |
| Academic panelists | 39 | 14 | 9 |
| Non-governmental panelists | 25 | 10 | 5 |
| Commercial/Private panelists | 29 | 9 | 4 |
| Response rate | 45 % | 66 % | 97 % |
| Convergence measures | | | |
| Mean score^a | n/a | 4.3 | 4.4 |
| Standard deviation | n/a | 0.85 | 0.71 |

^a Value typology relevance scores measured on a five-point Likert-type scale where: 5 = very appropriate, 4 = appropriate, 3 = somewhat appropriate, 2 = not very appropriate, 1 = not appropriate
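Each response rate in Table 2 is that round's completions divided by the surveys delivered in that round. The short sketch below recomputes the rates from the table's counts and adds one derived figure that the article does not report: overall retention, taken here as Round three completions as a share of the 129 experts originally invited. The variable and function names are illustrative.

```python
# (surveys delivered, surveys completed) per round, copied from Table 2.
ROUNDS = {1: (129, 58), 2: (58, 38), 3: (38, 37)}


def response_rate(completed: int, delivered: int) -> float:
    """Percentage of delivered surveys that were completed."""
    return 100.0 * completed / delivered


rates = {rnd: response_rate(done, sent) for rnd, (sent, done) in ROUNDS.items()}
for rnd, rate in rates.items():
    print(f"Round {rnd}: {rate:.0f} %")  # 45 %, 66 %, 97 %

# Derived figure (not reported in Table 2): Round three completions as a
# share of the 129 experts originally invited to Round one.
retention = response_rate(ROUNDS[3][1], ROUNDS[1][0])
print(f"Overall retention: {retention:.1f} %")
```

The contrast between the rising per-round rates and the much lower overall retention is one way to make attrition visible when reporting an e-Delphi.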

It is important to note that the design of the data collection instrument evolved between rounds, informed by difficulties that participants reported when completing the e-surveys and by challenges the administrator encountered when compiling and analyzing the data. For example, in Round one, several participants reported that they needed to review answers given on previous pages to inform later answers but could not go back to do so. The Delphi administrator worked with the participants (by email) to identify the source of the technical problems, and minor survey formatting alterations (i.e., adding a back button) eliminated them. Changes were also informed by the recommendations of Yang and Li (2005) and Day and Bobeva (2005), who suggest that the key to formulating Delphi surveys, and to mitigating attrition, is to ensure that the survey is technically unchallenging, that questions are clear, concise, and unambiguous, and that they are complemented by clear instructions for the panelists. The e-Delphi approach used in the study of social values of ecosystem services attempted to operationalize these recommendations by employing a combination of open- and closed-ended questions. In addition, the majority of consensus-building activities and measures were based on a 5-point Likert-type scale on which panelists judged the relevance of the typology variables from "5" (very relevant) to "1" (very irrelevant). The e-survey platform provided a simple interface for organizing questions, designing question sequences, and pilot testing the surveys. It also allowed the administrator to monitor response rates and progress toward consensus, and Qualtrics™ produced simple statistics in "real time" as participants submitted their judgments.
The participants reported that the e-survey interface allowed them to jump easily between questions, modify their answers, quit and return to the survey at a later time (a function added between the expert qualifications survey and Round one), access technical support (through help functions), and respond to the survey when and where it was most convenient.

In the consensus stage, a draft final report was prepared and circulated by email to the expert panel. Before declaring “consensus,” final comments and suggestions on the report and its definitional content were requested. Panelists submitted their recommendations and the administrator integrated them into a final consensus statement. This process ensured that the typology and resource use listing had undergone sufficient testing and that a consensus judgement had been rendered regarding its value and appropriateness. A final Delphi report was then prepared and disseminated by email to the panelists, supporting organizations, and other interested stakeholders. It was also posted to the research portal. The total time to complete the e-Delphi exercise was just over 2 months.

Discussion

The Internet presents a new and exciting NREM research frontier, but caution is warranted because its use presents challenges. In the case of the e-Delphi, methodological guidance has yet to emerge in the literature (Donohoe and Needham 2009). This is clearly an important and evolving area of contribution for Delphi research specifically, and for e-research generally. While many of the issues can be considered characteristic of computers and the Internet, problems associated with traditional methods can be intensified by the conditions of the virtual landscape (Hung and Law 2011). These include perceived anonymity, respondent identity (real or perceived), and data accuracy (response selection control and transmission errors) (Baym and Markham 2009; Jones 1999; Roztocki 2001). In light of the problems associated with e-research, and of the issues revealed during the research process described above, four issues are brought forward for discussion: convenience, administration, control, and technology.

Convenience

The e-Delphi exercise revealed that the convenience of "anytime, anywhere" research was a pragmatic feature of the data collection process: the e-Delphi administrator and participants could access the research portal and surveys wherever and whenever Internet access was available. Participants could choose to respond when it was most convenient to do so, and the administrator could monitor response rates and respond to technical difficulties without being physically tied to the research center. The familiar interface of the e-survey provider, accessed through participants' own computers, added further convenience. Because e-surveys are now routinely distributed to millions of people around the world, the standard format and user interface reduced the amount of training and instruction participants needed to complete the surveys. By extension, Internet technology made collecting and sharing group-level information accessible and convenient across national boundaries and time zones, a global phenomenon that has also been noted elsewhere (Hung and Law 2011).

Administration

In this case study, the researchers had full access to the Qualtrics™ data management service for the duration of the active research phase through institutional licensing. This level of access allowed participants to access the statistical summary reports, thereby improving knowledge exchange and research transparency. It is important to note that other online survey management services are available at no cost or for minimal fees, making these technologies easily accessible and rarely cost prohibitive. As such, an e-Delphi reduces the research costs of a traditional Delphi reliant on postal communications. In this case, the Internet expedited communications and recruitment so that the total time between expert recruitment and consensus was just over two months; the time commitment would have been much greater with traditional communication methods. The data management advantages of the e-Delphi technique were also confirmed. The e-survey provider made available a large database for gathering and storing data electronically, and the format made it easy to move the data into Microsoft Excel (or other formats such as SPSS) for analysis. The survey software also monitored response rates and attrition and, where numerical data were captured, provided simple statistical reports. To facilitate transparency, participants and other interested parties could access the statistical reports throughout the e-Delphi via the research web portal or by email request to the researchers (for instance, individuals not selected as expert panelists and participants who had dropped out could opt to receive summary reports between rounds).

Control

Experimental control did not present itself as a concern during the e-Delphi exercise, with one exception: reducing participant distractions. While distractions can never be completely avoided, the researcher made efforts to identify and avoid known distractions (as recommended by Briedenhann and Butts 2006). The surveys were dispatched so as to capture the maximum availability of the panelists and to achieve the best possible response rate. Care was taken with the timing of the surveys so that they did not coincide with traditional periods of occupational leave (in this case, the start of the Delphi exercise was delayed until the beginning of the calendar year to avoid the typical holiday season of November and December).
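The timing decision described above can be checked mechanically before a round is dispatched. The following is a hypothetical helper, assuming a two-week response window like the one used in each round and treating November and December as the months to avoid; the function names and example dates are illustrative and do not reflect the study's actual schedule.

```python
from datetime import date, timedelta

# Months to keep clear of survey rounds (the November/December holiday season).
AVOID_MONTHS = frozenset({11, 12})


def window_hits_avoided_month(start: date, days: int = 14) -> bool:
    """True if any day of a response window of `days` days falls in an avoided month."""
    return any((start + timedelta(days=i)).month in AVOID_MONTHS for i in range(days))


def next_clear_start(start: date, days: int = 14) -> date:
    """Move the launch date forward one day at a time until the whole
    response window avoids the listed months (gives up after a year)."""
    for _ in range(366):
        if not window_hits_avoided_month(start, days):
            return start
        start += timedelta(days=1)
    raise ValueError("no clear response window found within a year")


print(next_clear_start(date(2011, 11, 20)))  # 2012-01-01
```

Starting from a hypothetical 20 November launch, the helper pushes the start to 1 January, mirroring the delay described above. Other distractions named in Table 4 (e.g., disciplinary conferences) could be handled the same way with explicit date ranges instead of whole months.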

Although attrition did occur, the response rate improved and attrition decreased as the rounds progressed. This may be evidence of the panelists' commitment to the study, but it may also suggest that avoiding distractions through calculated timing is an effective strategy. Representation was, however, identified as a potential issue, given that "there is a high degree of uncertainty… in terms of 'knowing' the identity of the other" (Ward 1999, p. 5). To mitigate threats to drawing an ecologically valid and representative sample, the areas of expertise claimed by participants (self-identified experts) were corroborated in the early stages of expert identification (e.g., through peer-reviewed portals, secondary verification, and the screening survey). To control misrepresentation in survey responses, panelists were limited to one response per unique IP address. This provided some measure of control over identity, but the researcher acknowledges that representation remains an unresolved issue in Internet-based inquiry, a limitation also confirmed by Hung and Law's (2011) analysis of e-survey research in tourism and hospitality.
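Limiting panelists to one response per IP address amounts to keeping the first submission seen from each address. The sketch below is illustrative only: it does not use any Qualtrics™ feature or API, and the `Submission` record, the `first_response_per_ip` helper, and the example addresses (drawn from the 192.0.2.0/24 documentation range) are hypothetical. As the text notes, this offers only partial control; colleagues behind a shared institutional address would be collapsed into one response, while a single person using several addresses would not be caught.

```python
from typing import NamedTuple


class Submission(NamedTuple):
    """Hypothetical survey submission as exported from a survey platform."""
    ip: str             # respondent IP address
    submitted_at: int   # submission time, e.g. seconds since the epoch
    panelist_code: str  # anonymized code, not a personal identifier


def first_response_per_ip(subs: list[Submission]) -> list[Submission]:
    """Keep only the earliest submission from each IP address."""
    seen: set[str] = set()
    kept: list[Submission] = []
    for sub in sorted(subs, key=lambda s: s.submitted_at):
        if sub.ip not in seen:
            seen.add(sub.ip)
            kept.append(sub)
    return kept


subs = [
    Submission("192.0.2.10", 1_000, "P01"),
    Submission("192.0.2.11", 1_050, "P02"),
    Submission("192.0.2.10", 1_200, "P01"),  # repeat from the same address
]
kept = first_response_per_ip(subs)
print([s.panelist_code for s in kept])  # ['P01', 'P02']
```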

Technology

Technological complications presented a challenge to participant recruitment and data collection. Several participants reported technological difficulties such as an inability to open survey links, download the survey, or submit their responses. In most cases, the difficulty was the result of user error and was resolved with administrator assistance. In other cases, the participant's technical setup (e.g., operating system, Internet connection, or security filters) or outright technical failures were the source of difficulty. For example, the initial invitation to complete the qualifications survey used to determine expert panelists encouraged individuals to forward the survey link to interested colleagues. Unbeknownst to the researcher, however, those links were limited to the IP address they were sent to, causing them to fail when forwarded. In a positive reflection on the use of Internet technologies, the researchers were informed of the issue almost immediately via email and were able to remedy the problem within an hour of the initial message. Finally, some email addresses were inactive, resulting in approximately 25 returned emails in the preparation stage. These challenges are not unique to the e-Delphi, but given the nature of the global e-laboratory, experts are often unfamiliar with the chosen questionnaire medium, and technological difficulties arise.

On the basis of the practical limitations and issues revealed by the e-Delphi case study, a set of issues and recommendations is outlined in Table 4 to assist the e-Delphi NREM researcher.

Table 3.

Final consensus typology: social values for coastal contexts (alphabetical order)

| Value | Definition |
|---|---|
| Access | Places of common property free from access restrictions or exclusive ownership/control |
| Aesthetic | Enjoyable scenery, sights, sounds, smells, etc. |
| Biodiversity | Provision of a variety and abundance of fish, wildlife, and plant life |
| Cultural | Place for passing down wisdom, knowledge, and traditions |
| Economic | Provision of fishery (commercial/recreational), minerals, and tourism industry that support livelihoods |
| Future | Allowance for future generations to know and experience healthy, productive, and sustainable coastal ecosystems |
| Historic | Place of natural and human history that matter to individuals, communities, societies, and nations |
| Identity/Symbolic | Places that engender a sense of place, community, and belonging; represent a distinctive "culture of the sea" |
| Intrinsic | Right to exist regardless of the presence; value based on existence (being rather than place) |
| Learning | Place of educational value through scientific exploration, observation, discovery, and experimentation |
| Life Sustaining | Provision of macro-environmental processes (i.e., climate regulation, hydrologic cycle, etc.) that support life, human and non-human |
| Recreation | Place for favorite/enjoyable outdoor recreation activities |
| Spiritual/Novel Experience | Places of sacred, religious, unique, deep, and/or profound experience where reverence/respect for nature is felt |
| Subsistence | Provision of basic human needs, emphasis on reliable, regular food/protein source from seafood |
| Therapeutic | Place that enhances feelings of well-being (e.g., "an escape," "stress relief," "comfort and calm") |
| Wilderness | Place of minimal human impact and/or intrusion into natural environment |

Table 4.

Practical advice for e-Delphi application

| Issue | Recommendation |
|---|---|
| 1. Methodological fit | Critically assess the advantages and limitations of the Delphi as a method and of the Internet as a research medium. The Delphi serves to establish baseline variables to consider for pragmatic application, not as an action-decision tool. The distinct rewards of e-research, such as coalescing geographically dispersed responses, must be weighed against its inherent limits, such as access issues |
| 2. Centralized communication | A website should be established for sharing information about the research, to legitimize the project and serve as a portal for communication between the researchers, participants, and other stakeholders. For transparency, it can share the research design, material about the e-survey process, institutional affiliations, contact information, and findings reports |
| 3. Participant recruitment | Make use of Internet-based databases and listservs for recruiting potential participants. Select participants with high interest, as determined through an initial scoping survey, to reduce potential attrition over iterative survey rounds |
| 4. Misrepresentation | Screen scoping survey respondents by validating information through membership organizations, academic or professional institutions, and/or publications to identify the most appropriate experts for the study. Also, restrict e-survey access using unique IP addresses and/or password protection to limit responses to the chosen expert panelists |
| 5. e-Survey provision | Select software or a service provider that offers flexible survey design, reliable technical support, robust data analysis and sharing options, attrition monitoring and response tracking, high levels of security, and ease of use^a |
| 6. Pilot testing | Check all communications (e.g., email, hyperlinks) and e-surveys in the ways that participants will use them to avoid interpretation and technological difficulties. For instance, use a communication test group of immediate colleagues |
| 7. Record keeping | Maintain copies of all documents, data, and reports in case of hardware/software failure such as survey provider problems and data loss. Ensure participant anonymity through coding, and the security of survey contributions through password protection |
| 8. Timing | Identify known or possible distractions and threats to survey timing, and schedule survey administration accordingly. The literature identifies distractions such as vacations, holiday periods, and disciplinary conferences as events that lead to low Delphi response rates (e.g., Briedenhann and Butts 2006; Donohoe 2011) |

^a In this regard, consult Wright's (2005) comprehensive review of the twenty most popular software packages and e-survey services commonly used for academic research

Conclusion

The adoption of the Internet as a research tool is becoming increasingly common in a variety of disciplines, including NREM. Given its increasing popularity, a growing proportion of future studies is expected to be conducted online (Schonlau and others 2001). While e-research is unlikely to replace traditional research methods, it is certainly a viable alternative for the right research topic and target audience (Palmquist and Stueve 1996). However, e-research is still in its infancy, and NREM researchers must be critical and calculated when adopting Internet-based tools and techniques (Hung and Law 2011). Importantly, in light of the practical advantages that the e-Delphi offers, and of the evolving set of recommendations and best practices emerging for the technique, analysis of this case study suggests that the benefits far outweigh (and will likely continue to outweigh) the costs. The e-Delphi is effective and efficient in overcoming geographical barriers, saving time and money, and building group consensus. In this regard, the authors support the claim of Deshpande and others (2005, p. 55) that the e-Delphi is a "feasible, convenient and acceptable alternative to the traditional paper-based method." However, the authors would add that the e-Delphi offers more than just an alternative to the traditional method. The interactive capacity of the Internet offers a range of benefits that are inextricably linked with contemporary Internet-based innovation and its growing popularity in the research domain.

Despite its value, it must be emphasized that without careful consideration of the advantages and limitations associated with e-Delphi administration specifically, and Internet-based research generally, NREM researchers are likely to face challenges that could potentially compromise research findings and their publication in peer-reviewed journals. Since methodological quality is a major assessment criterion for publication, an understanding of emerging e-research methods can potentially enhance scholars’ understanding of how the Internet can be utilized for NREM research. The critical analysis presented here may help researchers to make informed decisions when planning and designing their studies while keeping the advantages and disadvantages of e-research in mind. Given the increasing awareness and emerging critical discourse regarding e-research, this tutorial is a timely methodological contribution to the NREM literature. Clearly, there are opportunities here worthy of further exploration, which necessitates appropriate investments in methodological applications, reporting, and evaluation of the e-Delphi method within and beyond the boundaries of NREM research.

Acknowledgments

The authors wish to thank Dr. Robert Swett and Dr. Stephen Holland from the University of Florida; and Dr. Alisa Coffin from the Geosciences and Environmental Change Science Center—United States Geological Survey for their help and support with the research project. The project described herein was supported by Grant/Cooperative Agreement Number G11AC20428 from the United States Geological Survey. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the USGS. This work was supported, in part, by the NIH (NCATS) CTSA awards to the University of Florida UL1TR000064 and KL2TR000065.

Contributor Information

Zachary Douglas Cole, Email: zaccole@hhp.ufl.edu, Tourism, Recreation, and Sport Management, University of Florida, PO Box 118208, Gainesville, FL 32611, USA.

Holly M. Donohoe, Tourism, Recreation, and Sport Management, University of Florida, PO Box 118208, Gainesville, FL 32611, USA

Michael L. Stellefson, Health Education and Behavior, University of Florida, Gainesville, FL, USA

References

- Baym NK, Markham AN. Making smart choices on shifting ground. In: Markham A, Baym N, editors. Internet inquiry: conversations about method. Sage; Thousand Oaks: 2009.

- Benfield JA, Szlemko WJ. Internet-based data collection: promises and realities. J Res Pract. 2006;2(2):D1.

- Briedenhann J, Butts S. The application of the Delphi technique to rural tourism project evaluation. Curr Issues Tour. 2006;9(2):171–190.

- Brown G, Reed P. Validation of a forest values typology for use in national forest planning. For Sci. 2000;46(2):240–247.

- Brown G, Smith C, Aleesa L, Kliskey A. A comparison of perceptions of biological value with scientific assessment of biological importance. Appl Geogr. 2004;24(2):161–180.

- Bunting SW. Assessing the stakeholder Delphi for facilitating interactive participation and consensus building for sustainable aquaculture development. Soc Natur Resour. 2010;23(8):758–775.

- Cantrill JA, Sibbald B, Buetow S. The Delphi and nominal group techniques in health services research. Int J Pharm Pract. 1996;4(2):67–74.

- China Internet Network Information Centre. Internet Statistics. 2007 Jun 30. http://www.cnnic.cn/en/index/0O/index.htm [Accessed online March 18 2008].

- Dalcanale F, Fontane D, Csapo J. A general framework for a collaborative water quality knowledge and information network. Environ Manag. 2011;47:443–455. doi: 10.1007/s00267-011-9622-7.

- Day J, Bobeva M. A generic toolkit for the successful management of Delphi studies. Electron J Bus Res Methodol. 2005;3(2):103–116.

- de Meyrick J. The Delphi method and health research. Health Educ. 2003;103(1):7–16.

- de Steiguer JE, Pye JM, Love CS. Air pollution damage to US forests. J Forest. 1990;88(8):17–22.

- Deshpande AM, Shiffman RN, Nadkarni PM. Meta-driven Delphi rating the internet. Comput Methods Progr Biomed. 2005;77:49–56. doi: 10.1016/j.cmpb.2004.05.006.

- Donohoe HM. A Delphi toolkit for ecotourism research. J Ecotour. 2011;10(1):1–20.

- Donohoe HM, Needham RD. Moving best practice forward: Delphi characteristics, advantages, potential problems, and solutions. Int J Tour Res. 2009;11(5):415–437.

- Duboff RS. The wisdom of (expert) crowds. Harvard Business Review; 2007 Sep 28.

- Gokhale AA. Environmental initiative prioritization with a Delphi approach: a case study. Environ Manag. 2001;28(2):187–193. doi: 10.1007/s002670010217.

- Green H, Hunter C, Moore B. Application of the Delphi technique in tourism. Ann Tour Res. 1990;17(2):270–279.

- Hayes T. Delphi study of the future of marketing of higher education. J Bus Res. 2007;60:927–931.

- Holling CS, Meffe GK. Command and control and the pathology of natural resource management. Conserv Biol. 1996;10(2):328–337.

- Hung K, Law R. An overview of internet-based surveys in hospitality and tourism journals. Tour Manag. 2011;32:717–724.

- ITU News. The world in 2010: ICT facts and figures. ITU News Magazine. 2011:10. http://www.itu.int/net/itunews/issues/2010/10/04.aspx [Accessed online Jan 31 2011].

- Jones S. Studying the net. In: Jones S, editor. Doing internet research: critical issues and methods for examining the net. Sage; Thousand Oaks: 1999.

- Judge RM, Podgor JE. Use of the Delphi in a citizen participation project. Environ Manag. 1983;7(5):399–400.

- Keeney S, McKenna H, Hasson F. The Delphi technique in nursing and health research. Wiley; West Sussex: 2011.

- Lemieux CJ, Scott DJ. Changing climate, challenging choices: identifying and evaluating climate change adaptation options for protected areas management in Ontario, Canada. Environ Manag. 2011;48(4):675–690. doi: 10.1007/s00267-011-9700-x.

- Linstone HA, Turoff M. The Delphi method: techniques and applications. Addison-Wesley; London: 1975.

- MacEachren AM, Pike W, Yu C, Brewer I, Gahegan M, Weaver SD, Yarnal B. Building a geocollaboratory: supporting Human-Environment Regional Observatory (HERO) collaborative science activities. Comput Environ Urban Syst. 2006;30:201–225.

- Mahler J, Regan PM. Virtual intergovernmental linkage through the Environmental Information Exchange Network. Environ Manag. 2011;49:14–25. doi: 10.1007/s00267-011-9760-y.

- Miller A, Cuff W. The Delphi approach to the mediation of environmental disputes. Environ Manag. 1986;10(3):321–330.

- Moore SA, Wallington TJ, Hobbs RJ, Ehrlich PR, Holling CS, et al. Diversity in current ecological thinking: implications for environmental management. Environ Manag. 2009;43(1):17–27. doi: 10.1007/s00267-008-9187-2.

- Okoli C, Pawlowski SD. The Delphi method as a research tool: an example, design considerations and applications. Inf Manag Syst. 2004;42:15–29.

- Palmquist J, Stueve A. Stay plugged in to new opportunities. Mark Res. 1996;8(1):13–15.

- Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–382. doi: 10.1046/j.1365-2648.2003.02537.x.

- Reed P, Brown G. Values suitability analysis: a methodology for identifying and integrating public perception of forest ecosystem values in national forest planning. J Environ Plan Manag. 2003;46(5):643–658.

- Rolston H, Coufal J. A forest ethic and multivalue forest management. J For. 1991;89:35–40.

- Rowe G, Wright G. The impact of task characteristics on the performance of structured group forecasting techniques. Int J Forecast. 1996;12:73–89.

- Roztocki N. Using internet-based surveys for academic research: opportunities and problems. American Society of Engineering; 2001. http://www2.newpaltz.edu/~roztockn/alabam01.pdf [Accessed online Feb 4 2011].

- Sackman H. Delphi critique. Lexington Books; Lexington: 1975.

- Scheibe M, Skutsch M, Schofer J. Experiments in Delphi methodology. In: Linstone HA, Turoff M, editors. The Delphi method: techniques and applications. Addison-Wesley; Reading: 1975.

- Sinha IP, Smyth RL, Williamson PR. Using the Delphi technique to determine which outcomes to measure in clinical trials: recommendations for the future based on a systematic review of existing studies. PLoS Med. 2011;8(1):e1000393. doi: 10.1371/journal.pmed.1000393.

- Sunstein CR. Infotopia: how many minds produce knowledge. Oxford University Press; Oxford: 2006.

- United Nations. Number of internet users to surpass 2 billion by end of year, UN agency reports. UN News Service. 2010. http://www.un.org/apps/news/story.asp?NewsID=36492&Cr=internet&Cr1 [Accessed online Jan 31 2011].

- Wagner J. Estimating the economic impacts of tourism. Ann Tour Res. 1997;24(3):592–608.

- Ward KJ. The cyber-ethnographic (re)construction of two feminist online communities. Sociol Res Online. 1999;4(1):193–212.

- Wright KB. Researching internet-based populations: advantages and disadvantages of online survey research, online questionnaire authoring software packages, and web survey services. J Comput Med Commun. 2005;10(3). http://jcmc.indiana.edu/vol10/issue3/wright.html [Accessed online March 11 2011].

- Yang GH, Li P. Touristic ecological footprint: a new yardstick to assess sustainability of tourism. Acta Ecol Sin. 2005;6:1475–1480.

- Yasamis FD. Assessing the institutional effectiveness of state environmental agencies in Turkey. Environ Manag. 2006;38(5):823–836. doi: 10.1007/s00267-004-2330-9.