Abstract

Objective

When either real or simulated electric stimulation from a cochlear implant (CI) is combined with low-frequency acoustic stimulation (electric-acoustic stimulation [EAS]), speech intelligibility in noise can improve dramatically. We recently showed that a similar benefit to intelligibility can be observed in simulation when the low-frequency acoustic stimulation (low-pass target speech) is replaced with a tone that is modulated both in frequency with the fundamental frequency (F0) of the target talker and in amplitude with the amplitude envelope of the low-pass target speech (Brown & Bacon 2009). The goal of the current experiment was to examine the benefit of the modulated tone to intelligibility in CI patients.

Design

Eight CI users who had some residual acoustic hearing either in the implanted ear, the unimplanted ear, or both ears participated in this study. Target speech was combined with either multitalker babble or a single competing talker and presented to the implant. Stimulation to the acoustic region consisted of no signal, target speech, or a tone that was modulated in frequency to track the changes in the target talker’s F0 and in amplitude to track the amplitude envelope of target speech low-pass filtered at 500 Hz.

Results

All patients showed improvements in intelligibility over electric-only stimulation when either the tone or target speech was presented acoustically. The average improvement in intelligibility was 46 percentage points due to the tone and 55 percentage points due to target speech.

Conclusions

The results demonstrate that a tone carrying F0 and amplitude envelope cues of target speech can provide significant benefit to CI users and may lead to new technologies that could offer EAS benefit to many patients who would not benefit from current EAS approaches.

INTRODUCTION

Technological advances during the past 30 years have dramatically improved the efficacy of cochlear implants (CIs), from simply providing an awareness of sound or an aid to speechreading to enabling many patients to converse over the telephone. However, the world is a noisy place, and CI patients often experience sharp declines in speech recognition in the presence of background noise.

Some CI patients who have residual acoustic hearing in the low-frequency region of the unimplanted ear are able to combine the electric and acoustic stimulation (EAS) from the two ears and show significant improvements in speech understanding in noise over electric stimulation alone (Kong et al. 2005; Dorman et al. 2007). Other patients have residual low-frequency hearing in the implanted ear. Some of these individuals have been implanted with shorter electrode arrays (10 to 20 mm in length) in an attempt to keep the apical region of the cochlea relatively intact, thus preserving the residual low-frequency hearing (Gantz & Turner 2004; Turner et al. 2004).* For these patients, EAS can be achieved in a single ear. Whether across ears or within the same ear, speech recognition via EAS is often greater than that predicted by simply summing the recognition scores from each type of stimulation.

Although it has been shown in simulation that low-frequency information restricted to below ~300 Hz can provide an EAS benefit (Chang et al. 2006; Qin & Oxenham 2006), it remains unclear how much residual hearing is necessary to observe a benefit. It has recently been suggested (Kong et al. 2005; Chang et al. 2006; Qin & Oxenham 2006) that the target talker's fundamental frequency (F0) might be a cue for EAS, which would imply that residual hearing must extend up to 300 Hz or so (Peterson & Barney 1952). We (Brown & Bacon 2009) have recently demonstrated that F0 is indeed an important cue, at least in simulation. We used a four-channel sinusoidal vocoder to simulate electric stimulation (Shannon et al. 1995). In one condition, we measured speech recognition when the output of the vocoder was combined with 500-Hz low-pass speech in one ear, which simulated low-frequency acoustic hearing. In the other conditions, we replaced the low-pass speech with a tone that carried cues derived from it: some conditions used a tone carrying the dynamic F0 information of the target speech, others used a tone carrying its amplitude envelope, and a final set used a tone carrying both cues. Using this EAS simulation, we demonstrated that both F0 and the amplitude envelope provide significant benefit to intelligibility over vocoder-alone stimulation and that combining both cues typically provides more benefit than either alone. The average benefit of a tone carrying both cues was between 24 and 57 percentage points over vocoder-only stimulation.
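The vocoder simulation summarized here can be illustrated with a minimal sketch of a four-channel sinusoidal vocoder: split the signal into contiguous bands, extract each band's envelope by half-wave rectification and low-pass filtering, and use each envelope to modulate a sine at the band's center frequency. The band edges (100 to 6000 Hz, logarithmically spaced), the RBJ-cookbook biquad filters, and the 400-Hz envelope cutoff are assumptions chosen for illustration, not the parameters used by Brown and Bacon (2009), and the function names are hypothetical. Only the Python standard library is used, so the sketch is slow but easy to check.

```python
import math


def biquad(x, b, a):
    """Filter the list x with a normalised biquad (a[0] == 1), direct form I."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, xn, y1, yn
        y.append(yn)
    return y


def lowpass(fc, fs, q=1 / math.sqrt(2)):
    """RBJ-cookbook low-pass; the default q gives a 2nd-order Butterworth."""
    w0 = 2 * math.pi * fc / fs
    alpha, c = math.sin(w0) / (2 * q), math.cos(w0)
    a0 = 1 + alpha
    b = ((1 - c) / 2 / a0, (1 - c) / a0, (1 - c) / 2 / a0)
    return b, (1.0, -2 * c / a0, (1 - alpha) / a0)


def bandpass(f_lo, f_hi, fs):
    """RBJ-cookbook band-pass (0-dB peak) centred on the geometric mean of the edges."""
    f0 = math.sqrt(f_lo * f_hi)
    q = f0 / (f_hi - f_lo)
    w0 = 2 * math.pi * f0 / fs
    alpha, c = math.sin(w0) / (2 * q), math.cos(w0)
    a0 = 1 + alpha
    return (alpha / a0, 0.0, -alpha / a0), (1.0, -2 * c / a0, (1 - alpha) / a0)


def log_edges(f_min, f_max, n):
    """n + 1 logarithmically spaced band edges (an ASSUMED channel layout)."""
    return [f_min * (f_max / f_min) ** (i / n) for i in range(n + 1)]


def sine_vocoder(x, fs, n_channels=4, f_min=100.0, f_max=6000.0, env_fc=400.0):
    """Sum of envelope-modulated sines, one per analysis band."""
    edges = log_edges(f_min, f_max, n_channels)
    env_b, env_a = lowpass(env_fc, fs)                   # ASSUMED envelope cutoff
    out = [0.0] * len(x)
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = biquad(x, *bandpass(lo, hi, fs))          # analysis filter
        env = biquad([max(s, 0.0) for s in band], env_b, env_a)  # rectify, smooth
        fc = math.sqrt(lo * hi)                          # sine carrier at band centre
        for n, e in enumerate(env):
            out[n] += max(e, 0.0) * math.sin(2 * math.pi * fc * n / fs)
    return out
```

Higher-order analysis filters and level matching between input and output are omitted to keep the sketch short.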

Another recent report (Kong & Carlyon 2007) also examined the effects of F0 and the amplitude envelope on intelligibility in simulated EAS. Although they found a significant benefit from the amplitude envelope, the F0 cue provided no significant benefit. It is unclear why F0 was beneficial in our study but not in theirs. However, there are several key differences between the methods of the two studies, and we are conducting follow-up experiments to determine whether these differences can account for the different results.

F0 and amplitude envelope cues have also been examined as aids to lipreading. These results have been somewhat mixed as well, although the discrepancies seem to be in the amount of benefit from F0, and not whether F0 is beneficial. For example, an average benefit of about 15 percentage points in lipreading scores was shown on a connected discourse task, when F0 and amplitude envelope cues were presented acoustically together (Faulkner et al. 1992). On the other hand, sentence intelligibility improved by 50 percentage points when lipreading was supplemented with F0 presented acoustically without amplitude envelope cues (Kishon-Rabin et al. 1996). Modest benefit (~4 to 11 percentage points) has also been demonstrated when F0 was delivered using either spatial-tactile (Kishon-Rabin et al. 1996) or vibrotactile displays (Eberhardt et al. 1990).

In simulations of EAS and tests of lipreading, F0 and amplitude envelope cues have been shown to improve speech intelligibility, although the efficacy of each cue and the magnitude of improvement have varied across studies. Moreover, in simulations of EAS, we (Brown & Bacon 2009) have shown that a tone carrying these cues can provide significant improvements in speech intelligibility in noise. It is important to determine whether such a tone can provide similar benefits to EAS patients because it is possible that for patients with especially limited residual hearing, the cues could be made more audible than they might be when embedded in speech. As detailed in the Discussion section, this could be the case for individuals whose hearing is restricted to frequencies below the range where the F0 for many speakers resides and for individuals whose audiometric thresholds are so elevated that most of the speech in the low-frequency region cannot be made audible even with amplification.

Thus, the goal of the present study was to determine whether CI patients can benefit from a tone that carries both F0 and amplitude envelope cues. Because the patients were available for a limited time (they were in town to participate in experiments being conducted by some of our colleagues), we did not evaluate the efficacy of each cue separately. That will be the focus of follow-up experiments should the tone prove beneficial.

SUBJECTS AND METHODS

Subjects

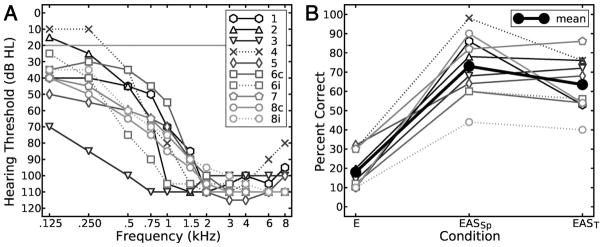

Eight postlingually deafened adults participated in the study. Figure 1A shows unaided audiometric thresholds for each subject. Patients 1, 2, 3, 5, and 7 had low-frequency acoustic hearing in their unimplanted ears, whereas patient 4 had residual acoustic hearing in the implanted ear. Patients 6 and 8 had some acoustic hearing in both the implanted and unimplanted ears, so each ear was tested separately in the experiment. Each patient’s demographic data, ear tested (relative to the implanted ear), and processor type are presented in Table 1. The mean age of participants was 63 years, and testing occurred at an average of 12 months postimplantation.

Fig 1.

A, Unaided audiometric thresholds for each tested ear of each subject. Each shade of gray and symbol represents a unique patient. Dashed lines indicate that the ear ipsilateral to the implant was tested; solid lines indicate that the ear contralateral to the implant was tested. Note that two patients (6 and 8) had some residual hearing in both their implanted and unimplanted ears. B, Mean percent correct scores. Plot shades, symbols, and lines are consistent with those in A. The bold plot with filled circles represents group mean performance. The processing conditions, depicted along the x axis, were electric-only stimulation (E), electric plus acoustically delivered target speech (EASSp), and electric plus acoustically delivered tone (EAST). We have connected each plot with lines for clarity even though the processing variable is not continuous.

TABLE 1.

Demographic data, implant device, and methodological information for each patient

| Subject | Ear tested | Gender | Age (yr) | Months Post-Op | Device | Active channels | Acoustic input | Target | Background | SNR (dB) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Contra | Male | 74 | 9 | Cochlear Nucleus Freedom | 6 | Aided | CID | Babble | 16 |
| 2 | Contra | Male | 86 | 23 | Advanced Bionics Clarion | 16 | Aided | CID | Babble | 24 |
| 3 | Contra | Female | 24 | 7 | Advanced Bionics Auria | 16 | Insert | CID | Babble | 8 |
| 4 | Ipsi | Female | 59 | 18 | Cochlear Nucleus Freedom | 6 | Insert | CUNY | Male | 15 |
| 5 | Contra | Female | 65 | 4 | Advanced Bionics Harmony | 12 | Insert | CUNY | Male | 21 |
| 6c | Contra | Male | 59 | 8 | Advanced Bionics Harmony | 12 | Insert | CUNY | Babble | 7 |
| 6i | Ipsi | Male | 59 | 8 | Advanced Bionics Harmony | 12 | Insert | CUNY | Babble | 7 |
| 7 | Contra | Male | 62 | 14 | Med-El Combi 40+ | 12 | Insert | IEEE | Babble | 4 |
| 8c | Contra | Female | 74 | 10 | Cochlear Nucleus Freedom | 6 | Insert | IEEE | Babble | 10 |
| 8i | Ipsi | Female | 74 | 10 | Cochlear Nucleus Freedom | 6 | Insert | IEEE | Babble | 10 |

Ear tested (Contra or Ipsi) is relative to the implanted ear. Post-Op refers to the number of months between implant surgery and testing. Acoustic input refers to the method used to deliver acoustic signals to the subject, where aided indicates that the patient's hearing aid was used in conjunction with a circumaural phone positioned 1 to 2 inches away from the pinna, and insert indicates the use of insert earphones (unaided). Target refers to the target materials used. Both the CID and CUNY sets are considered to have high context, whereas the IEEE set is considered to have low context. Background refers to the competing signal: four-talker babble, or a single male talker (AZBio sentences) for patients 4 and 5. SNR is the signal-to-noise ratio in dB. Patients 6 and 8 had some residual acoustic hearing in both their implanted and unimplanted ears and are therefore listed twice.
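The summary statistics quoted in the Subjects and Stimuli sections can be checked against Table 1. The short script below recomputes them. It counts each patient once for age and time since surgery but uses all ten tested ears for SNR; that second choice is our inference, made because it is the reading that reproduces the reported +12-dB average (averaging over the eight patients would give about +13 dB).

```python
# Rows transcribed from Table 1: (subject, age in years, months post-op, SNR in dB).
rows = [
    ("1", 74, 9, 16), ("2", 86, 23, 24), ("3", 24, 7, 8), ("4", 59, 18, 15),
    ("5", 65, 4, 21), ("6c", 59, 8, 7), ("6i", 59, 8, 7), ("7", 62, 14, 4),
    ("8c", 74, 10, 10), ("8i", 74, 10, 10),
]

# Age and time since surgery describe people, so count each patient once
# (patients 6 and 8 appear twice, once per tested ear; strip the c/i suffix).
patients = {}
for subject, age, months, _ in rows:
    patients[subject.rstrip("ci")] = (age, months)

mean_age = sum(age for age, _ in patients.values()) / len(patients)
mean_months = sum(m for _, m in patients.values()) / len(patients)

# SNR was set per tested ear, so it is averaged over all ten data sets.
snrs = [row[3] for row in rows]
mean_snr = sum(snrs) / len(snrs)

print(f"{len(patients)} patients, mean age {mean_age:.1f} yr, "
      f"mean {mean_months:.1f} months post-op")
print(f"SNR from +{min(snrs)} to +{max(snrs)} dB, mean +{mean_snr:.1f} dB")
```

The results (62.9 years, 11.6 months, and +12.2 dB) round to the 63 years, 12 months, and +12 dB reported in the text.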

Stimuli

The low-frequency stimulus was either a frequency- and amplitude-modulated tone or target speech. Before testing, the dynamic changes in the target talker’s F0 were extracted from each sentence using the YIN algorithm (de Cheveigné & Kawahara 2002) with a 40-msec window size and 10-msec step size. This extracted F0 information was used to frequency modulate a tone whose frequency was equal to the mean F0 of the target talker. In addition, the amplitude envelope of the target speech low-pass filtered at 500 Hz was extracted via half-wave rectification and low-pass filtering at 16 Hz (second-order Butterworth). The envelope was used to amplitude modulate the tone. The frequency- and amplitude-modulated tone was also turned on and off with voicing using 10-msec raised-cosine ramps, and the voicing transitions derived from the YIN algorithm were adjusted by hand when obvious errors were encountered. When the low-frequency cue was speech, unprocessed target speech was used and was limited in frequency by each patient’s audiometric configuration.
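The tone-generation steps in this paragraph can be sketched in a few functions. This is a minimal sketch under stated assumptions, not the authors' MATLAB implementation: the F0 contour is taken as given (one value per 10-msec frame, as a YIN tracker with a 10-msec step would produce, with 0 marking unvoiced frames), F0 is linearly interpolated between voiced frames, the 500-Hz low-pass filter is assumed to be second-order Butterworth (its order is not stated), and hand correction of voicing errors is not modeled. Both filters use the RBJ-cookbook biquad with Q = 1/√2, which yields a second-order Butterworth response. The function names are hypothetical.

```python
import math


def biquad(x, b, a):
    """Direct-form I biquad with normalised coefficients (a[0] == 1)."""
    y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1, y2, y1 = x1, xn, y1, yn
        y.append(yn)
    return y


def butter2_lowpass(fc, fs):
    """2nd-order Butterworth low-pass (RBJ cookbook form, Q = 1/sqrt(2))."""
    w0 = 2 * math.pi * fc / fs
    alpha, c = math.sin(w0) / math.sqrt(2), math.cos(w0)
    a0 = 1 + alpha
    b = ((1 - c) / 2 / a0, (1 - c) / a0, (1 - c) / 2 / a0)
    return b, (1.0, -2 * c / a0, (1 - alpha) / a0)


def amplitude_envelope(speech, fs):
    """Envelope of 500-Hz low-pass speech: half-wave rectify, then 16-Hz low-pass."""
    low = biquad(speech, *butter2_lowpass(500.0, fs))   # ASSUMED filter order
    rectified = [max(s, 0.0) for s in low]
    smooth = biquad(rectified, *butter2_lowpass(16.0, fs))
    return [max(e, 0.0) for e in smooth]                # clip small negative overshoot


def voicing_gate(voiced, ramp_len):
    """Boolean voicing mask -> gain with raised-cosine onset and offset ramps."""
    gate = [0.0] * len(voiced)
    n = 0
    while n < len(voiced):
        if not voiced[n]:
            n += 1
            continue
        start = n
        while n < len(voiced) and voiced[n]:
            n += 1
        for k in range(start, n):                       # voiced run is [start, n)
            t = min(k - start, n - 1 - k, ramp_len) / ramp_len
            gate[k] = 0.5 * (1 - math.cos(math.pi * t))
    return gate


def modulated_tone(f0_frames, env, fs, hop_s=0.010, ramp_s=0.010):
    """Tone following the F0 contour in frequency and env in amplitude.

    f0_frames: one F0 value (Hz) per hop_s frame, 0 for unvoiced frames.
    env: per-sample amplitude envelope at sampling rate fs.
    """
    hop = hop_s * fs
    voiced_f0 = [f for f in f0_frames if f > 0]
    mean_f0 = sum(voiced_f0) / len(voiced_f0)
    freq, voiced = [], []
    for n in range(len(env)):
        j = min(int(n / hop), len(f0_frames) - 1)
        f = f0_frames[j]
        if f > 0 and j + 1 < len(f0_frames) and f0_frames[j + 1] > 0:
            frac = n / hop - j                          # linear interpolation
            f = (1 - frac) * f + frac * f0_frames[j + 1]
        voiced.append(f > 0)
        freq.append(f if f > 0 else mean_f0)            # carrier rests at mean F0
    gate = voicing_gate(voiced, max(1, round(ramp_s * fs)))
    out, phase = [], 0.0
    for n in range(len(env)):
        phase += 2 * math.pi * freq[n] / fs             # integrate frequency -> phase
        out.append(env[n] * gate[n] * math.sin(phase))
    return out
```

Integrating the instantaneous frequency into a running phase, rather than evaluating a sine at a frame-wise frequency, keeps the waveform continuous as F0 changes and so avoids clicks at frame boundaries.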

In our previous work (Brown & Bacon 2009), the improvement provided by the tone seemed to depend on the target sentence set used. When the CUNY set was used, the tone modulated with both F0 and amplitude envelope cues was as effective as low-pass target speech. On the other hand, when the IEEE sentence set was used, the same tone provided significant benefit, but not as much as target speech. We (Brown & Bacon 2009) suggested that this difference may be caused in part by sentence context. For this reason, patients in this study were chosen at random to receive either low- or high-context stimuli. Low-context stimuli consisted of the IEEE sentences (IEEE 1969) produced by a female talker with a mean F0 of 184 Hz. High-context stimuli were either the CUNY sentences (Boothroyd et al. Reference Note 1) produced by a male talker with a mean F0 of 127 Hz, or the CID sentences (Davis & Silverman 1978) produced by a male talker with a mean F0 of 111 Hz. Our purpose was not to evaluate the effects of context per se, but to sample different contexts in evaluating the benefit of the tone versus speech on sentence intelligibility.

For all but listeners 4 and 5, the background was four-talker babble (Auditec 1997). Because the babble stimulus was not available when these two subjects were tested, a single competing talker was used instead: AZBio sentences (Spahr & Dorman 2004) produced by a male talker with a mean F0 of 139 Hz. See Table 1 for details of the speech stimuli used for each subject. The background was never present in the low-frequency region. The target speech began 150 msec after the onset of the background and ended 150 msec before the background offset. Before testing, the signal-to-noise ratio (SNR) required to achieve approximately 20 to 30% correct with electric-only stimulation was estimated for each subject, as described below. The SNRs ranged from +4 to +24 dB, with an average of +12 dB (see Table 1).
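The text fixes the SNR for each subject and the 150-msec lead and lag of the background, but not how the SNR was computed. A minimal sketch, assuming that the SNR is the ratio of the RMS level of the whole target sentence to that of the background segment it is mixed with, and that the background (rather than the target) is rescaled; the function name `mix_at_snr` is hypothetical.

```python
import math


def rms(x):
    """Root-mean-square value of a list of samples."""
    return math.sqrt(sum(s * s for s in x) / len(x))


def mix_at_snr(target, background, snr_db, fs, lead_s=0.150):
    """Embed target in background at snr_db, with lead_s of background on each side.

    ASSUMPTIONS: SNR is the RMS ratio of the whole target to the background
    segment actually used, and the background (not the target) is rescaled.
    """
    lead = int(round(lead_s * fs))
    needed = len(target) + 2 * lead
    if len(background) < needed:
        raise ValueError("background must be at least 2 * lead_s longer than target")
    segment = background[:needed]
    gain = rms(target) / (rms(segment) * 10 ** (snr_db / 20))
    mix = [gain * b for b in segment]
    for n, s in enumerate(target):
        mix[lead + n] += s                 # target starts lead_s after background onset
    return mix
```

Because the background is only added to the implant channel in this study, a mixture like this would feed the electric input, while the acoustic input would receive the target-derived signal alone.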

All processing was performed digitally via software routines in Matlab, and stimuli were presented with either an Echo Gina 3G or an Echo Indigo sound card (16-bit precision, 44.1-kHz sampling rate). Signals were delivered to the implant via direct/auxiliary input; subjects used their normal program, and microphone sensitivity was minimized.† Subjects received input in the low-frequency region either unaided using Etymotic Research 3A insert earphones or aided using each subject’s typical hearing aid setting and a Sennheiser HD250 earphone positioned 1 to 2 inches from the pinna of the ear to be tested (see Table 1). In all cases, comfortable listening levels were set before testing.

Conditions

The target-plus-background mixture was always presented to the implant. In the low-frequency acoustic region, there was either no stimulation (E), target speech (EASSp), or a tone that was modulated both in frequency by the dynamic changes in F0 and in amplitude by the amplitude envelope of the low-pass speech (EAST). As noted earlier, the background was never present in the low-frequency region. Although presenting the background in the electric region but not in the acoustic region is not natural, previous studies (Kong & Carlyon 2007; Brown & Bacon 2009) have used this manipulation in simulation to increase measurement sensitivity by increasing the chances that listeners could use the F0 cue. Although we have found in simulation (Brown & Bacon 2009) that performance was unaffected by adding the background to the low-frequency speech or tone, it is unclear whether the same is true for CI patients, so we excluded the background from the low-frequency region.

Procedure

Participants were seated in a double-walled sound booth with an experimenter, who scored responses and controlled stimulus presentation. Responses were made verbally, and participants were instructed to repeat as much of the target sentence as they could. No feedback was provided.

Participants first heard 10 unprocessed broadband target sentences presented in quiet to the implant to familiarize them with the target talker’s voice. In addition, 40 to 60 sentences were presented at various SNRs, to determine the SNR that would yield approximately 20 to 30% correct.

There were 50 keywords (10 sentences) per test condition, and the presentation order of the conditions was randomized for each subject. No sentence was heard more than once.

RESULTS

Figure 1B shows individual data along with group mean percent correct results. On average, the scores were 18% correct for E, 73% for EASSp, and 64% for EAST. A single-factor repeated-measures analysis of variance revealed a significant main effect of processing condition (p < 0.001). A post hoc Tukey analysis showed that performance in both EASSp and EAST was significantly greater than that in E (p < 0.001) and that the scores in EASSp and EAST were not significantly different from one another (p = 0.21). However, this last result should be interpreted with care: the absence of a significant difference between the EASSp and EAST conditions may simply reflect the relatively low statistical power that resulted from the small number of subjects. Despite this caveat, the benefit of the tone is clear.
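Because the individual scores appear only graphically in Figure 1B, the reported analysis cannot be reproduced here. As an illustration of what a single-factor repeated-measures ANOVA computes, the sketch below partitions the total variance into condition, subject, and residual terms and forms F from the condition and residual mean squares; removing the subject term is what lets each listener serve as his or her own control. The scores in the example are made up, the function name is hypothetical, p-values and the Tukey comparisons are omitted, and no sphericity correction is applied.

```python
def rm_anova_one_way(scores):
    """scores[s][c] = score of subject s in condition c.

    Returns (F, df_condition, df_error) for a one-way repeated-measures ANOVA.
    """
    n = len(scores)          # subjects
    k = len(scores[0])       # conditions
    grand = sum(sum(row) for row in scores) / (n * k)
    cond_means = [sum(row[c] for row in scores) / n for c in range(k)]
    subj_means = [sum(row) / k for row in scores]

    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_cond - ss_subj   # condition x subject residual

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error


# Made-up scores: 3 listeners (rows) x 3 conditions (columns).
demo = [[1, 4, 4], [2, 5, 6], [3, 6, 5]]
print(rm_anova_one_way(demo))   # F is 27 (up to rounding), df = (2, 4)
```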

Although methodological and other incidental constraints such as time limitations prevented us from gathering data on the individual contributions of the F0 and envelope cues from all CI patients tested, we did collect these data from patients 5 and 6c, in addition to the E, EASSp, and EAST conditions in which all patients participated. The three additional conditions combined electric stimulation with the tone carrying the voicing cue only (a tone fixed in frequency at the target talker’s mean F0, and turned on and off with voicing; EAST-V), the F0 cue (a tone turned on and off with voicing but otherwise fixed in level, and modulated in frequency with the target talker’s F0; EAST-F0), or the amplitude envelope cue (EAST-A). Note that the voicing cue was present in all of the tone conditions. Mean performance was 31.5, 48, and 48% correct in EAST-V, EAST-F0, and EAST-A, respectively. For comparison, the mean performance for these two patients in the E, EASSp, and EAST conditions was 22, 62, and 61% correct, respectively. Although one should not draw conclusions from only two sets of data, the pattern of results is nearly identical to what we obtained in simulation (Brown & Bacon 2009), in that each cue provided a benefit to intelligibility, and combining the cues provided more benefit than any alone. These results suggest that both F0 and amplitude envelope cues are important for EAS.
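The three single-cue tones differ from the full tone only in which cue is held fixed. A minimal sketch, assuming per-sample inputs (an F0 contour, an amplitude envelope, and a voicing gate, for example one with raised-cosine ramps). The fixed level used for EAST-V and EAST-F0 (here, the mean envelope over voiced samples) and the fixed frequency used for EAST-A (here, the talker's mean F0) are assumptions, since the text does not specify them; the function names are hypothetical.

```python
import math


def synth(freq, amp, fs):
    """Sine whose instantaneous frequency (Hz) and amplitude follow per-sample lists."""
    out, phase = [], 0.0
    for f, a in zip(freq, amp):
        phase += 2 * math.pi * f / fs          # integrate frequency to get phase
        out.append(a * math.sin(phase))
    return out


def tone_variants(f0, env, gate, fs):
    """Build the four tone conditions from per-sample inputs.

    f0: F0 contour in Hz (0 when unvoiced); env: amplitude envelope;
    gate: voicing gain between 0 and 1.
    """
    voiced_f0 = [f for f in f0 if f > 0]
    mean_f0 = sum(voiced_f0) / len(voiced_f0)
    flat_freq = [mean_f0] * len(f0)                      # no F0 cue
    fm_freq = [f if f > 0 else mean_f0 for f in f0]      # F0 cue
    voiced_env = [e for e, g in zip(env, gate) if g > 0]
    level = sum(voiced_env) / len(voiced_env)            # ASSUMED fixed level
    flat_amp = [g * level for g in gate]                 # voicing only, no envelope
    env_amp = [g * e for g, e in zip(gate, env)]         # envelope cue
    return {
        "EAST-V": synth(flat_freq, flat_amp, fs),
        "EAST-F0": synth(fm_freq, flat_amp, fs),
        "EAST-A": synth(flat_freq, env_amp, fs),         # ASSUMED fixed at mean F0
        "EAST": synth(fm_freq, env_amp, fs),
    }
```

Every variant shares the same voicing gate, so, as in the conditions described above, the voicing cue is present in all of them, and EAST-F0 and EAST-A each add exactly one further cue.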

DISCUSSION

On average, the results of the current experiment show that the tone provided 46 percentage points of improvement over electric stimulation alone. This benefit was nearly as much as the 55 percentage points of improvement that was provided by target speech in the 10 sets of data collected (from eight CI listeners). The smallest improvement observed with tone was 30 percentage points, whereas the largest was 58 percentage points.
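Benefit throughout is the difference, in percentage points, between a condition's score and the electric-only (E) score. Applying that definition to the means reported in the Results section gives the figures above; the dictionary and function names are illustrative only, and because the inputs are rounded means the differences are approximate.

```python
# Mean percent-correct scores quoted in the Results section.
group = {"E": 18, "EASSp": 73, "EAST": 64}
# Means for patients 5 and 6c, who also completed the single-cue conditions.
pair = {"E": 22, "EASSp": 62, "EAST": 61, "EAST-V": 31.5, "EAST-F0": 48, "EAST-A": 48}


def benefit(scores, condition, baseline="E"):
    """Improvement over electric-only stimulation, in percentage points."""
    return scores[condition] - scores[baseline]


print({c: benefit(group, c) for c in ("EASSp", "EAST")})
print({c: benefit(pair, c) for c in ("EAST-V", "EAST-F0", "EAST-A", "EAST")})
```

The first line prints the 55- and 46-point group benefits. The second shows that, for patients 5 and 6c, the single-cue tones added 9.5 to 26 points, whereas the tone carrying both cues added 39.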

Although a lack of statistical power makes it as yet unclear whether the tone is actually as effective as target speech, it is nevertheless clear that the tone provides a large benefit. Indeed, this benefit over electric-only stimulation was statistically significant, even with limited statistical power. The significant improvement in intelligibility with the addition of the modulated tone occurred even though most of the patients could tell that the tone was not speech when it was heard in isolation; that is, it sounded qualitatively different from target speech in the low-frequency region.

Our work in simulation (Brown & Bacon 2009) indicates that F0 and amplitude envelope cues contribute equal and somewhat complementary information. However, a recent report (Kong & Carlyon 2007) presented data in which F0 did not make a significant contribution to intelligibility under simulated EAS conditions. It is unclear why Kong and Carlyon found no benefit from F0, and follow-up experiments in simulation are being conducted to examine several methodological differences between the two studies. However, the data collected from patients 5 and 6c on the contributions of the individual cues provide support for our previous findings (Brown & Bacon 2009). Although we plan to characterize fully the individual contributions of each cue in subsequent experiments, we view the data presented here as an important first step in establishing the viability of the processing and procedures used to generate and deliver the tone.

In simulation (Brown & Bacon 2009), the tone contributed about 31 percentage points of improvement over simulated electric stimulation alone when target sentence context was low (IEEE sentences) and about 58 percentage points when context was high (CUNY sentences). In this study, both high- and low-context sentences were used. The goal was not to compare performance systematically under different levels of context, but to show that the tone could be effective in both high- and low-context conditions. Although conclusive statements about the relationship between sentence context and EAS benefit cannot be made based on the present data, the results clearly show that the tone can be as effective (or nearly as effective) as target speech for sentences of both low and high context. Although it is not clear why there does not seem to be a context effect for CI patients, one possible reason is their greater experience listening to frequency-restricted speech signals. We have conducted a pilot experiment (Brown & Bacon 2008) in which normal-hearing listeners were exposed to EAS simulations for 1.5 hours per day for 3 weeks. Although more research is needed, there was clear evidence that the benefit resulting from the modulated tone increased with exposure.

In addition to demonstrating the importance of F0 and the amplitude envelope for EAS, the results of the current experiment also have important practical implications for many CI patients. Current EAS involves combining electric stimulation from a CI with acoustic stimulation received through residual hearing (Gantz et al. 2006; Dorman et al. 2007). Although amplification via hearing aids often helps CI patients with somewhat limited residual hearing achieve EAS benefit (Gantz et al. 2005, 2006), many CI patients may not possess enough residual hearing to show a benefit, even with amplification.

It is possible that CI patients with especially elevated low-frequency thresholds could benefit more from the tonal cue than from speech itself because of the narrow-band nature of the tone. For a given amount of gain, as from a hearing aid, higher sound pressure levels can be achieved in a particular frequency region when all of the energy is concentrated into that narrow frequency region. As a result, it may be possible to make the relatively narrow-bandwidth tone more audible than speech. Thus, patients who might not benefit from “traditional” EAS might benefit from replacing speech with an intense low-frequency tone. This could be achieved by a processor designed to extract F0 and amplitude envelope information in real time. As mentioned in the Introduction section, this type of processing has been shown to provide significant benefit to profoundly hearing-impaired individuals as a lipreading aid (Faulkner et al. 1992).
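The bandwidth argument can be put in rough numbers. Assume, purely for illustration, that the maximum output power P of the amplification is fixed, that speech energy is spread evenly across a band of width W, and that audibility is governed by the power falling within one auditory filter of bandwidth B inside that band. A tone places all of P within that filter, whereas the speech places only PB/W there, so the tone's level advantage within the filter is given by the first line below. Taking W = 500 Hz (the cutoff used to derive the envelope) and B as the equivalent rectangular bandwidth (ERB) at 125 Hz from the common approximation ERB = 24.7(4.37F + 1), with F in kHz, gives the second line.

```latex
\[
\Delta L = 10\log_{10}\frac{P}{P\,B/W} = 10\log_{10}\frac{W}{B}\ \text{dB}
\]
\[
\mathrm{ERB}(125\ \text{Hz}) = 24.7\,(4.37 \times 0.125 + 1) \approx 38.2\ \text{Hz},
\qquad
\Delta L \approx 10\log_{10}\frac{500}{38.2} \approx 11.2\ \text{dB}
\]
```

Real speech spectra are far from flat and hearing-aid output limits are more complex than a single power cap, so this is an order-of-magnitude illustration rather than a prediction.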

The tone may prove more beneficial than speech for another group of impaired listeners, in particular, those individuals with extremely low audiometric “corner frequencies.” If a given listener only has residual acoustic hearing up to, for example, 100 Hz, he or she may not show much EAS benefit because the F0 of most talkers (particularly females and children) would be in a frequency region where it would be inaudible (Peterson & Barney 1952). However, it may be possible to apply F0 and the amplitude envelope of the low-pass speech to a tone lower in frequency than the target talker’s mean F0, thereby facilitating EAS benefit. We have collected data (Scherrer et al. Reference Note 2) from normal-hearing subjects listening in simulated EAS, which confirm that a tone carrying F0 and the amplitude envelope can be shifted down in frequency and still provide benefit. Although promising, this effect must be more fully characterized and confirmed in CI patients.
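The text does not say how the F0 contour was mapped onto a lower carrier. One plausible mapping, assumed here purely for illustration, multiplies the whole contour by a single ratio so that its voiced mean lands on the new center frequency; this preserves the size of each pitch movement in semitones, whereas a constant shift in Hz would instead preserve excursions in Hz. The amplitude envelope would be applied unchanged. The helper names and the 80-Hz target are hypothetical.

```python
import math


def transpose_contour(f0_frames, new_mean_hz):
    """Move a frame-wise F0 contour so that its voiced mean equals new_mean_hz.

    Every voiced frame is multiplied by the same ratio, which keeps the size of
    each pitch movement in semitones; unvoiced frames (0) are left at 0 so the
    voicing pattern, and hence the tone's on/off timing, is unchanged.
    """
    voiced = [f for f in f0_frames if f > 0]
    ratio = new_mean_hz / (sum(voiced) / len(voiced))
    return [f * ratio if f > 0 else 0.0 for f in f0_frames]


def semitones(f_from, f_to):
    """Size of the interval from f_from to f_to, in semitones."""
    return 12 * math.log2(f_to / f_from)


# Hypothetical contour around the 184-Hz mean of the IEEE talker, moved to 80 Hz.
contour = [0.0, 160.0, 184.0, 208.0, 0.0]
shifted = transpose_contour(contour, 80.0)
print([round(f, 1) for f in shifted])
```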

SUMMARY

When a tone modulated in frequency with the dynamic changes in F0 of the target talker and in amplitude with the amplitude envelope of low-pass target speech is presented to the acoustic region of EAS patients, an average benefit to intelligibility of 46 percentage points was observed over electric-only stimulation.

This benefit was nearly as much as the 55 percentage points of improvement observed when electric stimulation was combined with target speech in the low-frequency region.

All CI patients showed a benefit with the tone. The smallest benefit observed was 30 percentage points and the largest was 58 percentage points.

Further research is needed to determine whether the tone can be more effective than speech for individuals with even less residual hearing in the low-frequency region.

Acknowledgments

The authors acknowledge Michael Dorman for insightful discussions about this work and Tony Spahr and Rene Gifford for their help with data collection. They also thank all three for graciously making their CI patients available for testing.

This work was supported by grants from the National Institute on Deafness and Other Communication Disorders (DC01376 and DC008329) (to S.P.B.).

Footnotes

The human cochlea is approximately 35 mm in length and is tonotopically organized (Greenwood 1990) such that high-frequency stimulation occurs closer to the base and low-frequency stimulation occurs nearer the apex. Traditional electrode arrays, which are inserted from the base of the cochlea, can be as long as 31 mm and usually destroy any residual low-frequency hearing that might be present.
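The tonotopic map in this footnote can be made concrete with Greenwood's (1990) frequency-position function for the human cochlea, F = A(10^(ax) - k), where x is the proportion of cochlear length measured from the apex. The sketch uses the commonly quoted human constants A = 165.4, a = 2.1, and k = 0.88 (k = 1 is also used), assumes the 35-mm length given above, and treats insertion depth as distance from the base along that length, which ignores differences between the electrode's path and the basilar membrane. The numbers are therefore approximate, and the helper name is hypothetical.

```python
COCHLEA_MM = 35.0                  # cochlear length given in the footnote
A, a, k = 165.4, 2.1, 0.88         # Greenwood (1990) human constants


def greenwood_hz(dist_from_apex_mm):
    """Characteristic frequency at a place measured in mm from the apex."""
    x = dist_from_apex_mm / COCHLEA_MM
    return A * (10 ** (a * x) - k)


for depth in (10, 20, 31):          # insertion depth from the base, in mm
    tip = COCHLEA_MM - depth         # distance of the array tip from the apex
    print(f"{depth}-mm insertion: tip near the {greenwood_hz(tip):.0f}-Hz place")
```

Under these assumptions the tip of a 10- to 20-mm array sits near the 5.1- to 1.2-kHz places, leaving the more apical low-frequency region beyond its reach, whereas a 31-mm array reaches close to the 140-Hz place.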

Where possible, microphone input was turned off completely. However, this was not possible with the Cochlear Corp. Nucleus device; technicians from the company advised us that the best option was to turn microphone sensitivity down to its lowest setting. For patient 1, who received aided acoustic input, we tested whether his implant microphone could pick up the acoustic signal by placing the circumaural phone onto a manikin head situated close to the patient's head. We removed this patient's hearing aid, double-plugged his unimplanted ear, and set the sensitivity of his processor microphone to its lowest setting. We then presented speech tokens at the presentation level used during testing and asked him to indicate whether he could hear the speech. He could not.

References

- Auditec. Auditory Tests (Revised) [Compact disc]. St. Louis: Auditec; 1997.

- Brown CA, Bacon SP. Learning effects in simulated electric-acoustic hearing. 31st Midwinter Meeting of the Association for Research in Otolaryngology; Phoenix, AZ; 2008.

- Brown CA, Bacon SP. Low-frequency speech cues and simulated electric-acoustic hearing. J Acoust Soc Am. 2009;125:1658–1665. doi: 10.1121/1.3068441.

- Chang JE, Bai JY, Zeng F. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Trans Biomed Eng. 2006;53:2598–2601. doi: 10.1109/TBME.2006.883793.

- Davis H, Silverman SR. Hearing and Deafness. New York: Holt, Rinehart and Winston; 1978.

- de Cheveigné A, Kawahara H. YIN, a fundamental frequency estimator for speech and music. J Acoust Soc Am. 2002;111:1917–1930. doi: 10.1121/1.1458024.

- Dorman MF, Gifford RH, Spahr AJ, et al. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol Neurootol. 2007;13:105–112. doi: 10.1159/000111782.

- Eberhardt SP, Bernstein LE, Demorest ME, et al. Speechreading sentences with single-channel vibrotactile presentation of voice fundamental frequency. J Acoust Soc Am. 1990;88:1274–1285. doi: 10.1121/1.399704.

- Faulkner A, Ball V, Rosen S, et al. Speech pattern hearing aids for the profoundly hearing impaired: Speech perception and auditory abilities. J Acoust Soc Am. 1992;91:2136–2155. doi: 10.1121/1.403674.

- Gantz BJ, Turner CW. Combining acoustic and electrical speech processing: Iowa/Nucleus hybrid implant. Acta Otolaryngol. 2004;124:344–347. doi: 10.1080/00016480410016423.

- Gantz BJ, Turner C, Gfeller KE. Acoustic plus electric speech processing: Preliminary results of a multicenter clinical trial of the Iowa/Nucleus Hybrid implant. Audiol Neurootol. 2006;11(Suppl 1):63–68. doi: 10.1159/000095616.

- Gantz BJ, Turner CW, Gfeller KE, et al. Preservation of hearing in cochlear implant surgery: Advantages of combined electrical and acoustical speech processing. Laryngoscope. 2005;115:796–802. doi: 10.1097/01.MLG.0000157695.07536.D2.

- Greenwood DD. A cochlear frequency-position function for several species—29 years later. J Acoust Soc Am. 1990;87:2592–2605. doi: 10.1121/1.399052.

- IEEE. IEEE recommended practice for speech quality measurements. IEEE Trans Audio Electroacoust. 1969;17:225–246.

- Kishon-Rabin L, Boothroyd A, Hanin L. Speechreading enhancement: A comparison of spatial-tactile display of voice fundamental frequency (F0) with auditory F0. J Acoust Soc Am. 1996;100:593–602. doi: 10.1121/1.415885.

- Kong Y, Carlyon RP. Improved speech recognition in noise in simulated binaurally combined acoustic and electric stimulation. J Acoust Soc Am. 2007;121:3717–3727. doi: 10.1121/1.2717408.

- Kong Y, Stickney GS, Zeng F. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351–1361. doi: 10.1121/1.1857526.

- Peterson GE, Barney HL. Control methods used in a study of the vowels. J Acoust Soc Am. 1952;24:175–184.

- Qin MK, Oxenham AJ. Effects of introducing unprocessed low-frequency information on the reception of envelope-vocoder processed speech. J Acoust Soc Am. 2006;119:2417–2426. doi: 10.1121/1.2178719.

- Shannon RV, Zeng FG, Kamath V, et al. Speech recognition with primarily temporal cues. Science. 1995;270:303–304. doi: 10.1126/science.270.5234.303.

- Spahr AJ, Dorman MF. Performance of subjects fit with the Advanced Bionics CII and Nucleus 3G cochlear implant devices. Arch Otolaryngol Head Neck Surg. 2004;130:624–628. doi: 10.1001/archotol.130.5.624.

- Turner CW, Gantz BJ, Vidal C, et al. Speech recognition in noise for cochlear implant listeners: Benefits of residual acoustic hearing. J Acoust Soc Am. 2004;115:1729–1735. doi: 10.1121/1.1687425.

REFERENCE NOTES

1. Boothroyd A, Hanin L, Hnath T. A Sentence Test of Speech Perception: Reliability, Set Equivalence, and Short Term Learning. Internal Report RCI 10. New York: Speech & Hearing Sciences Research Center, City University of New York; 1985.

2. Scherrer N, Brown C, Bacon S. Effect of Fundamental Frequency on Intelligibility in Simulated Electric-Acoustic Listening. American Auditory Society; Scottsdale, AZ; 2007.