Abstract

Background

Large cross-disciplinary scientific teams are becoming increasingly prominent in the conduct of research.

Purpose

This paper reports on a quasi-experimental longitudinal study conducted to compare bibliometric indicators of scientific collaboration, productivity, and impact of center-based transdisciplinary team science initiatives and traditional investigator-initiated grants in the same field.

Methods

All grants began between 1994 and 2004 and up to 10 years of publication data were collected for each grant. Publication information was compiled and analyzed during the spring and summer of 2010.

Results

Following an initial lag period, the transdisciplinary research center grants had higher overall publication rates than the investigator-initiated R01 (NIH Research Project Grant Program) grants. There were relatively uniform publication rates across the research center grants compared to dramatically dispersed publication rates among the R01 grants. On average, publications produced by the research center grants had greater numbers of coauthors but similar journal impact factors compared with publications produced by the R01 grants.

Conclusions

The lag in productivity among the transdisciplinary center grants was offset by their overall higher publication rates and average number of coauthors per publication, relative to investigator-initiated grants, over the 10-year comparison period. The findings suggest that transdisciplinary center grants create benefits for both scientific productivity and collaboration. (Am J Prev Med 2012;42(2):157–163) Published by Elsevier Inc. on behalf of American Journal of Preventive Medicine

Background

The rapid proliferation of scholarly knowledge and the increasing complexity of social and scientific problems have prompted growing investments in team science initiatives.1–8 These initiatives typically last 5 to 10 years and are dispersed across different departments, institutions, and geographic locations.5,9–11 Many of these initiatives are based on the belief that team-based research integrating the strengths of multiple disciplines may accelerate progress toward resolving complex societal and scientific problems.12,13 The health sciences, in particular, have embraced this approach to address pervasive public health threats such as those associated with smoking, obesity, and environmental carcinogens.14–16

Cross-disciplinary collaboration ranges from the least-integrative form of team science, multidisciplinary collaboration, to the most-integrative, transdisciplinary collaboration, with interdisciplinary collaboration falling between the two.17,18 Participants in multidisciplinary and interdisciplinary collaborations remain conceptually and methodologically anchored in their respective disciplines, although some exchange of diverse perspectives occurs among research partners. Participants in transdisciplinary collaborations transcend their disciplines, engaging in a collaborative process to develop a shared conceptual framework that integrates and extends beyond the contributing disciplinary perspectives.

These research initiatives create a “melting pot” for different disciplinary cultures, theoretic and methodologic approaches, and technologies. However, there is limited empirical evidence concerning whether these initiatives enhance innovation, productivity, or other research outcomes. The present study explicitly compared the scientific productivity of traditional investigator-initiated research with that of center-based initiatives conducted by transdisciplinary science teams.19

The National Cancer Institute (NCI) within the NIH has supported several transdisciplinary center initiatives20–23 over the past decade, along with related evaluation activities to better understand the impacts of these initiatives.11,24,25 The first of these initiatives, the Transdisciplinary Tobacco Use Research Centers (TTURC), was developed because tobacco use research was becoming increasingly restricted to disciplinary silos, and there appeared to be a decline in scientific breakthroughs and related innovations in health interventions.26 The TTURC initiative26,27 was launched in 1999 and renewed in 2005, ultimately supporting eight geographically dispersed centers. The grant mechanism encouraged collaboration both within and between centers.20,24,27

The structure of the TTURCs was designed explicitly to promote transdisciplinary research. Each center was required to: (1) have at least three primary research subprojects, each similar in size, duration, budget, and scope to a study supported by a traditional NIH grant (R01); (2) provide career development opportunities for new and established investigators; (3) provide developmental funds for innovative pilot projects; (4) establish shared administrative, technical, statistical, and other infrastructure (referred to as “cores”) to support the scientific subprojects; and (5) collaborate with other TTURCs. Centers were encouraged to collaborate with other partners such as NCI tobacco experts, community organizations, and policymakers. In addition, unlike other center grant initiatives such as NIH P01s, P50s, and SPOREs, the TTURC initiative introduced explicit expectations related to transdisciplinary knowledge synthesis, including the development of transdisciplinary conceptual models, methodologic approaches, and translational applications that would advance the science of tobacco prevention and control.

The present study examines whether the TTURC initiative produced greater scientific collaboration, productivity, and impact than traditional investigator-initiated research conducted in the same field and funding period. It had three principal research questions: (1) Are there differences in scientific collaboration, productivity, and impact between TTURC center grants and R01 grants for tobacco use research, including the volume and timing of productivity? (2) Are there within-group differences in scientific productivity for each of the two types of grants? (3) What factors account for differences in between- and within-group scientific productivity among the grant types?

Methods

This study used a quasi-experimental design incorporating three groups.28 The first group included the six TTURC centers with continuous funding from 1999 to 2009; these centers encompassed 39 distinct primary research subprojects that lasted for either 5 (n=33) or 10 (n=6) years. The second and third groups were comparison groups of investigator-initiated tobacco use research grants funded through the NIH R01 grant mechanism. These groups were generated using an NIH-wide grants management database and subsequently screened by tobacco scientists to identify grants that matched the TTURC primary research subprojects on duration, timing, scope, and topical focus. The longitudinal R01 (LR01) award comparison group (n=21) was designed to match the 10-year duration and consistent institutional infrastructure and resources of the six TTURCs. The stacked R01 (SR01) award comparison group (n=39) was designed to match the duration and funding periods of the 39 TTURC subprojects.

The study incorporated bibliometric indicators of scientific productivity, collaboration, and impact as the main dependent variables. These were number of publications, number of coauthors per publication, and journal impact factors associated with these publications. Publication data were obtained and analyzed in 2010 from two NIH databases that link grant records to publication records in MEDLINE. Journal Citation Reports29 was used to obtain annual journal impact factors.

To compare TTURC subprojects with R01 grants, publications were linked to the individual TTURCs through acknowledgement of a center-based grant number and then assigned to a specific subproject using a series of algorithms as well as manual review of the annual progress reports. Publications assigned to the cores, developmental pilot projects, and multicenter collaborations were included in overall analyses of the TTURC initiative but excluded from analyses at the subproject level because, on manual review, they were found to be qualitatively different from publications that resulted directly from TTURC scientific subprojects and R01 grants. To account for differences in grant start dates, publications were linked to project years (e.g., Year 1 of a given study). Pairwise t-tests and chi-square analyses were conducted to test for between-group differences in bibliometric outcomes and selected covariates. Appendix A (available online at www.ajpmonline.org) provides a more detailed description of these methods.
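The project-year alignment described above can be illustrated with a short Python sketch. It is not the authors' pipeline: the `Publication` record, the rule that a publication dated in a grant's start year counts as Year 1, the 10-year window, and the sample grants are all hypothetical assumptions. The sketch only shows how annual and cumulative counts like those in Figure 1 can be tallied once grants with different start dates share a common time axis.

```python
from collections import Counter
from dataclasses import dataclass
from itertools import accumulate


@dataclass
class Publication:
    grant_id: str  # hypothetical identifier of the acknowledged grant
    pub_year: int  # calendar year of publication


def project_year(pub_year: int, start_year: int) -> int:
    """Map a calendar year onto a project year (assumed: start year = Year 1)."""
    return pub_year - start_year + 1


def annual_and_cumulative(pubs, start_years, max_year=10):
    """Count publications per project year and their running total.

    pubs: iterable of Publication records for one comparison group.
    start_years: dict mapping grant_id -> calendar year the grant began.
    Publications falling outside Years 1..max_year are dropped.
    """
    counts = Counter()
    for pub in pubs:
        year = project_year(pub.pub_year, start_years[pub.grant_id])
        if 1 <= year <= max_year:
            counts[year] += 1
    annual = [counts[y] for y in range(1, max_year + 1)]
    cumulative = list(accumulate(annual))
    return annual, cumulative


# Hypothetical example: two grants that started in different calendar years.
starts = {"G1": 1999, "G2": 2001}
pubs = [
    Publication("G1", 2000),  # Year 2 of G1
    Publication("G1", 2001),  # Year 3 of G1
    Publication("G2", 2002),  # Year 2 of G2
    Publication("G2", 2012),  # Year 12 of G2: outside the window, dropped
]
annual, cumulative = annual_and_cumulative(pubs, starts)
print(annual[:4])      # [0, 2, 1, 0]
print(cumulative[:4])  # [0, 2, 3, 3]
```

Aligning on project years rather than calendar years is what lets a 1999 grant and a 2001 grant contribute to the same "Year 2" bar.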

Results

Comparability of the Transdisciplinary Tobacco Use Research Center and R01 Groups

Table 1 provides descriptive characteristics of the TTURC subprojects and the two groups of R01 grants, including type of research study, number of additional grants led by the PI at the time of the award, and academic rank of the PI at the time of the award. There were no statistically significant differences in any of these covariates across groups.

Table 1.

Descriptive characteristics of TTURC subprojects and R01 grants, n (%) unless otherwise noted

| Covariate | TTURC (n=39) | LR01 (n=21) | SR01 (n=39) | χ² | p |
|---|---|---|---|---|---|
| Type of research study | | | | 9.11 | NS |
| Policy research | 1 (3) | 2 (9) | 0 (0) | | |
| Clinical | 15 (38) | 10 (48) | 25 (64) | | |
| Laboratory/basic animal | 12 (31) | 5 (24) | 8 (21) | | |
| Epidemiology/surveillance | 11 (28) | 4 (19) | 6 (15) | | |
| Number of additional grants led by principal investigator at time of award | | | | 2.45 | NS |
| 0 | 10 (26) | 4 (19) | 13 (33) | | |
| 1 or 2 | 19 (49) | 12 (57) | 18 (46) | | |
| 3 or 4 | 7 (18) | 4 (19) | 7 (18) | | |
| 5 or 6 | 3 (8) | 1 (5) | 1 (3) | | |
| Academic rank of principal investigator at time of award | | | | 9.51 | NS |
| Professor | 21 (54) | 10 (48) | 14 (36) | | |
| Associate professor | 4 (10) | 4 (19) | 14 (36) | | |
| Assistant professor | 10 (26) | 3 (14) | 6 (15) | | |
| Other | 4 (10) | 4 (19) | 5 (13) | | |

LR01, longitudinal R01 grant; NS, not significant; R01, NIH Research Project Grant Program; SR01, stacked R01 grant; TTURC, Transdisciplinary Tobacco Use Research Center

All three groups showed the same pattern of results for type of study and number of additional grants at the start of the award. Across the groups, the order of frequency for type of study was clinical studies (the most common type, at 38%–64%), followed by laboratory/basic animal studies (21%–31%), epidemiology/surveillance studies (15%–28%), and policy research (0%–9%). The majority of PIs in all three groups had one or more additional funded grants at the time of the TTURC (75%) or R01 award (LR01, 81%; SR01, 67%). In all three groups, the most common category was one or two additional grants, followed by no additional grants (tied with three or four grants in the LR01 group).

The PI's academic rank at the time of the award varied somewhat across groups, although these differences were not statistically significant. In all three groups, Professor was the most common rank (36%–54%), although in the SR01 group it was tied with Associate Professor (36% each). In the TTURC group, the next most common rank was Assistant Professor (26%), whereas in the two R01 groups it was Associate Professor (LR01, 19%; SR01, 36%).
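The χ² values in Table 1 can be checked from the cell counts with a Pearson test of independence. The Python sketch below is a minimal re-computation, not the authors' analysis code; the helper names are hypothetical, and the closed-form p-value used here holds only for even degrees of freedom (each covariate here has (4 − 1) × (3 − 1) = 6). Several cells have expected counts below 5, so the χ² approximation is rough for these tables. Run on the published counts, the statistics come out within about 0.01 of the reported 9.11, 2.45, and 9.51, all with p above 0.05.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form is valid only for even df."""
    if df % 2:
        raise ValueError("closed form requires an even number of degrees of freedom")
    half = x / 2
    term, total = 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)^i / i! incrementally
        total += term
    return math.exp(-half) * total


# Counts from Table 1; columns are TTURC, LR01, SR01.
study_type = [[1, 2, 0], [15, 10, 25], [12, 5, 8], [11, 4, 6]]
extra_grants = [[10, 4, 13], [19, 12, 18], [7, 4, 7], [3, 1, 1]]
pi_rank = [[21, 10, 14], [4, 4, 14], [10, 3, 6], [4, 4, 5]]

for name, table in [("study type", study_type),
                    ("additional grants", extra_grants),
                    ("PI rank", pi_rank)]:
    stat, df = chi_square_independence(table)
    print(f"{name}: chi2={stat:.2f}, df={df}, p={chi2_sf_even_df(stat, df):.2f}")
```

A library routine such as SciPy's contingency-table test would give the same statistic; the stdlib version is used here only to keep the sketch dependency-free.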

Differences in Scientific Productivity, Collaboration, and Impact

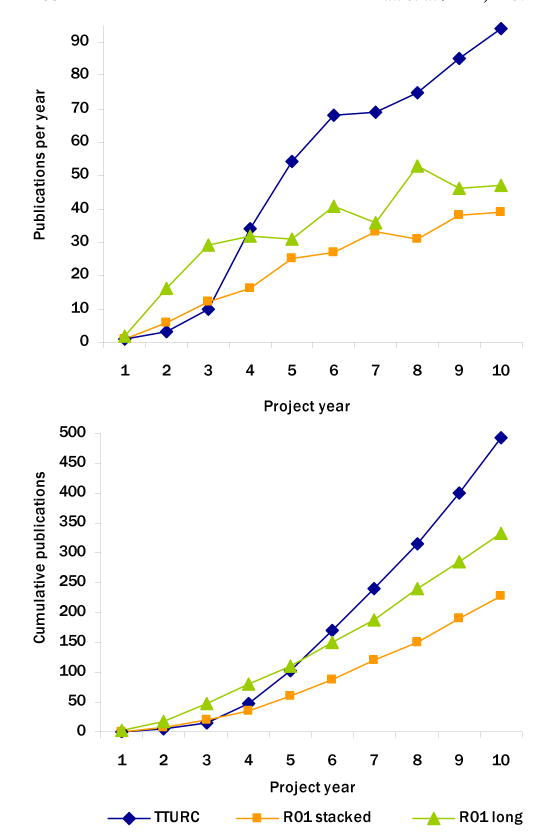

The top half of Figure 1 shows the total number of publications per year for each group across the 10 years of TTURC funding. In Year 2, the LR01 group produced more publications (n=28) than the TTURC (n=6) or SR01 (n=9) group. By Year 3, however, the TTURC group was producing more publications (n=31) than both comparison groups (LR01: n=21; SR01: n=15), and this productivity advantage grew over the remaining project years. An analysis of cumulative publications for each group, by project year, shows that in the earlier project years the LR01 group produced more publications than both the TTURC and SR01 groups (Figure 1, bottom). However, by Year 3 the TTURC group (n=39) had out-produced the SR01 group (n=28), and by Year 5 the TTURC group (n=161) had out-produced the LR01 group (n=128). By Year 10, the TTURC group (n=579) had out-produced the SR01 group (n=251) by more than 100% and the LR01 group (n=359) by approximately 60%.
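The Year-10 comparisons can be reproduced from the cumulative counts. The snippet below expresses each gap relative to the comparison group's own total, which is the base implied by saying one group out-produced another by a given percentage; the helper name `percent_more` is illustrative.

```python
# Cumulative publications by Project Year 10, as reported in the text.
cumulative_year10 = {"TTURC": 579, "LR01": 359, "SR01": 251}


def percent_more(a: int, b: int) -> float:
    """Return how much larger a is than b, as a percentage of b."""
    return 100 * (a - b) / b


for group in ("LR01", "SR01"):
    pct = percent_more(cumulative_year10["TTURC"], cumulative_year10[group])
    print(f"TTURC vs {group}: {pct:.0f}% more publications")
# Prints:
# TTURC vs LR01: 61% more publications
# TTURC vs SR01: 131% more publications
```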

Figure 1.

Annual and cumulative numbers of publications across comparison groups

Long, longitudinal; R01, NIH Research Project Grant Program; TTURC, Transdisciplinary Tobacco Use Research Center

Average number of coauthors per publication and average journal impact factor per publication were assessed as indicators of collaboration and scientific impact, respectively. With the exceptions of Years 1 and 10, the TTURC group had higher average numbers of coauthors per publication (M=6.04, SD=3.44) than both the LR01 (M=4.02, SD=2.48) and SR01 (M=4.94, SD=2.70) groups. These differences were significant (TTURC vs LR01: t=9.62, p<0.0001, df=936; TTURC vs SR01: t=4.48, p<0.0001, df=828). Average journal impact factor was slightly higher in the SR01 and LR01 groups in the first 2 project years. However, when averaged across the full 10 years, there were no significant differences in average journal impact factor among the TTURC (M=3.82, SD=3.28), LR01 (M=3.78, SD=3.53), and SR01 (M=4.10, SD=2.64) groups (Appendixes B and C, available online at www.ajpmonline.org).
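The reported t statistics can be approximately recovered from the summary statistics with Student's pooled-variance two-sample t-test. This Python sketch assumes each publication is one observation, an assumption consistent with the reported degrees of freedom (936 = 579 + 359 − 2 and 828 = 579 + 251 − 2, using the Year-10 cumulative publication counts). Because the means and SDs are rounded to two decimals, the recomputed values (about 9.67 and 4.50) differ slightly from the published 9.62 and 4.48. The function name is hypothetical.

```python
import math


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's two-sample t statistic (equal variances) from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# (mean coauthors, SD, n publications); n is assumed to be the Year-10 cumulative count.
tturc = (6.04, 3.44, 579)
lr01 = (4.02, 2.48, 359)
sr01 = (4.94, 2.70, 251)

for name, other in [("LR01", lr01), ("SR01", sr01)]:
    t, df = pooled_t_from_summary(*tturc, *other)
    print(f"TTURC vs {name}: t={t:.2f}, df={df}")
```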

Within- and Between-Group Differences in Productivity Among Transdisciplinary Tobacco Use Research Center Subprojects and R01 Grants

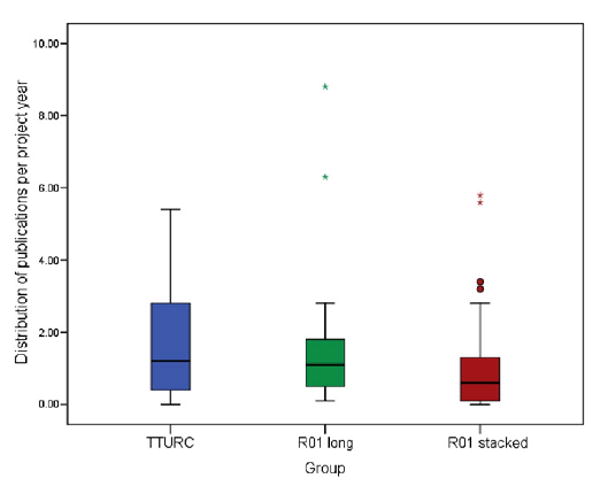

Analyses comparing the productivity of individual TTURC subprojects with R01 grants found that, on average, the TTURC subprojects produced slightly fewer publications per project year than the LR01 grants (a difference of 0.04) and more than the SR01 grants (a difference of 0.65) (Figure 2). The mean number of yearly publications across the three groups was 1.42 (TTURC: M=1.66; LR01: M=1.70; SR01: M=1.01). Approximately 38.5% of the TTURC subprojects produced more publications than the across-group mean, compared with 38.1% of the LR01 grants and 23.1% of the SR01 grants. This difference was not significant.
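The nonsignificant difference in the share of grants above the across-group mean can be checked with a 2 × 3 chi-square test. The counts in this Python sketch (15 of 39, 8 of 21, and 9 of 39) are back-calculated from the reported percentages and are therefore an assumption; with 2 degrees of freedom, the upper-tail probability has the exact form exp(−χ²/2).

```python
import math

# Assumed counts, back-calculated from the reported percentages:
# 38.5% of 39 TTURC subprojects, 38.1% of 21 LR01 grants, 23.1% of 39 SR01 grants.
group_sizes = [39, 21, 39]  # TTURC, LR01, SR01
above_mean = [15, 8, 9]     # grants above the across-group mean
not_above = [n - a for n, a in zip(group_sizes, above_mean)]
table = [above_mean, not_above]

row_totals = [sum(row) for row in table]
col_totals = [sum(col) for col in zip(*table)]
grand_total = sum(row_totals)

chi2 = 0.0
for i, row in enumerate(table):
    for j, observed in enumerate(row):
        expected = row_totals[i] * col_totals[j] / grand_total
        chi2 += (observed - expected) ** 2 / expected

df = (len(table) - 1) * (len(table[0]) - 1)  # (2 - 1) * (3 - 1) = 2
p = math.exp(-chi2 / 2)                      # exact chi-square upper tail when df == 2
print(f"chi2={chi2:.2f}, df={df}, p={p:.2f}")  # prints: chi2=2.52, df=2, p=0.28
```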

Figure 2.

Average number of yearly publications by TTURC subprojects and R01 grants

Note: The top of the box represents the 75th percentile for that group whereas the bottom of the box represents the 25th percentile for that group. The black line across the center of the box represents the median number of publications for that group (TTURC=1.2; LR01=1.1; SR01=0.6). The whiskers represent the highest and lowest values within the group that are not outliers. The circles represent high-performing outliers with the average number of publications per year falling between 1.5 and 3 IQR (interquartile range) units above the 75th percentile in their group. The asterisks represent extremely high-performing outliers with the average number of publications per year falling more than 3 IQR units above the 75th percentile in their group.

Long, longitudinal; R01, NIH Research Project Grant Program; TTURC, Transdisciplinary Tobacco Use Research Center

Low-performing outliers were defined as those grants that produced zero publications across their funding period. They included one TTURC subproject (2%) and 10 SR01 grants (25%). High-performing outliers were defined as subprojects or grants with publication rates between 1.5 and 3 interquartile range (IQR) units above the 75th percentile of their group. They included two SR01 grants with 3.2 and 3.4 average publications per year (represented by the circles in Figure 2). Extremely high-performing outliers were defined as subprojects or grants with publication rates more than 3 IQR units above the 75th percentile of their group. They included two LR01 grants with 8.8 and 6.3 average publications per year, and two SR01 grants with 5.8 and 5.6 average publications per year (represented by the asterisks in Figure 2).
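The outlier definitions above amount to Tukey-style fences on each group's distribution of average yearly publication rates, plus a separate zero-publication rule. The Python sketch below applies them to hypothetical rates, not study data. The quartile method is an assumption: `statistics.quantiles(..., method="inclusive")` (Python 3.8+) is one common convention, and boxplot software may compute quartiles slightly differently, which can move grants that sit near a fence.

```python
import statistics


def classify_grants(rates):
    """Label each grant's average yearly publication rate using IQR fences.

    Mirrors the definitions in the text: zero publications -> "low";
    1.5 to 3 IQR above Q3 -> "high"; more than 3 IQR above Q3 -> "extreme".
    The quartile convention ("inclusive") is an assumption.
    """
    q1, _, q3 = statistics.quantiles(rates, n=4, method="inclusive")
    iqr = q3 - q1
    labels = []
    for rate in rates:
        if rate == 0:
            labels.append("low")
        elif iqr > 0 and rate > q3 + 3 * iqr:
            labels.append("extreme")
        elif iqr > 0 and rate >= q3 + 1.5 * iqr:
            labels.append("high")
        else:
            labels.append("typical")
    return labels


# Hypothetical rates for nine grants (illustrative only, not study data).
rates = [0.0, 0.5, 1.0, 1.2, 1.4, 1.6, 2.0, 3.6, 6.0]
print(list(zip(rates, classify_grants(rates))))
# Here Q1 = 1.0 and Q3 = 2.0, so 0.0 -> low, 3.6 -> high, 6.0 -> extreme; the rest are typical.
```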

Discussion

This study demonstrated how a longitudinal quasi-experimental design, incorporating comparison groups and bibliometric indicators, can be used to evaluate the comparative outcomes of center-based and individual-investigator funding mechanisms for scientific productivity, collaboration, and impact. Analyses revealed differences in number and timing of publications, as well as coauthorship patterns, between NIH-funded transdisciplinary center grants and investigator-initiated research grants in the same field, suggesting that despite an initial lag in productivity, the transdisciplinary center grant-funding mechanism afforded overall advantages for productivity and collaboration.

This observed lag in productivity may reflect circumstances that required substantial investments of start-up time among center grants, which are typically absent in investigator-initiated projects. These include establishing the specific infrastructure required by the TTURC initiative, such as centerwide training programs and administrative cores, and mobilizing the organizational resources, processes, and policies needed to support collaborations among large teams of researchers both within and across funded centers. Examples include institutional support structures to facilitate communication, data sharing, and collaborative analyses, and cross-institutional collaboration policies.30 Moreover, this lag may reflect the fact that the TTURCs included more junior investigators than did the two R01 groups. The presence of more junior investigators among the TTURCs also makes the overall productivity advantage of the TTURCs more striking.

Additional start-up processes that may delay publications in a transdisciplinary context include the need to develop collaborative strategies, including articulating shared goals, developing shared language for discussing scientific objectives and methods, and integrating research questions and methodologic approaches from diverse fields in efforts to advance the science. Previously published data gathered during the first 3 years of the TTURC initiative support this hypothesis: that evaluation documented challenges in the centers related to conflict resolution, meeting productivity, communication, project initiation, personnel turnover, and associated time burdens, which highlights potential causes of productivity lags.11,25

The differences in average number of coauthors per publication between the TTURCs and R01 grants also may reflect unique features of the TTURC initiative. The center structure, center-level training opportunities, shared cores, and grantee meetings produced opportunities to create connections within and across centers, whereas funding agency expectations for transdisciplinary science likely encouraged collaboration within and across TTURC centers.

The lack of significant between-group differences in average journal impact factor may reflect effective sampling: the comparison groups' research foci were so similar to those of the TTURCs that findings from all three groups were published in the same set of journals. This pattern also may reflect features of the tobacco field.31 Specifically, the existence of a well-established set of journals devoted to tobacco-related research reduces the potential variability in impact factors for publications related to tobacco. Yet, other research suggests that collaboration may enhance scientific impact as measured by citation rates.32 Given the limitations of journal impact factors as measures of scientific impact, future research would benefit from additional methods for evaluating scientific productivity and influence.33 Expert panels and science mapping techniques, including maps of citation patterns and diffusion of key concepts, are alternative methods that could be used to assess the relative impact of center grants and investigator-initiated grants.

Three notable patterns emerged from the subproject-level analyses: (1) the TTURC subprojects had more consistent annual publication rates than R01 grants; (2) average annual productivity in both R01 groups was influenced heavily by high-performing outliers; and (3) ten 5-year R01 grants produced zero publications during the study period. Plausible explanations for more-consistent annual publication rates among the TTURC subprojects include (1) the additional levels of expectations, oversight, and visibility created by the center structure; (2) the requirement to present research progress and findings at semi-annual grantee meetings; (3) a formal midcourse review by the funding agency; and (4) site visits by funding agency program staff and advisory committee members. The average number of annual publications in the LR01 group decreased from 1.70 to 1.09 when two extremely high-performing R01 grants with the same PI were removed from the sample. An important direction for future research is to identify investigator-level and institutional-level factors that account for variations in productivity among grants, especially R01s.
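The effect of the two extreme LR01 outliers on the group mean can be approximated from the reported summary figures alone. This sketch assumes the LR01 mean of 1.70 is taken over all 21 grants and that the two removed grants are the extreme outliers reported at 8.8 and 6.3 publications per year; the result, about 1.08, matches the reported 1.09 up to rounding of the published mean.

```python
# Reported LR01 summary: 21 grants with a mean of 1.70 publications per year.
n_grants = 21
mean_rate = 1.70
# Assumed to be the two removed grants: the extreme outliers reported for LR01.
removed = [8.8, 6.3]

implied_total = n_grants * mean_rate  # sum of the 21 grant-level rates
trimmed_mean = (implied_total - sum(removed)) / (n_grants - len(removed))
print(f"{trimmed_mean:.2f}")  # prints: 1.08
```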

As noted earlier, 25% (n=10) of the SR01 group grants, all 5-year R01s, produced zero publications over the study period, whereas this was the case for only one TTURC subproject and for no LR01s. There are several possible explanations for this pattern. First, like the TTURCs, the LR01 group grants may have been supported by infrastructure and resources that were established over 10 years of consecutive funding. For instance, the TTURC infrastructure (e.g., dedicated face-to-face cross-center meetings, administrative cores) likely increased the coordination mechanisms used to facilitate collaboration, which may have led to a greater number of papers.34,35 This hypothesis also is supported by the fact that the LR01s outpaced the SR01s in Project Years 6 through 10 (Figure 1).

Another possible explanation is that peer reviewers tend to score renewal applications higher when there is evidence of productivity (e.g., publications) during the first 5 years of the project. The LR01 group may therefore include grants that had already demonstrated high productivity. This hypothesis is supported by the fact that the LR01 group outpaced the SR01 group (composed primarily of 5-year R01s) in cumulative publications through Project Year 5. Competing renewals have been shown to produce more papers than newly funded research.36 Multiple methods to gauge scientific productivity would help offset limitations inherent in these bibliometric assessments, including their tendency to under-represent productivity when investigators neglect to cite their grant numbers, resulting in the omission of relevant publications from MEDLINE and other automated databases. It will be important for future research to capture additional forms of productivity that are not reflected in publication counts. In addition, mixed-method approaches to measurement and evaluation are needed.

These findings are relevant to the design of future team science grants, including but not limited to center grants, as well as R01 grants. Funding agencies may be able to enhance support for collaboration in future team science grant initiatives by including requirements for collaboration, along with guidelines and technical assistance for implementing best practices. They also could provide initiative-level infrastructure to support collaboration within and across funded groups, such as a coordinating center like the one in the NCI-supported Transdisciplinary Research on Energetics and Cancer (TREC) center initiative.37

Additional resources that promote effective collaboration for investigators funded either through center grants or mechanisms that support investigator-initiated research include web-based portals where investigators can access information about best practices in team science,30,38,39 and cyber-infrastructures that enable cross-disciplinary networking (e.g., Research Networking Tools and Expertise Profiling Systems) and cross-project data sharing and analyses.10,31,40

Evaluation of alternative funding durations and grant mechanisms is critically important as a basis for enhancing scientific and societal returns on future research investments. The cumulative scientific impact of particular grant initiatives can take decades to emerge. Yet, the present study demonstrates how bibliometric analyses can be used as an interim evaluation strategy for comparing alternative funding mechanisms on a variety of outcome measures. Advances in methods to evaluate the merits of different funding strategies will help to build the evidence base for crafting future funding mechanisms that maximize returns on research investments and ultimately accelerate progress toward the scientific and societal goals those investments are meant to serve.

Supplementary Material

Acknowledgments

We thank James Corrigan (NCI), Lawrence Solomon (NCI), Joshua Schnell (Discovery Logic Inc.), and Laurel Haak (Discovery Logic Inc.) for their assistance and helpful comments in the preparation of this manuscript. This work was supported by contract number HHSN-276-2007-00235U. This project was funded, in whole or in part, with federal funds from the National Cancer Institute, NIH, under contract no. HHSN261200800001E. The content of this publication does not necessarily reflect the views or policies of the DHHS, nor does mention of trade names, commercial products, or organizations imply endorsement by the U.S. Government.

Footnotes

No financial disclosures were reported by the authors of this paper.

Stephen Marcus was employed at the National Cancer Institute when this research was completed.

Appendix Supplementary data: Supplementary data associated with this article can be found, in the online version, at doi:10.1016/j.amepre.2011.10.011.

Contributor Information

Kara L. Hall, The Division of Cancer Control and Population Sciences, National Cancer Institute.

Daniel Stokols, The School of Social Ecology, University of California, Irvine, Irvine, California.

Brooke A. Stipelman, The Division of Cancer Control and Population Sciences, National Cancer Institute.

Amanda L. Vogel, Clinical Research Directorate/CMRP, SAIC-Frederick, Inc., NCI-Frederick, Frederick, Maryland.

Annie Feng, Feng Consulting, Livingston, New Jersey.

Beth Masimore, Discovery Logic, Rockville, Maryland.

Glen Morgan, The Division of Cancer Control and Population Sciences, National Cancer Institute.

Richard P. Moser, The Division of Cancer Control and Population Sciences, National Cancer Institute.

Stephen E. Marcus, The Center for Bioinformatics and Computational Biology, National Institute of General Medical Sciences, NIH, Bethesda, SAIC-Frederick, Inc., NCI-Frederick, Frederick, Maryland.

David Berrigan, The Division of Cancer Control and Population Sciences, National Cancer Institute.

References

- 1. Croyle RT. The National Cancer Institute's transdisciplinary centers and the need for building a science of team science. Am J Prev Med. 2008;35(2S):S90–S93. doi: 10.1016/j.amepre.2008.05.012.

- 2. Adler NE, Stewart J. Using team science to address health disparities: MacArthur network as case example. Ann NY Acad Sci. 2010;1186:252–60. doi: 10.1111/j.1749-6632.2009.05335.x.

- 3. NAS. Facilitating interdisciplinary research. Washington DC: The National Academy of Sciences, National Academies Press; 2005.

- 4. Wuchty S, Jones BF, Uzzi B. The increasing dominance of teams in production of knowledge. Science. 2007;316:1036–8. doi: 10.1126/science.1136099.

- 5. NCRR. Clinical and translational science awards. 2010. ctsaweb.org/

- 6. Esparza J, Yamada T. The discovery value of “Big Science”. J Exp Med. 2007;204:701–4. doi: 10.1084/jem.20070073.

- 7. NAS. The NAS-Keck initiative to transform interdisciplinary research. www.keckfutures.org.

- 8. Robert Wood Johnson Foundation. Active living research. www.activelivingresearch.org/

- 9. NCI. Science of team science. cancer.gov/brp/scienceteam/index.html.

- 10. Olson GM, Zimmerman A, Bos N, editors. Scientific collaboration on the Internet. Cambridge MA: MIT Press; 2008.

- 11. Trochim WM, Marcus S, Masse LC, Moser R, Weld P. The evaluation of large research initiatives: a participatory integrated mixed-methods approach. Am J Eval. 2008;29:8–28.

- 12. Borner K, Contractor N, Falk-Krzesinski HJ, et al. A multi-level perspective for the science of team science. Sci Transl Med. 2010;2(49):49cm24. doi: 10.1126/scitranslmed.3001399.

- 13. Crow MM. Organizing teaching and research to address the grand challenges of sustainable development. BioScience. 2010;60(7):488–9.

- 14. NIH. BECON 2003 symposium on catalyzing team science (day 1). videocast.nih.gov/launch.asp?9924.

- 15. NIH. BECON 2003 symposium on catalyzing team science (day 2). videocast.nih.gov/launch.asp?9925.

- 16. NRC. Interdisciplinary research: promoting collaboration between the life sciences and medicine and the physical sciences and engineering. Washington DC: IOM, National Academy Press; 1990.

- 17. Kessel FS, Rosenfield PL, Anderson NB, editors. Interdisciplinary research: case studies from health and social science. New York: Oxford University Press; 2008.

- 18. Rosenfield PL. The potential of transdisciplinary research for sustaining and extending linkages between the health and social sciences. Soc Sci Med. 1992;35:1343–57. doi: 10.1016/0277-9536(92)90038-r.

- 19. Stokols D, Hall KL, Moser RP, Feng A, Misra S, Taylor BK. Evaluating cross-disciplinary team science initiatives: conceptual, methodological, and translational perspectives. In: Frodeman R, Klein JT, Mitcham C, editors. The Oxford handbook on interdisciplinarity. New York: Oxford University Press; 2010. pp. 471–93.

- 20. National Cancer Institute. Transdisciplinary tobacco use research. centers.dccps.nci.nih.gov/tcrb/tturc/

- 21. NCI. Transdisciplinary research on energetics and cancer. www.compass.fhcrc.org/trec/

- 22. NCI. Centers for population health and health disparities. cancercontrol.cancer.gov/populationhealthcenters/

- 23. NCI. Health communication and informatics research: NCI centers of excellence in cancer communications research. www.cancercontrol.cancer.gov/hcirb/ceccr/ceccr-index.html.

- 24. Mâsse LC, Moser RP, Stokols D, et al. Measuring collaboration and transdisciplinary integration in team science. Am J Prev Med. 2008;35(2S):S151–S160. doi: 10.1016/j.amepre.2008.05.020.

- 25. Stokols D, Fuqua J, Gress J, et al. Evaluating transdisciplinary science. Nicotine Tob Res. 2003;5(S1):S21–S39. doi: 10.1080/14622200310001625555.

- 26. Turkkan JS, Kaufman NJ, Rimer BK. Transdisciplinary tobacco use research centers: a model collaboration between public and private sectors. Nicotine Tob Res. 2000;2:9–13. doi: 10.1080/14622200050011259.

- 27. Abrams DB, Leslie FM, Mermelstein R, Kobus K, Clayton RR. Transdisciplinary tobacco use research. Nicotine Tob Res. 2003;5(S1):S5–S10. doi: 10.1080/14622200310001625519.

- 28. Cook TD, Campbell DT. Quasi-experimentation: design and analysis issues for field settings. Chicago IL: Rand McNally College Publishing; 1979.

- 29. Thomson Reuters. Journal citation reports. thomsonreuters.com/products_services/science/science_products/a-z/journal_citation_reports/

- 30. NIH. Collaboration and team science. ccrod.cancer.gov/confluence/display/NIHOMBUD/Home.

- 31. Hays T. The science of team science: commentary on measurements of scientific readiness. Am J Prev Med. 2008;35(2S):S193–S195. doi: 10.1016/j.amepre.2008.05.016.

- 32. Gazni A, Didegah F. Investigating different types of research collaboration and citation impact: a case study of Harvard University's publications. Scientometrics. 2011;87(2):251–65.

- 33. Vanclay JK. Bias in the journal impact factor. Scientometrics. 2008;78(1):3–12.

- 34. Cummings JN, Kiesler S. Coordination costs and project outcomes in multi-university collaborations. Res Policy. 2007;36(10):1620–34.

- 35. Cummings JN, Kiesler S. Who collaborates successfully? Prior experience reduces collaboration barriers in distributed interdisciplinary research. Proceedings of the ACM 2008 Conference on Computer Supported Cooperative Work; 2008; San Diego CA. pp. 437–46.

- 36. Druss B, Marcus S. Tracking publication outcomes of National Institutes of Health grants. Am J Med. 2005;118(6):658–63. doi: 10.1016/j.amjmed.2005.02.015.

- 37. Hall KL, Stokols D, Moser RP, et al. The collaboration readiness of transdisciplinary research teams and centers: findings from the National Cancer Institute's TREC Year-One evaluation study. Am J Prev Med. 2008;35(2S):S161–S172. doi: 10.1016/j.amepre.2008.03.035.

- 38. NCI. Team science toolkit. www.teamsciencetoolkit.cancer.gov/public/home.aspx?js=1.

- 39. COALESCE. CTSA online assistance for leveraging the science of collaborative effort (COALESCE). Department of Preventive Medicine, Feinberg School of Medicine, Northwestern University. www.preventivemedicine.northwestern.edu/researchprojects/coalesce.htm.

- 40. Carusi A, Reimer T. Virtual research environment collaborative landscape study. Funded by the UK Joint Information Systems Committee (JISC). London, UK: e-Research Centre, University of Oxford and Centre for e-Research, King's College London; 2010.
