Abstract

Practice in most sensory tasks substantially improves perceptual performance. A hallmark of this ‘perceptual learning’ is its specificity for the basic attributes of the trained stimulus and task. Recent studies have challenged the specificity of learned improvements, although transfer between substantially different tasks has yet to be demonstrated. Here, we measure the degree of transfer between three distinct perceptual tasks. Participants trained on an orientation discrimination, a curvature discrimination, or a ‘global form’ task, all using stimuli composed of multiple oriented elements. Before and after training they were tested on all three tasks and on a contrast discrimination control task. A clear transfer of learning was observed, in a pattern predicted by the relative complexity of the stimuli in the training and test tasks. Our results suggest that sensory improvements derived from perceptual learning can transfer between very different visual tasks.

Keywords: perceptual learning, transfer, specificity, visual form processing

Introduction

Practice considerably enhances our ability to detect, discriminate or identify visual stimuli. Long-term perceptual improvements have been reported in a wide range of visual attributes, from low-level orientation or positional discrimination tasks (e.g., Fahle, 1997; Poggio, Fahle, & Edelman, 1992; Vogels & Orban, 1985) to high-level face or object recognition tasks (e.g., Furmanski & Engel, 2000; Gauthier & Tarr, 1997; Gold, Bennett, & Sekuler, 1999). A hallmark of this perceptual learning is that the improvements are strongly coupled to the trained stimulus and task, a characteristic that could limit its utility as a therapeutic tool. Early studies of transfer of learning found it to be highly specific for retinal position (Karni & Sagi, 1991; Poggio et al., 1992), orientation (Fahle, 1997; Fahle & Edelman, 1993; Vogels & Orban, 1985) and trained eye (Karni & Sagi, 1991), with no transfer between similar tasks (Fahle, 1997; Fahle & Morgan, 1996). Given that early visual neurons with small receptive fields tend to show a larger degree of specificity for such features, low-level neural substrates were suspected to play a key role in perceptual learning, a theory supported by electrophysiological findings from monkey V1 (Crist, Li, & Gilbert, 2001; Schoups, Vogels, Qian, & Orban, 2001).

Recent studies have questioned whether the modest changes seen in early visual neurons are sufficient to account for the large behavioral improvements observed in psychophysical experiments (e.g., Ghose, Yang, & Maunsell, 2002). Other possible mechanisms include reweighting frameworks (e.g., Dosher & Lu, 1998, 1999; Law & Gold, 2008; Mollon & Danilova, 1996; Petrov, Dosher, & Lu, 2005; Sotiropoulos, Seitz, & Series, 2011), in which learning optimizes the “read-out” by enhancing connections from the most informative neurons for a given task, and frameworks that involve distributed plasticity across multiple levels of cortical analysis (e.g., Ahissar & Hochstein, 1997, 2004; Kourtzi & DiCarlo, 2006). As more studies emerge detailing situations in which transfer of perceptual learning can occur, these theories appear to have an advantage over early processing interpretations, by not ruling out the possibility of transfer.

If the often-observed specificity of perceptual learning is not due to anatomical constraints, the question remains: what controls whether perceptual learning is specific or transfers? According to one popular theory (Ahissar & Hochstein, 1997, 2004), learning is a top-down process, which begins at the top of the cortical hierarchy and gradually progresses “downstream” to recruit the most informative neurons to encode the stimulus. For coarse or “easy” discriminations, stimuli are processed at high cortical levels where neurons are broadly tuned for orientation and retinal position, leading to a high degree of transfer across these dimensions. In the case of difficult or “fine” discriminations, however, learning occurs at low levels of the hierarchy where neurons have a better signal-to-noise ratio but are also more tightly tuned for orientation and retinal position. Extending this work, Jeter and colleagues demonstrated that task precision, rather than task difficulty, determined the level of transfer in perceptual learning (Jeter, Dosher, Petrov, & Lu, 2009). Specifically, they showed that improvements are specific to the trained conditions for high, but not low precision transfer tasks. Other studies have shown that the length of training has an impact on the degree of generalization. For instance, in visual perceptual learning, transfer is more likely to occur with few training sessions (Jeter, Dosher, Liu, & Lu, 2010; but see Wright, Wilson & Sabin, 2010 for differences in auditory perceptual learning) and when those sessions have few trials (Aberg, Tartaglia, & Herzog, 2009), and it has been argued that this pattern of transfer may arise through the dynamics of long-term potentiation (Aberg & Herzog, 2012).

Here, we investigated whether the type of visual task may be a determinant in the specificity or generalization of perceptual learning. We examined the degree of transfer between three related tasks: orientation discrimination, curvature discrimination, and a “global form” coherence task. All three tasks require the participant to identify the orientation of the elements. In addition, the curvature task requires the subject to identify the change in orientation across space, while the “global form” stimuli represent a change in curvature across space. Since the curvature and global form tasks rely on the resolution of orientation cues, an improvement in orientation discrimination might have a beneficial, knock-on effect on the other tasks. Similarly, training on the curvature discrimination task could aid performance on the global form task. The reverse conditions are less clear: it is uncertain whether training on curvature discrimination, for instance, would improve performance on orientation discrimination.

In a similar study, Fahle (1997) failed to find any transfer of perceptual improvements between orientation, curvature and vernier discrimination tasks. Fahle viewed this result as evidence that the tasks used in these experiments were tapping different, unrelated sets of neurons to make their judgments. The stimuli used in his study were simple lines, however. In order to maximize the possibility of transfer, the current experiment used a flexible array of oriented Gabor elements, which could be arranged into different configurations. These arrays cover a larger area of the visual field, recruiting more neural resources. As a result, all tasks used similar low-level neural inputs but required very different judgments to be made.

Observers in all groups demonstrated substantial learning on their trained task. Furthermore, a clear transfer of learning between tasks was observed, in a pattern predicted by the relative complexity of the training-test pairing. That is, those trained on orientation discrimination transferred most to the curvature task, and less to global form, while those trained on global form transferred most to curvature and less to the orientation discrimination task. These results demonstrate that perceptual learning can transfer from one task to another provided there is an overlap in part of their processing and that the complexity of the training and test tasks is a contributing factor to transfer.

Methods

Participants

Twenty-four participants with normal or corrected-to-normal vision took part in the experiment. All participants were naïve to the purposes of the experiment and were aged between 18 and 30 years.

Stimuli

Stimuli consisted of different sets of Gabor arrays (Figure 1), which could take one of three different forms (see Procedure) and were generated using the open-source package PsychoPy (Peirce, 2007). The arrays were presented on a 19-inch LCD monitor (Belinea, Linz, Germany 101,920) at a resolution of 1024 × 768 pixels and a refresh rate of 60 Hz. Each array consisted of 200 Gabor elements randomly positioned within a circular field that measured 8 degrees in diameter. Each Gabor element within the array had a Michelson contrast of 0.9, a spatial frequency of 6 c/° and was presented in a Gaussian envelope with a standard deviation of 7 arc minutes of visual angle (diameter = 42 arc minutes where contrast fell below 1%). Each stimulus array was presented for 200 ms, separated by a 200-ms inter-stimulus interval containing a blank screen of mean luminance and a fixation point. Observers viewed the display at a fixed distance of 57 cm and their heads were held in a fixed position with a chin-rest.
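The element parameters above can be checked with a short sketch. The following is a minimal illustration in plain Python, not the PsychoPy code used in the experiment. The function names, the carrier phase, and the orientation convention are assumptions introduced here; only the contrast, spatial frequency, and envelope standard deviation come from the text.

```python
import math

ARCMIN_PER_DEG = 60.0

def envelope_at(radius_arcmin, sigma_arcmin=7.0):
    """Gaussian envelope value (relative to its peak) at a given radius."""
    return math.exp(-radius_arcmin ** 2 / (2.0 * sigma_arcmin ** 2))

def gabor_modulation(x_arcmin, y_arcmin, orientation_deg,
                     contrast=0.9, sf_cpd=6.0, sigma_arcmin=7.0, phase=0.0):
    """Signed luminance modulation of one Gabor, as a fraction of mean luminance.

    Assumed convention (hypothetical): orientation_deg sets the direction
    along which the sinusoidal carrier varies; phase defaults to cosine.
    """
    theta = math.radians(orientation_deg)
    # Position along the carrier's modulation axis, converted to degrees
    u_deg = (x_arcmin * math.cos(theta) + y_arcmin * math.sin(theta)) / ARCMIN_PER_DEG
    carrier = math.cos(2.0 * math.pi * sf_cpd * u_deg + phase)
    radius = math.hypot(x_arcmin, y_arcmin)
    return contrast * carrier * envelope_at(radius, sigma_arcmin)

# Peak contrast remaining at a radius of 21 arc minutes (half the 42' diameter)
residual = 0.9 * envelope_at(21.0)
```

Under these assumptions, `residual` is the largest modulation possible at the edge of the stated 42 arc minute diameter, which is three envelope standard deviations from the center.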

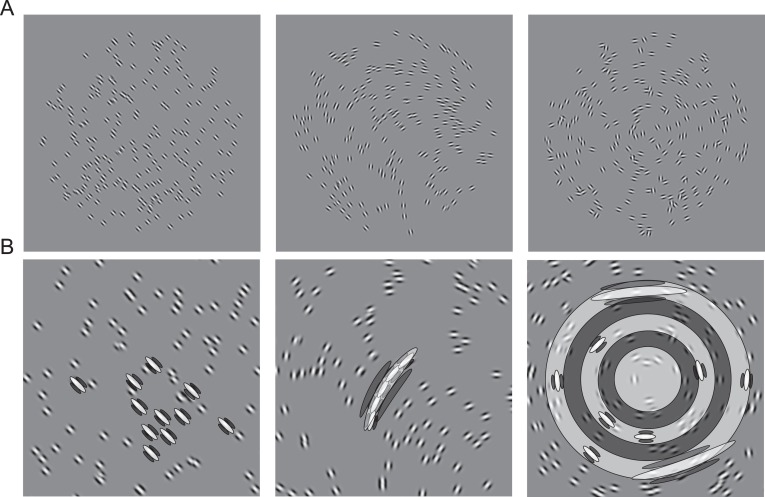

Figure 1.

(A) Example stimuli for perceptual learning. Participants discriminated between two arrays of tilted Gabor elements that differed in their mean orientation (left), mean curvature (middle) or global form (right). (B) Example of the filters that might be used to resolve the task at hand. In some cases different filters may overlap. For instance, in the curvature task the elongated curvature filter overlaps many small orientation filters. Equally, orientation and curvature filters overlap with filters responsible for detecting global form.

Procedure

On the first day of the experiment, observers' thresholds were measured on all three experimental tasks (orientation, curvature and global form) using a staircase procedure. Initially, the difficulty of the task varied via a one-down, one-up staircase, until the first incorrect response, at which point it switched to a three-down, one-up staircase, which tracked an accuracy level of 79.4%. Staircases terminated after 100 trials and thresholds were calculated as the mean of the last eight reversals. Thresholds were also measured on an unrelated contrast discrimination task in order to estimate the amount of procedural learning obtained over the training period. All tasks used a two-interval forced-choice (2IFC) procedure; observers were required to judge which of two sequentially presented arrays was the target stimulus, as defined by each task. The spatial location of each Gabor element in the array was newly generated on each interval to avoid unintended motion signals, and participants did not perceive the stimulus as moving. All tasks were performed in a random order in the pretest, with a newly generated order for the posttest.
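The staircase logic described above can be sketched as follows. This is an illustrative reconstruction, not the authors' implementation: the class name, the multiplicative step rule, and the `step_factor` value are assumptions, since the text does not state the step size. The convergence point of an n-down, one-up rule is the accuracy p at which p^n = 0.5.

```python
class Staircase:
    """One-down/one-up until the first error, then three-down/one-up."""

    def __init__(self, start, step_factor=1.25, n_trials=100):
        self.level = start
        self.step_factor = step_factor  # assumed; step size is not given in the text
        self.n_trials = n_trials
        self.n_down = 1                 # switches to 3 after the first incorrect response
        self.correct_run = 0
        self.last_direction = None
        self.reversals = []             # stimulus levels at which direction changed
        self.trial = 0

    @property
    def finished(self):
        return self.trial >= self.n_trials

    def update(self, correct):
        """Register one response and adjust the stimulus level."""
        self.trial += 1
        direction = None
        if correct:
            self.correct_run += 1
            if self.correct_run >= self.n_down:
                direction = "down"
                self.correct_run = 0
        else:
            direction = "up"
            self.correct_run = 0
            self.n_down = 3
        if direction is None:
            return
        if self.last_direction is not None and direction != self.last_direction:
            self.reversals.append(self.level)
        self.last_direction = direction
        if direction == "down":
            self.level /= self.step_factor
        else:
            self.level *= self.step_factor

    def threshold(self, n_reversals=8):
        """Mean of the last n_reversals reversal levels."""
        if not self.reversals:
            raise ValueError("no reversals recorded")
        last = self.reversals[-n_reversals:]
        return sum(last) / len(last)


def convergence_accuracy(n_down):
    """Proportion correct tracked by an n-down, one-up staircase."""
    return 0.5 ** (1.0 / n_down)
```

In use, `update` would be called once per 2IFC trial until `finished` is true, after which `threshold()` gives the estimate for that staircase.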

To optimize the chances that learning would generalize, there were also four variants of each task. Stimuli in the orientation and curvature tasks varied in their overall orientation, while stimuli in the global form task varied along the continuum of radial/concentric forms. These variants were blocked during training, with a 100-trial staircase being conducted in its entirety, for each variant in a random order. During the pre- and posttest, however, the variants ran on interleaved staircases (a randomly selected base angle or form from trial to trial), such that the participant could not anticipate exactly what base angle or form the next stimulus pair would take.

Orientation discrimination

In the orientation task, the angle of each Gabor element was adjusted such that the majority of elements were oriented at a particular angle, and the observer had to judge which of the two arrays, the standard or the comparison, had the more anticlockwise global orientation. The proportion of coherent Gabor elements in comparison and standard stimuli was fixed at 90% to encourage the observer to distribute their attention across the entire array, rather than focusing on individual elements, to make their judgments. The average orientation of the elements in the standard stimulus was 5°, 50°, 95°, or 140°. The orientation of the comparison stimulus was determined by the staircase.
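As a rough illustration of how such an array could be specified, the sketch below assigns a common orientation to 90% of the elements and random orientations to the rest. The function name and the choice of a uniform distribution over 0° to 180° for the incoherent elements are assumptions made here for illustration.

```python
import random

def orientation_array(mean_deg, n_elements=200, coherence=0.9, rng=None):
    """Return one orientation (degrees, 0-180) per Gabor element.

    A fraction `coherence` of elements share `mean_deg`; the remainder are
    drawn uniformly at random (an assumed noise distribution).
    """
    rng = rng or random.Random()
    n_coherent = round(n_elements * coherence)
    orientations = [mean_deg % 180.0] * n_coherent
    orientations += [rng.uniform(0.0, 180.0)
                     for _ in range(n_elements - n_coherent)]
    rng.shuffle(orientations)  # so coherent elements are not grouped by index
    return orientations

# Standard orientations used for the four task variants
STANDARD_ORIENTATIONS_DEG = (5.0, 50.0, 95.0, 140.0)
```

A comparison array would be built the same way, with `mean_deg` offset from the standard by the amount the staircase currently specifies.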

Curvature discrimination

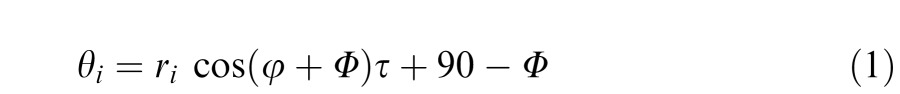

For the curvature task, the orientation of the coherent elements followed a curve and observers had to report which of the two arrays had the greater curvature. As in the orientation task, 90% of the Gabor elements in standard and comparison stimuli were coherent while the remainder took random orientations. The orientation, θ, of each coherent element, i, was determined by the equation:

|

where φ and r represent the angle and distance between the center of the stimulus array and the center of the element. τ determines the degree of curvature of the stimulus (specifically the difference in orientation between the outermost elements in the array) and Φ determines the overall orientation of the array. The four variants of the task differed in orientation, with Φ = 45°, 135°, 225°, and 315°. The curvature of the comparison stimulus varied across trials according to the staircase.

Global form task

The ‘global form’ task used here was based on that of Achtman, Hess, and Wang (2003) which was, in turn, based on the study of coherence thresholds of Glass patterns as a measure of global form processing. The method differs from Glass patterns only in that the local orientation signals, required for the first stage of processing in the task (Dakin, 1997), are carried by Gabor elements rather than dot dipoles. Although the task could potentially be performed by detecting continuity between elements, the fact that sensitivity is dependent on the nature of the global structure (e.g., Dakin & Bex, 2001; Kurki & Saarinen, 2004; Wilson & Wilkinson, 1998) indicates that the overall form is actually used in the task.

Gabor elements were arranged such that one of the arrays had an underlying global structure (e.g., concentric circle, spiral), while the other was composed of randomly oriented elements. The observer had to judge which interval contained the structure. In this task, the base spiral pitches were 0°, 30°, 60°, and 90°, where 0° produced concentric global structure, while 90° produced radial structure. Examples of the global form stimuli used in the current study can be seen in Achtman et al. (2003, the bottom row of Figure 2). The orientation, θ, of each element, i, is given by:

|

where φ is the angle between the center of the stimulus array and the center of the element, and τ determines the form of the global form stimulus. In this task, the coherence of the Gabor elements forming the global structure of the comparison stimulus varied across trials according to the staircase.
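To make the role of φ, τ, and coherence concrete, the sketch below uses one orientation rule that is consistent with the endpoints stated above (τ = 0° concentric, τ = 90° radial). The rule θ = (φ + 90° + τ) mod 180° is an assumption and is not taken from the paper; in particular, the sign of τ (the handedness of the spiral) is a guess. Uniform area density for the element positions and all function names are also assumptions.

```python
import math
import random

def random_positions(n=200, radius_deg=4.0, rng=None):
    """Positions drawn uniformly over a disc (assumed sampling scheme)."""
    rng = rng or random.Random()
    points = []
    for _ in range(n):
        r = radius_deg * math.sqrt(rng.random())  # sqrt gives uniform area density
        a = rng.uniform(0.0, 2.0 * math.pi)
        points.append((r * math.cos(a), r * math.sin(a)))
    return points

def global_form_orientation(phi_deg, tau_deg):
    """Assumed rule: tau = 0 gives tangential (concentric) structure,
    tau = 90 gives radial structure. Orientation is taken modulo 180."""
    return (phi_deg + 90.0 + tau_deg) % 180.0

def global_form_array(positions, tau_deg, coherence, rng=None):
    """Orientations for one array; a fraction `coherence` follows the form."""
    rng = rng or random.Random()
    n_signal = round(len(positions) * coherence)
    signal = set(rng.sample(range(len(positions)), n_signal))
    orientations = []
    for i, (x, y) in enumerate(positions):
        if i in signal:
            phi = math.degrees(math.atan2(y, x))  # polar angle of the element
            orientations.append(global_form_orientation(phi, tau_deg))
        else:
            orientations.append(rng.uniform(0.0, 180.0))
    return orientations
```

With this layout, the staircase would adjust `coherence` for the structured interval, while the other interval corresponds to `coherence=0.0`.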

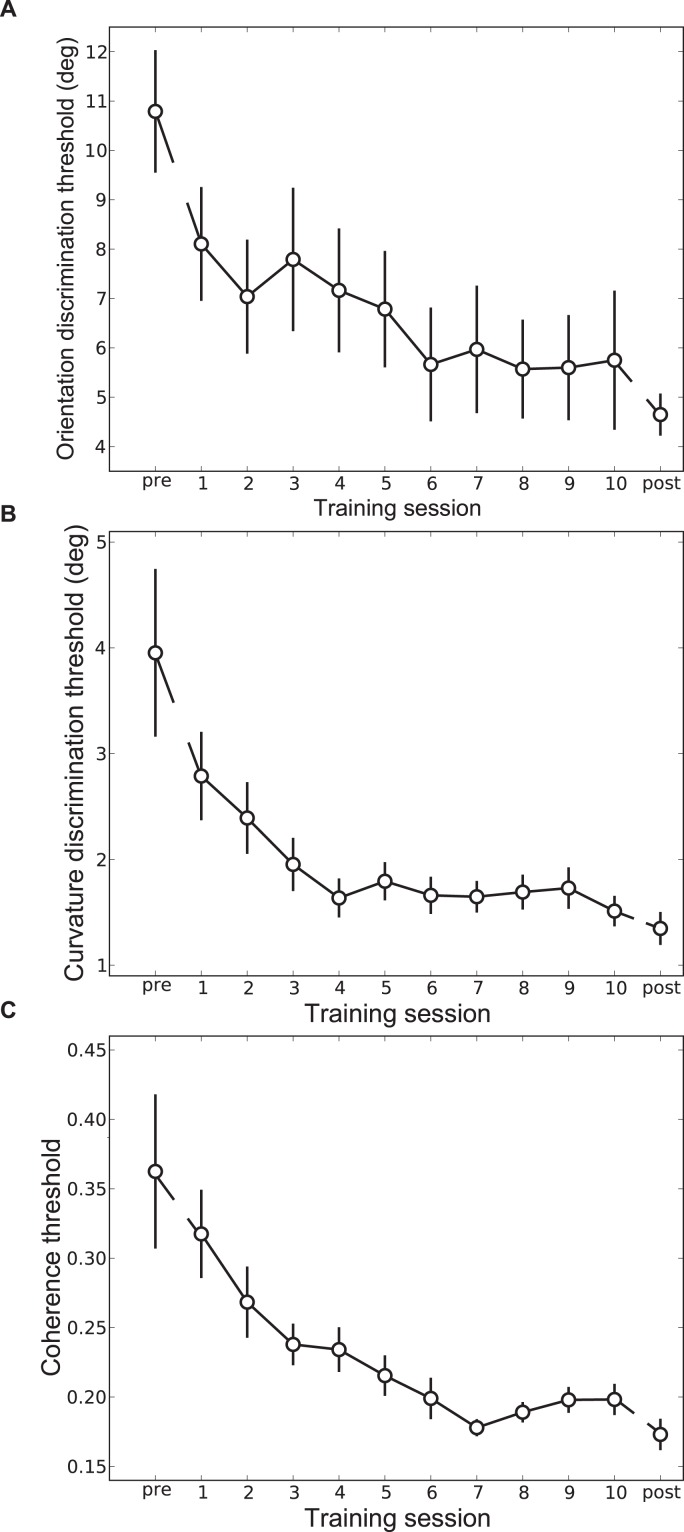

Figure 2.

Group-averaged learning curves for (A) orientation, (B) curvature and (C) global form training groups. All groups demonstrate significant learning of their respective task. Error bars indicate SEM across observers.

Contrast discrimination (control)

In the control task, observers had to indicate which of the two Gabor arrays had the higher contrast. Here, the contrast of the comparison stimulus varied according to the staircase, while the standard stimulus randomly took one of four contrasts (0.3, 0.4, 0.5 or 0.6 Michelson contrast). In this task, both the standard and comparison stimuli consisted of elements with random orientations.

Observers were randomly assigned to one of the three experimental conditions (orientation, curvature or global form) with eight observers in each group. The training period lasted 10 days, during which each observer completed four non-interleaved staircases (totaling 400 trials per training session), one for each angle/form, on their assigned task each day. An average daily threshold was calculated from the four threshold measurements. On the last day of the experiment (posttraining day), observers' thresholds were again measured on all four tasks in a random order. Transfer from one task to another was calculated by dividing the threshold measured on the last day by that measured on the first day to provide a threshold ratio. Throughout the experiment the color of the fixation point indicated whether the observer had gotten the previous trial correct (green) or incorrect (red).
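The threshold summary measures described above amount to simple arithmetic, sketched below. The function names are hypothetical, and the use of the sample standard deviation for the standard error is an assumption; the text states only that error bars show SEM across observers.

```python
import math
from statistics import mean, stdev

def daily_threshold(variant_thresholds):
    """Average of the four per-variant staircase thresholds for one day."""
    return mean(variant_thresholds)

def threshold_ratio(pre_threshold, post_threshold):
    """Posttraining threshold divided by pretraining threshold.

    Values below 1 indicate improvement; 1 indicates no change.
    """
    return post_threshold / pre_threshold

def mean_and_sem(ratios):
    """Group mean and standard error of the mean across observers."""
    n = len(ratios)
    return mean(ratios), stdev(ratios) / math.sqrt(n)
```

For example, an observer whose threshold halves between the first and last day has a threshold ratio of 0.5.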

Results

The first objective of the experiment was to determine the extent to which practice enhances the performance of observers on their trained task. Daily estimates of threshold were obtained for all three groups. Figure 2 illustrates mean data for all three groups across all training sessions including pre- and posttraining measurements. The data show marked learning effects in all groups. For instance, the ability of observers in the orientation group to discriminate which of two Gabor arrays was more anticlockwise improved twofold during the 10 days of training. Similar improvements were observed in the other training groups.

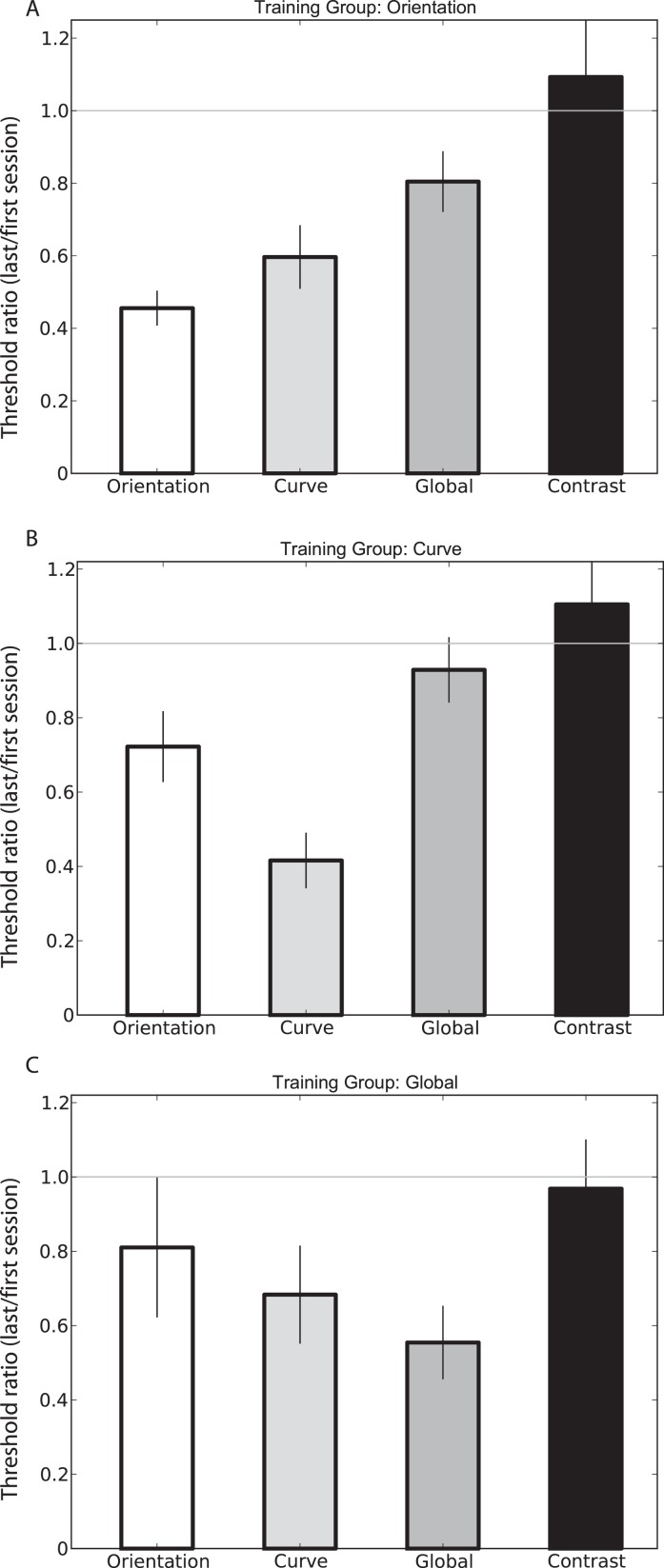

The next objective was to determine to what extent performance improvements obtained on a particular training task transferred to the other tasks. Figure 3 illustrates the relationship of transfer between the different training groups. Data are represented as threshold ratios, calculated by dividing the posttraining threshold by the pretraining threshold, averaged across all observers in each group. In these plots, a value of one (marked by a gray line) indicates that overall performance across subjects neither improved nor deteriorated, while a value less than one represents improved performance following the training period.

Figure 3.

Transfer of learned improvements between tasks represented as pre/posttest threshold ratios. Learned improvements gained from one task transfer to other related tasks. The amount of transfer seems to rely on the relative complexity of the training-test task pairing (see text for further details). Error bars indicate SEM across observers.

Transfer effects for the orientation group on all four tasks are shown in Figure 3A. As expected, those trained on the orientation task show the largest improvements in this condition. Observers did, however, also show substantial improvement in the curvature task and significant, but lesser, learning in the global form task. This particular pattern of transfer is interesting given the hierarchical relationship of the different stimulus configurations. For instance, it might be expected that curvature discrimination would improve following training on the orientation task given that orientation cues are also used to perform the curvature task. Potentially this pattern of results may arise through an inheritance of perceptual improvement by later processing stages that receive input from early stages of analysis, although this interpretation is controversial.

Interestingly, a monotonic pattern of transfer is also observed in the global form trained group (Figure 3C). Again, observers in this group demonstrate the largest improvement on their trained task. On this occasion, however, learned improvements in task performance transferred to less complex tasks: curvature thresholds showed the next largest improvements, and orientation thresholds showed lesser improvements. These findings indicate that perceptual improvements derived from training with a relatively high-level stimulus can also transfer to simpler tasks that rely on the coding of orientation cues.

Although a clear pattern of transfer is observed from global form to curvature, the results did not appear to show a reciprocal trend (Figure 3B). Whereas orientation thresholds clearly improved following training on the curvature task, global form thresholds did not show statistically significant improvement. The reason for this result is unclear. One possibility is that the population of neurons recruited for the curvature task is insufficient to represent global form alone. That is, without orientation cues, training on curvature alone does little to improve performance in detecting global form. Note that this does not necessarily preclude transfer of improvements from the global form to curvature task, as the mechanisms recruited for the global form task may be sufficient to resolve the curvature task.

It is clear from the data that perceptual learning can transfer from a relatively simple task (e.g., orientation) to more complex tasks (e.g., global form) and vice versa; that is, there is no clear direction of transfer. However, it does appear that the complexity of the training and test tasks helps determine the degree to which learning generalizes. For instance, those trained on the orientation task appear to transfer more to the curvature task than to the global form task. To test this hypothesis, we ranked our tasks in order of complexity (the orientation task being the simplest, the global form task most complex) and divided our transfer data into groups of “one task away” and “two tasks away” from the trained task. While the averaged threshold ratios for the “one task away” group were significantly less than one (p < 0.0001), the “two tasks away” threshold ratios approached, but did not reach, significance (p = 0.07164). Thus, it appears that the relative complexity of the training and test tasks helps govern the degree of transfer between tasks.
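The grouping step in this analysis can be sketched as below. The data layout (a dictionary keyed by trained and tested task) and all names are assumptions for illustration. The statistical test behind the reported p values is not specified in the text, so it is not reproduced here.

```python
# Complexity ranking as described in the text: orientation simplest,
# global form most complex.
COMPLEXITY_RANK = {"orientation": 0, "curvature": 1, "global form": 2}

def task_distance(trained, tested):
    """Number of steps between two tasks in the complexity ranking."""
    return abs(COMPLEXITY_RANK[trained] - COMPLEXITY_RANK[tested])

def group_by_distance(ratios):
    """Pool per-observer threshold ratios by distance from the trained task.

    `ratios` maps (trained_task, tested_task) to a list of threshold ratios.
    Trained-task entries (distance 0) and unranked tasks are skipped.
    """
    groups = {1: [], 2: []}
    for (trained, tested), values in ratios.items():
        if trained not in COMPLEXITY_RANK or tested not in COMPLEXITY_RANK:
            continue  # e.g., the contrast control task has no rank
        distance = task_distance(trained, tested)
        if distance > 0:
            groups[distance].extend(values)
    return groups
```

Each pooled group could then be compared against a threshold ratio of one with whichever test is appropriate for the data.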

The pattern of transfer observed here cannot be attributed to procedural learning. Although a substantial degree of transfer is seen between tasks that share orientation as a common cue, perceptual improvements did not transfer, in any of the training groups, to the contrast discrimination task, which shared the basic procedure of attending to the screen and pressing the same keys in response to a target. This suggests that any transfer between the different tasks should be explained by some degree of experience-dependent plasticity, rather than by observers improving on the response demands involved in making the perceptual judgment.

Discussion

A key characteristic of perceptual learning is thought to be its specificity for basic visual attributes, such as orientation (e.g., Schoups, Vogels, & Orban, 1995), spatial frequency (e.g., Fiorentini & Berardi, 1980) and retinal position (e.g., Karni & Sagi, 1991). Learning has also been shown to be specific for the trained task suggesting a low-level neural substrate, although an alternative hypothesis is that the tasks activate distinct sensory units with little overlap in their neural representations. Here, we show that learned improvements can transfer to other, distinctly different, tasks provided they share a common cue for their processing. All training groups demonstrated substantial learning on their trained tasks. Moreover, perceptual improvements transferred to the other tasks in a manner determined by the relative complexity of the trained and transfer tasks. For instance, those trained to discriminate orientation also improved on the curvature task, but less on the global form task, whereas those trained on global form improved on the curvature task but less on the orientation task. Below we compare our results to other studies concerned with the generalization/specificity of perceptual learning and speculate on how our results fit with current models of perceptual learning.

Generalization of perceptual learning

The current results add to other recent studies challenging the long-held view that perceptual learning does not transfer to other tasks, stimuli or locations (e.g., Webb, Roach, & McGraw, 2007). For instance, Zhang and colleagues have recently shown that the use of particular training protocols (double training or training-plus-exposure) allows for full transfer of perceptual learning to different retinal locations (Xiao et al., 2008; Zhang et al., 2010) and across different orientations (Zhang et al., 2010). Other studies have attempted to elucidate the conditions under which improvements transfer to other orientations or retinal positions (Jeter et al., 2009; Jeter et al., 2010), and highlight the precision of the transfer task and the length of the training period as important contributing factors. Specifically, tasks with low precision demands (Jeter et al., 2009) and short training regimes (Jeter et al., 2010) were conducive to transfer of learned improvements. Neither of these criteria appears to be critical for transfer across tasks. Our paradigm involves high precision (orientation/curvature discrimination) and low precision (global form coherence) tasks, with no significant difference in the degree of transfer to these tasks (p = 0.1). Equally, all groups trained for a relatively long period of time (10 days) and demonstrated significant transfer.

Of the previous studies that have investigated generalization of perceptual learning across tasks, most have reported a lack of transfer (Crist, Kapadia, Westheimer, & Gilbert, 1997; Fahle, 1997; Meinhardt, 2002; Petrov & Van Horn, 2012; Saffell & Matthews, 2003; Shiu & Pashler, 1992). While some of the tasks in question are likely to recruit distinct populations of neurons to perform the required judgments, and were therefore never likely to lead to transfer, it is less clear why other studies demonstrated specificity. Saffell and Matthews (2003) showed that training on a speed or a direction discrimination task failed to transfer to the other task, or to a luminance discrimination control task. As in the current study, the stimulus was the same for all tasks (random-dot kinematograms) and the experimental tasks can claim to have at least partially overlapping neural representations (e.g., Rodman & Albright, 1987). While there is evidence that a subpopulation of neurons in visual area MT demonstrates selectivity for velocity (i.e., neurons tuned for both speed and direction, Rodman & Albright, 1987), speed and direction discrimination tasks can be performed independently of each other. For instance, our ability to perform a speed discrimination task does not depend on the direction of motion (Matthews & Qian, 1999), while the speed of the stimulus has a relatively small influence on performance in a direction discrimination task (De Bruyn & Orban, 1988). This is not the case for our tasks; a precursor to performing the curvature task is to resolve the orientation of the Gabor elements. Similarly, the orientation and curvature of the Gabor elements must be resolved to complete the global form task. Interestingly, in one of the few studies to demonstrate transfer between different tasks, Huang, Lu, Tjan, Zhou, and Liu (2007) showed that training on a direction discrimination task transferred to a motion detection task, but not vice versa. The authors use a similar rationale to explain their results; whereas the discrimination task requires the detection of the coherent motion signal, the exact direction of motion is irrelevant when performing the detection task. Together these results suggest that the relationship between different tasks is an important factor to consider in designing perceptual learning paradigms.

Perhaps the most relevant study to the current one is that of Fahle (1997), who trained participants on orientation, curvature and vernier discrimination tasks. Based on the explanation above, one might expect to see transfer between these tasks, as all three tasks are believed to share orientation as a common cue (Wilson, 1986). However, despite observers demonstrating significant training effects, this learning did not transfer to the other tasks. Similarly, Fahle and Morgan (1996) found a complete lack of transfer between a bisection and an alignment task, although learned improvements do generalize between these tasks if there is a common spatial axis to the judgment (Webb et al., 2007).

The apparent discrepancy between these previous reports and the current study may be due to the different methodological approaches employed. Observers in the studies by Fahle (1997) and Fahle and Morgan (1996) practiced on all training tasks and the degree of transfer was determined by observer performance directly following a switch of task. Although perceptual improvements did not generalize from one task to another, initial performance on the second task routinely deteriorated relative to initial performance on the first task. That is, observers frequently performed worse on the second task relative to the first, although all tasks were counterbalanced and training conditions were separated by at least one day. This trend was statistically significant and is consistent with the idea that interference or “negative transfer” can occur between tasks that share common representational coding (Dosher & Lu, 2009; Petrov et al., 2005).

One question remains with this interpretation: why does the learning-induced performance change manifest as a switch cost in Fahle's study, while we find an improvement in thresholds across tasks? The most notable differences in the studies are that (a) Fahle used simple line stimuli whereas we have used the more complex element arrays (see Models of perceptual learning) and (b) in the current study, participants completed 10 days of training before the posttest session, whereas those in Fahle's study completed only a single day. As described above, the number of training sessions is an important determinant in whether learned improvements generalize to other experimental conditions (Wright et al., 2010; Jeter et al., 2010), while consolidation processes, such as sleep (e.g., Mednick et al., 2002; Stickgold, James, & Hobson, 2000), are also known to play an important role in advancing learning processes. Potentially, the extra days of consolidation in the current study may help to transform the switch cost to the transfer task, as seen in Fahle's study, into the benefit seen in the current study. A further study measuring the time course of transfer between tasks will be required to test this hypothesis.

Models of perceptual learning

While it is difficult to ascertain the degree of overlap between the underlying neural representations of the different tasks, it is likely that the orientation and global form tasks are partly processed in different visual areas given that neither V1 nor V2 is capable of representing global structure (Smith, Bair, & Movshon, 2002; Smith, Kohn, & Movshon, 2007). Rather, it seems that neural populations capable of encoding global structure first appear in V4 (Gallant, Braun, & Van Essen, 1993), whereas the encoding of curvature might occur as early as V2 (Hegde & Van Essen, 2000). Thus, the current findings point to models of perceptual learning that advocate distributed plasticity across different visual areas (e.g., Kourtzi & DiCarlo, 2006). According to one such theory (Ahissar & Hochstein, 1997, 2004), learning begins at the top of the cortical hierarchy and works backwards searching for the most informative neurons to resolve the task. This search stops at the highest level found to contain mechanisms with a suitable task-related signal, and a retuning or reweighting of neurons at this level manifests as a perceptual improvement in the relevant task. This modification of neural responses at low levels of analysis not only affects representations associated with these levels, but also influences higher levels that receive the same low-level input. Therefore, higher-level representations will also attain a better signal-to-noise ratio as a result of the improved signal from downstream. Presumably, however, the theory does not predict that low-level neurons will benefit from training on a more complex, high-level task, since the “selection guidance” process fails to retreat far enough downstream to affect these neurons. Thus, the reverse hierarchy theory appears difficult to reconcile with the current dataset, given that it seems to predict a single direction of transfer. 
Crucially, our data show that perceptual learning can transfer from a relatively simple task to a higher-level task and vice versa, suggesting that the direction of transfer is not a limiting factor.

One general issue with distributed plasticity models of perceptual learning is the manner through which they are achieved computationally. Most of these theories assume a modification of neural representations at multiple levels, usually through a sharpening of tuning of visual neurons (e.g., Gilbert, Sigman, & Crist, 2001). However, persistent experience-based retuning of neurons could be problematic, especially at the level of V1. Given that early visual neurons are the basis of most, if not all, visual tasks, benefits gained due to cortical reorganization on one particular task are likely to interfere with others (Fahle, 2004; Petrov et al., 2005). Furthermore, electrophysiological estimates of retuning in V1 (Crist et al., 2001; Schoups et al., 2001) and V4 (Yang & Maunsell, 2004) do not appear to be able to account for the large behavioral improvements of perceptual learning.

An alternative solution is that perceptual learning may be modulated by a central mechanism that reweights the inputs from lower-level stages of analysis with experience (Dosher & Lu, 1998, 1999; Mollon & Danilova, 1996). Here, perceptual learning strengthens the connections between the early neural representations and the decision unit that guides behavior, without modifying the neural representations themselves. Such a mechanism neatly sidesteps any issues regarding persistent changes to low-level visual neurons. At first glance, it appears that our data are at odds with such interpretations. While it is likely that there is some overlap between the low-level processing of our chosen tasks (Wilson, 1986), they presumably rely on different decision structures, which should rule out the possibility of transfer (Petrov et al., 2005). A related alternative is that the learning takes place in the neural circuits responsible for integrating the orientation signals across space. This process could be achieved by modifying the integrating neurons themselves or through a selective reweighting of the signals coming from the most informative orientation-tuned neurons. Both mechanisms avoid the need for frequent retuning of low-level neurons and, since the contrast discrimination task does not require signals to be integrated across space, either is consistent with the finding of no transfer to this condition.
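The central reweighting idea described above can be illustrated with a toy simulation. The following is a minimal sketch under stated assumptions, not the model of Dosher and Lu or of Petrov et al.: the channel layout, tuning bandwidth, noise level, learning rate, and the delta-rule-style update are all hypothetical choices made for illustration. A bank of orientation-tuned channels stays fixed throughout, and only the read-out weights feeding a single decision unit are updated with feedback.

```python
import math
import random

# Hypothetical fixed "early" representation: orientation-tuned channels.
PREFERRED = [-30.0, -20.0, -10.0, 0.0, 10.0, 20.0, 30.0]  # preferred orientations (deg)
BANDWIDTH = 15.0  # tuning width (deg); never modified by learning


def channel_responses(theta, rng, noise_sd=0.2):
    """Noisy responses of the fixed Gaussian-tuned channels to orientation theta."""
    return [
        math.exp(-0.5 * ((theta - p) / BANDWIDTH) ** 2) + rng.gauss(0.0, noise_sd)
        for p in PREFERRED
    ]


def decide(weights, responses):
    """Decision unit: +1 (clockwise) if the weighted sum is positive, else -1."""
    total = sum(w * r for w, r in zip(weights, responses))
    return 1 if total > 0 else -1


def accuracy(weights, rng, offset=3.0, n_trials=2000):
    """Proportion correct when discriminating +offset from -offset degrees."""
    correct = 0
    for _ in range(n_trials):
        label = rng.choice([-1, 1])
        if decide(weights, channel_responses(label * offset, rng)) == label:
            correct += 1
    return correct / n_trials


def train(weights, rng, offset=3.0, n_trials=3000, lr=0.05):
    """Update the read-out weights only (assumed delta-rule-style learning)."""
    w = list(weights)
    for _ in range(n_trials):
        label = rng.choice([-1, 1])
        r = channel_responses(label * offset, rng)
        output = math.tanh(sum(wi * ri for wi, ri in zip(w, r)))
        error = label - output  # trial-by-trial feedback signal
        w = [wi + lr * error * ri for wi, ri in zip(w, r)]
    return w


# Uninformative initial read-out: every channel weighted equally.
initial = [0.1] * len(PREFERRED)
before = accuracy(initial, random.Random(1))
trained = train(initial, random.Random(2))
after = accuracy(trained, random.Random(1))
print(f"accuracy before: {before:.2f}, after: {after:.2f}")
```

In this sketch any improvement in accuracy arises entirely from changes to the read-out weights, since `PREFERRED` and `BANDWIDTH` are never altered. Because the learned weights are tied to one decision unit, the sketch also makes concrete why a pure reweighting account, taken on its own, would not be expected to produce transfer to a task that relies on a different decision structure.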

Whatever the mechanism, it seems clear that learning can transfer, not just between stimulus locations or eye of origin, but between tasks requiring entirely different judgments.

Acknowledgments

This work was supported by a Wellcome Trust project grant to JWP (085444/Z/08/Z) and a Wellcome Trust Career Development Fellowship to BSW (WT085222).

Commercial relationships: none.

Corresponding author: David P. McGovern.

Email: david.mcgovern@gmail.com.

Address: Nottingham Visual Neuroscience, School of Psychology, The University of Nottingham, Nottingham, UK.

Contributor Information

David P. McGovern, Email: mcgoved1@tcd.ie.

Ben S. Webb, Email: ben.webb@nottingham.ac.uk.

Jonathan W. Peirce, Email: jonathan.peirce@nottingham.ac.uk.

References

- Aberg K. C., Herzog M. H. (2012). About similar characteristics of visual perceptual learning and LTP. Vision Research, 61, 100–106.

- Aberg K. C., Tartaglia E. M., Herzog M. H. (2009). Perceptual learning with chevrons requires a minimal number of trials, transfers to untrained directions, but does not require sleep. Vision Research, 49(16), 2087–2094.

- Achtman R. L., Hess R. F., Wang Y. Z. (2003). Sensitivity for global shape detection. Journal of Vision, 3(10):4, 616–624, http://www.journalofvision.org/content/3/10/4, doi:10.1167/3.10.4.

- Ahissar M., Hochstein S. (1997). Task difficulty and the specificity of perceptual learning. Nature, 387(6631), 401–406.

- Ahissar M., Hochstein S. (2004). The reverse hierarchy theory of visual perceptual learning. Trends in Cognitive Sciences, 8(10), 457–464.

- Crist R. E., Kapadia M. K., Westheimer G., Gilbert C. D. (1997). Perceptual learning of spatial localization: Specificity for orientation, position, and context. Journal of Neurophysiology, 78(6), 2889–2894.

- Crist R. E., Li W., Gilbert C. D. (2001). Learning to see: Experience and attention in primary visual cortex. Nature Neuroscience, 4(5), 519–525.

- Dakin S. C. (1997). The detection of structure in glass patterns: Psychophysics and computational models. Vision Research, 37, 2227–2246.

- Dakin S. C., Bex P. J. (2001). Local and global visual grouping: Tuning for spatial frequency and contrast. Journal of Vision, 1(2):4, 99–111, http://www.journalofvision.org/content/1/2/4, doi:10.1167/1.2.4.

- De Bruyn B., Orban G. A. (1988). Human velocity and direction discrimination measured with random dot patterns. Vision Research, 28(12), 1323–1335.

- Dosher B. A., Lu Z.-L. (1998). Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proceedings of the National Academy of Sciences of the United States of America, 95(23), 13988–13993.

- Dosher B. A., Lu Z.-L. (1999). Mechanisms of perceptual learning. Vision Research, 39(19), 3197–3221.

- Dosher B. A., Lu Z.-L. (2009). Hebbian reweighting on stable representations in perceptual learning. Learning & Perception, 1(1), 37–58.

- Fahle M. (1997). Specificity of learning curvature, orientation, and vernier discriminations. Vision Research, 37(14), 1885–1895.

- Fahle M. (2004). Perceptual learning: A case for early selection. Journal of Vision, 4(10):4, 879–890, http://www.journalofvision.org/content/4/10/4, doi:10.1167/4.10.4.

- Fahle M., Edelman S. (1993). Long-term learning in vernier acuity: Effects of stimulus orientation, range and of feedback. Vision Research, 33(3), 397–412.

- Fahle M., Morgan M. (1996). No transfer of perceptual learning between similar stimuli in the same retinal position. Current Biology, 6(3), 292–297.

- Fiorentini A., Berardi N. (1980). Perceptual learning specific for orientation and spatial frequency. Nature, 287(5777), 43–44.

- Furmanski C. S., Engel S. A. (2000). Perceptual learning in object recognition: Object specificity and size invariance. Vision Research, 40(5), 473–484.

- Gallant J. L., Braun J., Van Essen D. C. (1993). Selectivity for polar, hyperbolic, and Cartesian gratings in macaque visual-cortex. Science, 259(5091), 100–103.

- Gauthier I., Tarr M. J. (1997). Becoming a “Greeble” expert: Exploring mechanisms for face recognition. Vision Research, 37(12), 1673–1682.

- Ghose G. M., Yang T., Maunsell J. H. R. (2002). Physiological correlates of perceptual learning in monkey V1 and V2. Journal of Neurophysiology, 87(4), 1867–1888.

- Gilbert C. D., Sigman M., Crist R. E. (2001). The neural basis of perceptual learning. Neuron, 31(5), 681–697.

- Gold J., Bennett P. J., Sekuler A. B. (1999). Signal but not noise changes with perceptual learning. Nature, 402(6758), 176–178.

- Hegde J., Van Essen D. C. (2000). Selectivity for complex shapes in primate visual area V2. Journal of Neuroscience, 20(5), RC61.

- Huang X., Lu H., Tjan B. S., Zhou Y., Liu Z. (2007). Motion perceptual learning: When only task-relevant information is learned. Journal of Vision, 7(10):14, 1–10, http://www.journalofvision.org/content/7/10/14, doi:10.1167/7.10.14.

- Jeter P. E., Dosher B. A., Liu S. H., Lu Z. L. (2010). Specificity of perceptual learning increases with increased training. Vision Research, 50(19), 1928–1940.

- Jeter P. E., Dosher B. A., Petrov A., Lu Z. L. (2009). Task precision at transfer determines specificity of perceptual learning. Journal of Vision, 9(3):1, 1–13, http://www.journalofvision.org/content/9/3/1, doi:10.1167/9.3.1.

- Karni A., Sagi D. (1991). Where practice makes perfect in texture discrimination: Evidence for primary visual cortex plasticity. Proceedings of the National Academy of Sciences of the United States of America, 88(11), 4966–4970.

- Kourtzi Z., DiCarlo J. J. (2006). Learning and neural plasticity in visual object recognition. Current Opinion in Neurobiology, 16(2), 152–158.

- Kurki I., Saarinen J. (2004). Shape perception in human vision: Specialized detectors for concentric spatial structures? Neuroscience Letters, 360, 100–102.

- Law C.-T., Gold J. I. (2008). Neural correlates of perceptual learning in a sensory-motor, but not a sensory, cortical area. Nature Neuroscience, 11(4), 505–513.

- Matthews N., Qian N. (1999). Axis-of-motion affects direction discrimination, not speed discrimination. Vision Research, 39(13), 2205–2211.

- Mednick S. C., Nakayama K., Cantero J. L., Atienza M., Levin A. A., Pathak N. et al. (2002). The restorative effect of naps on perceptual deterioration. Nature Neuroscience, 5, 677–681.

- Meinhardt G. (2002). Learning to discriminate simple sinusoidal gratings is task specific. Psychological Research, 66(2), 143–156.

- Mollon J. D., Danilova M. V. (1996). Three remarks on perceptual learning. Spatial Vision, 10, 51–58.

- Peirce J. W. (2007). PsychoPy–Psychophysics software in Python. Journal of Neuroscience Methods, 162(1-2), 8–13.

- Petrov A. A., Dosher B. A., Lu Z.-L. (2005). The dynamics of perceptual learning: An incremental reweighting model. Psychological Review, 112(4), 715–743.

- Petrov A. A., Van Horn N. M. (2012). Motion aftereffect duration is not changed by perceptual learning: Evidence against the representation modification hypothesis. Vision Research, 61, 4–14.

- Poggio T., Fahle M., Edelman S. (1992). Fast perceptual learning in visual hyperacuity. Science, 256(5059), 1018–1021.

- Rodman H. R., Albright T. D. (1987). Coding of visual stimulus velocity in area MT of the macaque. Vision Research, 27(12), 2035–2048.

- Saffell T., Matthews N. (2003). Task-specific perceptual learning on speed and direction discrimination. Vision Research, 43(12), 1365–1374.

- Schoups A. A., Vogels R., Orban G. A. (1995). Human perceptual learning in identifying the oblique orientation: Retinotopy, orientation specificity and monocularity. The Journal of Physiology, 483(Pt 3), 797–810.

- Schoups A. A., Vogels R., Qian N., Orban G. (2001). Practicing orientation identification improves orientation coding in V1 neurons. Nature, 412(6846), 549–553.

- Shiu L. P., Pashler H. (1992). Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception & Psychophysics, 52(5), 582–588.

- Smith M. A., Bair W., Movshon J. A. (2002). Signals in macaque striate cortical neurons that support the perception of glass patterns. Journal of Neuroscience, 22(18), 8334–8345.

- Smith M. A., Kohn A., Movshon J. A. (2007). Glass pattern responses in macaque V2 neurons. Journal of Vision, 7(3):5, 1–15, http://www.journalofvision.org/content/7/3/5, doi:10.1167/7.3.5.

- Sotiropoulos G., Seitz A. R., Series P. (2011). Perceptual learning in visual hyperacuity: A reweighting model. Vision Research, 51(6), 585–599.

- Stickgold R., James L., Hobson J. A. (2000). Visual discrimination learning requires sleep after training. Nature Neuroscience, 3, 1237–1238.

- Vogels R., Orban G. A. (1985). The effect of practice on the oblique effect in line orientation judgments. Vision Research, 25(11), 1679–1687.

- Webb B. S., Roach N. W., McGraw P. V. (2007). Perceptual learning in the absence of task or stimulus specificity. PLoS ONE, 2(12), e1323.

- Wilson H. R. (1986). Responses of spatial mechanisms can explain hyperacuity. Vision Research, 26(3), 453–469.

- Wilson H. R., Wilkinson F. (1998). Detection of global structure in Glass patterns: Implications for form vision. Vision Research, 38, 2933–2947.

- Wright B. A., Wilson R. M., Sabin A. T. (2010). Generalization lags behind learning on an auditory perceptual task. Journal of Neuroscience, 30, 11635–11639.

- Xiao L.-Q., Zhang J.-Y., Wang R., Klein S. A., Levi D. M., Yu C. (2008). Complete transfer of perceptual learning across retinal locations enabled by double training. Current Biology, 18(24), 1922–1926.

- Yang T., Maunsell J. H. R. (2004). The effect of perceptual learning on neuronal responses in monkey visual area V4. Journal of Neuroscience, 24(7), 1617–1626.

- Zhang J.-Y., Zhang G.-L., Xiao L.-Q., Klein S. A., Levi D. M., Yu C. (2010). Rule-based learning explains visual perceptual learning and its specificity and transfer. The Journal of Neuroscience, 30(37), 12323–12328.