Abstract

Object identities (“what”) and their spatial locations (“where”) are processed in distinct pathways in the visual system, raising the question of how what and where information is integrated. Because of object motions and eye movements, retina-based representations are unstable, necessitating nonretinotopic representation and integration. A potential mechanism is to code and update objects according to their reference frames (i.e., frame-centered representation and integration). To isolate frame-centered processes, we used a frame-to-frame apparent motion configuration in which we (a) presented two preceding or trailing objects on the same frame, equidistant from the target on the other frame, to control for object-based (frame-based) and space-based effects, and (b) manipulated the target's relative location within its frame to probe frame-centered effects. We show that iconic memory, visual priming, and backward masking depend on objects' relative frame locations, orthogonal to the retinotopic coordinate. These findings not only reveal that iconic memory, visual priming, and backward masking can be nonretinotopic but also demonstrate that these processes are automatically constrained by contextual frames through a frame-centered mechanism. Thus, object representation is robustly and automatically coupled to its reference frame and continuously updated through a frame-centered, location-specific mechanism. These findings lead to an object cabinet framework, in which objects (“files”) within a reference frame (“cabinet”) are coded in an orderly fashion relative to the frame.

Keywords: nonretinotopic processing, object/frame-centered processing, object/frame-based processing, iconic memory, priming, masking, perceptual continuity, retinotopic representation, spatiotopic representation, frametopic representation

Introduction

As objects in a typical scene are distributed across different locations, everyday activities such as perception, navigation, and action require knowing both what the objects are and where they are. Object identities and object locations are thought to be subserved by distinct ventral and dorsal pathways in the human brain, respectively (Ungerleider & Mishkin, 1982). Despite this distributed representation of identity and location, our phenomenal experience of the world is seamless and stable while retaining the spatial relations among objects. How does the visual system achieve this stable topographic perception without confusing or mixing identity and location information?

Although topographic perception may benefit from the ubiquitous retinotopic (eye-centered) representations in the early visual system (Wurtz, 2008), retinotopic representation is unstable, compromised by both eye movements and object motions. Disruption of the retinotopic coordinate by eye movements may be compensated by spatiotopic (world-centered) mechanisms (Burr & Morrone, 2011; Duhamel, Colby, & Goldberg, 1992), which are anchored in stable external world coordinates (Figure 1). Disruption by object motions, however, presents a unique challenge that spatiotopic mechanisms cannot solve: the moving object's representation is no longer anchored in world coordinates. Therefore, maintaining visual stability in the face of object movements requires a more flexible, nonretinotopic coding and integration mechanism that can operate independently of eye-movement-contingent processes.

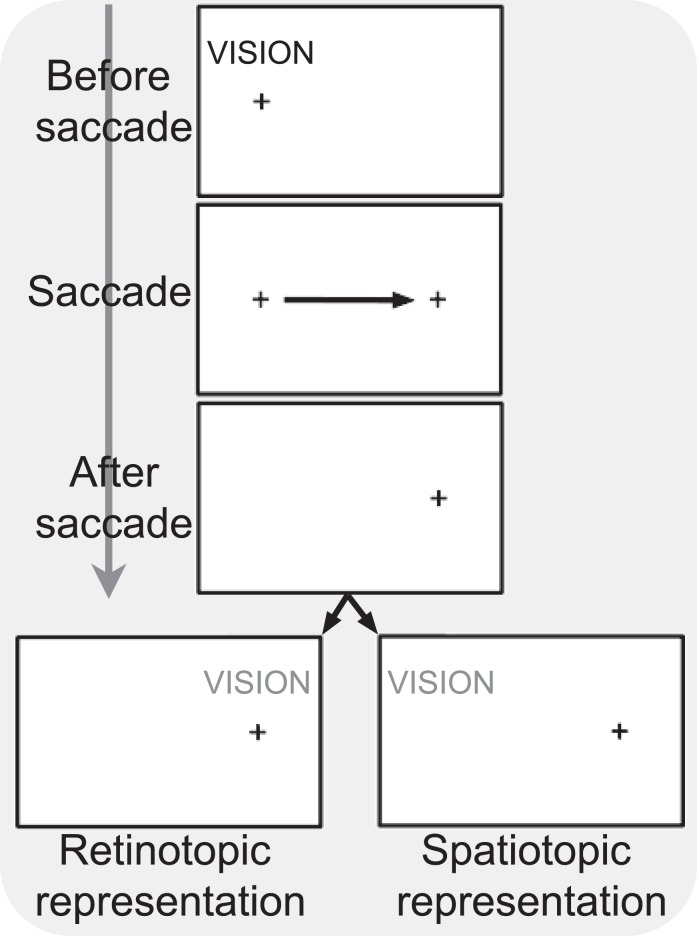

Figure 1.

Retinotopic representation versus spatiotopic representation after a saccade. First fixate on the initial fixation cross on the left, then make a saccade to fixate on the fixation cross on the right. The retinotopic and spatiotopic representations of the word “VISION” are illustrated on the lower left and right panels, respectively.

In the natural environment, objects usually appear within contextual frames (Bar, 2004; Oliva & Torralba, 2007), making it highly adaptive to exploit these structural regularities. For instance, in visual short-term memory, the spatial configuration among items may act as a glue that enhances memory capacity, and it is readily exploited (Hollingworth, 2007; Hollingworth & Rasmussen, 2010; Jiang, Olson, & Chun, 2000; I. R. Olson & Marshuetz, 2005); similarly, in spatial vision, task-irrelevant contextual frames have been shown to modulate spatial localization, as illustrated by the induced Roelofs effect (Bridgeman, Peery, & Anand, 1997; Roelofs, 1935).

Does the visual system automatically exploit structural regularities as a heuristic to maintain visual stability in the face of object movements? Here we test an automatic frame-centered mechanism in object vision: whether object representation is robustly and automatically coupled to its reference frame and updated during motion in a frame-centered, location-specific manner. Conceptually, although a frame-centered representation entails that the representation is based on the frame, an important connotation of “frame-centered” is that the frame is treated as a structure with intrinsic spatial relations among its parts, a connotation that can be entirely absent from the usage of “frame-based” (e.g., a frame can equally be treated as a unit or whole, as is sometimes implied in object-based attention; see Figure 2). By being sensitive to relative locations within contextual frames, frame-centered object representations are uniquely suited for maintaining stable topographic perception in the face of motion and may provide a perceptual foundation for configural encoding in memory and for contextual effects in general.
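To make the distinction between coordinate systems concrete, the toy sketch below treats each reference frame as a pure two-dimensional translation. This is our own simplification (it ignores rotation, scaling, and depth), and the function names are hypothetical, not taken from the article. It shows that a saccade changes an object's retinotopic code but not its spatiotopic code, whereas moving a frame together with its contents changes the spatiotopic code but leaves the frame-centered code unchanged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec:
    """A 2D position in degrees of visual angle (x = horizontal, y = vertical)."""
    x: float
    y: float

    def __add__(self, other):
        return Vec(self.x + other.x, self.y + other.y)

    def __sub__(self, other):
        return Vec(self.x - other.x, self.y - other.y)


def retinotopic(obj_world, gaze_world):
    """Eye-centered code: object position relative to the current fixation point."""
    return obj_world - gaze_world


def spatiotopic(obj_world):
    """World-centered code: object position in fixed external coordinates."""
    return obj_world


def frame_centered(obj_world, frame_center_world):
    """Frame-centered code: object position relative to its contextual frame."""
    return obj_world - frame_center_world


gaze, frame, obj = Vec(0, 0), Vec(0, 0), Vec(1.5, 0)

# A saccade changes the retinotopic code but not the spatiotopic one.
new_gaze = Vec(4, 0)
print(retinotopic(obj, gaze), retinotopic(obj, new_gaze))

# Moving the frame together with its contents changes the spatiotopic code
# but leaves the frame-centered code intact.
shift = Vec(1.5, 3)
print(spatiotopic(obj), spatiotopic(obj + shift))
print(frame_centered(obj, frame), frame_centered(obj + shift, frame + shift))
```

Only the frame-centered code survives both kinds of displacement in this toy setting, which is the sense in which it could support stability under object motion.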

Figure 2.

Distinguishing frame-based and frame-centered representations. The use of “frame-based” means that a frame is treated as a unit, approximated as its center of mass, whereas the use of “frame-centered” emphasizes that the frame is treated as a structure with intrinsic spatial relations among the parts.

Previous studies show that one can voluntarily direct attention to a remote, frame-centered location (Barrett, Bradshaw, & Rose, 2003; Boi, Vergeer, Ogmen, & Herzog, 2011; Umilta, Castiello, Fontana, & Vestri, 1995) and learn to allocate attention to a relative location within a frame (Kristjansson, Mackeben, & Nakayama, 2001; Maljkovic & Nakayama, 1996; C. R. Olson & Gettner, 1995), but empirical evidence for automatic frame-centered object representation is scarce, perhaps owing to the challenge of isolating frame-centered processes from object-based (frame-based) and space-based processes. Our conceptual approach to isolating frame-centered effects is to (a) present two preceding or trailing objects on the same frame and equidistant from the target, effectively controlling for object-based (frame-based) and space-based effects, and (b) manipulate the target's relative location within its frame.

We implemented this approach by capitalizing on perceptual continuity between the preceding/trailing frame and the target frame through apparent motion. Because saccadic eye movements have low temporal resolution, traditional approaches that manipulate eye movements are ill-suited for probing short-lived processes during the updating of continuously moving frames (Boi, Ogmen, Krummenacher, Otto, & Herzog, 2009). In our procedure, two frames were presented in sequence and, because of apparent motion, were perceived as one frame moving from one location to another (Figure 3A). To directly probe frame-centered object representation and integration, we explicitly manipulated objects' relative frame locations across the two frames. We presented the target randomly above or below fixation along the vertical meridian, and the two preceding (Experiments 1A–1D and Experiment 2) or trailing (Experiments 3A–3C) objects, whose locations were task irrelevant and uninformative, to the left and right of fixation along the horizontal meridian, thus ensuring the same retinal distance between each of the two preceding or trailing objects and the target. Critically, we also manipulated the target's relative location within its frame. If object representation is robustly coupled to its reference frame and continuously updated in a frame-centered fashion, target performance should be strongly modulated by which of the two preceding or trailing objects shared the target's relative frame location, even though both were equidistant from the target (controlling for space-based effects) and on the same frame (controlling for object/frame-based effects).

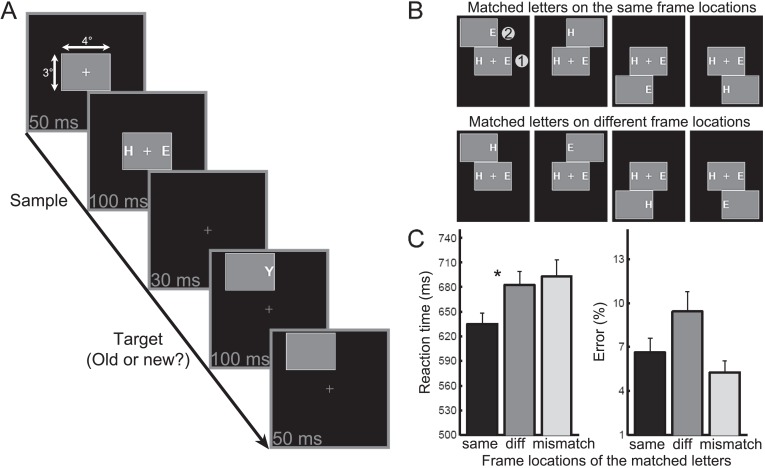

Figure 3.

Procedure, stimuli, and results from Experiment 1A. (A) Procedure: Observers fixated on a central cross throughout the experiment. In an apparent motion configuration, the first frame was presented at the center for 150 ms, with two prime letters presented during the last 100 ms along the horizontal meridian. After an interstimulus interval of 30 ms, the second frame was presented on one of four corners for 150 ms, with a target letter presented during the initial 100 ms along the vertical meridian. The task was to indicate whether the target was one of the two primes. (B) Superimposed sequential displays where the target matched one of the two prime letters: The first row illustrates displays where the target and the matched prime letter occupied the same relative frame location; the second row illustrates matched letters on different frame locations. (C) Results (n = 17): Responses were faster when the target and the matched prime occupied the same relative frame location than different frame locations. Error bars: standard error of the mean. *Statistically significant difference.

To test automatic frame-centered representation and updating, we applied this approach to iconic memory (Experiments 1A–1D), priming (Experiment 2), and masking (Experiments 3A–3C). The automatic frame-centered mechanism predicts, for example, that target performance should be facilitated if the target and the prime share the same relative frame location, and impaired if the target and the mask share the same relative frame location. Across the experiments, we observed nonretinotopic, frame-centered iconic memory, priming, and backward masking effects, demonstrating that object representation is robustly coupled to its reference frame without conscious intent and is continuously updated through a frame-centered, location-specific mechanism. Robust incidental coding of contextual frames may serve as a foundation for contextual effects in everyday vision.

Methods

Observers and apparatus

Seventeen human observers (10 women) with normal or corrected-to-normal vision from the University of Minnesota community participated in Experiment 1A; 19 (nine women) in Experiment 1B; 13 (six women) in Experiment 1C; 14 (seven women) in Experiment 1D; 17 (eight women) in Experiment 2; 12 (six women) in Experiment 3A; 13 (six women) in Experiment 3B; and 12 (seven women) in Experiment 3C in return for money or course credit. All signed a consent form approved by the university's institutional review board.

The stimuli were presented on a black-framed, gamma-corrected 22-inch CRT monitor surrounded by a black curtain and wallpaper (model: Hewlett-Packard p1230; refresh rate: 100 Hz; resolution: 1024 × 768 pixels; Hewlett-Packard, Palo Alto, CA) using MATLAB Psychophysics Toolbox (Brainard, 1997; Pelli, 1997). Observers sat approximately 57 cm from the monitor with their heads positioned in a chin rest in a nearly dark room, with the experimenter (ZL) present.

Stimuli and procedure

To train observers to maintain stable fixation, each observer completed a fixation training session before the main experiment, in which they viewed a square patch of black-and-white noise that flickered in counterphase (i.e., each pixel alternated between black and white across frames). Any eye movement during viewing produced the perception of a flash, and observers were asked to use this flash perception as feedback to maintain fixation (i.e., to minimize the perception of flashes; Guzman-Martinez, Leung, Franconeri, Grabowecky, & Suzuki, 2009). Each experiment included 32 practice trials (in one block) and either 320 experimental trials (in five blocks; Experiments 1A–1D and 2) or 192 experimental trials (in three blocks; Experiments 3A–3C).

The basic structure of each trial in all experiments involved a sequence of two frames against a black background. In the iconic memory (Experiments 1A–1D) and priming (Experiment 2) experiments, the first frame was presented at the center and the second frame, containing the target, at one of four peripheral locations; in the masking experiments (Experiments 3A–3C), the first frame, containing the target, was presented at one of four peripheral locations and the second frame at the center.

In Experiment 1A (Figure 3A), after an 800-ms presentation of a fixation cross (length: 0.23°; width: 0.08°; luminance: 80.2 cd/m²), the first frame (size: 4° horizontal × 3° vertical; luminance: 40.1 cd/m²; outlined by a white rectangle), centered on the fixation cross, was presented for 150 ms, with two different sample letters (randomly selected from K, M, P, S, T, Y, H, and E; font: Lucida Console; size: 24; luminance: 80.2 cd/m²) presented on the two sides of the frame (center-to-center distance between letters: 3°) during the last 100 ms. After an interstimulus interval of 30 ms, the second frame (shift from the first frame: ±1.5° × ±3°) was presented for 150 ms, with a target letter (randomly selected from the same list) presented during the first 100 ms on one side of the frame but always directly above or below fixation (i.e., equidistant from the two sample letters). Observers were asked to indicate, as quickly and accurately as possible, whether the target letter, whose relative frame location corresponded to either the left or the right sample letter, was one of the two sample letters (match or mismatch; a variant of the match-to-sample task). When the target was one of the two sample letters, the target and the matching sample letter could occupy either the same relative frame location or different locations.
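The display geometry can be checked with a short sketch. It assumes, as our reading of the parameters above, that the frame shift is given as (horizontal, vertical), that the sample letters sit at ±1.5° on the horizontal meridian, and that letters are vertically centered in their frames; `place_target` is a hypothetical helper. For every corner placement, the target lands on the vertical meridian at equal retinal distance from both samples, yet shares its relative frame slot with exactly one of them.

```python
import math
from itertools import product

# Assumed Experiment 1A geometry in degrees of visual angle (x = horizontal, y = vertical).
SAMPLE_X = 1.5        # sample letters at +/-1.5 deg (3 deg center-to-center)
SHIFT = (1.5, 3.0)    # second-frame shift, read as (horizontal, vertical)


def place_target(sign_x, sign_y):
    """Return the target's retinal position and its frame-relative side (hypothetical helper)."""
    frame_center = (sign_x * SHIFT[0], sign_y * SHIFT[1])
    # To keep the target on the vertical meridian (x = 0), it must occupy the side
    # of its frame opposite to the direction of the frame's horizontal shift.
    rel_x = -sign_x * SAMPLE_X
    target = (frame_center[0] + rel_x, frame_center[1])  # letters assumed vertically centered
    side = "left" if rel_x < 0 else "right"
    return target, side


samples = {"left": (-SAMPLE_X, 0.0), "right": (SAMPLE_X, 0.0)}
for sign_x, sign_y in product((-1, 1), repeat=2):
    target, side = place_target(sign_x, sign_y)
    d_left = math.dist(target, samples["left"])
    d_right = math.dist(target, samples["right"])
    print(f"frame shift ({sign_x:+d},{sign_y:+d}): target at {target}, "
          f"distances {d_left:.2f}/{d_right:.2f} deg, same frame slot as {side} sample")
```

Under these assumptions, retinal distance cannot distinguish the two samples, so any performance difference must come from the frame-relative correspondence.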

Experiment 1B (Figure 4A) was the same as Experiment 1A except that only one sample letter was presented during the first frame.

Figure 4.

Stimuli and results from Experiment 1B. (A) Stimuli: As in Experiment 1A, the fixation frame was presented first, and the task was to indicate whether the first letter and the second letter were the same or not. The first row illustrates sequential displays where the two letters matched; the second row illustrates mismatched letters. Letters in the first column occupied the same relative frame location; letters in the second column were at different frame locations. (B) Results (n = 19): Responses were faster in the same relative frame location condition than in the different relative frame locations condition only when the two letters matched. Error bars: standard error of the mean. *Statistically significant difference.

Experiment 1C (Figure 5A) was the same as Experiment 1A except that the first frame was presented until the offset of the second frame.

Figure 5.

Procedure and results from Experiments 1C and 1D. (A, C) Procedure for Experiments 1C and 1D: In A, the same design was used in Experiment 1C as in Experiment 1A, except that the first frame stayed on until the offset of the second frame, thus disrupting the apparent motion of the frames; in C, the same design was used as in A with the addition of two letter Os during the presentation of the target, thus disrupting the apparent motion of both the frames and the letters. (B, D) Results for Experiments 1C (n = 13) and 1D (n = 14): In both cases, responses were faster when the target and the matched sample occupied the same relative frame location than different frame locations. Error bars: standard error of the mean. *Statistically significant difference.

Experiment 1D (Figure 5C) was the same as Experiment 1C except that two additional letter Os were presented along with the target letter.

In Experiment 2 (Figure 6A), the letters on the first frame (lowercase b and p, in random order) were task irrelevant, and observers were asked to indicate whether the target letter (randomly selected from b, B, p, and P) presented on the second frame was a b/B or a p/P, regardless of case. Two extra fixation crosses (luminance: 30.1 cd/m²) were added along the vertical meridian to aid target localization. The frame duration was 200 ms and the letter duration was 150 ms. Other aspects were the same as in Experiment 1A.

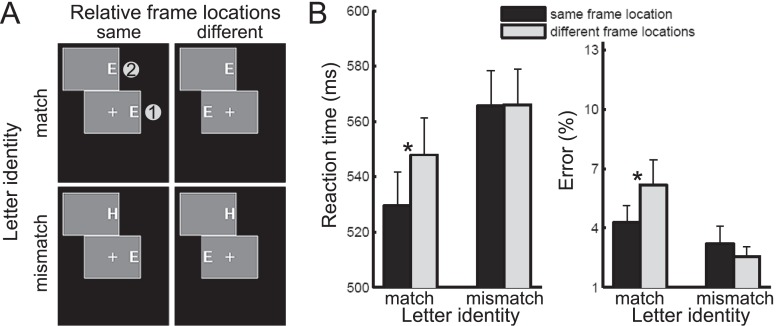

Figure 6.

Frame-centered priming in Experiment 2. (A) Procedure: Observers fixated on a central cross throughout the experiment. In an apparent motion configuration, the fixation frame with two task irrelevant, uninformative lowercase letters, b and p (random order, along the horizontal meridian), was presented first, followed by the target frame on one of four corners (upper left shown) with a lower- or uppercase target letter equidistant from the two prime letters (along the vertical meridian). The task was to categorize whether the target letter was b (B) or p (P), regardless of the case. (B) Design: The first row illustrates sequential displays where the target letters were lowercase (b and p); the second row illustrates uppercase targets (B and P). The first column shows sequential displays with the same letter identities on the same frame locations; the second column shows distinct letters on the same frame locations. (C) Results (n = 17): Responses were faster when the target letter shared the same relative frame location as the same identity prime letter; the effect was larger when the target and the primes were of the same case than of different cases. Error bars: standard error of the mean. *Statistically significant difference.

In Experiment 3A (Figure 7A), after a 1500-ms presentation of a fixation cross (length: 0.23°; width: 0.08°; luminance: 40.1 cd/m²), the first frame (size: 4° × 3°; luminance: 40.1 cd/m²; outlined by a white rectangle; shift from the central fixation: ±1.3° × ±2.6°) was presented for 200 ms, with a target letter (either the letter N or its mirror reversal: 0.6° × 0.6°; line width: 0.04°; luminance: 70.2 cd/m²) presented during the last 10 ms on one side of the frame but always directly above or below fixation. Immediately after the offset of the first frame (i.e., interstimulus interval of 0 ms), the second frame, centered on the fixation cross, was presented for 200 ms, with two masks (weak mask: a circle of 0.16° × 0.16°; strong mask: a cross of 0.78° × 0.78° superimposed with three Xs of 0.55° × 0.78°, 0.78° × 0.78°, and 0.78° × 1.01°; line width: 0.04°; luminance: 80.2 cd/m²) presented on the two sides of the frame (center-to-center distance between masks: 2.6°) during the first 30 ms. Observers were asked to indicate whether the target letter, whose relative frame location corresponded to either the left or the right mask, was a normal N or a mirror-reversed N, as accurately as possible and without emphasis on reaction time.

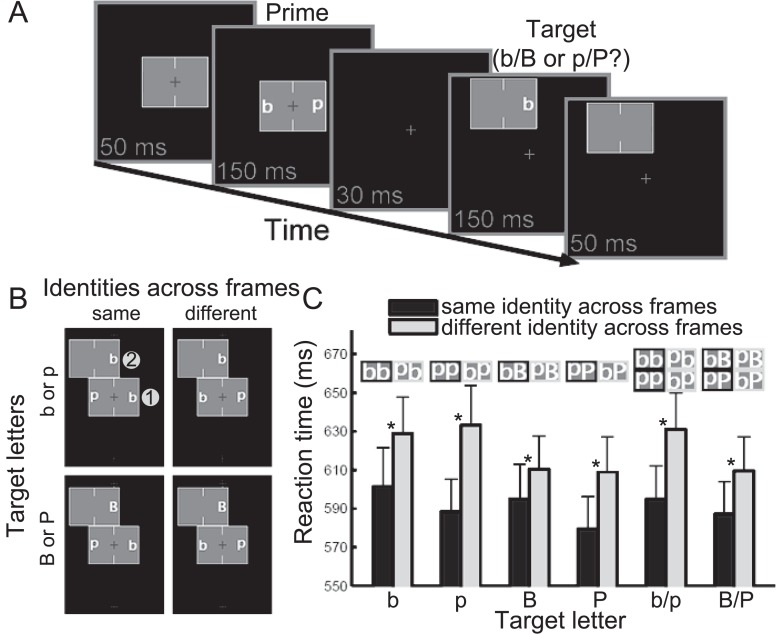

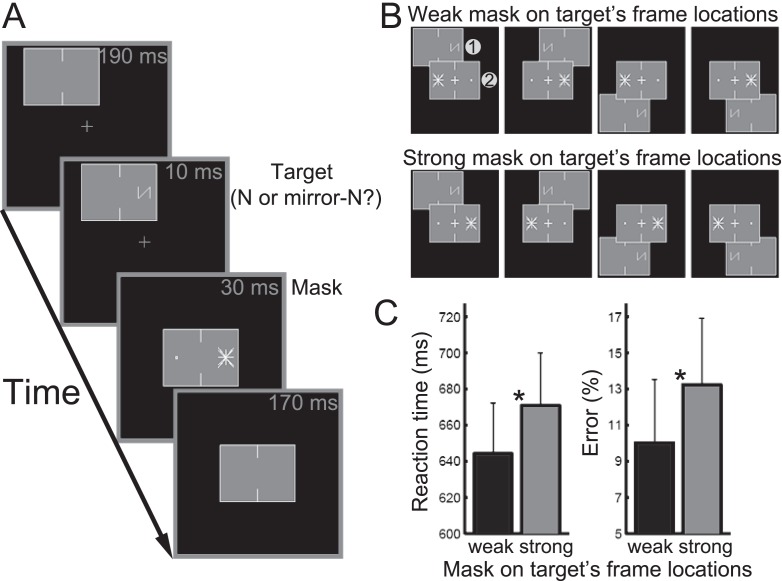

Figure 7.

Frame-centered masking in Experiment 3A. (A) Procedure: The first frame was presented either on the upper left, upper right, lower left or lower right corner for 200 ms, during the last 10 ms of which a target letter was presented either within the left or the right side of the display but always right above or below the fixation. Immediately after the first frame, the second frame was presented at the center for 200 ms, during the initial 30 ms of which two masks, one strong and one weak, were presented. The task was to indicate whether the target was N or mirror-N. (B) Sequential displays of the target and the masks: The mask on the same relative frame location as the target could be a weak one (first row) or a strong one (second row). (C) Results (n = 12): Responses were faster and more accurate when the target was followed by a weak mask than by a strong mask on the same relative frame location. Error bars: standard error of the mean. *Statistically significant difference.

Experiment 3B (Figure 8A) was similar to Experiment 3A, except that a visual search task was used. Each frame was enlarged (size: 8° × 4°; shift from the central fixation for the first frame: ±2.4° × ±4.8°). The search display consisted of four lines (center-to-center distance between lines: 1.6°; line length: 1.0°; line width: 0.08°; luminance: 60.2 cd/m²); on half of the trials, all lines were tilted 45° rightward from vertical, and on the other half, three were tilted 45° rightward and one was tilted 45° leftward. The search display was presented during the last 50 ms of the first frame. Two search-display-like masks were presented on the second frame (weak mask: a filled square of 0.08° × 0.08°; strong mask: a vertical line of 0.98° superimposed centrally with an X of 0.98° × 0.98°; line width: 0.08°; luminance: 80.2 cd/m²; center-to-center distance between masks: 2.4°). The mask display was presented during the first 50 ms of the second frame. Observers were asked to indicate, as accurately as possible, whether the search display contained a leftward-tilted bar.

Figure 8.

Frame-centered masking in Experiments 3B–3C. (A, C) Sample stimuli for Experiments 3B and 3C: A visual search detection task was used. The task was to detect whether the search display on the first frame (at one of four corners) contained an odd, left-tilted target bar. The mask on the second frame (centered on fixation) that occupied the same relative frame location as the search display could be weak or strong. (B, D) Results for Experiments 3B (n = 13) and 3C (n = 12): In both cases, perceptual sensitivity was higher when the target was followed by a weak mask than by a strong mask at the same frame-centered location, whereas response bias did not differ. Error bars: standard error of the mean. *Statistically significant difference.

In Experiment 3C (Figure 8C), the same search display and task were used as in Experiment 3B. The weak mask now consisted of four right triangles (leg length: 0.7°; line width: 0.08°) with their hypotenuses tilted 45° rightward; the strong mask now consisted of an X (0.98° × 0.98°) and a vertical bar (length: 0.98°) touching the left side of the X.
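Because Experiments 3B and 3C used a yes/no detection task (leftward-tilted bar present or absent), perceptual sensitivity and response bias can be separated with standard signal detection theory. The article does not state how these measures were computed, so the following is an assumed sketch: d′ = z(H) − z(F) and criterion c = −[z(H) + z(F)]/2, with a log-linear correction (adding 0.5 to hit and false-alarm counts and 1 to the trial counts) to avoid infinite z scores at rates of 0 or 1. The counts in the example are hypothetical.

```python
from statistics import NormalDist


def sdt_measures(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion_c) for a yes/no detection task.

    Uses a log-linear correction (an assumption; the article does not specify one)
    so that hit or false-alarm rates of 0 or 1 do not produce infinite z scores.
    """
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    d_prime = z(hit_rate) - z(fa_rate)
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))
    return d_prime, criterion


# Hypothetical counts for one observer per mask condition (48 present, 48 absent trials).
weak_mask = sdt_measures(hits=40, misses=8, false_alarms=10, correct_rejections=38)
strong_mask = sdt_measures(hits=32, misses=16, false_alarms=14, correct_rejections=34)
print(f"weak mask:   d' = {weak_mask[0]:.2f}, c = {weak_mask[1]:.2f}")
print(f"strong mask: d' = {strong_mask[0]:.2f}, c = {strong_mask[1]:.2f}")
```

A pattern in which d′ differs between mask conditions while c does not is what "sensitivity but not response bias" refers to in Figure 8.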

Results

Experiments 1A–1D: Frame-centered iconic memory

Experiment 1A: A frame-centered iconic memory effect

Letters were used in keeping with research on the sensory storage of iconic memory (Sperling, 1960). In a variant of the match-to-sample task, two sample letters were first presented on the left and right sides of fixation, and observers were asked to hold the letter identities in memory. After a brief delay, a target letter equidistant from the two sample letters was presented randomly above or below fixation (Figure 3A). The task was to indicate whether the target letter was one of the two sample letters (match or mismatch). Critically, although the target was always equidistant from the two sample letters, the target's relative frame location corresponded to either the left or the right sample letter, so that on match trials the two matched letters occupied either the same relative frame location or different frame locations (Figure 3B). There were thus three conditions: match-same, match-different, and mismatch. Although objects' locations were task irrelevant and uninformative, a repeated-measures ANOVA revealed that response times (RTs) differed significantly among these three conditions, F(2, 32) = 19.48, p < 0.001, ηp² = 0.549 (Figure 3C). Importantly, when the target matched one of the two sample letters, observers responded significantly faster when the matched letters occupied the same relative frame location than when they occupied different locations, t(16) = −5.67, p < 0.001, Cohen's d = −1.38, suggesting that objects' relative locations within their spatial frames were robustly encoded in iconic memory. The error rates showed the same trend, with fewer errors in the same-frame-location condition than in the different-frame-locations condition for matched letters, although this difference was only marginal, t(16) = −2.04, p = 0.058, d = −0.50.

These results demonstrate that, even though an object's location is irrelevant and uninformative for the task, the object's identity (e.g., shape) is bound to its reference frame and continuously updated in a frame-centered manner in iconic memory, revealing a frame-centered iconic memory effect.
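The effect sizes reported in the Results appear consistent with two standard conversions from test statistics: partial eta squared, ηp² = F·df_effect / (F·df_effect + df_error), and the paired-samples Cohen's d_z = t/√n. This is our inference from the numbers; the article does not state which formulas were used, and small discrepancies can arise because the reported t values are themselves rounded.

```python
import math


def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


def cohens_dz(t_value, n):
    """Cohen's d for paired differences (d_z), recovered from a paired t statistic."""
    return t_value / math.sqrt(n)


print(round(partial_eta_squared(19.48, 2, 32), 3))  # Experiment 1A ANOVA: 0.549
print(round(cohens_dz(-5.67, 17), 2))               # Experiment 1A match-same vs. match-different: -1.38
```

The same two functions reproduce the other effect sizes in this section, for example ηp² = 0.245 for F(1, 18) = 5.83 in Experiment 1B and ηp² = 0.352 for F(1, 16) = 8.71 in Experiment 2.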

Experiment 1B: The role of object competition and response bias

An alternative account of this frame-centered iconic memory effect is a response bias based on motion congruency between letters and frames: the matched letters at the same frame location moved in the same direction as the frame, whereas the matched letters at different frame locations did not. This explanation is unlikely because apparent motion directions tend to be unstable when two objects are followed by one object (Ullman, 1979). Nevertheless, to directly test this motion congruency account, we generated a clear letter apparent motion direction by presenting only one letter on the first frame, followed by another letter on the second frame. This design had the added benefit of allowing us to test whether encoding more than one object on the first frame was necessary for the frame-centered iconic memory effect (presenting more than one object, as in Experiment 1A, entails an intrinsic relation and order among the objects, which might encourage the visual system to bind objects to their corresponding locations in iconic memory, even when location information is task irrelevant).

The letters on the two frames either matched or mismatched, and observers indicated which. Importantly, the relative frame locations of the two letters were manipulated orthogonally, so that the letters could be at the same or different frame locations (Figure 4A). Both the frame-centered representation account and the motion congruency account predicted better performance when the matched letters (“same” identities) were at the same relative frame location than at different locations. However, the motion congruency account also predicted better performance when the mismatched letters (“different” identities) were at different relative frame locations than at the same frame location. A repeated-measures ANOVA on RTs revealed a significant interaction between these two factors, F(1, 18) = 5.83, p = 0.027, ηp² = 0.245 (Figure 4B). Consistent with both accounts, observers responded significantly faster when the matched letters occupied the same relative frame location than when they occupied different locations, t(18) = −3.46, p = 0.003, d = −0.79. However, for the mismatched letters, there was no difference between the same and different relative frame location conditions, t(18) = −0.07, p = 0.944, d = −0.02. This result is inconsistent with the motion congruency account and further supports the frame-centered representation account, extending the frame-centered iconic memory effect to displays without object competition. The error rates were consistent with the RTs, with fewer errors in the same-frame-location condition than in the different-frame-locations condition for matched letters, t(18) = −2.20, p = 0.041, d = −0.50, but not for mismatched letters, t(18) = 0.86, p = 0.399, d = 0.20.
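The logic of this test can be written down as a small prediction table. The sketch below codes each account's predicted sign of the same-location RT advantage for matched and mismatched letters and compares it with a coarse sign summary of the Experiment 1B RTs. Coding the null mismatch result as 0 is our simplification (a nonsignificant difference is treated as "no difference"), so this is an illustration of the reasoning, not a statistical test.

```python
# Predicted sign of the RT advantage for same- over different-frame-location pairs:
# +1 = same location faster, -1 = different location faster, 0 = no difference.
PREDICTIONS = {
    "frame-centered": {"match": +1, "mismatch": 0},
    "motion congruency": {"match": +1, "mismatch": -1},
}


def diagnostic_levels(predictions):
    """Identity levels at which the competing accounts predict different signs."""
    first, *rest = predictions.values()
    return [level for level in first if any(other[level] != first[level] for other in rest)]


def consistent_accounts(observed, predictions):
    """Accounts whose predicted sign pattern matches the observed one."""
    return [name for name, predicted in predictions.items() if predicted == observed]


# Coarse sign summary of the Experiment 1B RTs: a reliable same-location advantage
# for matched letters and no detectable difference for mismatched letters.
observed_1b = {"match": +1, "mismatch": 0}

print(diagnostic_levels(PREDICTIONS))                 # ['mismatch']
print(consistent_accounts(observed_1b, PREDICTIONS))  # ['frame-centered']
```

Only the mismatch trials discriminate between the two accounts, which is why the null result for mismatched letters carries the argument.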

Experiments 1C and 1D: The role of apparent motion

In Experiments 1A and 1B, apparent motion was used as a tool to facilitate perceptual continuity and uncover frame-centered object representation and updating. To what extent, then, do frame-centered representation and updating depend on motion mechanisms? To address this question, in Experiment 1C the apparent motion of the frames was disrupted by presenting the first frame continuously until the offset of the second frame (Figure 5A); in Experiment 1D, the apparent motion of the letters was also disrupted by presenting two letter Os during the target presentation (Figure 5C). Removing the apparent motion of the frames did not alter the basic pattern of the frame-centered memory effect, although the effect was weaker than with apparent motion in Experiment 1A (Figure 5B; same vs. different frame locations of the matched letters, RTs: t(12) = −3.42, p = 0.005, d = −0.95; error rates: t(12) = −0.94, p = 0.368, d = −0.26). Further removing the apparent motion of the letters weakened but did not abolish the frame-centered memory effect (Figure 5D; same vs. different frame locations of the matched letters, RTs: t(13) = −2.37, p = 0.034, d = −0.63; error rates: t(13) = −1.22, p = 0.242, d = −0.33). These results thus demonstrate that apparent motion, exploited as a tool to facilitate perceptual continuity, contributes to but is not necessary for frame-centered iconic memory.

Experiment 2: Frame-centered visual priming

The match-to-sample task (i.e., judging whether a later object is one of the earlier objects) used in Experiments 1A–1D, though popular in research on perceptual continuity (Kruschke & Fragassi, 1996), may induce rapid learning to allocate attention to a relative location within a frame (Kristjansson et al., 2001; Maljkovic & Nakayama, 1996; C. R. Olson & Gettner, 1995). To investigate the automaticity of frame-centered representations, we designed a new procedure without memory or attentional demands: two task-irrelevant, uninformative prime letters, b and p (in random order), were presented first along the horizontal meridian, followed by a target letter b, B, p, or P along the vertical meridian. The prime letters were within a frame centered on fixation; the target was within another frame presented at one of four corners. Observers were asked to categorize the target letter as b/B or p/P, regardless of case. Because both b and p were presented on the first frame and the potential targets b/B and p/P were presented equally often on the second frame, the prime letters provided no information for the task. Critically, although the target was equidistant from the two prime letters, its relative frame location corresponded to either the left or the right prime letter. Thus, the design had two factors (Figure 6B): target case (lowercase vs. uppercase) and the identity of the prime at the target's relative frame location (same vs. different). Uppercase targets were introduced to probe the feature specificity of frame-centered representation.
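The following sketch enumerates one hypothetical fully crossed set of Experiment 2 trial types (2 prime orders × 4 frame corners × 4 targets) to show how the two factors arise and why the primes are uninformative. The article does not describe its trial-generation code; the constants and `frame_matched_prime` helper are our own, and the helper assumes the target stays on the vertical meridian, so within its frame it occupies the side opposite to the frame's horizontal shift.

```python
from collections import Counter
from itertools import product

PRIME_ORDERS = (("b", "p"), ("p", "b"))                   # (left prime, right prime)
SHIFTS = [(sx, sy) for sx in (-1, 1) for sy in (-1, 1)]  # second-frame corner
TARGETS = ("b", "B", "p", "P")


def frame_matched_prime(prime_order, shift_x):
    """Prime sharing the target's relative frame location (hypothetical helper).

    Assumes the target sits on the vertical meridian, so it occupies the side of
    its frame opposite to the frame's horizontal shift.
    """
    left, right = prime_order
    return left if shift_x > 0 else right


cells = Counter()
for order, (shift_x, _shift_y), target in product(PRIME_ORDERS, SHIFTS, TARGETS):
    case = "lower" if target.islower() else "upper"
    same = frame_matched_prime(order, shift_x) == target.lower()
    cells[(case, "same" if same else "different")] += 1

print(dict(cells))
```

Each of the four case-by-identity cells receives the same number of trial types, and every target identity is preceded by both primes, so neither prime predicts the correct response.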

Level of representations during frame-centered priming

For all targets, RTs were consistently faster when the target and the same-identity prime occupied the same relative frame location than when they occupied different relative frame locations (Table 1), demonstrating a nonretinotopic, frame-centered priming effect. The frame-centered priming effect was evident not only for lowercase targets, t(16) = −7.76, p < 0.001, d = −1.88, but also for uppercase targets, t(16) = −3.81, p = 0.002, d = −0.92, revealing that the frame-centered representation underlying the priming effect retains a higher-level, more abstract component that survives case transformation (Figure 6C). A repeated-measures ANOVA on RTs further revealed a significant interaction between target case and identity across frames, F(1, 16) = 8.71, p = 0.009, ηp² = 0.352, indicating that case consistency (i.e., low-level visual featural overlap) also plays a substantial role in frame-centered priming (Figure 6C).

Table 1.

Descriptive statistics (mean reaction time [RT] and error rate, with standard error of the mean within parentheses) and inferential statistics (two-tailed t tests of the difference between “same” and “different” frame-centered identity conditions, i.e., frame-centered priming effect) in Experiment 2.

| Target | Measure | Same frame-centered identity | Different frame-centered identity | Frame-centered priming, t (two-tailed) | Frame-centered priming, p |
|---|---|---|---|---|---|
| b | RT (ms) | 601.5 (19.4) | 628.9 (18.2) | −4.18 | <0.001 |
| b | Error (%) | 4.2 (1.1) | 4.6 (1.4) | −0.22 | 0.83 |
| p | RT (ms) | 588.4 (16.4) | 633.2 (20.0) | −5.76 | <0.001 |
| p | Error (%) | 6.3 (1.4) | 7.1 (1.3) | −0.63 | 0.54 |
| B | RT (ms) | 594.9 (17.6) | 610.1 (17.2) | −3.13 | 0.006 |
| B | Error (%) | 3.3 (0.7) | 3.4 (0.9) | −0.05 | 0.96 |
| P | RT (ms) | 579.2 (16.4) | 608.9 (17.7) | −3.13 | 0.004 |
| P | Error (%) | 4.0 (1.1) | 5.6 (1.4) | −1.43 | 0.17 |

Because lowercase p and uppercase P are visually quite similar, we analyzed trials with target B separately to further assess whether low-level visual features alone can account for the frame-centered priming effect. In the letter pairs b-B and p-B, B is physically equidistant from b and p (i.e., the overlap in low-level visual features is identical for the two pairs), but conceptually B is more similar to b than to p (Lupyan, Thompson-Schill, & Swingley, 2010). Accounts based solely on low-level visual features would therefore predict similar performance whether target B shared its relative frame location with b or with p. Despite the matched visual similarity, RTs to target B were much faster when it shared its relative frame location with b than with p, t(16) = −3.13, p = 0.006, d = −0.76, further demonstrating that the frame-centered priming effect cannot be attributed solely to low-level visual features or motion analysis and must also involve higher level representations. No differences were found in error rates. Because the primes were task-irrelevant and uninformative, these results reveal a frame-centered priming effect that cannot readily be explained by working memory (Hollingworth, 2007; Jiang et al., 2000) or attentional learning (Barrett et al., 2003; Boi, Vergeer, et al., 2011; Umilta et al., 1995). In short, frame-centered priming reflects both low-level visual featural representation and high-level identity representation.

Experiments 3A–3C: Frame-centered backward masking

In Experiment 2, the prime objects were presented before the target object, so processes responsible for target categorization might have been activated in anticipation of the target and thus acted upon the prime objects. It therefore remains unclear whether such high-level anticipation processes are necessary for the frame-centered updating mechanism to operate. One way to address this issue is to present the target first, followed by two masking objects equidistant from the target, and to assess whether backward masking is determined by the relative frame-centered locations of the target and the two masks. The detrimental nature of backward masking also allows us to further test the relative automaticity of frame-centered representation and updating, as observers would have no incentive to integrate objects across frames and would be better off not doing so.

Several forms of backward masking have been characterized in the literature. Among them, metacontrast masking (wherein the mask surrounds but does not overlap the target) and object substitution masking (wherein the mask, composed of four dots, is presented around the target among several distractors and trails in the display after target offset) can be thought of as instances of inattentional blindness (Mack & Rock, 1998), as they depend on relatively higher level processes such as attention, perceptual grouping, and perceptual continuity (Breitmeyer, 1984; Lleras & Moore, 2003; Ramachandran & Cobb, 1995). We therefore used a traditional form of backward masking thought to tap into early vision (Breitmeyer, 1984). Unlike object substitution masking, which critically depends on target uncertainty and thus requires multiple items to be presented simultaneously with the target (Enns & Di Lollo, 1997; Jiang & Chun, 2001), our study presented the target alone, as in traditional backward masking.

Experiment 3A: A frame-centered backward masking effect

In Experiment 3A, a target letter, either N or mirror-N, was presented randomly above or below fixation, immediately followed by two masks, a strong one and a weak one, one on each side of fixation (Figure 7A). The task was to indicate whether the target letter was N or mirror-N, with emphasis on accuracy. Critically, although the target was equidistant from the two masks, its relative frame location corresponded to that of either the left or the right mask, depending on the location of the target frame (first frame). Thus, the target could occupy the same relative frame location as the strong mask or the weak mask (Figure 7B). Figure 7C shows that observers performed both significantly faster and more accurately when the target was followed by a weak mask than by a strong mask at the same relative frame location, RTs: t(11) = −2.54, p = 0.028, d = −0.73; error rates: t(11) = −2.80, p = 0.017, d = −0.81, revealing a nonretinotopic, frame-centered backward masking effect. These results demonstrate that, even when it is detrimental to do so, object representation is strongly and obligatorily coupled to its reference frame, with masking effects determined by the relative frame-centered locations of the target and the masks. Moreover, because targets were presented before the masks, the frame-centered masking effect demonstrates that frame-centered updating can operate without high-level anticipation processes and can be based on low-level, relatively automatic processes.

Experiment 3B: Perceptual sensitivity, not bias

How does frame-centered, nonretinotopic backward masking affect perceptual sensitivity and response bias? To address this, in Experiment 3B we employed a visual search detection task, with the signal (a left-tilted bar) present on half of the trials and absent on the other half (Figure 8A). Observers were asked to detect, as accurately as possible, whether the search display on the first frame (presented randomly at one of four corners) contained an odd, left-tilted bar. The search display was followed by two mask displays, one strong and one weak, on the second frame centered on fixation. The mask at the same relative frame location as the search display could be either the strong or the weak one, with mask strength determined by physical energy. Following signal detection theory (Green & Swets, 1966), perceptual sensitivity was measured by d′ = Z(HR) − Z(FAR), where HR and FAR are the hit and false alarm rates and Z(·) is the inverse of the standard normal cumulative distribution function, with higher d′ indicating better sensitivity; response bias was measured by c = −[Z(HR) + Z(FAR)]/2, with a smaller absolute c indicating a weaker bias toward either response. Observers' perceptual sensitivity was higher when the target was followed by a weak mask than by a strong mask at the same relative frame location, t(12) = 3.41, p = 0.005, d = 0.95, but response bias was not affected by the type of mask, t(12) = −0.51, p = 0.617, d = −0.14 (Figure 8B). These results thus indicate that frame-centered backward masking acts by modifying perceptual sensitivity rather than response bias.
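The two signal detection measures can be computed as in the following Python sketch, which uses the standard library's `statistics.NormalDist().inv_cdf` as Z(·). The log-linear correction for hit or false alarm rates of 0 or 1 (adding 0.5 to each count and 1 to each trial total) is an assumption, since the correction used in the study, if any, is not stated here; the counts in the example are hypothetical and are not data from Experiment 3B.

```python
from statistics import NormalDist
from typing import Tuple

_z = NormalDist().inv_cdf  # Z(.): inverse of the standard normal CDF


def loglinear_rates(hits: int, n_signal: int, false_alarms: int, n_noise: int) -> Tuple[float, float]:
    """Hit and false alarm rates with a log-linear correction (assumed, not from the study).

    The correction keeps both rates strictly between 0 and 1, where Z(.) is defined.
    """
    return (hits + 0.5) / (n_signal + 1), (false_alarms + 0.5) / (n_noise + 1)


def sdt_measures(hit_rate: float, fa_rate: float) -> Tuple[float, float]:
    """Return (d', c) with d' = Z(HR) - Z(FAR) and c = -[Z(HR) + Z(FAR)] / 2."""
    z_hr, z_far = _z(hit_rate), _z(fa_rate)
    return z_hr - z_far, -(z_hr + z_far) / 2


# Hypothetical counts for one observer in one mask condition.
hr, far = loglinear_rates(hits=40, n_signal=50, false_alarms=10, n_noise=50)
d_prime, c = sdt_measures(hr, far)
print(f"HR={hr:.3f} FAR={far:.3f} d'={d_prime:.2f} c={c:.2f}")
```

With this sign convention, a negative c indicates a liberal bias (a tendency to report the signal as present), a positive c a conservative bias, and c = 0 no bias.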

Experiment 3C: Form integration during frame-centered backward masking

The results of Experiment 3B prompted us to examine a more intriguing case of cross-frame integration: can form information be integrated across frames in a frame-centered fashion (Hayhoe, Lachter, & Feldman, 1991)? To test this, in Experiment 3C we extended the object superiority effect (Weisstein & Harris, 1974), in which adding a noninformative part to each search element can significantly enhance visual search performance, into a nonretinotopic form. In our configurations (Figure 8C), the search displays again consisted of tilted bars, which shared the same relative frame location with either four triangles or four knots. The triangles, if integrated with the search elements, would produce a traditional retinotopic object superiority effect; the knots, matched to the triangles in number of lines, would produce no object superiority effect if integrated. Thus, if form integration occurs, the four-triangle mask should act as a relatively weak mask and the four-knot mask as a strong mask; without form integration, the two masks should be equally (in)effective as nonretinotopic masks.

Strikingly, the pattern of results paralleled that of Experiment 3B (Figure 8D): observers' perceptual sensitivity was higher when the target was followed by four triangles (weak mask) than by four knots (strong mask), t(11) = 3.85, p = 0.003, d = 1.11, with response bias unaffected by the type of mask, t(12) = −0.08, p = 0.937, d = −0.02. These results indicate that certain form information can be integrated across frames in a frame-centered fashion (cf. a nonretinotopic form priming effect; Ogmen, Otto, & Herzog, 2006).

Discussion

Our study is the first to demonstrate robust and automatic frame-centered representation and updating in object perception. Although the effect of contextual frames is well documented in spatial representation (Burgess, 2006; Kerzel, Hommel, & Bekkering, 2001; Mou, McNamara, Valiquette, & Rump, 2004; Wang & Spelke, 2002; Witkin & Asch, 1948), such effects have typically been confined to spatial tasks, with seemingly no relevance to object perception (e.g., Zhou, Liu, Zhang, & Zhang, 2012). For instance, contextual frames can influence the coding of locations by biasing the egocentric midline, a phenomenon known as the induced Roelofs effect (Bridgeman et al., 1997; Dassonville & Bala, 2004; Dassonville, Bridgeman, Kaur Bala, Thiem, & Sampanes, 2004; Roelofs, 1935). Here, using a moving frame technique, we show that objects are robustly and automatically coupled to their reference frames through a frame-centered mechanism, resulting in nonretinotopic, frame-centered iconic memory, visual priming, and backward masking effects. Such a mechanism of frame-centered representation and integration is highly adaptive for perception, navigation, and action, enabling object information to be continuously updated relative to a reference frame as the frame moves. By tagging objects to their reference frame, frame-centered mechanisms underlie the perceptual continuity and individuation of objects when multiple objects are moving, which is vital for accurate actions toward moving objects (e.g., a sniper aiming at a driver emerging from behind a building benefits from a car-centered representation of the person). The mechanism may also be ideal for coping with the disruption of retinotopic representation by eye movements, in which case objects are updated with reference to the world.

It is a long-standing observation that perception and cognition are modulated by context. It is also intuitive that we can voluntarily direct attention to a remote, frame-centered location, e.g., when the cues on the initial frame are predictive of the target on the subsequent frame (Barrett et al., 2003; Boi, Vergeer, et al., 2011; Umilta et al., 1995),1 or learn to allocate attention to a relative location within a frame, e.g., when the target repeatedly appears at a fixed, frame-centered location (Kristjansson et al., 2001; Maljkovic & Nakayama, 1996; C. R. Olson & Gettner, 1995). Our finding of automatic frame-centered representation and integration, however, provides a striking demonstration that, in object representation, frame-centered effects can be incidental and automatic rather than voluntary, and that they can penetrate both relatively early processes such as backward masking and later processes such as visual priming and iconic memory. Such an automatic mechanism may underlie our ability to learn to direct attention toward a relative location within an object or a spatial configuration (Barrett et al., 2003; Kristjansson et al., 2001; Maljkovic & Nakayama, 1996; Miller, 1988; Umilta et al., 1995).

Spatial structure in perceptual continuity: An object cabinet framework

Previous research suggests that nonretinotopic information integration during motion can be object-based. For instance, perceiving an object is thought to create a temporary, episodic representation in an object file. Re-perceiving that object automatically retrieves its object file, so that perception is enhanced when all of the attributes match those in the original object file and impaired if some attributes have changed (Treisman, 1992). A similar mechanism has been suggested for lower level feature processing, such as color integration (Nishida, Watanabe, Kuriki, & Tokimoto, 2007; Watanabe & Nishida, 2007). What can be integrated in such an object-based manner remains unclear. Low-level features such as motion and color can be integrated through attention tracking in continuous apparent motion (Cavanagh, Holcombe, & Chou, 2008), and form and motion can be integrated across frames during Ternus-Pikler apparent motion (Boi et al., 2009), but tilt and motion aftereffects do not transfer across frames (Boi, Ogmen, & Herzog, 2011). Notwithstanding differences in the specific paradigms used, our results show that both low-level (e.g., orientation) and high-level (e.g., form) information can be integrated across frames. Most importantly, previous research on mobile updating has been limited to a fixed relative position and has thus been unable to reveal the effect of the reference frame. By directly manipulating relative frame locations (i.e., object/frame-based influences are controlled in the conditions compared, as the objects are on the same contextual frame but differ in their relative locations within the frame), our results reveal that such mobile integration relies on a frame-centered, location-specific mechanism. These results naturally lead to the metaphor of an object “cabinet,” by analogy to object “files,” in which objects (the files) are orderly coded in their spatial relations to the frame (the cabinet).
This object cabinet coding heuristic may be the foundation of scene perception, which involves coding the spatial and contextual relations among objects and their frames. A hierarchical file and cabinet system might thus underlie our ability to integrate multiple objects into a scene and help to keep track of moving objects, particularly when visual information is not readily available (e.g., due to occlusion).
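As a toy illustration of the cabinet metaphor (not a model proposed in this article), the Python sketch below files each object under its position relative to the frame. When the frame moves, only the cabinet's position is updated, so a later object is matched to whichever file occupies the same frame-relative slot rather than the same retinal position. The class name, the integer grid, and the letter contents are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Point = Tuple[int, int]  # integer grid positions keep dictionary lookups exact


@dataclass
class ObjectCabinet:
    """Toy illustration of the cabinet metaphor: files are indexed by frame-relative slot."""

    frame_center: Point
    files: Dict[Point, str] = field(default_factory=dict)

    def _relative(self, retinal_pos: Point) -> Point:
        return (retinal_pos[0] - self.frame_center[0], retinal_pos[1] - self.frame_center[1])

    def store(self, retinal_pos: Point, identity: str) -> None:
        """File an object under its position relative to the frame."""
        self.files[self._relative(retinal_pos)] = identity

    def move_frame(self, new_center: Point) -> None:
        """Only the cabinet moves; every file keeps its frame-relative slot."""
        self.frame_center = new_center

    def retrieve(self, retinal_pos: Point) -> Optional[str]:
        """Look up the file at the frame-relative slot of a retinal position."""
        return self.files.get(self._relative(retinal_pos))


cabinet = ObjectCabinet(frame_center=(0, 0))
cabinet.store((-1, 0), "b")
cabinet.store((1, 0), "p")
cabinet.move_frame((-1, 1))       # the frame jumps to the upper left
print(cabinet.retrieve((0, 1)))   # prints p: same frame-relative slot as the right-hand file
print(cabinet.retrieve((1, 0)))   # prints None: the old retinal position no longer indexes "p"
```

Indexing by frame-relative slot means that updating after a frame movement costs a single change to the cabinet's position, which mirrors the idea that the files themselves need not be rewritten.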

This object cabinet framework is not necessarily limited to integration across motion and can be seen as an extension of earlier concepts in memory research, including schemata (Biederman, Mezzanotte, & Rabinowitz, 1982; Hock, Romanski, Galie, & Williams, 1978; Mandler & Johnson, 1976), scripts (Schank, 1975), and frames (Minsky, 1975), wherein objects and their contextual information are bound in representation. For example, according to Minsky, encountering a new situation evokes the retrieval of a particular structure from memory, called a frame, so that the old frame can be adapted to accommodate new information. However, unlike frames, which are conceptualized as representations of stereotyped situations (e.g., a living room, a birthday party), our object cabinet concept is more general: it includes not only familiar situations but also novel objects and novel situations, and it constrains not only higher level memory processes but also lower level visual perception. The object cabinet framework thus has important implications for both memory and perception. For instance, nonretinotopic integration across successive, moving frames (transframe integration) is functionally similar to integration across retinal locations during eye movements (transsaccadic integration) (Irwin, 1996; Melcher & Colby, 2008). It is possible that a similar frame-centered representation could account for transsaccadic integration, such that information is integrated across eye movements in a frame-centered, location-specific manner. Thus, the frame-centered mechanism can cope with changes in retinotopic representation induced by object movements by updating the object's representation with reference to the frame, and it may similarly cope with changes in retinotopic representation induced by eye movements by updating objects with reference to the world.
Such a nonretinotopic frame-centered updating mechanism would be advantageous for coping with ubiquitous object movements and eye movements and thus holds promise as a parsimonious account for maintaining visual stability in everyday vision and action.

Motion-based grouping and a mobile spatiotopic representation: A “frametopic” representation?

Maintaining perceptual continuity is fundamental to our coherent perception of the dynamic world. An important tool in the kit is grouping based on motion. Motion-based grouping affects a range of visual processes, suggesting that visual processing follows the perceived motion. For instance, in metacontrast masking, wherein the mask surrounds but does not overlap the target, masking is considerably reduced when the flanking masks are perceived to participate in apparent motion with features in the preceding frame (Ramachandran & Cobb, 1995). Similarly, motion-based grouping affects object substitution masking (OSM), wherein the mask, composed of four dots, is presented around the target among several distractors and trails in the display after target offset: even when the target display is presented without any mask, OSM persists if the target display is perceived to participate in apparent motion with features (e.g., dots) in a subsequent frame (Lleras & Moore, 2003).

This idea that visual processing may follow the perceived motion has also been fruitfully applied to Ternus-Pikler displays to test nonretinotopic processing (Boi et al., 2009; Ogmen et al., 2006). In a typical Ternus-Pikler display, a first frame of three elements is followed, after an interval, by a second frame containing a spatially shifted version of the first frame's elements. When the interval is short, the leftmost element in the first frame is perceived to move to the rightmost element in the second frame while the two central elements remain stationary (“element motion”); when the interval is long, the three elements in the first frame are perceived as moving as a group to the corresponding elements in the second frame (“group motion”). Ogmen et al. (2006) reported that the percept of the second element in the second frame is strongly biased by the second element in the first frame only when group motion is perceived, demonstrating the important role of motion-based grouping in nonretinotopic processing. This logic of contrasting perception in group motion against that in element motion (or in “no motion,” when the first element in the first frame and the third element in the second frame are omitted) has further been proposed as a “litmus” test for teasing apart retinotopic and nonretinotopic processing: nonretinotopic processing is revealed if accuracy is better in the group motion condition than in the no motion condition (Boi et al., 2009).
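The correspondence structure that the litmus test exploits can be written down explicitly. In the Python sketch below, frame 1 occupies positions 0 to n − 1 and frame 2 the same positions shifted by one; the percept (element or group motion) is supplied as an input rather than derived from the interval, because the interval boundaries are not specified here. The function name and the position indexing are illustrative assumptions.

```python
from typing import Dict


def ternus_correspondence(percept: str, n: int = 3) -> Dict[int, int]:
    """Map each frame-2 position to the frame-1 position it is perceived to come from.

    Frame 1 occupies positions 0..n-1; frame 2 is the same array shifted by one
    position, occupying 1..n.
    """
    frame1 = list(range(n))
    frame2 = list(range(1, n + 1))
    if percept == "group":
        # The whole array moves: the i-th element of frame 2 continues the i-th of frame 1.
        return {p2: p1 for p1, p2 in zip(frame1, frame2)}
    if percept == "element":
        # Overlapping positions are seen as stationary; the leftmost element of
        # frame 1 jumps to the rightmost position of frame 2.
        mapping = {p: p for p in frame2 if p in frame1}
        mapping[frame2[-1]] = frame1[0]
        return mapping
    raise ValueError("percept must be 'group' or 'element'")


for percept in ("element", "group"):
    print(percept, ternus_correspondence(percept))
```

Under group motion, the second element of frame 2 (position 2) is mapped to the second element of frame 1 (position 1), a frame-relative correspondence; under element motion, it is mapped to the element at the same retinal position (position 2), which is the third element of frame 1.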

The current series of experiments supports, from a new angle, the general notion that motion-based grouping plays an important role in visual processing. Our experiments go beyond asking whether a phenomenon occurs retinotopically or nonretinotopically, a question that can be addressed, for example, by the litmus method of contrasting group motion against element motion or no motion (Boi et al., 2009; Ogmen et al., 2006). Rather, our core motivation is to understand the spatial structure in visual representation and computation by asking whether a process is automatically constrained by contextual frames through a frame-centered mechanism. We isolate frame-centered effects by manipulating the object's relative location within its frame while controlling for object/frame-based and space-based effects in a simple, one-to-one apparent motion configuration (see, e.g., Figure 3A). This procedure is thus distinct from the litmus method with Ternus-Pikler displays: omitting the surrounding elements of a Ternus-Pikler display to obtain a one-to-one apparent motion configuration would make it impossible to differentiate the group motion condition from the element motion condition, rendering that method inapplicable.

Our finding of automatic frame-centered representation and updating might be described as a frametopic representation, that is, a mobile spatiotopic representation established through motion-based grouping. Traditionally, spatiotopic representations are anchored in stable external world coordinates (Burr & Morrone, 2011; Duhamel et al., 1992). If one replaces the “world” with a contextual frame and treats a static frame as a special case of a moving frame, then the frame-centered representation is essentially a mobile spatiotopic representation, which could equally be called a frametopic representation.

Neural mechanisms of frame-centered representation and integration

In object perception, it has been documented that features and parts are encoded with reference to the object under explicit task requirements (Marr & Nishihara, 1978; Olshausen, Anderson, & Van Essen, 1993). Single-unit recordings in monkeys, for example, show that some neurons in V4 are tuned to contour elements at specific locations relative to a larger shape (Pasupathy & Connor, 2001), and that, in a delayed sample-to-target saccade task, some neurons in the supplementary eye field are selective for a particular side of an object independent of the object's retinal location (C. R. Olson & Gettner, 1995). These findings in monkeys are consistent with observations from hemineglect patients (who are impaired in orienting spatial attention to information on the side contralateral to the brain lesion): these patients neglect the contralesional side of an object regardless of its position in egocentric coordinates (Driver, Baylis, Goodrich, & Rafal, 1994; Tipper & Behrmann, 1996). These findings thus suggest that voluntary attention enables objects to be encoded relative to the reference frame (C. R. Olson, 2003). Our results extend these findings by showing that object representation is obligatorily and robustly coupled to its reference frame, independent of eye movements and task requirements, and that when the frame moves, such frame-centered representation can be continuously updated through a frame-centered, location-specific mechanism.

What are the neural mechanisms for the relatively automatic frame-centered representation and integration revealed in iconic memory, visual priming, and backward masking? Recent modeling work on motion integration using the litmus Ternus-Pikler displays suggests that nonretinotopic motion integration as observed by Boi et al. (2009) may be achieved through basic motion filters oriented in space–time in the early visual cortex (Pooresmaeili, Cicchini, Morrone, & Burr, 2012). However, the automatic frame-centered representation and integration revealed here is unlikely to be explained by such basic motion filters alone and may instead be accounted for by a combination of motion (e.g., V1 and MT), object (e.g., later ventral cortex), and space (e.g., parietal and prefrontal cortex) mechanisms. First, although frame-centered representation and integration during iconic memory is strengthened by apparent motion, frame-centered iconic memory does not necessarily depend on motion. Second, the frame-centered priming effect is stronger when the letter case is consistent across frames than when the cases differ, suggesting the involvement of feature representation; at the same time, the frame-centered priming effect is evident even when feature similarity is controlled and the conceptual category is manipulated, suggesting the involvement of conceptual, letter identity representation. Finally, backward masking as used in our study is typically thought to arise from interference at a lower level than object substitution masking (Enns & Di Lollo, 2000), such as at V1 and V2 (Breitmeyer, 1984), suggesting that early neural mechanisms are involved in frame-centered backward masking. At the same time, it is possible that mechanisms for motion correspondence of frames act upon target identification before the target discrimination process is fully completed, suggesting an interactive loop of motion correspondence and target identification.
Thus, frame-centered representation and integration may reflect the involvement of both early and late mechanisms important for the analyses of motion, object, and space.

The neural mechanisms for automatic frame-centered representation and integration may be closely related to those in object and space integration. On the one hand, frame-centered representation and integration provides a new perspective on object and space integration. Although object identity information is relatively invariant, space information is intrinsically relative and must be specified in a given coordinate system. This frame-sensitive nature of space coding has been surprisingly overlooked in previous research on the neural mechanisms of identity and space integration (Cichy, Chen, & Haynes, 2011; Rao, Rainer, & Miller, 1997; Schwarzlose, Swisher, Dang, & Kanwisher, 2008; Voytek, Soltani, Pickard, Kishiyama, & Knight, 2012), in which space was defined only in retinotopic coordinates. Retinotopic coordinates, though widespread in the early visual system, are insufficient because of the ubiquitous eye movements and object movements in natural vision. Our finding of automatic frame-centered representation reveals the context-sensitive nature of identity and space integration, underscoring the importance of understanding object and space integration in a dynamic environment. On the other hand, studies of object and space integration have revealed an important contribution from the prefrontal cortex (Rao et al., 1997; Voytek et al., 2012), raising the possibility that this prefrontal mechanism works with motion mechanisms (such as V1 and MT) to maintain a dynamic frame-centered object representation.

Conclusions and future directions

Contextual frames have been previously shown to bias egocentric midline and consequently spatial localization. Using iconic memory, visual priming, and backward masking, here we demonstrate that objects are robustly and automatically represented and updated in reference to the contextual frames through a frame-centered mechanism. Automatically anchoring and updating object representations in reference to contextual frames may be viewed as frametopic representation—in the same sense as objects are anchored in the external world in spatiotopic representation. Such an automatic frame-centered representation and updating mechanism in object perception leads to the metaphor of an object cabinet: objects (the files) within the contextual frame (the cabinet) are orderly coded in their relative spatial relations to the frame. Future research should examine the implications of frame-centered representation and updating for other processes such as spatial perspective taking, visual tagging, and attentional selection and access.

Acknowledgments

ZL conceived, designed, and conducted the research; ZL and SH wrote the manuscript. This work was supported by National Institutes of Health (NIH EY015261 and T32EB008389) and National Science Foundation (NSF BCS-0818588). We thank A Holcombe, YV Jiang, and M Herzog for helpful comments.

Commercial relationships: none.

Corresponding authors: Jun Saiki; Hayaki Banno.

Email: saiki@cv.jinkan.kyoto-u.ac.jp; banno@cv.jinkan.kyoto-u.ac.jp

Address: Graduate School of Human and Environmental Studies, Kyoto University, Kyoto, Japan.

Footnotes

Note that, although the Boi, Vergeer, et al. (2011) study claims to find evidence for nonretinotopic exogenous attention, the findings actually reflect the effect of voluntary attention. This is because demonstrating exogenous attention requires that the cues be uninformative about target positions (cf. Folk et al., 1992), yet the cues in their Experiments 1, 2, 3, and 5 predicted the target location, and the cues in their Experiment 4, while not predicting the target location within the frame, predicted the location of the target frame (i.e., the target frame was always on the side opposite the cue frame). Another limitation that renders their Experiment 4 invalid is that all three subjects tested had participated in Experiment 1, where cues predicted the target location; in other words, after learning the cue-target relationship in Experiment 1, subjects could well have developed a tendency to use the cues in Experiment 4 even when the cues became uninformative of the target location.

Contributor Information

Zhicheng Lin, Email: zhichenglin@gmail.com.

Sheng He, Email: sheng@umn.edu.

References

- Bar M. (2004). Visual objects in context. Nature Reviews Neuroscience , 5 (8), 617–629 [DOI] [PubMed] [Google Scholar]

- Barrett D. J., Bradshaw M. F., Rose D. (2003). Endogenous shifts of covert attention operate within multiple coordinate frames: Evidence from a feature-priming task. Perception , 32 (1), 41–52 [DOI] [PubMed] [Google Scholar]

- Biederman I., Mezzanotte R. J., Rabinowitz J. C. (1982). Scene perception: Detecting and judging objects undergoing relational violations. Cognitive Psychology , 14 (2), 143–177 [DOI] [PubMed] [Google Scholar]

- Boi M., Ogmen H., Herzog M. H. (2011). Motion and tilt aftereffects occur largely in retinal, not in object, coordinates in the Ternus-Pikler display. Journal of Vision , 11 (3):7, 1–11, http://www.journalofvision.org/content/11/3/7, doi:10.1167/11.3.7. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boi M., Ogmen H., Krummenacher J., Otto T. U., Herzog M. H. (2009). A (fascinating) litmus test for human retino- vs. non-retinotopic processing. Journal of Vision, 9 (13):5, 1–11, http://www.journalofvision.org/content/9/13/5, doi:10.1167/9.13.5. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Boi M., Vergeer M., Ogmen H., Herzog M. H. (2011). Nonretinotopic exogenous attention. Current Biology , 21 (20), 1732–1737 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brainard D. H. (1997). The pyschophysics toolbox. Spatial Vision , 10 , 433–4369176952 [Google Scholar]

- Breitmeyer B. G. (1984). Visual masking: An integrative approach. New York: Oxford University Press [Google Scholar]

- Bridgeman B., Peery S., Anand S. (1997). Interaction of cognitive and sensorimotor maps of visual space. Perception and Psychophysics , 59 (3), 456–469 [DOI] [PubMed] [Google Scholar]

- Burgess N. (2006). Spatial memory: How egocentric and allocentric combine. Trends in Cognitive Sciences , 10 (12), 551–557 [DOI] [PubMed] [Google Scholar]

- Burr D. C., Morrone M. C. (2011). Spatiotopic coding and remapping in humans. Philosophical Transactions of the Royal Society of London . Series B, Biological Sciences , 366 (1564), 504–515 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cavanagh P., Holcombe A. O., Chou W. (2008). Mobile computation: Spatiotemporal integration of the properties of objects in motion. Journal of Vision , 8 (12):1, 1–23, http://www.journalofvision.org/content/8/12/1, doi:10.1167/8.12.1. [PubMed] [Article] [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cichy R. M., Chen Y., Haynes J. D. (2011). Encoding the identity and location of objects in human LOC. Neuroimage , 54 (3), 2297–2307 [DOI] [PubMed] [Google Scholar]

- Dassonville P., Bala J. K. (2004). Perception, action, and Roelofs effect: A mere illusion of dissociation. PLoS Biol , 2 (11), e364. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dassonville P., Bridgeman B., Kaur Bala J., Thiem P., Sampanes A. (2004). The induced Roelofs effect: Two visual systems or the shift of a single reference frame? Vision Research , 44 (6), 603–611 [DOI] [PubMed] [Google Scholar]

- Driver J., Baylis G. C., Goodrich S. J., Rafal R. D. (1994). Axis-based neglect of visual shapes. Neuropsychologia, 32(11), 1353–1365.

- Duhamel J. R., Colby C. L., Goldberg M. E. (1992). The updating of the representation of visual space in parietal cortex by intended eye movements. Science, 255(5040), 90–92.

- Enns J. T., Di Lollo V. (1997). Object substitution: A new form of masking in unattended visual locations. Psychological Science, 8(2), 135–139.

- Enns J. T., Di Lollo V. (2000). What's new in visual masking? Trends in Cognitive Sciences, 4(9), 345–352.

- Folk C. L., Remington R. W., Johnston J. C. (1992). Involuntary covert orienting is contingent on attentional control settings. Journal of Experimental Psychology: Human Perception and Performance, 18, 1030–1044.

- Green D. M., Swets J. A. (1966). Signal detection theory and psychophysics. New York: Wiley.

- Guzman-Martinez E., Leung P., Franconeri S., Grabowecky M., Suzuki S. (2009). Rapid eye-fixation training without eyetracking. Psychonomic Bulletin & Review, 16(3), 491–496.

- Hayhoe M., Lachter J., Feldman J. (1991). Integration of form across saccadic eye movements. Perception, 20(3), 393–402.

- Hock H. S., Romanski L., Galie A., Williams C. S. (1978). Real-world schemata and scene recognition in adults and children. Memory & Cognition, 6(4), 423–431.

- Hollingworth A. (2007). Object-position binding in visual memory for natural scenes and object arrays. Journal of Experimental Psychology: Human Perception and Performance, 33(1), 31–47.

- Hollingworth A., Rasmussen I. P. (2010). Binding objects to locations: The relationship between object files and visual working memory. Journal of Experimental Psychology: Human Perception and Performance, 36(3), 543–564.

- Irwin D. E. (1996). Integrating information across saccadic eye movements. Current Directions in Psychological Science, 5, 94–100.

- Jiang Y., Chun M. M. (2001). Asymmetric object substitution masking. Journal of Experimental Psychology: Human Perception and Performance, 27(4), 895–918.

- Jiang Y., Olson I. R., Chun M. M. (2000). Organization of visual short-term memory. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26(3), 683–702.

- Kerzel D., Hommel B., Bekkering H. (2001). A Simon effect induced by induced motion and location: Evidence for a direct linkage of cognitive and motor maps. Perception & Psychophysics, 63(5), 862–874.

- Kristjansson A., Mackeben M., Nakayama K. (2001). Rapid, object-based learning in the deployment of transient attention. Perception, 30(11), 1375–1387.

- Kruschke J. K., Fragassi M. M. (1996). The perception of causality: Feature binding in interacting objects. In Proceedings of the Eighteenth Annual Conference of the Cognitive Science Society (pp. 441–446). Hillsdale, NJ: Erlbaum.

- Lleras A., Moore C. M. (2003). When the target becomes the mask: Using apparent motion to isolate the object-level component of object substitution masking. Journal of Experimental Psychology: Human Perception and Performance, 29(1), 106–120.

- Lupyan G., Thompson-Schill S. L., Swingley D. (2010). Conceptual penetration of visual processing. Psychological Science, 21(5), 682–691.

- Mack A., Rock I. (1998). Inattentional blindness. Cambridge, MA: MIT Press.

- Maljkovic V., Nakayama K. (1996). Priming of pop-out: II. The role of position. Perception & Psychophysics, 58(7), 977–991.

- Mandler J. M., Johnson N. S. (1976). Some of the thousand words a picture is worth. Journal of Experimental Psychology: Human Learning and Memory, 2(5), 529–540.

- Marr D., Nishihara H. K. (1978). Representation and recognition of the spatial organization of three-dimensional shapes. Proceedings of the Royal Society of London. Series B: Biological Sciences, 200(1140), 269–294.

- Melcher D., Colby C. L. (2008). Trans-saccadic perception. Trends in Cognitive Sciences, 12(12), 466–473.

- Miller J. (1988). Components of the location probability effect in visual search tasks. Journal of Experimental Psychology: Human Perception and Performance, 14(3), 453–471.

- Minsky M. (1975). A framework for representing knowledge. In Winston P. (Ed.), The psychology of computer vision (pp. 211–277). New York: McGraw-Hill.

- Mou W., McNamara T. P., Valiquette C. M., Rump B. (2004). Allocentric and egocentric updating of spatial memories. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(1), 142–157.

- Nishida S., Watanabe J., Kuriki I., Tokimoto T. (2007). Human visual system integrates color signals along a motion trajectory. Current Biology, 17(4), 366–372.

- Ogmen H., Otto T. U., Herzog M. H. (2006). Perceptual grouping induces non-retinotopic feature attribution in human vision. Vision Research, 46(19), 3234–3242.

- Oliva A., Torralba A. (2007). The role of context in object recognition. Trends in Cognitive Sciences, 11(12), 520–527.

- Olshausen B. A., Anderson C. H., Van Essen D. C. (1993). A neurobiological model of visual attention and invariant pattern recognition based on dynamic routing of information. Journal of Neuroscience, 13(11), 4700–4719.

- Olson C. R. (2003). Brain representation of object-centered space in monkeys and humans. Annual Review of Neuroscience, 26, 331–354.

- Olson C. R., Gettner S. N. (1995). Object-centered direction selectivity in the macaque supplementary eye field. Science, 269(5226), 985–988.

- Olson I. R., Marshuetz C. (2005). Remembering “what” brings along “where” in visual working memory. Perception & Psychophysics, 67(2), 185–194.

- Pasupathy A., Connor C. E. (2001). Shape representation in area V4: Position-specific tuning for boundary conformation. Journal of Neurophysiology, 86(5), 2505–2519.

- Pelli D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10, 437–442.

- Pooresmaeili A., Cicchini G. M., Morrone M. C., Burr D. (2012). “Non-retinotopic processing” in Ternus motion displays modeled by spatiotemporal filters. Journal of Vision, 12(1):10, 1–15, http://www.journalofvision.org/content/12/1/10, doi:10.1167/12.1.10.

- Ramachandran V. S., Cobb S. (1995). Visual attention modulates metacontrast masking. Nature, 373(6509), 66–68.

- Rao S. C., Rainer G., Miller E. K. (1997). Integration of what and where in the primate prefrontal cortex. Science, 276(5313), 821–824.

- Roelofs C. (1935). Optische Localisation. Archiv für Augenheilkunde, 109, 395–415.

- Schank R. C. (1975). Conceptual information processing. New York: Elsevier.

- Schwarzlose R. F., Swisher J. D., Dang S., Kanwisher N. (2008). The distribution of category and location information across object-selective regions in human visual cortex. Proceedings of the National Academy of Sciences of the United States of America, 105(11), 4447–4452.

- Sperling G. (1960). The information available in brief visual presentations. Psychological Monographs: General and Applied, 74, 1–29.

- Tipper S. P., Behrmann M. (1996). Object-centered not scene-based visual neglect. Journal of Experimental Psychology: Human Perception and Performance, 22(5), 1261–1278.

- Treisman A. (1992). Perceiving and re-perceiving objects. American Psychologist, 47(7), 862–875.

- Ullman S. (1979). The interpretation of visual motion. Cambridge, MA: MIT Press.

- Umilta C., Castiello U., Fontana M., Vestri A. (1995). Object-centred orienting of attention. Visual Cognition, 2(2/3), 165–181.

- Ungerleider L. G., Mishkin M. (1982). Two cortical visual systems. In Ingle D. J., Goodale M. A., Mansfield R. J. W. (Eds.), Analysis of Visual Behavior (pp. 549–586). Cambridge, MA: MIT Press.

- Voytek B., Soltani M., Pickard N., Kishiyama M. M., Knight R. T. (2012). Prefrontal cortex lesions impair object-spatial integration. PLoS ONE, 7(4), e34937.

- Wang R., Spelke E. (2002). Human spatial representation: Insights from animals. Trends in Cognitive Sciences, 6(9), 376.

- Watanabe J., Nishida S. (2007). Veridical perception of moving colors by trajectory integration of input signals. Journal of Vision, 7(11):3, 1–16, http://www.journalofvision.org/content/7/11/3, doi:10.1167/7.11.3.

- Weisstein N., Harris C. S. (1974). Visual detection of line segments: An object-superiority effect. Science, 186(4165), 752–755.

- Witkin H. A., Asch S. E. (1948). Studies in space orientation: Further experiments on perception of the upright with displaced visual fields. Journal of Experimental Psychology, 38(6), 762–782.

- Wurtz R. H. (2008). Neuronal mechanisms of visual stability. Vision Research, 48(20), 2070–2089.

- Zhou Y., Liu Y., Zhang W., Zhang M. (2012). Asymmetric influence of egocentric representation onto allocentric perception. Journal of Neuroscience, 32(24), 8354–8360.