Abstract

Stimuli of high emotional significance, such as social threat cues, are preferentially processed in the human brain. However, there is an ongoing debate as to whether these stimuli capture attention automatically and weaken the processing of concurrent stimuli in the visual field. This study examined continuous fluctuations of electrocortical facilitation during competition between two spatially separated facial expressions in high and low socially anxious individuals. Two facial expressions were flickered for 3000 ms at different frequencies (14 Hz and 17.5 Hz) to separate the electrocortical signals evoked by the competing stimuli (“frequency tagging”). Compared to happy and neutral expressions, angry faces were associated with greater electrocortical facilitation over visual areas only in the high socially anxious individuals. This finding was independent of the respective competing stimulus. Heightened electrocortical engagement in socially anxious participants was present in the first second of stimulus viewing and was sustained for the entire presentation period. These results, based on a continuous measure of attentional resource allocation, support the view that stimuli of high personal significance are associated with early and sustained prioritized sensory processing. These cues, however, do not interfere with the electrocortical processing of a spatially separated concurrent face, suggesting that they are effective at capturing attention but are weak competitors for resources.

Keywords: spatial attention, competition, steady-state visual evoked potentials, facial expressions, social threat stimuli

Introduction

Strong emotional stimuli have been shown to attract attention involuntarily, enabling prioritized responses to survival-relevant information. This has been described as motivated attention (Lang, Bradley, & Cuthbert, 1997), which is accompanied by facilitated sensory processing (Keil, Moratti, Sabatinelli, Bradley, & Lang, 2005; Lang et al., 1998). Preferential processing in visual cortex has been reported for stimuli bearing high emotional significance for the observer, such as highly arousing emotional scenes (Bradley et al., 2003) or phobic stimuli (Öhman & Mineka, 2001). Sensory facilitation for emotional compared to neutral stimuli is in line with contemporary models of selective attention. For instance, the biased competition model of attention (Desimone, 1998) posits that stimuli compete for sensory processing resources and the allocation of resources to one object occurs at the expense of a concurrent object. Thus, the emotional significance of a given stimulus may result in a bias signal in favor of this stimulus, at the cost of non-emotional concurrent stimuli. Accordingly, recent research has discussed an extensive attentional bias towards phobic information, even amidst complex arrays, as a potential mechanism to account for the hyper-vigilance seen in some anxiety disorders (for a review, see Bar-Haim, Lamy, Pergamin, Bakermans-Kranenburg, & van Ijzendoorn, 2007).

For instance, angry faces have been hypothesized to have privileged access to the human fear system (Öhman, 1986). Moreover, because they directly signal strong social disapproval, angry facial expressions have been conceptualized as particularly effective triggers of disorder-relevant threat in social anxiety. A two-stage model of information processing (Mogg, Bradley, De Bono, & Painter, 1997) has been offered to characterize the nature of attentional biases to emotional social cues in social anxiety: anxious individuals are hypervigilant to threatening information in the initial stage of processing and avoid such information during a later stage (vigilance–avoidance hypothesis). In terms of the biased competition model of selective attention, threat and neutral faces may compete such that angry expressions dominate, potentially due to re-entrant bias signals originating from deep structures such as the amygdala (Evans et al., 2008; Phan, Fitzgerald, Nathan, & Tancer, 2006; Stein, Goldin, Sareen, Zorrilla, & Brown, 2002; Straube, Mentzel, & Miltner, 2005), and thus evoke heightened neural mass activity in visual cortex. As a simultaneous consequence, the response to concurrent non-threatening facial expressions should be reduced.

Attentional bias in social anxiety

Most evidence in favor of the theory of attention biases to threatening material in anxiety disorders comes from behavioral spatial attention paradigms such as the modified dot-probe task. In that task, faster reaction times to a probe replacing threat cues (e.g., angry faces, pictures of spiders) than to a probe replacing non-threat cues are typically taken to indicate automatic attention capture by threatening stimuli (Bar-Haim et al., 2007). An early attentional bias in socially anxious individuals, both sub-clinical and clinical, toward angry facial expressions was observed in several studies using dot-probe paradigms with two competing stimuli, i.e., stimulus pairs (Bradley, Mogg, Falla, & Hamilton, 1998; Bradley, Mogg, & Millar, 2000; Mogg & Bradley, 2002). In contrast, there are reports of an initial avoidance of threatening facial expressions in sub-clinical socially anxious samples (Mansell, Clark, Ehlers, & Chen, 1999) as well as in social phobia patients (Chen, Ehlers, Clark, & Mansell, 2002; but see Sposari & Rapee, 2007). Taken together, these data suggest that vigilance for threatening faces in socially anxious persons occurs especially in situations with concurrent facial stimuli (i.e., the simultaneous presentation of two facial expressions). High socially anxious participants seem to initially avoid the critical facial expression if there is an unthreatening alternative, non-facial, object to look at (Chen et al., 2002; Mansell et al., 1999). However, concerns have been raised in terms of the validity of this paradigm to detect attentional biases. First, response times to visual probes provide a snapshot view of attention allocation, specifically for the time range in which the probe replaces the cue. 
Second, the task makes it difficult to distinguish between (i) effects indicating vigilance towards the stimulus as opposed to (ii) impaired disengagement of attention from threat, which both may underlie the observed response time differences (Mogg et al., 1997). Third, as most theories of attentional bias in anxiety disorders make different assumptions regarding early and late processing stages of threatening stimuli, a more detailed account of the temporal dynamics of the attentional bias, and as a consequence, a continuous measure of attentive processing is warranted. In addition to these methodological issues it has recently been suggested that dysfunctional engagement and disengagement are differentially related to fear and anxiety and that neurophysiological methods are required to disentangle these processes (Koster, Crombez, Verschuere, & De Houwer, 2004). To account for these issues, several studies employed eye tracking to assess the effects of social anxiety on attentional processes during viewing of competing facial expressions (Garner, Mogg, & Bradley, 2006; Mogg, Garner, & Bradley, 2007; Wieser, Pauli, Weyers, Alpers, & Mühlberger, 2009). In two experiments, it was found that only under social-evaluative stress conditions (anticipation of a speech), high socially anxious participants showed a rapid initial orienting to emotional (both happy and angry) compared to neutral faces, but showed reduced maintenance of attention on emotional faces (Garner et al., 2006). In a study with social phobia patients, Mogg and colleagues detected initial eye movements toward fearful and angry faces in high social fear, but only when these faces were rated as highly intense (Mogg et al., 2007). This study, however, did not include happy facial expressions, which may be relevant in the light of recent reports suggesting that socially anxious individuals display an attentional bias for happy facial expressions (Wieser et al., 2009). 
Taken together, research in social anxiety has not yet provided a clear account of the mechanisms involved in the perception of social threat cues (for a recent review, see Schultz & Heimberg, 2008). Although results from the aforementioned studies predominantly point to a heightened vigilance for angry faces in social anxiety (Juth, Lundqvist, Karlsson, & Öhman, 2005), the evidence so far for a vigilance-avoidance pattern of attentive processing appears weak. As Schultz and Heimberg noted, “clear conclusions regarding attentional process in social anxiety are not possible given the nature of the research conducted thus far, as several of the paradigms used to explore attention in social anxiety are limited” (Schultz & Heimberg, 2008, p. 1218).

Although behavioral and eye tracking studies have provided important evidence for dysfunctions of attentional orienting and overt visual attention in social anxiety, they are not sensitive to covert shifts of attention. Furthermore, disengagement from or perceptual avoidance of previously identified stimuli is difficult to quantify, as typically several saccades are involved (Garner et al., 2006). Thus, neurophysiological methods may provide a useful avenue to study the time course of fear processing, as they are sensitive to covert attention processes and provide continuous measures of attention fluctuations that can also be related to oculomotor, behavioral and autonomic measures (Keil et al., 2008; Moratti, Keil, & Miller, 2006).

Neurophysiological studies on face processing in social anxiety

Numerous studies on the perception of social threat cues in social anxiety have capitalized on event-related brain potentials (ERPs), which are obtained by time-domain averaging over multiple segments of electrocortical activity. In a series of ERP studies, Kolassa and colleagues investigated the electrocortical dynamics when participants diagnosed with social phobia viewed emotional faces (Kolassa, Kolassa, Musial, & Miltner, 2007; Kolassa & Miltner, 2006; Kolassa et al., 2009). They found that angry expressions elicited an enhanced right temporo-parietal N170 in the patient group (Kolassa & Miltner, 2006), an effect that has also been reported in sub-clinical socially anxious individuals (Mühlberger et al., 2009). Additionally, the patients in the Kolassa and Miltner (2006) study showed enlarged P100 amplitudes across facial expressions, indicating heightened vigilance for faces in general. When comparing patients with different anxiety disorders, the same research team reported generally enhanced P100 amplitudes, but no group differences in the N170 or later ERP components between social phobic, spider phobic, and control participants viewing schematic emotional faces (Kolassa et al., 2007). Enhanced ERP responses (P300/LPP) to threatening faces, however, have been reported in other studies (Moser, Huppert, Duval, & Simons, 2008; Sewell, Palermo, Atkinson, & McArthur, 2008). More recently, state social anxiety induced in a fear-of-public-speaking paradigm has been shown to be associated with larger amplitudes of the N170 and subsequent ERP components in response to angry faces (Wieser, Pauli, Reicherts, & Mühlberger, 2010).

Hemodynamic imaging studies parallel the electrophysiological evidence in that they tend to suggest activation of the brain’s fear circuitry when socially anxious participants view social stimuli. Using fMRI, several studies have found enhanced amygdala reactions to angry expressions (Evans et al., 2008; Phan et al., 2006; Stein et al., 2002; Straube et al., 2005), but also to neutral (Birbaumer et al., 1998; Veit et al., 2002) and even happy faces (Straube et al., 2005), pointing to a more general fear system activation bias for faces in social anxiety. Furthermore, it has been shown that amygdala activation in socially anxious individuals may depend more on the intensity of facial expressions than on the particular emotion expressed (Yoon, Fitzgerald, Angstadt, McCarron, & Phan, 2007). Taken together, ERP and fMRI data provide only limited evidence for a specific engagement of neural activity by threat faces. However, all of these studies investigated the processing of isolated stimuli, with no alternative or competing stimulus present, which makes it difficult to draw conclusions about preferential selection versus perceptual avoidance of threat stimuli.

The present study

When stimuli compete for resources in sensory cortex, selection of one specific stimulus is assumed to involve amplification of activity that represents that location, feature, or object (Hillyard, Vogel, & Luck, 1998). This process has been related to re-entrant bias signals into sensory cortex, favoring processing of the selected stimulus (Desimone, 1998). Steady-state visual evoked potentials (ssVEPs) are an excellent tool for studying such cortical amplification and its temporal dynamics. The ssVEP is elicited by a stimulus that is modulated in luminance (i.e., flickered) at a fixed frequency for several seconds or more (Regan, 1989). It can be recorded by means of EEG as an ongoing oscillatory electrocortical response with the same fundamental frequency as the driving stimulus. Recent studies have shown that the generators of the ssVEP can be localized in extended visual cortex (Müller, Teder, & Hillyard, 1997), with strong contributions from V1 and higher-order cortices (Di Russo et al., 2007). Accumulating evidence suggests that the time-varying ssVEP amplitude indexes states of heightened cortical activation, such as when a stimulus is selectively attended. One advantage of this paradigm is that multiple stimuli flickering at different frequencies can be presented to the visual system simultaneously while their electrocortical signatures remain separable (a technique referred to as “frequency tagging”). Thus, attention to independent objects and spatial locations can be tracked, and the competition between stimuli for attentional resources can be quantified (Morgan, Hansen, & Hillyard, 1996; Müller & Hübner, 2002; Müller, Malinowski, Gruber, & Hillyard, 2003).
With respect to emotional stimulus features, it has been demonstrated that highly arousing affective stimuli generate greater ssVEPs than neutral pictures in visual and fronto-parietal networks (Keil, Moratti, & Stolarova, 2003), pointing to an involvement of higher-order attentional mechanisms in the processing of pictures bearing high motivational relevance (Kemp, Gray, Eide, Silberstein, & Nathan, 2002; Moratti, Keil, & Stolarova, 2004). It has also been demonstrated that task-irrelevant, highly arousing pictures withdraw more resources from an ongoing detection task than neutral pictures (Müller, Andersen, & Keil, 2008). To summarize, ssVEPs reflect stimulus-driven neural oscillations in early visual cortex that can be modulated top-down by more anterior cortical networks (for examples of ssVEP or ssVEF modulation, see Moratti et al., 2004; Perlstein et al., 2003).
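As a minimal illustration of frequency tagging (not the authors' analysis), the sketch below simulates a single-channel compound signal in which one stimulus drives a 14 Hz response and another a 17.5 Hz response, and then reads out each component separately with an FFT. The amplitudes, the noise level, the 250 Hz sampling rate, and the 2 s segment length are assumptions chosen for the example; 2 s gives a 0.5 Hz frequency resolution, which places both tag frequencies exactly on FFT bins.

```python
import numpy as np

fs = 250            # sampling rate in Hz (assumed for this toy example)
duration_s = 2.0    # 0.5 Hz resolution: 14 and 17.5 Hz fall exactly on FFT bins
t = np.arange(int(fs * duration_s)) / fs

# Hypothetical compound response: stimulus A tagged at 14 Hz, stimulus B at 17.5 Hz,
# both superimposed on broadband noise in the same recording channel.
rng = np.random.default_rng(1)
x = (1.0 * np.sin(2 * np.pi * 14.0 * t)
     + 0.6 * np.sin(2 * np.pi * 17.5 * t)
     + 0.5 * rng.standard_normal(t.size))

spectrum = np.fft.rfft(x)
freqs = np.fft.rfftfreq(x.size, d=1 / fs)
amplitude = 2 * np.abs(spectrum) / x.size   # single-sided amplitude spectrum

# Read out each tag frequency separately: this is what makes simultaneously
# presented stimuli separable in one EEG channel.
tagged_amplitudes = {}
for f in (14.0, 17.5):
    idx = int(np.argmin(np.abs(freqs - f)))  # nearest FFT bin to the tag frequency
    tagged_amplitudes[f] = float(amplitude[idx])
print(tagged_amplitudes)
```

Each recovered amplitude should lie close to the value used to generate it (about 1.0 and 0.6); the noise adds a small random bias to every bin.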

In the present study, frequency tagging by means of ssVEPs was employed to study the natural selection of facial expressions in response to a compound stimulus array containing two spatially separated, simultaneously presented faces. As outlined above, phobic stimuli should be especially prone to draw attention and act as competitors. We therefore investigated the processing of angry, happy, and neutral faces in students high and low in social anxiety, assuming that angry faces would have specific relevance for individuals with elevated interpersonal apprehension. The participants viewed pairs of facial expressions flickering at different tagging frequencies in a passive viewing paradigm. Based on the literature reviewed above, we expected socially anxious participants to show hypervigilance for angry facial expressions, resulting in enhanced ssVEP amplitudes over visual cortical areas. If such hypervigilance to social threat cues comes at the cost of concurrent processing, a second prediction is that face stimuli competing with angry expressions should incur stronger competition costs than faces competing with other expressions (for instance, reduced ssVEP amplitudes were expected for neutral and happy faces when viewed in competition with an angry expression). Given the limited empirical support for the avoidance of angry faces later in the attentional processing stream, two alternative predictions were made regarding the time dynamics of competition: (1) a reduction of ssVEP amplitudes in response to angry faces in the later stages of attentional processing (perceptual avoidance of angry facial expressions), or (2) a sustained enhancement of ssVEP amplitudes over the time course of stimulus presentation when viewing angry faces.

Methods

Participants

Participants were undergraduate students recruited from General Psychology classes at the University of Florida, who received course credit for participation. Based on their pre-screening results (n = 174, M = 36.3, SD = 22.4) on the self-report form of the Liebowitz Social Anxiety Scale (LSAS-SR; Fresco et al., 2001), participants scoring in the upper 20% (corresponding to a total score of 53) were identified as high socially anxious (HSA) and invited to take part in the study. Students scoring below the median (Mdn = 33) were considered low socially anxious (LSA). Thirty-four participants (17 per group) attended the laboratory session. To verify the screening, participants completed the LSAS-SR again upon arrival on the day of testing, prior to the experimental session. As expected, the groups differed significantly in their LSAS-SR total scores, t(32) = 9.6, p < .001 (LSA: M = 28.5, SD = 10.5; HSA: M = 65.4, SD = 11.9). The groups did not differ in gender ratio (LSA: 5 males, HSA: 2 males; χ²(1, N = 34) = 1.6, p = .20), ethnicity (whole sample: 44.1% Caucasian, 26.5% Afro-American, 20.6% Hispanic, 5.9% Asian, 2.9% Other), or age (LSA: M = 18.9, SD = 1.0; HSA: M = 21.5, SD = 6.0; t(32) = 1.7, p = .10). All participants were right-handed, had no family history of photic epilepsy, and reported normal or corrected-to-normal vision. All participants gave written informed consent. All procedures were approved by the institutional review board of the University of Florida.
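As a quick consistency check, the LSAS-SR and gender statistics reported above can be re-derived from the summary values alone. This is a minimal sketch that assumes a pooled-variance independent-samples t-test and an uncorrected Pearson χ² test on the 2 × 2 gender table; the text does not state which test variants were actually used.

```python
import math

def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t (pooled variance) computed from summary statistics."""
    sp2 = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m2 - m1) / se, n1 + n2 - 2

def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    rows, cols = (a + b, c + d), (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = sum(
        (observed[i][j] - rows[i] * cols[j] / n) ** 2 / (rows[i] * cols[j] / n)
        for i in range(2) for j in range(2)
    )
    p = math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with df = 1
    return chi2, p

# LSA vs. HSA total scores, and males/females per group (values from the text).
t_lsas, df = pooled_t(28.5, 10.5, 17, 65.4, 11.9, 17)
chi2, p = chi2_2x2(5, 12, 2, 15)
print(f"LSAS-SR: t({df}) = {t_lsas:.1f}")
print(f"gender: chi2(1) = {chi2:.1f}, p = {p:.2f}")
```

Both values round to the reported t(32) = 9.6 and χ²(1) = 1.6, p = .20. Age is not included because small rounding differences in the published means and SDs would propagate into the recomputed t.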

Design and Procedure

Guided by a recent validation study (Goeleven, De Raedt, Leyman, & Verschuere, 2008), 72 pictures were selected from the Karolinska Directed Emotional Faces (KDEF) database (Lundqvist, Flykt, & Öhman, 1998), showing angry, happy, and neutral expressions of each of 12 female and 12 male actors. All stimuli were converted to grayscale to minimize luminance and color spectrum differences across pictures. Using Presentation software (Neurobehavioral Systems, Inc., Albany, CA, USA), faces were displayed against a gray background on a 19-inch computer monitor with a vertical refresh rate of 70 Hz.
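Because the monitor refreshed at 70 Hz, a flicker frequency can be rendered exactly only if each cycle spans a whole number of frames; the two driving frequencies used in this study (14 Hz and 17.5 Hz) correspond to 5-frame and 4-frame cycles. The sketch below checks this and builds a frame-by-frame on/off schedule. The split within each cycle (here, the first ceil(n/2) frames "on") is a hypothetical choice; the text does not specify the duty cycle or the exact luminance modulation.

```python
import math
from fractions import Fraction

REFRESH_HZ = 70  # vertical refresh rate of the monitor (Methods)

def frames_per_cycle(freq_hz, refresh_hz=REFRESH_HZ):
    """Whole number of monitor frames per flicker cycle; raises ValueError otherwise."""
    frames = Fraction(refresh_hz) / Fraction(freq_hz)
    if frames.denominator != 1:
        raise ValueError(f"{freq_hz} Hz needs {float(frames):.3f} frames per cycle")
    return frames.numerator

def on_off_schedule(freq_hz, duration_s, refresh_hz=REFRESH_HZ):
    """Frame-by-frame visibility (True = picture shown) for one flickering face.

    Hypothetical duty cycle: the first ceil(n/2) frames of each n-frame cycle are on.
    """
    n = frames_per_cycle(freq_hz, refresh_hz)
    on_frames = math.ceil(n / 2)
    total_frames = round(duration_s * refresh_hz)
    return [(i % n) < on_frames for i in range(total_frames)]

print(frames_per_cycle(14), frames_per_cycle(17.5))   # prints 5 4
left = on_off_schedule(14, 3.0)       # 210 frames for a 3000 ms trial
```

A frequency such as 15 Hz (70/15 ≈ 4.67 frames) raises an error, which is one reason tag frequencies are usually chosen as integer divisors of the refresh rate.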

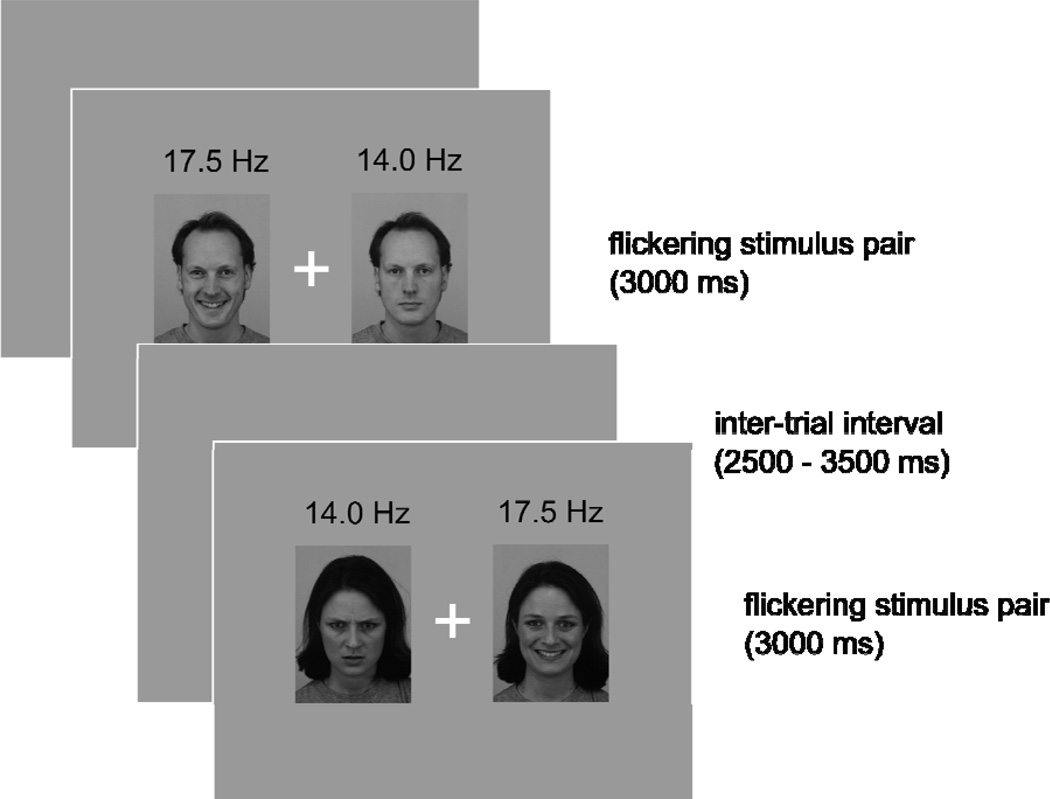

To induce competition on each experimental trial, two pictures were presented simultaneously and parafoveally, one in each hemifield, for 3000 ms. Faces were shown in a flickering mode to evoke ssVEPs, with one picture flickering at a driving frequency of 14 Hz and the other at 17.5 Hz (see Figure 1), so that each hemifield stream carried a distinct frequency tag. The array containing the two pictures subtended a horizontal visual angle of 9.1 degrees, with the center of each picture at an eccentricity of 2.6 degrees to the left or right of fixation. The distance between the screen and the participants’ eyes was 1.2 m. A central fixation point was present at the center of the screen throughout the experiment.
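The geometry above can be converted to on-screen distances with the standard visual-angle relations: extent = 2·d·tan(θ/2) for an object centered on the line of sight, and offset = d·tan(θ) for a point at eccentricity θ. A minimal sketch, assuming the array is centered on the fixation point and using the reported 1.2 m viewing distance:

```python
import math

VIEW_DIST_CM = 120.0   # 1.2 m viewing distance (Methods)

def extent_for_angle(angle_deg, dist_cm=VIEW_DIST_CM):
    """On-screen extent (cm) subtending angle_deg, centered on the line of sight."""
    return 2 * dist_cm * math.tan(math.radians(angle_deg) / 2)

def offset_for_eccentricity(ecc_deg, dist_cm=VIEW_DIST_CM):
    """On-screen distance (cm) from fixation of a point at ecc_deg eccentricity."""
    return dist_cm * math.tan(math.radians(ecc_deg))

array_width_cm = extent_for_angle(9.1)            # whole two-face array
center_offset_cm = offset_for_eccentricity(2.6)   # fixation to each picture center
print(f"array width ~ {array_width_cm:.1f} cm, "
      f"picture centers at +/- {center_offset_cm:.2f} cm")
```

Under these assumptions the array is roughly 19.1 cm wide, with picture centers about 5.45 cm on either side of fixation.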

Figure 1.

Schematic representation of two experimental trials. A fixation point in the center of the screen was present at all times during the experiment. Inter-trial intervals varied randomly between 2500 and 3500 ms. In each trial, two pictures of the same actor were presented, one in each visual hemifield, flickering at 14 Hz and 17.5 Hz, respectively.

Participants were asked to maintain gaze on the fixation cross and to avoid eye movements. To encourage central fixation, a simple change detection task was introduced: participants pressed a button whenever the color of the fixation cross changed from white to gray. Color changes were rare (3–5 per session) and occurred only during inter-trial intervals, to avoid contaminating the ongoing ssVEP with motor potentials and transient responses to the task stimulus. Inter-trial intervals, in which the fixation cross was presented against a gray background, lasted between 2500 and 3500 ms. All expressions were fully crossed over the two visual hemifields, resulting in 9 conditions (angry-angry, angry-happy, angry-neutral, happy-neutral, happy-angry, happy-happy, neutral-happy, neutral-angry, neutral-neutral). Twenty-four stimulus pairs were created per condition, in which the two pictures were always taken from the same actor, resulting in 24 trials per condition. In half of the trials of each condition, the left picture flickered at 14 Hz and the right picture at 17.5 Hz; in the other half, this assignment was reversed. This resulted in a total of 216 trials (24 trials × 9 conditions), presented in pseudo-randomized order. After the EEG recordings, participants viewed the 72 pictures again in randomized order and rated each picture for affective valence and arousal on the 9-point Self-Assessment Manikin (SAM; Bradley & Lang, 1994). In this last block, neither hemifield presentation nor flicker was used; participants viewed each picture for 6 s before the SAM scale appeared on the screen.
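The trial structure described above (9 fully crossed left/right expression conditions × 24 same-actor pairs, with the 14/17.5 Hz assignment counterbalanced across hemifields) can be sketched as follows. The split of actors between the two frequency assignments, the uniform inter-trial-interval jitter, and the plain shuffle are assumptions; the constraints of the original pseudo-randomization are not described.

```python
import itertools
import random

EXPRESSIONS = ("angry", "happy", "neutral")
N_ACTORS = 24  # 12 female + 12 male KDEF actors

def build_trials(seed=None):
    """Return a shuffled list of 216 trial dictionaries (hypothetical scheme)."""
    rng = random.Random(seed)
    trials = []
    # itertools.product yields all 9 ordered (left, right) expression pairs
    for left, right in itertools.product(EXPRESSIONS, repeat=2):
        for actor in range(N_ACTORS):
            # Hypothetical counterbalancing: for the first half of the actors the
            # left face flickers at 14 Hz, for the second half the right face does.
            if actor < N_ACTORS // 2:
                left_hz, right_hz = 14.0, 17.5
            else:
                left_hz, right_hz = 17.5, 14.0
            trials.append({
                "actor": actor,                      # both faces show the same actor
                "left": left, "right": right,
                "left_hz": left_hz, "right_hz": right_hz,
                "duration_ms": 3000,
                "iti_ms": rng.uniform(2500, 3500),   # assumed uniform jitter
            })
    rng.shuffle(trials)  # stand-in for the unspecified pseudo-randomization
    return trials

trials = build_trials(seed=0)
print(len(trials))   # prints 216
```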

EEG recording and data analysis

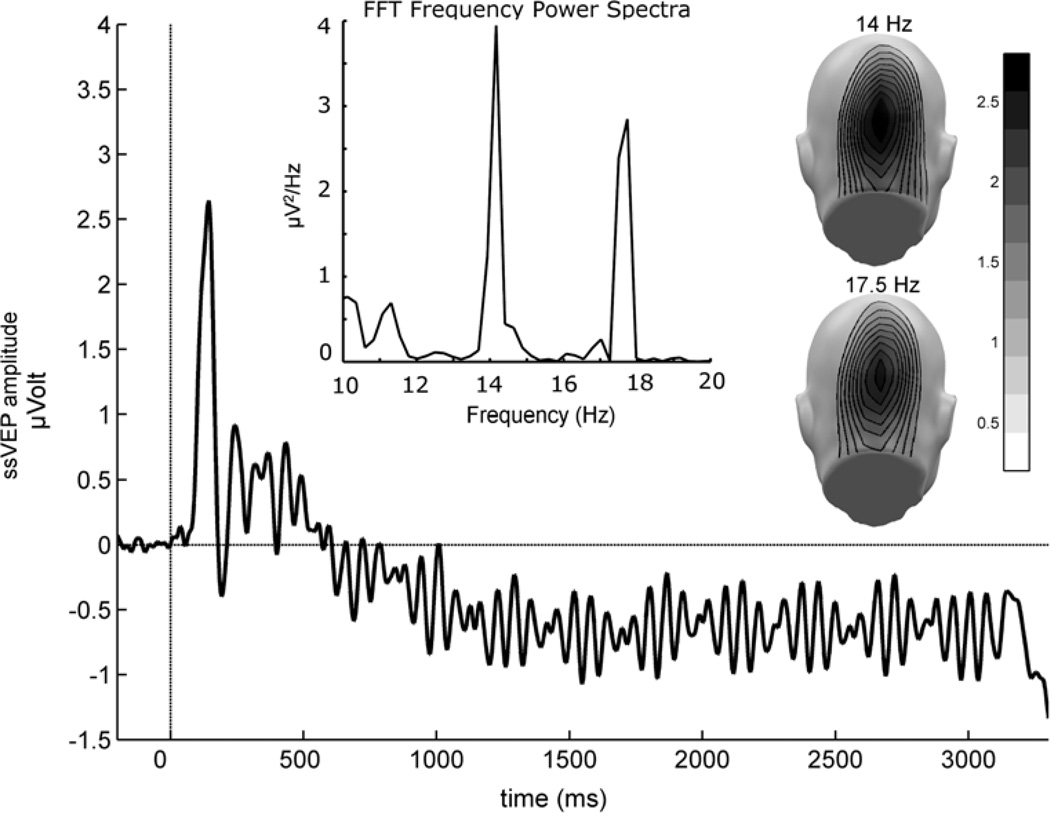

The electroencephalogram (EEG) was continuously recorded from 129 electrodes using an Electrical Geodesics system (EGI, Eugene, OR, USA), referenced to Cz, digitized at a rate of 250 Hz, and band-pass filtered online between 0.1 and 50 Hz. Electrode impedances were kept below 50 kΩ, as recommended for the Electrical Geodesics high-impedance amplifiers. Offline, a low-pass filter of 40 Hz was applied. Epochs from 600 ms before to 3600 ms after stimulus onset were extracted offline. Artifact rejection was also performed offline, following the procedure proposed by Junghöfer et al. (2000). In this approach, trials with artifacts are identified from the distribution of statistical parameters of the extracted EEG epochs (absolute value, standard deviation, maximum of the differences) across time points, first for each channel and then, in a subsequent step, across channels. Sensors contaminated with artifacts were replaced by statistically weighted, spherical spline interpolated values. The maximum number of interpolated channels in a given trial was set to 20. These strict criteria also allowed us to exclude trials contaminated by vertical and horizontal eye movements. Owing to the long epochs and the stringent rejection criteria, the mean rejection rate across all conditions was 36%. The numbers of remaining trials did not differ between experimental conditions or groups. For interpolation and all subsequent analyses, data were transformed to the average reference. Artifact-free epochs were averaged separately for the 18 combinations of hemifield assignment and stimulus pair to obtain ssVEPs containing both driving frequencies. The raw ssVEP for a representative electrode (POz), its Fast Fourier Transform, and the spatial topography of the two driving frequencies, averaged across all subjects and conditions, are shown in Figure 2.
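Two of the preprocessing steps named above can be sketched in NumPy. Re-referencing to the average reference subtracts, at each sample, the mean across all channels. The trial screening shown here is only a simplified stand-in built on the same idea (per-channel statistics of absolute value, standard deviation, and maximum sample-to-sample difference compared across trials); it is not the Junghöfer et al. (2000) procedure, it omits the spherical-spline interpolation, and its threshold (median plus k robust SDs, with k = 5) is a hypothetical choice. Only the epoch length and the 20-channel limit come from the text, and treating that limit as a rejection rule is an assumption.

```python
import numpy as np

FS = 250                                      # sampling rate (Hz), from the Methods
EPOCH_SAMPLES = (600 + 3600) * FS // 1000     # -600 to +3600 ms -> 1050 samples
MAX_BAD_CHANNELS = 20                         # per-trial limit stated in the Methods

def average_reference(epochs):
    """Re-reference (n_trials, n_channels, n_samples) data to the channel average."""
    return epochs - epochs.mean(axis=1, keepdims=True)

def flag_trials(epochs, k=5.0, max_bad_channels=MAX_BAD_CHANNELS):
    """Simplified robust screening (NOT the Junghoefer et al., 2000, algorithm).

    A trial-channel pair is marked bad if any of three per-trial statistics
    exceeds that channel's median across trials + k * robust SD (1.4826 * MAD).
    Trials with more than `max_bad_channels` bad channels are flagged.
    """
    stats = (
        np.abs(epochs).max(axis=2),                    # maximum absolute value
        epochs.std(axis=2),                            # standard deviation
        np.abs(np.diff(epochs, axis=2)).max(axis=2),   # largest sample-to-sample jump
    )
    bad = np.zeros(stats[0].shape, dtype=bool)         # (n_trials, n_channels)
    for stat in stats:
        med = np.median(stat, axis=0, keepdims=True)   # per channel, across trials
        mad = np.median(np.abs(stat - med), axis=0, keepdims=True)
        bad |= stat > med + k * 1.4826 * mad
    rejected = bad.sum(axis=1) > max_bad_channels
    return rejected, bad

# Toy data: 40 trials, 129 channels, 200 samples; trial 3 gets a large artifact.
rng = np.random.default_rng(0)
epochs = rng.normal(size=(40, 129, 200))
epochs[3, :60, :] += 200.0
rejected, bad = flag_trials(average_reference(epochs))
print(np.flatnonzero(rejected))   # indices of flagged trials
```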

Figure 2.

Grand mean steady-state visual evoked potential averaged across all participants and conditions, recorded from an occipital electrode (approximately corresponding to POz of the extended 10–20 system). Note that the ssVEP in the present study contains a superposition of two driving frequencies (14 Hz and 17.5 Hz), as shown by the frequency domain representation of the same signal (Fast Fourier Transformation of the ssVEP in a time segment between 100 and 3000 ms) in the upper panel. The mean scalp topographies of both frequencies show clear medial posterior activity, over visual cortical areas.

The ssVEP amplitude for each condition was extracted by means of complex demodulation, which extracts a modulating signal from a carrier signal (Regan, 1989), using in-house MATLAB scripts (for a more detailed description, see Müller et al., 2008). The analysis used the driving frequencies of the stimuli, 14.0 and 17.5 Hz, as target frequencies, and a third-order Butterworth low-pass filter with a cut-off frequency of 1 Hz (time resolution 140 ms full width at half maximum) was applied during complex demodulation. The time course of the ssVEPs was baseline corrected using a 240-ms baseline interval (from −380 to −140 ms) prior to stimulus onset. This baseline was chosen to avoid subtracting post-stimulus activity along with the baseline, given the temporal smearing of the complex demodulated signal (see above). The overall mean ssVEP amplitude was calculated for the time window between 100 ms and 3000 ms after picture onset. For each frequency, the face tagged with that frequency was considered the target, and the face tagged with the other frequency was considered the competitor. For example, in an angry-happy trial in which the angry face flickered at 14 Hz and the happy face at 17.5 Hz, complex demodulation at 14 Hz reflected the response to the angry face, with the happy face as the competing stimulus. Because face conditions were fully crossed with stimulation frequencies, all permutations of target and competing faces could be extracted from the compound ssVEP signal. This yielded a time-varying measure of the processing resources devoted to one stimulus in the presence of another (the competitor). To examine the time course of early and late stages of attentional engagement, ssVEP amplitudes were averaged across time points in four time windows (100–500 ms, 500–1000 ms, 1000–2000 ms, and 2000–3000 ms after picture onset), in addition to the mean over the whole 100–3000 ms window.
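Complex demodulation can be sketched in a few lines: multiplying the signal by a complex exponential at the tag frequency shifts that component to 0 Hz, and a low-pass filter then isolates its slowly varying amplitude. The published analysis used in-house MATLAB scripts with a third-order Butterworth low-pass filter; for brevity, this sketch swaps in a simple moving-average (boxcar) filter, so its time resolution and frequency response differ from the original. The 2 s window in the usage example is chosen only so that, for this noise-free toy signal, the competing component cancels exactly.

```python
import numpy as np

def complex_demodulate(x, fs, freq, win_s):
    """Amplitude envelope of `x` at `freq` Hz by complex demodulation.

    Multiplying by exp(-i*2*pi*freq*t) moves the target component to 0 Hz;
    a moving-average low-pass (a stand-in for the paper's Butterworth filter)
    then keeps only the slowly varying baseband signal.
    """
    t = np.arange(x.size) / fs
    baseband = x * np.exp(-2j * np.pi * freq * t)
    n = int(round(win_s * fs))
    kernel = np.ones(n) / n
    smoothed = np.convolve(baseband, kernel, mode="same")
    return 2.0 * np.abs(smoothed)   # x2 restores the amplitude of a real cosine

# Toy compound ssVEP: target face tagged at 14 Hz, competitor at 17.5 Hz.
fs = 250
t = np.arange(4 * fs) / fs
x = 1.0 * np.cos(2 * np.pi * 14.0 * t) + 0.5 * np.cos(2 * np.pi * 17.5 * t)
env_14 = complex_demodulate(x, fs, 14.0, win_s=2.0)   # response to the 14 Hz face
env_17 = complex_demodulate(x, fs, 17.5, win_s=2.0)   # response to the 17.5 Hz face
print(round(float(env_14[500]), 3), round(float(env_17[500]), 3))   # prints 1.0 0.5
```

Near the edges of the signal the boxcar only partially overlaps the data, so envelope values there are biased; this is one reason to keep analysis windows away from epoch boundaries.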

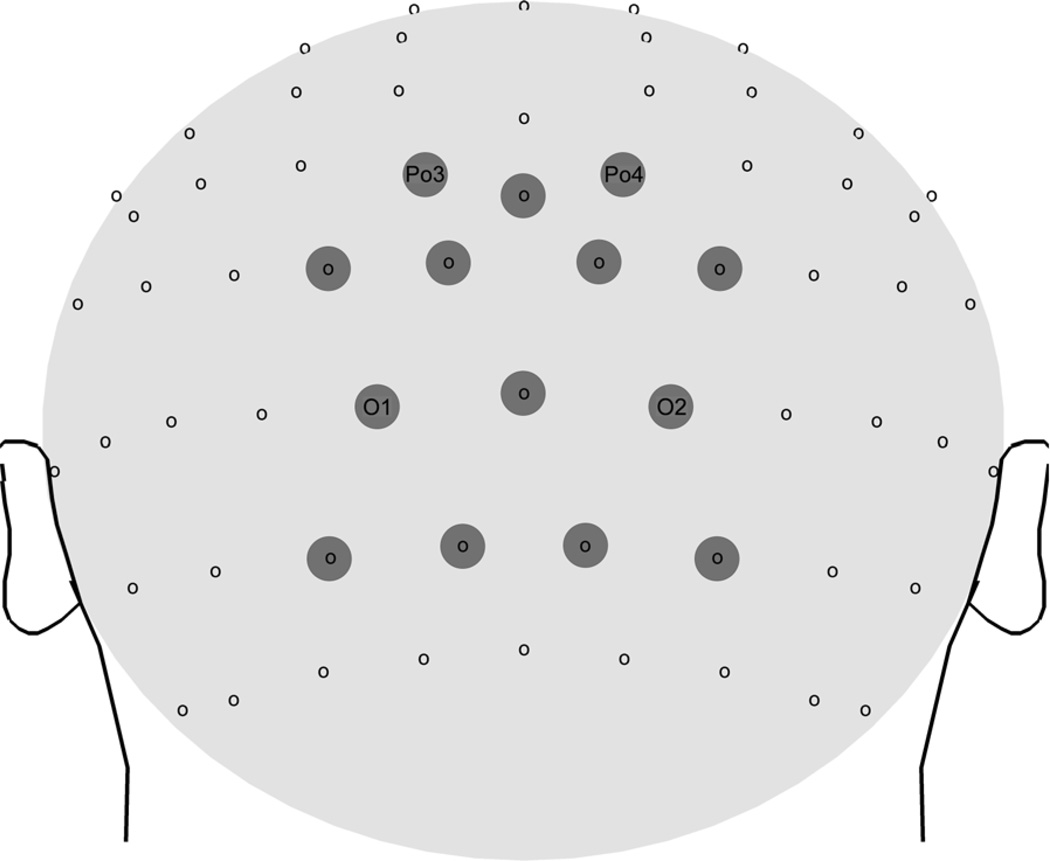

As in previous work (e.g., Müller et al., 2008), ssVEP amplitudes were most pronounced at electrode locations near Oz, over the occipital pole. We therefore averaged all signals spatially across a medial-occipital cluster comprising site Oz and its 12 nearest neighbors (see Figure 3).
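The remaining reduction steps (averaging over the occipital sensor cluster, subtracting the −380 to −140 ms baseline, and averaging within the analysis windows) can be sketched as follows. Sample indices follow from the 250 Hz sampling rate and the epoch start at −600 ms. The cluster channel indices are hypothetical, because the text names the cluster (Oz and its 12 nearest neighbors) but not the EGI channel numbers, and treating windows as half-open intervals is an assumption.

```python
import numpy as np

FS = 250               # sampling rate (Hz)
EPOCH_START_MS = -600  # epochs span -600 to +3600 ms
BASELINE_MS = (-380, -140)
WINDOWS_MS = {
    "100-500": (100, 500),
    "500-1000": (500, 1000),
    "1000-2000": (1000, 2000),
    "2000-3000": (2000, 3000),
    "100-3000": (100, 3000),
}

def ms_to_index(ms):
    """Sample index of a latency (ms) within the epoch."""
    return int(round((ms - EPOCH_START_MS) * FS / 1000))

def window_means(envelope, cluster):
    """Regional, baseline-corrected mean amplitude per analysis window.

    envelope: (n_channels, n_samples) demodulated ssVEP amplitude for one
    condition; cluster: channel indices of the occipital cluster.
    Windows are treated as half-open intervals [start, end).
    """
    regional = envelope[cluster].mean(axis=0)
    b0, b1 = ms_to_index(BASELINE_MS[0]), ms_to_index(BASELINE_MS[1])
    regional = regional - regional[b0:b1].mean()
    return {name: float(regional[ms_to_index(a):ms_to_index(b)].mean())
            for name, (a, b) in WINDOWS_MS.items()}

# Toy check: a flat 0.3 offset plus a step of 1.0 at stimulus onset.
HYPOTHETICAL_CLUSTER = list(range(13))   # Oz + 12 neighbours; real indices differ
env = np.full((129, 1050), 0.3)
env[:, ms_to_index(0):] += 1.0
means = window_means(env, HYPOTHETICAL_CLUSTER)
print(means)
```

Because the baseline removes the constant 0.3 offset, every window mean in the toy example comes out at the step height of 1.0.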

Figure 3.

Layout of the dense electrode array. Locations of the electrodes grouped for regional means (used for statistical analysis) are marked by gray circles. Approximations of electrode names of the international 10–20 system positions are given.

Statistical analysis

Mean ssVEP amplitudes were analyzed by repeated-measures ANOVAs (SPSS, Version 16.0, Chicago, IL, USA) with the between-subject factor group (HSA vs. LSA) and the within-subject factors tagged facial expression (angry vs. neutral vs. happy), competitor facial expression (angry vs. neutral vs. happy), and hemifield (left vs. right). Significant effects involving facial expression were followed up by simple planned contrasts with neutral facial expressions as the reference. Significant effects involving group were followed up by separate repeated-measures ANOVAs for each group.
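For a single 1-df within-subject contrast, such as angry versus neutral faces within one group, the contrast F(1, n − 1) equals the squared one-sample t computed on each participant's difference scores. A minimal sketch, assuming the scores have already been averaged over the remaining within-subject factors; the four-participant values in the usage lines are invented purely for illustration and are not data from this study.

```python
import math
import statistics

def within_contrast_F(condition, reference):
    """F(1, n-1) for one within-subject contrast (condition minus reference).

    For a single-df repeated-measures contrast, F equals the squared
    one-sample t of the per-participant difference scores.
    """
    if len(condition) != len(reference):
        raise ValueError("both lists need one score per participant")
    diffs = [c - r for c, r in zip(condition, reference)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t * t, (1, n - 1)

# Invented scores for four participants (not data from this study).
angry = [0.5, 0.4, 0.6, 0.3]
neutral = [0.3, 0.35, 0.4, 0.3]
F, df = within_contrast_F(angry, neutral)
print(f"F{df} = {F:.2f}")
```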

In a second step, to analyze the effects of the competitors on ssVEP amplitudes in more detail, only trials containing two different expressions (i.e., angry-happy, angry-neutral, happy-neutral) were analyzed. It was predicted that in HSA participants angry faces would act as the strongest competitors, withdrawing the most processing resources. Accordingly, mean ssVEP amplitudes for faces competing with angry expressions should be attenuated compared to faces competing with neutral and happy expressions, especially in HSA participants. Thus, repeated-measures ANOVAs were performed with group as the between-subject factor and the within-subject factors stimulus-competitor combination (neutral-happy, neutral-angry, happy-angry, happy-neutral, angry-happy, angry-neutral) and hemifield (left, right).

SAM ratings were averaged per facial expression and submitted to separate ANOVAs for valence and arousal ratings, containing the between-subject factor Group (LSA vs. HSA), and the within-subject factor facial expression (angry vs. neutral vs. happy).

A significance level of .05 (two-tailed) was used for all analyses. Throughout this manuscript, the uncorrected degrees of freedom, the corrected p-values, the Greenhouse-Geisser ε, and the partial η² (ηp²) are reported (Picton et al., 2000).
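Two of the reported quantities can be computed directly, which helps when checking effect sizes against F values. Partial eta squared follows from an F ratio and its uncorrected degrees of freedom as ηp² = F·df_effect / (F·df_effect + df_error). The Greenhouse-Geisser ε can be estimated from the double-centered covariance matrix S* of the k repeated measures as ε = tr(S*)² / ((k − 1)·tr(S*²)). The sketch below covers only a single within-subject factor in one group; for mixed designs such as the present one, statistics packages like SPSS pool the covariance matrix across groups, which this example does not do.

```python
import numpy as np

def partial_eta_squared(F, df_effect, df_error):
    """Partial eta squared from an F ratio and its uncorrected degrees of freedom."""
    return F * df_effect / (F * df_effect + df_error)

def greenhouse_geisser_epsilon(scores):
    """Greenhouse-Geisser epsilon for one within-subject factor (single group).

    scores: (n_subjects, k_levels). Uses the double-centered covariance S*:
    eps = trace(S*)**2 / ((k - 1) * trace(S* @ S*)), bounded by 1/(k-1) and 1.
    """
    scores = np.asarray(scores, dtype=float)
    k = scores.shape[1]
    S = np.cov(scores, rowvar=False)
    C = np.eye(k) - np.ones((k, k)) / k      # centering matrix
    S_star = C @ S @ C
    return float(np.trace(S_star) ** 2 / ((k - 1) * np.trace(S_star @ S_star)))

# Reported Tagged facial expression x Group effect, F(2,64) = 4.20:
print(round(partial_eta_squared(4.20, 2, 64), 2))   # prints 0.12
```

With k = 3 levels, ε ranges from 0.5 (all variance in a single contrast, maximal violation of sphericity) to 1 (sphericity holds).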

Results

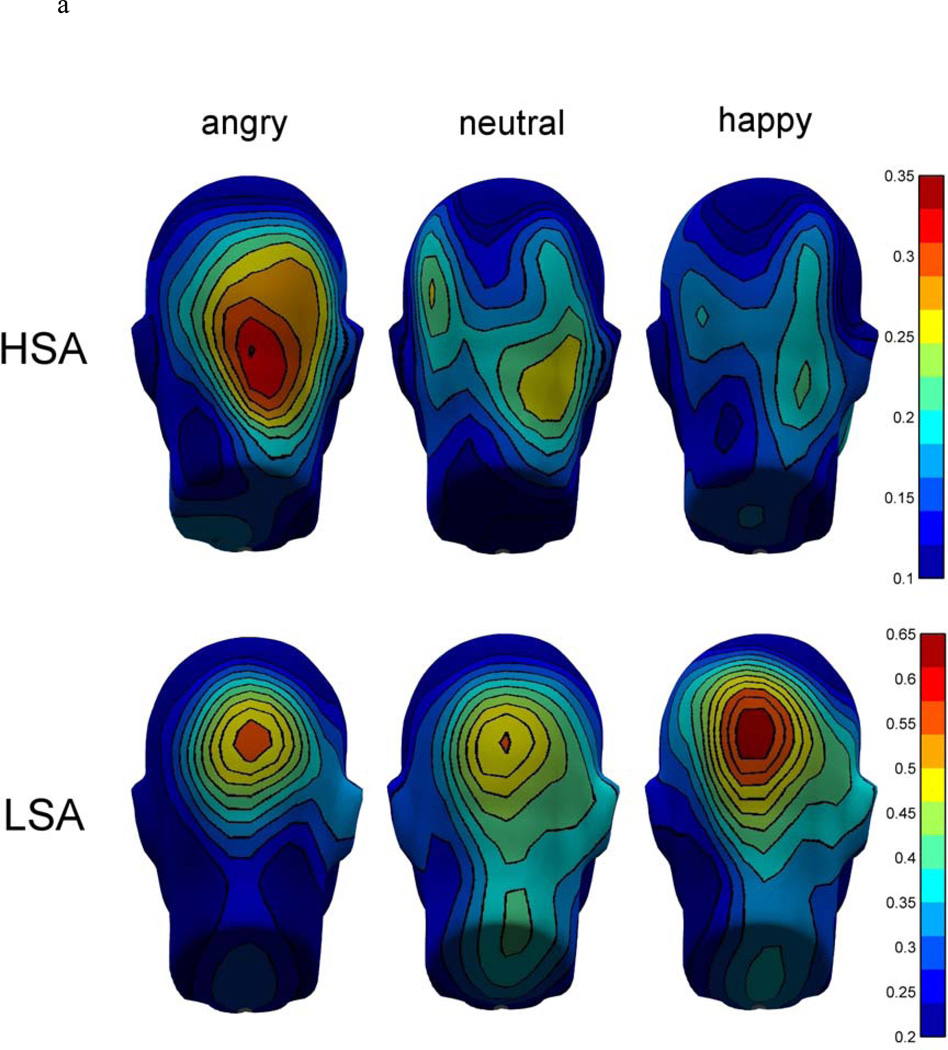

ssVEP amplitudes

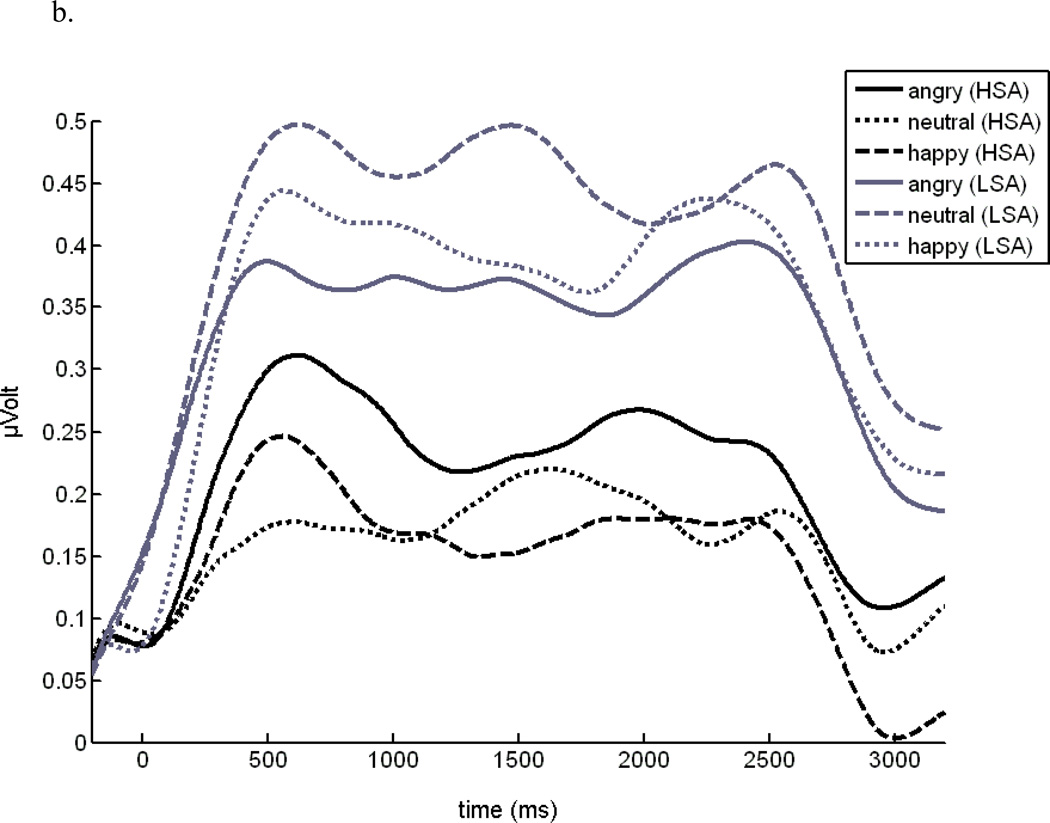

The analysis of the mean ssVEP amplitude over the whole time window of stimulus presentation (100–3000 ms) revealed no significant main effect of group, F(1,32) = 2.30, p = .139, ηp² = .05, but a significant Tagged facial expression × Group interaction, F(2,64) = 4.20, p = .029, GG-ε = .77, ηp² = .12.¹ The time course of the ssVEP amplitudes in both groups and the mean topographical distributions of the ssVEP amplitudes are shown in Figures 4a and 4b.

Figure 4.

a. Grand mean topographical distribution of the ssVEP amplitudes in response to angry, neutral, and happy facial expressions, shown separately for high socially anxious (HSA) and low socially anxious (LSA) participants, collapsed over the three competitor conditions and both hemifields. Grand means are averaged across the time window from 100 to 3000 ms after stimulus onset. Note that the two groups are plotted on different scales.

b. Mean time course of ssVEP amplitudes elicited by angry, happy, and neutral facial expressions, collapsed over competitor and hemifield conditions, shown separately for high socially anxious (HSA) and low socially anxious (LSA) participants.

Separate ANOVAs for each group revealed that this interaction was due to a significant main effect of tagged facial expression in the HSA group only, F(2,32) = 3.45, p = .043, ηp² = .18. Planned contrasts showed that, regardless of the competing stimulus, angry facial expressions elicited larger ssVEP amplitudes than happy faces, F(1,16) = 4.54, p = .048, ηp² = .22, and, at trend level, than neutral faces, F(1,16) = 4.01, p = .063, ηp² = .20, whereas happy and neutral faces did not differ. This effect was independent of the visual hemifield in which the facial expressions were presented (mean amplitudes for each time interval are given in Table 1).

Table 1.

Means (SD) of ssVEP amplitudes (µV) averaged across the given time intervals, by condition and group.

| Tagged-competitor face | angry-angry | angry-neutral | angry-happy | neutral-angry | neutral-neutral | neutral-happy | happy-angry | happy-neutral | happy-happy |
|---|---|---|---|---|---|---|---|---|---|
| 100–3000 ms | | | | | | | | | |
| LSA | 0.38 (0.58) | 0.36 (0.47) | 0.42 (0.52) | 0.41 (0.66) | 0.38 (0.65) | 0.40 (0.65) | 0.50 (0.63) | 0.45 (0.61) | 0.46 (0.62) |
| HSA | 0.30 (0.27) | 0.22 (0.22) | 0.22 (0.21) | 0.15 (0.21) | 0.18 (0.24) | 0.19 (0.23) | 0.14 (0.23) | 0.22 (0.28) | 0.14 (0.30) |
| 500–1000 ms | | | | | | | | | |
| LSA | 0.36 (0.59) | 0.37 (0.53) | 0.46 (0.50) | 0.43 (0.67) | 0.46 (0.71) | 0.45 (0.68) | 0.54 (0.68) | 0.53 (0.66) | 0.48 (0.56) |
| HSA | 0.35 (0.27) | 0.30 (0.27) | 0.26 (0.25) | 0.18 (0.25) | 0.15 (0.32) | 0.20 (0.25) | 0.20 (0.27) | 0.23 (0.35) | 0.23 (0.37) |
| 1000–2000 ms | | | | | | | | | |
| LSA | 0.35 (0.55) | 0.34 (0.46) | 0.41 (0.59) | 0.43 (0.75) | 0.36 (0.60) | 0.46 (0.71) | 0.50 (0.67) | 0.42 (0.63) | 0.45 (0.71) |
| HSA | 0.28 (0.34) | 0.22 (0.29) | 0.19 (0.33) | 0.17 (0.32) | 0.15 (0.29) | 0.08 (0.25) | 0.16 (0.26) | 0.16 (0.32) | 0.13 (0.39) |
| 2000–3000 ms | | | | | | | | | |
| LSA | 0.35 (0.55) | 0.34 (0.46) | 0.41 (0.59) | 0.43 (0.75) | 0.36 (0.60) | 0.46 (0.71) | 0.50 (0.67) | 0.42 (0.63) | 0.45 (0.71) |
| HSA | 0.28 (0.34) | 0.22 (0.29) | 0.19 (0.33) | 0.17 (0.32) | 0.15 (0.29) | 0.08 (0.25) | 0.16 (0.26) | 0.16 (0.32) | 0.13 (0.39) |

Note. LSA = low social anxiety. HSA = high social anxiety.

In the first time window of the ssVEP (100 – 500 ms), no significant effects were detected; the 3-way interaction of Hemifield × Tagged facial expression × Group, F(2,64) = 2.98, p = .058, ηp2 = .09, only approached significance.

The analysis of the time interval from 500 to 1000 ms revealed significant 2-way interactions of Tagged facial expression × Group, F(2,64) = 5.63, p = .006, ηp2 = .15, and Tagged facial expression × Hemifield, F(2,64) = 3.55, p = .034, ηp2 = .10, which were further qualified by a significant 3-way interaction of Hemifield × Tagged facial expression × Group, F(2,64) = 3.78, p = .028, ηp2 = .11. This interaction was followed up by separate ANOVAs for each group. In the LSA group, the Hemifield × Tagged facial expression interaction was significant, F(2,32) = 3.82, p = .032, ηp2 = .19. This effect was due to a significant main effect of tagged facial expression in the right hemifield condition, F(2,32) = 5.45, p = .009, ηp2 = .25, reflecting larger ssVEP amplitudes for happy compared to angry faces and, to a lesser extent, neutral faces, F(1,16) = 13.94, p = .002, ηp2 = .47, and F(1,16) = 4.42, p = .052, ηp2 = .22, respectively. In the HSA group, the ANOVA yielded a main effect of tagged facial expression, F(2,32) = 5.96, p = .002, ηp2 = .27, and a significant Hemifield × Tagged facial expression interaction, F(2,32) = 3.53, p = .041, ηp2 = .18. In this group, the ssVEP was modulated only by facial expressions presented in the left hemifield, F(2,32) = 7.27, p = .003, ηp2 = .31, where angry, but not happy, faces elicited larger ssVEP amplitudes than neutral faces, F(1,16) = 11.92, p = .003, ηp2 = .25.

For the time interval from 1000 to 2000 ms, a significant Tagged facial expression × Group interaction was found, F(2,64) = 4.66, p = .013, ηp2 = .13. Separate ANOVAs for each group, however, did not yield significant results, although the main effect of tagged facial expression was marginally significant in the LSA group, F(2,32) = 2.75, p = .079, ηp2 = .14.

For the last time window, 2000 to 3000 ms, the statistical analysis again yielded a significantly different modulation of ssVEPs by facial expression across groups, F(2,64) = 3.97, p = .034, ηp2 = .11. Post-hoc ANOVAs for each group revealed that ssVEP amplitudes were modulated by facial expression only in the HSA group, F(2,32) = 3.34, p = .048, ηp2 = .17. In this group, angry faces elicited larger ssVEP amplitudes than neutral faces and, to a lesser extent, than happy faces, F(1,16) = 5.72, p = .029, ηp2 = .26, and F(1,16) = 3.61, p = .076, ηp2 = .18, respectively.

Taken together, the separate analyses of the consecutive time intervals support the results of the overall ANOVA (see Table 1), showing sustained preferential processing of angry faces in the HSA group, but they do not suggest specific dynamic changes over time.
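
The interval analyses above, like the means in Table 1, average a time-varying ssVEP amplitude within fixed post-onset windows. A minimal sketch of that averaging step follows. It assumes the amplitude envelope has already been extracted for one condition and channel group; the envelope method, the sampling rate, and the function name `window_means` are hypothetical and not taken from this section.

```python
from statistics import fmean

# Analysis windows in ms after stimulus onset, as used in the text and Table 1.
WINDOWS_MS = {
    "100-3000 ms": (100, 3000),
    "100-500 ms": (100, 500),
    "500-1000 ms": (500, 1000),
    "1000-2000 ms": (1000, 2000),
    "2000-3000 ms": (2000, 3000),
}


def window_means(envelope, fs_hz, onset_index=0, windows=WINDOWS_MS):
    """Average an ssVEP amplitude envelope within each analysis window.

    envelope: amplitude values (e.g., in microvolts), one per sample.
    fs_hz: sampling rate in Hz (hypothetical; not stated in this section).
    onset_index: sample index of stimulus onset within `envelope`.
    Windows are treated as half-open intervals [start, end) in milliseconds.
    """
    means = {}
    for name, (start_ms, end_ms) in windows.items():
        first = onset_index + round(start_ms * fs_hz / 1000)
        last = onset_index + round(end_ms * fs_hz / 1000)
        if first < 0 or last > len(envelope) or first >= last:
            raise ValueError(f"window {name} falls outside the envelope")
        means[name] = fmean(envelope[first:last])
    return means


# Usage with a hypothetical 3 s envelope sampled at 500 Hz (a linear ramp).
ramp = [i / 500 for i in range(1500)]
for name, value in window_means(ramp, fs_hz=500).items():
    print(f"{name}: {value:.3f}")
```

Per-participant window means obtained this way would then enter the ANOVAs; the sketch covers only the averaging, not the statistics.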

Competition analysis

The specific analysis of potential effects of the competing stimulus on the processing of facial expressions was carried out only for stimulus pairs containing different faces, using the same time windows as in the main analysis. This analysis did not yield any significant results involving the factors stimulus-competitor combination, hemifield, or group. Hence, no evidence for competition effects between facial expressions was detected. With regard to statistical power, the small effect size (ηp2 = .04) for the Group × Stimulus combination interaction indicates that the non-significant result is unlikely to be due merely to low statistical power.

Carrier frequencies

To exclude the possibility that the different tagging frequencies (14 Hz and 17.5 Hz) affected the modulation of the ssVEPs, we also conducted the omnibus ANOVA on the whole time window described above, including the additional within-subject factor carrier frequency (14 vs. 17.5 Hz). No effects involving this factor were found.
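
This control rests on the logic of frequency tagging: because each face flickers at its own rate, the ssVEP it drives can be read out at that rate even when both responses overlap at the same electrodes. The toy sketch below illustrates the principle with synthetic data and a single-bin discrete Fourier transform. The sampling rate, epoch length, amplitudes, and the helper `amplitude_at` are hypothetical, and this is not presented as the amplitude-extraction method used in the study.

```python
import cmath
import math


def amplitude_at(signal, fs_hz, freq_hz):
    """Single-bin DFT amplitude of `signal` at `freq_hz`.

    Returns 2 * |X(f)| / N, which equals the amplitude of a sinusoid at
    freq_hz when the epoch spans an integer number of its cycles.
    """
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * k / fs_hz)
              for k, x in enumerate(signal))
    return 2 * abs(acc) / n


# Hypothetical recording parameters: 500 Hz sampling and a 2 s epoch, giving
# 0.5 Hz resolution so that 14 Hz and 17.5 Hz both fall exactly on DFT bins.
FS = 500
N = 2 * FS
TAGS = (14.0, 17.5)

# Synthetic "ssVEP": two superimposed sinusoids with different amplitudes,
# standing in for the responses driven by the two flickering faces.
true_amp = {14.0: 1.0, 17.5: 0.5}
eeg = [sum(a * math.sin(2 * math.pi * f * k / FS) for f, a in true_amp.items())
       for k in range(N)]

for f in TAGS:
    print(f"{f:>5} Hz: {amplitude_at(eeg, FS, f):.3f}")
```

Choosing an epoch that contains an integer number of cycles of both tagging frequencies prevents leakage between the two tags. With shorter windows (e.g., 1000 ms, 1 Hz resolution), 17.5 Hz would fall between bins, so a time-resolved estimate such as a Hilbert-transform envelope of band-pass filtered data would be more appropriate.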

SAM ratings

The analysis of the valence and arousal ratings of the facial expressions did not yield any differences between HSA and LSA. Overall, participants rated the emotional expressions as differentially arousing, F(2,64) = 9.00, p = .001, ηp2 = .22. Post-hoc t tests revealed that angry (M = 4.66, SD = 1.51) as well as happy faces (M = 4.87, SD = 1.26) were rated as more arousing than neutral ones (M = 3.98, SD = 1.11), t(33) = 3.47, p = .001, and t(33) = 4.56, p < .001, respectively, whereas no difference was found between angry and happy facial expressions, t(33) = 0.84, p = .40. As expected, pleasantness/unpleasantness ratings varied with facial expression, F(2,64) = 107.41, GG-ε = .60, p < .001, ηp2 = .77. Planned contrasts indicated that happy faces (M = 3.64, SD = 0.90) were rated as more pleasant than neutral (M = 5.45, SD = 0.37) as well as angry faces (M = 6.37, SD = 0.87), t(33) = 11.92, p < .001, and t(33) = 10.75, p < .001, respectively. Furthermore, angry faces were rated as more unpleasant than neutral faces, t(33) = 6.16, p < .001. Taken together, emotional faces were rated as more arousing than neutral faces, and as more pleasant (happy faces) and more unpleasant (angry faces), respectively.

Discussion

The present study investigated continuous fluctuations of selective electrocortical facilitation during competition of two simultaneously presented facial expressions in high and low socially anxious individuals by means of steady-state VEPs in a passive viewing paradigm. We found that angry facial expressions elicited enhanced ssVEP amplitudes compared to happy and neutral expressions, specifically in the high socially anxious individuals. No reliable amplitude differences were found for low socially anxious individuals. This pattern of results was independent of the facial expression presented as the competing stimulus. Heightened electrocortical engagement in the socially anxious participants was present in the first second of stimulus array presentation and was sustained for the entire viewing period. Thus, the present results support the idea that, under passive viewing conditions of simultaneously presented facial expressions, angry facial expressions are preferentially selected by high socially anxious individuals. Furthermore, and in contrast to a vigilance-avoidance pattern of attention allocation, this attentional capture by angry faces was sustained for the entire stimulation period without any evidence of perceptual avoidance in later stages of attentive processing.
These findings are in line with research demonstrating heightened perceptual vigilance for social threat (angry faces) in social anxiety (for a review, see Schultz & Heimberg, 2008), but extend previous behavioral and eye-tracking studies by employing a continuous neurophysiological measure of perceptual processing under competition. The finding of a sustained ssVEP facilitation for angry faces in the high anxious group is consistent with the notion that socially anxious individuals have difficulty disengaging attention from social threat cues (Fox, Russo, & Dutton, 2002; Georgiou et al., 2005; Schutter, de Haan, & van Honk, 2004), and it shows that this deficit is present at the level of sensory processing. In addition, the amount of perceptual competition between two face stimuli was quantified at high temporal resolution, based on frequency tagging of the stimuli. The temporal analysis of the time-varying ssVEP amplitude demonstrated that the sustained prioritization of visual processing of the threat cue emerges early and rapidly in socially anxious individuals: it is present during the build-up phase of the driven oscillation that constitutes the ssVEP and is maintained throughout the viewing epoch.

Strikingly, ssVEP amplitudes in the LSA group were descriptively higher than in the HSA group, although this difference was not statistically significant. The lack of significance reflects the much larger variability of ssVEP amplitudes in the LSA group compared to the HSA group. Most likely, individuals with medium to low scores on social anxiety measures vary considerably in their responses to facial expressions. There is an ongoing debate as to whether non-anxious individuals show any sign of preferential processing of angry or happy facial expressions (e.g., Juth et al., 2005; Öhman, Lundqvist, & Esteves, 2001), whereas highly anxious participants more uniformly show an attentional bias for threatening faces (Bar-Haim et al., 2007), as also seen in our data. Consequently, higher variability in ssVEP amplitudes would be expected for low compared to high socially anxious individuals.

A central question of the present study concerned the competition for resources between the two frequency-tagged stimuli in the field of view. If angry faces capture and hold resources, then they should act as competitors, interfering with the processing of a concurrent stimulus. Alternatively, weak cues may bias attention to a particular location or visual feature without substantially tapping into the resources necessary to process the competing visual object. Examining both allocation and cost effects associated with viewing two competing faces, we found no evidence for resource-sharing effects imposed by a competing face, irrespective of facial expression and group. Together with the enhancement effect observed for angry faces in the high anxious group, this result suggests that the presence of a social threat cue leads to the allocation of additional resources that are not drawn at the cost of a competing social (face) stimulus. Such additive effects of attended cues and their emotional content have been observed in earlier work with ssVEPs (Keil et al., 2005), which has been taken to indicate that affective cues in non-attended areas of the visual field are associated with facilitated cortical processing (Keil et al., 2001). We also found that adding an angry face in the non-tagged hemifield did not further increase the amplitude, in contrast to the explicit spatial attention manipulation in Keil et al. (2005). This highlights an important difference between paradigms in which attention is directed to a task in a given hemifield and paradigms in which the spontaneous sharing of resources across hemifields is measured by means of frequency tagging.

Another explanation for the lack of competition effects might be that faces are in general less emotionally arousing than other emotional stimuli such as complex scenes, as has been demonstrated in several psychophysiological and brain imaging studies (Bradley, Codispoti, Cuthbert, & Lang, 2001; Britton, Taylor, Sudheimer, & Liberzon, 2006). Furthermore, research on face processing has shown that competition effects are more likely to be observed when a non-face object competes with a face (Langton, Law, Burton, & Schweinberger, 2008). As measured by ssVEPs, resources are devoted to the angry face in social anxiety; however, this does not attenuate the processing of other, competing facial expressions. It should be noted that, given the passive viewing design of our study, no strong inferences can be drawn with regard to the behavioral relevance of such competition effects, as there was no explicit attention task involving the face stimuli. Such a task would be a critical condition for quantifying any distracting effects of the competitor face at the behavioral level: for instance, emotionally arousing but task-irrelevant background pictures (complex scenes) have been shown to withdraw processing resources from a challenging motion detection task (Müller et al., 2008).

In addition to examining the effects of the nature of competing stimuli and tasks, future research should also systematically evaluate the role of their spatial arrangement. Using ssVEPs, it has been shown that the attentional focus can be divided into non-contiguous zones of the visual field (Müller et al., 2003). Thus, the lack of competition effects observed in our study might reflect the ability to allocate resources across the field of view to facilitate perception of spatially separated social stimuli. Similarly, ERP studies of emotion-attention interactions have suggested that the late positive potential (LPP), an electrocortical measure of emotional engagement, varies with content even when competing tasks are present in a spatially overlapping fashion (Hajcak, Dunning, & Foti, 2007). When the focus of spatial attention was shifted between arousing and non-arousing elements of the same picture, the LPP remained relatively enhanced even when participants attended to affectively neutral elements of an otherwise arousing stimulus (Dunning & Hajcak, 2009). Interestingly, when unattended emotional stimuli were presented at spatially separated sites, content differences were no longer reflected in the LPP amplitude (MacNamara & Hajcak, 2009).

In terms of the neurophysiology of the present results, one candidate structure potentially underlying the enhanced visual processing of angry facial expressions in socially anxious observers is the amygdaloid complex (Sabatinelli, Lang, Keil, & Bradley, 2007). Neuroanatomically, there are dense re-entrant projections from the amygdaloid nuclei to visual cortical areas (Amaral, Behniea, & Kelly, 2003), possibly facilitating processing in visual cortex and thus underlying attention to potential sources of threat (Sabatinelli, Lang, Bradley, Costa, & Keil, 2009). In the context of the present study, angry compared to happy and neutral facial expressions may serve as discriminative stimuli with high predictive value for negative outcomes (such as negative feedback and disapproval) and may thus be associated with amplified sensory processing that facilitates the detection of social threat.

Recent work with oculomotor and electrophysiological data has suggested that sustained sensory processing of angry faces might also be due to deficits in top-down attentional control in socially anxious individuals (Wieser, Pauli, & Mühlberger, 2009). Theoretical models of clinical anxiety posit, for instance, that anxiety disorders are associated with impaired attentional control (Eysenck, Derakshan, Santos, & Calvo, 2007). Heightened anxiety has been theoretically related to diminished top-down regulation of amygdala responses to threatening stimuli by prefrontal cortical areas (Bishop, 2007), and a recent fMRI study reported that in socially anxious individuals, the activation of prefrontal brain areas is reduced during viewing of facial expressions, whereas activations in a widespread emotional network are enhanced (Gentili et al., 2008). Taken together, enhanced sensory processing of phobic stimuli may be due to various modulatory connections from cortical and subcortical structures to early visual areas, leading to a preferential selection of angry faces without costs for processing other stimuli present in the visual field.

With regard to the vigilance-avoidance hypothesis of social anxiety, our findings do not support the assumption of perceptual avoidance, but instead point to anxious observers’ tendency to overengage attention with cues of social threat (Rapee & Heimberg, 1997), and to do so in a sustained fashion. Similar findings have been reported when socially anxious participants performed a task in front of a live audience and were shown to monitor audience behaviors indicative of negative evaluation, such as frowning and yawning, more vigilantly (Veljaca & Rapee, 1998). Research such as this supports Rapee and Heimberg’s (1997) model of social anxiety, in which continuous monitoring of social threat is assumed to be an important factor in maintaining the symptoms of the disorder. In this model, detection of threat in the environment (e.g., threatening faces in an audience) leads to an enhanced focus on the internal representation of the self (e.g., cognitions about how incompetent one appears to others), which in turn further augments vigilance for threat cues in the environment that are likely to confirm the person’s fears (Heimberg & Becker, 2002).

One reason for the lack of evidence for perceptual avoidance of angry faces in our study may be that the stimulus array consisted of two faces in all conditions. Perceptual avoidance, however, may require a non-face option in the visual field (Chen et al., 2002). In the present design, avoiding one face would likely involve attending to another face, which might be an uncomfortable alternative for socially anxious participants (Wieser et al., 2009). Additionally, support for perceptual avoidance of threatening faces has primarily come from dot-probe experiments that included a social threat induction through the anticipation of an upcoming speech performance (Chen et al., 2002; Mansell et al., 1999), a manipulation that was not part of the present study.

Despite the group differences in electrocortical responses to angry faces, no such differences were observed in the subjective ratings of facial expressions. This is in line with previous studies in which affective ratings of facial expressions did not differ between healthy controls and patients with social phobia (Kolassa et al., 2009). Likewise, a recent study found modulation of electrocortical responses to angry versus neutral faces in anxious participants, whereas ratings of the faces were unaffected by state social anxiety (Wieser et al., 2010), a dissociation between the neural and behavioral levels that has been repeatedly reported (Bar-Haim, Lamy, & Glickman, 2005; Moser et al., 2008).

Overall, the present results support the hypothesis that angry facial expressions are preferentially processed only in socially anxious individuals. No preferential processing was found in non-anxious participants, challenging the assumption of a general processing advantage for angry facial expressions. Under passive viewing conditions, this selective processing persists throughout the entire presentation of an angry face. This supports the notion that angry faces bear high relevance for socially anxious individuals and, by engaging a perceptual subset of fear networks, attract attention involuntarily through re-entrant modulation of sensory stimulus processing, most likely to allow for prioritized responses to threat stimuli. Such sustained vigilance may also reflect impaired habituation to threat in socially anxious individuals (Eckman & Shean, 1997; Mauss, Wilhelm, & Gross, 2003), a putative factor in the maintenance of pathological anxiety.

Social threat stimuli were selectively processed over longer viewing periods. The lack of competition effects across locations in space suggests that emotional faces attract resources and facilitate perceptual and attentive processing, but not at the cost of concurrent processing. Thus, the overall signal energy allocated to the visual array as a whole is increased if a threat cue is present. Future research may use concurrent ERP and ssVEP measures to examine the temporal development of these effects at higher temporal resolution. A pertinent clinical research question will be whether the time course of attentional resource allocation is reliably related to symptom severity in patients with social phobia, and whether it can be used to characterize the perceptual and attentional dysfunctions commonly seen in this disorder.

Acknowledgments

The authors would like to thank Raena Marder and Hailey Waddell for help in data acquisition.

This research was supported by a fellowship within the Postdoc-Program of the German Academic Exchange Service (DAAD) and a Research Training Award by the Society for Psychophysiological Research (SPR) awarded to M.J.W.

Footnotes

To test for the contribution of pairs in which both facial expressions were the same (i.e., angry - angry, neutral - neutral, happy - happy), an ANOVA with the between-subject factor Group and the within-subject factor Tagged facial expression was conducted on these pairs. A significant Tagged facial expression × Group interaction was found, F(2,64) = 3.17, p = .049, ηp2 = .09. Post-hoc comparisons in each group showed a marginally significant modulation of ssVEPs only in HSA, F(2,32) = 3.12, p = .058, ηp2 = .16, which was due to larger ssVEP amplitudes in response to angry - angry pairs compared to neutral - neutral pairs, F(1,16) = 4.53, p = .049, ηp2 = .16. Given this small effect size relative to the overall design, this analysis strongly suggests that the significant effect in the overall ANOVA is not merely due to the inclusion of pairs in which both facial expressions were the same.

References

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118(4):1099–1120. doi: 10.1016/s0306-4522(02)01001-1. [DOI] [PubMed] [Google Scholar]

- Bar-Haim Y, Lamy D, Glickman S. Attentional bias in anxiety: a behavioral and ERP study. Brain and Cognition. 2005;59(1):11–22. doi: 10.1016/j.bandc.2005.03.005. [DOI] [PubMed] [Google Scholar]

- Bar-Haim Y, Lamy D, Pergamin L, Bakermans-Kranenburg MJ, van Ijzendoorn MH. Threat-related attentional bias in anxious and nonanxious individuals: A meta-analytic study. Psychological Bulletin. 2007;133(1):1–24. doi: 10.1037/0033-2909.133.1.1. [DOI] [PubMed] [Google Scholar]

- Birbaumer N, Grodd W, Diedrich O, Klose U, Erb M, Lotze M, et al. fMRI reveals amygdala activation to human faces in social phobics. Neuroreport. 1998;9(6):1223–1226. doi: 10.1097/00001756-199804200-00048. [DOI] [PubMed] [Google Scholar]

- Bishop SJ. Neurocognitive mechanisms of anxiety: An integrative account. Trends in Cognitive Sciences. 2007;11(7):307–316. doi: 10.1016/j.tics.2007.05.008. [DOI] [PubMed] [Google Scholar]

- Bradley BP, Mogg K, Falla SJ, Hamilton LR. Attentional bias for threatening facial expressions in anxiety: Manipulation of stimulus duration. Cognition and Emotion. 1998;12(6):737–753. [Google Scholar]

- Bradley BP, Mogg K, Millar NH. Covert and overt orienting of attention to emotional faces in anxiety. Cognition and Emotion. 2000;14(6):789–808. [Google Scholar]

- Bradley MM, Codispoti M, Cuthbert BN, Lang PJ. Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion. 2001;1(3):276–298. [PubMed] [Google Scholar]

- Bradley MM, Lang PJ. Measuring emotion: The Self-Assessment Manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9. [DOI] [PubMed] [Google Scholar]

- Bradley MM, Sabatinelli D, Lang PJ, Fitzsimmons JR, King W, Desai P. Activation of the visual cortex in motivated attention. Behavioral Neuroscience. 2003;117(2):369–380. doi: 10.1037/0735-7044.117.2.369. [DOI] [PubMed] [Google Scholar]

- Britton JC, Taylor SF, Sudheimer KD, Liberzon I. Facial expressions and complex IAPS pictures: Common and diffferential networks. Neuroimage. 2006;31:906–919. doi: 10.1016/j.neuroimage.2005.12.050. [DOI] [PubMed] [Google Scholar]

- Chen YP, Ehlers A, Clark DM, Mansell W. Patients with generalized social phobia direct their attention away from faces. Behaviour Research and Therapy. 2002;40(6):677–687. doi: 10.1016/s0005-7967(01)00086-9. [DOI] [PubMed] [Google Scholar]

- Desimone R. Visual attention mediated by biased competition in extrastriate visual cortex. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1998;353(1373):1245–1255. doi: 10.1098/rstb.1998.0280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Di Russo F, Pitzalis S, Aprile T, Spitoni G, Patria F, Stella A, et al. Spatiotemporal analysis of the cortical sources of the steady-state visual evoked potential. Human Brain Mapping. 2007;28(4):323–334. doi: 10.1002/hbm.20276. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dunning JP, Hajcak G. See no evil: Directing visual attention within unpleasant images modulates the electrocortical response. Psychophysiology. 2009;46(1):28–33. doi: 10.1111/j.1469-8986.2008.00723.x. [DOI] [PubMed] [Google Scholar]

- Eckman PS, Shean GD. Habituation of cognitive and physiological arousal and social anxiety. Behaviour Research and Therapy. 1997;35(12):1113–1121. [PubMed] [Google Scholar]

- Evans KC, Wright CI, Wedig MM, Gold AL, Pollack MH, Rauch SL. A functional MRI study of amygdala responses to angry schematic faces in social anxiety disorder. Depression and Anxiety. 2008;25(6):496–505. doi: 10.1002/da.20347. [DOI] [PubMed] [Google Scholar]

- Eysenck MW, Derakshan N, Santos R, Calvo MG. Anxiety and cognitive performance: Attentional control theory. Emotion. 2007;7(2):336–353. doi: 10.1037/1528-3542.7.2.336. [DOI] [PubMed] [Google Scholar]

- Fox E, Russo R, Dutton K. Attentional bias for threat: Evidence for delayed disengagement from emotional faces. Cognition & Emotion. 2002;16(3):355–379. doi: 10.1080/02699930143000527. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fresco DM, Coles ME, Heimberg RG, Leibowitz MR, Hami S, Stein MB, et al. The Liebowitz Social Anxiety Scale: A comparison of the psychometric properties of self-report and clinician-administered formats. Psychological Medicine. 2001;31(6):1025–1035. doi: 10.1017/s0033291701004056. [DOI] [PubMed] [Google Scholar]

- Garner M, Mogg K, Bradley BP. Orienting and maintenance of gaze to facial expressions in social anxiety. Journal of Abnormal Psychology. 2006;115(4):760–770. doi: 10.1037/0021-843X.115.4.760. [DOI] [PubMed] [Google Scholar]

- Gentili C, Gobbini MI, Ricciardi E, Vanello N, Pietrini P, Haxby JV, et al. Differential modulation of neural activity throughout the distributed neural system for face perception in patients with Social Phobia and healthy subjects. Brain Research Bulletin. 2008;77(5):286–292. doi: 10.1016/j.brainresbull.2008.08.003. [DOI] [PubMed] [Google Scholar]

- Georgiou GA, Bleakley C, Hayward J, Russo R, Dutton K, Eltiti S, et al. Focusing on fear: Attentional disengagement from emotional faces. Visual Cognition. 2005;12(1):145–158. doi: 10.1080/13506280444000076. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goeleven E, De Raedt R, Leyman L, Verschuere B. The Karolinska directed emotional faces: A validation study. Cognition and Emotion. 2008;22(6):1094–1118. [Google Scholar]

- Hajcak G, Dunning JP, Foti D. Neural response to emotional pictures is unaffected by concurrent task difficulty: An event-related potential study. Behavioral Neuroscience. 2007;121(6):1156–1162. doi: 10.1037/0735-7044.121.6.1156. [DOI] [PubMed] [Google Scholar]

- Heimberg RG, Becker RE. Cognitive-behavioral group therapy for social phobia: Basic mechanisms and clinical strategies. New York: The Guilford Press; 2002. [Google Scholar]

- Hillyard SA, Vogel EK, Luck SJ. Sensory gain control (amplification) as a mechanism of selective attention: electrophysiological and neuroimaging evidence. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1998;353(1373):1257–1270. doi: 10.1098/rstb.1998.0281. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Junghöfer M, Elbert T, Tucker DM, Rockstroh B. Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37(4):523–532. [PubMed] [Google Scholar]

- Juth P, Lundqvist D, Karlsson A, Öhman A. Looking for foes and friends: Perceptual and emotional factors when finding a face in the Crowd. Emotion. 2005;5(4):379–395. doi: 10.1037/1528-3542.5.4.379. [DOI] [PubMed] [Google Scholar]

- Keil A, Moratti S, Sabatinelli D, Bradley MM, Lang PJ. Additive effects of emotional content and spatial selective attention on electrocortical facilitation. Cerebral Cortex. 2005;15(8):1187–1197. doi: 10.1093/cercor/bhi001. [DOI] [PubMed] [Google Scholar]

- Keil A, Moratti S, Stolarova M. Sensory amplification for affectively arousing stimuli: Electrocortical evidence across multiple channels. Journal of Psychophysiology. 2003;17(3):158–159. [Google Scholar]

- Keil A, Muller MM, Gruber T, Wienbruch C, Stolarova M, Elbert T. Effects of emotional arousal in the cerebral hemispheres: a study of oscillatory brain activity and event-related potentials. Clinical Neurophysiology. 2001;112(11):2057–2068. doi: 10.1016/s1388-2457(01)00654-x. [DOI] [PubMed] [Google Scholar]

- Keil A, Smith JC, Wangelin BC, Sabatinelli D, Bradley MM, Lang PJ. Electrocortical and electrodermal responses covary as a function of emotional arousal: A single-trial analysis. Psychophysiology. 2008;45(4):516–523. doi: 10.1111/j.1469-8986.2008.00667.x. [DOI] [PubMed] [Google Scholar]

- Kemp AH, Gray MA, Eide P, Silberstein RB, Nathan PJ. Steady-state visually evoked potential topography during processing of emotional valence in healthy subjects. NeuroImage. 2002;17(4):1684–1692. doi: 10.1006/nimg.2002.1298. [DOI] [PubMed] [Google Scholar]

- Kolassa I-T, Kolassa S, Musial F, Miltner WHR. Event-related potentials to schematic faces in social phobia. Cognition and Emotion. 2007;21(8):1721–1744. [Google Scholar]

- Kolassa I-T, Miltner WHR. Psychophysiological correlates of face processing in social phobia. Brain Research. 2006;1118(1):130–141. doi: 10.1016/j.brainres.2006.08.019. [DOI] [PubMed] [Google Scholar]

- Kolassa IT, Kolassa S, Bergmann S, Lauche R, Dilger S, Miltner WHR, et al. Interpretive bias in social phobia: An ERP study with morphed emotional schematic faces. Cognition & Emotion. 2009;23(1):69–95. [Google Scholar]

- Koster EHW, Crombez G, Verschuere B, De Houwer J. Selective attention to threat in the dot probe paradigm: differentiating vigilance and difficulty to disengage. Behaviour Research and Therapy. 2004;42:1183–1192. doi: 10.1016/j.brat.2003.08.001. [DOI] [PubMed] [Google Scholar]

- Lang PJ, Bradley MM, Cuthbert BN. Motivated attention: Affect, activation, and action. In: Lang PJ, Simons RF, Balaban MT, editors. Attention and Orienting: Sensory and Motivational Processes. Mahwah, NJ: Erlbaum; 1997. pp. 97–135. [Google Scholar]

- Lang PJ, Bradley MM, Fitzsimmons JR, Cuthbert BN, Scott JD, Moulder B, et al. Emotional arousal and activation of the visual cortex: an fMRI analysis. Psychophysiology. 1998;35(2):199–210. [PubMed] [Google Scholar]

- Langton SRH, Law AS, Burton AM, Schweinberger SR. Attention capture by faces. Cognition. 2008;107(1):330–342. doi: 10.1016/j.cognition.2007.07.012. [DOI] [PubMed] [Google Scholar]

- Lundqvist D, Flykt A, Öhman A. Karolinska directed emotional faces (KDEF) Stockholm: Karolinska Institutet; 1998. [Google Scholar]

- MacNamara A, Hajcak G. Anxiety and spatial attention moderate the electrocortical response to aversive pictures. Neuropsychologia. 2009;47(13):2975–2980. doi: 10.1016/j.neuropsychologia.2009.06.026. [DOI] [PubMed] [Google Scholar]

- Mansell W, Clark DM, Ehlers A, Chen YP. Social anxiety and attention away from emotional faces. Cognition and Emotion. 1999;13(6):673–690. [Google Scholar]

- Mauss IB, Wilhelm FH, Gross JJ. Autonomic recovery and habituation in social anxiety. Psychophysiology. 2003;40(4):648–653. doi: 10.1111/1469-8986.00066. [DOI] [PubMed] [Google Scholar]

- Mogg K, Bradley BP. Selective orienting of attention to masked threat faces in social anxiety. Behaviour Research and Therapy. 2002;40(12):1403–1414. doi: 10.1016/s0005-7967(02)00017-7. [DOI] [PubMed] [Google Scholar]

- Mogg K, Bradley BP, De Bono J, Painter M. Time course of attentional bias for threat information in non-clinical anxiety. Behaviour Research and Therapy. 1997;35(4):297–303. doi: 10.1016/s0005-7967(96)00109-x. [DOI] [PubMed] [Google Scholar]

- Mogg K, Garner M, Bradley BP. Anxiety and orienting of gaze to angry and fearful faces. Biological Psychology. 2007;76(3):163–169. doi: 10.1016/j.biopsycho.2007.07.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Moratti S, Keil A, Miller GA. Fear but not awareness predicts enhanced sensory processing in fear conditioning. Psychophysiology. 2006;43(2):216–226. doi: 10.1111/j.1469-8986.2006.00386.x.

- Moratti S, Keil A, Stolarova M. Motivated attention in emotional picture processing is reflected by activity modulation in cortical attention networks. Neuroimage. 2004;21(3):954–964. doi: 10.1016/j.neuroimage.2003.10.030.

- Morgan ST, Hansen JC, Hillyard SA. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proceedings of the National Academy of Sciences. 1996;93(10):4770–4774. doi: 10.1073/pnas.93.10.4770.

- Moser JS, Huppert JD, Duval E, Simons RF. Face processing biases in social anxiety: An electrophysiological study. Biological Psychology. 2008;78(1):93–103. doi: 10.1016/j.biopsycho.2008.01.005.

- Mühlberger A, Wieser MJ, Hermann MJ, Weyers P, Tröger C, Pauli P. Early cortical processing of natural and artificial emotional faces differs between lower and higher socially anxious persons. Journal of Neural Transmission. 2009;116:735–746. doi: 10.1007/s00702-008-0108-6.

- Müller MM, Andersen SK, Keil A. Time course of competition for visual processing resources between emotional pictures and foreground task. Cerebral Cortex. 2008;18(8):1892–1899. doi: 10.1093/cercor/bhm215.

- Müller MM, Hübner R. Can the spotlight of attention be shaped like a doughnut? Psychological Science. 2002;13(2):119–124. doi: 10.1111/1467-9280.00422.

- Müller MM, Malinowski P, Gruber T, Hillyard SA. Sustained division of the attentional spotlight. Nature. 2003;424(6946):309–312. doi: 10.1038/nature01812.

- Müller MM, Teder W, Hillyard SA. Magnetoencephalographic recording of steady-state visual evoked cortical activity. Brain Topography. 1997;9(3):163–168. doi: 10.1007/BF01190385.

- Öhman A. Face the beast and fear the face: Animal and social fears as prototypes for evolutionary analyses of emotion. Psychophysiology. 1986;23(2):123–145. doi: 10.1111/j.1469-8986.1986.tb00608.x.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001;80:381–396. doi: 10.1037/0022-3514.80.3.381.

- Öhman A, Mineka S. Fears, phobias, and preparedness: toward an evolved module of fear and fear learning. Psychological Review. 2001;108(3):483–522. doi: 10.1037/0033-295x.108.3.483.

- Perlstein WM, Cole MA, Larson M, Kelly K, Seignourel P, Keil A. Steady-state visual evoked potentials reveal frontally-mediated working memory activity in humans. Neuroscience Letters. 2003;342(3):191–195. doi: 10.1016/s0304-3940(03)00226-x.

- Phan KL, Fitzgerald DA, Nathan PJ, Tancer ME. Association between amygdala hyperactivity to harsh faces and severity of social anxiety in generalized social phobia. Biological Psychiatry. 2006;59(5):424–429. doi: 10.1016/j.biopsych.2005.08.012.

- Picton TW, Bentin S, Berg P, Donchin E, Hillyard SA, Johnson R, Jr, et al. Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology. 2000;37(2):127–152.

- Rapee RM, Heimberg RG. A cognitive-behavioral model of anxiety in social phobia. Behaviour Research and Therapy. 1997;35(8):741–756. doi: 10.1016/s0005-7967(97)00022-3.

- Regan D. Human Brain Electrophysiology: Evoked Potentials and Evoked Magnetic Fields in Science and Medicine. New York: Elsevier; 1989.

- Sabatinelli D, Lang PJ, Bradley MM, Costa VD, Keil A. The timing of emotional discrimination in human amygdala and ventral visual cortex. Journal of Neuroscience. 2009;29(47):14864–14868. doi: 10.1523/JNEUROSCI.3278-09.2009.

- Sabatinelli D, Lang PJ, Keil A, Bradley MM. Emotional perception: Correlation of functional MRI and event-related potentials. Cerebral Cortex. 2007;17(5):1085–1091. doi: 10.1093/cercor/bhl017.

- Schultz LT, Heimberg RG. Attentional focus in social anxiety disorder: Potential for interactive processes. Clinical Psychology Review. 2008;28(7):1206–1221. doi: 10.1016/j.cpr.2008.04.003.

- Schutter DJLG, de Haan EHF, van Honk J. Functionally dissociated aspects in anterior and posterior electrocortical processing of facial threat. International Journal of Psychophysiology. 2004;53(1):29–36. doi: 10.1016/j.ijpsycho.2004.01.003.

- Sewell C, Palermo R, Atkinson C, McArthur G. Anxiety and the neural processing of threat in faces. Neuroreport. 2008;19:1339–1343. doi: 10.1097/WNR.0b013e32830baadf.

- Sposari JA, Rapee RM. Attentional bias toward facial stimuli under conditions of social threat in socially phobic and nonclinical participants. Cognitive Therapy and Research. 2007;31:23–37.

- Stein MB, Goldin PR, Sareen J, Zorrilla LTE, Brown GG. Increased amygdala activation to angry and contemptuous faces in generalized social phobia. Archives of General Psychiatry. 2002;59(11):1027–1034. doi: 10.1001/archpsyc.59.11.1027.

- Straube T, Mentzel HJ, Miltner WHR. Common and distinct brain activation to threat and safety signals in social phobia. Neuropsychobiology. 2005;52:163–168. doi: 10.1159/000087987.

- Veit R, Flor H, Erb M, Hermann C, Lotze M, Grodd W, et al. Brain circuits involved in emotional learning in antisocial behavior and social phobia in humans. Neuroscience Letters. 2002;328(3):233–236. doi: 10.1016/s0304-3940(02)00519-0.

- Veljaca KA, Rapee RM. Detection of negative and positive audience behaviours by socially anxious subjects. Behaviour Research and Therapy. 1998;36(3):311–321. doi: 10.1016/s0005-7967(98)00016-3.

- Wieser MJ, Pauli P, Mühlberger A. Probing the attentional control theory in social anxiety: An emotional saccade task. Cognitive, Affective, & Behavioral Neuroscience. 2009;9(3):314–322. doi: 10.3758/CABN.9.3.314.

- Wieser MJ, Pauli P, Reicherts P, Mühlberger A. Don’t look at me in anger! Enhanced processing of angry faces in anticipation of public speaking. Psychophysiology. 2010;47:271–280. doi: 10.1111/j.1469-8986.2009.00938.x.

- Wieser MJ, Pauli P, Weyers P, Alpers GW, Mühlberger A. Fear of negative evaluation and the hypervigilance-avoidance hypothesis: An eye-tracking study. Journal of Neural Transmission. 2009;116:717–723. doi: 10.1007/s00702-008-0101-0.

- Yoon KL, Fitzgerald DA, Angstadt M, McCarron RA, Phan KL. Amygdala reactivity to emotional faces at high and low intensity in generalized social phobia: A 4-Tesla functional MRI study. Psychiatry Research: Neuroimaging. 2007;154:93–98. doi: 10.1016/j.pscychresns.2006.05.004.