Abstract

What does it mean to “know” what an object is? Viewing objects from different categories (e.g., tools vs. animals) engages distinct brain regions, but it is unclear whether these differences reflect object categories themselves or the tendency to interact differently with objects from different categories (grasping tools, not animals). Here we test how the brain constructs representations of objects that one learns to name or physically manipulate. Participants learned to name or tie different knots, and their brain activity was measured whilst they performed a perceptual discrimination task with these knots before and after training. The anterior intraparietal sulcus, a region involved in object manipulation, was specifically engaged when participants viewed knots they had learned to tie. This suggests that object knowledge is linked to sensorimotor experience and its associated neural systems for object manipulation. These findings are consistent with a theory of embodiment in which the brain systems that support conceptual knowledge and those that control object manipulation can clearly overlap.

Keywords: Action learning, Object representation, Perception, Neuroimaging, Parietal

1. Introduction

In daily life, we encounter an array of objects that we are able to effortlessly identify and interact with, based on prior experience with these objects. Converging evidence from research with neurological patients (Buxbaum, 2001; De Renzi, Faglioni, & Sorgato, 1982; Johnson, 2000; Johnson, Sprehn, & Saykin, 2002; Rothi & Heilman, 1997), non-human primates (Gardner, Babu, Ghosh, Sherwood, & Chen, 2007; Gardner, Ro, Babu, & Ghosh, 2007; Sakata, Tsutsui, & Taira, 2005; Ungerleider & Mishkin, 1982), and neurologically healthy individuals (Bellebaum et al., 2012; Frey, 2007; Grol et al., 2007; Mahon et al., 2007; Tunik, Rice, Hamilton, & Grafton, 2007) underscores an important feature of visual object perception: a rich array of information pertaining to an object is automatically retrieved whenever that object is encountered. However, what it means to actually know what an object is remains a matter for debate. Once the basic visual features of an object are constructed (such as the object’s shape, size, texture, and colour), knowledge can then come from both linguistic and practical experience with that object (Martin, 2007). A fundamental question is whether these two sources of knowledge are distinguishable at a behavioural or neural level. Here we make use of a knot tying paradigm, which incorporates both linguistic and practical training procedures, to examine the emergence of experience-specific object representations. For a collection of knots that were novel to them before the experiment began, participants learned a knot’s name, how to tie it, or both its name and how to tie it. Such a paradigm enables us to test whether dissociable types of knowledge can be generated for the same class of novel objects and how this is manifest in the brain.

Object knowledge is related to the way we experience objects, through perceptual, linguistic, or motor modalities (Barsalou, Simmons, Barbey, & Wilson, 2003). Two rival theories of how object knowledge is organized in the brain have been proposed. The sensorimotor feature/grounded cognition model posits that object knowledge is organized based on sensory (form/motion/colour) and motor (use/touch) features (Martin, 2007). Such an account predicts that object knowledge should be closely tied to one’s experience with a given object. In contrast, amodal accounts of object representation suggest that object knowledge is organized in the brain according to conceptual category (Caramazza & Mahon, 2003; Caramazza & Shelton, 1998; Mahon et al., 2007). In support of this theory, proponents cite an ever-increasing number of neuroimaging studies that provide evidence for dedicated neural tissue for category-specific processing of tools, plants, animals, and people as evolutionarily salient domains (Mahon & Caramazza, 2009). However, directly comparing these theories can be problematic, as the same data can be used to support both camps (Grafton, 2009; Martin, 2007). In the present study, rather than attempting to falsify these general accounts of object knowledge or pit them against each other, we aim to further delineate which types of object knowledge are grounded in the systems used to gain that knowledge (sensorimotor vs. linguistic structures). Further, we investigate how de novo sensorimotor or linguistic information is encoded in the brain and examine which brain structures are engaged when performing a task that does not require explicit recall of such knowledge or experience.

Abundant data provide evidence for activation of parietal, premotor, and temporal cortices when viewing objects that are associated with a particular action, such as tools (Boronat et al., 2005; Canessa et al., 2008; Grezes & Decety, 2002; Johnson-Frey, Newman-Norlund, & Grafton, 2005; Kiefer, Sim, Liebich, Hauk, & Tanaka, 2007; Martin, Wiggs, Ungerleider, & Haxby, 1996). However, most of the prior research on perception of functional objects has measured brain and behavioural responses to familiar, everyday objects, which means that each participant arrives at the laboratory with an individual sensorimotor history for any given object. In an attempt to extend this prior work and explore how object knowledge is constructed, several recent experiments have sought to control the amount of action experience participants have with an object by employing laboratory training procedures (Bellebaum et al., 2012; Creem-Regehr, Dilda, Vicchrilli, Federer, & Lee, 2007; Kiefer et al., 2007; Weisberg, van Turennout, & Martin, 2007). Using a particularly innovative paradigm, Weisberg et al. (2007) taught participants how to use a set of novel tools across a three-session training period. By doing so, participants gained knowledge about each object’s function. Participants were scanned before and after acquiring action experience with these novel objects, and their task in the scanner was simply to decide whether two photographs featured the same or different novel tools. Therefore, the task during scanning required a judgement of visual similarity and did not explicitly instruct participants to retrieve information gained from the training period. The authors reported training-specific increases within parietal, temporal, and premotor cortices when participants performed the perceptual discrimination task.
This evidence, along with that reported by a recent study employing similar procedures (Bellebaum et al., 2012), suggests that brief experience learning how to use a novel object can lead to action-related object representations that are accessed in a task-independent manner.

Another feature that can be accessed when viewing an object is the name or linguistic label for that object. Portions of the left inferior frontal gyrus and the middle temporal gyrus are implicated in mediating linguistic knowledge about familiar objects, whether accessed in a deliberate or spontaneous manner (Chao & Martin, 2000; Ferstl, Neumann, Bogler, & von Cramon, 2007; Grafton, Fadiga, Arbib, & Rizzolatti, 1997; Shapiro, Pascual-Leone, Mottaghy, Gangitano, & Caramazza, 2001; Tyler, Russell, Fadili, & Moss, 2001). As with studying the function of objects, most of the research to date that has investigated naming knowledge for objects has studied the perception of well-known, everyday objects. The few studies that have investigated de novo name learning for novel objects report generally consistent results, demonstrating inferior frontal and middle temporal cortical activations when accessing newly learned linguistic representations of objects (Gronholm, Rinne, Vorobyev, & Laine, 2005; James & Gauthier, 2003, 2004). What remains underexplored is whether such cortical activity is present when participants perform a task independent of the linguistic information learned about an object, and how name learning compares to learning action-related information about an object.

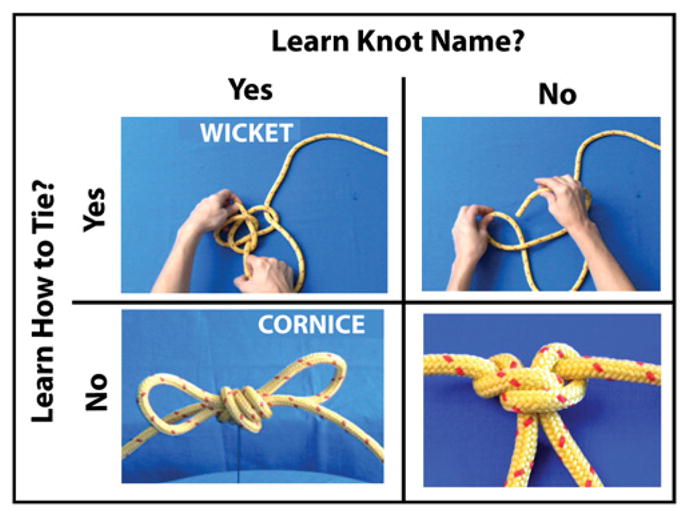

To address these outstanding issues and further delineate how object knowledge is constructed in the human brain, we measured participants’ neural activity before and after they learned to construct and name a set of novel objects. Importantly for the purposes of the present experiment, all objects shared the same function (they were all knots) and the same category membership. The task was a simple perceptual discrimination task (after Weisberg et al., 2007 and Bellebaum et al., 2012), which enables direct comparison of the influence of linguistic or action experience on task performance and is not biased towards any one type of experience. Because both the function and the visual familiarity of all objects were held constant, the only manipulated variable was prior exposure to an object’s name or to how to create it, using a two-by-two factorial design (Fig. 1). Thus, participants’ experience with each object fit into one of four training categories: (1) knowledge about a knot’s name and how to tie it; (2) knowledge about a knot’s name only; (3) knowledge about how to tie a knot only; or (4) no knowledge concerning a knot’s name or how to tie it. It has been argued that, when performing a perceptual discrimination task, participants automatically draw upon whatever associated knowledge systems are available (Martin, 2007). We hypothesize that both perceptual-motor experience and linguistic knowledge play important roles in object knowledge, and that the two kinds of experience will be differentially represented in the brain during object perception.

Fig. 1.

Two-by-two factorial design for training procedures. Participants spent approximately the same amount of time watching video stimuli for knots they were meant to learn to tie, to name, and to both name and tie.

2. Materials and methods

2.1. Subjects

Thirty right-handed (Oldfield, 1971), neurologically healthy undergraduate and graduate students participated in the behavioural portion of this study. These participants ranged in age from 17 to 27 years (mean age 19.03 years; parental consent was obtained for the one participant under the legal age of consent), and 23 were female.

Of the 30 participants who completed the behavioural training procedures, 28 participated in the functional imaging portion of the study. Eight of these were excluded from the final data analyses because a scanner malfunction produced unacceptably high levels of noise in their MRI data. All 20 participants (14 female) in the final fMRI sample were right-handed (determined by the Edinburgh handedness inventory; Oldfield, 1971) and had a mean age of 19.4 years (range 17–27 years). Informed consent was obtained in compliance with procedures set forth by the Committee for the Protection of Human Subjects at Dartmouth College. All participants were compensated for their involvement with cash or course credit. The local ethics committee approved this study. Critically, no participant had significant prior experience with tying knots or rope work.

2.2. Design

The experiment used a two-by-two within-subjects factorial design with the factors Linguistic Experience (levels: learned knot’s name; did not learn knot’s name) and Action Experience (levels: learned to tie knot; did not learn to tie knot) (Fig. 1). A within-subjects design was used to avoid confounds due to individual differences, and to determine whether it is possible to establish distinct action and linguistic representations for similar objects within the same participants. With this design, participants learned to tie one group of 10 knots, to name a second group of 10 knots, and to both tie and name a third group of 10 knots. Participants had no experience with the remaining 10 knots from the set of 40. Each group of knots for training was pseudo-randomly assembled and assigned to participants to learn.
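The within-subjects assignment of knot groups to training conditions can be illustrated with a minimal sketch. The source says only that groups were pseudo-randomly assembled and assigned; the Latin-square style rotation below (cycling the four knot groups through the four conditions across participants) and the name `assign_conditions` are hypothetical.

```python
CONDITIONS = ["tie", "name", "both", "untrained"]


def assign_conditions(participant_index: int, n_groups: int = 4) -> dict[str, int]:
    """Map each training condition to a knot-group index (0-3).

    Hypothetical rotation: participant k shifts the group-to-condition
    mapping by k positions, so across every run of four participants each
    knot group appears exactly once in each condition.
    """
    shift = participant_index % n_groups
    return {cond: (i + shift) % n_groups for i, cond in enumerate(CONDITIONS)}


# Each participant trains on three groups of 10 knots and leaves one group untrained.
for p in range(4):
    print(p, assign_conditions(p))
```

Rotating groups across participants in this way would keep any residual difference between knot groups from being confounded with a single training condition.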

2.3. Stimuli

The stimuli were 40 different knots, each formed by tying a single strand of rope. The 40 knots were chosen based on a short pilot study in which 10 knot-naïve participants (who did not participate in the present study) attempted to tie a group of 50 knots and ranked their tying difficulty. The 40 knots that were chosen ranged in tying difficulty, and each of the four groups of 10 knots constructed for testing included a selection of easy- and hard-to-tie knots, whose difficulty levels (based on the pilot study) did not statistically differ between groups (p > 0.05). For all videos, a 68.6 cm length of 11 mm yellow sailing cord was filmed on a neutral blue background. Each knot was filmed in several different ways. To construct the instructional videos that taught the tying procedures, each knot was filmed being tied once at a moderate pace. These instructional videos varied in length from 5 to 20 s (depending on the complexity of the knot), and were filmed over the tyer’s hands to provide an egocentric tying perspective. For the knot naming training, it was important that participants learn to recognize the knots they would be naming from all angles and perspectives, and that their visual experience with the knots in their ‘name’ group was equivalent in every respect to that with the knots in the ‘tie’ and ‘both’ groups, except that they never had any perceptual-motor or motor experience with the ‘name’ knots. To achieve this, we constructed videos of each knot being rotated 360° on a turntable, and added the name of each knot to these videos with Final Cut Pro™ (Apple Inc., Cupertino, CA) video editing software. Each of the naming videos was 12 s in length.
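One way to build four groups of 10 knots with comparable pilot difficulty is stratified random dealing, sketched below under stated assumptions. The source does not describe how the groups were formed; the function name `balanced_groups` and the placeholder difficulty ratings are hypothetical, and the between-group test reported in the text (p > 0.05) is not reproduced here.

```python
import random


def balanced_groups(difficulty: dict[str, float], n_groups: int = 4,
                    seed: int = 0) -> list[list[str]]:
    """Split knots into equal-sized groups with similar difficulty profiles.

    Knots are ranked by pilot difficulty; each consecutive run of n_groups
    ranks forms a stratum, and the knots in a stratum are shuffled and dealt
    one per group, so every group receives one knot from every stratum.
    """
    rng = random.Random(seed)
    ranked = sorted(difficulty, key=difficulty.get)
    if len(ranked) % n_groups:
        raise ValueError("number of knots must be a multiple of n_groups")
    groups: list[list[str]] = [[] for _ in range(n_groups)]
    for start in range(0, len(ranked), n_groups):
        stratum = ranked[start:start + n_groups]
        rng.shuffle(stratum)
        for group, knot in zip(groups, stratum):
            group.append(knot)
    return groups


# Placeholder ratings (not the pilot data): knot i has difficulty i.
pilot = {f"knot{i:02d}": float(i) for i in range(40)}
groups = balanced_groups(pilot)
means = [sum(pilot[k] for k in g) / len(g) for g in groups]
```

Because each group draws one knot from every difficulty stratum, group means can differ by at most the spread within a stratum, while the exact membership still varies with the random seed.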

The knots used in this experiment came from four general knot categories (bends, slips, loops, and stoppers). While each knot was different in terms of tying procedure and appearance, the fact that we selected several bowline knots (e.g., Eskimo bowline, cowboy bowline, French bowline) and several loop knots (e.g., packer’s loop, artillery loop, and farmer’s loop) meant that there was considerable overlap among the knot names. In addition, some of the knots had descriptive names (e.g., figure of 8 knot, the hangman’s noose), while others had names that were less descriptive (e.g., running eye open carrick, stevedore’s knot). In order to sidestep naming confounds, we randomly assigned each knot a new name (a noun in the English language) generated from the MRC Psycholinguistic Database version 2.0 (http://www.psy.uwa.edu.au/mrcdatabase/uwamrc.htm). Knots were assigned new names according to the following criteria: nouns with two syllables, with a medium-high familiarity rating, a medium concreteness rating, and a medium imageability rating. The MRC database returned 64 nouns meeting the specified criteria, and 40 were randomly selected and assigned as the new names for the knot stimuli. Examples of new knot names include rhombus, polka, spangle, and fissure (see also Fig. 1).
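The renaming step (filter a lexicon by syllable count and rating bands, then randomly draw 40 of the eligible nouns) can be sketched as follows. The `Word` record layout, the numeric rating bands standing in for "medium-high" and "medium", and the placeholder lexicon are all assumptions; the source reports the criteria only qualitatively and does not describe the MRC file format used.

```python
import random
from dataclasses import dataclass


@dataclass
class Word:
    """One lexicon entry; field names are hypothetical, not the MRC file layout."""
    text: str
    syllables: int
    familiarity: int   # assumed to use the MRC 100-700 rating scale
    concreteness: int
    imageability: int


# Hypothetical cut-offs for "medium-high" and "medium" ratings; the source
# does not report the numeric bands that were used.
FAMILIARITY_BAND = (500, 600)
MEDIUM_BAND = (400, 500)


def in_band(value: int, band: tuple[int, int]) -> bool:
    low, high = band
    return low <= value <= high


def eligible(word: Word) -> bool:
    """Two-syllable noun whose ratings fall inside the chosen bands."""
    return (word.syllables == 2
            and in_band(word.familiarity, FAMILIARITY_BAND)
            and in_band(word.concreteness, MEDIUM_BAND)
            and in_band(word.imageability, MEDIUM_BAND))


def assign_new_names(knots: list[str], lexicon: list[Word], seed: int = 0) -> dict[str, str]:
    """Randomly pair each knot with a distinct eligible noun."""
    rng = random.Random(seed)
    pool = [w.text for w in lexicon if eligible(w)]
    names = rng.sample(pool, len(knots))  # raises ValueError if the pool is too small
    return dict(zip(knots, names))


# Placeholder lexicon: 64 eligible nouns plus one with the wrong syllable count.
lexicon = [Word(f"noun{i}", 2, 550, 450, 450) for i in range(64)]
lexicon.append(Word("outlier", 3, 550, 450, 450))
knots = [f"knot{i:02d}" for i in range(40)]
names = assign_new_names(knots, lexicon)
```

Sampling without replacement guarantees that no two knots share a new name.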

For knots in the ‘both’ (name and tie) condition, the name of the knot being tied was added to the individual tying videos. A total of 120 videos were constructed (40 videos for the ‘tie’ condition, 40 videos for the ‘name’ condition, and 40 videos for the ‘both’ condition). The purpose of teaching participants to name, tie, or name and tie knots with video clips was to ensure consistency across training methods for instilling both linguistic and action experience, and also to eliminate confounds of perceptual-motor experience for knots whose names participants were assigned to learn.

In addition to the videos, high-resolution still photographs were taken of each knot from three camera angles, resulting in 120 different photographs that were used in the perceptual discrimination task for behavioural training and the fMRI portions of the study. All images were cropped and resized to 3″ × 3″.

2.4. Behavioural training procedure

The behavioural training comprised two distinct tasks: learning to tie and name knots, and learning to perform a speeded perceptual discrimination task on pairs of knot photographs. All participants completed both tasks on each of five consecutive days, and each day of training lasted approximately one hour. Training knots were drawn from four different sets, such that each participant learned to tie 10 knots, name 10 knots, and tie and name 10 knots. Participants had no experience with the remaining 10 knots from the group of 40. Each day of behavioural training comprised four steps. First, participants completed 80 trials of the perceptual discrimination task. Next, they learned to tie, name, and both tie and name their assigned knots. The video portions of the training procedure were self-paced, and the order of tying, naming, and combined tying and naming practice was randomized across the five days of training. Participants then completed 80 more trials of the perceptual discrimination task. Finally, an experimenter evaluated each participant’s naming and tying knowledge for their assigned knots. All portions of the training procedures were closely supervised to ensure that participants did not encounter difficulty with the procedures.

Naming training—For knots participants learned to name, they watched a short video of each knot slowly rotating with the name of that knot on the top right corner of the screen. Participants were instructed to watch each video only once per day of training, and to try to associate each printed name with the knot it was paired with.

Tying training—To accommodate individual differences in learning speed, participants could start, stop, and restart the training videos as needed to recreate each knot from the ‘tie’ category. Participants were instructed to correctly tie each of the ten knots in this group once per day of training, and to focus on memorizing how to tie each of these knots.

Both naming and tying training—For the knots participants were assigned both to name and to tie, the procedure was identical to that used for tying training, with the exception that the name of the knot to be tied appeared in the top right corner of the screen throughout the tying video. Participants were instructed to correctly tie each knot once per day of training, and to try to associate the name with the knot they were learning to tie.

Knot naming/tying evaluation—At the end of each training session, participants’ learning was evaluated with several behavioural measures. For the knots from the ‘tie’ condition, the experimenter presented the participant with a printed colour photograph of each knot, in a random order across days, and asked the participant to tie the knot. Participants were allowed two attempts to tie each knot, and each attempt was scored on a 0–5 scale, with 0 corresponding to no attempt, 1 to a poor attempt, 2 to getting the first several steps right, 3 to half-completing the knot, 4 to almost accurately tying the knot (i.e., the final knot might be missing a loop or might not be finished neatly), and 5 to tying the knot perfectly, as demonstrated in the training video. For knots that participants learned to name, colour photographs of each knot were presented and participants were asked for the corresponding name they had learned. Participants were assigned a score of zero for missing the name, and a score of one for an accurate response. Evaluation of performance for knots participants learned to both tie and name combined elements of both procedures outlined above. Participants received two scores per knot in the ‘both’ category: a naming score and a tying score. Participants could receive credit in the appropriate category for knowing only the name or only how to tie a knot in this group.
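The two scoring rules can be expressed as small functions. This is a sketch under assumptions: the source scores each of the two tying attempts on the 0–5 scale but does not say how the attempts were combined, so taking the better attempt and rescaling it to 0–1 (to share a scale with the 0/1 naming score) is a hypothetical choice, as is the case-insensitive name match.

```python
def tying_score(attempts: list[int]) -> float:
    """Rescale the better of up to two 0-5 tying ratings to the 0-1 range.

    Assumption: best-of-two combination; the source does not specify it.
    """
    if not 1 <= len(attempts) <= 2:
        raise ValueError("expected one or two attempts")
    if any(not 0 <= a <= 5 for a in attempts):
        raise ValueError("ratings must lie between 0 and 5")
    return max(attempts) / 5


def naming_score(response: str, learned_name: str) -> int:
    """1 if the response matches the learned name, else 0 (case-insensitive, assumed)."""
    return int(response.strip().lower() == learned_name.strip().lower())


def daily_mean(scores: list[float]) -> float:
    """Average one participant's scores over the knots in a condition for one day."""
    return sum(scores) / len(scores)


# A knot in the 'both' group receives one score of each kind.
both_knot = {"naming": naming_score("Polka", "polka"), "tying": tying_score([3, 5])}
```

Keeping the two scores separate for 'both' knots mirrors the text: credit for a name and credit for tying are awarded independently.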

Perceptual discrimination task—Before and after the knot-training portion of the daily training sessions, participants performed a forced-choice knot-matching task comprising 80 trials per testing session. Participants were instructed to decide whether the two photographs showed the same knot or different knots. Importantly, each pair of knots was from the same learning class (i.e., tie, name, both, or untrained), and when both photographs pictured the same knot, the viewing angle of the two photographs always differed so that the two visual inputs were never identical. Participants pressed the ‘z’ key on the keyboard if the two views were of different knots, and the ‘m’ key if the two photographs were of matching knots. In both the pre- and post-knot-training sessions, half of the 80 trials showed two matching knots and the other half showed two non-matching knots. Stimulus presentation and the recording of response latency and accuracy were implemented using Matlab Cogent 2000 Software (Wellcome Department of Imaging Neuroscience, London, UK; Romaya, 2000).
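The trial constraints above (same-class pairs, matching pairs shown from two different camera angles, half matching and half non-matching) can be captured in a short trial-list generator. Equal trial counts per learning class (20 each) and the function name `make_trials` are assumptions for illustration; the three views per knot follow from the three camera angles described under Stimuli, and the key mapping follows the text. The original experiment was run in Matlab Cogent 2000, not Python.

```python
import random

CORRECT_KEY = {True: "m", False: "z"}  # 'm' = same knot, 'z' = different knots


def make_trials(groups: dict[str, list[str]], n_per_class: int = 20,
                n_views: int = 3, seed: int = 0) -> list[tuple]:
    """Build a shuffled list of (class, (knot, view), (knot, view), is_match) trials.

    Assumptions: equal trial counts per class, half of them matching.
    Matching pairs show one knot from two different angles; non-matching
    pairs show two different knots from the same class.
    """
    rng = random.Random(seed)
    trials = []
    for cls, knots in groups.items():
        for i in range(n_per_class):
            if i < n_per_class // 2:
                knot = rng.choice(knots)
                v1, v2 = rng.sample(range(n_views), 2)  # two distinct camera angles
                trials.append((cls, (knot, v1), (knot, v2), True))
            else:
                k1, k2 = rng.sample(knots, 2)  # two distinct knots from this class
                trials.append((cls, (k1, rng.randrange(n_views)),
                               (k2, rng.randrange(n_views)), False))
    rng.shuffle(trials)
    return trials


groups = {c: [f"{c}{i}" for i in range(10)] for c in ["tie", "name", "both", "untrained"]}
trials = make_trials(groups)
first_answer = CORRECT_KEY[trials[0][3]]
```

With four classes of 20 trials this yields the 80-trial session described in the text.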

2.5. Behavioural training analyses

Participants’ ability to tie, name, or both tie and name their group of assigned knots was evaluated by averaging their scores for each day, and then combining averages across the group to create group averages. Learning across the five days of training was quantified through use of repeated-measures ANOVAs with factors Name and Tie, each with two levels: trained and untrained (see Fig. 1).

For the perceptual discrimination task, the dependent variables were mean response time and accuracy. Response time analyses were restricted to correct responses, and of these usable trials, the fastest and slowest 10% were eliminated from further analyses for each testing session of 80 trials. This was done in order to eliminate outlying data points in a systematic, unbiased manner. We focus on data from the second session of participants’ daily performance of the perceptual discrimination task and illustrate full findings for both within- and between-session effects in Supplemental Fig. 1.
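The response-time trimming rule can be sketched as follows. How the 10% cut is rounded when the number of correct trials is not a multiple of 10 is not stated in the source; rounding down is an assumption here, and the example session is made-up data.

```python
def trimmed_mean_rt(trials: list[tuple[float, bool]], trim: float = 0.10) -> float:
    """Mean RT of correct trials after dropping the fastest and slowest `trim` share.

    trials: (rt_ms, correct) pairs from one 80-trial session.
    Assumption: the number of trials dropped from each tail is rounded down.
    """
    rts = sorted(rt for rt, correct in trials if correct)
    k = int(len(rts) * trim)
    kept = rts[k:len(rts) - k]
    if not kept:
        raise ValueError("no correct trials left after trimming")
    return sum(kept) / len(kept)


def accuracy(trials: list[tuple[float, bool]]) -> float:
    """Proportion of correct responses in a session (all trials, not trimmed)."""
    return sum(correct for _, correct in trials) / len(trials)


# Made-up session: 20 correct trials at 500-690 ms plus one slow error.
session = [(float(rt), True) for rt in range(500, 700, 10)] + [(2000.0, False)]
print(trimmed_mean_rt(session), round(accuracy(session), 3))  # 595.0 0.952
```

The slow error trial never enters the RT mean because only correct responses are kept before trimming, while it still counts against accuracy.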

2.6. Functional magnetic resonance imaging

Each participant completed one magnetic resonance imaging session prior to the training procedures and an identical scanning session immediately following the five days of training. Participants completed three functional runs on each day of scanning; each run lasted 5 min and 20 s and contained 10 blocks of 10 trials. Eighty percent of trials were of knot pairs and the remaining 20% were of scrambled image pairs. Within each block, half of the trials were of matching pairs of images, and half were of non-matching pairs. The stimulus blocks and matching and mismatching trials were arranged in a pseudo-random order. In each trial, a pair of knots or scrambled images was presented side by side for 2500 ms, followed by a 500 ms inter-stimulus interval, during which a white fixation cross appeared on the screen. As with the behavioural version of this task, participants were instructed to respond as quickly and accurately as possible once the images appeared, and their response time was recorded from the moment the pictures appeared until the moment a response was made.
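The run structure can be checked with a little arithmetic. The split of the eight knot blocks into two blocks per training condition is an assumption (the text gives only the 80/20 knot/scrambled ratio); the other numbers come directly from the paragraph above.

```python
TRIAL_S = 2.5 + 0.5        # 2500 ms image pair + 500 ms fixation interval
TRIALS_PER_BLOCK = 10
BLOCKS_PER_RUN = 10
KNOT_FRACTION = 0.8        # 80% knot trials, 20% scrambled trials
N_CONDITIONS = 4           # name, tie, both, untrained

block_s = TRIAL_S * TRIALS_PER_BLOCK                  # 30 s per block
task_s = block_s * BLOCKS_PER_RUN                     # 300 s of trials per run
knot_blocks = round(BLOCKS_PER_RUN * KNOT_FRACTION)   # 8 knot blocks
scrambled_blocks = BLOCKS_PER_RUN - knot_blocks       # 2 scrambled blocks
# Assumption: knot blocks are split evenly across the four training conditions.
knot_blocks_per_condition = knot_blocks // N_CONDITIONS

print(block_s, task_s, knot_blocks, scrambled_blocks, knot_blocks_per_condition)
# 30.0 300.0 8 2 2
```

The trial blocks alone therefore account for 300 s of each 5 min 20 s (320 s) run.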

During scanning, participants performed a perceptual discrimination task similar to the one they performed at the beginning and end of each day of behavioural training. Participants pressed a button held in the right hand if the two knots matched, and a button held in the left hand if the two knots were different. Knot-matching trials were grouped into separate blocks based on how they would be trained for each participant. For example, participants would perform a block of 10 trials for knots they would learn to tie, then a block of 10 trials for knots they would learn to name, and so on. The same grouping procedures were also used for the post-training fMRI session. Debriefing after the second fMRI session revealed that participants were not aware that trials had been grouped according to training condition. Interspersed among the knot blocks were blocks of trials using phase-scrambled versions of the knot photographs. Participants were instructed to make the same perceptual discrimination decision during these trials, deciding whether the two scrambled images were identical (Fig. 2A). This was selected as a baseline task because all other task parameters were held constant, including attentional demands, behavioural instructions, and the mean luminance of the pairs of images.
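Phase scrambling keeps an image's Fourier amplitude spectrum, and hence its mean luminance, while destroying recognizable shape. The sketch below shows one common way to do this with NumPy; it is not necessarily the exact procedure used to make the baseline stimuli, and display details such as clipping or rescaling to 8-bit values are left out.

```python
import numpy as np


def phase_scramble(image: np.ndarray, seed: int = 0) -> np.ndarray:
    """Return a phase-scrambled copy of a 2-D grayscale image.

    The Fourier amplitude spectrum is kept and random phases are added.
    The random phases are taken from the FFT of a real-valued noise image,
    so they are conjugate-symmetric (the inverse transform is real) and the
    DC phase is zero (the mean luminance is unchanged).
    """
    rng = np.random.default_rng(seed)
    spectrum = np.fft.fft2(image)
    noise_phase = np.angle(np.fft.fft2(rng.random(image.shape)))
    scrambled = np.fft.ifft2(np.abs(spectrum)
                             * np.exp(1j * (np.angle(spectrum) + noise_phase)))
    return scrambled.real  # imaginary part is numerical round-off only


# Stand-in for a grayscale knot photograph.
photo = np.random.default_rng(1).random((64, 64))
scrambled = phase_scramble(photo)
```

Because only the phase changes, low-level image statistics such as the spatial-frequency content and the mean luminance mentioned above are matched between each photograph and its scrambled counterpart.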

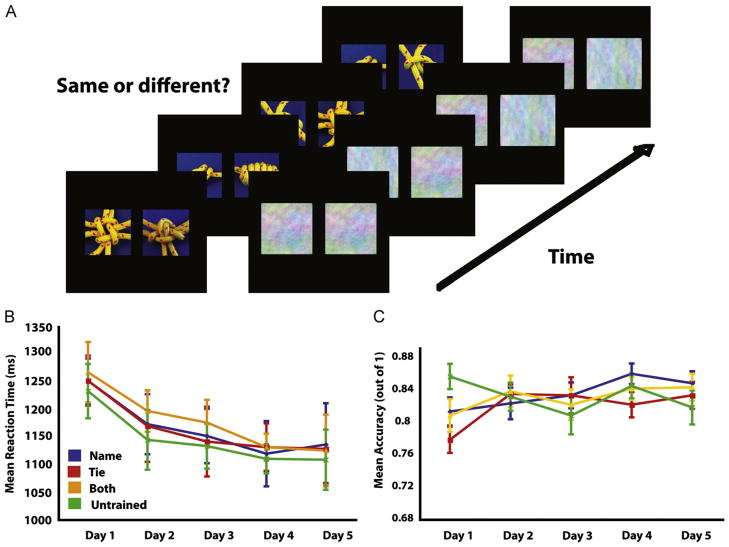

Fig. 2.

Panel 2A illustrates the perceptual discrimination (knot-matching) task used during behavioural training (left portion of the figure), as well as the scrambled knot images that were used in addition to the knot photographs to establish baseline visual activation during the fMRI portion of the experiment. For the perceptual discrimination task, participants’ objective was to respond as quickly and accurately as possible whether the two photographs were of the same or different knots. Panel 2B illustrates participants’ performance on the perceptual discrimination task across the five days of behavioural training. Panel 2C illustrates participants’ mean accuracy across the five days of behavioural training. Error bars represent standard error of the mean.

Stimulus presentation and response recording were performed on a Dell Inspiron laptop computer running Matlab Cogent 2000 Software. Stimuli were rear-projected onto a translucent screen viewed via a mirror mounted on the head coil. The experiment was carried out in a 3T Philips Intera Achieva scanner using an eight-channel phased array coil and 30 slices per TR (3.5 mm thickness, 0.5 mm gap); TR: 1988 ms; TE: 35 ms; flip angle: 90°; field of view: 24 cm; matrix: 80 × 80. For each of the three functional runs, the first two brain volumes were discarded, and the following 160 volumes were collected and stored.
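The acquisition parameters imply the voxel geometry, slab coverage, and run duration computed below. The values are taken from the paragraph above; the derived quantities are simple arithmetic and not additional reported parameters.

```python
FOV_MM = 240.0        # 24 cm field of view
MATRIX = 80           # 80 x 80 acquisition matrix
SLICES = 30
THICKNESS_MM = 3.5
GAP_MM = 0.5
TR_S = 1.988
DISCARDED, RETAINED = 2, 160

in_plane_mm = FOV_MM / MATRIX                                 # 3.0 mm in-plane voxels
slice_spacing_mm = THICKNESS_MM + GAP_MM                      # 4.0 mm centre to centre
coverage_mm = SLICES * THICKNESS_MM + (SLICES - 1) * GAP_MM   # 119.5 mm slab
run_s = (DISCARDED + RETAINED) * TR_S                         # about 322 s per run

print(in_plane_mm, slice_spacing_mm, coverage_mm, round(run_s, 1))
# 3.0 4.0 119.5 322.1
```

The acquired voxels are therefore 3 × 3 × 3.5 mm, and 162 volumes at a TR of 1.988 s span about 5 min 22 s, close to the 5 min 20 s run length given earlier.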

2.7. fMRI data processing and statistical analyses

Neuroimaging data from each scanning session were first analysed separately. Data were realigned and unwarped in SPM2 and normalized to the Montreal Neurological Institute (MNI) template with a resolution of 3 × 3 × 3 mm. A design matrix was fitted for each subject with the knots in the four cells of the two-by-two factorial design, modelled by a standard hemodynamic response function (HRF) and its temporal and dispersion derivatives. Scrambled images were modelled in the same way, adding a fifth column to the design matrix. The design matrix weighted each raw image according to its overall variability to reduce the impact of movement artefacts (Diedrichsen & Shadmehr, 2005). After estimation, a 9 mm smoothing kernel was applied to the beta images.
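To make the modelling step concrete, the sketch below builds one block regressor by convolving a boxcar with a double-gamma HRF. The gamma shapes (6 for the response, 16 for the undershoot), the 1/6 undershoot ratio, and the 32 s kernel length are SPM's usual canonical defaults as we understand them, and should be treated as assumptions. The temporal and dispersion derivatives, and SPM's finer microtime sampling, are omitted; the boxcar here is sampled at TR resolution as a simplification.

```python
import math


def gamma_pdf(t: float, shape: float) -> float:
    """Gamma density with unit scale (time in seconds)."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(dt: float, length_s: float = 32.0) -> list[float]:
    """Double-gamma HRF sampled every dt seconds, normalized to sum to 1."""
    n = int(length_s / dt) + 1
    h = [gamma_pdf(i * dt, 6) - gamma_pdf(i * dt, 16) / 6 for i in range(n)]
    total = sum(h)
    return [v / total for v in h]


def block_regressor(onsets_s: list[float], duration_s: float,
                    n_scans: int, tr: float) -> list[float]:
    """Boxcar for one condition, sampled at each TR and convolved with the HRF."""
    box = [1.0 if any(on <= i * tr < on + duration_s for on in onsets_s) else 0.0
           for i in range(n_scans)]
    hrf = canonical_hrf(tr)
    return [sum(box[i - j] * hrf[j] for j in range(min(i + 1, len(hrf))))
            for i in range(n_scans)]


# One 30 s block (10 trials x 3 s) starting at scan 0, TR = 1.988 s.
regressor = block_regressor([0.0], 30.0, 100, 1.988)
```

In a design matrix like the one described, one such column per knot condition plus one for the scrambled baseline would be fitted to each voxel's time series.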

Three main sets of analyses were performed. The first analysis was designed to create a task-specific search volume from the pre-training imaging data that could be used to constrain analyses of the post-training data in an unbiased manner (for a similar procedure, see Cross, Kraemer, Hamilton, Kelley, & Grafton, 2009). This was achieved by comparing neural activity in the pre-training scan session when participants performed the matching task for all knot categories (when all knots were effectively untrained) to neural activity engaged when performing the same matching task on the visually matched scrambled baseline images. The results from this analysis were used as a small volume correction for the subsequent analyses. In addition, we also report exploratory whole-brain results without this mask.
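The masking logic (threshold the pre-training knots > scrambled map, then examine post-training effects only inside that volume) is sketched below on toy arrays. The t threshold assumes a one-tailed p < 0.05 for a one-sample group t-test with 19 degrees of freedom (20 participants), which matches the liberal threshold given for the mask but is an inference. A real small volume correction also recomputes family-wise error p-values over the restricted volume (in SPM, via random field theory); this sketch shows only the restriction step.

```python
import numpy as np

# Assumed threshold: one-tailed p < .05 with df = 19 (20 participants).
T_THRESHOLD = 1.729


def search_volume(t_pre: np.ndarray, threshold: float = T_THRESHOLD) -> np.ndarray:
    """Boolean mask of voxels where the pre-training all knots > scrambled t exceeds threshold."""
    return t_pre > threshold


def restrict_to_volume(stat_post: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Blank (NaN) post-training statistics that fall outside the search volume."""
    if stat_post.shape != mask.shape:
        raise ValueError("maps must share the same voxel grid")
    return np.where(mask, stat_post, np.nan)


# Toy 2 x 2 "brain" standing in for whole-brain t-maps.
t_pre = np.array([[0.5, 2.0], [3.0, -1.0]])
t_post = np.array([[4.0, 4.0], [1.0, 4.0]])
masked = restrict_to_volume(t_post, search_volume(t_pre))
```

Defining the mask from the pre-training session keeps voxel selection independent of the post-training training effects being tested.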

Next we evaluated the main effects of the factorial design. The main effect of tying experience was evaluated by comparing brain activity when performing the knot-matching task on knots from the ‘tie’ and ‘both tie and name’ conditions with activity for knots from the ‘name’ and ‘untrained’ conditions. The main effect of learning a knot’s name was assessed by comparing activity associated with naming experience (‘name’ and ‘both tie and name’ conditions) with activity associated with no naming experience (‘tie’ and ‘untrained’ conditions). The final set of analyses assessed the impact of training experience across the imaging sessions. We evaluated this by searching for brain regions that showed a greater difference between trained and untrained knots during the post-training scan than during the pre-training scan. These interaction analyses were evaluated separately for knot tying training and knot naming training. As no regions met a p < 0.01 FWE-corrected threshold, the interaction results are presented at p < 0.005, uncorrected. To further illustrate the nature of the BOLD response in regions that emerged from the main effects and interaction analyses, beta-estimates were extracted from a sphere with a 3 mm radius centred on each peak voxel, for each experimental condition (name, tie, both, untrained) and each week of scanning (pre-training and post-training). The beta-estimates are plotted to illustrate the observed effects across the span of the experiment; analogous procedures for evaluating pre- and post-training parameter estimates are reported elsewhere (Cross et al., 2009). For visualization purposes, t-images for the task-specific search volume are displayed on partially inflated cortical surfaces using the PALS dataset and Caret visualization tools (Fig. 4; http://brainmap.wustl.edu/caret).
To most clearly visualize the activations from the main effects and interaction analyses, the t-images are displayed on a mean high-resolution structural scan, created from participants’ anatomical scans (Figs. 5 and 6). Anatomical localization of all activations was assigned based on consultation of the Anatomy Toolbox in SPM (Eickhoff et al., 2005; Eickhoff, Schleicher, Zilles, & Amunts, 2006), in combination with the SumsDB online search tool (http://sumsdb.wustl.edu/sums/).
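The contrasts and the sphere extraction described above can be written out explicitly. The design-matrix column order is an assumption (the text lists the four knot conditions and a fifth scrambled column but not their order), only the canonical-HRF columns are weighted, and the function names are hypothetical. On a 3 mm grid, a 3 mm radius sphere contains the peak voxel and its six face neighbours.

```python
import itertools
import math

import numpy as np

# Assumed column order for one session (canonical HRF columns only;
# temporal and dispersion derivative columns are ignored here).
COLUMNS = ["name", "tie", "both", "untrained", "scrambled"]


def contrast(plus: set[str], minus: set[str]) -> list[int]:
    """+1 for conditions in `plus`, -1 for those in `minus`, 0 otherwise."""
    return [1 if c in plus else -1 if c in minus else 0 for c in COLUMNS]


tie_main = contrast({"tie", "both"}, {"name", "untrained"})    # [-1, 1, 1, -1, 0]
name_main = contrast({"name", "both"}, {"tie", "untrained"})   # [1, -1, 1, -1, 0]

# Training x session interaction over [pre-training columns, post-training columns]:
# (trained - untrained after training) - (trained - untrained before training).
tie_by_session = [-w for w in tie_main] + tie_main
name_by_session = [-w for w in name_main] + name_main


def sphere_offsets(radius_mm: float = 3.0, voxel_mm: float = 3.0) -> list[tuple[int, ...]]:
    """Voxel offsets whose centres lie within radius_mm of the peak voxel."""
    r = math.floor(radius_mm / voxel_mm)
    return [d for d in itertools.product(range(-r, r + 1), repeat=3)
            if voxel_mm * math.sqrt(sum(x * x for x in d)) <= radius_mm]


def sphere_mean(beta: np.ndarray, peak: tuple[int, int, int],
                radius_mm: float = 3.0, voxel_mm: float = 3.0) -> float:
    """Mean beta-estimate in a sphere around `peak`, skipping voxels outside the volume."""
    values = []
    for offset in sphere_offsets(radius_mm, voxel_mm):
        idx = tuple(p + o for p, o in zip(peak, offset))
        if all(0 <= i < n for i, n in zip(idx, beta.shape)):
            values.append(beta[idx])
    return float(np.mean(values))


print(len(sphere_offsets()))  # 7: the peak voxel and its six face neighbours
```

Each contrast sums to zero, so it tests a difference between conditions rather than overall activation; applying `sphere_mean` to each condition's beta image in each session would give the values that are plotted for illustration.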

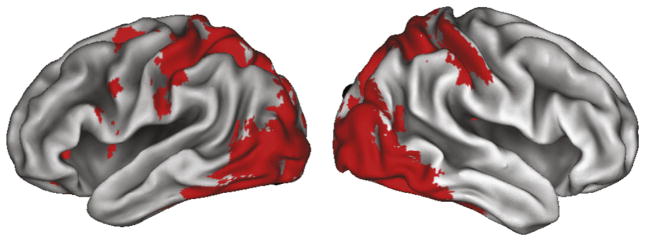

Fig. 4.

The task-specific mask designed to constrain the search volume for evaluating the effects of the factorial design from the Week 2 post-training fMRI session is illustrated here. This mask was made by comparing all knot-judgment conditions to the scrambled baseline condition from the pre-training scan session (Week 1), and is evaluated at the liberal statistical threshold of k=0 voxels, p<0.05, uncorrected.

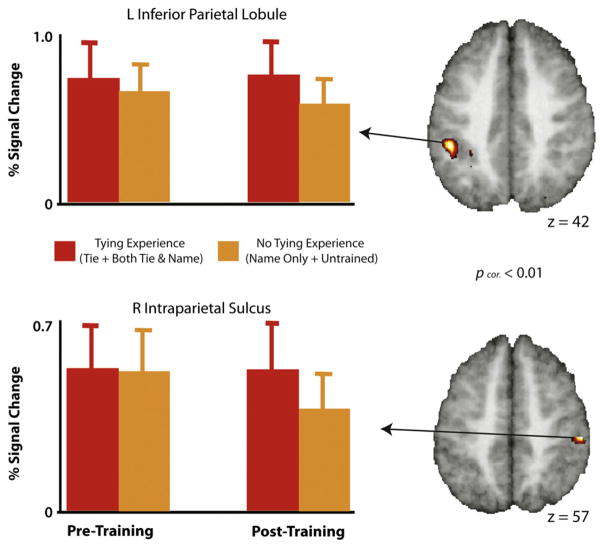

Fig. 5.

Main effect of tying experience in the post-training scanning session. The two regions identified by contrasting the action knowledge conditions (learned to tie + learned to both name and tie) with the no action knowledge conditions (learned to name + untrained) emerged after small volume correction using the all knots > visual baseline contrast from the pre-training scan session, and survive a statistical threshold of p < 0.01, corrected. Parameter estimates extracted from the pre- and post-training scanning sessions are included for illustrative purposes only.

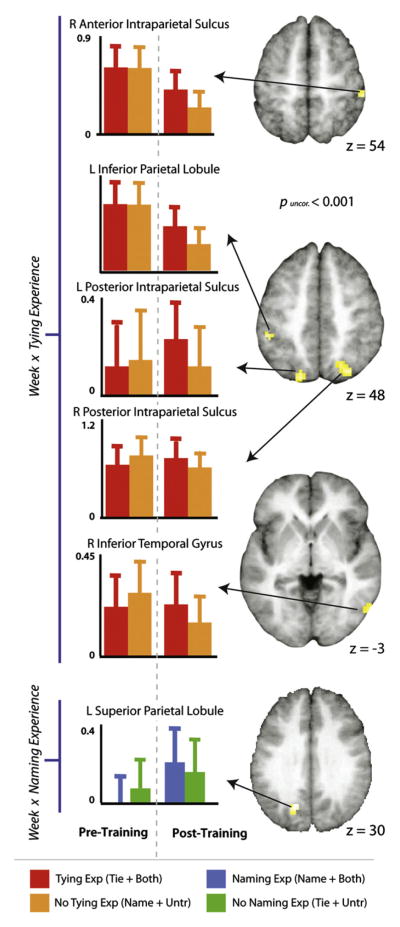

Fig. 6.

Imaging results illustrating the interaction between training experience and scanning session. The top three brains and five plots illustrate regions more responsive when perceiving knots that participants gained tying experience with (in the tying training condition and in the both tying and naming training condition), compared to knots for which participants had no tying experience (knots in the name only and untrained categories). The bottom brain and plot illustrate the region more responsive when perceiving knots participants had associated a name with (from the naming training condition and the both naming and tying training condition), compared to knots for which participants had learned no naming information (knots in the tie only and untrained categories). t-Maps are thresholded at t > 3.48, p < 0.001 uncorrected, and survived a small volume correction interrogating only those voxels that were more responsive when viewing knots than scrambled images during the pre-training scan (see Section 2). Parameter estimates were extracted from each cluster for both the pre-training and post-training scan sessions and are plotted to the left of the t-maps for illustration purposes only.

3. Results

3.1. Behavioural training results

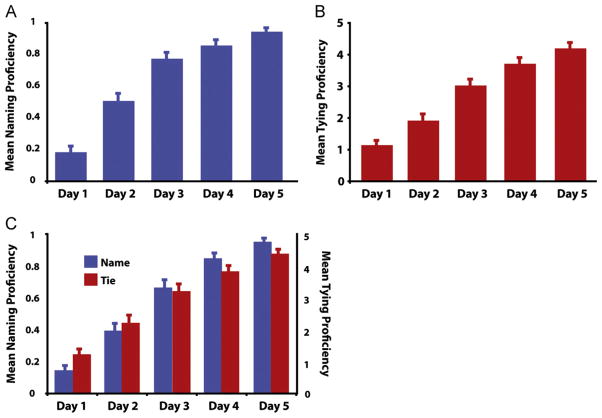

Fig. 3 illustrates participants’ performance on learning to tie and name knots across five consecutive days of behavioural training. For knots participants learned to name, naming accuracy showed a main effect of training day, F(4,112)=138.42, p<0.0001. Within-subjects contrasts revealed this pattern to be a linear trend of monotonic improvement across training days, F(1,28)=410.33, p<0.0001 (Fig. 3A). For knots participants learned to tie, a significant effect of training day also emerged, F(4,112)=101.01, p<0.0001; here as well, the linear within-subjects contrast was highly significant, F(1,28)=283.62, p<0.0001 (Fig. 3B). For knots that participants trained to both name and tie, there was a main effect of training day for naming scores, F(4,112)=150.02, p<0.0001, and for tying scores, F(4,112)=85.76, p<0.0001. The linear within-subjects contrast was highly significant for naming scores, F(1,28)=467.62, p<0.0001, as it was for tying scores, F(1,28)=239.85, p<0.0001 (Fig. 3C).
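The linear trend tests above can be reproduced from per-participant scores with orthogonal polynomial weights. The sketch below assumes five equally spaced training days and one score per participant per day; the function name, input format, and example scores are hypothetical and are not the study's data or analysis code. Because a single contrast has one degree of freedom, its F(1, n−1) equals the square of a one-sample t statistic computed on the per-participant contrast scores.

```python
from math import sqrt
from statistics import mean, stdev

# Orthogonal polynomial weights for a linear trend over five equally spaced days
LINEAR_WEIGHTS = (-2, -1, 0, 1, 2)


def linear_trend_f(scores_by_participant):
    """Return (F, df_error) for the linear within-subjects contrast.

    scores_by_participant: one list of five daily scores per participant
    (hypothetical input format). Each participant's scores are collapsed
    into a single contrast score; a one-sample t test of those scores
    against zero gives F(1, n - 1) = t ** 2.
    """
    contrast = [sum(w * s for w, s in zip(LINEAR_WEIGHTS, scores))
                for scores in scores_by_participant]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))
    return t ** 2, n - 1


# Hypothetical naming accuracies for three participants across five days
example = [
    [0.1, 0.3, 0.5, 0.6, 0.8],
    [0.2, 0.3, 0.4, 0.6, 0.7],
    [0.0, 0.2, 0.5, 0.7, 0.9],
]
F, df = linear_trend_f(example)
print(f"F(1,{df}) = {F:.2f}")  # prints: F(1,2) = 36.96
```

Reversing every participant's day order flips the sign of each contrast score but leaves F unchanged, so this test alone does not indicate the direction of the trend; the mean contrast score does.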

Fig. 3.

Results from the knot training procedures. Panel 3A illustrates mean accuracy for recalling the names of knots whose names were studied. Panel 3B illustrates participants’ tying proficiency across training days. Panel 3C illustrates participants’ naming and tying ability for those knots they trained to both name and tie. Error bars represent the standard error of the mean.

To evaluate how learning two pieces of information about a novel object (i.e., how to name and tie a knot) compares with learning just one (i.e., how only to name or only to tie a knot), we ran two additional analyses comparing participants’ naming and tying performance in the ‘both’ condition with the ‘naming only’ and ‘tying only’ conditions, respectively. For knots participants trained to name, there was a main effect of single vs. dual feature learning, F(1,29)=5.33, p=0.028, with better naming performance for knots they only learned to name (M=0.66) than for knots they learned to both name and tie (M=0.60). For knots participants trained to tie, a non-significant trend emerged in the opposite direction, F(1,29)=2.64, p=0.115: participants tended to tie knots they had learned to both name and tie slightly better (M=0.60) than knots they had only learned to tie (M=0.56). These analyses suggest that the brain systems supporting linguistic and perceptual-motor forms of learning are not fully independent. This opens a number of possibilities for future studies to investigate how learning multiple features of an object in parallel, interacting systems compares with learning one feature at a time.

During the five days of behavioural training, and also during the pre- and post-training fMRI sessions, participants performed a simple perceptual discrimination task in which they decided whether two photographs showed the same knot or different knots (Fig. 2A).¹ Performance on this task was measured at both the beginning and the end of each daily training session. Here we focus on performance from the second test of each day, because performance after that day's training is more consistent and reflects the learned state (Weisberg et al., 2007). Moreover, training effects can be captured with the second test from each training day alone, which also permits a simpler statistical model. A five (training day) by four (training condition) repeated-measures ANOVA on response times revealed a main effect of training day, F(4,92)=10.17, p<0.0001, indicating that participants performed the matching task increasingly quickly as the days progressed (Fig. 2B). A main effect of training condition also emerged, F(3,69)=3.22, p<0.003, with participants responding significantly faster to pairs of untrained knots than to knots they had learned to name (p=0.007), tie (p=0.020), or both name and tie (p=0.002). This suggests that the linguistic and perceptual-motor knowledge that participants rehearsed each day might be accessed during testing. Such access takes measurable time, a notion consistent with work demonstrating automatic activation of associated knowledge structures when perceiving familiar objects (Peissig, Singer, Kawasaki, & Sheinberg, 2007). There was no significant interaction between training day and training condition for response times, and the accuracy analysis revealed no main effects or interactions (Fig. 2C).

3.2. Functional MRI results

For the first neuroimaging result, we identified brain regions engaged by knot perception in an unbiased, paradigm-specific manner by contrasting all knot-judgment conditions with the scrambled-image baseline in the pre-training scan session. This contrast revealed a pattern of activation spanning predominantly visual and parietal cortices (Fig. 4). Thresholded at puncorrected<0.05, k=0 voxels, this contrast defined a reduced set of voxels that constrained the search volume for all subsequent analyses. Within this search volume, we report all subsequent results at puncorrected<0.005, k=10 voxels, and focus our discussion of the main effects on the subset of these regions that also meet the pcorrected<0.01 threshold (Friston et al., 1996).
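The masking logic described above can be sketched as follows, assuming statistical maps stored as dictionaries from (x, y, z) voxel indices to p-values. This is a minimal illustration only: the data structures, function names, and the face-adjacent (6-connected) clustering rule are assumptions, and the sketch applies only the uncorrected height (p<0.005) and extent (k=10) thresholds within the search volume. The cluster-level correction that yields the pcorrected values (Friston et al., 1996) is not reproduced here.

```python
from collections import deque

# Face-adjacent (6-connected) neighbours; the connectivity rule is an assumption
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def search_volume(pre_pmap, p_mask=0.05):
    """Voxels whose pre-training all-knots > baseline p-value passes p_mask (k = 0)."""
    return {voxel for voxel, p in pre_pmap.items() if p < p_mask}


def connected_clusters(voxels):
    """Group a set of (x, y, z) grid indices into 6-connected clusters."""
    remaining = set(voxels)
    clusters = []
    while remaining:
        seed = remaining.pop()
        cluster = {seed}
        queue = deque([seed])
        while queue:  # breadth-first flood fill from the seed voxel
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                neighbour = (x + dx, y + dy, z + dz)
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    cluster.add(neighbour)
                    queue.append(neighbour)
        clusters.append(cluster)
    return clusters


def surviving_clusters(post_pmap, mask, p_height=0.005, k=10):
    """Clusters inside the mask that pass the uncorrected height and extent thresholds."""
    supra = {v for v, p in post_pmap.items() if v in mask and p < p_height}
    return [c for c in connected_clusters(supra) if len(c) >= k]
```

Neuroimaging packages commonly offer 18- or 26-connectivity as well; the choice changes which voxels count as one cluster, and therefore which clusters pass the extent threshold.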

To examine the influence of training experience, we evaluated the main effects of tying experience and naming experience within the factorial design during the post-training scan session. To evaluate the effects of acquiring tying knowledge, we combined the ‘tie’ and the ‘both’ conditions into one condition (Tying Experience condition), and compared this to a combination of the ‘name’ and ‘untrained’ conditions (No Tying Experience condition). Two cluster-corrected regions emerged within the right and left intraparietal sulci (Fig. 5, Table 1a), areas that are classically associated with object praxis and the manipulation of tools (Frey, 2007; Martin, 2007; Martin, Haxby, Lalonde, Wiggs, & Ungerleider, 1995). When we performed a similar analysis to evaluate the Naming Experience>No Naming Experience main effect, no supra-threshold clusters emerged within the search volume of the all>baseline contrast. An exploratory whole-brain analysis of this contrast revealed two clusters located outside the small volume area (uncorrected for multiple comparisons): one within the left posterior inferior frontal gyrus and another within the right postcentral gyrus (see Supplementary Table 1).
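The factorial comparisons above can be written as contrast weights over the four training conditions. In the minimal sketch below, the condition order and the assumption of one regressor per condition per session are hypothetical, since the design matrix is not specified in this section. The same helper expresses the session interactions reported later as the post-training effect minus the pre-training effect.

```python
# Hypothetical column order: one regressor per training condition in a session
CONDITIONS = ("name", "tie", "both", "untrained")


def experience_weights(trained):
    """+1 for conditions in `trained`, -1 for the rest, so the weights sum to zero."""
    return [1 if condition in trained else -1 for condition in CONDITIONS]


TYING = experience_weights({"tie", "both"})    # [-1, 1, 1, -1]
NAMING = experience_weights({"name", "both"})  # [1, -1, 1, -1]


def session_interaction(weights):
    """Weights over [pre-training..., post-training...] columns:
    (post-training effect) minus (pre-training effect)."""
    return [-w for w in weights] + list(weights)


def contrast_value(weights, betas):
    """Weighted sum of condition estimates (e.g., one voxel's betas)."""
    return sum(w * b for w, b in zip(weights, betas))
```

Because the ‘both’ condition carries a weight of +1 in both the tying and the naming contrasts, the two contrasts share a condition, yet their weight vectors are orthogonal (their dot product is zero).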

Table 1.

Regions associated with acquiring tying experience (compared to no tying experience) and acquiring naming experience (compared to no naming experience).

| Region | BA | MNI x | MNI y | MNI z | Putative functional name | t-value | Cluster size | Pcorr value |
|---|---|---|---|---|---|---|---|---|
| *(a) Tying experience > no tying experience* | | | | | | | | |
| **R postcentral gyrus** | 7 | 54 | −27 | 57 | aIPS | 5.05 | 154 | 0.001 |
| **R supramarginal gyrus** | 2 | 54 | −21 | 36 | IPS | 3.94 | | |
| **R supramarginal gyrus** | 40 | 63 | −24 | 36 | IPL | 3.92 | | |
| **L inferior parietal lobule** | 2 | −42 | −36 | 42 | IPL | 4.72 | 138 | 0.002 |
| **L inferior parietal lobule** | 40 | −54 | −36 | 54 | IPL | 3.57 | | |
| **L inferior parietal lobule** | 7 | −33 | −45 | 42 | IPS | 3.56 | | |
| L fusiform gyrus | 37 | −33 | −66 | −15 | V4 | 4.26 | 14 | 0.821 |
| L lingual gyrus | 19 | −33 | −63 | −6 | V8 | 3.32 | | |
| R inferior parietal lobule | 40 | 33 | −48 | 48 | pIPS | 4.17 | 33 | 0.324 |
| L rolandic operculum | 13 | −45 | −3 | 12 | | 4.16 | 22 | 0.584 |
| L middle occipital gyrus | 19 | −30 | −66 | 36 | | 4.09 | 54 | 0.099 |
| L superior parietal lobule | 7 | −18 | −78 | 48 | SPL | 3.77 | | |
| R inferior temporal gyrus | 37 | 54 | −66 | −9 | ITG | 3.95 | 40 | 0.218 |
| R superior occipital gyrus | 19 | 30 | −84 | 39 | | 3.93 | 86 | 0.019 |
| R superior parietal lobule | 7 | 21 | −66 | 57 | SPL | 3.31 | | |
| R superior parietal lobule | 7 | 24 | −63 | 51 | SPL | 3.30 | | |
| R middle occipital gyrus | 19 | 45 | −87 | 6 | LOC | 3.90 | 83 | 0.022 |
| R middle occipital gyrus | 19 | 42 | −90 | 9 | LOC | 3.80 | | |
| R middle occipital gyrus | 19 | 36 | −84 | 18 | LOC | 3.80 | | |
| R postcentral gyrus | 1 | 39 | −39 | 69 | S1 | 3.40 | 12 | 0.875 |
| *(b) Naming experience > no naming experience* | | | | | | | | |
| No suprathreshold clusters emerged from this analysis | | | | | | | | |

Results from the tying>no tying experience and the naming>no naming experience contrasts from the post-training scan session, with a small volume correction of all knots>baseline (at p<0.01) from the Week 1 (pre-training) fMRI session. Significance at all sites for each contrast was tested by a one-sample t test on β values averaged over all voxels in the cluster, with a significance threshold of p<0.005 and k=10 voxels. Coordinates are from the MNI template and use the same orientation and origin as the Talairach and Tournoux (1988) atlas. Activations in boldface denote significance at the p<0.01 cluster-corrected level. Up to three local maxima are listed when a cluster has multiple peaks more than 8 mm apart. Cluster-corrected activations are illustrated in Fig. 5.

Abbreviations for brain regions: aIPS, anterior intraparietal sulcus; IPS, intraparietal sulcus; IPL, inferior parietal lobule; V4, extrastriate visual cortex; V8, extrastriate visual cortex; pIPS, posterior intraparietal sulcus; SPL, superior parietal lobule; ITG, inferior temporal gyrus; LOC, lateral occipital cortex; S1, primary somatosensory cortex.
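The cluster-level test described in the table note can be sketched as averaging each participant's contrast estimate over the voxels of a cluster and testing those averages against zero. The input format and function names below are hypothetical; the sketch does not compute the cluster-corrected Pcorr values listed in the table.

```python
from math import sqrt
from statistics import mean, stdev


def cluster_mean_betas(subject_betas, cluster):
    """Average each participant's contrast estimate over all voxels in `cluster`.

    subject_betas: one dict per participant mapping voxel -> beta
    (hypothetical format).
    """
    return [mean(betas[voxel] for voxel in cluster) for betas in subject_betas]


def one_sample_t(values):
    """t statistic and degrees of freedom for testing mean(values) against zero."""
    n = len(values)
    return mean(values) / (stdev(values) / sqrt(n)), n - 1
```

Averaging before testing yields one value per participant, so the degrees of freedom reflect the number of participants rather than the number of voxels.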

Next, we examined the interactions between training week and training experience to identify brain regions whose change in response from the pre-training to the post-training scan depended on a specific kind of training experience. The first interaction identified regions in which the response difference between knots with associated tying knowledge and knots with no tying knowledge, during the perceptual discrimination task, was greater in the post-training than in the pre-training scan session. This interaction revealed relatively greater activity within bilateral parietal cortices as well as the right inferior temporal gyrus (Fig. 6, Table 2a). The interaction between naming experience and training week revealed one cluster within the left superior parietal lobule, which showed an increased response after training when participants matched knots whose names they had learned compared to knots whose names they had not learned (Table 2b). Because the ‘both tying and naming’ condition contributes to both interaction analyses, this parietal region could in principle also belong to the tying experience interaction. To rule this out, we performed a conjunction analysis searching for voxels shared by the two interaction analyses. This analysis revealed no overlapping voxels within the left superior parietal lobule (Supplementary Fig. 3) or anywhere else in the brain. An exploratory whole-brain analysis of the training week by naming experience interaction revealed additional cortical activity in parietal and ventral frontal regions, but no activity within regions commonly associated with language learning (Supplementary Table 2).
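The overlap check described above can be sketched as a minimum-statistic conjunction: a voxel is shared only if it exceeds the threshold in both interaction maps, which is equivalent to intersecting the two thresholded maps. The map format and function name are hypothetical, the threshold is left as a parameter (t>3.48 is the value given in the Fig. 6 caption), and this is not presented as the authors' exact procedure.

```python
def conjunction(tmap_a, tmap_b, t_thresh):
    """Voxels where both maps exceed t_thresh (minimum-statistic conjunction).

    tmap_a, tmap_b: dicts mapping (x, y, z) -> t value (hypothetical format).
    An empty result means the two suprathreshold maps share no voxels.
    """
    shared = tmap_a.keys() & tmap_b.keys()
    return {v for v in shared if min(tmap_a[v], tmap_b[v]) > t_thresh}


# Hypothetical t values from the tying and naming interaction maps
tying_map = {(0, 0, 0): 4.0, (1, 0, 0): 3.6, (2, 0, 0): 1.0}
naming_map = {(1, 0, 0): 3.7, (2, 0, 0): 3.9, (5, 5, 5): 3.8}
overlap = conjunction(tying_map, naming_map, t_thresh=3.48)
```

Here only voxel (1, 0, 0) passes in both maps; voxel (2, 0, 0) is strong in the naming map but weak in the tying map, so it does not count as overlap.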

Table 2.

Regions associated with the interaction between training experience (tying experience; naming experience) and test session (pre-training scan; post-training scan).

| Region | BA | MNI x | MNI y | MNI z | Putative functional name | t-value | Cluster size | Pcorr value |
|---|---|---|---|---|---|---|---|---|
| *(a) Post-training (tying experience > no tying experience) > pre-training (tying experience > no tying experience)* | | | | | | | | |
| R superior parietal lobule | 7 | 30 | −72 | 42 | pIPS | 4.22 | 67 | 0.057 |
| R inferior parietal lobule | 40 | 54 | −33 | 54 | aIPS | 4.05 | 12 | 0.880 |
| L superior parietal lobule | 7 | −18 | −81 | 48 | pIPS | 3.72 | 21 | 0.631 |
| L inferior parietal lobule | 40 | −51 | −39 | 48 | IPL | 3.63 | 18 | 0.717 |
| R inferior temporal gyrus | 19 | 60 | −66 | −3 | ITG | 3.48 | 34 | 0.328 |
| R inferior occipital gyrus | 19 | 39 | −78 | 3 | LOC | 3.38 | | |
| R middle occipital gyrus | 19 | 42 | −90 | 6 | LOC | 3.15 | | |
| *(b) Post-training (naming experience > no naming experience) > pre-training (naming experience > no naming experience)* | | | | | | | | |
| L superior parietal lobule | 7 | −18 | −69 | 30 | SPL | 3.73 | 19 | 0.695 |

Results from the interaction analyses that assessed regions responsive to training experience and scan session. Table 2a lists the regions that showed an interaction between tying experience (knots in the ‘tied’ and the ‘both tied and named’ training conditions vs. knots in the ‘named’ and the ‘untrained’ training conditions) and scan session (post-training scan vs. pre-training scan). Table 2b lists the region that showed an interaction between naming experience (knots in the ‘named’ and the ‘both tied and named’ training conditions vs. knots in the ‘tied’ and the ‘untrained’ training conditions) and scan session (post-training scan vs. pre-training scan). Significance at all sites for each contrast was tested by a one-sample t test on β values averaged over all voxels in the cluster, with a significance threshold of p<0.005 and k=10 voxels. All regions also survived a small volume correction from the all knots>baseline (at p<0.01) contrast from the Week 1 pre-training fMRI session. Coordinates are from the MNI template and use the same orientation and origin as the Talairach and Tournoux (1988) atlas. Up to three local maxima are listed when a cluster has multiple peaks more than 8 mm apart. Activations are illustrated in Fig. 6.

Abbreviations for brain regions: pIPS, posterior intraparietal sulcus; aIPS, anterior intraparietal sulcus; IPS, intraparietal sulcus; IPL, inferior parietal lobule; ITG, inferior temporal gyrus; LOC, lateral occipital cortex; SPL, superior parietal lobule.
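The rule for listing local maxima in both table notes (up to three peaks per cluster, more than 8 mm apart) can be sketched as a greedy selection: sort candidate peaks by t-value and keep each one that lies more than 8 mm from every peak already kept. This resembles the summary produced by common neuroimaging results tables, but the function below is a hypothetical illustration, and the software used for these tables may select peaks differently.

```python
from math import dist  # Euclidean distance, Python 3.8+


def report_peaks(peaks, min_sep_mm=8.0, max_peaks=3):
    """Pick up to `max_peaks` local maxima, strongest first, each more than
    `min_sep_mm` from every peak already kept.

    peaks: list of (t_value, (x, y, z)) with coordinates in mm.
    """
    kept = []
    for t_value, xyz in sorted(peaks, key=lambda peak: peak[0], reverse=True):
        if all(dist(xyz, other) > min_sep_mm for _, other in kept):
            kept.append((t_value, xyz))
            if len(kept) == max_peaks:
                break
    return kept
```

Sorting first means a weaker peak is dropped whenever it falls within 8 mm of a stronger one, so the listed maxima always include the cluster's highest t-value.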

4. Discussion

To investigate the effects of action and linguistic experience on the neural circuitry engaged during object perception, we measured participants’ neural activity whilst they performed an object discrimination task before and after they learned to tie or name a group of knots. We found that one week of behavioural training induced changes in a subset of the brain regions generally recruited during the perceptual discrimination task. Bilateral regions of IPS showed a greater response when participants discriminated between knots they had learned to tie than between knots for which they had acquired no tying knowledge. This result has two critical implications for understanding the role of parietal cortex in object representations. First, de novo object representations based solely on perceptual-motor experience are encoded in bilateral regions of IPS. Second, neural sensitivity in parietal cortex based on perceptual-motor experience can be accessed in a task-independent manner; that is, without explicit instruction to consider how one would interact with such an object. Together, these findings suggest that experience associated with creating novel objects constructs part of an object representation in parietal cortex, which is accessed without explicit instruction when discriminating between objects. By contrast, naming experience did not recruit the brain regions expected to be associated with language, such as the inferior frontal and middle temporal gyri. Instead, one week of learning to associate a new word with a new object resulted in increased activity within left posterior IPS. Below we discuss some possible reasons for this pattern of results.

4.1. The role of perceptual-motor experience and parietal cortex during object perception

Converging lines of evidence associate the parietal cortex with tactile and perceptual-motor experience. For example, work with patients and healthy adults demonstrates parietal involvement in tool use and object manipulation (Binkofski et al., 1999; Buxbaum & Saffran, 2002; Culham & Valyear, 2006; Johnson-Frey, 2004) and suggests that the parietal cortex provides a “pragmatic” description of objects for action (Jeannerod, 1994; Jeannerod, Arbib, Rizzolatti, & Sakata, 1995). The present results further delineate the role of parietal cortex in object perception in two main ways. First, we demonstrate that object representations within the intraparietal sulci can be constructed de novo from perceptual-motor experience of how to create an object, without concomitant learning of new functional knowledge (i.e., learning what an object does or what it is used for). In doing so, we extend recent findings showing that an object’s function maps onto a distributed set of neural regions within IPS, middle temporal and premotor cortices (Weisberg et al., 2007). In that study, these brain regions were engaged more during a perceptual discrimination task after participants learned how to use novel tools. Here we held putative object function constant (as far as our participants were concerned, all knots had the same function) and manipulated only perceptual-motor experience. At test, during a perceptual discrimination task, we found greater activation within parietal cortex bilaterally, including anterior and posterior segments of IPS. This suggests that newly acquired object manipulation information is one feature of object knowledge that is coded within IPS and engaged when discriminating between two objects. Together, these results suggest that parietal cortex is involved in constructing de novo object representations based on at least two types of action experience: what an object is used for and how to create it.

Second, the current results show that newly acquired perceptual-motor experience shapes object perception, even when such experience is task-irrelevant. Parietal cortex sensitivity to perceptual-motor experience has previously been demonstrated using a task that explicitly requires retrieval of object manipulation information (Kellenbach, Brett, & Patterson, 2003). In the current study, we show parietal cortex sensitivity to perceptual-motor experience with a task that required a comparison of visual features and did not explicitly require recall of the associated tactile knowledge. This suggests that during object perception, parietal cortex responds spontaneously based on one’s history of manipulating that object (i.e., this portion of parietal cortex responds despite participants’ knot-tying experience being task-irrelevant).

Such a suggestion fits well with the affordance literature, which explores how certain objects evoke in an observer the ways the observer could interact with them, based on the object’s properties and the observer’s prior experience with the object (e.g., Gibson, 1979). A wealth of literature has demonstrated that mere perception of a tool or other functional object engages brain regions required to carry out the associated motor act with the tool or object (e.g., Creem-Regehr & Lee, 2005; Grafton et al., 1997; Valyear, Cavina-Pratesi, Stiglick, & Culham, 2007). Our findings extend this affordance literature by suggesting that viewing pictures of simple knots engages the same brain regions required to transform a length of rope into a knot, even though, unlike tools, the knots in this study had no functional value beyond being the endpoint of a series of actions performed with a piece of rope. Several avenues remain open for future work, including whether viewing an unknotted piece of rope also affords knot-tying actions in experienced knot-tyers, and whether learning to construct other “non-functional objects”, such as origami, might induce similar activity within the motor system.

Although access to perceptual-motor knowledge occurred without the task demanding it, an additional processing cost was incurred: on average over the week of training, RTs were longer for objects that participants had experience manipulating than for untrained objects. Together, these findings suggest that during object perception, perceptual-motor experience is accessed in a spontaneous but relatively time-consuming manner. Future work may wish to use a dual-task paradigm to further interrogate the degree to which these processes are automatic or controlled.

The present findings are consistent with the view that object concepts can be grounded in perception and action experience with these physical objects (Allport, 1985; Barsalou, 1999, 2008; Martin, 1998), rather than the view that conceptual representations are purely amodal and distinct from perceptual and motor systems (Fodor, 1998). We provide additional evidence for which action experiences are accessed when perceiving objects and how such knowledge is encoded in the brain. We show that despite all objects being from the same general class of objects (knots), and thus functionally equivalent for the sake of this experiment, participants’ prior perceptual-motor manipulation experience modulated responses in IPS. Thus, conceptual knowledge for these objects is intermixed with object praxis; that is, one feature of object knowledge is how one has previously interacted with the object. In sum, IPS incorporates perceptual-motor experience, in addition to functional knowledge (Weisberg et al., 2007; Bellebaum et al., 2012), when learning about novel objects. How these experience-dependent knowledge forms interact with category-based knowledge (Anzellotti, Mahon, Schwarzbach, & Caramazza, 2011; Caramazza & Mahon, 2003; Caramazza & Shelton, 1998; Mahon & Caramazza, 2011; Mahon et al., 2007) remains an open question for future research.

4.2. Experience naming objects

When knots participants learned to name were directly compared to those for which no names were learned, one cluster within the left superior parietal lobule, along the posterior intraparietal sulcus, demonstrated a training week by training experience interaction (Fig. 6). Importantly, this cluster shared no voxels with the pIPS cluster found for the tying experience by training week interaction. Even when responses across the whole brain were considered, we found no evidence of naming experience-induced changes in activity within the inferior frontal or superior temporal gyri for the training experience by scanning week interaction. We initially predicted a linguistic experience-induced change in such regions, based on abundant prior work implicating them in mediating names for objects (Cornelissen et al., 2004; Gronholm et al., 2005; Hulten, Vihla, Laine, & Salmelin, 2009; Liljestrom, Hulten, Parkkonen, & Salmelin, 2009; Mechelli, Sartori, Orlandi, & Price, 2006; Price, Devlin, Moore, Morton, & Laird, 2005; Pulvermuller, 2005). We speculate that there are two possible reasons why these regions, classically associated with the storage of linguistic knowledge, were not more strongly activated when participants viewed knots whose names they had learned.

First, the only linguistic knowledge participants learned about knots in the present experiment was an arbitrary, non-descriptive name. Findings reported by James and Gauthier (2004) shed light on why such names might fail to activate areas classically associated with object names. These authors compared neural representations of arbitrary, non-descriptive names for novel objects (e.g., John, Stuart) with richer semantic associations for the same novel objects (e.g., sweet, nocturnal, friendly, loud), and found greater activation within inferior frontal and superior temporal regions when participants perceived objects for which they had learned richer semantic information than when they perceived objects whose simple, non-descriptive names had been learned (James & Gauthier, 2004). Also, in children learning names for novel objects, active interaction with the objects modulated motor responses, whereas passive observation did not (James & Swain, 2011). In the present study, participants only passively viewed those objects for which naming experience was acquired. Continuing this line of reasoning, we would predict that a variation of our task that associates stronger linguistic or semantic knowledge with novel objects should show more dramatic learning-related changes for linguistic information.

A second explanation for the observation that only a small cluster within SPL demonstrated an interaction between naming experience and scanning session is that rather than reflecting a linguistic process per se, the engagement of this region may reflect experience encoding/retrieving names from memory, which was a necessary part of our naming training procedures. That is, for objects whose names were learned, participants had five days of experience encoding novel names and having to retrieve them when prompted, whereas in the tying and untrained conditions, this type of encoding/retrieval process was absent. Posterior parietal cortex (along with other regions) has been frequently associated with novel name encoding and retrieval processes (e.g., Duarte, Ranganath, Winward, Hayward, & Knight, 2004; Mangels, Manzi, & Summerfield, 2010; Uncapher, Otten, & Rugg, 2006; Van Petten & Senkfor, 1996; Van Petten, Senkfor, & Newberg, 2000). Thus, when discriminating between objects with arbitrary names associated with them, experience of novel name encoding or retrieval may have been spontaneously engaged.

4.3. Limitations

One limitation of the present study concerns the possibility that participants automatically generated some form of linguistic or action representation for knots for which they had received no linguistic or action training. If this were the case, findings from the name-only and tie-only conditions could be contaminated by self-generated names or by thoughts about how to tie knots that participants had not been trained to tie. Debriefing following the post-training fMRI session revealed that no participant had a specific or conscious strategy to do this, per se. Several participants did mention that they referred to some of the more “interesting-looking” knots for which they had no naming or tying training with descriptions such as “the knot with four loops”, but no participant reported assigning discrete names to unnamed knots, nor forming specific ideas about how to tie knots for which they did not receive tying training. Moreover, the fact that the present data still reveal distinct circuits when observing named or tied knots suggests that the training manipulations were nonetheless robust and effective in establishing some degree of modality-specific representations.

5. Conclusion

In summary, linguistic and especially perceptual-motor experience with novel objects can markedly change how these objects are represented in the brain. Learning to name a knot resulted in a modest increase of activity within the left superior parietal lobule, while learning how to tie a knot resulted in robust bilateral activation along the intraparietal sulcus. Our use of a perceptual discrimination task that does not require explicit access to training experience enables us to conclude that participants’ experiences with these novel objects became an integral part of the object representations, which were automatically accessed when the knots were seen. The findings thus lend support to an embodied notion of object perception by demonstrating that experience with an object influences that object’s perceptually-driven representation in the brain.

Supplementary Material

Acknowledgments

The authors would like to thank Megan Zebroski for her outstanding help with video preparation and participant testing and Gideon Caplovitz for the phase-scrambling MATLAB script. This work was supported by an NRSA predoctoral fellowship to E.S.C. (F31-NS056720).

Appendix A. Supporting information

Supplementary data associated with this article can be found in the online version at http://dx.doi.org/10.1016/j.neuropsychologia.2012.09.028.

Footnotes

Due to a computer malfunction, complete data sets for the perceptual discrimination task were collected for only 12 participants for pre-training and post-training scanning sessions. These data are presented for completeness in Supplemental Fig. 2.

References

- Allport DA. Distributed memory, modular subsystems and dysphasia. In: Newman S, Epstein R, editors. Current perspectives in dysphasia. New York: Churchill Livingston; 1985. pp. 207–244. [Google Scholar]

- Anzellotti S, Mahon BZ, Schwarzbach J, Caramazza A. Differential activity for animals and manipulable objects in the anterior temporal lobes. Journal of Cognitive Neuroscience. 2011;23:2059–2067. doi: 10.1162/jocn.2010.21567. [DOI] [PubMed] [Google Scholar]

- Barsalou LW. Perceptual symbol systems. Behavioral Brain Sciences. 1999;22:577–609. doi: 10.1017/s0140525x99002149. discussion 610–560. [DOI] [PubMed] [Google Scholar]

- Barsalou LW. Grounded cognition. Annual Review of Psychology. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639. [DOI] [PubMed] [Google Scholar]

- Barsalou LW, Kyle Simmons W, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends in Cognitive Science. 2003;7:84–91. doi: 10.1016/s1364-6613(02)00029-3. [DOI] [PubMed] [Google Scholar]

- Bellebaum C, Tettamanti M, Marchetta E, Della Rosa P, Rizzo G, Daum I, et al. Neural representations of unfamiliar objects are modulated by sensorimotor experience. Cortex: A Journal Devoted to the Study of the Nervous System and Behavior. 2012 doi: 10.1016/j.cortex.2012.03.023. [DOI] [PubMed] [Google Scholar]

- Binkofski F, Buccino G, Stephan KM, Rizzolatti G, Seitz RJ, Freund HJ. A parieto-premotor network for object manipulation: evidence from neuroimaging. Experimental Brain Research. 1999;128:210–213. doi: 10.1007/s002210050838. [DOI] [PubMed] [Google Scholar]

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, et al. Distinctions between manipulation and function knowledge of objects: evidence from functional magnetic resonance imaging. Brain Research: Cognitive Brain Research. 2005;23:361–373. doi: 10.1016/j.cogbrainres.2004.11.001. [DOI] [PubMed] [Google Scholar]

- Buxbaum LJ. Ideomotor apraxia: a call to action. Neurocase. 2001;7:445–458. doi: 10.1093/neucas/7.6.445. [DOI] [PubMed] [Google Scholar]

- Buxbaum LJ, Saffran EM. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain and Language. 2002;82:179–199. doi: 10.1016/s0093-934x(02)00014-7. [DOI] [PubMed] [Google Scholar]

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, et al. The different neural correlates of action and functional knowledge in semantic memory: an FMRI study. Cerebral Cortex. 2008;18:740–751. doi: 10.1093/cercor/bhm110. [DOI] [PubMed] [Google Scholar]

- Caramazza A, Mahon BZ. The organization of conceptual knowledge: the evidence from category-specific semantic deficits. Trends in Cognitive Science. 2003;7:354–361. doi: 10.1016/s1364-6613(03)00159-1. [DOI] [PubMed] [Google Scholar]

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain the animate-inanimate distinction. Journal of Cognitive Neuroscience. 1998;10:1–34. doi: 10.1162/089892998563752. [DOI] [PubMed] [Google Scholar]

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Cornelissen K, Laine M, Renvall K, Saarinen T, Martin N, Salmelin R. Learning new names for new objects: cortical effects as measured by magnetoencephalography. Brain and Language. 2004;89:617–622. doi: 10.1016/j.bandl.2003.12.007.

- Creem-Regehr SH, Dilda V, Vicchrilli AE, Federer F, Lee JN. The influence of complex action knowledge on representations of novel graspable objects: evidence from functional magnetic resonance imaging. Journal of the International Neuropsychological Society: JINS. 2007;13:1009–1020. doi: 10.1017/S1355617707071093.

- Creem-Regehr SH, Lee JN. Neural representations of graspable objects: are tools special? Brain Research: Cognitive Brain Research. 2005;22(3):457–469. doi: 10.1016/j.cogbrainres.2004.10.006.

- Cross ES, Kraemer DJ, Hamilton AF, Kelley WM, Grafton ST. Sensitivity of the action observation network to physical and observational learning. Cerebral Cortex. 2009;19:315–326. doi: 10.1093/cercor/bhn083.

- Culham JC, Valyear KF. Human parietal cortex in action. Current Opinion in Neurobiology. 2006;16:205–212. doi: 10.1016/j.conb.2006.03.005.

- De Renzi E, Faglioni P, Sorgato P. Modality-specific and supramodal mechanisms of apraxia. Brain: A Journal of Neurology. 1982;105:301–312. doi: 10.1093/brain/105.2.301.

- Diedrichsen J, Shadmehr R. Detecting and adjusting for artifacts in fMRI time series data. Neuroimage. 2005;27:624–634. doi: 10.1016/j.neuroimage.2005.04.039.

- Duarte A, Ranganath C, Winward L, Hayward D, Knight RT. Dissociable neural correlates for familiarity and recollection during the encoding and retrieval of pictures. Brain Research: Cognitive Brain Research. 2004;18:255–272. doi: 10.1016/j.cogbrainres.2003.10.010.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, et al. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Eickhoff SB, Schleicher A, Zilles K, Amunts K. The human parietal operculum. I. Cytoarchitectonic mapping of subdivisions. Cerebral Cortex. 2006;16:254–267. doi: 10.1093/cercor/bhi105.

- Ferstl EC, Neumann J, Bogler C, von Cramon DY. The extended language network: a meta-analysis of neuroimaging studies on text comprehension. Human Brain Mapping. 2007;29(5):581–593. doi: 10.1002/hbm.20422.

- Fodor JA. Concepts: where cognitive science went wrong. Oxford: Oxford University Press; 1998.

- Frey SH. What puts the how in where? Tool use and the divided visual streams hypothesis. Cortex. 2007;43:368–375. doi: 10.1016/s0010-9452(08)70462-3.

- Friston KJ, Price CJ, Fletcher P, Moore C, Frackowiak RS, Dolan RJ. The trouble with cognitive subtraction. Neuroimage. 1996;4:97–104. doi: 10.1006/nimg.1996.0033.

- Gardner EP, Babu KS, Ghosh S, Sherwood A, Chen J. Neurophysiology of prehension. III. Representation of object features in posterior parietal cortex of the macaque monkey. Journal of Neurophysiology. 2007;98:3708–3730. doi: 10.1152/jn.00609.2007.

- Gardner EP, Babu KS, Reitzen SD, Ghosh S, Brown AS, Chen J, et al. Neurophysiology of prehension. I. Posterior parietal cortex and object-oriented hand behaviors. Journal of Neurophysiology. 2007;97:387–406. doi: 10.1152/jn.00558.2006.

- Gardner EP, Ro JY, Babu KS, Ghosh S. Neurophysiology of prehension. II. Response diversity in primary somatosensory (S-I) and motor (M-I) cortices. Journal of Neurophysiology. 2007;97:1656–1670. doi: 10.1152/jn.01031.2006.

- Gibson JJ. The ecological approach to visual perception. Hillsdale: Lawrence Erlbaum Associates, Inc; 1979.

- Grafton ST. Embodied cognition and the simulation of action to understand others. Annals of the New York Academy of Sciences. 2009;1156:97–117. doi: 10.1111/j.1749-6632.2009.04425.x.

- Grafton ST, Fadiga L, Arbib MA, Rizzolatti G. Premotor cortex activation during observation and naming of familiar tools. Neuroimage. 1997;6:231–236. doi: 10.1006/nimg.1997.0293.

- Grezes J, Decety J. Does visual perception of object afford action? Evidence from a neuroimaging study. Neuropsychologia. 2002;40:212–222. doi: 10.1016/s0028-3932(01)00089-6.

- Grol MJ, Majdandzic J, Stephan KE, Verhagen L, Dijkerman HC, Bekkering H, et al. Parieto-frontal connectivity during visually guided grasping. Journal of Neuroscience. 2007;27:11877–11887. doi: 10.1523/JNEUROSCI.3923-07.2007.

- Gronholm P, Rinne JO, Vorobyev V, Laine M. Naming of newly learned objects: a PET activation study. Brain Research: Cognitive Brain Research. 2005;25:359–371. doi: 10.1016/j.cogbrainres.2005.06.010.

- Hulten A, Vihla M, Laine M, Salmelin R. Accessing newly learned names and meanings in the native language. Human Brain Mapping. 2009;30:976–989. doi: 10.1002/hbm.20561.

- James KH, Swain SN. Only self-generated actions create sensorimotor systems in the developing brain. Developmental Science. 2011;14:673–678. doi: 10.1111/j.1467-7687.2010.01011.x.

- James TW, Gauthier I. Auditory and action semantic features activate sensory-specific perceptual brain regions. Current Biology. 2003;13:1792–1796. doi: 10.1016/j.cub.2003.09.039.

- James TW, Gauthier I. Brain areas engaged during visual judgments by involuntary access to novel semantic information. Vision Research. 2004;44:429–439. doi: 10.1016/j.visres.2003.10.004.

- Jeannerod M. The representing brain: neural correlates of motor intention and imagery. Behavioral and Brain Sciences. 1994;17:187–245.

- Jeannerod M, Arbib MA, Rizzolatti G, Sakata H. Grasping objects: the cortical mechanisms of visuomotor transformation. Trends in Neurosciences. 1995;18:314–320.

- Johnson SH. Imagining the impossible: intact motor representations in hemiplegics. Neuroreport. 2000;11:729–732. doi: 10.1097/00001756-200003200-00015.

- Johnson SH, Sprehn G, Saykin AJ. Intact motor imagery in chronic upper limb hemiplegics: evidence for activity-independent action representations. Journal of Cognitive Neuroscience. 2002;14:841–852. doi: 10.1162/089892902760191072.

- Johnson-Frey SH. The neural bases of complex tool use in humans. Trends in Cognitive Sciences. 2004;8:71–78. doi: 10.1016/j.tics.2003.12.002.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cerebral Cortex. 2005;15:681–695. doi: 10.1093/cercor/bhh169.

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: the importance of manipulability and action in tool representation. Journal of Cognitive Neuroscience. 2003;15:30–46. doi: 10.1162/089892903321107800.

- Kiefer M, Sim EJ, Liebich S, Hauk O, Tanaka J. Experience-dependent plasticity of conceptual representations in human sensory-motor areas. Journal of Cognitive Neuroscience. 2007;19:525–542. doi: 10.1162/jocn.2007.19.3.525.

- Liljestrom M, Hulten A, Parkkonen L, Salmelin R. Comparing MEG and fMRI views to naming actions and objects. Human Brain Mapping. 2009;30:1845–1856. doi: 10.1002/hbm.20785.

- Mahon BZ, Caramazza A. Concepts and categories: a cognitive neuropsychological perspective. Annual Review of Psychology. 2009;60:27–51. doi: 10.1146/annurev.psych.60.110707.163532.

- Mahon BZ, Caramazza A. What drives the organization of object knowledge in the brain? Trends in Cognitive Sciences. 2011;15:97–103. doi: 10.1016/j.tics.2011.01.004.

- Mahon BZ, Milleville SC, Negri GA, Rumiati RI, Caramazza A, Martin A. Action-related properties shape object representations in the ventral stream. Neuron. 2007;55:507–520. doi: 10.1016/j.neuron.2007.07.011.

- Mangels JA, Manzi A, Summerfield C. The first does the work, but the third time’s the charm: the effects of massed repetition on episodic encoding of multimodal face-name associations. Journal of Cognitive Neuroscience. 2010;22:457–473. doi: 10.1162/jocn.2009.21201.

- Martin A. The organization of semantic knowledge and the origin of words in the brain. In: Jablonski N, Aiello L, editors. The origins and diversification of language. San Francisco: California Academy of Sciences; 1998. pp. 69–98.

- Martin A. The representation of object concepts in the brain. Annual Review of Psychology. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG. Discrete cortical regions associated with knowledge of color and knowledge of action. Science. 1995;270:102–105. doi: 10.1126/science.270.5233.102.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Neural correlates of category-specific knowledge. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- Mechelli A, Sartori G, Orlandi P, Price CJ. Semantic relevance explains category effects in medial fusiform gyri. Neuroimage. 2006;30:992–1002. doi: 10.1016/j.neuroimage.2005.10.017.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Peissig JJ, Singer J, Kawasaki K, Sheinberg DL. Effects of long-term object familiarity on event-related potentials in the monkey. Cerebral Cortex. 2007;17:1323–1334. doi: 10.1093/cercor/bhl043.

- Price CJ, Devlin JT, Moore CJ, Morton C, Laird AR. Meta-analyses of object naming: effect of baseline. Human Brain Mapping. 2005;25:70–82. doi: 10.1002/hbm.20132.

- Pulvermuller F. Brain mechanisms linking language and action. Nature Reviews Neuroscience. 2005;6:576–582. doi: 10.1038/nrn1706.

- Romaya J. Cogent 2000 and Cogent Graphics software. 2000. http://www.vislab.ucl.ac.uk/Cogent/

- Rothi LJGH, Heilman KM. Apraxia: the neuropsychology of action. Hove: Psychology Press; 1997.

- Sakata H, Tsutsui K, Taira M. Toward an understanding of the neural processing for 3D shape perception. Neuropsychologia. 2005;43:151–161. doi: 10.1016/j.neuropsychologia.2004.11.003.

- Shapiro KA, Pascual-Leone A, Mottaghy FM, Gangitano M, Caramazza A. Grammatical distinctions in the left frontal cortex. Journal of Cognitive Neuroscience. 2001;13:713–720. doi: 10.1162/08989290152541386.

- Talairach J, Tournoux P. Co-planar Stereotaxic Atlas of the Human Brain. New York: Thieme; 1988.

- Tunik E, Rice NJ, Hamilton A, Grafton ST. Beyond grasping: representation of action in human anterior intraparietal sulcus. Neuroimage. 2007;36(2):T77–T86. doi: 10.1016/j.neuroimage.2007.03.026.

- Tyler LK, Russell R, Fadili J, Moss HE. The neural representation of nouns and verbs: PET studies. Brain: A Journal of Neurology. 2001;124:1619–1634. doi: 10.1093/brain/124.8.1619.

- Uncapher MR, Otten LJ, Rugg MD. Episodic encoding is more than the sum of its parts: an fMRI investigation of multifeatural contextual encoding. Neuron. 2006;52:547–556. doi: 10.1016/j.neuron.2006.08.011.

- Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of visual behavior. Cambridge, MA: MIT Press; 1982. pp. 549–586.

- Valyear KF, Cavina-Pratesi C, Stiglick AJ, Culham JC. Does tool-related fMRI activity within the intraparietal sulcus reflect the plan to grasp? Neuroimage. 2007;36(2):T94–T108. doi: 10.1016/j.neuroimage.2007.03.031.

- Van Petten C, Senkfor AJ. Memory for words and novel visual patterns: repetition, recognition, and encoding effects in the event-related brain potential. Psychophysiology. 1996;33:491–506. doi: 10.1111/j.1469-8986.1996.tb02425.x.

- Van Petten C, Senkfor AJ, Newberg WM. Memory for drawings in locations: spatial source memory and event-related potentials. Psychophysiology. 2000;37:551–564.

- Weisberg J, van Turennout M, Martin A. A neural system for learning about object function. Cerebral Cortex. 2007;17:513–521. doi: 10.1093/cercor/bhj176.
