Abstract

MacCallum, Browne, and Cai (2006) proposed a new framework for evaluation and power analysis of small differences between nested structural equation models (SEMs). In their framework, the null and alternative hypotheses for testing a small difference in fit, and for the related power analyses, are defined by chosen pairs of root-mean-square error of approximation (RMSEA) values. In this article, we develop a method that quantifies these chosen RMSEA pairs and allows them to be compared quantitatively. We propose using single RMSEA values in place of RMSEA pairs for model comparison and power analysis, which avoids the differential meaning of the chosen RMSEA pairs inherent in the approach of MacCallum et al. (2006). With this choice, the conventional cutoff values used in overall model evaluation can be transferred directly to the evaluation and power analysis of model differences.

Keywords: structural equation model, model comparison, RMSEA, equi-discrepancy line, power analysis

Comparison of nested structural equation models (SEMs) has traditionally been based on the null hypothesis that the competing models have the same fit in the population. By far the most common method for testing this null hypothesis is the classical normal theory-based likelihood ratio (NTLR) test (e.g., Jöreskog, 1971; Steiger, Shapiro, & Browne, 1985). The power of the NTLR test to detect any difference in fit between competing models is therefore an important consideration in this kind of null hypothesis testing.

Despite the popularity of this approach, MacCallum, Browne, and Cai (2006) argued that the traditional null hypothesis of equal fit in the population is always false in practice and will be rejected by the NTLR test whenever the sample size (N = n + 1) is large enough. They advocated replacing it with a null hypothesis of a small difference in fit between nested models. Under this new null hypothesis, the restricted model can be selected if the NTLR test does not detect a sufficiently large difference in fit between the two nested models. Correspondingly, the power of the NTLR test to detect a sufficiently large difference in fit becomes an important consideration for this type of null hypothesis testing.

MacCallum et al. (2006) proposed a unified approach to such model comparison and power analysis. In their approach, the null and alternative hypotheses needed for model comparison and power analysis are defined by pairs of root-mean-square error of approximation (RMSEA; Browne & Cudeck, 1993; Steiger & Lind, 1980) values chosen for the competing models. Under these newly defined hypotheses, both the power of the NTLR test and the comparison of models by the NTLR test can be investigated.

In this article, we first review this new approach to model comparison and power analysis. Then we propose a new method to quantify the RMSEA pairs chosen for the model comparison and the power analyses in MacCallum et al. (2006). By this new method, the choice of single RMSEA values is suggested to replace the choice of RMSEA pairs to define the null and alternative hypotheses needed for model comparison and power analysis. Our new method is then compared with the choices of RMSEA pairs by MacCallum et al. (2006) and applied to some empirical examples in the literature, followed by a discussion at the end of the article.

An Approach to Model Comparison and Power Analysis

Let us assume for the moment that the model is estimated with the normal theory-based maximum likelihood (ML) discrepancy function, which is defined as

$$F_{ML} = \ln|\hat{\Sigma}| - \ln|S| + \operatorname{tr}(S\hat{\Sigma}^{-1}) - q,$$

where S is the sample covariance matrix, Σ̂ is the covariance matrix implied by the model, and q is the number of observed variables. Let two models M1 and M2 have degrees of freedom ν1 and ν2, respectively, and let M2 be nested in M1. Let F̂1 and F̂2 be the minimized FML values of M1 and M2 in the sample, and let F1 and F2 be the corresponding population values. Suppose for the moment that the data are normal and that the two nested models are not badly misspecified and satisfy the so-called population drift assumptions (see Steiger et al., 1985, for details). The well-known NTLR statistic TML = nF̂2 − nF̂1 then asymptotically follows a noncentral χ² distribution with degrees of freedom ν2 − ν1 and noncentrality parameter nF2 − nF1.
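A minimal R sketch of the ML discrepancy function defined above may make the definition concrete. The function name `f_ml` is our own illustrative choice and is not part of any SEM package. The sketch assumes that S and Σ̂ are symmetric positive-definite q × q matrices.

```r
# Hypothetical helper: normal-theory ML discrepancy F_ML.
# Assumes S (sample covariance) and Sigma (model-implied covariance)
# are symmetric positive-definite q x q matrices.
f_ml <- function(S, Sigma) {
  q <- nrow(S)
  log_det_sigma <- as.numeric(determinant(Sigma, logarithm = TRUE)$modulus)
  log_det_s     <- as.numeric(determinant(S, logarithm = TRUE)$modulus)
  log_det_sigma - log_det_s + sum(diag(S %*% solve(Sigma))) - q
}

S <- matrix(c(1.0, 0.3,
              0.3, 1.0), nrow = 2)
f_ml(S, S)        # model reproduces S exactly: discrepancy 0
f_ml(S, diag(2))  # model with zero covariance: -log(0.91), about 0.094
```

Given minimized values F̂1 and F̂2 obtained this way, TML is n = N − 1 times their difference.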

In SEM, the statistical power of TML to reject H0 : F2 − F1 = 0, the null hypothesis of no difference in fit between nested models, is a long-standing issue. Because the value of F2 − F1 is generally unknown, this power analysis requires an alternative hypothesis H1 : F2 − F1 = δ, where δ is a chosen positive value. TML then has an approximate central χ² distribution with degrees of freedom ν2 − ν1 under H0, and an approximate noncentral χ² distribution with degrees of freedom ν2 − ν1 and noncentrality parameter nδ under H1. Let us use α = .05. The critical value for TML to reject H0 is the 95th percentile of the central χ² distribution, which we denote by $\chi^2_{.95}(\nu_2 - \nu_1)$. The approximate power of TML to reject H0 under H1 is the probability that TML exceeds $\chi^2_{.95}(\nu_2 - \nu_1)$ under H1. Given ν2 − ν1, n, and α, the distribution of TML under H0 and the critical value are fixed. However, a value must be chosen for δ to define the noncentral χ² distribution of TML under H1.
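The power calculation just described can be sketched in R with the base functions `qchisq` and `pchisq`, the latter using its `ncp` argument for the noncentral case. The name `power_no_diff` is hypothetical, and the inputs in the usage line are illustrative rather than taken from any study. The sketch assumes the central and noncentral χ² approximations stated above, with n = N − 1.

```r
# Sketch: power of T_ML to reject H0: F2 - F1 = 0 when H1: F2 - F1 = delta.
# Assumes T_ML ~ central chi-square(df_diff) under H0 and
# noncentral chi-square(df_diff, ncp = n * delta) under H1, with n = N - 1.
power_no_diff <- function(delta, df_diff, N, alpha = 0.05) {
  n <- N - 1
  crit <- qchisq(1 - alpha, df = df_diff)   # critical value under H0
  pchisq(crit, df = df_diff, ncp = n * delta, lower.tail = FALSE)
}

# Illustrative inputs only
power_no_diff(delta = 0.05, df_diff = 2, N = 200)
```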

MacCallum et al. (2006) defined the value of δ as follows. Let r1 and r2 denote the population RMSEAs of M1 and M2, respectively, defined as $r_1 = \sqrt{F_1/\nu_1}$ and $r_2 = \sqrt{F_2/\nu_2}$. Then F2 − F1 can be expressed as

$$F_2 - F_1 = \nu_2 r_2^2 - \nu_1 r_1^2. \qquad (1)$$

Mimicking the expression of F2 − F1 in (1), MacCallum et al. (2006) defined

$$\delta = \nu_2 r_{2\delta}^2 - \nu_1 r_{1\delta}^2, \qquad (2)$$

where r1δ and r2δ are some RMSEA values chosen for M1 and M2, respectively, to define δ in H1 for the power analysis.
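Equation (2) amounts to a one-line computation. The following R sketch, with the hypothetical helper name `delta_from_pair`, also confirms that feeding in population RMSEAs built from F1 and F2 recovers F2 − F1, as identity (1) requires.

```r
# Eq. (2): delta implied by a chosen RMSEA pair (r1, r2) for nested models
# M1 (df nu1) and M2 (df nu2). `delta_from_pair` is a hypothetical name.
delta_from_pair <- function(r1, r2, nu1, nu2) {
  nu2 * r2^2 - nu1 * r1^2
}

delta_from_pair(r1 = 0.05, r2 = 0.08, nu1 = 20, nu2 = 22)  # 0.1408 - 0.05 = 0.0908

# Identity (1): with r = sqrt(F / nu), the formula returns F2 - F1
F1 <- 0.10; F2 <- 0.20
delta_from_pair(sqrt(F1 / 20), sqrt(F2 / 22), nu1 = 20, nu2 = 22)  # 0.10
```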

MacCallum et al. (2006) argued that H0 : F2 − F1 = 0 is too restrictive and would always be false in practice. As an alternative, they advocated the good-enough principle (Serlin & Lapsley, 1985) for model comparison and proposed a new null hypothesis of the form

$$H_0: F_2 - F_1 \le \delta^*, \qquad (3)$$

where δ* is a chosen small positive value. They argued that this null hypothesis of a small difference in fit between nested models is more realistic in SEM practice than the null hypothesis of no difference in fit. Under this null hypothesis, M2 can be selected as long as the difference in fit between M1 and M2 is small enough. The critical value for TML to reject H0 now becomes the 95th percentile of the noncentral χ² distribution with degrees of freedom ν2 − ν1 and noncentrality parameter nδ*, which we denote by $\chi^2_{.95}(\nu_2 - \nu_1, n\delta^*)$. If TML exceeds this critical value, M1 is preferred; otherwise, M2 is preferred. MacCallum et al. (2006) defined

$$\delta^* = \nu_2 r_{2\delta^*}^2 - \nu_1 r_{1\delta^*}^2, \qquad (4)$$

where r1δ* and r2δ* are some RMSEA values chosen for M1 and M2, respectively, to determine the value of δ*.
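The test of H0 : F2 − F1 ≤ δ* can be sketched in R as follows. The sketch uses the noncentral χ² critical value at the boundary δ* and reports a p-value and the preferred model. The helper name `test_small_diff` and the example inputs are hypothetical, and the sketch assumes n = N − 1.

```r
# Sketch: test H0: F2 - F1 <= delta_star with T_ML, using the
# noncentral chi-square(df_diff, n * delta_star) reference distribution.
test_small_diff <- function(T_ml, df_diff, N, delta_star, alpha = 0.05) {
  n <- N - 1
  ncp0 <- n * delta_star
  crit <- qchisq(1 - alpha, df = df_diff, ncp = ncp0)
  p <- pchisq(T_ml, df = df_diff, ncp = ncp0, lower.tail = FALSE)
  list(critical = crit, p_value = p,
       preferred = if (T_ml > crit) "M1 (general)" else "M2 (restricted)")
}

# Hypothetical inputs for illustration
res <- test_small_diff(T_ml = 15, df_diff = 2, N = 300, delta_star = 0.01)
str(res)
```

Because the critical value grows with nδ*, a given TML is harder to declare significant than under the traditional null hypothesis of no difference.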

As with the power to reject H0 : F2 − F1 = 0, the statistical power of TML to reject H0 : F2 − F1 ≤ δ* is an important consideration when testing this null hypothesis. Note that power in this context no longer refers to the probability of detecting any difference in fit between competing models, as in traditional power analysis. Instead, it is the probability of detecting a sufficiently large difference in fit between the models.

For this type of power analysis, an alternative hypothesis H1 : F2 − F1 = δ** is needed. Under this alternative hypothesis, TML has an approximate noncentral χ² distribution with degrees of freedom ν2 − ν1 and noncentrality parameter nδ**. The approximate power of TML to reject H0 : F2 − F1 ≤ δ* is the probability that TML exceeds $\chi^2_{.95}(\nu_2 - \nu_1, n\delta^*)$ under the alternative hypothesis. MacCallum et al. (2006) again defined δ** in terms of an RMSEA pair as

$$\delta^{**} = \nu_2 r_{2\delta^{**}}^2 - \nu_1 r_{1\delta^{**}}^2, \qquad (5)$$

where r1δ** and r2δ** are some RMSEA values chosen for M1 and M2, respectively, to determine δ**. Notice that δ** here must be greater than δ* for this type of power analysis.
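This power can be sketched in R by combining the two noncentral χ² distributions: the critical value comes from noncentrality nδ*, and the rejection probability from noncentrality nδ**. The name `power_small_diff` and the example inputs are hypothetical. As a sanity check, when δ** approaches δ* the power approaches α.

```r
# Sketch: power of T_ML to reject H0: F2 - F1 <= delta_star when
# H1: F2 - F1 = delta_2star (> delta_star), with n = N - 1.
power_small_diff <- function(delta_star, delta_2star, df_diff, N, alpha = 0.05) {
  stopifnot(delta_2star > delta_star)
  n <- N - 1
  crit <- qchisq(1 - alpha, df = df_diff, ncp = n * delta_star)
  pchisq(crit, df = df_diff, ncp = n * delta_2star, lower.tail = FALSE)
}

# Illustrative inputs only
power_small_diff(delta_star = 0.01, delta_2star = 0.05, df_diff = 2, N = 300)
```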

A New Method for the Choice of RMSEAs

From the description above, it is clear that the choice of RMSEA pairs, such as (r1δ, r2δ) in (2), (r1δ*, r2δ*) in (4), and (r1δ**, r2δ**) in (5), is crucial to the power analyses and model comparison methodology proposed in MacCallum et al. (2006). In this article, we propose a way to quantify those RMSEA pairs chosen for model comparison and power analysis and suggest a new method to replace the choice of RMSEA pairs to define δ, δ* or δ** across models and conditions.

We use δC to represent the chosen value of δ, δ*, or δ**, and (r1C, r2C) to represent the chosen values of the defining RMSEA pairs in (2), (4), and (5). Equations (2), (4), and (5) can then be written as the single general equation

$$\delta_C = \nu_2 r_{2C}^2 - \nu_1 r_{1C}^2. \qquad (6)$$

We use Figure 1 to illustrate our idea. Let us assume for the moment that ν1 = 20 and ν2 = 22, and let the horizontal and vertical axes in Figure 1 represent r1C and r2C, respectively. Any reasonable (r1C, r2C) pair for defining δ, δ*, or δ** must then lie above Line 0, the line on which δC = 0. This is because the minimum value of F2 − F1 is zero, so δC defined by (r1C, r2C) must be greater than zero. In addition, Line 0 lies below the diagonal line: by (6), points on Line 0 satisfy $r_{2C} = r_{1C}\sqrt{\nu_1/\nu_2}$, and because M2 is nested in M1, ν2 > ν1.

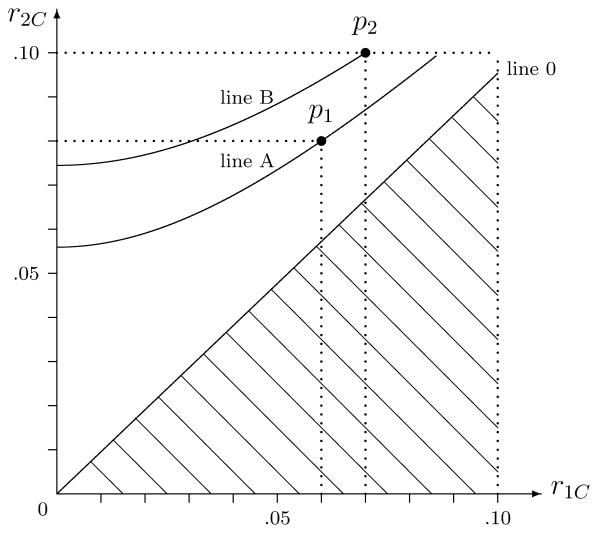

Figure 1.

The equi-discrepancy lines with ν1 = 20 and ν2 = 22

Let p1 denote the point (r1C, r2C) = (.06, .08) in Figure 1, taken as our choice of RMSEA pair, for example, for (r1δ**, r2δ**). There is exactly one line, Line A in Figure 1, consisting of the points (r1C′, r2C′) that satisfy the equality

$$\nu_2 r_{2C}^{\prime 2} - \nu_1 r_{1C}^{\prime 2} = \nu_2 r_{2C}^2 - \nu_1 r_{1C}^2. \qquad (7)$$

Similarly, when the point p2 = (.07, .10) is chosen for (r1δ**, r2δ**), (7) defines Line B in Figure 1. In fact, for any chosen RMSEA pair, such as (r1δ, r2δ) in (2), (r1δ*, r2δ*) in (4), or (r1δ**, r2δ**) in (5), there is a line in the figure consisting of infinitely many RMSEA pairs that satisfy (7). We call this line the equi-discrepancy line, because all RMSEA pairs on it have the same value of δC and therefore represent the same degree of discrepancy between the two nested models.
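Solving (7) for r2C′ gives an explicit formula for the equi-discrepancy line through a chosen pair, which a short R sketch can tabulate for Lines A and B of Figure 1. The helper name `equi_line` is hypothetical. Passing the tabulated columns to `plot` or `matplot` would redraw the curves, but that is left out here.

```r
# Points (r1', r2') on the equi-discrepancy line through (r1C, r2C),
# obtained by solving Eq. (7) for r2' given r1'.
equi_line <- function(r1_prime, r1C, r2C, nu1, nu2) {
  deltaC <- nu2 * r2C^2 - nu1 * r1C^2
  sqrt((deltaC + nu1 * r1_prime^2) / nu2)
}

r1_grid <- seq(0, 0.10, by = 0.02)
line_A <- equi_line(r1_grid, r1C = 0.06, r2C = 0.08, nu1 = 20, nu2 = 22)
line_B <- equi_line(r1_grid, r1C = 0.07, r2C = 0.10, nu1 = 20, nu2 = 22)
round(cbind(r1 = r1_grid, line_A, line_B), 3)
```

Every row of a given column yields the same δC, which is exactly what makes the line "equi-discrepancy".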

Line 0 is also a line satisfying (7): all RMSEA pairs on it represent the same zero discrepancy between the nested models. If points on Line 0 are chosen as (r1C, r2C) for model comparison, the null hypothesis of no difference in fit between nested models can be rewritten as $H_0: \nu_2 r_2^2 - \nu_1 r_1^2 = 0$. It can then be interpreted as the hypothesis that the true RMSEA pair (r1, r2) lies on Line 0. In addition, combining the identity in (1) with (3) and (4), we obtain a null hypothesis equivalent to (3):

$$H_0: \nu_2 r_2^2 - \nu_1 r_1^2 \le \nu_2 r_{2\delta^*}^2 - \nu_1 r_{1\delta^*}^2. \qquad (8)$$

By (8), testing the null hypothesis of a small difference in fit between nested models can be interpreted as testing whether the unknown true RMSEA pair (r1, r2) lies in the region between Line 0 and the equi-discrepancy line defined by the chosen pair (r1δ*, r2δ*). When (r1δ*, r2δ*) lies on a higher equi-discrepancy line, this region is larger, δ* is larger, and a greater discrepancy is allowed between the nested models. Conversely, when (r1δ*, r2δ*) lies on a lower line, the region is smaller, δ* is smaller, and less discrepancy is allowed.

The same holds for power analysis. Suppose n, ν2 − ν1, α, and the null hypothesis are fixed. When the RMSEA pair (r1C, r2C) chosen to define δ or δ** in the alternative hypothesis lies on a higher equi-discrepancy line, TML has more power to reject. When it lies on a lower line, TML has less power.

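Furthermore, whether an RMSEA pair is chosen for model comparison or for power analysis, its equi-discrepancy line defined by (7) crosses the vertical axis at a point where $r_{1C}^{\prime} = 0$. We denote the value of $r_{2C}^{\prime}$ at this point by $\tilde{r}_C$. In Figure 1, for example, $\tilde{r}_C = .056$ for Line A and $\tilde{r}_C = .074$ for Line B. Setting $r_{1C}^{\prime} = 0$ and $r_{2C}^{\prime} = \tilde{r}_C$ in (7) and combining the result with (6), we obtain

$$\delta_C = \nu_2 r_{2C}^2 - \nu_1 r_{1C}^2 = \nu_2 \tilde{r}_C^2, \quad\text{that is,}\quad \tilde{r}_C = \sqrt{\frac{\nu_2 r_{2C}^2 - \nu_1 r_{1C}^2}{\nu_2}}. \qquad (9)$$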

By (9), any discrepancy defined by a chosen RMSEA pair (r1C, r2C) for model comparison or power analysis is equivalent to the discrepancy between the saturated model and a reference model with degrees of freedom ν2 and true RMSEA $\tilde{r}_C$. For example, p1 on Line A can be considered to represent the overall discrepancy, relative to the saturated model, of a model with 22 degrees of freedom and true RMSEA = .056. For p2 on Line B, the reference model has 22 degrees of freedom and true RMSEA = .074. In this way, (9) translates the choice of (r1C, r2C) for model comparison and power analysis into an equivalent choice of $\tilde{r}_C$ for a reference model with degrees of freedom ν2.
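Equation (9) reduces each chosen pair to a single reference-model RMSEA. A small R sketch reproduces the values .056 and .074 quoted for p1 and p2. The helper name `ref_rmsea` is hypothetical. It stops on pairs below Line 0, for which (9) has no real solution.

```r
# Eq. (9): RMSEA of the reference model (df nu2) equivalent to a chosen pair.
ref_rmsea <- function(r1C, r2C, nu1, nu2) {
  deltaC <- nu2 * r2C^2 - nu1 * r1C^2
  if (any(deltaC < 0)) stop("pair lies below Line 0: delta_C < 0")
  sqrt(deltaC / nu2)
}

round(ref_rmsea(0.06, 0.08, nu1 = 20, nu2 = 22), 3)  # p1 on Line A: 0.056
round(ref_rmsea(0.07, 0.10, nu1 = 20, nu2 = 22), 3)  # p2 on Line B: 0.074
```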

Given this equivalence, we can dispense with the two-dimensional choice of (r1C, r2C) and instead define δ, δ*, or δ** for model comparison and power analysis through the choice of $\tilde{r}_C$. By definition, RMSEA measures discrepancy per degree of freedom, so choosing $\tilde{r}_C$ to define δ, δ*, or δ** keeps the meaning of these quantities the same as ν1 or ν2 changes. The value of δ, δ*, or δ** and the shape of the corresponding equi-discrepancy line do change with ν1 and ν2, by (7) and (9). Even so, the δ, δ*, or δ** defined by a specific $\tilde{r}_C$ represents the same average discrepancy per degree of freedom across studies.

This is not the case when a two-dimensional choice of (r1C, r2C) is used to define δ, δ*, or δ**. For example, MacCallum et al. (2006) selected r1δ* = .05 and r2δ* = .06 to define δ* in (3) when testing a small difference between models in two published empirical studies: Joireman, Anderson, and Strathman (2003) and Dunkley, Zuroff, and Blankstein (2003). In Joireman et al. (2003), ν1 = 10 and ν2 = 12, so by (9) the pair r1δ* = .05 and r2δ* = .06 corresponds to $\tilde{r}_{\delta^*} = .039$. In Dunkley et al. (2003), ν1 = 337 and ν2 = 338, and the same pair corresponds to $\tilde{r}_{\delta^*} = .033$. Thus, the values of δ* defined by r1δ* = .05 and r2δ* = .06 in MacCallum et al. (2006) differ in terms of $\tilde{r}_{\delta^*}$ and hence carry different meanings in the two studies. The same can happen to δ or δ** when a constant RMSEA pair (r1C, r2C) is used for power analyses across studies.
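The two reference RMSEAs quoted for Joireman et al. (2003) and Dunkley et al. (2003) follow directly from (9). The R sketch below recomputes them from the degrees of freedom given in the text. The helper and column names are our own.

```r
# Reference RMSEA from Eq. (9) for the same pair (.05, .06) in two studies.
ref_rmsea <- function(r1C, r2C, nu1, nu2) sqrt((nu2 * r2C^2 - nu1 * r1C^2) / nu2)

studies <- data.frame(
  study = c("Joireman et al. (2003)", "Dunkley et al. (2003)"),
  nu1   = c(10, 337),
  nu2   = c(12, 338)
)
studies$r_tilde <- ref_rmsea(0.05, 0.06, studies$nu1, studies$nu2)
print(studies, digits = 2)   # r_tilde: about .039 and .033
```

The same RMSEA pair thus maps to different per-degree-of-freedom discrepancies once ν1 and ν2 change.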

Besides eliminating this differential meaning of RMSEA pairs inherent in the approach of MacCallum et al. (2006), the choice of $\tilde{r}_C$ lets us apply to model comparison and power analysis the RMSEA cutoff criteria established in SEM for overall model fit. RMSEAs of .056 or .074 may be taken to indicate discrepancies between mildly misspecified models and the corresponding saturated models. Thus, p1 and p2, with $\tilde{r}_C$ equal to .056 and .074, respectively, can be considered to allow too much discrepancy to define δ* in (3) for evaluating a small difference. In SEM, .05 is the widely used RMSEA cutoff for close fit (e.g., Browne & Cudeck, 1993), and it can be used as $\tilde{r}_{\delta^*}$ to define δ* for model comparison.

MacCallum, Browne, and Sugawara (1996) used an RMSEA of .05 to define the alternative hypothesis and estimated the power, or the minimum N, of the likelihood ratio test to reject exact fit of SEM models with degrees of freedom ν2. Through (9) and the equi-discrepancy lines above, we have shown the connection between the choice of RMSEA values for overall model evaluation and the choice of $\tilde{r}_C$ for model comparison. This connection also links our power analysis with that of MacCallum et al. (1996). By the same rationale, we could use $\tilde{r}_\delta = .05$ to define δ in the alternative hypothesis and then estimate the power, or the minimum N, of TML to reject the null hypothesis of no difference in fit between nested models.

Moreover, if δ* in the null hypothesis is defined by a value such as $\tilde{r}_{\delta^*} = .05$, the value of $\tilde{r}_{\delta^{**}}$ that defines δ** in the alternative hypothesis should be greater than .05. With such a choice, δ** may represent the discrepancy between a mildly misspecified model and the saturated model. MacCallum et al. (1996) used an RMSEA of .08 for the alternative hypothesis and estimated the power of the likelihood ratio test to reject close fit. Similarly, we could use $\tilde{r}_{\delta^{**}} = .08$ to define δ** and then estimate the power of TML to reject the null hypothesis of a small difference in fit between nested models.
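Going in the other direction, (9) turns a chosen reference RMSEA into the discrepancy itself, δC = ν2 times the squared reference RMSEA. The R sketch below converts the values .05 and .08 discussed above into δ* and δ** for ν2 = 22, the illustrative value used in Figure 1. The helper name `delta_from_ref` is hypothetical.

```r
# Inverse use of Eq. (9): discrepancy defined by a chosen reference RMSEA.
delta_from_ref <- function(r_tilde, nu2) nu2 * r_tilde^2

nu2 <- 22                                  # illustrative df, as in Figure 1
delta_star  <- delta_from_ref(0.05, nu2)   # null hypothesis: 0.055
delta_2star <- delta_from_ref(0.08, nu2)   # alternative: 0.1408
stopifnot(delta_2star > delta_star)        # required for this power analysis
c(delta_star = delta_star, delta_2star = delta_2star)
```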

We emphasize that the cutoff values borrowed above from overall model evaluation are merely guidelines, and we do not insist on any single RMSEA value for all situations. For example, values below .05, such as the .033 or .039 mentioned above, can also be selected as $\tilde{r}_{\delta^*}$ for model comparison. MacCallum et al. (2006) provided a useful discussion of how the cutoff criteria for overall model evaluation may not consistently yield valid conclusions in practice. In sum, the choice of $\tilde{r}_C$ for evaluating model differences and for power analysis still depends on investigators' judgment for their particular application. Whatever values they choose, however, our method remains valid and does not conflict with their choices.

Another point worth noting is that, as Figure 1 shows, the vertical distance between Line 0 and any other equi-discrepancy line always shrinks as r1C increases. Substantively, this means that, for the same tolerable discrepancy between two nested models, the model comparison in (8) allows more restriction or parsimony (larger r2 − r1) when the general model contains less misspecification (smaller r1). Conversely, it allows less restriction (smaller r2 − r1) when the general model is less trustworthy (larger r1). Although the values of r1 and r2 are unknown, the model comparison in (8) automatically sets a corresponding standard for r1 and r2 through the equi-discrepancy lines.
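The shrinking distance can be checked numerically. The R sketch below computes, for several values of r1, the vertical gap between Line 0 and the equi-discrepancy line whose reference RMSEA is .05, with ν1 = 20 and ν2 = 22 as in Figure 1. The function name `gap_to_line0` is hypothetical.

```r
# Vertical gap between Line 0 (delta_C = 0) and the equi-discrepancy line
# with delta_C = nu2 * r_tilde^2, evaluated at several values of r1.
gap_to_line0 <- function(r1, r_tilde, nu1, nu2) {
  deltaC <- nu2 * r_tilde^2
  sqrt((deltaC + nu1 * r1^2) / nu2) - sqrt(nu1 * r1^2 / nu2)
}

r1 <- seq(0, 0.10, by = 0.02)
g <- gap_to_line0(r1, r_tilde = 0.05, nu1 = 20, nu2 = 22)
round(g, 4)   # starts at .05 when r1 = 0 and decreases as r1 grows
```

The decrease follows because √(a + b) − √b falls as b grows for fixed a > 0.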

Relevant Developments

The equi-discrepancy line above is defined by an infinite set of alternatives that are equivalent to an arbitrary pair of RMSEA values. The equivalence of infinitely many RMSEA pairs is not an entirely new idea. MacCallum, Lee, and Browne (2010) used an infinite set of alternatives to an arbitrary RMSEA pair to define their isopower contour. For any RMSEA pair on an isopower contour, the power of the likelihood ratio statistic to reject overall model fit (either exact or close fit) is the same. The isopower contours in their Figures 9 and 10 look similar to our equi-discrepancy lines in Figure 1.

However, we need to point out that the equivalence of their infinite alternatives is defined by a power calculation which requires (among other things) a choice of discrepancy function, a test statistic, the distribution of the test statistic under the null hypothesis, the distribution of the test statistic under the alternative hypothesis, sample size N, and a choice of α. Each point on the isopower contour represents the power of a statistic for model overall evaluation. On the other hand, the equivalence of our infinite alternatives requires none of these and is defined by (7) only. The points on our equi-discrepancy lines do not yield a plot for the power of a test statistic without further information.1 Although coming from different directions, the idea of the equivalence of infinitely many RMSEA pairs is shared by the two studies and may be applicable to other SEM contexts beyond these studies in the future.

Comparison and Application to Issues in SEM

Our method quantifies the RMSEA pairs chosen for the model comparison and power analyses proposed by MacCallum et al. (2006), and it allows discrepancies defined by different RMSEA pairs to be compared quantitatively on a common metric. Under our method, the choice of a single $\tilde{r}_C$ replaces the choice of an RMSEA pair (r1C, r2C) for these model comparisons and power analyses. In this section, we use our method to reexamine the RMSEA pairs chosen in the examples of MacCallum et al. (2006) and apply our choice of $\tilde{r}_C$ to issues in SEM.

Quantification of the RMSEA pair choices

MacCallum et al. (2006) suggested using a large set of pairs of r1C and r2C within a reasonable range to define δ or δ** for power analysis. For example, in their Tables 1 and 2, with ν1 = 20 and ν2 = 22, MacCallum et al. (2006) selected r1δ from .03 to .09 and r2δ from .04 to .10 to define δ in the alternative hypothesis. They then estimated the power, or minimum N, of TML to reject the null hypothesis of no difference in fit between nested models. We apply our method to compare these choices, using (9) to calculate the $\tilde{r}_C$ values for the pairs of r1δ and r2δ in their tables. The R code used for the calculation is given in the Appendix, and the calculated $\tilde{r}_C$ values are presented in our Table 1.

Table 1.

Values of $\tilde{r}_C$ for the selected pairs of r1C and r2C with ν1 = 20 and ν2 = 22.

| r2C \ r1C | .03 | .04 | .05 | .06 | .07 | .08 | .09 |
|---|---|---|---|---|---|---|---|
| .04 | .028 | | | | | | |
| .05 | .041 | .032 | | | | | |
| .06 | .053 | .046 | .036 | | | | |
| .07 | .064 | .059 | .051 | .040 | | | |
| .08 | .075 | .070 | .064 | .056 | .044 | | |
| .09 | .085 | .082 | .076 | .069 | .060 | .048 | |
| .10 | .096 | .092 | .088 | .082 | .074 | .065 | .051 |

In our Table 1, eight pairs of r1δ and r2δ have $\tilde{r}_C$ values below .05, while the other twenty pairs have $\tilde{r}_C$ values above .05. By our method, eight of the pairs used by MacCallum et al. (2006) in this power analysis may represent a discrepancy between a close-fitting model and the saturated model, while the other twenty may represent a larger discrepancy. Furthermore, with the same ν1 and ν2, pairs at the higher end of the RMSEA scale, such as (.08, .10), have larger $\tilde{r}_C$ values than pairs at the lower end with the same difference between r1δ and r2δ, such as (.03, .05) or (.04, .06). The higher pairs therefore represent larger discrepancies. This in turn accounts for the increasing statistical power to detect a model difference, or the decreasing minimum N required for the same power, along the diagonal bands of Tables 1 and 2 in MacCallum et al. (2006).

The choice of $\tilde{r}_C$ for issues in SEM

In this article, we recommend choosing $\tilde{r}_C$ in place of RMSEA pairs to define δ, δ*, and δ** for model comparison and power analysis. MacCallum et al. (2006) selected r1δ = .04 and r2δ = .06 to define δ in the alternative hypothesis H1 : F2 − F1 = δ and conducted power analyses for two published empirical studies (Manne & Glassman, 2000; Sadler & Woody, 2003). In Sadler and Woody (2003), ν1 = 24 and ν2 = 27; in Manne and Glassman (2000), ν1 = 19 and ν2 = 26. Suppose instead that we choose the single value $\tilde{r}_\delta = .05$ to define δ for power analysis. By (9), δ = 27 × .05² = .0675 for Sadler and Woody (2003) and δ = 26 × .05² = .065 for Manne and Glassman (2000). Given these δs, we can calculate the power of TML to reject H0 : F2 − F1 = 0 in each study. As mentioned before, TML has an approximate central χ² distribution under H0 : F2 − F1 = 0 and an approximate noncentral χ² distribution under H1 : F2 − F1 = δ. With α = .05, the power is the probability that TML exceeds the critical value $\chi^2_{.95}(\nu_2 - \nu_1)$ under H1 : F2 − F1 = δ. In Sadler and Woody (2003), ν2 − ν1 = 3 and N = 112, which gives a power of .62 at α = .05. In Manne and Glassman (2000), ν2 − ν1 = 7 and N = 191, which gives a power of .73. The steps of the power calculation for the two studies are coded in R and given in the Appendix.
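The two power values can be reproduced with a short R sketch, assuming a reference RMSEA of .05 for δ, noncentrality (N − 1)δ, and α = .05 as described above. This is our own sketch, not necessarily the code in the article's Appendix, and `power_no_diff` is a hypothetical name. The text reports .62 and .73.

```r
# Power to reject H0: F2 - F1 = 0, with delta = nu2 * r_tilde^2 from Eq. (9).
power_no_diff <- function(delta, df_diff, N, alpha = 0.05) {
  crit <- qchisq(1 - alpha, df = df_diff)
  pchisq(crit, df = df_diff, ncp = (N - 1) * delta, lower.tail = FALSE)
}

r_tilde_delta <- 0.05
power_sw <- power_no_diff(27 * r_tilde_delta^2, df_diff = 3, N = 112)  # Sadler & Woody
power_mg <- power_no_diff(26 * r_tilde_delta^2, df_diff = 7, N = 191)  # Manne & Glassman
round(c(sadler_woody = power_sw, manne_glassman = power_mg), 2)
```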

Neither Sadler and Woody (2003) nor Manne and Glassman (2000) detected a significant difference between their nested models with TML, and both studies therefore selected the restricted model. Given the power analyses above, the nonsignificance of TML in both studies, as noted by MacCallum et al. (2006), may be due in part to the moderate power to reject. We therefore calculated the minimum N needed to achieve a power of .80. With α = .05, this minimum N is the smallest sample size for which the probability that TML exceeds $\chi^2_{.95}(\nu_2 - \nu_1)$ under H1 : F2 − F1 = δ is at least .80. For Sadler and Woody (2003), with ν2 − ν1 = 3 and δ defined by $\tilde{r}_\delta = .05$ above, this minimum N is 163. For Manne and Glassman (2000), with ν2 − ν1 = 7 and the same $\tilde{r}_\delta$, the minimum N is 222. The steps for calculating these minimum Ns are coded in R and given in the Appendix. As in MacCallum et al. (2006), these minimum Ns suggest that both studies would need additional subjects for TML to reach a power of .80.
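A simple way to find the minimum N is to increase N until the power first reaches .80. The R sketch below does this with a linear search under the same assumptions as the power calculation (noncentrality (N − 1)δ, α = .05). It is our own sketch, not the Appendix code, and `min_N_no_diff` is a hypothetical name. The text reports 163 and 222.

```r
# Smallest N whose power to reject H0: F2 - F1 = 0 reaches `target`.
min_N_no_diff <- function(delta, df_diff, target = 0.80, alpha = 0.05,
                          N_max = 100000) {
  crit <- qchisq(1 - alpha, df = df_diff)
  for (N in 2:N_max) {
    pw <- pchisq(crit, df = df_diff, ncp = (N - 1) * delta, lower.tail = FALSE)
    if (pw >= target) return(N)
  }
  NA_integer_   # target not reached within N_max
}

N_sw <- min_N_no_diff(27 * 0.05^2, df_diff = 3)  # Sadler & Woody
N_mg <- min_N_no_diff(26 * 0.05^2, df_diff = 7)  # Manne & Glassman
c(sadler_woody = N_sw, manne_glassman = N_mg)
```

A bisection search would be faster for large N, but the linear search keeps the "first N that reaches the target" definition explicit.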

We showed above that when δ* in H0 : F2 − F1 ≤ δ* is defined by r1δ* = .05 and r2δ* = .06, as in MacCallum et al. (2006), the corresponding $\tilde{r}_{\delta^*}$ equals .039 for Joireman et al. (2003) and .033 for Dunkley et al. (2003). Now we instead select a single value, for example $\tilde{r}_{\delta^*} = .033$, to define δ*. By (9), δ* = 12 × .033² = .013 for Joireman et al. (2003). With ν2 − ν1 = 2, α = .05, and N = 154, the critical value for rejecting H0 : F2 − F1 ≤ δ* is $\chi^2_{.95}(2, n\delta^*)$, the 95th percentile of the noncentral χ² distribution with 2 degrees of freedom and noncentrality parameter nδ*. The observed value TML = 19.13 yields p < .05, so the null hypothesis of a small difference in fit is rejected for this study. For Dunkley et al. (2003), δ* = 338 × .033² = .368 by (9). With ν2 − ν1 = 1, α = .05, and N = 163, the critical value is $\chi^2_{.95}(1, n\delta^*)$. The observed value TML = 3.84 yields p > .99, so the null hypothesis of a small difference in fit is retained. The steps for calculating the critical values and p-values are coded in R and given in the Appendix.
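Both tests can be sketched in R using the reference RMSEA of .033 for δ*, the observed TML values, and the sample sizes given in the text. This is our own sketch with the hypothetical helper `test_small_diff`, not the article's Appendix code. It prints the noncentral critical values that the text describes only symbolically.

```r
# Test of H0: F2 - F1 <= delta_star with noncentral chi-square reference.
test_small_diff <- function(T_ml, df_diff, N, delta_star, alpha = 0.05) {
  ncp0 <- (N - 1) * delta_star
  list(critical = qchisq(1 - alpha, df = df_diff, ncp = ncp0),
       p_value  = pchisq(T_ml, df = df_diff, ncp = ncp0, lower.tail = FALSE))
}

r_tilde_star <- 0.033
joireman <- test_small_diff(19.13, df_diff = 2, N = 154, delta_star = 12 * r_tilde_star^2)
dunkley  <- test_small_diff(3.84,  df_diff = 1, N = 163, delta_star = 338 * r_tilde_star^2)
str(list(joireman = joireman, dunkley = dunkley))
```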

Both Joireman et al. (2003) and Dunkley et al. (2003) found TML to be significant when comparing a general model with a more parsimonious and interpretable model under the traditional approach. Joireman et al. (2003) accordingly selected the general model, whereas Dunkley et al. (2003) selected the parsimonious model despite the significant TML. Our model comparison under the null hypothesis of a small difference in fit agrees with the traditional approach in supporting the general model for Joireman et al. (2003). For Dunkley et al. (2003), however, it departs from the traditional approach and supports the parsimonious model, which is the model the authors chose.

A related issue for the model comparisons above is the power of TML to reject the null hypothesis of a small difference in fit. In their example with ν1 = 20 and ν2 = 22, MacCallum et al. (2006) selected r1δ** = .05 and r2δ** = .08 to define δ** in the alternative hypothesis H1 : F2 − F1 = δ**. From our Table 1, the corresponding $\tilde{r}_C$ is .064, so we set $\tilde{r}_{\delta^{**}} = .064$ to define δ** for our power analysis. By (9), δ** = 12 × .064² = .049 for Joireman et al. (2003) and δ** = 338 × .064² = 1.384 for Dunkley et al. (2003). Given these δ**s and the δ*s defined by $\tilde{r}_{\delta^*} = .033$ above, we can calculate the power of TML to reject H0 : F2 − F1 ≤ δ* in the two studies. In this case, TML has an approximate noncentral χ² distribution with noncentrality parameter nδ* at the boundary of H0 : F2 − F1 ≤ δ*. Under H1 : F2 − F1 = δ**, it has an approximate noncentral χ² distribution with noncentrality parameter nδ**. With α = .05, the power is the probability that TML exceeds the critical value $\chi^2_{.95}(\nu_2 - \nu_1, n\delta^*)$ under H1 : F2 − F1 = δ**. Our steps of power calculation for the two studies are coded in R and given in the Appendix. The power to reject is .35 for Joireman et al. (2003) and 1 for Dunkley et al. (2003). Note that the competing models in Dunkley et al. (2003) have many degrees of freedom, which may explain the higher power in their study with the same $\tilde{r}_{\delta^{**}}$. The value of $\tilde{r}_{\delta^{**}}$ may be adjusted downward for models with high degrees of freedom (see p. 32 of MacCallum et al., 2006).
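The two power values can be checked with an R sketch that combines the reference RMSEAs .033 (for δ*) and .064 (for δ**) with each study's ν2 and N. This is our own sketch, not the Appendix code, and `power_small_diff` is a hypothetical name. The text reports .35 and 1.

```r
# Power to reject H0: F2 - F1 <= delta_star when F2 - F1 = delta_2star.
power_small_diff <- function(delta_star, delta_2star, df_diff, N, alpha = 0.05) {
  n <- N - 1
  crit <- qchisq(1 - alpha, df = df_diff, ncp = n * delta_star)
  pchisq(crit, df = df_diff, ncp = n * delta_2star, lower.tail = FALSE)
}

r_star <- 0.033   # defines delta*  via Eq. (9)
r_2star <- 0.064  # defines delta** via Eq. (9)
power_j <- power_small_diff(12 * r_star^2,  12 * r_2star^2,  df_diff = 2, N = 154)
power_d <- power_small_diff(338 * r_star^2, 338 * r_2star^2, df_diff = 1, N = 163)
round(c(joireman = power_j, dunkley = power_d), 2)
```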

Our power analysis results support the selection of the general model for Joireman et al. (2003) and the restricted model for Dunkley et al. (2003). In Joireman et al. (2003), the observed TML is significant even though the power is only .35. In contrast, the observed TML in Dunkley et al. (2003) is nonsignificant even though the power is 1. Given the low power in Joireman et al. (2003), we calculated the minimum N for TML to reach a power of .80 in that study. The steps for this calculation are coded in R and given in the Appendix. This minimum N is 555, so considerably more subjects than the 154 in the study would be needed to attain the desired power.
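The minimum N for the small-difference test can be sketched in R by searching over N. At each step the sketch recomputes both the noncentral critical value at δ* and the power at δ**. This is our own sketch, not the Appendix code; the helper names are hypothetical, and the text reports 555.

```r
power_small_diff <- function(delta_star, delta_2star, df_diff, N, alpha = 0.05) {
  n <- N - 1
  crit <- qchisq(1 - alpha, df = df_diff, ncp = n * delta_star)
  pchisq(crit, df = df_diff, ncp = n * delta_2star, lower.tail = FALSE)
}

# Smallest N whose power reaches `target` (linear search).
min_N_small_diff <- function(delta_star, delta_2star, df_diff, target = 0.80,
                             alpha = 0.05, N_max = 100000) {
  for (N in 2:N_max) {
    if (power_small_diff(delta_star, delta_2star, df_diff, N, alpha) >= target) {
      return(N)
    }
  }
  NA_integer_   # target not reached within N_max
}

N_min <- min_N_small_diff(12 * 0.033^2, 12 * 0.064^2, df_diff = 2)  # Joireman et al.
N_min
```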

Discussion and Recommendations

In this article, we followed in the footsteps of MacCallum, Browne, and Cai (2006) and developed a new method for model comparison and power analysis that is consistent with their approach. In this development, we defined an equi-discrepancy line by equation (7). The equi-discrepancy line reminds investigators that their chosen RMSEA pair always has an infinite set of alternatives that define the same null or alternative hypothesis for model comparison and power analysis as in MacCallum et al. (2006). With these alternatives, testing no difference or a small difference between nested models can be understood graphically. It amounts to testing whether the true RMSEA pair of the nested models falls on Line 0 or in the region between Line 0 and the equi-discrepancy line in Figure 1.

Among these alternatives, we identified a unique RMSEA pair at the intersection of the equi-discrepancy line and the vertical axis in Figure 1. Through this pair, any chosen RMSEA pair can be quantified as the RMSEA value of a reference SEM model, so investigators' choices of RMSEA pairs for model comparison and power analysis can be compared quantitatively. This comparison, as in our Table 1, also reminds investigators of an important point about RMSEA pairs at different parts of the RMSEA scale, for example, (.03, .05) vs. (.08, .10) in our Table 1. Such pairs represent different discrepancies for model comparison and have different implications for power analysis, even though the two RMSEA values in each pair differ by the same amount.

More importantly, given this quantification, we recommend replacing the choice of RMSEA pairs in MacCallum et al. (2006) with the choice of a single RMSEA value. For model comparison and power analysis following MacCallum et al. (2006), this single RMSEA value eliminates the differential meaning of RMSEA pairs inherent in the original approach. It also bridges the two branches of SEM model evaluation, overall model evaluation and model difference evaluation. This makes it possible to transfer the criteria established for overall model evaluation to the evaluation of, and power analysis for, model differences in SEM.

Accordingly, in the procedure illustrated in the examples above, we propose first choosing reasonable RMSEA values to define the null and alternative hypotheses for model comparison and power analysis as in MacCallum et al. (2006). These choices can be based on established criteria for overall model evaluation (e.g., Browne & Cudeck, 1993; MacCallum et al., 1996) or on other values judged appropriate by the investigators. Once these defining RMSEA values are chosen, model comparison and power analysis as in MacCallum et al. (2006) can be conducted under the corresponding null and alternative hypotheses, as illustrated in our examples above.

Of course, as a follow-up to MacCallum et al. (2006), our proposed method inevitably inherits many of the issues they encountered. These include the assumption that TML follows a central or noncentral χ² distribution, estimation methods other than the ML discrepancy function, the limitations of RMSEA cutoff criteria in practice, and alternative approaches to model comparison (e.g., Satorra & Saris, 1985). We refer readers to MacCallum et al. (2006) for a thorough discussion of these issues within the general framework. In addition, for multisample SEM studies with constraints across groups, Steiger (1998) proposed a modified definition of RMSEA: $r_1 = \sqrt{G F_1/\nu_1}$ and $r_2 = \sqrt{G F_2/\nu_2}$ for M1 and M2, respectively, where G is the number of groups. Under this definition, if the right side of (2), (4), (5), or (6) is divided by G, our proposed method remains valid for defining the value of δC for model comparison and power analysis as in MacCallum et al. (2006). In this case, however, RMSEA no longer has the interpretation of discrepancy per degree of freedom, as pointed out by MacCallum et al. (2006).
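The multisample adjustment can be illustrated with a round trip in R. Starting from hypothetical population values F1 and F2, the sketch builds RMSEAs with the √G-scaled definition given above, then checks that dividing the right side of (6) by G recovers F2 − F1. The helper name and all input values are illustrative assumptions.

```r
# Multigroup version of Eq. (6): divide the single-group expression by G.
delta_from_pair_mg <- function(r1, r2, nu1, nu2, G) (nu2 * r2^2 - nu1 * r1^2) / G

# Hypothetical population values for a two-group analysis
G <- 2; nu1 <- 20; nu2 <- 22; F1 <- 0.10; F2 <- 0.15
r1 <- sqrt(G * F1 / nu1)   # multigroup RMSEA of M1
r2 <- sqrt(G * F2 / nu2)   # multigroup RMSEA of M2
delta_from_pair_mg(r1, r2, nu1, nu2, G)   # recovers F2 - F1 = 0.05
```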

MacCallum et al. (2006) pointed out that their framework can be generalized to fit indices other than RMSEA. Correspondingly, our developments also apply to those fit indices. For example, let γ1C and γ2C denote the values of Steiger's (1989) γ chosen for M1 and M2, respectively. Then δC can be defined as δC = (p/2) · (1/γ2C − 1/γ1C), where p is the number of observed variables (see Kim, 2005). Similarly, let Mc1C and Mc2C denote the values of McDonald's (1989) fit index chosen for M1 and M2, respectively. Then δC can be defined as δC = −2log(Mc2C) + 2log(Mc1C) (see Kim, 2005). Following the same logic as for RMSEA in (7) and (9), we obtain

$$\frac{1}{\gamma_{2C}} - \frac{1}{\gamma_{1C}} = \frac{1}{\gamma_{2C}'} - \frac{1}{\gamma_{1C}'} = \frac{1}{\gamma_{R}} - 1$$

and

$$\log(Mc_{1C}) - \log(Mc_{2C}) = \log(Mc_{1C}') - \log(Mc_{2C}') = -\log(Mc_{R}),$$

where γ1C′ and γ2C′ constitute infinite alternatives to γ1C and γ2C, Mc1C′ and Mc2C′ constitute infinite alternatives to Mc1C and Mc2C, and γR and McR are the corresponding values of Steiger's γ and McDonald's fit index for the reference model. Again, these infinite alternatives trace out different equi-discrepancy lines. By these equations, the choice of γR and McR can replace the choice of pairs of Steiger's γ and McDonald's fit index values to define δ, δ*, and δ** in the null and alternative hypotheses for model comparison and power analysis. Similarly, conventional cutoff values of these fit indices (e.g., Hu & Bentler, 1999) can be used to choose γR or McR for model comparison and power analysis.
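The two δC definitions for Steiger's γ and McDonald's index can be checked numerically. The R sketch below assumes the population forms γ = p/(p + 2F) and Mc = exp(−F/2), which are the inversions implied by the δC formulas in the text, and verifies that both recover F2 − F1 for arbitrary illustrative discrepancies. Treating the reference model as one in which M1 fits perfectly (γ = Mc = 1) is an assumption made here for illustration, and all names are hypothetical.

```r
# Hypothetical helpers, assuming gamma = p / (p + 2F) and Mc = exp(-F / 2),
# where F is the population ML discrepancy and p the number of observed variables.
F_from_gamma <- function(g, p) (p / 2) * (1 / g - 1)
F_from_mc    <- function(mc) -2 * log(mc)

# delta_C from a chosen pair of index values (formulas from the text)
delta_gamma <- function(g1C, g2C, p) (p / 2) * (1 / g2C - 1 / g1C)
delta_mc    <- function(mc1C, mc2C) -2 * log(mc2C) + 2 * log(mc1C)

# Illustrative check with assumed discrepancies F1 = .10, F2 = .25, p = 12
p  <- 12
F1 <- .10; F2 <- .25
g1 <- p / (p + 2 * F1); g2 <- p / (p + 2 * F2)
m1 <- exp(-F1 / 2);     m2 <- exp(-F2 / 2)
print(c(gamma = delta_gamma(g1, g2, p), mc = delta_mc(m1, m2),
        F2_minus_F1 = F2 - F1))

# Assumed reference values (M1 fitting perfectly) that define the same delta
gR  <- p / (p + 2 * (F2 - F1))
McR <- exp(-(F2 - F1) / 2)
```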

Acknowledgments

This research was supported in part by grants DA00017, DA01070, and P30 DA016383 from the National Institute on Drug Abuse. Part of this article was presented at the 2008 annual International Meeting of the Psychometric Society, Durham, NH. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Drug Abuse.

Appendix


The R code to calculate the values for the pairs of r1δ and r2δ selected by MacCallum, Browne, and Cai (2006) in their Table 1 and Table 2:

```r
dfM1 <- 20                   # degrees of freedom of M1
dfM2 <- 22                   # degrees of freedom of M2
r1 <- seq(.03, .09, .01)     # RMSEA choices for M1
r2 <- r1 + .01               # RMSEA choices for M2
Fn <- matrix(0, length(r2), length(r1))
for (i in 1:length(r2)) {
  for (j in 1:i) {
    Fn[i, j] <- sqrt((r2[i]^2 * dfM2 - r1[j]^2 * dfM1) / dfM2)
  }
}
print(Fn, digits = 2)
```

The R code to calculate the power of TML to reject H0 : F2 − F1 = 0 when H1 : F2 − F1 = δ holds, for Sadler and Woody (2003):

```r
n <- 111                                    # sample size - 1
dfM1 <- 24                                  # degrees of freedom of M1
dfM2 <- 27                                  # degrees of freedom of M2
delta <- dfM2 * .05^2                       # define delta in the alternative hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1)   # critical value under the null hypothesis
power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta)
print(power, digits = 2)
```

For Manne and Glassman (2000):

```r
n <- 190                                    # sample size - 1
dfM1 <- 19                                  # degrees of freedom of M1
dfM2 <- 26                                  # degrees of freedom of M2
delta <- dfM2 * .05^2                       # define delta in the alternative hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1)   # critical value under the null hypothesis
power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta)
print(power, digits = 2)
```

The R code to calculate the minimum N for TML to achieve the .80 power of rejecting H0 : F2 − F1 = 0 in Sadler and Woody (2003):

```r
dfM1 <- 24                                  # degrees of freedom of M1
dfM2 <- 27                                  # degrees of freedom of M2
delta <- dfM2 * .05^2                       # define delta in the alternative hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1)   # critical value under the null hypothesis
for (n in 1:100000) {
  power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta)
  if (power >= .80) break                   # desired power reached
}
N <- n + 1
print(N)
```

For Manne and Glassman (2000):

```r
dfM1 <- 19                                  # degrees of freedom of M1
dfM2 <- 26                                  # degrees of freedom of M2
delta <- dfM2 * .05^2                       # define delta in the alternative hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1)   # critical value under the null hypothesis
for (n in 1:100000) {
  power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta)
  if (power >= .80) break                   # desired power reached
}
N <- n + 1
print(N)
```

The R code to calculate the p-value for TML to reject H0 : F2 − F1 ≤ δ* in Joireman, Anderson, and Strathman (2003):

```r
Tml <- 19.13                                # observed value of the likelihood ratio test
n <- 153                                    # sample size - 1
dfM1 <- 10                                  # degrees of freedom of M1
dfM2 <- 12                                  # degrees of freedom of M2
delta <- dfM2 * .033^2                      # define delta* in the null hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1, ncp = n * delta)   # critical value under H0
pvalue <- 1 - pchisq(Tml, df = dfM2 - dfM1, ncp = n * delta)
print(cv, digits = 4)
print(pvalue, digits = 2)
```

For Dunkley, Zuroff, and Blankstein (2003):

```r
Tml <- 3.84                                 # observed value of the likelihood ratio test
n <- 162                                    # sample size - 1
dfM1 <- 337                                 # degrees of freedom of M1
dfM2 <- 338                                 # degrees of freedom of M2
delta <- dfM2 * .033^2                      # define delta* in the null hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1, ncp = n * delta)   # critical value under H0
pvalue <- 1 - pchisq(Tml, df = dfM2 - dfM1, ncp = n * delta)
print(cv, digits = 4)
print(pvalue, digits = 2)
```

The R code to calculate the power of TML to reject H0 : F2 − F1 ≤ δ* for Joireman et al. (2003):

```r
n <- 153                                    # sample size - 1
dfM1 <- 10                                  # degrees of freedom of M1
dfM2 <- 12                                  # degrees of freedom of M2
delta1 <- dfM2 * .033^2                     # define delta* in the null hypothesis
delta2 <- dfM2 * .064^2                     # define delta** in the alternative hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1, ncp = n * delta1)  # critical value under H0
power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta2)
print(power, digits = 2)
```

For Dunkley, Zuroff, and Blankstein (2003):

```r
n <- 162                                    # sample size - 1
dfM1 <- 337                                 # degrees of freedom of M1
dfM2 <- 338                                 # degrees of freedom of M2
delta1 <- dfM2 * .033^2                     # define delta* in the null hypothesis
delta2 <- dfM2 * .064^2                     # define delta** in the alternative hypothesis
alpha <- .05
cv <- qchisq(1 - alpha, df = dfM2 - dfM1, ncp = n * delta1)  # critical value under H0
power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta2)
print(power, digits = 2)
```

The R code to calculate the minimum N for TML to achieve the .80 power of rejecting H0 : F2 − F1 ≤ δ* in Joireman et al. (2003):

```r
dfM1 <- 10                                  # degrees of freedom of M1
dfM2 <- 12                                  # degrees of freedom of M2
delta1 <- dfM2 * .033^2                     # define delta* in the null hypothesis
delta2 <- dfM2 * .064^2                     # define delta** in the alternative hypothesis
alpha <- .05
for (n in 1:100000) {
  cv <- qchisq(1 - alpha, df = dfM2 - dfM1, ncp = n * delta1)  # critical value under H0
  power <- 1 - pchisq(cv, df = dfM2 - dfM1, ncp = n * delta2)
  if (power >= .80) break                   # desired power reached
}
N <- n + 1
print(N)
```

The difference between the isopower contour of MacCallum, Lee and Browne (2010) and our equi-discrepancy line:

The purpose of the description below is to help researchers distinguish these two concepts and to facilitate the application of both. The first difference we want to point out is that the context of the isopower contour of MacCallum et al. (2010) is model overall evaluation (exact or close fit), whereas our equi-discrepancy line applies to the comparison of any pair of nested SEM models. For further illustration, we consider the special situation in which the less restricted model M1 is a saturated model with ν1 = 0, F1 = 0, and r1 = 0, and the more restricted model M2 has, for example, ν2 = 20 degrees of freedom, as assumed in Figure 9 of MacCallum et al. (2010).[2] Note that the isopower contour and the equi-discrepancy line are now discussed in the same context: overall evaluation of M2.

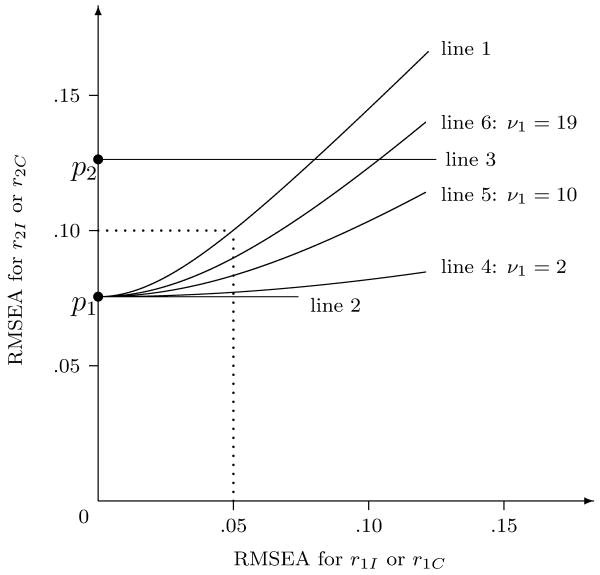

Let (r1I, r2I) be a pair of RMSEA values that defines the null hypothesis H0 : r2 ≤ r1I and its alternative H1 : r2 = r2I for overall evaluation of M2, as in MacCallum et al. (2010). Given α and sample size N, the central or noncentral χ2 distributions of TML under H0 and H1 can be determined, and the power of TML to reject H0 under H1 can be calculated. MacCallum et al. (2010) pointed out that TML reaches the same rejection power when H0 and H1 are defined by any other RMSEA pair among an infinite number of isopower alternatives to (r1I, r2I). Let α = .05, N = 200, r1I = .05, and r2I = .10, as in Figure 9 of MacCallum et al. (2010). We calculated some of these isopower alternatives and replicated the isopower contour of their Figure 9 as Line 1 in our Figure 2.[3] The rejection power of TML under any H0 and H1 defined by points on Line 1 is the same, .84.
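The isopower idea can be sketched in R. Assuming, as in MacCallum et al. (1996), that TML is noncentral chi-square with ν2 degrees of freedom and noncentrality n ν2 r² (n = N − 1), the function below computes the power for a given (r1I, r2I). Base R's `uniroot` then finds the r2I that, paired with a different r1I, gives the same power, i.e., one more point on the isopower contour. The function name and the choice r1I = .03 are illustrative assumptions.

```r
# Power of TML for H0: r2 <= r1I vs H1: r2 = r2I (overall evaluation of M2),
# assuming noncentrality n * nu * r^2 with n = N - 1
close_fit_power <- function(r1I, r2I, nu, N, alpha = .05) {
  n  <- N - 1
  cv <- qchisq(1 - alpha, df = nu, ncp = n * nu * r1I^2)   # critical value under H0
  1 - pchisq(cv, df = nu, ncp = n * nu * r2I^2)            # power under H1
}

nu <- 20; N <- 200
target <- close_fit_power(.05, .10, nu, N)
print(target, digits = 2)

# One isopower alternative: for another r1I, solve for the r2I with equal power
r1_alt <- .03
r2_alt <- uniroot(function(r2) close_fit_power(r1_alt, r2, nu, N) - target,
                  lower = r1_alt, upper = 1, tol = 1e-10)$root
print(c(r1I = r1_alt, r2I = r2_alt), digits = 3)
```

Repeating the `uniroot` step over a grid of r1I values traces the whole contour; each point requires N and α, which is what makes it a statement about power.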

For model comparison and power analysis by our method, r1C and r2C are selected for M1 and M2, respectively, to define δ in H1 : F2 − F1 = δ, δ* in H0 : F2 − F1 ≤ δ*, or δ** in H1 : F2 − F1 = δ**. According to MacCallum et al. (2006), r1C and r2C should be reasonable RMSEA values representing the misspecification of the two nested models. When M1 is a saturated model with ν1 = 0, F1 = 0, and r1 = 0, the only reasonable choice for r1C (and its alternative r1C′) is zero. Correspondingly, r2C and its alternative r2C′ must be equal by the definition of the equi-discrepancy line in (7). Consequently, in the context of overall evaluation of M2 as in MacCallum et al. (2010), our equi-discrepancy line is no longer a line but reduces to a point on the vertical axis in Figure 2. For example, when .076 or .126 is selected for r2C, our equi-discrepancy line reduces to the point p1 or p2 in Figure 2, respectively.

In fact, even if r1C or r1C′ is not restricted to zero and can take any positive value, r2C and r2C′ must still be equal to satisfy (7), because M1 is a saturated model with ν1 = 0. For example, when ν1 = 0, ν2 = 20, and the point p1 = (.00, .076) is selected as (r1C, r2C), r2C′ is always .076 by (7), regardless of the value of r1C′. The equi-discrepancy line in this case is therefore Line 2 in Figure 2, a straight line parallel to the horizontal axis. Similarly, when the point (.08, .126) is selected as (r1C, r2C), the equi-discrepancy line is Line 3 in Figure 2, which crosses the vertical axis at p2.
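A minimal R sketch of the equi-discrepancy relation, assuming (7) takes the form ν2 r2C² − ν1 r1C² = ν2 r2C′² − ν1 r1C′², solves for r2C′ given any r1C′. With ν1 = 0 the result does not depend on r1C′, which is why Lines 2 and 3 are horizontal. The helper name `equi_r2` is hypothetical.

```r
# Hypothetical helper: r2C' on the equi-discrepancy line through (r1C, r2C),
# assuming delta_C = nu2 * r2C^2 - nu1 * r1C^2 is held constant
equi_r2 <- function(r1_new, r1C, r2C, nu1, nu2) {
  delta_C <- nu2 * r2C^2 - nu1 * r1C^2
  sqrt((delta_C + nu1 * r1_new^2) / nu2)
}

r1_grid <- seq(0, .10, by = .02)
# M1 saturated (nu1 = 0), nu2 = 20: line through p1 = (.00, .076)
line2 <- equi_r2(r1_grid, r1C = 0,   r2C = .076, nu1 = 0, nu2 = 20)
# Line through (.08, .126)
line3 <- equi_r2(r1_grid, r1C = .08, r2C = .126, nu1 = 0, nu2 = 20)
print(rbind(r1 = r1_grid, line2 = line2, line3 = line3), digits = 3)
```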

Furthermore, regardless of the value of (r1C, r2C) or (r1C′, r2C′) on the equi-discrepancy line, equation (7) now reduces to $\delta_C = \nu_2 r_{2C}^2 = \nu_2 {r_{2C}'}^2$ because ν1 = 0 and r2C = r2C′. Given F1 = 0, substituting this δC into the null and alternative hypotheses, H1 : F2 − F1 = δ reduces to H1 : r2 = r2C, H0 : F2 − F1 ≤ δ* reduces to H0 : r2 ≤ r2C, and H1 : F2 − F1 = δ** reduces to H1 : r2 = r2C. Thus, in the context of model overall evaluation, the points (r1C, r2C) and (r1C′, r2C′) on an equi-discrepancy line (if such a line exists) equivalently define either r1I in H0 : r2 ≤ r1I or r2I in H1 : r2 = r2I of MacCallum et al. (2010), but not both.
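The reduction in the paragraph above can be written out step by step. This is a sketch that assumes δC = ν2 r2C² − ν1 r1C² and F2 = ν2 r2² for the population discrepancy of M2; the final equivalences use the fact that RMSEA values are nonnegative.

$$
\begin{aligned}
\nu_1 = 0 \;&\Rightarrow\; \delta_C = \nu_2 r_{2C}^2 - \nu_1 r_{1C}^2 = \nu_2 r_{2C}^2,\\
F_1 = 0,\; F_2 = \nu_2 r_2^2:\quad F_2 - F_1 = \delta_C \;&\Longleftrightarrow\; r_2^2 = r_{2C}^2 \;\Longleftrightarrow\; r_2 = r_{2C},\\
F_2 - F_1 \le \delta_C \;&\Longleftrightarrow\; r_2^2 \le r_{2C}^2 \;\Longleftrightarrow\; r_2 \le r_{2C}.
\end{aligned}
$$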

Finally, when ν1 is positive rather than zero, so that M1 is no longer a saturated model, the isopower contour of MacCallum et al. (2010) does not apply, whereas our equi-discrepancy line still exists. We plotted the equi-discrepancy lines for ν1 = 2, 10, and 19 as Lines 4, 5, and 6 in Figure 2, respectively. These equi-discrepancy lines are curves similar in shape to the isopower contour of MacCallum et al. (2010). However, they cannot coincide with it, because the isopower contour does not exist in this situation. Note also that the points on an equi-discrepancy line can equivalently define the null or alternative hypothesis for model comparison and its related power analysis. Unlike the points on an isopower contour, however, they do not by themselves represent a level of power for any test statistic without further information.
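To see how the equi-discrepancy relation bends once ν1 > 0, the R sketch below evaluates it for ν1 = 2, 10, and 19 with ν2 = 20, assuming δC = ν2 r2C² − ν1 r1C² is held constant. The anchor point (.00, .076) is an assumption chosen for illustration; the anchors used for Lines 4 to 6 of Figure 2 are not stated here. The final check confirms that every point on a curve implies the same δC, which is why the points can equivalently define a hypothesis, while a power value would still require n, α, and the other hypothesis.

```r
# Equi-discrepancy curves for nu2 = 20 and nu1 = 2, 10, 19, assuming
# delta_C = nu2 * r2C^2 - nu1 * r1C^2 and an illustrative anchor (.00, .076)
nu2 <- 20
nu1_values <- c(2, 10, 19)
r1_grid <- seq(0, .15, by = .03)
anchor <- c(r1C = 0, r2C = .076)

curves <- sapply(nu1_values, function(nu1) {
  delta_C <- nu2 * anchor[["r2C"]]^2 - nu1 * anchor[["r1C"]]^2
  sqrt((delta_C + nu1 * r1_grid^2) / nu2)   # r2C' for each r1C'
})
colnames(curves) <- paste0("nu1=", nu1_values)
rownames(curves) <- r1_grid
print(curves, digits = 3)

# delta_C recomputed at every point of each curve (constant along a curve)
deltas <- sapply(seq_along(nu1_values), function(k) {
  nu2 * curves[, k]^2 - nu1_values[k] * r1_grid^2
})
```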

Figure 2.

The equi-discrepancy line vs. the isopower contour with ν2 = 20

Footnotes

[1] To further clarify the difference between the isopower contour and our equi-discrepancy line, please see the Appendix.

[2] We thank James Steiger for this valuable suggestion.

[3] We thank Taehun Lee for providing their SAS code to replicate this isopower contour.

Publisher's Disclaimer: The following manuscript is the final accepted manuscript. It has not been subjected to the final copyediting, fact-checking, and proofreading required for formal publication. It is not the definitive, publisher-authenticated version. The American Psychological Association and its Council of Editors disclaim any responsibility or liabilities for errors or omissions of this manuscript version, any version derived from this manuscript by NIH, or other third parties. The published version is available at www.apa.org/pubs/journals/met

Contributor Information

Libo Li, UCLA Integrated Substance Abuse Programs, University of California, Los Angeles.

Peter M. Bentler, Departments of Psychology and Statistics, University of California, Los Angeles

References

- Browne MW, Cudeck R. Alternative ways of assessing model fit. In: Bollen KA, Long JS, editors. Testing structural equation models. Newbury Park, CA: Sage; 1993. pp. 136–162.

- Dunkley DM, Zuroff DC, Blankstein KR. Self-critical perfectionism and daily affect: Dispositional and situational influences on stress and coping. Journal of Personality and Social Psychology. 2003;84:234–252.

- Hu LT, Bentler PM. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling. 1999;6:1–55.

- Joireman J, Anderson J, Strathman A. The aggression paradox: Understanding links among aggression, sensation seeking, and the consideration of future consequences. Journal of Personality and Social Psychology. 2003;84:1287–1302. doi: 10.1037/0022-3514.84.6.1287.

- Jöreskog KG. Simultaneous factor analysis in several populations. Psychometrika. 1971;36:409–426.

- Kim KH. The relation among fit indexes, power, and sample size in structural equation modeling. Structural Equation Modeling. 2005;12:368–390.

- MacCallum RC, Browne MW, Cai L. Testing differences between nested covariance structure models: Power analysis and null hypotheses. Psychological Methods. 2006;11:19–35. doi: 10.1037/1082-989X.11.1.19.

- MacCallum RC, Browne MW, Sugawara HM. Power analysis and determination of sample size for covariance structure modeling. Psychological Methods. 1996;1:130–149.

- MacCallum RC, Lee T, Browne MW. The issue of isopower in power analysis for tests of structural equation models. Structural Equation Modeling. 2010;17:23–41.

- Manne S, Glassman M. Perceived control, coping efficacy, and avoidance coping as mediators between spouses' unsupportive behaviors and cancer patients' psychological distress. Health Psychology. 2000;19:155–164. doi: 10.1037//0278-6133.19.2.155.

- McDonald RP. An index of goodness-of-fit based on noncentrality. Journal of Classification. 1989;6:97–103.

- Sadler P, Woody E. Is who you are who you're talking to? Interpersonal style and complementarity in mixed-sex interactions. Journal of Personality and Social Psychology. 2003;84:80–96.

- Satorra A, Saris WE. Power of the likelihood ratio test in covariance structure analysis. Psychometrika. 1985;50:83–90.

- Serlin RC, Lapsley DK. Rationality in psychological research: The good-enough principle. American Psychologist. 1985;40:73–83.

- Steiger JH. EzPATH: A supplementary module for SYSTAT and SYGRAPH. Evanston, IL: SYSTAT; 1989.

- Steiger JH. A note on multiple sample extensions of the RMSEA fit index. Structural Equation Modeling. 1998;5:411–419.

- Steiger JH, Lind JC. Statistically-based tests for the number of common factors. Paper presented at the annual meeting of the Psychometric Society; Iowa City, IA. 1980.

- Steiger JH, Shapiro A, Browne MW. On the multivariate asymptotic distribution of sequential chi-square statistics. Psychometrika. 1985;50:253–264.