Abstract

A current topic in neuroscience addresses the link between brain activity and visual awareness. The electroencephalogram (EEG), a non-invasive scalp recording of brain activity with high temporal resolution, is a common tool used to probe this question. EEG recordings, however, are difficult to implement in the curriculum of laboratory-based courses. Thus, undergraduate students often lack experience with EEG experiments.

We report here an EEG program (Virtual EEG) that can be used in undergraduate courses to analyze averaged EEG data, termed Event Related Potentials (ERPs). The program was designed so that students can generate hypothesis-driven studies that address how the brain encodes categories of visual stimuli. The Virtual EEG is built on a large database of 32-channel EEG recordings taken from real human subjects who were shown 256 pictures. The program provides a number of possible ways to group the stimuli. After the student selects the appropriate stimuli, the program constructs graphs of the ERPs, and individual channels can be selected for statistical analysis. Because the program uses real data, students are encouraged to interpret their results in light of previously published work. Thus, students have the opportunity to discover something new about how the brain processes visual information.

This article also includes a tutorial and summarizes the results of an assessment survey. Finally, we include information regarding the companion Virtual EEG website. The Virtual EEG has been used successfully for the past six years at Indiana University with over a thousand undergraduate students in a research methods course, and the assessment results illustrate its strengths and limitations.

Keywords: electroencephalogram (EEG); event related potentials (ERPs); visual processing; object recognition; neuroscience education; laboratory course

Neuroscience techniques such as EEG and fMRI produce large amounts of data that often overwhelm students when they first confront the problem of interpreting their data from an experiment. The chief difficulty seems to lie in the fact that while the experiments are easy to design, it can be difficult to know where in the data one should look to answer a particular research question. Students, however, typically do not arrive at this realization until after they have collected their data, at which point changes to the design are difficult to accomplish. To address these challenges, we developed a virtual environment that allows students to analyze existing EEG data in the form of event related potentials (ERPs). The environment is constrained enough so that we can provide advanced statistical analyses to aid in data interpretation, but flexible enough to allow students to ask questions that are quite likely different from those of other students.

One particular problem sets EEG apart from other imaging techniques. Unlike MRI, EEG provides only coarse spatial information at the level of the individual electrode, which robs it of a natural interpretation in terms of brain regions (Ryynänen et al., 2004). Temporal information, however, is extremely accurate, and to exploit this fact investigators have to come up with questions that play to the strengths of the technology. Researchers must also learn the language of EEG, such as how features are named and identified, in order to make contact with the literature. When working with students on projects with real EEG data, we have noticed that students often undergo an initial stage during which the vastness of the data seems overwhelming: they do not know where to begin their analysis. We try to capitalize on these moments by helping students identify what they do not know. We then make connections with the literature to demonstrate how other researchers have solved this problem. Together, the data and the literature often provide a guide to the eventual answer to the question posed by the student. This process reinforces the concepts that knowledge is cumulative and that interesting research questions are often reached by designing an experiment in such a way that the data make contact with the literature. Not only does this suggest how to analyze the data, since analyses are spelled out in prior articles, but it also helps the interpretation of the results, since the literature provides additional constraints that aid in theory building. The instructional strategy of this framework is based on the goals and principles of problem-based learning, which places students in problem-solving situations (Albanese and Mitchell, 1993; Savery and Duffy, 1996; Schuh and Busey, 2001).

With these issues in mind, we created the Virtual EEG program, a simulated EEG recording environment using research-grade data collected in a picture perception experiment. This program allows students to make mistakes in a painless way, and provides the conceptual knowledge leap that helps them understand that experiments must be designed with the analysis in mind.

To create the program, we recorded data from 33 subjects while they viewed 256 different pictures, each shown four times to each subject. The data are stored in a Java-based program that allows the user to categorize the pictures into groups, to see how and when the brain distinguishes between different categories. This environment has two advantages:

Data analysis is extremely fast, requiring only as long as it takes to generate the graphs. This allows the user to run multiple simulated experiments in a short period.

The results are presented in graphs that are similar to those found in the literature, which allows the user to make connections to the literature and also forces the user to think about how EEG/ERP data can be used to draw conclusions about brain processes.
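The grouping-and-averaging step behind these graphs can be sketched in a few lines. The following is a minimal illustration in Python, not the Virtual EEG's own Java code: the function name `average_erps`, the data layout, and the toy picture names are all hypothetical, and the sketch handles a single channel only.

```python
from collections import defaultdict
from statistics import fmean

def average_erps(trials, categories):
    """Average single-trial epochs into one ERP per user-defined category.

    trials: list of (picture_id, epoch) pairs, where epoch is a list of
        voltages (one per time sample) for a single channel.
    categories: dict mapping category name -> set of picture ids.
    Returns a dict mapping category name -> averaged waveform.
    """
    grouped = defaultdict(list)
    for picture_id, epoch in trials:
        for name, members in categories.items():
            if picture_id in members:
                grouped[name].append(epoch)
    # zip(*epochs) walks the epochs sample by sample, so each fmean call
    # averages one time point across all trials in the category.
    return {name: [fmean(samples) for samples in zip(*epochs)]
            for name, epochs in grouped.items()}

# Toy data (hypothetical): four pictures, three time samples each.
trials = [
    ("dog", [0.0, 2.0, 4.0]),
    ("cat", [2.0, 4.0, 6.0]),
    ("chair", [1.0, 1.0, 1.0]),
    ("lamp", [3.0, 1.0, 5.0]),
]
categories = {"animals": {"dog", "cat"}, "objects": {"chair", "lamp"}}
erps = average_erps(trials, categories)
print(erps["animals"])  # [1.0, 3.0, 5.0]
print(erps["objects"])  # [2.0, 1.0, 3.0]
```

Because the averages are computed from stored epochs, regrouping the pictures only requires re-running the average, which is why a simulated experiment of this kind can be repeated quickly.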

We believe that the relative lack of spatial information in EEG data and an emphasis on making contact with existing literature help students focus on brain mechanisms rather than on brain areas. We have used the Virtual EEG program with over a thousand undergraduates, and have discovered that the program helps students make important connections between experimental design and data analysis. They discover that psychology and neuroscience are comparative sciences, as opposed to sciences like physics, where quantities like the speed of light are measured. Students have asked questions such as “When does the brain first begin to distinguish between animals and people?” and are surprised to discover that perception is not instantaneous, but may take up to a fifth of a second. Students with leanings towards the social sciences have asked questions like “How do men and women differ in how they view positively and negatively arousing pictures?” and “Is arousal level or picture valence represented in the brain?” When properly analyzed, EEG data can address all of these questions, and the ability to ask and answer questions in real time provides students with the opportunity to make mistakes without penalties, to receive feedback from instructors, and to correct these mistakes, all within a two- or three-week unit of a course.

In the sections below, we describe how the data were collected and provide a comprehensive tutorial. We next give a brief overview of the Virtual EEG unit objectives. We conclude with a summary and discussion of the results from an assessment survey.

MATERIALS AND METHODS

Participants

Thirty-three right-handed Indiana University undergraduates (14 male) participated in the study. All had normal or corrected-to-normal vision, and their participation constituted part of their lab work or coursework. All were told the purpose and details of the experiment and gave informed consent to allow their data to be published anonymously according to the procedures of human subject compliance at Indiana University.

Apparatus

The EEG was recorded from 32 channels at 1,000 Hz and down-sampled to 250 Hz. It was amplified by a factor of 20,000 (Sensorium amps) and low-pass filtered at 50 Hz. Signal recording sites included PO7 and PO8, with a nose reference and forehead ground. The 10-10 electrode configuration was used for all data collection. All channels had impedances below 5 kOhm, and recording was done inside a Faraday cage. Data were analyzed using the EEGLab toolbox. Independent component analysis was used to find components that were readily identifiable as artifacts such as eye blinks, eye movements, and muscle activity. The first two types of artifacts were identified with the help of blink and eye-movement calibration trials at the beginning of the experiment as well as their topographical representation on the scalp. Components relating to muscle artifacts were identified by their high-frequency amplitude spectrum and topographical representation. We typically removed between three and eight components for each subject; these were subtracted from the raw EEG to eliminate the artifacts. Images were shown on a 21-inch (53.34 cm) Mitsubishi color monitor (model THZ8155KL) running at 120 Hz. Images were approximately 44 inches (112 cm) from the participant.
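To make the component-subtraction step concrete, the sketch below shows the arithmetic under the standard linear ICA model, in which the recorded EEG is a weighted mixture of component time courses. It is an illustration in Python rather than the EEGLab (MATLAB) routine the analysis actually used; the function name `remove_components` and all numbers are hypothetical.

```python
def remove_components(raw, mixing, activations, artifact_ids):
    """Subtract the back-projection of selected components from raw EEG.

    raw: channels x samples (list of lists).
    mixing: channels x components matrix (scalp weights of each component).
    activations: components x samples matrix (component time courses).
    artifact_ids: indices of components judged to be artifacts.
    """
    cleaned = []
    for ch, row in enumerate(raw):
        cleaned.append([
            value - sum(mixing[ch][k] * activations[k][t] for k in artifact_ids)
            for t, value in enumerate(row)
        ])
    return cleaned

# Toy example (hypothetical numbers): 2 channels, 2 components, 3 samples.
mixing = [[1.0, 2.0],
          [0.5, 1.0]]
activations = [[1.0, 0.0, -1.0],   # component 0: activity of interest
               [0.0, 3.0, 0.0]]    # component 1: blink-like transient
# The raw EEG is the mixture of both components.
raw = [[sum(mixing[ch][k] * activations[k][t] for k in range(2))
        for t in range(3)] for ch in range(2)]
cleaned = remove_components(raw, mixing, activations, artifact_ids=[1])
print(raw)      # [[1.0, 6.0, -1.0], [0.5, 3.0, -0.5]]
print(cleaned)  # [[1.0, 0.0, -1.0], [0.5, 0.0, -0.5]]
```

In the toy data the blink-like transient at the second sample disappears from both channels, while the remaining activity is left untouched.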

Stimuli and Task

Each of the 256 pictures was shown twice per session, and each subject completed two sessions, for a total of four presentations of each picture per subject. The pictures were shown for one second each, after which the participant pressed the ‘1’ key if the subject in the picture could move by itself, and the ‘2’ key if it could not.

TUTORIAL

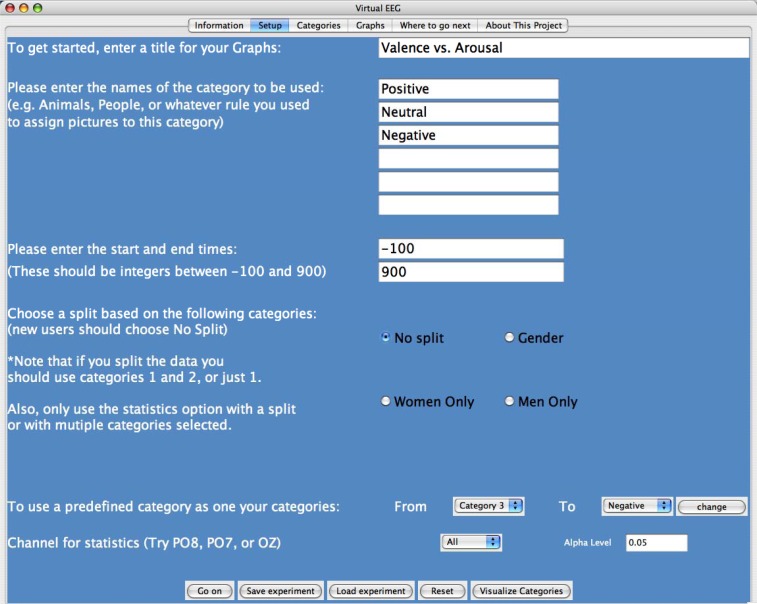

To develop a Virtual EEG experiment, students define up to six categories of pictures which they select based on pre-defined categories or by manually selecting from a set of 256 pictures (Fig. 1). If students choose to select their own categories, they navigate to the next page and browse the list of pictures, adding as many pictures as they like to each category. Pictures include neutral images, emotional images, humans, animals, foods, inanimate objects, upright and inverted faces, images of many colors, and many other images which could be divided in numerous ways across a continuum of dimensions.

Figure 1.

The Virtual EEG setup page. This page allows one to: change the title of the graphics, change category names, change start and stop times (here it is −100 to 900 ms), select gender settings, or select from predefined categories. One can select an EEG channel to run statistics on. There is also an option to change the alpha level for the statistical test to minimize alpha inflation problems.

Students can also look for differences in EEG recordings based on gender. Splitting the data by gender lets them compare the recordings of males and females and answer questions such as, “Do males and females respond differently to emotional images?” If a gender split is selected, students are limited to choosing only two categories. In addition to these parameters, students can change the range of time points they are interested in examining (from 100 ms before the onset of the stimulus up to 900 ms after the stimulus).
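The relationship between the selectable time range and the underlying samples follows from the 250 Hz rate given in the Apparatus section. The Python sketch below works through that arithmetic; it assumes, hypothetically, that each stored epoch begins exactly at −100 ms and includes both endpoints, and the function names are illustrative only.

```python
SAMPLE_RATE_HZ = 250      # rate after down-sampling (Apparatus section)
EPOCH_START_MS = -100     # earliest selectable time relative to stimulus onset
EPOCH_END_MS = 900        # latest selectable time

def ms_to_index(time_ms, start_ms=EPOCH_START_MS, rate_hz=SAMPLE_RATE_HZ):
    """Convert a latency in ms (relative to stimulus onset) to a sample index."""
    return round((time_ms - start_ms) * rate_hz / 1000)

def crop(epoch, from_ms, to_ms):
    """Return the samples of one epoch that fall inside [from_ms, to_ms]."""
    return epoch[ms_to_index(from_ms):ms_to_index(to_ms) + 1]

epoch = list(range(ms_to_index(EPOCH_END_MS) + 1))  # placeholder voltages
print(1000 / SAMPLE_RATE_HZ)        # 4.0 ms between samples
print(ms_to_index(0))               # 25: stimulus onset
print(len(epoch))                   # 251 samples from -100 to 900 ms
print(len(crop(epoch, 100, 300)))   # 51 samples in a 100-300 ms window
```

One practical consequence is that latencies read from the graphs are resolved in 4 ms steps.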

Statistics can be computed in order to test whether (and where) significant differences between conditions occur. Statistics can be performed on either a single channel (fast computation) or multiple channels (slow computation) to reveal significant differences across all 32 channels. For no split, male only, and female only, a one-way ANOVA is conducted with up to six levels (one for each category selected). When a gender split is selected, statistics are computed by a resampling method (Efron and Tibshirani, 1993) that finds the significance level of the interaction term between gender and the two categories (if only one category is selected, the program computes a between-subject t-test at the specified alpha level).
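The text above does not spell out the resampling procedure, so the following Python sketch substitutes a simple label-shuffling permutation test to show how a resampling approach can assess a gender by category interaction at a single time point. It is an assumption-laden illustration, not the program's algorithm: the function names, the number of permutations, and the amplitudes are all hypothetical.

```python
import random
from statistics import fmean

def interaction(diff_scores, is_male):
    """Gender x category interaction: male minus female mean difference score."""
    male = [d for d, m in zip(diff_scores, is_male) if m]
    female = [d for d, m in zip(diff_scores, is_male) if not m]
    return fmean(male) - fmean(female)

def permutation_p(diff_scores, is_male, n_perm=2000, seed=0):
    """Two-sided p-value for the interaction, found by shuffling gender labels."""
    rng = random.Random(seed)
    observed = abs(interaction(diff_scores, is_male))
    labels = list(is_male)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(labels)
        if abs(interaction(diff_scores, labels)) >= observed:
            extreme += 1
    # The add-one correction keeps the estimate away from an impossible p of 0.
    return (extreme + 1) / (n_perm + 1)

# Hypothetical amplitudes (microvolts) at one time point on one channel:
# each value is one subject's category-A ERP minus their category-B ERP.
diff_scores = [2.1, 1.8, 2.5, 2.0, 1.9, 0.2, -0.1, 0.3, 0.0, 0.1]
is_male = [True] * 5 + [False] * 5
p = permutation_p(diff_scores, is_male)
print(round(interaction(diff_scores, is_male), 2), p < 0.05)
```

Working with each subject's difference score reduces the two-category, two-gender design to a comparison of two groups, which is what makes the interaction testable by shuffling the gender labels.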

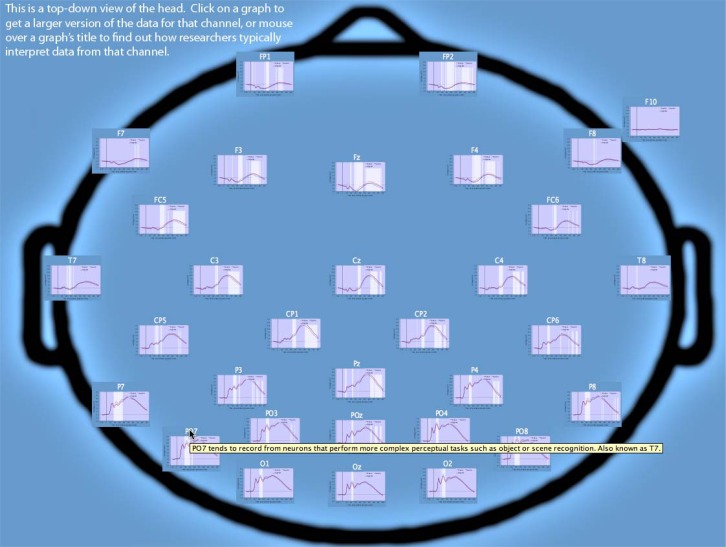

Once students have finished selecting the parameters and categories to analyze, they may click on the “graphs” page in order to view the results of their experiment. Thirty-two channels of data are presented according to their rough spatial location on the scalp (Fig. 2). If the statistics tool was selected, students may also view the time points at which significant differences between conditions were found. Students may click on a graph in order to see a larger version and may easily save graphs as JPEG files (Fig. 3). In addition, students may save the entire experiment so that the same graphs can be regenerated.

Figure 2.

Virtual EEG output of results. Schematic (cranial view) of the electrodes (channels) and their approximate location on the scalp. Each EEG graph can be enlarged individually by clicking on the graph of interest. Once enlarged, the figure can be printed, saved, or altered in many ways. Tooltips (yellow) help students make contact with the literature.

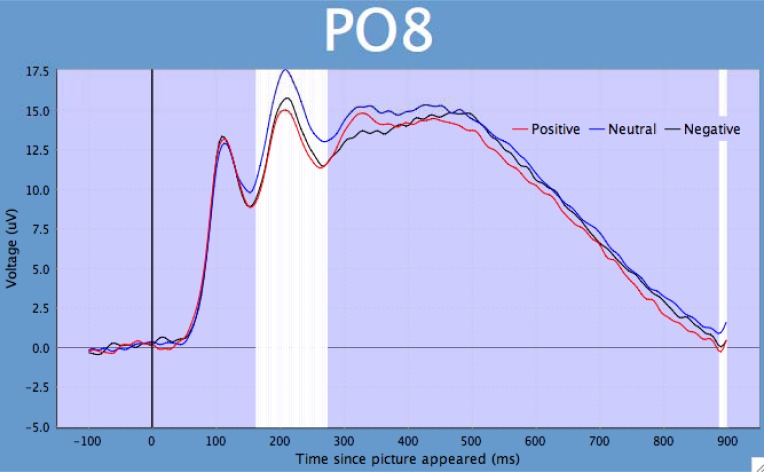

Figure 3.

Example of an enlarged ERP graph from a single electrode (PO8). The x-axis shows time and the y-axis shows voltage. The white vertical bars mark the time points at which the ERP waves differed significantly (p < 0.05). The alpha level can be adjusted by students.
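The adjustable alpha level matters because a separate test is run at every time point. The Python sketch below illustrates the resulting alpha inflation with simple arithmetic. It assumes 251 time points per channel (−100 to 900 ms at 250 Hz, inclusive) and treats the tests as independent, which is a simplification because neighboring time points are correlated; the Bonferroni adjustment is shown only as one familiar option, not as what the program applies.

```python
ALPHA = 0.05
N_TIME_POINTS = 251   # assumed: -100 to 900 ms at 250 Hz, inclusive
N_CHANNELS = 32

def expected_false_positives(alpha, n_tests):
    """Expected number of significant tests when no true effect exists."""
    return alpha * n_tests

def bonferroni_alpha(alpha, n_tests):
    """Per-test alpha that caps the family-wise error rate at `alpha`."""
    return alpha / n_tests

def familywise_error(alpha, n_tests):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests

print(expected_false_positives(ALPHA, N_TIME_POINTS))               # about 12.5 on one channel
print(expected_false_positives(ALPHA, N_TIME_POINTS * N_CHANNELS))  # about 400 over 32 channels
print(familywise_error(ALPHA, 10))                                  # about 0.40 for just 10 tests
print(bonferroni_alpha(ALPHA, N_TIME_POINTS))                       # about 0.0002
```

Isolated significance bars should therefore be read with caution, whereas a sustained run of significant time points is harder to attribute to chance.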

A video-guided Virtual EEG tour presented in QuickTime format is available as supplemental information (S1).

PROJECT AND OBJECTIVES

The Virtual EEG has been implemented as either a two- or three-week unit. One of the goals of the unit is to provide students the opportunity to follow all the steps of the scientific process. Thus, the unit is designed so that students generate hypothesis-driven data by designing meaningful EEG experiments in light of the literature.

The first week of the EEG unit is designed to introduce EEG concepts as well as the basics of brain recording. The idea of the brain as an electrochemical device is at first foreign to many students, but analogies such as oscilloscopes and electrocardiograms help scaffold difficult concepts. Applications such as guilty knowledge tests (known in the popular literature as ‘brain fingerprinting’) also make a compelling case for the technology (Farwell and Donchin, 1991).

Students are then asked to select among several different articles in the literature that focus on related questions, such as the speed of object recognition and the representation of valence and arousal in EEG/ERP data. Because most neuroscience investigations require both control and experimental conditions we reinforce the idea of analyses that involve comparisons between categories of images. Thus, a priori predictions about whether one condition will produce more or less voltage (magnitude) than another are not warranted, but testing where and when differences occur provides information about how fast the brain can distinguish between different categories of images, and which general parts of the brain are responsible for this computation. To get a general feel of what the categories look like, students can click on the ‘visualize categories’ button, which displays the selected pictures under the appropriate category label. Often this not only helps students design their categories, but reveals important confounds as well.

Because each experiment takes at most several minutes to conduct, students are encouraged to try out several different virtual experiments. We note that the statistical displays are turned off by default, which encourages students to focus their attention on observing where and when differences between conditions may occur. Once students find differences, they can go back and re-run the experiment with statistics applied to the channel of interest. Individual graphs can be enlarged with a mouse-click, and students can save these images for their reports.

Because EEG data is complex, the program contains different kinds of help information. For example, the channel labels contain helpful links to both the literature and EEG interpretation, including names for particular components that might represent helpful terms in search engines. Thus, students can try to quickly replicate existing studies using similar stimuli from the Virtual EEG database, and then extend these by looking for gender differences or adding additional categories. Both VanRullen and Thorpe (2001) and Schupp et al. (2003) are examples of articles we have used to illustrate the kinds of questions that one can address using EEG recording. These two articles also provide common definitions used in EEG experiments.

The rapid design/analysis procedure allows for a very tight feedback loop that can be much slower in actual experiments. As a result, mistakes made in this context have little penalty and are quickly corrected. We have found that few of the reports written by students contain the kind of painful last paragraph in which they conduct an obligatory self-flagellation that describes all the problems of their experiment. Instead, most papers provide a comprehensive discussion of where and when differences between conditions occur, and the better papers make an attempt to link these differences with theoretical accounts of brain activity. For example, in an experiment with positive, neutral, and negative conditions, students can ask whether valence (positive vs. negative) has a greater effect on brain activity than arousal (positive vs. neutral). Surprisingly, students find that the brain seems to encode whether something is arousing or not rather than whether it is good or bad. This can be seen in Figure 3, where the difference between positive and negative is relatively small, but the neutral condition differs from the two arousing conditions. Note that because different images were used in each condition, the image groups themselves may have different properties, leading to confounds. For example, neutral images may be visually less complex or less likely to contain human forms. The Visualize Categories button helps students think about whether they see stimulus confounds, and if they do, what pictures to eliminate or include in order to balance the categories on different criteria.

To conclude the unit, students write comprehensive and publication-formatted laboratory reports. We ask them to describe a research question that is motivated by existing literature and that can be used to select categories of images. We provide the methods section of the paper that describes the recording procedures. They interpret their results and include individual graphs of channels that highlight differences between their conditions. We then ask students to draw either temporal conclusions (e.g. how quickly can the brain distinguish between categories) or mechanistic conclusions (e.g. does the brain represent arousal or valence to a greater degree?). Students have also drawn rough spatial location conclusions, which can be supported by drawing from brain imaging studies with related stimuli.

EEG ASSESSMENT SURVEY

We have successfully used the Virtual EEG program with more than 1,000 undergraduates. To provide a semi-quantitative assessment of the Virtual EEG program, we developed a survey that tested students’ (N = 67) knowledge of how to interpret EEG data generated by the program. The assessment survey also provided feedback about how the students perceived this unit of the course. The EEG Assessment Survey and all responses to the questions are provided as supplemental material (S2).

Generally, students had a positive experience with the Virtual EEG unit. For example, we asked, “When we developed the Virtual EEG unit, our goal was to provide a tool that could be used by you to discover how to use EEG data to answer questions about brain activity. How well do you think we achieved this goal? Please be as specific as you can about your current understanding of EEG data analysis” (see S2). Fifty-seven percent of students gave an overall positive response. One response, for example, emphasized the ease of use and the wide range of experiments that the program supports: “The Virtual EEG was very thorough and easy to use. A wide variety of pictures and their content allow for an endless amount of possible hypotheses to test.” Another response emphasized the fact that the Virtual EEG can be used effectively: “I think you were very successful in achieving your goal. The program quickly gave us results to be analyzed. The data in the graphs were very clear and easy to work with.” This response also suggested that students can work easily with the Virtual EEG data.

There were, however, several repeating concerns that arose from some negative feedback provided in the surveys. Probably the most recurring theme was that students were generally overwhelmed by the complexity of the EEG data, which ultimately led to some confusion about several core aspects of the Virtual EEG. Part of the confusion likely began with the fact that there were 256 pictures to choose from and numerous ways to group them into various categories (six maximum). After selecting the pictures and arranging them into categories, it takes only one button click to generate the ERP data and only a few more clicks to analyze specific channels. Although this utility allows for high-throughput experiments and provides the ability to generate different data sets efficiently, it also can create an environment where students spend more energy producing numerous data sets without first attempting to design meaningful experiments, which ultimately can become a very serious drawback.

Some students also had trouble interpreting the EEG/ERP data and figures (i.e., voltage over time). This frustration usually stems from a lack of basic knowledge about the electrical activity of the brain, a body of knowledge that, in most cases, is covered in lower level neuroscience courses. Interpreting ERPs, moreover, like many time series data, can be challenging for students and scientists at all stages of their careers. For example, there has been a seemingly endless increase (thanks in part to computing power) in novel software-based techniques for analyzing time series data (for example, see Cui et al., 2008, and the Chronux software at http://chronux.org). Indeed, much of the confusion may arise from the simple fact that most undergraduate students have limited experience with time series data. Another related concern was that students, especially those early in their academic careers, had difficulty relating their EEG results to what has been previously reported.

Most of these concerns, we believe, often arise from students who have chosen their stimuli based purely on their own speculations and are forced into a scientific fishing expedition. The more successful students take the opportunity to perform preliminary literature searches and expand on existing studies. The instructor must reinforce the notion that science is a cumulative exercise and very few studies are interpretable by themselves.

Here, we suggest one way to circumvent at least some of the confusion that students encounter with the Virtual EEG. First, begin by familiarizing students with the Virtual EEG through a simple analysis. For example, select only two of the predefined categories (e.g., people and manmade). Have students focus on only one channel (e.g., PO8). Next, have them enlarge the graph of PO8 and identify where the ERPs might be different. Note that it is important to remind students that the ERP waves are constructed by averaging numerous EEG recordings from many participants viewing the pictures multiple times (i.e., they represent population averages of single EEG recordings). Finally, re-run the experiment with the statistics turned on for the same channel and have students discuss the possible implications of the results. Instructors, moreover, are encouraged to explore their own experiments prior to the class meeting. Instructors can generate experiments that might be relevant to the course curriculum. The goal with this first pass in the Virtual EEG environment is to simplify each step of the process so that instructors can more thoroughly explain each aspect of the Virtual EEG.
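The visual-inspection step in this walkthrough, identifying where two ERPs differ on a single channel, amounts to examining a difference wave. The Python sketch below shows one way to locate the largest difference. It is a descriptive aid only, not a statistical test and not part of the Virtual EEG program; the function names and the voltages are hypothetical.

```python
def difference_wave(erp_a, erp_b):
    """Point-by-point difference between two averaged ERPs."""
    return [a - b for a, b in zip(erp_a, erp_b)]

def peak_difference(erp_a, erp_b, times_ms):
    """Return (latency_ms, difference) where the two ERPs differ the most."""
    diff = difference_wave(erp_a, erp_b)
    index = max(range(len(diff)), key=lambda i: abs(diff[i]))
    return times_ms[index], diff[index]

# Hypothetical ERPs (microvolts) for one channel, sampled every 4 ms.
times_ms = [160, 164, 168, 172, 176]
people = [-1.0, -3.0, -6.0, -4.0, -2.0]
manmade = [-1.0, -2.0, -2.5, -2.0, -1.5]
print(peak_difference(people, manmade, times_ms))  # (168, -3.5)
```

Students can compare a latency found this way with the time points flagged once the statistics are turned on for the same channel.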

Another section in the assessment survey provided students with a mock experiment which included the categories (pictures) and the resulting graph of one of the EEG channels (see S2 for details). Students were asked a question pertaining to the experiment. The question had a specific right or wrong answer. Seventy-two percent of the students responded correctly. The second part of the question asked students to explain some possible problems with the experiment and to come up with alternative designs to improve it. Most students (approximately 71%) provided thoughtful responses, many of which included several ingenious ways to improve the experiment. Interestingly, the students that got the first part of the question right were also able to provide a clear response to the second part. Moreover, we believe the design of the question was such that students had to incorporate several theoretical aspects of EEG and experimental design to answer the question correctly. Thus, it appears that a large proportion of students are able to comprehend the overall objective of the Virtual EEG experiment. See S2 for complete details. All surveys were obtained with informed consent.

EVALUATION AND DISCUSSION

The overall positive feedback from students indicates that the Virtual EEG program is a useful tool in teaching undergraduates how to successfully interpret real neuroscience data. Within the context of the Virtual EEG program, students must decide for themselves what question to ask and how to answer the question. The rapid link between design and results often leads to initial confusions and ultimately the desire to seek help either from the instructor or from the literature. We have used this program as part of a second-year undergraduate psychology major methods course, although because relatively little time is spent on the biophysics of the brain, EEG is still a little mysterious to these students. Some of these problems may stem from students’ lack of knowledge about basic principles of neuroscience.

We believe, however, that the Virtual EEG can be successfully incorporated into many types of neuroscience-based courses, which most often provide an interdisciplinary approach to scientific learning (see Ramirez, 1997). For example, the Virtual EEG might be well-suited for a number of courses in various disciplines, including cognitive neuroscience, biological psychology, and clinical psychology. From our own experience, this tool is also useful in junior/senior laboratory-based methods courses. It is also noteworthy that the Virtual EEG can be used in graduate level courses in any related discipline. The Virtual EEG will be especially easy to adopt if faculty already incorporate, or are willing to incorporate, technology into the classroom (see Griffin, 2003). Finally, we believe the Virtual EEG, by providing a problem-based learning environment (Schuh and Busey, 2001), enhances students’ ability to learn independently and to become proficient problem solvers.

CONCLUSION

We provide a Virtual EEG program designed to introduce undergraduate students to EEG recording and analyses. The Virtual EEG program is an effective, user-friendly, and inexpensive means of introducing EEG into the curriculum of undergraduate courses in the neuro- and psychological sciences. The program is easily installed on any personal or shared-use computer that runs Java, including both PCs and Macs. The Virtual EEG program provides students with the opportunity to work with real data, and because of the large number of stimuli, the conditions a student chooses are likely to form a comparison that has not been made before. The students, therefore, are encouraged to interpret their results in a meaningful way because they are discovering something that was previously unknown. Because the data interpretation requires help from existing studies, students also learn to generate hypothesis-driven questions in light of previously published work.

COMPANION WEBSITE

The Virtual EEG can be downloaded from: http://virtualeeg.psych.indiana.edu. Java can be downloaded from: java.com.

Acknowledgments

This work was supported by The National Science Foundation through a grant to the Mind Project at Illinois State University.

REFERENCES

- Albanese MA, Mitchell S. Problem-based learning: A review of literature on its outcomes and implementation issues. Acad Med. 1993;68:52–81. doi: 10.1097/00001888-199301000-00012.

- Efron B, Tibshirani R. An introduction to the bootstrap. Boca Raton, FL: Chapman & Hall; 1993.

- Farwell LA, Donchin E. The truth will out: interrogative polygraphy (“lie detection”) with event-related brain potentials. Psychophysiology. 1991;28:531–547. doi: 10.1111/j.1469-8986.1991.tb01990.x.

- Griffin JD. Technology in the teaching of neuroscience: Enhanced student learning. Adv Physiol Educ. 2003;27:146–155. doi: 10.1152/advan.00059.2002.

- Cui J, Xu L, Bressler SL, Ding M, Liang H. BSMART: A Matlab/C toolbox for analysis of multichannel neural time series. Neural Netw. 2008;21:1094–1104. doi: 10.1016/j.neunet.2008.05.007.

- Ramirez JJ. Undergraduate education in neuroscience: A model of interdisciplinary study. Neuroscientist. 1997;3:166–168.

- Ryynänen O, Hyttinen J, Malmivuo J. Study on the spatial resolution of EEG - effect of electrode density and measurement noise. Conf Proc IEEE Eng Med Biol Soc. 2004;6:4409–4412. doi: 10.1109/IEMBS.2004.1404226.

- Savery JR, Duffy TM. Problem based learning: An instructional model and its constructive framework. In: Wilson BG, editor. Constructivist learning environments: Case studies in instructional design. Englewood Cliffs, NJ: Educational Technology Publications; 1996. pp. 135–148.

- Schuh K, Busey TA. Implementation of a problem-based approach in an undergraduate cognitive neuroscience course. College Teaching. 2001;49:153–159.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. Emotional facilitation of sensory processing in the visual cortex. Psychol Sci. 2003;14:7–13. doi: 10.1111/1467-9280.01411.

- VanRullen R, Thorpe SJ. The time course of visual processing: from early perception to decision-making. J Cogn Neurosci. 2001;13:454–461. doi: 10.1162/08989290152001880.