Abstract

How we find what we are looking for in complex visual scenes is a seemingly simple ability that has taken half a century to unravel. The first study to use the term visual search showed that as the number of objects in a complex scene increases, observers’ reaction times increase steadily (Green and Anderson, 1956). This observation suggests that our ability to process the objects in a scene is limited in capacity. However, if it is known that the target will have a certain feature attribute, for example, that it will be red, then only an increase in the number of red items increases reaction time. This observation suggests that we can control which visual inputs, such as the items defined by the color red, receive the benefit of our limited capacity to recognize objects. The nature of the mechanisms that underlie these basic phenomena has been more difficult to determine definitively. In this paper, I discuss how electrophysiological methods have provided us with the tools needed to understand the mechanisms that give rise to the effects observed in the first visual search paper. I begin by describing how recordings of event-related potentials from humans and nonhuman primates have shown us how attention is deployed to possible target items in complex visual scenes. Then, I discuss how event-related potential experiments have allowed us to directly measure the memory representations that are used to guide these deployments of attention to items with target-defining features.

Keywords: visual attention, visual working memory, long-term memory, visual search, electrophysiology, event-related potentials

1.1 Introduction

One of the most stunning aspects of the primate visual system is our ability to rapidly analyze the blooming, buzzing confusion of information that typically lands on our retina (James, 1890). For example, when we look out on a group of children, knowing that our son has a blue costume on, we can fairly rapidly narrow in on his location in our field of view (see Figure 1). In the laboratory, vision scientists have used tasks known as visual search to study how we process these complex scenes that we constantly encounter in the real world. To my knowledge, the first paper to use the term visual search was published by Green and Anderson (1956). Green and Anderson demonstrated several fundamental phenomena of observers’ behavior during visual search tasks that have since been replicated countless times. In the present paper, I will describe how during the last half century we appear to have figured out the nature of the cognitive and neural mechanisms that underlie the effects reported in the first visual search paper ever published.

Figure 1.

Example of a common visual search task outside the laboratory. In this case you can quickly locate your child in the blue monster costume. However, you might be slowed in finding him because you also look at the other children dressed in blue, and your reaction time (RT) would be even slower if a larger group of children dressed in blue were visible when you rounded the corner.

The study of Green and Anderson (1956) made two significant observations that I will focus on in the present article. First, both of their experiments clearly show that as the number of possible targets increased, observers’ reaction times (RTs) increased. For example, in Experiment 1 of their study, when the set size (i.e., the number of potential targets in the search array) increased from 10 to 60 items, the RTs increased from approximately 1800 ms to 4500 ms. This means that each additional possible target added roughly another 50 ms (2700 ms spread over 50 additional items, or about 54 ms per item) to the time required to process the array. This set size effect is easily measured as the slope of the function relating set size to RT. Second, if observers were told the color in which the target would appear, then RTs were almost completely determined by the number of items of that color. For example, when the observers were told that the target digits would appear in red in the upcoming array, the RTs varied as a function of the number of red items in the array and were essentially insensitive to the number of green items. These findings demonstrated that when observers adopted what Green and Anderson called a set for a given object feature, that set enabled processing to be focused on just the items in the scene with that feature. Modern theories attribute this phenomenon, which Green and Anderson termed the set effect, to observers having a representation of the target item that focuses processing on the potentially task-relevant items (Wolfe and Horowitz, 2004). These representations go by various names in the literature, including attentional template (Duncan and Humphreys, 1989, Bundesen, 1990, Desimone and Duncan, 1995, Bundesen et al., 2005), attentional set (Leber and Egeth, 2006), attentional control settings (Folk et al., 1992), or simply an attention guiding representation (Wolfe and Horowitz, 2004).
This seminal paper also discussed the heterogeneity of the items in the array (see also Eriksen, 1952) and their density, issues that are central to modern models of visual search and theories of attention (Duncan and Humphreys, 1989, Bundesen, 1990, Cohen and Ivry, 1991, Bundesen et al., 2005, Wolfe, 2007). However, the present paper will focus on what we have learned during the last 60 years about the mechanisms of visual attention that produce the set size effect, and about how knowledge of the target features modulates this set size effect (i.e., the set effect) first described in this paper on visual search.
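The set size slope described above can be estimated with an ordinary least-squares fit of RT against set size. The sketch below is a minimal illustration in Python (the language and the function name `set_size_slope` are choices made here, not taken from the original study). It uses only the two approximate endpoints reported for Experiment 1, so the fit reduces to (4500 − 1800) / (60 − 10), about 54 ms per item.

```python
def set_size_slope(set_sizes, rts):
    """Ordinary least-squares slope (ms per item) of RT against set size.

    set_sizes and rts are equal-length sequences, one entry per condition.
    """
    n = len(set_sizes)
    if n != len(rts) or n < 2:
        raise ValueError("need at least two (set size, RT) pairs of equal length")
    mean_x = sum(set_sizes) / n
    mean_y = sum(rts) / n
    # Covariance of set size and RT divided by the variance of set size
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(set_sizes, rts))
    var = sum((x - mean_x) ** 2 for x in set_sizes)
    return cov / var


# Approximate endpoints from Experiment 1 of Green and Anderson (1956)
slope = set_size_slope([10, 60], [1800, 4500])
print(f"{slope:.0f} ms per item")  # prints "54 ms per item"
```

With more than two set sizes, the same function gives the best-fitting straight line. The slope is the quantity that the serial and parallel models discussed below both try to explain.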

For both the set size and the set effects that Green and Anderson (1956) reported during visual search, I will focus predominantly on the accumulated evidence from cognitive neuroscience techniques that enables us to understand the processing underlying these behavioral effects. The observers in the experiments of Green and Anderson (1956) were shown an array of digit pairs. They were instructed to report verbally, as quickly as possible, when they detected the target (i.e., a specific pair of digits), and they were also required to localize the target using a flashlight pointer so that accuracy could be measured. Much of what we have inferred about how subjects can rapidly process complex scenes comes from behavioral studies of visual search in the laboratory (e.g., Neisser, 1964, Egeth et al., 1972, Potter, 1975, Schneider and Shiffrin, 1977, Treisman, 1977, Sperling and Melchner, 1978, Yantis and Jonides, 1984, Pashler, 1987, Klein, 1988, McLeod et al., 1988, Luck et al., 1989, Theeuwes, 1991, He and Nakayama, 1992, Nothdurft, 1993, Duncan, 1995, Kim and Cave, 1995, Chun and Wolfe, 1996, Chun and Jiang, 1998, Horowitz and Wolfe, 1998, Wolfe, 1998a, 1998b, McElree and Carrasco, 1999, Krummenacher et al., 2001, Rauschenberger and Yantis, 2001, Vickery et al., 2005, Kristjansson, 2006). In addition, models have been developed to account for how an additional distractor in a visual search array can add 50 ms to the ultimate behavioral RT (e.g., Shiffrin and Schneider, 1977, Treisman and Gelade, 1980, Treisman, 1988, Duncan and Humphreys, 1989, Bundesen, 1990, Treisman and Sato, 1990, Humphreys and Muller, 1993, Palmer et al., 2000, Wolfe, 2003, 2007). But is it possible to observe processes that unfold so quickly more directly?

If we are interested in studying a process that happens very quickly, such as the dynamics of processing a complex scene with multiple stimuli, then few techniques other than those that record electrophysiological responses have the temporal resolution to reveal how the brain is working. For example, based on Green and Anderson (1956), we might expect that the processing of each item takes approximately 50 ms because the addition of each possible target adds that much time to RTs. If the underlying processing of an item is hypothesized to take 50 ms, then we need a measure of neural processing that can track events on that time scale. Because these processes are so fast, the goal of this paper is to describe several key properties of selective visual processing that have been revealed through electrophysiological experiments. I will focus on how the visual system finds targets in scenes that vary in complexity because they contain different numbers of objects (i.e., the set size effects of Green and Anderson, 1956), and describe how electrophysiological studies have shown how we tune attention to select certain objects in those scenes (i.e., the set effects of Green and Anderson, 1956).

2.1 Time to process the scene increases with the set size of the objects in the scene

One of the primary findings of Green and Anderson (1956) was that as the number of possible targets increased, the observers’ RTs increased. This basic finding has been central in shaping models of how information is processed during visual search. Neisser (1967) was one of the first to propose that visual search arrays are initially processed through a stage that extracts basic feature information across the entire visual field in parallel and is not limited in capacity. This intuitively fits with the evidence that neurons in primary visual cortex appear to detect the presence of simple feature attributes such as line orientation, color, and disparity as soon as information reaches the cortex (Hubel and Wiesel, 1959, Zeki, 1978, Hubel and Livingstone, 1987, Pouget et al., 2012). Neisser then proposed that this simple feature information was fed into a limited-capacity stage in which attention is shifted serially between the possible targets until the target is found. Treisman and colleagues (Treisman et al., 1977, Treisman and Gelade, 1980, Treisman, 1988, Treisman and Sato, 1990, Treisman, 2006) proposed a similar variant in which the second stage of serial shifts of attention serves to bind together the features of the attended objects, providing an extremely influential explanation of what a mechanism of attention actually does beyond filtering irrelevant information (Broadbent, 1957) or increasing the speed or efficiency with which attended information is processed (Posner and Snyder, 1975). The general framework of a parallel stage of simple feature processing followed by a serial stage of attentional deployment to possible targets provides an effective account of the behavioral RT and accuracy data of Green and Anderson (1956) and subsequent studies (Wolfe, 1994, 1996, 2007). These models provide an intuitive account of why RTs increase with each additional possible target.
If attention must be shifted to each item in a serial manner, then adding an additional possible target will require an additional shift of attention to process all of the items in the array. However, the models that propose attention shifts between items in a serial manner are not unique in being able to account for the behavioral data from visual search tasks.
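The serial account can be made concrete with a toy model. The Python sketch below is hypothetical throughout: the function name, the 400 ms base time, and the 50 ms per shift (chosen to echo the estimate from Green and Anderson, 1956) are illustrative assumptions, as is the self-terminating rule for target-present trials. Only items in the target color count as candidates, which mimics the set effect: adding green items leaves the predicted RT unchanged.

```python
def serial_search_rt(items, target_color, target_present,
                     base_ms=400.0, shift_ms=50.0):
    """Predicted mean RT (ms) for a toy serial, color-guided search model.

    Only items matching target_color are treated as candidates, so items
    of other colors add nothing (a stand-in for Green and Anderson's set).
    Target-absent trials: every candidate is inspected (exhaustive).
    Target-present trials: the target is assumed equally likely to be
    found at any serial position, so (n + 1) / 2 candidates are inspected
    on average (self-terminating).
    """
    n = sum(1 for color in items if color == target_color)
    if target_present and n == 0:
        raise ValueError("a present target must have the target color")
    inspected = (n + 1) / 2 if target_present else n
    return base_ms + shift_ms * inspected


few_red = ["red"] * 4 + ["green"] * 20
many_red = ["red"] * 8 + ["green"] * 2
print(serial_search_rt(few_red, "red", target_present=False))   # 600.0
print(serial_search_rt(many_red, "red", target_present=False))  # 800.0
print(serial_search_rt(few_red, "red", target_present=True))    # 525.0
```

Under these assumptions, the target-absent slope is 50 ms per candidate and the target-present slope is half that. This 2:1 ratio is the classic signature expected from serial self-terminating search.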

In contrast to models of visual search that involve the serial deployment of attention, it is also possible to account for the increase in RTs with set size by proposing that attention is deployed to the possible targets in a purely parallel manner. That is, a parallel stage of feature extraction is followed by a stage in which attention is deployed to multiple items in parallel. A host of models have proposed that attention is simultaneously spread across the possible targets in an array of objects (Duncan and Humphreys, 1989, Bundesen, 1990, Duncan, 1996, Bundesen et al., 2005) instead of having a unitary focus of attention that shifts between items in a scene. Similarly, models based on a signal-detection theory framework have effectively accounted for behavioral data by proposing that decision noise accompanies the processing of each item, so that more simultaneously processed items result in more noise (Palmer et al., 2000). In this way, signal-detection models can account for longer RTs or lower accuracy when the set size of an array increases. The exceptions are visual search tasks in which targets are defined by spatial configurations of features. Such tasks produce particularly steep slopes relating RT to set size (Palmer, 1994). The debate between serial and parallel models of attentional deployment during visual search has proven difficult to settle using behavioral data alone because these classes of models often mimic one another. In addition, a serial model is really a special case of the larger category of parallel models (Townsend, 1990). Because both serial and parallel models of visual attention can effectively account for the behavioral RTs and accuracy found across the visual search literature, it was necessary to test these models with converging evidence from recordings of brain activity.
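A short simulation shows how a parallel observer with no capacity limit can still lose accuracy as set size grows. The Python sketch below is a generic max-rule signal-detection observer in the spirit of the accounts cited above. It is not claimed to be the exact model of Palmer et al. (2000). The function name and the values of `d_prime`, `criterion`, and the trial count are arbitrary assumptions. Each item produces an independent noisy signal, and the observer reports "present" if the largest signal exceeds a fixed criterion. More distractors give more chances for noise alone to cross the criterion.

```python
import random


def max_rule_accuracy(set_size, d_prime=2.0, criterion=1.0,
                      n_trials=20000, seed=1):
    """Monte Carlo accuracy of an unlimited-capacity parallel observer.

    Every item is processed at once and yields an independent Gaussian
    signal with unit variance: mean d_prime for the target, 0 for
    distractors. The observer says 'present' when the largest signal
    exceeds the criterion (max rule). Half of the trials contain a target.
    """
    rng = random.Random(seed)
    correct = 0
    for trial in range(n_trials):
        present = trial % 2 == 0
        # set_size - 1 distractors plus one item that is the target if present
        signals = [rng.gauss(0.0, 1.0) for _ in range(set_size - 1)]
        signals.append(rng.gauss(d_prime if present else 0.0, 1.0))
        says_present = max(signals) > criterion
        correct += says_present == present
    return correct / n_trials


for n in (1, 2, 4, 8):
    print(n, round(max_rule_accuracy(n), 3))
```

Accuracy falls with set size even though every item is processed at full quality. This is why behavioral set size effects alone cannot rule out parallel processing.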

2.2 Distinguishing between serial and parallel models of the set size effect in visual search

Steve Luck and I set out to distinguish between the models of visual attention that explain the set size effect shown by Green and Anderson (1956) by measuring event-related potentials (ERPs) from human observers performing visual search (Woodman and Luck, 1999). An observer’s ERPs are measured by averaging the continuous electroencephalogram (EEG) relative to an event of interest, such as the onset of a visual search array. The ERP technique is well suited to study the nature of attentional deployment during visual search because of the temporal resolution of the technique. Specifically, ERPs can measure millisecond-by-millisecond changes in neural activity within a trial (Luck, 2005, Woodman, 2010). To determine whether visual attention was spreading across items in the visual field or shifting rapidly between items, we needed to be able to measure the shifts of attention that were hypothesized to be brief neural events (e.g., the 50 ms time between shifts that can be inferred from Experiment 1 of Green and Anderson, 1956).
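The averaging step that turns continuous EEG into an ERP can be sketched in a few lines. The Python example below is a minimal illustration under stated assumptions: a single channel, event onsets given as sample indices, and a baseline correction that subtracts the mean of the pre-stimulus samples. The function name `average_epochs` and the simulated numbers are hypothetical. Activity that is time-locked to the event survives averaging, whereas activity that is not time-locked tends toward zero as more trials are added.

```python
import random


def average_epochs(eeg, onsets, pre, post, baseline=True):
    """Average single-channel EEG samples time-locked to event onsets.

    eeg: continuous samples; onsets: sample indices of the events
    (e.g., search-array onsets); pre/post: samples kept before/after
    each onset. Returns the ERP as a list of length pre + post.
    """
    epochs = [eeg[t - pre:t + post] for t in onsets
              if t - pre >= 0 and t + post <= len(eeg)]
    if not epochs:
        raise ValueError("no complete epochs in range")
    erp = [sum(ep[i] for ep in epochs) / len(epochs) for i in range(pre + post)]
    if baseline and pre > 0:
        # Subtract the mean pre-stimulus voltage so the ERP starts near zero
        offset = sum(erp[:pre]) / pre
        erp = [v - offset for v in erp]
    return erp


# Simulated data: random noise much larger than a fixed event-locked deflection
rng = random.Random(0)
n_samples = 10_000
onsets = list(range(200, n_samples - 200, 300))
eeg = [rng.gauss(0.0, 10.0) for _ in range(n_samples)]
for t in onsets:
    for i in range(50, 100):
        eeg[t + i] -= 5.0  # deflection from 50 to 99 samples after each onset
erp = average_epochs(eeg, onsets, pre=100, post=200)
window = erp[150:200]  # indices pre + 50 through pre + 99
print(round(sum(window) / len(window), 2))
```

The deflection is invisible on single trials, where the noise standard deviation is twice its size. In the average of 32 epochs, it stands out clearly.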

To measure the deployment of visual attention to objects in visual search arrays, we focused on a specific component of the observer’s ERPs known as the N2pc. This abbreviation indicates that the component typically begins in the time range of the second negative-going waveform (i.e., Negative 2) and has a posterior and contralateral distribution relative to where attention is focused in the visual field. That is, if an observer focuses attention on an item in the left visual field, then the posterior electrodes over the right hemisphere become more negative than those over the left hemisphere approximately 175–200 ms after the onset of the stimulus array (Luck and Hillyard, 1994a, 1994b, Eimer, 1996, Luck, 2012). Our goal was to use this index of the deployment of attention to determine whether attention was being shifted between items in a serial manner or distributed across the possible targets in a parallel manner during visual search.
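Because the N2pc is defined by its contralateral distribution, it is usually measured as a difference wave: the waveform from posterior electrodes contralateral to the attended item minus the waveform from the homologous ipsilateral electrodes. The Python sketch below is a minimal, hypothetical illustration. The function names and the toy waveforms are assumptions, and the inputs are taken to be already-averaged ERPs from one left/right pair of posterior electrodes. Left-target and right-target conditions are collapsed so that sensory asymmetries between hemispheres tend to cancel.

```python
def n2pc_difference_wave(left_hemi, right_hemi, target_side):
    """Contralateral-minus-ipsilateral waveform for one condition.

    left_hemi, right_hemi: averaged waveforms from homologous posterior
    electrodes over the left and right hemispheres.
    target_side: visual field ('left' or 'right') of the attended item.
    Negative values indicate a contralateral negativity (human N2pc).
    """
    if target_side == "left":
        contra, ipsi = right_hemi, left_hemi
    elif target_side == "right":
        contra, ipsi = left_hemi, right_hemi
    else:
        raise ValueError("target_side must be 'left' or 'right'")
    return [c - i for c, i in zip(contra, ipsi)]


def collapsed_n2pc(left_target, right_target):
    """Average the difference waves from left- and right-target trials.

    Each argument is a (left_hemi, right_hemi) pair of waveforms.
    """
    d_left = n2pc_difference_wave(*left_target, "left")
    d_right = n2pc_difference_wave(*right_target, "right")
    return [(a + b) / 2 for a, b in zip(d_left, d_right)]


# Toy three-sample waveforms (arbitrary units)
print(collapsed_n2pc(([0, -1, 0], [0, -3, 0]), ([0, -4, 0], [0, -1, 0])))
```

For the toy input, the contralateral negativity appears only at the middle sample, where the collapsed difference wave is −2.5.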

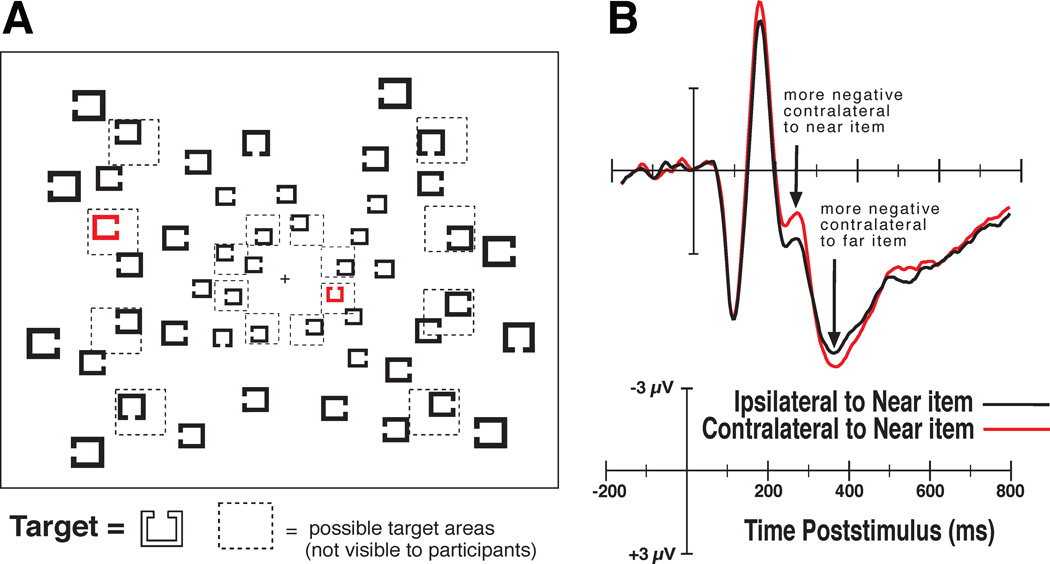

Figure 2A shows an example of the type of visual search arrays we presented to the observers. In these arrays, the possible targets appeared in a given color, such as red, using a paradigm similar to that of Green and Anderson (1956) in which the possible targets were distinguished by color. Note that one of the possible targets was closer to the fixation point than the other. We used this manipulation so that we could predict how attention would likely be shifted, given a serial model, or spread across items, given a parallel model. Previous experiments had shown that targets presented nearer fixation are responded to more quickly and accurately than targets that appear at more eccentric locations (Carrasco et al., 1995, 1998). However, this behavioral effect is reduced or eliminated in some experiments by scaling the stimuli according to the cortical magnification factor, which compensates for the greater density of receptors near fixation (Carrasco and Frieder, 1997, Wolfe et al., 1998). Thus, if observers shift attention between possible targets (e.g., the red items) in a serial manner, then we should be able to watch the N2pc first emerge contralateral to the possible target nearest to fixation and then flip between hemispheres as attention is shifted to the possible target farther from fixation in the other hemifield. In contrast, if attention is spread across the possible target objects in a parallel manner, then we should not observe the dynamic flip of the N2pc. Instead, we should observe a slightly larger N2pc contralateral to the near possible target, accounting for the RT benefit for near possible targets during visual search.

Figure 2.

Example of the stimuli and the results from Woodman & Luck (1999; 2003). A) Search arrays composed of Landolt-square stimuli in which subjects searched for a target with a gap at the top among distractors with gaps on the left, right, and bottom. Observers made a speeded button press to indicate whether the target was present or absent on each trial. They knew that the target, if present, would be one of the red items. B) The ERP waveforms show that the near possible target (a red item) elicited an N2pc first, and then the far possible target elicited an N2pc. Because these items were in opposite hemifields, the N2pc was observed bouncing between hemispheres. Adapted with permission of the American Psychological Association and Nature Publishing Group.

Figure 2B shows the waveforms we recorded from observers searching for a target Landolt square with a gap at the top. The target was present on half of the trials and absent on the other, randomly interleaved half. Participants pressed one of two buttons on a gamepad as quickly and accurately as possible to indicate whether the target was present or absent on that trial. The waveforms shown are from target-absent trials, on which both possible targets had to be processed to report correctly that the target was absent. These findings were consistent with the predictions of a serial model of attentional deployment to items in the search array. In subsequent experiments, we placed one of the possible targets on the vertical midline between the hemifields so that we could isolate and measure the N2pc to each item separately (Woodman and Luck, 2003). We then compared the time course of attentional deployment to near and far items, using array configurations that isolated these two types of deployment, to see whether they overlapped. Again, we found that the N2pcs to the two items did not overlap in time, as predicted by serial models of visual attention. These findings represent a pattern of electrophysiological results that is difficult to account for with parallel models, except those that can be configured to operate in a serial mode of visual processing (e.g., Bundesen, 1990). They provide an example of how measurements of the electrical activity of the brain can be used to distinguish between models of visual processing that account for behavioral data with similar success.
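The overlap comparison can be sketched as follows. The Python example is hypothetical: it finds the first run of samples in each difference wave whose magnitude exceeds a fixed threshold and then checks whether the two runs overlap in time. The threshold, the minimum run length, and the function names are illustrative assumptions rather than the criteria used by Woodman and Luck (2003), and published work typically uses more robust latency measures. The magnitude is used so that the same code works for the negative human N2pc and the positive macaque homologue discussed later.

```python
def supra_threshold_interval(wave, threshold, min_run=1):
    """Return (start, end) indices of the first run of at least min_run
    consecutive samples with |value| > threshold, or None.

    end is exclusive.
    """
    start = None
    for i, v in enumerate(wave):
        if abs(v) > threshold:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_run:
                return start, i
            start = None
    if start is not None and len(wave) - start >= min_run:
        return start, len(wave)
    return None


def intervals_overlap(a, b):
    """True if two half-open (start, end) intervals share any sample."""
    return a is not None and b is not None and a[0] < b[1] and b[0] < a[1]


# Toy difference waves for the near and far possible targets
near = [0, 0, -2, -3, -2, 0, 0, 0, 0]
far = [0, 0, 0, 0, 0, 0, -2, -3, 0]
near_iv = supra_threshold_interval(near, threshold=1.0, min_run=2)
far_iv = supra_threshold_interval(far, threshold=1.0, min_run=2)
print(near_iv, far_iv, intervals_overlap(near_iv, far_iv))
```

Non-overlapping intervals, with the near item's interval first, correspond to the serial pattern described above. Substantially overlapping intervals would instead favor parallel deployment.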

The ERP results that I just discussed used the timing of the N2pc effect to distinguish between models of attention. However, the research I will turn to now was focused on trying to understand the substrates of the ERP components that we measure noninvasively from human subjects. This work allows us to answer questions about the nature of the mechanism indexed by ERP components, like the N2pc. For example, which structures in the brain are generating this electrophysiological index of attentional deployment during visual search?

2.3 What is the nature of the network underlying the observer’s N2pc component?

The great advantage of recording ERPs from humans is that we can noninvasively measure brain activity with millisecond-by-millisecond temporal resolution. However, the great disadvantage of this technique is that it does not provide definitive information about the neural generators of the effects measured at the scalp. This is because an infinite number of configurations of activity in the brain can produce any given pattern of voltage measured outside the head (Helmholtz, 1853). Although mathematical algorithms exist for estimating possible neural generators of ERP and EEG effects, none of these can overcome the basic limitations imposed by the physics of electrical fields in the brain (Nunez and Srinivasan, 2006). However, it is possible to understand the neural circuitry underlying ERP and EEG effects if we can get microelectrodes into the heads of our observers while simultaneously recording the ERPs and EEG from surface electrodes outside the head. Because this type of simultaneous recording of activity inside and outside the head is possible with human subjects only when they are undergoing treatment for epilepsy (Halgren et al., 1980, Halgren et al., 2002, Canolty et al., 2006) or other neurological conditions (Voytek et al., 2010), our strategy has been to use a nonhuman primate model.
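The non-uniqueness of the inverse problem can be illustrated with a toy linear forward model. In the Python sketch below, the "lead field" is a hypothetical 2-sensor by 3-source weight matrix. With more unknown sources than measurements, there is a pattern of source activity that is completely invisible at the sensors. Adding any multiple of that pattern leaves the scalp voltages unchanged. The cross-product trick used to find the silent pattern works only for this 2 × 3 case and is an illustrative shortcut, not a general source-estimation method.

```python
def forward(lead_field, sources):
    """Sensor voltages as weighted sums of source strengths (linear model)."""
    return [sum(w * s for w, s in zip(row, sources)) for row in lead_field]


def cross(a, b):
    """3-D cross product; orthogonal to both rows of a 2 x 3 lead field."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


lead_field = [[2, 1, 0],   # sensor 1: hypothetical weights for sources 1 to 3
              [0, 1, 2]]   # sensor 2
silent = cross(*lead_field)  # a source pattern that produces zero at both sensors
sources_a = [1, 0, 1]
sources_b = [a + s for a, s in zip(sources_a, silent)]
print(forward(lead_field, sources_a))  # [2, 2]
print(forward(lead_field, sources_b))  # [2, 2]
```

The two source configurations differ sharply, yet they produce identical measurements. Adding further scalar multiples of `silent` would yield infinitely many more configurations.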

Several years ago, I was surprised to learn that the type of EEG signals that we commonly record from humans has very rarely been recorded from nonhuman primates (for a review see Woodman, 2012). This is surprising because measuring the EEG and the averaged ERPs from monkeys can provide a validation of the nonhuman primate model of the human visual system. In addition, the nonhuman primate model provides the opportunity to record EEG and ERPs, like we do with human subjects, but also record intracranial activity to determine the nature and source of the activity within the brain that underlies the electrophysiological effects that we use as tools to study human visual processing. Our research that has pursued this strategy began with experiments examining the N2pc component described earlier.

The first step in studying an ERP effect in monkeys that was initially discovered in human observers is to determine whether monkeys exhibit such an effect. We use three primary criteria to determine whether monkeys have ERP components that are homologous to those in humans. The first is the timing of the effect in the general sequence of visual ERP components. The second is the distribution of the ERP component across the head. The third, and probably the most important, is the sensitivity of the ERP component to manipulations of task or cognitive demands that are known to modulate the homologous ERP in humans.

Our first step in studying the N2pc in macaque monkeys was to determine whether a component exists in the monkeys that satisfies these three criteria. We found that macaques do exhibit an ERP component with the same relative timing, distribution, and sensitivity to task manipulations as the human N2pc (Woodman et al., 2007). Interestingly, the macaque homologue of the N2pc appears as a positivity over posterior electrodes contralateral to targets in visual search arrays. However, this is likely due to differences in the pattern of cortical folding across species. The polarity of a given ERP component is arbitrary and simply indicates which end of the electrical dipole generated in the brain is oriented toward the electrodes from which the effect is measured.

When the human N2pc was discovered, it was proposed that this ERP index of visual attention might be generated in ventral extrastriate cortex, such as the human equivalent of V4 or inferotemporal cortex (Luck and Hillyard, 1994b). This idea is consistent with the observation that several factors that influence the firing rates of neurons in the ventral visual stream also influence the amplitude of the N2pc. Specifically, the N2pc amplitude is larger when distractors are presented nearer the attended target compared to more distant locations, is larger when the target needs to be localized with a saccadic eye movement compared with being responded to with a manual response, and is larger for conjunction search tasks than simple feature tasks. These characteristics mirror the characteristics of single neuron responses in studies of attention effects in area IT (Luck et al., 1997). However, it was also initially proposed that the N2pc might be due to feedback from an attentional control structure (Luck and Hillyard, 1994b). This assumption follows from the timing of the N2pc relative to the significantly earlier attention effects observed on the P1 and N1 components when the location of task-relevant information is known prior to the onset of the stimuli (Mangun, 1995). Our simultaneous recordings of monkey ERPs and intracranial activity afforded us the unique opportunity to test the hypothesis that the N2pc was due to feedback within the visual system.

To test the hypothesis that the macaque N2pc (or m-N2pc) was due to feedback, we simultaneously recorded the ERPs and the activity in the frontal eye field (or FEF) of monkeys performing visual search (Cohen et al., 2009). Figure 3A shows a schematic diagram of our recording setup and the search task the monkeys performed. The macaques searched for a T among Ls on one day and for an L among Ts on the next. Microelectrodes were lowered into FEF so that we could record the action potentials of individual neurons as well as the local field potentials (or LFPs). The LFPs recorded from a microelectrode in the brain are believed to be a weighted average of the postsynaptic potentials generated within approximately 1 millimeter of the electrode tip or contact point (e.g., Katzner et al., 2009). Measuring these LFPs is critical if we want to understand the neural generators of EEG and ERP effects, because LFPs are generated by the same postsynaptic potentials that give rise to the EEG and ERP measures. It is believed that the LFPs generated in the brain simply summate and propagate through the skull to become the EEG and ERP signals recorded at the surface of the head (Luck, 2005, Nunez and Srinivasan, 2006). Thus, by recording multiple electrophysiological signals concurrently, we can understand how the electrical fields that are measured outside the head are produced inside the brain.

Figure 3.

Example of simultaneous recordings of neurons and LFPs in FEF and posterior ERPs from Cohen et al. (2009). A) The stimuli during search for an L among Ts; the dashed line shows the neuron’s receptive field. B) An example of the firing rate of an FEF neuron, the LFPs, and the simultaneously recorded m-N2pc. C) A summary of the timing of attentional selection measured with these three signals, together with reaction time (RT). Adapted with permission of the American Physiological Society.

We reasoned that if the N2pc was due to feedback from FEF in prefrontal cortex, then we should observe attentional selection within FEF just before the N2pc emerges over posterior ERP electrodes. As shown in the example recording session in Figure 3B, this is what we found. We first observed that the single-unit activity of neurons in FEF discriminated the location of the task-relevant target from the distractors, which were composed of the same visual features (one vertical and one horizontal line segment). This neuronal metric of selection by visual attention is defined as the point at which the spike rate of the neuron becomes higher when a target is presented in the neuron’s receptive field than when a distractor is presented there. This signature of attentional selection, higher firing rates for targets than distractors, had been observed in many previous single-unit studies across a variety of visual areas (Chelazzi et al., 1993, Schall and Hanes, 1993, Thompson et al., 1996, Chelazzi et al., 1998, Bichot and Schall, 1999, McPeek et al., 1999, Chelazzi et al., 2001, Thompson et al., 2005, Buschman and Miller, 2007, Schall et al., 2007). After the neurons had selected the target in the visual search array, we observed that the LFP activity in FEF discriminated between targets and distractors. Finally, we observed the emergence of the m-N2pc at the posterior electrodes of the simultaneously recorded ERPs. Figure 3C shows that this sequence was consistently found across all measurements and that all of these metrics of selection by visual attention occurred 75–100 ms before the saccadic response to the target was made. The relative timing of these electrophysiological indices of attentional selection was as expected if FEF contributes to the generation of the N2pc via feedback.
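The definition of neuronal selection time given above, the point at which target responses exceed distractor responses, can be sketched as a simple threshold rule on binned firing rates. In the Python example below, the function name, the 5 spikes/s criterion, and the requirement of three consecutive bins are hypothetical choices made for illustration. They are not the criteria used by Cohen et al. (2009). In practice, a statistical test across trials in each time bin would normally replace a fixed difference threshold.

```python
def selection_time(target_rate, distractor_rate, times_ms,
                   min_diff=5.0, min_run=3):
    """First time at which the target response exceeds the distractor
    response by at least min_diff (spikes/s) for min_run consecutive bins.

    All three sequences are aligned bin by bin; times_ms gives each
    bin's time relative to array onset. Returns None if never met.
    """
    run = 0
    for i, (t_rate, d_rate) in enumerate(zip(target_rate, distractor_rate)):
        if t_rate - d_rate >= min_diff:
            run += 1
            if run == min_run:
                # Report the first bin of the qualifying run
                return times_ms[i - min_run + 1]
        else:
            run = 0
    return None


times = list(range(0, 100, 10))                   # 10 ms bins after array onset
target = [10, 10, 10, 12, 20, 25, 30, 30, 30, 30]  # hypothetical spikes/s
distractor = [10] * 10
print(selection_time(target, distractor, times))  # 40
```

The same rule could be applied to LFP amplitudes or to the m-N2pc difference wave, which is how the three selection times in Figure 3C can be placed on a common time axis.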

Another prediction that follows from the hypothesis that feedback from FEF contributes to the generation of the N2pc is that the amplitude of the electrical activity measured in FEF should be related to the amplitude of the N2pc subsequently measured at the posterior electrode sites. To test this prediction, we computed trial-by-trial correlations between the visual attention effects measured in FEF and the N2pc measured at the posterior ERP electrodes. We found that the amplitude of the LFP attention effect recorded in FEF was significantly correlated with the amplitude of the N2pc on a trial-by-trial basis. Interestingly, the spike rate of the FEF neurons was not related to the amplitude of the N2pc. These observations are consistent with the idea that postsynaptic activity is more directly related to the generation of ERP components than is the spiking activity of neurons (e.g., Luck, 2005). However, they also challenge the simple hypothesis that LFPs are the input to the neurons in an area and spikes are the output of that area (Logothetis et al., 2001).
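The trial-by-trial analysis amounts to correlating two per-trial measures: the size of the attention effect in FEF and the N2pc amplitude on the same trials. The Python sketch below computes a Pearson correlation. The function name and the example values are hypothetical, and the original analysis may have differed in detail. Python 3.10 and later also provide `statistics.correlation`, which could be used instead.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two equal-length sequences with n >= 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-trial values (arbitrary units), one entry per trial
fef_lfp_effect = [1.2, 0.8, 1.5, 0.4, 1.0, 0.6]
n2pc_amplitude = [2.1, 1.7, 2.6, 1.0, 1.9, 1.4]
print(round(pearson_r(fef_lfp_effect, n2pc_amplitude), 3))
```

Running the same function on per-trial spike-rate effects would test the second comparison described above, which in the reported data showed no relationship.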

Our findings suggest that the attentional selection of the target in the visual search array is computed either locally within the circuitry of the FEF or between the FEF and another area that participates in attentional selection (such as the lateral intraparietal area, i.e., LIP, or the superior colliculus). Only after this local or inter-regional selection operation is the outcome fed back to posterior areas, where the electrical dipoles that give rise to the observed distribution of the N2pc are generated. This would help explain why the N2pc has such a posterior distribution that does not include lateralized activity over prefrontal areas. We recently found that this same orderly cascade of attentional selection across FEF neurons, FEF LFPs, and the m-N2pc occurs during ‘pop-out’ visual search tasks (Purcell et al., 2010, Purcell et al., 2012). In pop-out search, the target is distinguished from distractors by a simple feature (e.g., red among green items), like the color that Green and Anderson (1956) used to distinguish between possible targets in their search arrays. This observation demonstrates that the electrophysiological measures of attentional selection operate in the same way whether or not the search task produces slopes that increase significantly with set size. These neuroscientific data appear to help settle another long-standing debate about whether pop-out search involves a qualitatively different mode of processing than demanding search tasks in which RTs increase with set size (Treisman and Gelade, 1980, Duncan and Humphreys, 1989, Wolfe et al., 1989, Wolfe, 1998b). Our simultaneous recordings of intracranial activity and monkey ERPs enable us to determine the nature of the neural network underlying specific ERP components, as well as to understand the architecture of visual processing in the brain more generally.

2.4 The tips of the icebergs

At this point, I feel that it is necessary to highlight a basic conclusion of this work that may be unsettling to some readers. Then I will discuss a puzzling aspect of the story that remains from our intracranial and surface recordings of activity from nonhuman primates. The basic conclusion is that when we human electrophysiologists measure an ERP component on the surface of the head we are really only seeing the tip of what is a large iceberg of electrical activity under the skull. The N2pc appears on the scalp as a very focused negativity compared with much more broadly distributed components related to other cognitive processes (Rugg and Coles, 1995, Woodman, 2010, Luck, 2012). This scalp distribution would suggest that the N2pc might have a neural generator that is circumscribed and does not include a broad network of areas. However, our work recording ERPs and intracranial activity from nonhuman primates has demonstrated that even a component like the N2pc is due to activity that is broadly spread throughout multiple regions of the brain. As I discuss below, this calls for much more work including the use of causal techniques to determine which nodes of the network are essential for the generation of a component like the N2pc. However, the conclusion from our work is clear. We cannot easily model the generation of ERP components using algorithms that assume a single dipole or even a simple configuration of electrical fields underlying an effect measured at the surface of the head. Instead, previous suggestions that ERPs and the EEG are due to the pattern of activity across the entire head or cortical sheet (Nunez and Srinivasan, 2006) appear closer to the truth.

The most salient puzzle concerns the nature of the wiring between FEF in prefrontal cortex and posterior visual areas. Much of our visual neurophysiology focuses on areas that are retinotopically mapped. For example, V4 and TEO are hypothesized to contribute to the generation of the N2pc (Luck and Hillyard, 1994b), and recent studies have focused on responses in V4 that appear to be due to feedback from FEF (Moore and Armstrong, 2003, Noudoost et al., 2010). Area V4 is retinotopically mapped, such that there is an orderly relationship between the spatial arrangement of the neurons and the spatial layout of their receptive fields across the visual field. However, the spatial arrangement of neurons in the FEF is not retinotopic (Suzuki and Azuma, 1983). It is easy to imagine how connections between two retinotopically mapped areas could be reciprocal and allow for clear feedforward and feedback interactions between these areas. It is significantly more difficult to imagine how a retinotopically mapped area (i.e., V4) is connected to an area that lacks this organizing principle (i.e., FEF). Even more complex, the projections from V4 and TEO to FEF are convergent, because FEF receptive fields are much larger than those in ventral stream visual areas like V4, whereas the feedback from FEF to V4 is fairly sparse (Schall et al., 1995, Bullier et al., 1996). This means that a spike from a V4 neuron can precisely target the relevant FEF neuron, but an output spike from FEF might not be able to find its way back during the feedback sweep of information processing. A related issue with regard to the lack of retinotopy in FEF is that we often conceptualize this area of prefrontal cortex as instantiating a salience map, or attentional priority map (Schall, 2004). Indeed, our own data are consistent with that explanation. But the internal wiring of FEF must be fairly complex if neurons that neighbor each other in FEF do not have spatially adjacent receptive fields. One might argue that the FEF has an orderly map of saccade endpoints and that attention might be controlled by activity in motor neurons that also govern saccadic eye movements (Rizzolatti et al., 1987). However, the neuronal connections needed to directly link the gaze-controlling neurons in FEF to retinotopically mapped extrastriate visual cortex do not appear to exist (Pouget et al., 2012). The necessary connectivity, through longer chains of neurons in FEF, is clearly possible and may include additional brain areas (Amiez and Petrides, 2009, Anderson et al., 2012), but the interface between an area like the FEF and retinotopically organized areas like V4 is a computational puzzle that deserves more careful consideration in the future.

In summary, our recordings of human ERPs have allowed us to determine the nature of the processing limitations that make visual search tasks difficult. Our ERP evidence indicates that we cannot attend to all of the possible targets in an array at the same time. Then, by establishing nonhuman primate homologues of these critical ERP components, we can determine the neural circuitry underlying their generation and address further theoretically important questions about how information from visual search arrays is processed in the brain. Our findings using these methods demonstrate that prefrontal areas control the shifts of attention to the possible targets in a visual search array, consistent with neuroimaging studies of humans (Yantis and Serences, 2003). As a result, we have made considerable progress understanding how visual attention is deployed in real time to possible target objects in the search arrays of Green and Anderson (1956) and subsequent vision researchers (Wolfe, 2003). The next question we turn to is how the observers in the experiments of Green and Anderson could limit their deployments of visual attention to items of a specific target color and be essentially unaffected by items that were not of that target color.

3.1 Attention can be guided to select certain object features and not others, but how does this work?

Recall that one of the first observations in the visual search literature (Green & Anderson, 1956) is that the presence of items that do not have the necessary target-defining features barely influences search performance. Only the number of possible target items increases the time it takes to perform visual search. If you know a target will be red, your RT to find the target is essentially insensitive to the number of green items in the array. This means that it must be possible to set attention so that it shifts only between possible targets as the objects in a visual search array are processed, skipping items that do not contain target features. In other words, we can tune our visual system to process only inputs that are potentially task relevant. The next topic I will discuss is how we tune attention to select these potentially task-relevant items, excluding completely irrelevant items from processing by the limited-capacity mechanisms of visual attention.
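The logic of this paragraph can be made concrete with a toy serial, self-terminating search model in which only items sharing the target color are candidates for attention, in the spirit of guided-search accounts. This is a sketch under stated assumptions, not the author's model: the `Item` class, the 400 ms base time, and the 50 ms per inspected item are hypothetical. When the target is present among k candidates, a random inspection order examines (k + 1) / 2 of them on average; when it is absent, all k are examined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """One display item (hypothetical): its color and whether it is the target."""
    color: str
    is_target: bool = False


def expected_rt(items, target_color, base_ms=400.0, ms_per_item=50.0):
    """Expected RT (ms) for a feature-guided, serial, self-terminating search.

    Only items of the target color are inspected. base_ms and ms_per_item
    are hypothetical parameters chosen for illustration.
    """
    candidates = [item for item in items if item.color == target_color]
    k = len(candidates)
    target_present = any(item.is_target for item in candidates)
    # Average number of inspections before the search terminates.
    inspections = (k + 1) / 2 if target_present else k
    return base_ms + ms_per_item * inspections


display = [Item("red", is_target=True)] + [Item("red")] * 3 + [Item("green")] * 4
more_green = display + [Item("green")] * 12  # add irrelevant-color items
more_red = display + [Item("red")] * 12      # add target-color items
print(expected_rt(display, "red"))     # → 525.0
print(expected_rt(more_green, "red"))  # → 525.0 (green items cost nothing)
print(expected_rt(more_red, "red"))    # → 825.0
```

In this toy model, adding green items leaves the predicted RT unchanged while adding red items slows it, which is the pattern Green and Anderson reported.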

Psychologists have hypothesized about the origin of attentional control for centuries. James (1890) proposed, “When… sensorial attention is at its height, it is impossible to tell how much of the percept comes from without and how much from within; but … the preparation we make for it always partly consists of the creation of an imaginary duplicate of the object in the mind, which shall stand ready to receive the outward impression” (p. 439). Similarly, Pillsbury (1908) described at length how both primary memory representations (akin to short-term memory or working memory in modern theories) and secondary memory representations (or long-term memory) determine what visual information we process from the world when it meets our eye. The basic idea that memory representations are critical in determining what is selected by mechanisms of visual attention continues to dominate theoretical debates.

Modern theories of attention are built on the idea that the fundamental source of top-down control over visual attention is visual working memory.¹ Various proposals have been made about the details of the mechanisms of perceptual attention. These include the explanation that visual attention is determined by salience (Baluch and Itti, 2011), that items simultaneously race to be categorized (Bundesen, 1990, Bundesen et al., 2005), that information competes for representation by neurons in the brain (Desimone and Duncan, 1995), and that attention spreads across items based on inter-item similarity (Duncan and Humphreys, 1989). However, all of these models are similar in that they propose that what is selected by attention is determined by the content of visual working memory. This top-down control from visual working memory then engages the machinery studied in the work described above, which allows visual attention to become focused on the task-relevant target in the search array (Yantis, 2008). Returning to our example of the Green and Anderson (1956) paper, this would mean that to perform this task we hold a representation of the red target digits in visual working memory. By maintaining such a representation in visual working memory, the mechanism of perceptual visual attention that shifts between possible targets would be focused on the red digits until a match is found between the target representation in memory and a specific visual input.

Although most modern theories of attention propose that visual working memory representations are the source of attentional control, typically known as top-down control of attention, the evidence for this idea is not overwhelmingly strong. The empirical observations that have most strongly motivated this theoretical mechanism of attentional control come from single-unit recording studies of monkeys performing visual search (Chelazzi et al., 1993, Chelazzi et al., 1998). In this work, macaque monkeys were first shown an object that they would need to find in an upcoming array of multiple objects. Three seconds after that target cue, the search array was presented, and the monkeys needed either to make a saccade to the target or to release a lever if that target appeared in the array. These studies showed that during the interval between the presentation of the cue and the onset of the search array, the neurons recorded in inferotemporal (IT) cortex exhibited elevated firing rates. This observation is consistent with the idea that the brain was actively maintaining the target representation in anticipation of searching for this object in the upcoming array of objects. As beautiful as these data are, they represent some of the only direct evidence that the top-down control of attention when we process complex scenes is made possible by maintaining target representations in visual working memory. Our goal in the next set of experiments I will discuss was to determine whether similar evidence could be found when humans perform search.

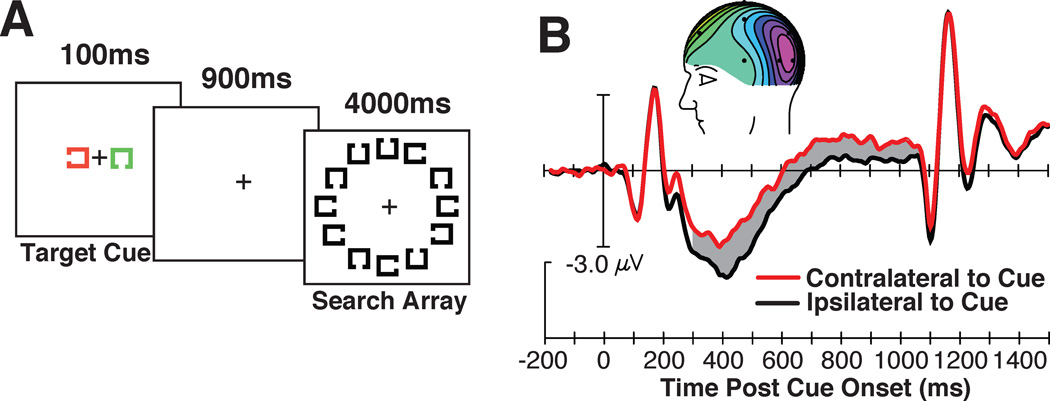

With the goal of determining whether human mechanisms of perceptual attention are controlled by representations in visual working memory, we designed a paradigm similar to that of Chelazzi and colleagues (1998; 2001). This involved presenting a target cue approximately 1 second before a search array. On each trial we cued observers to search for a different target shape, that is, a Landolt square with a gap on a different side. An example of one such stimulus sequence and visual search task is shown in Figure 4A. In this task, subjects were told that the cue would appear in one color (e.g., green) and that the item in the other color could be ignored. This other item was presented simply so that equivalent sensory input appeared in both the left and right visual fields, because this prevents lateralized sensory confounds and allows us to measure the high-level representation of the target cue (Woodman, 2010).

Figure 4.

The stimuli, findings, and relationship between the cue-elicited ERPs and behavior. (A) Example of the stimulus sequence and the grand-average waveforms from electrodes T5/6, contralateral (red) and ipsilateral (black) to the location of the cue on each trial. The gray region shows the epoch in which the significant CDA was measured, and the inset shows the voltage distribution. (B) Visual search accuracy as a function of the CDA amplitude measured following the cue. Note that a more negative voltage equals a larger CDA. Each point represents the data from an individual subject, and the dashed line represents the linear regression. Adapted from Woodman & Arita (2011), with permission from the Association for Psychological Science.

Presenting lateralized target cue stimuli was critical because one of the key ERP components used to directly measure what representations the visual system is maintaining in working memory is lateralized. Specifically, Vogel and colleagues (Vogel and Machizawa, 2004, Vogel et al., 2005, McCollough et al., 2007, Ikkai et al., 2010) have shown that when we hold a representation of an item in visual working memory that was initially seen in the left visual field, a sustained negativity is observed over right posterior electrodes relative to left posterior electrodes. If that to-be-remembered item was presented in the right visual field, then the left electrodes show this sustained negativity during the memory retention interval relative to electrodes on the right side of the head. Because this signal is observed during the delay period of a visual working memory task, this component was named the contralateral delay activity, or CDA. The amplitude of this ERP component increases with each additional object that an observer needs to remember (Vogel and Machizawa, 2004), with this amplitude increase reaching asymptote at each individual observer’s working memory capacity. This sensitivity to the active maintenance of information in visual working memory makes this ERP component an ideal tool with which to test theories about how visual working memory representations are used to process complex visual scenes.
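Because the CDA is defined by a lateralized subtraction, it can be stated procedurally. The sketch below is a minimal illustration under stated assumptions, not the analysis code from the studies cited: each trial is a hypothetical dict holding the visual field of the to-be-remembered item and the waveforms from one left and one right posterior electrode (such as the T5/T6 pair in Figure 4), and the amplitude is the mean contralateral-minus-ipsilateral voltage in the 300–900 ms postcue window that Carlisle et al. (2011) used for the CDA.

```python
from statistics import mean


def contra_ipsi(trials):
    """Average contralateral and ipsilateral waveforms across trials.

    Each trial (hypothetical format) is a dict with 'side' ('left' or
    'right' visual field of the memorized item) and 'left'/'right' voltage
    lists (µV) from a left- and a right-hemisphere posterior electrode.
    """
    contra, ipsi = [], []
    for trial in trials:
        if trial["side"] == "left":     # left field: right hemisphere is contralateral
            contra.append(trial["right"])
            ipsi.append(trial["left"])
        elif trial["side"] == "right":  # right field: left hemisphere is contralateral
            contra.append(trial["left"])
            ipsi.append(trial["right"])
        else:
            raise ValueError(f"unknown side: {trial['side']!r}")
    contra_avg = [mean(samples) for samples in zip(*contra)]
    ipsi_avg = [mean(samples) for samples in zip(*ipsi)]
    return contra_avg, ipsi_avg


def cda_amplitude(trials, times_ms, window=(300, 900)):
    """Mean contralateral-minus-ipsilateral voltage (µV) within the window.

    More negative values correspond to a larger CDA.
    """
    contra, ipsi = contra_ipsi(trials)
    lo, hi = window
    diffs = [c - i for t, c, i in zip(times_ms, contra, ipsi) if lo <= t <= hi]
    if not diffs:
        raise ValueError("no samples fall inside the measurement window")
    return mean(diffs)


# Toy, hypothetical data sampled at four time points (ms after the cue).
times = [0, 300, 600, 900]
trials = [
    {"side": "left", "left": [0, 0, 0, 0], "right": [0, -2, -2, -2]},
    {"side": "right", "left": [0, -1, -1, -1], "right": [0, 0, 0, 0]},
]
print(cda_amplitude(trials, times))  # → -1.5
```

Collapsing across left- and right-field trials in this way cancels hemisphere-specific activity that does not depend on the side of the memorized item, which is why the bilateral display described above matters.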

In our experiments, we used this CDA component as a tool to determine whether the target representation was being held in visual working memory to control perceptual attention. The prediction was simple. If people guide the focus of visual attention to target-like inputs by holding a representation of the target in visual working memory, then we should see that the target cue elicits a CDA that is sustained until the search array appears, just as Chelazzi and colleagues had observed in the spike rates of IT neurons.

Figure 4B illustrates the pattern of results observed across a series of experiments (Carlisle et al., 2011, Woodman and Arita, 2011). Following the presentation of the cue, a CDA emerged and was sustained until the search array appeared. In the experiment shown in Figure 4, the amplitude of the cue-elicited CDA was highly correlated with the accuracy of the observers in this inefficient visual search task, in which the cued target shape was difficult to find in the array of Landolt squares. In other experiments in which accuracy was at ceiling but reaction time (RT) varied across observers, the amplitude of the CDA before presentation of the search array was highly correlated with the observers’ RTs to the subsequent search arrays (Carlisle et al., 2011). These correlations are what we expected if the amplitude of the CDA following the target cue provides a measure of the quality of the target representation. That is, a larger CDA indicates that a more veridical target representation is being held in visual working memory. These are the first electrophysiological findings from humans to support the prediction that visual working memory representations serve to control visual attention and focus this limited-capacity mechanism on items that are similar to the sought-after target.

3.2 Visual working memory is important, but what role does long-term memory play?

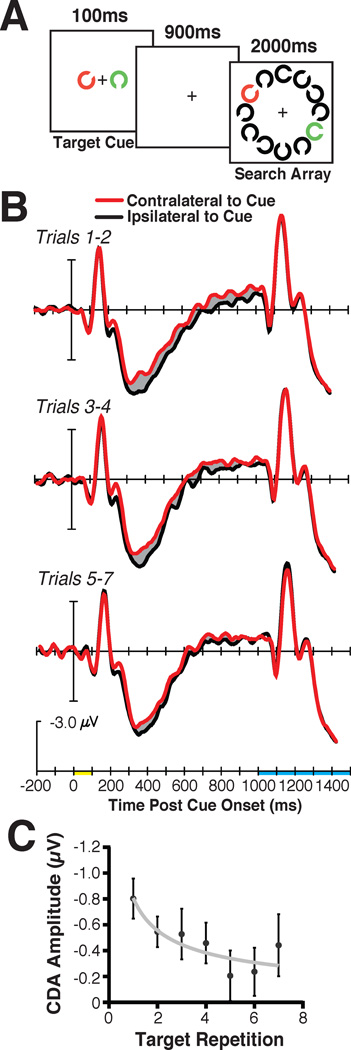

The findings I just discussed appear to support the popular view of attention researchers and theorists that the top-down control of visual attention is as simple as holding a target representation in visual working memory (for a review see Olivers et al., 2011). However, this conclusion seems to be at odds with the learning literature and theories of skill acquisition and automaticity. Specifically, we have known for decades that if you perform the same visual search task (i.e., look for the same target) trial after trial, your response times will decrease dramatically (Schneider and Shiffrin, 1977, Shiffrin and Schneider, 1977, Schneider and Chein, 2003). The dominant theoretical explanation for these effects is that visual processing and the selection of the task-relevant response are initially controlled by working memory (Logan, 1988, Anderson, 2009). However, across practice we shift from using working memory to relying upon long-term memory to control processing. If we integrate this theoretical perspective with the findings we have just reviewed, it leads to the prediction that if we cue people to search arrays of objects for the same target across a series of trials, then we should find that the CDA disappears as the attentional template is handed off to long-term memory.

My colleagues and I tested the prediction that visual working memory should be used to control visual attention only when the target is new, with long-term memory taking over control with each instance of search for a particular object (Carlisle et al., 2011). This involved essentially the same cued-search paradigm I have just discussed. That is, a target cue was presented on each trial before a search array in which that target would be either present or absent (see Figure 5A). The key manipulation was that we cued observers to search for the same target object across runs of trials that varied in length (i.e., 3–7 trials long). Figure 5B shows that for the first couple of trials of searching for a particular object, we observed a large-amplitude CDA following the target cue. However, as search continued across trials for this same target shape, the CDA progressively decreased in amplitude. The decrease in CDA amplitude across target repetitions mirrored the decrease in RTs averaged across observers. Moreover, the decrease in CDA amplitude for a given observer was significantly correlated with that observer’s speeding in RT across target repetitions. This is as expected if learning to search for a specific item, measured in terms of the RT decrease across trials, depends on how quickly the visual system of an observer transitions from relying upon visual working memory to relying upon long-term memory to control attention.

Figure 5.

The stimuli (A) and grand average ERP results (B), time locked to the cue presentation (yellow on time axis) until search array onset (shown in blue on the timeline) from consecutive groups of trials. Bottom panel (C) shows the CDA amplitude across consecutive trials with the same search target. The gray line shows the power-function fit and the error bars represent ±1 S.E.M. Reprinted from Carlisle et al. (2011), with permission from the Society for Neuroscience.
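The caption mentions a power-function fit to CDA amplitude across target repetitions. The exact fitting procedure used by Carlisle et al. (2011) is not described here, so the following is only a sketch of one common approach: fit magnitude = a · n^(−b) by linear least squares on log–log axes. Because a power function needs positive values, the negative-going CDA is fit on its absolute amplitude, and the example values are synthetic, generated from a = 2 µV and b = 0.5.

```python
import math


def fit_power_decay(repetitions, magnitudes):
    """Fit magnitude = a * n ** (-b) by least squares in log-log space.

    Returns (a, b). Inputs must be positive, so a negative-going component
    such as the CDA should be passed as absolute amplitudes.
    """
    if len(repetitions) != len(magnitudes) or len(set(repetitions)) < 2:
        raise ValueError("need at least two distinct, matched repetitions")
    if any(m <= 0 for m in magnitudes) or any(n <= 0 for n in repetitions):
        raise ValueError("repetitions and magnitudes must be positive")
    xs = [math.log(n) for n in repetitions]
    ys = [math.log(m) for m in magnitudes]
    k = len(xs)
    mx, my = sum(xs) / k, sum(ys) / k
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    a = math.exp(my - slope * mx)  # intercept back-transformed from log space
    return a, -slope               # b is the decay exponent


reps = [1, 2, 3, 4, 5]
cda_magnitude_uv = [2.0 * n ** -0.5 for n in reps]  # synthetic, noise-free values
a, b = fit_power_decay(reps, cda_magnitude_uv)
print(f"a = {a:.2f} µV, b = {b:.2f}")  # → a = 2.00 µV, b = 0.50
```

Fitting in log space weights proportional rather than absolute errors; a nonlinear least-squares fit on the raw amplitudes is an equally reasonable choice and can give somewhat different parameters on noisy data.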

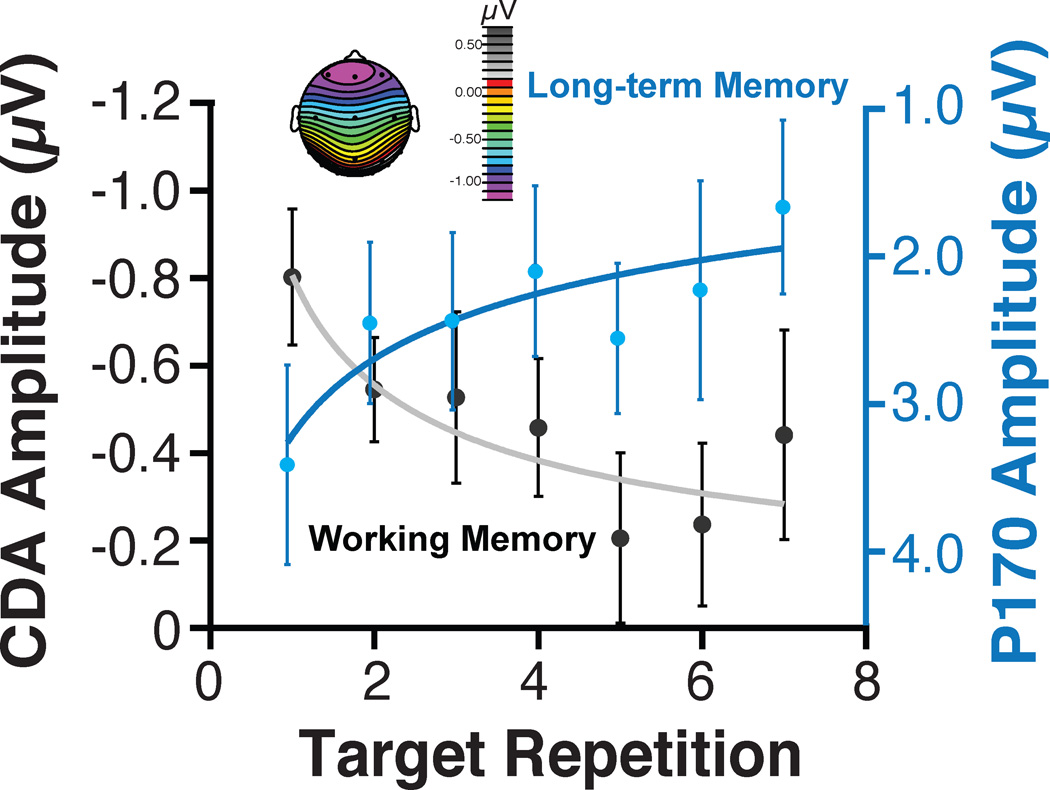

The CDA measure showing that visual working memory decreases across target repetitions is one observation that should be made if attention transitions from reliance on working memory to long-term memory. However, we would be more confident in our conclusions about this dynamic shift in the control of visual attention if we could simultaneously measure an ERP component that indexes the accumulation of information in long-term memory. To find such a component, we turned to the literature. This is when we came across evidence of a frontal ERP component that becomes increasingly negative when a previously seen object is presented again (Voss et al., 2010). Several studies have shown what Voss and colleagues (2010) called a P170 (Tsivilis et al., 2001, Duarte et al., 2004, Diana et al., 2005, Danker et al., 2008). This is a modulation of the first frontal positivity following stimulus onset (i.e., the anterior P1) that is more negative when elicited by a stimulus that the observer has previously seen. In addition, the modulation of this component is correlated with the RT benefit an individual observer shows when responding to a basic feature of an item that was previously shown at the beginning of the experiment compared to a completely novel item (i.e., priming from long-term memory, Voss et al., 2010).

This long-term memory ERP component appeared to be an ideal candidate for us to use to measure the accumulation of information in long-term memory while using the CDA to measure the involvement of visual working memory in controlling attention. First, this anterior P1 has a distribution that is confined to electrodes over frontal cortex, meaning that it does not spatially overlap with the CDA. Second, the timing of this long-term memory component does not overlap with when the CDA is observed, as we discuss further below. These two characteristics mean that we can measure these two indices of memory with essentially no crosstalk or interference.

Figure 6 shows the amplitude of the anterior P1 (or P170) that we measured across target repetitions at the same time that we recorded the systematic reduction of the CDA in Experiment 3 of Carlisle et al. (2011). As is evident, the amplitude of this ERP effect varied systematically across target repetitions in the direction we would expect if each target repetition were laying down a long-term memory trace. One concern when recording ERP components that appear to trade off amplitude in the manner shown in Figure 6 is that we are measuring two ends of the same dipole. That is, the same electrical field might be measured at different points on the scalp. However, the fact that these two signals are observed in completely different time windows (i.e., 150–200 ms postcue for the P170 and 300–900 ms postcue for the CDA) indicates that this is not the case. Similarly, the observation that one of these effects is a lateralized negativity while the other is a midline effect is hard to reconcile with this account. Thus, our ERP findings indicate that we can measure two different components, one indexing the accumulation of representations in long-term memory and the other the active maintenance of representations in visual working memory, as observers learn to search complex scenes for specific objects. Our recent work has shown that these ERP components can be studied in macaque monkeys (e.g., Reinhart et al., 2012), so that the network circuitry and neuronal mechanisms underlying these effects can be definitively determined.

Figure 6.

The ERP component amplitudes following the target cue onset as a function of target repetition. The P170 amplitude was measured from 150–200 ms postcue and the CDA from 300–900 ms postcue. The error bars show ±1 S.E.M. Adapted from Carlisle et al. (2011), with permission from the Society for Neuroscience.

4.0 Conclusions

The research that I have discussed here used electrophysiological measurements from humans and monkeys to bring two points into clear view. First, as predicted by certain theories of visual attention, limited-capacity perceptual processing mechanisms are focused on individual items, or perhaps small groups of items, in a serial fashion. This provides an explanation for why behavioral RTs increase as the complexity of the scenes that need to be searched increases. When more possible targets are presented in the scene, additional shifts of attention are required to process the array and determine whether and where the target is present. This explains one of the key findings of the first study of visual search (Green and Anderson, 1956). Second, the recordings of ERPs and intracranial activity from macaque monkeys show that these shifts of perceptual attention are carried out by a distributed network of frontal and posterior visual areas. These network-level properties of attentional selection are often noted in neuroimaging studies of visual attention (Corbetta and Shulman, 2002, Serences and Yantis, 2006). The combination of electrophysiological measures I discussed has the temporal and spatial resolution to determine the sequence of events within the network and to address whether the entire network works in concert or whether specific areas of the visual system send signals to others to direct this selection by visual attention.

The next set of studies I discussed addressed the other fundamental observation made by Green and Anderson (1956) that only items that shared features with the target object influenced observers’ behavioral RTs. To explain this type of finding, theories of attention have proposed that top-down control over the deployment of visual attention to items in a complex scene must exist. Specifically, visual attention is only deployed to items that match the target along certain feature dimensions (e.g., color, shape, size, disparity, motion). The ERP studies of humans performing search show that we guide attention to items defined by particular target features by holding a representation of that target in visual working memory. However, these experiments also showed that visual working memory only plays this controlling role under certain circumstances. Our experiments that involved measuring multiple memory-related ERP components show that visual working memory holds a representation of the target for the first handful of trials until the visual system transitions to being controlled by long-term memory representations. These findings unify predictions from two literatures. Whereas the visual attention literature has focused on the role of visual working memory in controlling attention, the learning and skill acquisition literature has emphasized the switch to the reliance upon long-term memory in accounting for behavioral effects. In the experiments of Green and Anderson (1956), the observers searched for the same targets across multiple trials. Our recent electrophysiological experiments indicate that these observers were relying on long-term memory representations to guide the deployment of attention to possible target items after only a handful of trials with the same target.

The experiments I have described here are really just a beginning. The simultaneous recordings of ERPs and intracranial activity in nonhuman primates have begun to reveal details about the nature of attentional selection in the brain, but much work remains to be done. Not only do we need to simultaneously record from a larger number of areas of the visual system while measuring the surface ERPs, but we also need to directly manipulate the activity within these areas to determine whether they play a causal role in the generation of the electrophysiological effects recorded from humans and nonhuman primates. The research described above also highlights the necessity to understand the nature and interplay of different types of memory representations to understand how visual attention works. It seems evident that the complexity of the questions and techniques needed to answer them will require the dedication of many more young vision scientists.

I present several enduring controversies regarding visual attention using visual search tasks.

I discuss how event-related potentials have shown that attention shifts between items during search.

I describe a technique for recording event-related potentials from monkeys to reveal their origin.

I describe how we can directly measure the memory representations that control attention.

Acknowledgements

I would like to thank Elsevier and the Vision Sciences Society. I am indebted to my mentors, colleagues, and collaborators who did most of the heavy lifting in performing the research that made this paper possible and in helping to formulate the ideas on which the work was based. In particular, Steve Luck, Jeff Schall, Jeremiah Cohen, Rich Heitz, Jason Arita, Nancy Carlisle, Robert Reinhart, and Julianna Ianni made possible the empirical work shown here. Ashleigh Maxcey-Richard provided constructive comments on a previous version of this manuscript. The research reported here is made possible by the National Eye Institute of the National Institutes of Health (R01-EY019882, P30-EY008126) and the National Science Foundation (BCS-0957072).

Footnotes


¹ For all practical purposes, the terms visual short-term memory and visual working memory are different descriptions of the same cognitive construct. Both refer to the ability to temporarily store representations from the visual modality, and these more recent terms are elaborations of the primary memory terminology of James (1890). The general model of working memory proposes that the storage of information in visual working memory is distinct from verbal working memory in that there are separate subordinate systems for information acquired from these different modalities (Baddeley, 1986, 2003).

References

- Amiez C, Petrides M. Anatomical organization of the eye fields in the human and nonhuman primate frontal cortex. Progress in Neurobiology. 2009;89:220–230. doi: 10.1016/j.pneurobio.2009.07.010.

- Anderson EJ, Jones DK, O'Gorman RL, Leemans A, Catani M, Husain M. Cortical network for gaze control in humans revealed using multimodal MRI. Cerebral Cortex. 2012;22:765–775. doi: 10.1093/cercor/bhr110.

- Anderson JR. Cognitive Psychology and Its Implications. New York, NY: Worth Publishers; 2009.

- Baddeley AD. Working Memory. Oxford: Clarendon; 1986.

- Baddeley AD. Working memory: Looking back and looking forward. Nature Reviews Neuroscience. 2003;4:829–839. doi: 10.1038/nrn1201.

- Baluch F, Itti L. Mechanisms of top-down attention. Trends in Neurosciences. 2011;34:210–224. doi: 10.1016/j.tins.2011.02.003.

- Bichot NP, Schall JD. Effects of similarity and history on neural mechanisms of visual selection. Nature Neuroscience. 1999;2:549–554. doi: 10.1038/9205.

- Broadbent DE. A mechanical model for human attention and immediate memory. Psychological Review. 1957;64:205–215. doi: 10.1037/h0047313.

- Bullier J, Schall JD, Morel A. Functional streams in occipito-frontal connections in the monkey. Behavioural Brain Research. 1996;76:89–97. doi: 10.1016/0166-4328(95)00182-4.

- Bundesen C. A theory of visual attention. Psychological Review. 1990;97:523–547. doi: 10.1037/0033-295x.97.4.523.

- Bundesen C, Habekost T, Kyllingsbaek S. A neural theory of visual attention: Bridging cognition and neurophysiology. Psychological Review. 2005;112:291–328. doi: 10.1037/0033-295X.112.2.291.

- Buschman TJ, Miller EK. Top-down versus bottom-up control of attention in the prefrontal and posterior parietal cortices. Science. 2007;315:1860–1862. doi: 10.1126/science.1138071.

- Canolty RT, Edwards E, Dalal SS, Soltani M, Nagarajan SS, Kirsch HE, Berger MS, Barbaro NM, Knight RT. High gamma power is phase-locked to theta oscillations in human neocortex. Science. 2006;313:1626–1628. doi: 10.1126/science.1128115.

- Carlisle NB, Arita JT, Pardo D, Woodman GF. Attentional templates in visual working memory. Journal of Neuroscience. 2011;31:9315–9322. doi: 10.1523/JNEUROSCI.1097-11.2011.

- Carrasco M, Evert DL, Chang I, Katz SM. The eccentricity effect: Target eccentricity affects performance on conjunction searches. Perception & Psychophysics. 1995;57:1241–1261. doi: 10.3758/bf03208380.

- Carrasco M, Frieder KS. Cortical magnification neutralizes the eccentricity effect in visual search. Vision Research. 1997;37:63–82. doi: 10.1016/s0042-6989(96)00102-2.

- Carrasco M, McLean TL, Katz SM, Frieder KS. Feature asymmetries in visual search: Effects of display duration, target eccentricity, orientation and spatial frequency. Vision Research. 1998;38:347–374. doi: 10.1016/s0042-6989(97)00152-1.

- Chelazzi L, Duncan J, Miller EK, Desimone R. Responses of neurons in inferior temporal cortex during memory-guided visual search. Journal of Neurophysiology. 1998;80:2918–2940. doi: 10.1152/jn.1998.80.6.2918.

- Chelazzi L, Miller EK, Duncan J, Desimone R. A neural basis for visual search in inferior temporal cortex. Nature. 1993;363:345–347. doi: 10.1038/363345a0.

- Chelazzi L, Miller EK, Duncan J, Desimone R. Responses of neurons in macaque area V4 during memory-guided visual search. Cerebral Cortex. 2001;11:761–772. doi: 10.1093/cercor/11.8.761.

- Chun MM, Jiang Y. Contextual cueing: Implicit learning and memory of visual context guides spatial attention. Cognitive Psychology. 1998;36:28–71. doi: 10.1006/cogp.1998.0681.

- Chun MM, Wolfe JM. Just say no: How are visual searches terminated when there is no target present? Cognitive Psychology. 1996;30:39–78. doi: 10.1006/cogp.1996.0002.

- Cohen A, Ivry RB. Density effects in conjunction search: Evidence for a coarse location mechanism of feature integration. Journal of Experimental Psychology: Human Perception and Performance. 1991;17:891–901. doi: 10.1037//0096-1523.17.4.891.

- Cohen JY, Heitz RP, Schall JD, Woodman GF. On the origin of event-related potentials indexing covert attentional selection during visual search. Journal of Neurophysiology. 2009;102:2375–2386. doi: 10.1152/jn.00680.2009.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nature Reviews Neuroscience. 2002;3:201–215. doi: 10.1038/nrn755.

- Danker JF, Hwang GM, Gauthier L, Geller A, Kahana MJ, Sekuler R. Characterizing the ERP Old-New effect in a short-term memory task. Psychophysiology. 2008;45:784–793. doi: 10.1111/j.1469-8986.2008.00672.x.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Diana RA, Vilberg KL, Reder LM. Identifying the ERP correlate of a recognition memory search attempt. Cognitive Brain Research. 2005;24:674–684. doi: 10.1016/j.cogbrainres.2005.04.001.

- Duarte A, Ranganath C, Winward L, Hayward D, Knight RT. Dissociable neural correlates for familiarity and recollection during the encoding and retrieval of pictures. Cognitive Brain Research. 2004;18:255–272. doi: 10.1016/j.cogbrainres.2003.10.010.

- Duncan J. Target and nontarget grouping in visual search. Perception & Psychophysics. 1995;57:117–120. doi: 10.3758/bf03211854.

- Duncan J. Cooperating brain systems in selective perception and action. In: Inui T, McClelland JL, editors. Attention and performance XVI: Information integration in perception and communication. Cambridge, MA: MIT Press; 1996. pp. 549–578.

- Duncan J, Humphreys GW. Visual search and stimulus similarity. Psychological Review. 1989;96:433–458. doi: 10.1037/0033-295x.96.3.433.

- Egeth H, Jonides J, Wall S. Parallel processing of multielement displays. Cognitive Psychology. 1972;3:674–698.

- Eimer M. The N2pc component as an indicator of attentional selectivity. Electroencephalography and Clinical Neurophysiology. 1996;99:225–234. doi: 10.1016/0013-4694(96)95711-9.

- Eriksen CW. Locations of objects in a visual display as a function of the number of dimensions on which the objects differ. Journal of Experimental Psychology. 1952;44:56–60. doi: 10.1037/h0058684.

- Folk CL, Remington RW, Johnston JC. Involuntary covert orienting is contingent on attentional control settings. Journal of Experimental Psychology: Human Perception and Performance. 1992;18:1030–1044.

- Green BF, Anderson LK. Color coding in a visual search task. Journal of Experimental Psychology. 1956;51:19–24. doi: 10.1037/h0047484.

- Halgren E, Boujon C, Clarke J, Wang C, Chauvel P. Rapid distributed fronto-parietooccipital processing stages during working memory in humans. Cerebral Cortex. 2002;12:710–728. doi: 10.1093/cercor/12.7.710.

- Halgren E, Squires NK, Wilson CL, Rohrbaugh J, Babb TL, Crandall PH. Endogenous potentials generated in the human hippocampal formation and amygdala by infrequent events. Science. 1980;210:803–805. doi: 10.1126/science.7434000.

- He ZJ, Nakayama K. Surfaces versus features in visual search. Nature. 1992;359:231–233. doi: 10.1038/359231a0.

- Helmholtz Hv. Ueber einige Gesetze der Vertheilung elektrischer Ströme in körperlichen Leitern mit Anwendung auf die thierisch-elektrischen Versuche. Annalen der Physik und Chemie. 1853;89:211–233, 354–377.

- Horowitz TS, Wolfe JM. Visual search has no memory. Nature. 1998;394:575–577. doi: 10.1038/29068.

- Hubel DH, Livingstone MS. Segregation of form, color, and stereopsis in primate area 18. Journal of Neuroscience. 1987;7:3378–3415. doi: 10.1523/JNEUROSCI.07-11-03378.1987.

- Hubel DH, Wiesel TN. Receptive fields of single neurones in the cat's striate cortex. Journal of Physiology. 1959;148:574–591. doi: 10.1113/jphysiol.1959.sp006308.

- Humphreys GW, Muller HJ. Search via recursive rejection (SERR): A connectionist model of visual search. Cognitive Psychology. 1993;25:43–110.

- Ikkai A, McCollough AW, Vogel EK. Contralateral delay activity provides a neural measure of the number of representations in visual working memory. Journal of Neurophysiology. 2010;103:1963–1968. doi: 10.1152/jn.00978.2009.

- James W. The Principles of Psychology. New York: Holt; 1890.

- Katzner S, Nauhaus I, Benucci A, Bonin V, Ringach DL, Carandini M. Local origin of field potentials in visual cortex. Neuron. 2009:35–41. doi: 10.1016/j.neuron.2008.11.016.

- Kim M-S, Cave KR. Spatial attention in visual search for features and feature conjunctions. Psychological Science. 1995;6:376–380.

- Klein R. Inhibitory tagging system facilitates visual search. Nature. 1988;334:430–431. doi: 10.1038/334430a0.

- Kristjansson A. Simultaneous priming along multiple dimensions in a visual search task. Vision Research. 2006;46:2554–2570. doi: 10.1016/j.visres.2006.01.015.

- Krummenacher J, Mueller HJ, Heller D. Visual search for dimensionally redundant popout targets: Evidence for parallel-coactive processing of dimensions. Perception & Psychophysics. 2001;63:901–917. doi: 10.3758/bf03194446.

- Leber AB, Egeth HE. Attention on autopilot: Past experience and attentional set. Visual Cognition. 2006;14:565–583.

- Logan GD. Toward an instance theory of automatization. Psychological Review. 1988;95:492–527.

- Logothetis NK, Pauls J, Augath MA, Trinath T, Oeltermann A. Neurophysiological investigation of the basis of the fMRI signal. Nature. 2001;412:150–157. doi: 10.1038/35084005.

- Luck SJ. An introduction to the event-related potential technique. Cambridge, MA: MIT Press; 2005.

- Luck SJ. Electrophysiological correlates of the focusing of attention within complex visual scenes: N2pc and related ERP components. In: Luck SJ, Kappenman E, editors. The Oxford Handbook of Event-Related Potential Components. New York: Oxford University Press; 2012. pp. 329–360.

- Luck SJ, Girelli M, McDermott MT, Ford MA. Bridging the gap between monkey neurophysiology and human perception: An ambiguity resolution theory of visual selective attention. Cognitive Psychology. 1997;33:64–87. doi: 10.1006/cogp.1997.0660.

- Luck SJ, Hillyard SA. Electrophysiological correlates of feature analysis during visual search. Psychophysiology. 1994a;31:291–308. doi: 10.1111/j.1469-8986.1994.tb02218.x.

- Luck SJ, Hillyard SA. Spatial filtering during visual search: Evidence from human electrophysiology. Journal of Experimental Psychology: Human Perception and Performance. 1994b;20:1000–1014. doi: 10.1037//0096-1523.20.5.1000.

- Luck SJ, Hillyard SA, Mangun GR, Gazzaniga MS. Independent hemispheric attentional systems mediate visual search in split-brain patients. Nature. 1989;342:543–545. doi: 10.1038/342543a0.

- Mangun GR. Neural mechanisms of visual selective attention. Psychophysiology. 1995;32:4–18. doi: 10.1111/j.1469-8986.1995.tb03400.x.

- McCollough AW, Machizawa MG, Vogel EK. Electrophysiological measures of maintaining representations in visual working memory. Cortex. 2007;43:77–94. doi: 10.1016/s0010-9452(08)70447-7.

- McElree B, Carrasco M. The temporal dynamics of visual search: Evidence for parallel processing in feature and conjunction searches. Journal of Experimental Psychology: Human Perception and Performance. 1999;25:1517–1539. doi: 10.1037//0096-1523.25.6.1517.

- McLeod P, Driver J, Crisp J. Visual search for a conjunction of movement and form is parallel. Nature. 1988;332:154–155. doi: 10.1038/332154a0.

- McPeek RM, Maljkovic V, Nakayama K. Saccades require focal attention and are facilitated by a short-term memory system. Vision Research. 1999;39:1555–1566. doi: 10.1016/s0042-6989(98)00228-4.

- Moore T, Armstrong KM. Selective gating of visual signals by microstimulation of frontal cortex. Nature. 2003;421:370–373. doi: 10.1038/nature01341.

- Neisser U. Visual search. Scientific American. 1964;210:94–102. doi: 10.1038/scientificamerican0664-94.

- Neisser U. Cognitive Psychology. New York: Appleton-Century-Crofts; 1967.

- Nothdurft H-C. Saliency effects across dimensions in visual search. Vision Research. 1993;33:839–844. doi: 10.1016/0042-6989(93)90202-8.

- Noudoost B, Chang MH, Steinmetz NA, Moore T. Top-down control of visual attention. Current Opinion in Neurobiology. 2010;20:183–190. doi: 10.1016/j.conb.2010.02.003.

- Nunez PL, Srinivasan R. Electric fields of the brain: The neurophysics of EEG. Oxford: Oxford University Press, Inc.; 2006.

- Olivers CNL, Peters JC, Houtkamp R, Roelfsema PR. Different states in visual working memory: When it guides attention and when it does not. Trends in Cognitive Sciences. 2011;15:327–334. doi: 10.1016/j.tics.2011.05.004.

- Palmer J. Set-size effects in visual search: The effect of attention is independent of the stimulus for simple tasks. Vision Research. 1994;34:1703–1721. doi: 10.1016/0042-6989(94)90128-7.

- Palmer J, Verghese P, Pavel M. The psychophysics of visual search. Vision Research. 2000;40:1227–1268. doi: 10.1016/s0042-6989(99)00244-8.

- Pashler H. Target-distractor discriminability in visual search. Perception & Psychophysics. 1987;41:285–292.

- Pillsbury WB. Attention. New York: Macmillan; 1908.

- Posner MI, Snyder CRR. Attention and cognitive control. In: Solso RL, editor. Information processing and cognition: The Loyola Symposium. Hillsdale, NJ: Lawrence Erlbaum Associates Inc.; 1975.

- Potter MC. Meaning in visual search. Science. 1975;187:965–966. doi: 10.1126/science.1145183.

- Pouget P, Arita JT, Woodman GF. Primate visual attention: How studies of monkeys have shaped theories of selective processing. In: Lazareva OF, et al., editors. How Animals See the World: Behavior, Biology, and Evolution of Vision. New York: Oxford University Press; 2012. pp. 335–350.

- Purcell B, Heitz RP, Cohen JY, Woodman GF, Schall JD. Timing of attentional selection in frontal eye field and event-related potentials over visual cortex during pop-out search. Journal of Vision. 2010;10:97.

- Purcell BA, Schall JD, Woodman GF. On the origin of event-related potentials indexing covert attentional selection during visual search: Timing of attentional selection in macaque frontal eye field and posterior event-related potentials during pop-out search. Journal of Neurophysiology. 2012. doi: 10.1152/jn.00549.2012.

- Rauschenberger R, Yantis S. Masking unveils pre-amodal completion representation in visual search. Nature. 2001;410:369–372. doi: 10.1038/35066577.

- Reinhart RMG, Heitz RP, Purcell BA, Weigand PK, Schall JD, Woodman GF. Homologous mechanisms of visuospatial working memory maintenance in macaque and human: Properties and sources. Journal of Neuroscience. 2012;32:7711–7722. doi: 10.1523/JNEUROSCI.0215-12.2012.

- Rizzolatti G, Riggio L, Dascola I, Umilta C. Reorienting attention across the horizontal and vertical meridians: Evidence in favor of a premotor theory of attention. Neuropsychologia. 1987;25:31–40. doi: 10.1016/0028-3932(87)90041-8.

- Rugg MD, Coles MGH. The ERP and cognitive psychology: Conceptual issues. In: Rugg MD, Coles MGH, editors. Electrophysiology of Mind. New York: Oxford University Press; 1995. pp. 27–39.

- Schall JD. On the role of frontal eye field in guiding attention and saccades. Vision Research. 2004;44:1453–1467. doi: 10.1016/j.visres.2003.10.025.

- Schall JD, Hanes DP. Neural basis of saccade target selection in frontal eye field during visual search. Nature. 1993;366:467–469. doi: 10.1038/366467a0.