Abstract

Background

Academic organizations can better develop and target efforts to promote research integrity if they are able to collect reliable data to benchmark baseline conditions, to assess areas needing improvement, and subsequently to assess the impact of specific initiatives. To date, no standardized and validated tool has existed to serve this need.

Methods

A web- and mail-based survey was administered in the second half of 2009 to 2,837 randomly selected biomedical and social science faculty and postdoctoral fellows at 40 academic health centers in top-tier research universities in the United States. Measures included the Survey of Organizational Research Climate (SORC) as well as measures of perceptions of organizational justice.

Results

Exploratory and confirmatory factor analyses yielded seven subscales of organizational research climate, all of which demonstrated acceptable internal consistency (Cronbach’s α ranging from 0.81 to 0.87) and adequate test-retest reliability (Pearson r ranging from 0.72 to 0.83). A broad range of correlations between the seven subscales and five measures of organizational justice (unadjusted regression coefficients ranging from .13 to .95) document both construct and discriminant validity of the instrument.

Conclusions

The SORC demonstrates good internal consistency (Cronbach’s α) and test-retest reliability, as well as both construct and discriminant validity.

Keywords: research integrity, organizational climate, reliability, validity, organizational survey

Introduction

Twenty years ago, it was not difficult for leaders in the science community to believe that the primary threats to the integrity of research were blatant, but extremely rare, instances of data fabrication, falsification or plagiarism (Panel on Scientific Responsibility and the Conduct of Research, 1992). At that time, a broader proposed definition of scientific misconduct in terms of misappropriation, interference and misrepresentation (Integrity and Misconduct in Research: Report of the Commission on Research Integrity, 1995) was widely dismissed and condemned by science leaders (Rennie and Gunsalus, 2008). Research misconduct could be explained by a simple narrative of individual psychopathology (Hackett, 1994) that referenced “bad apple” scientists (Sovacool, 2008), who would inevitably be rooted out because of the “self-correcting” nature of science itself (Wenger et al., 1997). Given such confidence, it was also easy for organizational leaders to perceive an absence of high-profile cases of misconduct on their watch as sufficient evidence that their own institutions evinced research integrity.

Numerous factors over the past two decades have made such beliefs much less tenable. Recent empirical research has documented high levels of undesirable research-related behaviors among NIH-funded scientists, ranging from questionable, to irresponsible, to formally defined misconduct (Martinson et al., 2005; Titus et al., 2008). In the popular press, there has been an ongoing drumbeat of high-profile cases of misconduct in a broad spectrum of scientific fields, alongside growing national and international discussion of, and concern about, other threats to integrity, including conflicts of interest, inadequate oversight of the use of human and animal subjects in research, misappropriation of research funds, and failure to maintain the integrity of data and research records (Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002; Council of Canadian Academies and The Expert Panel on Research Integrity, 2010; Irish Council for Bioethics, Rapporteur Group, 2010; Steneck and Mayer, 2010; Council of Graduate Schools, 2011). Such behaviors threaten to damage institutions’ reputations and to erode public trust in the research process. Some of these behaviors, such as using inadequate or inappropriate research designs, surreptitiously dropping observations or data points, and otherwise failing to maintain the integrity of one’s data, also directly jeopardize the integrity of the research and the accuracy of the scientific record.

The narrative of individual impurity as a primary explanation for research misconduct has not entirely disappeared. In fact, preliminary empirical research has linked personality characteristics such as narcissism and cynicism with defects in ethical decision-making (Antes et al., 2007). Our own research has explored the potential role of individual difference measures, such as intrinsic drive and identity, as factors that may mediate associations between environmental circumstances and research-related behaviors (Martinson et al., 2006, 2010). Yet the rather simplistic “bad apple” explanations of the past have been challenged by alternative narratives that focus on institutional failings and structural crises in science, lending credence to the view that the environments and contexts within which science is conducted are important for understanding the behavior of scientists (Mumford and Helton, 2001; Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002; Sovacool, 2008; Teitelbaum, 2008; DuBois et al., 2012). As early as the mid-1990s, Hackett referenced Robert Merton’s strain theory, with its emphasis on anomie, and Max Weber’s observations regarding alienation among scientists resulting from the social organization of academic science, as potential theoretical frameworks for understanding misconduct in science (Hackett, 1994). The 2002 Institute of Medicine (IOM) report, “Integrity in Scientific Research: Creating an Environment that Promotes Responsible Conduct,” was a landmark publication in drawing attention to the theoretical importance of research environments in either fostering or undermining research integrity and the responsible conduct of scientists (Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002). As the committee members who drafted that report lamented, there was at the time almost no empirical research on the topic.
Since that time, our own research has documented associations between researchers’ self-reported behaviors and various features of organizational environments (Martinson et al., 2006, 2009, 2010; Anderson et al., 2007). Similarly, Mumford and colleagues have explored the empirical associations of environmental influences with ethical decision-making among first-year graduate students (Mumford et al., 2007). The 2002 IOM report also promoted a performance-based, self-regulatory approach to fostering research integrity and research best practices (Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002). Specifically, the report recommended that institutions seeking to create environments that promote responsible research should: (1) establish and continuously measure relevant structures, processes, policies, and procedures; (2) evaluate the institutional environment supporting integrity in the conduct of research; and (3) use this knowledge for ongoing improvement (Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002). At the time of the IOM publication, no gold-standard measures of institutional environments were available to fill this need. In 2006, research by Thrush et al. laid the groundwork for developing a survey responsive to the IOM’s call for such a measure and provided evidence of content validity for items designed to assess the organizational climate for research integrity in academic health center (AHC) settings (Thrush et al., 2007).

Defining Research Integrity

Historically, terms such as “research integrity” and “responsible conduct of research” have been ill- and variously defined (Steneck, 2006; Steneck and Bulger, 2007). One widely referenced definition of the responsible conduct of research (RCR) was put forth in 2000 by the U.S. Public Health Service (Department of Health and Human Services, 2000) and consists of nine core areas considered important in RCR training and educational efforts. Yet even this definition has been controversial among those who teach and study RCR (Heitman and Bulger, 2005), and the policy was formally suspended little more than a year after its issuance (Department of Health and Human Services, 2001). Still, instruction in these areas has been adopted as a requirement for recipients of National Research Service Award (NRSA) grants (Heitman et al., 2005). Thus, some investigators (Pimple, 2002) have referred to these nine domains as a “federal view of the scope of the responsible conduct of research” (p. 192).

In the fall of 2010, however, no fewer than three definitions of research integrity were offered on the world stage nearly simultaneously: one from Canada (Council of Canadian Academies and The Expert Panel on Research Integrity, 2010), a second from Ireland (Irish Council for Bioethics, Rapporteur Group, 2010), and a third known as “The Singapore Statement” (Steneck and Mayer, 2010). The three definitions overlap considerably in what they refer to as the core values, principles and responsibilities defining research integrity and responsible conduct of research. The Canadian report, drafted by an Expert Panel of the Council of Canadian Academies, defined research integrity as “…the coherent and consistent application of values and principles essential to encouraging and achieving excellence in the search for, and dissemination of, knowledge. These values include honesty, fairness, trust, accountability, and openness” (Council of Canadian Academies and The Expert Panel on Research Integrity, 2010).

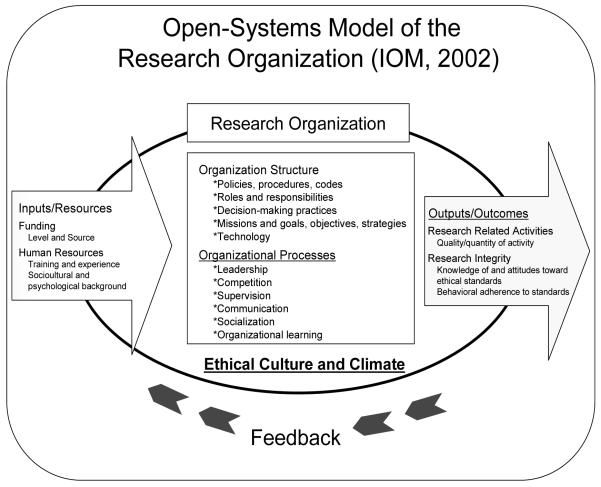

The development of the survey described in this paper, the Survey of Organizational Research Climate (SORC), is grounded in the IOM’s open-systems conceptual framework for research integrity (Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002). Central to this framework is organizational climate (ethical culture and climate), and the framework recognizes research integrity as an outcome of complex, dynamic processes influenced by multiple factors.

The framework, as shown in Figure 1, consists of inputs, structures, processes, and outcomes as conceptual factors important in affecting integrity in the research environment. The climate assessment tool we have developed, the psychometric properties of which we establish in this paper, reflects the key institutional level factors presented in the center of the IOM model, including measurement of concepts such as visible ethical leadership, socialization and communication processes, and the presence of policies, procedures, structures and processes to deal with risks to integrity.

Figure 1.

IOM Conceptual Model

In attempting to promote the integrity of scientific research, few would dispute that most research organizations would prefer an internal, self-regulatory approach over one favoring compliance with externally imposed mandates. How well self-regulation works for this purpose, however, and how its effectiveness varies across organizational settings, remain open questions. Tools that allow organizations to collect reliable data to benchmark baseline conditions, to assess areas needing improvement, and subsequently to assess the impact of specific initiatives would greatly facilitate the appropriate targeting of educational interventions or organizational change initiatives to promote research integrity. In contrast with such a proactive, forward-looking, self-regulatory approach, Geller and colleagues have recently argued that biomedical research demonstrates a compliance-oriented culture. Features of this culture/climate include a strong hierarchy, power differentials, discrepant perceptions about research training needs, hesitation to openly discuss research mistakes, and adversarial relations between researchers and IRBs (Geller et al., 2010).

In many ways, the logic behind the IOM’s proposal to use assessment and feedback mechanisms to foster research integrity is similar to the logic used to promote patient safety cultures and climates in hospitals and to improve the professional behavior of healthcare providers. Since the publication of the IOM report “To Err is Human” (Institute of Medicine and Committee on Quality of Health Care in, 2000), there have been extensive discussions and ongoing initiatives aimed at creating a “culture of patient safety” in medicine, particularly in hospital settings. In a March 2010 commentary, Dr. Lucian Leape, widely respected in the United States as an expert in patient safety and medical errors, made the case for and against several methods of encouraging hospitals to adopt safer practices to avoid infections. Of the methods he discusses, he identifies reporting and feedback as the most potent. As evidence, Leape points to findings from the National Surgical Quality Improvement Program, pioneered by the Department of Veterans Affairs (VA) in the 1990s. In this system, physicians were required to provide data on their own performance, which were then summarized into self- and comparative performance reports fed back to surgical specialty departments in the VA. Changes resulting from this process included large improvements in below-average units and system-wide declines in complication and mortality rates (Leape, 2010, p. 2).

Under the “Reporting and Feedback” heading, Leape describes a strategy very similar in spirit and character to the approach our team has taken in developing, validating and propagating the SORC. The SORC is a self-assessment tool that organizations can use to assess their employees’ perceptions of responsible research practices and conditions in their local environments. Appropriate for use across a broad range of disciplines and academic statuses, this tool can provide institutional leaders with “baseline” assessments of research integrity climate, as well as metrics for aspects of their climates that are mutable and that should change in response to organizational change initiatives aimed at promoting research integrity.

The SORC provides a measure of how participants perceive the quality of the environments in which they are immersed and the extent to which their organizational units support responsible research practices and research integrity. The measure does not inform us about individuals’ behavior or performance, but by aggregating responses within meaningful organizational units, the survey subscales can provide a picture of group-level perceptions of environmental conditions, which organizational researchers refer to as ‘organizational climate’. The fields of Industrial/Organizational Psychology and Organizational Behavior have more than a 50-year history of studying the concepts of organizational climate and organizational culture (Landy and Conte, 2010). While the distinctions and similarities between these overlapping but distinct concepts have been hotly debated, this manuscript is not the place to delve deeply into that discussion. Briefly, what we set out to measure with the SORC is what Schein would refer to as the first level of organizational culture: the visible organizational structures and processes that can be conceived of as “artifacts” of the organizational culture (Schein, 1991), the culture itself representing shared but underlying, and often unspoken, patterns of values and beliefs held by organizational members. By focusing on measurement of organizational structures and processes, the SORC is distinct from other recent efforts to measure “ethical work climates,” which have focused primarily on tapping the moral sensitivity and motivations of organizational members (Arnaud, 2010). The broad goal of our research was to complete development of a fixed-response survey tool appropriate for assessing the organizational research climate in academic health center (AHC) settings.
The specific goal of this paper is to report our assessments of the reliability (both internal and test-retest) of this newly developed instrument and to present evidence for its construct validity by comparing the SORC with similar, yet conceptually distinct, constructs.

Method

Data collection

We obtained prior approval for this research from the Regions Hospital Institutional Review Board, the oversight body with responsibility for all research conducted at HealthPartners Institute for Education and Research, and from the University of Arkansas for Medical Sciences Institutional Review Board. In the second half of 2009, we conducted a web-based survey with mailed follow-up of 2,836 randomly selected biomedical and social science faculty and postdoctoral fellows from 251 departments across 40 academic health centers in top-tier research universities in the United States. Using the SORC, we asked respondents to report their perceptions of the research climates at their university and in the department of their primary affiliation. We also asked for their responses to a series of questions about their perceptions of organizational justice with respect to a number of domains in their work.

Each faculty member and postdoctoral fellow in the sampling frame was sent a pre-notification email describing the goals of the survey and advising them that they would receive an invitation email with a URL for the online survey in 3 business days. The invitation email that followed included a university-specific URL to the survey homepage, where prospective respondents could learn more about the researchers, the goals of the project, and the protections in place to ensure respondent anonymity and data confidentiality, as well as view the content of the survey before participating. Telephone numbers and email addresses of the Co-Principal Investigators (BCM, CRT) were provided in the invitation email so that prospective respondents could ask for additional information or opt out of receiving additional contacts from the research team.

When respondents began taking the survey, the web application stored the date and time the survey was accessed, the number of sections of the survey that were completed, the amount of time the respondent’s browser remained in each section of the survey, and the university-specific URL the respondent used to access the survey. The application also assigned a sequential number to each set of submitted survey responses that represented the order in which survey data were saved by the website. The application did not retain any server logs, IP addresses or identifiers more specific than the university-specific URL that was used. The URL enabled the survey data to be attributed to an institution, and respondents selected the type of department in which they worked from a standardized list (i.e., no department names). Even though identifying information was used to invite potential respondents to participate in the survey, none of this information was linked to the survey data so that survey responses remained anonymous.

The last page of the online survey asked respondents to indicate that they had completed the survey by sending an email to the survey center from the account at which they were contacted with the subject line ‘Climate Study Complete.’ This prompted the survey center to remove that email address from the distribution list and prevent any further contact. Reminder emails were sent 4, 8, 12 and 16 business days after the invitation email to prospective respondents who had not opted out and had not indicated that they had completed the survey.
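To make the contact timeline concrete, the sketch below computes the invitation and reminder dates in business days with NumPy’s `busday_offset`. It is an illustration only: the pre-notification date is hypothetical, public holidays are ignored, and the sketch does not model who actually received reminders (only prospective respondents who had neither opted out nor reported completing the survey).

```python
import numpy as np

# Hypothetical pre-notification date: the text reports only "the second half of 2009".
PRENOTIFY = np.datetime64("2009-09-01")


def contact_schedule(prenotify, invite_lag=3, reminder_offsets=(4, 8, 12, 16)):
    """Return the invitation date and reminder dates, counted in Monday-Friday business days.

    Public holidays are ignored; busday_offset also accepts a `holidays=` list
    if a real schedule needed to skip them.
    """
    # roll="forward" first moves a weekend start date to the next business day.
    invite = np.busday_offset(prenotify, invite_lag, roll="forward")
    # A list of offsets broadcasts against the single invitation date.
    reminders = np.busday_offset(invite, list(reminder_offsets), roll="forward")
    return invite, reminders


invite, reminders = contact_schedule(PRENOTIFY)
print("invitation:", invite)
print("reminders:", reminders)
```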

A survey packet that included a cover letter, $2 token of appreciation, paper survey and postage-paid self-addressed return envelope was sent to prospective respondents who did not opt out of study participation and did not notify the survey center that they had completed the online survey. The cover letter reminded prospective respondents of the goals of the research and invited them to complete and return the paper survey or to complete the online survey using their institution-specific URL. Returned paper surveys were also assigned a sequential number in the order they were received.

Sample Frame Construction

The sampling frame was constructed to include N = 2500 academic faculty who were actively involved in academic research in disciplines that receive substantial funding from the National Institutes of Health at the premier academic health centers in the United States. A pool of eligible faculty members was created by first identifying the premier medical schools conducting academic research, the departments at each school that receive substantial NIH funding, and then drawing a random sample of 10 research faculty within each of these departments. Medical schools, and departments within medical schools, were assigned unique random identifiers. Medical schools were brought into the sampling frame in ascending order of their random identifier until the frame included 250 departments, the N = 2500 academic faculty housed in these departments, and the post-doctoral fellows supported in these departments. The details of this process are as follows.

The premier medical schools in the United States were identified by cross-referencing a list of 123 medical schools that conducted research funded by the National Institutes of Health, obtained from the Association of American Medical Colleges (AAMC) website, with medical schools that were members of the Association of Academic Health Centers. This cross-referencing yielded a list of 91 medical schools, which was further reduced to 75 after removing schools that explicitly forbade use of their websites for assembling an email distribution list and those with websites insufficiently detailed to support this activity. We also excluded schools already participating in a related study conducted by the Council of Graduate Schools, several schools considering piloting our survey, and the one school selected for our test-retest sub-study, leaving 66 medical schools.

Departments at the 66 medical schools were considered for eligibility based on available FY 2004 NIH extramural award rankings and department size. A listing of NIH FY2005 research grant awards was sorted within each medical school by the department type assigned by NIH. Department types at each school that received fewer than 5 research grant awards were removed from further consideration. The remainder was a listing of department types at each of the 66 medical schools to consider for eligibility.

Website links were identified for all departments that would be aptly described by each department type at each medical school. As websites permitted, we counted the number of research faculty in each department. Clinical faculty were counted toward the number of research faculty if their publication history, available on the department website or via a PubMed search, showed evidence of academic publication in the prior 3 years. A department was ineligible for further consideration if its website was insufficiently detailed to construct a distribution list for the research faculty housed in that department or if there were fewer than 11 research faculty in the department.
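The department-level eligibility rules described above can be expressed as a short filter. This is a minimal sketch under stated assumptions: the `Faculty` and `Department` records, the grant-count lookup keyed by (school, department type), and the reading of “prior 3 years” as the three calendar years before a reference year are all hypothetical, and non-clinical faculty listed on a department website are assumed to be research faculty.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

MIN_GRANTS_PER_DEPT_TYPE = 5  # department types with fewer than 5 NIH grant awards were dropped
MIN_RESEARCH_FACULTY = 11     # departments with fewer than 11 research faculty were ineligible
PUB_WINDOW_YEARS = 3          # clinical faculty count only with a publication in the prior 3 years


@dataclass
class Faculty:
    name: str
    clinical: bool
    last_pub_year: Optional[int] = None  # most recent publication found (hypothetical field)


@dataclass
class Department:
    school: str
    dept_type: str           # NIH-assigned department type
    website_detailed: bool   # detailed enough to build a distribution list
    faculty: List[Faculty] = field(default_factory=list)


def counts_as_research_faculty(f: Faculty, ref_year: int) -> bool:
    if not f.clinical:
        return True  # assumption: listed non-clinical faculty are research faculty
    # Assumption: "prior 3 years" means ref_year - 3 <= last_pub_year < ref_year.
    return (f.last_pub_year is not None
            and ref_year - PUB_WINDOW_YEARS <= f.last_pub_year < ref_year)


def eligible_departments(depts: List[Department],
                         grants: Dict[Tuple[str, str], int],
                         ref_year: int) -> List[Department]:
    kept = []
    for d in depts:
        if grants.get((d.school, d.dept_type), 0) < MIN_GRANTS_PER_DEPT_TYPE:
            continue  # department type not substantially NIH-funded at this school
        if not d.website_detailed:
            continue  # cannot build a distribution list
        n_research = sum(counts_as_research_faculty(f, ref_year) for f in d.faculty)
        if n_research >= MIN_RESEARCH_FACULTY:
            kept.append(d)
    return kept
```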

A random number was assigned to each research faculty member in each of the remaining departments. The ten faculty members with the lowest randomly assigned numbers in each of these departments were considered eligible. Postdoctoral fellows in each of the remaining departments were identified through the department website and a listing of recent NIH National Research Service Award recipients. All fellows were considered eligible and added to the pool of potential respondents from which the sampling frame was built.
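Giving each research faculty member a random number and keeping the ten lowest is equivalent to drawing a simple random sample of ten without replacement. A hypothetical Python sketch of this step (the function names are illustrative), with all identified postdoctoral fellows appended to the department’s pool:

```python
import random
from typing import List, Optional, Sequence


def select_research_faculty(faculty: Sequence[str], k: int = 10,
                            rng: Optional[random.Random] = None) -> List[str]:
    """Give each research faculty member a random number and keep the k lowest."""
    rng = rng or random.Random()
    keyed = sorted((rng.random(), name) for name in faculty)
    return [name for _, name in keyed[:k]]


def department_pool(faculty: Sequence[str], fellows: Sequence[str],
                    rng: Optional[random.Random] = None) -> List[str]:
    """Selected faculty plus every postdoctoral fellow identified for the department."""
    return select_research_faculty(faculty, rng=rng) + list(fellows)


rng = random.Random(2009)  # fixed seed only so the example is reproducible
faculty = [f"faculty_{i:02d}" for i in range(18)]
fellows = ["postdoc_a", "postdoc_b"]
print(department_pool(faculty, fellows, rng=rng))
```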

After the pool of eligible research faculty within eligible departments within eligible medical schools was identified, the sampling frame was built in ascending order of the random numbers assigned to medical schools. If a medical school had 15 or fewer eligible departments, all departments and their research faculty and postdoctoral fellows were added to the frame. For schools with more than 15 eligible departments, the 15 departments with the lowest random numbers (and their faculty and fellows) were added. Medical schools were added until 250 departments with 2500 faculty (and all fellows in these departments) were in the sampling frame.
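The frame-building loop can be sketched as follows, assuming a nested dictionary of eligible schools, departments, selected faculty and fellows (a hypothetical layout). The text does not say whether the last school added was truncated to reach exactly 250 departments; this sketch adds whole schools, so the frame can slightly exceed the target.

```python
import random
from typing import Dict, List, Optional, Tuple

# Hypothetical layout: school -> department -> {"faculty": [...], "fellows": [...]}
Pool = Dict[str, Dict[str, Dict[str, List[str]]]]
FrameRow = Tuple[str, str, List[str]]


def build_frame(schools: Pool, target_depts: int = 250, max_per_school: int = 15,
                seed: Optional[int] = None) -> List[FrameRow]:
    rng = random.Random(seed)
    # Unique random identifiers for schools and for departments within schools.
    school_key = {s: rng.random() for s in schools}
    dept_key = {(s, d): rng.random() for s in schools for d in schools[s]}
    frame: List[FrameRow] = []
    for school in sorted(schools, key=lambda s: school_key[s]):  # ascending identifier
        if len(frame) >= target_depts:
            break  # stop bringing in schools once the target is reached
        depts = schools[school]
        # All departments if there are 15 or fewer, else the 15 with the lowest identifiers.
        chosen = sorted(depts, key=lambda d: dept_key[(school, d)])[:max_per_school]
        for dept in chosen:
            frame.append((school, dept, depts[dept]["faculty"] + depts[dept]["fellows"]))
    return frame
```

Each frame row carries the department’s selected faculty and all of its fellows, so the number of prospective respondents follows directly from the rows.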

Test-retest

Test-retest reliability was assessed in a sample of N = 150 research investigators at one medical school who were randomly selected from among all actively funded principal investigators at the institution. A survey packet was sent via campus mail inviting these investigators to complete the SORC on a scannable survey form and return it to one of the researchers. An email reminder to all investigators was sent one week later. This process was repeated 3-4 weeks after the baseline surveys were mailed, a time frame long enough to ensure that respondents would be unlikely to recall their responses to the survey but short enough to reduce the likelihood that respondents’ perceptions of the climate would change substantially and prompt different responses to the retest items. Baseline (N = 91) and retest (N = 65) surveys were coded with a random identifier allowing surveys from the same respondent to be linked without identifying the respondent. All surveys were entered into a database using a high speed scanner.
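Because baseline and retest forms carried the same random identifier, test-retest reliability reduces to a Pearson correlation over linked pairs. A minimal sketch, assuming each respondent’s subscale score is stored in a dictionary keyed by that identifier (a hypothetical layout), with pairs missing either score dropped:

```python
import numpy as np
from typing import Dict, Tuple


def retest_correlation(baseline: Dict[str, float],
                       retest: Dict[str, float]) -> Tuple[float, int]:
    """Pearson r between baseline and retest scores linked by random survey identifier."""
    linked = sorted(set(baseline) & set(retest))  # respondents who returned both surveys
    x = np.array([baseline[i] for i in linked], dtype=float)
    y = np.array([retest[i] for i in linked], dtype=float)
    ok = ~(np.isnan(x) | np.isnan(y))  # drop pairs where either score is missing
    r = np.corrcoef(x[ok], y[ok])[0, 1]
    return float(r), int(ok.sum())
```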

Measures

Survey of Organizational Research Climate (SORC)

We initially examined a pool of 42 candidate items for inclusion in the SORC, derived from a study conducted to establish item content validity as rated by research integrity experts. As described in more detail elsewhere (Thrush et al., 2007), experts rated potential survey items for relevance and clarity, as well as overall comprehensiveness for measuring the constructs (inputs, structures, processes and outcomes for organizational research climate). Survey items ask about respondents’ perceptions of research climate in the organization as a whole and in their primary program or subunit (e.g., center, department) in the organization. Respondents rated each item on a 5-point scale: (1) Not at All, (2) Somewhat, (3) Moderately, (4) Very, and (5) Completely, to indicate their perceptions of the quantity of a specific property existing in their organization and unit, e.g., “How committed are advisers in your department to talking with advisees about key principles of research integrity?” A sixth response option, “No Basis for Judging,” is also offered to avoid forcing a response about a specific perception where none exists; we coded this response as missing in our analyses. The instrument includes two items assessing global perceptions of the institutional environment and two items assessing global perceptions of one’s department/program. These four items were averaged to form an a priori, relatively generic global perception scale. The remaining items were included in the analyses reported below to create additional construct-specific subscales.
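The coding rules above can be illustrated with a small sketch. It assumes “No Basis for Judging” is stored as the numeric code 6 (a hypothetical convention) and, because the text does not state a minimum number of answered items for the four-item global perception scale, it averages whichever global items a respondent answered.

```python
import numpy as np

NO_BASIS = 6  # assumed numeric code for "No Basis for Judging"


def recode_sorc(responses):
    """Convert raw codes to 1-5 ratings, treating 'No Basis for Judging' as missing."""
    r = np.asarray(responses, dtype=float)
    r = np.where(r == NO_BASIS, np.nan, r)
    if np.any((r < 1) | (r > 5)):  # NaN compares False, so missing values pass
        raise ValueError("ratings must be 1-5 or the No Basis code")
    return r


def global_perception(responses):
    """Average of the four global items (columns), using whichever items were answered."""
    r = recode_sorc(responses)
    answered = ~np.isnan(r)
    n = answered.sum(axis=1)
    total = np.where(answered, r, 0.0).sum(axis=1)
    with np.errstate(invalid="ignore"):  # 0/0 -> NaN when no global item was answered
        return total / n
```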

Organizational justice

Organizational justice is a concept pertaining to individuals’ perceptions about the “fairness” of decision-making and resource distribution outcomes within organizations (Adams, 1965; Pfeffer and Langton, 1993; Folger and Cropanzano, 1998; Colquitt, 2001; Clayton and Opotow, 2003; Tyler and Blader, 2003). In the context of academic research in the U.S., the most salient decision-making and distribution processes are those of tenure and promotion committees, regulatory oversight bodies (e.g., IRBs and Institutional Animal Care and Use Committees, or IACUCs), peer review committees for research grant proposals, and peer review of manuscripts. Five organizational justice composites, developed from previously validated measures (Colquitt, 2001), assessed respondents’ perceptions that procedural and distributive justice principles guided decisions made in their departments, universities, and regulatory oversight review boards (IRBs and IACUCs), and with respect to peer review of manuscripts and grant applications. Each composite was computed as the mean of respondents’ agreement ratings (1 = strongly disagree to 7 = strongly agree) across the items in the composite, with higher values indicating perceptions of more justice. Perceptions of justice in these domains can be viewed as evidence of favorable working conditions and environments. Because of this conceptual overlap, organizational justice should be positively related to organizational research climate, with stronger associations among measures assessing similar environments (e.g., department or university, as opposed to environments outside one’s local institution).
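A sketch of the composite scoring, together with the kind of unadjusted (bivariate) regression coefficient reported in the abstract, follows. It assumes that missing items are skipped when averaging and leaves the direction of the regression to the caller; both are assumptions rather than details given in this section, and the function names are hypothetical.

```python
import numpy as np


def justice_composite(items):
    """Mean agreement (1 = strongly disagree ... 7 = strongly agree) across a composite's items."""
    a = np.asarray(items, dtype=float)  # (n_respondents, k_items), NaN = missing
    if np.any((a < 1) | (a > 7)):
        raise ValueError("agreement ratings must lie on the 1-7 scale")
    answered = ~np.isnan(a)
    with np.errstate(invalid="ignore"):  # NaN when no item in the composite was answered
        return np.where(answered, a, 0.0).sum(axis=1) / answered.sum(axis=1)


def unadjusted_slope(x, y):
    """Bivariate OLS slope of y on x (no covariates), i.e. cov(x, y) / var(x)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    ok = ~(np.isnan(x) | np.isnan(y))  # complete pairs only
    x, y = x[ok], y[ok]
    return float(np.cov(x, y)[0, 1] / np.var(x, ddof=1))
```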

Measures of professional-life classifications

Measures of professional status included years since doctoral degree, whether the doctoral degree was received in the US, type(s) of doctoral degree earned, academic rank, tenure status and department of primary affiliation.

Demographic classification measures

Measures of demographic characteristics included gender, race and ethnicity.

Statistical analysis

Preparatory analyses

Respondents who provided valid responses for fewer than half of the preliminary set of SORC items were excluded from these analyses (n = 206). The remaining sample was split into random halves by placing respondents with an even random sequential identifier in an exploratory sample (n = 533) and an odd identifier in a confirmatory sample (n = 528). Measures of central tendency and dispersion were calculated for each of the 42 preliminary SORC items, and Pearson correlation coefficients were computed to assess the strength of the bivariate relationships among items.
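The exclusion rule and the even/odd split can be sketched with NumPy masks, assuming item responses are held in a respondents-by-items array with `NaN` for missing or “No Basis for Judging” answers (a hypothetical layout). Respondents answering exactly half of the items are retained, matching the “fewer than half” exclusion wording.

```python
import numpy as np


def prepare_samples(items, seq_id):
    """Return boolean masks for the exploratory and confirmatory halves.

    items: (n_respondents, n_items) array of 1-5 ratings with NaN for missing;
    seq_id: the sequential identifier assigned to each set of responses.
    """
    items = np.asarray(items, dtype=float)
    seq_id = np.asarray(seq_id)
    n_valid = (~np.isnan(items)).sum(axis=1)
    keep = n_valid >= items.shape[1] / 2    # exclude valid answers to fewer than half
    exploratory = keep & (seq_id % 2 == 0)   # even identifiers
    confirmatory = keep & (seq_id % 2 == 1)  # odd identifiers
    return exploratory, confirmatory
```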

Exploratory analyses

The goal of the exploratory analyses was to reduce the number of SORC items to those needed to construct a minimum number of conceptually and empirically distinct subscales that would each demonstrate conceptual and empirical internal consistency. The first step in meeting this objective was to perform an exploratory factor analysis (EFA) using data from the preliminary set of 42 SORC items without any specification as to the number of factors that should be extracted or which items should indicate each factor. In a second exploratory factor model, each item was assigned to the factor on which it had the highest loading in the EFA. The estimation of this model began an iterative process in which the results of each estimated factor model informed the specifications of the next. This process continued until a satisfactory factor model was obtained. The criteria for a good-fitting model were that model χ2 / df < 2, comparative fit index (CFI) > .90, root mean square error of approximation (RMSEA) < .08, and standardized root mean square residual (SRMR) < .10 (Vandenberg and Lance, 2000).
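The fit criteria above can be checked mechanically once a model has been estimated. In this sketch, RMSEA and CFI are computed from the model and baseline (independence) χ² statistics using their standard definitions, while SRMR is taken as given from the estimation software. Software differs on whether RMSEA uses N or N − 1 in the denominator; the N − 1 version is assumed here, and the example statistics are invented.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Root mean square error of approximation (N - 1 convention; some software uses N)."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2: float, df: int, chi2_base: float, df_base: int) -> float:
    """Comparative fit index relative to the independence (baseline) model."""
    d_model = max(chi2 - df, 0.0)
    d_base = max(chi2_base - df_base, d_model)
    return 1.0 if d_base == 0 else 1.0 - d_model / d_base


def meets_fit_criteria(chi2: float, df: int, cfi_value: float,
                       rmsea_value: float, srmr: float) -> bool:
    """Cutoffs stated in the text: chi2/df < 2, CFI > .90, RMSEA < .08, SRMR < .10."""
    return chi2 / df < 2 and cfi_value > 0.90 and rmsea_value < 0.08 and srmr < 0.10


# Invented example statistics, for illustration only.
chi2, df, n, chi2_base, df_base, srmr = 90.0, 50, 501, 1050.0, 60, 0.05
r, c = rmsea(chi2, df, n), cfi(chi2, df, chi2_base, df_base)
print(f"RMSEA={r:.3f} CFI={c:.3f} acceptable={meets_fit_criteria(chi2, df, c, r, srmr)}")
```

Because every cutoff is a strict inequality, a model with χ2 / df exactly equal to 2 fails the first criterion.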

The items in each factor model were specified to load on one latent factor (exceptions noted), their relationships with other items and factors were fixed at zero, and item residual correlations were fixed at zero. Structural correlations among latent factors were freely estimated. Decisions about improving the fit of each estimated model were guided by modification indices, residual variances, the number of items per factor and our conceptual understanding of each factor. Modification indices were used to identify latent factors that could be combined, items to move to a different factor, and cross-loading items to remove from the model. Items were also removed from the model if they were not predicted well by any of the latent factors or if their removal would not meaningfully reduce the internal consistency of their latent factor. Finally, when empirical evidence was ambiguous with respect to what modifications would improve model fit the most, items were associated with latent factors in a manner that would improve the conceptual clarity of the factor.

The initial EFA was estimated specifying maximum likelihood (ML) estimation, principal components extraction and varimax rotation. ML estimation was chosen so that the analyzed covariance matrix would include all available data from respondents who answered at least half of the SORC items. The remaining factor models in the exploratory and confirmatory analyses were estimated, again specifying maximum likelihood estimation and including all observations in the covariance matrix. It should be noted that although there was a hierarchical structure to the data (i.e., respondents nested within academic departments) there were only, on average, 5.3 respondents per department (IQR = 3-7). The small cluster size made it unlikely that a between-departments factor model could be accurately estimated, so the hierarchical structure was not incorporated into the analyses(Peugh and Enders, 2010).

Confirmatory Factor Analysis

A confirmatory factor model assessed the adequacy of model fit when the solution obtained from the final exploratory factor model was applied to the confirmatory data. The confirmatory model was specified to have precisely the same constraints and estimated paths as the final exploratory model (e.g., same latent factors, indicated by the same items), and no modifications were made to improve fit. The same criteria were used to assess the adequacy of the model fit (i.e., χ²/df < 2, CFI > .90, RMSEA < .08, SRMR < .10).

Scale Construction and Reliability

SORC subscales representing each latent factor in the CFA were calculated as the average rating of items loading on the factor when the respondent provided ratings for at least half of the items in the subscale. Several considerations went into the decision to construct each subscale as the average of item ratings rather than as a factor score. There were no a priori expectations as to the relative importance of each item, and weighting each item equally most clearly reflected this lack of expectation. Using an average score avoids concerns about lack of generalizability should the factor loadings obtained in this sample not replicate in other samples. Practically, calculating a factor score may present a barrier to use, and the relative ease in calculating an average may increase adoption of the SORC. We acknowledge that the terms scale and subscale are often used to refer to composites derived from factor scores rather than average ratings. We make colloquial use of these terms in the interest of simplicity without intending to convey the use of factor scores or other weighting schemes in generating composite SORC measures.
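The scoring rule just described (unweighted mean, computed only when at least half of a subscale’s items were answered) can be sketched in Python as follows; `subscale_score` is a hypothetical helper, and missing ratings are assumed to be coded as `None`.

```python
from statistics import mean
from typing import Optional, Sequence


def subscale_score(ratings: Sequence[Optional[float]]) -> Optional[float]:
    """Unweighted mean of answered items; None if fewer than half were answered."""
    answered = [r for r in ratings if r is not None]   # None marks a skipped item
    if not answered or 2 * len(answered) < len(ratings):   # require answered >= k / 2
        return None
    return mean(answered)


# Hypothetical responses to a 4-item subscale (1-5 scale).
print(subscale_score([4, 5, None, None]))     # 4.5  (2 of 4 answered: exactly half)
print(subscale_score([4, None, None, None]))  # None (1 of 4 answered)
```

Because every answered item gets the same weight, the score stays on the original 1-5 response scale, which is part of what makes averages easy to interpret and reuse.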

The internal consistency of each subscale was calculated twice, once as Cronbach’s α and once from the standardized factor loadings of the confirmatory factor model, reliability $= (\sum_i \lambda_i)^2 / [(\sum_i \lambda_i)^2 + \sum_i \theta_i]$, where $\lambda_i$ is the standardized loading of item $i$ and $\theta_i$ is its residual variance, to ensure that the reliability estimates did not differ as a function of whether they were calculated from raw data or from factor loadings. Test-retest reliability was assessed as the Pearson correlation between each subscale’s baseline and retest scores. Intraclass correlations were calculated for each subscale to quantify the proportions of variance attributable to the department (within university) and to the university in which the respondent worked.
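The loading-based reliability formula can be sketched in Python, assuming standardized loadings so that each residual variance is θ_i = 1 − λ_i², and ignoring residual covariances (the final model actually let the 1h and 1i residuals correlate). With the CFA loadings for Institutional RCR Resources from Table 2 it returns about .88, the value listed for that subscale in Table 3; `composite_reliability` is a hypothetical name.

```python
def composite_reliability(loadings: list) -> float:
    """(sum of loadings)^2 / [(sum of loadings)^2 + sum of residual variances].

    Assumes standardized loadings, residual variances theta_i = 1 - loading_i**2,
    and no residual covariances.
    """
    s = sum(loadings)
    theta = sum(1 - lam ** 2 for lam in loadings)
    return s ** 2 / (s ** 2 + theta)


# CFA loadings for the six Institutional RCR Resources items (1d, 1f, 1g, 1h, 1i, 1k), Table 2.
rcr_cfa = [0.72, 0.76, 0.76, 0.72, 0.74, 0.71]
print(round(composite_reliability(rcr_cfa), 2))  # 0.88
```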

Discriminant validity assessment

General linear mixed models were estimated to separately predict perceptions of organizational justice in each of five distinct settings from each SORC subscale. The organizational justice construct was selected for these analyses because the climate of an environment is likely to temper perceptions of fairness in the rules that guide decisions and in their application. Therefore, organizational climate and organizational justice should be empirically related although conceptually distinct. The five settings were selected to check whether the SORC subscales discriminated between climates in a department, an institution and the research environment at large. It was expected that institution-based SORC subscales would most strongly predict university- and IRB/IACUC-based justice, that department-based SORC subscales would most strongly predict department-based justice, and that none of the SORC subscales would strongly predict justice with respect to manuscript or grant review processes. Each model was estimated by restricted maximum likelihood, specifying an unstructured covariance matrix and a random intercept for each departmental cluster. The fixed slope of each model represented the average bivariate relationship between the SORC subscale and organizational justice.

Results

The sampling frame consisted of N = 2500 faculty researchers and N = 336 post-doctoral fellows. It was reduced to a total of N = 2543 once undeliverable and ineligible elements were removed. There were 993 total website hits, of which 13 had recorded data that appeared to duplicate other website hits and 29 had no data recorded in the database, leaving n = 951 unique web respondents. In addition, 342 mailed surveys were returned, but 26 appeared to duplicate website data, leaving n = 316 unique mailed survey respondents. These N = 1267 web and mailed survey respondents, representing roughly 50% of the eligible sample, were the basis of the analyses reported here.
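The respondent counts above follow from simple subtraction. A short Python check of the arithmetic, using only the counts given in the text (variable names are illustrative):

```python
# Counts reported in the text.
web_hits, web_duplicates, web_empty = 993, 13, 29
mailed_returned, mailed_duplicates = 342, 26
eligible = 2543  # sampling frame after removing undeliverable/ineligible elements

web_unique = web_hits - web_duplicates - web_empty   # unique web respondents
mailed_unique = mailed_returned - mailed_duplicates  # unique mailed respondents
respondents = web_unique + mailed_unique
response_rate = respondents / eligible

print(web_unique, mailed_unique, respondents, f"{response_rate:.1%}")  # 951 316 1267 49.8%
```

The 1,267 respondents out of 2,543 eligible sample members correspond to a response rate of about 49.8%.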

Personal and professional characteristics of the survey respondents are presented in Table 1. Because some respondents did not complete all sections of the survey, the number of valid observations is displayed for each variable. As shown, the respondents tended to be non-Hispanic White men who earned their PhD about 20 years ago from an institution in the United States and were currently in tenure track positions at the rank of associate or full professor.

Table 1.

Personal and professional characteristics of N=1267 survey respondents.

| Characteristic | n valid | M (SD) / % |
|---|---|---|
| Male | 957 | 60.2% |
| Hispanic | 963 | 3.2% |
| Race | 946 | |
| White | | 75.6% |
| Asian | | 13.7% |
| African American | | 1.3% |
| Other | | 1.2% |
| Decline response | | 8.3% |
| Years since doctoral degree | 942 | 20.2 (11.7) |
| First doctoral degree in US | 965 | 80.0% |
| Earned doctoral degree(s) | 1191 | |
| PhD | | 73.0% |
| MD | | 14.9% |
| MD / PhD | | 6.8% |
| Other | | 5.4% |
| Department type | 1267 | |
| Basic sciences | | 48.3% |
| Medicine | | 21.1% |
| Applied health and sciences | | 20.0% |
| Missing | | 10.6% |
| Academic rank | 1176 | |
| Professor | | 37.5% |
| Associate professor | | 24.9% |
| Assistant professor | | 23.4% |
| Post-doctoral | | 9.8% |
| Other | | 4.4% |
| Tenure status | 1150 | |
| Tenured | | 48.2% |
| Not on tenure track | | 33.9% |
| Not yet tenured | | 17.9% |

We noted some differences in the level of missing responses to SORC questions across sample subgroups. Specifically, missing SORC data were more common among assistant professors than among full professors (p = .002), and among non-tenure-track faculty than among tenured faculty (p = .0002). Missing responses were less common among those whose research is reviewed by an IACUC (vs. not) (p = .05). In contrast, we did not observe significant differences in missing responses by type of highest degree (PhD vs. MD vs. Other) or by whether one’s research is reviewed by an IRB (vs. not) or by a biosafety review body (vs. not).

The covariance matrix implied by the final EFA was an excellent fit to the exploratory data (χ²(325) = 612.9, χ²/df = 1.9, CFI = .96, RMSEA = 0.04, SRMR = 0.04). This factor model consisted of 7 correlated latent factors, 2 indicated by items that assessed climate in the respondent’s institution and 5 by items specific to their department or program. Table 2 summarizes the items that loaded on each of the 7 latent factors as well as the descriptive statistics and standardized factor loadings for each of the 28 items that were retained. Each item was specified to load on only one latent factor, but four sets of item residuals (i.e., 1i-1h, 2t-2f, 2i-2d, 2l-2r) were allowed to correlate (range 0.25-0.45) to improve model fit. The correlations among the latent factors were all statistically significant (p < .001, range 0.32-0.85, average = 0.61).

Table 2.

Descriptive statistics and standardized factor loadings from the EFA and CFA models for 28 SORC items and 7 latent factors.

| Factor / item | n | M (SD) | EFA loading | CFA loading |
|---|---|---|---|---|
| Institutional Regulatory Quality | | | | |
| 1c. How respectful to researchers are the regulatory committees or boards that review the type of research you do (e.g. IRB, IACUC, etc.)? | 995 | 3.90 (.87) | .83 | .82 |
| 1e. How well do the regulatory committees or boards that review your research (e.g. IRB, IACUC, etc.) understand the kind of research you do? | 1000 | 3.55 (.96) | .76 | .69 |
| 1j. How fair to researchers are the regulatory committees or boards that review the type of research you do (e.g. IRB, IACUC, etc.)? | 976 | 3.89 (.82) | .85 | .87 |
| Institutional RCR Resources | | | | |
| 1d. How effectively do the available educational opportunities at your university teach about responsible research practices (e.g. lectures, seminars, web-based courses, etc.)? | 1021 | 3.74 (.92) | .71 | .72 |
| 1f. How accessible are individuals with appropriate expertise that you could ask for advice if you had a question about research ethics? | 1001 | 3.93 (.94) | .75 | .76 |
| 1g. How accessible are your university’s policies / guidelines that relate to responsible research practices? | 1025 | 4.05 (.85) | .80 | .76 |
| 1h. How committed are the senior administrators at your university (e.g. deans, chancellors, vice presidents, etc.) to supporting responsible research? | 968 | 4.07 (.93) | .72 | .72 |
| 1i. How effectively do the senior administrators at your university (e.g. deans, chancellors, vice presidents, etc.) communicate high expectations for research integrity? | 987 | 3.74 (1.06) | .69 | .74 |
| 1k. How confident are you that if you needed to report a case of suspected research misconduct, you would know where to turn to determine what procedures to follow? | 1015 | 3.86 (1.05) | .73 | .71 |
| Departmental / Program Integrity Norms | | | | |
| 2g. How consistently do people in your department obtain permission or give due credit when using another’s words or ideas? | 847 | 3.83 (.91) | .71 | .70 |
| 2o. How consistently do research practices in your department follow established institutional policies? | 979 | 4.15 (.76) | .78 | .77 |
| 2p. How valued is honesty in proposing, performing, and reporting research in your department? | 995 | 4.19 (.86) | .84 | .80 |
| 2s. How committed are people in your department to maintaining data integrity and data confidentiality? | 957 | 4.12 (.79) | .79 | .75 |
| Departmental / Program Integrity Socialization | | | | |
| 2h. How committed are advisors in your department to talking with advisees about key principles of research integrity? | 916 | 3.66 (1.00) | .79 | .75 |
| 2j. How effectively are junior researchers socialized about responsible research practices? | 939 | 3.49 (1.01) | .80 | .80 |
| 2k. How consistently do administrators in your department (e.g., chairs, program heads) communicate high expectations for research integrity? | 1006 | 3.50 (1.19) | .81 | .80 |
| 2m. How consistently do advisors/supervisors communicate to their advisees/supervisees clear performance expectations related to intellectual credit? | 900 | 3.39 (1.02) | .76 | .72 |
| Departmental / Program Integrity Inhibitors – Reverse Coded | | | | |
| 2d. How difficult is it to conduct research in a responsible manner because of insufficient access to human resources such as statistical expertise, administrative or technical staff? | 986 | 3.75 (1.14) | .62 | .55 |
| 2f. How guarded are people in their communications with each other out of concern that someone else will "steal" their ideas? | 970 | 3.75 (1.10) | .48 | .64 |
| 2i. How difficult is it to conduct research in a responsible manner because of insufficient access to material resources such as space, equipment, or technology? | 1018 | 3.84 (1.10) | .54 | .53 |
| 2l. How true is it that pressure to publish has a negative effect on the integrity of research in your department? | 923 | 4.14 (1.12) | .66 | .74 |
| 2r. How true is it that pressure to obtain external funding has a negative effect on the integrity of research in your department? | 923 | 4.09 (1.16) | .73 | .75 |
| 2t. How true is it that people in your department are more competitive with one another than they are cooperative? | 999 | 4.01 (1.12) | .66 | .72 |
| Departmental / Program Advisor-Advisee Relations | | | | |
| 2n. How fairly do advisors/supervisors treat advisees/supervisees? | 961 | 3.73 (.81) | .87 | .85 |
| 2q. How respectfully do advisors/supervisors treat advisees/supervisees? | 981 | 3.86 (.83) | .90 | .88 |
| 2u. How available are advisors/supervisors to their advisees/supervisees? | 975 | 3.76 (.82) | .69 | .71 |
| Departmental / Program Expectations | | | | |
| 2c. How fair are your department’s expectations of researchers for obtaining external funding? | 984 | 3.53 (.98) | .80 | .79 |
| 2e. How fair are your department’s expectations with respect to publishing? | 1019 | 3.75 (.87) | .88 | .85 |

Note: All standardized factor loadings are significant at p<.001. Bold denotes factor loadings ≥ .60, bold and underlined denotes factor loadings ≥ .85

The confirmatory factor analysis (CFA) estimated on the second half of the data also fit well by the CFI, RMSEA and SRMR criteria (χ²(325) = 693.2, χ²/df = 2.1, CFI = 0.95, RMSEA = 0.05, SRMR = 0.04), although χ²/df slightly exceeded the < 2 criterion. The final column in Table 2 shows that the factor loadings obtained from the CFA were very similar to those observed in the final EFA model. The correlations among item residuals (range 0.29-0.52) and latent factors (range 0.20-0.85, average = 0.56) were again statistically significant (p < .001) and similar in magnitude to those estimated by the EFA.

Seven SORC subscales were calculated as the mean of responses to the items loading on each latent factor identified in the EFA and CFA models (Table 3). Each subscale demonstrated acceptable internal consistency, whether calculated from the raw responses (Cronbach’s α = 0.81 for Departmental / Program Expectations to α = 0.87 for Institutional RCR Resources) or from the CFA factor loadings (reliability = 0.80 for Institutional Regulatory Quality to 0.88 for Institutional RCR Resources). The subscales also demonstrated adequate test-retest reliability, from Pearson r = 0.72 (Departmental / Program Advisor-Advisee Relations) to r = 0.83 (Institutional RCR Resources; Departmental / Program Expectations). There was significant systematic variation in subscale scores across departments (ICCdept = 0.07-0.21) and, to a much smaller degree, across universities (ICCuniv = 0.00-0.03), consistent with the expectation that the subscales are sensitive to variation in climate from one local environment to the next. Table 3 also presents descriptive statistics, internal consistency and test-retest reliability, and ICCs for the a priori defined SORC Global Climate of Integrity composite.
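The paper does not specify how the ICCs were estimated; mixed-model variance components are one common approach. As a simpler, swapped-in illustration only, the one-way ANOVA estimator ICC(1) = (MSB − MSW) / (MSB + (k − 1)·MSW), for a single grouping level with k respondents per group, can be sketched in Python. `icc1` is a hypothetical helper, the balanced design is an assumption, and the data are invented.

```python
from statistics import mean


def icc1(groups: list) -> float:
    """One-way ANOVA ICC(1) for equal-sized groups; negative estimates truncated at 0."""
    k = len(groups[0])                       # respondents per group (balanced design assumed)
    g = len(groups)                          # number of groups, e.g. departments
    grand = mean(x for grp in groups for x in grp)
    ss_between = k * sum((mean(grp) - grand) ** 2 for grp in groups)
    ss_within = sum((x - mean(grp)) ** 2 for grp in groups for x in grp)
    ms_between = ss_between / (g - 1)
    ms_within = ss_within / (g * (k - 1))
    return max(0.0, (ms_between - ms_within) / (ms_between + (k - 1) * ms_within))


# Invented subscale scores for 3 departments with 3 respondents each.
departments = [[3, 4, 5], [4, 5, 6], [2, 3, 4]]
print(icc1(departments))  # 0.4
```

Negative estimates, which arise when between-group differences are smaller than expected by chance, are truncated at zero here; this is a convention, not something the text states.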

Table 3.

Descriptive statistics (n, mean, standard deviation), internal consistency (Cronbach’s α, CFA-based reliability) and test-retest (Pearson r) coefficients, and university- and department-level intraclass correlations (ICC) for SORC subscales and organizational justice measures in a sample of N = 1267 academic scientists.

| Measure | n | M | SD | α | rel | r | ICCuniv | ICCdept |
|---|---|---|---|---|---|---|---|---|
| SORC subscales (range 1-5) | | | | | | | | |
| Institutional Regulatory Quality | 1048 | 3.78 | .78 | .84 | .80 | .78 | .000 | .092 |
| Institutional RCR Resources | 1104 | 3.88 | .77 | .87 | .88 | .83 | .017 | .108 |
| Dept / Prog Integrity Norms | 1010 | 4.08 | .69 | .85 | .85 | .77 | .000 | .212 |
| Dept / Prog Socialization | 999 | 3.50 | .90 | .86 | .86 | .81 | .001 | .130 |
| Dept / Prog Integrity Inhibitors | 1038 | 3.92 | .83 | .83 | .80 | .81 | .028 | .072 |
| Dept / Prog Advisor-Advisee Relations | 988 | 3.78 | .72 | .85 | .86 | .72 | .000 | .111 |
| Dept / Prog Expectations | 1057 | 3.64 | .87 | .81 | .82 | .83 | .005 | .116 |
| Global Climate of Integrity | 1114 | 4.21 | .68 | .89 | n/a | .86 | .000 | .123 |
| Organizational Justice domains (range 1-7) | | | | | | | | |
| University | 947 | 3.90 | 1.39 | .93 | -- | -- | .024 | .045 |
| Department | 965 | 4.36 | 1.39 | .91 | -- | -- | .019 | .063 |
| IRB/IACUC review | 901 | 5.57 | 1.10 | .94 | -- | -- | .000 | .089 |
| Manuscript review | 959 | 4.64 | 1.12 | .94 | -- | -- | .000 | .030 |
| Grant review | 728 | 4.35 | 1.30 | .96 | -- | -- | .000 | .052 |

Note. Test-retest reliability (r) calculated in test-retest sample at one medical school that was not included in the study sampling frame.

The patterns of relationships between the SORC subscales and organizational justice in five domains demonstrated that the SORC scales clearly discriminate between perceptions of intramural (university, department, IRB/IACUC) and extramural (manuscript, grant review) research climate, and to a lesser extent between institutional (university, IRB/IACUC) and departmental climates (see Table 4). Six of the seven SORC subscales, and the Global Climate of Integrity composite, were positively related to, and not redundant with, organizational justice perceptions in one’s university (unadjusted regression coefficients bs = 0.63-0.79). The Integrity Inhibitors subscale was the exception to this pattern, being only weakly related to university organizational justice (b = 0.27). The institution-based SORC scales were more modestly related to departmental organizational justice (bs = 0.48, 0.67) than were the department-based SORC scales (bs = 0.72-0.95). Integrity Inhibitors was again less strongly related to departmental organizational justice (b = 0.54) than the other department-based SORC subscales. The department-based Integrity Norms, Advisor-Advisee Relations and Expectations scales were so strongly related to departmental organizational justice as to be redundant with it. Similarly, the Regulatory Quality subscale was highly redundant with perceptions of justice with respect to IRB/IACUC review at the institution (b = 0.90), and was the only SORC subscale to demonstrate such a strong relationship with this domain of organizational justice. The SORC subscales were, relatively speaking, only weakly related to perceptions of justice with respect to manuscript (bs = 0.13-0.39) or grant (bs = 0.23-0.38) reviews, both of which occur outside the respondents’ departments and institutions.

Table 4.

Unadjusted regression coefficients estimated in linear mixed models that separately predicted organizational justice in five domains from SORC subscales.

| SORC subscale | University | Department | IRB/IACUC review | Manuscript review | Grant review |
|---|---|---|---|---|---|
| Institutional Regulatory Quality | .698 | .481 | .895 | .392 | .379 |
| Institutional RCR Resources | .787 | .674 | .587 | .324 | .380 |
| Dept/Prog Integrity Norms | .736 | .891 | .562 | .356 | .374 |
| Dept/Prog Socialization | .634 | .716 | .351 | .250 | .230 |
| Dept/Prog Integrity Inhibitors | .273 | .535 | .308 | .127 | .225 |
| Dept/Prog Advisor-Advisee Relations | .783 | .947 | .460 | .312 | .349 |
| Dept/Prog Expectations | .701 | .917 | .345 | .288 | .377 |
| Global Climate of Integrity | .674 | .810 | .521 | .327 | .343 |

Note. Bold denotes coefficients ≥ .60, bold and underlined denotes coefficients ≥ .85
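The coefficient ranges quoted in the text can be recomputed directly from Table 4. A minimal Python sketch; the dictionary holds the seven subscale rows of Table 4 (the Global Climate of Integrity composite is omitted), and `coefficient_range` is a hypothetical helper.

```python
# Unadjusted coefficients for the seven SORC subscales, copied from Table 4.
DOMAINS = ["University", "Department", "IRB/IACUC review", "Manuscript review", "Grant review"]
TABLE4 = {
    "Institutional Regulatory Quality":    [0.698, 0.481, 0.895, 0.392, 0.379],
    "Institutional RCR Resources":         [0.787, 0.674, 0.587, 0.324, 0.380],
    "Dept/Prog Integrity Norms":           [0.736, 0.891, 0.562, 0.356, 0.374],
    "Dept/Prog Socialization":             [0.634, 0.716, 0.351, 0.250, 0.230],
    "Dept/Prog Integrity Inhibitors":      [0.273, 0.535, 0.308, 0.127, 0.225],
    "Dept/Prog Advisor-Advisee Relations": [0.783, 0.947, 0.460, 0.312, 0.349],
    "Dept/Prog Expectations":              [0.701, 0.917, 0.345, 0.288, 0.377],
}


def coefficient_range(domain: str, exclude: frozenset = frozenset()) -> tuple:
    """Smallest and largest coefficient in one justice domain, skipping excluded rows."""
    col = DOMAINS.index(domain)
    values = [row[col] for name, row in TABLE4.items() if name not in exclude]
    return min(values), max(values)


inhibitors = frozenset({"Dept/Prog Integrity Inhibitors"})
institutional = frozenset({"Institutional Regulatory Quality", "Institutional RCR Resources"})
print(coefficient_range("University", inhibitors))                  # (0.634, 0.787)
print(coefficient_range("Department", inhibitors | institutional))  # (0.716, 0.947)
print(coefficient_range("Manuscript review"))                       # (0.127, 0.392)
```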

Discussion

The SORC is the first full-scale survey designed to assess perceptions of those engaged in academic research about the organizational environment for responsible research practices both in their general organizational setting and in their specific affiliated working group or department. With the evidence presented here, we have established that the SORC is a measure of organizational research climate demonstrating good internal and external reliability and that it demonstrates construct and discriminant validity relative to existing measures of organizational justice.

The SORC fills a need identified in the 2002 IOM report, “Integrity in Scientific Research: Creating an Environment that Promotes Responsible Conduct”(Committee on Assessing Integrity in Research Environments (U.S.) et al., 2002), yielding an efficient tool that can be used for: baseline institutional self-assessment to ensure local organizational climates are conducive to ethical, professional, and sound research practices; monitoring the organizational research climate over time; and raising awareness among respondents about responsible research practices. We also hope that the tool will be useful for judging the impact of initiatives to sustain or improve the organizational environment for research integrity, though the utility of the SORC for this purpose is yet to be demonstrated.

The associations observed between the SORC subscales and measures of organizational justice suggest that, to some extent, the two instruments measure similar aspects of organizational environments. One advantage of the SORC measures over the organizational justice measures is that they assess more specific topic areas and aspects of organizational environments that should be subject to institutional policy. To the extent that SORC scales identify organizational units in which good practices are taking place, institutional leaders might attempt to cultivate and disseminate these good practices more broadly throughout their institutions. Conversely, the SORC scales also give institutional leaders specific topic areas to target where organizational units may be underperforming.

As a tool for institutional self-assessment, the SORC can be used to generate comparative data about the perceived “performance” of sub-units (e.g. departments, centers, programs) within an institution. However, we recognize that the most salient comparisons may not be between departments across disparate fields or disciplines within one institution, but within fields across institutions. Thus, we believe that even greater value could be derived from the establishment of a national repository of appropriately de-identified data that would allow for the creation of national norms for the SORC scales. The scores from a local department of cellular biology could then be compared to an aggregation of scores from other departments of cellular biology. By affording such comparisons, low-performing sites that are not aware of their relative performance might become motivated to improve. Perhaps more importantly, being able to identify high-performing sites could have obvious benefits for an institution that wishes to promote the sharing and emulation of best practices.

Some limitations of our work must be acknowledged. The 50% response rate raises some concern about non-response bias but does not guarantee it (Groves, 2006). The present response rate is similar to that obtained in a similar survey by the Council of Graduate Schools’ Project on Scholarly Integrity (http://www.scholarlyintegrity.org/ShowContent.aspx?id=402), similar to our own prior work on research integrity conducted in two samples of academic scientists (Martinson et al., 2005, 2006), and higher than in a previous study we conducted in a similar sample of biomedical and social science researchers, in which a thorough investigation did not find consistent evidence of response bias (Crain et al., 2008; Martinson et al., 2010). Sample limitations, in terms of the number of individuals responding per department, precluded hierarchical factor analyses. The evidence presented in this report is derived from a national sample of faculty and postdoctoral fellows employed at the nation’s top academic health centers. Thus, it does not address whether the SORC is a valid and reliable measure of organizational research climates in a wider array of organizational types, disciplines, or statuses of individuals. This limitation is somewhat mitigated by the fact that, in the recent Project on Scholarly Integrity sponsored by the Council of Graduate Schools (http://www.scholarlyintegrity.org/), a similar version of the SORC was used in large samples of faculty, postdoctoral fellows and graduate students across a very broad array of fields in a smaller number of universities and yielded similar subscales with similarly high internal reliabilities (unpublished manuscript currently in development).

Finally, of obvious interest is the question of whether our measures of organizational research climate are associated with the behavior of research personnel in these organizations. We present just such analyses in a companion article in this issue (Crain, Martinson and Thrush, in this issue).

We have posted a User’s Manual (https://sites.google.com/site/surveyoforgresearchclimate/) for readers interested in learning more about using the SORC, including considerations in defining appropriate sampling frames, survey fielding considerations, data cleaning and report generation.

Acknowledgments

The authors wish to acknowledge the excellent work of Shannon Donald in several key aspects contributing to this manuscript including project coordination and sample frame development.

Funding/Support: This research was supported by Award Number R21-RR025279 from the NIH National Center for Research Resources and the DHHS Office of Research Integrity through the collaborative Research on Research Integrity Program.

Footnotes

Other disclosures: None.

Ethical approval: The study protocol was approved by the Regions Hospital Institutional Review Board, the oversight body with responsibility for all research conducted at HealthPartners Institute for Education and Research, and by the University of Arkansas for Medical Sciences Institutional Review Board.

Disclaimers: The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Research Resources, the National Institutes of Health, or the Office of Research Integrity. There are no conflicts of interest for any of the coauthors of this manuscript.

Previous presentations: None.

Contributor Information

Dr. Brian C. Martinson, HealthPartners Institute for Education and Research, Minneapolis, Minnesota.

Dr. Carol R. Thrush, Office of Educational Development, University of Arkansas for Medical Sciences, Little Rock, Arkansas.

Dr. A. Lauren Crain, HealthPartners Institute for Education and Research, Minneapolis, Minnesota.

References

- Adams JS. Inequity in social exchange. In: Berkowitz L, editor. Advances in experimental social psychology. Academic Press; New York: 1965. pp. 267–299.

- Anderson MS, Horn AS, Risbey KR, Ronning EA, De Vries R, Martinson BC. What Do Mentoring and Training in the Responsible Conduct of Research Have To Do with Scientists’ Misbehavior? Findings from a National Survey of NIH-Funded Scientists. Academic Medicine. 2007;82:853–860. doi: 10.1097/ACM.0b013e31812f764c.

- Antes AL, Brown RP, Murphy ST, Waples EP, Mumford MD, Connelly S, Devenport LD. Personality and Ethical Decision-Making in Research: The Role of Perceptions of Self and Others. Journal of Empirical Research on Human Research Ethics. 2007;2:15–34. doi: 10.1525/jer.2007.2.4.15.

- Arnaud A. Conceptualizing and Measuring Ethical Work Climate: Development and Validation of the Ethical Climate Index. Business & Society. 2010;49:345–358.

- Clayton S, Opotow S. Justice and identity: changing perspectives on what is fair. Personality and Social Psychology Review. 2003;7:298–310. doi: 10.1207/S15327957PSPR0704_03.

- Colquitt JA. On the dimensionality of organizational justice: A construct validation of a measure. Journal of Applied Psychology. 2001;86:386–400. doi: 10.1037/0021-9010.86.3.386.

- Committee on Assessing Integrity in Research Environments (U.S.) National Research Council (U.S.) United States. Office of the Assistant Secretary for Health. Office of Research Integrity. Integrity in Scientific Research: Creating an Environment that Promotes Responsible Conduct. National Academies Press; Washington, D.C: 2002.

- Council of Canadian Academies. The Expert Panel on Research Integrity. Honesty, Accountability and Trust: Fostering Research Integrity in Canada. Council of Canadian Academies; Ottawa, ON: 2010.

- Council of Graduate Schools Project for Scholarly Integrity [WWW Document] 2011 URL http://www.scholarlyintegrity.org/ShowContent.aspx?id=402.

- Crain AL, Martinson BC, Ronning EA, McGree D, Anderson MS, DeVries R. Supplemental Sampling Frame Data as a Means of Assessing Response Bias in a Hierarchical Sample of University Faculty. Presented at Annual Meetings of the American Association For Public Opinion Research; New Orleans, LA. 2008.

- Crain AL, Martinson BC, Thrush CR. Relationships between the survey of organizational research climate (SORC) and self-reported research practices. Science and Engineering Ethics. 2012. doi: 10.1007/s11948-012-9409-0.

- Department of Health and Human Services NIH Guide for Grants and Contracts: ANNOUNCEMENT OF FINAL PHS POLICY ON INSTRUCTION IN THE RESPONSIBLE CONDUCT OF RESEARCH. 2000 ( http://grants.nih.gov/grants/guide/notice-files/NOT-OD-01-007.html)

- Department of Health and Human Services NIH Guide for Grants and Contracts: NOTICE OF SUSPENSION OF PHS POLICY. 2001 ( http://grants.nih.gov/grants/guide/notice-files/NOT-OD-01-020.html)

- DuBois JM, Anderson EE, Carroll K, Gibb T, Kraus E, Rubbelke T, Vasher M. Environmental Factors Contributing to Wrongdoing in Medicine: A Criterion-Based Review of Studies and Cases. Ethics & Behavior. 2012;22:163–188. doi: 10.1080/10508422.2011.641832. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Folger R, Cropanzano R. Organizational Justice and Human Resource Management. Sage Publishers; Thousand Oaks: 1998. [Google Scholar]

- Geller G, Boyce A, Ford DE, Sugarman J. Beyond “Compliance”: The Role of Institutional Culture in Promoting Research Integrity. Academic Medicine. 2010;85:1296–1302. doi: 10.1097/ACM.0b013e3181e5f0e5. [DOI] [PubMed] [Google Scholar]

- Groves RM. Nonresponse Rates and Nonresponse Bias in Household Surveys. Public Opinion Quarterly. 2006;70:646–675. [Google Scholar]

- Hackett EJ. A Social Control Perspective on Scientific Misconduct. The Journal of Higher Education. 1994;65:242–260. [PubMed] [Google Scholar]

- Heitman E, Anestidou L, Olsen C, Bulger RE. Do researchers learn to overlook misbehavior? Hastings Cent Rep. 2005;35:49. [PubMed] [Google Scholar]

- Heitman E, Bulger RE. Assessing the Educational Literature in the Responsible Conduct of Research for Core Content. Accountability in Research. 2005;12:207–224. doi: 10.1080/08989620500217420. [DOI] [PubMed] [Google Scholar]

- Kohn LT, Corrigan JM, Donaldson MS. To Err is human: Building a safer health system. The National Academies Press; Washington, DC: 2000. [PubMed] [Google Scholar]

- Integrity and Misconduct in Research: Report of the Commission on Research Integrity (Commission by U.S. Congress No. U.S. GOVERNMENT PRINTING OFFICE: 1996-746-425) U.S. Department of Health and Human Services, Public Health Service; Washington, D.C: 1995. [Google Scholar]

- Irish Council for Bioethics. Rapporteur Group . Recommendations for Promoting Research Integrity. The Irish Council for Bioethics; Dublin, Ireland: 2010. [Google Scholar]

- Landy FJ, Conte JM. Work in the 21st Century: An Introduction to Industrial and Organizational Psychology. Wiley; New York: 2010.

- Leape LL. Transparency and public reporting are essential for a safe health care system. The Commonwealth Fund; New York: 2010.

- Martinson BC, Anderson MS, Crain AL, De Vries R. Scientists’ Perceptions of Organizational Justice and Self-Reported Misbehaviors. Journal of Empirical Research on Human Research Ethics. 2006;1:51–66. doi: 10.1525/jer.2006.1.1.51.

- Martinson BC, Anderson MS, De Vries R. Scientists behaving badly. Nature. 2005;435:737–738. doi: 10.1038/435737a.

- Martinson BC, Crain AL, Anderson MS, De Vries R. Institutions’ Expectations for Researchers’ Self-Funding, Federal Grant Holding and Private Industry Involvement: Manifold Drivers of Self-Interest and Researcher Behavior. Academic Medicine. 2009;84:1491–1499. doi: 10.1097/ACM.0b013e3181bb2ca6.

- Martinson BC, Crain AL, De Vries R, Anderson MS. The Importance of Organizational Justice in Ensuring Research Integrity. Journal of Empirical Research on Human Research Ethics. 2010;5:67–83. doi: 10.1525/jer.2010.5.3.67.

- Mumford M, Helton WB. Organizational Influences on Scientific Integrity. Proceedings of the 2000 ORI Conference on Research on Research Integrity; Bethesda, MD: Investigating Research Integrity; Nov 2001. pp. 73–90.

- Mumford MD, Murphy ST, Connelly S, Hill JH, Antes AL, Brown RP, Devenport LD. Environmental Influences on Ethical Decision Making: Climate and Environmental Predictors of Research Integrity. Ethics & Behavior. 2007;17:337–366.

- Panel on Scientific Responsibility and the Conduct of Research. Responsible Science. Volume I: Ensuring the Integrity of the Research Process. National Academy of Sciences; 1992.

- Peugh JL, Enders CK. Specification Searches in Multilevel Structural Equation Modeling: A Monte Carlo Investigation. Structural Equation Modeling: A Multidisciplinary Journal. 2010;17:42–65.

- Pfeffer J, Langton N. The effects of wage dispersion on satisfaction, productivity, and working collaboratively: Evidence from college and university faculty. Administrative Science Quarterly. 1993;38:382–407.

- Pimple KD. Six Domains of Research Ethics: A Heuristic Framework for the Responsible Conduct of Research. Science and Engineering Ethics. 2002;8:191–205. doi: 10.1007/s11948-002-0018-1.

- Rennie D, Gunsalus CK. What is research misconduct? In: Wells FO, Farthing MJG, editors. Fraud and Misconduct in Biomedical Research. Royal Society of Medicine Press; London: 2008.

- Schein EH. What Is Culture? In: Frost PJ, Moore LF, Louis MR, Lundberg CC, Martin J, editors. Reframing Organizational Culture. Sage Publications, Inc.; Newbury Park, CA: 1991. pp. 243–253.

- Sovacool B. Exploring Scientific Misconduct: Isolated Individuals, Impure Institutions, or an Inevitable Idiom of Modern Science? Journal of Bioethical Inquiry. 2008;5:271–282.

- Steneck N, Mayer T. Singapore Statement on Research Integrity. 2010. (http://www.singaporestatement.org/)

- Steneck NH. Fostering integrity in research: definitions, current knowledge, and future directions. Science and Engineering Ethics. 2006;12:53–74. doi: 10.1007/pl00022268.

- Steneck NH, Bulger RE. The History, Purpose, and Future of Instruction in the Responsible Conduct of Research. Academic Medicine. 2007;82:829–834. doi: 10.1097/ACM.0b013e31812f7d4d.

- Teitelbaum MS. Research Funding: Structural Disequilibria in Biomedical Research. Science. 2008;321:644–645. doi: 10.1126/science.1160272.

- Thrush CR, Vander Putten J, Rapp CG, Pearson LC, Berry KS, O’Sullivan PS. Content validation of the Organizational Climate for Research Integrity (OCRI) survey. Journal of Empirical Research on Human Research Ethics. 2007;2:35–52. doi: 10.1525/jer.2007.2.4.35.

- Titus SL, Wells JA, Rhoades LJ. Repairing research integrity. Nature. 2008;453:980–982. doi: 10.1038/453980a.

- Tyler TR, Blader SL. The group engagement model: procedural justice, social identity, and cooperative behavior. Personality and Social Psychology Review. 2003;7:349–361. doi: 10.1207/S15327957PSPR0704_07.

- Vandenberg RJ, Lance CE. A Review and Synthesis of the Measurement Invariance Literature: Suggestions, Practices, and Recommendations for Organizational Research. Organizational Research Methods. 2000;3:4–70.

- Wenger NS, Korenman SG, Berk R, Berry S. The ethics of scientific research: an analysis of focus groups of scientists and institutional representatives. J Investig Med. 1997;45:371–380.