Abstract

As the primary providers of round-the-clock bedside care, nurses are well positioned to report on hospital quality of care. Researchers have not examined how nurses’ reports of quality correspond with standard process or outcomes measures of quality. We assess the validity of evaluating hospital quality by aggregating hospital nurses’ responses to a single item that asks them to report on quality of care. We found that a 10% increment in the proportion of nurses reporting excellent quality of care was associated with lower odds of mortality and failure to rescue; greater patient satisfaction; and higher composite process of care scores for acute myocardial infarction, pneumonia, and surgical patients. Nurse reported quality of care is a useful indicator of hospital performance.

Keywords: nursing, quality of care, public reporting

Nurses are in an ideal position to report on the quality of care in hospitals. They are the de facto surveillance system overseeing the patient care experience. The work nurses do as the primary bedside care provider and intermediary between patients and all other clinicians intimately involves them in all aspects of patient care. Examples include direct care giving, surveillance and monitoring of health status, emotional support for patients and families, assistance with activities of daily living, interprofessional team collaboration, and patient education. Thus, nurses’ perceptions of quality are built on more than an isolated encounter or single process—they are developed over time through a series of interactions and direct observations of care.

Quality of care has been at the forefront of researchers’ agenda for several decades because healthcare quality measures are integral to the decision-making of regulators, consumers, and purchasers (Chassin & Loeb, 2011; Donabedian, 1969, 1988; Institute of Medicine, 2001). Although there are well-enumerated obstacles related to measuring healthcare quality (Loeb, 2004), there are also underexplored opportunities. One such opportunity is the use of nurses as informants about overall quality of care in the acute care hospital setting. There is ample evidence that hospitals that are known to be good places for nurses to work (e.g., Magnet hospitals and other hospitals with good practice environments) have better outcomes including nurse reported quality of care (L. Aiken et al., 2001, 2012; Leveck & Jones, 1996; Shortell, Rousseau, Gillies, Devers, & Simons, 1991).

Our purpose was to examine the association between nurse reported quality of care and standard indicators of quality including patient outcomes measures (i.e., mortality, failure to rescue, and patient satisfaction) and process of care measures. Establishing a correspondence between nurse reported quality of care and measures from other sources could demonstrate the validity of a single item quality of care measure.

Methods

Data and Sample

We conducted a retrospective secondary analysis of data to estimate the relationship between nurse reported quality of care and standard hospital quality measures. We focused on adult, non-federal acute care hospitals in four states: California, Pennsylvania, Florida, and New Jersey. Our primary data source for nurse reported quality of care was the Multi-State Nursing Care and Patient Safety Study (L. Aiken et al., 2011)—a survey of nurses’ work conditions and quality of care carried out in the four study states in 2006–2007. The sampling approach has been detailed previously (L. Aiken et al., 2011). Nurses were surveyed directly by mail, with the nursing licensure lists in the four states serving as the sampling frame. Hospital nurses providing direct patient care were surveyed about features of their work environment; they also provided the names of their employers, which allowed us to aggregate their responses by hospital. The aggregated nurse reported measures of the hospital practice environment and quality of care were then linked to data from the 2006 to 2007 American Hospital Association Annual Survey of hospitals, which includes information on hospital structure, services, personnel, facilities, and financial performance from nearly all U.S. hospitals.

We used data from other sources to examine hospital quality from a variety of perspectives. We used the administrative databases based on hospital discharge abstracts for all patients from the four study states to identify the patient level outcomes of 30-day inpatient mortality and failure to rescue among surgical patients. These databases also provided patient demographic information and information on other diagnoses and procedures that allowed for risk adjustment.

Data on patient assessments of their care experience from the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey were obtained from the Centers for Medicare and Medicaid Services (CMS) publicly available Hospital Compare website. The 27-item HCAHPS survey asks a sample of patients in all Medicare participating hospitals to evaluate their short term, acute care hospital experience. The data are then risk-adjusted and aggregated to the hospital level before being released quarterly to the public (Giordano, Elliott, Goldstein, Lehrman, & Spencer, 2010).

We also used publicly available process of care data from CMS made available through the Hospital Compare website. As part of its Hospital Quality Initiative, CMS worked with the Hospital Quality Alliance to implement a national public reporting system of hospital quality measures endorsed by the National Quality Forum. Hospitals participating in the CMS Inpatient Prospective Payment System are required to publicly report these data in order to receive their entire annual payment update. Under the Value-Based Purchasing Program set forth in the Affordable Care Act, hospitals will receive incentive payments, not just for reporting, but for achieving standards and improving in many of these areas (Centers for Medicare & Medicaid Services, 2011). The hospital level data we used corresponded to the period of July 2006 to June 2007. Each hospital reported on core process of care measures related to heart failure, pneumonia, acute myocardial infarction, and surgical care (Table 1).

Table 1.

Process of Care Measures for Composite Score

| Indicators | Description |
|---|---|
| Heart failure | |
| Discharge instructions | If discharged home, received written discharge instructions or educational material addressing all of the following: activity level, diet, discharge medications, follow-up appointment, weight monitoring, and what to do if symptoms worsen |
| Left ventricular functional assessment | Evaluation of left ventricular function occurred before arrival, during hospitalization, or planned for after discharge |
| ACEI or ARB at discharge | If left ventricular systolic dysfunction and no contraindications, prescribed an ACEI or ARB at discharge |
| Smoking cessation counseling | If a history of smoking cigarettes in the past year, received smoking cessation advice or counseling during the hospital stay |
| Pneumonia | |
| Appropriate initial antibiotic | Received initial antibiotic regimen consistent with current guidelines within first 24 hours |
| Initial antibiotic timing | Received first dose of antibiotics within 4 hours after arrival at the hospital |
| Initial blood culture timing | Initial blood culture specimen was collected before the first hospital dose of antibiotics |
| Influenza vaccination | Patients >50 years discharged October–February screened for influenza vaccine and vaccinated if indicated |
| Pneumococcal vaccination | Patients >65 years screened for pneumococcal vaccine status and vaccinated if indicated |
| Oxygenation assessment | Assessment of arterial oxygenation within 24 hours of arrival |
| Smoking cessation counseling | If a history of smoking in the past year, received smoking cessation counseling |
| Acute myocardial infarction | |
| Aspirin at arrival | Received aspirin within 24 hours before or after hospital arrival |
| Aspirin at discharge | Prescribed aspirin at hospital discharge |
| ACEI or ARB at discharge | If left ventricular systolic dysfunction, prescribed an ACEI or ARB at discharge |
| Beta-blocker on admission | Prescribed a beta-blocker at hospital admission |
| Beta-blocker at discharge | Prescribed a beta-blocker at discharge |
| Smoking cessation counseling | If a history of smoking cigarettes during the year before hospital arrival, received smoking cessation advice or counseling during the hospital stay |
| Fibrinolytic therapy within 30 minutes | If ST-segment elevation or left bundle branch block on the ECG closest to arrival time, received fibrinolytic therapy within 30 minutes of arrival |
| Primary PCI within 30 minutes | If ST-segment elevation or left bundle branch block on the ECG closest to arrival time, received primary percutaneous coronary intervention within 30 minutes of arrival |
| Surgical Care Improvement Project | |
| Prophylactic antibiotic within 1 hour prior to surgical incision | Prophylactic antibiotics initiated within 1 hour prior to surgical incision as indicated |
| Prophylactic antibiotic selection | Received prophylactic antibiotics consistent with current guidelines |
| Prophylactic antibiotics discontinued within 24 hours after surgery end time | Prophylactic antibiotics discontinued within 24 hours after anesthesia end time |

Note: ACEI, angiotensin converting enzyme inhibitor; ARB, angiotensin II receptor blocker. All items are reported as aggregated hospital percentages.

Our final sample of hospitals (N = 396) was limited to adult, non-federal acute care hospitals with Hospital Compare data, at least 50 surgical discharges annually, and no fewer than 10 direct care nurse respondents to the survey. A total of 16,241 nurses—41 nurses per hospital on average—contributed to the aggregated hospital level measures of nurse reported quality of care. The study was approved by the university institutional review board.
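The hospital inclusion criteria above amount to a simple filter. The sketch below is illustrative only: the `Hospital` record and its field names are hypothetical stand-ins for values that would come from the AHA survey, Hospital Compare, the discharge abstracts, and the nurse survey.

```python
from dataclasses import dataclass


@dataclass
class Hospital:
    # Hypothetical record; field names are illustrative, not from the study data.
    name: str
    adult_nonfederal_acute: bool
    has_hospital_compare: bool
    annual_surgical_discharges: int
    nurse_respondents: int  # direct care nurses who returned the survey


def eligible(h: Hospital) -> bool:
    """Apply the four inclusion criteria described in the text."""
    return (
        h.adult_nonfederal_acute
        and h.has_hospital_compare
        and h.annual_surgical_discharges >= 50
        and h.nurse_respondents >= 10
    )


hospitals = [
    Hospital("A", True, True, 420, 38),
    Hospital("B", True, True, 45, 60),    # too few surgical discharges
    Hospital("C", True, False, 300, 25),  # no Hospital Compare data
    Hospital("D", True, True, 120, 9),    # too few nurse respondents
]
print([h.name for h in hospitals if eligible(h)])  # ['A']
```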

Measures

Nurse reported quality

The quality of nursing care in each hospital was assessed using a single item that asked nurses: “How would you describe the quality of nursing care delivered to patients in your unit?” Response options were Excellent, Good, Fair, and Poor. Our hospital level measure, used in all of our analyses, is the percentage of nurses reporting that the quality of nursing care was excellent. We assessed the reliability of nurses’ reports of quality by calculating the intraclass correlation (ICC[1,k]) using a one-way analysis of variance. The ICC was .61, indicating adequate agreement among individual nurses to aggregate reports of quality to the hospital level (Glick, 1985). Researchers working with a different hospital sample have reported an ICC of .73 (Kutney-Lee, Lake, & Aiken, 2009). This single item aggregated to the hospital level has also been used in a number of empirical papers (L. Aiken et al., 2001, 2012; Sochalski, 2004).
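A minimal Python sketch of the hospital level measure and the ICC(1,k) reliability check described above. Two assumptions are made for illustration: responses are scored 1 for Excellent and 0 otherwise before the ANOVA (the text does not say which scoring was used), and the hospital ids and responses are hypothetical. ICC(1,k) is computed from the one-way ANOVA mean squares as (MSB - MSW) / MSB.

```python
from statistics import fmean


def percent_excellent(responses):
    """Hospital level measure: percentage of nurses answering 'Excellent'."""
    return 100.0 * sum(1 for r in responses if r == "Excellent") / len(responses)


def icc_1k(groups):
    """ICC(1,k) from a one-way ANOVA: (MSB - MSW) / MSB.

    `groups` maps a hospital id to a list of numeric nurse scores
    (assumed here: 1 = Excellent, 0 = any other answer).
    """
    all_scores = [x for scores in groups.values() for x in scores]
    grand_mean = fmean(all_scores)
    n_total, n_groups = len(all_scores), len(groups)

    # Between-hospital and within-hospital sums of squares.
    ss_between = sum(len(s) * (fmean(s) - grand_mean) ** 2 for s in groups.values())
    ss_within = sum((x - fmean(s)) ** 2 for s in groups.values() for x in s)

    ms_between = ss_between / (n_groups - 1)
    ms_within = ss_within / (n_total - n_groups)
    return (ms_between - ms_within) / ms_between


# Hypothetical survey responses for three hospitals.
raw = {
    "A": ["Excellent", "Excellent", "Good", "Excellent"],
    "B": ["Good", "Fair", "Good", "Excellent"],
    "C": ["Good", "Poor", "Fair", "Good"],
}
print({h: percent_excellent(r) for h, r in raw.items()})  # {'A': 75.0, 'B': 25.0, 'C': 0.0}
coded = {h: [1 if r == "Excellent" else 0 for r in rs] for h, rs in raw.items()}
print(round(icc_1k(coded), 3))  # 0.714
```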

Mortality and failure to rescue

We used the annual hospital discharge abstract databases available from the four states to measure 30-day inpatient mortality and failure to rescue. Failure to rescue is defined as the death of a surgical patient who experienced a complication. Our focus was on patients aged 21 to 85 undergoing general, orthopedic, or vascular surgery. Both 30-day inpatient mortality and failure to rescue were measured as binary variables at the patient level.

To identify the subset of surgical patients who experienced complications for the failure to rescue measure, the ICD-9-CM secondary diagnosis and procedure codes were used to identify 39 clinical events indicating a complication (Silber et al., 2007). Discharges against medical advice were excluded. Surgical patients are an appealing population to study because they are prevalent in most general acute care hospitals, and risk adjustment approaches have been refined for use with administrative data related to outcomes in this population (Silber et al., 2007, 2009). Our models included patient level measures of sex, age, transfer status, Elixhauser’s 27 comorbidity indicators (excluding fluid and electrolyte disorders and coagulopathy; Elixhauser, Steiner, Harris, & Coffey, 1998; Glance, Dick, Osler, & Mukamel, 2006; Quan et al., 2005), and 61 dummy variables that indicate type of surgery in order to account for variation in patient characteristics.
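The cohort and outcome definitions above can be sketched as filters over discharge records that yield the two patient level analytic samples. This is a minimal Python sketch under stated assumptions: the record fields are hypothetical, the `complication` flag stands in for the 39 ICD-9-CM-based complication events of Silber et al. (2007), which are not reproduced here, and the exclusion of discharges against medical advice is applied to the whole cohort.

```python
from dataclasses import dataclass


@dataclass
class Discharge:
    # Hypothetical fields; real abstracts carry ICD-9-CM diagnosis/procedure codes.
    hospital_id: str
    age: int
    surgery_type: str   # "general", "orthopedic", "vascular", or other
    left_ama: bool      # discharged against medical advice
    complication: bool  # stand-in for the 39 complication events
    died_30d: bool      # inpatient death within 30 days of admission


STUDY_SURGERIES = {"general", "orthopedic", "vascular"}


def outcome_samples(discharges):
    """Return (mortality sample, failure-to-rescue sample) as (hospital, y) pairs.

    Mortality: every eligible surgical patient, y = 1 if died within 30 days.
    Failure to rescue: only eligible patients with a complication, y = 1 if died.
    """
    cohort = [
        d for d in discharges
        if 21 <= d.age <= 85 and d.surgery_type in STUDY_SURGERIES and not d.left_ama
    ]
    mortality = [(d.hospital_id, int(d.died_30d)) for d in cohort]
    failure_to_rescue = [(d.hospital_id, int(d.died_30d)) for d in cohort if d.complication]
    return mortality, failure_to_rescue
```

Each pair is one patient level observation; the hospital id is what links the patient to the hospital level nurse measure and defines the clusters in the regression models.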

Patient experience

The Hospital Compare website publicly reports a set of 10 HCAHPS measures that are aggregated to the hospital level and risk-adjusted prior to release. We used the two HCAHPS global measures indicating the percent of patients who rated the hospital 9 or 10 out of 10 (high), and the percent of patients who would definitely recommend the hospital to friends and family.

Process of care

The Hospital Compare website also reports on process of care—how frequently hospitals provide the treatments that are known to lead to the best outcomes for patients hospitalized for acute myocardial infarction, heart failure, pneumonia, and surgery. There were eight process measures for acute myocardial infarction (e.g., aspirin at arrival); four process measures for heart failure (e.g., smoking cessation counseling); seven measures for pneumonia (e.g., appropriate initial antibiotic selection); and three process measures for surgical care improvement (e.g., prophylactic antibiotic consistent with guidelines; Table 1). We used The Joint Commission’s (2007) approach to calculate a summary score across all measures for each condition at the hospital level. The summary score is a proportion that reflects how many times the hospital performed the appropriate action divided by the number of opportunities the hospital had to provide appropriate care.
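The summary score described above pools appropriate actions and opportunities across all of a condition's measures before dividing. A minimal Python sketch of that opportunity-based calculation follows; the measure names and counts are hypothetical.

```python
def composite_score(measures):
    """Opportunity-based composite: total appropriate actions / total opportunities.

    `measures` maps a measure name to (numerator, denominator), where the
    denominator is the number of eligible patients (opportunities).
    """
    performed = sum(num for num, _ in measures.values())
    opportunities = sum(den for _, den in measures.values())
    return performed / opportunities


# Hypothetical surgical care counts for one hospital.
surgical = {
    "antibiotic_within_1h": (90, 100),
    "antibiotic_selection": (95, 100),
    "antibiotic_stopped_24h": (30, 50),
}
print(round(100 * composite_score(surgical), 1))  # 86.0
# A simple average of the three rates would give about 81.7 instead.
```

Pooling counts means that measures with more eligible patients carry more weight, which is how this composite differs from an unweighted mean of the individual measure rates.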

Hospital covariates

Structural characteristics of hospitals have been associated with differences in patient quality outcomes (Ayanian & Weissman, 2002; Brennan et al., 1991; Hartz et al., 1989). Thus, we linked data from the 2006 to 2007 American Hospital Association annual survey of hospitals on structural characteristics of hospitals to account for these differences. We measured hospital size based on the number of staffed and licensed beds to create an ordinal measure of small (≤100 beds), medium (101–250 beds), and large hospitals (≥251 beds). Teaching intensity was measured as the ratio of the number of physician residents and fellows to hospital beds to create an ordinal measure of non-teaching (no residents), minor teaching (1:4 ratio or less), and major teaching (>1:4 ratio). We considered hospitals that performed open heart surgery, organ transplantation, or both, as having a greater capacity for technological services compared to other hospitals, resulting in a binary technology variable. We also included an indicator for the state where the hospital was located.
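The covariate definitions above map directly onto simple thresholds. In this minimal Python sketch, a single integer bed count is assumed as input (the text mentions both staffed and licensed beds), and the function names are hypothetical.

```python
def size_category(beds: int) -> str:
    """Ordinal size: small (<=100 beds), medium (101-250), large (>=251)."""
    if beds <= 100:
        return "small"
    if beds <= 250:
        return "medium"
    return "large"


def teaching_category(residents_and_fellows: int, beds: int) -> str:
    """Non-teaching (no residents), minor (ratio <= 1:4), major (ratio > 1:4)."""
    if residents_and_fellows == 0:
        return "non-teaching"
    return "minor" if residents_and_fellows / beds <= 0.25 else "major"


def high_technology(open_heart_surgery: bool, organ_transplant: bool) -> bool:
    """High technology if the hospital performs open heart surgery, transplants, or both."""
    return open_heart_surgery or organ_transplant
```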

To put our findings regarding nurse reported quality in context, we examined the relationship between nurses’ reports of quality and hospitals known for nursing excellence—those with high scores on the Practice Environment Scale of the Nursing Work Index (PES-NWI) as well as hospitals recognized as Magnet hospitals. The PES-NWI is a National Quality Forum-endorsed Nursing Care Performance Measure (Lake, 2002). The instrument consists of five subscales: nurse participation in hospital affairs; nurse manager ability, leadership, and support of nurses; staffing and resource adequacy; nursing foundations for quality of care; and collegial nurse–physician relations. Nurses responding to the survey indicated their level of agreement that particular organizational features were present in their job. For each hospital, we calculated subscale scores by averaging the values of all items in the subscale across all nurses in the hospital. We then classified each hospital using a categorical summary measure that has demonstrated good predictive validity: hospitals above the median on 4 or 5 subscales were classified as having good work environments; hospitals above the median on 2 or 3 subscales, mixed work environments; and hospitals above the median on only 1 or no subscales, poor work environments (L. Aiken, Clarke, Sloane, Lake, & Cheney, 2008; Friese, Lake, Aiken, Silber, & Sochalski, 2008).
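The good/mixed/poor classification can be sketched in a few lines of Python. Assumptions: each subscale median is taken across the study hospitals, a hospital counts as "above the median" only when strictly greater than it, and the short subscale keys and example scores are hypothetical.

```python
from statistics import fmean, median

# Hypothetical short keys for the five PES-NWI subscales.
SUBSCALES = ("participation", "manager", "staffing", "foundations", "relations")


def subscale_score(nurse_item_values):
    """Mean of every item value from every nurse in one hospital for one subscale."""
    return fmean([v for items in nurse_item_values for v in items])


def classify_environments(hospital_scores):
    """Map {hospital: {subscale: score}} to {hospital: 'good' | 'mixed' | 'poor'}."""
    medians = {
        s: median([scores[s] for scores in hospital_scores.values()]) for s in SUBSCALES
    }
    result = {}
    for hospital, scores in hospital_scores.items():
        above = sum(scores[s] > medians[s] for s in SUBSCALES)
        if above >= 4:
            result[hospital] = "good"
        elif above >= 2:
            result[hospital] = "mixed"
        else:
            result[hospital] = "poor"
    return result
```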

Magnet hospitals are hospitals recognized by the American Nurses Credentialing Center (ANCC) for quality patient care and nursing excellence. The Magnet Recognition Program® is a voluntary process that has formalized standards for nursing leadership, strong representation of nursing in organization management, and interdisciplinary collaboration among healthcare professionals (Drenkard, 2010; McClure & Hinshaw, 2002). Magnet recognition has now been incorporated into national hospital ranking and benchmarking programs including U.S. News and World Report Best Hospitals rankings and the Leapfrog Group hospital ratings (The Leapfrog Group, 2011). We used data from the ANCC to categorize hospitals as Magnet versus non-Magnet hospitals, using a binary variable in the predictive models.

Analysis

We conducted descriptive analyses, including frequency distributions, measures of central tendency and dispersion, and bivariate correlations. We examined how hospitals known for nursing excellence—those with high scores on the PES-NWI and Magnet hospitals—varied in terms of nurse reported quality of care. We then used logistic regression models to estimate the relationship between hospital level nurse reported quality of care and the patient level binary outcomes of mortality and failure to rescue. Standard errors and significance levels were estimated using procedures that corrected for heteroscedasticity and accounted for clustering of patients within hospitals (White, 1980; Williams, 2000). We used ordinary least squares regression models for the hospital level outcomes: percentages in the case of the HCAHPS scores and proportions in the case of the process of care composite scores from the Hospital Compare database. Analyses were completed using SAS version 9.3 (SAS Institute, Cary, NC) and Stata version 12 (StataCorp, College Station, TX).
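To show what "accounting for clustering of patients within hospitals" involves, here is a from-scratch NumPy sketch of a logistic regression with a cluster-robust (sandwich) variance estimator. It is not the SAS or Stata procedure the analysis used; it assumes the common G/(G-1) finite-cluster adjustment, and the simulated hospitals, patients, and effect sizes are hypothetical. The predictor is entered in 10-point units so that exp(coefficient) is an odds ratio per 10-point increment.

```python
import numpy as np


def logit_cluster_robust(X, y, clusters, n_iter=50, tol=1e-10):
    """Logistic regression (Newton-Raphson) with cluster-robust standard errors.

    X: (n, k) design matrix including an intercept column.
    y: (n,) array of 0/1 outcomes.
    clusters: (n,) array of hospital ids.
    Returns (coefficients, standard errors).
    """
    n, k = X.shape
    beta = np.zeros(k)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (y - p)
        hess = X.T @ (X * (p * (1 - p))[:, None])
        step = np.linalg.solve(hess, grad)
        beta += step
        if np.max(np.abs(step)) < tol:
            break

    p = 1.0 / (1.0 + np.exp(-X @ beta))
    bread = np.linalg.inv(X.T @ (X * (p * (1 - p))[:, None]))
    # "Meat": sum over hospitals of the outer product of each hospital's score vector.
    ids = np.unique(clusters)
    meat = np.zeros((k, k))
    for g in ids:
        mask = clusters == g
        score_g = X[mask].T @ (y[mask] - p[mask])
        meat += np.outer(score_g, score_g)
    n_clusters = len(ids)
    vcov = (n_clusters / (n_clusters - 1)) * bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))


# Simulated (hypothetical) data: 60 hospitals, 200 surgical patients each.
rng = np.random.default_rng(0)
n_hosp, per_hosp = 60, 200
hosp_pct = rng.uniform(10, 60, n_hosp)        # % of nurses reporting excellent
hosp_effect = rng.normal(0, 0.3, n_hosp)      # unmeasured hospital-level variation
clusters = np.repeat(np.arange(n_hosp), per_hosp)
x = hosp_pct[clusters] / 10.0                 # predictor in 10-point units
X = np.column_stack([np.ones_like(x), x])
logodds = -2.5 + np.log(0.95) * x + hosp_effect[clusters]
y = (rng.uniform(size=x.size) < 1.0 / (1.0 + np.exp(-logodds))).astype(float)

beta, se = logit_cluster_robust(X, y, clusters)
print("OR per 10 points:", np.exp(beta[1]), "robust SE:", se[1])
```

Because every patient in a hospital shares the same nurse reported quality value, the hospital-level score sums in the "meat" are what keep the standard errors from overstating the amount of independent information in the data.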

Results

There were 396 hospitals from California, Florida, Pennsylvania, and New Jersey (Table 2). Most of the hospitals in our sample were not for profit. Only a small number of hospitals were considered major teaching institutions. Most were either non-teaching or had a minor teaching role as reflected by their resident physician to bed ratio. At the hospital level, the mean percentage of nurses reporting that quality of care on their unit was excellent was 29% (SD = 12%).

Table 2.

Characteristics of Study Hospitals (N = 396)

| Hospital Characteristics | n | % |
|---|---|---|
| Hospital state | | |
| California | 169 | 43 |
| New Jersey | 39 | 10 |
| Pennsylvania | 88 | 22 |
| Florida | 100 | 25 |
| Teaching status | | |
| None | 196 | 50 |
| Minor teaching | 168 | 42 |
| Major teaching | 32 | 8 |
| Technology status | | |
| High | 198 | 50 |
| Low | 198 | 50 |
| Size (number of beds) | | |
| ≤100 | 28 | 7 |
| 101–250 | 171 | 43 |
| ≥251 | 197 | 50 |
| Ownership | | |
| For profit | 29 | 7 |
| Non-profit | 294 | 74 |
| Government, non-federal | 73 | 18 |

Note: Percentages may not sum to 100 due to rounding. All measures are at the hospital level.

Our regression models showed that nurse reported quality of care, measured at the hospital level as the percentage of nurses reporting quality as excellent, was a significant predictor of both outcomes and process measures that indicate quality care. These relationships persisted after controlling for potential confounders. In patient level outcomes models we found that after accounting for individual patient characteristics and hospital structural features, each 10% increment in the proportion of nurses in the hospital reporting that the quality of care on their unit was excellent was associated with 5% lower odds of both mortality (OR = 0.95, 95% CI = 0.92–0.98) and failure to rescue (OR = 0.95, 95% CI = 0.92–0.98) for surgical patients (Table 3).

Table 3.

Relationship Between Nurse Reported Quality of Care and 30-Day Mortality and Failure to Rescue

| Outcome | Unadjusted OR | Unadjusted 95% CI | Unadjusted p-Value | Adjusted OR | Adjusted 95% CI | Adjusted p-Value |
|---|---|---|---|---|---|---|
| Patient outcomes | | | | | | |
| 30-day mortality | 0.91 | 0.90–0.93 | <.001 | 0.95 | 0.92–0.98 | <.001 |
| 30-day failure to rescue | 0.92 | 0.91–0.94 | <.001 | 0.95 | 0.92–0.98 | <.001 |

Note: OR, odds ratio; CI, confidence interval. Estimates represent the odds of 30-day mortality and failure to rescue corresponding to each additional 10% in a hospital’s proportion of nurses rating quality of care as excellent. Adjusted models included hospital level characteristics: hospital size, teaching status, technology, and state where the hospital is located. Adjusted models also accounted for patient level characteristics: patient transfer status, patient gender, Diagnosis Related Group, and patient comorbidities.
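Reading the odds ratios in Table 3 takes one piece of arithmetic. Assuming "10%" means 10 percentage points and that the logistic model's coefficient is β per percentage point (an assumption about coding; only the per-10-point ORs are reported here), the OR for a difference of Δ points is exp(β·Δ), so ORs compound multiplicatively across increments. A minimal Python sketch:

```python
import math


def or_per_increment(beta_per_point: float, increment: float = 10.0) -> float:
    """Odds ratio for an `increment`-point difference given a per-point log-odds coefficient."""
    return math.exp(beta_per_point * increment)


def pct_change_in_odds(odds_ratio: float) -> float:
    """Percentage change in the odds implied by an odds ratio."""
    return 100.0 * (odds_ratio - 1.0)


beta = math.log(0.95) / 10      # per-point coefficient implied by OR = 0.95 per 10 points
print(or_per_increment(beta, 20))                    # about 0.9025, i.e. 0.95 squared
print(round(pct_change_in_odds(0.95), 6))            # -5.0
```

The percentage change refers to odds, not probabilities; for relatively uncommon outcomes such as surgical mortality the two are close, but they diverge as outcomes become common.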

Nurses’ reports of quality were also associated with hospital level scores indicating patients’ evaluations of their hospital care experiences (Table 4). Each additional 10% in the proportion of nurses reporting that the quality of care was excellent was associated with a 3.7 point (p < .001) increase in the percentage of patients who would definitely recommend the hospital. Likewise, a 10% increase in the proportion of nurses reporting quality of care as excellent was associated with a 3.1 point (p < .001) increase in the percentage of patients rating the hospital a 9 or 10 (on a scale of 0–10).

Table 4.

Relationship Between Nurse Reported Quality of Care and Patient Experience and Process of Care Scores

| Outcome | Unadjusted B | Unadjusted SE | Unadjusted p-Value | Adjusted B | Adjusted SE | Adjusted p-Value |
|---|---|---|---|---|---|---|
| HCAHPS measure | | | | | | |
| Definitely recommend hospital to family/friends | 3.8 | 0.3 | <.001 | 3.7 | 0.3 | <.001 |
| High rating of hospital | 3.2 | 0.3 | <.001 | 3.1 | 0.3 | <.001 |
| Process of care composite scores | | | | | | |
| Acute myocardial infarction | 0.8 | 0.2 | <.001 | 0.6 | 0.2 | <.001 |
| Heart failure | 0.6 | 0.4 | .13 | 0.7 | 0.4 | .09 |
| Pneumonia | 0.7 | 0.3 | .005 | 0.9 | 0.3 | <.001 |
| Surgery | 1.6 | 0.4 | <.001 | 2.0 | 0.4 | <.0001 |

Note: Ordinary least squares regression models. Estimates represent the expected difference in the outcome corresponding to each additional 10% in a hospital’s proportion of nurses rating quality of care as excellent. Adjusted models accounted for hospital level characteristics: hospital size, teaching status, technology, and state.

Our results suggest that nurse reported quality is related to hospital level process of care composite measures for acute myocardial infarction, pneumonia, and surgery (Table 4). The relationship was not statistically significant for heart failure. The average myocardial infarction composite score was 93.96 (SD = 5). This suggests that hospitals performed the recommended care for myocardial infarction patients about 94% of the time on average. Our models suggest that each 10% increment in the proportion of nurses reporting that the quality of care was excellent was associated with a 0.6 point (SE = 0.2, p < .001) increase in the composite. The average composite score for pneumonia was 84.77 (SD = 6.5); each additional 10% increment in the proportion of nurses reporting that the quality of care on their unit was excellent was associated with a 0.9 point (SE = 0.3, p < .001) increase in the composite. Hospitals had an average surgical composite score of 76.57 (SD = 11.91). Our models suggest that the surgical composite score was 2.0 points (SE = 0.4, p < .001) higher for each additional 10% increase in the proportion of nurses reporting excellent quality.
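Because the process of care and HCAHPS models are linear, the Table 4 coefficients scale directly with the size of the difference in nurse reported quality. The sketch below computes the expected difference between two hypothetical hospitals with a normal-approximation 95% interval; because it starts from the rounded B and SE values in Table 4, any interval it produces is approximate rather than a reported result.

```python
def expected_difference(b_per_10, se_per_10, pct_low, pct_high, z=1.96):
    """Expected OLS difference in a hospital level score between two hospitals.

    pct_low / pct_high: percentages of nurses rating quality excellent.
    Returns (estimate, lower, upper) using a normal-approximation interval.
    """
    steps = (pct_high - pct_low) / 10.0   # number of 10-point increments
    estimate = b_per_10 * steps
    half_width = z * se_per_10 * abs(steps)
    return estimate, estimate - half_width, estimate + half_width


# Hypothetical comparison using the adjusted surgical composite estimate (B = 2.0, SE = 0.4):
# a hospital where 40% of nurses report excellent quality versus one where 20% do.
est, lo, hi = expected_difference(2.0, 0.4, 20, 40)
print(round(est, 3), round(lo, 3), round(hi, 3))  # 4.0 2.432 5.568
```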

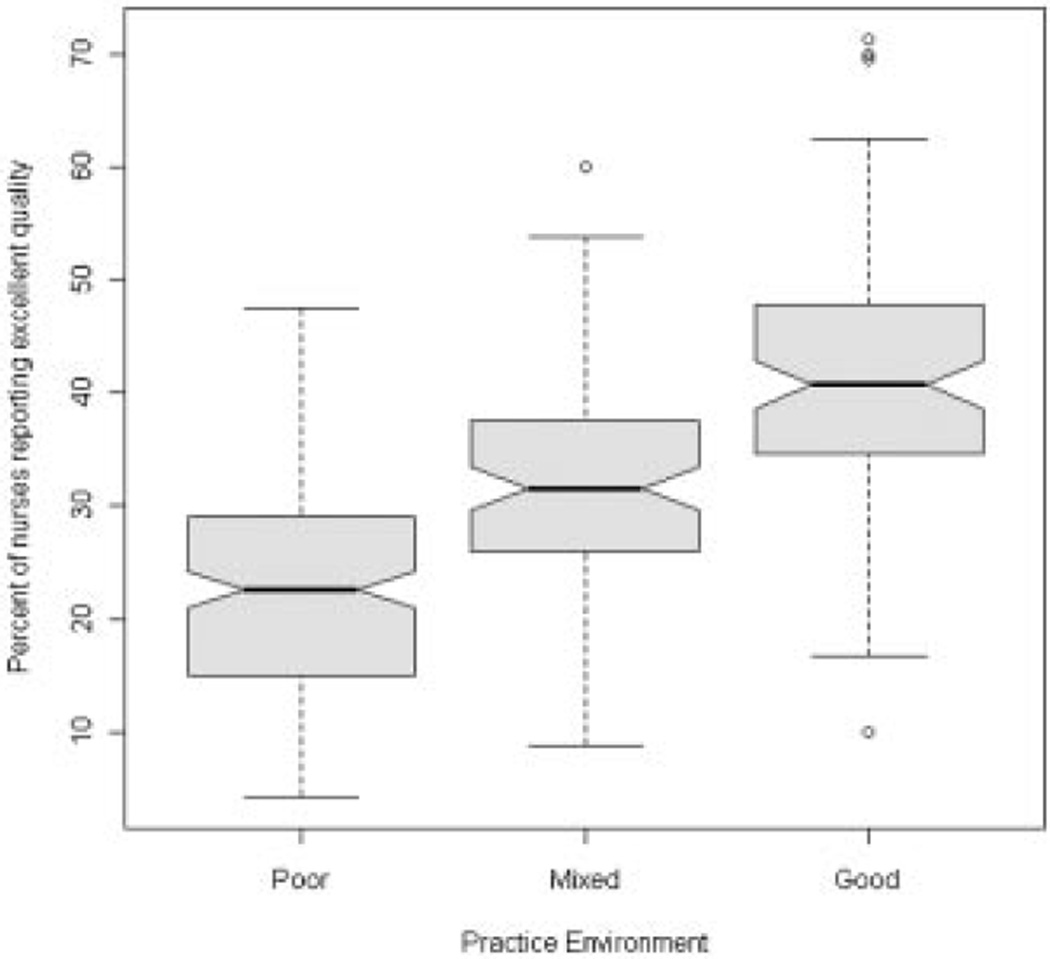

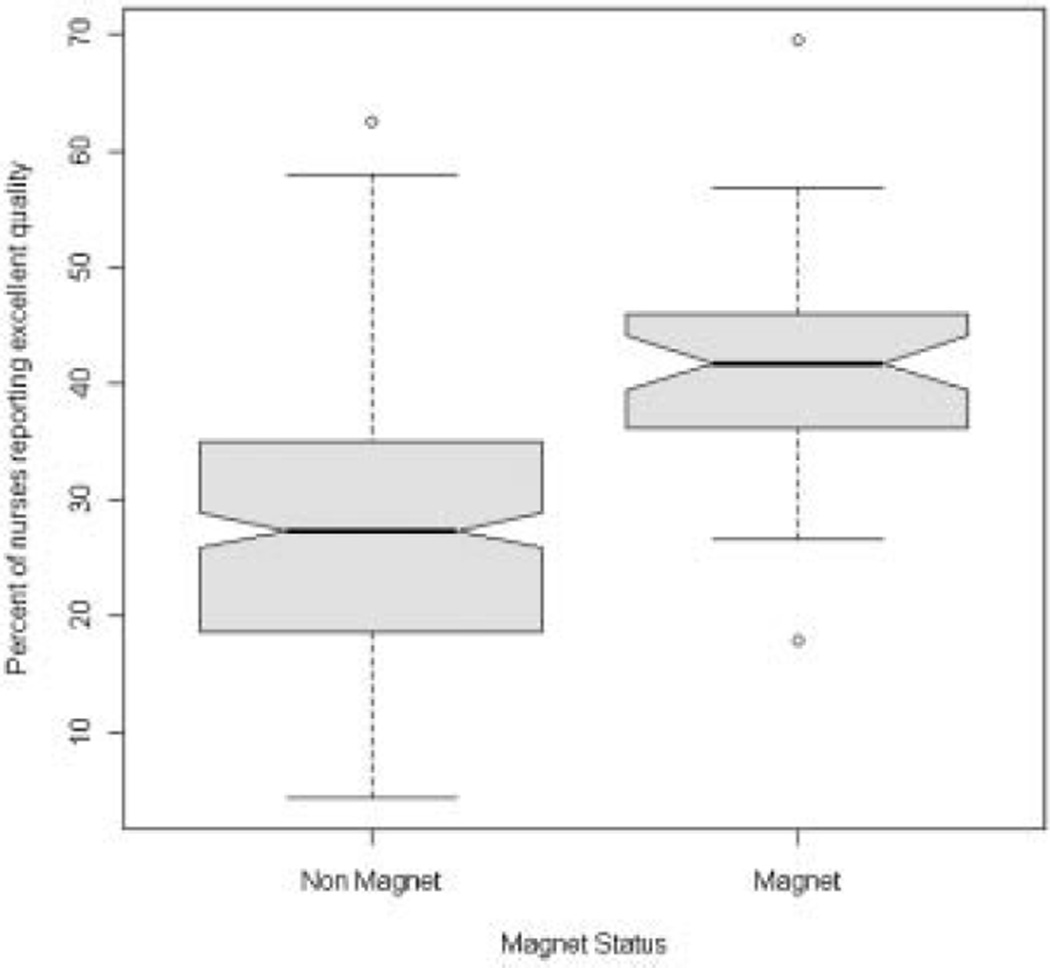

Finally, we wanted to confirm that nurses reported higher quality of care in the places where evidence suggests we should expect higher quality of care. Notched box plots (Fig. 1) show that the median proportion of nurses reporting quality as excellent differed significantly among hospitals with good, mixed, and poor practice environments as measured by the PES-NWI. The proportion of nurses who reported excellent quality was greater in hospitals with good compared to mixed practice environments, and in hospitals with mixed compared to poor practice environments. When the notches of two box plots do not overlap, there is strong evidence that the two medians differ significantly (McGill, Tukey, & Larsen, 1978). Similarly, when we compared Magnet with non-Magnet hospitals (Fig. 2), Magnet hospitals had a significantly higher median proportion of nurses reporting excellent quality.

FIGURE 1.

Percentage of nurses reporting quality of care as excellent in hospitals with poor, mixed, and good nurse practice environments. Note: If the notches of two plots do not overlap, this is strong evidence that the two medians differ significantly at the 0.05 level.

FIGURE 2.

Percentage of nurses reporting quality of care as excellent in Magnet-recognized hospitals and non-Magnet hospitals. Note: If the notches of two plots do not overlap, this is strong evidence that the two medians differ significantly at the 0.05 level.
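The notch comparison used in Figures 1 and 2 can be sketched in Python. The notch is drawn at median ± c·IQR/√n; this sketch assumes c = 1.58 (the constant commonly attributed to McGill et al., 1978) and an inclusive quartile method, and the hospital percentages are hypothetical.

```python
from math import sqrt
from statistics import median, quantiles


def notch_interval(values, c=1.58):
    """Return the (lower, upper) notch around the median of `values`."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    half_width = c * (q3 - q1) / sqrt(len(values))
    m = median(values)
    return m - half_width, m + half_width


def notches_overlap(a, b):
    """True if the notches of two groups overlap (no strong evidence medians differ)."""
    lo_a, hi_a = notch_interval(a)
    lo_b, hi_b = notch_interval(b)
    return lo_a <= hi_b and lo_b <= hi_a


# Hypothetical hospital-level percentages of nurses reporting excellent quality.
good_env = [40, 42, 45, 46, 48, 50, 52, 55, 58, 60]
poor_env = [10, 11, 12, 13, 14, 15, 16, 18, 19, 20]
print(notches_overlap(good_env, poor_env))  # False
```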

Discussion

Our findings illustrate a straightforward premise: asking nurses—the providers most familiar with patients’ bedside care experiences—to gauge hospital quality of care offers a reliable indication of quality that is reflected in patient outcomes and process of care measures.

Nurse reported quality was associated with all of the outcomes we assessed including mortality, failure to rescue, and patients’ reports of the care experience. Nurse reported quality was also consistent with process indicators related to the care of patients with pneumonia, acute myocardial infarction, and surgery. Compared to other hospitals, higher proportions of nurses working in hospitals with good practice environments and in Magnet recognized hospitals reported that the quality of care in their workplace was excellent.

Many studies have used nurses’ reports of quality as an outcome measure (L. Aiken et al., 2001, 2012; Kutney-Lee et al., 2009; Sochalski, 2004). Our findings suggest that nurse reported quality of care is indeed a valid indicator that reflects differences in quality as measured by standard patient outcomes and process indicators. The conceptual basis for using nurses as reliable and valid informants about the quality of care in the hospital in which they work is well grounded in organizational sociology (L. Aiken & Patrician, 2000; M. Aiken & Hage, 1968; Georgopoulos & Tannenbaum, 1957; Shortell et al., 1991). Quality is generally assessed through structural, process, or outcomes measures (Donabedian, 1966). The most direct way to evaluate quality is to examine the care process itself. Obtaining information from nurses takes advantage of their unique perspective within the caregiving context. Nurses have insight into aspects of quality—patient–provider interactions, patient and family education and support, interprofessional collaboration, interface of frontline staff with management, and integration of technology and information systems—that are not always documented in the medical record but often make the difference between good and bad outcomes.

Our findings highlight the potential utility of using nurses as informants regarding quality of care for future research and quality reporting. Although the patient’s perspective is the most relevant quality of care indicator, there are complementary benefits that could be gained by asking nurses to report on quality. Nurses are convenient and accessible as respondents. Their perspective and presence at virtually all points of patient care make nurses a valuable source of information across the institution. Nurses can also report on quality in settings where patients may be less able to report, such as critical care, end of life, or pediatric settings. In many institutions, nurses are already providing data for benchmarking and quality improvement; an example is the American Nurses Association’s National Database of Nursing Quality Indicators™ (NDNQI®).

A single item measure of quality of care from nurses’ perspective may provide an efficient approach for quality monitoring, reporting, and benchmarking. Like Magnet recognition, the message to consumers and potential nurse employees could be informative—the nurses say that the quality of care at this hospital is excellent. The measure would complement existing multi-item scales related to the practice environment such as the PES-NWI, as well as the other measures collected by organizations, such as NDNQI, The Joint Commission, The Leapfrog Group, and CMS. Measures of the practice environment combined with process measures and measures of quality from multiple perspectives would provide a more complete picture of hospital performance.

A limitation of this study is that it was cross-sectional and only allowed us to examine associations. Although we do not have a national sample of hospitals, the hospitals in the four states we studied represent approximately 20% of annual hospitalizations in the U.S. The process measure composite scores in our sample are similar to national scores reported in the literature (The Joint Commission, 2007). The HCAHPS scores in our sample are slightly lower than national scores reported in the literature: 63% nationally for the overall hospital rating as high compared to 59% in our sample; and 67% nationally for the measure of recommending the hospital to family and friends compared to 65% for our sample (Centers for Medicare & Medicaid Services, 2012). Our numbers are comparable with those that CMS reported at the state level. Additional work that examines the relationship between nurse reported quality and other outcomes, as well as in other samples, including international contexts, is necessary.

Conclusion

Nurses’ presence at the bedside with patients, from admission through discharge, makes them reliable informants regarding the quality of patient care at a hospital. Our findings confirm that nurses’ perceptions of quality correspond with other indicators of quality including the outcomes measures of mortality, failure to rescue, and patient satisfaction, as well as process of care measures. For a complete picture of hospital performance, data from the practitioners who provide the majority of patient care is essential. Nurse reported quality of care can be a valuable indicator of hospital quality.

Acknowledgments

The authors would like to thank Mr. Tim Cheney for his contributions to the manuscript. This study was funded by Robert Wood Johnson Foundation Nurse Faculty Scholars program (McHugh) and National Institute of Nursing Research (R01-NR-004513, P30-NR-005043, and T32-NR-007104, Linda Aiken, PI).

References

- Aiken LH, Cimiotti JP, Sloane DM, Smith HL, Flynn L, Neff DF. Effects of nurse staffing and nurse education on patient deaths in hospitals with different nurse work environments. Medical Care. 2011;49:1047–1053. doi: 10.1097/MLR.0b013e3182330b6e. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aiken LH, Clarke SP, Sloane DM, Lake ET, Cheney T. Effects of hospital care environment on patient mortality and nurse outcomes. Journal of Nursing Administration. 2008;38:223–229. doi: 10.1097/01.NNA.0000312773.42352.d7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aiken LH, Clarke SP, Sloane DM, Sochalski JA, Busse R, Clarke H, Shamian J. Nurses’ reports on hospital care in five countries. Health Affairs. 2001;20:43–53. doi: 10.1377/hlthaff.20.3.43. [DOI] [PubMed] [Google Scholar]

- Aiken LH, Patrician PA. Measuring organizational traits of hospitals: The Revised Nursing Work Index. Nursing Research. 2000;49:146–153. doi: 10.1097/00006199-200005000-00006.

- Aiken LH, Sermeus W, Vanden Heede K, Sloane D, Busse R, McKee M, Kutney-Lee A. Patient safety, satisfaction, and quality of hospital care: Cross-sectional surveys of nurses and patients in 12 countries in Europe and the United States. BMJ. 2012;344:e1717. doi: 10.1136/bmj.e1717.

- Aiken M, Hage J. Organizational interdependence and intra-organizational structure. American Sociological Review. 1968;33:912–930.

- Ayanian JZ, Weissman JS. Teaching hospitals and quality of care: A review of the literature. Milbank Quarterly. 2002;80:569–593. doi: 10.1111/1468-0009.00023.

- Brennan TA, Hebert LE, Laird NM, Lawthers A, Thorpe KE, Leape LL, Hiatt HH. Hospital characteristics associated with adverse events and substandard care. JAMA. 1991;265(24):3265–3269.

- Centers for Medicare & Medicaid Services. Final rule: Medicare program; hospital inpatient value-based purchasing program. Federal Register. 2011;76:26490–26547.

- Centers for Medicare & Medicaid Services. March 2008 public report: October 2006–June 2007 discharges. 2012. Retrieved April 20, 2012, from http://www.hcahpsonline.org/Executive_Insight/files/Final-State%20and%20National%20Avg-March%20Report%208-20-2008.pdf.

- Chassin MR, Loeb JM. The ongoing quality improvement journey: Next stop, high reliability. Health Affairs. 2011;30:559–568. doi: 10.1377/hlthaff.2011.0076.

- Donabedian A. Evaluating the quality of medical care. The Milbank Memorial Fund Quarterly. 1966;44:166–206.

- Donabedian A. Quality of care: Problems of measurement. II. Some issues in evaluating the quality of nursing care. American Journal of Public Health & the Nation’s Health. 1969;59:1833–1836. doi: 10.2105/ajph.59.10.1833.

- Donabedian A. The quality of care: How can it be assessed? JAMA. 1988;260:1743–1748. doi: 10.1001/jama.260.12.1743.

- Drenkard K. The business case for magnet. Journal of Nursing Administration. 2010;40(6):263–271. doi: 10.1097/NNA.0b013e3181df0fd6.

- Elixhauser A, Steiner C, Harris DR, Coffey RM. Comorbidity measures for use with administrative data. Medical Care. 1998;36:8–27. doi: 10.1097/00005650-199801000-00004.

- Friese CR, Lake ET, Aiken LH, Silber JH, Sochalski J. Hospital nurse practice environments and outcomes for surgical oncology patients. Health Services Research. 2008;43:1145–1163. doi: 10.1111/j.1475-6773.2007.00825.x.

- Georgopoulos BS, Tannenbaum AS. A study of organizational effectiveness. American Sociological Review. 1957;22:534–540.

- Giordano LA, Elliott MN, Goldstein E, Lehrman WG, Spencer PA. Development, implementation, and public reporting of the HCAHPS Survey. Medical Care Research and Review. 2010;67:27–37. doi: 10.1177/1077558709341065.

- Glance LG, Dick AW, Osler TM, Mukamel DB. Does date stamping ICD-9-CM codes increase the value of clinical information in administrative data? Health Services Research. 2006;41:231–251. doi: 10.1111/j.1475-6773.2005.00419.x.

- Glick W. Conceptualizing and measuring organizational and psychological climate: Pitfalls in multilevel research. Academy of Management Review. 1985;10:601–616.

- Hartz AJ, Krakauer H, Kuhn EM, Young M, Jacobsen SJ, Gay G, Rimm AA. Hospital characteristics and mortality rates. New England Journal of Medicine. 1989;321(25):1720–1725. doi: 10.1056/NEJM198912213212506.

- Institute of Medicine. Crossing the quality chasm: A new health system for the 21st century. Washington, D.C.: National Academies Press; 2001.

- Kutney-Lee A, Lake ET, Aiken LH. Development of the hospital nurse surveillance capacity profile. Research in Nursing & Health. 2009;32:217–228. doi: 10.1002/nur.20316.

- Lake ET. Development of the practice environment scale of the Nursing Work Index. Research in Nursing & Health. 2002;25:176–188. doi: 10.1002/nur.10032.

- Leveck ML, Jones CB. The nursing practice environment, staff retention, and quality of care. Research in Nursing & Health. 1996;19:331–343. doi: 10.1002/(SICI)1098-240X(199608)19:4<331::AID-NUR7>3.0.CO;2-J.

- Loeb JM. The current state of performance measurement in health care. International Journal for Quality in Health Care. 2004;16(Suppl. 1):i5–i9. doi: 10.1093/intqhc/mzh007.

- McClure ML, Hinshaw AS. Magnet hospitals revisited: Attraction and retention of professional nurses. Kansas City, MO: American Nurses Association; 2002.

- McGill R, Tukey JW, Larsen WA. Variations of box plots. The American Statistician. 1978;32:12–16.

- Quan H, Sundararajan V, Halfon P, Fong A, Burnand B, Luthi JC, Ghali WA. Coding algorithms for defining comorbidities in ICD-9-CM and ICD-10 administrative data. Medical Care. 2005;43:1130–1139. doi: 10.1097/01.mlr.0000182534.19832.83.

- Shortell SM, Rousseau DM, Gillies RR, Devers KJ, Simons TL. Organizational assessment in intensive care units (ICUs): Construct development, reliability, and validity of the ICU nurse–physician questionnaire. Medical Care. 1991;29:709–726. doi: 10.1097/00005650-199108000-00004.

- Silber JH, Romano PS, Rosen AK, Wang Y, Even-Shoshan O, Volpp KG. Failure-to-rescue: Comparing definitions to measure quality of care. Medical Care. 2007;45:918–925. doi: 10.1097/MLR.0b013e31812e01cc.

- Silber JH, Rosenbaum PR, Romano PS, Rosen AK, Wang Y, Teng Y, Volpp KG. Hospital teaching intensity, patient race, and surgical outcomes. Archives of Surgery. 2009;144:113–120; discussion 121. doi: 10.1001/archsurg.2008.569.

- Sochalski J. Is more better?: The relationship between nurse staffing and the quality of nursing care in hospitals. Medical Care. 2004;42(2 Suppl.):II67–II73. doi: 10.1097/01.mlr.0000109127.76128.aa.

- The Joint Commission. Improving America’s hospitals: The Joint Commission’s annual report on quality and safety—2007. 2007. Retrieved April 23, 2012, from http://www.jointcommission.org/assets/1/6/2006_Annual_Report.pdf.

- The Leapfrog Group. Hospitals reporting Magnet status to the 2011 Leapfrog Hospital Survey. 2011. Retrieved April 15, 2012, from http://www.leapfroggroup.org/MagnetRecognition.

- White H. A heteroskedasticity-consistent covariance matrix estimator and a direct test for heteroskedasticity. Econometrica. 1980;48:817–838.

- Williams R. A note on robust variance estimation for cluster-correlated data. Biometrics. 2000;56:645–646. doi: 10.1111/j.0006-341x.2000.00645.x.