Abstract

The frequency-lag hypothesis proposes that bilinguals have slowed lexical retrieval relative to monolinguals and in their nondominant language relative to their dominant language, particularly for low-frequency words. These effects arise because bilinguals divide their language use between 2 languages and use their nondominant language less frequently. We conducted a picture-naming study with hearing American Sign Language (ASL)–English bilinguals (bimodal bilinguals), deaf signers, and English-speaking monolinguals. As predicted by the frequency-lag hypothesis, bimodal bilinguals were slower, less accurate, and exhibited a larger frequency effect when naming pictures in ASL as compared with English (their dominant language) and as compared with deaf signers. For English there was no difference in naming latencies, error rates, or frequency effects for bimodal bilinguals as compared with monolinguals. Neither age of ASL acquisition nor interpreting experience affected the results; picture-naming accuracy and frequency effects were equivalent for deaf signers and English monolinguals. Larger frequency effects in ASL relative to English for bimodal bilinguals suggest that they are affected by a frequency lag in ASL. The absence of a lag for English could reflect the use of mouthing and/or code-blending, which may shield bimodal bilinguals from the lexical slowing observed for spoken language bilinguals in the dominant language.

More than a decade of research has established that spoken language bilingualism entails subtle disadvantages in lexical retrieval. Specifically, when compared with their monolingual peers, bilinguals who know two spoken languages have more tip-of-the-tongue (TOT) retrieval failures (Gollan & Acenas, 2004; Gollan & Silverberg, 2001), have reduced category fluency (Gollan, Montoya, & Werner, 2002; Portocarrero, Burright, & Donovick, 2007; Rosselli et al., 2000), name pictures more slowly (Gollan, Montoya, Fennema-Notestine, & Morris, 2005; Gollan, Montoya, Cera, & Sandoval, 2008; Ivanova & Costa, 2008), and name fewer pictures correctly on standardized naming tests such as the Boston Naming Test (Kohnert, Hernandez, & Bates, 1998; Roberts, Garcia, Desrochers, & Hernandez, 2002; Gollan, Fennema-Notestine, Montoya, & Jernigan, 2007). Crucially, bilingual naming disadvantages are observed even when bilinguals are tested exclusively in their dominant language (e.g., Gollan & Acenas, 2004; Gollan, Montoya et al., 2005; and first-learned language; Ivanova & Costa, 2008). However, bilinguals are not disadvantaged on all tasks. For example, bilinguals classify pictures (as either human-made or natural kinds) as quickly as monolinguals (Gollan, Montoya et al., 2005), and bilingual disadvantages also do not generalize to all language tasks (e.g., bilinguals are not disadvantaged for production of proper names; Gollan, Bonanni, & Montoya, 2005). In some nonlinguistic tasks, bilinguals exhibit significant processing advantages. For example, spoken language bilinguals are faster to resolve conflict between competing responses (see Bialystok, Craik, Green, & Gollan, 2009, for review).

Gollan and colleagues propose a frequency-lag account of slowed naming in bilinguals (also known as the “weaker links” account), which draws on the observation that by virtue of speaking each of the languages they know only part of the time, bilinguals necessarily speak each of their languages less often than do monolinguals (Gollan et al., 2008, 2011). Because bilinguals use words in each language less frequently, lexical representations in both languages will have accumulated less practice relative to monolinguals. Therefore, bilinguals are hypothesized to exhibit slower lexical retrieval times because of the same mechanism that leads to frequency effects in monolinguals, that is, words that are used frequently are more accessible than words that are used infrequently (e.g., Forster & Chambers, 1973; Oldfield & Wingfield, 1965). Because small differences in frequency of use can have profound effects on lexical accessibility at the lower end of the frequency range (Murray & Forster, 2004), the frequency-lag hypothesis predicts that the bilingual disadvantage should be particularly large for low-frequency words (and relatively small for high-frequency words). Stated differently, bilinguals should show larger frequency effects than monolinguals. Similarly, within bilinguals’ two languages, because the nondominant language is used less frequently than the dominant language, the frequency-lag hypothesis also predicts that bilinguals should exhibit larger frequency effects in their nondominant than in their dominant language. Several studies have confirmed this prediction for visual word recognition (e.g., Duyck, Vanderelst, Desmet, & Hartsuiker, 2008) and picture naming (Gollan et al., 2008, 2011; Ivanova & Costa, 2008).

In this study, we investigated whether the frequency-lag hypothesis holds for bimodal bilinguals who have acquired a spoken language, English, and a signed language, American Sign Language (ASL). Although deaf ASL signers are bilingual in English (to varying degrees), we reserve the term “bimodal bilingual” for hearing ASL–English bilinguals who acquired spoken English primarily through audition and without special training. Using a picture-naming task, we explored whether bimodal bilinguals exhibit slower lexical retrieval times for spoken words and a larger frequency effect as compared with English-speaking monolinguals. Unlike spoken language bilinguals (i.e., unimodal bilinguals), bimodal bilinguals do not necessarily divide their language use between two languages because they can—and often do—code-blend, that is, produce ASL signs and English words at the same time (Bishop, 2006; Emmorey, Borinstein, Thompson, & Gollan, 2008; Petitto et al., 2001). Code-blending is a form of language mixing in which, typically, one or more ASL signs accompany an English utterance (in this case, English is the Matrix language; see Emmorey et al., 2008, for discussion). Recently, Pyers, Gollan, and Emmorey (2009) found that bimodal bilinguals exhibited more lexical retrieval failures (TOTs) than monolingual English speakers and the same TOT rate as Spanish–English bilinguals, suggesting that bimodal bilinguals are affected by frequency lag. However, bimodal bilinguals also exhibited slightly better lexical retrieval success than the unimodal bilinguals on other measures (e.g., they produced more correct responses and reported fewer negative or “false” TOTs). Pyers et al. (2009) attributed this in-between pattern of lexical retrieval success for bimodal bilinguals to more frequent use of English, possibly due to the unique ability to code-blend. Thus, the predictions of the frequency-lag hypothesis may not hold for English for bimodal bilinguals.

In addition, we investigated whether bimodal bilinguals exhibit slower lexical retrieval times for ASL signs and a larger ASL frequency effect as compared with deaf signers who use ASL as their primary language. Following Emmorey et al. (2008), we suggest that ASL is the nondominant language for the great majority of bimodal bilinguals, even for Children of Deaf Adults (CODAs) who acquire ASL from birth within deaf signing families. Although CODAs may be ASL dominant as young children, English rapidly becomes the dominant language due to immersion in an English-speaking environment outside the home. Such switched dominance also occurs for many spoken language bilinguals living in the United States (e.g., for Spanish–English bilinguals; Kohnert, Bates, & Hernandez, 1999). In contrast, ASL can be argued to be the dominant language for deaf signers, who sign ASL more often than they speak English. If these assumptions about language dominance are correct, the frequency-lag hypothesis makes the following predictions:

Bimodal bilinguals will exhibit slower ASL-naming times than deaf signers and slower English naming times than monolingual English speakers.

Bimodal bilinguals will exhibit a larger ASL frequency effect than deaf ASL signers and a larger English frequency effect than monolingual English speakers.

Bimodal bilinguals will exhibit a larger frequency effect for ASL than for English.

Late bilinguals (those who acquired ASL in adulthood) will exhibit the largest ASL frequency effect.

Unfortunately, no large-scale sign frequency corpora (i.e., with millions of tokens in the corpus) are currently available for ASL or for any sign language to our knowledge. Psycholinguistic research has relied on familiarity ratings by signers to estimate lexical frequency (e.g., Carreiras, Gutiérrez-Sigut, Baquero, & Corina, 2008; Emmorey, 1991). For spoken language, familiarity ratings are highly correlated with corpora-based frequency counts (Gilhooly & Logie, 1980), are consistent across different groups of subjects (Balota, Pilotti, & Cortese, 2001), and are sometimes better predictors of lexical decision latencies than objective frequency measures (Gernsbacher 1984; Gordon, 1985). Therefore, we relied on familiarity ratings as a measure of lexical frequency in ASL.

Recently, Johnston (2012) questioned whether familiarity ratings accurately reflect the frequency of use or occurrence of lexical signs. He found only a partial overlap between sign-familiarity ratings for British Sign Language (BSL; from Vinson, Cormier, Denmark, & Schembri, 2008) and the frequency ranking in the Australian Sign Language (Auslan) Archive and Corpus (Johnston, 2008; note that BSL and Auslan are argued to be dialects of the same language; Johnston, 2003). After adjusting for glossing differences between BSL and Auslan, Johnston (2012) found that of the 83 BSL signs with a very high-familiarity rating (6 or 7 on a 7-point scale), only 38 (12.5%) appeared in the 300 most frequent lexical signs in the Auslan corpus and only 14 (4.7%) appeared in the top 100 signs. Johnston (2012, p. 187) suggested that a subjective measure of familiarity for a lexical sign may be very high even though it may actually be a low-frequency sign because “it is very citable and ‘memorable’ (e.g., SCISSORS, ELEPHANT, KANGAROO), perhaps because there are relatively few other candidates contesting for recognition, that is, due to apparently modest lexical inventories.” However, if the above predictions of the frequency-lag hypothesis hold for ASL, then it will suggest that familiarity ratings are indeed an accurate reflection of frequency of use for signed languages and will suggest that the lack of overlap found by Johnston (2012) may be due to deficiencies in the corpus data, for example, limited tokens (thousands rather than millions) and genre and register biases.

To test these predictions, we compared English picture-naming times for bimodal bilinguals with those of English monolingual speakers and ASL picture-naming times with those of deaf ASL signers. In addition to naming pictures in English and in ASL, the bimodal bilinguals were also asked to name pictures with a code-blend (i.e., producing an ASL sign and an English word at the same time). The code-blend comparisons are reported separately in Emmorey, Petrich, and Gollan (2012). Here we report the group comparisons for lexical retrieval times and lexical frequency effects when producing English words or ASL signs.

Methods

Participants

A total of 40 hearing ASL–English bilinguals (27 female), 28 deaf ASL signers (18 female), and 21 monolingual English speakers (14 female) participated. We included both early ASL–English bilinguals (CODAs) and late bilinguals who learned ASL through instruction and immersion in the Deaf community. Two bilinguals (one early and one late) and three deaf signers were eliminated from the analyses because of high rates of fingerspelled, rather than signed responses (>2 SD above the group mean).

Table 1 provides participant characteristics obtained from a language history and background questionnaire. The early bimodal bilinguals (N = 18) were exposed to ASL from birth, had at least one deaf signing parent, and eight were professional interpreters. The late bimodal bilinguals (N = 20) learned ASL after age 6 (mean = 16 years; range: 6–26 years), and 13 were professional interpreters. All bimodal bilinguals used ASL and English on a daily basis. Self-ratings of ASL proficiency (1 = “not fluent” and 7 = “very fluent”) were significantly different across participant groups, F(2, 63) = 4.632, p = .014, ηp² = .14, with lower ratings for the late bilinguals (mean = 5.7, SD = 0.8) as compared with both the deaf signers (mean = 6.5, SD = 0.7), Tukey’s HSD, p = .011, and the early bilinguals (mean = 6.4, SD = 0.8), Tukey’s HSD, p = .056. In addition, the bimodal bilinguals rated their proficiency in English as higher than in ASL, F(1,26) = 24.451, MSE = .469, p < .001, ηp² = .48. There was also an interaction between participant group and language rating, F(1,26) = 5.181, MSE = .469, p = .031, ηp² = .17, such that the early and late bilinguals did not differ in their English proficiency ratings, but the late bilinguals rated their ASL proficiency lower than did the early bilinguals, t(35) = 2.397, p = .022.

Table 1.

Means and standard deviations for participant characteristics

| | Age, years | Age of ASL exposure | ASL self-ratingᵃ | English self-ratingᵃ | Years of education |
|---|---|---|---|---|---|
| Early ASL–English bilinguals (N = 18) | 27 (6) | Birth | 6.4 (0.8) | 6.8 (0.6) | 16.2 (2.8) |
| Late ASL–English bilinguals (N = 20) | 36 (10) | 16.4 (7.0) | 5.7 (0.8) | 7.0 (0.0) | 17.7 (2.7) |
| Deaf ASL signers (N = 24) | 24 (5) | 0.3 (0.7) | 6.5 (0.7) | — | 15.8 (2.3) |
| Monolingual English speakers (N = 21) | 24 (4) | — | — | — | 15.1 (2.0) |

Note. ASL = American Sign Language.

ᵃ Based on a scale of 1–7 (1 = “not fluent” and 7 = “very fluent”).

Among the deaf signers, 21 were native signers exposed to ASL from birth and four were near-native signers who learned ASL in early childhood (before age 7). The monolinguals were all native English speakers who had not been regularly exposed to more than one language before age six and had not completed more than four semesters of foreign language study (the minimum University requirement). Self-ratings of English proficiency were not collected for these participants.

Materials

Participants named 120 line drawings of objects taken from the CRL International Picture Naming Project (Bates et al., 2003; Székely et al., 2003). Bimodal bilinguals named 40 pictures in ASL only, 40 pictures in English only, and 40 pictures with an ASL–English code-blend (data from the code-blend condition are reported in Emmorey et al., 2012). The pictures were counter-balanced across participants, such that all pictures were named in each language condition, but no participant saw the same picture twice. The deaf participants named all 120 pictures in ASL, and the hearing English monolingual speakers named all pictures in English. For English, the pictures all had good name agreement based on Bates et al. (2003): mean percentage of target response = 91% (SD = 13%). For ASL, the pictures were judged by two native deaf signers to be named with lexical signs (English translation equivalents), rather than by fingerspelling, compound signs, or phrasal descriptions, and these signs were also considered unlikely to exhibit a high degree of regional variation. Half of the pictures had low-frequency English names (mean ln-transformed CELEX frequency = 1.79, SD = 0.69) and half had high-frequency names (mean = 4.04, SD = 0.74). Our lab maintains a database of familiarity ratings for ASL signs based on a scale of 1 (very infrequent) to 7 (very frequent), with each sign rated by at least 8 deaf signers (the average number of raters per sign was 15). The mean ASL sign-familiarity rating for the ASL translations of the low-frequency words was 2.93 (SD = 0.97) and 3.87 (SD = 1.23) for the high-frequency words. For ease of exposition, we will refer to these sets as low- and high-frequency signs, rather than as low- and high-familiarity signs.
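
The counterbalancing described above can be illustrated with a simple rotation scheme. This is only a sketch under stated assumptions: the text does not say how pictures were assigned to lists, so the three-way split, the rotation, and the names `CONDITIONS` and `assign_conditions` are hypothetical. The sketch also ignores the additional constraint that each set should contain equal numbers of low- and high-frequency items.

```python
# Hypothetical Latin-square rotation; the study's actual assignment
# procedure is not described in the text.
CONDITIONS = ("ASL", "English", "code-blend")

def assign_conditions(pictures, list_index):
    """Split pictures into three equal sets and rotate them across conditions.

    list_index (0, 1, or 2) selects which presentation list a participant gets.
    """
    n = len(CONDITIONS)
    set_size = len(pictures) // n
    sets = [pictures[i * set_size:(i + 1) * set_size] for i in range(n)]
    return {cond: sets[(i + list_index) % n] for i, cond in enumerate(CONDITIONS)}

# Placeholder picture labels, not the actual stimuli
pictures = [f"pic{i}" for i in range(120)]
lists = [assign_conditions(pictures, k) for k in range(3)]
```

Within each list a participant names 40 pictures per condition and never sees a picture twice; across the three lists, every picture appears once in every condition.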

Procedure

Pictures were presented using Psyscope Build 46 (Cohen, MacWhinney, Flatt, & Provost, 1993) on a Macintosh PowerBook G4 computer with a 15-inch screen. English naming times were recorded using a microphone connected to a Psyscope response box. ASL-naming times were recorded using a pressure release key (triggered by lifting the hand) that was also connected to the Psyscope response box. Participants initiated each trial by pressing the space bar. Each trial began with a 1,000-ms presentation of a central fixation point “+” that was immediately replaced by the picture. The picture disappeared when the voice-key (for English) or the release-key (for ASL) triggered. All testing sessions were videotaped.

Participants were instructed to name the pictures as quickly and accurately as possible. Bimodal bilinguals named the pictures in three blocks: English only, ASL only, or ASL and English simultaneously (results from the last condition are presented in Emmorey et al., 2012). The order of language blocks was counter-balanced across participants. Within each block, half of the pictures had low-frequency and half had high-frequency words/signs, randomized within each block. Six practice items preceded each naming condition.

Results

Reaction times (RTs) that were more than 2 SDs above or below the mean for each participant for each language were eliminated from the RT analyses. This procedure eliminated 5.3% of the data for the early bilinguals, 5.6% for late bilinguals, 4.0% for deaf signers, and 4.5% for English monolinguals.
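
The trimming rule above can be sketched as follows. This is an illustration, not the authors' analysis script: the function name `trim_rts` is hypothetical, the example values are invented, and the use of the sample standard deviation (rather than the population SD) is an assumption.

```python
from statistics import mean, stdev

def trim_rts(rts, n_sd=2.0):
    """Keep RTs within n_sd sample SDs of the mean of this list.

    `rts` is assumed to hold one participant's RTs (ms) in one language.
    """
    if len(rts) < 2:
        return list(rts)  # SD is undefined for fewer than two values
    m, sd = mean(rts), stdev(rts)
    lower, upper = m - n_sd * sd, m + n_sd * sd
    return [rt for rt in rts if lower <= rt <= upper]

# Invented example data, not from the study
example = [850, 900, 910, 940, 960, 980, 1000, 1020, 1050, 2600]
kept = trim_rts(example)  # the 2600-ms trial falls outside the cutoff
```

Because the cutoffs are computed separately for each participant and language, a slow signer's typical ASL latencies are not penalized by that same person's faster English latencies.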

English responses in which the participant produced a vocal hesitation (e.g., “um”) or in which the voice-key was not initially triggered were eliminated from the RT analysis, but were included in the error analysis. ASL responses in which the participant paused or produced a manual hesitation gesture (e.g., UM in ASL) after lifting their hand from the response key were also eliminated from the RT analysis, but were included in the error analysis. Occasionally, a signer produced a target sign (e.g., SHOULDER) with their nondominant hand after lifting their dominant hand from the response key; such a response was considered correct, but was not included in the RT analysis. These procedures eliminated 2.4% of the English data and 0.5% of the ASL data.

Only correct responses were included in the RT analyses. Responses that were acceptable variants of the intended target name (e.g., Oreo instead of cookie, COAT instead of JACKET, or fingerspelled F-O-O-T instead of the sign FOOT) were considered correct and were included in both the error and RT analyses. Fingerspelled responses were not excluded from the analysis because (a) fingerspelled signs constitute a nontrivial part of the ASL lexicon (Brentari & Padden, 2001; Padden, 1998) and (b) fingerspelled signs are in fact the correct response for some items because either the participant always fingerspells that name or the lack of context in the picture-naming task promotes use of a fingerspelled name over the ASL sign (e.g., the names of body parts were often fingerspelled). The mean percent of fingerspelled responses was 15% for the early bilinguals, 15% for the late bilinguals, and 9% for the deaf signers. Nonresponses and “I don’t know” responses were considered errors.

We did not directly compare RTs for ASL and English due to the confounding effects of manual versus vocal articulation. For each language, we conducted a 2×3 analysis of variance (ANOVA) with frequency (high, low) and participant group as the independent variables. Reaction time and error rate were the dependent variables. We report ANOVAs for both participant means (F1; collapsing across items) and item means (F2; collapsing across participants).
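
The difference between the F1 and F2 analyses lies in how trial-level data are aggregated before the ANOVA. The sketch below shows only that aggregation step, under the assumption that trials are stored as simple records; the field names, the helper `cell_means`, and the example values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def cell_means(trials, unit):
    """Average RT for each (unit, frequency) cell.

    unit="participant" collapses across items (input to F1);
    unit="item" collapses across participants (input to F2).
    """
    cells = defaultdict(list)
    for trial in trials:
        cells[(trial[unit], trial["frequency"])].append(trial["rt"])
    return {key: mean(values) for key, values in cells.items()}

# Invented example trials, not from the study
trials = [
    {"participant": "p1", "item": "dog", "frequency": "high", "rt": 900},
    {"participant": "p1", "item": "anvil", "frequency": "low", "rt": 1100},
    {"participant": "p2", "item": "dog", "frequency": "high", "rt": 1000},
    {"participant": "p2", "item": "anvil", "frequency": "low", "rt": 1300},
]
by_participant = cell_means(trials, "participant")
by_item = cell_means(trials, "item")
```

Each resulting table would then be submitted to its own ANOVA, with participants or items, respectively, as the random factor.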

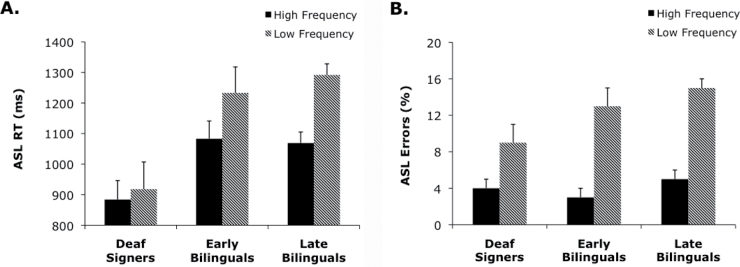

ASL

Figure 1 presents the mean RT and error rate data for ASL responses. Reaction times were significantly faster for high- than for low-frequency signs, F1(1,59) = 27.571, MSE = 20,386, p < .001, ηp² = .32; F2(1,117) = 16.995, MSE = 122,775, p < .001, ηp² = .13. There was also a main effect of participant group, F1(2,59) = 5.258, MSE = 202,694, p = .008, ηp² = .15; F2(1,117) = 111.886, MSE = 52,674, p < .001, ηp² = .49. Post hoc Tukey’s HSD tests showed that the deaf participants named pictures significantly more quickly than both the early and late bilinguals (both p values <.05), but the early and late bilinguals did not differ from each other in RT (p = .974). Crucially, there was also a significant interaction between participant group and sign frequency, such that bilinguals exhibited larger frequency effects than deaf signers, F1(2,59) = 4.891, MSE = 20,386, p = .011, ηp² = .14; F2(1,117) = 13.799, MSE = 52,674, p = .004, ηp² = .11.

Figure 1.

(A) ASL naming latencies and (B) error rates are greater for bimodal bilinguals than for deaf signers and for low-frequency signs than for high-frequency signs. Error bars indicate standard error of the mean. ASL = American Sign Language; RT = reaction time.

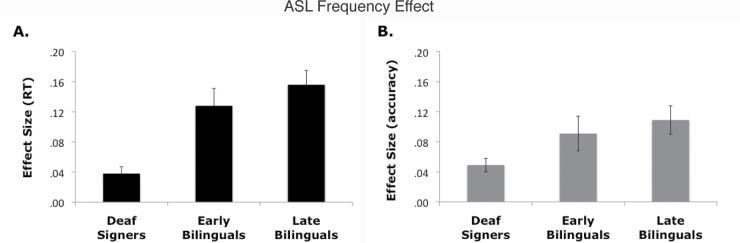

As illustrated in Figure 2A, the frequency effect was smallest for those most proficient in ASL (the ASL-dominant deaf signers) and largest for those least proficient in ASL (the English-dominant late bilinguals), as we predicted. The size of the frequency effect was calculated for each participant as follows: [low-frequency mean RT − high-frequency mean RT]/total mean RT. The proportionally adjusted frequency effects reveal that the ASL frequency effect was more than 3 times as large in early bilinguals (mean = 12.8%) and late bilinguals (mean = 15.6%) as compared with deaf signers (mean = 3.8%), t(40) = 3.788, p < .001 and t(42) = 3.773, p < .001, respectively. Although numerically in the predicted direction, the size of the ASL frequency effect was not significantly larger for late bilinguals as compared with early bilinguals, t(36) < 1.
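
The proportional frequency effect defined above can be computed per participant as in the following sketch. The function name and example values are hypothetical, and "total mean RT" is assumed here to be the mean over all of the participant's trials; taking the mean of the two condition means would be an equally plausible reading.

```python
def frequency_effect(low_rts, high_rts):
    """(low-frequency mean RT - high-frequency mean RT) / total mean RT.

    Assumes "total mean RT" is the mean over all trials in both conditions.
    """
    low_mean = sum(low_rts) / len(low_rts)
    high_mean = sum(high_rts) / len(high_rts)
    all_rts = list(low_rts) + list(high_rts)
    total_mean = sum(all_rts) / len(all_rts)
    return (low_mean - high_mean) / total_mean

# Invented RTs (ms) for one participant, not from the study
effect = frequency_effect(low_rts=[1200, 1300], high_rts=[1000, 1100])
```

Dividing by the participant's overall mean expresses the effect as a proportion of baseline speed, so that groups with slower overall latencies do not show larger effects simply because their RTs are longer.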

Figure 2.

The size of the ASL frequency effect for (A) naming latencies and (B) error rates is larger for bimodal bilinguals than for deaf signers. Error bars indicate standard error of the mean. ASL = American Sign Language; RT = reaction time.

The results for error rates generally mirrored those for reaction time. Error rates were significantly lower for high- than for low-frequency signs, F1(1,59) = 68.773, MSE = .003, p < .001, ηp² = .54; F2(1,117) = 15.504, MSE = .032, p < .001, ηp² = .12, and there was a main effect of participant group, F1(2,59) = 4.102, MSE = .004, p = .021, ηp² = .12; F2(1,117) = 6.242, MSE = .010, p = .014, ηp² = .05. Post hoc Tukey’s HSD tests revealed that deaf signers had significantly lower error rates than the late bilinguals (p = .016), and the early bilinguals did not differ from either the deaf participants or the late bilinguals (both p values >.285). Again, there was a significant interaction between frequency and participant group, F1(2,59) = 3.449, MSE = .003, p = .038, ηp² = .10; F2(1,117) = 3.708, MSE = .010, p = .057, ηp² = .03.

As shown in Figure 2B, the size of the ASL frequency effect for accuracy mirrored that for response time. Frequency effect size was calculated for each participant as follows: [percent correct for low-frequency signs − percent correct for high-frequency signs]/total percent correct. The ASL frequency effect was greater for early bilinguals (mean = 9.1%) and late bilinguals (mean = 10.9%) as compared with the deaf signers (mean = 4.9%), t(40) = 1.919, p = .067 and t(42) = 3.075, p < .005, respectively. The size of the ASL frequency effect did not differ for the early and late bilinguals, t(36) < 1.

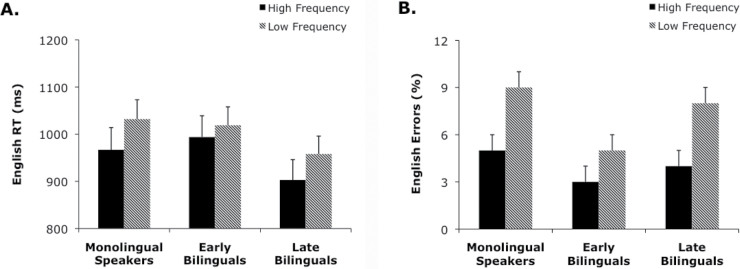

English

Figure 3 presents the mean RTs and error rates for picture naming in English. All speakers named pictures with high-frequency names more quickly than pictures with low-frequency names, F1(1,56) = 20.785, MSE = 3,364, p < .001, ηp² = .27; F2(1,117) = 3.850, MSE = 77,969, p = .052, ηp² = .03. Naming times did not differ significantly across groups with the subject analysis, F1(2,56) = 1.044, MSE = 366,263, p = .359, ηp² = .04, but the items analysis was significant, F2(1,117) = 41.542, MSE = 22,416, p < .001, ηp² = .26. By items, late bilinguals were faster than early bilinguals, t(118) = 4.517, p < .001, and monolinguals, t(119) = 6.499, p < .001. There was no interaction between frequency and participant group for English, F1(2,56) = 1.184, MSE = 3,364, p = .314, ηp² = .04; F2(1,117) = 0.018, MSE = 22,416, p = .895, ηp² = .00.

Figure 3.

(A) English naming latencies and (B) error rates are greater for low-frequency words than for high-frequency words, but lexical frequency does not interact with participant group. Error bars indicate standard error of the mean. ASL = American Sign Language; RT = reaction time.

For error rate, there was a significant main effect of frequency by subjects, but not by items: error rates were lower for high-frequency than for low-frequency words, F1(1,56) = 17.086, MSE = .002, p < .001, ηp² = .23; F2(1,117) = 2.212, MSE = .025, p = .140, ηp² = .02. There were no significant differences in error rate across participant groups, F1(2,56) = 1.938, MSE = .003, p = .154, ηp² = .07; F2(1,117) = 0.855, MSE = .004, p = .357, ηp² = .01, and frequency did not interact with participant group, F1(2,56) = 1.169, MSE = .002, p = .318, ηp² = .04; F2(1,117) = 0.332, MSE = .004, p = .565, ηp² = .003.

The Size of the Frequency Effect in ASL Versus English

For the bimodal bilinguals, we compared the size of the frequency effect for ASL and for English. As predicted, the size of the frequency effect was larger for ASL than English for RT: 12.8% versus 3.6% for early bilinguals and 15.6% versus 6.0% for late bilinguals, t(35) = 3.021, p = .005, and t(39) = 2.987, p = .005, respectively. Similarly for error rate, the size of the frequency effect was larger for ASL than English: 9.1% versus 1.9% for early bilinguals and 10.9% versus 3.4% for late bilinguals, t(35) = 2.681, p = .011, and t(39) = 2.613, p = .013, respectively.

Error Rates for Deaf ASL Signers Versus Monolingual English Speakers

Although analyses directly comparing ASL and English naming latencies suffer from possible confounds of manual versus vocal articulation (e.g., the hand is a much larger and slower articulator than the tongue and lips), comparative analyses with error rate data do not. Therefore, we conducted a 2×2 ANOVA with participant group (deaf ASL signers, English monolingual speakers) as a between-group language factor and frequency (high-frequency items, low-frequency items) as a within-group factor. ASL-naming errors for deaf signers (mean = 6.2%) did not differ significantly from English naming errors for hearing monolingual speakers (mean = 6.6%), both Fs < 1. As expected, error rates for low-frequency items (mean = 8.6%) were greater than for high-frequency items (mean = 4.2%), F1(1,43) = 42.164, MSE = .001, p < .001, ηp² = .50; F2(1,118) = 7.883, MSE = .016, p = .006, ηp² = .06. In contrast to the pattern of results for the bimodal bilinguals, there was no interaction between language group and lexical frequency, indicating similar-sized frequency effects occur when ASL is the dominant language (for signers) and when English is the dominant language (for speakers), F1(1,43) = .310, MSE = .001, p = .581, ηp² = .007; F2(1,118) = 0.062, MSE = .007, p = .804, ηp² = .001.

Interpreters Versus Non-interpreters

Finally, to examine whether interpreting experience had an effect on naming latencies, error rates, or the size of the frequency effect, we conducted ANOVAs for English and for ASL comparing the performance of professional ASL interpreters (N = 21) and bimodal bilinguals who are not interpreters (N = 17). The pattern of main effects for frequency and interactions between language and frequency reported above does not change. In addition, there were no main effects of group, and crucially, interpreting group did not interact with any variable, indicating that interpreting experience does not influence our findings (all p values >.125). For interpreters, the mean ASL and English naming latencies were 1,209 ms (SE = 92) and 974 ms (SE = 45), and their mean ASL and English error rates were 7.9% (SE = 1.2%) and 4.6% (SE = 0.9%), respectively. For non-interpreters, the mean ASL and English naming latencies were 1,122 ms (SE = 83) and 957 ms (SE = 50), and their mean ASL and English error rates were 10.7% (SE = 1.3%) and 5.9% (SE = 1.0%), respectively.

Discussion

The results reported here demonstrate that English is the dominant language for both early bimodal bilinguals (CODAs) and late bimodal bilinguals. Both bilingual groups rated their proficiency in English as higher than in ASL and made more naming errors in ASL than in English. The results also supported the predictions of the frequency-lag hypothesis for ASL (the nondominant language), but not for English (the dominant language). Specifically, for ASL, bimodal bilinguals were slower, less accurate, and exhibited a larger frequency effect when naming pictures as compared with native deaf signers (Figures 1 and 2). In addition, bimodal bilinguals exhibited a larger frequency effect in ASL than in English. The larger ASL frequency effect for bimodal bilinguals likely reflects the fact that hearing ASL–English bilinguals use ASL significantly less often than spoken English, and significantly less often than deaf signers for whom ASL is the primary language. As predicted by the frequency-lag hypothesis, less frequent ASL use leads to slower lexical retrieval for bimodal bilinguals, particularly for low-frequency signs.

Unlike previous studies in which spoken language bilinguals exhibited frequency-lag effects in their dominant language relative to monolinguals, bimodal bilinguals exhibited no evidence of frequency lag in English relative to English-speaking monolinguals. They did not name pictures more slowly, and there was no group difference in the size of the frequency effect (Figure 3). These findings support our proposal that code-blending might help bimodal bilinguals avoid the effects of frequency lag on the dominant language (Pyers et al., 2009). That is, unlike unimodal bilinguals who must switch between languages, bimodal bilinguals can (and often do) produce both English words and ASL signs at the same time, which boosts the frequency of usage for both languages. In addition, ASL signs are quite frequently produced with English “mouthings” in which the mouth movements that accompany a manual sign are derived from the pronunciation of the translation equivalent in English (Boyes-Braem & Sutton-Spence, 2001; Nadolske & Rosenstock, 2007). Recent evidence from picture-naming and word-translation tasks with British Sign Language suggests that the mouthings that accompany signs have separate lexico-semantic representations that are based on the English production system (Vinson, Thompson, Skinner, Fox, & Vigliocco, 2010). The bilingual disadvantage for dominant language production may be reduced for bimodal bilinguals because code-blending and mouthing may allow them to produce words in their dominant language nearly as frequently as monolinguals. Thus, the frequency of accessing English during ASL production might ameliorate the bilingual disadvantage for English for ASL–English bilinguals, in contrast to Spanish–English bilinguals who are also English dominant but for whom mouthing and code-blending are not possible.

The fact that deaf ASL signers and monolingual English speakers had similar error rates when naming the same pictures indicates that the difference observed for bimodal bilinguals is not due to a lack of ASL signs for the target pictures or to some other property of the ASL-naming condition. In addition, the fact that language group did not interact with lexical frequency further supports the frequency-lag hypothesis. Assuming that ASL usage by native deaf signers is roughly parallel to spoken English usage by hearing speakers, the size of the frequency effect should be roughly the same for both groups.

Although self-ratings of ASL proficiency were significantly lower for the late bilinguals, late bilinguals were not significantly slower or less accurate in naming pictures in ASL than early bilinguals. This pattern suggests that the two groups of bilinguals were relatively well matched in ASL skill and that the late bilinguals may have underestimated their proficiency. Baus, Gutiérrez-Sigut, Quer, and Carreiras (2008) also found no difference in naming latencies or accuracy between early and late deaf signers in a picture-naming study with Catalan Sign Language (CSL), and their participants were all highly skilled signers. It is possible that with sufficient proficiency, age of acquisition may have little effect on the speed or accuracy of lexical retrieval in picture naming (even for relatively low-frequency targets).

In addition, we found no significant difference between interpreters and non-interpreters in lexical retrieval performance (naming latencies or error rates). This result is consistent with the findings of Christoffels, de Groot, and Kroll (2006), who reported that Dutch–English interpreters outperformed highly proficient bilinguals (Dutch–English teachers) on working memory tasks (e.g., reading span), but not on lexical retrieval tasks (e.g., picture naming). Christoffels et al. (2006) argue that performance on language tasks is determined by proficiency more than by general cognitive resources. Thus, if the non-interpreters in our study were highly proficient signers, then no group difference between interpreters and non-interpreters in lexical retrieval performance is expected.

Finally, these results validate the use of familiarity ratings as a substitute for corpora-based frequency counts for signed languages in psycholinguistic studies. Currently, there is no available ASL corpus, and one of the largest sign language corpora, the Auslan Corpus, contains only 63,436 signs produced by 109 different signers (as of March 2011; Johnston, 2012). Although a corpus of over 60,000 annotated signs is an impressive achievement, it does not match the size of the corpora available for English and other spoken languages (e.g., nearly 18 million words for English CELEX; Baayen, Piepenbrock, & Gulikers, 1995). But perhaps more importantly for the use of such a corpus for psycholinguistic studies, genre biases may be exaggerated in a corpus of this size. For example, the Auslan Corpus contains clips of many signers retelling the same narratives from prepared texts, cartoons, and picture-books (e.g., The Hare and the Tortoise; The Boy who Cried Wolf; Frog, Where are you?). Thus, the signs BOY, DOG, FROG, WOLF, and TORTOISE all appear within the top 50 most frequent signs, and Johnston (2012) recognizes this finding as a clear genre and text bias within the Auslan Corpus. Given such biases, it is not too surprising that little overlap was observed between the familiarity rankings from Vinson et al. (2008) and the frequency rankings from the Auslan Corpus. Nonetheless, as argued by Johnston (2012), familiarity ratings are likely to be problematic for classifier constructions and pointing signs (e.g., pronouns) whose use is dependent upon a discourse context.

In sum, the pattern of frequency effects in English and ASL for bimodal bilinguals, deaf ASL signers, and English monolingual speakers argues for the following: (a) English is the dominant language for bimodal bilinguals, even when ASL is acquired natively from birth; (b) less frequent use of ASL by bimodal bilinguals leads to a larger frequency effect for ASL, supporting the frequency-lag hypothesis; and (c) the frequent use of mouthing and/or code-blending may shield bimodal bilinguals from the lexical slowing that occurs for spoken language bilinguals in their dominant language.

Funding

National Institutes of Health (NIH) (HD047736 to K.E. and San Diego State University and HD050287 to T.G. and the University of California San Diego).

Conflicts of Interest

No conflicts of interest were reported.

Acknowledgments

The authors thank Lucinda O’Grady, Helsa Borinstein, Rachael Colvin, Danielle Lucien, Lindsay Nemeth, Erica Parker, and Jennie Pyers for assistance with stimuli development, data coding, participant recruitment, and testing. We would also like to thank all of the deaf and hearing participants, without whom this research would not be possible.

Notes

The pattern of results does not change if fingerspelled responses are eliminated from the analyses.

It is not clear why late bilinguals responded more quickly on some items. A possible contributing factor is the slightly higher error rate for late bilinguals (Figure 3B), which suggests a speed-accuracy trade-off, although the difference in error rate was not significant.

References

- Baayen R. H., Piepenbrock R., Gulikers L. (1995). The CELEX lexical database (CD-ROM). Philadelphia: Philadelphia Linguistic Data Consortium, University of Pennsylvania.

- Balota D. A., Piloti M., Cortese M. J. (2001). Subjective frequency estimates for 2,938 monosyllabic words. Memory & Cognition, 29, 639–647. doi:10.3758/BF03200465

- Bates E., D’Amico S., Jacobsen T., Szekely A., Andonova E., Devescovi A. … Tzeng O. (2003). Timed picture naming in seven languages. Psychonomic Bulletin & Review, 10, 344–380. doi:10.3758/BF03196494

- Baus C., Gutiérrez-Sigut E., Quer J., Carreiras M. (2008). Lexical access in Catalan signed language (LSC) production. Cognition, 108, 856–865. doi:10.1016/j.cognition.2008.05.012

- Bialystok E., Craik F. I. M., Green D. W., Gollan T. H. (2009). Bilingual minds. Psychological Science in the Public Interest, 10(3), 89–129. doi:10.1177/1529100610387084

- Bishop M. (2006). Bimodal bilingualism in hearing, native users of American Sign Language (Unpublished doctoral dissertation). Gallaudet University, Washington, DC.

- Boyes-Braem P., Sutton-Spence R. (Eds.) (2001). The hands are the head of the mouth: The mouth as articulator in sign languages. Hamburg, Germany: Signum.

- Brentari D., Padden C. (2001). A lexicon of multiple origins: Native and foreign vocabulary in American Sign Language. In Brentari D. (Ed.), Foreign vocabulary in sign languages: A cross-linguistic investigation of word formation (pp. 87–119). Mahwah, NJ: Lawrence Erlbaum Associates.

- Carreiras M., Gutiérrez-Sigut E., Baquero S., Corina D. (2008). Lexical processing in Spanish sign language (LSE). Journal of Memory and Language, 58(1), 100–122. doi:10.1016/j.jml.2007.05.004

- Christoffels I. K., de Groot A. M. B., Kroll J. F. (2006). Memory and language skills in simultaneous interpreters: The role of expertise and language proficiency. Journal of Memory and Language, 54, 324–345. doi:10.1016/j.jml.2005.12.004

- Cohen J. D., MacWhinney B., Flatt M., Provost J. (1993). PsyScope: An interactive graphic system for designing and controlling experiments in the psychology laboratory using Macintosh computers. Behavior Research Methods, Instruments, and Computers, 25(2), 257–271. doi:10.3758/BF03204507

- Duyck W., Vanderelst D., Desmet T., Hartsuiker R. J. (2008). The frequency effect in second-language visual word recognition. Psychonomic Bulletin & Review, 15, 850–855. doi:10.3758/PBR.15.4.850

- Emmorey K. (1991). Repetition priming with aspect and agreement morphology in American Sign Language. Journal of Psycholinguistic Research, 20(5), 365–388. doi:10.1007/BF01067970

- Emmorey K., Borinstein H. B., Thompson R., Gollan T. H. (2008). Bimodal bilingualism. Bilingualism: Language and Cognition, 11(1), 43–61. doi:10.1017/S1366728907003203

- Emmorey K., Petrich J. A. F., Gollan T. H. (2012). Bilingual processing of ASL-English code-blends: The consequences of accessing two lexical representations simultaneously. Journal of Memory and Language, 67, 199–210. doi:10.1016/j.jml.2012.04.005

- Forster K. I., Chambers S. M. (1973). Lexical access and naming time. Journal of Verbal Learning and Verbal Behavior, 12, 627–635. doi:10.1016/S0022-5371(73)80042-8

- Gernsbacher M. A. (1984). Resolving 20 years of inconsistent interactions between lexical familiarity and orthography, concreteness, and polysemy. Journal of Experimental Psychology: General, 113, 256–281. doi:10.1037/0096-3445.113.2.256

- Gilhooly K. J., Logie R. H. (1980). Age of acquisition, imagery, concreteness, familiarity, and ambiguity measures for 1,944 words. Behavior Research Methods & Instrumentation, 12, 395–427. doi:10.3758/BF03201693

- Gollan T. H., Acenas L. A. (2004). What is a TOT? Cognate and translation effects on tip-of-the-tongue states in Spanish–English and Tagalog–English bilinguals. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30, 246–269. doi:10.1037/0278-7393.30.1.246

- Gollan T. H., Bonanni M. P., Montoya R. I. (2005). Proper names get stuck on bilingual and monolingual speakers’ tip-of-the tongue equally often. Neuropsychology, 19, 278–287. doi:10.1037/0894-4105.19.3.278

- Gollan T. H., Fennema-Notestine C., Montoya R. I., Jernigan T. L. (2007). The bilingual effect on Boston Naming Test performance. Journal of the International Neuropsychological Society, 13, 197–208. doi:10.1017/S1355617707070038

- Gollan T. H., Montoya R., Cera C., Sandoval T. (2008). More use almost always means a smaller frequency effect: Aging, bilingualism, and the weaker links hypothesis. Journal of Memory and Language, 58, 787–814. doi:10.1016/j.jml.2007.07.00

- Gollan T., Montoya R., Fennema-Notestine C., Morris S. (2005). Bilingualism affects picture naming but not picture classification. Memory & Cognition, 33(7), 1220–1234. doi:10.3758/BF0319322

- Gollan T. H., Montoya R. I., Werner G. A. (2002). Semantic and letter fluency in Spanish–English bilinguals. Neuropsychology, 16, 562–576. doi:10.1037/0894-4105.16.4.562

- Gollan T. H., Silverberg N. B. (2001). Tip-of-the-tongue states in Hebrew-English bilinguals. Bilingualism: Language and Cognition, 4, 63–83. doi:10.1017/S136672890100013X

- Gollan T. H., Slattery T. J., Goldenberg D., van Assche E., Duyck W., Rayner K. (2011). Frequency drives lexical access in reading but not in speaking: The frequency-lag hypothesis. Journal of Experimental Psychology: General, 140(2), 186–209. doi:10.1037/a0022256

- Gordon B. (1985). Subjective frequency and the lexical decision latency function: Implications for mechanisms of lexical access. Journal of Memory and Language, 24, 631–645. doi:10.1016/0749-596X(85)90050-6

- Ivanova I., Costa A. (2008). Does bilingualism hamper lexical access in speech production? Acta Psychologica, 127, 277–288. doi:10.1016/j.actpsy.2007.06.003

- Johnston T. (2003). BSL, Auslan, and NZSL: Three signed languages or one? In Baker A., van den Bogaerde B., Crasborn O. (Eds.), Cross-linguistic perspectives in sign language research, selected papers from TISLR 2000 (pp. 47–69). Hamburg, Germany: Signum.

- Johnston T. (2008). The Auslan archive and corpus. In Nathan D. (Ed.), The endangered languages archive. London: Hans Rausing Endangered Languages Documentation Project, School of Oriental and African Studies, University of London. Retrieved from http://elar.soas.ac.uk/languages

- Johnston T. (2012). Lexical frequency in sign languages. Journal of Deaf Studies and Deaf Education, 17(2), 163–193. doi:10.1093/deafed/enr036

- Kohnert K., Bates E., Hernandez A. E. (1999). Balancing bilinguals: Lexical-semantic production and cognitive processing in children learning Spanish and English. Journal of Speech, Language, and Hearing Research, 42(6), 1400–1413. doi:1092-4399/99/4206-1400

- Kohnert K. J., Hernandez A. E., Bates E. (1998). Bilingual performance on the Boston Naming Test: Preliminary norms in Spanish and English. Brain and Language, 65, 422–440. doi:10.1006/brln.1998.2001

- Murray W. S., Forster K. I. (2004). Serial mechanisms in lexical access: The rank hypothesis. Psychological Review, 111, 721–756. doi:10.1037/0033-295X.111.3.721

- Nadolske M. A., Rosenstock R. (2007). Occurrence of mouthings in American Sign Language: A preliminary study. In Perniss P. M., Pfau R., Steinbach M. (Eds.), Visible variation (Vol. 188, pp. 35–62). Berlin, New York: Mouton de Gruyter. Retrieved from http://www.reference-global.com/doi/abs/10.1515/9783110198850.35

- Oldfield R. C., Wingfield A. (1965). Response latencies in naming objects. Quarterly Journal of Experimental Psychology, 17, 273–281. doi:10.1080/17470216508416445

- Padden C. (1998). The ASL lexicon. Sign Language & Linguistics, 1(1), 39–60.

- Petitto L. A., Katerelos M., Levy B. G., Gauna K., Tetreault K., Ferraro V. (2001). Bilingual signed and spoken language acquisition from birth: Implications for the mechanisms underlying early bilingual language acquisition. Journal of Child Language, 28(2), 453–496. doi:10.1017/S0305000901004718

- Portocarrero J. S., Burright R. G., Donovick P. J. (2007). Vocabulary and verbal fluency of bilingual and monolingual college students. Archives of Clinical Neuropsychology, 22, 415–422. doi:10.1016/j.acn.2007.01.015

- Pyers J., Gollan T. H., Emmorey K. (2009). Bimodal bilinguals reveal the source of tip-of-the-tongue states. Cognition, 112, 323–329. doi:10.1016/j.cognition.2009.04.007

- Roberts P. M., Garcia L. J., Desrochers A., Hernandez D. (2002). English performance of proficient bilingual adults on the Boston Naming Test. Aphasiology, 16, 635–645. doi:10.1080/02687030244000220

- Rosselli M., Ardila A., Araujo K., Weekes V. A., Caracciolo V., Padilla M., Ostrosky-Solis F. (2000). Verbal fluency and repetition skills in healthy older Spanish-English bilinguals. Applied Neuropsychology, 7, 17–24. doi:10.1207/S15324826AN0701_3

- Székely A., D’Amico S., Devescovi A., Federmeier K., Herron D., Iyer G. … Bates E. (2003). Timed picture naming: Extended norms and validation against previous studies. Behavior Research Methods, Instruments, & Computers, 35(4), 621–633. doi:10.3758/BF03195542

- Vinson D. P., Cormier K. A., Denmark T., Schembri A., Vigliocco G. (2008). The British Sign Language (BSL) norms for acquisition, familiarity, and iconicity. Behavior Research Methods, 40, 1079–1087. doi:10.3758/BRM.40.4.1079

- Vinson D. P., Thompson R. L., Skinner R., Fox N., Vigliocco G. (2010). The hands and mouth do not always slip together in British Sign Language: Dissociating articulatory channels in the lexicon. Psychological Science, 21(8), 1158–1167. doi:10.1177/0956797610377340