ABSTRACT

CONTEXT

The US Federal Government is investing up to $29 billion in incentives for meaningful use of electronic health records (EHRs). However, the effect of EHRs on ambulatory quality is unclear, with several large studies finding no effect.

OBJECTIVE

To determine the effect of EHRs on ambulatory quality in a community-based setting.

DESIGN

Cross-sectional study, using data from 2008.

SETTING

Ambulatory practices in the Hudson Valley of New York, with a median practice size of four physicians.

PARTICIPANTS

We included all general internists, pediatricians and family medicine physicians who: were members of the Taconic Independent Practice Association, had patients in a data set of claims aggregated across five health plans, and had at least 30 patients per measure for at least one of nine quality measures selected by the health plans.

INTERVENTION

Adoption of an EHR.

MAIN OUTCOME MEASURES

We compared physicians using EHRs to physicians using paper on performance for each of the nine quality measures, using t-tests. We also created a composite quality score by standardizing performance against a national benchmark and averaging standardized performance across measures. We used generalized estimating equations, adjusting for nine physician characteristics.

KEY RESULTS

We included 466 physicians and 74,618 unique patients. Of the physicians, 204 (44 %) had adopted EHRs and 262 (56 %) were using paper. Electronic health record use was associated with significantly higher quality of care for four of the measures: hemoglobin A1c testing in diabetes, breast cancer screening, chlamydia screening, and colorectal cancer screening. Effect sizes ranged from 3 to 13 percentage points per measure. When all nine measures were combined into a composite, EHR use was associated with higher quality of care (sd 0.4, p = 0.008).

CONCLUSIONS

This is one of the first studies to find a positive association between EHRs and ambulatory quality in a community-based setting.

KEY WORDS: electronic health records, primary health care, quality of health care

BACKGROUND

Electronic health records (EHRs) have become a US national priority, with up to $29 billion in federal incentives for “meaningful use” of EHRs.1,2 If physicians only use EHRs to do the same tasks that they previously did on paper, such as gathering and storing information, EHRs may not improve health care quality.3 “Meaningful use” refers to the use of EHRs not only for gathering and storing information, but also for tracking and improving specific outcomes.4

Electronic health records could improve quality by bringing computing capabilities that support evidence-based decision making to bear on clinical medicine. Clinical decision support, for example, combines patient characteristics stored in the EHR with computerized knowledge bases and clinical algorithms to generate patient-specific recommendations for physicians in real time.5

Studies on the effect of EHRs on ambulatory quality have been mixed. Several previous studies found no effect.6–10 Others found modest effects for some, but not all, of the quality measures considered.11–13 Still others were positive, but took place mostly in academic medical centers or integrated delivery systems with home-grown, rather than commercially available, EHRs.5,14 Few studies have taken place in communities with multiple payers and commercially available EHRs, which are typical of most American communities.15 Previous studies have also been limited by nonuniversal use of concurrent control groups,12 lack of adjustment for patient case mix,6–13 and small or unknown sample sizes of patients per quality measure per physician.6–13

We sought to determine the effect of commercially available EHRs on ambulatory quality in a multi-payer community, while addressing many of the other limitations of the previous literature.

METHODS

Overview

We conducted a cross-sectional study of EHRs and ambulatory quality among primary care physicians in the Hudson Valley region of New York in 2008. The Institutional Review Boards of Weill Cornell Medical College and Kingston Hospital approved the protocol. The study was registered with the National Institutes of Health Clinical Trials Registry (NCT00793065).

Setting

The Hudson Valley region comprises seven counties immediately north of New York City (Dutchess, Orange, Putnam, Rockland, Sullivan, Ulster and Westchester).

Context

This study took place in the context of an initiative led by THINC (the Taconic Health Information Network and Community), a nonprofit organization that convenes stakeholders to improve health care quality in the Hudson Valley.16 THINC’s efforts were supported by two grants from the New York State Department of Health: a $5,000,000 grant in 2006 to support EHR licenses for physicians in the community, and a $175,000 grant in 2007 to convene health plans for a pay-for-performance program.17,18 The pay-for-performance program allowed the health plans to select a common set of quality measures, which are studied here.

This study was also conducted as part of the Health Information Technology Evaluation Collaborative (HITEC), an academic consortium designated by New York State as the evaluation entity for health IT projects funded under the HEAL NY (Healthcare Efficiency and Affordability Law for New Yorkers) Capital Grant Program.19,20

Participants

We included all primary care physicians (general internists, pediatricians, and family medicine physicians) who were members of the Taconic Independent Practice Association (IPA), a not-for-profit organization whose membership is drawn from private practices and federally qualified health centers.21 We also included non-member physicians who volunteered for THINC’s initiative.

Electronic health records (EHRs)

We used data collected by the IPA in April 2008 on whether each physician had adopted an EHR. The IPA had previously formed MedAllies, a company that, among other services, facilitates EHR implementation.22 Physicians known to MedAllies as having implemented an EHR were classified as EHR users. If a physician's EHR status was unknown, the IPA surveyed the physician by phone and classified him or her by self-report. Practice management systems were specifically not considered EHRs. The community used at least five different major commercially available vendor products, all of which included computerized clinical decision support. Data on actual EHR usage were not available.

Physician Characteristics

The following physician characteristics were obtained from the IPA: age, gender, degree (MD vs. DO), specialty, county, practice size, and adoption of a practice management system.

Data Aggregation

Five health plans (two national commercial plans, Aetna and United; two regional commercial plans, MVP Healthcare and Capital District Physicians’ Health Plan; and one regional Medicaid health maintenance organization (HMO), Hudson Health Plan), which together cover approximately 60 % of the community’s commercially insured population, contributed data. Health plans submitted data for the entire calendar year 2008 to a third-party data aggregator. The data aggregator ensured adherence to standardized specifications and applied attribution logic (Appendix) that blended two methods previously used by the health plans.

Additional Physician Characteristics

The data aggregator generated two physician characteristics: the number of patients attributed to that physician (panel size) and case mix. Case mix was derived using DxCG (also called DCG) software, which applied a previously validated algorithm that has been used widely,23,24 including by the Centers for Medicare & Medicaid Services.25

Quality Measures

We considered the ten measures selected for the pay-for-performance program, which were drawn from the Healthcare Effectiveness Data and Information Set (HEDIS): 1–4) for patients with diabetes: eye exams, hemoglobin A1c testing, low-density lipoprotein (LDL) cholesterol testing, and nephropathy testing; 5) breast cancer screening; 6) chlamydia screening; 7) colorectal cancer screening; 8) appropriate medications for people with asthma; 9) testing for children with pharyngitis; and 10) treatment for children with upper respiratory infection. The first eight measures were applied to general internists and family physicians, and measures 6, 8, 9 and 10 were applied to pediatricians and family physicians. We used measure definitions from the National Committee for Quality Assurance (NCQA),26 except that data were derived only from claims (and not supplemented with manual chart review), due to the large sample size. Patients were each permitted to contribute data for more than one measure. For each physician, we considered only those measures for which s/he had at least 30 patients, as including fewer can result in unreliable estimates of quality.27,28 NCQA recommends this approach, even though fewer than half of physicians may be eligible at this level.27,28
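The per-measure reliability threshold can be sketched as a small filter. This is a minimal illustration under stated assumptions, not the study's SAS code: it assumes each physician's claims have already been reduced to a hypothetical mapping from measure name to a (numerator, denominator) pair, where the denominator counts eligible patients and the numerator counts those who received recommended care. The measure identifiers are invented for the example.

```python
from typing import Dict, Tuple

MIN_PATIENTS = 30  # minimum eligible patients per measure, as in the Methods


def reliable_performance(
    counts: Dict[str, Tuple[int, int]], min_patients: int = MIN_PATIENTS
) -> Dict[str, float]:
    """Return performance (numerator / denominator) for measures meeting the threshold.

    `counts` is a hypothetical {measure: (numerator, denominator)} mapping for one
    physician; measures with fewer than `min_patients` eligible patients are dropped
    instead of being reported as unreliable estimates.
    """
    return {
        measure: numerator / denominator
        for measure, (numerator, denominator) in counts.items()
        if denominator >= min_patients
    }


def is_included(counts: Dict[str, Tuple[int, int]]) -> bool:
    """A physician enters the main analysis if at least one measure qualifies."""
    return bool(reliable_performance(counts))


example = {"breast_cancer_screening": (36, 45), "colorectal_cancer_screening": (5, 12)}
print(reliable_performance(example))  # {'breast_cancer_screening': 0.8}
print(is_included(example))           # True
```

Dropping sub-threshold measures, rather than down-weighting them, mirrors the rule described above that a physician contributes only measures with at least 30 patients.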

Analysis

We considered the physician as the unit of analysis, because physicians are users of the intervention, namely EHRs. We used descriptive statistics to characterize the sample in terms of age, gender, degree, specialty, practice size, panel size, case mix, and adoption of a practice management system. We log-transformed panel size due to skewness. Using Census maps, we classified counties as rural versus urban/suburban. We stratified the sample into two groups, based on EHR adoption (yes/no). We compared the two groups, using t-tests for continuous variables and chi-squared tests for categorical variables.
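For the categorical comparisons, a 2 × 2 Pearson chi-squared test needs only the Python standard library, because with one degree of freedom the tail probability equals erfc(√(χ²/2)). This sketch assumes no continuity correction was applied (the Methods do not say); under that assumption, the gender, degree, and rural-county counts from Table 1 give p-values that round to the reported 0.40, 0.003, and 0.42. The function name is hypothetical.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple:
    """Pearson chi-squared test (no continuity correction) for the table
    [[a, b], [c, d]]; returns (statistic, p-value) with 1 degree of freedom."""
    n = a + b + c + d
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 df, the chi-squared upper-tail probability is erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Rows: paper (N = 262), EHR (N = 204); counts taken from Table 1.
_, p_gender = pearson_chi2_2x2(79, 262 - 79, 69, 204 - 69)      # female vs. male
_, p_degree = pearson_chi2_2x2(246, 262 - 246, 175, 204 - 175)  # MD vs. DO
_, p_rural = pearson_chi2_2x2(45, 262 - 45, 41, 204 - 41)       # rural vs. other
print(round(p_gender, 2), round(p_degree, 3), round(p_rural, 2))
```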

We measured each physician’s performance on each quality measure as a proportion: the number of eligible patients receiving recommended care divided by the total number of eligible patients for that measure. We compared each physician’s performance on each measure to NCQA’s 2008 national benchmark for that measure29 and expressed it as the number of standard deviations above or below that benchmark. We then created a composite quality score for each physician by averaging these standardized scores across measures. We standardized performance against a benchmark because typical performance, and the spread of performance, vary widely by measure. We calculated the average composite score for each study group and compared the two groups using a t-test.
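A minimal Python sketch of the composite score follows. The Methods do not state which standard deviation is used for standardization, so the sketch assumes the population standard deviation of physician-level performance on each measure across the study sample; the function name, measure names, and data are invented for illustration.

```python
from statistics import mean, pstdev
from typing import Dict, List


def composite_scores(
    performance: Dict[str, Dict[str, float]], benchmarks: Dict[str, float]
) -> Dict[str, float]:
    """Average, across measures, of each physician's distance from the national
    benchmark expressed in standard deviations.

    `performance` maps physician -> {measure: proportion}; only measures that met
    the 30-patient threshold should be present. ASSUMPTION: the SD for a measure is
    the population SD of physician-level performance on that measure in the sample.
    """
    by_measure: Dict[str, List[float]] = {}
    for scores in performance.values():
        for measure, p in scores.items():
            by_measure.setdefault(measure, []).append(p)
    sd = {m: pstdev(values) for m, values in by_measure.items()}

    composite = {}
    for physician, scores in performance.items():
        # Skip measures with zero spread, where a standardized score is undefined.
        z = [(p - benchmarks[m]) / sd[m] for m, p in scores.items() if sd[m] > 0]
        if z:
            composite[physician] = mean(z)
    return composite


performance = {
    "A": {"m1": 0.9, "m2": 0.4},
    "B": {"m1": 0.7, "m2": 0.8},
    "C": {"m1": 0.8},
}
benchmarks = {"m1": 0.8, "m2": 0.5}
print(composite_scores(performance, benchmarks))
```

Because each measure is rescaled by its own spread, a 10-point gap on a tightly clustered measure counts for more than the same gap on a widely dispersed one, which is the rationale the Methods give for standardizing.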

To adjust for potential confounders, we used linear regression with the composite quality score as a continuous outcome. We determined bivariate relationships between physician characteristics, including EHR adoption, and the composite quality score. We entered all variables with bivariate p values ≤ 0.20 into a multivariable model and used backwards stepwise elimination to select the most parsimonious model. We had complete data on all variables in the final model. We considered p values < 0.05 to be significant.
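The two-stage variable selection (bivariate screening at p ≤ 0.20, then backwards elimination until all retained covariates have p < 0.05) can be sketched independently of any regression library. In this sketch the model fit is passed in as a hypothetical callable that returns p-values for the covariates it is given; the covariate names and p-values in the usage example are made up and are not study results. The `forced` option mirrors the way case mix was manually retained in Table 3.

```python
from typing import Callable, Dict, Iterable, List

# Hypothetical interface: given covariate names, refit the model and return
# each covariate's p-value. Any regression routine could supply this.
FitFn = Callable[[List[str]], Dict[str, float]]


def select_covariates(
    bivariate_p: Dict[str, float],
    fit_pvalues: FitFn,
    entry: float = 0.20,
    stay: float = 0.05,
    forced: Iterable[str] = (),
) -> List[str]:
    forced_set = set(forced)
    # Step 1: screen on bivariate p <= entry; forced covariates always enter.
    model = [v for v, p in bivariate_p.items() if p <= entry or v in forced_set]
    # Step 2: backwards elimination. Refit, drop the least significant
    # non-forced covariate with p >= stay, and repeat until none remain.
    while True:
        pvals = fit_pvalues(model)
        removable = {v: p for v, p in pvals.items() if v not in forced_set and p >= stay}
        if not removable:
            return model
        model.remove(max(removable, key=removable.get))


# Toy example with invented p-values (constant across refits for simplicity).
bivariate = {"x1": 0.001, "x2": 0.01, "x3": 0.48, "x4": 0.002, "x5": 0.13}
toy = {"x1": 0.01, "x2": 0.001, "x4": 0.30, "x5": 0.79}


def toy_fit(covariates: List[str]) -> Dict[str, float]:
    return {v: toy[v] for v in covariates}


print(select_covariates(bivariate, toy_fit, forced=["x5"]))  # ['x1', 'x2', 'x5']
```

In a real analysis the p-values change at every refit, which is why the sketch recomputes them inside the loop rather than eliminating everything non-significant in one pass.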

We conducted three separate sensitivity analyses. First, we restricted the analysis to only those measures expected to be directly affected by use of an EHR. For this analysis, we excluded two measures (appropriate testing for children with pharyngitis and appropriate treatment for children with upper respiratory infection), as performance on these measures depends largely on medical decision making informed by the history of present illness and physical examination, rather than on data pre-existing in the medical record.

A second sensitivity analysis considered only those measures defined by NCQA, based on their field testing, as “administrative-only measures,” excluding “hybrid measures.”30 Because hybrid measures were designed to include supplemental data from manual chart review, use of administrative data only for hybrid measures may underestimate quality.30 The five administrative measures were: breast cancer screening, chlamydia screening, use of appropriate medications for people with asthma, appropriate testing for children with pharyngitis, and appropriate treatment for children with upper respiratory infection.
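The measure subsets used in the primary and sensitivity analyses can be written down explicitly. The identifiers below are hypothetical labels for the ten HEDIS measures listed above. Removing the asthma measure, which no physician could contribute at the 30-patient threshold, leaves nine measures for the primary analysis and seven for the EHR-sensitive analysis, matching the counts reported in the Results.

```python
from typing import Dict, FrozenSet, List

MEASURES = [
    "diabetes_eye_exam", "diabetes_hba1c_testing", "diabetes_ldl_testing",
    "diabetes_nephropathy_testing", "breast_cancer_screening", "chlamydia_screening",
    "colorectal_cancer_screening", "asthma_appropriate_medications",
    "pharyngitis_appropriate_testing", "uri_appropriate_treatment",
]
# Driven mainly by history and physical examination rather than stored data.
NOT_EHR_SENSITIVE = {"pharyngitis_appropriate_testing", "uri_appropriate_treatment"}
# NCQA administrative-only measures, as listed in the Methods.
ADMINISTRATIVE_ONLY = {
    "breast_cancer_screening", "chlamydia_screening", "asthma_appropriate_medications",
    "pharyngitis_appropriate_testing", "uri_appropriate_treatment",
}


def analysis_sets(dropped: FrozenSet[str] = frozenset()) -> Dict[str, List[str]]:
    """Measures entering each analysis after removing any dropped measures."""
    available = [m for m in MEASURES if m not in dropped]
    return {
        "primary": available,
        "ehr_sensitive": [m for m in available if m not in NOT_EHR_SENSITIVE],
        "administrative_only": [m for m in available if m in ADMINISTRATIVE_ONLY],
    }


sets = analysis_sets(dropped=frozenset({"asthma_appropriate_medications"}))
print({name: len(ms) for name, ms in sets.items()})
```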

Finally, we conducted an analysis without requiring physicians to have at least 30 patients per measure for at least one measure, thereby determining if this requirement introduced any unintended bias.

All analyses were conducted with SAS (version 9.2; Cary, NC).

RESULTS

Study Sample

The Taconic IPA database included a total of 4,403 providers, of whom 3,548 (81 %) practiced in the Hudson Valley (Fig. 1). Of those, 1,131 (32 %) were primary care physicians. Of those, 923 (82 %) had at least one patient with quality data in the aggregated claims. When the sample was restricted to physicians with ≥ 30 patients for at least one quality measure, 466 physicians (50 %) qualified for inclusion; together, they had 74,618 unique patients who contributed quality data.
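The percentages in the sample derivation are each relative to the preceding step, not to the starting total. A short sketch with the counts from the text makes this explicit; the step labels are paraphrased.

```python
# Counts at each step of the sample derivation (Fig. 1).
steps = [
    ("Taconic IPA providers", 4403),
    ("Practicing in the Hudson Valley", 3548),
    ("Primary care physicians", 1131),
    ("Any patients with quality data", 923),
    (">= 30 patients for >= 1 measure", 466),
]


def retention(steps):
    """Percentage of the previous step retained at each step, rounded to whole percent."""
    return [
        (label, n, round(100 * n / prev_n))
        for (_, prev_n), (label, n) in zip(steps, steps[1:])
    ]


for label, n, pct in retention(steps):
    print(f"{label}: {n} ({pct} %)")
```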

Figure 1.

Derivation of the study sample. * The 7 Hudson Valley counties were: Dutchess, Orange, Putnam, Rockland, Sullivan, Ulster and Westchester. † Primary Care Providers were MDs or DOs in General Internal Medicine, Pediatrics and Family Medicine.

Physician Characteristics

Almost all (96 %) of the physicians were members of the Taconic IPA. The average physician was 52 years old (Table 1). Approximately one-third were women. Approximately half were general internists, one-third were family medicine physicians, and one-eighth were pediatricians. One-fifth practiced in a rural county. The median practice size was four physicians. The average number of patients per physician included in the data set was just under 400. The average case mix score for the physicians in the sample was 3.3, reflecting patients sicker than the national average. Approximately two-thirds of the physicians had electronic practice management systems. Slightly more than half of the physicians (N = 262; 56 %) were using paper records, and the remainder (N = 204; 44 %) were using EHRs.

Table 1.

Characteristics of Primary Care Physicians

| Physician characteristic | Total (N = 466) | Paper (N = 262) | EHR (N = 204) | p-value |
|---|---|---|---|---|
| Age in years*: mean (sd) | 52 (10) | 54 (10) | 49 (9) | < 0.001 |
| Gender, female: N (%) | 148 (32) | 79 (30) | 69 (34) | 0.40 |
| Degree, MD (vs. DO): N (%) | 421 (90) | 246 (94) | 175 (86) | 0.003 |
| Specialty: N (%) | | | | 0.84 |
| General internal medicine | 251 (54) | 141 (54) | 110 (54) | |
| Pediatrics | 57 (12) | 34 (13) | 23 (11) | |
| Family medicine | 158 (34) | 87 (33) | 71 (35) | |
| Rural county: N (%) | 86 (18) | 45 (17) | 41 (20) | 0.42 |
| Practice size*: mean (sd) | 17 (32) | 3 (4) | 35 (41) | < 0.001 |
| Panel size†: N (%) | | | | 0.09 |
| Fewer than 200 | 123 (26) | 77 (29) | 46 (23) | |
| 200–299 | 104 (22) | 63 (24) | 41 (20) | |
| 300–499 | 125 (27) | 60 (23) | 65 (32) | |
| 500 or more | 114 (24) | 62 (24) | 52 (25) | |
| Case mix score: mean (sd) | 3.3 (1.8) | 3.2 (1.9) | 3.4 (1.7) | 0.23 |
| Has electronic practice management system: N (%) | 295 (63) | 133 (51) | 162 (79) | < 0.001 |

EHR electronic health record

* Age was missing for one provider. Practice size was missing for 65 providers.

† Panel size reflects the number of the physician’s patients who are in the data set

Electronic health record users were more likely than paper users to be younger, to have a larger practice size, and to have a practice management system (Table 1). Electronic health record users were less likely than paper users to hold an MD (vs. DO) degree. There were no significant differences by gender, specialty, county (rural vs. urban/suburban), panel size, or case mix.

Quality of Care

Of the ten HEDIS measures considered, only nine had enough patients for subsequent analysis. The asthma measure was dropped, as no physician had at least 30 patients with asthma. For the nine included measures, each physician contributed on average to 2.6 quality measures (median 2). On average, each patient contributed to 1.7 quality measures (median 1).
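The average of 2.6 measures per physician can be checked against the N column of Table 2: each physician-measure pair is counted once in a measure's N, so summing N over the nine measures and dividing by the 466 physicians gives the mean. The dictionary keys are shorthand labels chosen for this sketch.

```python
# Physicians contributing to each measure (N column of Table 2).
physicians_per_measure = {
    "diabetes_eye_visit": 86, "diabetes_hba1c": 86, "diabetes_ldl": 86,
    "diabetes_nephropathy": 86, "breast_cancer": 358, "chlamydia": 27,
    "colorectal_cancer": 391, "pharyngitis": 28, "uri": 55,
}
N_PHYSICIANS = 466

physician_measure_pairs = sum(physicians_per_measure.values())  # 1203
print(physician_measure_pairs, round(physician_measure_pairs / N_PHYSICIANS, 1))
```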

Differences in Quality

In terms of absolute performance, physicians using EHRs provided higher rates of recommended care than physicians using paper for four of the measures (Table 2). Electronic health record use was associated with significantly higher performance than paper for: hemoglobin A1c testing in diabetes (90.1 % vs. 84.2 %, p = 0.003), breast cancer screening (78.6 % vs. 74.2 %, p < 0.001), chlamydia screening (65.8 % vs. 53.0 %, p = 0.02) and colorectal cancer screening (51.3 % vs. 48.0 %, p = 0.01). Electronic health record use was associated with higher quality for two other measures, though these were not statistically significant: LDL testing in diabetes (87.6 % vs. 85.1 %, p = 0.26) and nephropathy screening in diabetes (68.2 % vs. 64.8 %, p = 0.33).
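The reported range of effect sizes is the spread of the EHR-minus-paper differences, in percentage points, across the four significant measures. A short sketch using the group means from Table 2 reproduces the range of roughly 3 to 13 points.

```python
# (paper %, EHR %) for the four measures with significant differences (Table 2).
significant = {
    "HbA1c testing in diabetes": (84.2, 90.1),
    "Breast cancer screening": (74.2, 78.6),
    "Chlamydia screening": (53.0, 65.8),
    "Colorectal cancer screening": (48.0, 51.3),
}

# Round to one decimal place to remove floating-point noise from the subtraction.
differences = {m: round(ehr - paper, 1) for m, (paper, ehr) in significant.items()}
print(differences)
print(min(differences.values()), max(differences.values()))
```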

Table 2.

Absolute Performance on Quality Measures, by Measure and by Type of Medical Record (N = 466 Physicians, Requiring at Least 30 Patients for at Least One Measure Per Physician)

| Quality measure | N† | Hudson Valley overall* | Paper* | EHR* | p-value‡ | National benchmark |
|---|---|---|---|---|---|---|
| Diabetes: eye visit | 86 | 34.1 % | 35.1 % | 32.7 % | 0.28 | 56.5 % |
| Diabetes: HgbA1c testing | 86 | 86.7 % | 84.2 % | 90.1 % | < 0.01 | 89.0 % |
| Diabetes: LDL testing | 86 | 86.2 % | 85.1 % | 87.6 % | 0.26 | 84.8 % |
| Diabetes: nephropathy screening | 86 | 66.3 % | 64.8 % | 68.2 % | 0.33 | 82.4 % |
| Breast cancer screening | 358 | 76.1 % | 74.2 % | 78.6 % | < 0.001 | 70.2 % |
| Chlamydia screening | 27 | 59.7 % | 53.0 % | 65.8 % | 0.02 | 41.7 % |
| Colorectal cancer screening | 391 | 49.4 % | 48.0 % | 51.3 % | 0.01 | 58.6 % |
| Appropriate testing for children with pharyngitis | 28 | 65.9 % | 74.2 % | 52.9 % | 0.05 | 75.6 % |
| Appropriate treatment for children with upper respiratory infection | 55 | 91.5 % | 93.0 % | 90.0 % | 0.19 | 83.9 % |

EHR electronic health record. Hgb A1c hemoglobin A1c. LDL low-density lipoprotein

* Performance is first calculated as the proportion of a provider’s patients who received the recommended intervention and then averaged across the providers in the stated group

† Of the 466 physicians, 315 contributed data for only breast cancer and colorectal cancer screening

‡ p-values were calculated with t-tests comparing the paper and EHR groups

Physicians using EHRs provided a lower rate of recommended care than physicians using paper for one of nine quality measures, appropriate testing for children with pharyngitis, which was marginally significant (52.9 % vs. 74.2 %, p = 0.05).

When we combined measures into a composite score, we found that EHR use was associated with higher quality than paper, both in unadjusted (p < 0.001) and adjusted (p = 0.008) models (Table 3). The magnitude of the adjusted effect was 0.37 standard deviations.

Table 3.

Association Between Physician Characteristics and Quality of Care, Measuring Quality as Standard Deviations Away from the National Benchmark and Averaged Across Measures (N = 465 Physicians*, Requiring at Least 30 Patients for at Least One Measure Per Physician)

| Physician characteristic | Bivariate models: estimate (unadjusted) | p-value | Multivariable model†: estimate (adjusted) | p-value |
|---|---|---|---|---|
| EHR (vs. paper) | 0.488 | < 0.001 | 0.373 | 0.008 |
| Age (per 10-year increase) | −0.313 | < 0.001 | −0.238 | 0.001 |
| Female gender | 0.632 | < 0.001 | 0.422 | 0.005 |
| MD degree (vs. DO) | 0.175 | 0.48 | – | – |
| Specialty | | | | |
| Pediatrics (vs. IM) | 0.797 | < 0.001 | 0.871 | 0.001 |
| Family medicine (vs. IM) | −0.660 | < 0.001 | −0.640 | < 0.001 |
| Rural (vs. suburban/urban) | −0.582 | 0.002 | – | – |
| Practice size | 0.009 | < 0.001 | – | – |
| Panel size | | | | |
| 200–299 (vs. < 200) | 0.226 | 0.28 | – | – |
| 300–499 (vs. < 200) | 0.335 | 0.09 | – | – |
| 500 or more (vs. < 200) | 0.118 | 0.56 | – | – |
| Case mix score | −0.056 | 0.13 | 0.012 | 0.79 |
| Practice management system (vs. none) | 0.582 | < 0.001 | – | – |

* For the multivariable model, one physician was excluded due to missing data

† Physician characteristics were entered into the multivariable model if p ≤ 0.2 in the bivariate analyses. Backwards stepwise elimination was performed, with case mix manually retained in the final model

Sensitivity Analyses

When we restricted the analysis to the seven measures expected to be directly affected by EHRs, use of an EHR was independently associated with higher quality of care (sd 0.52, p < 0.001). Moreover, there was no difference in quality between EHR use and paper for measures not expected to be directly affected by EHRs (sd 0.10, p = 0.79).

When we restricted the analysis to administrative measures, use of an EHR was still independently associated with higher quality of care (sd 0.46, p = 0.01).

When we removed the requirement to have at least 30 patients per measure, the results persisted. Physicians using EHRs had significantly better performance than physicians using paper on five quality measures: hemoglobin A1c testing, LDL testing, and nephropathy screening in diabetes; chlamydia screening; and colorectal cancer screening (p < 0.05, Table 4). Electronic health record use remained independently associated with higher composite quality scores (sd 0.91, p < 0.001, Table 5).

Table 4.

Absolute Performance on Quality Measures, by Measure and by Type of Medical Record (N = 923 Physicians, With No Minimum Number of Patients Per Measure)

| Quality measure | N | Hudson Valley overall* | Paper* | EHR* | p-value† | National benchmark |
|---|---|---|---|---|---|---|
| Diabetes: eye visit | 602 | 38.0 % | 37.1 % | 39.2 % | 0.36 | 56.5 % |
| Diabetes: HgbA1c testing | 602 | 84.0 % | 81.1 % | 87.7 % | < 0.001 | 89.0 % |
| Diabetes: LDL testing | 602 | 83.5 % | 81.2 % | 86.6 % | 0.001 | 84.8 % |
| Diabetes: nephropathy screening | 602 | 62.8 % | 59.2 % | 67.4 % | < 0.001 | 82.4 % |
| Breast cancer screening | 648 | 74.4 % | 73.0 % | 76.1 % | 0.05 | 70.2 % |
| Chlamydia screening | 732 | 50.7 % | 48.5 % | 53.5 % | 0.03 | 41.7 % |
| Colorectal cancer screening | 639 | 47.5 % | 45.8 % | 49.6 % | 0.02 | 58.6 % |
| Appropriate testing for children with pharyngitis | 414 | 78.9 % | 77.8 % | 80.1 % | 0.46 | 75.6 % |
| Appropriate treatment for children with upper respiratory infection | 428 | 87.1 % | 85.3 % | 89.2 % | 0.10 | 83.9 % |
| Use of appropriate medications for people with asthma‡ | 513 | 93.6 % | 92.3 % | 95.1 % | 0.08 | 92.4 % |

EHR electronic health record. Hgb A1c hemoglobin A1c. LDL low-density lipoprotein

* Performance is first calculated as the proportion of a provider’s patients who received the recommended intervention and then averaged across the providers in the stated group

† p-values were calculated with t-tests comparing the paper and EHR groups

‡ Asthma re-enters the analysis when there is no minimum number of patients required per measure

Table 5.

Association Between Physician Characteristics and Quality of Care, Measuring Quality as Standard Deviations Away from the National Benchmark and Averaged Across Measures (N = 922 Physicians*, With No Minimum Number of Patients Per Measure)

| Physician characteristic | Bivariate models: estimate (unadjusted) | p-value | Multivariable model†: estimate (adjusted) | p-value |
|---|---|---|---|---|
| EHR (vs. paper) | 0.814 | < 0.001 | 0.906 | < 0.001 |
| Age (per 10-year increase) | −0.179 | 0.02 | – | – |
| Female gender | 0.422 | 0.01 | – | – |
| MD degree (vs. DO) | −0.062 | 0.84 | – | – |
| Specialty | | | | |
| Pediatrics (vs. IM) | 0.838 | < 0.001 | 0.512 | 0.02 |
| Family medicine (vs. IM) | −0.547 | 0.006 | −0.726 | < 0.001 |
| Rural (vs. suburban/urban) | −0.504 | 0.03 | – | – |
| Practice size* | 0.010 | < 0.001 | – | – |
| Panel size | | | | |
| 200–299 (vs. < 200) | 0.225 | 0.34 | – | – |
| 300–499 (vs. < 200) | 0.454 | 0.04 | – | – |
| 500 or more (vs. < 200) | 0.176 | 0.47 | – | – |
| Case mix score | −0.124 | < 0.001 | −0.091 | < 0.001 |
| Practice management system (vs. none) | 0.634 | < 0.001 | – | – |

* For the multivariable model, one physician was excluded due to missing data

† Physician characteristics were entered into the multivariable model if p ≤ 0.2 in the bivariate analyses. Backwards stepwise elimination was performed

DISCUSSION

We found an association between EHR use and higher quality ambulatory care. Physicians using EHRs provided significantly higher rates of recommended care than physicians using paper for four quality measures: hemoglobin A1c testing for patients with diabetes, breast cancer screening, chlamydia screening, and colorectal cancer screening. The magnitude of the differences between EHR use and paper for these measures ranged from approximately 3 to 13 percentage points. When quality measures were combined via standardization against a national benchmark, physicians using EHRs provided higher rates of recommended care than physicians using paper (adjusted p < 0.01), with an effect size of 0.4 standard deviations.

This result is different from several national and statewide studies that have found no association between EHR use and ambulatory quality.6–10 The magnitude of the observed association is slightly less than the 12–20 % improvement in quality typical for inpatient EHRs and for some previous ambulatory EHR initiatives.14

Of the four diabetes measures in this study, only hemoglobin A1c testing differed significantly between groups, a finding consistent with previous work.31 Reminders for providers to order hemoglobin A1c testing in patients with diabetes are a common type of decision support in EHRs, as are reminders for breast cancer screening, chlamydia screening, and colorectal cancer screening, which were also significant in this study.32 Rates of LDL testing and nephropathy screening were higher among physicians using EHRs than among those using paper, but these differences were not significant, perhaps because of limited statistical power for those measures (86 physicians each). The very low rates of eye visits may reflect the limitation of relying on administrative data alone for that measure.

The specific quality measures included in this study are highly relevant to national discussions. All seven quality measures expected to be affected by EHRs are included as clinical quality measures in the federal meaningful use program.33 Previously, there was little evidence that using EHRs actually improves quality on these measures.

This study took place in a community with multiple payers, in contrast to integrated delivery systems such as Kaiser Permanente,12,34 Geisinger,35 and the Veterans Health Administration,36 all of which have seen quality improvements with the implementation of health information technology. Because most health care is delivered in “open” rather than integrated systems, this setting increases the potential generalizability of the study.

The physicians in this study had age, gender and specialty distributions similar to national averages.37 Overall performance on the quality measures was similar to the national benchmark for two measures, lower for four measures, and higher for three measures,29 showing no systematic departure from national norms. That only half of eligible physicians had at least 30 patients per measure for at least one measure is consistent with national trends.28

This study took place in a community with a concerted effort to implement EHRs and coordinate quality improvement goals. The grants that offset the costs of EHRs and the programs that coordinated the selection of quality measures in this community in 2008 were similar to the financial incentives and clinical quality measures of the meaningful use program. The pay-for-performance program that led to the selection of the quality measures studied here was later combined with a demonstration project for implementation of the Patient-Centered Medical Home model of ambulatory care.38 Medical Home implementation began in 2009, after the data for this study were collected.

Several limitations merit discussion. First, like most previous studies, this study relied partly on self-reported EHR adoption for its predictor variable, which does not capture how, or how much, physicians actually used their EHRs. Second, the study is cross-sectional, so we cannot establish that EHR use caused higher quality of care. Several recent studies have also used cross-sectional designs,7–9,11,13 but future studies should use longitudinal designs. Third, physicians were not randomly allocated to study groups, and results could be confounded by unmeasured variables. This study cannot rule out the possibility that EHR users provide higher quality of care for reasons other than the EHR itself. However, our finding that performance was higher only on those measures expected to be affected by EHRs (and not on those measures not expected to be affected) suggests that EHR use may contribute to better performance. A randomized controlled trial is also unlikely to take place, given current federal programs. Fourth, we relied on claims data to measure quality. Ideally, we would have used more detailed clinical data from the EHRs and paper records, but this was not feasible. Finally, the small number of pediatric measures is notable and consistent with national calls for the development of more pediatric measures.39

In conclusion, we found that EHR use is associated with higher quality ambulatory care. This finding occurred in a multi-payer community with concerted efforts to support EHR implementation. In contrast to several recent national and statewide studies, which found no effect of EHR use, this study’s finding is consistent with national efforts to promote meaningful use of EHRs.

Acknowledgements

This work was supported by the Commonwealth Fund, the Taconic Independent Practice Association, and the New York State Department of Health (contract #C023699). The authors specifically thank A. John Blair III, MD, President of the Taconic IPA and CEO of MedAllies, and Susan Stuard, MBA, Executive Director of THINC. All authors have contributed sufficiently to be authors and have approved the final manuscript. The authors take full responsibility for the design and conduct of the study and controlled the decision to publish. The authors had full access to the data, and take responsibility for the integrity of the data and the accuracy of the data analysis. A full list of HITEC Investigators can be found at: www.hitecny.org/about-us/our-team/. This work was previously presented as a poster at the Annual Symposium of the American Medical Informatics Association on October 25, 2011.

Conflict of Interest

The authors declare that they do not have conflicts of interest.

Role of the Funding Agencies

The funding sources had no role in the study’s design, conduct or reporting.

APPENDIX

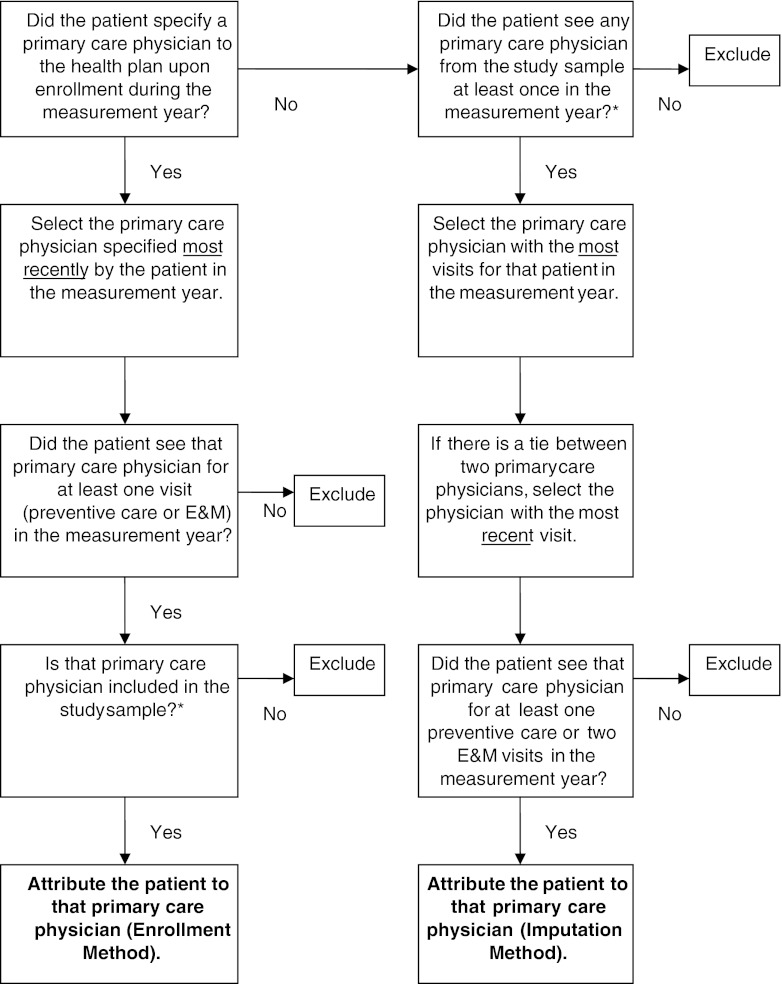

Figure 2.

Logic for attributing patients to primary care physicians. *See Figure 1 for a derivation of the study sample.

REFERENCES

- 1.American Recovery and Reinvestment Act of 2009, Pub L, No. 111-5, 123 Stat 115.

- 2.Steinbrook R. Health care and the American recovery and reinvestment act. N Engl J Med. 2009;360:1057–1060. doi: 10.1056/NEJMp0900665. [DOI] [PubMed] [Google Scholar]

- 3.McDonald C, Abhyankar S. Clinical decision support and rich clinical repositories: a symbiotic relationship: comment on “electronic health records and clinical decision support systems”. Arch Intern Med. 2011;171:903–905. doi: 10.1001/archinternmed.2010.518. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010;363:501–504. doi: 10.1056/NEJMp1006114. [DOI] [PubMed] [Google Scholar]

- 5. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005;293:1223–1238. doi: 10.1001/jama.293.10.1223.

- 6. Keyhani S, Hebert PL, Ross JS, Federman A, Zhu CW, Siu AL. Electronic health record components and the quality of care. Med Care. 2008;46:1267–1272. doi: 10.1097/MLR.0b013e31817e18ae.

- 7. Linder JA, Ma J, Bates DW, Middleton B, Stafford RS. Electronic health record use and the quality of ambulatory care in the United States. Arch Intern Med. 2007;167:1400–1405. doi: 10.1001/archinte.167.13.1400.

- 8. Poon EG, Wright A, Simon SR, et al. Relationship between use of electronic health record features and health care quality: results of a statewide survey. Med Care. 2010;48:203–209. doi: 10.1097/MLR.0b013e3181c16203.

- 9. Romano MJ, Stafford RS. Electronic health records and clinical decision support systems: impact on national ambulatory care quality. Arch Intern Med. 2011;171:897–903. doi: 10.1001/archinternmed.2010.527.

- 10. Zhou L, Soran CS, Jenter CA, et al. The relationship between electronic health record use and quality of care over time. J Am Med Inform Assoc. 2009;16:457–464. doi: 10.1197/jamia.M3128.

- 11. Friedberg MW, Coltin KL, Safran DG, Dresser M, Zaslavsky AM, Schneider EC. Associations between structural capabilities of primary care practices and performance on selected quality measures. Ann Intern Med. 2009;151:456–463. doi: 10.7326/0003-4819-151-7-200910060-00006.

- 12. Garrido T, Jamieson L, Zhou Y, Wiesenthal A, Liang L. Effect of electronic health records in ambulatory care: retrospective, serial, cross sectional study. BMJ. 2005;330:581. doi: 10.1136/bmj.330.7491.581.

- 13. Walsh MN, Yancy CW, Albert NM, et al. Electronic health records and quality of care for heart failure. Am Heart J. 2010;159:635–642.e1. doi: 10.1016/j.ahj.2010.01.006.

- 14. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144:742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 15. Buntin MB, Burke MF, Hoaglin MC, Blumenthal D. The benefits of health information technology: a review of the recent literature shows predominantly positive results. Health Aff (Millwood). 2011;30:464–471. doi: 10.1377/hlthaff.2011.0178.

- 16. THINC: Taconic Health Information Network and Community. (Accessed August 31, 2012, at www.thincrhio.org.)

- 17. New York State Department of Health. Hudson Valley Region–Health Information Technology (HIT) Grants–HEAL NY Phase 1. 2007. (Accessed August 31, 2012, at www.health.state.ny.us/technology/awards/regions/hudson_valley.)

- 18. New York Governor’s Office. New York State provides $9.5 million for incentive program to promote high-quality, more affordable health care. 2007. (Accessed August 31, 2012, at www.nyqa.org/NYS-provides.pdf.)

- 19. Kern LM, Kaushal R. Health information technology and health information exchange in New York State: new initiatives in implementation and evaluation. J Biomed Inform. 2007;40:S17–S20. doi: 10.1016/j.jbi.2007.08.010.

- 20. Health Information Technology Evaluation Collaborative (HITEC). (Accessed August 31, 2012, at www.hitecny.org.)

- 21. Taconic IPA. (Accessed August 31, 2012, at www.taconicipa.com.)

- 22. MedAllies. (Accessed August 31, 2012, at www.medallies.com.)

- 23. DxCG Intelligence. (Accessed August 31, 2012, at www.veriskhealth.com/solutions/enterprise-analytics/dxcg-intelligence.)

- 24. Ash AS, Ellis RP, Pope GC, et al. Using diagnoses to describe populations and predict costs. Health Care Financ Rev. 2000;21:7–28.

- 25. Medicare Advantage–Rates and Statistics–Risk Adjustment. (Accessed August 31, 2012, at http://www.cms.gov/MedicareAdvtgSpecRateStats/06_Risk_adjustment.asp#TopOfPage.)

- 26. National Committee for Quality Assurance. HEDIS & Quality Measurement. (Accessed August 31, 2012, at http://www.ncqa.org/tabid/59/Default.aspx.)

- 27. Scholle SH, Roski J, Adams JL, et al. Benchmarking physician performance: reliability of individual and composite measures. Am J Manag Care. 2008;14:833–838.

- 28. Scholle SH, Roski J, Dunn DL, et al. Availability of data for measuring physician quality performance. Am J Manag Care. 2009;15:67–72.

- 29. National Committee for Quality Assurance. The state of health care quality: Reform, the quality agenda and resource use. 2010. (Accessed August 31, 2012, at http://www.ncqa.org/tabid/836/Default.aspx.)

- 30. Pawlson LG, Scholle SH, Powers A. Comparison of administrative-only versus administrative plus chart review data for reporting HEDIS hybrid measures. Am J Manag Care. 2007;13:553–558.

- 31. Cebul RD, Love TE, Jain AK, Hebert CJ. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365:825–833. doi: 10.1056/NEJMsa1102519.

- 32. Dexheimer JW, Talbot TR, Sanders DL, Rosenbloom ST, Aronsky D. Prompting clinicians about preventive care measures: a systematic review of randomized controlled trials. J Am Med Inform Assoc. 2008;15:311–320. doi: 10.1197/jamia.M2555.

- 33. U.S. Department of Health and Human Services. Medicare and Medicaid Programs; Electronic Health Record Incentive Program; Final Rule. 75 Federal Register 44314 (2010) (42 CFR Parts 412, 413, 422 and 495).

- 34. Chen C, Garrido T, Chock D, Okawa G, Liang L. The Kaiser Permanente Electronic Health Record: transforming and streamlining modalities of care. Health Aff (Millwood). 2009;28:323–333. doi: 10.1377/hlthaff.28.2.323.

- 35. Paulus RA, Davis K, Steele GD. Continuous innovation in health care: implications of the Geisinger experience. Health Aff (Millwood). 2008;27:1235–1245. doi: 10.1377/hlthaff.27.5.1235.

- 36. Perlin JB, Kolodner RM, Roswell RH. The Veterans Health Administration: quality, value, accountability, and information as transforming strategies for patient-centered care. Am J Manag Care. 2004;10:828–836.

- 37. American Medical Association. Physician characteristics and distribution in the U.S., 2011 edition, Division of Survey and Data Resources, American Medical Association, 2011.

- 38. Bitton A, Martin C, Landon BE. A nationwide survey of patient centered medical home demonstration projects. J Gen Intern Med. 2010;25:584–592. doi: 10.1007/s11606-010-1262-8.

- 39. Institute of Medicine. Pediatric health and health care quality measures. 2010. (Accessed August 31, 2012, at http://www.iom.edu/Activities/Quality/PediatricQualityMeasures.aspx.)