Abstract

In resurgence, an operant behavior that has undergone extinction can return (“resurge”) when a second operant that has replaced it itself undergoes extinction. The phenomenon may provide insight into relapse that may occur after incentive or contingency management therapies in humans. Three experiments with rats examined the impact of several variables on the strength of the resurgence effect. In each, pressing one lever (L1) was first reinforced and then extinguished while pressing a second, alternative, lever (L2) was now reinforced. When L2 responding was then itself extinguished, L1 responses resurged. Experiment 1 found that resurgence was especially strong after an extensive amount of L1 training (12 as opposed to 4 training sessions) and after L1 was reinforced on a random ratio schedule as opposed to a variable interval schedule that was matched on reinforcement rate. Experiment 2 found that after 12 initial sessions of L1 training, 4, 12, or 36 sessions of Phase 2 each allowed substantial (and apparently equivalent) resurgence. Experiment 3 found no effect of changing the identity of the reinforcer (from grain pellet to sucrose pellet or sucrose to grain) on the amount of resurgence. The results suggest that resurgence can be robust; in the natural world, an operant behavior with an extensive reinforcement history may still resurge after extensive incentive-based therapy. The results are discussed in terms of current explanations of the resurgence effect.

Keywords: Extinction, Resurgence, Relapse, Instrumental Conditioning, Operant Conditioning

It is widely understood that extinction, the behavioral paradigm in which learned behavior decreases when a Pavlovian conditioned stimulus (CS) or an operant response occurs repeatedly without the reinforcer, does not erase the original learning. Consistent with this view, a number of response-recovery or “relapse” effects have been studied extensively after extinction in Pavlovian conditioning (e.g., Bouton, 2004; Bouton & Woods, 2008), and each of these suggests that the original learning has been at least partly saved through extinction. Similar response-recovery phenomena may also occur after the extinction of operant or instrumental learning (e.g., Bouton & Swartzentruber, 1991; Bouton, Vurbic, & Winterbauer, 2011; Bouton, Winterbauer, & Todd, 2012). For example, if the reinforcer is presented after an operant response has been extinguished, extinguished responding is “reinstated” (e.g., Baker, Steinwald, & Bouton, 1991; Reid, 1958; Rescorla & Skucy, 1969; Winterbauer & Bouton, 2011). In addition, if the context is changed after extinction has taken place, extinguished instrumental responding can be “renewed” (e.g., Bouton, Todd, Vurbic, & Winterbauer, 2011; Nakajima, Tanaka, Urushihara, & Imada, 2000; Welker & McAuley, 1978). These phenomena, among others, suggest that extinction after instrumental learning is not erasure, and that performance after instrumental extinction (like performance after Pavlovian extinction) depends in part on the context in which post-extinction testing occurs.

Resurgence is another relapse-like recovery phenomenon that occurs after extinction in instrumental learning (e.g., Leitenberg, Rawson, & Bath, 1970; Lieving & Lattal, 2003; Podlesnik & Shahan, 2009; Winterbauer & Bouton, 2010). In this paradigm, an operant behavior (e.g., pressing one lever) is first reinforced. Then, while that behavior undergoes extinction, a new behavior (e.g., pressing a second lever) is reinforced. When the new behavior is then itself extinguished, the first behavior recovers or “resurges.” Like the other recovery phenomena, resurgence indicates that extinction is not erasure. It may also have unique translational or clinical significance. It suggests that “contingency management” (e.g., Higgins, Heil, & Lussier, 2004) or “incentive” (e.g., Fisher, Green, Calvert, & Glasgow, 2011) treatments that reinforce new behaviors while undesirable ones are extinguished might be prone to relapse when reinforcement for the replacement behavior is withdrawn. There are straightforward applications of the phenomenon to overeating and drug abuse. Consistent with the latter, extinguished operant behaviors that were reinforced with either alcohol (Podlesnik, Jimenez-Gomez, & Shahan, 2006) or cocaine (Quick, Pyszczynski, Colston, & Shahan, 2011) in rats have been shown to resurge when a behavior reinforced to replace them is itself extinguished.

At least three explanations of resurgence have been proposed. Winterbauer and Bouton (2010; see also Bouton & Swartzentruber, 1991) suggested that resurgence is a new example of the renewal effect. That is, the original behavior is extinguished in the new context of the alternative behavior being reinforced. When this context then changes, as it does when the second behavior is extinguished, an “ABC renewal” effect could occur (Bouton et al., 2011; Todd, Winterbauer, & Bouton, 2012). Such an analysis is consistent with evidence that reinforcers (e.g., Bouton, Rosengard, Achenbach, Peck, & Brooks, 1993) and instrumental actions (e.g., Weise-Kelly & Siegel, 2001) have stimulus properties that would allow them to function as contexts.

In a second explanation of resurgence, Leitenberg, Rawson, and their colleagues proposed that performing the new response during extinction might suppress the first behavior and thus prevent the subject from learning extinction (e.g., Leitenberg et al., 1970; Leitenberg, Rawson, & Mulick, 1975; Rawson, Leitenberg, Mulick, & Lefebvre, 1977). When the second behavior is extinguished, the first is released from competition, and can now return because it was not sufficiently extinguished. Although such a mechanism might plausibly operate under some conditions, Winterbauer and Bouton (2010) showed that resurgence can occur when reinforcement of the alternative behavior does not suppress the original response relative to that observed in a simple extinction control (see also Winterbauer & Bouton, 2012).

A third, quantitative, account of resurgence has been provided by Shahan and Sweeney (2011; see also Podlesnik & Shahan, 2009), who extended behavioral momentum theory (e.g., Nevin & Grace, 2000). Conceptually, when reinforcement of a new behavior is added to extinction, it adds to the disruption of the original behavior and increases a process that indirectly strengthens the original response. When the second behavior is then extinguished, resurgence of the first behavior can occur because the disruption is removed.

The present experiments were organized to explore the impact of several fundamental variables that might influence the strength of resurgence. Experiment 1 examined the extent to which resurgence is affected by the amount of Phase 1 training as well as the type of reinforcement schedule used in Phase 1. Experiment 2 then tested whether a 9-fold increase in the amount of Phase 2 training would weaken the resurgence effect, and Experiment 3 examined whether changing the reinforcer used during acquisition and extinction of the original behavior has an impact on resurgence. Each variable represents a condition that may be relevant to the clinical treatment of behavior outside the laboratory. Problematic behaviors (such as overeating or smoking) come to the clinic with different and often extensive histories of reinforcement (Experiment 1), incentive or contingency management therapies can be continued for extended periods of time (Experiment 2), and since reinforcers used in such therapies are different from those that reinforce the problem behavior to begin with (e.g., money vs. nicotine), it is worth knowing whether a reinforcer change between phases affects resurgence (Experiment 3). The experiments used methods developed by Winterbauer and Bouton (2010, 2012) that were based on those of Leitenberg et al. (1970, 1975): In Phase 1, rats were reinforced for pressing one lever (L1); in Phase 2, they were reinforced for pressing a second lever (L2) while L1 was extinguished; and in Phase 3 (testing), presses of L1 and L2 were monitored while neither was reinforced.

Experiment 1

Experiment 1 involved four groups in a factorial design that manipulated two aspects of Phase 1 training. Half the rats received four sessions of initial training (cf. Winterbauer & Bouton, 2010, who used four or five) and the other half received 12 sessions. Half the rats in each of these groups were reinforced for pressing L1 on a Random Ratio (RR) schedule of reinforcement, while the other half were reinforced on a Yoked Variable Interval (Yoked-VI) schedule. For each rat receiving the Yoked-VI schedule, a food pellet was made available for the next response after a linked RR rat had earned a reinforcer. The procedure was thus designed to equate the rate and temporal distribution of reinforcers delivered to the RR and Yoked-VI groups. Ratio schedules are nonetheless known to generate higher rates of responding than VI schedules with matched reinforcement rate (e.g., Catania, Matthews, Silverman, & Yohalem, 1977).

Following acquisition of L1 responding, all rats received sessions in which L1 responding was extinguished while presses on a second lever (L2) were reinforced on a fixed-ratio 10 (FR 10) schedule in which every 10th response was reinforced (e.g., Leitenberg et al., 1970). Finally, the rats received a single test session in which neither L1 nor L2 was reinforced. Since the method equated the groups on the reinforcement rate received in Phase 1, if the degree of resurgence merely reflects L1's reinforcement rate, then the ratio and interval groups should show equivalent resurgence. But if resurgence depends on the initial rate of the Phase 1 response, then group differences should emerge. Indeed, since both the ratio schedule and extended training were expected to produce a greater rate of L1 responding at the end of Phase 1, it was possible that rats given extended training with the ratio schedule would show the most resurgence, and that rats given less training with the interval schedule would show the least.

Method

Subjects

The subjects were 48 female Wistar rats obtained from Charles River, Inc. (St. Constance, Quebec) run in two replications, the first composed of 32 animals and the second of 16. The rats were approximately 85–95 days old at the start of the experiment and were individually housed in suspended stainless steel cages in a room maintained on a 16:8-hr light:dark cycle. At the beginning of the experiment, all rats were food deprived to 80% of their free-feeding weight and maintained at that level throughout the experiment with a single feeding following each day's session.

Apparatus

Conditioning proceeded in two sets of four standard conditioning boxes (Med-Associates, St. Albans, VT) that were housed in different rooms of the laboratory. The sets had been modified as described below for use as separate contexts in other experiments (box context was randomly allocated to groups in all of the present experiments). Boxes from both sets measured 31.5 × 25.4 × 24.1 cm (l × w × h), with side walls and ceilings made of clear acrylic plastic and front and rear walls made of brushed aluminum. Recessed 5.1 × 5.1-cm foodcups with infrared photobeams positioned approximately 1.2 cm behind the plane of the wall and 1.2 cm above the bottom of the cup were centered in the front wall about 3 cm above the grid. In one set of four boxes, the floor was composed of stainless steel rods (0.5 cm in diameter) in a horizontal plane spaced 1.6 cm center to center, while in the other set of four boxes, the floor was composed of identical rods spaced 3.2 cm apart in two separate horizontal planes, one 0.6 cm lower than the other and horizontally offset by 1.6 cm. The boxes with the planar floor grid had a side wall with black panels (7.6 × 7.6 cm) placed in a diagonal arrangement, and there were diagonal stripes on both the ceiling and back panel, all oriented in the same direction, 2.9 cm wide, and about 4 cm apart. The other boxes, with the staggered floor, were not adorned in any way. Retractable levers (1.9 cm when extended) were positioned approximately 3.2 cm to the right and to the left of the magazine and 6.4 cm above the grid. Both sets of boxes were housed in sound-attenuating chambers, and were illuminated by two 7.5-W incandescent light bulbs (houselights) mounted above the plexiglass box top on the chamber ceiling. Food reward consisted of 45-mg MLab Rodent Tablets (TestDiet, Richmond, IN).

Procedure

Daily sessions were employed throughout the experiment. Each day's session began with approximately 10 hr of illuminated colony time remaining. Animals were placed into illuminated conditioning chambers, and the start of each session was indicated by the insertion of the lever(s) as appropriate. All sessions were 30 min in duration, and the end of the session was indicated by retraction of the lever(s).

Animals were divided into four groups of 12 animals (8 in the first replication and 4 in the second). Each group received one of the possible combinations of the length of training and schedule of reinforcement factors. Half of the animals received 12 sessions in Phase 1, while the other half received only four sessions. The start of training was delayed in the 4-session groups so that all animals finished Phase 1 (and thus began the remainder of the experiment) on the same calendar date.

Magazine training

All animals received magazine training on the day immediately prior to the beginning of Phase 1. At this time, the rats received a single session with both levers retracted. About 60 food pellets were delivered during this session on a Random Time 30-s (RT 30) schedule of reinforcement, where each second was terminated by delivery of a pellet with a uniform 1 in 30 probability.
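The Random Time contingency described above can be illustrated with a minimal simulation. The sketch below assumes a 30-min (1,800-s) session, as used elsewhere in the experiment, and an independent 1-in-30 draw at the end of each second; the function name, the seed, and the use of Python's `random` module are illustrative choices and are not taken from the original control program.

```python
import random

def random_time_schedule(session_s=1800, interval_s=30, seed=None):
    """Return the seconds (1-based) at which a response-independent pellet is delivered.

    Assumption: each second ends with a pellet with probability 1 / interval_s,
    independently of every other second and of the animal's behavior.
    """
    rng = random.Random(seed)
    p = 1 / interval_s
    return [t for t in range(1, session_s + 1) if rng.random() < p]

# Expected number of pellets: 1800 s / 30 s = 60, matching "about 60" in the text.
expected_pellets = 1800 / 30
deliveries = random_time_schedule(seed=1)  # seed is arbitrary
print(expected_pellets, len(deliveries))
```

Because each second is an independent draw, the obtained pellet count varies from session to session around the expected value of 60.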

L1 acquisition (Phase 1)

All animals then received instrumental conditioning over the next several days. Each session began with insertion of L1, which was the left lever in half the animals and the right lever for the other half, and ended with its retraction. During these sessions, lever presses delivered pellets on a Random Ratio (RR) 16 schedule of reinforcement for half the rats and a Yoked-VI schedule for the other half. On the RR 16 schedule, pellet delivery was triggered with a uniform 1 in 16 probability for each lever press. Each Yoked-VI rat was yoked to an individual RR rat. When the RR 16 rat earned a pellet, the computer program made a pellet available for the next lever press in the paired Yoked-VI rat. As noted earlier, rats received either 12 or 4 sessions of either type of training.
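The two Phase 1 contingencies can be sketched as follows. This is an illustration under stated assumptions, not the original control program: press times are given as numbers in seconds, the function names are hypothetical, and the yoked rat is assumed to hold at most one available pellet at a time (the text does not say what happened when the RR rat earned a second pellet before its partner had collected the first).

```python
import random

def rr_reinforced_presses(press_times, ratio=16, rng=None):
    """Random Ratio: each press is reinforced with probability 1 / ratio."""
    rng = rng or random.Random()
    return [t for t in press_times if rng.random() < 1 / ratio]

def yoked_vi_reinforced_presses(yoked_press_times, master_reinforcer_times):
    """Yoked-VI: each pellet earned by the RR (master) rat makes one pellet
    available for the yoked rat's next press.

    Assumption: pellets do not accumulate; only one is held available at a time.
    """
    masters = sorted(master_reinforcer_times)
    earned = []
    armed = False
    i = 0
    for t in sorted(yoked_press_times):
        # Arm a pellet for every master reinforcer that occurred before this press.
        while i < len(masters) and masters[i] < t:
            armed = True
            i += 1
        if armed:
            earned.append(t)
            armed = False
    return earned

# Hypothetical times (s): the RR rat earned pellets at 4, 6, 7, and 15 s.
print(yoked_vi_reinforced_presses([1, 5, 9, 20], [4, 6, 7, 15]))
```

In this hypothetical example the yoked rat collects pellets on its presses at 5, 9, and 20 s; the pellets earned by the RR rat at 6 and 7 s collapse into one under the single-pellet assumption. The yoked rat's pellets therefore track the RR rat's in time, while its own response rate has little influence on how many it earns.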

L1 extinction and L2 conditioning (Phase 2)

All animals then received four sessions of extinction of L1 and reinforcement of L2. Both the left and right levers were inserted throughout each session. L1 presses produced no pellets (i.e., were extinguished), but presses on the newly-inserted second lever (L2) earned pellets on an FR 10 schedule of reinforcement (i.e., every tenth press delivered a pellet). Animals now earned pellets independently of each other, but the groups were treated identically during Phase 2.
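The Phase 2 contingency (L1 on extinction, L2 on FR 10) reduces to a simple counter. The sketch below assumes presses are recorded as an ordered sequence of lever labels; the function name and the data format are hypothetical.

```python
def phase2_pellet_indices(presses, ratio=10):
    """Return the positions in `presses` at which a pellet is delivered.

    L1 presses never produce pellets (extinction); every `ratio`-th
    L2 press produces one (fixed ratio).
    """
    l2_count = 0
    pellets = []
    for i, lever in enumerate(presses):
        if lever == "L2":
            l2_count += 1
            if l2_count % ratio == 0:
                pellets.append(i)
    return pellets

# Hypothetical sequence: 9 L2 presses, 5 L1 presses, then 11 more L2 presses.
sequence = ["L2"] * 9 + ["L1"] * 5 + ["L2"] * 11
print(phase2_pellet_indices(sequence))  # pellets on the 10th and 20th L2 presses
```

Intervening L1 presses do not reset or advance the L2 count in this sketch, which is one reasonable reading of the procedure.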

Resurgence test (Phase 3)

All animals then received a final test session with both levers inserted. In all groups, presses on neither lever were reinforced, and pellets were never delivered.

Statistical analysis

For this and following experiments, analysis of variance with a rejection criterion of p < .05 was employed in all inferential tests unless otherwise specified.

Results

Replication did not interact with any effects, and was therefore omitted from all of the analyses reported here. L1 acquisition results are presented in the top left panel of Figure 1. All animals acquired the L1 response, although as the figure suggests, both the schedule of reinforcement and the length of training influenced its rate of occurrence. The final four days of Phase 1 (the only sessions that all animals completed) were analyzed. There was a reliable increase in responding over Sessions, F(3, 132) = 60.43, MSE = 36.47. All other factors and interactions between factors were also reliable, with main effects of acquisition Length, Schedule type, and a Length × Schedule interaction, Fs(1, 44) ≥ 4.31, MSE = 601.00, and Session × Length, Session × Schedule, and Session × Length × Schedule interactions, Fs(3, 132) ≥ 5.27, MSE = 36.47. On the final day of Phase 1, animals given 12 sessions of conditioning pressed at a higher rate than animals given 4 sessions, F(1, 44) = 23.51, MSE = 241.80, and animals given the RR schedule also pressed faster than animals given the Yoked-VI schedule, F(1, 44) = 27.31, MSE = 241.80. The Length × Schedule interaction was not reliable, F < 1.

Figure 1.

Results of Experiment 1. The upper panels summarize responding on L1 during acquisition or Phase 1 sessions (left), extinction or Phase 2 sessions (middle), and resurgence testing compared to the final extinction session (right). The lower panels summarize responding on L2 during extinction or Phase 2 sessions (middle) and resurgence testing compared to the final extinction session (right). 12 Ratio = 12 acquisition sessions with the Random Ratio schedule; 4 Ratio = 4 acquisition sessions with the Random Ratio schedule; 12 Yoked-VI = 12 acquisition sessions with the Yoked-VI schedule; 4 Yoked-VI = 4 acquisition sessions with the Yoked-VI schedule.

The yoking procedure resulted in a VI 19.3-s schedule in the Yoked-VI groups in the final acquisition session. Analysis of the number of pellets earned in the final session indicated that the yoking procedure successfully equated the ratio and interval groups on pellet rate; there was neither an effect of Schedule nor of the Length × Schedule interaction on that measure, Fs < 1. In contrast, animals given more extensive conditioning earned more pellets in this final session, F(1, 44) = 20.46, MSE = 1254.30. Groups 12 Ratio, 12 Yoked-VI, 4 Ratio, and 4 Yoked-VI earned 121.8, 116.2, 75.5, and 70.0 pellets at this time, respectively.

The data from Phase 2 and the Test session are presented in the remaining panels of Figure 1. L1 responding during Phase 2 (upper middle panel) reliably extinguished over Sessions, F(2, 88) = 84.56, MSE = 14.02. Although the rate of extinction depended on the amount of training, as indicated by a reliable Session × Length interaction, F(2, 88) = 8.09, MSE = 14.02, it did not depend upon the reinforcement schedule, as suggested by nonreliable Session × Schedule and Session × Length × Schedule interactions, Fs < 1. The overall rate of L1 pressing in extinction was also not predicted by the Phase 1 Schedule, F < 1, or the Length × Schedule interaction, F(1, 44) = 1.38, MSE = 11.54, but again the overall effect of Length was reliable, F(1, 44) = 12.23, MSE = 11.54. Finally, L1 pressing was comparable among the groups in the final session of extinction; a factorial ANOVA on those data revealed nonreliable Length, Schedule, and Length × Schedule interaction effects, Fs(1, 44) ≤ 1.99, MSE = 1.15.

Responding on L2 during Phase 2 (lower middle panel of Figure 1) showed a different pattern. All animals increased L2 responding during Phase 2 Sessions, F(2, 88) = 195.88, MSE = 28.20. While there was no Session × Length interaction, F(2, 88) = 1.20, MSE = 28.20, the Session × Schedule and Session × Length × Schedule interactions were both reliable, Fs(2, 88) ≥ 5.48, MSE = 28.20. There was also an overall Schedule effect, F(1, 44) = 8.00, MSE = 442.10, and a near-reliable effect of Length, F(1, 44) = 3.71, MSE = 442.10, p = .06, but no Length × Schedule interaction, F(1, 44) = 1.20, MSE = 442.10. The pattern is consistent with the possibility that differences in the rate of L1 achieved in Phase 1 carried over to the rate achieved on L2 in Phase 2.

There was robust resurgence on L1 in all groups, as shown in a reliable increase from the last day of Phase 2 to the Test session (upper right panel of Figure 1). An ANOVA comparing these two sessions confirmed a reliable Session effect, F(1, 44) = 92.66, MSE = 13.37. The degree of resurgence was affected by both training factors, as indicated by reliable Session × Length, F(1, 44) = 18.42, MSE = 13.37, and Session × Schedule, F(1, 44) = 5.46, MSE = 13.37, interactions. The Session × Length × Schedule interaction was not reliable, F < 1. The Length main effect, F(1, 44) = 20.41, MSE = 13.63, and Schedule main effect, F(1, 44) = 6.58, MSE = 13.63, were also reliable, although the Length × Schedule interaction was not, F < 1.

L2 showed reliable extinction during testing (lower right panel of Figure 1), with responding declining over Session, F(1, 44) = 318.58, MSE = 61.00. The Session × Length, F < 1, Session × Schedule, F(1, 44) = 1.06, MSE = 61.00, and Session × Length × Schedule interactions, F(1, 44) = 2.74, MSE = 61.00, were not reliable. The overall effect of Phase 1 Schedule persisted in these sessions, F(1, 44) = 5.36, MSE = 153.30, but neither Length, F(1, 44) = 2.28, MSE = 153.30, nor Length × Schedule interaction, F(1, 44) = 2.66, MSE = 153.30, was reliable.

Discussion

The results of Experiment 1 were clear. Both the amount of Phase 1 training and the schedule of reinforcement used in Phase 1 had an impact on (1) performance at the end of training and (2) responding during the resurgence test. In addition, the groups that received more extensive initial training had higher reinforcement rates, as well as higher response rates, at the end of Phase 1. In principle, either of these differences might explain the higher levels of resurgence observed in the extended-training groups. However, other aspects of the data argue against a focus on reinforcement rate. Specifically, the yoking procedure was successful at matching the reinforcement rates of the paired ratio and interval groups. Despite this, the ratio schedules still produced more responding than the yoked interval schedules during Phase 1 (see also Catania et al., 1977), and equally important, they also produced more responding during resurgence testing. Those results suggest that the final rate of responding on L1, rather than the rate of reinforcement, may be the better predictor of the level of resurgence.

The overall pattern can be conceptualized in at least two ways. First, extended training and ratio training might yield more response “strength”; the final level of resurgence might reflect that strength. Alternatively, the amount and schedule of training might yield different patterns of responding; what recovers in resurgence is the pattern (rather than the strength) of the response. For example, consistent with the latter possibility, Cançado and Lattal (2011) found that a response trained with a fixed-interval (FI) schedule of reinforcement in Phase 1 had a characteristic scallop reflecting the timing of the reinforcer during resurgence testing (more than did a response trained with a variable-interval schedule). In any case, the results of Experiment 1 clearly indicate that the strength of resurgence is affected by the reinforcement history of L1: Both the amount of training and the reinforcement schedule given L1 have an impact on the resurgence effect.

Experiment 2

The second experiment extended the first by asking whether the strength of resurgence is further affected by the amount of Phase 2 (extinction plus reinforcement of the alternative behavior) training. In Pavlovian learning, the theoretically-related renewal effect can survive substantial extinction (i.e., up to 160 extinction trials, Denniston, Chang, & Miller, 2003; Rauhut, Thomas, & Ayres, 2001; Thomas, Vurbic, & Novak, 2009), although evidence suggests that it might be weakened after “massive” extinction (i.e., 800 trials, Denniston et al., 2003). Leitenberg et al. (1975) previously studied the effects of the amount of extinction training on instrumental resurgence. They found that after five sessions of VI 30 training, a total of 27 Phase 2 sessions (in which L1 was extinguished while L2 was reinforced) weakened the resurgence effect. Unfortunately, the authors did not specify the duration of the sessions in either phase, or the reinforcement schedule used in Phase 2. However, given Experiment 1's clear indication that the amount of initial training is important, it seemed appropriate to investigate the effects of the amount of Phase 2 training after more extensive Phase 1 conditioning. In the present experiment, rats received 12 sessions of VI 30 training in Phase 1 that was followed by either 4, 12, or 36 30-min sessions in which L1 was extinguished and L2 was reinforced on FR 10.

Method

Subjects and Apparatus

The subjects were 32 female Wistar rats obtained from the same supplier and otherwise similar to those employed in Experiment 1. They were also housed and food deprived using the same methods as above, with food deprivation again occurring on the same date in all animals. The same conditioning chambers and pellets were also used.

Procedure

Twice-daily sessions were employed throughout the experiment, except as otherwise noted. Each day's first session began with approximately 8 hr of illuminated colony time remaining, while the first squad's second session began with approximately 5 hr of light remaining. Animals were placed into illuminated conditioning chambers, and the start of each session was indicated by the insertion of the lever(s) when appropriate. All sessions were 30 min in duration, and the end of the session was indicated by retraction of the lever(s). Animals were randomly divided into three groups that would receive different amounts of Phase 2 training: Group 36 (n = 11), Group 12 (n = 11), and Group 4 (n = 10).

Magazine training

As in Experiment 1, all animals received a magazine training session on the day before the beginning of Phase 1 conditioning (no second session was conducted on that day). The start of training was staggered so that all animals finished Phase 2, and were then tested, on the same calendar days.

Phase 1

All animals were then given 12 sessions of instrumental conditioning initiated by insertion of L1 2 min into the session. L1 was the left lever in half of the animals and the right lever in the other half. In all sessions, presses on L1 delivered pellets on a Random Interval 30 s (RI 30) schedule of reinforcement. As noted above, the treatment of the groups was staggered. Initially, Group 36 was run in two squads, twice per day. When Group 12 was added, animals were instead run in four squads, twice per day, with run order fixed to the final order. Therefore, in the final 14 days of the experiment all animals experienced the final run order, and groups were (to the extent possible) evenly represented in each session of the day.

Phase 2

All animals were then given sessions with both the left and right levers inserted. L1 presses during these sessions were extinguished, but presses on the novel lever (L2) earned pellets on an FR 10 schedule of reinforcement. The sessions again occurred twice daily, for 36 sessions (18 days) in Group 36, 12 sessions (6 days) in Group 12, and 4 sessions (2 days) in Group 4.

Phase 3

All animals were then given a final 30-min test session with both levers inserted but on extinction schedules.

Results

The results are presented in Figure 2. All rats acquired L1 pressing (top left panel), with a reliable increase in responding over Session, F(11, 219) = 43.75, MSE = 31.29. Although the increase did not interact with Group, F(22, 219) = 1.05, MSE = 31.29, there was an unexpected difference in overall level of L1 pressing, F(2, 29) = 5.56, MSE = 479.88. No difference was detected during the final training session, however, F(2, 29) = 1.61, MSE = 195.05.

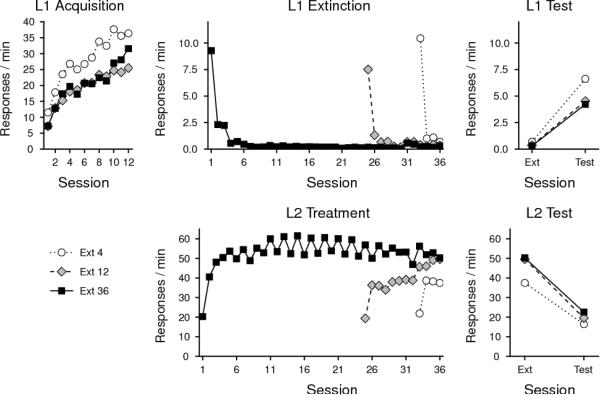

Figure 2.

Results of Experiment 2. The upper panels summarize responding on L1 during acquisition or Phase 1 sessions (left), extinction or Phase 2 sessions (middle), and resurgence testing compared to the final extinction session (right). The lower panels summarize responding on L2 during extinction or Phase 2 sessions (middle) and resurgence testing compared to the final extinction session (right). The extinction data are right-justified for clarity; no group actually received a break in the daily schedule while transitioning from Acquisition to Extinction. Ext 4 = 4 Extinction sessions; Ext 12 = 12 Extinction sessions; Ext 36 = 36 extinction sessions.

Extinction of L1 responding is shown in the top middle panel of Figure 2. L1 responding declined to zero fairly rapidly in all groups, and remained there for the remainder of training. L1 responding in the first four sessions of extinction was compared across groups. While lever pressing declined reliably over those Sessions, F(3, 87) = 72.03, MSE = 6.92, the decline did not interact with Group, F(3, 87) = 1.08, MSE = 6.92, and there was no overall difference between the groups, F(2, 29) = 1.44, MSE = 8.98. L2 responding (bottom middle panel of Figure 2) reliably increased over these same sessions, F(3, 87) = 52.17, MSE = 59.49, and while there was no overall effect of Group, F(2, 29) = 1.47, MSE = 551.31, the Group × Session interaction was reliable, F(3, 87) = 2.94, MSE = 551.31. Analyses that followed up on the interaction detected no differences between groups in the first three extinction sessions, Fs(2, 29) ≤ 2.27, MSE ≥ 154.67. The Group effect in the fourth extinction session was reliable, F(2, 29) = 5.31, MSE = 154.46, with Group 36 responding more in that session than either Group 4, F(1, 19) = 6.95, MSE = 126.20, or Group 12, F(1, 20) = 9.64, MSE = 154.43, which did not differ from one another, F < 1.

There was once again a robust resurgence on L1 in all groups (top right panel of Figure 2). This effect was reflected by a reliable increase from the last day of Phase 2 to the Test session, F(1, 29) = 48.71, MSE = 7.06. Importantly, there was no overall effect of Group, F(2, 29) = 2.25, MSE = 5.32, and no Group × Session interaction, F < 1. Because the groups differed modestly in their final L1 response rates at the end of acquisition, we also expressed responding during the resurgence test as a percentage of each animal's final baseline response rate (the means were 22.7, 17.3, and 14.1% for Groups Ext 4, 12, and 36, respectively). An ANOVA on these data found no group effect, F(2, 29) = 1.64, MSE = 121.3. There was thus no evidence that a nine-fold increase in the amount of Phase 2 training had any impact on the strength of resurgence.
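The percentage-of-baseline measure can be computed as sketched below. The function names are hypothetical and the rates in the example are invented for illustration; they are not data from the experiment. "Baseline" is taken here to mean each animal's L1 response rate in the final Phase 1 session, which is how the text appears to use the term.

```python
from statistics import mean

def percent_of_baseline(test_rate, baseline_rate):
    """Test-session L1 rate as a percentage of the same animal's baseline rate."""
    if baseline_rate <= 0:
        raise ValueError("baseline rate must be positive")
    return 100.0 * test_rate / baseline_rate

def group_mean_percent(rates):
    """rates: iterable of (test_rate, baseline_rate) pairs, one pair per animal."""
    return mean(percent_of_baseline(test, base) for test, base in rates)

# Hypothetical animals (responses per min): (test, baseline)
example_group = [(4, 20), (3, 30)]
print(group_mean_percent(example_group))  # 20% and 10% average to 15.0
```

Normalizing within each animal before averaging keeps a few high-rate animals from dominating the group mean, which is the concern when groups differ in their terminal acquisition rates.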

The rate of L2 responding in these final Sessions (shown in bottom right panel of Figure 2), in contrast, depended on Group, F(2, 29) = 3.83, MSE = 136.72, with Groups 36 and 12 pressing more overall than Group 4, F(1, 19) = 5.86, MSE = 160.83, and F(1, 19) = 6.17, MSE = 99.77, respectively, but not differing from one another, F < 1. All groups nevertheless reliably extinguished L2 responding over Session, F(1, 29) = 163.74, MSE = 68.40. There was no Group × Session interaction, F(2, 29) = 1.65, MSE = 68.40.

Discussion

Resurgence created by the current methods stood up to a very extensive extinction treatment. There was no hint that a 9-fold increase in the amount of Phase 2 training did anything to decrease the strength of resurgence; after 36 Phase 2 sessions, resurgence still unequivocally occurred. The contrast with the results of Leitenberg et al. (1975) will be discussed further in the General Discussion. Here we would note that our method involved more sessions of Phase 1 training than theirs, and possibly, a different schedule of reinforcement in Phase 2. The results continue to suggest that resurgence can be robust, and that merely extending a clinical treatment over more sessions may not be an efficient way to make it more effective.

Experiment 3

The third experiment asked whether resurgence is affected by changing the reinforcer between Phases 1 and 2. Contingency management and incentive treatments (e.g., Fisher et al., 2011; Higgins et al., 2004) differ from most laboratory studies of resurgence in that they use a reinforcer during treatment that is different from the reinforcer maintaining the original problem behavior (e.g., prizes or money vs. high-fat food or nicotine). In the present experiment, rats received Phase 1 training with either the grain pellets used in the previous experiments or a 100% sucrose pellet. In Phase 2, L2 was then reinforced on the FR 10 schedule with either the same pellet or the alternative pellet.

Method

Subjects and Apparatus

The subjects were 32 female Wistar rats obtained from the same supplier as those in the previous experiments. The rats were housed and food deprived using the methods described above. The apparatus was also the same, except that 45-mg sucrose pellets (Sucrose Tablets, TestDiet, Richmond, IN) were used in addition to the 45-mg grain-based pellets used in the previous experiments. The different pellet types were delivered to the same food cup.

Procedure

Twice-daily sessions were employed throughout the experiment, except as noted. Each day's first session began with approximately 8 hr of illuminated colony time remaining, while the second session began with approximately 5 hr of light remaining. Animals were placed into illuminated conditioning chambers, and the start of each session was indicated by the insertion of the lever(s) when appropriate. All sessions were 30 min in duration, and the end of the session was indicated by retraction of the lever(s).

Magazine training

Rats were divided into two equal groups (n = 16), one of which was trained with sucrose pellets, and the other of which was trained with grain pellets. Whichever pellet was employed, about 60 were delivered during a single magazine training session that was identical to those in the previous experiments.

Phase 1

All animals then received 12 sessions of instrumental conditioning initiated by insertion of L1, which was the left lever in half of the animals and the right in the other half. In all sessions, presses on L1 delivered the same pellet each group had experienced during magazine training, on a VI 30 schedule of reinforcement.

Phase 2

The animals were then split in half again and given four sessions of conditioning with both the left and right levers inserted. For all animals, L1 presses during these sessions were extinguished, but presses on L2 delivered pellets on an FR 10 schedule of reinforcement. In half the animals, L2 delivered the same pellet used in Phase 1 to reinforce pressing L1, while in the other half L2 delivered the opposite pellet. There were thus four final groups (n = 8) that differed in the pellets used in Phases 1 and 2 (respectively): Grain-Grain, Grain-Sucrose, Sucrose-Sucrose, and Sucrose-Grain.

Phase 3

All animals were then given a final test session with both levers inserted and both on an extinction schedule.

Results

Acquisition, which is shown in the left-hand panel of Figure 3, proceeded normally, with all groups showing a reliable increase in rate of L1 responding over sessions, F(11, 308) = 46.24, MSE = 52.15. There was no effect of Pellet type, F < 1, and Pellet type did not interact with Session, F < 1. Phase 2 Pellet type, a dummy variable at this point, also had no main effect, F(1, 28) = 2.11, MSE = 859.76, and there was no Phase 2 Pellet × Session or three-way interaction, Fs < 1. The results of Phase 1 suggest that the grain and sucrose pellets were about equally effective as reinforcers of the L1 response.

Figure 3.

Results of Experiment 3. The upper panels summarize responding on L1 during acquisition or Phase 1 sessions (left), extinction or Phase 2 sessions (middle), and resurgence testing compared to the final extinction session (right). The lower panels summarize responding on L2 during extinction or Phase 2 sessions (middle) and resurgence testing compared to the final extinction session (right). Grain Grain = grain pellets in both Phases 1 and 2; Suc Grain = sucrose pellets in Phase 1 and grain pellets in Phase 2; Grain Suc = grain pellets in Phase 1 and sucrose pellets in Phase 2; Suc Suc = sucrose pellets in both Phases 1 and 2.

The data from Phase 2 and the Test session are also presented in Figure 3. As shown in the middle top panel, Phase 2 responding on L1 extinguished over Sessions, F(3, 84) = 75.07, MSE = 8.74, and the Session effect did not interact with the Phase 1 Pellet, the Phase 2 Pellet, or their interaction, Fs < 1. There were also no main effects of Phase 1 Pellet, F < 1, or Phase 2 Pellet, F(1, 28) = 2.63, MSE = 34.91, and no interaction between them, F(1, 28) = 1.23, MSE = 34.91. During the final session of extinction, although neither the Phase 1 Pellet effect nor the Phase 1 × Phase 2 Pellet interaction was reliable, Fs < 1, there was a near-reliable tendency for animals given sucrose in Phase 2 to press more, F(1, 28) = 3.86, MSE = 5.11, p = .059.

Responding on L2 during Phase 2 (middle lower panel of Figure 3) showed a somewhat different pattern. As expected, there was a reliable increase in responding over Sessions, F(3, 84) = 40.74, MSE = 55.24. There were no main effects of Phase 1 Pellet, F < 1, Phase 2 Pellet, F(1, 28) = 1.19, MSE = 708.19, or their interaction, F(1, 28) = 1.00, MSE = 708.19. The Phase 1 Pellet × Session interaction was also not reliable, F(3, 84) = 1.95, MSE = 55.24. However, the Phase 2 Pellet × Session interaction, F(3, 84) = 2.76, MSE = 55.24, and the Phase 1 Pellet × Phase 2 Pellet × Session interaction, F(3, 84) = 3.76, MSE = 55.24, were both reliable. Simple effects compared the groups after collapsing over Session. The groups given Sucrose in Phase 1 (Sucrose-Sucrose and Sucrose-Grain) acquired L2 responding similarly over Sessions, F < 1. In contrast, the groups reinforced with grain in Phase 1, and now given either grain or sucrose in Phase 2, acquired responding at different rates, F(3, 42) = 5.01, MSE = 70.47, with Group Grain-Sucrose responding more than Grain-Grain. The results thus suggest that the sucrose reinforcer had a bigger impact on Phase 2 responding if the Phase 1 reinforcer had been grain.

There was again robust resurgence on L1 in all groups (top right panel of Figure 3). There was a reliable increase from the last session of Phase 2 to the Test session, F(1, 28) = 21.60, MSE = 7.15. That increase did not depend, however, upon either the Phase 1 Pellet, Phase 2 Pellet, or their interaction, Fs < 1. Animals given Sucrose during Phase 2 showed a near-reliable tendency to make more L1 responses in both of these sessions, F(1, 28) = 4.06, MSE = 12.20, p = .054, but the Phase 1 Pellet, F < 1, and the Phase 1 Pellet × Phase 2 Pellet interaction, F(1, 28) = 1.61, MSE = 12.20, did not approach significance. Although pressing on L2 showed reliable extinction (shown in bottom right panel of the figure), F(1, 28) = 81.54, MSE = 100.60, that decrease did not depend upon Phase 1 Pellet, F < 1, Phase 2 Pellet, F(1, 28) = 2.34, MSE = 100.60, or their interaction, F(1, 28) = 1.49, MSE = 100.60. Effects of Pellet type on L2 overall were not detectable in these sessions, with Phase 1 Pellet, F < 1, Phase 2 Pellet, F(1, 28) = 2.55, MSE = 326.60, and the interaction, F(1, 28) = 2.81, MSE = 326.60 all falling short of reliability, all ps > .11.

Discussion

With the present method, switching the reinforcer from grain to sucrose or sucrose to grain had no detectable impact on the strength of the resurgence effect. One possibility is that the animals did not discriminate between the two pellet types. Although the results of Phase 1 acquisition suggested that the two pellets had comparable reinforcing effects (i.e., they did not yield different levels of L1 responding in Phase 1), the rats switched from grain to sucrose pellets during Phase 2 pressed L2 at a higher rate than rats that continued to receive grain. This increase is reminiscent of a positive contrast effect (e.g., Flaherty, 1996), and suggests that the rats did perceive a difference between the pellets. Other experiments that are in progress in our laboratory also suggest that the grain and sucrose pellets are perceived as different. For example, rats that lever press for sucrose and are then switched to grain for the same response show a depressed level of responding for grain. Such results, and others, suggest it is unlikely that the rats failed to discriminate between the reinforcers used in Phases 1 and 2.

The lack of an effect of changing the reinforcer might pose a challenge for the context-switch account of resurgence (e.g., Winterbauer & Bouton, 2010). Reinforcing the new behavior with a new reinforcer in Phase 2 might create a greater contextual change between conditioning and extinction than not changing the reinforcer. Whether this should yield a greater renewal effect is not necessarily clear, however. Empirically, we know that generalization between contexts can play a role in instrumental renewal; Todd et al. (2012) found that ABC renewal is strengthened by stronger generalization between the conditioning and test contexts (A and C) and weakened by stronger generalization between the extinction and test contexts (B and C). However, we know of no work that has compared ABC renewal after manipulating the degree of similarity between Contexts A and B. A possible exception is a recent experiment by Laborda, Witnauer, and Miller (2011), who found that ABC renewal was stronger than AAB (“AAC”) renewal in a Pavlovian fear conditioning preparation. Note that in the resurgence paradigm, there is no direct equivalent of an AAB or AAC design, because even when the reinforcer does not change between Phases 1 and 2, there is an immediate change in stimulus conditions (e.g., a new lever is inserted and a new L2-pellet contingency is immediately in effect). The animal demonstrates its perception of the stimulus change by learning to press L2.

General Discussion

The present experiments investigated the effects of several fundamental variables on the strength of resurgence. The results of Experiment 1 indicate that resurgence is strengthened by increasing the number of sessions of Phase 1 training and by using a ratio rather than an interval schedule of reinforcement. These results, along with our previous finding that resurgence requires that L1 responding actually be associated with reinforcement (Winterbauer & Bouton, 2010), clearly indicate that resurgence depends on the original behavior's reinforcement history. The results of Experiment 2 in turn indicate that, with Experiment 1's extended (12-session) training, resurgence was not affected by a 9-fold increase in the amount of Phase 2 training. That result further attests to the strength of the resurgence effect with the extended Phase 1 training protocol. Finally, the results of Experiment 3 suggest that the method also yielded a form of resurgence that was not affected by a change in the reinforcer identity between Phases 1 and 2. Overall, the results thus suggest that when more than a minimal amount of training occurs in Phase 1 (12 sessions as opposed to our previous 4 or 5), resurgence is robust against these variations in Phase 2 training.

It is important to note that, in Experiment 1, the reinforcement schedule and the amount of Phase 1 training strongly influenced resurgence despite the fact that the yoking procedure matched the comparison groups on reinforcement rate. Perhaps the simplest explanation is that resurgence reflects the response rate or pattern that was acquired in Phase 1; that is, beyond any effect of reinforcement rate, resurgence crucially recaptures an aspect of the L1 performance that was acquired during Phase 1. Interestingly, the groups' rates of L1 responding resurged to a similar percentage of the rate evident in the last Phase 1 session (23.5, 24.8, 16.8, and 20.7% for Groups 12 Ratio, 4 Ratio, 12 VI, and 4 VI, respectively). An ANOVA on the percentage data found no main effects or interaction, largest F(1, 44) = 2.62, MSE = 134.9, p = .11 (for the length of training). Thus, the resurgence treatment caused all groups to recapture their original responding similarly. We previously noted that Cançado and Lattal (2011) have shown that animals trained with fixed interval reinforcement schedules in Phase 1 show characteristic scallops in their response rate during resurgence testing. Overall, the results thus suggest that some representation or aspect of the pattern and/or rate of the Phase 1 responding survives instrumental extinction and manifests itself in the pattern and/or rate of responding in resurgence.

Experiment 2's finding that extensive Phase 2 extinction did not abolish resurgence appears to contrast with the results of Leitenberg et al. (1975), who reported that 27 sessions of extinction plus alternative reinforcement reduced resurgence of a lever pressing response that had received five initial sessions of VI 30 training. Unfortunately, as we noted earlier, Leitenberg et al. did not report the durations of the training sessions in either phase of the experiment or the reinforcement schedule used in Phase 2. Among other differences, the present Experiment 2 studied the effects of extended extinction after our more extended-training protocol (12 rather than 4 sessions of initial VI 30 training). It is thus possible that the more extensive training yields a form of resurgence that is more robust against the amount of Phase 2 training. In addition, we used a rich and predictable FR 10 reinforcement schedule for L2 responding in Phase 2, and this might have made it easy for the animals to detect the change in conditions during the transition from Phase 2 to testing, when L2 underwent extinction. Winterbauer and Bouton (2012) found that thinning the L2 reinforcement schedule and holding it at a value of VI 120 for eight sessions significantly weakened the subsequent resurgence effect. The overall pattern of results across experiments is consistent with the possibility that the effect of the extent of Phase 2 training might interact with the amount of Phase 1 training and/or the schedule of reinforcement used in Phase 2.

It is worth noting that the context change account of resurgence, which holds that the animal will resurge when it fails to generalize from Phase 2 to testing, is compatible with a well-known account of extinction claiming that responding declines when the animal fails to generalize between conditioning and extinction. Capaldi (1967, 1994) has made especially elegant use of this argument in explaining the partial reinforcement extinction effect (PREE), in which intermittently-reinforced behaviors are slower to decline in extinction than those that have always (“continuously”) been reinforced. According to Capaldi's view, the PREE occurs because the conditions of partial reinforcement generalize better than continuous reinforcement to the conditions of extinction. The account has been extremely successful as an explanation of the PREE (e.g., see Mackintosh, 1974, for one review). Based on the connection between the context-change account of resurgence and this account of extinction, one can predict that reinforcement conditions that yield a strong PREE (and thus encourage generalization between conditioning and extinction) will also yield weak resurgence when they are used to reinforce L2 during Phase 2.

The present results might provide a new challenge to the context-change hypothesis, however. Specifically, reinforcer change between Phases 1 and 2 had little effect on resurgence (Experiment 3), even though it would presumably increase the difference between the conditioning and the extinction contexts. However, it is difficult to say how much the change in reinforcer adds to the contextual change that is already occurring on other dimensions (e.g., with L2 insertion and the new L2 contingency). And, as we noted earlier, although we know that contextual generalization is important in instrumental renewal (Todd et al., 2012), we presently have little information on whether instrumental ABC renewal is affected strongly by further increasing the difference between the conditioning and extinction contexts (A and B). The context-change account of resurgence does predict that there should be testable parallels between effects observed in resurgence and those observed in ABC renewal (as well as in the partial reinforcement extinction effect). Interestingly, like resurgence in Experiment 1, instrumental ABC renewal has been shown to increase with more extensive Phase 1 training (Todd et al., 2012, Experiment 2).

The results can also be viewed from the perspective of Shahan and Sweeney's (2011) quantitative model extending behavioral momentum theory. That model highlights the role of several variables in producing resurgence. In particular, it emphasizes the rate at which the first behavior was reinforced during Phase 1 (the higher, the more resurgence is expected), the rate of the original behavior at the end of Phase 1 (higher responding yields more resurgence), the rate of reinforcement for the second behavior during Phase 2 (higher rates of reinforcement of the alternative behavior cause more disruption and more indirect strengthening of the first behavior, and thus stronger resurgence), and the amount of time occupied by Phase 2 (more extensive extinction and reinforcement in the presence of the original and new behaviors should decrease final resurgence). These variables are each represented in an equation that combines them with several additional scaling parameters to predict response rate in Phase 2 and during resurgence testing. Although the model makes no prediction concerning the reinforcer change studied in the present Experiment 3, it does make predictions concerning Experiments 1 and 2. We therefore ran simulations with parameter values within the range used by Shahan and Sweeney (2011; c = 1.0, k = 0.05, d = 0.001, and b = 0.05). In Experiment 1, the model predicted levels of resurgence that exactly mirrored the end-of-acquisition rates of responding, fitting the statistical, if not quite the precise, pattern of group means that we observed (see Figure 1). But the model also predicted that the rates of L1 responding in extinction would preserve the same ordering. In contrast, L1 responding in extinction depended on the amount of its previous training, but not on its schedule of reinforcement. We therefore explored some changes in parameter values and found that the Phase 2 prediction could be improved without changing the resurgence prediction if we assumed that extended training yielded a response that is less easy to disrupt. That is, when we decreased the value of the model's k parameter (which represents sensitivity to disruption) for the extended-trained groups, the predicted Phase 2 response rates were more in line with the data.

The results of Experiment 2 are more difficult to reconcile with the Shahan-Sweeney model, however. In contrast to the results of Experiment 2, which showed no discernible impact of increasing the amount of Phase 2 training by a factor of nine, the model predicts that increased Phase 2 training should reduce the level of resurgence. (Interestingly, although changes in the amount of extinction can produce minimal changes in resurgence in the model, this is only seen under conditions in which the amounts of extinction all lead to very little resurgence; in the range of extinction amounts that continue to produce considerable resurgence, the model predicts that animals will be quite affected by small changes in the amount of L1 extinction.) We noted above that the effect of the amount of Phase 2 training might well interact with other variables, including the reinforcement schedule used in Phase 2 as well as the amount of original Phase 1 training. Further investigation is warranted.
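The model's qualitative prediction about the amount of Phase 2 training can be illustrated with a short Python sketch. It is not the simulation code used here. It assumes the commonly cited form of the Shahan and Sweeney (2011) equation, log10(B_t / B_0) = -t(k·Ra + c + d·r) / (r + Ra)^b, with the resurgence test treated as the next session with Ra set to 0. The parameter defaults are the values listed in the text. The function names and the two reinforcement rates are hypothetical and serve only as illustration.

```python
def log10_proportion_of_baseline(t, r, Ra, c=1.0, k=0.05, d=0.001, b=0.05):
    """log10 of predicted L1 responding relative to its Phase 1 baseline.

    Assumed form: log10(B_t / B_0) = -t * (k*Ra + c + d*r) / (r + Ra)**b
    t  : sessions since extinction of L1 began
    r  : Phase 1 reinforcement rate for L1 (reinforcers per hour)
    Ra : current alternative (L2) reinforcement rate (reinforcers per hour)
    """
    return -t * (k * Ra + c + d * r) / (r + Ra) ** b


def predicted_resurgence(phase2_sessions, r, Ra, **params):
    """Return (log10 prediction for the last Phase 2 session,
    log10 prediction for the test session, in which Ra is 0)."""
    last_phase2 = log10_proportion_of_baseline(phase2_sessions, r, Ra, **params)
    test = log10_proportion_of_baseline(phase2_sessions + 1, r, 0.0, **params)
    return last_phase2, test


# Illustrative rates only: a VI 30-s schedule allows at most 120 reinforcers
# per hour; the L2 rate under FR 10 is a hypothetical value.
R_PHASE1, R_ALT = 120.0, 300.0
predictions = {n: predicted_resurgence(n, R_PHASE1, R_ALT) for n in (4, 12, 36)}
```

Working in log10 units avoids floating-point underflow for long Phase 2 durations. Under these assumptions, the predicted test value always exceeds the final Phase 2 value (resurgence), but it declines steadily as the number of Phase 2 sessions grows, which is the prediction that the Experiment 2 data did not support.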

The results have practical implications. It is especially noteworthy that resurgence was robust and resistant to elimination after extended training in Phase 1. An extended reinforcement history is a feature of many problematic instrumental behaviors, such as smoking and overeating. For example, the pack-a-day cigarette smoker smokes 7,300 cigarettes a year (or 73,000 a decade), a large amount of initial training that laboratory experiments may only barely approximate. The present results also imply that simply extending extinction training (Experiment 2) might not be the most efficient way to improve the efficacy of treatment. Instead, as noted above, the context change hypothesis suggests that treatments will be more effective if they make the conditions of treatment (extinction) as similar as possible to the conditions of relapse. One possibility is to thin the reinforcement schedule for the alternative behavior so that extinction becomes associated with longer and longer intervals between reinforcers, in a way that begins to approximate extinction and thus helps ensure generalization to the test conditions. Winterbauer and Bouton (2012) have recently presented data suggesting the value of exactly this approach.

Acknowledgments

This research was supported by Grant RO1 MH648437 from the National Institute of Mental Health to MEB. SL is at Philipps-Universität Marburg. We thank Grace Bouton for her help with data collection and Travis Todd and Drina Vurbic for their comments.

References

- Baker AG, Steinwald H, Bouton ME. Contextual conditioning and reinstatement of extinguished instrumental responding. The Quarterly Journal of Experimental Psychology. 1991;43B:199–218.

- Bouton ME. Context and behavioral processes in extinction. Learning & Memory. 2004;11:485–494. doi: 10.1101/lm.78804.

- Bouton ME, Rosengard C, Achenbach GG, Peck CA, Brooks DC. Effects of contextual conditioning and unconditional stimulus presentation on performance in appetitive conditioning. The Quarterly Journal of Experimental Psychology. 1993;46B:63–95.

- Bouton ME, Swartzentruber D. Sources of relapse after extinction in Pavlovian and instrumental learning. Clinical Psychology Review. 1991;11:123–140.

- Bouton ME, Todd TP, Vurbic D, Winterbauer NE. Renewal after the extinction of free-operant behavior. Learning & Behavior. 2011;39:57–67. doi: 10.3758/s13420-011-0018-6.

- Bouton ME, Winterbauer NE, Todd TP. Relapse processes after the extinction of instrumental learning: Renewal, resurgence, and reacquisition. Behavioural Processes. 2012. doi: 10.1016/j.beproc.2012.03.004. In press.

- Bouton ME, Winterbauer NE, Vurbic D. Context and extinction: Mechanisms of relapse in drug self-administration. In: Haselgrove M, Hogarth L, editors. Clinical applications of learning theory. Psychology Press; East Sussex, UK: 2012. pp. 103–133.

- Bouton ME, Woods AM. Extinction: Behavioral mechanisms and their implications. In: Byrne JH, Sweatt D, Menzel R, Eichenbaum H, Roediger H, editors. Learning and memory: A comprehensive reference. Vol 1. Elsevier; Oxford, UK: 2008. pp. 151–171.

- Capaldi EJ. A sequential hypothesis of instrumental learning. In: Spence KW, Spence JT, editors. The psychology of learning and motivation. Academic Press; New York: 1967. pp. 67–156.

- Capaldi EJ. The sequential view: From rapidly fading stimulus traces to the organization of memory and the abstract concept of number. Psychonomic Bulletin & Review. 1994;1:156–181. doi: 10.3758/BF03200771.

- Cançado CRX, Lattal KA. Resurgence of temporal patterns of responding. Journal of the Experimental Analysis of Behavior. 2011;95:271–287. doi: 10.1901/jeab.2011.95-271.

- Catania AC, Matthews TJ, Silverman PJ, Yohalem R. Yoked variable-ratio and variable-interval responding in pigeons. Journal of the Experimental Analysis of Behavior. 1977;28:155–161. doi: 10.1901/jeab.1977.28-155.

- Denniston JC, Chang RC, Miller RR. Massive extinction attenuates the renewal effect. Learning and Motivation. 2003;34:68–86.

- Fisher EB, Green L, Calvert AL, Glasgow RE. Incentives in the modification and cessation of cigarette smoking. In: Schachtman TR, Reilly S, editors. Associative learning and conditioning theory: Human and non-human applications. Oxford University Press; Oxford: 2011. pp. 321–342.

- Flaherty CF. Incentive relativity. Cambridge University Press; New York: 1996.

- Higgins ST, Heil SH, Lussier JP. Clinical implications of reinforcement as a determinant of substance abuse disorders. Annual Review of Psychology. 2004;55:431–461. doi: 10.1146/annurev.psych.55.090902.142033.

- Laborda MA, Witnauer JE, Miller RR. Contrasting AAC and ABC renewal: The role of context associations. Learning & Behavior. 2011;39:46–56. doi: 10.3758/s13420-010-0007-1.

- Leitenberg H, Rawson RA, Bath K. Reinforcement of competing behavior during extinction. Science. 1970;169:301–303. doi: 10.1126/science.169.3942.301.

- Leitenberg H, Rawson RA, Mulick JA. Extinction and reinforcement of alternative behavior. Journal of Comparative and Physiological Psychology. 1975;88:640–652.

- Lieving GA, Lattal KA. Recency, repeatability, and reinforcement retrenchment: An experimental analysis of resurgence. Journal of the Experimental Analysis of Behavior. 2003;80:217–233. doi: 10.1901/jeab.2003.80-217.

- Mackintosh NJ. The psychology of animal learning. Academic Press; San Diego, CA: 1974.

- Nakajima S, Tanaka S, Urushihara K, Imada H. Renewal of extinguished lever-press responses upon return to the training context. Learning and Motivation. 2000;31:416–431.

- Nevin JA, Grace RC. Behavioral momentum and the Law of Effect. Behavioral and Brain Sciences. 2000;23:73–90. doi: 10.1017/s0140525x00002405.

- Podlesnik CA, Jimenez-Gomez C, Shahan TA. Resurgence of alcohol seeking produced by discontinuing non-drug reinforcement as an animal model of drug relapse. Behavioural Pharmacology. 2006;17:369–374. doi: 10.1097/01.fbp.0000224385.09486.ba.

- Podlesnik CA, Shahan TA. Behavioral momentum and relapse of extinguished operant responding. Learning & Behavior. 2009;37:357–364. doi: 10.3758/LB.37.4.357.

- Quick SL, Pyszczynski AD, Colston KA, Shahan TA. Loss of alternative non-drug reinforcement induces relapse of cocaine-seeking in rats: Role of dopamine D(1) receptors. Neuropsychopharmacology. 2011;36:1015–1020. doi: 10.1038/npp.2010.239.

- Rauhut AS, Thomas BL, Ayres JJB. Treatments that weaken Pavlovian conditioned fear and thwart its renewal in rats: Implications for treating human phobias. Journal of Experimental Psychology: Animal Behavior Processes. 2001;27:99–114.

- Rawson RA, Leitenberg H, Mulick JA, Lefebvre MF. Recovery of extinction responding in rats following discontinuation of reinforcement of alternative behavior: A test of two explanations. Animal Learning & Behavior. 1977;5:415–420.

- Reid RL. The role of the reinforcer as stimulus. British Journal of Psychology. 1958;49:202–209. doi: 10.1111/j.2044-8295.1958.tb00658.x.

- Rescorla RA, Skucy JC. Effect of response-independent reinforcers during extinction. Journal of Comparative and Physiological Psychology. 1969;67:381–389.

- Shahan TA, Sweeney MM. A model of resurgence based on behavioral momentum theory. Journal of the Experimental Analysis of Behavior. 2011;95:91–108. doi: 10.1901/jeab.2011.95-91.

- Thomas BL, Vurbic D, Novak C. Extensive extinction in multiple contexts eliminates the renewal of conditioned fear in rats. Learning and Motivation. 2009;40:147–159.

- Todd TP, Winterbauer NE, Bouton ME. Effects of amount of acquisition and contextual generalization on the renewal of instrumental behavior after extinction. Learning & Behavior. 2012. doi: 10.3758/s13420-011-0051-5. In press.

- Weise-Kelly L, Siegel S. Self-administration cues as signals: Drug self-administration and tolerance. Journal of Experimental Psychology: Animal Behavior Processes. 2001;27:125–136.

- Welker RL, McAuley K. Reductions in resistance to extinction and spontaneous recovery as a function of changes in transportational and contextual stimuli. Animal Learning & Behavior. 1978;6:451–457.

- Winterbauer NE, Bouton ME. Mechanisms of resurgence of an extinguished operant response. Journal of Experimental Psychology: Animal Behavior Processes. 2010;36:343–353. doi: 10.1037/a0017365.

- Winterbauer NE, Bouton ME. Mechanisms of resurgence II: Response-contingent reinforcers can reinstate a second extinguished behavior. Learning and Motivation. 2011;42:154–164. doi: 10.1016/j.lmot.2011.01.002.

- Winterbauer NE, Bouton ME. Effects of thinning the rate at which the alternative behavior is reinforced on resurgence of an extinguished instrumental response. Journal of Experimental Psychology: Animal Behavior Processes. 2012;38:279–291. doi: 10.1037/a0028853.