Abstract

REDCap (Research Electronic Data Capture) is a web-based software solution and tool set that allows biomedical researchers to create secure online forms for data capture, management and analysis with minimal effort and training. The Shared Data Instrument Library (SDIL) is a relatively new component of REDCap that allows sharing of commonly used data collection instruments for immediate study use by research teams. Objectives of the SDIL project include: 1) facilitating reuse of data dictionaries and reducing duplication of effort; 2) promoting the use of validated data collection instruments, data standards and best practices; and 3) promoting research collaboration and data sharing. Instruments submitted to the library are reviewed by a library oversight committee, with rotating membership from multiple institutions, which ensures quality, relevance and legality of shared instruments. The design allows researchers to download the instruments in a consumable electronic format in the REDCap environment. At the time of this writing, the SDIL contains over 128 data collection instruments. Over 2500 instances of instruments have been downloaded by researchers at multiple institutions. In this paper we describe the library platform, provide detail about experience gained during the first 25 months of sharing public domain instruments and provide evidence of impact for the SDIL across the REDCap consortium research community. We postulate that the shared library of instruments reduces the burden of adhering to sound data collection principles while promoting best practices.

Keywords: Validated instruments, Data Collection, Data Sharing, Informatics, Translational Research, REDCap

1. Introduction

The value of Electronic Data Capture (EDC) in clinical research is well established [1–3]. As a result, several commercially available enterprise-strength clinical trials management systems (CTMS) incorporate EDC in addition to workflow for the pharmaceutical industry. Although a good number of academic medical centers have adopted a variety of CTMS solutions [4], a commercial one-size-fits-all approach to EDC has been elusive. REDCap (Research Electronic Data Capture) has been instrumental in filling this gap [5, 6], and is widely used in many academic medical centers. REDCap was initially developed at Vanderbilt University, and is currently supported collaboratively by a large consortium of domestic and international partners. REDCap adoption has grown significantly since its release in 2004. At the time of this writing, it is in active use at over 414 institutions world-wide and supports more than 52,000 users.

Standardization remains a challenge for most EDC systems that focus primarily on flexibility and efficiency of data entry. Collecting data in a standardized fashion is integral to research quality, consistency, and reproducibility. However, choosing common data standards for clinical research is difficult due to a diversity of interests in the clinical and translational research community and due to technical challenges related to the structure and intended use of various candidate data and terminology standards [7, 8].

Research best practices stipulate the use of validated data collection instruments such as the RAND quality of life 36-Item Short Form Survey (SF-36) [9], the Patient Reported Outcomes Measurement Information System (PROMIS) [10], the Patient Health Questionnaire-9 (PHQ-9) depression module [11], and many others. The Shared Data Instrument Library (SDIL) was designed to promote the reuse of validated instruments by research teams across the entire REDCap consortium. In addition to the technical infrastructure required to host and share instruments with REDCap users, a governance committee structure was assembled to oversee and guide operational aspects. The REDCap Library Oversight Committee (REDLOC) was created with the mission to review and prioritize library content and procedures and to promote the adoption of validated instruments and standards-based forms for data collection. This manuscript describes the overall REDCap SDIL approach to instrument sharing and the REDLOC procedural approach to instrument identification and accrual. We also report here lessons learned and metrics related to researcher uptake of shared instruments during the initial 25 months of SDIL operation.

2. Background

REDCap was designed to allow researchers with a robust data management plan to quickly define project-specific data capture forms and launch protocol data collection in an accelerated period of time [5]. Research projects are inherently diverse and are developed independently by investigative teams across the spectrum of biomedical sciences. As such these projects tend to have diverse data dictionaries for representing common data elements such as demographics, clinical findings and laboratory results. Providing pre-built, shared forms may shorten the database development process while promoting harmonization of data collection. Although data collection form creation is straightforward in REDCap, researchers relayed early in the program their desire to reuse validated instruments developed previously by their own teams or by other research teams in the consortium. In addition to saving setup time for research studies, creating easily consumable, pre-defined data collection instruments from a shared library would facilitate the harmonization of data collection across multiple studies since they would be using a common data dictionary.

In the REDCap consortium software distribution model, all adopting sites host and manage their own web/database servers and also support the local researcher population in creating and managing new research projects. While this model works well for maximally protecting research study data and enabling complete autonomy by research institutions, the decentralized design is suboptimal for promoting the rapid inclusion and reuse of instruments from a central source. As a result a technical solution was devised for centralized electronic storage and distribution of data collection instruments via a shared library. The library is hosted on one central server at Vanderbilt University and is accessible to all the REDCap installations across the consortium. The REDCap base code package running at each local site includes several features for building and managing data collection instruments for each created study. These features include a user-friendly interface for browsing and searching through the library and one-click importation of the selected instrument to the local REDCap study for immediate use by research teams.

Providing a centralized instrument library and technical mechanism for easy dissemination and consumption of stored instruments was an essential piece of the SDIL challenge. However, a library is only useful if it contains a body of sharable common forms (in this case validated data collection instruments pre-coded in the REDCap data dictionary format). We established the REDCap Library Oversight Committee (REDLOC) to create and implement policy necessary to fulfill the following SDIL objectives: 1) facilitate reuse of data dictionaries and reduce duplication of effort; 2) promote use of validated data collection instruments; 3) facilitate the adoption of data standards (standard forms and terminologies) when appropriate; and 4) promote and facilitate data sharing by harmonizing data collection instruments across multiple projects and researchers.

Here we should clarify the meaning of “validated data collection instruments”. Validity is defined as the degree to which the data measure what they are intended to measure – that is, the results of a measurement correspond to the true state of the phenomenon being measured [12]. Thus, validated instruments have been subjected to a procedure (a validation study) to assess the degree to which the measurement obtained by the instrument is real. For example, a questionnaire used to screen for depression should identify a high proportion of persons with the condition, and should be “negative” in a high proportion of persons who are not depressed. In contrast, “standardized data collection” implies the use of standards as defined by Richesson et al. [7]. This includes standard data structures or data models such as the Clinical Data Acquisition Standards Harmonization (CDASH) Adverse Events module and National Cancer Institute (NCI) Common Data Elements, and standard terminologies or value sets such as the Centers for Disease Control and Prevention (CDC) race and ethnicity codes used in the NCI Demography Case Report Form (CRF) Module.

3. Methods

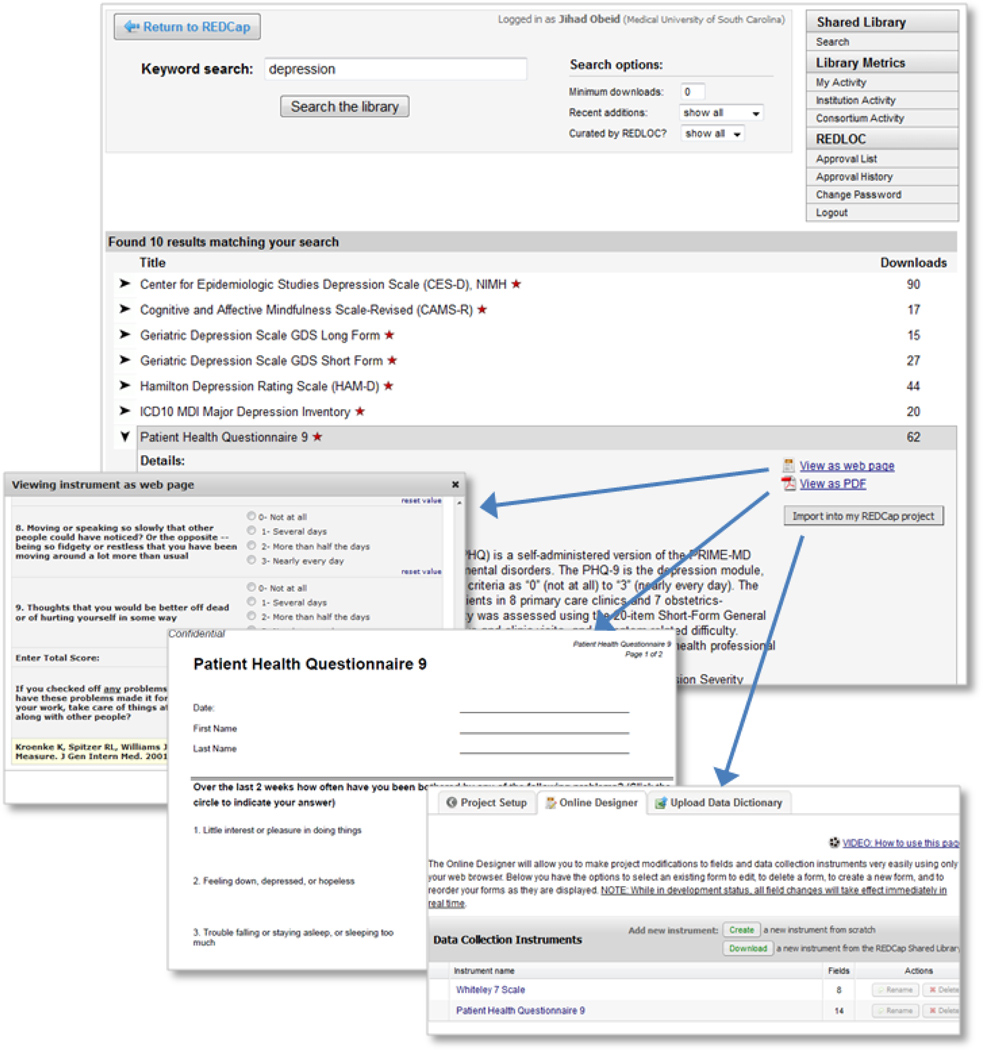

The REDCap SDIL technical infrastructure was developed according to plan and is now an integral component of the REDCap suite of data management tools. Research end-users can browse the library’s content from the central program website (www.project-redcap.org under the “Library” tab) or through the “Project Setup” module in their local REDCap server installation (figure 1). Data collection instruments are listed alphabetically by title and a search function allows users to filter the list and locate instruments using keywords, date of inclusion and/or number of downloads. More detailed information can be seen when a specific instrument is selected including references, description, view of the web-enabled form of the instrument and a PDF copy. The Shared Library page contains a table of real time library utilization metrics and a link to a form that allows public website users to suggest other instruments for inclusion in the library. Once an instrument is identified for use by a REDCap researcher end-user, he/she agrees to a ‘terms of use’ clause (includes general SDIL terms plus specific instrument conditions – e.g. citation requirements).

Figure 1. SDIL screens and researcher workflow.

The REDCap consortium website hosts the SDIL. Users search for instruments based on annotation fields completed when instruments are ‘checked in’ to the library. Once an instrument is identified, users have one-click access to see the instrument rendered in REDCap data collection mode or as a PDF document. When users access the library via their local REDCap installation, they are also presented with a button that allows them to import the data collection instrument for immediate use within their REDCap research projects.

Users can then proceed to import the instrument’s data dictionary into their local REDCap project for immediate use. Behind the scenes, this workflow process results in the download of the data dictionary code in native XML library format from the library server, parsing of the XML by the local REDCap instance, and integration into the local project for immediate use by the researcher end-user. Since the data dictionary is now incorporated into the local project, the researcher end-user has the option to add, edit or delete fields, if they so choose, at the risk of diminishing or losing the validity of the data collection instrument.
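The download, parse and integrate steps described above can be pictured with a minimal sketch. The XML layout, the element and attribute names (`instrument`, `field`, `name`, `type`, `label`) and the functions `parse_instrument` and `import_instrument` are hypothetical and chosen only for illustration; the actual SDIL XML library format is not specified in this paper.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML payload; the real SDIL library format is not described here.
LIBRARY_XML = """
<instrument name="example_scale">
  <field name="ex_q1" type="radio" label="Question 1"/>
  <field name="ex_q2" type="text" label="Question 2"/>
</instrument>
"""

def parse_instrument(xml_text):
    """Parse the (assumed) library XML into a list of field dictionaries."""
    root = ET.fromstring(xml_text.strip())
    form = root.get("name")
    return [
        {"form": form, "name": f.get("name"), "type": f.get("type"), "label": f.get("label")}
        for f in root.findall("field")
    ]

def import_instrument(project_fields, xml_text):
    """Return the project's data dictionary with the parsed fields appended.

    Refuses to import when a field name already exists in the project,
    so that existing fields are never silently overwritten.
    """
    new_fields = parse_instrument(xml_text)
    existing = {f["name"] for f in project_fields}
    clashes = [f["name"] for f in new_fields if f["name"] in existing]
    if clashes:
        raise ValueError(f"field names already in project: {clashes}")
    return project_fields + new_fields

# A local project with one existing field, then the imported instrument.
project = [{"form": "demographics", "name": "age", "type": "text", "label": "Age"}]
project = import_instrument(project, LIBRARY_XML)
print([f["name"] for f in project])
```

Rejecting name clashes rather than overwriting is one possible design choice for this sketch; it keeps the imported instrument intact and leaves any renaming decision to the research team.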

3.1 Policy and Governance

The REDLOC group was assembled prior to project launch as an overseeing body to assist with the management of SDIL content, with special emphasis on end-user relevance and availability. The committee also reviews library utilization metrics, long-term sustainability and direction of the content areas. The committee was formed in October, 2009 by assembling ten volunteer members and chairs from the REDCap consortium and a program coordinator from Vanderbilt University. As the number of incoming instruments increased and the value of the library became evident, additional volunteers were added to the committee during the second year, including two librarians, domain experts and expert coders who spontaneously formed a team that enabled the steady growth observed in the library content. Currently the committee is composed of 12 members, two of whom are co-chairs. Membership is rotated annually and is open to volunteers from any institution in the consortium. Recruitment is announced annually on the dedicated REDCap consortium listserv. REDLOC members meet twice each month by webinar and review recommendations for instrument inclusion asynchronously by e-mail. The average total time commitment for REDLOC members is approximately 3 hours per month.

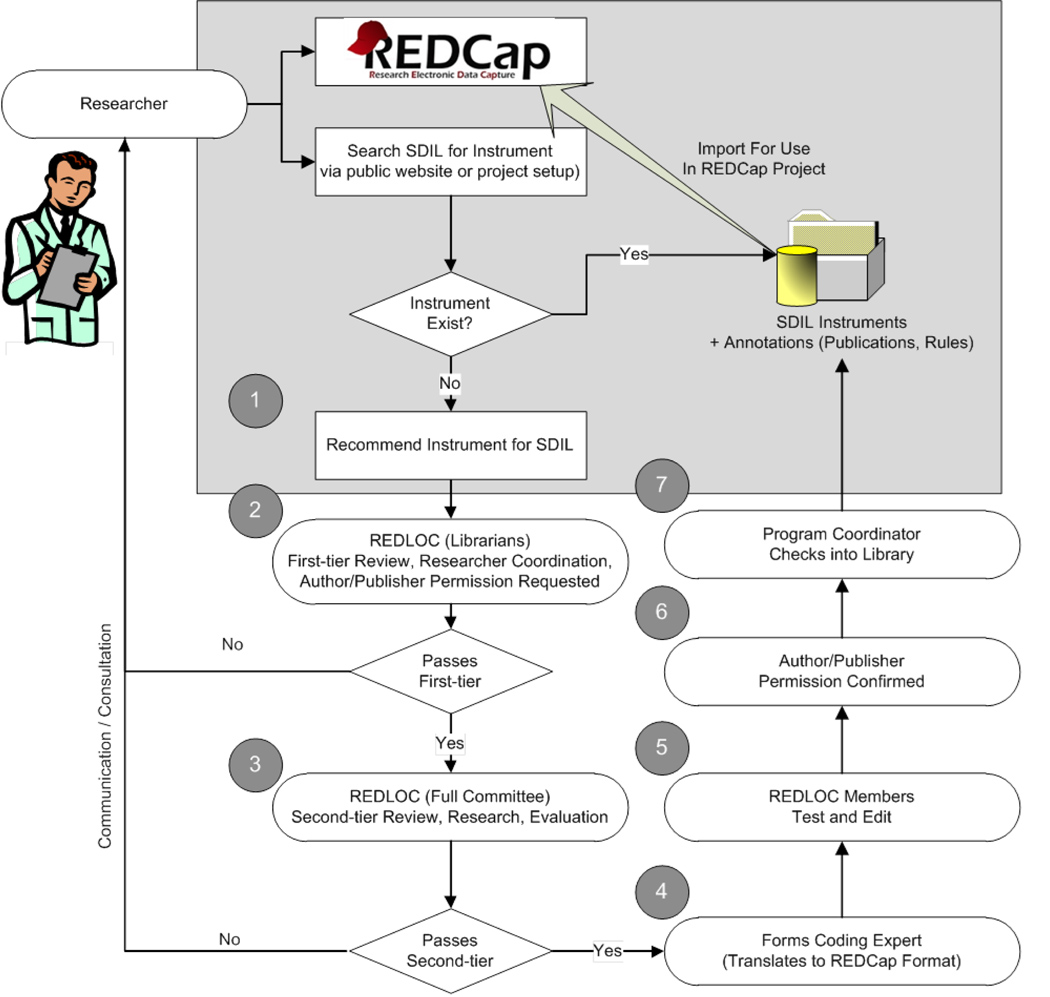

Existing library content is open to all consortium member installations. Furthermore, the workflow for adding instruments to the library was designed to be as open to research teams as possible (see figure 2). The process begins when a research end-user completes a recommendation for an instrument on the library website (mentioned above under the “Library” tab). A REDLOC librarian is assigned to: 1) confirm that the recommended instrument has been previously validated and published; 2) identify the copyright owner; and 3) confirm that no license fee is required for use under standard terms and conditions for research teams at academic and non-profit institutions using the REDCap data management platform. Next, REDLOC members are contacted to independently determine a priority score for the proposed instrument using objective criteria including evidence of scientific validation in peer-reviewed literature, evidence of relevance (e.g. citations in peer-reviewed literature, evaluation studies by domain experts, and publications that report using the proposed instruments) and estimation of the time required for coding the instrument.
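As an illustration of how independently assigned priority scores might be combined, the following sketch averages weighted ratings from several reviewers and ranks the proposals. The weights, the 1-5 rating scale, the criterion keys and the instrument names are all hypothetical; the text names the criteria but does not give a scoring formula.

```python
from statistics import mean

# Hypothetical weights for the three criteria named in the text.
WEIGHTS = {"validation": 0.4, "relevance": 0.4, "coding_effort": 0.2}

def member_score(ratings):
    """Weighted score for one reviewer.

    Each rating is assumed to be on a 1-5 scale; coding_effort is rated
    so that 5 means the least coding time is required.
    """
    return sum(WEIGHTS[k] * ratings[k] for k in WEIGHTS)

def priority(reviews):
    """Average the independent member scores for one proposed instrument."""
    return mean(member_score(r) for r in reviews)

# Made-up ratings from two reviewers for two placeholder proposals.
proposals = {
    "instrument_a": [{"validation": 5, "relevance": 4, "coding_effort": 3},
                     {"validation": 4, "relevance": 4, "coding_effort": 3}],
    "instrument_b": [{"validation": 3, "relevance": 2, "coding_effort": 5},
                     {"validation": 3, "relevance": 3, "coding_effort": 4}],
}

# Highest priority first; this order would drive assignment for coding.
ranked = sorted(proposals, key=lambda name: priority(proposals[name]), reverse=True)
print(ranked)
```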

Figure 2. Library workflow.

The workflow emphasizes continuous research-team engagement. The process begins with (1) an end-user recommendation for an instrument (as described in the narrative), followed by a screening and review process by the REDLOC librarian and review committee respectively (steps 2 and 3). If approved, the instrument is assigned to a committee member for coding into REDCap format (4) and then undergoes testing and verification (5). The REDLOC Program Coordinator sends an official permission letter (6) to the copyright owner (author or publisher) with a copy of the REDCap form of their instrument. Upon receipt of formal permission for publication (7), the new instrument is included in the library and shared, with proper acknowledgement and terms of use included.

In order of priority, the instrument is assigned to a committee member for coding into REDCap format. Once available in REDCap format, REDLOC members are assigned to verify that the coded version is as faithful and complete a copy as possible of the source document (this includes the validation of permissible value sets if applicable). They also test the form to verify that entered data is saved correctly. The REDLOC Program Coordinator sends an official letter to the copyright owner, author or publisher (to be completed, signed and returned), and a copy of the REDCap form of their instrument. In some cases when working with open standards, agreements are reached with the respective organization (e.g. CDISC, NCI or PROMIS) that result in the sharing of instruments and the inclusion of specific language in the click through “terms of use”. Upon receipt of formal permission from the copyright owner, author or publisher for inclusion, the new instrument is included in the library and shared, with proper acknowledgement and terms of use.

4. Results

The REDLOC team tracks utilization of the library and continually monitors progress to assess relevance to and impact for consortium researchers. Since the inception of the SDIL in January, 2010, a total of 184 instruments were considered for inclusion into the library based on an initial needs assessment and ongoing recommendations from users. Forty two of the instruments submitted for review were rejected for various reasons including fees and/or licensing requirements from the publisher or author, or attached conditions for distribution that could not be met at the time of inclusion, such as regular utilization reports back to the author.

As of June, 2012, 128 instruments have been included in the library. This number includes a few large instruments that were broken down into smaller functional units, while maintaining instrument validity, in order to optimize for more granular choices and flexibility of utilization by end users, for example the Behavioral Risk Factor Surveillance System Questionnaire (BRFSS) [13], and the PROMIS forms [10].

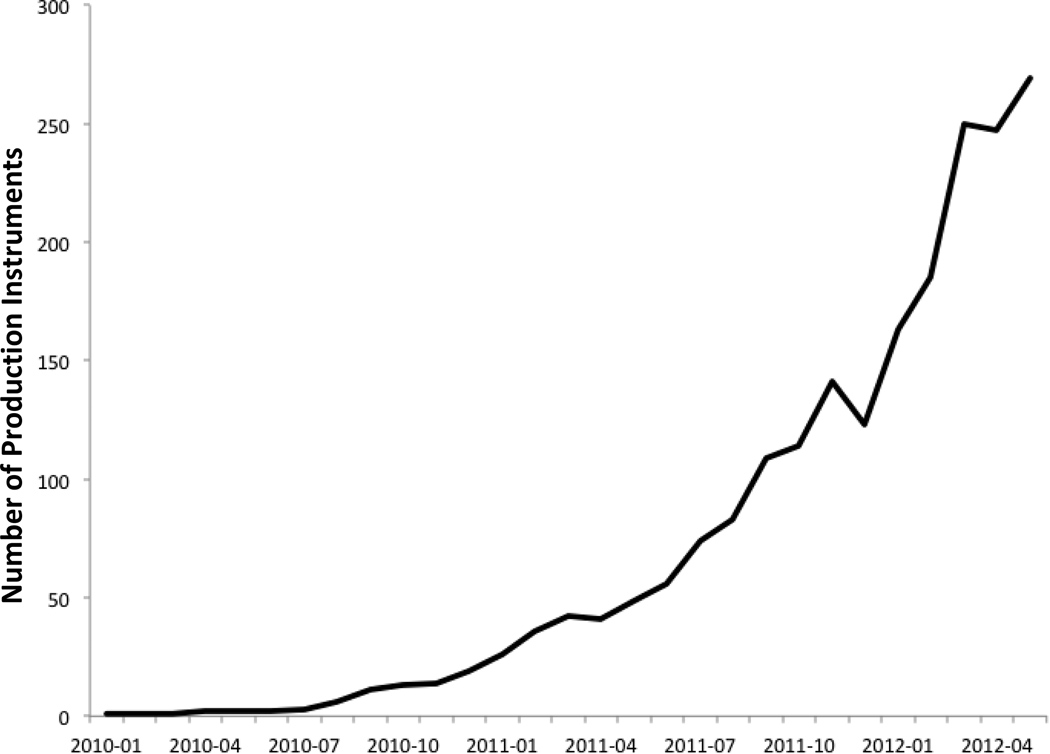

During the first 30 months of operation, 2578 unique instrument downloads were recorded by all consortium members. Instrument downloads are typically initiated by researchers to create databases for studies in the development phase, but they may also occur during REDCap demonstrations or in some cases where study databases are initiated but never moved to production (reasons might include lack of funding or regulatory denial). Although all REDCap project data are hosted locally by consortium partner sites and are not discoverable by other sites, we have embedded into the software platform the ability for local informatics teams to regularly report rough metrics of use and impact back to a global consortium impact database (e.g. pooled data detailing the number of site-supported projects and end-users). This aggregate reporting also includes detection of downloaded SDIL instruments that are currently supporting local study databases. Instrument use data are de-coupled from other reported information, but provide sufficient detail to pool and study the impact of the SDIL on a by-instrument and by-institution basis. We took a conservative approach in generating metrics for this manuscript, including only statistics for projects that have downloaded instruments and eventually moved to production (i.e. utilized the instrument to collect live data from participants, not just for development or testing). To date, there have been 292 downloaded instances of 72 instruments (out of 128 or 56%) that have been utilized in production. Figure 3 shows the adoption of SDIL instruments by projects in production over time.
The most utilized instruments are: Rand 36-Item SF Health Survey Instrument v1.0 [9], 52 projects; Charlson Comorbidity Index [14], 21 projects; Center for Epidemiologic Studies Depression Scale (CESD) [15], 22 projects; Agitated Behavior Scale [16], 17 projects; Barthel Index [17], 11 projects; and Hamilton Depression Rating Scale (HAM-D) [18], 11 projects. Thus far, 53 different institutions have utilized the SDIL in at least one production project.
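The proportion quoted above follows directly from the reported counts. The short calculation below reproduces it and also derives the average number of production instances per utilized instrument, a figure that is not stated in the text and is shown only as a worked example. The variable names are illustrative.

```python
# Counts reported in the Results section.
instruments_in_library = 128
instruments_in_production = 72
production_instances = 292

# Share of library instruments used in at least one production project.
share_in_production = instruments_in_production / instruments_in_library

# Average production instances per utilized instrument (derived here, not reported).
instances_per_instrument = production_instances / instruments_in_production

print(f"{share_in_production:.0%} of library instruments used in production")
print(f"{instances_per_instrument:.1f} production instances per utilized instrument")
```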

Figure 3. Library metrics.

This graph demonstrates the utilization of SDIL instruments over time. The y-axis depicts the number of projects in production that are consuming shared instruments from the library. The x-axis denotes time in months. The data were collected from across the consortium using routinely, centrally collected utilization metrics for projects in production. The dips in the graph reflect projects that have since been retired and rendered inactive.

5. Discussion

The choice of a validated instrument or data collection form is a per-study, science-driven decision that is made by research teams. Having pre-coded data capture instruments that meet research projects’ requirements enables data coordinators and programmers to bypass the form design phase prior to the commencement of data collection. This inherently shortens the initial phase of implementing data management and, consequently, the research cycle. The approach taken here is to provide researchers the opportunity to suggest data collection forms and instruments for inclusion in a vast library of pre-coded instruments deemed to be in demand by the fast-growing REDCap research community. We have set out to evaluate the success of this approach by discussing progress in achieving the original objectives of the SDIL.

5.1. Reuse of Data Dictionaries

Many investigators resort to de novo creation of data dictionaries when starting a new research project. One of the great features of REDCap is that it empowers researchers to code their own CRFs in a flexible and straightforward manner, but this process can be time-consuming and may result in diverse implementations for the same data elements across multiple projects. REDCap CRF data dictionaries can be saved and reused by the same research team or by other teams if they are aware of each other’s work, but this requires somewhat savvy users to export, move and import these dictionaries [5]. A key missing component in this scenario is a mechanism to facilitate the exchange of data dictionaries. The SDIL addresses that deficiency by providing a centralized location where researchers can find pre-coded, validated CRF data dictionaries for easy incorporation into their REDCap projects. Moreover, researchers can recommend validated data dictionaries for inclusion into the library for potential collaborators to use for their projects. A similar effort is ongoing in the behavioral and social science field as part of the Grid-Enabled Measures (GEM) project built upon the caBIG® platform [19]. However, what differentiates SDIL from GEM is the fact that the instruments are already encoded in a consumable REDCap data dictionary format and can be readily incorporated into new research projects or pre-existing projects’ data dictionaries by research teams at any institution using the REDCap platform. Thus the novelty of this approach is in the provision of a curated set of consumable instruments in the form of pre-coded REDCap data dictionaries that are readily available at the fingertips of researchers. Once selected, an instrument can be automatically inserted into the user’s research project and used for data entry.
The fact that these are curated instruments eliminates the burden on research users by providing references about validity, accuracy and availability in the public domain. The final decision about relevance and use in a given research project remains with the researchers who are the domain and subject matter experts in their field.

5.2. Promotion of Validated Data Collection Instruments Utilization

There exist several validated data collection instruments that are highly documented with dedicated websites, downloadable forms and descriptions of scoring methods, along with clear terms and conditions for use [9, 10, 13]. However, these forms are not available in a format amenable to electronic data capture and therefore require programming or coding to turn them into functional databases. Alternatively, several validated data collection instruments are highly documented, but do not have dedicated portals to disseminate their use. Once researchers choose their instruments or measures, they often turn to REDCap to create their study databases incorporating the chosen instruments before they can begin data entry. The SDIL resource addresses these issues by making several of these public domain instruments available for download as data dictionaries that can be incorporated right into the database project ready for use. As such, the SDIL not only significantly shortens database development time for researchers, but also promotes the use of the “right” tools for the job, by providing a path of least effort to an expanding collection of validated instruments or measures recommended by the research community for their research projects. The exponential growth in the number of downloads as seen in figure 3 provides evidence in support of this hypothesis. Finally, REDLOC has considered instruments that have been validated in languages other than English (e.g. Spanish and French). The committee has reached out beyond its membership as needed to find reviewers who are qualified for such reviews. A few non-English validated instruments are currently under review. We expect that demand for these instruments will grow as the REDCap consortium expands.

5.3. Facilitation of the Adoption of Data Standards

Currently, a standards affinity group within the consortium which includes REDLOC members is in conversations with CDISC on how to facilitate adoption of CDASH standards [20]. As of this writing, four CDASH forms are available in the library: CDASH V1.1 Adverse Events, CDASH V1.1 Common Identifiers, CDASH V1.1 Demographics, and CDASH V1.1 Protocol Deviations. Also included is the NCI Demography CRF Module based on the NCI Common Data Elements (CDE). The inclusion of such complex forms will simplify their adoption on relevant projects. This is evidenced by the number of downloads for each of these forms (ranging from 7 to 74 for a cumulative total of 162 as of this writing). Other standards-based modules will be added to the SDIL as they are coded into REDCap format. It might be worth noting that although the library includes some standards-based data dictionaries for clinical trials such as CDISC-CDASH it is intended to provide the flexibility of including any publicly available, validated instrument where such standards may not apply, for example, the National Institutes of Health (NIH) PROMIS [10], and the RAND 36-Item Short Form Health Survey developed for the Medical Outcomes Study [9].

5.4. Facilitation of Data Sharing

As mentioned earlier, data collection instruments and forms created for similar projects by different investigators often result in diverse data dictionaries. As a result, collaborators at different sites have a hard time pooling data from their diverse projects on the same research topic. One of the advantages of the SDIL is the harmonization of electronic data collection instruments and forms by allowing researchers at different sites to download these instruments from the library and instantaneously incorporate them into their projects. This results in identical data dictionaries at those sites provided individual researchers do not edit the forms after download. This significantly facilitates sharing and pooling of data between those sites. Moreover, we have recently developed an alternate “bottom-up” process where end-users can upload locally developed instruments or forms, for sharing and collaboration with other researchers at their own institution or at other institutions in the REDCap consortium, without full REDLOC approval. To qualify for upload to the SDIL in bottom-up mode, the instruments or forms have to be pushed from development status to production status in the local REDCap project, then they undergo an expedited review by the REDLOC librarian to confirm intent of sharing with the whole consortium and that there are no copyright infringements. These non-curated uploads are distinguished from the curated ones by flagging curated instruments with an asterisk in the library listing. As the committee gains more experience with this approach, evaluation of these submissions for quality control, heterogeneity and/or inconsistency may become necessary.
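The condition that data dictionaries stay identical across sites can be checked mechanically before pooling. The sketch below compares two dictionaries field by field and reports any field that is missing on one side or defined differently. The simplified structure (field name mapped to a type and a choices string), the field names and the function `dictionary_differences` are assumptions made for illustration and do not represent REDCap's own format or API.

```python
def dictionary_differences(site_a, site_b):
    """Compare two data dictionaries keyed by field name.

    Each dictionary maps a field name to a (field_type, choices) tuple.
    Returns the sorted field names that are missing on one side or
    defined differently on the two sides.
    """
    names = set(site_a) | set(site_b)
    return sorted(n for n in names if site_a.get(n) != site_b.get(n))

# Hypothetical instrument as downloaded from the library.
downloaded = {
    "mood_q1": ("radio", "0, Never | 1, Sometimes | 2, Often"),
    "mood_q2": ("radio", "0, No | 1, Yes"),
}

site_a = dict(downloaded)                         # unmodified copy
site_b = dict(downloaded)
site_b["mood_q2"] = ("radio", "1, Yes | 2, No")   # locally edited coding

unchanged = dictionary_differences(site_a, downloaded)
edited = dictionary_differences(site_a, site_b)
print(unchanged)  # an empty list means the data can be pooled as-is
print(edited)     # fields listed here must be reconciled before pooling
```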

5.5. Limitations

One of the advantageous features of REDCap is the fact that researchers own the data dictionaries and are responsible for designing their own data collection forms [5, 6]. However, this also implies that researchers are free to modify validated instruments or standardized data collection forms after they have been downloaded from SDIL. In these instances, the instruments can lose their value as validated instruments, and in the case of standards can break the standards compliance. Though these are research-driven decisions, the SDIL informs the users of this risk during the instrument download process. More robust alerts when such changes are attempted will likely be considered in future work, but the ultimate capability to make any changes will remain with the research team.

A second possible SDIL drawback lies in the fact that the resource might make it too easy for researchers to erroneously choose an instrument that is not quite applicable to their research. However, this is unlikely in a typical quantitative research design workflow where independent variables and outcome variables are identified very early in the design process, usually prior to database design. As previously stated, the selection of the best instruments or data collection forms for a study should be based primarily on research-specific criteria.

Third, although we realize that approval by a centralized committee for inclusion of instruments into the library might impose a bottleneck on the process and may impact scalability, we believe the benefit of quality and copyright control on validated instruments outweighs this risk. If at a later date this becomes a problem due to high demand for inclusion, this model will be reconsidered and/or complemented by other mechanisms. Moreover, the bottom-up approach where submissions get an expedited review by the REDLOC librarian should help overcome this bottleneck.

Fourth, it is difficult to evaluate the impact on research productivity beyond basic download and utilization metrics. This is further discussed below.

5.6. Future Directions

Although the library has shown significant growth over the past two years, we believe there is still a lot to be done. Currently, work continues on adding more validated data collection instruments and standards-based modules necessary for pooling of data across multiple sites. Striking examples are the evolving CDISC standard mentioned above, the NCI CDEs maintained in the Cancer Data Standards Registry and Repository (caDSR) [21], and other bodies of standards that are being considered for inclusion. Another area that might improve adoption of these standards is work on REDCap interoperability with some existing electronic CRF templates such as CDISC Operational Data Model (ODM), Study Data Tabulation Model (SDTM) and Define.xml – an extension of the ODM [20]. We are also exploring a semi-automated methodology to expedite the conversion of lengthy electronic CRF formats such as the CDASH V1.1 into REDCap library consumable data dictionaries. This methodology could eventually be applied to other standards such as the NCI CDEs.

Another feature being considered is the incorporation of concept linking for the data elements in REDCap. This would allow linking of data elements to various bodies of standard ontologies such as SNOMED-CT or NCI thesaurus. These concept mappings could be incorporated into the library, and researchers could then download these instruments into their REDCap project maintaining ease of use while allowing future mapping of data to standard concepts. As a result, data from various projects could be pooled together for future meta-analyses.

Although current metrics demonstrate the utility of the SDIL, future impact analysis may be necessary to evaluate effect on productivity. This could be accomplished by further tracking of utilization, user surveys and evaluation of resulting publications.

6. Conclusion

REDCap provides users a robust data management platform for their research projects and has enjoyed tremendous growth over the past few years because of its grass roots design, which facilitates research data collection needs. The addition of the SDIL further reduces the burden of sound data collection while promoting best practices. The creation of a centralized location of public domain validated instruments, pre-coded for immediate use, will inevitably significantly decrease duplication of effort. More importantly, we project that SDIL will not only simplify the use of suitable data collection instruments but will also create tremendous opportunities for data sharing within and across research teams. The workflow presented above is a new model that promotes the utilization of validated data collection instruments and standards-based forms, and facilitates data sharing. All are paramount in today’s data-driven biomedical research.

Highlights.

We present a new model for sharing electronic data capture instruments.

The instruments are shared within an established electronic data collection system.

Re-use of data dictionaries reduces the burden for sound data collection.

The library promotes the use of validated instruments.

A secondary goal is to promote data sharing.

Acknowledgements

We thank past and current REDLOC members (Todd Ferris – Stanford University, Rob Schuff – Oregon Health and Science University, Bob Wong – University of Utah, Elizabeth Wood – Weill-Cornell Medical College, Ray Balise – Stanford University, Denise Canales – University of Kentucky, Linda Carlin – University of Colorado Denver, Penni Hernandez – Stanford University, Jessie Lee – Weill-Cornell Medical College, Fatima Barnes – Meharry Medical College, Jennifer Ann Lyon – University of Florida, Jane Welch – University of North Carolina, Denise Babineau – Case Western Reserve University, Jason Lones – Rocky Mountain Poison and Drug Center), NCI contributors (Dianne Reeves, Denise Warzel) and CDISC participants (Rhonda Facile, Shannon Labout). This publication was supported by the following grants: the South Carolina Clinical & Translational Research (SCTR) Institute, UL1 RR029882 and UL1 TR000062 (the Medical University of South Carolina); UL1 RR026314 and UL1 TR000077 (University of Cincinnati); UL1 RR024975 and UL1 TR000445 (Vanderbilt University); Center for Collaborative Research in Health Disparities, G12 RR003051 and G12 MD007600 (University of Puerto Rico Medical Sciences Campus); UL1 RR024150 and UL1 TR000135 (Mayo Clinic); R24 HD042849 awarded to the Population Research Center at The University of Texas at Austin; Clinical & Translational Science Collaborative, UL1 RR024989 and UL1 TR000439 (Case Western Reserve University).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Bart T. Comparison of Electronic Data Capture with Paper Data Collection – Is There Really an Advantage? Pharmatech. 2003:1–4.

- 2. Wahi MM, Parks DV, Skeate RC, Goldin SB. Reducing errors from the electronic transcription of data collected on paper forms: a research data case study. J Am Med Inform Assoc. 2008;15:386–389. doi: 10.1197/jamia.M2381.

- 3. Welker JA. Implementation of electronic data capture systems: barriers and solutions. Contemp Clin Trials. 2007;28:329–336. doi: 10.1016/j.cct.2007.01.001.

- 4. DiLaura R, Turisco F, McGrew C, Reel S, Glaser J, Crowley WF Jr. Use of informatics and information technologies in the clinical research enterprise within US academic medical centers: progress and challenges from 2005 to 2007. J Investig Med. 2008;56:770–779. doi: 10.2310/JIM.0b013e3175d7b4.

- 5. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381. doi: 10.1016/j.jbi.2008.08.010.

- 6. Franklin JD, Guidry A, Brinkley JF. A partnership approach for Electronic Data Capture in small-scale clinical trials. J Biomed Inform. 2011;44(Suppl 1):S103–S108. doi: 10.1016/j.jbi.2011.05.008.

- 7. Richesson RL, Krischer J. Data standards in clinical research: gaps, overlaps, challenges and future directions. J Am Med Inform Assoc. 2007;14:687–696. doi: 10.1197/jamia.M2470.

- 8. Embi PJ, Payne PR. Clinical research informatics: challenges, opportunities and definition for an emerging domain. J Am Med Inform Assoc. 2009;16:316–327. doi: 10.1197/jamia.M3005.

- 9. Hays RD, Morales LS. The RAND-36 measure of health-related quality of life. Ann Med. 2001;33:350–357. doi: 10.3109/07853890109002089.

- 10. Cella D, Yount S, Rothrock N, Gershon R, Cook K, Reeve B, Ader D, Fries JF, Bruce B, Rose M. The Patient-Reported Outcomes Measurement Information System (PROMIS): progress of an NIH Roadmap cooperative group during its first two years. Med Care. 2007;45:S3–S11. doi: 10.1097/01.mlr.0000258615.42478.55.

- 11. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001;16:606–613. doi: 10.1046/j.1525-1497.2001.016009606.x.

- 12. Fletcher RW, Fletcher SW. Clinical Epidemiology: The Essentials. 4th ed. Baltimore: Lippincott Williams and Wilkins; 2005.

- 13. Nelson DE, Holtzman D, Bolen J, Stanwyck CA, Mack KA. Reliability and validity of measures from the Behavioral Risk Factor Surveillance System (BRFSS). Soz Praventivmed. 2001;46(Suppl 1):S3–S42.

- 14. Charlson ME, Pompei P, Ales KL, MacKenzie CR. A new method of classifying prognostic comorbidity in longitudinal studies: development and validation. J Chronic Dis. 1987;40:373–383. doi: 10.1016/0021-9681(87)90171-8.

- 15. Radloff LS. The CES-D scale: a self-report depression scale for research in the general population. Applied Psychological Measurement. 1977;1:385–401.

- 16. Corrigan JD. Development of a scale for assessment of agitation following traumatic brain injury. J Clin Exp Neuropsychol. 1989;11:261–277. doi: 10.1080/01688638908400888.

- 17. Mahoney FI, Barthel DW. Functional evaluation: the Barthel Index. Md State Med J. 1965;14:61–65.

- 18. Hamilton M. A rating scale for depression. J Neurol Neurosurg Psychiatry. 1960;23:56–62. doi: 10.1136/jnnp.23.1.56.

- 19. Moser RP, Hesse BW, Shaikh AR, Courtney P, Morgan G, Augustson E, Kobrin S, Levin KY, Helba C, Garner D, Dunn M, Coa K. Grid-enabled measures: using Science 2.0 to standardize measures and share data. Am J Prev Med. 2011;40:S134–S143. doi: 10.1016/j.amepre.2011.01.004.

- 20. The Clinical Data Acquisition Standards Harmonization (CDASH) Standard. doi: 10.4103/2229-3485.167101. Available at http://www.cdisc.org/cdash.

- 21. The National Cancer Institute Cancer Data Standards Registry and Repository (caDSR). Available at https://cabig.nci.nih.gov/concepts/caDSR.