Abstract

In synchronous brain-computer interface (BCI) paradigms that use visual cues to direct the user, the neural signals extracted by the computer reflect voluntary modulation as well as evoked responses. In these paradigms, the evoked potential is often dismissed as a source of artifact. In this paper, we put forth the hypothesis that cue priming, as a mechanism for attentional gating, is predictive of motor imagery performance, and thus a viable option for self-paced (asynchronous) BCI applications. We approximate attention by the amplitude features of visually evoked potentials (VEPs) found using two methods: trial matching to an average VEP template, and component matching to a VEP template defined using independent component analysis (ICA). Templates were used to rank trials that display high vs. low levels of fixation. Our results show that subject fixation, measured by VEP response, fails as a predictor of successful motor-imagery task completion. The implications for the BCI community and the possibilities for alternative cueing methods are given in the conclusions.

I. INTRODUCTION

Motor imagery is a specific type of mental rehearsal applied for BCI use, in which the intention of the subject is determined by their imagination of movement of a specific part of the body (e.g., feet, arms, or tongue). During motor imagery, the voluntary modulations of the sensorimotor rhythms in the α (8–12 Hz) and β (18–25 Hz) ranges are the target signals to be extracted and interpreted. Visually evoked potentials (VEPs) are also present in the scalp electroencephalogram (EEG) as a result of the cue, which is designed to prompt a response from the user. In motor imagery tasks, the evoked response may be considered a source of artifact and removed or ignored in further processing.

The majority of motor imagery BCIs are defined as synchronous; the pace is set by the computer, and thus the timing of imagery is known by the classification algorithm. Because of the nature of BCI research and application, there are few overt cues about the attentional state of the user. To move towards the goal of a self-paced BCI, an additional control signal is needed from the subject.

In this study, we seek to infer a user's intent to perform motor imagery from their attentiveness to a pre-task visual cue. Visual attention is controlled by a distributed network of cortical and subcortical areas which act to provide “bias signals” that enhance or suppress the responses to visual stimuli [1]. We focus on two components of the VEP for our assessment of attention: the N2 and the P3. The N2 is a negative-going wave that peaks around 200 ms and comprises multiple components. The N2c component over posterior areas is evident when a stimulus must be identified for classification into multiple target categories [2]. This component is modulated by conscious perception through an increase in the negativity of the N2 peak [3]. The P3, or P300, is a positive-going wave with a maximum over midline centro-parietal regions. Known as an “oddball response”, it has amplitude inversely proportional to the frequency of the target stimuli. Importantly for our case, the latency of this peak is negatively correlated with the level of attention [2].

We know that as conscious perception of a stimulus changes, the response recorded at the level of the scalp also changes [2], [3], [4]. We seek to ask two basic questions:

1) Is there a correlation between the distribution of evoked responses and the production of sensorimotor rhythm modulation to control a motor imagery task correctly?

2) Can we apply some EEG signature of visual attention to the gating of a BCI paradigm reliably in real time?

To address the first question, we must establish a template that is characteristic of a “good” VEP. We attempt two different methods of template building, and assess two different components of the VEP for indications of consistent correlations between VEP features and motor imagery performance. The second question is more difficult to answer because it requires the single-shot identification of trials corresponding to a template, which is hard to do with such highly variable data. The answer to this question is critical for assessing whether this type of visual gate makes it possible for a BCI user to self-pace the system.

II. METHODS

A. Experimental Setup

A commercial EEG recording system (Guger Technologies, www.gtec.at) was used to acquire data from subjects. Data were sampled at 256 Hz and bandpass filtered at 0.1–30 Hz. Data were recorded using the Simulink environment in MATLAB and stored on a Dell Latitude E6400 notebook computer running Windows XP. Each subject was seated comfortably in a chair facing an LCD monitor that displayed cueing and feedback information. The experimental protocol was approved by the Institutional Review Board of Penn State University.

B. BCI Paradigm

Nine subjects, all male, aged 18–37, participated in a cue-paced, one-dimensional center-out task. Channels FC3, FC4, C5, C3, C1, C2, C4, C6, CP3, CP4, P5, P3, P1, P2, P4, P6, PO3, and PO4 were recorded, in addition to three electrooculogram (EOG) electrodes placed lateral to the left and right eyes as well as just above the nasion. All channels were referenced to linked earlobes, and ground was placed at FPz. Each subject performed four sessions over a two-week period. Each session lasted approximately 1.5 hours.

During each session, the subject performed five runs of 60 trials each, divided equally between left, right, and no-target cues, which were presented in a randomized sequence. The first run, from here on called the training run, was used to train the classifier so that the remaining four testing runs could be used to give feedback to the subject as they performed the task. During the motor imagery tasks, the subject was cued by an arrow pointing in the left or right direction. After observing the cue, the subjects were instructed to perform an object-oriented grasping imagery task for the arm corresponding to the direction of the displayed arrow. Feedback and performance on the task were determined using a linear group-mean-based classifier described previously [5]. Over the four sessions, each subject completed 16 test runs comprising 960 trials, with 320 trials per cue type. The data from the 320 trials for each of the left and right cues were analyzed offline using the methods described below.

C. Preprocessing

Artifact reduction was accomplished by linear regression, as detailed in [6]. This least-squares method assumes the linear superposition of neural source and artifact data to produce the measured signal. Assuming the independence of the artifact sources and the neural sources, training data can be used to find a weight matrix, which can be utilized in online BCI operation to remove eye-related noise from the recorded signal.
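The regression step can be illustrated with a minimal sketch. It assumes the recorded EEG is the sum of a neural signal and a weighted copy of the EOG channels, and estimates the weights by ordinary least squares. The function names, array shapes, and synthetic data are illustrative assumptions and are not taken from [6].

```python
import numpy as np

def fit_eog_weights(eeg, eog):
    """Least-squares weights b such that the artifact part of eeg is eog @ b.

    eeg: (n_samples, n_eeg_channels), eog: (n_samples, n_eog_channels).
    """
    b, *_ = np.linalg.lstsq(eog, eeg, rcond=None)
    return b  # shape (n_eog_channels, n_eeg_channels)

def remove_eog(eeg, eog, b):
    """Subtract the EOG contribution predicted by the fitted weights."""
    return eeg - eog @ b

# Synthetic demonstration (all values are made up for illustration).
rng = np.random.default_rng(0)
n_samples = 5000
neural = rng.standard_normal((n_samples, 4))        # hypothetical neural sources
eog = 10.0 * rng.standard_normal((n_samples, 2))    # hypothetical EOG channels
true_b = np.array([[0.30, 0.20, 0.10, 0.05],
                   [0.10, 0.20, 0.30, 0.40]])
recorded = neural + eog @ true_b                    # linear superposition

b_hat = fit_eog_weights(recorded, eog)              # fit on "training" data
cleaned = remove_eog(recorded, eog, b_hat)          # apply the fixed weights
```

Because the weights are fixed after training, applying them online costs only one matrix product per block of samples.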

D. VEP Template Building

Using the data recorded during a one-dimensional cursor control task with feedback, we sought to quantify subject fixation by performing correlation of each trial with a VEP typical of attentive fixation behavior. We defined this evoked potential using two different techniques: averaging over trials, and component separation through Independent Component Analysis (ICA).

1) Averaging of VEPs

The first template for a “representative” VEP was developed by averaging over trials corresponding to each cue type. The result is an average of the varied examples of the VEP; this approach is computationally efficient but loses some of the most critical trial-specific information through averaging. Average templates were established for the channels P3, P4, PO3, and PO4. The times of peaks N2 and P3 in these templates were marked for further processing.
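A minimal sketch of the averaging and peak-marking step follows. It assumes cue-locked epochs for one channel and one cue type, stored as a trials-by-samples array with time zero at cue onset. The search windows (150–250 ms for N2, 250–450 ms for P3) and the synthetic waveform are assumptions chosen for illustration, not values reported in this paper.

```python
import numpy as np

FS = 256  # Hz, sampling rate given in the experimental setup

def average_template(epochs):
    """Average cue-locked epochs (n_trials, n_samples) into one template."""
    return epochs.mean(axis=0)

def mark_peak(template, fs, window_s, polarity):
    """Return the sample index of the extreme value inside a time window.

    polarity "neg" finds the minimum (e.g. N2), "pos" the maximum (e.g. P3).
    """
    start, stop = (int(round(t * fs)) for t in window_s)
    segment = template[start:stop]
    idx = np.argmin(segment) if polarity == "neg" else np.argmax(segment)
    return start + int(idx)

# Synthetic epochs: a negative wave near 200 ms and a positive wave near 350 ms.
rng = np.random.default_rng(1)
t = np.arange(int(0.6 * FS)) / FS
vep = (-4.0 * np.exp(-((t - 0.20) / 0.03) ** 2)
       + 6.0 * np.exp(-((t - 0.35) / 0.05) ** 2))
epochs = vep + 2.0 * rng.standard_normal((100, t.size))

template = average_template(epochs)
n2_idx = mark_peak(template, FS, (0.15, 0.25), "neg")  # assumed N2 window
p3_idx = mark_peak(template, FS, (0.25, 0.45), "pos")  # assumed P3 window
n2_time, p3_time = n2_idx / FS, p3_idx / FS            # peak latencies in seconds
```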

2) VEP component identification through ICA

The second method used to extract evoked potentials from the EEG was to perform blind source separation with ICA. The data input to the ICA algorithm were demeaned and detrended. The FastICA algorithm, described by Hyvärinen [7] and implemented in the software EEGLAB [8], was used for this purpose. This algorithm is a computationally efficient way to separate multichannel data into maximally independent components through a linear unmixing. With this transformation, we can locate components of the EEG that spatially and temporally correspond to a VEP. Two components were manually selected: an N2 component having an occipito-temporal locus and a negative-going wave 200 ms following the cue, and a P3 component having a medial centro-parietal locus and broadly peaking in the 300–400 ms range.

E. Trial Ranking Through Template Matching

Left- and right-cued trials were ranked for each subject by template (average/ICA) and VEP feature (N2/P3). In the case of average template matching, individual trials of the original data in channels P3, P4, PO3, and PO4 were ranked by amplitude at the N2 and P3 peaks as defined in their associated templates. In the case of ICA component matching, the independent components in each trial, following unmixing by the transformation matrix, were matched to the VEP component template. Following each of the methods of trial ranking, the trials were grouped into a high-amplitude bin (the top 33% of the trials), deemed the “good fixation” group, and a low-amplitude bin (the bottom 33% of the trials), deemed the “bad fixation” group. The remaining trials were excluded from further analysis.
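The grouping for the average template case can be sketched as below. The sketch assumes that "high amplitude" means the strongest peak in the direction of the component, so that trials with the most negative values at the N2 latency fall into the “good fixation” group, as in the caption of Fig. 3. The function name, the sign convention, and the synthetic data are illustrative assumptions.

```python
import numpy as np

def split_by_amplitude(epochs, peak_idx, polarity, fraction=0.33):
    """Split trials into good/bad fixation groups by amplitude at one sample.

    epochs: (n_trials, n_samples); peak_idx: sample index of the template peak.
    Returns index arrays (good, bad); the middle trials are left out.
    """
    amplitudes = epochs[:, peak_idx]
    # Score trials so that a larger score always means a stronger peak:
    # flip the sign for a negative-going peak such as N2.
    score = -amplitudes if polarity == "neg" else amplitudes
    order = np.argsort(score)               # ascending: weakest peak first
    n_group = int(len(order) * fraction)
    bad = order[:n_group]                   # bottom 33%: "bad fixation"
    good = order[-n_group:]                 # top 33%: "good fixation"
    return good, bad

# Synthetic example: 320 trials, as for one cue type in this study.
rng = np.random.default_rng(2)
epochs = rng.standard_normal((320, 154))
n2_idx = 51  # hypothetical N2 latency: sample nearest 200 ms at 256 Hz
good, bad = split_by_amplitude(epochs, n2_idx, polarity="neg")
```

The same function applies to unmixed ICA component time courses in place of channel data, with the peak index taken from the component template.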

For each of these two templates and two ranking criteria, we sought to assess how well the subject performed on the trial (approximated by the feedback at the end of that trial) as a function of matching to the VEP template. Because the dependent variable, the feedback, was non-normally distributed, we chose a non-parametric test of statistical inference. To determine whether the difference in the means was significantly greater than random fluctuation, we applied a permutation test to the data. We first computed the test statistic Tobs, the difference between the means of the feedback of the two fixation groups. Then we shuffled the labels of the data and found the difference in the means Tk for each permutation k, repeating the permutation procedure 1000 times. Two-sided p-values were computed for each combination of cues, templates, features, and channels/components as in (1). Significant p-values were identified at the α/2 = .025 level.

| (1) |

Here, H is the Heaviside function. The process of trial ranking and permutation testing for significance is shown for a generic case of template and feature in Fig. 1.
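The permutation procedure can be sketched as follows. The exact form of (1) is an assumption here: the p-value is taken as the fraction of the 1000 permuted differences Tk that are at least as large as Tobs, written with the Heaviside function as the mean of H(Tk − Tobs) with H(0) = 1. The function name and the synthetic feedback values are hypothetical.

```python
import numpy as np

def permutation_test(good, bad, n_perm=1000, seed=0):
    """Permutation test on the difference in mean feedback between two groups.

    Returns (t_obs, p), where p is the fraction of label shuffles whose mean
    difference is at least as large as the observed one (assumed form of (1)).
    """
    rng = np.random.default_rng(seed)
    good = np.asarray(good, dtype=float)
    bad = np.asarray(bad, dtype=float)
    t_obs = good.mean() - bad.mean()
    pooled = np.concatenate([good, bad])
    n_good = good.size
    t_perm = np.empty(n_perm)
    for k in range(n_perm):
        shuffled = rng.permutation(pooled)   # shuffle the group labels
        t_perm[k] = shuffled[:n_good].mean() - shuffled[n_good:].mean()
    # Heaviside step with H(0) = 1 counts permutations with T_k >= T_obs.
    p = float(np.mean(np.heaviside(t_perm - t_obs, 1.0)))
    return t_obs, p

# Synthetic feedback values (made up): one pair of groups with a real
# difference in means, and one pair drawn from the same distribution.
rng = np.random.default_rng(3)
t_diff, p_diff = permutation_test(rng.normal(1.0, 1.0, 100),
                                  rng.normal(0.0, 1.0, 100))
t_same, p_same = permutation_test(rng.normal(0.0, 1.0, 100),
                                  rng.normal(0.0, 1.0, 100))
```

Under this assumed form, a value below .025 indicates better feedback in the “good fixation” group, while a value above .975 would indicate the opposite direction.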

Fig. 1. This flowchart shows an example of sorting right-cue trials by template matching. The procedure was repeated for both cue types (right, left), both templates (average, ICA), and both VEP waveform features (N2/P3 amplitude).

III. RESULTS

A. BCI Performance

As reported previously [5], the operation of the BCI was successful for eight of the nine subjects, with accuracies at classifying left vs. right tasks ranging from 64.2% to 97.8%. Thus, the feedback was a good indication of subject performance on the task. Subject 2, with a 49.5% success rate, was omitted from further analysis.

B. Template Building

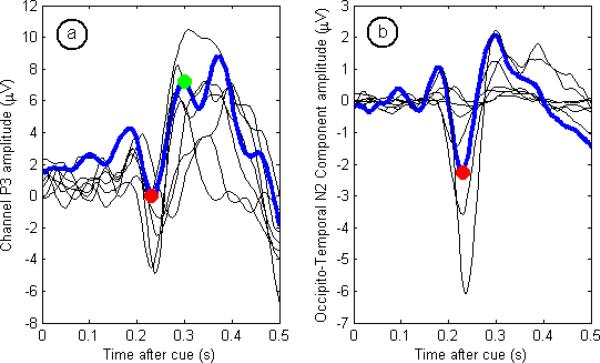

For each of the subjects, average templates were built separately for channels P3, P4, PO3, and PO4, and further specialized by left or right cue type. This resulted in eight different average templates, of which the one corresponding to trials with a right cue in channel P3 can be seen in Fig. 2a. For each of these templates, the times of peaks N2 and P3 were marked. Templates were also generated through ICA decomposition, although only two components were retained for each cue type from the decomposition of all 18 channels. The right occipito-temporal (N2) ICA template for each subject is shown in Fig. 2b.

Fig. 2. Templates for eight subjects, with relevant peaks and intervals highlighted for subject 7. The red markers indicate the N2 peak, and the green markers the P3 peak. The left figure shows an example template from the averaging procedure. In this case, the template was calculated by averaging all the trials in which the subject was cued by a right arrow, recorded from channel P3. The right figure shows the VEP source identified by ICA as having an occipito-temporal origin with a pronounced N2 peak.

C. Template Matching and Significance of Fixation

For the average template, channels P3, P4, PO3, and PO4 were matched to their respective templates using the two different VEP features of the template. The result of one such matching procedure, shown in Fig. 3a, gives the means and standard deviations of the right trials from channel P3 belonging to the “good fixation” and “bad fixation” groups, sorted by their amplitude at the time of the N2 peak. Similarly, Fig. 3b shows the means and standard deviations of occipito-temporal trial components with high and low matching to that ICA template.

Fig. 3. Trial sorting by matching to template. (a) Trials sorted by amplitude of the N2 wave (with range outlined in the dashed grey box) of the average template, channel P3. (b) Unmixed trial components sorted by peak N2 with latency given by the occipito-temporal ICA component template. In both cases, the mean and standard deviation for the 33% of trials with the negative-most amplitude in these ranges are shown in blue, signifying good fixation, and the mean and standard deviation for the 33% of trials with the positive-most amplitude are shown in red.

Permutation tests performed on the data as described in the methods failed to find consistent VEP features among subjects that predicted success at performing motor imagery in the following seconds. Table I shows the results of the attention-based grouping of right cues. For the average P4 template of subject 1, there was a significant increase in participant performance on right-cued trials predicted by amplitude of the N2 peak. Besides this finding, no other significant correlations between template matching and trial feedback were found.

TABLE I. Results of the permutation test for each subject from trials with right cues.

| Template | Average | Average | Average | Average | Average | Average | Average | Average | ICA | ICA |
|---|---|---|---|---|---|---|---|---|---|---|
| Feature | N2 | N2 | N2 | N2 | P3 | P3 | P3 | P3 | N2 | P3 |
| Chan/Comp | P3 | P4 | PO3 | PO4 | P3 | P4 | PO3 | PO4 | N2 | P3 |
| Subject 1 | 0.691 | 0.021* | 0.785 | 0.185 | 0.999 | 0.860 | 0.515 | 0.774 | 0.460 | 0.784 |
| Subject 2 | 0.314 | 0.296 | 0.249 | 0.790 | 0.196 | 0.363 | 0.470 | 0.533 | 0.294 | 0.827 |
| Subject 3 | 0.472 | 0.552 | 0.346 | 0.750 | 0.775 | 0.415 | 0.890 | 0.945 | 0.380 | 0.130 |
| Subject 4 | 0.795 | 0.786 | 0.883 | 0.846 | 0.794 | 0.948 | 0.927 | 0.275 | 0.752 | 0.025 |
| Subject 5 | 0.981 | 0.817 | 0.255 | 0.419 | 0.688 | 0.704 | 0.380 | 0.363 | 0.876 | 0.698 |
| Subject 6 | 0.447 | 0.572 | 0.475 | 0.791 | 0.599 | 0.415 | 0.407 | 0.630 | 0.724 | 0.924 |
| Subject 7 | 0.535 | 0.129 | 0.532 | 0.229 | 0.584 | 0.460 | 0.620 | 0.627 | 0.684 | 0.036 |
| Subject 8 | 0.112 | 0.040 | 0.026 | 0.109 | 0.627 | 0.650 | 0.777 | 0.559 | 0.384 | 0.161 |

The values in the 10 columns are the p-values from the permutation test, which compares the difference in mean feedback between the original “good fixation” and “bad fixation” trials against the mean differences for the 1000 permuted groups. An asterisk indicates a significant improvement in BCI performance associated with good fixation.

IV. CONCLUSION

Fixation on a visual stimulus, representing a momentary period of visual attention, and the subsequent evoked potential are not reliably predictive of performance in a motor-imagery BCI task. This finding has two implications for BCI applications.

First, it implies that fixation is not as critical to the success of the motor imagery paradigm as previously thought, an assumption reflected in synchronous designs that use some form of fixation to secure attention. While it would require eye tracking to precisely monitor subject fixation, we show that the variation in EEG signatures of fixation does not correlate with the outcome of the trial. This is critical when considering the practicality of BCIs for real-world use, where it is undesirable to completely monopolize patient attention, leaving them unable to interact with other parts of their environment.

Unfortunately, it also means that this type of visual probing for subject attention is not a viable option for creating an asynchronous BCI system as we have envisioned it with the current design. As there is no concurrent change in the user's ability to perform the task as their state of fixation changes, using the VEP as a signal of the user's attention level is unlikely to be practical. However, it does leave open the possibility of probing for attention through other forms of visual stimulation, such as using more intense stimuli for a more robust response, or presenting the stimuli in an irregular fashion to increase the amplitude of the P300 potential.

The second question posed in our introduction goes unanswered; although these procedures for assessing attention and gating subsequent motor imagery can be implemented online, the high variability of the background EEG data makes single-shot identification of attention a difficult task. Possibilities for reducing the background signal, and therefore improving the signal-to-noise ratio of the VEP, include filtering of the significant alpha component, which, when phase-locked to the stimulus, could produce substantial bias, as well as cueing for attention with different variations of visual and auditory stimuli.

Acknowledgments

This work was supported by NIH grant K25NS061001 (MK).

REFERENCES

- [1] Hillyard SA, Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proceedings of the National Academy of Sciences. 1998;95:781–787. doi: 10.1073/pnas.95.3.781.

- [2] Niedermeyer E, da Silva FL. Niedermeyer's Electroencephalography: Basic Principles, Clinical Applications, and Related Fields. Lippincott Williams & Wilkins; 1999.

- [3] Railo H, Koivisto M, Revonsuo A. Tracking the processes behind conscious perception: A review of event-related potential correlates of visual consciousness. Consciousness and Cognition. 2011;20(3):972–983. doi: 10.1016/j.concog.2011.03.019.

- [4] Koivisto M, Revonsuo A. Event-related brain potential correlates of visual awareness. Neuroscience & Biobehavioral Reviews. 2010;34(6):922–934. doi: 10.1016/j.neubiorev.2009.12.002.

- [5] Geronimo A, Schiff S, Kamrunnahar M. A simple generative model applied to motor-imagery brain-computer interfacing. IEEE EMBS; Cancun, Mexico; Apr 2011.

- [6] Schlögl A, Keinrath C, Zimmermann D, Scherer R, Leeb R, Pfurtscheller G. A fully automated correction method of EOG artifacts in EEG recordings. Clinical Neurophysiology. 2007;118(1):98–104. doi: 10.1016/j.clinph.2006.09.003.

- [7] Hyvärinen A. Fast and robust fixed-point algorithms for independent component analysis. IEEE Transactions on Neural Networks. 1999;10(3):626–634. doi: 10.1109/72.761722.

- [8] Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods. 2004;134(1):9–21. doi: 10.1016/j.jneumeth.2003.10.009.