Abstract

Listeners’ perception of acoustically presented speech is constrained by many different sources of information that arise from other sensory modalities and from more abstract, higher-level language context. An open question is how perceptual processes are influenced by, and interact with, these other sources of information. In this study, we use fMRI to examine the effect of the meaning of a preceding sentence fragment on the categorization of two possible target words that differ in an acoustic phonetic feature of the initial consonant, voice-onset time (VOT). Specifically, we manipulate the bias of the sentence context (biased, neutral) and the target type (ambiguous, unambiguous). Our results show that an interaction between these two factors emerged in a cluster in temporal cortex encompassing the left middle temporal gyrus and the superior temporal gyrus. The locus and pattern of this interaction support an interactive view of speech processing and suggest that the quality of the input and the potential bias of the context interact to modulate neural activation patterns.

Keywords: phonetic category perception, fMRI, biasing context, voice onset time, top-down processing

In order to comprehend the intended message of a speaker, listeners must transform the acoustic input into meaningful sounds, words, and concepts. In some cases, the acoustic information may be degraded or ambiguous, which makes it difficult for the listener to correctly identify the speech content. Other sources of information are often available and may be used to resolve the ambiguity in the acoustic speech signal. A range of factors, from articulatory gestures to more abstract linguistic context, has been shown to constrain the interpretation of ambiguous speech (Borsky, Tuller, & Shapiro, 1998; Ganong, 1980; McGurk & MacDonald, 1976). Understanding how these various factors influence and constrain perception is crucial to developing an ecologically valid understanding of the mechanisms involved in speech perception.

Until recently, functional neuroimaging studies have mainly focused on the constraints placed on speech perception processes by other sources of sensory information. Results of studies examining the effects of visually presented articulatory gestures on acoustic phonetic perception suggest that this visual information affects speech processing at early stages of the speech processing stream (Calvert, 2001; Hertrich, Mathiak, Lutzenberger, & Ackermann, 2009; Pekkola et al., 2005). However, there is debate about whether more abstract linguistic properties such as meaning may also affect early stages of perception or whether such effects are due to post-perceptual processes (e.g., executive processes; Norris, McQueen, & Cutler, 2000).

The effect of the lexical status of an auditory stimulus on phonetic perception has played an especially important role in this debate (McClelland, Mirman, & Holt, 2006; McQueen, Norris, & Cutler, 2006; Norris, McQueen, & Cutler, 2000, 2003). The “Lexical Effect,” or “Ganong Effect,” is a well-established behavioral effect that demonstrates how lexical bias can affect acoustic phonetic categorization (Fox, 1984; Ganong, 1980; Miller & Dexter, 1988). When an ambiguous sound between /k/ and /g/ is combined with a syllable such that one interpretation forms a real word and the other a nonword (e.g., ‘kiss’ vs. ‘giss’), the initial sound is more likely to be perceived as belonging to the phonetic category that forms the real word (Ganong, 1980).

Most models of speech perception assume a hierarchically organized system in which acoustic information is mapped in stages onto phonetic categories and abstract phonological units, which are subsequently mapped onto lexical representations and ultimately onto semantic meaning and abstract concepts (Gaskell & Marslen-Wilson, 1997; McClelland & Elman, 1986; Norris et al., 2000; Protopapas, 1999). However, models differ in the degree to which higher levels of the hierarchy interact with lower levels. Two influential and competing hierarchical models, MERGE (Norris et al., 2000) and TRACE (McClelland & Elman, 1986), have accounted for the lexical effect, although they do so through different mechanisms.

MERGE is a strictly feedforward model that accounts for the lexical effect through post-perceptual decision processes: lexical and pre-lexical information are merged at a phonetic decision stage during categorization, without feedback from the lexical level to pre-lexical processing (McQueen et al., 2006; Norris et al., 2000). In contrast, TRACE is an interactive model that accounts for the lexical effect through the inherent bidirectional interactions between lexical and pre-lexical levels (McClelland & Elman, 1986). A third computational approach, predictive coding, has recently served as a framework for speech perception and also suggests that top-down processes can influence lower-level processes (Gagnepain, Henson, & Davis, 2012; Sohoglu, Peelle, Carlyon, & Davis, 2012; Spratling, 2008; Wild, Davis, & Johnsrude, 2012). In this view, predictions about incoming sensory input modulate subsequent responses to that input.

Myers and Blumstein (2008) used fMRI in an attempt to distinguish between MERGE and TRACE by examining the neural structures and pattern of activation associated with the lexical effect. Both temporal and frontal structures have been implicated in acoustic phonetic categorization, with responses in temporal structures attributed to sensory-based acoustic phonetic processing and those in frontal structures attributed to executive processes (Binder, Liebenthal, Possing, Medler, & Ward, 2004; Myers, Blumstein, Walsh, & Eliassen, 2011; Tsunada, Lee, & Cohen, 2011).

Such a strict dichotomy between structure and function, i.e., temporal/sensory processes and frontal/executive processes, may be too simplistic. Indeed, Noppeney and Price (2004), using a semantic judgment task, have suggested that temporal lobe structures are implicated in executive processes as well. Nonetheless, most speech perception findings have been interpreted within the framework of the temporal/sensory and frontal/executive dichotomy. In particular, Myers and Blumstein (2008) reasoned that modulation of activation in frontal cortex would be consistent with a decision-making component of the lexical effect, whereas changes in activation in temporal cortex would suggest a perceptual component. Their results showed that, in addition to activation changes in frontal structures, significant clusters emerged in both the left and right superior temporal gyri (STG) that were sensitive to lexically biased shifts in phonetic category for the ambiguous stimulus. This modulation of activation in the STG was taken as evidence against a purely post-perceptual account of lexical influences on pre-lexical processing and as evidence for direct interactions between lexical and pre-lexical processes.

Lexical influences on phonetic categorization have also been examined with EEG and MEG (Gow, Segawa, Ahlfors, & Lin, 2008). In that study, Gow et al. (2008) performed a Granger causality analysis to determine whether lexical information influenced the categorization of a /s/-/∫/ continuum. They found that the timing of lexical influences on posterior superior temporal gyrus activation coincided with the timing of lexical processing and, like Myers and Blumstein (2008), concluded that higher-level lexical processes modulate lower-level phonetic perception.
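Granger causality asks whether the past of one signal improves the prediction of another signal beyond what that signal's own past already provides. As a rough illustration of the idea only, the sketch below implements a textbook bivariate version using ordinary least squares, a hypothetical lag order `p`, and simulated signals. It is not a reconstruction of the analysis used by Gow et al. (2008).

```python
import numpy as np

def lagged(series, p):
    """Columns are the series at lags 1..p; row i corresponds to time t = p + i."""
    n = len(series)
    return np.column_stack([series[p - k:n - k] for k in range(1, p + 1)])

def granger_f(x, y, p=2):
    """F statistic for the hypothesis that past x improves prediction of y (bivariate OLS)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    target = y[p:]
    restricted = np.column_stack([np.ones(len(target)), lagged(y, p)])  # y's own past only
    full = np.column_stack([restricted, lagged(x, p)])                  # plus x's past

    def rss(design):
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ beta
        return resid @ resid

    rss_r, rss_f = rss(restricted), rss(full)
    df_num, df_den = p, len(target) - full.shape[1]
    return ((rss_r - rss_f) / df_num) / (rss_f / df_den)

# Simulated signals: y follows x with a one-sample delay plus a little noise
rng = np.random.default_rng(0)
x = rng.standard_normal(500)
y = np.zeros(500)
y[1:] = 0.8 * x[:-1]
y += 0.2 * rng.standard_normal(500)
print(granger_f(x, y), granger_f(y, x))
```

Because only the past of `x` carries information about the future of `y` in this simulation, the x → y statistic is large while the y → x statistic stays near its null value.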

Sentence context may place qualitatively different constraints on acoustic phonetic perception than does the lexical status of an acoustically presented stimulus (Connine, 1987). An open question is whether these “higher levels,” or more abstract sources of information, might also affect phonetic perception at early stages of pre-lexical processing. In a behavioral study, Borsky et al. (1998) examined the categorization of a minimal word pair in different sentence contexts, where the distinguishing feature between the two words was the VOT of the initial consonant. They showed that sentence context affected listeners’ perception of a boundary-value, and hence ambiguous, acoustic phonetic distinction (Borsky et al., 1998). In contrast to the lexical effect, where there is only one valid lexical choice, the sentence context effect biased the categorization between two valid lexical entries. As a consequence, changes in phonetic perception were mediated by the processing of sentence meaning.

Several recent studies have investigated the neural systems that underlie the impact of sentence information on perception by orthogonally manipulating sentence context and the intelligibility of the speech signal and examining the effects on sentence comprehension. Sentence context was examined by varying the cloze probability of the last word of the sentence (Obleser & Kotz, 2009), by varying the syntactic complexity of the sentence (Obleser, Meyer, & Friederici, 2011), or by comparing anomalous and non-anomalous sentences (Davis, Ford, Kherif, & Johnsrude, 2011). To date, most studies have manipulated intelligibility with a global distortion that affects the entire acoustic stimulus, such as noise-vocoded speech (Davis et al., 2011; Friederici, Kotz, Scott, & Obleser, 2010; Obleser & Kotz, 2009, 2011; Obleser et al., 2011). Interactions between sentence context and intelligibility have resulted in modulation of activity in frontal and/or temporal regions (Davis et al., 2011; Friederici et al., 2010; Obleser & Kotz, 2011; Obleser et al., 2011). Two of these studies also examined the relative timing of the responses and found later responses in frontal than in temporal areas (Davis et al., 2011; Obleser & Kotz, 2011). However, interpretations of these similar findings differ. On the one hand, changes in temporal areas have been taken as evidence for the influence of higher-level processes on lower-level perceptual processes (Obleser & Kotz, 2009). On the other hand, earlier responses in temporal than in frontal areas have been used to argue for a bottom-up, feedforward-only view of speech processing (Davis et al., 2011).

Most detailed computational accounts of speech processing go only as far as lexical levels of representation and have not incorporated higher levels of linguistic processing such as sentence meaning. The predictions of these models can, however, be extended to these levels. Top-down computational models of speech perception, including interactive models such as TRACE (McClelland & Elman, 1986) and predictive coding models (Gagnepain et al., 2012; Sohoglu et al., 2012), would predict that the processing of sentence meaning trickles down to and influences lower levels of representation. In this way, sentence meaning would interact with lower levels of processing and affect acoustic phonetic processing (McClelland & Elman, 1986; Mirman, McClelland, & Holt, 2006). Such a functional architecture predicts that neural areas engaged in the processing of phonetic information, such as temporal structures, should be modulated by the bias of the sentence context and hence should show differential influences of sentence bias on an ambiguous versus an unambiguous lexical target.

The aim of the current study is to investigate the potential role of top-down effects, specifically sentence context, on speech perception using fMRI. However, rather than focusing on global distortions of the speech signal and examining their effects on sentence comprehension, we acoustically manipulated a phonetic feature (VOT) to produce ambiguous and unambiguous target word stimuli and investigated the effects of a preceding sentence context on phonetic perception. In this way, it is possible to examine how the effect of sentence context varies as a function of the ambiguity of the acoustic signal and to consider the extent to which higher-level sources of information (meaning/sentence context) influence and potentially interact with lower-level (acoustic-phonetic) perceptual processes.

To this end, we will use fMRI to examine those neural areas that show an interaction between “top-down” sentence context information (neutral vs. biased) and phonetic perception (ambiguous vs. unambiguous). The question of interest is whether such sentence context information modulates the perception of acoustic phonetic category structure ([g]-[k]) of an ambiguous and unambiguous target word and what neural areas are recruited under such conditions. Specifically, listeners will hear one of three sentence fragment contexts (goat-biased, coat-biased, and neutral), which will be paired with one of three targets that differ only in the acoustic phonetic feature of the initial consonant, voice-onset time (ambiguous, unambiguous ‘coat’, unambiguous ‘goat’) and will be asked to categorize the target stimulus as either ‘coat’ or ‘goat’.

Method

Materials

Sentence Fragments

In creating the sentence fragments, it was necessary to ensure that the selected verbs biased listeners either toward ‘coat’ or toward ‘goat’ and, in the case of the neutral sentences, favored neither word. To that end, in two separate pilot studies, each with 8 participants, a total of 112 verbs were presented in sentences of the form ‘he [verb] the ___’ in a sentence completion task. Participants were instructed to read each sentence and complete it with either “goat” or “coat”, whichever word they considered more appropriate for the sentence context. From these, 24 sentences that were completed with ‘coat’ 100% of the time were chosen for the coat-biased sentence fragment context condition, and 24 sentences that were completed with ‘goat’ 100% of the time were chosen for the goat-biased sentence fragment context condition (see Appendix). For the neutral sentence fragment context, 24 sentences were selected for which responses were, on average, 50% ‘goat’ and 50% ‘coat’ (see Appendix). Each sentence fragment used in the experiment was then digitally recorded four times by a male native speaker of English (PA). The recording judged by the experimenter (SG) to be the clearest production was selected for each fragment.
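The selection rule can be written as a small function. This is a sketch under assumptions: the `VerbNorm` record, the per-verb counts, the verbs ‘liked’ and ‘found’, and the `neutral_tolerance` parameter are hypothetical, since the text reports only the 100% criterion for the biased items and a 50/50 average for the neutral set.

```python
from dataclasses import dataclass

@dataclass
class VerbNorm:
    verb: str        # verb in the frame 'he ___ the ___'
    goat_count: int  # completions with 'goat'
    n_raters: int    # pilot participants who completed this frame

def assign_condition(norm, neutral_tolerance=0.0):
    """Map a verb's completion rate onto a context condition.

    neutral_tolerance (hypothetical) is how far from 50% 'goat' a single verb
    may fall and still be considered for the neutral set.
    """
    p_goat = norm.goat_count / norm.n_raters
    if p_goat == 1.0:
        return "goat-biased"
    if p_goat == 0.0:
        return "coat-biased"
    if abs(p_goat - 0.5) <= neutral_tolerance:
        return "neutral"
    return "unused"

def mean_goat_rate(norms):
    """Average proportion of 'goat' completions over a candidate set."""
    return sum(n.goat_count / n.n_raters for n in norms) / len(norms)

# Hypothetical pilot counts (8 raters per verb)
pilot = [VerbNorm("fed", 8, 8), VerbNorm("wore", 0, 8),
         VerbNorm("saw", 4, 8), VerbNorm("liked", 3, 8), VerbNorm("found", 5, 8)]
conditions = {n.verb: assign_condition(n, neutral_tolerance=0.125) for n in pilot}
print(conditions)
```

Checking the neutral set with `mean_goat_rate` mirrors the reported criterion, which constrains the average of the neutral items rather than each item individually.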

Target Stimuli

The same native English male speaker who produced the sentence fragments also produced multiple tokens of the words ‘coat’ and ‘goat’. From these, one ‘coat’ token and one ‘goat’ token were selected to create a nine-step voice-onset time (VOT) continuum. To this end, the onset (burst and transitions) of the ‘coat’ stimulus was appended to the vowel and final consonant of the ‘goat’ stimulus. To create the continuum, the aspiration noise of ‘coat’ was shortened in steps of approximately 10 ms while vowel pitch periods from ‘goat’ were added in corresponding steps of approximately 10 ms, starting after the first two transitional pitch periods. In this way, all stimuli on the continuum had a duration of 437 ms. To avoid introducing clicks and spectral discontinuities, the aspiration noise was excised from the middle of the aspiration interval. This procedure resulted in a VOT continuum ranging from 12 ms to 92 ms.
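A simplified sketch of the cross-splicing arithmetic follows. It assumes exact 10-ms steps (the study used approximately 10-ms steps), treats the signals as plain sample arrays, and ignores pitch-period alignment; the helper names, the sampling rate, and the synthetic signals are hypothetical. It shows why trimming aspiration and restoring an equal amount of voicing keeps every token the same length, and how nine equal 10-ms steps starting at 12 ms span 12 to 92 ms.

```python
import numpy as np

def nominal_vots(start_ms=12.0, step_ms=10.0, n_steps=9):
    """Nominal VOTs for an n-step continuum with equal steps (idealized)."""
    return [start_ms + i * step_ms for i in range(n_steps)]

def remove_middle(x, n):
    """Drop n samples from the middle of x, away from the burst and voicing edges."""
    start = (len(x) - n) // 2
    return np.concatenate([x[:start], x[start + n:]])

def continuum_token(burst, aspiration, vowel, max_cut, cut):
    """Hypothetical splice: 'coat' burst + trimmed aspiration + 'goat' vowel/coda.

    `vowel` holds the full 'goat' vowel and final consonant. The most 'coat'-like
    token (cut = 0) uses only vowel[max_cut:]; each sample of aspiration removed
    is replaced by one sample of voicing, so the total length stays constant.
    """
    if not 0 <= cut <= max_cut <= len(aspiration):
        raise ValueError("need 0 <= cut <= max_cut <= len(aspiration)")
    return np.concatenate([burst, remove_middle(aspiration, cut), vowel[max_cut - cut:]])

fs = 10_000                      # hypothetical sampling rate (Hz)
step = fs // 100                 # 10 ms in samples
rng = np.random.default_rng(0)
burst = rng.standard_normal(fs // 200)            # synthetic 5-ms burst
aspiration = rng.standard_normal(9 * step)        # synthetic 90-ms aspiration
vowel = np.sin(2 * np.pi * 120 * np.arange(4 * fs // 10) / fs)  # synthetic voicing
max_cut = 8 * step
tokens = [continuum_token(burst, aspiration, vowel, max_cut, k * step) for k in range(9)]
print(nominal_vots(), {len(t) for t in tokens})
```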

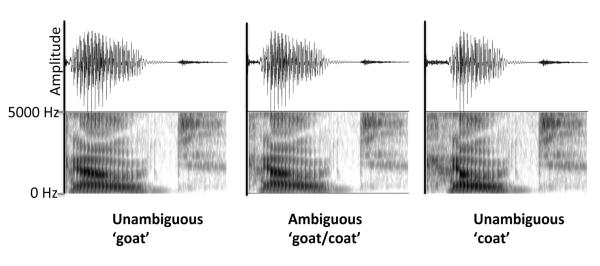

Three stimuli were selected from the continuum based on a pilot experiment with 10 participants: two good exemplars, one for [k] (VOT of 70 ms) and one for [g] (VOT of 21 ms), and one boundary-value stimulus (VOT of 40 ms) for which the phonetic identity of the initial stop consonant, and hence the word, was ambiguous (see Figure 1). Each of the three selected tokens was then appended to the sentence fragments of each of the three context types: coat-biased (e.g., he wore the _), goat-biased (e.g., he fed the _), and neutral (e.g., he saw the _). Twenty milliseconds of silence were added to the end of each of the 72 sentence fragments. The unambiguous ‘goat’ and ‘coat’ tokens were identified as ‘goat’ and ‘coat’, respectively, 100% of the time in all sentence contexts. For the ambiguous token, the percentage of ‘goat’ responses was 55% in the neutral sentence context, 60% in the goat-biased sentence context, and 45% in the coat-biased sentence context.

Figure 1.

Waveforms and spectrograms for the three target stimuli (unambiguous ‘goat’, unambiguous ‘coat’, and ambiguous ‘goat/coat’).

fMRI Experiment

Participants

Twenty-one right-handed native English speakers with normal hearing (11 male; mean age 24.4 years, SD = 3.4) participated in the study and were paid $25/hr. Three participants were excluded from the imaging analysis due to excessive movement during scanning, and one participant was excluded due to a headphone malfunction, leaving a total of 17 participants (9 male; mean age 24.5 years, SD = 3.6) whose data were used in both the behavioral and fMRI analyses.

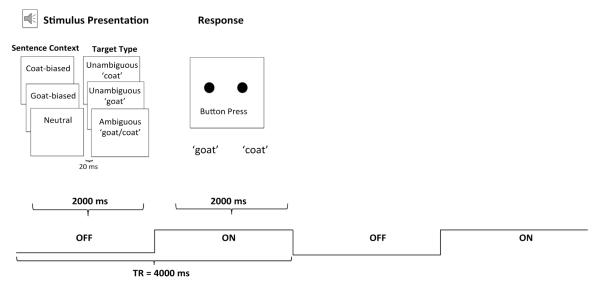

Behavioral Procedure

In each of 3 experimental runs, participants heard a sentence fragment from one of the three sentence fragment contexts, followed by either the ambiguous target or one of the two unambiguous targets. Each run included 72 pseudo-randomly ordered trials, with an equal number of trials (eight) for each pairing of Sentence Context (goat-biased, coat-biased, neutral) and target stimulus (unambiguous goat, unambiguous coat, and ambiguous goat/coat). A different random order of the pairings was used for each of the three runs. Figure 2 illustrates the experimental design.
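One way to generate such run orders is sketched below. It assumes an unconstrained shuffle with a different seed per run; the study's pseudo-randomization constraints are not reported here, and the condition labels and seeds are illustrative.

```python
import itertools
import random
from collections import Counter

CONTEXTS = ("goat-biased", "coat-biased", "neutral")
TARGETS = ("unambiguous goat", "unambiguous coat", "ambiguous")

def make_run(seed, trials_per_run=72):
    """Shuffle an equal number of every context x target pairing into one run."""
    pairings = list(itertools.product(CONTEXTS, TARGETS))
    reps, remainder = divmod(trials_per_run, len(pairings))
    if remainder:
        raise ValueError("trials_per_run must be a multiple of the number of pairings")
    trials = pairings * reps
    random.Random(seed).shuffle(trials)   # a different seed gives a different order
    return trials

runs = [make_run(seed) for seed in (1, 2, 3)]
print(len(runs[0]), len(Counter(runs[0])))  # 72 9
```

A real pseudo-random order would usually add constraints (for example, limiting immediate repeats of a pairing), which could be enforced by reshuffling until a run passes a check.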

Figure 2.

Illustration of experimental design.

Participants were instructed to press one button if they heard the word ‘goat’ and another if they heard the word ‘coat’ and to respond as quickly as possible without sacrificing accuracy. Word response and reaction time data were collected.

Scanning Protocol

MRI data were collected using a 3 Tesla Tim Trio scanner equipped with a 32-channel receiver head coil. For each participant, high-resolution anatomical scans were acquired for anatomical co-registration (TR = 1900 ms, TE = 2.98 ms, TI = 900 ms, FOV = 256 mm, 1 mm isotropic voxels). Functional images were acquired using an echo-planar sequence (TR = 2000 ms, TE = 28 ms, FOV = 192 mm, 3 mm isotropic voxels) in thirty-three 3-mm-thick slices. A sparse-sampling design was used in which the acquisition of each volume was followed by a 2-s silent gap during which the auditory stimuli were presented through MR-compatible in-ear headphones. Across the experiment, the three jitter times (4, 8, or 12 s) were used equally often and were counterbalanced across conditions and runs. Each of the three runs consisted of 146 EPI volumes collected over 9 min 44 s, yielding a total of 438 volumes.
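The run timing follows from the sparse-sampling parameters: each 2-s volume acquisition is followed by a 2-s silent gap, so volumes begin every 4 s. The arithmetic check below uses the values reported above plus one labeled assumption, namely that each run's 72 trials used the three jitter values equally often (the text states equal numbers across the experiment).

```python
ACQ_S = 2.0            # volume acquisition time (TR = 2000 ms)
SILENT_S = 2.0         # silent gap containing the auditory stimulus
VOLUMES_PER_RUN = 146
N_RUNS = 3
JITTERS_S = (4, 8, 12)
TRIALS_PER_RUN = 72

volume_period_s = ACQ_S + SILENT_S                 # onset-to-onset spacing of volumes
run_s = VOLUMES_PER_RUN * volume_period_s
run_min, run_sec = divmod(run_s, 60)
total_volumes = VOLUMES_PER_RUN * N_RUNS

# Assumption: each jitter value is used for one third of a run's trials.
per_jitter = TRIALS_PER_RUN // len(JITTERS_S)
trial_time_s = per_jitter * sum(JITTERS_S)

print(f"{run_s:.0f} s per run = {run_min:.0f} min {run_sec:.0f} s; {total_volumes} volumes")
print(f"jittered trial time per run (assumed): {trial_time_s} s")
```

This prints `584 s per run = 9 min 44 s; 438 volumes`, matching the reported run length and volume count, and, under the equal-jitter assumption, 576 s of jittered trial time, which fits within a 584-s run.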

Image Analysis

The Analysis of Functional NeuroImages software (AFNI) was used to analyze the imaging data (Cox, 1996). The functional images were corrected for head motion by aligning all volumes to the fourth collected volume using a six-parameter rigid-body transform (Cox & Jesmanowicz, 1999). The functional images were aligned with the structural images in Talairach space and resampled to 3-mm isotropic voxels. Spatial smoothing was performed using a Gaussian kernel with a 6-mm full width at half maximum. A general linear model (GLM) analysis was performed on each participant’s EPI data to estimate the hemodynamic response to each stimulus condition. The six motion-correction parameters were included as covariates in the GLM. Stimulus presentation times were convolved with the stereotypic gamma-variate hemodynamic response function provided by AFNI. No-response trials and outliers were censored from the GLM analysis. The coefficients from the GLM analysis were then converted to percent signal change units.
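To make the GLM step concrete, the sketch below builds condition regressors from a gamma variate of the form that AFNI's 3dDeconvolve documentation gives for its `GAM` basis function, h(t) = (t/(pq))^p exp(p − t/q) with p = 8.6 and q = 0.547, fits one voxel's time series by ordinary least squares, and converts each condition coefficient to percent signal change relative to the fitted baseline. It is a simplified illustration, not AFNI's implementation: the volume timing, onsets, and signal are synthetic, sampling the regressors at volume onsets is an assumption, the optional `nuisance` matrix stands in for the six motion parameters, and the baseline is a single constant rather than AFNI's drift model.

```python
import numpy as np

def afni_gam(t, p=8.6, q=0.547):
    """Gamma variate (t/(p*q))**p * exp(p - t/q); peaks at 1 when t = p*q (about 4.7 s)."""
    t = np.asarray(t, dtype=float)
    h = np.zeros_like(t)
    pos = t > 0
    h[pos] = (t[pos] / (p * q)) ** p * np.exp(p - t[pos] / q)
    return h

def condition_regressor(onsets, sample_times):
    """Sum of gamma responses to every onset of one condition, sampled at volume times."""
    return sum(afni_gam(sample_times - onset) for onset in onsets)

def fit_percent_signal_change(y, regressors, nuisance=None):
    """OLS fit of one voxel; returns 100 * beta / baseline for each condition regressor."""
    cols = [np.ones_like(y), *regressors]
    if nuisance is not None:                  # e.g. (n_volumes, 6) motion estimates
        cols.extend(np.asarray(nuisance, dtype=float).T)
    beta, *_ = np.linalg.lstsq(np.column_stack(cols), y, rcond=None)
    return 100.0 * beta[1:1 + len(regressors)] / beta[0]

# Hypothetical run: volumes every 4 s, two conditions with interleaved onsets
times = np.arange(146) * 4.0
reg_a = condition_regressor([2.0 + 40.0 * k for k in range(14)], times)
reg_b = condition_regressor([22.0 + 40.0 * k for k in range(14)], times)
voxel = 1000.0 + 20.0 * reg_a + 10.0 * reg_b     # noise-free synthetic signal
print(fit_percent_signal_change(voxel, [reg_a, reg_b]))  # approximately [2, 1]
```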

Analysis of Results

Behavioral Results

Categorization Results

Table 1 shows the behavioral categorization results. As can be seen, the biased sentence contexts affected acoustic phonetic perception and, consequently, the categorization of the ambiguous target. As expected, the unambiguous targets were consistently categorized as ‘coat’ or ‘goat’ irrespective of sentence fragment context. In contrast, for the ambiguous target, there were more ‘goat’ responses in the goat-biased context and more ‘coat’ responses in the coat-biased context relative to the neutral context. To determine the statistical reliability of these effects, an ANOVA and follow-up t-tests were conducted. A one-way ANOVA on the ambiguous target, with Sentence Context (goat-biased, coat-biased, neutral) as a factor and percent ‘goat’ responses as the dependent measure, showed a significant main effect of Sentence Context, F(2, 32) = 4.93, p = .014. Follow-up t-tests showed that the categorization of the ambiguous target differed significantly between the goat-biased and coat-biased sentence contexts, t(16) = 2.51, p = .02, replicating the main finding in the literature (Borsky et al., 1998). Comparisons of each biased context with the neutral context, however, revealed a significant difference in categorization only for the goat-biased context, t(16) = 2.18, p = .04, and not for the coat-biased context, t(16) = −1.62, p = .13. The prior behavioral study (Borsky et al., 1998) did not include a neutral sentence context condition. Given that a significant difference emerged only in the neutral versus goat-biased comparison, all subsequent analyses focused on the neutral and goat-biased sentence fragment contexts and on the ambiguous [g]/[k] and unambiguous ‘goat’ target stimuli.
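The degrees of freedom in F(2, 32) follow from a one-way repeated-measures design with n = 17 participants and k = 3 Sentence Context levels: k − 1 = 2 for the effect and (n − 1)(k − 1) = 32 for the error term. A minimal NumPy sketch is given below; the input scores are simulated, not the study's data, and p values would additionally require the F distribution (for example, `scipy.stats.f.sf`).

```python
import numpy as np

def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA on an (n_subjects, k_conditions) array.

    Returns (F, df_effect, df_error). Between-subject variance is removed from
    the error term, which distinguishes it from a between-groups ANOVA.
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)
    ss_error = np.sum((data - grand) ** 2) - ss_cond - ss_subj
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error

# Simulated percent-'goat' scores: 17 listeners x (coat-biased, goat-biased, neutral)
rng = np.random.default_rng(1)
scores = (65 + rng.normal(0, 15, size=(17, 1)) + np.array([-5, 6, 0])
          + rng.normal(0, 8, size=(17, 3)))
F, df1, df2 = rm_anova_oneway(scores)
print(f"F({df1}, {df2}) = {F:.2f}")
```

With only two levels, this F equals the square of the paired t statistic on the same columns, which is why each follow-up comparison is reported as t(16).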

Table 1.

Behavioral Categorization Results (N = 17). Mean values represent the percentage of ‘goat’ responses for each Target Type (rows) in each Sentence Context (columns). Values in parentheses represent standard errors of the mean.

| Target Type | Coat-biased | Goat-biased | Neutral |
|---|---|---|---|
| Ambiguous ‘goat/coat’ | 60 (8) | 72 (7) | 66 (7) |
| Unambiguous ‘goat’ | 100 (0) | 99 (0) | 98 (0) |
| Unambiguous ‘coat’ | 1 (1) | 0 (0) | 0 (0) |

Reaction Time Results

Table 2 shows reaction times as a function of Target Type (ambiguous, unambiguous ‘goat’) and Sentence Context (neutral, goat-biased). As can be seen, reaction times were slower for the ambiguous than for the unambiguous target and were similar across the sentence context conditions. A two-way ANOVA confirmed these results. There was a main effect of Target Type, F(1, 16) = 56.59, p < .001, no main effect of Sentence Context, F(1, 16) = 1.54, p = .23, and no interaction between Target Type and Sentence Context, F(1, 16) = 0.70, p = .42. The main effect of Target Type confirms earlier findings that response latencies are longer for boundary-value stimuli than for endpoint stimuli in phonetic categorization tasks (Blumstein, Myers, & Rissman, 2005; Liebenthal, Binder, Spitzer, Possing, & Medler, 2005; Pisoni & Tash, 1974). The absence of a Sentence Context effect suggests that task difficulty did not differ as a function of Sentence Context.
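In a 2 x 2 design, the two main effects and the interaction correspond to simple contrasts on the cell means. The sketch below applies them to the group means in Table 2. The F tests themselves require per-participant data (in a 2 x 2 within-subject design, each F(1, 16) equals the square of a paired t on the corresponding per-participant contrast), which is not reproduced here.

```python
# Group mean RTs (ms) from Table 2, keyed by (target type, sentence context)
rt = {
    ("ambiguous", "goat-biased"): 893, ("ambiguous", "neutral"): 897,
    ("unambiguous", "goat-biased"): 708, ("unambiguous", "neutral"): 734,
}

def marginal(level, position):
    """Mean over the cells whose key has `level` at index `position`."""
    vals = [v for key, v in rt.items() if key[position] == level]
    return sum(vals) / len(vals)

target_effect = marginal("ambiguous", 0) - marginal("unambiguous", 0)
context_effect = marginal("goat-biased", 1) - marginal("neutral", 1)
interaction = ((rt[("ambiguous", "goat-biased")] - rt[("ambiguous", "neutral")])
               - (rt[("unambiguous", "goat-biased")] - rt[("unambiguous", "neutral")]))
print(target_effect, context_effect, interaction)   # 174.0 -15.0 22
```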

Table 2.

Behavioral Reaction Time Results (N = 17). Mean values represent reaction times in milliseconds for each Target Type (rows) in each Sentence Context (columns). Values in parentheses represent standard errors of the mean.

| Target Type | Goat-biased | Neutral |
|---|---|---|
| Ambiguous ‘goat/coat’ | 893 (46) | 897 (40) |
| Unambiguous ‘goat’ | 708 (51) | 734 (45) |

fMRI Analysis

A two-way ANOVA (Sentence Context x Target Type) was conducted on the percent signal change values for each condition and each participant, with Sentence Context and Target Type as fixed factors and participant as a random factor. Examination of the main effects provides insight into the role of the acoustic-phonetic manipulation, on the one hand, and of sentence context, on the other. However, to examine the effects of sentence context on auditory word recognition, it is necessary to investigate those neural areas that showed a two-way interaction.

All contrasts were corrected for multiple comparisons. A Monte Carlo simulation was used to determine the minimum cluster size needed for a corrected threshold of p < .05 given a voxel-wise threshold of p < .01. All reported clusters contain at least 34 contiguous voxels (see Table 3).
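The logic of a Monte Carlo cluster-size correction can be sketched as follows: simulate many volumes of spatially smoothed Gaussian noise, threshold each at the voxel-wise p value, record the largest surviving cluster, and take the size exceeded in only about 5% of simulations as the minimum cluster size. The code below is a simplified NumPy stand-in, not AFNI's own simulation program. The volume shape, iteration count, one-sided threshold (z > 2.326, i.e., p < .01), and face-connectivity rule are assumptions, and it is not expected to reproduce the 34-voxel value, which depends on the actual brain mask and smoothness estimates. The 6-mm FWHM is converted to a Gaussian sigma of FWHM / (2√(2 ln 2)), about 0.85 voxels at 3 mm.

```python
import numpy as np
from collections import deque

NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))

def smooth(vol, sigma_vox):
    """Separable Gaussian smoothing along each axis of a 3-D array."""
    radius = max(1, int(np.ceil(3 * sigma_vox)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    kernel /= kernel.sum()
    for axis in range(3):
        vol = np.apply_along_axis(np.convolve, axis, vol, kernel, mode="same")
    return vol

def largest_cluster(mask):
    """Size of the largest face-connected cluster of True voxels (breadth-first search)."""
    remaining = set(map(tuple, np.argwhere(mask)))
    largest = 0
    while remaining:
        queue = deque([remaining.pop()])
        size = 0
        while queue:
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in NEIGHBOURS:
                neighbour = (x + dx, y + dy, z + dz)
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    queue.append(neighbour)
        largest = max(largest, size)
    return largest

def min_cluster_size(shape=(24, 24, 24), fwhm_mm=6.0, voxel_mm=3.0,
                     z_thresh=2.326, alpha=0.05, n_iter=100, seed=0):
    """Cluster size exceeded by chance in roughly `alpha` of smoothed-noise simulations."""
    rng = np.random.default_rng(seed)
    sigma_vox = fwhm_mm / voxel_mm / (2 * np.sqrt(2 * np.log(2)))  # FWHM -> sigma
    maxima = []
    for _ in range(n_iter):
        field = smooth(rng.standard_normal(shape), sigma_vox)
        field /= field.std()                 # re-standardize after smoothing
        maxima.append(largest_cluster(field > z_thresh))
    return int(np.ceil(np.quantile(maxima, 1 - alpha)))

print(min_cluster_size(n_iter=20))
```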

Table 3.

Regions exhibiting significant clusters across stimulus conditions. Clusters are thresholded at a voxel-level threshold of p < .01, and a cluster-level threshold of p < .05 with a minimum of 34 contiguous voxels.

| Region | Cluster size (# voxels) | Maximum T-value | Talairach x | Talairach y | Talairach z |
|---|---|---|---|---|---|
| Main effect of Target Type | | | | | |
| Ambiguous > Unambiguous | | | | | |
| Left middle frontal gyrus | 672 | 6.26 | −46 | 26 | 20 |
| Right middle frontal gyrus | 321 | 6.28 | 41 | 23 | 26 |
| Right cingulate | 211 | 4.79 | 8 | 20 | 32 |
| Unambiguous > Ambiguous | | | | | |
| Left precuneus | 1299 | 8.72 | −4 | −49 | 38 |
| Right superior temporal gyrus | 288 | 5.33 | 50 | −19 | 8 |
| Left angular gyrus | 190 | 5.57 | −43 | −58 | 20 |
| Left postcentral gyrus | 173 | 5.51 | −49 | −13 | 20 |
| Left anterior cingulate | 168 | 4.69 | −4 | 44 | −1 |
| Right angular gyrus | 168 | 5.85 | 41 | −67 | 29 |
| Right middle frontal gyrus | 163 | 5.99 | 20 | 23 | 38 |
| Left cerebellum | 131 | 7.88 | −22 | −49 | −16 |
| Left middle frontal gyrus | 121 | 5.43 | −28 | 8 | 47 |
| Right middle temporal gyrus | 61 | 4.63 | 44 | −49 | 5 |
| Left insula | 52 | 5.37 | −34 | −25 | 5 |
| Right inferior parietal lobe | 37 | 4.70 | 32 | −34 | 32 |
| Right precentral gyrus | 36 | 4.56 | 32 | −10 | 35 |
| Main effect of Sentence Context | | | | | |
| Goat-biased > Neutral | | | | | |
| Left superior frontal gyrus | 45 | 4.21 | −16 | 47 | 23 |
| Left inferior frontal gyrus | 40 | 4.04 | −37 | 23 | 2 |
| Sentence Context x Target Type interaction: (Goat-biased, Neutral) x (Unambiguous ‘goat’, Ambiguous) | | Maximum F-value | | | |
| Left MTG/STG | 69 | 19.13 | −55 | −31 | 2 |

fMRI Results

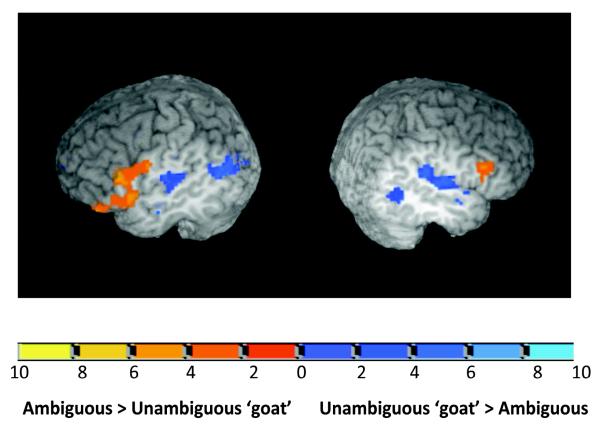

Several clusters in bilateral frontal, temporal, and parietal cortex showed a main effect of Target Type (see Table 3). Some areas showed increased activation for the ambiguous stimulus and others showed increased activation for the unambiguous stimulus. Bilateral frontal areas, including a cluster with a peak of activation in the middle frontal gyrus (MFG) extending into the inferior frontal gyrus (IFG), showed greater activation for the ambiguous than for the unambiguous stimuli, suggesting that greater resources are recruited for processing the ambiguous stimuli. Greater activation for the unambiguous than for the ambiguous target emerged in superior temporal, middle temporal, and temporo-parietal areas, indicating that these areas responded more strongly to the unambiguous, and hence better-exemplar, stimulus. Figure 3 shows these results. The angular gyrus also showed greater activation bilaterally for the unambiguous than for the ambiguous target, consistent with previous reports that this area may be tuned to the goodness of the phonetic category exemplar (Blumstein, Myers, & Rissman, 2005).

Figure 3.

Sagittal views of frontal and temporal activation for the main effect of Target Type. The image on the left shows a cutout at slice x = −50 depicting left hemisphere activation. The image on the right shows a cutout at slice x = 50 depicting right hemisphere activation. The color scheme represents the T-value threshold for the contrasts (Ambiguous target – Unambiguous ‘goat’ target) and (Unambiguous ‘goat’ target – Ambiguous target).

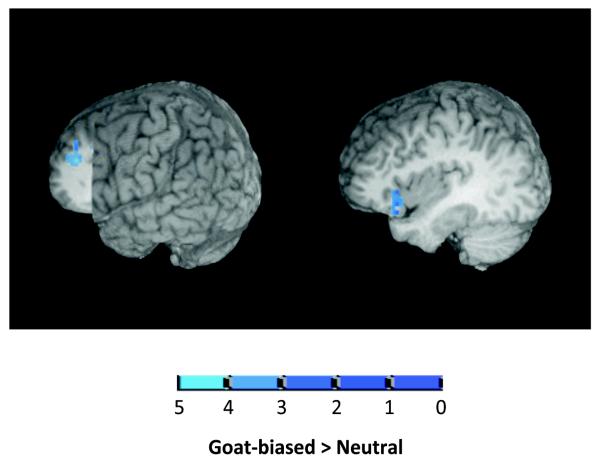

Only frontal areas showed a significant main effect of Sentence Context (see Table 3). In particular, as shown in Figure 4, the left inferior frontal gyrus and the left superior frontal gyrus showed greater activation for the goat-biased than for the neutral context.

Figure 4.

Activation pattern for the main effect of Sentence Context at a voxel-wise threshold of p < .01. The image on the left shows a cutout at slices x = −15 and y = 30 through the superior frontal gyrus region with peak Talairach coordinates (−16, 47, 23). The image on the right shows a cutout at slice x = −35 through the inferior frontal gyrus with peak Talairach coordinates (−37, 23, 2).

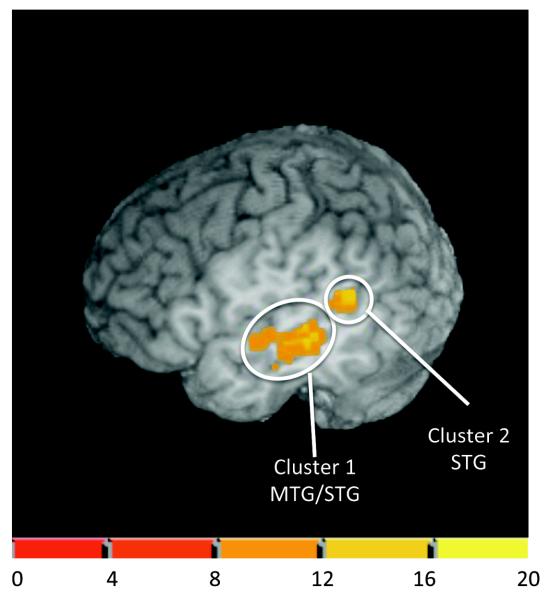

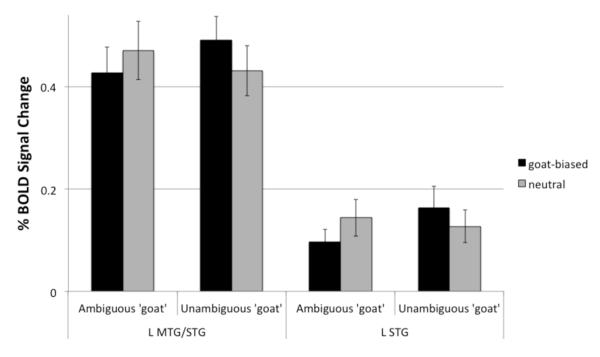

A Sentence Context x Target Type interaction yielded one significant cluster in left temporal cortex (see Table 3). As Figure 5 shows, peak activation was in the middle temporal gyrus, and the cluster extended into the superior temporal gyrus (Figure 5, Cluster 1). Figure 6 shows the percent signal change across the stimulus conditions within this cluster. Post-hoc t-tests on the percent signal change in this region for each target type revealed a decrease in percent signal change for the ambiguous target in the goat-biased compared to the neutral sentence context that approached significance, t(16) = −2.02, p = .06. In contrast, for the unambiguous ‘goat’ target, the goat-biased sentence context yielded a significantly greater percent signal change than the neutral sentence fragment context, t(16) = 3.68, p = .002.
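The post-hoc logic can be sketched as follows: within the cluster, the goat-biased minus neutral difference is tested separately for each target type, and the interaction corresponds to the difference between those two differences. The function and data below are illustrative; the synthetic values only mimic the direction of the reported crossover and are not the study's measurements.

```python
import numpy as np

def paired_t(diff):
    """One-sample t on per-participant differences (equivalent to a paired t-test)."""
    d = np.asarray(diff, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size))

def context_effects(psc):
    """psc maps (target, context) -> per-participant percent signal change in a cluster.

    Returns the goat-biased minus neutral t for each target type, plus the
    interaction F(1, n - 1), which in a 2 x 2 within-subject design equals the
    squared t of the per-participant difference of differences.
    """
    amb = psc[("ambiguous", "goat-biased")] - psc[("ambiguous", "neutral")]
    una = psc[("unambiguous", "goat-biased")] - psc[("unambiguous", "neutral")]
    return {"t_ambiguous": paired_t(amb),
            "t_unambiguous": paired_t(una),
            "F_interaction": paired_t(una - amb) ** 2}

# Synthetic data shaped like the reported crossover (not the study's values)
n = 17
base = np.linspace(0.1, 0.5, n)
psc = {
    ("ambiguous", "neutral"): base,
    ("ambiguous", "goat-biased"): base - 0.1 + 0.01 * np.sin(np.arange(n)),
    ("unambiguous", "neutral"): base,
    ("unambiguous", "goat-biased"): base + 0.2 + 0.01 * np.cos(np.arange(n)),
}
print(context_effects(psc))
```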

Figure 5.

Activation pattern for the Sentence Context (Goat-biased, Neutral) by Target Type (Unambiguous ‘goat’, Ambiguous) interaction at a voxel-wise threshold of p < .01. The figure shows a cutout at slice x = −50. Cluster 1 is significant at a voxel-level threshold of p < .01 and corrected cluster-level threshold of p < .05 (69 voxels). Peak Talairach coordinates for Cluster 1 (−55, −31, 2). Cluster 2 is significant at a voxel-wise threshold of p < .01 (24 voxels) using a mask that was restricted to neural areas implicated in language processing (see text). Peak Talairach coordinates for Cluster 2 (−52, −52, 20). The color scheme represents the F-value threshold for the interaction.

Figure 6.

Percent signal change for the Sentence Context by Target Type interaction for the Left MTG/STG cluster and the Left STG cluster (p < .01) in Figure 5. Error bars represent standard errors of the mean over subjects.

To determine whether any additional clusters would emerge in the Sentence Context x Target Type interaction with a reduced cluster threshold, we restricted the search space to cerebral cortical areas implicated in language processing, including the inferior frontal gyrus, middle frontal gyrus, middle temporal gyrus, superior temporal gyrus, supramarginal gyrus, and angular gyrus. Results showed an additional cluster of 24 voxels (see Figure 5, Cluster 2) in the superior temporal gyrus at a voxel-wise threshold of p < .01. Analysis of the percent signal change within this cluster, shown in Figure 6, revealed a pattern similar to that in the MTG/STG cluster. Namely, there was a significant decrease in percent signal change for the ambiguous target in the goat-biased compared to the neutral sentence context, t(16) = −2.28, p = .04, and a significant increase in percent signal change for the unambiguous target in the goat-biased compared to the neutral context, t(16) = 2.77, p = .01.

In order to determine whether the BOLD response was influenced by reaction time differences, we conducted the same fMRI analysis as above using reaction time as a nuisance regressor. The same significant clusters emerged in the main effect of Sentence Context and in the Sentence Context x Target Type interaction results. In the case of the main effect of Target Type, the same clusters emerged as described in Table 3 with the exception of the right precentral gyrus cluster, which no longer reached significance.
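A simplified, trial-level sketch of what adding reaction time as a nuisance covariate does: when a response tracks RT and RT differs between conditions, a model without RT attributes that variance to the condition, whereas a model that includes mean-centered RT does not. This is an illustration on synthetic data under stated assumptions; the analysis reported above was run on fMRI time series in AFNI, and the exact form of its RT regressor is not specified here.

```python
import numpy as np

def condition_effect(y, is_ambiguous, rt=None):
    """OLS slope for the condition indicator, optionally with mean-centered RT as a covariate."""
    cols = [np.ones_like(y), is_ambiguous.astype(float)]
    if rt is not None:
        cols.append(rt - rt.mean())
    beta, *_ = np.linalg.lstsq(np.column_stack(cols), y, rcond=None)
    return beta[1]

# Synthetic trials: ambiguous targets are slower, and the response tracks RT only
is_ambiguous = np.repeat([False, True], 20)
rt = 720.0 + 175.0 * is_ambiguous + 30.0 * np.sin(np.arange(40))
response = 0.002 * (rt - rt.mean())
print(condition_effect(response, is_ambiguous))        # sizeable apparent condition effect
print(condition_effect(response, is_ambiguous, rt))    # close to zero once RT is modeled
```

That clusters survived this control in the study suggests their condition effects were not simply carried by RT differences, which is the inference this kind of covariate is designed to support.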

General Discussion

In order to better understand the complex interactions that naturally take place in language processing, it is important to understand how different sources of information constrain and influence perception. In this study, we examined how abstract information, such as the meaning of a sentence context, constrains the selection of an acoustic stimulus that is phonetically ambiguous between two words and affects the neural pattern of activation. Specifically, we used a sparse-sampling fMRI design to investigate the neural areas that showed an interaction between two factors: the type of sentence context (neutral vs. biased) and the type of target (ambiguous vs. unambiguous).

Consistent with the behavioral literature (Borsky et al., 1998), our results showed a significant effect of sentence context on the ambiguous lexical target: word categorization changed significantly as a function of the biasing context. Borsky et al. (1998) used two biasing contexts, one toward ‘coat’ and one toward ‘goat’, but no neutral condition. A neutral condition makes it possible to determine whether the sentence context shifts the perception of the ambiguous stimuli in both directions. For this reason, the current study included a neutral condition in addition to the coat-biased and goat-biased conditions. Behavioral analysis revealed that the effect of sentence context on the perception of an acoustically ambiguous word target was significant only for the goat-biased context, not for the coat-biased context. Asymmetries in the perception of a voiced-voiceless continuum have been shown under a number of conditions, including lexical bias (Burton & Blumstein, 1995) and speaking rate (Kessinger & Blumstein, 1997). We therefore focused our imaging analyses on the neutral and goat-biased sentence conditions, which showed significant behavioral perceptual differences.

Most important for answering the question of how sentence context influences phonetic perception is identifying the neural areas that showed a significant Sentence Context by Target Type interaction. Before considering those findings in detail, however, we first identified the neural areas that showed significant changes in activation as a result of each independent factor, Target Type and Sentence Context.

For Target Type, the patterns of activation revealed a network of brain areas involved in processing the acoustic-phonetic properties of speech, including temporal, frontal, and parietal structures. Greater activation for acoustically ambiguous than for unambiguous target stimuli emerged in frontal areas, including the MFG and extending into the IFG, consistent with findings that more neural resources are recruited for poor acoustic-phonetic exemplars than for good ones (Binder et al., 2004; Blumstein et al., 2005). Additionally, a network of temporal and parietal areas implicated in acoustic-phonetic processing showed greater activation for the unambiguous than for the ambiguous target. These results are consistent with earlier findings of increased activation in the superior temporal gyrus for between-category compared to within-category changes (Desai, Liebenthal, Waldron, & Binder, 2008; Obleser et al., 2006). Modulation of activation in the angular gyrus has also been reported as a function of the “goodness” of the phonetic category exemplar (Blumstein et al., 2005).

For Sentence Context, a biased sentence context produced significantly greater activation than a neutral one in two frontal structures, the inferior frontal gyrus and the superior frontal gyrus. According to recent work, the inferior frontal gyrus may be involved in the unification of lexical and sentence-level information (Snijders, Petersson, & Hagoort, 2010; Snijders et al., 2009). Increased activation for the biased vs. neutral sentence context in these regions may therefore reflect the unification of a goat-biased context with the ‘goat’ percept.

The primary goal of this study was to identify neural areas showing effects of higher-level sentence meaning on acoustic-phonetic perception. To this end, we looked for neural areas that showed a Sentence Context x Target Type interaction, as this interaction reflects changes in phonetic perception as a function of sentence context. fMRI analysis revealed a significant Sentence Context x Target Type interaction in one cluster that encompassed portions of the left middle temporal gyrus (MTG) and the left superior temporal gyrus (STG). Percent signal change in this cluster showed that sentence context modulated this region differently for the ambiguous and the unambiguous targets. For the unambiguous target (‘goat’), percent BOLD signal change was significantly greater in the goat-biased context than in the neutral sentence fragment context. In contrast, for the ambiguous target, percent BOLD signal change was lower in the goat-biased than in the neutral sentence fragment context.

The MTG and the STG are thought to play crucial roles in semantic and phonological aspects of language processing, respectively (Binder, Desai, Graves, & Conant, 2009; Hickok, 2009; Hickok & Poeppel, 2007). In particular, damage to the MTG leads to auditory comprehension deficits (Dronkers et al., 2004). Consistent with these findings, the middle temporal gyrus is considered a key structure in semantic memory retrieval and is thought to provide an interface between lexical and semantic information (Hickok, 2009; Snijders et al., 2009). The superior temporal gyrus, on the other hand, is involved in perceptual aspects of speech processing (Hickok & Poeppel, 2007): posterior portions of the STG respond according to acoustic-phonetic category information, typically showing greater activation for between-category than for within-category differences (Desai et al., 2008; Joanisse, Zevin, & McCandliss, 2007).

The hypothesized contributions of the MTG and STG to language processing suggest that “top-down modulation” may contribute to the pattern of results found in the current experiment. The sentence meaning bias presumably increases activation of the congruent lexical item, which in turn influences the activation of the phonetic category representation of the congruent target stimulus. As a consequence, fewer neural resources should be needed to perceptually resolve the acoustic-phonetic ambiguity of the target stimulus. Consistent with this interpretation, an additional STG cluster emerged in the restricted search, although it did not survive the whole-brain cluster-size threshold. This area, which generally responds to phonetic category information (Desai et al., 2008; Hickok, 2009), showed the same pattern of activation as the significant MTG/STG cluster. Taken together, these findings suggest that higher-level sentence context modulated earlier stages of speech processing.

In recent work using resting-state functional connectivity and DTI structural analyses, Turken and Dronkers (2011) showed that the MTG is both functionally and anatomically connected to the STG. Given these connections and the hypothesized contributions of each region to semantic and phonological processing, these areas may either be engaged simultaneously in processing congruent information or interact with one another to integrate congruent sources of meaning and acoustic information.

Our findings suggest that these modulatory effects of sentence context on phonetic perception arise from interactions between the two sources of information (i.e., bottom-up and top-down processes), and they challenge strictly feedforward computational accounts. In a recent study, Davis et al. (2011) examined the effects of sentence context (coherent vs. anomalous sentences) and intelligibility (degraded vs. normal) on neural activation patterns during a passive listening task and found interactions between these two factors in both frontal and temporal areas. Examining the average hemodynamic responses in frontal and temporal areas, they found that responses in frontal regions occurred later than those in the superior temporal gyrus. Davis et al. (2011) took this as evidence against ‘top-down’ modulation of activation in temporal cortex, suggesting that the relatively delayed timing in frontal compared to superior temporal cortex was more compatible with a feedforward account of speech perception. However, in a later series of studies, Davis and his colleagues proposed that interactive effects of sentence context and speech intelligibility are more consistent with top-down models. They further argued that predictive coding models provide a better account than other top-down models such as TRACE (Gagnepain, Henson, & Davis, 2012; Sohoglu, Peelle, Carlyon, & Davis, 2012; Wild, Davis, & Johnsrude, 2012).

Although the purpose of this paper was not to test specific computational implementations of the influence of higher-level information on lower-level perception, the modulatory effects that emerged in the current study cannot easily be explained by current top-down models of speech perception, whether predictive coding or TRACE.

Predictive coding is based on the calculation of prediction errors generated by comparing a top-down prediction of the expected sensory input with the actual sensory input (Spratling, 2008; Summerfield & Koechlin, 2008; Gagnepain, Henson, & Davis, 2012; Sohoglu et al., 2012). Thus, the greater the difference between the expectations derived from higher-level information and the actual sensory input, the greater the activation. In the current design, this account predicts greater activation when the acoustic-phonetic input departs more from the prediction generated by the sentence context.

A predictive coding account does predict our finding of greater activation for the ambiguous target in the neutral than in the biased sentence context, because the prediction error is greater in the neutral sentence context. However, contrary to predictive coding, the interaction in the current study showed increased activation for the unambiguous target in the biased compared to the neutral sentence context. A predictive coding account predicts the opposite, namely reduced activation for the unambiguous target in the biased compared to the neutral sentence context, because the biased context and the congruent unambiguous target generate no prediction error.
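The unambiguous-target argument can be made concrete with a toy calculation. The sketch below assumes, purely for illustration, that prediction error is the summed absolute difference between a context-driven prediction and the acoustic evidence, each coded as a vector over the two candidate words (‘coat’, ‘goat’). The encodings and names are hypothetical and are not taken from Spratling (2008) or the other cited implementations; the sketch covers only the unambiguous-target cells.

```python
def prediction_error(predicted, observed):
    """Summed absolute mismatch between predicted and observed evidence.

    Vectors are ordered ('coat', 'goat'); larger values stand in for larger
    prediction error and hence, under predictive coding, more activation.
    """
    return sum(abs(o - p) for p, o in zip(predicted, observed))


# Hypothetical encodings (assumptions, not the study's stimuli or model):
UNAMBIGUOUS_GOAT = (0.0, 1.0)  # clear acoustic evidence for 'goat'
GOAT_BIASED = (0.0, 1.0)       # context predicts 'goat'
NEUTRAL = (0.5, 0.5)           # context favors neither word

err_biased = prediction_error(GOAT_BIASED, UNAMBIGUOUS_GOAT)
err_neutral = prediction_error(NEUTRAL, UNAMBIGUOUS_GOAT)
print(err_biased, err_neutral)
```

Under these assumptions the congruent goat-biased context leaves no prediction error for the unambiguous target, while the neutral context leaves some, so a prediction-error account expects less activation in the biased context, the opposite of the increase observed in the MTG/STG cluster.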

The TRACE model also cannot account for the pattern of interaction results. In contrast to predictive coding and consistent with our findings, TRACE predicts increased activation for the unambiguous target in the biased compared to the neutral sentence context, because top-down information boosts the activation of the unambiguous target stimulus. However, TRACE fails to predict the relative decrease in activation we found for the ambiguous target in the biased compared to the neutral sentence context. Because the neutral sentence context cannot resolve the ambiguity of the sensory input, TRACE predicts less activation for the ambiguous target in the neutral than in the biased context, whereas we found more.
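The direction of this TRACE prediction can be illustrated with a drastically simplified interactive-activation loop. The sketch below is not TRACE itself (McClelland & Elman, 1986): it keeps only one phoneme unit (/g/) and one word unit (‘goat’), uses linear updates with no resting levels, decay, or lateral inhibition, and treats the summed activation of the two units as a crude stand-in for regional BOLD signal. All weights and names are hypothetical assumptions.

```python
def settle(bottom_up_g, context_goat, steps=50, w_up=0.5, w_fb=0.3, w_ctx=0.4):
    """Iterate a two-unit feedback loop and return summed activation.

    bottom_up_g: acoustic evidence for /g/ (1.0 = clear 'goat', 0.5 = ambiguous).
    context_goat: 1.0 for a goat-biased fragment, 0.0 for a neutral one.
    Hypothetical weights; w_up * w_fb < 1 keeps the loop convergent.
    """
    phon_g, lex_goat = 0.0, 0.0
    for _ in range(steps):
        # Synchronous update: /g/ gets bottom-up input plus lexical feedback;
        # 'goat' gets phonemic input plus sentence-context support.
        phon_g, lex_goat = (
            bottom_up_g + w_fb * lex_goat,
            w_up * phon_g + w_ctx * context_goat,
        )
    return phon_g + lex_goat


for label, evidence in (("unambiguous", 1.0), ("ambiguous", 0.5)):
    neutral = settle(evidence, context_goat=0.0)
    biased = settle(evidence, context_goat=1.0)
    print(f"{label}: neutral={neutral:.3f} biased={biased:.3f}")
```

Under these assumptions, contextual feedback raises summed activation in the biased context for both targets (about 2.38 vs. 1.76 for the unambiguous target and 1.49 vs. 0.88 for the ambiguous target). This matches the increase observed for the unambiguous target but not the decrease observed for the ambiguous one.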

The pattern of interaction effects that did emerge in the current study suggests that the quality of the input and the potential bias of the context interact to modulate neural activation patterns. This points to an interactive model in which both sources of information are independently weighted and the final pattern of activation is produced by integrating them. Developing such a model is a task for future research. Indeed, the results of the current study point to the potential value of relating measures of neural activity to computational simulations in order to inform and shape models of the functional architecture of language and other cognitive systems.

Many studies have provided evidence for top-down modulation in speech perception by demonstrating that temporal areas are sensitive to manipulations of intelligibility (Davis & Johnsrude, 2003; Poldrack et al., 2001). There is even evidence that activity in areas recruited for early auditory processing (i.e., Heschl’s gyrus) is subject to top-down modulation (Jancke, Mirzazade, & Shah, 1999; Pekkola et al., 2005; Poghosyan & Ioannides, 2008; Kilian-Hutten, Valente, Vroomen, & Formisano, 2011; Gagnepain, Henson, & Davis, 2012).

In the visual domain, hierarchical organization, interactive processes, and top-down modulation have also been examined, and this work has provided insights into other domains, including the auditory processing of language (Rauschecker & Tian, 2000; Bar, 2007; Sharpee, Atencio, & Schreiner, 2011). Taken together, these findings challenge feedforward-only models of both auditory and visual processing. That the functional and neural architecture of language processing may similarly require both feedforward and feedback mechanisms is not surprising, and it is consistent with the view that the neural systems underlying language are built on domain-general computational and neural properties.

Our results suggest that the MTG/STG integrates the two sources of information through both bottom-up and top-down processing and that this area is sensitive to the congruency of the two sources. Consistent with this finding, a number of previous studies have shown that acoustically presented speech paired with congruent visually presented written or gestural information enhances responses in the STG (van Atteveldt, Formisano, Goebel, & Blomert, 2004; Calvert, 2001; Raij, Uutela, & Hari, 2000). Congruency and word meaning similarly boost activation in the MTG (Rodd, Davis, & Johnsrude, 2005; Tesink et al., 2008). In the current study, activation increased relatively when information was maximally congruent, that is, when the unambiguous target was paired with the goat-biased rather than the neutral sentence context. In contrast, activation decreased relatively when the ambiguous target was paired with the goat-biased rather than the neutral sentence context. Taken together, these findings suggest that context alone does not drive the neural activation patterns; rather, context shapes the response in a manner that also depends on the quality of the acoustic speech signal.

Conclusions

To our knowledge, our results provide the first evidence for “localized” interactions in temporal cortex between meaning and acoustic information. Neural responses to ambiguous and unambiguous target stimuli were modulated differently by sentence context in regions of temporal cortex previously implicated in perceptual and semantic processing. We argue that listeners’ perception of speech is constrained by converging sources of information, most consistent with the quality of the acoustic-phonetic input interacting with semantic expectations from prior context. This interaction manifests as a modulatory effect that weights both the acoustic input and the semantic context and integrates them according to their congruency.

Acknowledgments

This work was funded by NIDCD Grant R01 DC006220 and NIMH Grant 2T32-MH09118. The authors would like to acknowledge the contributions of Jasmina Stritof in collecting some of the pilot data, Kathleen K. Kurowski and Paul Allopenna for their assistance in the preparation and recording of the stimuli, and Domenic Minicucci and Ali Arslan for their assistance with image processing. Special thanks to Paul Allopenna for helpful discussions about the theoretical implications of the TRACE model. The authors would also like to thank one anonymous reviewer and Matt Davis for their valuable feedback.

Appendix.

| Coat-biased | Goat-biased | Neutral |
|---|---|---|
| He altered the | He amused the | He added the |
| He buckled the | He ate the | He admired the |
| He buttoned the | He caged the | He brushed the |
| He designed the | He calmed the | He checked the |
| He dyed the | He cooked the | He claimed the |
| He fixed the | He cured the | He drew the |
| He folded the | He fed the | He enjoyed the |
| He hemmed the | He helped the | He examined the |
| He ironed the | He hunted the | He found the |
| He knitted the | He led the | He handled the |
| He made the | He milked the | He held the |
| He mended the | He named the | He moved the |
| He modified the | He pet the | He noted the |
| He patched the | He pushed the | He noticed the |
| He repaired the | He raised the | He offered the |
| He ripped the | He scared the | He owned the |
| He sewed the | He shocked the | He picked the |
| He shaped the | He shot the | He preferred the |
| He started the | He tasted the | He priced the |
| He stored the | He taught the | He rated the |
| He tailored the | He teased the | He showed the |
| He washed the | He tempted the | He sold the |
| He wore the | He trained the | He touched the |
| He wrinkled the | He walked the | He viewed the |

References

- van Atteveldt N, Formisano E, Goebel R, Blomert L. Integration of letters and speech sounds in the human brain. Neuron. 2004;43(2):271–282. doi: 10.1016/j.neuron.2004.06.025.

- Bar M. The proactive brain: using analogies and associations to generate predictions. Trends in Cognitive Sciences. 2007;11(7):280–289. doi: 10.1016/j.tics.2007.05.005.

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055.

- Binder JR, Liebenthal E, Possing ET, Medler DA, Ward BD. Neural correlates of sensory and decision processes in auditory object identification. Nature Neuroscience. 2004;7(3):295–301. doi: 10.1038/nn1198.

- Blumstein S, Myers E, Rissman J. The perception of voice onset time: an fMRI investigation of phonetic category structure. Journal of Cognitive Neuroscience. 2005;17(9):1353–1366. doi: 10.1162/0898929054985473.

- Borsky S, Tuller B, Shapiro L. “How to milk a coat:” the effects of semantic and acoustic information on phoneme categorization. Journal of the Acoustical Society of America. 1998;103(5 Pt 1):2670–2676. doi: 10.1121/1.422787.

- Burton M, Blumstein S. Lexical effects on phonetic categorization: the role of stimulus naturalness and stimulus quality. Journal of Experimental Psychology: Human Perception and Performance. 1995;21(5):1230–1235. doi: 10.1037//0096-1523.21.5.1230.

- Calvert G. Crossmodal processing in the human brain: insights from functional neuroimaging studies. Cerebral Cortex. 2001;11(12):1110–1123. doi: 10.1093/cercor/11.12.1110.

- Connine CM. Constraints on interactive processes in auditory word recognition: The role of sentence context. Journal of Memory and Language. 1987:527–538.

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29(3):162–173. doi: 10.1006/cbmr.1996.0014.

- Cox RW, Jesmanowicz A. Real-time 3D image registration for functional MRI. Magnetic Resonance in Medicine. 1999;42(6):1014–1018. doi: 10.1002/(sici)1522-2594(199912)42:6<1014::aid-mrm4>3.0.co;2-f.

- Davis MH, Ford MA, Kherif F, Johnsrude IS. Does semantic context benefit speech understanding through “top-down” processes? Evidence from time-resolved sparse fMRI. Journal of Cognitive Neuroscience. 2011;23(12):3914–3932. doi: 10.1162/jocn_a_00084.

- Davis MH, Johnsrude IS. Hierarchical processing in spoken language comprehension. The Journal of Neuroscience. 2003;23(8):3423–3431. doi: 10.1523/JNEUROSCI.23-08-03423.2003.

- Desai R, Liebenthal E, Waldron E, Binder JR. Left posterior temporal regions are sensitive to auditory categorization. Journal of Cognitive Neuroscience. 2008;20(7):1174–1188. doi: 10.1162/jocn.2008.20081.

- Dronkers N, Wilkins D, Van Valin RJ, Redfern B, Jaeger J. Lesion analysis of the brain areas involved in language comprehension. Cognition. 2004;92(1-2):145–177. doi: 10.1016/j.cognition.2003.11.002.

- Fox R. Effect of lexical status on phonetic categorization. Journal of Experimental Psychology: Human Perception and Performance. 1984;10(4):526–540. doi: 10.1037//0096-1523.10.4.526.

- Friederici AD, Kotz SA, Scott SK, Obleser J. Disentangling syntax and intelligibility in auditory language comprehension. Human Brain Mapping. 2010;31:448–457. doi: 10.1002/hbm.20878.

- Gagnepain P, Henson RN, Davis MH. Temporal predictive codes for spoken words in auditory cortex. Current Biology. 2012;22:615–621. doi: 10.1016/j.cub.2012.02.015.

- Ganong WF. Phonetic categorization in auditory word perception. Journal of Experimental Psychology: Human Perception and Performance. 1980;6(1):110–125. doi: 10.1037//0096-1523.6.1.110.

- Gaskell MG, Marslen-Wilson WD. Integrating form and meaning: A distributed model of speech perception. Language and Cognitive Processes. 1997;12(5/6):613–656.

- Gow DW Jr, Segawa J, Ahlfors S, Lin F. Lexical influences on speech perception: a Granger causality analysis of MEG and EEG source estimates. NeuroImage. 2008. doi: 10.1016/j.neuroimage.2008.07.027.

- Hertrich I, Mathiak K, Lutzenberger W, Ackermann H. Time course of early audiovisual interactions during speech and non-speech central-auditory processing: An MEG study. Journal of Cognitive Neuroscience. 2009;21:259–274. doi: 10.1162/jocn.2008.21019.

- Hickok G. The functional neuroanatomy of language. Physics of Life Reviews. 2009;6(3):121–143. doi: 10.1016/j.plrev.2009.06.001.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nature Reviews Neuroscience. 2007;8(5):393–402. doi: 10.1038/nrn2113.

- Jancke L, Mirzazade S, Shah NJ. Attention modulates activity in the primary and the secondary auditory cortex: a functional magnetic resonance imaging study in human subjects. Neuroscience Letters. 1999;266:125–128. doi: 10.1016/s0304-3940(99)00288-8.

- Joanisse M, Zevin J, McCandliss B. Brain mechanisms implicated in the preattentive categorization of speech sounds revealed using FMRI and a short-interval habituation trial paradigm. Cerebral Cortex. 2007;17(9):2084–2093. doi: 10.1093/cercor/bhl124.

- Kessinger R, Blumstein SE. Rate of speech effects on voice-onset time in Thai, French, and English. Journal of Phonetics. 1997;25(2):143–168.

- Kilian-Hutten N, Valente G, Vroomen J, Formisano E. Auditory cortex encodes the perceptual interpretation of ambiguous sound. The Journal of Neuroscience. 2011;31(5):1715–1720. doi: 10.1523/JNEUROSCI.4572-10.2011.

- Liebenthal E, Binder JR, Spitzer SM, Possing ET, Medler DA. Neural substrates of phonemic perception. Cerebral Cortex. 2005;15:1621–1631. doi: 10.1093/cercor/bhi040.

- McClelland J, Elman J. The TRACE model of speech perception. Cognitive Psychology. 1986;18(1):1–86. doi: 10.1016/0010-0285(86)90015-0.

- McClelland J, Mirman D, Holt L. Are there interactive processes in speech perception? Trends in Cognitive Sciences. 2006;10(8):363–369. doi: 10.1016/j.tics.2006.06.007.

- McGurk H, MacDonald J. Visual influences on speech perception processes. Perception and Psychophysics. 1976;24(3):253–257. doi: 10.3758/bf03206096.

- McQueen J, Norris D, Cutler A. Are there really interactive processes in speech perception? Trends in Cognitive Sciences. 2006;10(12):533. doi: 10.1016/j.tics.2006.10.004.

- Miller JL, Dexter ER. Effects of speaking rate and lexical status on phonetic perception. Journal of Experimental Psychology: Human Perception and Performance. 1988;14(3):369–378. doi: 10.1037//0096-1523.14.3.369.

- Mirman D, McClelland J, Holt L. An interactive Hebbian account of lexically guided tuning of speech perception. Psychonomic Bulletin & Review. 2006;13(6):958–965. doi: 10.3758/bf03213909.

- Myers E, Blumstein S. The neural bases of the lexical effect: an fMRI investigation. Cerebral Cortex. 2008;18(2):278–288. doi: 10.1093/cercor/bhm053.

- Myers E, Blumstein S, Walsh E, Eliassen J. Inferior frontal regions underlie the perception of phonetic category invariance. Psychological Science. 2011;20(7):895–903. doi: 10.1111/j.1467-9280.2009.02380.x.

- Noppeney U, Phillips J, Price C. The neural areas that control the retrieval and selection of semantics. Neuropsychologia. 2004;42:1269–1280. doi: 10.1016/j.neuropsychologia.2003.12.014.

- Norris D, McQueen J, Cutler A. Merging information in speech recognition: feedback is never necessary. Behavioral and Brain Sciences. 2000;23(3):299–325. doi: 10.1017/s0140525x00003241.

- Norris D, McQueen J, Cutler A. Perceptual learning in speech. Cognitive Psychology. 2003;47(2):204–238. doi: 10.1016/s0010-0285(03)00006-9.

- Obleser J, Boecker H, Drzezga A, Haslinger B, Hennenlotter A, Roettinger M, Rauschecker JP. Vowel sound extraction in anterior superior temporal cortex. Human Brain Mapping. 2006;27:562–571. doi: 10.1002/hbm.20201.

- Obleser J, Kotz SA. Expectancy constraints in degraded speech modulate the language comprehension network. Cerebral Cortex. 2009;20(3):633–640. doi: 10.1093/cercor/bhp128.

- Obleser J, Kotz SA. Multiple brain signatures of integration in the comprehension of degraded speech. NeuroImage. 2011;55:713–723. doi: 10.1016/j.neuroimage.2010.12.020.

- Obleser J, Leaver AM, VanMeter J, Rauschecker JP. Segregation of vowels and consonants in human auditory cortex: evidence for distributed hierarchical organization. Frontiers in Psychology. 2010;1(232):1–14. doi: 10.3389/fpsyg.2010.00232.

- Obleser J, Meyer L, Friederici AD. Dynamic assignment of neural resources in auditory comprehension of complex sentences. NeuroImage. 2011;56:2310–2320. doi: 10.1016/j.neuroimage.2011.03.035.

- Pekkola J, Ojanen V, Autti T, Jaaskelainen IP, Mottonen R, Tarkiainen A, Sams M. Primary auditory cortex activation by visual speech: an fMRI study at 3T. NeuroReport. 2005;16(2):125–128. doi: 10.1097/00001756-200502080-00010.

- Pisoni D, Tash J. Reaction times to comparisons within and across phonetic categories. Perception & Psychophysics. 1974;15:289–290. doi: 10.3758/bf03213946.

- Poghosyan V, Ioannides A. Attention modulates earliest responses in the primary auditory and visual cortices. Neuron. 2008;58(5):802–813. doi: 10.1016/j.neuron.2008.04.013.

- Poldrack R, Temple E, Protopapas A, Nagarajan S, Tallal P, Merzenich M, Gabrieli J. Relations between the neural bases of dynamic auditory processing and phonological processing: evidence from fMRI. Journal of Cognitive Neuroscience. 2001;13(5):687–698. doi: 10.1162/089892901750363235.

- Protopapas A. Connectionist modeling of speech perception. Psychological Bulletin. 1999;125(4):410–436. doi: 10.1037/0033-2909.125.4.410.

- Raij T, Uutela K, Hari R. Audiovisual integration of letters in the human brain. Neuron. 2000;28(2):617–625. doi: 10.1016/s0896-6273(00)00138-0.

- Rauschecker JP, Tian B. Mechanisms and streams for processing of “what” and “where” in auditory cortex. Proceedings of the National Academy of Sciences. 2000;97(22):11800–11806. doi: 10.1073/pnas.97.22.11800.

- Rodd JM, Davis MH, Johnsrude IS. The neural mechanisms of speech comprehension: fMRI studies of semantic ambiguity. Cerebral Cortex. 2005;15:1261–1269. doi: 10.1093/cercor/bhi009.

- Sharpee TO, Atencio CA, Schreiner CE. Hierarchical representations in the auditory cortex. Current Opinion in Neurobiology. 2011;21:761–767. doi: 10.1016/j.conb.2011.05.027.

- Snijders T, Petersson K, Hagoort P. Effective connectivity of cortical and subcortical regions during unification of sentence structure. NeuroImage. 2010;52(4):1633–1644. doi: 10.1016/j.neuroimage.2010.05.035.

- Snijders T, Vosse T, Kempen G, Van Berkum J, Petersson K, Hagoort P. Retrieval and unification of syntactic structure in sentence comprehension: an FMRI study using word-category ambiguity. Cerebral Cortex. 2009;19(7):1493–1503. doi: 10.1093/cercor/bhn187.

- Sohoglu E, Peelle J, Carlyon R, Davis MH. Predictive top-down integration of prior knowledge during speech perception. The Journal of Neuroscience. 2012;32(25):8443–8453. doi: 10.1523/JNEUROSCI.5069-11.2012.

- Spratling MW. Reconciling predictive coding and biased competition models of cortical function. Frontiers in Computational Neuroscience. 2008;2. doi: 10.3389/neuro.10.004.2008.

- Tesink CMJY, Petersson KM, van Berkum JJA, van den Brink D, Buitelaar JK, Hagoort P. Unification of speaker and meaning in language comprehension: An fMRI study. Journal of Cognitive Neuroscience. 2008;20(11):2085–2099. doi: 10.1162/jocn.2008.21161.

- Tsunada J, Lee JH, Cohen YE. Representation of speech categories in the primate auditory cortex. Journal of Neurophysiology. 2011. doi: 10.1152/jn.00037.2011.

- Turken AU, Dronkers NF. The neural architecture of the language comprehension network: converging evidence from lesion and connectivity analyses. Frontiers in Systems Neuroscience. 2011;5:1–19. doi: 10.3389/fnsys.2011.00001.

- Wild CJ, Davis MH, Johnsrude IS. Human auditory cortex is sensitive to the perceived clarity of speech. NeuroImage. 2012;60:1490–1502. doi: 10.1016/j.neuroimage.2012.01.035.