Abstract

Background

Health information exchange is a national priority, but there is limited evidence of its effectiveness.

Objective

We sought to determine the effect of health information exchange on ambulatory quality.

Methods

We conducted a two-year retrospective cohort study of 138 primary care physicians in small group practices in the Hudson Valley region of New York State. All physicians had access to an electronic portal through which they could view clinical data (such as laboratory and radiology test results) for their patients over time, regardless of the ordering physician. We considered 15 quality measures that the community was using for a pay-for-performance program, as well as the subset of 8 measures expected to be affected by the portal. We adjusted for 11 physician characteristics, including health care quality at baseline.

Results

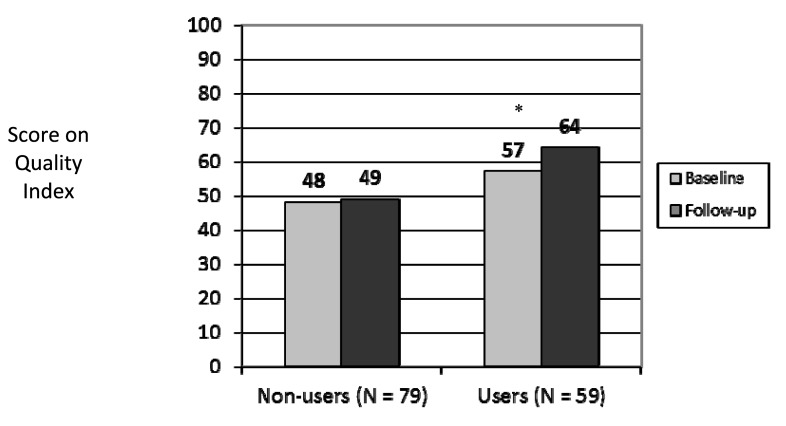

Nearly half (43%) of the physicians were portal users. Non-users performed at or above the regional benchmark on 48% of the measures at baseline and 49% at follow-up (p = 0.58). Users performed at or above the regional benchmark on 57% of the measures at baseline and 64% at follow-up (p<0.001). Use of the portal was independently associated with higher quality of care at follow-up for the measures expected to be affected by the portal (p = 0.01), but not for the measures not expected to be affected by it (p = 0.12).

Conclusions

Use of an electronic portal for viewing clinical data was associated with modest improvements in ambulatory quality.

Keywords: Health care quality, clinical informatics, quality indicators

1. Background

Health information exchange is a national priority. The Health Information Technology for Economic and Clinical Health Act (HITECH) established up to $29 billion in incentives for providers and hospitals for “meaningful use” of electronic health records, which includes health information exchange [1, 2]. Health information exchange involves the electronic sharing of clinical data, including sharing of clinical data across health care providers caring for the same patient [3]. Types of data that can be shared include laboratory results, radiology results, hospital discharge summaries, and operative reports.

Without electronic health information exchange, clinical information is missing in 1 out of every 7 primary care visits, because the data reside elsewhere and are not accessible at the point of care [4]. Health information exchange could improve quality by providing more complete and more timely access to clinical data, which in turn could improve medical decision making [5–8]. For example, using health information exchange, physicians could determine whether tests recommended by clinical guidelines have been done for their patients, including tests ordered by other providers. If external clinical data reveal that recommended tests have not been done, those tests could be ordered; if they reveal that the tests have been done, physicians could document them and avoid duplicate ordering.

Previous evidence demonstrating the effect of health information exchange on quality has been limited [9–11]. Previous work in hospital-based settings has found that electronic laboratory result viewing, which is one aspect of health information exchange, can decrease redundant testing and shorten the time to address abnormal test results [12–15]. In the ambulatory setting, electronic laboratory result viewing has been found to increase patient satisfaction [16] and improve the timeliness of public health reporting [17, 18]. Other studies have considered the effects of health information exchange on emergency department utilization with mixed results [19–21].

2. Objectives

We sought to determine whether health information exchange was associated with higher ambulatory care quality, defined primarily as higher rates of recommended testing. We previously found, in a cross-sectional study, that health information exchange was associated with higher ambulatory care quality [22]. However, it was not possible in that study to rule out confounding by physicians' baseline quality of care. This study was designed to address that limitation. Our objective was to determine any association between health information exchange and quality of care, while adjusting for baseline quality of care.

3. Methods

3.1 Overview

We conducted a retrospective cohort study of primary care physicians in the ambulatory setting. All of the physicians had the opportunity to view clinical data through a free-standing Internet-based portal. We determined associations between actual usage of the portal and health care quality over time, adjusting for physician characteristics, including baseline quality.

3.2 Setting and Participants

This study took place in the Hudson Valley, the region of New York State immediately north of New York City. We included primary care physicians for adults (general internists and family medicine physicians) who were members of the Taconic Independent Practice Association (IPA), a not-for-profit organization that includes approximately 50% of the physicians in the Hudson Valley [23].

MVP Health Care is a regional health plan with covered lives in New York, Vermont and New Hampshire [24]. The Taconic IPA is the exclusive provider network for MVP Health Care patients in the Hudson Valley of New York, though Taconic IPA providers also accept other types of insurance. We restricted our sample to those primary care physicians in the Taconic IPA who each had at least 150 patients with MVP Health Care. Since 2001, MVP has issued Physician Quality Reports to primary care providers; these reports compare each physician's performance to a regional benchmark, typically the mean performance of MVP's HMO product. Since 2002, financial bonuses have been given for performance exceeding these regional benchmarks.

3.3 Electronic Portal

The health information exchange portal is run by MedAllies, a for-profit company, which was created by the Taconic IPA [25, 26]. The portal, launched in 2001, is Internet-based and allows physicians to log in with secure passwords from any computer. The portal displays results over time and allows providers to view their patients' results regardless of whether the underlying tests were ordered by themselves or other providers. The portal is a central repository that uses a master patient index, standardized terminology, and interoperability standards.
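To make the repository idea concrete, the following is a toy Python sketch, not a description of the MedAllies system: the class names, the `(source, local_id)` keys, the record fields, and the handling of unmatched results are all hypothetical. It shows only how a master patient index lets results from different hospitals and laboratories, filed under different local identifiers, be retrieved as one patient's history regardless of who ordered each test.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Result:
    """One result as sent by a contributing source (all fields are hypothetical)."""
    source: str      # hospital or reference laboratory that produced the result
    local_id: str    # patient identifier used within that source
    test: str
    value: str
    ordered_by: str  # ordering physician; the portal view does not filter on it


class MasterPatientIndex:
    """Toy index linking (source, local_id) pairs to a single enterprise patient ID."""

    def __init__(self) -> None:
        self._links: Dict[Tuple[str, str], str] = {}

    def link(self, source: str, local_id: str, enterprise_id: str) -> None:
        self._links[(source, local_id)] = enterprise_id

    def resolve(self, source: str, local_id: str) -> Optional[str]:
        return self._links.get((source, local_id))


class Repository:
    """Central store that files each incoming result under the enterprise patient ID."""

    def __init__(self, mpi: MasterPatientIndex) -> None:
        self._mpi = mpi
        self._by_patient: Dict[str, List[Result]] = defaultdict(list)
        self.unmatched: List[Result] = []

    def receive(self, result: Result) -> None:
        enterprise_id = self._mpi.resolve(result.source, result.local_id)
        if enterprise_id is None:
            self.unmatched.append(result)  # would need manual matching in practice
        else:
            self._by_patient[enterprise_id].append(result)

    def results_for(self, enterprise_id: str) -> List[Result]:
        """All results for one patient, regardless of which physician ordered them."""
        return list(self._by_patient.get(enterprise_id, []))


# Usage: the same patient appears under different local IDs at two sources.
mpi = MasterPatientIndex()
mpi.link("Hospital A", "A-001", "P1")
mpi.link("Lab B", "B-777", "P1")
repo = Repository(mpi)
repo.receive(Result("Hospital A", "A-001", "HbA1c", "6.8%", "Dr. X"))
repo.receive(Result("Lab B", "B-777", "LDL cholesterol", "95 mg/dl", "Dr. Y"))
print([r.test for r in repo.results_for("P1")])  # ['HbA1c', 'LDL cholesterol']
```

In a real exchange, linking records to an enterprise identifier typically involves matching on demographic data; the sketch simply assumes the links already exist.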

The portal allows physicians to access results in 2 counties, with data from 5 hospitals and 2 reference laboratories. All results generated by these hospitals and laboratories flow through the portal, including admission, discharge and transfer information; inpatient and ambulatory laboratory results; radiology and pathology results; and all inpatient transcriptions (including history and physical, consult, operative, and discharge summary reports).

The portal overall receives 10,000 results per week, contains clinical information for more than 700,000 patients, and is accessed by more than 1600 users (including 500 physicians) in 175 practices. On average, approximately 90% of a typical primary care physician's panel is represented.

3.4 Data

We received data from MedAllies on usage of the portal for 3 time periods: January – June 2005 (Time 1), July – December 2005 (Time 2), and January – June 2006 (Time 3). At that time, the portal was the most common health information technology intervention in the community, much more common, for example, than electronic health records, thus enabling measurement of the portal's effect in isolation from other interventions.

We received health care quality data from MVP Health Care, which MVP had collected from administrative claims and patient surveys and had included in their Physician Quality Reports. This included performance on 13 metrics from the Health Plan Employer Data and Information Set (HEDIS) and 2 patient satisfaction metrics: rates of

1. mammography;
2. Pap smears;
3. colorectal cancer screening;
4. appropriate asthma medication use;
5. antibiotic use for acute upper respiratory infections;
6–9. documentation of body mass index, nephropathy screening, and lipid and glycemic control for patients with diabetes;
10–13. documentation of body mass index and counseling for drug and alcohol use, sexual activity, and tobacco use for adolescents;
14. satisfaction with quality of care; and
15. satisfaction with communication from physicians' offices.

Of those measures, we expected 8 to be affected by the use of the portal:

1. mammography;
2. Pap smears;
3. colorectal cancer screening;
7–9. nephropathy screening, lipid control and glycemic control for patients with diabetes;
14. satisfaction with quality of care; and
15. satisfaction with communication from physicians' offices.

These 8 measures were the ones that could be affected by the availability of external clinical data (e.g. laboratory tests) or were patient satisfaction measures, which could be improved by better provider knowledge of external clinical data.

We received physician-level data for all 15 quality metrics for 2 time periods. We used the first time period (July 2004 – June 2005) as the baseline for the study and the second time period (July 2005 – June 2006) as the follow-up.

From MVP, we also obtained data on adoption of electronic health records during the baseline and follow-up time periods, as well as data on 9 other physician characteristics: gender, age, specialty, board certification, degree (MD vs. DO), physician group size, patient panel size, case mix and resource consumption. Case mix was calculated by MVP using the Diagnostic Cost Group (DxCG) All Encounter Explanation model [27, 28]. Resource consumption was also calculated by MVP, using its own algorithm, and reflected the total amount of health care services a physician's panel utilized. Case mix and resource consumption were each standardized, with values of 1.0 representing average, <1.0 lower than average, and >1.0 higher than average.

3.5 Statistical Analysis

Portal usage was measured by MedAllies as the average number of days per month a physician logged in during each time period. Usage exceeding 15 days per month was truncated by MedAllies and treated as 15 days per month. We present the number of physicians who had any use during each time period, and we present descriptive statistics on intensity of use. We chose to dichotomize usage in each time period as any use or no use. We considered treating usage as a continuous variable but did not have enough statistical power to do so.

Using Pearson correlation coefficients, we found that usage in one time period was associated with usage in another time period. We selected usage in Time 3 to be the main predictor, because this period had the most users and thus the most statistical power.
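The usage variable can be sketched as follows. This is a minimal illustration under stated assumptions: the input format (average login days per month for each period), the function names, and the five physicians' values are hypothetical, and the study does not say whether the Pearson correlations were computed on capped day counts or on the dichotomized indicator; the sketch uses capped day counts.

```python
import math
from typing import List

CAP_DAYS = 15  # MedAllies treated usage above 15 days per month as 15


def truncate_usage(days_per_month: float) -> float:
    """Cap the average number of login days per month at 15."""
    return min(days_per_month, CAP_DAYS)


def any_use(days_per_month: float) -> int:
    """Dichotomize usage for one period: 1 for any logins, 0 for none."""
    return 1 if days_per_month > 0 else 0


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical average login days per month for five physicians (not study data).
time2 = [truncate_usage(d) for d in [0.0, 2.5, 9.0, 20.0, 0.0]]
time3 = [truncate_usage(d) for d in [0.0, 4.0, 11.0, 18.0, 1.0]]

predictor = [any_use(d) for d in time3]  # main predictor: any use in Time 3
r = pearson(time2, time3)                # period-to-period consistency of usage
print(predictor)  # [0, 1, 1, 1, 1]
print(round(r, 2))
```

Dichotomizing discards intensity (a physician logging in once a month counts the same as one logging in daily), which is the trade-off the authors accepted for statistical power.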

We used descriptive statistics to characterize our sample overall and stratified by usage in Time 3. We compared users to non-users, using t-tests for continuous variables and chi-squared or Fisher's exact tests for dichotomous variables.

We compared each physician's performance on each metric to a regional benchmark: the mean performance of MVP's HMO. We assigned each physician a value of 1 for a metric if he or she performed at or above the regional benchmark (at or below it for antibiotic use, where a lower rate is better) and 0 otherwise. We then generated a quality index for each physician, equal to the number of metrics on which he or she met the regional benchmark divided by the total number of metrics for which that physician was eligible, expressed as a percentage. The quality index could therefore range from 0 to 100, with higher scores indicating higher quality. We calculated the average quality index for each study group (users and non-users) in each time period (baseline and follow-up). We compared the average quality index across study groups and across time periods using t-tests.
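A minimal sketch of the quality index follows. The regional benchmarks are taken from Table 2, but the metric keys, the function names, and the physician's rates are hypothetical; `None` is used here (an assumption about representation) to mark a metric for which the physician was not eligible, such as the adolescent measures, which applied to only 47 physicians.

```python
from typing import Dict, FrozenSet, Optional


def meets_benchmark(score: float, benchmark: float, lower_is_better: bool = False) -> int:
    """1 if the physician's rate meets or beats the regional benchmark, else 0."""
    if lower_is_better:  # e.g. antibiotic use, where a lower rate is better
        return int(score <= benchmark)
    return int(score >= benchmark)


def quality_index(scores: Dict[str, Optional[float]],
                  benchmarks: Dict[str, float],
                  lower_is_better: FrozenSet[str] = frozenset()) -> float:
    """Percentage (0-100) of eligible metrics on which the benchmark was met.

    A score of None marks a metric for which the physician was not eligible;
    such metrics are left out of the denominator.
    """
    flags = [meets_benchmark(score, benchmarks[metric], metric in lower_is_better)
             for metric, score in scores.items() if score is not None]
    if not flags:
        raise ValueError("physician is not eligible for any metric")
    return 100.0 * sum(flags) / len(flags)


# Regional benchmarks from Table 2; the physician's rates are hypothetical.
benchmarks = {"mammography": 76.4, "colorectal": 52.7,
              "antibiotic_use": 47.2, "adolescent_bmi": 10.3}
physician = {"mammography": 80.0,      # meets (80.0 >= 76.4)
             "colorectal": 50.0,       # does not meet (50.0 < 52.7)
             "antibiotic_use": 40.0,   # meets, lower is better (40.0 <= 47.2)
             "adolescent_bmi": None}   # not eligible, e.g. no adolescent patients
print(quality_index(physician, benchmarks, frozenset({"antibiotic_use"})))
```

Here the physician meets 2 of 3 eligible benchmarks, so the index is about 66.7; excluding ineligible metrics from the denominator keeps physicians with few adolescent patients from being penalized.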

To adjust for potential confounders, we used generalized estimating equations. This method accounts for the clustering that arises from having multiple quality metrics per physician and repeated measurements over time.

We constructed regression models in which quality at follow-up was the dependent variable. The independent variable was usage of the portal in Time 3, as defined above. We considered the 11 physician characteristics, including adoption of electronic health records and quality at baseline, as potential confounders. We entered those variables with bivariate p-values ≤0.20 into a multivariate model. We used backward stepwise elimination to generate the most parsimonious model, considering p-values ≤0.05 to be significant. We considered the 15 measures overall and stratified by whether or not the measures were expected to be affected by portal use.
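The backward elimination step can be outlined as a generic loop. In this sketch the model-fitting routine is abstracted as a hypothetical callable `fit_pvalues`, which in the study would be a GEE logistic model; the toy p-values are invented purely to exercise the loop. We also assume, as an illustration, that the portal-use indicator is kept in the model regardless of its p-value because it is the main predictor; the Methods do not state this explicitly.

```python
from typing import Callable, Dict, FrozenSet, List

# A callable that refits the model on the given covariates and returns their p-values.
FitFn = Callable[[List[str]], Dict[str, float]]


def backward_eliminate(covariates: List[str], fit_pvalues: FitFn,
                       alpha: float = 0.05,
                       keep: FrozenSet[str] = frozenset()) -> List[str]:
    """Drop the least significant covariate, refit, and repeat until every
    remaining covariate (other than those in `keep`) has p <= alpha."""
    current = list(covariates)
    while True:
        pvalues = fit_pvalues(current)
        droppable = {v: p for v, p in pvalues.items() if v not in keep}
        if not droppable:
            return current
        worst = max(droppable, key=lambda v: droppable[v])
        if droppable[worst] <= alpha:
            return current
        current.remove(worst)


# Toy stand-in for a GEE fit: fixed, made-up p-values that ignore refitting.
TOY_P = {"portal_use": 0.04, "cov_a": 0.01, "cov_b": 0.30, "cov_c": 0.12}


def toy_fit(variables: List[str]) -> Dict[str, float]:
    return {v: TOY_P[v] for v in variables}


print(backward_eliminate(list(TOY_P), toy_fit, keep=frozenset({"portal_use"})))
# ['portal_use', 'cov_a']
```

Refitting after each removal matters because covariate p-values shift as correlated variables leave the model; the toy function ignores this for brevity.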

All analyses were performed using SAS version 9.1 (SAS Institute, Inc., Cary, NC).

3.6 Role of the Funding Source

The funding sources had no role in the study's design, conduct or reporting.

4. Results

4.1 Physician Characteristics

Of the 168 primary care physicians in our original, cross-sectional study [22], 138 (82%) also had data for health care quality at follow-up and were included in this study. Of the 138 physicians in this study, most were male, with an average age of 48 years (►Table 1). Half were general internists, and half were in family practice. Most were board-certified with MD degrees. The average practice size was 4 physicians. Fewer than 1 in 5 had adopted electronic health records.

Table 1.

Baseline characteristics of primary care providers, stratified by use of an electronic portal for viewing clinical data (N = 138).

| Primary Care Provider Characteristics | Overall (N = 138) | Used Electronic Portal, Jan – Jun 2006: Yes (N = 59) | Used Electronic Portal, Jan – Jun 2006: No (N = 79) | p |
|---|---|---|---|---|
| Demographic Characteristics | | | | |
| Male gender, N (%) | 105 (76) | 45 (76) | 60 (76) | 0.97 |
| Age at baseline, mean (SD) | 47.7 (7.9) | 46.3 (7.4) | 48.8 (8.1) | 0.07 |
| Internal Medicine (vs. Family Practice), N (%) | 71 (51) | 33 (56) | 38 (48) | 0.36 |
| Board Certified, N (%) | 129 (93) | 55 (93) | 74 (94) | 0.92 |
| MD degree (vs. DO), N (%) | 122 (88) | 51 (86) | 71 (90) | 0.53 |
| Number of primary care providers in practice, mean (SD) | 4 (4.8) | 3.6 (3.4) | 4.3 (5.6) | 0.39 |
| MVP patient panel size, mean (SD) | 430 (288) | 536 (334) | 352 (218) | <0.001 |
| Case mix index*, mean (SD) | 1.06 (0.22) | 1.08 (0.17) | 1.05 (0.25) | 0.31 |
| Resource consumption index†, mean (SD) | 0.96 (0.12) | 0.96 (0.11) | 0.96 (0.13) | 0.83 |
| Adoption and Usage Characteristics | | | | |
| Used electronic portal, Jan – Jun 2005, N (%) | 46 (33) | 41 (69) | 5 (6) | <0.001 |
| Used electronic portal, Jul – Dec 2005, N (%) | 58 (42) | 56 (95) | 2 (3) | <0.001 |
| Adopted electronic health records, Jan – Jun 2005, N (%) | 9 (7) | 6 (10) | 3 (4) | 0.17 |
| Adopted electronic health records, Jul – Dec 2005, N (%) | 16 (12) | 11 (19) | 5 (6) | 0.03 |

* Case mix was calculated by the participating health plan using the Diagnostic Cost Group (DxCG) All Encounter Explanation Model [27, 28]. Case mix was standardized, such that a value of 1.0 represented average case mix, <1.0 represented healthier than average, and >1.0 sicker than average.

† Resource consumption was calculated by the participating health plan using its own algorithm and reflected the total amount of health care services a physician's panel utilized, with standardized values of 1.0 representing average, <1.0 lower than average, and >1.0 higher than average resource consumption.

The number of physicians using the electronic portal increased over time. Of the 138 physicians, 46 (33%) used the portal in the first time period, 58 (42%) used it in the second time period, and 59 (43%) used it in the third time period. Forty (29%) physicians used it in all 3 time periods.

4.2 Electronic Portal Usage

We found that usage in one time period was strongly associated with usage in other time periods (p<0.001). The correlation between usage in Time 1 and usage in Time 2 was 0.81, and the correlation between usage in Time 2 and usage in Time 3 was 0.78. Among users in Time 3, the average physician logged in 8 days per month (SD 6 days, median 7 days).

Users were similar to non-users in terms of gender, age, specialty, board certification, degree, practice size, case mix, and resource consumption (►Table 1). Users were more likely than non-users to have adopted electronic health records by July – December 2005 (19% vs. 6%, p = 0.03) and had larger MVP patient panels (mean 536 vs. 352 patients, p<0.001).
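The chi-squared comparisons in Table 1 can be checked against the published counts. The sketch below assumes Pearson's chi-squared test without continuity correction (the study does not state which variant was used, and Fisher's exact test would apply where expected counts are small). With the Table 1 counts it gives p ≈ 0.025 for electronic health record adoption in July – December 2005, which rounds to the reported 0.03, and p ≈ 0.97 for male gender.

```python
import math
from typing import Tuple


def chi_squared_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-squared test without continuity correction for the 2x2 table
    [[a, b], [c, d]]; returns (statistic, p-value) with 1 degree of freedom."""
    n = a + b + c + d
    statistic = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 df, P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return statistic, math.erfc(math.sqrt(statistic / 2))


# Rows: users (N = 59), non-users (N = 79); columns: characteristic present, absent.
ehr_stat, ehr_p = chi_squared_2x2(11, 59 - 11, 5, 79 - 5)      # EHR adoption, Jul - Dec 2005
male_stat, male_p = chi_squared_2x2(45, 59 - 45, 60, 79 - 60)  # male gender
print(f"EHR: chi2 = {ehr_stat:.2f}, p = {ehr_p:.3f}")  # EHR: chi2 = 5.00, p = 0.025
print(f"Male: p = {male_p:.2f}")
```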

4.3 Quality of Care

In terms of quality, physicians performed well, with ≥50% of the physicians overall meeting or exceeding the regional benchmark for 9 of the 15 measures (►Table 2).

Table 2.

Average ambulatory care quality for each of 15 measures at follow-up and the percentage of primary care physicians meeting or exceeding the regional benchmark, stratified by use of an electronic portal for viewing clinical data.

| Quality measure | N | Regional benchmark | PCPs meeting or exceeding benchmark, % (Overall) | Used Electronic Portal, Jan – Jun 2006: Yes (N = 59), % | Used Electronic Portal, Jan – Jun 2006: No (N = 79), % | p |
|---|---|---|---|---|---|---|
| Mammography, % female members 52–69 years old who had a mammogram in the reporting year or year prior | 137 | 76.4 | 44.5 | 57.6 | 34.6 | 0.01 |
| Pap smear, % of female members 21–64 years old who had a Pap smear in the reporting year or two years prior | 138 | 81.0 | 46.4 | 54.2 | 40.5 | 0.11 |
| Colorectal cancer screening, % of members 50–80 years old who had fecal occult blood testing in the reporting year, flexible sigmoidoscopy in the last 5 years, or colonoscopy in the last 10 years | 138 | 52.7 | 67.4 | 76.3 | 60.8 | 0.05 |
| Asthma medication management, % of members with asthma who filled a prescription for more than one short-term beta agonist in a specified 3-month period and were also on a long-term controller medication | 102 | 88.8 | 57.8 | 56.4 | 59.6 | 0.74 |
| Antibiotic use, % of members who were treated with an antibiotic for acute bronchitis, acute sinusitis or acute upper respiratory infection / pharyngitis* | 67 | 47.2 | 38.8 | 43.8 | 34.3 | 0.43 |
| For patients with diabetes only | | | | | | |
| Body mass index documented, % | 125 | 15.4 | 41.6 | 56.1 | 29.4 | 0.003 |
| Nephropathy screening, % | 125 | 61.6 | 40.8 | 45.6 | 36.8 | 0.32 |
| Low density lipoprotein cholesterol < 100 mg/dl in the reporting year or year prior, % | 125 | 45.1 | 75.2 | 86.0 | 66.2 | 0.01 |
| HbA1C < 7% in the reporting year, % | 125 | 48.9 | 77.6 | 79.0 | 76.5 | 0.74 |
| For adolescents (ages 14–18 years) only, in the two previous years | | | | | | |
| Body mass index documented, % | 47 | 10.3 | 66.0 | 69.6 | 62.5 | 0.61 |
| Screening or counseling documented for drug and alcohol use, % | 47 | 75.5 | 74.5 | 73.9 | 75.0 | 0.93 |
| Screening or counseling documented for pregnancy or sexually transmitted diseases, % | 47 | 68.0 | 61.7 | 69.6 | 54.2 | 0.28 |
| Screening or counseling documented for tobacco use, % | 47 | 75.9 | 83.0 | 87.0 | 79.2 | 0.70 |
| Member satisfaction score with communication with PCP's office, % (maximum possible score 100%) | 136 | 85.3 | 58.1 | 65.5 | 52.6 | 0.13 |
| Member satisfaction score with quality of care, % (maximum possible score 100%) | 136 | 86.9 | 48.5 | 56.9 | 42.3 | 0.09 |

* In the case of Antibiotic Use, the last three columns represent the percentage of primary care physicians who are at or below the regional benchmark, because a lower score is better.

4.4 Electronic Portal Usage and Quality

When we considered the results by measure, there were trends (p<0.20) for better performance by users compared to non-users for 7 of the 15 measures (►Table 2).

When we aggregated across measures, non-users performed at or above the regional benchmark for 48% of the quality metrics at baseline and 49% at follow-up (p = 0.58, ►Figure 1). Users performed at or above the regional benchmark for 57% of the quality metrics at baseline and 64% at follow-up (p<0.001), which is equivalent to an absolute improvement of 7 percentage points and a relative improvement of 12%. This is consistent with the bivariate association we observed between usage of the portal and higher quality of care at follow-up (►Table 3).
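The absolute and relative changes for users follow directly from the two composite percentages, with the relative change taken against the users' baseline of 57%:

$$
\begin{aligned}
\Delta_{\text{abs}} &= 64\% - 57\% = 7 \text{ percentage points} \\
\Delta_{\text{rel}} &= \frac{64 - 57}{57} \approx 0.123 \approx 12\%
\end{aligned}
$$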

Fig. 1.

Average ambulatory quality of care for a composite of 15 measures, stratified by time and use of the portal. Comparisons were made with t-tests: at follow-up (black bars), non-users vs. users (49% vs. 64%, p<0.0001); within users (grey bar vs. black bar), baseline vs. follow-up (57% vs. 64%, p<0.001).

Table 3.

Bivariate associations between physician characteristics and higher quality of care at follow-up. The dependent variable reflects 15 quality measures at follow-up, including measures related to preventive care, chronic disease management and patient satisfaction. Models adjust for clustering of quality indicators within physician by using generalized estimating equations. Panel size was included as a log-transformed variable due to skewness.

| Physician Characteristic | Odds Ratio (95% Confidence Interval) |
|---|---|
| Used electronic portal, Jan – Jun 2006 (yes vs. no) | 1.71 (1.31, 2.23) |
| Age (per 10 year increase) | 0.86 (0.74, 1.01) |
| Gender (male vs. female) | 0.73 (0.53, 1.01) |
| Specialty Internal Medicine (vs. Family Practice) | 1.01 (0.77, 1.32) |
| Board certified (yes vs. no) | 1.69 (0.96, 2.98) |
| Degree (MD vs. DO) | 1.05 (0.67, 1.65) |
| Number of physicians in group (per physician) | 0.97 (0.93, 1.01) |
| Patient panel size (per 10-fold increase) | 1.39 (1.08, 1.78) |
| Case mix index (per unit increase) | 1.96 (0.89, 4.33) |
| Resource consumption index (per unit increase) | 1.33 (0.41, 4.31) |
| Adopted electronic health record (yes vs. no) at baseline | 1.07 (0.64, 1.79) |
| Adopted electronic health record (yes vs. no) at follow-up | 1.16 (0.76, 1.79) |
| Quality at baseline | 15.30 (11.69, 20.01) |
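The bivariate screening threshold described in the Methods (p ≤ 0.20) can be illustrated from Table 3. Table 3 reports odds ratios and confidence intervals but not p-values, so this sketch approximates each Wald p-value from the interval, assuming a symmetric 95% CI on the log-odds scale. Because the published intervals are rounded to two decimals, the recovered p-values are approximate, and this is an illustration rather than a reproduction of the authors' GEE analysis; the dictionary keys are shorthand labels, not variable names from the study.

```python
import math
from typing import Dict, List, Tuple

# Bivariate odds ratios and 95% CIs from Table 3: (OR, lower, upper).
TABLE_3: Dict[str, Tuple[float, float, float]] = {
    "portal use": (1.71, 1.31, 2.23),
    "age (per 10 years)": (0.86, 0.74, 1.01),
    "male gender": (0.73, 0.53, 1.01),
    "internal medicine": (1.01, 0.77, 1.32),
    "board certified": (1.69, 0.96, 2.98),
    "MD degree": (1.05, 0.67, 1.65),
    "group size": (0.97, 0.93, 1.01),
    "panel size (log)": (1.39, 1.08, 1.78),
    "case mix": (1.96, 0.89, 4.33),
    "resource consumption": (1.33, 0.41, 4.31),
    "EHR at baseline": (1.07, 0.64, 1.79),
    "EHR at follow-up": (1.16, 0.76, 1.79),
    "baseline quality": (15.30, 11.69, 20.01),
}


def p_from_or_ci(odds_ratio: float, lower: float, upper: float) -> float:
    """Approximate two-sided Wald p-value recovered from an OR and its 95% CI."""
    se = (math.log(upper) - math.log(lower)) / (2 * 1.96)  # SE of log(OR)
    z = math.log(odds_ratio) / se
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def screen(table: Dict[str, Tuple[float, float, float]], threshold: float = 0.20) -> List[str]:
    """Keep covariates whose approximate bivariate p-value is <= threshold."""
    return [name for name, (ratio, lo, hi) in table.items()
            if p_from_or_ci(ratio, lo, hi) <= threshold]


for name in screen(TABLE_3):
    print(name)
```

Under this approximation, eight covariates pass the screen, and all four variables in the final model (Table 4) are among them. The same function applied to the final-model estimate for portal use (OR 1.42; 95% CI 1.04, 1.95) gives p ≈ 0.029, consistent with the reported p = 0.03.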

In the final multivariate model, usage of the electronic portal persisted as an independent predictor of quality of care at follow-up [Odds Ratio (OR) 1.42; 95% confidence interval (CI) 1.04, 1.95; p = 0.03; ►Table 4]. This model included adjustment for quality of care at baseline, which continued to be a strong predictor of quality at follow-up.

Table 4.

Final multivariate model with physician characteristics independently associated with higher quality of care at follow-up. The dependent variable reflects 15 quality measures, including measures related to preventive care, chronic disease management and patient satisfaction. The model below is derived from generalized estimating equations and adjusts for all variables listed (selected through stepwise regression with backward elimination) plus clustering of quality indicators within physician. Panel size was included as a log-transformed variable due to skewness.

| Physician Characteristic | Odds Ratio (95% Confidence Interval) |
|---|---|
| Used electronic portal (yes vs. no) Jan – Jun 2006 | 1.42 (1.04, 1.95) |
| Number of physicians in group (per physician) | 0.95 (0.92, 0.99) |
| Patient panel size (per 10-fold increase) | 1.42 (1.06, 1.90) |
| Quality at baseline | 15.98 (12.10, 21.12) |

This association between usage of the portal and higher quality of care was significant for the subset of measures expected to be affected by the portal (adjusted OR 1.56; 95% CI 1.09, 2.23; p = 0.01) but not for those not expected to be affected (adjusted OR 1.49; 95% CI 0.90, 2.46; p = 0.12).

5. Discussion

We found that usage of an electronic portal for viewing clinical data was independently associated with modest improvements in quality of care, with an absolute improvement of 7 percentage points and a relative improvement of 12%. This association persisted after adjusting for physician characteristics, including baseline quality of care. It was significant for the subset of measures that could be affected by access to external clinical data, but not for the other measures. The magnitude of the association we observed was modest and slightly less than the typical 12–20% improvement in quality seen for inpatient electronic health records (EHRs) and for some previous ambulatory EHR initiatives [9].

This study is novel for its measurement of the effectiveness of a community-based portal on ambulatory quality, particularly defined as rates of recommended testing. Previous studies have focused on the related topics of physicians' perceptions [29, 30], patients' perceptions [16, 31–33], public health [17, 18], response time [13], redundant testing [12, 14, 15], emergency department utilization [19–21], and projected cost savings for the health care system [34].

This study is also notable for having taken place in small group practices, where the large majority of care is delivered and where adoption of health information technology (HIT) has lagged [35]. The setting was not an integrated delivery system [36–38] or a hospital-based practice, but rather a community with multiple payers and physician-owned practices; this fragmented setting is more typical of the majority of American health care. The study is also strengthened by its use of actual usage data, unlike other studies that used potential access to data as the predictor [39].

This study has several limitations. It took place in a single community, which may limit generalizability. This community is active in practice-based quality improvement, and many of the physicians who participated in this study were in practices that subsequently went on to achieve recognition by the National Committee for Quality Assurance as Patient-Centered Medical Homes. The data are several years old, and newer iterations of the portal and other forms of health information exchange may yield different results. The study was not a randomized trial, so the findings may be confounded by unmeasured physician characteristics; however, this study adjusted for baseline physician quality, which makes a spurious correlation less likely. We did not have data on which physicians were in which practices, so we were not able to adjust for clustering at that level. We also could not account for potential bias introduced by the fact that 18% of providers did not have data at follow-up. Future studies could examine health information exchange in a more granular manner, attempting to link access to data with specific medical decisions. Larger studies could also consider whether a “dose-response” relationship exists between usage and quality.

The federal government is investing heavily in health information exchange through the Statewide Health Information Exchange Cooperative Agreement Program, the Beacon Community program, and the Direct program [40, 41]. These investments all build on the government's incentives for meaningful use of electronic health records. How these efforts will interact to affect care is still unfolding.

6. Conclusion

We found modest but significant improvements in ambulatory quality with the use of an electronic portal for viewing clinical data. This study took place in a community with small group practices and multiple commercial payers, typical of many communities across the country. The findings from this study can inform national discussions, as the country embarks on an unprecedented effort to encourage the adoption and meaningful use of HIT.

7. Clinical Relevance Statement

We studied a web-based portal that allowed providers to view their patients' clinical data, regardless of whether the underlying tests were ordered by themselves or by other providers. We found that users of this portal were more likely to provide higher quality of care than non-users, even after adjusting for provider characteristics.

Conflicts of Interest

The authors have no conflicts of interest to disclose.

Protection of Human and Animal Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research involving Human Subjects, and was reviewed by the Institutional Review Boards of Weill Cornell Medical College in New York City and Kingston Hospital in Kingston, New York.

Acknowledgements

The authors thank A. John Blair III, MD, President of the Taconic IPA and CEO of MedAllies; Jerry Salkowe, MD, Vice President of Clinical Quality Improvement at MVP Health Care; and Deborah Chambers, Director of Quality Improvement at MVP Health Care, for providing access to data. The authors also thank Alison Edwards, MStat, for her assistance with data analysis. This work was supported by funding from the Agency for Healthcare Research and Quality (grant #1 UC1 HS01636), the Taconic Independent Practice Association, the New York State Department of Health, and the Commonwealth Fund (grant #20060550). The funding sources had no role in the study's design, conduct or reporting. The authors had full access to all the data in the study and take full responsibility for the integrity of the data and the accuracy of the data analysis. Previous versions of this work were presented at the American Medical Informatics Association Annual Symposium in San Francisco, CA on November 17, 2009 and the National Meeting of the Society of General Internal Medicine in Phoenix, Arizona on May 5, 2011.

References

- 1.American Recovery and Reinvestment Act of 2009, Pub L, No. 111–5, 123 Stat 115 (2009). [Google Scholar]

- 2.Steinbrook R. Health care and the American Recovery and Reinvestment Act. N Engl J Med 2009; 360: 1057–1060 [DOI] [PubMed] [Google Scholar]

- 3.Kern LM, Dhopeshwarkar R, Barron Y, Wilcox A, Pincus H, Kaushal R. Measuring the effects of health information technology on quality of care: a novel set of proposed metrics for electronic quality reporting. Jt Comm J Qual Patient Saf 2009; 35: 359–369 [DOI] [PubMed] [Google Scholar]

- 4.Smith PC, Araya-Guerra R, Bublitz C, Parnes B, Dickinson LM, Van Vorst R, et al. Missing clinical information during primary care visits. JAMA 2005; 293: 565–571 [DOI] [PubMed] [Google Scholar]

- 5.Blumenthal D, Glaser JP. Information technology comes to medicine. N Engl J Med 2007; 356: 2527–2534 [DOI] [PubMed] [Google Scholar]

- 6.Halamka JD. Health information technology: shall we wait for the evidence? Ann Intern Med 2006; 144: 775–776 [DOI] [PubMed] [Google Scholar]

- 7.Kaelber DC, Bates DW. Health information exchange and patient safety. J Biomed Inform 2007; 40 : S40 [DOI] [PubMed] [Google Scholar]

- 8.Institute of Medicine Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, D.C.: National Academy Press, 2001. [PubMed] [Google Scholar]

- 9.Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006; 144: 742–752 [DOI] [PubMed] [Google Scholar]

- 10.Fontaine P, Ross SE, Zink T, Schilling LM. Systematic review of health information exchange in primary care practices. J Am Board Fam Med 2010; 23: 655–670 [DOI] [PubMed] [Google Scholar]

- 11.Hincapie A, Warholak T. The impact of health information exchange on health outcomes. Applied Clinical Informatics 2011; 2: 499–507 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Bates DW, Kuperman GJ, Rittenberg E, Teich JM, Fiskio J, Ma'luf N, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med 1999; 106: 144–150 [DOI] [PubMed] [Google Scholar]

- 13.Kuperman GJ, Teich JM, Tanasijevic MJ, Ma'Luf N, Rittenberg E, Jha A, et al. Improving response to critical laboratory results with automation: results of a randomized controlled trial. J Am Med Inform Assoc 1999; 6: 512–522 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14. Stair TO. Reduction of redundant laboratory orders by access to computerized patient records. J Emerg Med 1998; 16: 895–897

- 15. Tierney WM, McDonald CJ, Martin DK, Rogers MP. Computerized display of past test results. Effect on outpatient testing. Ann Intern Med 1987; 107: 569–574

- 16. Matheny ME, Gandhi TK, Orav EJ, Ladak-Merchant Z, Bates DW, Kuperman GJ, et al. Impact of an automated test results management system on patients' satisfaction about test result communication. Arch Intern Med 2007; 167: 2233–2239

- 17. Nguyen TQ, Thorpe L, Makki HA, Mostashari F. Benefits and barriers to electronic laboratory results reporting for notifiable diseases: the New York City Department of Health and Mental Hygiene experience. Am J Public Health 2007; 97(Suppl 1): S142–S145

- 18. Overhage JM, Grannis S, McDonald CJ. A comparison of the completeness and timeliness of automated electronic laboratory reporting and spontaneous reporting of notifiable conditions. Am J Public Health 2008; 98: 344–350

- 19. Frisse ME, Johnson KB, Nian H, Davison CL, Gadd CS, Unertl KM, et al. The financial impact of health information exchange on emergency department care. J Am Med Inform Assoc 2011 (epub ahead of print)

- 20. Hansagi H, Olsson M, Hussain A, Ohlen G. Is information sharing between the emergency department and primary care useful to the care of frequent emergency department users? Eur J Emerg Med 2008; 15: 34–39

- 21. Vest JR. Health information exchange and healthcare utilization. J Med Syst 2009; 33: 223–231

- 22. Kern LM, Barron Y, Blair AJ, 3rd, Salkowe J, Chambers D, Callahan MA, et al. Electronic result viewing and quality of care in small group practices. J Gen Intern Med 2008; 23: 405–410

- 23. Taconic IPA. (Accessed April 11, 2012.) Available from: www.taconicipa.com.

- 24. MVP Health Care. (Accessed April 11, 2012.) Available from: www.mvphealthcare.com.

- 25. Stuard SS, Blair AJ. Interval examination: regional transformation of care delivery in the Hudson Valley. J Gen Intern Med 2011; 26: 1371–1373

- 26. MedAllies. (Accessed April 11, 2012.) Available from: www.medallies.com.

- 27. Verisk Health Sightlines DxCG Risk Solutions. (Accessed April 11, 2012.) Available from: www.veriskhealth.com.

- 28. Ash AS, Ellis RP, Pope GC, Ayanian JZ, Bates DW, Burstin H, et al. Using diagnoses to describe populations and predict costs. Health Care Financ Rev 2000; 21: 7–28

- 29. Hincapie AL, Warholak TL, Murcko AC, Slack M, Malone DC. Physicians' opinions of a health information exchange. J Am Med Inform Assoc 2011; 18: 60–65

- 30. Patel V, Abramson EL, Edwards A, Malhotra S, Kaushal R. Physicians' potential use and preferences related to health information exchange. Int J Med Inform 2011; 80: 171–180

- 31. O'Donnell HC, Patel V, Kern LM, Barron Y, Teixeira P, Dhopeshwarkar R, et al. Healthcare consumers' attitudes towards physician and personal use of health information exchange. J Gen Intern Med 2011; 26: 1019–1026

- 32. Patel VN, Dhopeshwarkar RV, Edwards A, Barron Y, Likourezos A, Burd L, et al. Low-income, ethnically diverse consumers' perspective on health information exchange and personal health records. Inform Health Soc Care 2011; 36: 233–252

- 33. Patel VN, Dhopeshwarkar RV, Edwards A, Barron Y, Sparenborg J, Kaushal R. Consumer support for health information exchange and personal health records: a regional health information organization survey. J Med Syst 2010 (epub ahead of print)

- 34. Walker J, Pan E, Johnston D, Adler-Milstein J, Bates DW, Middleton B. The value of health care information exchange and interoperability. Health Aff (Millwood) 2005; Suppl Web Exclusives: W5–8

- 35. DesRoches CM, Campbell EG, Rao SR, Donelan K, Ferris TG, Jha A, et al. Electronic health records in ambulatory care – a national survey of physicians. N Engl J Med 2008; 359: 50–60

- 36. Byrne CM, Mercincavage LM, Pan EC, Vincent AG, Johnston DS, Middleton B. The value from investments in health information technology at the U.S. Department of Veterans Affairs. Health Aff (Millwood) 2010; 29: 629–638

- 37. Chen C, Garrido T, Chock D, Okawa G, Liang L. The Kaiser Permanente Electronic Health Record: transforming and streamlining modalities of care. Health Aff (Millwood) 2009; 28: 323–333

- 38. Paulus RA, Davis K, Steele GD. Continuous innovation in health care: implications of the Geisinger experience. Health Aff (Millwood) 2008; 27: 1235–1245

- 39. McCormick D, Bor DH, Woolhandler S, Himmelstein DU. Giving office-based physicians electronic access to patients' prior imaging and lab results did not deter ordering of tests. Health Aff (Millwood) 2012; 31: 488–496

- 40. U.S. Department of Health and Human Services, Office of the National Coordinator for Health Information Technology. HITECH Programs. (Accessed April 11, 2012.) Available from: http://healthit.hhs.gov/portal/server.pt/community/healthit_hhs_gov_hitech_programs/1487

- 41. U.S. Department of Health and Human Services, Office of the National Coordinator for Health Information Technology. Nationwide Health Information Network: Overview. (Accessed April 11, 2012.) Available from: http://healthit.hhs.gov/portal/server.pt/community/healthit_hhs_gov_nationwide_health_information_network/1142