Abstract

Background

The Accreditation Council for Graduate Medical Education Outcome Project intended to move residency education toward assessing and documenting resident competence in 6 dimensions of performance important to the practice of medicine. Although the project defined a set of general attributes of a good physician, it did not define the actual activities that a competent physician performs in practice in the given specialty. These descriptions have been called entrustable professional activities (EPAs).

Objective

We sought to develop a list of EPAs for ambulatory practice in family medicine to guide curriculum development and resident assessment.

Methods

We developed an initial list of EPAs over the course of 3 years, and we refined it further by obtaining the opinion of experts using a Delphi Process. The experts participating in this study were recruited from 2 groups of family medicine leaders: organizers and participants in the Preparing the Personal Physician for Practice initiative, and members of the Society of Teachers of Family Medicine Task Force on Competency Assessment. The experts participated in 2 rounds of anonymous, Internet-based surveys.

Results

A total of 22 experts participated, and 21 experts participated in both rounds of the Delphi Process. The Delphi Process reduced the number of competency areas from 91 to 76, with 3 additional competency areas added in round 1.

Conclusions

This list of EPAs developed through our Delphi process can be used as a starting point for family medicine residency programs interested in moving toward a competency-based approach to resident education and assessment.

What was known

The Accreditation Council for Graduate Medical Education (ACGME) Outcome Project advanced competency-based education, but the resulting competencies were too generic to represent the dimensions of clinical work in a specialty.

What is new

Seventy-six entrustable professional activities (EPAs) for ambulatory family medicine practice selected by an expert panel can guide curriculum development and resident assessment for ambulatory practice in family medicine.

Limitations

Clinical experience at a single institution provided the basis for expert selection of the EPAs, and may limit generalizability.

Bottom line

These EPAs will assist programs in focusing resident education and assessment on the skills that will allow residents to be entrusted with clearly defined professional activities for ambulatory family medicine practice.

Introduction

Medical educators, although eager to embrace competency assessment, often are stopped by the question, “What am I supposed to measure?” Although highly useful, the 6 competencies of the Accreditation Council for Graduate Medical Education (ACGME) Outcome Project1 were not specific enough to provide adequate direction for residency curriculum development or to guide resident assessment in a particular clinical specialty.

To avoid sweeping assessments of the general qualities of learners, some educators have turned to documenting learners' individual skills and knowledge. Although these attributes are part of the overall process of care, assessing them individually does not “add up” to an ability to provide the appropriate care to patients. What physicians do in practice is far greater than the sum of any parts that can be documented as part of the measurement of competence.2–4

Either approach—focusing on the vague qualities of competence or a reductionist approach to assessment—risks measuring that which is measurable but not important.5 There is a need to identify the clinical situations in which trainees should, upon graduation, be trusted to perform competently. ten Cate and Scheele6 have called these specific, measurable areas of practice “entrustable professional activities” (EPAs), which are “professional activities that together constitute the mass of critical elements that operationally define a profession.”

EPAs are part of the essential professional work in a specialty (box).7 The value of EPAs is that they identify the professional activities of daily practice and can be used to drive curriculum development as well as assessment.8,9 For example, a competent family medicine physician is expected to provide care for a child with a respiratory illness. This includes eliciting a history, performing a physical examination, arriving at a diagnosis, and implementing a plan of care that is evidence based and takes into account the needs and values of the patient.

Box Conditions of Entrustable Professional Activitiesa

Are part of the essential professional work of the specialty and not general medical ability.

Must require adequate knowledge, skill, and attitude.

Must lead to recognized performance that is unique to a doctor.

Should be unique to physicians in that specialty.

Should be independently executable.

Should be executable within a time frame.

Should be observable and measurable in its process and outcome (well done or not well done)

Should reflect one or more of the Accreditation Council for Graduate Medical Education competency categories.

a Adapted from ten Cate and Scheele.6

Although each of these skills can be separately measured and documented in a variety of settings, the overall performance of them in situ constitutes the entrustable activity. As learners develop from beginners to competent clinicians, the corresponding level of required supervision decreases from complete oversight to “entrustment,” in which the learner receives certification that he or she could have provided the professional duty without oversight.10

Our residency program embarked on the development of competency-based assessment as part of a nationwide project to develop new models of resident education in family medicine.11 The goal was to develop a list of EPAs around which to structure our competency assessment.

Methods

The initial list of activities was developed by relying on model curricula12 and similar inventories developed by the Royal College of General Practitioners in the United Kingdom13 and the specialty document for family medicine in Denmark.14 To augment these resources, we searched textbook chapter headings and lists of the most common diagnostic codes recorded by our residents. An iterative process was used to develop this initial list, with the list reorganized after each of several discussions. This list was then circulated among faculty members at our institution for comment.

After initial development, we tested the list for completeness in our outpatient center. Following each patient encounter and subsequent discussion by residents, preceptors completed a computerized form documenting residents' degree of performance of one or more EPAs during that visit. We piloted both the use of the form and the suitability of this list for 18 months. Following continuous feedback from preceptors, we developed a final list of 91 activities. Following this pilot period, we sought to determine how often each of these activities occurred during patient visits conducted by our residents during a 14-month period.

We also tested the list using a Delphi process to obtain the opinions of experts in family medicine education. Experts were chosen from 2 groups of academic physicians at the forefront of competency-based teaching and assessment in family medicine. The first group consisted of directors and key faculty members of programs participating in the Preparing the Personal Physician for Practice (P4) Project, as well as members of its organizing committee. The P4 Project is a national demonstration project conducted in 14 residencies evaluating innovative curricula to better prepare graduates for new models of primary care practice.15

The second group encompassed members of the Residency Competency Measurement Task Force formed by the Society of Teachers of Family Medicine. This group developed materials to aid family medicine residencies in creating assessment processes for their residents.

From the 2 groups, 22 of 37 potential experts agreed to participate. The Delphi Process consisted of 2 rounds of anonymous responses to surveys sent electronically using Survey Monkey (http://www.surveymonkey.com). During the first round, experts were asked to rate the importance of each suggested EPA on a 7-point scale, ranging from “do not include” (1) to “must include” (7). Respondents were instructed to consider activities of a typical family physician in practice. Follow-up e-mails were sent weekly for 4 weeks to remind all participants to respond.

For the second round of voting, EPAs rated as 6 or 7 in round 1 were ordered by popularity (the percentage of “must include” responses during the first round), and each was labeled with the percentage of experts ranking it as “must include.” During the second round, experts were asked to mark each EPA as “must include” or “do not include.” Follow-up e-mails were again sent weekly until all responses were collected. Twenty-one experts (95.5%) responded to each round of the process.

The final list of EPAs consisted of those items ranked as “must include” by more than two-thirds of the experts. All data were processed using Microsoft Excel (Redmond, WA).
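The selection rule described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the spreadsheet procedure actually used in the study: the function name `select_final_epas`, the data layout, and the example votes are all hypothetical, and the only element taken from the text is the threshold of more than two-thirds of experts marking an EPA as “must include.”

```python
from fractions import Fraction

# Threshold from the text: more than two-thirds of experts must mark "must include".
THRESHOLD = Fraction(2, 3)


def select_final_epas(round2_votes):
    """Return EPAs whose share of "must include" votes exceeds two-thirds.

    round2_votes maps an EPA label to a list of booleans, one per expert
    (True = "must include", False = "do not include"). This layout is an
    assumption made for illustration.
    """
    final = []
    for epa, votes in round2_votes.items():
        if not votes:
            continue  # no responses recorded, so the EPA cannot be selected
        share = Fraction(sum(votes), len(votes))  # exact fraction avoids rounding issues
        if share > THRESHOLD:
            final.append(epa)
    return final


# Made-up votes from 21 hypothetical experts, for illustration only.
example_votes = {
    "hypothetical EPA A": [True] * 20 + [False] * 1,  # 20/21, above the threshold
    "hypothetical EPA B": [True] * 14 + [False] * 7,  # exactly 2/3, not above it
    "hypothetical EPA C": [True] * 15 + [False] * 6,  # 15/21, above the threshold
}

print(select_final_epas(example_votes))
```

Using exact fractions rather than floating-point percentages makes the boundary case explicit: with 21 respondents, an EPA needs at least 15 “must include” votes, because 14 of 21 is exactly two-thirds and does not exceed it.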

The project was approved by our Institutional Review Board.

Results

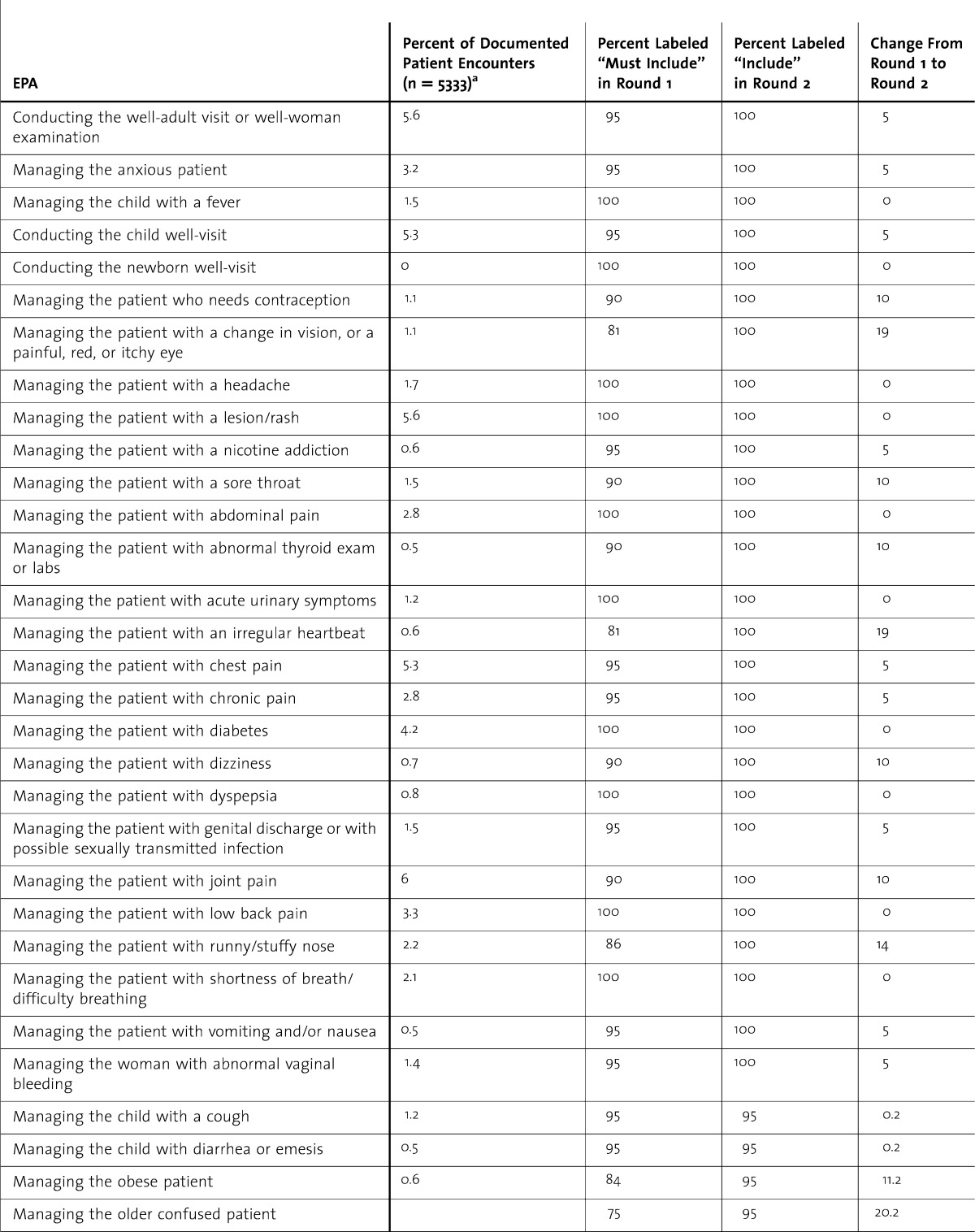

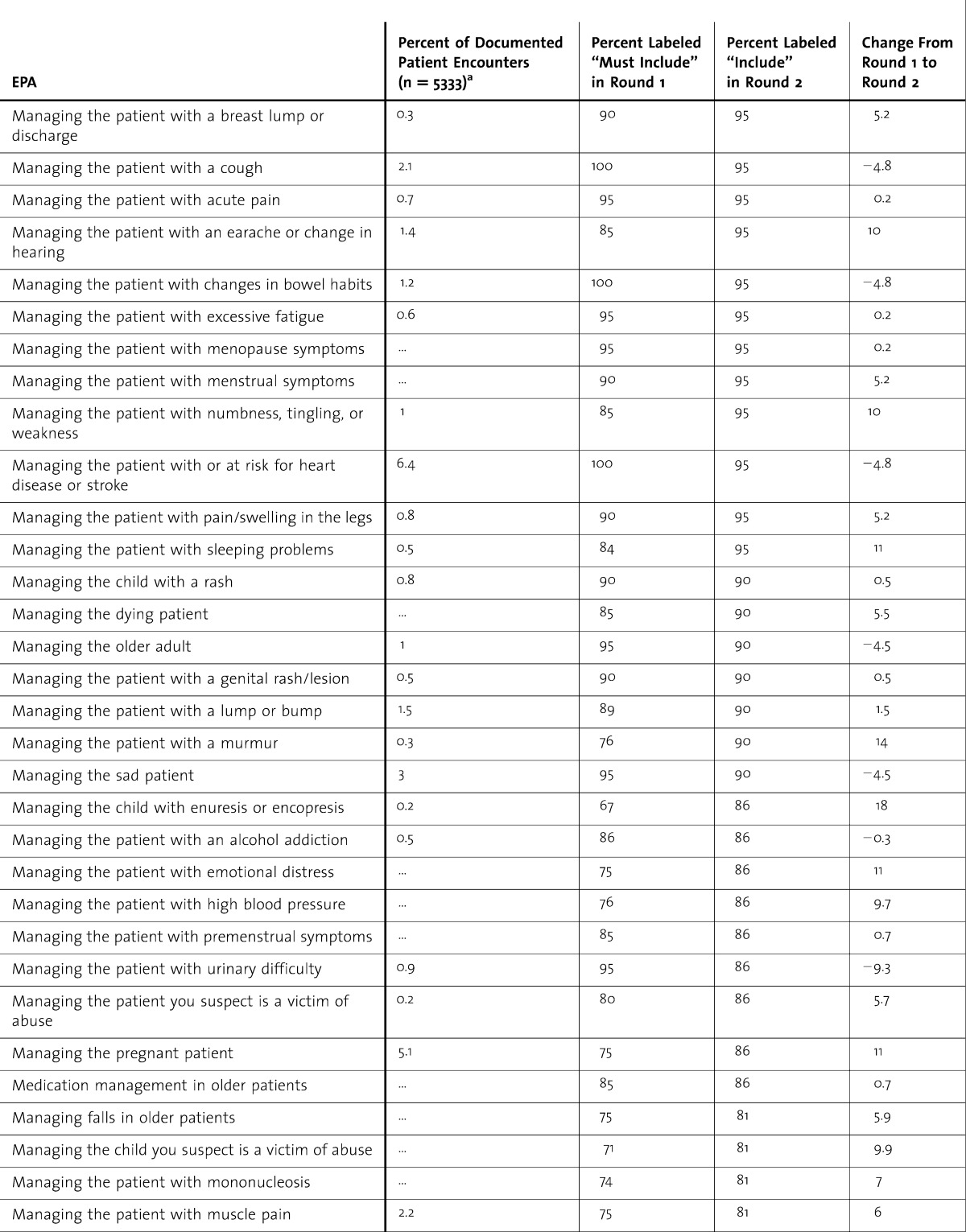

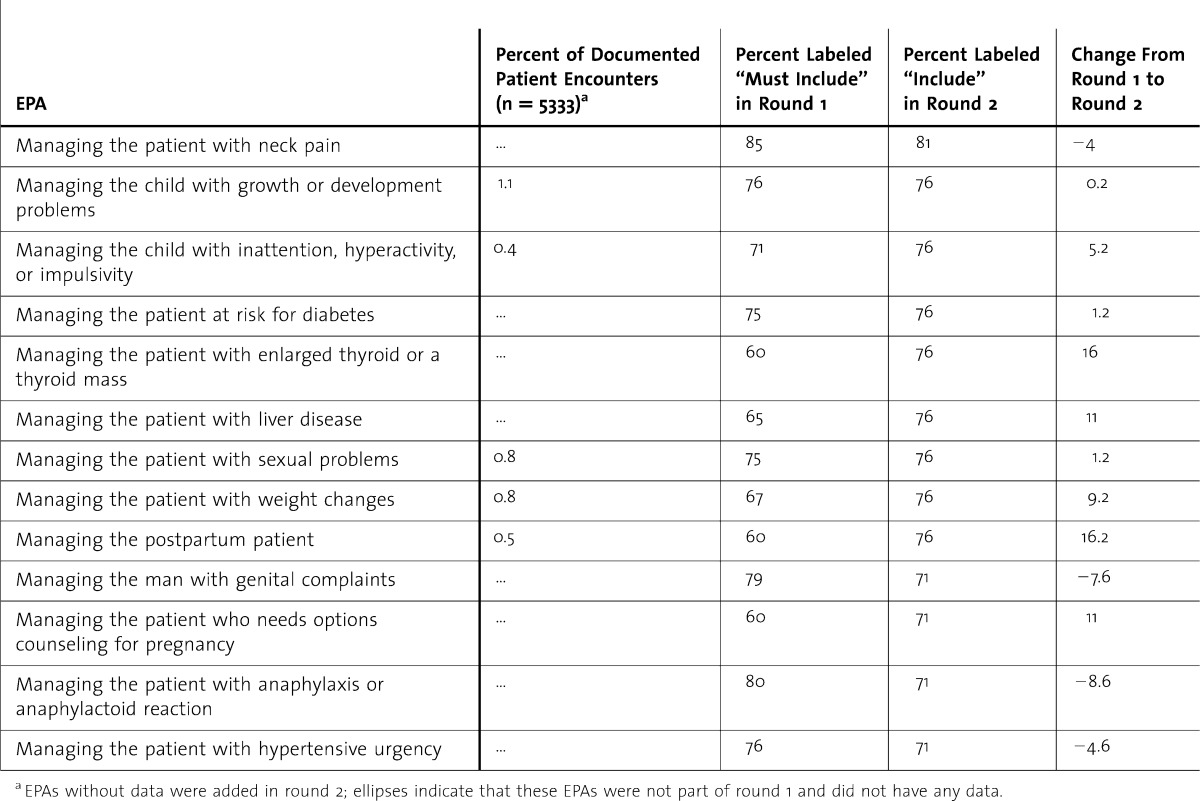

During a 14-month period, EPAs were documented for 5330 outpatient visits (27.2% of eligible visits). The percentage of documented visits for each EPA is shown in table 1. Only a single EPA, “managing the care of the newborn,” was not documented during this time; yet, 23 postpartum visits were documented, and it is likely that the combined visits were only documented for the latter EPA.

Table 1. Final List of Entrustable Professional Activities (EPAs)

During the first round, the percentage of experts ranking each activity as “must be included” (ie, a score of 6 or 7) ranged from 30% to 100%. In the second round, 76 EPAs were selected by more than 67% of the experts and comprised the final list. table 1 lists the final EPAs, the results from round 1 and round 2 of the Delphi process, and the change in voting between the 2 rounds.

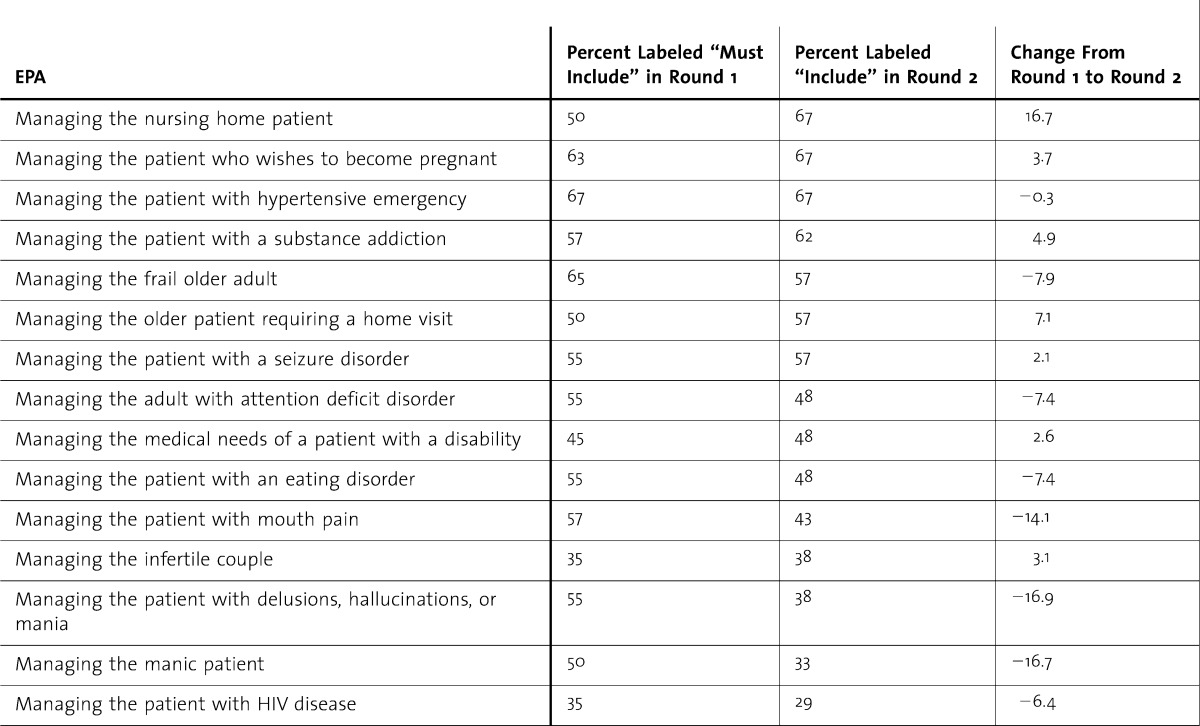

Three EPAs not in the original list were added via the Delphi process: “managing the patient with anaphylaxis or anaphylactoid reaction,” “managing the patient with hypertensive urgency,” and “managing the patient who needs options counseling for pregnancy.” The Delphi Process removed 15 activities from the original list developed in round 1 (table 2).

Table 2. Entrustable Professional Activities (EPAs) Removed by the Delphi Process

Discussion

We identified, developed, and tested 91 outpatient EPAs for family medicine, resulting in a final list of 76 EPAs that should be documented by the end of family medicine education. The Delphi Process added 3 EPAs not considered in the initial testing, and 15 competency areas in this original list were removed by the process.

The EPAs identified through our approach can be used to drive decisions regarding curriculum and assessment. Curriculum directors in family medicine residencies are familiar with the “topic creep” that occurs as arcane or esoteric topics are added to the curriculum due to the availability of experts, a service need, or an experience opportunity in a given area. A list of EPAs can serve to focus a curriculum, allowing program directors to assure that “must know” topics are not pushed out by “nice to know” topics.

The Delphi Method has been called an “opinion technology.”16 By using experts, the number of actual participants can be relatively low because reliability improvements level off beyond 20 to 30 participants.16 It has been used by other groups and disciplines to develop curricula, competencies, and objectives.17–19

A limitation of our approach to defining patient care EPAs is that we began with internal testing within a single residency program. Therefore, despite drawing on previously published lists, our list was influenced by the setting, clinical practice, faculty, and philosophy of our program. For our group of experts we sought a national sample of experts in family medicine education; yet, many of these experts were from the Northeast and none were from the Southeast. Using a different representation of experts or using a group opinion approach of all residency program directors, current residents, recent graduates, or some combination of the above may have produced slightly different results.

We feel confident in our results because there was little change in the initial list developed in our residency following the Delphi Process. Also, the list remains largely concordant with the list recently revised in Denmark.

Conclusions

A key goal of training is to create physicians who can be entrusted to perform a list of clearly defined professional activities. This list of 76 EPAs does not include many other skills and knowledge that the practicing clinician must possess, but it may help residencies further refine their curricula as well as develop methods of assessment that document graduates' capacity to be entrusted with these activities.

EPAs likely will play a role in assisting family medicine residencies in the development of milestones for assessing residents' ongoing professional development. Future research should evaluate whether other residencies can credential residents in most or all of these EPAs. In addition, future study could evaluate whether this list is representative of typical family medicine practice.

Acknowledgments

The authors would like to acknowledge Niels K. Kjaer, MD, MSPE, for his inspiration and guidance with this project.

Footnotes

Allen F. Shaughnessy, PharmD, MMedEd, is Professor of Family Medicine at Tufts University School of Medicine; Jennifer Sparks, MD, is a faculty member at the Lawrence Family Medicine Residency; Molly Cohen-Osher, MD, is Assistant Professor of Family Medicine at Tufts University School of Medicine; Kristen H. Goodell, MD, is Assistant Professor of Family Medicine at Tufts University School of Medicine; Gregory L. Sawin, MD, MPH, is Assistant Professor of Family Medicine at Tufts University School of Medicine, and Program Director at The Tufts University Family Medicine Residency at Cambridge Health Alliance; and Joseph Gravel Jr, MD, is Program Director of the Lawrence Family Medicine Residency.

Funding: The authors report no external funding source for this study.

Aspects of this paper have been presented at the 2009 Annual Meeting of the Society of Teachers of Family Medicine, April 2009, Denver, CO.

References

- 1. Accreditation Council for Graduate Medical Education. Common Program Requirements. http://www.acgme.org/acgmeweb/Portals/0/dh_dutyhoursCommonPR07012007.pdf. Accessed January 11, 2013.

- 2. Grant J. The incapacitating effects of competence: a critique. Adv Health Sci Educ Theory Pract. 1999;4(3):271–277. doi: 10.1023/A:1009845202352.

- 3. Whitcomb ME. Redirecting the assessment of clinical competence. Acad Med. 2007;82(6):527–528. doi: 10.1097/ACM.0b013e31805556f8.

- 4. Rees CE. The problem with outcomes-based curricula in medical education: insights from educational theory. Med Educ. 2004;38(6):593–598. doi: 10.1046/j.1365-2923.2004.01793.x.

- 5. Snadden D. Portfolios–attempting to measure the unmeasurable. Med Educ. 1999;33(7):478–479. doi: 10.1046/j.1365-2923.1999.00446.x.

- 6. ten Cate O, Scheele F. Competency-based postgraduate training: can we bridge the gap between theory and clinical practice? Acad Med. 2007;82(6):542–547. doi: 10.1097/ACM.0b013e31805559c7.

- 7. ten Cate O. Entrustability of professional activities and competency-based training. Med Educ. 2005;39(12):1176–1177. doi: 10.1111/j.1365-2929.2005.02341.x.

- 8. Jones MD Jr, Rosenberg AA, Gilhooly JT, Carraccio CL. Perspective: competencies, outcomes, and controversy–linking professional activities to competencies to improve resident education and practice. Acad Med. 2011;86(2):161–165. doi: 10.1097/ACM.0b013e31820442e9.

- 9. ten Cate O, Snell L, Carraccio C. Medical competence: the interplay between individual ability and the health care environment. Med Teach. 2010;32(8):669–675. doi: 10.3109/0142159X.2010.500897.

- 10. Mulder H, ten Cate O, Daalder R, Berkvens J. Building a competency-based workplace curriculum around entrustable professional activities: the case of physician assistant training. Med Teach. 2010;32(10):e453–e459. doi: 10.3109/0142159X.2010.513719.

- 11. Green L; American Board of Family Medicine. Update on the P4 project. Ann Fam Med. 2008;6(1):86. doi: 10.1370/afm.808.

- 12. American Academy of Family Physicians. Recommended curriculum guidelines for family medicine residents. http://www.aafp.org/online/en/home/aboutus/specialty/rpsolutions/eduguide.html. Accessed October 12, 2011.

- 13. Royal College of General Practitioners. Curriculum statements. http://www.rcgp-curriculum.org.uk/rcgp_-_gp_curriculum_documents/gp_curriculum_statements.aspx. Accessed October 12, 2011.

- 14. Kjaer NK, Maagaard R. General practice education–why and in which direction? [in Danish] Ugeskr Laeger. 2008;170(44):3506.

- 15. Green LA, Jones SM, Fetter G Jr, Pugno PA. Preparing the personal physician for practice: changing family medicine residency training to enable new model practice. Acad Med. 2007;82(12):1220–1227. doi: 10.1097/ACM.0b013e318159d070.

- 16. Dalkey NC. The Delphi Method: an experimental study of group opinion. http://www.rand.org/pubs/research_memoranda/RM5888.html. Accessed July 11, 2011.

- 17. Tandeter H, Carelli F, Timonen M, Javashvili G, Basak O, Wilm S, et al. A ‘minimal core curriculum’ for Family Medicine in undergraduate medical education: a European Delphi survey among EURACT representatives. Eur J Gen Pract. 2011;17(4):217–220. doi: 10.3109/13814788.2011.585635.

- 18. Amin HJ, Singhal N, Cole G. Validating objectives and training in Canadian paediatrics residency training programmes. Med Teach. 2011;33(3):e131–e144. doi: 10.3109/0142159X.2011.542525.

- 19. Fried H, Leao AT. Using Delphi technique in a consensual curriculum for periodontics. J Dent Educ. 2007;71(11):1441–1446.