Abstract

Background

Faculty involvement in resident teaching events is beneficial to resident education, yet evidence about the factors that promote faculty attendance at resident didactic conferences is limited.

Objective

To determine whether offering continuing medical education (CME) credits would result in an increase in faculty attendance at weekly emergency medicine conferences and whether faculty would report the availability of CME credit as a motivating factor.

Methods

In this prospective, multi-site, observational study of 5 emergency medicine residency programs, we recorded the number of faculty members present at CME and non-CME lectures over 9 months. We also surveyed faculty about factors influencing their decisions to attend resident educational events and surveyed residents about factors influencing their learning experience.

Results

On average, lectures offering CME credit were attended by 5 more faculty members per hour than lectures that did not offer CME credit (95% confidence interval [CI], 3.9–6.1; P < .001). Faculty reported their desire to “participate in resident education” was the most influential factor prompting them to attend lectures, followed by “explore current trends in emergency medicine” and the lecture's “specific topic.” Faculty also reported that “clinical/administrative duties” and “family responsibilities” negatively affected their ability to attend. Residents reported that the most important positive factor influencing their conference experience was “lectures given by faculty.”

Conclusions

Although faculty reported that CME credit was not an important factor in their decision to attend resident conferences, offering CME credit resulted in significant increases in faculty attendance. Residents reported that “lectures given by faculty” and “faculty attendance” positively affected their learning experience.

Editor's Note: The online surveys used in this study can be located at http://svy.mk/UBaVji and http://svy.mk/T2acWs. Please contact the corresponding author for more details.

What was known

Faculty participation at resident conferences is beneficial to resident education, yet little is known about interventions that improve faculty attendance.

What is new

Offering continuing medical education (CME) credit for resident conferences increased faculty attendance by an average of 5 faculty members per lecture hour compared with non-CME lectures.

Limitations

Conditions across sites were not controlled, and the single-specialty focus may limit generalizability.

Bottom line

Availability of CME credit improved faculty attendance at resident conferences.

Introduction

The Accreditation Council for Graduate Medical Education (ACGME) requires that all residency programs offer regularly scheduled didactic sessions for residents. Residents are required to participate in these educational experiences,1 and faculty must “demonstrate a strong interest in the education of residents” and “regularly participate” in organized educational events.1 Studies show that faculty involvement in resident teaching events is beneficial to resident education,2–5 yet evidence about the factors that promote faculty attendance at resident didactic conferences is limited. No studies to date, to our knowledge, have directly and prospectively evaluated the effect of continuing medical education (CME) credit on emergency medicine (EM) faculty conference attendance.

We prospectively explored the association between CME credit availability and faculty attendance rates. We also investigated motivating factors and barriers to faculty attendance, as well as EM resident perceptions about the effect of faculty attendance on their educational experience. We hypothesized that the availability of CME credits would be associated with an increase in faculty attendance at weekly conferences and that faculty would cite the availability of CME credit as a motivating factor. We also surmised that residents would report a positive influence of faculty attendance on their educational experience.

Methods

Setting and Participants

We conducted a multi-site, prospective, observational study involving 5 ACGME-accredited EM programs at 4 university-based medical centers and 1 large, community-based center in the United States. Sites with ongoing, regularly scheduled CME lectures offering a minimum of 2 CME credit hours per month were considered for inclusion. Eligible participants were EM faculty and EM residents. Each site obtained approval for this study from its Institutional Review Board.

Faculty attendance at regularly scheduled resident didactic conferences was recorded by sign-in sheet at each location between August 2010 and April 2011. At some sites, one of the investigators confirmed accuracy of the sign-in sheet using a real-time count of attendees, and 1 site used a Web-based attendance tracking system. To reflect normal operating conditions, each site continued its usual methods of announcing resident conferences, lecture topics, and speakers. For each month during the 9-month study period, the number of faculty attending conferences offering CME versus those not offering CME was reported.
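As a rough illustration of how sign-in data could be turned into regression observations, the sketch below averages faculty counts over lecture hours for each site, month, and CME status, and expresses the result as a percentage of the site's total faculty. The record layout (one tuple per lecture hour), the function name `monthly_observations`, and all example numbers are hypothetical; the study does not describe its exact aggregation procedure.

```python
from collections import defaultdict


def monthly_observations(hour_records, faculty_totals):
    """Aggregate per-lecture-hour sign-in counts into one observation per
    (site, month, is_cme) cell.

    hour_records   -- iterable of (site, month, is_cme, faculty_count) tuples,
                      one tuple per lecture hour (hypothetical layout)
    faculty_totals -- dict mapping site -> total faculty at that site
    Returns a dict mapping (site, month, is_cme) ->
    (faculty per hour, percentage of the site's faculty per hour).
    """
    total_faculty = defaultdict(int)  # summed faculty counts in each cell
    hours = defaultdict(int)          # number of lecture hours in each cell
    for site, month, is_cme, faculty_count in hour_records:
        key = (site, month, is_cme)
        total_faculty[key] += faculty_count
        hours[key] += 1
    observations = {}
    for key, n_hours in hours.items():
        per_hour = total_faculty[key] / n_hours
        observations[key] = (per_hour, 100.0 * per_hour / faculty_totals[key[0]])
    return observations


# Hypothetical records for two sites in one month.
records = [
    ("A", 1, True, 12), ("A", 1, True, 8),  # two CME hours at site A
    ("A", 1, False, 4),                     # one non-CME hour at site A
    ("B", 1, False, 3),                     # site B held no CME hour this month
]
obs = monthly_observations(records, {"A": 40, "B": 30})
for key, (per_hour, pct) in sorted(obs.items()):
    print(key, per_hour, pct)
```

Under this layout, a site-month with no CME lecture hours simply produces no CME observation. That is one way 5 sites, 9 months, and 2 lecture types could yield the 89 (rather than 90) regression observations reported in the Data Analysis section.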

Survey Design

Between January and July 2010, a group of EM educators developed an online survey using the tailored design method,6 other available resources, and the literature7–10 to support construct validity of the survey instrument and to generate closed-ended questions with ordered response categories. We asked faculty to rank, from most to least influential, 11 factors that positively influenced and 9 factors that negatively affected their decision to attend resident conferences. We asked residents to rank 6 factors in order of their importance in contributing to their learning experience. For data presentation, ranks were converted to data scores with inverse weighting, so that a higher-ranked factor received a higher score.
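The inverse weighting of ranks can be sketched as follows. The exact weights the authors used are not stated, so this sketch assumes one common convention: with n ranked factors, rank r receives weight n - r + 1, and a factor's data score is the mean weight across respondents. The function names and the three-respondent example are hypothetical.

```python
def ranking_score(ranks, n_items):
    """Inversely weighted score for one factor (assumed weighting).

    ranks   -- rank positions respondents gave this factor (1 = most influential)
    n_items -- number of factors each respondent ranked
    Rank r gets weight n_items - r + 1, so a first-place rank is worth
    n_items points and a last-place rank is worth 1 point.
    """
    if not ranks:
        raise ValueError("no responses for this factor")
    for r in ranks:
        if not 1 <= r <= n_items:
            raise ValueError(f"rank {r} is outside 1..{n_items}")
    return sum(n_items - r + 1 for r in ranks) / len(ranks)


def score_factors(responses):
    """responses: list of dicts, each mapping every factor name to its rank.
    Returns a dict of factor -> score, ordered from highest to lowest score."""
    n_items = len(responses[0])
    scores = {
        factor: ranking_score([resp[factor] for resp in responses], n_items)
        for factor in responses[0]
    }
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


# Three hypothetical respondents ranking three hypothetical factors.
responses = [
    {"factor A": 1, "factor B": 2, "factor C": 3},
    {"factor A": 2, "factor B": 1, "factor C": 3},
    {"factor A": 1, "factor B": 3, "factor C": 2},
]
print(score_factors(responses))
```

Here factor A scores (3 + 2 + 3)/3 ≈ 2.67, factor B scores 2.0, and factor C scores about 1.33, so the top-ranked factor receives the highest score, matching the direction of the data scores reported in the Results.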

The survey was e-mailed to conference attendees. At site 1, surveys were sent to an additional 37 clinical faculty members at affiliate sites to investigate factors motivating that group (those faculty members were not included in attendance totals during data collection or data analysis). Completion of the survey was considered consent for participation.

Data Analysis

Multiple-variable linear regression was used to evaluate the effect of offering CME credit on the number of faculty attending a given hour of lecture. Site 1 did not conduct CME lectures during month 9, so that site had no CME data point for that month, resulting in 89 observations for the regression. We constructed a primary model with the percentage of available faculty (faculty attending/total faculty at the site) per hour as the dependent variable (table 1) and a secondary model with the count of faculty per hour of lecture as the dependent variable (table 2). Both models included the presence or absence of CME as a dichotomous variable and institution as a nominal categorical variable. A partial F test was used to determine the significance of residency site within the model (significance threshold, P < .05). Each model was tested for normality of the residuals using the Shapiro-Wilk test as well as graphical examination of the data.11 Leverage plots were used to examine the data for outliers and overly influential data points, and the Breusch-Pagan test was used to test for heteroscedasticity.12 An attempt to log-transform the faculty attending/total faculty per hour to address mild heteroscedasticity worsened the violation of normally distributed residuals, so modeling was performed on untransformed dependent variables. Given the benchmark of 10 observations per model degree of freedom, the sample was sufficiently large to avoid overfitting the model: with 5 model degrees of freedom and 89 observations, there were about 18 observations per degree of freedom.
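The modeling steps above can be sketched with NumPy on simulated data. This is not the authors' code (their software is not stated), and every number in the simulated data set is invented. The sketch fits ordinary least squares with a CME indicator and site dummies, computes the partial F statistic for the 4 site terms, and computes the studentized (Koenker) n·R² form of the Breusch-Pagan statistic, which is one variant of that test. The helper names are hypothetical.

```python
import numpy as np


def design_matrix(cme, site, n_sites):
    """Columns: intercept, CME indicator (0/1), one dummy per site except site 0 (reference)."""
    cme = np.asarray(cme, dtype=float)
    site = np.asarray(site)
    columns = [np.ones_like(cme), cme]
    columns += [(site == s).astype(float) for s in range(1, n_sites)]
    return np.column_stack(columns)


def ols(X, y):
    """Ordinary least squares; returns coefficients, residuals, residual sum of squares."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return beta, resid, float(resid @ resid)


def partial_f(X_full, X_reduced, y):
    """Partial F statistic for the columns in X_full that are absent from X_reduced."""
    _, _, rss_full = ols(X_full, y)
    _, _, rss_reduced = ols(X_reduced, y)
    q = X_full.shape[1] - X_reduced.shape[1]      # number of terms tested jointly
    df_resid = X_full.shape[0] - X_full.shape[1]  # residual df of the full model
    f_stat = ((rss_reduced - rss_full) / q) / (rss_full / df_resid)
    return f_stat, q, df_resid


def breusch_pagan_lm(X, resid):
    """Koenker's studentized Breusch-Pagan statistic: n * R^2 from regressing
    squared residuals on X; approximately chi-square(X.shape[1] - 1) under H0."""
    u2 = resid ** 2
    _, _, aux_rss = ols(X, u2)
    tss = float(((u2 - u2.mean()) ** 2).sum())
    return len(u2) * (1.0 - aux_rss / tss)


# Simulated data: 5 sites x 9 months x {non-CME, CME}, minus one missing
# site-month CME cell, giving 89 observations as in the study design.
rng = np.random.default_rng(0)
site_list, cme_list = [], []
for s in range(5):
    for m in range(9):
        for c in (0, 1):
            if s == 0 and m == 8 and c == 1:
                continue  # no CME lectures at this site in month 9
            site_list.append(s)
            cme_list.append(c)
site = np.array(site_list)
cme = np.array(cme_list)
baseline = np.array([6.0, 9.0, 4.0, 12.0, 7.0])  # invented site means
y = baseline[site] + 5.0 * cme + rng.normal(0.0, 1.5, size=len(site))  # invented CME effect

X_full = design_matrix(cme, site, n_sites=5)
beta, resid, _ = ols(X_full, y)
f_site, q, df_resid = partial_f(X_full, X_full[:, :2], y)  # reduced model drops site terms
bp = breusch_pagan_lm(X_full, resid)
print(f"CME coefficient: {beta[1]:.2f}")
print(f"site partial F({q},{df_resid}) = {f_site:.1f}; Breusch-Pagan LM = {bp:.2f}")
```

With 89 observations and 6 columns (intercept plus 5 predictors), the residual degrees of freedom are 83 and the site test has 4 numerator degrees of freedom, the same structure as the F statistics reported in the Results. If SciPy is available, `scipy.stats.f.sf`, `scipy.stats.chi2.sf`, and `scipy.stats.shapiro` can turn these statistics into P values and test residual normality.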

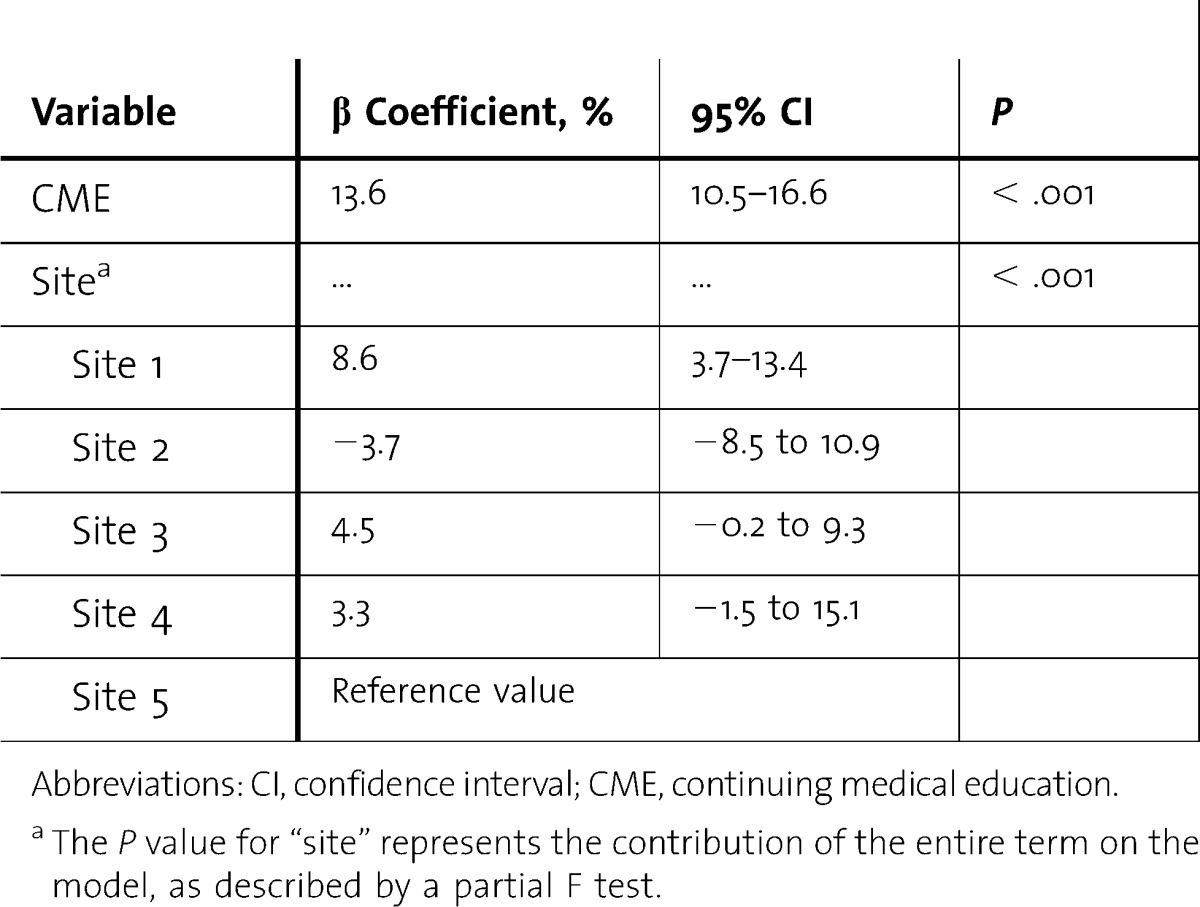

TABLE 1.

Multiple-Variable Linear Regression Model Detailing the Effects of Covariates on Faculty Attendance as a Percentage of the Total Faculty per Site per Hour of Lecture

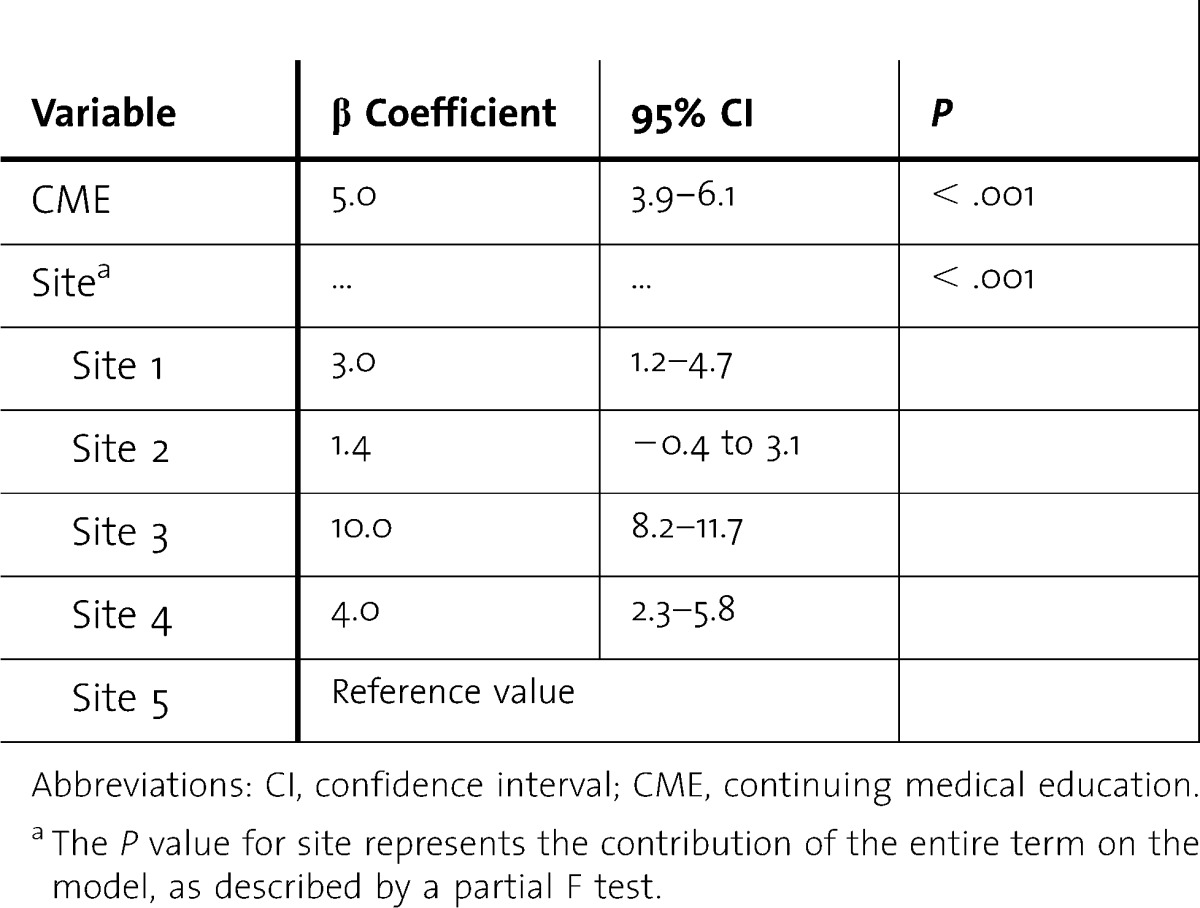

TABLE 2.

Multiple-Variable Linear Regression Model Detailing the Effects of Covariates on Faculty Attendance per Hour of Lecture

Results

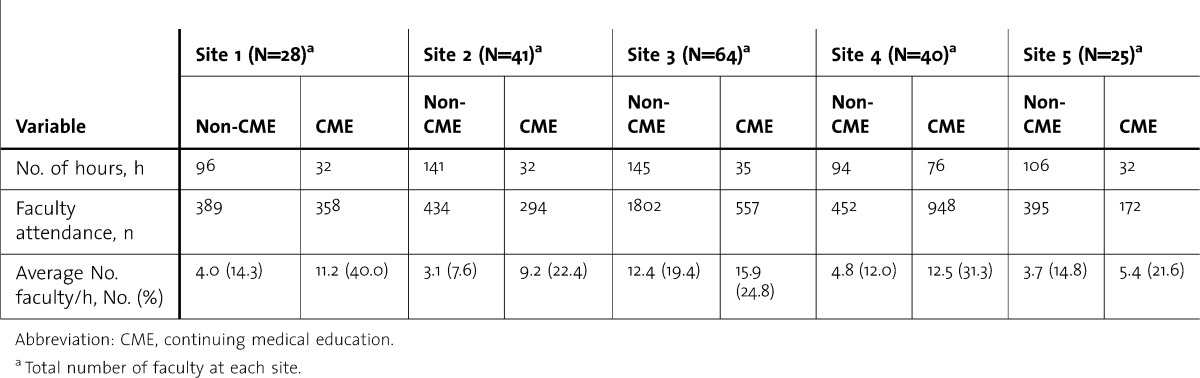

Availability of CME credit was associated with an average increase of 13.6 percentage points in the proportion of site faculty attending per hour (95% confidence interval [CI], 10.5–16.6 percentage points). The model was significantly predictive (F(5,83) = 21.1; P < .001), with an adjusted R² of 0.53. The secondary model yielded similar results, with an average increase in faculty attendance of 5 faculty per hour (95% CI, 3.9–6.1), an adjusted R² of 0.73, and F(5,83) = 46.9 (P < .001). Table 3 shows the total hours of non-CME and CME lecture provided and faculty attendance for each site during the study period. In both models, the month term was not significant, indicating no seasonal trend in attendance during the study period, and the site term was significant in aggregate (F(4,83) = 7.23, P < .001, in the percentage model; F(4,83) = 38.52, P < .001, in the secondary model). Thus, average attendance differed significantly by residency site.
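For a linear model with an intercept, the overall F statistic and adjusted R² are linked through n and p: F = (R²/p) / ((1 − R²)/(n − p − 1)) and adjusted R² = 1 − (1 − R²)(n − 1)/(n − p − 1). The sketch below uses these identities as a consistency check on the primary model, assuming n = 89 observations and p = 5 predictors (a CME indicator plus 4 site indicators, as suggested by the numerator degrees of freedom of 5); the function names are hypothetical.

```python
def adj_r2_to_f(adj_r2, n, p):
    """Overall F statistic implied by an adjusted R^2 (n observations, p predictors)."""
    r2 = 1.0 - (1.0 - adj_r2) * (n - p - 1) / (n - 1)
    return (r2 / p) / ((1.0 - r2) / (n - p - 1))


def f_to_adj_r2(f_stat, n, p):
    """Adjusted R^2 implied by an overall F statistic with (p, n - p - 1) df."""
    ratio = f_stat * p / (n - p - 1)  # equals R^2 / (1 - R^2)
    r2 = ratio / (1.0 + ratio)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)


n, p = 89, 5  # assumed: 89 observations; CME indicator + 4 site indicators
print(round(f_to_adj_r2(21.1, n, p), 2))  # primary model, reported F(5,83) = 21.1
print(round(adj_r2_to_f(0.53, n, p), 1))  # primary model, reported adjusted R^2 = 0.53
```

The reported F(5,83) = 21.1 implies an adjusted R² of about 0.53, and an adjusted R² of 0.53 implies an F of about 20.8; the small gap is consistent with rounding of the reported values.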

TABLE 3.

Site-Specific Continuing Medical Education (CME) and Non-CME Hours Versus Faculty Attendance During Study Period

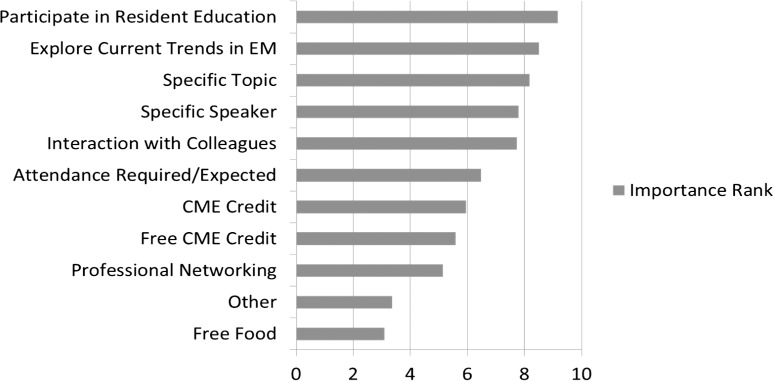

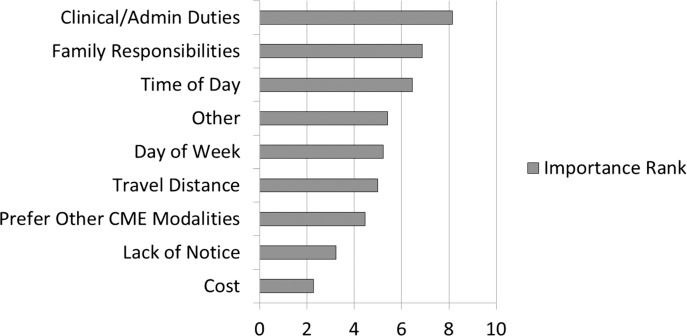

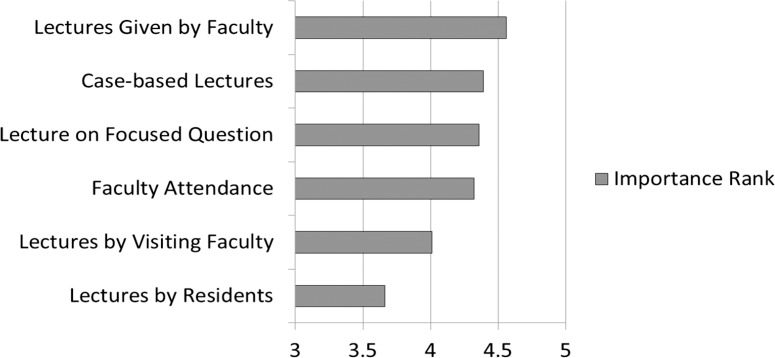

Of 235 faculty members, 150 (63.8%) participated in the survey. Faculty reported that “participation in resident education” was the most influential factor in their decision to attend resident conferences (data score, 9.17). Other important factors (with a score of 7 or more) included “exploring current trends in EM” (8.50) and the lecture's “specific topic” (8.17). Factors such as “availability of CME credits” (5.95) and “availability of free CME credits” (5.58) were ranked lower in importance (figure 1). Faculty reported that “clinical responsibilities and administrative duties” had the strongest negative influence on their decision to attend conferences (data score, 8.15; figure 2). Of 216 residents, 136 (62.9%) completed the survey. Residents gave “lectures delivered by faculty” the highest score (4.56), whereas “lectures given by residents” received the lowest score (3.66). “Attendance and participation by faculty” received a score of 4.32 (figure 3).

FIGURE 1.

Data Scores From Faculty Survey of Factors Influencing Their Decision to Attend Resident Conference

Abbreviations: EM, emergency medicine; CME, continuing medical education.

FIGURE 2.

Data Scores From Faculty Survey for Factors in the Decision Not to Attend a Resident Conference

Note: 0 is least inhibitory; 9 is most inhibitory. Abbreviation: CME, continuing medical education.

FIGURE 3.

Rank Scores From Resident Survey of Factors That Positively Affect Their Learning Experience

Discussion

Our study shows that offering CME credit is associated with increased faculty attendance. However, EM faculty ranked “participation in resident education,” not the “availability of CME credit,” as the most influential reason for attending conferences. In a 2005 survey,13 CME credit was the most common motivating factor reported for faculty attendance at grand rounds, followed closely by social interaction. Another study14 found that CME credit did not influence attendance and that only 42% of participants actually registered to receive the credit.

Several possibilities may explain the incongruity between the observed effect of offering CME credit on faculty attendance and the faculty's survey responses. Given the considerable effort and cost required to obtain CME credit for an educational event, few lectures are selected for CME-credit availability. Events with CME credit generally receive more attention from organizers and presenters, which may elevate the quality of the presentations and the relevance of topics, so CME events may have greater educational value than non-CME events. This explanation is supported by the rankings of factors such as “explore current trends in EM,” “specific speaker,” and “specific topic.” At several sites, CME events include grand rounds and morbidity and mortality conferences, which are regarded as venues for current information and lively discussion among attendees and may cause participants to favor these events. Because these CME events may contain the elements that motivate faculty to attend, the higher attendance may not be caused by CME credit itself but may instead reflect other attributes of the events that carry CME credit. This does not explain why EM faculty ranked “participation in resident education” above all other motivating factors for attending conferences, particularly because non-CME conferences drew fewer faculty attendees. Perhaps EM faculty members feel they can best contribute to residents' educational experiences at events that foster discussion among attendees.
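The confounding argument in the preceding paragraph can be made concrete with a toy simulation. In it, attendance depends only on a hypothetical event-quality attribute, CME credit has no direct effect, and CME credit is preferentially assigned to high-quality events. All parameters (assignment probabilities, attendance levels, noise) and function names are invented for illustration and are not estimated from the study data.

```python
import random


def simulate_events(n_events=2000, seed=1):
    """Toy model (hypothetical parameters): returns (cme, high_quality, attendance) tuples."""
    rng = random.Random(seed)
    events = []
    for _ in range(n_events):
        high_quality = rng.random() < 0.5
        # CME credit is assigned mostly to high-quality events (assumed 80% vs 20%).
        cme = rng.random() < (0.8 if high_quality else 0.2)
        # Attendance depends on quality only; CME credit has zero direct effect here.
        attendance = 10.0 + (5.0 if high_quality else 0.0) + rng.gauss(0.0, 2.0)
        events.append((cme, high_quality, attendance))
    return events


def mean(values):
    return sum(values) / len(values)


def cme_difference(events, high_quality=None):
    """Mean attendance at CME events minus non-CME events, optionally within one quality stratum."""
    subset = [e for e in events if high_quality is None or e[1] == high_quality]
    with_cme = [att for cme, _, att in subset if cme]
    without_cme = [att for cme, _, att in subset if not cme]
    return mean(with_cme) - mean(without_cme)


events = simulate_events()
naive = cme_difference(events)                            # expected near 5 * (0.8 - 0.2) = 3
within_high = cme_difference(events, high_quality=True)   # expected near 0
within_low = cme_difference(events, high_quality=False)   # expected near 0
print(f"naive: {naive:.2f}, within high: {within_high:.2f}, within low: {within_low:.2f}")
```

The naive comparison shows a sizable apparent "CME effect" even though none exists in the model, while comparisons within quality strata show almost none. In observational data, such stratification is possible only for attributes that were actually measured, which is one reason a design like this one cannot fully separate CME credit from event characteristics.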

In the responses to our survey, 88% of residents (120 of 136) ranked “attendance and participation by faculty at conference” as “important” or “very important” in contributing to their learning experience. Residents did not value “lectures given by residents” as highly as “lectures delivered by faculty” (rating average, 3.7 versus 4.6). One possible reason is that faculty members may have greater knowledge about topics and better presentation skills.

Because residents regard faculty attendance and faculty lectures as beneficial to their learning experience, factors that significantly obstruct faculty participation also merit attention. Logistical considerations (scheduling, location) have been cited as common barriers to attendance in another study15 and were also mentioned in responses to our survey. In contrast to those earlier findings, the 2 highest-ranked obstacles to attendance in our sample were “clinical/administrative duties” and “family responsibilities.” Both present challenging targets for efforts to foster greater faculty participation at resident conferences. These findings should be considered within the greater context of graduate and continuing medical education. In addition to overcoming logistical barriers to attendance at educational events, some have called for greater focus on the barriers to changes in practice and implementation of knowledge transfer.16–18 Recent work19,20 has emphasized a need for more flexible and customized EM education with a focus on outcomes-based requirements.

Our study has several limitations. First, conditions across study sites were not uniform: CME credit was not randomized, educational formats were not identical (morbidity and mortality conference versus grand rounds), and only 2 sites assigned CME credit to specific lecture topics (the remaining sites assigned CME credit to events in a specific time slot). Second, other variables, such as experience of the presenter, the time of day, and faculty attendance requirements, varied across sites. With the exception of 1 site, CME events were scheduled during normal conference hours without adjustments for faculty convenience. Institutional attendance requirements as a faculty performance parameter were also unlikely to have significantly affected attendance rates because attendance is linked to financial incentives at only 2 sites, and a policy at one of those sites discourages exclusive attendance at CME events. Third, the difference between faculty attendance at lectures delivered by residents and those delivered by faculty was not directly observed. Fourth, the survey instrument was not pretested, and validity and reliability calculations were not performed. As a result, responses may have been skewed toward false-positive reporting of faculty motivations to attend a conference (ie, “participate in resident education”) and false-negative reporting of the influence of CME credit. Finally, response bias may have been present in faculty members' answers about factors that motivated them to attend conferences.

Conclusions

Our research shows that offering CME credit for EM resident conferences is associated with an increase of 5 faculty members per hour, although faculty respondents reported that they valued the opportunity to participate in resident education more than the availability of CME credit. Our results may be relevant to EM programs and conference organizers considering methods to boost faculty attendance at resident conferences. Finally, residents value attendance and participation by faculty members at resident conferences.

Footnotes

Cedric W. Lefebvre, MD, FACEP, FAAEM, is Assistant Professor of Emergency Medicine at Wake Forest School of Medicine; Brian Hiestand, MD, MPH, FACEP, is Associate Professor of Emergency Medicine at Wake Forest School of Medicine. Michael C. Bond, MD, FACEP, FAAEM, is Assistant Professor of Emergency Medicine at the University of Maryland School of Medicine; Sean M. Fox, MD, is Assistant Professor of Adult and Pediatric Emergency Medicine at Carolinas Medical Center. Doug Char, MD, is Associate Professor of Emergency Medicine at Washington University School of Medicine; Drew S. Weber, MD, was a Resident in Emergency Medicine at Wake Forest School of Medicine and is now with the United States Air Force Medical Corps; David Glenn, MD, was a Resident in Emergency Medicine at Wake Forest School of Medicine and is now Emergency Medicine Physician with Novant Health of North Carolina; Leigh A. Patterson, MD, is Assistant Professor of Emergency Medicine at Brody School of Medicine; and David E. Manthey, MD, FACEP, FAAEM, is Professor of Emergency Medicine at Wake Forest School of Medicine.

Funding: The authors report no external funding source for this study.

The views expressed in this article are those of the authors and do not reflect the official policy or position of the United States Air Force, Department of Defense, or the US Government.

We would like to thank Linda J. Kesselring, MS, ELS, technical editor/writer, in the Department of Emergency Medicine at the University of Maryland, School of Medicine, for her assistance in preparing the manuscript for submission.

References

- 1. Accreditation Council for Graduate Medical Education. Program Requirement Emergency Medicine Guidelines, 2007. http://www.acgme.org/acgmeweb/tabid/131/ProgramandInstitutionalGuidelines/Hospital-BasedAccreditation/EmergencyMedicine.aspx. Accessed November 13, 2012.

- 2. Prince JM, Vallabhaneni R, Zenati MS, Hughes SJ, Harbrecht BG, Lee KK, et al. Increased interactive format for morbidity and mortality conference improves educational value and enhances confidence. J Surg Educ. 2007;64(5):266–272. doi: 10.1016/j.jsurg.2007.06.007.

- 3. Mezrich JL. Putting the heat back into radiology morbidity and mortality conferences. J Am Coll Radiol. 2011;8(9):638–641. doi: 10.1016/j.jacr.2011.01.015.

- 4. Joyner BD, Nicholson C, Seidel K. Medical knowledge: the importance of faculty involvement and curriculum in graduate medical education. J Urol. 2006;175(5):1843–1846. doi: 10.1016/S0022-5347(05)00981-X.

- 5. Rosenblum ND, Nagler J, Lovejoy FJ, Jr, Hafler JP. The pedagogic characteristics of a clinical conference for senior residents and faculty. Arch Pediatr Adolesc Med. 1995;149:1023–1028. doi: 10.1001/archpedi.1995.02170220089012.

- 6. Dillman D. Mail and Internet Surveys: The Tailored Design Method. 2nd ed. New York, NY: John Wiley & Sons Inc; 2000.

- 7. Ritter LA, Sue VM. Special issue: using online surveys in evaluation. New Dir Eval. 2007(115):1–65.

- 8. Burton LJ, Mazerolle SM. Survey instrument validity, part I: principles of survey instrument development and validation in athletic training education research. Athl Train Educ J. 2011;6(1):27–35.

- 9. Colletti JE, Flottemesch TJ, O'Connell TA, Ankel FK, Asplin BR. Developing a standardized faculty evaluation in an emergency medicine residency. J Emerg Med. 2010;39(5):662–668. doi: 10.1016/j.jemermed.2009.09.001.

- 10. Wang Y-S. Assessment of learner satisfaction with asynchronous electronic learning systems. Inf Manage. 2003;41(1):75–86. doi: 10.1016/S0378-7206(03)00028-4.

- 11. Royston P. Estimating departure from normality. Stat Med. 1991;10(8):1283–1293. doi: 10.1002/sim.4780100811.

- 12. Breusch TS, Pagan AR. Simple test for heteroscedasticity and random coefficient variation. Econometrica. 1979;47(5):1287–1294.

- 13. Bandiera GW, Morrison L. Emergency medicine teaching faculty perceptions about formal academic sessions: “What's in it for us?”. CJEM. 2005;7(1):36–41. doi: 10.1017/s1481803500012914.

- 14. Forman WB, Haykus W. Tumor conference: the role of continuing medical education. J Contin Educ Health Prof. 1988;8(4):267–270. doi: 10.1002/chp.4750080404.

- 15. Cabana MD, Brown R, Clark NM, White DF, Lyons J, Lang SW, et al. Improving physician attendance at educational seminars sponsored by managed care organizations. Manag Care. 2004;13(9):49–57.

- 16. Kilian BJ, Binder LS, Mardsen J. The emergency physician and knowledge transfer: continuing medical education, continuing professional development, and self-improvement. Acad Emerg Med. 2007;14(11):1003–1007. doi: 10.1197/j.aem.2007.07.008.

- 17. Diner BM, Carpenter CR, O'Connell T, Pang P, Brown MD, Seupaul RA, et al. Graduate medical education and knowledge translation: role models, information pipelines, and practice change thresholds. Acad Emerg Med. 2007;14(11):1008–1014. doi: 10.1197/j.aem.2007.07.003.

- 18. Carpenter CR, Sherbino J. How does an “opinion leader” influence my practice. CJEM. 2010;12(5):431–434. doi: 10.1017/s1481803500012586.

- 19. Sadosty AT, Goyal DG, Gene Hern H, Jr, Kilian BJ, Beeson MS. Alternatives to the conference status quo: summary recommendations from the 2008 CORD academic assembly conference alternatives workgroup. Acad Emerg Med. 2009;16(suppl 2):S25–S31. doi: 10.1111/j.1553-2712.2009.00588.x.

- 20. Shojania KG, Silver I, Levinson W. Continuing medical education and quality improvement: a match made in heaven. Ann Intern Med. 2012;156(4):305–308. doi: 10.7326/0003-4819-156-4-201202210-00008.