Abstract

Background

Multisource evaluations of residents offer valuable feedback, yet there is little evidence on the best way to collect these data from a range of health care professionals.

Objective

This study evaluated nonphysician staff members' ability to assess internal medicine residents' performance and behavior, and explored whether staff members differed in their perceived ability to participate in resident evaluations.

Methods

We distributed an anonymous survey to nurses, medical assistants, and administrative staff at 6 internal medicine residency continuity clinics. Differences between nurses' and other staff members' perceived ability to evaluate resident behavior were examined using independent t tests.

Results

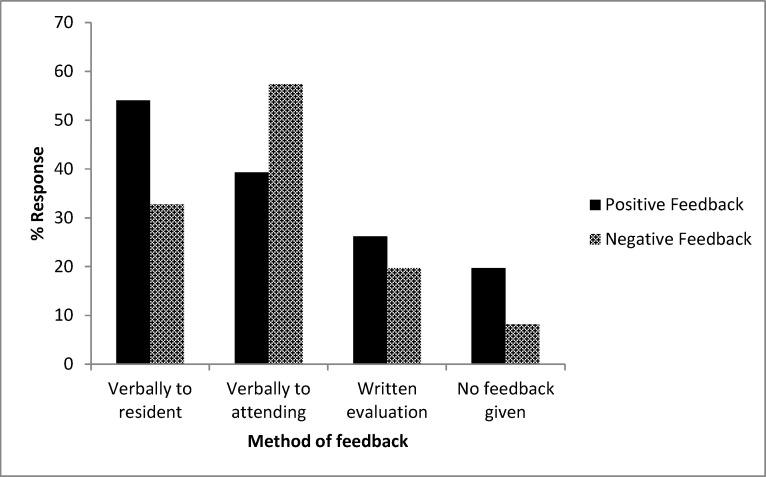

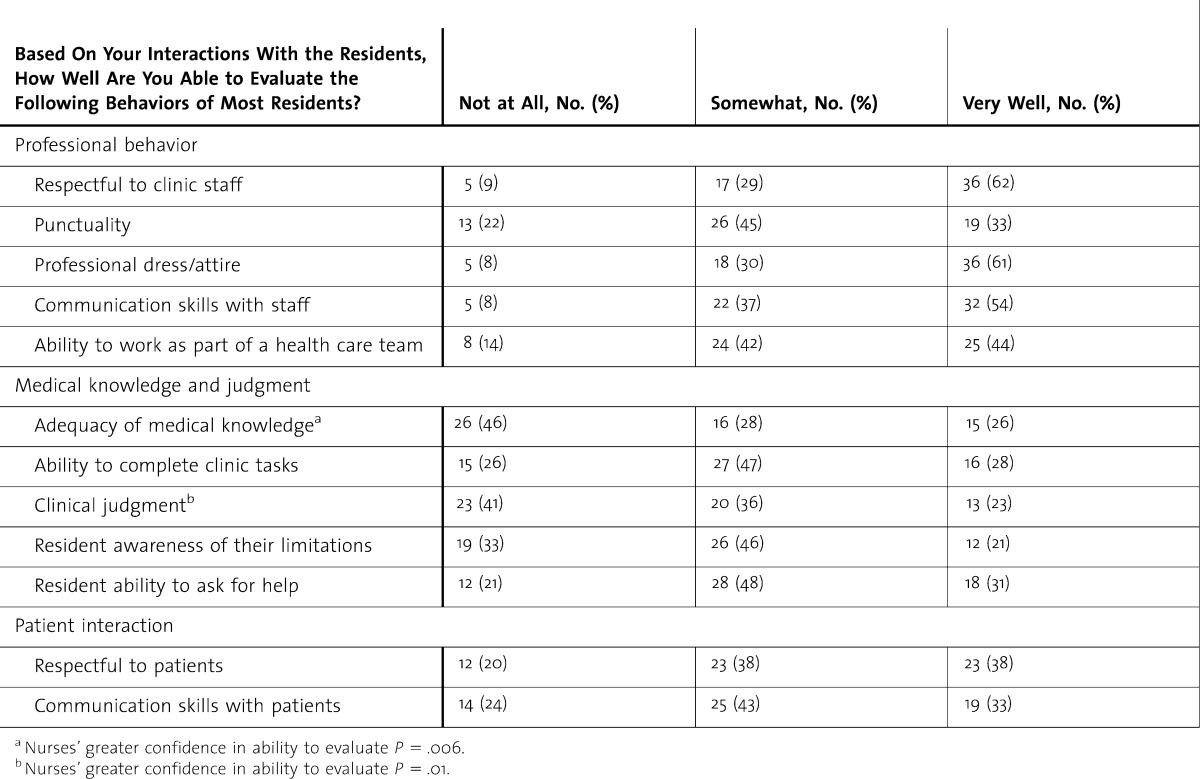

The survey response rate was 82% (61 of 74). A total of 55 respondents (90%) reported that it was important for them to evaluate residents. Participants reported being able to evaluate professional behaviors very well (62% [36 of 58] on the domain of respect to staff; 61% [36 of 59] on attire; and 54% [32 of 59] on communication). Individuals without a clinical background reported being uncomfortable evaluating medical knowledge (60%; 24 of 40) and judgment (55%; 22 of 40), whereas nurses reported being more comfortable evaluating these competencies. Respondents reported that the biggest barrier to evaluation was limited contact (86%; 48 of 56), and a significant amount of feedback was given verbally rather than on written evaluations.

Conclusions

Nonphysician staff members agree it is important to evaluate residents, and they are most comfortable providing feedback on professional behaviors. A significant amount of feedback is provided verbally but not necessarily captured in a formal written evaluation process.

Editor's Note: The online version of this article contains the survey instrument used in this study.

What was known

Multisource evaluations of residents offer valuable feedback.

What is new

Nonphysician staff members in an internal medicine clinic were most comfortable providing feedback on professional behaviors, with nurses also able to evaluate clinical judgment and medical knowledge. Feedback often was provided verbally.

Limitations

Single-site, single-specialty study may limit generalizability; continuity clinic setting may not generalize to inpatient settings.

Bottom line

Focusing multisource feedback questions on the competencies staff members can observe and evaluate is an important step for providing more effective evaluations.

Introduction

Multisource evaluation of physicians-in-training offers a broader perspective of trainee competence than supervising physicians alone can provide.1,2 Patient care, medical knowledge, practice-based learning and improvement, interpersonal and communication skills, professionalism, and systems-based practice represent fundamental aspects of physician competence that residency programs need to objectively assess.3,4 For many of these, particularly interpersonal and communication skills and professionalism, multisource feedback is considered the most effective approach. However, clinic staff may not feel comfortable reporting on all aspects of physician performance.

The ideal contributors for multisource evaluations and the format that would optimize the accuracy and quality of data collected have not been methodically evaluated. Studies have examined the use of multisource evaluations to assess patient care, teamwork, professionalism, and communication.1,5–13 Some have shown the inability of staff to complete multisource evaluations, resulting in numerous “unable to assess” responses,10,11,14 suggesting that the evaluation forms may not be asking the appropriate questions.

The purpose of this study was to evaluate nonphysician staff members' self-perceived ability to assess specific aspects of resident performance and behavior in an internal medicine continuity clinic to help determine the best format and venue for obtaining more useful information from multisource evaluations. We explored how internal medicine clinic staff members viewed their role in the resident evaluation process, what competencies staff members felt comfortable evaluating, and whether there were differences in evaluations by staff members with different professional backgrounds.

Methods

In spring 2010 we conducted a cross-sectional survey of the nonphysician continuity clinic staff working in the University of Colorado Internal Medicine Residency program.

Survey Development

We designed the survey to assess staff members' ability to evaluate specific resident behaviors. The survey domains were based on items rated as “unable to evaluate” by clinic staff on multisource evaluations from the residency program for the previous 2 years. Although clinic staff members had been able to complete general questions about communication and interpersonal skills, they frequently answered “unable to evaluate” on questions relating to specific professional behaviors, such as “the resident demonstrates respect for the patient's religion” or “the resident demonstrates respect for the patient's sexual orientation.”

We developed a 16-question survey to collect data on participant demographics, experiences providing feedback to residents, and preferences regarding frequency and method of providing feedback. The questionnaire asked respondents to rate their ability to evaluate 12 resident behaviors using a 3-point response scale (1 = not able to evaluate; 2 = somewhat able; 3 = very well).

We also conducted a focus group. The 18 participants included nurses, medical assistants, and telephone and administrative staff who interact with residents. Participants were given the multisource evaluation form currently in use for reference and completed the study survey (provided as online supplemental material) as a starting point for discussion. The focus group participants discussed the survey questions and provided feedback on the proposed study survey, including questions on terminology and adequacy of response categories. The focus group findings confirmed the appropriateness of our questionnaire for assessing nonphysicians' ability to contribute to multisource feedback.

Survey Administration

Eligible staff members were defined as nonphysician staff members (nurses, nurse practitioners, medical assistants, clinic managers, and clinic administrative staff). A total of 6 of 7 continuity clinic sites associated with our residency program were included in the study (2 university-based clinics, 2 community health centers, 1 Veterans Affairs Medical Center, and 1 private practice site). One site did not participate in the survey because of scheduling conflicts. To prepare staff at participating sites, the principal investigator described the purpose of the study, provided the current multisource form for reference, and administered the survey. Participation was voluntary. To maintain anonymity, results were reported in aggregate, and a facilitator conducted the survey at the principal investigator's clinic site.

This study was approved by the Colorado Multiple Institution Review Board.

Data Analysis

Data analysis was performed using PASW17 (IBM SPSS Software, Armonk, NY). We conducted descriptive analyses to characterize the sample, beliefs about participation in resident evaluations, and experiences providing feedback to residents. Chi-square tests were used to compare perceptions of resident evaluations between nurses and other clinic staff, including medical assistants, administrative staff, and clinic managers. The 12 resident behaviors assessed were combined into domains using exploratory factor analysis (principal axis extraction with varimax rotation). Because the number of items differed for the resulting scales, item means were used in independent t tests examining differences between nurses and other clinic staff in their perceived ability to evaluate the 3 types of resident behavior.
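The item-mean approach described above can be sketched in a few lines. This is an illustration only, not the authors' PASW analysis: the ratings are invented, and the function names `item_mean` and `independent_t` are hypothetical. Averaging each respondent's ratings within a scale keeps every scale on the same 1-to-3 range regardless of how many items it contains, and the group comparison is then a pooled-variance independent t statistic.

```python
from math import sqrt
from statistics import mean, variance


def item_mean(ratings):
    """Average one respondent's ratings across the items of a single scale."""
    return mean(ratings)


def independent_t(group_a, group_b):
    """Return the pooled-variance (Student) t statistic and its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(group_a) - mean(group_b)) / std_err, df


# Hypothetical ratings (1 = not able, 2 = somewhat able, 3 = very well)
# on a 5-item scale; these are NOT data from the study.
nurse_ratings = [[3, 3, 2, 3, 3], [2, 3, 3, 2, 2], [3, 2, 3, 3, 3], [2, 2, 3, 3, 2]]
other_ratings = [[2, 3, 2, 2, 3], [3, 3, 3, 2, 3], [2, 2, 2, 3, 2],
                 [3, 2, 3, 3, 3], [2, 3, 2, 2, 2]]

nurse_scores = [item_mean(r) for r in nurse_ratings]
other_scores = [item_mean(r) for r in other_ratings]

t_stat, df = independent_t(nurse_scores, other_scores)
print(f"t = {t_stat:.2f}, df = {df}")
```

Converting the t statistic to a P value requires the t distribution, which the Python standard library does not provide; a statistics package would be needed for that step.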

Results

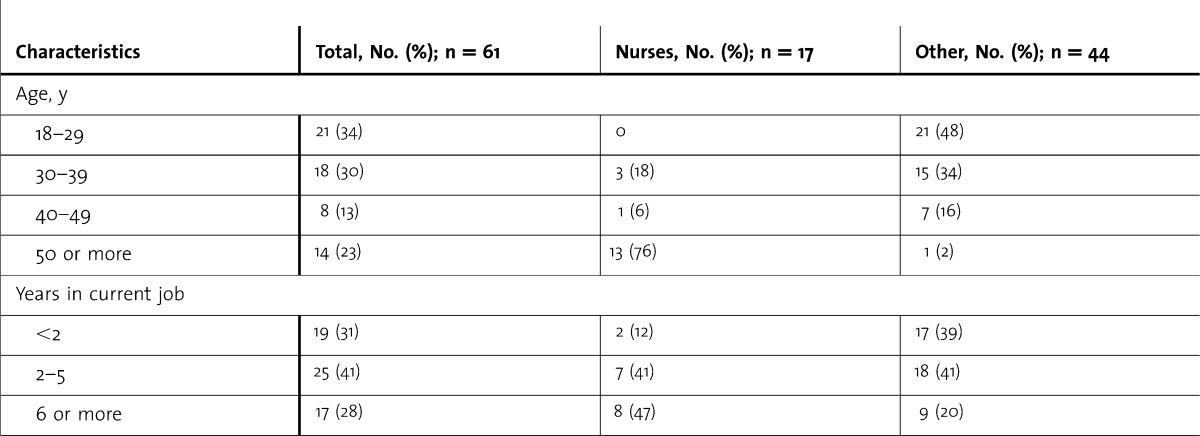

Our survey response rate was 82% (61 of 74). Participants were separated into 2 groups: (1) nurses and (2) other staff (nonnurses). The nurse category included 16 registered nurses and licensed practical nurses, and 1 nurse practitioner. Participants in the other staff category included 19 administrative staff members, 2 clinic managers, and 23 medical assistants. Nurses tended to be older than staff in the other category and to have more years of experience in their current position (table 1).

TABLE 1.

Characteristics of the Survey Responders

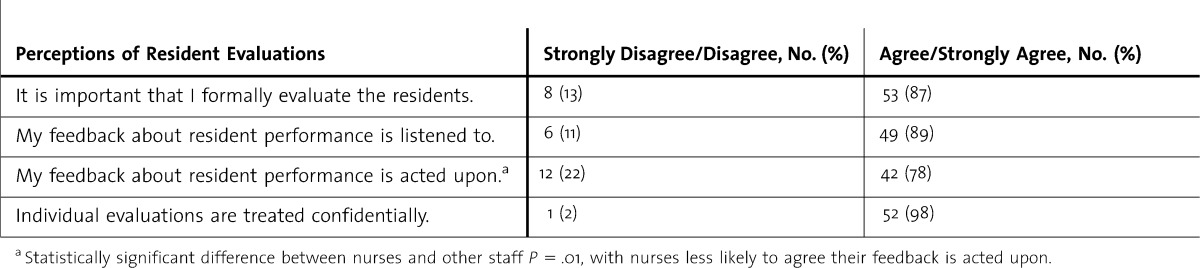

When asked about their perception of giving feedback to the residents, most participants (87%; 53 of 61) reported that it was important for them to evaluate the residents (table 2). When nurses and other staff respondents were compared using χ² tests with the Fisher exact test, a statistically significant difference (P = .01) was shown only for the responses to the question about whether staff feedback about the residents was acted upon. Only half (53%; 8 of 15) of the nurses thought their feedback was acted on compared with 87% (34 of 39) of the other staff.
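The comparison above can be reproduced approximately from the counts given in the text. The sketch below is a plain-Python two-sided Fisher exact test, not the authors' PASW procedure. It assumes the 2x2 table is built only from the respondents who answered this item (15 nurses and 39 other staff), and the function name `fisher_exact_two_sided` is hypothetical.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more likely than the observed table.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_observed = prob(a)
    low, high = max(0, col1 - row2), min(row1, col1)
    # The small tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(low, high + 1)
               if prob(x) <= p_observed * (1 + 1e-9))


# Counts from the text: feedback "acted on" vs not, by staff group.
nurses_yes, nurses_no = 8, 15 - 8
others_yes, others_no = 34, 39 - 34

p_value = fisher_exact_two_sided(nurses_yes, nurses_no, others_yes, others_no)
print(f"P = {p_value:.3f}")
```

Under these assumptions the result should be consistent with the reported P = .01 after rounding; small differences from the published value could arise if the original analysis handled missing responses differently.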

TABLE 2.

Continuity Clinic Staff Perceptions of Their Involvement in Resident Evaluations

Exploratory factor analysis grouped the 12 resident behaviors into 3 categories by behavior domain. The 3 scales accounted for 67% of the variance: professional behavior (respect to staff, punctuality, professional attire, communication with clinic staff, ability to work as part of a health care team; Cronbach alpha = .857); medical knowledge and judgment (adequacy of medical knowledge, ability to complete clinical tasks, clinical judgment, resident awareness of his or her limitations, resident ability to ask for help; Cronbach alpha = .892); and patient interaction (respect for patients, communication skills with patient; Cronbach alpha = .867). None of the independent t tests were significant when comparing nurses' responses in these 3 domains to those of other clinic staff (table 3). However, when the items were analyzed individually, nurses reported greater confidence in their ability to evaluate residents' medical knowledge and clinical judgment.
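For readers unfamiliar with the reliability coefficient reported for each scale, the following sketch computes Cronbach alpha using the standard formula, alpha = k/(k - 1) × (1 - sum of item variances / variance of total scores). The ratings are invented for a hypothetical 2-item scale and are not the study data; the function name `cronbach_alpha` is likewise an assumption.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach alpha for a list of respondents, each a list of item ratings."""
    k = len(responses[0])  # number of items in the scale
    item_vars = [variance([r[i] for r in responses]) for i in range(k)]
    total_var = variance([sum(r) for r in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings on a 2-item scale (1 = not able, 2 = somewhat able,
# 3 = very well); these are NOT data from the study.
responses = [
    [3, 3],
    [2, 2],
    [3, 2],
    [1, 1],
    [2, 3],
    [3, 3],
]

alpha = cronbach_alpha(responses)
print(f"Cronbach alpha = {alpha:.3f}")
```

Values closer to 1 indicate that the items in a scale vary together across respondents, which is the sense in which the reported coefficients (.857 to .892) support treating each group of items as a single domain.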

TABLE 3.

Self-Reported Staff Ability to Evaluate Specific Resident Behaviors

All staff cited limited contact with residents as the biggest barrier to evaluating them (86%; 48 of 56). Lack of confidentiality was not reported as a concern among nurses or other clinic staff (98%; 55 of 56). When asked about giving both positive and negative feedback, respondents reported giving significant amounts of feedback verbally rather than completing formal evaluations (figure). Nurses were more likely than other staff members to give verbal feedback, both positive and negative, directly to the residents. Positive feedback was given verbally to the residents by 71% (12 of 17) of nurses versus 48% (21 of 44) of the other staff, and negative verbal feedback was given by 65% (11 of 17) of the nurses compared with 20% (9 of 44) of staff in the other category. Staff also reported giving verbal feedback on residents' performance directly to the attending physicians, both positive (39%; 24 of 61) and negative (57%; 35 of 61). Some participants in both groups reported not giving any feedback directly to the residents, regardless of whether it was positive (20%; 12 of 61) or negative (8%; 5 of 61).
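Because the paragraph above reports many proportions with different denominators, a quick arithmetic check can help readers confirm that each percentage matches its counts. The sketch below uses only the numbers stated in the text; the dictionary labels are shorthand introduced here for illustration.

```python
# (numerator, denominator, reported percentage), all taken from the text above.
reported = {
    "limited contact cited as biggest barrier": (48, 56, 86),
    "confidentiality not a concern": (55, 56, 98),
    "nurses, positive verbal feedback to residents": (12, 17, 71),
    "other staff, positive verbal feedback to residents": (21, 44, 48),
    "nurses, negative verbal feedback to residents": (11, 17, 65),
    "other staff, negative verbal feedback to residents": (9, 44, 20),
    "positive verbal feedback to attendings": (24, 61, 39),
    "negative verbal feedback to attendings": (35, 61, 57),
    "no positive feedback given to residents": (12, 61, 20),
    "no negative feedback given to residents": (5, 61, 8),
}


def percent(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Collect any entry whose recomputed percentage differs from the reported one.
mismatches = {
    label: (percent(n, d), stated)
    for label, (n, d, stated) in reported.items()
    if percent(n, d) != stated
}
print(mismatches or "All reported percentages match their counts.")
```

The varying denominators (56, 61, 17, 44) reflect item-level nonresponse and the split between the 17 nurses and 44 other staff.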

FIGURE.

Methods of Feedback Used by the Respondents for Both Positive and Negative Feedback to the Residents

Discussion

Staff in an internal medicine clinic reported that it is important for them to evaluate residents, and that they were most comfortable providing feedback on professional behaviors and significantly less comfortable providing feedback about medical knowledge and judgment. They also reported that they felt their evaluations were handled confidentially. Nurses reported greater confidence in evaluating residents' medical knowledge and clinical judgment. We did not assess the influence of age and experience on these findings.

Physicians use multiple sources of information, such as patient medical records, direct contact, patient feedback, and written or verbal communication to assess their colleagues.15 Nonphysician staff members have less access to these multiple sources of information, and therefore must base their assessments on direct observations and interactions. This lack of external sources of information heightens the importance of focusing multisource questions on resident behaviors that can be directly observed by staff members. Prior research has evaluated the validity of specific multisource feedback forms by examining questions answered as “unable to evaluate” or “unable to assess.”11,16 However, these studies have not directly asked about specific competencies nonphysician staff felt were more difficult to assess. Previous studies have shown that feedback is most useful and well-received if it comes from a credible source with an adequate opportunity to observe what is being evaluated.17–21 Raters must understand their role in multisource feedback and the goals of the behavior they are evaluating.7,15,22 Think-aloud interviews by Mazor et al19 revealed that raters frequently based their evaluations on general impressions rather than directly observed behaviors, and that they had different interpretations of the desired behaviors they were rating.15,23 Although training has been shown to improve faculty ability to give feedback, nonphysician staff members who participate in multisource feedback rarely receive comparable training.24 Our findings add to the knowledge about competencies that nonphysician staff members feel competent and comfortable evaluating. This is an important step toward focusing multisource feedback questions on the specific competencies staff members can observe and evaluate in residents.

The study also suggests that evaluation forms need to be tailored to the specific staff members making the assessments. Our finding that nurses reported more comfort in evaluating medical knowledge and judgment but had limited contact with the residents will be important for developing good multisource feedback approaches on inpatient services, where nurses and residents are likely to have more interaction.

We found that a significant amount of feedback is provided verbally directly to the residents and/or their supervising physicians but is not captured in the formal evaluation process. Although verbal feedback can be beneficial, especially when combined with written feedback, residency leadership cannot track it and may not be aware of it. This creates a challenge in the context of Accreditation Council for Graduate Medical Education (ACGME) requirements for use of multisource feedback in resident evaluations.23,25 We also found that a concerning number of our responders reported not giving any feedback at all to the residents, highlighting the challenges of creating an educational environment where staff feedback is expected and encouraged. It also underscores the importance of asking staff members the appropriate questions to allow them to feel more comfortable with and engaged in the resident evaluation process.

Our study has several limitations. First, our single-site, single-specialty study may limit generalizability. Second, our continuity clinic setting may not generalize to other settings, particularly inpatient units. Although the continuity clinic setting allows longitudinal relationships to develop between clinic staff and residents, the amount of interaction between the staff and the residents during a given clinic session may be limited. The ongoing nature of that relationship and the need to recall behavior over the longer period of the clinic rotation could lead to a “halo effect,” in which learners are rated higher because of personal familiarity rather than directly observed behaviors.1,12,13 Third, the potential that the investigators may have influenced the survey responses could not be fully controlled because responses by some categories of staff, such as the clinic managers, could not be made anonymous. Finally, we did not attempt to validate the capacity of nonphysician staff to evaluate residents and focused solely on staff members' perception of their own abilities in the evaluation process.

Conclusions

Nonphysician staff members in an internal medicine clinic agree it is important for them to evaluate residents, and they are most comfortable providing feedback on professional behaviors. Nurses expressed more confidence in their ability to provide feedback on clinical judgment and medical knowledge than other staff members. A significant amount of feedback is provided verbally to residents and/or the attending physicians but is not captured in formal written evaluations required by the ACGME. Our findings provide an important first step in focusing multisource feedback questions on the specific competencies staff members are able to observe and evaluate in residents.

Footnotes

All authors are with the Division of General Internal Medicine, University of Colorado School of Medicine. Susan Michelle Nikels, MD, is Assistant Professor; Gretchen Guiton, PhD, is Associate Professor and Director of Evaluation; Danielle Loeb, MD, is Assistant Professor; and Suzanne Brandenburg, MD, is Professor of Medicine, Vice Chair for Education, and Residency Program Director.

Funding: This study was funded by the University of Colorado Division of General Internal Medicine Small Grants program.

The findings from this study were presented in 2011 at the Society of General Internal Medicine Annual Meeting in Phoenix, AZ.

References

- 1. Johnson D, Cujec B. Comparison of self, nurse, and physician assessment of residents rotating through an intensive care unit. Crit Care Med. 1998;26(11):1811–1816. doi: 10.1097/00003246-199811000-00020.

- 2. Warm EJ, Schauer D, Revis B, Boex J. Multisource feedback in the ambulatory setting. J Grad Med Educ. 2010;2(2):269–277. doi: 10.4300/JGME-D-09-00102.1.

- 3. Accreditation Council for Graduate Medical Education. Common Program Requirements. V.A.1.b).(1)-(2) http://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/140_internal_medicine_07012009.pdf. Accessed November 19, 2012.

- 4. Accreditation Council for Graduate Medical Education. RRC Program Requirements. V.A.1.b).(1)-V.A.1.b).(2) http://www.acgme.org/acgmeweb/Portals/0/dh_dutyhoursCommonPR07012007.pdf. Accessed November 19, 2012.

- 5. Torbeck L, Wrightson AS. A method for defining competency-based promotion criteria for family medicine residents. Acad Med. 2005;80(9):832–839. doi: 10.1097/00001888-200509000-00010.

- 6. Joshi R, Ling FW, Jaeger J. Assessment of a 360-degree instrument to evaluate residents' competency in interpersonal and communication skills. Acad Med. 2004;79(5):458–463. doi: 10.1097/00001888-200405000-00017.

- 7. Wood L, Hassell A, Whitehouse A, Bullock A, Wall D. A literature review of multi-source feedback systems within and without health services, leading to 10 tips for their successful design. Med Teach. 2006;28(7):e185–e191. doi: 10.1080/01421590600834286.

- 8. Berk RA. Using the 360 degrees multisource feedback model to evaluate teaching and professionalism. Med Teach. 2009;31(12):1073–1080. doi: 10.3109/01421590802572775.

- 9. Whitehouse A, Hassell A, Bullock A, Wood L, Wall D. 360 degree assessment (multisource feedback) of UK trainee doctors: field testing of team assessment of behaviours (TAB). Med Teach. 2007;29(2–3):171–176. doi: 10.1080/01421590701302951.

- 10. Ellinas E. Fellowship multi-source feedback: a resource from obstetric anesthesiology. MedEdPortal. 2010. http://www.mededportal.org/publication/8171. Accessed December 20, 2012.

- 11. Lockyer JM, Violato C, Fidler H, Alakija P. The assessment of pathologists/laboratory medicine physicians through a multisource feedback tool. Arch Pathol Lab Med. 2009;133(8):1301–1308. doi: 10.5858/133.8.1301.

- 12. Archer J, McGraw M, Davies H. Assuring validity of multisource feedback in a national programme. Arch Dis Child. 2010;95(5):330–335. doi: 10.1136/adc.2008.146209.

- 13. Archer J, Norcini J, Southgate L, Heard S, Davies H. mini-PAT (Peer Assessment Tool): a valid component of a national assessment programme in the UK. Adv Health Sci Educ Theory Pract. 2008;13(2):181–192. doi: 10.1007/s10459-006-9033-3.

- 14. Davis JD. Comparison of faculty, peer, self, and nurse assessment of obstetrics and gynecology residents. Obstet Gynecol. 2002;99(4):647–651. doi: 10.1016/s0029-7844(02)01658-7.

- 15. Sargeant J, Macleod T, Sinclair D, Power M. How do physicians assess their family physician colleagues' performance?: creating a rubric to inform assessment and feedback. J Contin Educ Health Prof. 2011;31(2):87–94. doi: 10.1002/chp.20111.

- 16. Qu B, Zhao YH, Sun BZ. Assessment of resident physicians in professionalism, interpersonal and communication skills: a multisource feedback. Int J Med Sci. 2012;9(3):228–236. doi: 10.7150/ijms.3353.

- 17. Lockyer J, Clyman SG. Multisource feedback (360-degree evaluation). In: Holmboe ES, Hawkins RE, editors. Practical Guide to the Evaluation of Clinical Competence. Philadelphia, PA: Mosby Elsevier; 2008. pp. 75–85.

- 18. Lockyer J. Multisource feedback in the assessment of physician competencies. J Contin Educ Health Prof. 2003;23(1):4–12. doi: 10.1002/chp.1340230103.

- 19. Mazor KM, Canavan C, Farrell M, Margolis MJ, Clauser BE. Collecting validity evidence for an assessment of professionalism: findings from think-aloud interviews. Acad Med. 2008;83(10 suppl):S9–S12. doi: 10.1097/ACM.0b013e318183e329.

- 20. Sargeant J, Mann K, Ferrier S. Exploring family physicians' reactions to multisource feedback: perceptions of credibility and usefulness. Med Educ. 2005;39(5):497–504. doi: 10.1111/j.1365-2929.2005.02124.x.

- 21. Sargeant J, Mann K, Sinclair D, van der Vleuten C, Metsemakers J. Challenges in multisource feedback: intended and unintended outcomes. Med Educ. 2007;41(6):583–591. doi: 10.1111/j.1365-2923.2007.02769.x.

- 22. Wilkinson TJ, Wade WB, Knock LD. A blueprint to assess professionalism: results of a systematic review. Acad Med. 2009;84(5):551–558. doi: 10.1097/ACM.0b013e31819fbaa2.

- 23. Seifert CF, Yukl G, McDonald RA. Effects of multisource feedback and a feedback facilitator on the influence behavior of managers toward subordinates. J Appl Psychol. 2003;88(3):561–569. doi: 10.1037/0021-9010.88.3.561.

- 24. Stark R, Korenstein D, Karani R. Impact of a 360-degree professionalism assessment on faculty comfort and skills in feedback delivery. J Gen Intern Med. 2008;23(7):969–972. doi: 10.1007/s11606-008-0586-0.

- 25. Overeem K, Wollersheim H, Driessen E, Lombarts K, van de Ven G, Grol R, et al. Doctors' perceptions of why 360-degree feedback does (not) work: a qualitative study. Med Educ. 2009;43(9):874–882. doi: 10.1111/j.1365-2923.2009.03439.x.