Abstract

The lateral intraparietal area (LIP), a portion of monkey posterior parietal cortex, has been implicated in spatial attention. We review recent evidence from our laboratory showing that LIP encodes a priority map of the external environment that specifies the momentary locus of attention and is activated in a variety of behavioral tasks. The priority map in LIP is shaped by task-specific variables. We suggest that the multifaceted responses in LIP represent mechanisms for allocating attention, and that the attentional system may flexibly configure itself to meet the cognitive, motor and motivational demands of individual tasks.

Keywords: Attention, Parietal cortex, Monkey, Plasticity

1. Introduction

“Every one knows what attention is”, wrote William James in Principles of Psychology in 1890. James went on to give a broad definition of attention. “It is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought. Focalization, concentration of consciousness are of its essence. It implies withdrawal from some things in order to deal effectively with others, and is a condition which has a real opposite in the confused, dazed, scatterbrained state which in French is called distraction, and Zerstreutheit in German”. Rather than viewing attention as a specific instance of perceptual or motor selection, James viewed it as the act of selection itself—a focused state affecting the entire mind.

The decades following William James saw a massive expansion of the empirical study of attention and other mental functions. The new psychophysicists and neurophysiologists, however, subtly redefined attention to suit their own needs. Instead of James’ broad definition they used the term in a narrower sense, to indicate a focusing of the perceptual apparatus on an external stimulus, measurable as a momentary change in the ability to discriminate or detect a given stimulus. The study of attention became the study of modulations of perception, in particular visual perception. Indeed, much effort has been devoted to understanding the types of changes in visual representations that are caused by directing or withdrawing attention (Reynolds & Chelazzi, 2004).

In parallel, physiologists have begun to address a related question—the question of how the brain allocates attention. How does the brain decide which object is relevant at any given moment? Is there a central attentional controller that allocates resources in a variety of tasks, or does selection take place independently in multiple modality-specific areas?

Some consensus now exists that attention is controlled by a distributed network of frontal and parietal areas. This network includes the lateral intraparietal area (LIP) and the frontal eye field (FEF) (Gottlieb, 2007; Moore, Armstrong, & Fallah, 2003), two areas that have also been implicated in the control of rapid eye movements (saccades). The FEF and LIP provide selective “salience” or “priority” maps of the visual world that encode the locations of attention-worthy objects, and whose properties correlate with behaviorally-measured attention (Gottlieb, 2007; Thompson & Bichot, 2005; Thompson, Biscoe, & Sato, 2005).

However, a puzzle is now emerging regarding activity in these areas, and in particular LIP. As LIP is studied in a wider range of behavioral tasks, it becomes clear that it is modulated by a variety of non-spatial factors—including motor, cognitive and motivational aspects of the task. Factors modulating LIP activity include the expectation of reward (Sugrue, Corrado, & Newsome, 2004), the passage of time (Leon & Shadlen, 2003) and the category membership of the attended object (Freedman & Assad, 2006). This has given rise to vigorous debate: does LIP really control attention or does it have other, unknown functions?

Here we review recent data from our own and other laboratories and argue that, even though multifaceted, the spatial response in LIP is nevertheless consistent with an attentional control signal. We emphasize that attention is not a purely sensory but a behavioral selection process, and that to be effective, attention has to be closely coupled with behavioral goals. For instance, during a routine but complex activity such as driving a car, we attend to the relevant visual information, such as the road, traffic signs, pedestrians and adjacent cars. In addition, we keep in mind our long-term goal (our destination) and the extended context in which we are driving (i.e., whether it is a holiday or a normal working day; whether we are on time or running late). These factors can influence how we allocate attention, for example whether we attend more or less to information about our location, landmarks, or police vehicles. We must also pay some attention to ongoing motor activity such as turning the wheel, manipulating the gear shift and pressing the foot pedals. Thus, visual, motor, cognitive and motivational factors all determine how we allocate attention in this situation. We suggest that complex signals such as those found in LIP represent mechanisms through which behavior variably influences the allocation of attention. If correct, this view nudges us back toward William James’ original definition of attention, not as a narrow domain-specific function but as a coordinated focusing that is influenced by and, in turn, influences large portions of the mind.

2. Methods

2.1. General methods and behavioral tasks

Data were collected with standard behavioral and neurophysiological techniques as described previously (Balan & Gottlieb, 2006; Oristaglio, Schneider, Balan & Gottlieb, 2006). All methods were approved by the Animal Care and Use Committees of Columbia University and New York State Psychiatric Institute as complying with the guidelines within the Public Health Service Guide for the Care and Use of Laboratory Animals. During experimental sessions monkeys sat in a primate chair with their heads fixed in the straight ahead position. Visual stimuli were presented on a SONY GDM-FW9000 Trinitron monitor (30.8 by 48.2 cm viewing area) located 57 cm in front of the monkeys’ eyes.

2.2. Identification of LIP

Structural MRI was used to verify that electrode tracks coursed through the lateral bank of the intraparietal sulcus. Before testing on the search task, each neuron was characterized with the memory-saccade task. In this task, after the monkey fixated a central fixation point, a small annulus (1° diameter) was flashed for 100 ms at a peripheral location and, after a brief delay, the monkey was rewarded for making a saccade to the remembered location of the annulus. All the neurons described here had significant spatial selectivity in the memory-saccade task (1-way Kruskal-Wallis analysis of variance, p < .05), and virtually all (97%) showed this selectivity during the delay or presaccadic epochs (400-900 ms after target onset, and the 200 ms before saccade onset, respectively).
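The spatial-selectivity criterion can be illustrated with a minimal sketch, assuming single-trial firing rates from one epoch (e.g., the delay) grouped by saccade-target location. The function name `is_spatially_selective`, the eight target locations and the simulated rates are hypothetical; only the use of a 1-way Kruskal-Wallis test at p < .05 comes from the text.

```python
import numpy as np
from scipy.stats import kruskal


def is_spatially_selective(rates_by_location, alpha=0.05):
    """Kruskal-Wallis test of whether firing rates differ across target locations.

    rates_by_location: list of 1-D arrays, one per saccade-target location,
    each holding single-trial firing rates (sp/s) from one task epoch.
    Returns (selective, p_value).
    """
    groups = [np.asarray(r, dtype=float) for r in rates_by_location]
    _, p_value = kruskal(*groups)
    return p_value < alpha, p_value


# Hypothetical neuron with strong delay activity for one of eight locations.
rng = np.random.default_rng(0)
rates = [rng.poisson(40 if loc == 0 else 10, size=12) for loc in range(8)]
selective, p = is_spatially_selective(rates)
print(bool(selective), p)
```

A rank-based test is a natural choice here because single-trial spike counts are often skewed and need not be normally distributed.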

2.3. Covert search task

The basic variant of the covert search task (Fig. 1a) was tested with a display size of four elements. Individual stimuli were scaled with retinal eccentricity and ranged from 1.5° to 3.0° in height and 1.0° to 2.0° in width. To begin a trial, monkeys fixated a central fixation spot (presented anew on each trial) and grabbed two response bars (Fig. 1a). Two line segments were then removed from each placeholder, yielding a display with one target (a right- or left-facing letter “E”) and several unique distractors. Monkeys reported target orientation by releasing the right bar for a right-facing target or the left bar for a left-facing target within 100-1000 ms of the display change. A correct response was rewarded with a drop of juice, after which the fixation point was removed and the placeholder display was restored. Fixation was continuously enforced to within 1.5°-2° of the fixation point. Trials with errors (fixation breaks and incorrect, early or late bar releases) were aborted without reward.
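The trial-outcome rules above can be summarized in a short sketch. The function `classify_trial`, its argument names, the order in which errors are checked, and the 1.5° default window (the text gives 1.5°-2°) are all illustrative assumptions, not the laboratory's actual control code.

```python
from typing import Optional

RT_MIN_MS, RT_MAX_MS = 100, 1000  # response window after the display change


def classify_trial(target_facing: str, released_bar: Optional[str],
                   rt_ms: Optional[float], max_eye_dev_deg: float,
                   fix_window_deg: float = 1.5) -> str:
    """Label one covert-search trial (hypothetical helper).

    target_facing and released_bar are "left" or "right"; released_bar is
    None if no bar was released. Only "correct" trials would be rewarded.
    """
    if max_eye_dev_deg > fix_window_deg:
        return "fixation_break"          # gaze left the fixation window
    if released_bar is None or rt_ms is None or rt_ms > RT_MAX_MS:
        return "late"                    # no release inside the window
    if rt_ms < RT_MIN_MS:
        return "early"                   # anticipatory release
    # Right-facing E maps to the right bar, left-facing E to the left bar.
    return "correct" if released_bar == target_facing else "incorrect"


print(classify_trial("right", "right", 450, 0.4))
```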

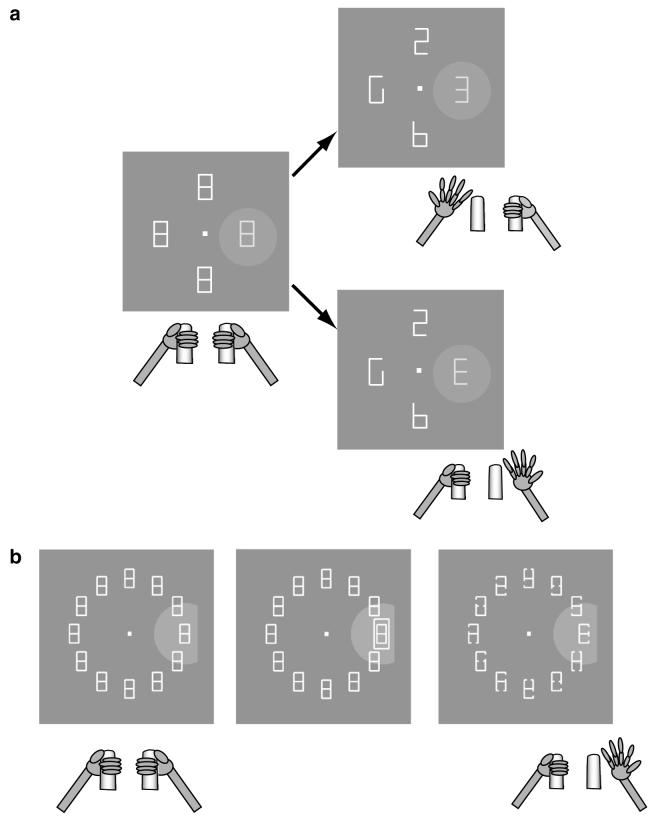

Fig. 1.

Behavioral task. (a) Four figure-8 placeholders remained stably on the screen throughout the intertrial interval and, to begin a trial, monkeys fixated a central spot and grabbed two bars at stomach level (left). The circular array was scaled and rotated so that one placeholder fell in the center of the RF (gray shaded area) when the monkey maintained central fixation. Approximately 500 ms after fixation achievement two line segments were removed from each placeholder, revealing one target, the letter “E”, and 3 unique distractors (right). Unpredictably and with uniform probabilities, the “E” appeared at any display location and could be forward-facing (bottom right) or backward-facing (top right). Monkeys received a juice reward if they indicated the orientation of the E by releasing the right bar if the E was forward-facing or the left bar if it was backward-facing. Trials ended with extinction of the fixation point and restoration of the placeholder display. (b) To examine bottom-up responses we used a variation of the basic task in which a perturbation stage was interposed between initial fixation and presentation of the target display. In the trials discussed here the perturbation appeared 200 ms before target onset and lasted 50 ms. The perturbation could be an abrupt onset frame as shown here, or a 50 ms color, luminance or position change in an existing placeholder. In the SAME context (shown here) the perturbation appeared at the target location; in the OPPOSITE context it appeared in a neighborhood opposite the target. To increase task difficulty the placeholder display contained 12 elements and only a fraction of each line segment was removed from a placeholder to reveal the search array. Adapted, with permission, from Balan and Gottlieb (2006), Oristaglio et al. (2006).

In the perturbation version of the search task (Fig. 1b), the initial fixation interval was lengthened to 800 or 1200 ms on each trial and a 50 ms visual perturbation was presented starting 200 ms before presentation of the target display. The perturbation consisted of the flash of a new object (a frame surrounding a placeholder) or a brief change in the color, luminance (increase or decrease) or position of an existing placeholder. To increase task difficulty, display size was increased to 12 elements and only a fraction of each line segment was removed from each placeholder. The locations of the perturbation and target were randomly selected from a restricted neighborhood of 2 or 3 elements centered in, or opposite, the neuron’s RF, with a spatial relationship determined by behavioral context. Contexts were run in interleaved blocks of ∼300 trials. Within each context, the locations of target and perturbation and 2-3 of the 5 possible perturbation types were randomly interleaved.
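The SAME/OPPOSITE design can be made concrete with a sketch of a trial-block generator. It assumes a 12-position ring with the RF at index 0, 3-element neighborhoods, and equal probabilities for the two neighborhoods; `make_block`, `neighborhood` and the default pair of perturbation types are hypothetical choices for illustration.

```python
import random

N_ELEMENTS = 12  # 12-element placeholder ring used in the perturbation task
# Labels follow the Fig. 4 legend; which 2-3 types run in a block is a parameter.
PERTURBATION_TYPES = ("INT+", "INT-", "COL", "FRAME", "MOVE")


def neighborhood(center, size=3, n=N_ELEMENTS):
    """Indices of `size` adjacent ring positions around `center` (wrapping at n)."""
    half = size // 2
    return [(center + k) % n for k in range(-half, size - half)]


def make_block(context, n_trials=300, types=("FRAME", "COL"), rf_index=0, seed=None):
    """Generate one hypothetical block of SAME or OPPOSITE trials."""
    if context not in ("SAME", "OPPOSITE"):
        raise ValueError("context must be 'SAME' or 'OPPOSITE'")
    if not set(types) <= set(PERTURBATION_TYPES):
        raise ValueError("unknown perturbation type")
    rng = random.Random(seed)
    rf_hood = neighborhood(rf_index)
    opp_hood = neighborhood((rf_index + N_ELEMENTS // 2) % N_ELEMENTS)
    trials = []
    for _ in range(n_trials):
        # The target falls in the RF neighborhood or the opposite one with equal probability.
        if rng.random() < 0.5:
            target_hood, other_hood = rf_hood, opp_hood
        else:
            target_hood, other_hood = opp_hood, rf_hood
        target = rng.choice(target_hood)
        # SAME: the perturbation validly cues the target; OPPOSITE: it lands across the ring.
        perturbation = target if context == "SAME" else rng.choice(other_hood)
        trials.append({"context": context, "target": target,
                       "perturbation": perturbation, "type": rng.choice(types)})
    return trials
```

In this sketch the only difference between contexts is the rule linking perturbation to target, mirroring the point that an identical perturbation is a valid cue in one context and a distractor in the other.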

2.4. Data analysis

Firing rates were measured from the raw spike times and, unless otherwise stated, statistical tests are based on the Wilcoxon rank-sum test (or the signed-rank test for paired data) or on non-parametric analysis of variance, evaluated at p < .05. For population analyses, average firing rates were calculated for each neuron and the distributions of average firing rates were compared. ROC indices indicating selectivity for target location (Fig. 2b) were calculated by comparing response distributions for the target and distractors in the RF regardless of manual release; indices for limb selectivity (Fig. 6) were calculated by comparing distributions of firing rates associated with right and left bar release regardless of target location (Green & Swets, 1968). ROC indices were calculated in 10 ms bins aligned on target onset and bar release, and the statistical significance of each index was estimated using a permutation test. A value was deemed significant if its 95% confidence interval did not include 0.5.
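The ROC index can be computed as the probability that a randomly drawn rate from one condition exceeds one from the other (ties counted as one half), which equals the area under the ROC curve. The sketch below adds a two-sided permutation p-value against 0.5; how the original analysis derived its 95% interval is not specified, so the permutation scheme, the function names and the 1000-permutation default are assumptions.

```python
import numpy as np


def roc_index(a, b):
    """Area under the ROC curve: P(a > b) + 0.5 * P(a == b) over all pairs.
    Values > 0.5 mean higher rates in condition a (e.g., target in RF)."""
    a = np.asarray(a, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    return float((a > b).mean() + 0.5 * (a == b).mean())


def roc_permutation_test(a, b, n_perm=1000, seed=0):
    """Two-sided permutation p-value for the ROC index against chance (0.5)."""
    rng = np.random.default_rng(seed)
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    observed = roc_index(a, b)
    pooled = np.concatenate([a, b])
    extreme = 0
    for _ in range(n_perm):
        shuffled = rng.permutation(pooled)  # shuffle condition labels
        null_value = roc_index(shuffled[:a.size], shuffled[a.size:])
        if abs(null_value - 0.5) >= abs(observed - 0.5):
            extreme += 1
    return observed, (extreme + 1) / (n_perm + 1)


def roc_time_course(rates_a, rates_b):
    """ROC index in each time bin; rates_* are (trials, bins) arrays of per-bin rates."""
    rates_a = np.asarray(rates_a, dtype=float)
    rates_b = np.asarray(rates_b, dtype=float)
    return np.array([roc_index(rates_a[:, j], rates_b[:, j])
                     for j in range(rates_a.shape[1])])
```

For target selectivity, `a` would hold rates with the target in the RF and `b` rates with a distractor in the RF; for limb selectivity, right- versus left-bar trials.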

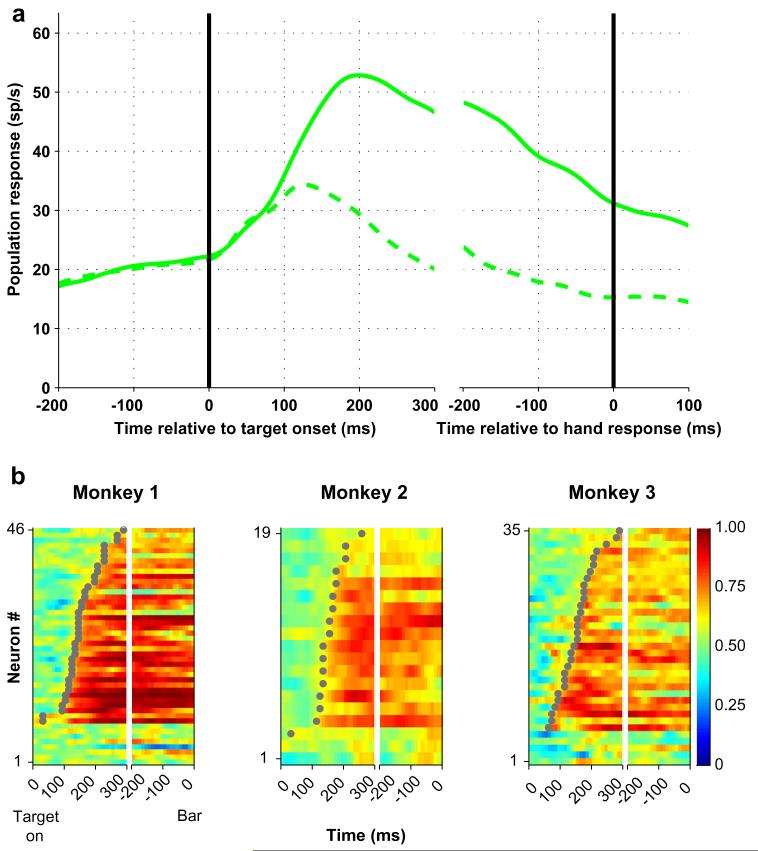

Fig. 2.

LIP neurons encode target location during covert search. (a) Population responses on trials in which the target (solid) or a distractor (dashed) was in the RF. Responses are aligned on search display onset in the left panel and on bar release in the right. Spike density histograms were derived by convolving individual spike times with a Gaussian kernel with standard deviation of 15 ms and averaging the convolved traces across all neurons (n = 81). (b) Color maps show the ROC values representing neuronal discrimination of target location for one monkey. Each row represents one neuron and each pixel represents the ROC value in a 10 ms time bin aligned on target onset (left) and bar release (right). Neurons are sorted by time of onset of significant selectivity (gray dots) within each subject. Adapted, with permission, from Oristaglio et al. (2006).
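The spike density function described in the caption can be sketched as a sum of unit-area Gaussians (σ = 15 ms) centered on each spike, which yields rates in spikes per second. Sampling at 1 ms and averaging across trials within a neuron are assumptions; a population curve would then average these per-neuron traces.

```python
import numpy as np


def spike_density(spike_times_by_trial, t_start, t_stop, sigma=0.015, dt=0.001):
    """Trial-averaged spike density (sp/s) from spike times given in seconds.

    Each spike contributes a Gaussian of unit area (standard deviation `sigma`),
    so the curve integrates to the mean spike count per trial.
    """
    t = np.arange(t_start, t_stop, dt)
    norm = 1.0 / (sigma * np.sqrt(2 * np.pi))
    sdf = np.zeros_like(t)
    for spikes in spike_times_by_trial:
        for s in spikes:
            sdf += norm * np.exp(-0.5 * ((t - s) / sigma) ** 2)
    return t, sdf / len(spike_times_by_trial)


# Hypothetical trials aligned on display onset (time 0).
t, sdf = spike_density([[0.12, 0.18, 0.21], [0.15, 0.19]], -0.1, 0.4)
```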

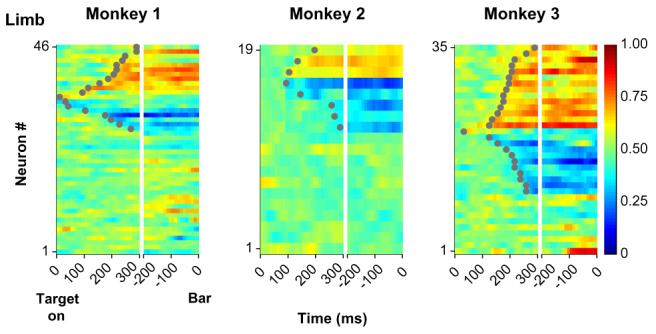

Fig. 6.

ROC analysis of the limb signal. Color maps show the ROC values representing neuronal discrimination of the active limb for one monkey. Each row represents one neuron and each pixel represents the ROC value in a 10 ms time bin aligned on target onset (left) and bar release (right). Values above and below 0.5 (red and deep blue) represent selectivity for the right or left limbs. Neurons are sorted by time of onset of significant selectivity (gray dots) within each category. Adapted, with permission, from Oristaglio et al. (2006).

3. Results

3.1. LIP neurons encode covert voluntary attention during visual search

When we began our experiments several lines of evidence suggested that LIP is important for spatial attention. Reversible inactivation of LIP had been shown to produce spatially-specific deficits in finding and discriminating visual targets (Wardak, Olivier, & Duhamel, 2002, 2004). In addition, LIP neurons had been shown to respond selectively for salient (abruptly appearing) objects and for eye movement targets, with firing rates correlating with psychophysical measures of attention (Bisley & Goldberg, 2003; Gottlieb, Kusunoki, & Goldberg, 1998).

Single-neuron recordings had thus provided evidence for LIP involvement in two forms of attention: overt attention (accompanied by rapid eye movements) and automatic attention (directed toward salient objects independently of their behavioral relevance). We asked whether LIP is also recruited by top-down covert attention in the absence of eye movements.

To address this question we (Oristaglio et al., 2006) devised a visual search task in which monkeys had to use covert attention to discriminate a peripheral visual target but were prevented from making saccades or any movement toward the target. In the task, a circular array of several figure-8 placeholders remained stably on the screen for a block of trials; monkeys began each trial by fixating a point in the center of the array and grabbing two response bars (Fig. 1a, left panel). At this point, the search display was presented by removing two line segments from each figure-8 placeholder, revealing a display with one target—an “E”-like shape—and several unique distractors. The location and the orientation of the target (right- or left-facing) changed unpredictably from trial to trial. Monkeys were rewarded for reporting target orientation by releasing the bar grasped with the right paw if the target was right-facing or the bar grasped with the left paw if it was left-facing.

The task was designed to minimize the impact of motor planning on visual selection. Monkeys maintained central fixation throughout the trial, and we found no systematic effects of target location on eye position either during or after a trial (Rayleigh test for spatial directedness, p > .6). The bar release used for the perceptual report was not directed toward a spatial target and did not require visual selection. Thus, to the extent that LIP was recruited in this task, it was expected to be engaged only by the process of visual search and not by motor planning.
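The Rayleigh test asks whether a set of angles is clustered in some direction rather than uniformly distributed. How eye-position data were reduced to angles is not described; the sketch assumes one angle per trial, for example the direction of eye displacement measured relative to the target direction, so that drift toward the target would cluster near 0. The p-value uses a standard large-sample approximation; `rayleigh_test` is a hypothetical helper.

```python
import numpy as np


def rayleigh_test(angles_rad):
    """Rayleigh test for non-uniformity of circular data.

    Returns (mean resultant length R_bar, approximate p-value).
    """
    a = np.asarray(angles_rad, dtype=float)
    n = a.size
    R = np.hypot(np.cos(a).sum(), np.sin(a).sum())  # resultant vector length
    r_bar = R / n
    # Large-sample approximation to the p-value of the Rayleigh statistic.
    p = np.exp(np.sqrt(1 + 4 * n + 4 * (n ** 2 - R ** 2)) - (1 + 2 * n))
    return float(r_bar), float(min(max(p, 0.0), 1.0))


# Hypothetical displacement directions scattered evenly around the circle.
r_bar, p = rayleigh_test(np.linspace(0, 2 * np.pi, 12, endpoint=False))
```

A large p-value, as in the p > .6 reported above, means the directions show no detectable bias toward any one direction.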

Performance declined as a function of the number of distractors. Reaction times for displays of 2, 4, and 6 elements were 445 ± 39, 463 ± 29, and 474 ± 23 ms, with corresponding accuracy of 98 ± 4%, 95 ± 7%, and 90 ± 8% (p < 10⁻⁴ and p < 10⁻¹⁵ for the effect of set size, Kruskal-Wallis analysis of variance) (Oristaglio et al., 2006). Thus, despite extensive training, the task remained challenging and the target did not simply pop out from the visual array.

Our first question was whether LIP neurons, physiologically identified by their spatially selective activity before saccades (Barash, Bracewell, Fogassi, Gnadt, & Andersen, 1991), also respond during covert selection. During neuronal recording we adjusted the display so that one placeholder fell into the center of the receptive field (RF) of the neuron under study while the others were outside the RF. If neurons encode covert attention they should show selectivity for target location—i.e., respond more when the target than when a distractor is in the RF. As shown in Fig. 2, strong selectivity for target location was seen in the population response in LIP (Fig. 2a). Peak firing rates related to the target in the RF (175-225 ms after display onset) were comparable to those in the presaccadic epoch of the memory-guided saccade task (49 vs. 42 sp/s, p = .16). Quantitative analysis (see Section 2) showed that over 80% of neurons developed a statistically significant signal of target location during covert search, with median latencies of approximately 150 ms after display presentation (Fig. 2b).

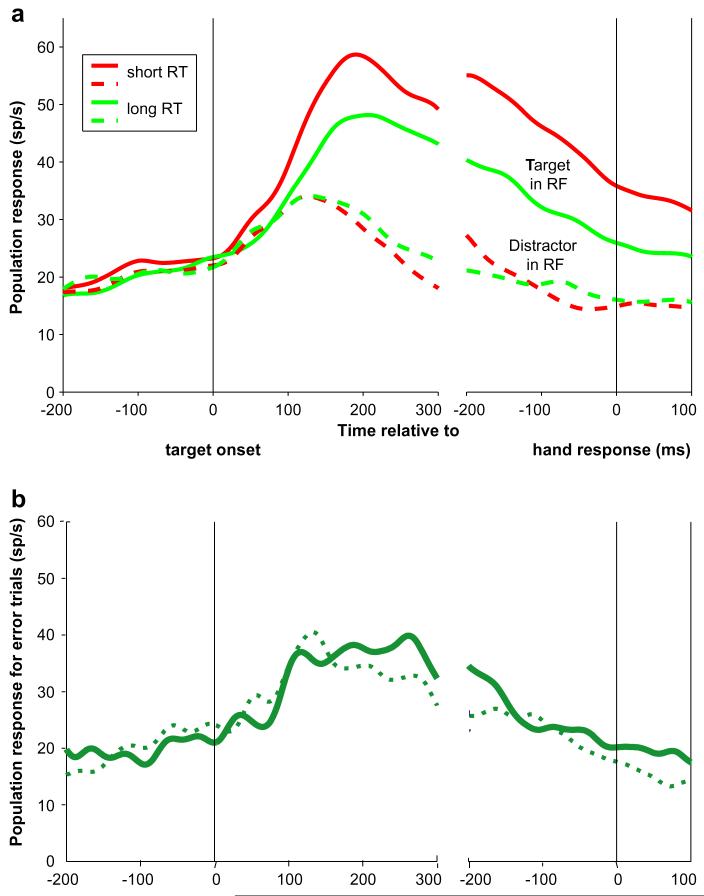

The target location signal was correlated with task performance. Target-related firing rates rose faster in trials with shorter than in those with longer reaction times (Fig. 3a), yielding a significant trial-by-trial correlation between firing rates and reaction times (firing rates 100-200 ms after search onset; population correlation coefficient of -0.20, p < 10⁻⁶). In addition, the robust target location selectivity on correct trials was almost entirely absent on error trials in which the monkey released the wrong bar (Fig. 3b).
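The text does not state how the single population coefficient was computed. One common approach, assumed here, is to z-score firing rates and reaction times separately within each neuron (removing differences in overall rate and speed between sessions), pool all trials, and compute a Pearson correlation. The helper name and data layout are hypothetical.

```python
import numpy as np


def pooled_rate_rt_correlation(neurons):
    """Pearson r between firing rate and RT after within-neuron z-scoring.

    neurons: list of (rates, rts) pairs, one per neuron, matched trial by trial
    (e.g., rates 100-200 ms after search onset on target-in-RF trials).
    """
    z_rates, z_rts = [], []
    for rates, rts in neurons:
        rates = np.asarray(rates, dtype=float)
        rts = np.asarray(rts, dtype=float)
        z_rates.append((rates - rates.mean()) / rates.std())
        z_rts.append((rts - rts.mean()) / rts.std())
    return float(np.corrcoef(np.concatenate(z_rates), np.concatenate(z_rts))[0, 1])
```

A negative value, as reported, means that trials with higher target-related firing tended to have faster reaction times.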

Fig. 3.

The target location response reflects task performance. (a) Population responses to the target in the RF, divided according to median reaction time. Red and green traces represent trials with reaction times below and above the median, respectively. (b) Population activity on error trials in which the target was in or out of the RF (solid and dashed traces). Although responses increased slightly during the search phase, there was no reliable discrimination of target location.

These results showed that LIP reliably encodes voluntary, top-down selection even if the task does not require eye movements.

3.2. Neurons integrate top-down and bottom-up responses in a context-specific manner

In natural behavior attention is often attracted to salient objects. However, the impact of such objects can be strongly dependent on behavioral context. For example, a singleton (pop-out) distractor is much more efficient in attracting attention if subjects are actively looking for a singleton target than if they are not (Bacon & Egeth, 1994). These observations suggest that the attentional weight of a conspicuous stimulus is not immutable but can be gated by behavioral context or the subject’s general behavioral strategy. As LIP neurons were known to be sensitive to both automatic and voluntary selection (Bisley & Goldberg, 2003; Gottlieb, Kusunoki, & Goldberg, 2004; Gottlieb et al., 1998), we wondered how these neurons integrate top-down and bottom-up information.

To examine this question we used a variant of the covert search task in which a brief visual perturbation—a 50 ms change in a display element—was delivered 200 ms before presentation of the search display (Balan & Gottlieb, 2006) (Fig. 1b). The type of perturbation delivered on a given trial was unpredictable and included the appearance of a new object (a frame around a placeholder) or a change in luminance, color or location of an existing placeholder. We further varied the behavioral significance of the perturbation by varying the spatial relationship between perturbation and target in interleaved blocks of trials. In the SAME trial blocks the perturbation always appeared at exactly the same location as the target, thus validly cueing target location. In OPPOSITE blocks the perturbation appeared at a range of locations approximately opposite the target, providing little reliable information about target location. Thus, an identical perturbation was potentially task-relevant in the SAME context but was a mere distractor in the OPPOSITE context.

Analysis of behavioral performance suggested that monkeys were sensitive to the contextual difference. In the SAME context, monkeys had shorter reaction times on perturbation relative to no-perturbation trials (average difference of 38 ms or 7.35%, p < 10⁻¹⁶), suggesting that they used the perturbation as a cue for target location. However, in the OPPOSITE context, perturbations caused no change in reaction time or accuracy, suggesting that monkeys learned to suppress their distracting effects. This null perturbation effect was not due to the large distance between perturbation and target, as perturbations did impair performance if delivered at a later time (during search) in both contexts (Balan & Gottlieb, 2006). Thus, monkeys appeared to have treated the perturbations differently according to the higher-order relationship between perturbation and target.

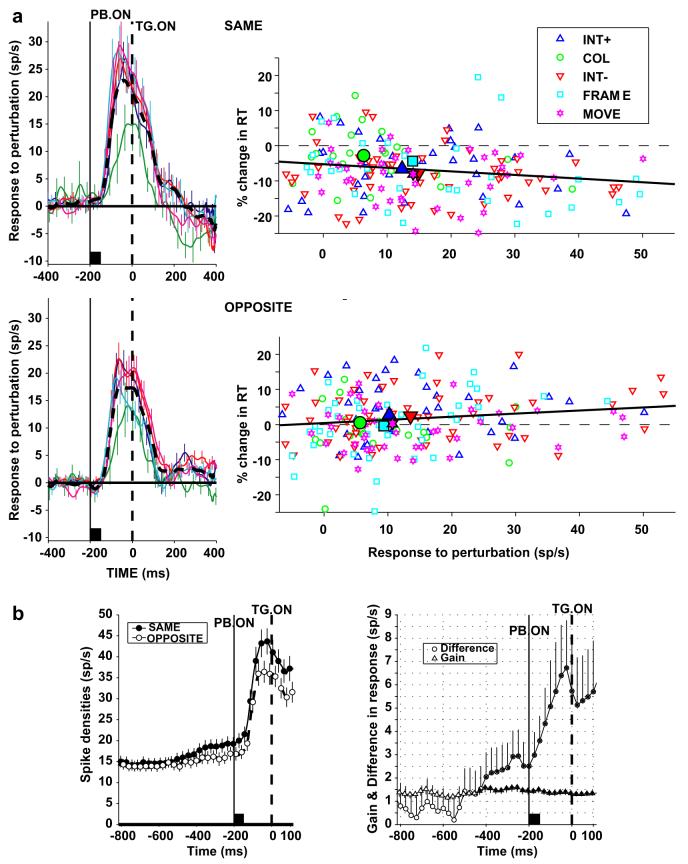

LIP responses were sensitive to context. Fig. 4a shows average neural responses to the different perturbations in the SAME and OPPOSITE contexts (top and bottom left panels). Responses were not strongly affected by perturbation type but were stronger in the SAME than in the OPPOSITE condition. Moreover, perturbation responses showed opposite correlations with reaction times in the two contexts (Fig. 4a, right panels). In the SAME context, when the perturbation marked the target’s location, a higher perturbation response was associated with a larger facilitation (decrease) in reaction time relative to no-perturbation trials (r = -.18, p < .0012). In the OPPOSITE context, when the perturbation marked a distractor location, the converse was found: a larger perturbation response was coupled with a greater increase in reaction time relative to no-perturbation trials (r = .14, p < .045).
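Each point in the right panels of Fig. 4a pairs a normalized perturbation response (firing on perturbation trials minus firing on no-perturbation trials, 50-250 ms after perturbation onset) with the corresponding change in reaction time. The sketch below builds those pairs and correlates them; the dictionary layout, the hypothetical values and the choice of a Pearson correlation are assumptions, since the correlation type is not stated.

```python
import numpy as np
from scipy.stats import pearsonr


def perturbation_effects(cells):
    """One point per neuron x perturbation type.

    Each cell is a dict of means: 'rate_pb' / 'rate_nopb' (firing 50-250 ms
    after perturbation onset, with / without a perturbation) and
    'rt_pb' / 'rt_nopb' (reaction times). Returns (response, delta_rt).
    """
    response = np.array([c["rate_pb"] - c["rate_nopb"] for c in cells])
    delta_rt = np.array([c["rt_pb"] - c["rt_nopb"] for c in cells])
    return response, delta_rt


# Hypothetical SAME-context cells: stronger responses go with larger RT savings.
cells = [
    {"rate_pb": 30.0, "rate_nopb": 10.0, "rt_pb": 430.0, "rt_nopb": 470.0},
    {"rate_pb": 22.0, "rate_nopb": 12.0, "rt_pb": 455.0, "rt_nopb": 475.0},
    {"rate_pb": 18.0, "rate_nopb": 14.0, "rt_pb": 462.0, "rt_nopb": 468.0},
    {"rate_pb": 45.0, "rate_nopb": 15.0, "rt_pb": 420.0, "rt_nopb": 480.0},
]
response, delta_rt = perturbation_effects(cells)
r, p = pearsonr(response, delta_rt)
```

In the SAME context a negative r (bigger response, bigger RT decrease) indicates facilitation; in the OPPOSITE context the reported positive r indicates interference.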

Fig. 4.

Contextual effects in the integration of bottom-up and top-down information. (a) Left panels show the population response to the perturbation in the SAME and OPPOSITE contexts, normalized by subtracting activity on no-perturbation trials. Right panels plot the corresponding relationship between the perturbation response (50-250 ms after perturbation onset) and the change in reaction time relative to no-perturbation trials. Each point represents one neuron and one perturbation type (INT+, increase in luminance; COL, change of color; INT-, decrease in luminance; FRAME, appearance of new frame; MOVE, back-and-forth movement of placeholder). Although responses to color appeared weaker and more sluggish than those to the other transients (left panels), pairwise comparisons revealed significant differences between responses to color and the other transients in fewer than 25% of neurons, and no statistically significant differences at the population level. (b) The left panel shows the population responses (mean and standard error) in the SAME and OPPOSITE contexts when the perturbation was in the RF. The trial began with a fixation interval of 800 or 1200 ms, followed by the 50 ms perturbation (PB. ON) and presentation of the search display (TG. ON, time 0). After search display onset, either a target or a distractor appeared in the RF, and thus activity is no longer comparable between the two contexts. The right panel shows the running average of the difference and ratio (gain) between firing rates in the SAME and OPPOSITE contexts. Filled symbols represent time bins in which values were significantly different (p < .05) from 0 (difference) or 1 (gain). Adapted, with permission, from Balan and Gottlieb (2006).

Fig. 4b shows the time course of the contextual effect from fixation onset through the perturbation response. At the onset of a trial, firing rates were similar in the SAME and OPPOSITE contexts. However, toward the end of the fixation period, firing rates increased slightly in the SAME relative to the OPPOSITE context, and the contextual effect increased markedly once the perturbation appeared. The ratio between firing rates in the SAME and OPPOSITE contexts was approximately 1.1 throughout the fixation and perturbation responses, suggesting that the contextual effect consisted of a change in response gain. Once the perturbation appeared, this global increase in gain (in the SAME context) caused a specific enhancement in the response to the perturbation, which was, in turn, correlated with the facilitatory effect of the perturbation on task performance.
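Why a constant ratio points to a gain change can be seen in a small worked example with hypothetical firing rates (not data from the study). A multiplicative factor of 1.1 adds little in absolute terms when rates are low during fixation but adds much more once the perturbation drives the neuron; an additive offset would instead give a constant difference and a shrinking ratio.

```python
import numpy as np

# Hypothetical OPPOSITE-context rates (sp/s) in three epochs.
epochs = ["early fixation", "late fixation", "perturbation response"]
opposite = np.array([10.0, 12.0, 40.0])

# Gain model: SAME-context rates are a fixed multiple of OPPOSITE-context rates.
gain = 1.1
same = gain * opposite
difference = same - opposite   # about 1.0, 1.2, 4.0 sp/s: grows with the rate
ratio = same / opposite        # 1.1 in every epoch

# Additive alternative: a constant 1 sp/s boost in the SAME context.
same_additive = opposite + 1.0
ratio_additive = same_additive / opposite  # shrinks as rates rise

for name, d, g in zip(epochs, difference, ratio):
    print(f"{name}: difference {d:.1f} sp/s, ratio {g:.2f}")
```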

Taken together, these findings show that LIP neurons integrate cognitive and external (sensory) attentional cues and mediate context-dependent interactions between these cues. The contextual effect consisted of a change in response gain, which resulted in higher responses to a salient input when that input was task-relevant. This effect may be related to a recent report that LIP responses to a color singleton are weaker if that singleton is always presented as a task-irrelevant distractor (Ipata, Gee, Gottlieb, Bisley, & Goldberg, 2006).

3.3. Responses to covert attention are shaped by decisional factors

As mentioned above, many investigators have cast LIP as a specifically oculomotor area, arguing that it is specialized for integrating information relevant for saccade decisions (Gold & Shadlen, 2007). The present results show that LIP is strongly activated whether or not saccades ensue. Nevertheless, it is clear that neurons carry both oculomotor and attentional signals, and the question remains: how should we understand this convergence? Is every act of attentional selection a saccade plan that may or may not be executed depending on activity in downstream areas? Is attention exclusively related to saccades (Moore et al., 2003) or does it have a more general relation to motor planning? Some insight into these questions came from the unexpected finding that during this covert search task neurons were sensitive to the planning of the hand movement with which monkeys reported target orientation (Oristaglio et al., 2006).

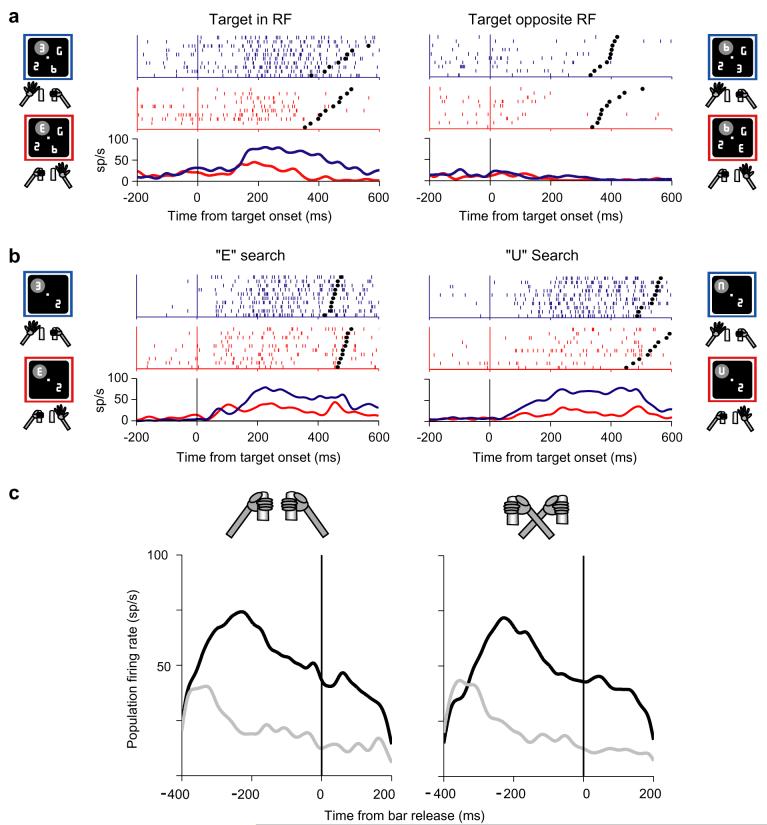

It is known that 20% of LIP neurons respond prior to reaching movements, encoding the location of a reach target (Snyder, Batista, & Andersen, 1997). However, the manual report we selected for this task was a mere grasp release that did not require spatial selection and thus was expected to produce an even smaller (if any) response in LIP. To our surprise, we found that in a sizeable fraction of neurons the target-selective response was strongly modulated by planning of the grasp release (Oristaglio et al., 2006). An example is shown in Fig. 5a. As described above, the neuron responded more when the target than when a distractor appeared in its RF. However, its responses when the target was in the RF were much stronger when the monkey released the left bar to indicate a left-facing “E” than when she released the right bar to indicate a right-facing “E”. Control experiments showed that these modulations did not reflect target shape per se as neurons showed consistent hand preference for a novel set of shapes (Fig. 5b). They also did not reflect the work space of the limb: when monkeys were trained to perform the task with their arms crossed across the body midline (while maintaining the same mapping between target orientation and a specific limb) limb selectivity remained unchanged (Fig. 5c). Therefore, neurons encoded some aspect of the effector (right or left limb) with which monkeys signaled target orientation independently of that limb’s position in space. Significant limb selectivity was found in more than a third of neurons with target location selectivity (as many as 70% in one monkey) and appeared with only a slight delay, on average, relative to information about target location (Fig. 6). These temporal dynamics suggest that limb selectivity may reflect the gradual evolution of a motor plan, which occurred largely in parallel with the attentional selection of the target (cf. Fig. 2b).
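The comparison of limb and target-location latencies depends on defining when significant selectivity begins. The criterion is not spelled out here; a common choice, assumed in this sketch, is the first bin of a run of consecutive significant 10 ms bins. The helper `selectivity_onset`, the run length of 3 and the example significance patterns are hypothetical.

```python
def selectivity_onset(significant, bin_starts_ms, min_run=3):
    """Start time of the first run of `min_run` consecutive significant bins.

    significant: sequence of booleans, one per time bin (e.g., from ROC tests).
    Returns None if no such run exists.
    """
    run = 0
    for i, sig in enumerate(significant):
        run = run + 1 if sig else 0
        if run == min_run:
            return bin_starts_ms[i - min_run + 1]
    return None


bins = list(range(0, 300, 10))  # 10 ms bins aligned on target onset
# Hypothetical neuron: target selectivity from 150 ms, limb selectivity from 190 ms.
target_sig = [t >= 150 for t in bins]
limb_sig = [t >= 190 for t in bins]
limb_delay = selectivity_onset(limb_sig, bins) - selectivity_onset(target_sig, bins)
```

Requiring a run of bins guards against isolated significant bins that arise by chance when many bins are tested.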

Fig. 5.

Spatial responses to the search target are modulated by limb motor planning. (a) Representative limb effect in one neuron. Neural activity is shown for trials in which the target was in the center of the RF or at the opposite location and was either facing to the right (red) or to the left (blue). In the raster plots each line is one trial, each tick represents the time of one action potential relative to target presentation. Spike density histograms were derived by convolving individual spike times with a Gaussian kernel with standard deviation of 15 ms. Activity is aligned on target presentation (time 0) and black dots represent bar release. Only correct trials are shown, ordered offline according to reaction time. Cartoons indicate the location of the target, the RF (gray oval) and the manual response. The neuron responded best when the target was in its RF (upper left quadrant) and the monkey released the left bar. (b) Neuron selective for left hand release on both “E” and “U” search (trials in which the target was in the RF). (c) Average response of 6 limb-selective neurons during task performance in the standard hand position and with hands crossed across the body midline. No neuron switched selectivity in the crossed hand position. Adapted, with permission, from Oristaglio et al. (2006).

A critical point that can be appreciated from Fig. 5a is that limb information did not independently activate LIP neurons. Neurons showed little selectivity if a distractor was in the RF, and showed no limb selectivity if there was no visual stimulation to the RF, even though monkeys performed the same bar release (Oristaglio et al., 2006). Consistent with this, correlations between LIP firing rates and reaction time were determined by the location of the target regardless of the manual response. Thus, limb selectivity in LIP is not a reliable correlate of a limb movement per se, but is strongly gated by and modifies the neurons’ primary signal of covert attention.
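The gating described above can be illustrated with a toy model in which the limb signal scales the visual (attentional) drive rather than adding to it. This is a hypothetical illustration with made-up parameters, not a model fitted to LIP data: it simply shows that multiplicative gating produces strong limb selectivity with the target in the RF, weak selectivity with a distractor, and none without visual stimulation.

```python
def toy_lip_rate(visual_drive, limb_preferred, limb_gain=0.5, baseline=5.0):
    """Toy firing rate (sp/s): the limb signal multiplies the visual drive."""
    return baseline + visual_drive * (1.0 + (limb_gain if limb_preferred else 0.0))


# Hypothetical visual drive for each RF condition.
for label, drive in [("target in RF", 40.0), ("distractor in RF", 10.0),
                     ("no stimulus in RF", 0.0)]:
    limb_effect = toy_lip_rate(drive, True) - toy_lip_rate(drive, False)
    print(label, limb_effect)  # 20.0, 5.0, 0.0
```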

These findings show that motor influences in LIP are multimodal, reflecting planning of skeletal as well as ocular movements, and that these motor influences modulate a primary visuo-spatial response.

4. Discussion

We reviewed evidence showing that LIP neurons receive convergent signals from the sensory, motor and cognitive domains. When tested with a covert visual search task, neurons reflected the top-down significance of the search target, the limb with which monkeys reported target orientation, and the context-dependent attentional weight of a visual transient. Reports from other laboratories suggest that the list of non-spatial variables influencing LIP neurons is broader still. Neurons are sensitive to expected reward (Platt & Glimcher, 1999; Sugrue et al., 2004), the difficulty of a perceptual decision (Roitman & Shadlen, 2002), prediction of motion trajectory (Assad & Maunsell, 1995), the length of elapsed time (Janssen & Shadlen, 2005; Leon & Shadlen, 2003), stimulus color (Toth & Assad, 2002), the proactive planning of a button press (Maimon & Assad, 2006) and abstract category (Freedman & Assad, 2006). Practically all studies of this area deliberately target a functionally-defined subset of neurons (neurons with spatially-selective delay activity before memory-guided saccades) and have reported no correlation (positive or negative) between response properties on the memory-guided saccade task and those on other paradigms. Thus, a wide variety of signals appears to converge onto a relatively well-defined neuronal population within the intraparietal sulcus.

4.1. Interpreting non-spatial signals

Based on these findings, different investigators have attached different labels to LIP, the most prominent of which are “attention”, “saccade planning” and “decision-making” (see Culham & Kanwisher, 2001, for a similar view on human parietal cortex). We suggest, however, that the response properties in LIP allow a more focused interpretation. In particular, we note that in each of the experiments cited above, non-spatial signals were obtained in the context of directed visual attention. In each case a task-relevant object was placed in the RF and neurons had robust responses to this object (above and beyond those to a distractor, if one was present). Non-spatial variables such as reward, timing, or category modulated the visuospatial response without, in and of themselves, activating neurons. In our task, limb selectivity was not expressed in the absence of visual stimulation to the RF and was nearly absent if a task-irrelevant distractor was in the RF. Similarly, in a task in which monkeys were free to choose between two saccade targets associated with varying amounts of reward, Sugrue and Newsome noted that LIP neurons did not encode the abstract value of the two targets (which was linked to target color) but only signaled the relative pull of the target toward or away from the RF (Sugrue et al., 2004). In all cases, therefore, the primary and most consistent response in LIP encoded the location of a task-relevant target, and non-spatial effects modulated this spatial response. Thus, we must be careful in interpreting statements such as “LIP neurons encode X”, where X is a non-spatial aspect of the task. Although we can extract information about X from LIP by selecting a specific subset of trials, LIP encodes X only if attention is appropriately deployed.
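The point that information about X can be extracted only from a specific subset of trials can be made concrete with a signal detection measure. The sketch below computes the area under the ROC curve (0.5 means no discrimination, 1.0 perfect discrimination) for a hypothetical variable X, separately for trials with the target or a distractor in the RF. The function name `roc_area` and all firing rates are invented for illustration and are not data from any study cited here.

```python
from typing import Sequence


def roc_area(a: Sequence[float], b: Sequence[float]) -> float:
    """Area under the ROC curve separating samples a from samples b.

    Computed as the probability that a value drawn from a exceeds one drawn
    from b, counting ties as one half (the Mann-Whitney formulation).
    """
    wins = 0.0
    for x in a:
        for y in b:
            if x > y:
                wins += 1.0
            elif x == y:
                wins += 0.5
    return wins / (len(a) * len(b))


# Hypothetical single-neuron rates (spikes/s), sorted by a non-spatial variable X
# and by what occupied the RF. These numbers are invented for illustration.
target_in_rf = {"X_high": [42, 45, 50, 47], "X_low": [35, 38, 40, 36]}
distractor_in_rf = {"X_high": [12, 10, 14, 11], "X_low": [11, 13, 10, 12]}

print(roc_area(target_in_rf["X_high"], target_in_rf["X_low"]))          # 1.0
print(roc_area(distractor_in_rf["X_high"], distractor_in_rf["X_low"]))  # 0.53125
```

In this toy case X is perfectly decodable when the target is in the RF and close to chance otherwise, so pooling all trials would understate how strongly X is expressed when attention is deployed to the RF.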

This in turn suggests that non-spatial signals in LIP represent inputs about non-spatial computations, which can affect the spatial allocation of attention. In and of itself this is a reasonable proposition, because much psychophysical evidence shows that spatial attention is sensitive not only to perceptual but also to motor, cognitive and motivational task components. Attention is allocated to the goal of an upcoming saccade, a reach movement, or a button press (Baldauf, Wolf, & Deubel, 2006; Deubel & Schneider, 2003; Kowler, Anderson, Dosher, & Blaser, 1995; Schiegg, Deubel, & Schneider, 2003). Attention is sensitive to behavioral context (Lamy, Leber, & Egeth, 2004), spatial prediction (Starr & Rayner, 2001) and presumably the passage of time and motivation or expected reward (Mesulam, 1999). Thus, any area implicated in attentional decisions would be expected to integrate multiple signals that are relevant for the selection process.

A substantial challenge now is to determine precisely how (or even whether) non-spatial variables bias attention in specific circumstances. Our own data provide an example of how this may work for one non-spatial variable, extended “context”. As described above, in one version of the search task we varied the spatial relationship between a target and a visual perturbation, so that the perturbation was task-relevant in one context but entirely irrelevant in the other. We found that context was expressed in LIP as a gain change that elevated firing rates in the SAME relative to the OPPOSITE context. This baseline shift was initially non-spatial in the sense that it occurred before the target or perturbation was presented, and affected responses in all neurons regardless of the location of their RF. However, when the perturbation appeared, this baseline increase strongly enhanced the response to the perturbation itself and was thus transformed into a spatial- (or object-) specific effect. This enhancement in turn correlated with the stronger behavioral effects of the perturbation in the SAME relative to the OPPOSITE context. In this way a contextual, non-spatial gain change affected the attentional weight of a specific object.
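One simple way a non-spatial gain change can become location-specific is if the same gain multiplies both the baseline and the visual drive. The sketch below assumes a purely multiplicative context gain (1.3 for SAME, 1.0 for OPPOSITE), a four-neuron one-dimensional map and arbitrary rates; these are hypothetical choices, not values fitted to the search-task data.

```python
# Hypothetical parameters, chosen only to illustrate a multiplicative gain.
CONTEXT_GAIN = {"SAME": 1.3, "OPPOSITE": 1.0}
BASELINE = 10.0            # spikes/s before any stimulus
PERTURBATION_DRIVE = 30.0  # visual response to the perturbation, spikes/s
RF_LOCATIONS = [0, 1, 2, 3]  # RF positions of four hypothetical neurons


def population(context, perturbation_at=None):
    """Rates of all neurons; only the neuron whose RF holds the perturbation
    receives visual drive, and the context gain scales baseline plus drive."""
    gain = CONTEXT_GAIN[context]
    return [gain * (BASELINE + (PERTURBATION_DRIVE if loc == perturbation_at else 0.0))
            for loc in RF_LOCATIONS]


def context_effect(perturbation_at=None):
    """SAME minus OPPOSITE rate for every neuron in the map."""
    same = population("SAME", perturbation_at)
    opposite = population("OPPOSITE", perturbation_at)
    return [s - o for s, o in zip(same, opposite)]


print(context_effect())                   # equal shift at every RF location
print(context_effect(perturbation_at=2))  # shift is largest at the perturbation
```

Before the perturbation, the SAME minus OPPOSITE difference is 3 spikes/s at every RF location; once the perturbation appears at location 2, the difference grows to 12 spikes/s there and stays at 3 spikes/s elsewhere.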

Another relatively straightforward example may be in the reward effects on LIP neurons, although direct evidence linking reward and spatial attention is not yet available. Increasing expected reward enhances target-selective responses in a large majority of LIP neurons so that the population response in LIP is stronger for targets associated with higher relative to lower reward (Sugrue et al., 2004). This is a potentially straightforward mechanism for biasing attention and motor choices toward the more highly rewarded target.
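One way to picture how reward-scaled responses could bias selection is to let a downstream stage compare the population responses evoked by the two targets. In the sketch below, the linear reward dependence, the logistic read-out and its sensitivity `BETA` are assumptions made for illustration; neither is taken from Sugrue et al. (2004), and all constants are arbitrary.

```python
import math

# Hypothetical linear dependence of the mean LIP target response on the
# target's relative reward; values are illustrative only.
BASE_RATE = 20.0    # spikes/s at relative reward = 0
REWARD_GAIN = 20.0  # extra spikes/s at relative reward = 1
BETA = 0.2          # assumed sensitivity of the downstream read-out


def target_response(relative_reward: float) -> float:
    """Mean population response to a target with the given relative reward."""
    return BASE_RATE + REWARD_GAIN * relative_reward


def p_select_a(reward_a: float, reward_b: float) -> float:
    """Logistic read-out: probability that target A wins the competition."""
    total = reward_a + reward_b
    r_a = target_response(reward_a / total)
    r_b = target_response(reward_b / total)
    return 1.0 / (1.0 + math.exp(-BETA * (r_a - r_b)))


print(p_select_a(3.0, 1.0))  # above 0.5: bias toward the richer target
print(p_select_a(1.0, 1.0))  # 0.5: no bias when rewards are equal
```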

In other cases, however, it is more difficult to explain how non-spatial effects may translate into spatial attention. When we trained monkeys to report the orientation of a target by releasing a grasp with their right or left paws, the target-selective response in LIP was modulated by the active limb. However, limb selection did not produce a wholesale response enhancement or suppression, but produced a “split” in the priority map, so that partially distinct neuronal populations encoded a given spatial location depending on the behavioral alternative. Because equal populations of neurons preferred each alternative, there was no population-level preference for one or the other alternative, and thus we would not expect attention to be biased differently according to stimulus category or active limb. This raises the interesting, and perhaps unsettling, possibility that some non-spatial effects may be behaviorally silent in that they do not produce frank biases of spatial attention. It is interesting, however, that in one of the three monkeys we tested on the manual response task there was a large limb bias in LIP, as most neurons preferred the contralateral limb. This subject had a corresponding behavioral bias toward congruent target-limb configurations, that is, configurations in which both the target and the active limb were contralateral to the recorded hemisphere (Oristaglio et al., 2006). This suggests that the behavioral correlates of some non-spatial inputs may be found in a small subset of subjects or through more complex, second-order effects measured in congruence or interference paradigms.
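Why a balanced “split” map carries no net bias, while a skewed one does, can be checked by summing responses across neurons that share one RF location. The sketch assumes the same multiplicative limb gain for every neuron and hypothetical population compositions (50/50 versus 80/20 contralateral/ipsilateral preferences); the function name and numbers are illustrative and not fitted to the recorded data.

```python
def population_limb_bias(preferences, visual=40.0, preferred_gain=1.25):
    """Summed target response with the contralateral limb active minus the
    summed response with the ipsilateral limb active.

    preferences: hypothetical list of "contra"/"ipsi" limb preferences for
    neurons whose RFs cover the same location.
    """
    def summed(active_limb):
        # Each neuron's visual response is boosted only for its preferred limb.
        return sum(visual * (preferred_gain if pref == active_limb else 1.0)
                   for pref in preferences)
    return summed("contra") - summed("ipsi")


balanced = ["contra"] * 50 + ["ipsi"] * 50
skewed = ["contra"] * 80 + ["ipsi"] * 20

print(population_limb_bias(balanced))  # 0.0: the split map has no net limb bias
print(population_limb_bias(skewed))    # 600.0: net bias toward the contralateral limb
```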

Thus, we can advance the hypothesis that non-spatial effects in LIP represent routes through which cognitive and other behavioral variables access systems of spatial attention. Determining how non-spatial factors bias spatial attention remains a central question for future research.

4.2. Attention, saccade planning, and decisions

The idea that LIP integrates multiple sources of information relevant for allocating attention suggests that this area is important for, or at least reflects, some form of decision-making. This in turn seems similar to the proposal that LIP neurons encode a “decision variable” whose rise reflects the accumulation of information toward a saccade motor threshold (Gold & Shadlen, 2007; Shadlen & Newsome, 2001). However, while we couch our interpretations in terms of covert attention, Shadlen and colleagues emphasize the role of LIP in saccade motor decisions. Is this difference truly critical? Can we perhaps resolve it by merely replacing the term “attention” with “saccade planning” or “saccade likelihood”?

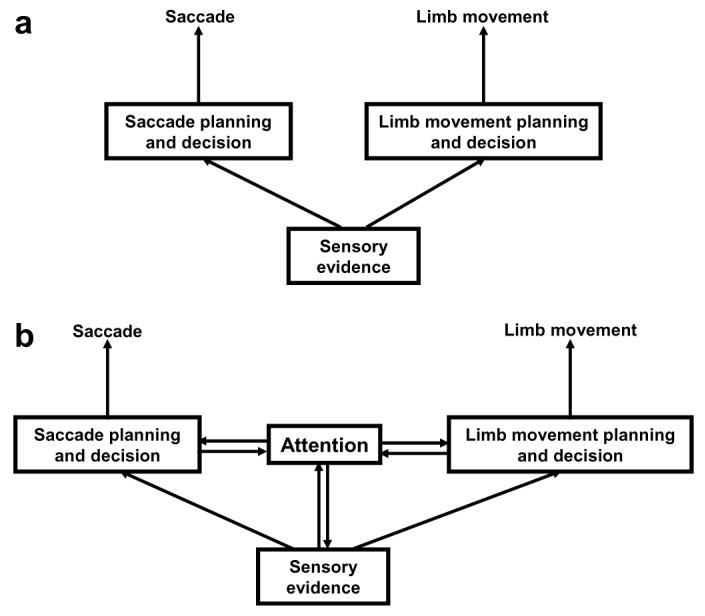

We propose that the distinction is real and goes beyond mere semantic differences. In particular, whether one interprets LIP as representing attention or saccade-specific decisions has important implications regarding the neural architecture underlying task performance, and the kinds of models used for describing its neural activity.

A crucial aspect of the decision-making framework, which contributes greatly to its simplicity and widespread appeal, is that it is essentially a two-stage model in which sensory evidence is mapped directly onto a motor response (Gold & Shadlen, 2007; Mazurek, Roitman, Ditterich, & Shadlen, 2003). This is depicted schematically in Fig. 7a. Given a source of sensory evidence, the accumulation of evidence toward a decision is postulated to occur in premotor networks that also plan the motor response through which the decision is expressed. If the decision is signaled with a saccade, saccade premotor areas (which would include LIP) are postulated to integrate the evidence toward the decision. If the decision is signaled with a limb movement, this integration is performed in limb premotor areas.

Fig. 7.

Two architectures for decision-making. (a) Decision-making framework, in which sensory evidence accumulates toward a decision in areas that are specific for planning the motor response through which the decision is expressed (either a limb or an ocular movement). (b) A more complex architecture that includes an internal attentional stage. This stage is distinct from, but communicates with, both sensory and motor representations.
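The two-stage scheme of Fig. 7a can be sketched as bounded accumulation of noisy evidence (a drift-diffusion process) inside whichever premotor network plans the reporting movement. This is a generic sketch under stated assumptions, not the specific integrator model of Mazurek et al. (2003): the bound, noise level, time step, the `PREMOTOR_AREA` routing table and the function names are all hypothetical.

```python
import random
from typing import Optional, Tuple


def accumulate_to_bound(drift: float, bound: float = 1.0, noise: float = 1.0,
                        dt: float = 0.001, max_steps: int = 10_000,
                        rng: Optional[random.Random] = None) -> Tuple[int, float]:
    """Drift-diffusion sketch: integrate noisy evidence until it hits +bound or -bound.

    Returns (choice, decision_time_s); choice is +1 or -1 for the bound reached,
    or 0 if no bound is reached within max_steps.
    """
    rng = rng or random.Random()
    x = 0.0
    for step in range(1, max_steps + 1):
        # Euler step: deterministic drift plus Gaussian noise scaled by sqrt(dt).
        x += drift * dt + noise * (dt ** 0.5) * rng.gauss(0.0, 1.0)
        if x >= bound:
            return 1, step * dt
        if x <= -bound:
            return -1, step * dt
    return 0, max_steps * dt


# Hypothetical mapping from the reporting effector to the premotor network that,
# in the two-stage framework, would host the accumulator.
PREMOTOR_AREA = {"saccade": "saccade premotor (e.g. LIP)", "limb": "limb premotor"}


def two_stage_decision(evidence_drift: float, effector: str,
                       rng: random.Random) -> Tuple[str, int, float]:
    """Sensory evidence is integrated directly in the effector-specific area."""
    choice, decision_time = accumulate_to_bound(evidence_drift, rng=rng)
    return PREMOTOR_AREA[effector], choice, decision_time


rng = random.Random(0)
print(two_stage_decision(3.0, "saccade", rng))
print(two_stage_decision(3.0, "limb", rng))
```

In this sketch the location of the accumulator is fixed entirely by the effector, so there is no separate stage representing the spatial locus of selection independently of the motor plan; that is the assumption our covert search data call into question.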

Our findings both violate the assumption of motor specificity and reveal decision-related signals that cannot be described as either sensory evidence or motor planning. During the covert search task LIP was strongly active even though the perceptual decision was mapped onto a manual response. Furthermore, neurons encoded neither the sensory evidence (target orientation) nor the associated motor response (the manual release). Although they were sensitive to which limb performed the release, they primarily encoded a third, independent variable: target location. It may be argued that the spatial response in LIP merely reflected a “covert saccade plan” unrelated to the task. However, activity correlated with performance of the covert task, and it is known that LIP inactivation impairs performance on covert search (Wardak, Olivier, & Duhamel, 2004). A prior study also revealed strong links between the visuospatial response in LIP and the latency of a non-targeting, self-paced manual response (Maimon & Assad, 2006). These observations strongly suggest that LIP activity contributes to visuospatial computations in ways that are independent of the modality of the motor response.

There is abundant evidence that LIP activity can be dissociated from both overt saccades and saccade likelihood. As we described above, neurons had similar response levels in the covert search task and in the memory-guided saccade task, when saccade likelihoods were, respectively, nearly 0% and nearly 100%. Neurons have enhanced responses to a cue for an antisaccade relative to a prosaccade (Gottlieb & Goldberg, 1999) and have stronger responses for cues that instruct withholding relative to those that instruct making a saccade (Bisley & Goldberg, 2003); that is, in each case they respond more strongly when saccade likelihood is closer to 0% than when it is closer to 100%. Thus, LIP responses can be understood neither in terms of saccade likelihood nor in terms of motor-specific behavioral decisions. Although mechanisms that accumulate evidence in a motor-specific fashion may operate in most tasks (during our covert search task, for example, they may have been evident in limb premotor areas), it is clear that the brain recruits at least one stage of processing that represents visuospatial selection (attention) (Fig. 7b). This stage is distinct from stages representing the sensory evidence or the associated motor plan, although it communicates with both.

A related consideration is that covert attention is unlikely to be captured by rise-to-threshold models designed to account for motor latencies (Mazurek et al., 2003). Such models make the assumption that there is a threshold that can be associated with a unitary, well-defined motor response. However, unlike a motor response, attention may be graded (Kowler et al., 1995), may be allocated in parallel across the visual scene or may be shuttled among locations several times within a single motor reaction time (Bichot, Rossi, & Desimone, 2005; Cave & Bichot, 1999). Indeed, it is this flexibility that allows attention—a covert process—to influence vision beyond its role in selecting saccade targets.
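The contrast between a threshold read-out and a graded, shifting allocation of attention can be illustrated with two small functions. In this hypothetical sketch, `first_crossing` stands in for a rise-to-threshold read-out that reports a single discrete event, while `attention_weights` treats a priority map as each location's share of total priority; the three priority snapshots are invented to show attention moving among locations within one reaction time.

```python
from typing import Dict, List, Optional, Sequence


def first_crossing(trace: Sequence[float], threshold: float) -> Optional[int]:
    """Index of the first sample at or above threshold (a single, discrete event)."""
    for i, value in enumerate(trace):
        if value >= threshold:
            return i
    return None


def attention_weights(priority: Dict[str, float]) -> Dict[str, float]:
    """Graded allocation: each location's share of total priority."""
    total = sum(priority.values())
    return {loc: p / total for loc, p in priority.items()}


# Hypothetical priority maps sampled at three moments within one reaction time.
snapshots: List[Dict[str, float]] = [
    {"A": 30.0, "B": 10.0, "C": 10.0},
    {"A": 10.0, "B": 30.0, "C": 10.0},
    {"A": 10.0, "B": 10.0, "C": 30.0},
]

focus = [max(s, key=s.get) for s in snapshots]
print(focus)                            # ['A', 'B', 'C']: the focus shuttles
print(attention_weights(snapshots[0]))  # {'A': 0.6, 'B': 0.2, 'C': 0.2}
print(first_crossing([0.1, 0.5, 1.2, 0.3, 1.5], threshold=1.0))  # 2
```

The threshold read-out returns index 2 even though the trace exceeds threshold again later, whereas the priority snapshots carry graded weights at every moment and a focus that moves from A to B to C.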

These considerations suggest that the distinction between an attention and a saccade interpretation of LIP is substantial and far-reaching. Our arguments allow for the possibility that LIP reflects oculomotor decisions in very specific circumstances, when the decision is mapped onto a saccade (or perhaps onto any targeting motor response). In this case the covert and overt selection layers are isomorphic and LIP may well be one of the sites reflecting the accumulation of information toward the motor decision. In general, however, the role of LIP goes beyond saccade computations and is best described as an internal priority map that reflects covert spatial selection. This priority map influences motor output, but its relation to overt output is flexible and task-dependent.

5. Conclusions

We propose that LIP, and perhaps the brain’s system of spatial attention as a whole, is, in effect, an internal associative layer that binds multiple sensory, motor and cognitive variables into a selective spatial representation. These associative properties may be fine-tuned (or optimized) for specific tasks and may allow coordination among multiple behavioral outputs, perhaps helping generate the coordinated behavioral focusing envisaged by William James. A challenge for future work is to understand how non-spatial motor, cognitive and motivational variables influence the allocation of attention. Current computational models either discount attention altogether (Gold & Shadlen, 2007) or represent it solely through its end-results—i.e., changes in the sensory input (Verghese, 2001). Results such as those reviewed here, which describe the brain’s internal representation of attention, will spur the development of more realistic models which include a covert attentional stage that actively interacts with computations in both input and output layers.

References

- Assad JA, Maunsell JHR. Neuronal correlates of inferred motion in primate posterior parietal cortex. Nature. 1995;373:518–521. doi: 10.1038/373518a0.

- Bacon WF, Egeth HE. Overriding stimulus-driven attentional capture. Perception & Psychophysics. 1994;55(5):485–496. doi: 10.3758/bf03205306.

- Balan PF, Gottlieb J. Integration of exogenous input into a dynamic salience map revealed by perturbing attention. The Journal of Neuroscience. 2006;26(36):9239–9249. doi: 10.1523/JNEUROSCI.1898-06.2006.

- Baldauf D, Wolf M, Deubel H. Deployment of visual attention before sequences of goal-directed hand movements. Vision Research. 2006;46(26):4355–4374. doi: 10.1016/j.visres.2006.08.021.

- Barash S, Bracewell RM, Fogassi L, Gnadt JW, Andersen RA. Saccade-related activity in the lateral intraparietal area. I. Temporal properties. Journal of Neurophysiology. 1991;66:1095–1108. doi: 10.1152/jn.1991.66.3.1095.

- Bichot NP, Rossi AF, Desimone R. Parallel and serial neural mechanisms for visual search in macaque area V4. Science. 2005;308(5721):529–534. doi: 10.1126/science.1109676.

- Bisley JW, Goldberg ME. Neuronal activity in the lateral intraparietal area and spatial attention. Science. 2003;299(5603):81–86. doi: 10.1126/science.1077395.

- Cave KR, Bichot NP. Visuospatial attention: Beyond a spotlight model. Psychonomic Bulletin & Review. 1999;6(2):204–223. doi: 10.3758/bf03212327.

- Culham JC, Kanwisher NG. Neuroimaging of cognitive functions in human parietal cortex. Current Opinion in Neurobiology. 2001;11(2):157–163. doi: 10.1016/s0959-4388(00)00191-4.

- Deubel H, Schneider WX. Delayed saccades, but not delayed manual aiming movements, require visual attention shifts. Annals of the New York Academy of Sciences. 2003;1004:289–296. doi: 10.1196/annals.1303.026.

- Freedman DJ, Assad JA. Experience-dependent representation of visual categories in parietal cortex. Nature. 2006;443(7107):85–88. doi: 10.1038/nature05078.

- Gold JI, Shadlen MN. The neural basis of decision making. Annual Review of Neuroscience. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Gottlieb J. From thought to action: The parietal cortex as a bridge between perception, action, and cognition. Neuron. 2007;53(1):9–16. doi: 10.1016/j.neuron.2006.12.009.

- Gottlieb J, Goldberg ME. Activity of neurons in the lateral intraparietal area of the monkey during an antisaccade task. Nature Neuroscience. 1999;2:906–912. doi: 10.1038/13209.

- Gottlieb J, Kusunoki M, Goldberg ME. The representation of visual salience in monkey parietal cortex. Nature. 1998;391:481–484. doi: 10.1038/35135.

- Gottlieb J, Kusunoki M, Goldberg ME. Simultaneous representation of saccade targets and visual onsets in monkey lateral intraparietal area. Cerebral Cortex. 2004;15(8):1198–1206. doi: 10.1093/cercor/bhi002.

- Green DM, Swets JA. Signal detection theory and psychophysics. Wiley; New York: 1968.

- Ipata AE, Gee AL, Gottlieb J, Bisley JW, Goldberg ME. LIP responses to a popout stimulus are reduced if it is overtly ignored. Nature Neuroscience. 2006;9(8):1071–1076. doi: 10.1038/nn1734.

- Janssen P, Shadlen MN. A representation of the hazard rate of elapsed time in macaque area LIP. Nature Neuroscience. 2005;8(2):234–241. doi: 10.1038/nn1386.

- Kowler E, Anderson E, Dosher B, Blaser E. The role of attention in the programming of saccades. Vision Research. 1995;35(13):1897–1916. doi: 10.1016/0042-6989(94)00279-u.

- Lamy D, Leber A, Egeth HE. Effects of task relevance and stimulus-driven salience in feature-search mode. Journal of Experimental Psychology. Human Perception and Performance. 2004;30(6):1019–1031. doi: 10.1037/0096-1523.30.6.1019.

- Leon MI, Shadlen MN. Representation of time by neurons in the posterior parietal cortex of the macaque. Neuron. 2003;38(2):317–327. doi: 10.1016/s0896-6273(03)00185-5.

- Maimon G, Assad JA. A cognitive signal for the proactive timing of action in macaque LIP. Nature Neuroscience. 2006;9(7):948–955. doi: 10.1038/nn1716.

- Mazurek ME, Roitman JD, Ditterich J, Shadlen MN. A role for neural integrators in perceptual decision making. Cerebral Cortex. 2003;13(11):1257–1269. doi: 10.1093/cercor/bhg097.

- Mesulam MM. Spatial attention and neglect: Parietal, frontal and cingulate contributions to the mental representation and attentional targeting of salient extrapersonal events. Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences. 1999;354(1387):1325–1346. doi: 10.1098/rstb.1999.0482.

- Moore T, Armstrong KM, Fallah M. Visuomotor origins of covert spatial attention. Neuron. 2003;40(4):671–683. doi: 10.1016/s0896-6273(03)00716-5.

- Oristaglio J, Schneider DM, Balan PF, Gottlieb J. Integration of visuospatial and effector information during symbolically cued limb movements in monkey lateral intraparietal area. The Journal of Neuroscience. 2006;26(32):8310–8319. doi: 10.1523/JNEUROSCI.1779-06.2006.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400(6741):233–238. doi: 10.1038/22268.

- Reynolds JH, Chelazzi L. Attentional modulation of visual processing. Annual Review of Neuroscience. 2004;27:611–647. doi: 10.1146/annurev.neuro.26.041002.131039.

- Roitman JD, Shadlen MN. Response of neurons in the lateral intraparietal area during a combined visual discrimination reaction time task. The Journal of Neuroscience. 2002;22(21):9475–9489. doi: 10.1523/JNEUROSCI.22-21-09475.2002.

- Schiegg A, Deubel H, Schneider W. Attentional selection during preparation of prehension movements. Visual Cognition. 2003;10(4):409–431.

- Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. Journal of Neurophysiology. 2001;86(4):1916–1936. doi: 10.1152/jn.2001.86.4.1916.

- Snyder LH, Batista AP, Andersen RA. Coding of intention in the posterior parietal cortex. Nature. 1997;386:167–170. doi: 10.1038/386167a0.

- Starr MS, Rayner K. Eye movements during reading: Some current controversies. Trends in Cognitive Sciences. 2001;5(4):156–163. doi: 10.1016/s1364-6613(00)01619-3.

- Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304(5678):1782–1787. doi: 10.1126/science.1094765.

- Thompson KG, Bichot NP. A visual salience map in the primate frontal eye field. Progress in Brain Research. 2005;147:251–262. doi: 10.1016/S0079-6123(04)47019-8.

- Thompson KG, Biscoe KL, Sato TR. Neuronal basis of covert spatial attention in the frontal eye field. The Journal of Neuroscience. 2005;25(41):9479–9487. doi: 10.1523/JNEUROSCI.0741-05.2005.

- Toth LJ, Assad JA. Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature. 2002;415(6868):165–168. doi: 10.1038/415165a.

- Verghese P. Visual search and attention: A signal detection theory approach. Neuron. 2001;31(4):523–535. doi: 10.1016/s0896-6273(01)00392-0.

- Wardak C, Ibos G, Duhamel JR, Olivier E. Contribution of the monkey frontal eye field to covert visual attention. The Journal of Neuroscience. 2006;26(16):4228–4235. doi: 10.1523/JNEUROSCI.3336-05.2006.

- Wardak C, Olivier E, Duhamel JR. Saccadic target selection deficits after lateral intraparietal area inactivation in monkeys. The Journal of Neuroscience. 2002;22(22):9877–9884. doi: 10.1523/JNEUROSCI.22-22-09877.2002.

- Wardak C, Olivier E, Duhamel JR. A deficit in covert attention after parietal cortex inactivation in the monkey. Neuron. 2004;42(3):501–508. doi: 10.1016/s0896-6273(04)00185-0.