Abstract

Objectives:

This study examined the determinants of immunization registry use and the extent to which they explain the variance in use. The technology acceptance model (TAM) was extended with contextual factors (contextualized TAM) to test hypotheses about immunization registry usage. Commitment to change, perceived usefulness, perceived ease of use, job-tasks change, subjective norm, computer self-efficacy and system interface characteristics were hypothesized to affect usage.

Method:

This quantitative study used a prospective design with immunization registry end-users in a state in the United States. Questionnaires were administered to 100 end-users after training and system usage.

Results:

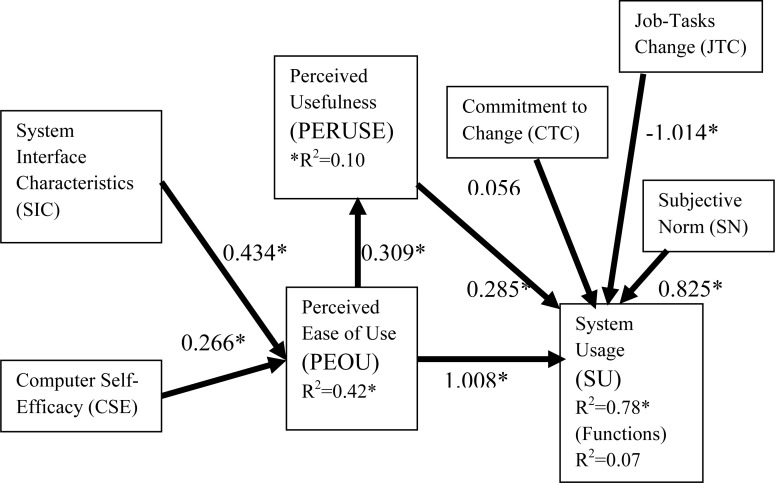

The results showed that perceived usefulness, perceived ease of use, subjective norm and job-tasks change influenced usage of the immunization registry directly, while computer self-efficacy and system interface characteristics influenced usage indirectly through perceived ease of use. Perceived ease of use also influenced usage indirectly through perceived usefulness. The effect of commitment to change on immunization registry usage was insignificant.

Conclusion:

Understanding the variables that affect information system use in the context of public health can increase the likelihood that a system will be successfully implemented and used, and consequently that it will positively affect the health of the public. The variables studied should be sufficient to provide adequate information about end-users’ acceptance of a specified technology.

Keywords: Public health informatics; Technology acceptance model; TAM; Immunization registry; Public health; Health information technology

Introduction

Researchers, investors, managers and practitioners are among the many people trying to understand why end-users do not use adopted information systems even when those systems appear to promise substantial benefits. Understanding why people use or reject computers is one of the most challenging issues in information system (IS) research [1]. Even with improvements in application usability, lack of use remains a challenge and has prevented many organizations from realizing the expected benefits of implemented systems [2]. As public health organizations continue to invest in information systems such as immunization registries, the expectation is that end-users will use the systems so that their benefits can be realized.

The National Vaccine Advisory Committee (NVAC) recommended that strategies geared toward improving immunization coverage and reducing vaccine-preventable diseases should include immunization registries as a key strategy [3]. Individual organizations were developing registries as far back as the 1980s, but population-based registries were not promoted until the early 1990s [3]. Public health organizations are aware that immunization registries can facilitate the realization of their public health objectives, and they therefore continue to develop the registries and to promote their adoption. However, once organizations adopt immunization registries, there is no empirical evidence in the literature demonstrating meaningful use.

Immunization registries are confidential, population-based, computerized information systems that contain data about children in a geographic area [4]. The Centers for Disease Control and Prevention (CDC) defines an immunization registry as a key tool used to increase and sustain high vaccination coverage by providing complete and accurate information on which to base vaccination decisions. An immunization registry with added capabilities such as vaccine management, adverse event reporting, lifespan vaccination histories and linkage with other electronic data sources is referred to as an immunization information system (IIS). Though ‘immunization registry’ and ‘IIS’ are used interchangeably in this article, the system studied is an IIS.

IIS are beneficial in providing information about immunization coverage levels by child, by immunization provider and by geographic area [5]. They benefit a wide range of stakeholders, including children, parents, doctors and nurses, health plans, schools, communities and states. The IIS has functionality for reminders and recalls: parents can be reminded when an immunization is due and recalled when an immunization is missed (overdue). Because the registry consolidates records from multiple providers, healthcare providers are able to obtain a consolidated report on a child’s immunization history. Healthcare providers can also use the IIS to obtain the most current recommendations for immunization practice and to determine which vaccine to administer. The IIS can also track immunization-related contraindications and adverse events. Managed care and other organizations can obtain coverage reports from the IIS. Immunization information systems are used for additional purposes such as clinical assessments and surveillance activities. IIS reduce the time school nurses and administrative staff need to check immunization status by providing automatic printouts of immunization status. The IIS also promotes greater accuracy of records, helping to avoid duplicate immunizations [5]. IIS can help to prevent disease outbreaks and control vaccine-preventable diseases by identifying under-immunized children (children at risk for vaccine-preventable diseases). Information on community coverage rates is included in IIS [5]. More comprehensive data can be made available in the IIS than on paper because the IIS can be linked to other databases, such as newborn and lead screening or other state registries [5].

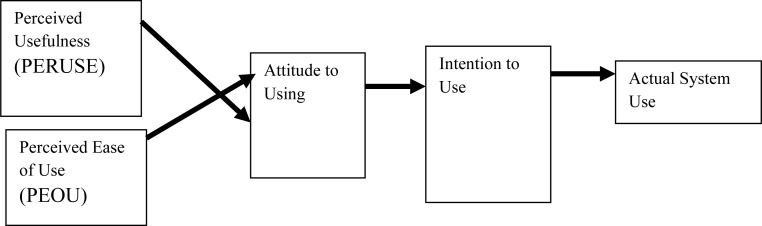

No study has tested a widely accepted theoretical framework on the use of immunization registries. A review provided evidence that the technology acceptance model (TAM) (Figure 1), which associates system usage with acceptance, was the most influential of more than 20 computer use models [6]. This was attributed to TAM’s power in describing information system use behavior [7–14]. This research therefore has its theoretical grounding in TAM, which has its roots in the theory of reasoned action (TRA) [15]. TRA explains behavior; it was formulated by Ajzen and Fishbein in 1967 and has its origins in social psychology.

Figure 1. The Original Technology Acceptance Model

The initial TAM included attitude as a construct that mediated between perceived usefulness (PERUSE), perceived ease of use (PEOU) and behavioral intention. Attitude was not found to be significant and was removed, resulting in a revised TAM in which behavioral intention (BI), a proxy for actual use, was a function of PEOU and PERUSE. Both determinants of BI have been found to be statistically significant in explaining use, but PERUSE was found to be a stronger determinant than PEOU [9]. TAM also postulated that PERUSE and PEOU mediate the effects of external variables.

Though TAM is popular and valid for explaining technology usage behavior, it is a de-contextualized theory and therefore fails to include important contexts such as the social, organizational and work contexts. The need to extend TAM with other contexts is reiterated throughout the literature, even by TAM’s developers. For example, researchers acknowledge that the social context was not included in TAM and recommend extending TAM to explore it [9, 10].

In this study, additional variables are therefore added to TAM to contextualize it, so that usage is examined as a function of elements of other contexts. In addition to examining the relationships among system usage, PEOU and PERUSE, the study also seeks to examine whether these new variables introduce moderating effects. The research model is thus further extended to test whether any of the added variables influence the strength and/or direction of the relationships between PEOU and system usage and between PERUSE and system usage.

The research model postulated that immunization registry usage is indirectly related to (i) system interface characteristics (SIC) and (ii) computer self-efficacy (CSE), and both directly and indirectly related to (iii) PEOU. Because PEOU was modeled as a function of SIC and CSE, the study examined whether SIC and CSE affected system usage (SU) indirectly, through PEOU and through PEOU’s effect on PERUSE. The study also examined whether PEOU is directly related to immunization registry usage. Finally, the model postulated that immunization registry usage is related only directly to (iv) perceived usefulness (PERUSE), (v) commitment to change (CTC), (vi) subjective norm (SN) and (vii) job-tasks change (JTC).

This research model examined immunization registry usage behavior as a function of perceived usefulness (PERUSE) and perceived ease of use (PEOU). PERUSE and PEOU were both proposed mediators, and the variables whose effects they were proposed to mediate were also included in the model. Davis (1989) demonstrated that PERUSE mediates PEOU’s effect on system usage; this study also tested whether PERUSE mediated the effect of PEOU, thereby attempting to validate Davis’s (1989) findings. The study further tested whether PEOU mediated the effects of system interface characteristics and computer self-efficacy (both antecedents of PEOU).

Each construct was conceptualized as follows. PEOU was the degree to which an end-user believed that using a system would be free of effort [9]. SIC were the features of the system interface that influenced the mental effort required to use it. CSE, which has been found to affect PEOU [16, 17], was the end-user’s self-assessment of his or her ability to use information and computer technologies in general [16]. PEOU influenced PERUSE, and both PEOU and PERUSE influenced system usage.

Perceived usefulness was the degree to which an end-user believed that using the information system would enhance his or her job performance [9]; useful was defined as capable of being used advantageously [9]. Performance benefits were assessed by measuring a person’s anticipated consequences of using the system (PERUSE). The inclusion of PERUSE in this study’s research model was also supported by behavioral decision theory. Commitment to change was the end-user’s psychological attachment to using the system as implementation unfolds [18].

System usage was the dependent variable in this study. Actual usage behavior, rather than intention to use a system, was examined so that the scope would accommodate situations where use of a system was mandated. Subjective norm also affected system usage behavior. Subjective norm was defined as "an end-user’s perceptions that people who are important to him/her think he or she should or should not use the system" [19]. Using an information system is a social process; hence behavior towards the information system can be based on social influence from others [20].

Job characteristics also influenced system usage: when job tasks change, system usage is affected. Job-level changes were the changes arising from a collection of task and non-task factors, whereas task-level changes were the changes to specific (individual) tasks. Few studies have examined how changes in jobs and tasks influence system usage.
A study of the impact of job-tasks change on expert system use found a strong negative relationship between job-tasks change and use [21]. End-users are therefore expected to use a system more effectively if it does not impose changes on the tasks they perform in their jobs. In this study, job-tasks changes were the specific work-related tasks that changed upon implementation of a system.

Methods

IIS usage behavior is an important variable to study, especially because many studies have found usage to be associated with system acceptance [22, 23]. The overall purpose of this study was to test a theoretically sound research model through an empirical study that answers the following research question: What factors significantly predict the use of a web-based immunization registry/IIS?

This study was conducted in a US state, and the unit of analysis was the individual immunization registry end-user. All organizations that had implemented the immunization registry developed by the State Health Department were contacted for recruitment. During each call, contact information for the organization’s immunization registry point person (or people) was requested. These individuals were then contacted, and through them additional prospective study participants were solicited. One hundred study participants were then randomly selected from the prospective participants provided. To be considered for the study, individuals had to meet the inclusion criterion of being a recent end-user of the immunization registry who had recently been trained on its use (within 3–6 months of training). Additionally, individuals had to have worked at the agency for at least 2 years and had to be able to participate in two phone interviews, the second occurring about 3 months after the first. The goal of the data collection was to gather data that were relevant and sufficient to test the study’s hypotheses and hence answer its research question.

Data were collected through surveys administered by phone interview. Two surveys were administered to each respondent: the first after system training but before extensive use of the system, and the second 3 months later to assess use of the system. The constructs operationalized in the surveys to test the research hypotheses were commitment to change (CTC), job-tasks change (JTC), system interface characteristics (SIC), computer self-efficacy (CSE), perceived usefulness (PERUSE), perceived ease of use (PEOU), subjective norm (SN) and system usage (SU). With the exception of the demographic and context questions, the survey items were drawn from the literature. The survey was tested for content validity, and the items were modified to be representative of the content domain. This study tested the following hypotheses:

H1: SIC is positively related to PEOU.

H2: CSE is positively related to PEOU.

H3: PEOU is positively related to PERUSE.

H4: PERUSE is positively related to system usage.

H5: PEOU is positively related to system usage.

H6: Commitment to change is positively related to system usage.

H7: Subjective norm is positively related to system usage.

H8: Job-tasks change is negatively associated with system usage.


The conceptual model extended TAM with social (through subjective norm), organizational (through commitment to change) and work (through job-tasks change) contexts. The social dimension can be viewed as peer influence, whereby peers shape a user’s behavior and can therefore act as enablers or deterrents of technology acceptance. This peer dimension involves the individual conforming to others’ beliefs, namely normative beliefs. Subjective norm is the peer dimension examined in this study.

Although examining subjective norm is necessary, in situations where peer influence does not shape behavior, an individual’s own beliefs, such as how committed he or she is to the change, will prevail, and behavior driven by such beliefs is more sustainable [18]. Because commitment to change is an organizational variable exhibited by individuals working in an organization, it is introduced into the conceptual model as a determinant of system usage.

Lastly, technology is known to change work, especially if the system is not designed to support an organization’s business practices and workflow. Such change can be an implementation barrier, so it is critical to examine it when studying system use. TAM is therefore extended by adding the job-tasks change variable to the conceptual model.

The data were analyzed in SPSS version 15.0. Cronbach’s alpha was used to investigate the internal consistency of the items measuring each concept. Measures for the latent variables were created by aggregating (averaging) the items for perceived ease of use (PEOU), perceived usefulness (PERUSE), job-tasks change (JTC), system interface characteristics (SIC), commitment to change (CTC) and subjective norm (SN).

Cronbach’s alpha and other reliability measures were computed to assess item consistency. A high Cronbach’s alpha (greater than .70) indicated that the items measured the same underlying construct and had good reliability. Pearson’s correlation coefficient (r) was computed to measure how closely variables are related, that is, the degree of linear relationship between two variables.
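The reliability and scoring steps above can be sketched in a few lines. This is a minimal illustration, not the SPSS procedure the study used: it assumes item scores arrive as a respondents-by-items matrix, and the function names and the five-respondent PEOU data are hypothetical. Alpha is computed with the standard formula k/(k-1) times (1 minus the sum of item variances over the variance of the summed scale), and the latent-variable score is the item mean, as described in the text.

```python
import numpy as np

def cronbach_alpha(items) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) matrix of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)        # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)    # variance of the summed scale
    return float(k / (k - 1) * (1 - item_vars.sum() / total_var))

def composite_score(items) -> np.ndarray:
    """Latent-variable score: the mean of each respondent's items."""
    return np.asarray(items, dtype=float).mean(axis=1)

def pearson_r(x, y) -> float:
    """Pearson's r: strength of the linear relationship between two variables."""
    return float(np.corrcoef(x, y)[0, 1])

# Hypothetical 1-5 ratings from five respondents on three PEOU items.
peou_items = [[5, 5, 4], [4, 4, 4], [2, 3, 2], [1, 1, 2], [3, 3, 3]]
alpha = cronbach_alpha(peou_items)       # about 0.955, above the .70 cutoff
peou_score = composite_score(peou_items)
```

Averaging rather than summing keeps each composite on the original response scale, which makes the latent-variable scores easier to compare across constructs with different numbers of items.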

Frequencies were used to display the distribution of values for each variable and to check for data entry or coding errors; they generated both statistical and graphical displays. Variability of responses was critical, and variables without sufficient variance in responses were excluded from the analysis. Descriptive statistics, including means, standard deviations and correlations, were generated.

Regression analysis was applied to estimate the linear relationship between a dependent variable and one or more independent variables. Where more than one independent variable predicted the dependent variable, the regression equation was $Y = a + b_1 X_1 + b_2 X_2 + \dots + b_n X_n$; where there was only one independent variable, it was $Y = a + bX$. Here $Y$ is the dependent variable, $X$ (or $X_i$) an independent variable, $a$ the constant (intercept) and $b$ (or $b_i$) the slope (regression coefficient), that is, the independent contribution of each independent variable to the prediction of the dependent variable.
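As a minimal sketch of how the coefficients $a$ and $b_i$ in these equations can be estimated by ordinary least squares, the helper below prepends a column of ones for the intercept and solves with NumPy’s `lstsq`. The function name `fit_ols` and the noise-free example data are hypothetical; the study itself ran its regressions in SPSS.

```python
import numpy as np

def fit_ols(X, y):
    """Least-squares estimates of a and b in Y = a + b1*X1 + ... + bn*Xn."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:                           # one predictor: Y = a + b*X
        X = X[:, None]
    design = np.column_stack([np.ones(len(X)), X])   # column of 1s for a
    coef, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return coef[0], coef[1:]                  # intercept a, slopes b1..bn

# Hypothetical, noise-free data generated from Y = 2 + 3*X1 - 1*X2.
x1 = [0, 1, 2, 3, 4]
x2 = [1, 0, 1, 0, 1]
y = [2 + 3 * u - v for u, v in zip(x1, x2)]
a, b = fit_ols(np.column_stack([x1, x2]), y)  # a close to 2, b close to [3, -1]
```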

The analysis included the following equations:

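Equation 1 (H1, H2): $\mathrm{PEOU} = b_0 + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$

Equation 2 (H3): $\mathrm{PERUSE} = b_0 + b_{21}\,\mathrm{PEOU} + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$

Equations 3 and 4 (H4, H5, H6, H7, H8): $\mathrm{SU} = b_0 + b_{31}\,\mathrm{PERUSE} + b_{32}\,\mathrm{CTC} + b_{33}\,\mathrm{JTC} + b_{34}\,\mathrm{SN} + b_{21}\,\mathrm{PEOU} + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$. SU was measured both through the functions for which the system was used and through frequency of use, so the analysis generated results for both models.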

This study sought to examine whether any of the variables that were added to the theoretical framework had moderating effects on the relationships between PERUSE and system usage, and PEOU and system usage. The following interaction effects were tested if the variable was found to be significant in the regression model:

PEOU * CTC

PEOU * SN

PEOU * JTC

PERUSE * CTC

PERUSE * SN

PERUSE * JTC
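A moderation test of this kind adds the product of the predictor and the moderator as an extra regressor; a significant coefficient on the product term means the moderator changes the strength or direction of the predictor’s effect. The sketch below shows this for a pair such as PEOU * SN. It is illustrative only: the function names and data are hypothetical, and mean-centering before forming the product is an assumption (the text does not say whether the variables were centered).

```python
import numpy as np

def add_interaction(predictor, moderator, center=True):
    """Return columns [predictor, moderator, predictor * moderator].

    Mean-centering is an assumption of this sketch; it changes the main-effect
    coefficients but leaves the product-term coefficient unchanged.
    """
    p = np.asarray(predictor, dtype=float)
    m = np.asarray(moderator, dtype=float)
    if center:
        p, m = p - p.mean(), m - m.mean()
    return np.column_stack([p, m, p * m])

def interaction_coef(y, predictor, moderator):
    """OLS coefficient on the product term (the moderation effect)."""
    X = np.column_stack([np.ones(len(y)), add_interaction(predictor, moderator)])
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef[3]

# Hypothetical PEOU and SN scores (already centered) and a usage outcome that
# contains a true PEOU * SN effect of 1.5.
peou = [-2, -1, 0, 1, 2]
sn = [1, -1, 0, -1, 1]
su = [1 + 2 * p + 0.5 * s + 1.5 * p * s for p, s in zip(peou, sn)]
b_product = interaction_coef(su, peou, sn)
```

In practice the product-term coefficient would be judged by its p-value in the full model with the control variables, as for the other predictors.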

Each model included the following control variables: organization size (full-time plus part-time employees), education, job category, organization type, job tenure, number of hours worked, gender, previous computer experience, number of years using a computer, previous experience collecting immunization data electronically and total number of computer applications used. Control variables were used to reduce the possibility of spurious relationships. The research also sought to include non-technical variables in the research model, recognizing that although technical inadequacies can lead to ineffective use of a system, non-technical issues also have an effect. For expert systems that fell into disuse, respondents were more likely to cite problems of a non-technical, non-economic nature than of a technical nature [21].

The models adjusted for clustering effects, which could arise because some study participants worked in the same organization: the study had 100 participants from 30 organizations, and individuals working at the same organization may give more similar responses than individuals at other organizations. If this effect had not been taken into account, the statistical tests could have underestimated the standard errors of the parameter estimates. To avoid this, the Huber-White correction, a robust variance estimator, was applied. This estimator relaxes the OLS assumption that observations are independent within clusters and requires only that observations be independent across (between) clusters.
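The Huber-White correction can be illustrated with the cluster-robust “sandwich” estimator: the usual OLS “bread” $(X^\top X)^{-1}$ surrounds a “meat” term built from residual-weighted scores summed within each cluster, so errors may be correlated inside an organization but not across organizations. This is a minimal sketch, not the SPSS/GEE computation the study used. The function name and the simulated data are hypothetical, and the finite-sample factor G/(G-1) x (N-1)/(N-K) is an assumed choice (software packages differ).

```python
import numpy as np

def cluster_robust_ols(X, y, clusters):
    """OLS with Huber-White (sandwich) standard errors clustered by group."""
    X = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    y = np.asarray(y, dtype=float)
    clusters = np.asarray(clusters)
    n, k = X.shape
    bread = np.linalg.inv(X.T @ X)
    beta = bread @ X.T @ y
    resid = y - X @ beta
    groups = np.unique(clusters)
    meat = np.zeros((k, k))
    for g in groups:
        in_g = clusters == g
        score = X[in_g].T @ resid[in_g]      # sum of x_i * u_i within cluster g
        meat += np.outer(score, score)
    G = len(groups)
    scale = G / (G - 1) * (n - 1) / (n - k)  # assumed finite-sample correction
    vcov = scale * bread @ meat @ bread
    return beta, np.sqrt(np.diag(vcov))

# Hypothetical data: 30 organizations with 3 end-users each and a shared
# organization-level error component that induces within-cluster correlation.
rng = np.random.default_rng(0)
org = np.repeat(np.arange(30), 3)
x = rng.normal(size=90)
y = 1 + 0.5 * x + rng.normal(size=30)[org] + rng.normal(size=90)
beta, robust_se = cluster_robust_ols(x, y, org)
```

When every observation is its own cluster, this estimator reduces to the familiar heteroskedasticity-robust (HC1) standard errors.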

The multiple regression analysis involved selecting the dependent and independent variables to include in the regression in SPSS. The r-square was computed to show the proportion of variance in the dependent variable that could be predicted from the independent variables. It measures the overall strength of association but does not show the extent to which any specific independent variable is associated with the dependent variable. The adjusted r-square was also computed; it corrects r-square for the number of predictors, because each predictor added to the model will explain some of the variance in the dependent variable even by chance. The standard error of the estimate (root mean square error), which is the standard deviation of the error term, was computed as well.

The variance explained by the independent variables (the regression or model sum of squares), the variance not explained (the residual or error sum of squares) and the total sum of squares were computed, together with the degrees of freedom (df) associated with each. The mean squares (sum of squares divided by df) and the F-value were also computed, the F-value being the mean square regression divided by the mean square residual. The associated p-value indicated whether the independent variables significantly predicted the dependent variable: a model p-value below 0.10 indicated that, together, the group of independent variables showed a statistically significant relationship with the dependent variable. A p-value was also calculated for each independent variable, indicating whether that specific variable showed a statistically significant relationship with the dependent variable.
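The quantities listed above fit together as an ANOVA table. The sketch below computes them for an OLS fit using NumPy only; the function name and the five-point example are hypothetical. It uses r-square = SS_model / SS_total and adjusted r-square = 1 - (1 - r-square)(n - 1)/(n - p - 1), where p is the number of predictors. The model p-value would come from the upper tail of the F distribution with (p, n - p - 1) degrees of freedom, for example via `scipy.stats.f.sf`, which is left out here to keep the sketch dependency-light.

```python
import numpy as np

def regression_summary(X, y):
    """ANOVA-style summary of an OLS fit of y on the columns of X."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]
    y = np.asarray(y, dtype=float)
    n, p = X.shape                                   # p = number of predictors
    design = np.column_stack([np.ones(n), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    fitted = design @ coef
    ss_total = np.sum((y - y.mean()) ** 2)
    ss_resid = np.sum((y - fitted) ** 2)             # variance not explained
    ss_model = ss_total - ss_resid                   # variance explained
    df_model, df_resid = p, n - p - 1
    ms_model, ms_resid = ss_model / df_model, ss_resid / df_resid
    r2 = ss_model / ss_total
    return {
        "r2": r2,
        "adj_r2": 1 - (1 - r2) * (n - 1) / df_resid,
        "rmse": np.sqrt(ms_resid),                   # standard error of the estimate
        "F": ms_model / ms_resid,                    # mean square regression / residual
        "df": (df_model, df_resid),
    }

# Hypothetical single-predictor example.
summary = regression_summary([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])
# r2 = 0.6, adj_r2 = 0.4667, F = 4.5 on (1, 3) df
```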

Multiple regression assumes that the residuals (observed minus predicted values) follow a normal distribution. This assumption was examined by inspecting normal probability plots and histograms of the residuals.

Collinearity was investigated to determine whether any of the independent variables were highly correlated with one another. The variance inflation factor (VIF), tolerance and condition index were examined to assess collinearity. VIF values greater than 10 were considered potentially problematic, and this was confirmed by examining the variance proportions. Collinearity was also considered evident if two or more variables had large variance proportions (0.50 or more) corresponding to a large condition index (greater than 30).
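The three diagnostics can be sketched as follows, under stated assumptions. The VIF of each predictor is taken as the corresponding diagonal element of the inverse predictor correlation matrix (equivalent to 1/(1 - R²) from regressing that predictor on the others), and tolerance is its reciprocal. Condition indices and variance proportions follow the Belsley-style decomposition: the design matrix, including the intercept column, is scaled to unit-length columns and decomposed by singular value decomposition. Whether this matches SPSS’s scaling exactly is an assumption, and all names and data below are hypothetical. The final helper applies the rule from the text: a dimension with a condition index above 30 on which two or more coefficients have variance proportions of 0.50 or more.

```python
import numpy as np

def vif(X) -> np.ndarray:
    """VIF_j: j-th diagonal element of the inverse predictor correlation matrix."""
    corr = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    return np.diag(np.linalg.inv(corr))

def collinearity_diagnostics(X):
    """Condition indices and variance proportions (rows: dimensions, cols: coefficients)."""
    X = np.asarray(X, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])
    design = design / np.linalg.norm(design, axis=0)   # unit-length columns
    _, d, vt = np.linalg.svd(design, full_matrices=False)
    cond_index = d.max() / d
    phi = vt.T ** 2 / d ** 2                   # phi[j, k] = v_jk^2 / d_k^2
    props = (phi / phi.sum(axis=1, keepdims=True)).T
    return cond_index, props

def flag_collinearity(cond_index, props, ci_cut=30.0, prop_cut=0.50):
    """Dimensions with index > ci_cut where 2+ coefficients have proportion >= prop_cut."""
    return [k for k in range(len(cond_index))
            if cond_index[k] > ci_cut and np.sum(props[k] >= prop_cut) >= 2]

# Hypothetical predictors: x2 is nearly a copy of x1, x3 is unrelated.
rng = np.random.default_rng(1)
x1 = rng.normal(size=100)
x2 = x1 + 1e-3 * rng.normal(size=100)
x3 = rng.normal(size=100)
X = np.column_stack([x1, x2, x3])
tolerance = 1 / vif(X)
ci, props = collinearity_diagnostics(X)
flagged = flag_collinearity(ci, props)    # non-empty: x1 and x2 share a dimension
```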

Results

The response rate for this study was 77%. The remaining 23% did not participate for a variety of reasons, including workload, the belief that they were unable to answer the questions because they did not use the immunization registry enough, or being unreachable or not returning calls after several attempts to contact them for the interview.

Multiple regression analysis was applied to the data and generated estimates for the following four models:

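Model 1 (H1, H2): $\mathrm{PEOU} = b_0 + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$

Model 2 (H3): $\mathrm{PERUSE} = b_0 + b_{21}\,\mathrm{PEOU} + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$

Model 3 (H4, H5, H6, H7, H8): $\mathrm{SU}\,(\text{functions}) = b_0 + b_{31}\,\mathrm{PERUSE} + b_{32}\,\mathrm{CTC} + b_{33}\,\mathrm{JTC} + b_{34}\,\mathrm{SN} + b_{21}\,\mathrm{PEOU} + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$

Model 4 (H4, H5, H6, H7, H8): $\mathrm{SU}\,(\text{frequency}) = b_0 + b_{31}\,\mathrm{PERUSE} + b_{32}\,\mathrm{CTC} + b_{33}\,\mathrm{JTC} + b_{34}\,\mathrm{SN} + b_{21}\,\mathrm{PEOU} + b_{11}\,\mathrm{SIC} + b_{12}\,\mathrm{CSE} + \text{Control Variables}$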

The instrument was tested for both content and construct validity. Inter-item correlations were calculated for the items measuring each construct, and Cronbach’s alpha was high for every construct, ranging from 0.91 to 0.97. Collinearity among variables was also tested. All VIF values were below 10, and examining the variance proportions validated this result. Collinearity was considered evident if two or more variables had large variance proportions (0.50 or more) corresponding to large condition indices (greater than 30). By this criterion collinearity was evident, but only among the control variables and not among the theoretical constructs. Because inferences were being made about theoretical constructs and not control variables, this did not affect the study results and conclusions. Normality was also examined by plotting regression residual histograms and normal probability plots.

A generalized estimating equations (GEE) approach was used to fit two models: one applied the robust estimator correction to the correlation matrix, thereby correcting for clustering effects, and the other did not. The goodness-of-fit statistic (quasi-likelihood under the independence model criterion, QIC) indicated which model was better. The two models did not differ in goodness of fit, suggesting that the clustering effect was insignificant in this study.

The significance of each model was determined from its p-value. A model p-value below 0.10 was considered significant and indicated that the independent variables, as a group, reliably predicted (showed a significant relationship with) the dependent variable. The 0.10 threshold was used because of the small sample size available to test the hypotheses. The p-value for model 1 was below 0.10; therefore, the independent variables reliably predicted perceived ease of use. The r-square of 0.513 for this model indicated that approximately 51% of the variability in perceived ease of use was explained by the variables in the model. The adjusted r-square of 0.42 indicated that approximately 42% of the variability in perceived ease of use was accounted for by the model even after taking into account the number of predictor variables. In model 1, computer self-efficacy and system interface characteristics were the only significant predictors, and both influenced perceived ease of use positively. Consequently, the hypotheses that system interface characteristics are positively related to perceived ease of use (H1) and that computer self-efficacy is positively related to perceived ease of use (H2) were supported.

The p-value for model 2 was < 0.10 (p=0.69), indicating that the independent variables reliably predicted perceived usefulness. The r-square of 0.255 for this model indicated that approximately 26% of the variability in PERUSE was explained by the variables in the model. The adjusted r-square of 0.101 indicated that approximately 10% of the variability in PERUSE was accounted for by the model even after taking into account the number of predictor variables. In model 2, only perceived ease of use was a significant predictor; its coefficient was significantly different from 0. This result supported the hypothesis that PEOU is positively related to PERUSE (H3).

The p-value for model 3 was below 0.10, indicating that the independent variables reliably predicted system usage. The r-square of 0.829 for this model indicated that approximately 83% of the variability in SU was explained by the variables in the model. The adjusted r-square of 0.783 indicated that approximately 78% of the variability in SU was accounted for by the model even after taking into account the number of predictor variables.

In model 3, perceived usefulness, perceived ease of use, subjective norm and job-tasks change were the only significant predictors, with coefficients significantly different from 0; among the theoretical constructs, commitment to change was not significant. The significant variables influenced system usage positively, with the exception of job-tasks change, which influenced system usage negatively. Therefore, the hypotheses that PERUSE is positively related to system usage (H4), PEOU is positively related to system usage (H5), SN is positively related to system usage (H7) and JTC is negatively related to system usage (H8) were supported. The hypothesis that CTC is positively related to system usage (H6) was not supported.

The p-value for model 4 was > 0.10, indicating that the independent variables did not reliably predict system usage and that the model was not significant. Therefore, none of the hypotheses were supported by model 4.

Frequency of use does not appear to be an adequate measure of system usage in a mandatory use context, as reflected by the insignificance of the model and all of its variables. In a mandatory use context, the model that conceptualizes usage in terms of quality of use, as the ‘functions for which the system is used’, is significant and explains 73% of the variance in immunization registry usage. This percentage is higher than the percentage reported in the literature when TAM is not extended (45%–57%, and lower for field studies) [24].

System interface characteristics and computer self-efficacy do not uniquely contribute to the prediction of system use, but both variables influenced PEOU (a significant predictor of system use), demonstrating a mediating effect through PEOU. The mediating effect of PERUSE is also evident: PEOU is a significant determinant of PERUSE, and PERUSE is a significant predictor of system use. Therefore, PERUSE mediates between PEOU and SU. No moderating effects were demonstrated on the relationship between PERUSE and system usage or on the relationship between PEOU and system usage.
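The mediation reasoning above rests on two significant paths: from the antecedent to the mediator (path a) and from the mediator to the outcome while controlling for the antecedent (path b). A related way to quantify such mediation, shown here as a swapped-in illustration rather than the study’s stated method, is the product-of-coefficients approach: the indirect effect is a times b, and for OLS the total effect equals the direct effect c′ plus a times b. The function names and noise-free data are hypothetical, using PEOU as X, PERUSE as M and SU as Y.

```python
import numpy as np

def ols_coefs(y, *predictors):
    """Slope coefficients (intercept dropped) from an OLS fit of y on predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef[1:]

def indirect_effect(x, m, y):
    """Product-of-coefficients indirect effect a*b and direct effect c'."""
    a = ols_coefs(m, x)[0]              # path a: X -> M
    c_prime, b = ols_coefs(y, x, m)     # direct X -> Y and path b: M -> Y given X
    return a * b, c_prime

# Hypothetical data in which PEOU affects SU only through PERUSE.
peou = [0, 1, 2, 3, 4, 5]
perus = [2 * p + d for p, d in zip(peou, [1, -1, 0, 1, -1, 0])]
su = [0.5 + 3 * m for m in perus]
ab, c_direct = indirect_effect(peou, perus, su)   # c_direct close to 0: full mediation
```

Significance of a times b would normally be assessed with a bootstrap or a Sobel-type test, which is omitted from this sketch.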

Discussion

Understanding the factors that influence the use of an implemented public health information system, such as an immunization registry, is of great importance to those implementing the system and to those interested in using the technology to achieve positive public health outcomes. This study examined use from two perspectives: (1) frequency of use and (2) the functions for which the system was used. The study argued that the more widely used operationalization of usage, based on volume of usage (frequency of system use), would not be useful in a situation where system use was mandatory. The results supported this argument: the model with the more comprehensive operationalization of usage was significant, whereas the model that used frequency of use as the dependent variable was not.

This study demonstrated the applicability and predictive power of an extended and modified technology acceptance model (cTAM) in predicting the actual use of an immunization registry. The extended TAM explained a higher percentage of the variance in system usage than is reported for TAM when it is not extended. TAM thus proved to be an appropriate initial model for this study, and extending it increased its predictive power.

Extending TAM with context variables also extended the theory. All the context variables except commitment to change influenced use of the immunization registry. Contextualizing TAM therefore introduced new associations, showing that the social and work contexts contribute to explaining why an immunization registry is or is not used.

The model was not only extended but also modified to make it applicable in a mandatory use context. The high R² of the model suggests that this research model is appropriate for mandatory use contexts. The research question asked which factors significantly predict the use of a web-based immunization registry/IIS. Perceived usefulness, perceived ease of use, subjective norm, job-tasks change, system interface characteristics and computer self-efficacy significantly predicted the use of this study’s immunization registry.

As theorized, perceived usefulness, perceived ease of use, subjective norm and job-tasks change were found to have a significant influence on end-users’ use of the immunization registry. Perceived usefulness, perceived ease of use and subjective norm were positively related to immunization registry/IIS use, while job-tasks change was negatively related to it. Contrary to our postulation, commitment to change did not influence the use of the immunization registry. This finding can be attributed to the fact that end-users’ cognitive dissonance did not need to be reduced because system usage was mandated.

As postulated, perceived ease of use mediated the influence of system interface characteristics and computer self-efficacy on the use of the immunization registry. Additionally, as postulated, perceived usefulness was found to mediate the influence of perceived ease of use on the use of the immunization registry at a significance level of 0.10. In this study, subjective norm influenced immunization registry use the most. This finding was not surprising, given that study participants in clinics and local health departments view colleagues in the state health department as more important because of their governmental status. The immunization registry was also implemented free of charge, making the environment even more favorable for end-users to conform to others’ views. The study results also showed that neither the relationship between PERUSE and system usage nor the relationship between PEOU and system usage was moderated.

The study findings therefore show that end-users use an immunization registry if they think it is useful and easy to use, and if others who are important to them think they should use it. Their use is also negatively related to the extent to which the system implementation changes their job tasks, but it is not influenced by their commitment to the implementation. End-users perceive a system as easy to use when its ease is evident through a well-designed interface that minimizes cognitive effort and disorientation as they interact with the immunization registry. If end-users have a strong sense of their ability to use the registry, that is, high computer self-efficacy, they find the immunization registry easy to use. An end-user who believes that the immunization registry is easy to use also believes that using the system will enhance his or her job performance. High computer self-efficacy, a well-designed interface and the belief that the immunization registry is easy to use therefore contribute indirectly to end-users using the implemented registry as intended to perform job functions.

Some of these variables had been studied previously, and the results of this study are consistent with earlier findings. For instance, Venkatesh and Davis [14] studied four systems in four organizations, two involving voluntary usage and two involving mandatory usage. They found that in both the voluntary and the mandatory contexts, perceived usefulness and perceived ease of use significantly influenced system use behavior directly.

Studies have shown inconsistent results about how perceived ease of use influences usage behavior [9, 25]. For instance, it was found that perceived ease of use influenced system usage indirectly but not directly [26]. Another study showed that perceived ease of use did not influence system usage directly or indirectly [27]. Nevertheless, most studies have confirmed that perceived ease of use predicts system usage through perceived usefulness [9, 25, 28].

To increase the validity of this study’s findings, it was imperative to control for spurious effects. The study sought to minimize such effects by introducing control variables, so that effects observed in the dependent variable could be attributed with more confidence to the predictor (independent) variables rather than to other factors. In the significant models, the majority of control variables did not appear to contribute to the observed effect in the dependent variable.

The study controlled for other biases to minimize the likelihood of inconsistent estimates and to help ensure that the hypothesis tests were reliable. Self-selection error, for example, was controlled for. A web-based survey was not chosen as a data collection tool because data collected on the Internet could suffer from self-selection: Internet use varies among individuals, and those who are online more often are more likely to fill in a web survey.

This study also minimized recall error by asking users about recent events. Users were surveyed immediately after training on the system (before actual use of the system) and 3 months after they began using it.

Bias due to measurement error was minimized by using expert knowledge, empirical evidence and theory to ensure that constructs were represented by valid and reliable measures. The high Cronbach’s alphas indicated good internal consistency, suggesting that the items for each construct were measuring the same underlying construct.
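Cronbach’s alpha is computed from the item variances and the variance of respondents’ summed scores. The individual alphas are not reported in this section, so the Python sketch below uses made-up Likert responses purely to show the calculation; the data layout (one list per item) and the helper name `cronbach_alpha` are assumptions. A commonly cited rule of thumb treats an alpha of about 0.70 or higher as acceptable.

```python
from statistics import variance

def cronbach_alpha(items):
    """Cronbach's alpha for k items.

    `items` is a list of k lists; items[i][j] is respondent j's score on item i.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs a score from every respondent")
    # Each respondent's summed score across all items
    totals = [sum(item[j] for item in items) for j in range(n)]
    item_var = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var / variance(totals))

# Hypothetical 5-point responses from five respondents to three PEOU items
peou_items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
print(round(cronbach_alpha(peou_items), 2))
```

Sample variances (n - 1 denominator) are used for both the items and the totals, so the ratio is unaffected by the choice of denominator.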

The exogeneity assumption for regressors is violated when key variables are omitted from a research model. This study drew on the literature and theory to include exogenous variables in the research model, extending TAM. Experts confirmed content validity through the survey pre-test and focus groups. Questionnaire items that measure perceptions, beliefs, attitudes, judgments or other theoretical constructs are likely to contain measurement error because no physical measures correspond to these variables. In this study, however, using behavioral measures for the variables in the regression model mitigated this problem.

Systematic error, which makes survey results unrepresentative of the target population by distorting the survey estimates in one direction, was controlled for in this study. Unlike random error, this error does not balance out on average. Although it cannot be measured directly, the response rate was viewed as an appropriate indicator of it. In this study, the response rate of 77% was good and the sample size adequate, which helped minimize this error.

Random error, such as error introduced through data capture, was minimized in this study. Controlling for it was critical to ensure that results were neither overestimated nor underestimated. Data were captured on paper and transferred to SPSS. Because this transfer was prone to data entry errors, the entered data were checked three times against the survey data. Double verification was also applied: the same data were entered in SPSS on two separate occasions and the frequency data compared. Lastly, survey testing error was minimized by pre-testing the survey.
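The double-entry check described above can be sketched in Python. The study compared frequency data from the two SPSS entry passes; the sketch below reproduces that frequency comparison and, as an assumed extra step not described in the paper, a cell-by-cell comparison keyed on a respondent identifier. The field names (`id`, `q1`), the records and both helper names are hypothetical.

```python
from collections import Counter

def frequency_mismatches(pass1, pass2, field):
    """Values of `field` whose counts differ between two entry passes."""
    c1 = Counter(rec[field] for rec in pass1)
    c2 = Counter(rec[field] for rec in pass2)
    return {v: (c1[v], c2[v]) for v in set(c1) | set(c2) if c1[v] != c2[v]}

def cell_mismatches(pass1, pass2, key="id"):
    """(key, field, value1, value2) for every cell that differs between passes.
    A record missing from pass2 shows up with None as value2."""
    second = {rec[key]: rec for rec in pass2}
    diffs = []
    for rec in pass1:
        other = second.get(rec[key], {})
        for field, value in rec.items():
            if other.get(field) != value:
                diffs.append((rec[key], field, value, other.get(field)))
    return diffs

# Hypothetical passes in which two answers to item q1 were swapped
pass1 = [{"id": 1, "q1": 4}, {"id": 2, "q1": 5}, {"id": 3, "q1": 3}]
pass2 = [{"id": 1, "q1": 5}, {"id": 2, "q1": 4}, {"id": 3, "q1": 3}]
print(frequency_mismatches(pass1, pass2, "q1"))  # {}
print(cell_mismatches(pass1, pass2))  # [(1, 'q1', 4, 5), (2, 'q1', 5, 4)]
```

In this example the two errors offset each other, so the frequency comparison finds nothing while the cell-by-cell comparison flags both records. Checking flagged entries against the paper surveys, as the study did, is what determines which pass is correct.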

This study has both theoretical and practical implications. It conceptualized and operationalized use in a manner that was meaningful and applicable beyond volitional use, that is, as use of the immunization registry to support job functions in a mandatory use context. The more common conceptualizations of use in the literature had been specific to voluntary system use contexts and did not measure use adequately in mandatory use contexts. Researchers now have empirical evidence from a study that explored use in a mandatory use context. This research also provides other researchers with measures and data collection tools that can be used in mandatory use contexts.

Most previous operationalizations of use lacked comprehensiveness, and few studies related use to job functions. To measure use as conceptualized in this study, the operationalization needed to be granular and to capture the functions for which the immunization registry is used. It was therefore paramount that the conceptualization of use represented use of the immunization registry for specific job functions, and that the operationalization adequately measured this use.

There is no agreed-upon way of conceptualizing or operationalizing the use construct in the literature. Because it focuses on functions rather than on simpler measures such as frequency of use, this operationalization is applicable in a mandatory use context. The majority of studies have operationalized system usage for volitional contexts; this study therefore offers a new operationalization that future researchers studying use in a mandatory context can apply.

Conclusion

As more public health data become available and accessible through information systems, it will be critical to understand which factors could hamper effective and meaningful use, and to mitigate them beforehand. This study identifies variables that influence use and can serve as a guide for researchers and practitioners. The current national focus in the United States on meeting meaningful use requirements for electronic health records is concentrated mostly in clinical settings. However, public health agencies should adopt similar best practices and begin defining what is considered meaningful use and measuring what could affect that use. This study is among the few informatics studies based on a comprehensive and explicitly presented theoretical framework. The extended theoretical framework may generalize across disciplines and should be tested in other contexts.

Figure 2.

Graphical Presentation of Study Findings: Model Estimates and R² Shown.

Parameter estimates from Models 1, 2 and 3 are shown in the diagram; estimates preceded by an asterisk (*) are significant at p < 0.10.

R² is also shown; it is the proportion of variance in the dependent variable explained by the independent variables.

Note: The parameter estimates showing the direct effects on system use are from Model 3 (dependent variable: functions for which the system was used).

Footnotes

Conflict of Interest Statement

The author of this article, Dr. Wangia, declares: “I have no financial and personal relationships with other people or organizations that could inappropriately influence (bias) my work.”

References

- 1. Swanson EB. Information system implementation: Bridging the gap between design and utilization. Homewood, IL: Irwin; 1988.

- 2. Hasan B. The influence of specific computer experiences on computer self-efficacy beliefs. Computers in Human Behavior. 2003;19(4):443–450.

- 3. Freeman VA, DeFriese GH. The challenge and potential of childhood immunization registries. Annu Rev Public Health. 2003;24:227–246. doi: 10.1146/annurev.publhealth.24.100901.140831.

- 4. National Vaccine Advisory Committee (NVAC). Development of Community- and State-Based Immunization Registries. Washington, DC: NVAC; 1999.

- 5. Kolasa MS, et al. Practice-based electronic billing systems and their impact on immunization registries. Journal of Public Health Management and Practice. 2005;11(6):493–499. doi: 10.1097/00124784-200511000-00004.

- 6. Saga V, Zmud R. The nature and determinants of IT acceptance, routinization, and infusion. IFIP Transactions A (Diffusion, Transfer, and Implementation of Information Technology). 1994;45:67–86.

- 7. Amoako-Gyampah K, Salam AF. An Extension of the Technology Acceptance Model in an ERP Implementation Environment. Information & Management. 2004;41:731–745.

- 8. D’Ambra J, Wilson CS. Use of the World Wide Web for International Travel: Integrating the Construct of Uncertainty in Information Seeking and the Task-Technology Fit (TTF) model. Journal of the American Society for Information Science and Technology. 2004;55(8):731–742.

- 9. Davis FD. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly. 1989;13(3):319–340.

- 10. Davis FD, Bagozzi RP, Warshaw PR. User acceptance of computer technology: A comparison of two theoretical models. Management Science. 1989;35(8):982–1003.

- 11. Hu PJ, et al. Examining the technology acceptance model using physician acceptance of telemedicine technology. Journal of Management Information Systems. 1999;16(2):91–112.

- 12. Igbaria M, Guimaraes T, Davis GB. Testing the determinants of microcomputer usage via a structural model. Journal of Management Information Systems. 1995;11(4):87–114.

- 13. Szajna B. Empirical evaluation of the revised technology acceptance model. Management Science. 1996;42(1):85–92.

- 14. Venkatesh V, Davis F. A theoretical extension of the technology acceptance model: four longitudinal field studies. Management Science. 2000;46(2):186–204.

- 15. Ajzen I, Fishbein M. Understanding attitudes and predicting social behavior. Englewood Cliffs, NJ: Prentice-Hall; 1980.

- 16. Venkatesh V, Davis FD. A model of the antecedents of perceived ease of use: development and test. Decision Sciences. 1996;27(3):451–481.

- 17. Ma Q, Liu L. The Role of Internet Self-Efficacy in the Acceptance of Web-Based Electronic Medical Records. Journal of Organizational & End User Computing. 2005;17(1):8–57.

- 18. Malhotra Y, Galletta D. Extending the technology acceptance model to account for social influence: Theoretical bases and empirical validation. Hawaii International Conference on System Sciences; 1999.

- 19. Fishbein M, Ajzen I. Belief, Attitude, Intention, and Behavior: An Introduction to Theory and Research. Reading, MA: Addison-Wesley; 1975.

- 20. Orlikowski WJ, Robey D. Information Technology and the Structuring of Organizations. Information Systems Research. 1991;2(2):143–169.

- 21. Gill TG. Expert Systems Usage: Task Changes and Intrinsic Motivation. MIS Quarterly. 1996;20(3):301–329.

- 22. Ein-Dor P, Segev E. Organizational context and the success of management information systems. Management Science. 1978;24(10):1064–1077.

- 23. Hamilton S, Chervany NL. Evaluating information systems effectiveness, Part I. Comparing alternative approaches. MIS Quarterly. 1981;5(3):55–69.

- 24. Adams DA, Nelson RR, Todd PA. Perceived usefulness, ease of use, and usage of information technology: A replication. MIS Quarterly. 1992;16(2):227–247.

- 25. Keil M, Beranek PM, Konsynski BR. Usefulness and ease of use: Field study evidence regarding task consideration. Decision Support Systems. 1995;13(1):75–91.

- 26. van der Heijden H. User Acceptance of Hedonic Information Systems. MIS Quarterly. 2004;28(4):695–704.

- 27. Chau PYK. An empirical assessment of a modified technology acceptance model. Journal of Management Information Systems. 1996;13(2):185–204.

- 28. Chau PYK, Hu PJ. Investigating healthcare professionals’ decisions to accept telemedicine technology: an empirical test of competing theories. Information & Management. 2002;39(4):297–311.