Abstract

Cortical neuroprostheses for movement restoration require developing models for relating neural activity to desired movement. Previous studies have focused on correlating single-unit activities (SUA) in primary motor cortex to volitional arm movements in able-bodied primates. The extent of the cortical information relevant to arm movements remaining in severely paralyzed individuals is largely unknown. We recorded intracortical signals using a microelectrode array chronically implanted in the precentral gyrus of a person with tetraplegia, and estimated positions of imagined single-joint arm movements. Using visually guided motor imagery, the participant imagined performing eight distinct single-joint arm movements while SUA, multi-spike trains (MSP), multi-unit activity (MUA), and local field potential time-domain (LFPrms) and frequency-domain (LFPstft) signals were recorded. Using linear system identification, imagined joint trajectories were estimated with 20–60% variance explained, with wrist flexion/extension predicted the best and pronation/supination the poorest. Statistically, decoding of MSP and LFPstft yielded estimates that equaled those of SUA. Including multiple signal types in a decoder increased prediction accuracy in all cases. We conclude that signals recorded from a single restricted region of the precentral gyrus in this person with tetraplegia contained useful information regarding the intended movements of upper extremity joints.

Index Terms: BCI, BrainGate, spike, local field potential, paralysis, motor imagery

Introduction

This work investigated estimation of visually-guided imagined single-joint arm movement trajectories in a person with high tetraplegia. This work is part of a larger effort to develop cortically controlled functional electrical stimulation (FES) neuroprostheses for restoring whole-arm movements. Intact non-human primates have used action potentials (spikes) from neuronal ensembles to control real-time kinematics of 2D and 3D computer cursors [1], [2] and robotic devices [3–6], as well as to predict more cognitive parameters such as task goal [7], [8]. Temporarily paralyzed primates can control 1D FES systems for producing wrist torques [9], [10], and, more recently, whole-hand grasping [11], using single or ensemble neuron activities. Many of the aforementioned studies build decoders from signals recorded during actual arm movements of intact primates before using these decoders in closed-loop tasks. Until recently, it was unclear if these successes in healthy animals would translate to persons with permanent and prolonged paralysis, where decoders cannot be built from actual arm movements. Both motor-intact and paralyzed persons have now demonstrated control of communication devices and low-dimensional cursor movements using the electroencephalogram (EEG), electrocorticogram (ECoG), and local field potentials [12–15]. The initial BrainGate Clinical Trial involving persons with high tetraplegia demonstrated continuous two-dimensional cursor plus two-state control using spike ensembles recorded from a chronically implanted microelectrode array [16–20]. Recently, this Trial has demonstrated two- and three-dimensional control of robotic arms by persons with high tetraplegia [21]. Humans using brain-computer interfaces (BCIs) have yet to demonstrate cortical control of higher dimensional tasks, such as controlling a multi-joint arm in greater than three dimensions (for controlling arm position and hand orientation). 
In monkeys, full free arm reaching movements can be extracted from a small, local population of neurons in primary motor cortex (M1) [22]. In paralyzed persons, it is unclear what relevant arm control signals can be extracted from a single area of cortex that has not controlled arm movement in many years. Hence, the first aim of this study was to investigate, through the use of visually guided motor imagery (VGMI), if neural activity from M1 in a chronically implanted person with high tetraplegia was systematically and differentially modulated with respect to the trajectories of eight observed and imagined single-joint arm movements.

To date, intracortical BCIs have focused on sorted single unit action potentials (SUAs) as the neural feature of choice for decoding. However, recent studies in animals have demonstrated that spike trains extracted by applying a simple amplitude threshold can provide directional information, without explicit sorting of the spike waveform [23], [24], and can be decoded to yield closed-loop 2D cursor control in able-bodied monkeys. Applying a simple amplitude threshold may be advantageous because it eliminates "spike sorting", which can be time-consuming, computationally burdensome, and imprecise [25]. Other studies suggest that spike detection is unnecessary; rather, the continuous superimposed activity of many neurons (multi-unit activity, or MUA) can serve as an optimal encoder of upcoming movement parameters [26], [27]. Local field potentials (LFPs), which reflect the localized low frequency synaptic activity of a population of neurons, have also shown some promise in decoding. Some studies have reported that off-line decoding of LFPs recorded from able-bodied monkeys can yield estimates of arm direction and trajectory comparable, and in some cases superior, to those based on sorted spikes [28], [29], particularly in the parietal area where cognitive parameters [30], [31] can be predicted. A pair of studies involving reach-to-grasp movements by monkeys have shown that both low and high-frequency LFP signals are useful for offline prediction of arm endpoint kinematics and hand aperture, though ultimately reporting the LFP decoding performance to be inferior to SUA [32], [33]. In summary, no consensus exists as to which neural feature (or combination of features) is best for cortical control. Hence, as a second aim, this study investigated the abilities of different M1 neural features to encode information for predicting the trajectories of VGMI movements in a person with long-term paralysis. 
Preliminary portions of this work were reported in an IEEE conference proceedings [34].

Materials and Methods

A. Participant Information

The BrainGate2 pilot clinical investigation is conducted under an Investigational Device Exemption (Caution: Investigational Device Limited by Federal Law to Investigational Use.) from the US Food and Drug Administration. At the time of data collection, the participant was a fifty-five-year-old woman who had sustained a large pontine infarction nine years pre-enrollment. After completion of informed consent and medical and surgical screening, the 96-channel microelectrode array (Blackrock Microsystems, Salt Lake City, Utah, USA) was implanted into the M1 arm area knob using a pneumatic insertion technique [35]. Details of the human surgical procedure and the BrainGate system [19] have been previously reported.

B. Experimental Protocol

Experimental sessions were run 966 and 988 days post implantation of the microelectrode array. The research participant was seated comfortably in her wheelchair and placed 57 cm in front of a 15 inch flat-panel monitor. The monitor displayed a three-dimensional virtual world developed in-house using the Gamestudio (Conitech Datensysteme GmbH, La Mesa, CA) video game development environment. The participant had a first-person point-of-view of all movements made by an anthropomorphic virtual character displayed on the screen. Within a single block, the participant observed the virtual character performing a series of continuous single-joint arm movements (Figure 1). The movements included shoulder elevation in the 0° plane (SE@0) (shoulder ab/adduction), shoulder elevation in the 90° plane (SE@90) (shoulder flexion-extension), shoulder internal-external rotation (SIER), elbow flexion-extension (EFE), forearm pronation-supination (FPS), wrist flexion-extension (WFE), wrist ulnar-radial deviation (WURD), and hand opening-closing (HOC). Each observed joint movement traversed a 0.4 Hz sinusoidal trajectory lasting forty-five seconds, with a five second rest period between adjacent movements. Each single block contained two trials of each single-joint movement, with the order of observed movements randomized within a given block. The participant was instructed to “watch the visualization and imagine performing the same single-joint, speed-matched movement”. The participant viewed two thirteen-minute blocks of movements each day of recording.

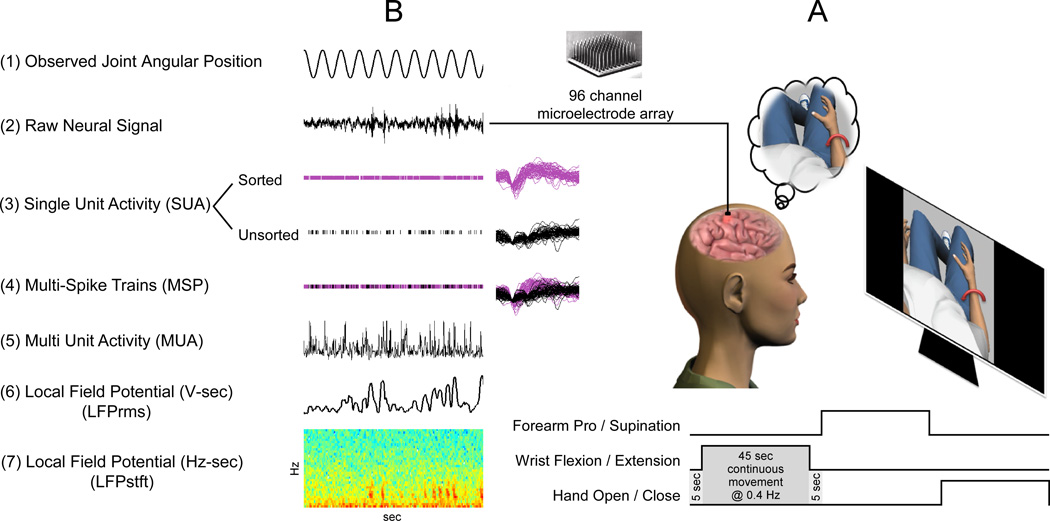

Figure 1.

(A) The participant observed and imagined performing eight single-joint continuous movements (see Methods for details). Each observed movement lasted 45 seconds, with 5 second rest breaks between consecutive movements. (B) During observation of the virtual movements (1), 30 kHz raw broadband neural data was continuously collected (2). Five neural feature types were extracted (3–7, see Methods, Signal Acquisition and Pre-processing) and used offline to predict the state and intended trajectories of the participant’s VGMI movements.

C. Signal Acquisition and Pre-processing

Full broadband neural data (0.3 Hz – 7.5 kHz, Fs = 30 kHz) were recorded from all 96 channels during each experimental session. Five different neural features were either collected online or derived offline: single-unit action potentials, multi-spike train action potentials, multi-unit neural activities, local field potential time-frequency signals, and local field potential time-amplitude signals (Figure 1B). The various features extracted from the recorded broadband neural data are summarized in Table 1.

TABLE 1.

Summary of Features used for Offline Decoding

| Feature Type | Feature Domain | Processing | No. of Features |
|---|---|---|---|
| Single-unit action potential (SUA) | Time – Firing Rate | Sorted online by human operator using time-amplitude windows applied to full broadband signal recorded at 30 kHz; windowed time stamps of action potentials result in instantaneous firing rates updated at 20 Hz | Day 1: 83 unsorted, 67 sorted (24 w/ SNR > 2.0); Day 2: 88 unsorted, 65 sorted (37 w/ SNR > 2.0) |
| Multi-spike trains (MSP) | Time – Firing Rate | Aggregates of sorted and unsorted SUAs on a single channel; firing rates are determined as described for SUA [23], [27] | Day 1: 83 MSP channels; Day 2: 88 MSP channels |
| Multi-unit activity (MUA) | Time – Firing Rate | Notch (60 Hz) and bandpass (300–6000 Hz) filtering of continuous broadband data sampled at 30 kHz; RMS filtered at 100 Hz; downsampled to 20 Hz update rate [27] | 96 continuous MUA features |
| Local field potential (LFPrms) | Time – Amplitude | Notch (60 Hz) and lowpass (200 Hz) filtering; downsample to 500 Hz; RMS filter to 20 Hz update rate | 96 continuous LFPrms channels |
| Local field potential (LFPstft) | Time – Frequency Power | Notch (60 Hz) and lowpass (200 Hz) filtering; downsample to 500 Hz; short-time Fourier transform determined temporally modulated power in α, β, γ, and γ+ frequency bands [29] | 96 channels × 4 frequency bands per channel = 384 LFPstft features |

Prior to the beginning of the movement imagery protocol, single unit action potentials (SUA) that crossed a manually adjusted threshold were isolated online by a human operator, based upon their waveform shapes relative to a time-amplitude window. Spikes that crossed the threshold but did not fit a time-amplitude window were classified as unsorted, and were included in subsequent analyses. As many as three sorted units and one unsorted unit were observed on a given electrode. On session day one, there were 83 unsorted units and 67 sorted units, 24 of which had a signal-to-noise ratio (SNR) > 2.0. On session day two, there were 88 unsorted and 65 sorted units, with 37 sorted units having an SNR > 2.0. Offline, the spiking activities of all unsorted and sorted action potentials on a single electrode were aggregated into the multiple spike train (MSP). Continuous firing rates were determined from the discrete time spikes for all sorted and unsorted SUA and MSP neural data by counting spikes in non-overlapping rectangular windows 50 ms long. Multi-unit neural activity (MUA) was derived offline for each electrode by notch (60 Hz) and bandpass filtering (300 – 6000 Hz) the full broadband neural data using 8th order Butterworth filters. A 1st order root-mean-square (RMS) filter at 100 Hz was applied to obtain the signal envelopes, followed by downsampling the signals to 20 Hz.
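The firing-rate computation described above (spike counts in non-overlapping 50 ms windows, with MSP formed by pooling all units on one electrode) can be sketched as follows. This is a minimal illustration, not the authors' code: the function names `bin_spike_times` and `aggregate_msp` and the toy spike times are hypothetical.

```python
import numpy as np

def bin_spike_times(spike_times_s, duration_s, bin_s=0.05):
    """Count spikes in non-overlapping rectangular windows; return rates in Hz.

    bin_s = 0.05 s corresponds to the 50 ms windows (20 Hz update rate) in the text.
    """
    n_bins = int(round(duration_s / bin_s))
    edges = np.arange(n_bins + 1) * bin_s
    counts, _ = np.histogram(spike_times_s, bins=edges)
    return counts / bin_s

def aggregate_msp(unit_spike_times):
    """Pool the spike times of all sorted and unsorted units on one electrode."""
    return np.sort(np.concatenate(unit_spike_times))

# Toy data (hypothetical): one sorted unit and the unsorted spikes on the same electrode.
sorted_unit = np.array([0.010, 0.020, 0.120])
unsorted_unit = np.array([0.030, 0.060])

sua_rate = bin_spike_times(sorted_unit, duration_s=0.15)
msp_rate = bin_spike_times(aggregate_msp([sorted_unit, unsorted_unit]), duration_s=0.15)
```

Each returned array has one value per 50 ms window, so the SUA and MSP features share the same 20 Hz time base as the other feature types.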

Local field potential features were derived offline by notch (60 Hz) and lowpass filtering (200 Hz) the full broadband neural data using 8th order Butterworth filters, followed by downsampling to 500 Hz. A 256-sample windowed spectrogram, with a 231-sample overlap, was applied to the neural data to obtain frequency domain short-time-Fourier-transformed signal (LFPstft), updated at 20 Hz. The time modulated average powers in the alpha (0 – 12 Hz), beta (12 – 30 Hz), gamma (30 – 60 Hz), and higher gamma (60 – 201 Hz) frequency bands were determined for each of the 96 electrodes and were used as inputs for the offline decoders. An RMS filter was applied to the time domain LFP to obtain the time domain envelope (LFPrms) for each channel.
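As a rough sketch of the LFPstft feature extraction, the code below computes average band power from 256-sample windows with a 231-sample overlap at 500 Hz, which gives a hop of 25 samples and therefore a 20 Hz update rate. The Hann window, the half-open band edges, and the function name `band_powers` are assumptions for illustration; the source does not state the window type or how band edges were handled.

```python
import numpy as np

FS = 500.0        # LFP sampling rate after downsampling (Hz)
NPERSEG = 256     # window length in samples
NOVERLAP = 231    # overlap in samples -> hop of 25 samples
BANDS = {"alpha": (0, 12), "beta": (12, 30), "gamma": (30, 60), "high_gamma": (60, 201)}

def band_powers(lfp, fs=FS, nperseg=NPERSEG, noverlap=NOVERLAP, bands=BANDS):
    """Return per-frame mean power in each band and the resulting update rate (Hz)."""
    hop = nperseg - noverlap
    n_frames = 1 + (len(lfp) - nperseg) // hop
    window = np.hanning(nperseg)  # assumption: window type is not given in the text
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    # Index matrix: one row of sample indices per overlapping frame.
    idx = np.arange(nperseg)[None, :] + hop * np.arange(n_frames)[:, None]
    power = np.abs(np.fft.rfft(lfp[idx] * window, axis=1)) ** 2
    out = {}
    for name, (lo, hi) in bands.items():
        mask = (freqs >= lo) & (freqs < hi)
        out[name] = power[:, mask].mean(axis=1)
    return out, fs / hop

# Toy signal (hypothetical): 10 s of a 20 Hz sinusoid, which falls in the beta band.
t = np.arange(5000) / FS
lfp = np.sin(2 * np.pi * 20 * t)
powers, update_hz = band_powers(lfp)
```

Applied to 96 electrodes, four bands per electrode would yield the 384 LFPstft features listed in Table 1.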

D. Predictions of Imagined Single-Joint Movements

We examined how much information each individual neural feature (i.e., the SUA of one unit, the MSP from one electrode, the MUA from one electrode, a single LFPstft frequency band from one electrode, and the LFPrms from one electrode) contained about the imagined continuous output trajectory of each observed joint. A neural feature x(t = 1..n) was related to an individual joint angle y(t = 1..n) through a linear impulse response filter (IRF) using single-input-single-output (SISO) linear system identification [36]. The IRF estimate Ĥxy was determined as the minimum mean squared error estimate that satisfied Y=XH, given by

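$$\hat{H}_{xy} = \left[\hat{h}(0),\ \hat{h}(1),\ \ldots,\ \hat{h}(m-1)\right]^{T} = \left(X^{T}X\right)^{-1}X^{T}Y \tag{1}$$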

where m is the memory length of the filter, and Y and the time-delayed X data matrices are given by

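$$Y = \begin{bmatrix} y(m) \\ y(m+1) \\ \vdots \\ y(n) \end{bmatrix}, \qquad X = \begin{bmatrix} x(m) & x(m-1) & \cdots & x(1) \\ x(m+1) & x(m) & \cdots & x(2) \\ \vdots & \vdots & \ddots & \vdots \\ x(n) & x(n-1) & \cdots & x(n-m+1) \end{bmatrix} \tag{2}$$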

[37]. IRFs were determined from training data (random 75% of all data samples) for each SISO combination of an individual feature and an individual joint for each trial on a given day, and used in cross-validation (remaining 25% of all data) to predict the trajectories of the remaining imagined movements. The predicted trajectories ŷ(t) were computed by

$$\hat{y}(t) = \hat{H}_{xy}(\tau) * x(t) \tag{3}$$

where ‘*’ denotes convolution and τ is the neural time history included in the filter. m is related to the time history τ by m = τ·f, where f = 20 Hz is the update rate. IRFs were estimated with τ ranging from 200 – 4800 ms in 400 ms steps. The goodness of fit of the estimated imagined trajectories relative to the observed joint trajectories was quantified by the variance accounted for (VAF%), defined as

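$$\mathrm{VAF\%} = \left(1 - \frac{\sum_{t}\left(y(t) - \hat{y}(t)\right)^{2}}{\sum_{t}\left(y(t) - \bar{y}\right)^{2}}\right) \times 100 \tag{4}$$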
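The single-input estimation and its goodness of fit can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: the IRF is taken to be the ordinary least-squares solution of Y = XH with a time-delayed input matrix, VAF% is taken to be one minus the ratio of residual to total sum of squares, and the helper names (`delay_matrix`, `vaf_percent`) and the synthetic data are hypothetical.

```python
import numpy as np

def delay_matrix(x, m):
    """Time-delayed input matrix: each row is [x(t), x(t-1), ..., x(t-m+1)]."""
    n = len(x)
    return np.column_stack([x[m - 1 - k : n - k] for k in range(m)])

def vaf_percent(y, y_hat):
    """Variance accounted for; negative when worse than predicting the mean of y."""
    return 100.0 * (1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2))

rng = np.random.default_rng(0)
f = 20.0                    # feature update rate (Hz)
tau_s = 0.2                 # time history of 200 ms
m = int(round(tau_s * f))   # memory length m = tau * f = 4 samples

# Synthetic feature and "joint angle" (hypothetical): a known 4-tap filter plus noise.
n = 400
x = rng.standard_normal(n)
h_true = np.array([0.5, 0.3, 0.2, 0.1])
y = np.convolve(x, h_true)[:n] + 0.1 * rng.standard_normal(n)

X, Y = delay_matrix(x, m), y[m - 1:]

# Random 75% of samples for training, remaining 25% for cross-validation.
rows = rng.permutation(len(Y))
n_train = int(0.75 * len(Y))
train, test = rows[:n_train], rows[n_train:]

H, *_ = np.linalg.lstsq(X[train], Y[train], rcond=None)  # least-squares IRF estimate
vaf = vaf_percent(Y[test], X[test] @ H)                  # cross-validation VAF%
```

Repeating the split with different random permutations, as the protocol does twenty times per feature and time history, would give the distribution from which a confidence interval of the mean VAF% can be formed.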

The optimal time history τi for a given single feature i was obtained by repeating the above described estimation process twenty times for each “feature – time history” combination (e.g. individual SUA at 600 ms), with the training and testing data sets randomized during each repetition. A 95% confidence interval of the average VAF% of the estimation was obtained for each “feature – time history” combination. A one-way analysis of variance (ANOVA), using multiple comparisons and Bonferroni corrections, determined for each single feature the minimum τ that statistically maximized the average VAF% of the estimation.

We then examined how well the population activities of each feature type predicted the angular positions of the visualized movements using multiple-input-single-output (MISO) system identification. For a given observed joint, using the SUA signals, we began with the single unit that allowed for the best prediction (i.e. largest VAF%) of the position of the given joint. Using a recursive feature addition (RFA) algorithm with optimized time histories, subsequent units were added to the input feature space based upon which gave the largest increase in VAF% of the cross-validation data. Given inputs x1..i(t) with optimized memory lengths m1..i, the input matrix X of equation (2) becomes

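$$X = \begin{bmatrix} X_{1} & X_{2} & \cdots & X_{i} \end{bmatrix}, \qquad X_{k} = \begin{bmatrix} \vdots & \vdots & & \vdots \\ x_{k}(t) & x_{k}(t-1) & \cdots & x_{k}(t-m_{k}+1) \\ \vdots & \vdots & & \vdots \end{bmatrix} \tag{5}$$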

and the estimated IRF of equation (1) becomes

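$$\hat{H} = \begin{bmatrix} \hat{H}_{x_{1}y}^{T} & \hat{H}_{x_{2}y}^{T} & \cdots & \hat{H}_{x_{i}y}^{T} \end{bmatrix}^{T} = \left(X^{T}X\right)^{-1}X^{T}Y \tag{6}$$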

Impulse response filters were built for training data consisting of input sets of 2, 3, 4, etc. features until one of three conditions was met: the number of features was exhausted, the cross-validation VAF% dropped statistically significantly below 80% of the maximum achieved VAF% for five consecutive iterations, or the VAF% became negative for five consecutive iterations. A negative VAF% implied that the given set of inputs was a worse predictor of the joint position than the position mean alone. Similarly, using RFA, the maximum VAF%s based upon the MSP, MUA, LFPrms, and LFPstft features were determined.
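The recursive feature addition procedure amounts to greedy forward selection on cross-validation VAF%. The sketch below is a simplified illustration under stated assumptions: it adds features until they are exhausted and then keeps the set with the highest cross-validation VAF%, rather than applying the statistical stopping rules described above; the least-squares fit, the sum-of-squares VAF% definition, and all function names and synthetic data are hypothetical.

```python
import numpy as np

def delayed(x, m, start):
    """Columns x(t), x(t-1), ..., x(t-m+1) for t = start..n-1 (needs start >= m-1)."""
    n = len(x)
    return np.column_stack([x[start - k : n - k] for k in range(m)])

def vaf_percent(y, y_hat):
    return 100.0 * (1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2))

def cv_vaf(features, mems, y, chosen, train, test):
    """Fit a multiple-input decoder on training rows; score it on held-out rows."""
    start = max(mems) - 1  # common first row so all feature blocks stay aligned
    X = np.hstack([delayed(features[i], mems[i], start) for i in chosen])
    Y = y[start:]
    H, *_ = np.linalg.lstsq(X[train], Y[train], rcond=None)
    return vaf_percent(Y[test], X[test] @ H)

def recursive_feature_addition(features, mems, y, train, test):
    """Greedily add the feature giving the largest cross-validation VAF%."""
    remaining = list(range(len(features)))
    chosen, curve = [], []
    while remaining:
        scores = [cv_vaf(features, mems, y, chosen + [i], train, test) for i in remaining]
        best = int(np.argmax(scores))
        chosen.append(remaining.pop(best))
        curve.append(scores[best])
    n_best = int(np.argmax(curve)) + 1  # simplification: keep the best-scoring prefix
    return chosen[:n_best], curve

# Synthetic example (hypothetical): only features 0 and 1 carry information about y.
rng = np.random.default_rng(1)
n = 600
F = rng.standard_normal((5, n))
mems = [2, 2, 2, 2, 2]
y = 0.8 * F[0] + 0.5 * np.roll(F[1], 1) + 0.1 * rng.standard_normal(n)

rows = rng.permutation(n - (max(mems) - 1))
n_train = int(0.75 * len(rows))
selected, curve = recursive_feature_addition(F, mems, y, rows[:n_train], rows[n_train:])
```

In this toy case the first two selected features are the informative ones, and the cross-validation curve flattens or falls once uninformative features are appended, which mirrors the over-fitting behavior the stopping rules are meant to catch.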

Finally, we examined the effectiveness of combining neural feature types to predict the imagined continuous joint angles. The RFA algorithm was implemented with the input feature space including pairs of feature types. For example, one possible feature space could include the firing rates of several single-unit action potentials, as well as the alpha and gamma frequency bands of the LFPstft. RFA was applied to all paired combinations of signal types (e.g. MSP+SUA, MSP+MUA, MSP+LFPrms, etc.) to determine which combination of inputs was most effective at predicting each imagined joint trajectory.

Results

A. Prediction of Single-Joint Positions from Individual Features

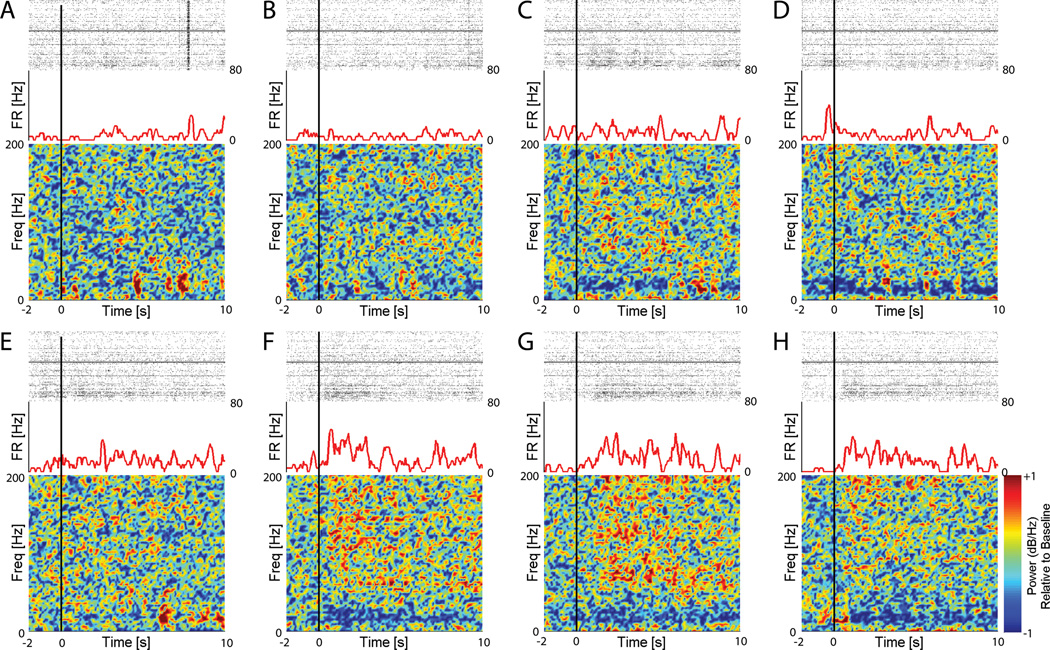

Figure 2 shows an example of the neural responses to each of the eight movement imageries (one panel per imagery). The example SUA shows a clear increase in firing rate after onset of wrist flexion/extension (WFE), wrist deviation (WURD), and hand opening/closing (HOC) imageries (panels F – H). This increased activity is not observed after onset of other imageries (panels A – E). Additionally, after onset of WFE, WURD, and HOC imageries, there is a clear decrease in low frequency LFP relative power (more blue) and an increase in higher frequency LFP relative power (more red). Similar behavior was observed in other SUAs that showed preferential activity for the other imageries. The systematic changes observed in the high and low LFP bands are qualitatively similar to what has been reported during ECoG-based movement and cognitive tasks [38].

Figure 2.

Each panel A – H shows the neural responses during VGMI of each of eight movements (A – SE@0, B – SE@90, C – SIER, D – EFE, E – FPS, F – WFE, G – WURD, H – HOC). Within each panel, the raster plot (top) shows activity from all recorded SUA during the given VGMI. The firing rate (FR) plot (middle) shows the activity of a selected SUA during the VGMI. The color plot (bottom) shows the LFP response (0–201 Hz in 3 Hz increments) relative to baseline on the selected electrode. The black vertical line represents onset of the movement visualization. Note the increase in activity of the selected unit at the onset of (F) WFE, (G) WURD, and (H) HOC imageries. Also note in F, G, and H the decrease in relative power in lower LFP frequencies (as evidenced by increased blue), and increase in relative power in higher LFP frequencies (as evidenced by increased red) after imagery onset.

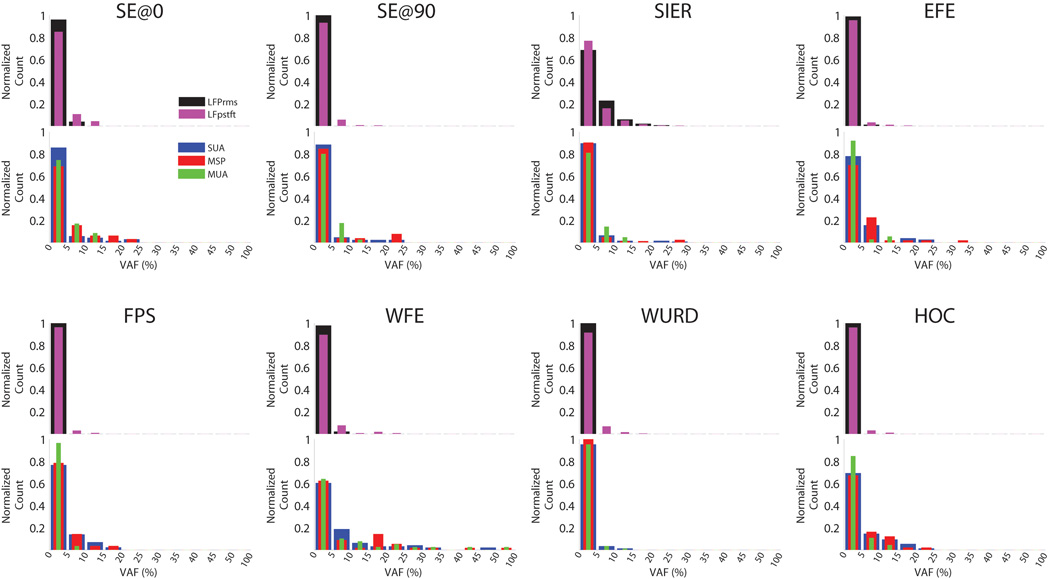

Single-input-single-output system identification (SID) revealed how well the trajectories of the observed movements were predicted from each individual feature. Across all sessions, 83.2 ± 8.4% of features needed no more than 200 ms of time history included in the SID decoder to statistically maximize the VAF% of their predictions. Figure 3 shows the amount of movement information (VAF%) contained in individual features for each imagined joint movement, across all recording sessions. The majority of features individually predicted less than 5% of the total variance in the observed movement trajectories, though with some notable exceptions. For example, discrete neural signals (SUA, MSP) had the broadest VAF% distribution of all signal types, and the responses of many SUA and MSP features each accounted for greater than 15% of the variance of the imagined movement trajectories. This was particularly true during the imagination of shoulder abduction-adduction (SE@0), wrist flexion-extension (WFE), and hand open-close (HOC). The imagined WFE trajectory could be predicted at greater than 40% VAF by several individual SUA, MSP, and MUA features. The VAF% of the multi-unit activities (MUA) was primarily concentrated between 0 and 5%. However, again in the cases of SE@0, WFE, and HOC, MUA recorded from several electrodes could each account for more than 15% of the variance of the imagined movements. The distributions of VAF% of SUA, MSP, and MUA were similar for each imagined movement. The LFPrms and LFPstft single features had VAF% distributions primarily concentrated between 0 and 5% for most movements, though during shoulder internal-external rotation (SIER), LFP features were found that each accounted for 5–20% of the variance.

Figure 3.

Each panel shows, for a given VGMI, the normalized distributions of VAF% of the trajectories predicted by single neural features (i.e. 1 SUA, 1 LFP frequency band, etc.) across all experimental sessions. Features whose estimates were worse than the position mean (i.e. negative VAF%) are omitted. Across all imageries, SUA, MSP, and MUA had wider VAF% distributions than LFPrms and LFPstft.

B. Decoding of Single-Joint Positions from Population Activity

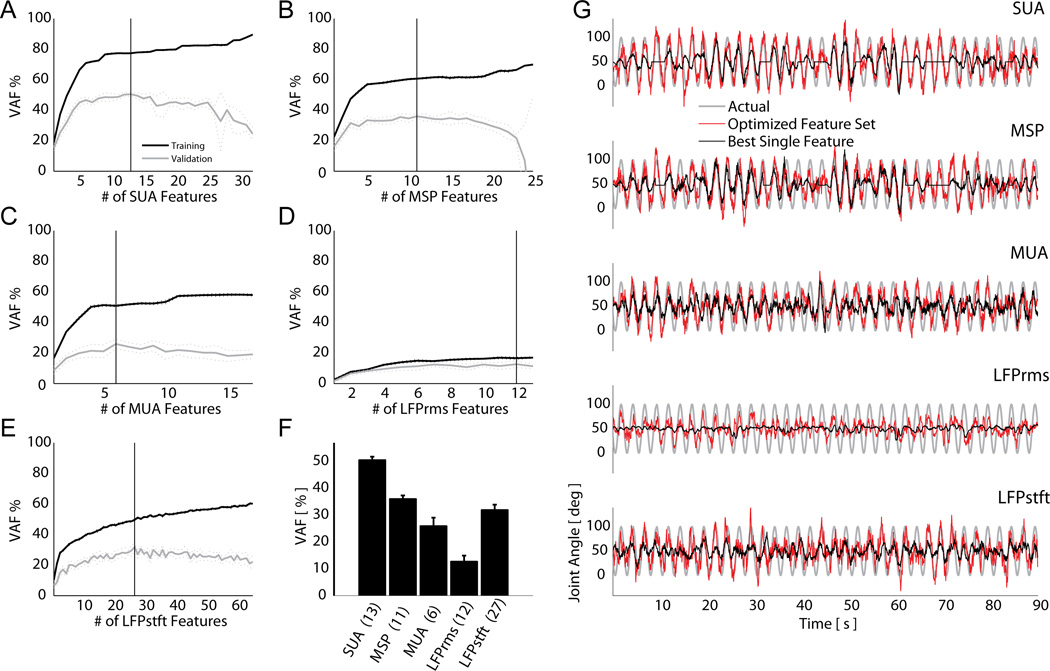

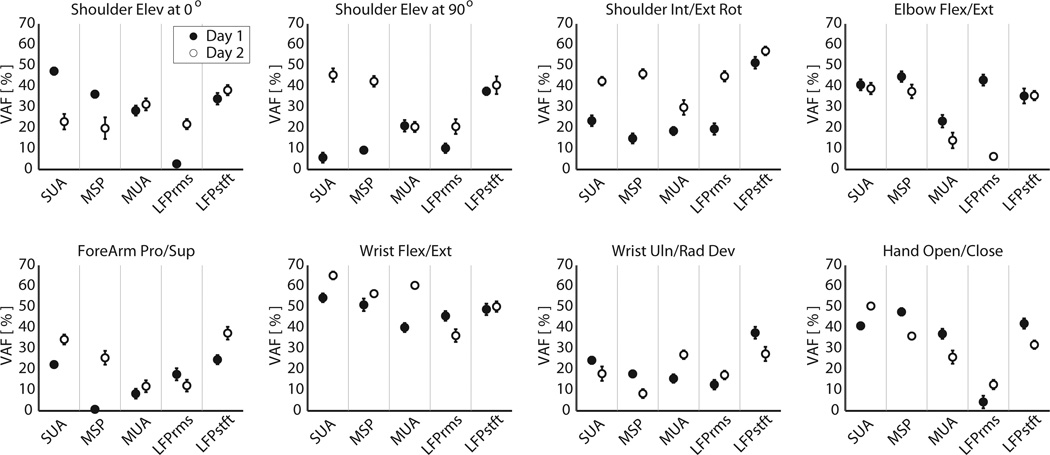

Using recursive feature addition (RFA) with optimized time histories, we determined the optimal set of inputs to include in the multiple-input-single-output decoder for each feature type. As an example, Figure 4A–E shows the results of recursively adding more features to the decoder to predict imagined hand opening-closing for each feature type. Addition of neural features monotonically increased the accuracies of the predicted trajectories of the imagined joint movements in the training data set. However, addition of features past the optimal feature set hindered the prediction accuracy in the cross-validation data (panels A–E). Specifically, the effect of over-fitting was to decrease the smoothness of the prediction by introducing higher-frequency noise elements. For imagined hand opening-closing, the discrete SUA and MSP features provided more accurate predictions (i.e. higher VAF%) than the continuous MUA or LFP based feature sets (panel F). Plots of the observed versus predicted kinematics are shown in Figure 4G. Figure 5 summarizes the accuracies of the RFA-optimized prediction VAF%s for all imagined movements across all recording sessions. These RFA-optimized sets of features were rank ordered for each experimental session and each imagined movement, from best (1) to worst predictor (5). Features that gave statistically similar predictions, based upon the 95% confidence interval of the VAF% mean, were assigned the same ranking. Complete ranking results are reported in Table 2. While there existed some variability between session days with respect to the ranking of the signal features for a given imagined movement, some general trends become evident when examining the feature rankings over all imagined movements. LFPstft and SUA features were consistently the two best predictors of all imagined joint movements, with comparable average rankings of 1.56 and 1.69 respectively. These features were followed in rank order by MSP, MUA, and LFPrms. 
To assess if these rankings were statistically significant, the results of the RFA were subjected to a non-parametric multiple-repetition Friedman’s test. Similar to a balanced 2-way analysis of variance, Friedman’s test assessed the data for effects of the neural feature type, while balancing for any possible effects of the imagined movement type or movement-feature interactions [39]. On both session day 1, and session day 2, the effect of the feature type used for prediction proved to be statistically significant (p<0.001 for both days). Follow-up pairwise comparison tests, with appropriate Tukey LSD corrections for multiple comparisons, revealed that there was no statistically significant difference between the LFPstft, SUA, and MSP feature types for predicting all imagined movements, though MSP generally lagged both LFPstft and SUA in its overall ranking. MUA and LFPrms produced statistically similar prediction results to each other, but were not statistically similar to LFPstft, SUA, and MSP. These ranking results were consistent across both session days.

Figure 4.

(A–E) Recursive feature addition (RFA) was used to determine the optimal feature set for each signal type. Shown are curves of VAF% (mean ± 95% CI) when RFA was applied to each signal type during the hand open-close VGMI task. A vertical line in each plot delineates the optimal feature set during cross-validation for the given signal type, which is summarized in the bar graph (F). Numbers at the bottom of the bar graph show the number of features in the optimal set. (G) Cross-validation plots of the observed (grey) and the estimated imagined hand aperture using an optimized feature set (red) versus the best individual feature (black) for each signal type.

Figure 5.

Mean +/− 95% CI of VAF% when predicting continuous trajectories using an optimal set of features of each individual signal type.

TABLE 2.

Feature Rankings (1=best, 5=worst) for Prediction of Imagined Trajectories for each session day

| Feature | SE@0 ● | SE@0 ○ | SE@90 ● | SE@90 ○ | SIER ● | SIER ○ | EFE ● | EFE ○ | FPS ● | FPS ○ | WFE ● | WFE ○ | WURD ● | WURD ○ | HOC ● | HOC ○ | Average Rank |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SUA | 1 | 3 | 3 | 1 | 2 | 2 | 1.5 | 1 | 1 | 1 | 1 | 1 | 2 | 3 | 2.5 | 1 | 1.69 ± 0.8 |
| MSP | 2 | 3 | 3 | 1 | 3 | 2 | 1 | 1 | 4 | 3 | 1.5 | 3 | 3 | 5 | 1 | 2 | 2.41 ± 1.2 |
| MUA | 4 | 2 | 2 | 4 | 3 | 5 | 5 | 4 | 3 | 4 | 3 | 2 | 3.5 | 1 | 3 | 4 | 3.28 ± 1.1 |
| LFPrms | 5 | 3 | 3 | 4 | 2.5 | 2 | 1 | 5 | 2 | 4 | 2 | 5 | 4 | 3 | 4 | 5 | 3.41 ± 1.3 |
| LFPstft | 2 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 2 | 4 | 1 | 1 | 2 | 3 | 1.56 ± 0.9 |
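As a quick arithmetic check, the average ranks in the last column of Table 2 can be recomputed from the sixteen per-movement, per-session entries in each row. The snippet below is only a worked example of that averaging; the dictionary layout is hypothetical, and the spread is computed as a sample standard deviation, which is an assumption because the table does not say how the ± values were obtained.

```python
import numpy as np

# Sixteen rank entries per feature type, copied row by row from Table 2.
ranks = {
    "SUA":     [1, 3, 3, 1, 2, 2, 1.5, 1, 1, 1, 1, 1, 2, 3, 2.5, 1],
    "MSP":     [2, 3, 3, 1, 3, 2, 1, 1, 4, 3, 1.5, 3, 3, 5, 1, 2],
    "MUA":     [4, 2, 2, 4, 3, 5, 5, 4, 3, 4, 3, 2, 3.5, 1, 3, 4],
    "LFPrms":  [5, 3, 3, 4, 2.5, 2, 1, 5, 2, 4, 2, 5, 4, 3, 4, 5],
    "LFPstft": [2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 2, 4, 1, 1, 2, 3],
}

mean_rank = {name: float(np.mean(r)) for name, r in ranks.items()}
# Assumption: sample standard deviation (ddof=1); the table does not specify.
spread = {name: float(np.std(r, ddof=1)) for name, r in ranks.items()}

# Feature types ordered from best (lowest mean rank) to worst.
ordering = sorted(mean_rank, key=mean_rank.get)
```

The recomputed means round to the tabulated 1.69, 2.41, 3.28, 3.41, and 1.56, and the resulting order (LFPstft, SUA, MSP, MUA, LFPrms) matches the ranking stated in the text.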

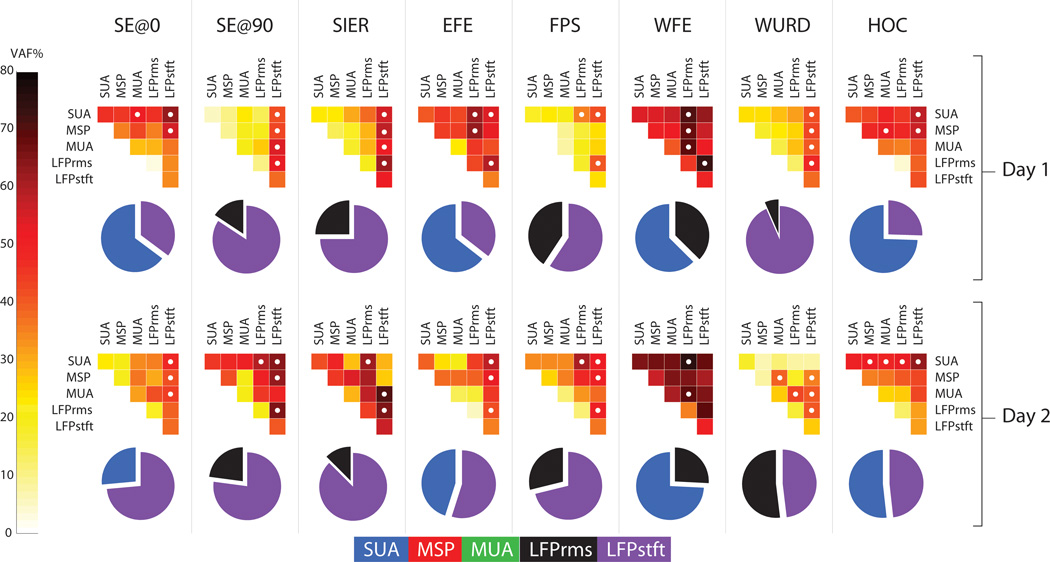

Figure 6 shows, for both session days, prediction results when pairs of feature types (e.g. SUA+MSP, MUA+LFPrms, etc.) were considered for decoding. Each upper-triangular color plot shows the mean VAF% resulting from predicting an imagined movement (each column) using decoders which included a single feature type (main diagonal) versus including pairs of feature types (plot interiors). In all cases, there was a significant advantage when combining feature types for neural decoding over using just a single feature type, as evidenced by the darker colors in the plot interiors. Overall, decoding of imagined shoulder movements (SE@0, SE@90, SIER) improved by an average of 12.8%, 18.6%, and 11.7% respectively using paired feature types over single feature types. Likewise, decoding of imagined EFE and FPS increased by 19.3% and 20% respectively. Finally, decoding of imagined distal movements (WFE, WURD, HOC) increased by 14.6%, 13.1% and 11.0% respectively. All increases in decoding accuracy were statistically significant based upon the confidence intervals of the mean (p < 0.05, paired t-test). In many cases, differing combinations of features resulted in statistically similar prediction accuracies. These are designated by white circles in the Figure 6 triangular plots. Each pie chart in Figure 6 shows, for each imagined movement, the composition of the decoder of paired feature types that resulted in the highest prediction VAF%, consistent across both recording sessions. Each slice in the pie chart represents the portion of the total VAF% of the imagined movement prediction that is attributable to the stated neural feature. Over all movements, the LFPstft feature accounted for a larger portion of the explained variance than any other feature, accounting for 46.9% of the variance explained by the predictions on session day one and 56.0% on session day two. 
The next most useful signal for prediction in the paired signal analysis was the SUA, which accounted for 40.9% of the variance explained by the predictions on session day one and 31.2% on session day two.

Figure 6.

The top panel shows complete data from the first recording session and the bottom panel shows complete data from the second recording session. The upper triangular color plots compare the results of using a single neural feature type in the decoder (plot diagonals) versus using paired combinations of signal types (plot interiors). Lighter plot diagonals and darker plot interiors indicate that average VAF% was enhanced by using pairs of signal feature types (e.g. SUA + LFPstft) over a single signal feature type. White dots in the interior squares indicate those pairings which gave statistically equivalent maximal VAF%s (Friedman test, p < 0.05) for prediction of the given movement. The corresponding pie chart underneath each color plot shows the contribution of each signal type in the best pairing that was consistent across both recording sessions.

Discussion

The information encoded by a variety of intracortical signals related to executed arm movements has been widely investigated in the able-bodied monkey model. The present study demonstrated that in a person with prolonged and severe paralysis, cortical signals remaining post-injury carry significant information for predicting visually-guided imagined single-joint arm movements. Statistically, ensemble SUA and LFPstft were equally useful for predicting imagined single-joint arm movements, followed in rank by MSP, and then by MUA and LFPrms. Additionally, this study demonstrated that using pairs of signal modalities, such as SUA+LFPstft, enhanced the accuracies of the predictions.

Three recent studies have shown that using the same single 96-microelectrode array recording over M1 in a neurologically intact non-human primate, 25 arm related degrees-of-freedom (DOFs) could be predicted with high fidelity based upon sorted unit activities or high or low frequency local field potentials during random reaches [22], [32], [33]. In the present study, each of eight single-joint DOFs was predicted with varying success. Consistent with placement of the array in the distal arm and hand area of M1 [19], wrist flexion-extension and hand opening-closing were predicted more reliably than the other imagined joint movements. The finding of our study, that ensemble LFPstft decoding was on par with, and in some cases exceeded, ensemble SUA decoding, is in contrast to two of these studies. Zhuang [33], using high frequency LFP bands, and Bansal [32], using low frequency LFP bands, both suggest that their decoding of arm kinematics in monkeys using LFPs was inferior to using sorted spikes (SUA). More specifically, Bansal noted that while the median decoding accuracy using an individual low frequency LFP band exceeded that using a single unit, the decoding accuracy using multiple SUAs exceeded that using multiple low frequency LFP bands as the number of available spikes increased. It should be noted that in many instances, LFP decoding exceeded SUA decoding in the average case (see [32], Figure 6). The difference in findings of our study may be due to the difference in task (purely imagined versus executed movement), may be due to the current study simultaneously using both low and high frequency LFP bands for decoding, or may be because the LFP is possibly better for decoding slower or smoother movements [32], such as those observed and imagined in the present investigation.

In all cases, including multiple feature types, and particularly the LFPstft features, within the decoder resulted in a more accurate prediction of joint angle position. Other investigators who have reported LFP decoding accuracies to be comparable to SUA decoding accuracies have also reported this increase in decoding accuracy when combining LFPs with other signal types [28]. This suggests that in the current participant each of the different signal types, and the LFP in particular, contained partially distinct information about the imagined movements. The superposition and/or interaction of multiple feature types within a single decoder provided a more holistic and accurate picture of the imagined movement kinematics. Thus, future efforts may be better spent decoding the simultaneous activities of multiple feature types, including LFPs, to increase the accuracy of decoding imagined movements.

A critical assumption of this study is that the intended trajectories imagined by the paralyzed participant were the same as those observed. Some studies have suggested that paralyzed persons may exhibit impaired motor imagery ability, including abnormal temporal characteristics [40], [41]. Despite this potential imagery deficiency, the decoded trajectories of the imagined joint movements matched well with the observed joint movements after optimization of the neural feature set. Persons with spinal cord injury and brainstem stroke retain a good portion of the motor planning program [42], [43], and thus are able to produce appropriate mental imagery for modulating neural activities related to arm movement. The use of visual guidance significantly aided the study participant in performing the mental imagery tasks. The instruction to imagine the visually guided movements, rather than to make an actual attempt at the movement, was given to allow the study participant to mentally simulate the movements at a reasonable speed, without resulting in self-reported over-exertion. Several studies have indicated that, particularly in persons with paralysis, imagined movements result in qualitatively similar neural activity to attempted movements, though with reduced amplitude. Furthermore, typical inhibitory mechanisms for movement suppression are greatly weakened in paralyzed persons [44], [45]. Thus, the present results would seem to be largely unaffected by any actual attempts at movement by the participant.

There is a question as to whether the observed modulation in the spiking activity for any movement was due to actual imagination of the movement, or due to the presentation of the visual stimulus. A number of studies have suggested that the initial single unit and neural population responses in M1 may be due to the presentation of the visual stimulus and not the desired motor plan. For example, Georgopoulos and colleagues showed that when monkeys are required to make movements in a direction orthogonal to that of the initial visual stimulus, a mental rotation of the neuronal population vector occurs from stimulus direction to movement direction before the actual movement occurs [46], [47]. Zhang and colleagues showed similarly that M1 activity encodes aspects of the visual stimulus, the necessary directional transformation between stimulus and target, and finally the movement itself [48]. In both the Georgopoulos and Zhang studies, the transformation in M1 from a visual stimulus-related to a behavioral response-related representation was complete in under 400 ms. The initial M1 activity in the present study may have been in response to the visual input, though it is unlikely that the visual representation dominated over the M1 response-related representation during the entire course of the imagined movements. Because the visual stimulus was continuously present during the current protocol, the associated M1 response may also have been continually present, and our experimental design would not have been able to tease apart what part of the response was due to each. However, it could be argued that any apparent visual representation in M1 was most likely due to an automatically activated feed-forward motor program that preceded the movement representation in M1 [48].

Though the present work focused on decoding single-joint movements from M1, the results have implications for realizing a cortically controlled multi-joint FES arm neuroprosthesis. Because of the seminal work of Georgopoulos and colleagues, which showed that the direction of hand movement in global space is encoded in single cell discharges and by the neural population vector [49–51], the field has largely focused on decoding arm endpoint kinematics from neural activities, in the global coordinate frame. Velliste and colleagues recently showed that a monkey could cortically operate a five-DOF robotic arm by controlling the endpoint position. The joint angles necessary to achieve the desired endpoint position were determined by an inverse kinematics solution, which had to implement constraints on the elbow elevation angle to achieve natural-looking movements [4]. Previous BrainGate studies also used an inverse kinematics and inverse dynamics model to convert endpoint control of a virtual arm into muscle activations for controlling elbow and shoulder movements in a two-dimensional plane [20]. This non-trivial inverse kinematics problem is made more complicated when attempting to solve for an eight-DOF arm moving in three-dimensional space, and even more so when attempting to determine, through inverse dynamics, the muscle activations required to achieve the desired joint angles. A more direct approach may be to decode joint positions (and related joint torques and muscle activations) in the intrinsic body coordinate frame, particularly since FES systems act directly to control these body coordinate frame DOFs. Ethier and colleagues achieved restored functional hand grasping in a temporarily paralyzed monkey [11] by directly decoding the required muscle activations to achieve the desired joint angles. 
The results of the present study show that joint angle information, in the body coordinate frame, can be directly decoded for the multi-joint shoulder, elbow, and wrist, in a person with high tetraplegia. Future work will investigate in parallel A) cortical control of arm joints in the body coordinate frame, particularly focusing on the coordination of the single-joint movements into a seamless multi-joint arm reach, and B) cortical control of the endpoint in the global coordinate frame, using a real-time 3-D musculoskeletal arm model [52] for solving the inverse kinematics and dynamics.
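The ambiguity that makes endpoint control non-trivial can be seen even in a toy case. The sketch below uses a two-link planar arm, which is an assumption made purely for illustration and is far simpler than the eight-DOF arm discussed above; the segment lengths and function names are hypothetical, and this is not the inverse kinematics model used in [4] or [20]. For a reachable endpoint, the closed-form solution returns two distinct joint configurations (elbow flexed in either direction), so an endpoint decoder needs an additional constraint to choose between them, whereas a decoder that outputs joint angles directly specifies the configuration itself.

```python
import math


def forward_kinematics(shoulder, elbow, upper_arm=0.30, forearm=0.25):
    """Endpoint (x, y) of a planar two-link arm; angles in radians, lengths in meters."""
    x = upper_arm * math.cos(shoulder) + forearm * math.cos(shoulder + elbow)
    y = upper_arm * math.sin(shoulder) + forearm * math.sin(shoulder + elbow)
    return x, y


def inverse_kinematics(x, y, upper_arm=0.30, forearm=0.25):
    """Return both (shoulder, elbow) solutions that place the endpoint at (x, y)."""
    dist_sq = x * x + y * y
    cos_elbow = (dist_sq - upper_arm ** 2 - forearm ** 2) / (2 * upper_arm * forearm)
    if abs(cos_elbow) > 1.0:
        raise ValueError("target is outside the reachable workspace")

    solutions = []
    # The law of cosines fixes only the magnitude of the elbow angle,
    # so both signs are valid postures for the same endpoint.
    for elbow in (math.acos(cos_elbow), -math.acos(cos_elbow)):
        shoulder = math.atan2(y, x) - math.atan2(
            forearm * math.sin(elbow), upper_arm + forearm * math.cos(elbow)
        )
        solutions.append((shoulder, elbow))
    return solutions


if __name__ == "__main__":
    target = (0.35, 0.20)
    for shoulder, elbow in inverse_kinematics(*target):
        reached = forward_kinematics(shoulder, elbow)
        print(
            f"shoulder={math.degrees(shoulder):7.2f} deg, "
            f"elbow={math.degrees(elbow):7.2f} deg -> "
            f"endpoint=({reached[0]:.3f}, {reached[1]:.3f})"
        )
```

With more joints than endpoint coordinates the number of valid postures becomes infinite rather than two, which is why constraints such as the elbow elevation angle in [4] are needed.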

The two main limitations of this study are that data were available from only one participant, and that the data analysis was performed offline, rather than in an online closed-loop experiment requiring coordination. Though there is some debate as to the extent to which results from offline analyses can be extrapolated to online closed-loop control experiments, owing to biofeedback and neural adaptation [53], the results of the present study are informative with regard to the presence in the investigated participant of a variety of neural activities in response to visually guided cues of imagined limb movements, and to how best to combine multiple neural features for decoding. These findings provide strong motivation to test the utility of these multi-modal arm joint decoders in closed-loop human BCI control studies of the BrainGate2 Clinical Trial.

Acknowledgment

We thank participant S3 for her dedication to this research. We thank Joris Lambrecht, MS for his expertise in the development of the 3D virtual environment.

AB Ajiboye was supported by a Career Development Award-1 through the Dept. of Veterans Affairs Rehabilitation Research and Development Service (RR&D). The work presented here was primarily supported by the Natl. Inst. of Health (NIH) through the Eunice Kennedy Shriver Natl. Inst. on Child Health and Human Development (NICHD, N01-HD-53403). This study was enabled through additional support for the BrainGate2 pilot clinical trial by RR&D, Office of Research and Development, Department of Veterans Affairs (Merit Review Awards: B6453R and A6779I) and by the NIH through the NICHD (RC1HD063931), the Natl. Inst. on Neurological Disorders and Stroke (R01NS-25074), the Natl. Inst. of Biomedical Imaging and Bioengineering (R01EB007401-01), the Natl. Inst. on Deafness and Other Communication Disorders (R01DC009899), and the Natl. Center for Research Resources (C06-16549-01A1). This work was also supported in part by the Doris Duke Charitable Foundation; the MGH-Deane Institute of Integrated Research on Atrial Fibrillation and Stroke, and the Katie Samson Foundation.

Biographies

A. Bolu Ajiboye received the dual BSE degree in biomedical and electrical engineering, with a minor in computer science, from Duke University in 2000. He received the PhD degree in biomedical engineering at Northwestern University under Richard F. ff. Weir in 2007, where he was a National Institutes of Health Pre-doctoral Fellow (F31). He is currently a research scientist in the Cleveland VA Medical Center, where he holds a Career Development Award. He is also an Assistant Professor in the Biomedical Engineering Dept. at Case Western Reserve University. Dr. Ajiboye’s research interests include investigation of BCI technologies for controlling neuroprostheses for restoring arm and hand function to severely motor impaired individuals.

John D. Simeral received the B.S. degree in Electrical Engineering from Stanford University in 1985, the M.S. in Electrical and Computer Engineering from the University of Texas at Austin in 1989, and in 2003 received the Ph.D. in Neuroscience from Wake Forest University School of Medicine as a pre-doctoral Ruth L. Kirschstein fellow. He was a postdoctoral fellow with Dr. John Donoghue in the Dept. of Neuroscience at Brown University. Dr. Simeral is currently an Assistant Professor (Research) in the School of Engineering at Brown University and a Research Biomedical Engineer for the Dept. of Veterans Affairs Rehabilitation Research and Development Service. His research focuses on extending our understanding of the neural basis of movement and developing neural prosthetic systems with the potential to enhance communication and independence for individuals with severe motor disability.

Leigh R. Hochberg is Associate Professor of Engineering, Brown University; Investigator, Center for Restorative and Regenerative Medicine, Rehabilitation R&D Service, Providence VA Medical Center; and Visiting Associate Professor of Neurology, Harvard Medical School. He maintains clinical duties in Stroke and Neurocritical Care at Massachusetts General Hospital and Brigham and Women’s Hospital, and is on the consultation staff at Spaulding Rehabilitation Hospital. Leigh directs the Laboratory for Restorative Neurotechnology at Brown and MGH, as well as the pilot clinical trials of the BrainGate2 Neural Interface System. His research is focused on developing and testing implanted neural interfaces to help people with paralysis and other neurologic disorders.

John P. Donoghue (M’03) was born in Cambridge, MA, on March 22, 1949. He received the Ph.D. degree from Brown University, Providence, RI, in 1979, and postdoctoral training from the National Institute of Mental Health. He is currently the Wriston Professor of Neuroscience and Engineering and the Director of the Brown Institute for Brain Science, Brown University. He is also with the Rehabilitation R&D Service, Dept. of Veterans Affairs, Washington, DC. His research interests include understanding of how the brain turns thought into movement and the development of the BrainGate neural interface system to restore control and independence for people with paralysis. Prof. Donoghue is a Fellow of the American Institute for Medical and Biological Engineering and the American Association for the Advancement of Science.

Robert F. Kirsch received the B.S. degree in electrical engineering from the University of Cincinnati, Cincinnati, OH, in 1982, and the M.S. and Ph.D. degrees in biomedical engineering from Northwestern University, Evanston, IL, in 1986 and 1990, respectively. He was a Postdoctoral Fellow in the Dept. of Biomedical Engineering at McGill University, Montréal, QC, Canada, from 1990 to 1993. He is currently Professor of Biomedical Engineering at Case Western Reserve University, Cleveland, OH, and Associate Director for Technology in the Cleveland VA FES Center, Cleveland, OH. His research focuses on restoring movement to disabled individuals using functional electrical stimulation (FES) and controlling FES actions via natural neural commands. Computer-based models of the human upper extremity are used to develop new FES approaches. FES user interfaces, including ones based on brain recordings, are being developed to provide FES users with the ability to command movements of their own arm.

Contributor Information

A. Bolu Ajiboye, Case Western Reserve University (Dept. of Biomedical Engineering) and the Dept. of Veterans Affairs Cleveland VA Medical Center (RR&D, FES Center of Excellence), Cleveland, OH, 44106, USA (ph: 216.791.3800 x4141; fax: 216.778.4259; aba20@case.edu).

John D. Simeral, Dept. of Veterans Affairs (RR&D) Providence VA Medical Center, Providence, RI, 02908, USA and the School of Engineering, Brown Univ., Providence, RI, 02912, USA (john_simeral@brown.edu).

John P. Donoghue, Dept. of Veterans Affairs (RR&D) Providence VA Medical Center, Providence, RI 02908 USA and the Dept. of Neuroscience, Institute for Brain Science Program, Brown Univ., Providence, RI, 02912, USA. He is also with the Dept. of Veterans Affairs (RR&D), Providence, RI 02908 USA (john_donoghue@brown.edu).

Leigh R. Hochberg, Dept. of Veterans Affairs (RR&D) Providence VA Medical Center, Providence, RI 02908 USA, the Dept. of Neurology, Massachusetts General Hospital, Brigham and Women’s Hospital, and Spaulding Rehabilitation Hospital, Harvard Medical School, Boston, MA, 02114, USA, and the School of Engineering. He is also with the Dept. of Neuroscience Brain Science Program, Brown Univ., Providence, RI, 02912, USA, and the Dept. of Veterans Affairs (RR&D), Providence, RI 02908 USA (leigh_hochberg@brown.edu).

Robert F. Kirsch, Case Western Reserve University (Dept. of Biomedical Engineering) and the Dept. of Veterans Affairs Cleveland VA Medical Center (RR&D, FES Center of Excellence), Cleveland, OH, 44106, USA (rfk3@case.edu).

References

- 1. Serruya MD, Hatsopoulos NG, Paninski L, Fellows MR, Donoghue JP. Instant neural control of a movement signal. Nature. 2002;vol. 416(no. 6877):141–142. doi: 10.1038/416141a.

- 2. Taylor DM, Tillery SIH, Schwartz AB. Direct cortical control of 3D neuroprosthetic devices. Science. 2002 Jun;vol. 296(no. 5574):1829–1832. doi: 10.1126/science.1070291.

- 3. Taylor DM, Tillery SIH, Schwartz AB. Information conveyed through brain-control: cursor versus robot. IEEE Trans Neural Syst Rehabil Eng. 2003 Jun;vol. 11(no. 2):195–199. doi: 10.1109/TNSRE.2003.814451.

- 4. Velliste M, Perel S, Spalding MC, Whitford AS, Schwartz AB. Cortical control of a prosthetic arm for self-feeding. Nature. 2008 Jun;vol. 453(no. 7198):1098–1101. doi: 10.1038/nature06996.

- 5. Carmena JM, et al. Learning to control a brain-machine interface for reaching and grasping by primates. PLoS Biology. 2003 Nov;vol. 1(no. 2):193–208. doi: 10.1371/journal.pbio.0000042.

- 6. Wessberg J, et al. Real-time prediction of hand trajectory by ensembles of cortical neurons in primates. Nature. 2000 Nov;vol. 408(no. 6810):361–365. doi: 10.1038/35042582.

- 7. Santhanam G, Ryu SI, Yu BM, Afshar A, Shenoy KV. A high-performance brain-computer interface. Nature. 2006 Jul;vol. 442(no. 7099):195–198. doi: 10.1038/nature04968.

- 8. Musallam S, Corneil B, Greger B, Scherberger H, Andersen R. Cognitive control signals for neural prosthetics. Science. 2004 Jul;vol. 305(no. 5681):258–262. doi: 10.1126/science.1097938.

- 9. Moritz CT, Perlmutter SI, Fetz EE. Direct control of paralysed muscles by cortical neurons. Nature. 2008 Dec;vol. 456(no. 7222):639–642. doi: 10.1038/nature07418.

- 10. Pohlmeyer EA, et al. Toward the restoration of hand use to a paralyzed monkey: brain-controlled functional electrical stimulation of forearm muscles. PLoS One. 2009 Jan;vol. 4(no. 6):e5924. doi: 10.1371/journal.pone.0005924.

- 11. Ethier C, Oby ER, Bauman MJ, Miller LE. Restoration of grasp following paralysis through brain-controlled stimulation of muscles. Nature. 2012 Apr;vol. 485(no. 7398):368–371. doi: 10.1038/nature10987.

- 12. McFarland DJ, Sarnacki WA, Wolpaw JR. Electroencephalographic (EEG) control of three-dimensional movement. J Neural Eng. 2010 Jun;vol. 7(no. 3):036007. doi: 10.1088/1741-2560/7/3/036007.

- 13. Birbaumer N, et al. A spelling device for the paralysed. Nature. 1999 Mar;vol. 398(no. 6725):297–298. doi: 10.1038/18581.

- 14. Kennedy PR, Kirby MT, Moore MM, King B, Mallory A. Computer control using human intracortical local field potentials. IEEE Trans Neural Syst Rehabil Eng. 2004 Sep;vol. 12(no. 3):339–344. doi: 10.1109/TNSRE.2004.834629.

- 15. Schalk G, et al. Two-dimensional movement control using electrocorticographic signals in humans. J Neural Eng. 2008 Mar;vol. 5(no. 1):75–84. doi: 10.1088/1741-2560/5/1/008.

- 16. Kim S-P, Simeral JD, Hochberg LR, Donoghue JP, Friehs GM, Black MJ. Point-and-click cursor control with an intracortical neural interface system by humans with tetraplegia. IEEE Trans Neural Syst Rehabil Eng. 2011 Apr;vol. 19(no. 2):193–203. doi: 10.1109/TNSRE.2011.2107750.

- 17. Simeral JD, Kim S-P, Black MJ, Donoghue JP, Hochberg LR. Neural control of cursor trajectory and click by a human with tetraplegia 1000 days after implant of an intracortical microelectrode array. J Neural Eng. 2011 Apr;vol. 8(no. 2):025027. doi: 10.1088/1741-2560/8/2/025027.

- 18. Kim S-P, Simeral JD, Hochberg LR, Donoghue JP, Black MJ. Neural control of computer cursor velocity by decoding motor cortical spiking activity in humans with tetraplegia. J Neural Eng. 2008 Dec;vol. 5(no. 4):455–476. doi: 10.1088/1741-2560/5/4/010.

- 19. Hochberg LR, et al. Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature. 2006 Jul;vol. 442:164–171. doi: 10.1038/nature04970.

- 20. Chadwick EK, et al. Continuous neuronal ensemble control of simulated arm reaching by a human with tetraplegia. J Neural Eng. 2011 Jun;vol. 8(no. 3):034003. doi: 10.1088/1741-2560/8/3/034003.

- 21. Hochberg LR, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012 May;vol. 485(no. 7398):372–375. doi: 10.1038/nature11076.

- 22. Vargas-Irwin CE, Shakhnarovich G, Yadollahpour P, Mislow JMK, Black MJ, Donoghue JP. Decoding complete reach and grasp actions from local primary motor cortex populations. J Neurosci. 2010 Jul;vol. 30(no. 29):9659–9669. doi: 10.1523/JNEUROSCI.5443-09.2010.

- 23. Fraser GW, Chase SM, Whitford A, Schwartz AB. Control of a brain-computer interface without spike sorting. J Neural Eng. 2009 Oct;vol. 6(no. 5):055004. doi: 10.1088/1741-2560/6/5/055004.

- 24. Ventura V. Spike train decoding without spike sorting. Neural Comput. 2008 Apr;vol. 20(no. 4):923–963. doi: 10.1162/neco.2008.02-07-478.

- 25. Harris K, Henze D, Csicsvari J. Accuracy of tetrode spike separation as determined by simultaneous intracellular and extracellular measurements. J Neurophysiol. 2000:401–414. doi: 10.1152/jn.2000.84.1.401.

- 26. Bullock TH. Signals and signs in the nervous system: the dynamic anatomy of electrical activity is probably information-rich. Proc Natl Acad Sci U S A. 1997 Jan;vol. 94(no. 1):1–6. doi: 10.1073/pnas.94.1.1.

- 27. Stark E, Abeles M. Predicting movement from multiunit activity. J Neurosci. 2007 Aug;vol. 27(no. 31):8387–8394. doi: 10.1523/JNEUROSCI.1321-07.2007.

- 28. Mehring C, Rickert J, Vaadia E, Cardoso de Oliveira S, Aertsen A, Rotter S. Inference of hand movements from local field potentials in monkey motor cortex. Nat Neurosci. 2003;vol. 6(no. 12):1253–1254. doi: 10.1038/nn1158.

- 29. Rickert J, Oliveira SCD, Vaadia E, Aertsen A, Rotter S, Mehring C. Encoding of movement direction in different frequency ranges of motor cortical local field potentials. J Neurosci. 2005 Sep;vol. 25(no. 39):8815–8824. doi: 10.1523/JNEUROSCI.0816-05.2005.

- 30. Andersen RA, Musallam S, Pesaran B. Selecting the signals for a brain-machine interface. Curr Opin Neurobiol. 2004 Dec;vol. 14(no. 6):720–726. doi: 10.1016/j.conb.2004.10.005.

- 31. Scherberger H, Jarvis MR, Andersen RA. Cortical local field potential encodes movement intentions in the posterior parietal cortex. Neuron. 2005 Apr;vol. 46(no. 2):347–354. doi: 10.1016/j.neuron.2005.03.004.

- 32. Bansal AK, Vargas-Irwin CE, Truccolo W, Donoghue JP. Relationships among low-frequency local field potentials, spiking activity, and three-dimensional reach and grasp kinematics in primary motor and ventral premotor cortices. J Neurophysiol. 2011 Apr;vol. 105(no. 4):1603–1619. doi: 10.1152/jn.00532.2010.

- 33. Zhuang J, Truccolo W, Vargas-Irwin C, Donoghue JP. Decoding 3-D reach and grasp kinematics from high-frequency local field potentials in primate primary motor cortex. IEEE Trans Biomed Eng. 2010 Jul;vol. 57(no. 7):1774–1784. doi: 10.1109/TBME.2010.2047015.

- 34. Ajiboye AB, Hochberg LR, Donoghue JP, Kirsch RF. Application of system identification methods for decoding imagined single-joint movements in an individual with high tetraplegia. Conf Proc IEEE Eng Med Biol Soc. 2010 Jan;vol. 2010:2678–2681. doi: 10.1109/IEMBS.2010.5626629.

- 35. Rousche PJ, Normann RA. A method for pneumatically inserting an array of penetrating electrodes into cortical tissue. Ann Biomed Eng. 1992 Jan;vol. 20(no. 4):413–422. doi: 10.1007/BF02368133.

- 36. Hunter IW, Kearney RE. Two-sided linear filter identification. Med Biol Eng Comput. 1983 Mar;vol. 21(no. 2):203–209. doi: 10.1007/BF02441539.

- 37. Westwick DT, Pohlmeyer EA, Solla SA, Miller LE, Perreault EJ. Identification of multiple-input systems with highly coupled inputs: application to EMG prediction from multiple intracortical electrodes. Neural Comput. 2006 Feb;vol. 18(no. 2):329–355. doi: 10.1162/089976606775093855.

- 38. Gaona CM, et al. Nonuniform high-gamma (60-500 Hz) power changes dissociate cognitive task and anatomy in human cortex. J Neurosci. 2011 Feb;vol. 31(no. 6):2091–2100. doi: 10.1523/JNEUROSCI.4722-10.2011.

- 39. Hollander M, Wolfe DA. Nonparametric Statistical Methods. Hoboken, NJ: John Wiley & Sons, Inc.; 1999.

- 40. Malouin F, Richards CL, Jackson PL, Lafleur MF, Durand A, Doyon J. The Kinesthetic and Visual Imagery Questionnaire (KVIQ) for Assessing Motor Imagery in Persons with Physical Disabilities: A Reliability and Construct Validity Study. J Neurol Phys Ther. 2007 Mar;vol. 31(no. 1):20–29. doi: 10.1097/01.npt.0000260567.24122.64.

- 41. Malouin F, Richards CL, Desrosiers J, Doyon J. Bilateral slowing of mentally simulated actions after stroke. Neuroreport. 2004;vol. 15(no. 8):1349–1353. doi: 10.1097/01.wnr.0000127465.94899.72.

- 42. Sirigu A, et al. Congruent unilateral impairments for real and imagined hand movements. Neuroreport. 1995 May;vol. 6(no. 7):997–1001. doi: 10.1097/00001756-199505090-00012.

- 43. Hotz-Boendermaker S, et al. Preservation of motor programs in paraplegics as demonstrated by attempted and imagined foot movements. Neuroimage. 2008 Jan;vol. 39(no. 1):383–394. doi: 10.1016/j.neuroimage.2007.07.065.

- 44. Lacourse MG, Cohen MJ, Lawrence KE, Romero DH. Cortical potentials during imagined movements in individuals with chronic spinal cord injuries. Behav Brain Res. 1999;vol. 104:73–88. doi: 10.1016/s0166-4328(99)00052-2.

- 45. Alkadhi H, et al. What disconnection tells about motor imagery: evidence from paraplegic patients. Cerebral Cortex. 2005 Feb;vol. 15(no. 2):131–140. doi: 10.1093/cercor/bhh116.

- 46. Georgopoulos AP, Lurito JT, Petrides M, Schwartz AB, Massey JT. Mental rotation of the neuronal population vector. Science. 1989;vol. 243:234–236. doi: 10.1126/science.2911737.

- 47. Lurito JT, Georgakopoulos T, Georgopoulos AP. Cognitive spatial-motor processes. Exp Brain Res. 1991;vol. 87:562–580. doi: 10.1007/BF00227082.

- 48. Zhang J, Riehle A, Requin J, Kornblum S. Dynamics of single neuron activity in monkey primary motor cortex related to sensorimotor transformation. J Neurosci. 1997 Mar;vol. 17(no. 6):2227–2246. doi: 10.1523/JNEUROSCI.17-06-02227.1997.

- 49. Georgopoulos A, Kettner R. Primate motor cortex and free arm movements to visual targets in three-dimensional space. I. Relations between single cell discharge and direction of movement. J Neurosci. 1988 Aug;vol. 8(no. 8):2913–2927. doi: 10.1523/JNEUROSCI.08-08-02913.1988.

- 50. Georgopoulos AP, Kettner RE, Schwartz AB. Primate motor cortex and free arm movements to visual targets in three-dimensional space. II. Coding of the direction of movement by a neuronal population. J Neurosci. 1988;vol. 8:2928–2937. doi: 10.1523/JNEUROSCI.08-08-02928.1988.

- 51. Georgopoulos AP, Schwartz AB, Kettner RE. Neuronal Population Coding of Movement Direction. Science. 1986;vol. 233(no. 4771):1416–1419. doi: 10.1126/science.3749885.

- 52. Chadwick EK, Blana D, van den Bogert AJ, Kirsch RF. A Real-Time, 3-D Musculoskeletal Model for Dynamic Simulation of Arm Movements. IEEE Trans Biomed Eng. 2009;vol. 56:941–948. doi: 10.1109/TBME.2008.2005946.

- 53. Rouse AG, Moran DW. Neural adaptation of epidural electrocorticographic (EECoG) signals during closed-loop brain computer interface (BCI) tasks. Conf Proc IEEE Eng Med Biol Soc. 2009:5514–5517. doi: 10.1109/IEMBS.2009.5333180.