Abstract

Most people with addiction seek help by calling a local addiction treatment clinic, and the reception they get matters. This paper reports on the phone scheduling systems that patients encounter when seeking addiction treatment. Researchers made a series of 28 monthly calls to 192 addiction treatment clinics to inquire about each clinic’s first available appointment for an assessment. Each month, the date of each clinic’s first available appointment and the date the appointment was made were recorded. During a 4-month baseline data collection period, the average waiting time from contact with the clinic to the first available appointment was 7.3 days. Clinics then engaged in a 15-month quality improvement intervention, by the end of which average waiting time had been reduced to 5.8 days. During the course of the study, researchers noted difficulty in contacting clinics and began recording the date of each additional attempt required to secure an appointment. On average, 0.47 callbacks were required to establish contact with clinics and schedule an appointment. Based on these findings, aspects of quality in phone scheduling processes are discussed. The results highlight variation in access to addiction treatment and suggest opportunities to improve phone scheduling processes.

1. Introduction

In the United States addiction treatment system, individuals often begin treatment by calling a local addiction treatment clinic. The initial call to schedule an appointment may be a patient’s first experience with addiction treatment, and research has demonstrated that patients respond to burdensome factors such as waiting time in deciding whether to enter treatment (Acton, 1975; Chun, Guydish, Silber, & Gleghorn, 2008; Sorensen et al., 2007). People with addiction generally have a low tolerance for waiting (Kaplan & Johri, 2000). Between 25% and 50% of patients placed on waiting lists are never admitted to treatment (Festinger et al., 1995; Hser et al., 1998; Stark, Campbell, & Brinkerhoff, 1990). Patients may continue to use alcohol and/or illicit drugs while waiting (Rosenbaum, 1995), and patients who are able to abstain during a waiting period often presume their abstinence means they do not need treatment (Redko, Rapp, & Carlson, 2006). Despite this evidence, lengthy waiting times are common in addiction treatment clinics (Carr et al., 2008).

Navigation problems may also impede access to treatment. The qualitative analysis of Ford et al. (2007) reported on 327 clinics that conducted “walk-throughs” of their processes to understand what a patient experiences when attempting to access treatment. Their analysis cited problems with phone access or first contact in 95 of the 319 clinics (approximately 30%) reporting walk-through results. Examples of phone access problems included calls being routed to the wrong person, staff members giving inconsistent information, and confusing phone systems making it difficult to leave a message or reach the appropriate person. Cumbersome phone scheduling systems may force patients to call several times before getting an appointment. During these delays, the need to satisfy a craving can overtake the intent to seek treatment.

As in addiction treatment, patients in primary care often face delays and obstacles to accessing treatment. In primary care, Murray and Berwick (2003) used an approach to waiting time measurement based on appointment availability that we have extended to addiction treatment clinics on a wide scale. Our goal is to quantitatively assess the phone scheduling processes that callers face in addiction treatment clinics and propose quality measures to assess and help improve phone access to addiction treatment.

2. Materials and methods

Data for this analysis come from the NIATx 200 study, a cluster-randomized trial of organizational change that compared quality improvement strategies across addiction treatment clinics in five states—Massachusetts, Michigan, New York, Oregon, and Washington. NIATx (the Network for the Improvement of Addiction Treatment) is a research center at the University of Wisconsin-Madison that promotes quality improvement in addiction treatment and coordinated the study.

Improvement collaboratives are a common method of quality improvement in healthcare, and yet little is known about which components of collaboratives are most effective. The NIATx 200 study tested which components most cost-effectively reduce waiting time to treatment, improve retention in treatment, and increase the number of new patients in addiction treatment clinics. Clinics were randomized within states to one of four groups, each of which used a different component of collaboratives: (1) interest circle calls (group teleconferences hosted by quality improvement experts), (2) coaching (individualized consulting by experts in quality improvement), (3) learning sessions (face-to-face meetings between quality improvement experts and clinic teams), and (4) the combination of all three components.

The study recruited outpatient and intensive-outpatient clinics at community addiction-treatment organizations. To be eligible, clinics needed to treat at least 60 patients annually, receive public funding, and have no previous experience with the quality improvement model used by NIATx. A total of 201 clinics were recruited, representing 174 unique organizations. Organizations could designate up to 4 clinics to participate, although most organizations (155 of 174) designated a single clinic.

Interventions were delivered at the clinic level. The project began with a six-month intervention focused on waiting time reduction. Besides the designated collaborative component, the intervention included a set of recommended practices, instructions, and quality improvement tools. The study was designed to provide the same content to all participants, varying only in the type of support provided (interest circle calls, coaching, learning sessions, or a combination of the three). The intervention phase was followed by a nine-month sustainability period for monitoring waiting time improvements. Details of the study have been published elsewhere (Quanbeck et al., 2011).

In their work on reducing waiting time in primary care, Murray and Berwick (2003) developed a simple waiting time measurement approach that is based on the “third next available appointment.” Comparing the current date with the date of an open appointment in the future measures the number of days a patient has to wait to get an appointment. Murray and Berwick advocated using the third available appointment (rather than the first available appointment) to prevent a skewing of the data resulting from some patients getting immediate access because of last-minute no-shows and cancellations, while most patients wait longer. In contrast, we used the first available appointment because the improvement model used in NIATx 200 explicitly recommended practices such as walk-in availability and open scheduling. No-shows are a fact of life in addiction treatment, and the research team did not want to calculate waiting time in a way that penalized clinics that are flexible in meeting patient demand.
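The difference between the two conventions can be illustrated with a minimal Python sketch. The function name `days_to_nth_available` and the slot dates are hypothetical; the sketch assumes a clinic's open assessment slots are known as a list of dates and that a slot on the request date itself counts as a wait of zero days.

```python
from datetime import date


def days_to_nth_available(request_date: date, open_slots: list, n: int = 1) -> int:
    """Return calendar days from request_date to the n-th open appointment.

    Slots dated before the request are ignored; a slot on the request date
    itself counts (a waiting time of 0 days, as with a walk-in).
    """
    future = sorted(d for d in open_slots if d >= request_date)
    if len(future) < n:
        raise ValueError(f"fewer than {n} open appointments on or after {request_date}")
    return (future[n - 1] - request_date).days


# Hypothetical open assessment slots for one clinic: a same-day opening
# (e.g., freed by a last-minute cancellation) followed by the regular schedule.
request = date(2008, 3, 3)
slots = [date(2008, 3, 17), date(2008, 3, 3), date(2008, 3, 10), date(2008, 3, 12)]

first_available = days_to_nth_available(request, slots, n=1)
third_next_available = days_to_nth_available(request, slots, n=3)
print(first_available, third_next_available)  # prints "0 9"
```

With the same-day opening, the first available appointment yields a 0-day wait while the third next available yields 9 days. That gap is the skew Murray and Berwick sought to avoid, and it is also the flexibility (walk-ins, open scheduling) that the first-available measure is meant to credit.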

For the primary evaluation of NIATx 200, each of the five states supplied patient-level data that were used to calculate each clinic’s average waiting time from request to treatment. Primary waiting time measures are calculated from patients’ actual dates of service, which are stored in administrative systems that can be difficult to access; moreover, at baseline, none of the states collected the date of patients’ first request for treatment. The states had to change their data systems to collect this information; the effort required and the significance of these changes are reported in Hoffman et al. (2011).

Because of the data systems’ limitations at baseline, and because clinics need ready access to performance data to conduct improvement projects, the research team launched an effort to request an alternative waiting time measure: the first available assessment appointment for a new patient. The research team called each clinic monthly to request this information, which was used to calculate a waiting time measure that the research team fed back to clinics every six months. Clinic teams could use this measure to monitor waiting time during their project work.

The analysis reported in this paper focuses on the waiting time measure collected during phone calls made to clinics to request the first available appointment for an assessment. Time to the first available assessment does not fully capture waiting time to treatment, because patients do not usually begin treatment on the same day as the assessment. However, clinics can theoretically shorten patients’ overall waiting time by reducing time to the first available appointment.

During data collection calls, callers identified themselves as researchers (i.e., not actual patients) requesting help for an alcohol and drug problem using a standardized patient profile: a non-pregnant female, self-referred, with no health insurance. The date of each call and the date of the first available appointment were recorded for each clinic every month. Waiting time was defined as time from contact to appointment, corresponding to the difference in calendar days between the date of the first available appointment and the date the appointment was made (which could be the date of a return call from the clinic or the last call placed by a researcher, if multiple calls were required).
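The waiting time definition amounts to a date subtraction. A minimal Python sketch follows; the function names and dates are hypothetical. The second function computes waiting time from the researcher's first call of the month, which differs from waiting time from contact only when callbacks were needed.

```python
from datetime import date


def waiting_time_from_contact(appointment_made: date, first_available: date) -> int:
    """Calendar days from the date the appointment was made (a returned call
    or the researcher's last call) to the first available appointment."""
    return (first_available - appointment_made).days


def waiting_time_from_first_request(first_call: date, first_available: date) -> int:
    """Calendar days from the researcher's first call of the month."""
    return (first_available - first_call).days


# Hypothetical month: the first call on Nov 5, 2007 goes unanswered; the clinic
# returns the call on Nov 7 and offers an assessment on Nov 13.
first_call = date(2007, 11, 5)
contact = date(2007, 11, 7)
appointment = date(2007, 11, 13)

print(waiting_time_from_contact(contact, appointment))           # 6
print(waiting_time_from_first_request(first_call, appointment))  # 8
```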

Researchers called clinics during standard business hours (9 a.m. to 4 p.m., Monday through Friday), adjusted for each clinic’s time zone. The day of the month on which the first call was placed to each clinic was varied using a computerized random number generator to reduce the potential for monthly periodicity effects. Early in data collection, the research team was surprised to find that phone calls often went unanswered and that multiple callbacks were required to collect data. If calls or messages went unanswered, researchers called clinics up to seven times per month until an appointment date could be confirmed. Six months into the project, a field was added to the researchers’ database to record the date of each attempt to reach a clinic, enabling a count of the monthly callbacks required to schedule an appointment at each clinic. Researchers also noted reasons for unanswered calls (e.g., left message, no return call).
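The calling protocol can be sketched in Python under stated assumptions. Restricting the randomly chosen first-call day to weekdays, treating the cap of seven as attempts per month, and counting every attempt after the first as a callback are our assumptions. The function names are hypothetical, and returned messages are not modeled.

```python
import calendar
import random
from typing import List, Optional

MAX_ATTEMPTS_PER_MONTH = 7  # assumption: cap applies to all attempts in a month


def random_first_call_day(year: int, month: int, rng: random.Random) -> int:
    """Pick a random weekday (Mon-Fri) of the month for the first call."""
    _, days_in_month = calendar.monthrange(year, month)
    weekdays = [d for d in range(1, days_in_month + 1)
                if calendar.weekday(year, month, d) < 5]  # 0=Mon ... 4=Fri
    return rng.choice(weekdays)


def callbacks_needed(answered: List[bool]) -> Optional[int]:
    """Number of attempts after the first that were needed to confirm an
    appointment, or None if no attempt within the monthly cap succeeded."""
    for attempt, ok in enumerate(answered[:MAX_ATTEMPTS_PER_MONTH]):
        if ok:
            return attempt  # attempt 0 is the first call, so this counts callbacks
    return None


rng = random.Random(2007)  # fixed seed so the schedule is reproducible
day = random_first_call_day(2007, 7, rng)
print(day, callbacks_needed([False, False, True]))
```

A month that returns `None` corresponds to a missing monthly observation, which the analysis later imputes.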

For clinics in Cohort 1 (Michigan, New York, and Washington), calls started in July 2007. Calls to clinics in Cohort 2 (Massachusetts and Oregon) began in November 2007. The researchers set out to place 28 consecutive monthly calls to each of the 201 enrolled clinics. Four of the 201 clinics (2.0%) refused to submit information and were excluded from the analysis. We also excluded five clinics (2.5%) that specialized in treating patients in prison or jail settings, because the patient profile we used did not fit a patient from the correctional system.

To account for possible intervention effects, we tested for time trends in monthly waiting times using linear regression models with autocorrelated errors to account for repeated observations of each clinic. We estimated a linear trend of 0.10 days of reduction per month during the waiting time intervention (months 1-15). In the presentation of results, we compare waiting time at baseline with waiting time at the end of the intervention. The baseline period consists of data collected in the four months before the intervention period for each cohort; the end-of-intervention period consists of months 12-15 for each cohort.
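The idea of a per-month trend can be illustrated with a deliberately simpler technique than the one used in the study: an ordinary least squares slope on clinic-months pooled across clinics. This sketch ignores the autocorrelated errors the study modeled, so it conveys only what "days of reduction per month" means. It does not reproduce the study's estimates or standard errors. The function name and data are hypothetical.

```python
def pooled_linear_trend(observations):
    """OLS slope (days per month) of waiting time on intervention month.

    observations: iterable of (month, waiting_days) pairs pooled across clinics.
    Unlike the study's models, this ignores autocorrelation between repeated
    observations of the same clinic.
    """
    xs, ys = zip(*observations)
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx


# Two hypothetical clinics whose waits both fall by 0.1 day per month.
obs = [(m, 8.0 - 0.1 * m) for m in range(1, 16)]
obs += [(m, 4.0 - 0.1 * m) for m in range(1, 16)]
print(round(pooled_linear_trend(obs), 3))  # -0.1
```

A negative slope corresponds to a reduction; a slope of -0.10 matches the magnitude of the trend reported above only by construction of this toy data.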

Recording the number of callbacks provides an indication of the likelihood that a patient (or researcher) calling during normal business hours will have her call answered and is used as a measure of “phone access” in this analysis. In studying phone access, we focused on a nine-month period after the quality improvement intervention period ended (months 16-24). We chose this evaluation period for phone access for several reasons: first, we could not enter multiple callbacks into our database until six months into the project, making it impossible to study phone access before the intervention period; second, months 16-24 fall outside the intervention period for waiting time; and third, a time trend analysis suggested that callback rates increased slightly during the waiting time intervention period, an increase we attribute mainly to a ramping-up of the data collection process. During the evaluation period (months 16-24) callback rates were consistent from month to month, showing no statistically significant increase or decrease.
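Clinic-level phone access, as summarized in Table 2, can be sketched as the mean number of callbacks over the nine monthly requests in months 16-24. The function name and the dictionary layout (study month mapped to callbacks that month) are hypothetical.

```python
from statistics import mean

EVALUATION_MONTHS = range(16, 25)  # months 16-24 inclusive (nine requests)


def clinic_callback_rate(callbacks_by_month):
    """Average callbacks per monthly appointment request in months 16-24.

    callbacks_by_month: dict mapping study month -> callbacks needed that month.
    """
    values = [callbacks_by_month[m] for m in EVALUATION_MONTHS]
    return mean(values)


# Hypothetical clinic: one callback in month 18, two in month 20, none otherwise.
clinic = {m: 0 for m in EVALUATION_MONTHS}
clinic[18] = 1
clinic[20] = 2
print(round(clinic_callback_rate(clinic), 2))  # 0.33
```

Because every clinic contributes nine requests, clinic averages are multiples of 1/9, which is why values such as 0.11, 0.33, and 0.67 appear in Table 2.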

When multiple callbacks were required to schedule an appointment, we created a measure that incorporates time spent waiting for return calls or making callbacks: the difference between waiting time from the first request for an appointment and waiting time from contact with the clinic, divided by the total number of callbacks made. This calculation establishes an average addition to waiting time due to callbacks. We used data from the entire sample in this calculation; while the date of the first call placed to each clinic was clearly prescribed, the schedule for callbacks was less so, and might have been influenced by the researchers’ competing work demands or the increasing intensity of calling that was common near the end of each calendar month to close out monthly data collection. Using data from the entire sample makes the difference between the two waiting time measures a function of the number of callbacks made and not subject to the vagaries of the callback schedule for individual clinics.
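A minimal Python sketch of the pooled calculation follows. It rests on our reading that both the extra days and the callbacks are summed across all clinic-months before dividing, giving a ratio of totals rather than an average of per-clinic ratios. The function name and records are hypothetical.

```python
def pooled_days_per_callback(records):
    """Average days added to waiting time per callback, pooled over the sample.

    records: iterable of (wait_from_first_request, wait_from_contact, callbacks)
    for every clinic-month, with waits in days.
    """
    records = list(records)
    extra_days = sum(first - contact for first, contact, _ in records)
    total_callbacks = sum(cb for _, _, cb in records)
    if total_callbacks == 0:
        raise ValueError("no callbacks recorded; the ratio is undefined")
    return extra_days / total_callbacks


# Hypothetical clinic-months: two needed no callback, two needed 1 and 2.
sample = [(5, 5, 0), (12, 4, 1), (20, 4, 2), (3, 3, 0)]
print(pooled_days_per_callback(sample))  # 8.0
```

Pooling keeps a clinic whose callbacks happened to be spaced far apart from dominating the estimate, which is consistent with the rationale for using the entire sample.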

The analytic dataset consisted of 28 monthly requests from 192 clinics (a total of 5,376 monthly appointment requests). Including 804 callbacks made during the period of evaluation for phone access (months 16-24), the researchers made 6,180 calls to clinics. Missing monthly observations were imputed based on the clinic’s other monthly values. The analysis is exploratory. Descriptive statistics are presented to examine waiting time to the first available appointment and phone access among the clinics. No statistical tests are performed on differences in means because of confidentiality concerns in identifying clinics and states in the analysis (during recruitment for NIATx 200, the research team had a stated policy to only publish results that had been aggregated to the group level based on random assignment). Rather, the results are intended to depict the phone scheduling processes that patients face when calling for an appointment. The data collection protocol was reviewed and approved by an Institutional Review Board at the University of Wisconsin-Madison.
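The reported counts can be cross-checked with a few lines of arithmetic in Python. With exactly nine requests per clinic in months 16-24, the pooled callback rate equals the mean of the clinic-level averages, so it should agree with the 0.47 reported in Table 2. This equality assumes the balanced (imputed) panel described above.

```python
clinics = 192
requests = 28 * clinics                      # monthly appointment requests
callbacks_months_16_24 = 804                 # extra calls logged in months 16-24
total_calls = requests + callbacks_months_16_24
requests_months_16_24 = 9 * clinics          # nine monthly requests per clinic
callback_rate = callbacks_months_16_24 / requests_months_16_24

print(requests, total_calls, requests_months_16_24, round(callback_rate, 2))
# prints: 5376 6180 1728 0.47
```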

3. Results

Table 1 displays organizational characteristics of the 201 clinics that enrolled in NIATx 200. Massachusetts recruited 43 clinics; Michigan, 42; New York, 41; Oregon, 37; and Washington, 38. The majority of the clinics (81%) were private, non-profit community addiction-treatment agencies. Most (54%) were freestanding clinics. Overall, median clinic admissions were 339 per year; the clinics treated a patient population that was 29% non-white/non-Hispanic. A separate analysis (Grazier et al., 2012) found that clinics that enrolled in NIATx 200 tended to have higher admissions and fewer minority patients than eligible clinics that did not enroll. Recruitment efforts were targeted at non-profit organizations; only three of the recruited clinics were for-profit.

Table 1.

Baseline Clinic Characteristics of 201 Enrolled Clinics

| Characteristic of clinic | N |
|---|---|
| Cohort 1 (#, proportion*) | |
| Michigan | 42 (0.21) |
| New York | 41 (0.20) |
| Washington | 38 (0.19) |
| Cohort 2 | |
| Massachusetts | 43 (0.21) |
| Oregon | 37 (0.18) |
| Type (#, proportion*) | |
| Private for-profit | 3 (0.01) |
| Private not-for-profit | 162 (0.81) |
| Unit of state government | 12 (0.06) |
| Unit of tribal government | 7 (0.03) |
| Unit of other government | 17 (0.08) |
| Primary setting (#, proportion*) | |
| Hospital or health center (including primary setting) | 18 (0.09) |
| Community mental health clinic | 35 (0.17) |
| Free-standing alcohol or drug treatment clinic | 109 (0.54) |
| Family or children's service agency | 8 (0.04) |
| Social services agency | 8 (0.04) |
| Corrections | 5 (0.02) |
| Other or unreported | 18 (0.09) |
| Annual admissions (median, 1st quartile, 3rd quartile) | 339 (214, 627) |
| Non-white/non-Hispanic patients (proportion) | 0.29 |

*The sum of proportions across categories is less than one due to rounding error.

Table 2 presents descriptive statistics for the 192 clinics included in the analysis. During the 4-month baseline data collection period, average waiting time from contact to the first appointment was 7.26 days; in months 12-15 (at the end of the waiting time intervention period), average waiting time from contact to the first appointment was reduced to 5.81 days. During the evaluation period for phone access (months 16-24), the average number of callbacks required to schedule an appointment was 0.47. The spread of each measure is shown by its standard deviation, minimum, first quartile, median, third quartile, and maximum.

Table 2.

Waiting Time and Phone Access for 192 Clinics* in Analysis

| Statistic | Waiting time from contact to appt. (baseline)** | Waiting time from contact to appt. (months 12-15)** | Number of callbacks required to schedule appt. (months 16-24)*** |
|---|---|---|---|
| Mean | 7.26 | 5.81 | 0.47 |
| Std Dev. | 7.03 | 6.26 | 0.59 |
| Minimum | 0.00 | 0.00 | 0.00 |
| 1st quartile | 2.25 | 1.50 | 0.11 |
| Median | 5.00 | 3.25 | 0.33 |
| 3rd quartile | 10.25 | 7.88 | 0.67 |
| Maximum | 38.00 | 31.67 | 3.44 |

*Of the 201 clinics enrolled in the study, 4 were excluded for missing data and 5 were excluded because they worked exclusively with criminal justice or prison populations, making the patient profile and phone access process inappropriate.

**Waiting time is expressed in days, aggregated to the clinic level.

***Callbacks are aggregated to the clinic level.
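The summary rows of Table 2 can be produced from clinic-level values with Python's standard `statistics` module. The paper does not state which quartile convention or standard deviation formula was used, so `method="inclusive"` and the sample (n - 1) standard deviation are assumptions; other conventions shift the quartiles slightly. The function name and data are hypothetical.

```python
from statistics import mean, quantiles, stdev


def describe(values):
    """Summary statistics in the layout of Table 2."""
    q1, med, q3 = quantiles(values, n=4, method="inclusive")  # assumed convention
    return {
        "mean": mean(values),
        "sd": stdev(values),   # sample standard deviation (n - 1), an assumption
        "min": min(values),
        "q1": q1,
        "median": med,
        "q3": q3,
        "max": max(values),
    }


# Hypothetical clinic-level waiting times (days); one long-wait clinic
# pulls the mean above the median, as in Table 2.
waits = [0, 2, 5, 10, 38]
summary = describe(waits)
print(summary["mean"], summary["median"], summary["q1"], summary["q3"])
```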

We calculated the average addition to waiting time due to callbacks using the approach outlined in the methods. This calculation provides an estimate of 7.8 additional days of waiting time for each callback required.

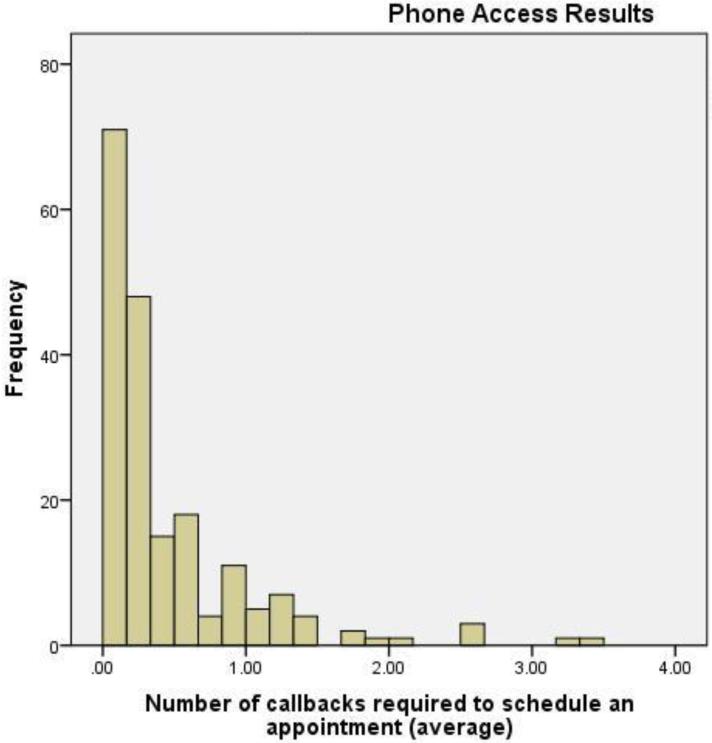

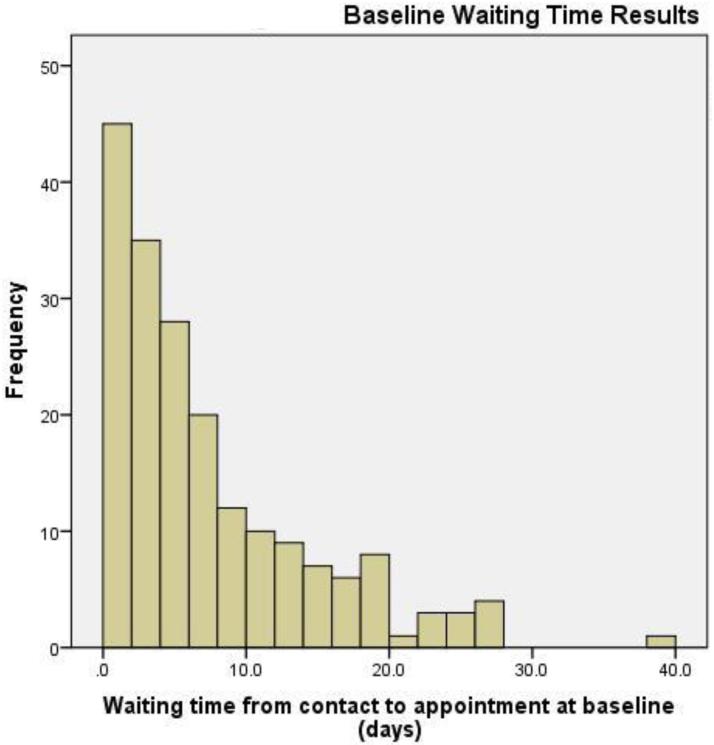

Figures 1 and 2 contain histograms that visually represent the dispersion in waiting time from contact to first appointment and phone access. For both waiting time and phone access, clinics on the right tail of the distribution skew the average value upward. For phone access, 132/192 clinics (69%) fall below the average value while for waiting time at baseline, 123/192 clinics (64%) do.
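Why most clinics fall below the average can be shown with a small right-skewed example in Python. The function name and values are hypothetical. Applied to the study's counts, the same share is 132/192 = 0.6875 for phone access.

```python
from statistics import mean, median


def share_below_mean(values):
    """Fraction of clinics whose value is strictly below the sample mean."""
    m = mean(values)
    return sum(v < m for v in values) / len(values)


# Hypothetical right-skewed waiting times (days): one long-wait clinic
# pulls the mean (7) above the median (4).
waits = [1, 3, 4, 6, 21]
print(mean(waits), median(waits), share_below_mean(waits))  # 7 4 0.8
```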

Figure 1.

Histogram of Phone Access Results (Mean: 0.47; Median: 0.33; Standard Deviation: 0.59; N=192)

Figure 2.

Histogram of Waiting Time from Contact to Appointment Results (Mean: 7.26; Median: 5.00; Standard Deviation: 7.03; N=192)

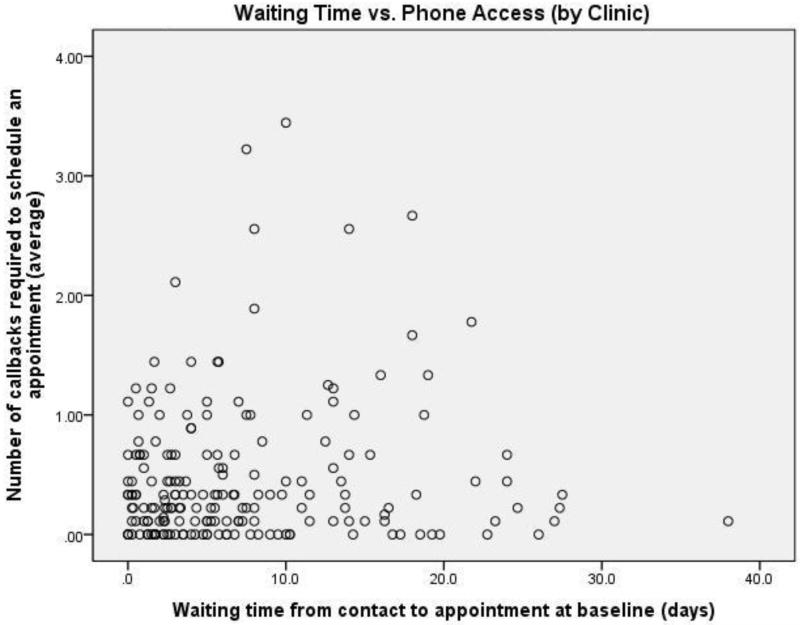

We used the data collected in this study to develop exploratory ratings of the quality of clinics’ phone scheduling processes based on two criteria: waiting time from contact to first appointment (at baseline) and phone access. What, specifically, makes a quality phone scheduling process? Certainly, having to wait for extended periods of time is not desirable from the patient’s perspective. Having to call back several times to accomplish the purpose of the call is similarly undesirable. This study provides a set of systematically collected data that may be used to characterize the quality of clinics’ phone scheduling processes. Figure 3 presents each clinic’s performance on both dimensions of quality identified in this study. Each clinic is represented as a single point, with the x-coordinate representing waiting time from contact to the first available appointment and the y-coordinate representing the number of callbacks required to schedule an appointment. Baseline waiting time values are used in Figure 3 to reflect pre-intervention conditions; the number of callbacks required to schedule an appointment in months 16-24 is used to allow time for the data collection process to ramp up.

Figure 3.

Waiting Time vs. Phone Access (by Clinic)

Note: Each data point represents average figures on both measures for one clinic.

In this graphical representation, the lower-left region of the graph is most desirable, and movement upward or to the right is undesirable. Without making judgments about the relative importance of either factor, one reasonable interpretation of quality is to posit that clinics that combine short waiting times and few callbacks (i.e., those in the lower-left region of the chart) provide service that facilitates access to treatment. Encouragingly, the majority of clinics are clustered in this lower-left region of the chart.

One approach to analyzing the dataset is to choose threshold values as standards for waiting time and phone access. Once quality standards are set, the proportion of clinics meeting them can be determined. For instance, if the standards are set at a maximum of three days’ waiting time and no more than 0.25 callbacks required (on average), 34/192 clinics (18%) meet them. Patients calling clinics in this group can generally expect that their call will be answered and that they will be scheduled for an appointment within three days. Conversely, clinics that combine long waiting times with poor phone access present two formidable barriers to entering treatment. Nine of 192 clinics (5%) combined an average waiting time greater than 10 days with an average of at least one callback required to schedule an appointment. In this small subset of clinics, the first call to the clinic is unlikely to be answered; when it is, the patient would still be asked to wait more than 10 days for an appointment. Admittedly, choosing threshold values as standards is somewhat arbitrary. Threshold values can easily be adjusted to recalculate the proportion of clinics meeting selected standards on one or both dimensions.
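The threshold approach reduces to two boolean tests per clinic, sketched here in Python. The cut points mirror the examples above (at most 3 days and at most 0.25 callbacks; more than 10 days and at least one callback). The function names and clinic values are hypothetical.

```python
def meets_standards(wait_days, callbacks, max_wait=3.0, max_callbacks=0.25):
    """Short wait AND few callbacks (the lower-left region of Figure 3)."""
    return wait_days <= max_wait and callbacks <= max_callbacks


def double_barrier(wait_days, callbacks, wait_cutoff=10.0, callback_cutoff=1.0):
    """Long wait AND at least one callback per request on average."""
    return wait_days > wait_cutoff and callbacks >= callback_cutoff


# Hypothetical clinic-level averages: (baseline wait in days, callbacks/request).
clinics = [(2.0, 0.0), (3.0, 0.22), (5.0, 0.11), (12.5, 1.33), (1.0, 0.67)]
n_meet = sum(meets_standards(w, c) for w, c in clinics)
n_barrier = sum(double_barrier(w, c) for w, c in clinics)
print(f"{n_meet}/{len(clinics)} meet both standards; {n_barrier} combine both barriers")
```

Because the thresholds are keyword parameters, alternative standards can be tested by changing a single argument.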

4. Discussion

This dataset provides an unprecedented opportunity to examine phone scheduling systems in addiction treatment. Placing thousands of phone calls to clinics revealed a picture of what it is like for patients to access treatment. The undertaking left the research team with the distinct impression that the quality of clinics’ phone scheduling processes varied, an observation consistent with anecdotal reports made in other evaluations (Capoccia et al., 2007; Ford et al., 2007; McCarty et al., 2007; Hoffman et al., 2008). Quality is a difficult concept to define in healthcare and is often a matter of subjective perception. For example, measures such as “patient satisfaction” provide a numerical basis for assessing quality but still rely on subjective ratings of experience. Previously, phone scheduling processes in addiction treatment have been described only subjectively. This study provides two systematically collected quantitative measures related to phone scheduling quality: waiting time from contact to first appointment and number of callbacks required to schedule an appointment.

At baseline, clinic-level average waiting time from contact to first appointment ranged from zero days (patients could walk in at any time) to 38 days. While it is impossible to say exactly how long is “too long” for a patient to wait, research suggests that getting patients into treatment quickly is clinically important (Appel et al., 2004; Best et al., 2002). Delays of days or weeks would not be tolerated for patients seeking urgent medical treatment, and such delays are difficult to reconcile with the idea that addiction can be a life-threatening medical condition that deserves immediate attention. Patients with addiction may lose their motivation for recovery before a treatment slot opens, and some view waiting lists as a reflection of how society views them (Battjes & Onken, 1999; Redko et al., 2006).

Clinics’ phone scheduling processes should facilitate rather than impede getting into treatment. From a patient’s perspective, the desired result of the first call is getting an appointment as easily as possible (i.e., without having to call several times or wait for a return phone call). During the 9-month evaluation period for phone access (months 16-24), the researchers made 804 additional callbacks to obtain appointment information when they were unable to talk to a staff member, leave a message, or get a message returned. The results suggest that a patient’s first phone call is often met by voicemail (or not answered at all), leaving the patient waiting for a return call or forced to call back; because some requests required more than one callback, the average of 0.47 callbacks per request is an upper bound on the share of first calls (at most 47%) that met this outcome. In these instances, the researchers noted reasons that multiple calls were required. Examples of these reasons included clinics whose phone lines were busy; clinics that left calls unanswered for 10 or more rings without going to voicemail; clinics using dysfunctional voicemail systems that made it impossible to leave messages; clinics that repeatedly instructed callers to call back because the person responsible for making appointments was unavailable; and clinics putting the researcher on hold for more than 10 minutes. An example of the process encountered in one of the clinics requiring the most callbacks further illustrates the types of problems encountered by the researchers:

April: First attempt, phone line goes dead; second attempt, can’t leave a message, voice mailbox full; third attempt is met with a recording, and I leave a message that is not returned. Two more attempts are made on different days, but the line goes dead after several rings. July: Each call attempt is met with a voicemail; however, I cannot leave a message because the “mailbox is full.” I tried the operator by dialing “0” but was unable to reach anyone or leave a message.

While this example is extreme, phone scheduling problems that could hinder patient access were fairly widespread; 34 of 192 clinics (18% of the sample) averaged at least one callback for each monthly attempt to request an appointment. In many cases, phone access issues were transitory. The research team encountered severe access problems in several clinics, but these were relatively isolated cases. Six of 192 clinics (3%) averaged more than 2 callbacks per month, and were rarely if ever reachable with one phone call. In cases such as the example above, problems remained unresolved (and perhaps undetected) for months, even though the problems might be easily remedied once identified. Encouragingly, 43/192 clinics (22%) had “perfect” phone access over the evaluation period, meaning that 9 out of 9 appointment requests were fulfilled with one phone call.
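The phone access groupings above can be expressed as tiers of a clinic's average callbacks, sketched in Python. The tier labels and the function name are hypothetical. The tiers are made mutually exclusive, whereas in the text the 34 clinics averaging at least one callback include the 6 averaging more than two, so under these tiers those clinics would split 28 and 6.

```python
from collections import Counter


def phone_access_tier(avg_callbacks: float) -> str:
    """Mutually exclusive tiers; cut points mirror the groupings in the text."""
    if avg_callbacks == 0:
        return "perfect"            # every request fulfilled with one call
    if avg_callbacks > 2:
        return "more than 2"        # rarely if ever reachable with one call
    if avg_callbacks >= 1:
        return "1 to 2"             # at least one callback per request on average
    return "under 1"


# Hypothetical clinic-level averages over the nine evaluation months.
rates = [0.0, 0.0, 1 / 9, 3 / 9, 9 / 9, 14 / 9, 31 / 9]
counts = Counter(phone_access_tier(r) for r in rates)
print(counts)
```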

The results described here confirm the existence of problems in clinics’ phone scheduling systems and indicate a range of responses across clinics in both waiting time and phone access. In their qualitative analysis, Ford et al. (2007) report that 30% of clinics had problems with phone access or first contact; this rate was based on content analysis of written reports, so the methods are quite different from those employed in this study. However, the rate and types of problems reported in Ford et al.’s (2007) study are fairly consistent with results reported here. Our results suggest that the majority of clinics studied provide reasonably good service, while a relative handful of clinics have significant room to improve on one or both measures.

Limitations

Despite our efforts to create real-world conditions, the effect of a research setting can never fully be eliminated. We felt it was critical that the researchers clearly identify themselves during the data collection process. While a clandestine approach to data collection may be preferable from a pure research perspective, such an approach is not amenable to collaborative research. The research team decided that the risk of engendering ill will outweighed the potential benefits. In 2011, the U.S. Department of Health and Human Services proposed a plan to study access in more than 4,000 primary care clinics using “secret shoppers” posing as Medicare or Medicaid patients. The plan was abandoned after a public backlash from physicians, medical associations, and lawmakers. By contrast, the process of data collection in our study was transparent, and the data collected were compiled and returned to the participating clinics as feedback on their improvement progress.

We attempted to replicate the experience of the patient but were unable to use the natural process for every clinic. Of the 192 clinics in the analysis, 26 clinics (13.5%) designated a particular contact person for fulfilling appointment requests. The designated contact person was sometimes a clinical supervisor, executive director, or other staff member who may not usually be involved in scheduling appointments. It is impossible to know whether the designated contact person was any more or less likely to answer the phone than the person we would have encountered had the usual scheduling process been followed. It is also unknown to what extent the research environment affected clinic behavior. On one hand, it is possible that clinics offered “good numbers” to improve their standing with the research team. On the other hand, it is difficult to imagine that patients would have an easier time navigating clinics’ phone scheduling systems than trained researchers who were in frequent contact with clinics, using contact information supplied by the clinics themselves as part of a voluntary study of quality improvement. The results may therefore understate the phone access problems faced by actual patients contacting clinics for the first time, who often start with little information or experience in navigating the system.

With 197 out of 201 clinics reporting data, missing data were not a particular problem. The overall high response rate must in part be credited to the tact of the data collectors, who developed trust and cooperation with the clinics by clearly and patiently explaining the purpose of the calls on countless occasions. However, determining how to handle missing monthly observations in calculating waiting time did present challenges. Missing monthly waiting time observations represent a failure to contact clinics after repeated attempts, and perhaps the worst possible outcome from the patient’s perspective. Where data were missing, values were imputed based on the average of the clinic’s other monthly observations. One alternative way to handle missing values would be to assign the clinic a monthly value equal to the longest waiting time observed in any other month, which would raise the average waiting times presented here.
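The two imputation rules can be compared with a short Python sketch. The function name and data are hypothetical. Filling a missing month with the clinic's own mean leaves that clinic's average unchanged, whereas the maximum rule can only raise it, which is the direction described above.

```python
from statistics import mean


def impute_missing(monthly_waits, rule="mean"):
    """Fill months with no data (None) from the clinic's observed months.

    rule="mean": average of the clinic's other monthly values (the approach used).
    rule="max":  longest wait observed in any other month (the alternative).
    """
    observed = [w for w in monthly_waits if w is not None]
    if not observed:
        raise ValueError("clinic has no observed months to impute from")
    if rule == "mean":
        fill = mean(observed)
    elif rule == "max":
        fill = max(observed)
    else:
        raise ValueError(f"unknown rule: {rule!r}")
    return [fill if w is None else w for w in monthly_waits]


# Hypothetical clinic that could not be reached in one month.
waits = [4, None, 6, 14]
print(mean(impute_missing(waits, "mean")), mean(impute_missing(waits, "max")))  # 8 9.5
```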

Our patient profile could not replicate the experience of all types of patients. For example, a homeless patient or a patient referred by the criminal justice system may not have access to a phone. Such a patient may also be unable to provide a callback number when asked to leave a voicemail (Tuten, Jones, Ertel, Jakubowshi, & Sperlein, 2006). The appointments offered may also have varied by patient profile: someone calling from a detox facility might be offered a different appointment than someone calling from the correctional system or someone who is self-referring. Our goal was to keep the patient profile consistent so that results could be compared within a clinic over time.

Furthermore, HIPAA regulations would not allow a clinic to leave a voicemail message for a patient in a return phone call. For data collection purposes, we allowed clinics to leave appointment information in return messages, but in a real-world environment another phone call would have been necessary. Callbacks affect how long patients wait for an appointment. However, accurately modeling that effect with our data proved difficult because the frequency of callback attempts varied. A systematic callback schedule (for example, daily) after the first failed attempt might have reduced the callback-related waiting time we estimated, but such a schedule would have been impractical to implement. The researchers may also have had more trouble scheduling appointments than actual patients would have if a clinic had caller identification and staff chose not to answer our calls.

Finally, beyond observable organizational characteristics such as size or ownership type, it is difficult to assess how representative these clinics are of the typical addiction treatment clinic. The clinics in this analysis volunteered to participate in a quality improvement study and are presumably interested in providing high-quality services. The nature of the sample may therefore paint an overly optimistic picture of the larger system, and it raises questions about the generalizability of the findings.
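
The callback discussion above distinguishes waiting time measured from contact with the clinic from the additional delay that callbacks impose before contact is made. The sketch below illustrates that distinction and is not the study's actual procedure. It assumes each monthly call is recorded as three items: the date of the first attempt, the dates of any callback attempts, and the date of the first available appointment. It also assumes the appointment is made on the final attempt. The `CallRecord` class, its fields, the `summarize` function, and all dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class CallRecord:
    """One monthly scheduling call to a clinic (hypothetical record layout)."""
    first_attempt: date          # date of the first attempt that month
    callback_dates: List[date]   # dates of each additional attempt required
    appointment: date            # first available assessment appointment offered

    @property
    def contact_date(self) -> date:
        # Assumption: the appointment is made on the last attempt
        # (the first attempt when no callbacks were needed).
        return self.callback_dates[-1] if self.callback_dates else self.first_attempt

    @property
    def callbacks(self) -> int:
        return len(self.callback_dates)

    def wait_from_contact(self) -> int:
        # Days from contact with the clinic to the first available appointment.
        return (self.appointment - self.contact_date).days

    def wait_from_first_attempt(self) -> int:
        # Days from the first attempt, so the delay caused by callbacks is included.
        return (self.appointment - self.first_attempt).days


def summarize(records: List[CallRecord]) -> Dict[str, float]:
    """Average each measure across a set of calls."""
    n = len(records)
    return {
        "mean_wait_from_contact": sum(r.wait_from_contact() for r in records) / n,
        "mean_wait_from_first_attempt": sum(r.wait_from_first_attempt() for r in records) / n,
        "mean_callbacks": sum(r.callbacks for r in records) / n,
    }


# Invented example: one clinic reached on the first try, one needing a callback.
calls = [
    CallRecord(date(2024, 3, 4), [], date(2024, 3, 11)),
    CallRecord(date(2024, 3, 4), [date(2024, 3, 6)], date(2024, 3, 10)),
]
print(summarize(calls))
```

The gap between the two waiting-time measures depends on how soon each callback is placed. When callback timing is irregular, that gap varies for reasons unrelated to the clinic's scheduling process, which is why the callback effect is hard to isolate.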

Future research

The data collected on phone access and waiting time are exploratory, and further research is needed to validate the measures created for this analysis. The methods employed in this paper could potentially be extended to develop quality measures for phone scheduling processes in the addiction treatment field. Studies should also examine how these measures relate to process-of-care measures, such as no-show and continuation rates. They should also compare these measures with waiting time calculated from patients' actual dates of service. We did not examine clinic-to-clinic differences in waiting time or phone access, primarily because of the risk of identifying participants; future research should focus on the reasons for variation between clinics.

Conclusion

This paper presents a method for measuring waiting time and phone access to addiction treatment based on methods used in primary care clinics. The method is relatively easy to employ and may help addiction treatment clinics, policymakers, and payers assess the quality of phone scheduling processes. In previous research, evidence of phone access problems that can hinder access to treatment has been largely anecdotal or based on isolated examples. The results presented here confirm that these problems exist and give an indication of their prevalence. The initial phone call a patient places to request help with addiction may be the most important call that person ever makes. Clinics that make patients call multiple times and then wait extended periods for an appointment have opportunities to improve their services.

As consumers in everyday life, we generally expect responsive customer service from the organizations we patronize, and patients seeking addiction treatment should expect no less. Treatment organizations must continually examine their processes to uncover barriers to treatment. Leaders of addiction treatment clinics are encouraged to pick up the phone to experience what it is really like to seek treatment in their organizations. Experiencing the scheduling process as a patient does can be an expedient way to expose organizational problems that quality improvement can rectify.

Acknowledgements

A grant from The National Institute on Drug Abuse (R01 DA020832) supported the NIATx 200 research team at the University of Wisconsin-Madison and the 201 treatment clinics participating in the study. The University of Wisconsin-Madison managed and evaluated the study in collaboration with investigators from Oregon Health and Science University and the University of Miami. The authors wish to express their gratitude to the clinic staff members who participated in the NIATx 200 study, especially those who graciously provided information that we hope will help improve the addiction treatment system for the patients who need it.

References

- Acton JP. Nonmonetary factors in the demand for medical services: Some empirical evidence. The Journal of Political Economy. 1975;83(3):595–614.

- Appel PW, Ellison AA, Jansky HK, Oldak R. Barriers to enrollment in drug abuse treatment and suggestions for reducing them: Opinions of drug injecting street outreach patients and other system stakeholders. American Journal of Drug & Alcohol Abuse. 2004;30(1):129–153. doi: 10.1081/ada-120029870.

- Battjes RJ, Onken LS. Drug abuse treatment entry and engagement: Report of a meeting on treatment readiness. Journal of Clinical Psychology. 1999;55(5):643–657. doi: 10.1002/(sici)1097-4679(199905)55:5<643::aid-jclp11>3.0.co;2-s.

- Best D, Noble A, Ridge G, Gossop M, Farrell M, Strang J. The relative impact of waiting time and treatment entry on drug and alcohol use. Addiction Biology. 2002;7(1):67–74. doi: 10.1080/135562101200100607.

- Capoccia VA, Cotter F, Gustafson DH, Cassidy EF, Ford JH, II, Madden L, et al. Making “stone soup”: Improvements in clinic access and retention in addiction treatment. Joint Commission Journal on Quality and Patient Safety. 2007;33(2):95–103. doi: 10.1016/s1553-7250(07)33011-0.

- Carr CJA, Xu J, Redko C, Lane DT, Rapp RC, Goris J, et al. Individual and system influences on waiting time for addiction treatment. Journal of Substance Abuse Treatment. 2008;34(2):192–201. doi: 10.1016/j.jsat.2007.03.005.

- Chun JS, Guydish JR, Silber E, Gleghorn A. Drug treatment outcomes for persons on waiting lists. The American Journal of Drug and Alcohol Abuse. 2008;34(5):526–533. doi: 10.1080/00952990802146340.

- Festinger DS, Lamb RJ, Kountz MR, Kirby KC, Marlowe DB. Pretreatment dropout as a function of treatment delay and patient variables. Addictive Behaviors. 1995;20(1):111–115. doi: 10.1016/0306-4603(94)00052-z.

- Ford JH, II, Green CA, Hoffman KA, Wisdom JP, Riley KJ, Bergmann L, et al. Process improvement needs in addiction treatment: Admissions walk-through results. Journal of Substance Abuse Treatment. 2007;33(4):379–389. doi: 10.1016/j.jsat.2007.02.003.

- Grazier K, Oruongo J, Ford JH, II, Robinson J, Quanbeck A, Pulvermacher A, Gustafson DH. Clinic Participation in a Cluster-Randomized Trial of Quality Improvement. Manuscript submitted for review. 2012.

- Hoffman KA, Ford JH, II, Choi D, Gustafson DH, McCarty D. Replication and sustainability of improved access and retention within the network for the improvement of addiction treatment. Drug and Alcohol Dependence. 2008;98(1-2):63–69. doi: 10.1016/j.drugalcdep.2008.04.016.

- Hoffman KA, Quanbeck A, Ford JH, II, Wrede F, Wright D, Lambert-Wacey D, et al. Improving substance abuse data systems to measure ‘waiting time to treatment’: Lessons learned from a quality improvement initiative. Health Informatics Journal. 2011;17(4):256–265. doi: 10.1177/1460458211420090.

- Hser Y, Maglione M, Polinsky ML, Anglin MD. Predicting drug treatment entry among treatment-seeking individuals. Journal of Substance Abuse Treatment. 1998;15(3):213–220. doi: 10.1016/s0740-5472(97)00190-6.

- Kaplan EH, Johri M. Treatment on demand: An operational model. Health Care Management Science. 2000;3(3):171–183. doi: 10.1023/a:1019001726188.

- McCarty D, Gustafson DH, Wisdom JP, Ford JH, II, Choi D, Molfenter T, et al. The Network for the Improvement of Addiction Treatment (NIATx): Enhancing access and retention. Drug and Alcohol Dependence. 2007;88(2-3):138–145. doi: 10.1016/j.drugalcdep.2006.10.009.

- Murray M, Berwick DM. Advanced access: Reducing waiting and delays in primary care. JAMA: The Journal of the American Medical Association. 2003;289(8):1035–1040. doi: 10.1001/jama.289.8.1035.

- Quanbeck AR, Gustafson DH, Ford JH, II, Pulvermacher A, French MT, McConnell KJ, et al. Disseminating quality improvement: Study protocol for a large cluster randomized trial. Implementation Science. 2011;6(1):44. doi: 10.1186/1748-5908-6-44.

- Redko C, Rapp RC, Carlson RG. Waiting time as a barrier to treatment entry: Perceptions of substance users. Journal of Drug Issues. 2006;36(4):831–852. doi: 10.1177/002204260603600404.

- Rosenbaum M. The demedicalization of methadone maintenance. Journal of Psychoactive Drugs. 1995;27(2):145–149. doi: 10.1080/02791072.1995.10471683.

- Sorensen JL, Guydish J, Zilavy P, Davis TB, Gleghorn A, Jacoby M, et al. Access to drug abuse treatment under treatment on demand policy in San Francisco. The American Journal of Drug and Alcohol Abuse. 2007;33(2):227–236. doi: 10.1080/00952990601174824.

- Stark MJ, Campbell BK, Brinkerhoff CV. “Hello, may we help you?” A study of attrition prevention at the time of the first phone contact with substance-abusing patients. The American Journal of Drug and Alcohol Abuse. 1990;16(1-2):67–76. doi: 10.3109/00952999009001573.

- Tuten M, Jones H, Ertel J, Jakubowshi JL, Sperlein JC. Reinforcement-based treatment: A novel approach to treating addiction during pregnancy. Counselor. 2006;7(3):22–29.