Abstract

Longitudinal brain image analysis is critical for revealing subtle but complex structural and functional changes of the brain during aging or in neurodevelopmental disease. However, despite the rapid growth of clinical research and trials, no publicly available software toolbox is dedicated to longitudinal image analysis. To meet this growing need, we have developed a dedicated 4D Adult Brain Extraction and Analysis Toolbox (aBEAT) for robust and accurate analysis of longitudinal adult brain MR images. Specifically, aBEAT integrates a group of image processing tools, including 4D brain extraction, 4D tissue segmentation, and 4D brain labeling. First, for consistent brain extraction, we developed a 4D deformable-surface-based brain extraction algorithm that deforms serial brain surfaces simultaneously under a temporal smoothness constraint. Second, for consistent brain tissue segmentation, aBEAT includes a level-sets-based 4D tissue segmentation algorithm that incorporates local intensity distributions, a spatial cortical-thickness constraint, and a temporal cortical-thickness consistency constraint. Third, for consistent ROI labeling, aBEAT integrates a longitudinal groupwise image registration framework that simultaneously warps a pre-labeled brain atlas to the longitudinal brain images. The performance of aBEAT has been extensively evaluated on a large number of longitudinal T1-weighted MR images of normal and dementia subjects, with very promising results. A Linux-based standalone package of aBEAT is now freely available at http://www.nitrc.org/projects/abeat.

Introduction

Brain structure and function change as a result of aging or of brain diseases such as Alzheimer’s disease [1]. Magnetic resonance imaging (MRI) provides a safe way to image brain structure and function in vivo, so longitudinal MRI is widely used to reveal brain changes in basic and clinical neuroscience studies. For example, Chetelat et al. [2] used a longitudinal voxel-based method to map the progression of gray matter (GM) loss over time in patients with mild cognitive impairment (MCI), and found significant GM loss in areas such as the temporal and parietal cortices. Nakamura et al. [3] found a longitudinal reduction of neocortical GM volume in first-episode schizophrenia but an increase in first-episode affective psychosis. Beyond these volumetric studies, longitudinal cortical surface changes associated with normal aging were studied in [4] by reconstructing cortical surfaces from longitudinal MR images; the authors found widespread aging-related cortical thinning, especially in frontal and parietal regions [4]. In addition, 4D cortical thickness measurement has been developed for studying Alzheimer’s disease (AD) [5], [6].

Since patterns of brain change can be subtle and complicated during aging or in brain diseases, accurate longitudinal analysis tools are essential. Current analysis tools generally process each time-point image of the same subject independently, through the steps of image preprocessing, brain extraction, tissue segmentation, and brain labeling. Image preprocessing first applies bias correction and histogram matching to each original MR image. Brain extraction then removes non-brain tissues, such as scalp, skull, and dura [7], while keeping all brain tissues, i.e., white matter (WM), gray matter (GM), and cerebrospinal fluid (CSF). Tissue segmentation next classifies the brain-extracted image into WM, GM, and CSF, which allows overall brain tissue changes to be measured over time. Finally, brain labeling delineates brain ROIs in each time-point image, which allows the longitudinal change of each ROI to be studied [8], [9].

Various toolboxes have been developed for this purpose, including ITK [10], FSL [11], FreeSurfer [12], and SPM [13]. However, these toolboxes were mainly developed for analyzing single-time-point images rather than longitudinal images; the exception is FreeSurfer, which includes a longitudinal surface reconstruction component. Because brain changes are subtle during aging and in most degenerative diseases [1], especially over a typical longitudinal follow-up of only one to two years [9], [14], the results of each step (brain extraction, tissue segmentation, and ROI labeling) must be both accurate and consistent across the longitudinal images. Conventional single-time-point methods, however, struggle to achieve longitudinally consistent results because they apply no temporal guidance.

To address this limitation, we have developed a dedicated 4D Adult Brain Extraction and Analysis Toolbox (aBEAT). Specifically, aBEAT provides 4D brain extraction, 4D tissue segmentation, and 4D brain labeling to achieve consistent analysis of longitudinal brain MR images. Note that a single-time-point image can be considered a special case of longitudinal images and thus can also be analyzed by aBEAT. The functions of 4D brain extraction, 4D tissue segmentation, and 4D ROI labeling are provided by the following three 4D image analysis algorithms, respectively:

1: 4D deformable-surface-based brain extraction.

Classic brain extraction algorithms such as BSE [15], BET [16], and graph cut [17] generally perform a single run of brain extraction on a given image. More recently, algorithms have been developed that perform multiple brain extractions with multiple atlases or algorithms [7], [18]–[20] and then fuse the results to improve accuracy. However, none of these algorithms can ensure consistent brain extraction across longitudinal brain images, because each time-point image is extracted separately. To address this issue, we use a 4D brain extraction algorithm [21], extended from a 3D deformable-surface-based brain extraction method [22], to achieve consistent brain extraction results. This algorithm first constructs an initial common brain surface from the group mean of all aligned longitudinal images and then deforms it simultaneously toward each time point under a temporal smoothness constraint.

2: 4D tissue segmentation with cortical-thickness constraint [23].

A number of automated tissue segmentation algorithms [12], [13], [24] have been proposed to segment WM, GM, and CSF from brain images. However, most of them were designed to segment a single 3D image. In contrast, CLASSIC [25] was specifically designed for simultaneous segmentation of longitudinal brain images using a voxel-wise tissue classification framework. However, it still cannot guarantee consistent cortical thickness measurements across longitudinal images, which could seriously reduce the power of a longitudinal study. To address this issue, we incorporate into our toolbox a 4D tissue segmentation algorithm with a cortical-thickness constraint [23]. In this algorithm, a 3D coupled-level-sets method [26] first obtains an initial segmentation of WM, GM, and CSF at each time point, and a longitudinal cortical-thickness constraint is then used to ensure temporal consistency during 4D tissue segmentation.

3: 4D ROI labeling with longitudinal groupwise image registration [27].

Although many pairwise image registration methods (such as Demons [28] and HAMMER [29]) can be used for atlas-based brain labeling, their labeling results for longitudinal images could be inconsistent, since each time-point image is labeled independently. We thus propose to label all longitudinal images simultaneously with our longitudinal groupwise image registration algorithm [27], which not only registers all longitudinal images jointly to a common space but also maintains their temporal coherence. Specifically, we first use this algorithm to align all longitudinal images to a common space and obtain their group-mean image. Then, we use our symmetric feature-based pairwise registration method [30] to register an atlas with pre-labeled ROIs to this group-mean image. Finally, by combining the respective deformations, we label the ROIs in each time-point image. Because our method preserves temporal coherence, it yields consistent labeling across time points.

The performance of aBEAT has been extensively evaluated with a large number of longitudinal brain MR images from the ADNI database. Compared with existing algorithms for brain extraction (e.g., the 3D deformable-surface-based method) and tissue segmentation (e.g., CLASSIC), aBEAT achieves superior accuracy and consistency for longitudinal images. Moreover, the brain labeling module in aBEAT also shows promising results for longitudinal images. The remainder of this paper is organized as follows. Section 2 describes the methods of aBEAT, Section 3 presents representative results, and Section 4 provides a discussion.

Methods

1. ADNI Database

Data used in the preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (http://adni.loni.ucla.edu). The ADNI was launched in 2003 by the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), the Food and Drug Administration (FDA), private pharmaceutical companies, and non-profit organizations, as a $60 million, 5-year public-private partnership. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). Determination of sensitive and specific markers of very early AD progression is intended to aid researchers and clinicians in developing new treatments and monitoring their effectiveness, as well as to lessen the time and cost of clinical trials.

The Principal Investigator of this initiative is Michael W. Weiner, MD, VA Medical Center and University of California–San Francisco. ADNI is the result of efforts of many co-investigators from a broad range of academic institutions and private corporations, and subjects have been recruited from over 50 sites across the U.S. and Canada. The initial goal of ADNI was to recruit 800 adults, aged 55 to 90, to participate in the research: approximately 200 cognitively normal older individuals to be followed for 3 years, 400 people with MCI to be followed for 3 years, and 200 people with early AD to be followed for 2 years. For up-to-date information, please see www.adni-info.org.

2. Overview of aBEAT

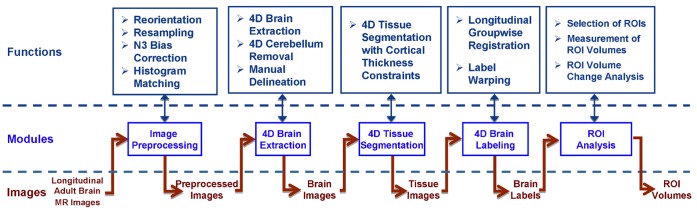

The architecture of aBEAT is shown in Fig. 1. The complete data processing pipeline consists of five major modules (the five blue boxes in the middle row of Fig. 1). Briefly, the image preprocessing module normalizes the original images and corrects their intensities. The 4D brain extraction module consistently removes non-brain tissues (such as scalp and skull) from the preprocessed longitudinal images of each subject while keeping brain tissues (WM, GM, and CSF). The serial brain-extracted images of each subject are then jointly segmented by the 4D tissue segmentation module. Next, the 4D brain labeling module simultaneously warps an atlas with pre-labeled ROIs onto the longitudinal images for ROI labeling. Finally, the ROI analysis module automatically measures and displays the longitudinal ROI volumes and volume changes of all subjects. The major functions of each module are listed in the top row of Fig. 1. Note that the processing pipeline is similar to that of our previously developed toolbox iBEAT [31]. However, iBEAT is dedicated to the analysis of infant brain MR images, which suffer from poor image quality, low tissue contrast, and, most importantly, dynamic tissue changes over time. Thus, all the steps used for infant brain extraction, tissue segmentation, and brain labeling differ from those for adult brain analysis, and differ even more from those for longitudinal image analysis. The 4D processing algorithms integrated in each functional module of aBEAT are specialized for the consistent analysis of longitudinal adult brain MR images and are therefore completely different from the processing algorithms in iBEAT. In addition, iBEAT has no ROI analysis module.

Figure 1. The architecture of aBEAT.

The user is free to process data using either an individual module or the entire pipeline (from image preprocessing to ROI analysis).

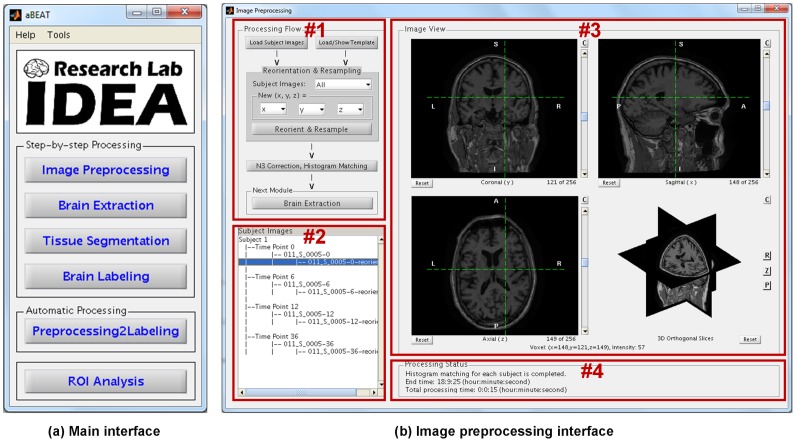

A parallel computing strategy is used in aBEAT for fast processing. Specifically, in the image preprocessing module each image is processed by its own thread, while in the 4D modules (brain extraction, tissue segmentation, and brain labeling) each subject is processed by its own thread. Because modern computers generally have multiple CPU cores, this strategy can substantially reduce computation time. The graphical user interfaces (GUIs) and the overall framework of aBEAT were implemented in MATLAB, while the modules and functions were implemented with a combination of C/C++, MATLAB, Perl, and Shell scripts. The main interface and the image preprocessing interface of aBEAT are shown in Fig. 2. The main interface (Fig. 2(a)) includes menus for activating all five major processing modules (refer to Fig. 1). In addition to step-by-step processing, input images can be processed automatically from image preprocessing to brain labeling. The image preprocessing interface (Fig. 2(b)) includes the step-by-step functions for image preprocessing. The interfaces of the other modules are similar to this one, except for the functions listed in the processing flow panel (#1).

Figure 2. The main interface and image preprocessing interface in aBEAT.

(a) The main interface includes the menus for activating all five major processing modules (refer to Fig. 1). In addition to step-by-step processing, input images can be processed automatically from image preprocessing to brain labeling. (b) The upper left panel (#1) displays step-by-step functions for image preprocessing. The bottom left panel (#2) lists the input images and generated images. The upper right panel (#3) displays a selected image. The bottom right panel (#4) shows the image processing status. The interfaces of other modules are similar to the interface of this preprocessing module, except for the functions listed in the processing flow panel (#1).
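The scheduling idea behind the per-image and per-subject threading described above can be sketched in Python. This is an illustration only, not aBEAT's MATLAB/C++/Perl/Shell implementation: `process_subject` is a hypothetical placeholder for one 4D module run, and the subject IDs and file names are made up. If each worker mainly launches external programs (an assumption here), plain threads are enough to keep several CPU cores busy despite Python's global interpreter lock.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def process_subject(subject):
    """Hypothetical placeholder for one 4D module run on one subject.

    A real module would jointly process all time-point images of the subject
    (e.g., by launching an external program); here we only count them.
    """
    subject_id, time_point_images = subject
    return subject_id, len(time_point_images)


def run_module_in_parallel(subjects, max_workers=None):
    """Run process_subject on every subject, one subject per worker thread."""
    if max_workers is None:
        max_workers = os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in the same order as the input subjects.
        return dict(pool.map(process_subject, subjects))


subjects = [
    ("S01", ["S01_t0.nii", "S01_t1.nii", "S01_t2.nii", "S01_t3.nii"]),
    ("S02", ["S02_t0.nii", "S02_t1.nii"]),
]
print(run_module_in_parallel(subjects))  # {'S01': 4, 'S02': 2}
```

For the preprocessing module, the same pattern would apply with one work item per image instead of one per subject.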

3. Image Preprocessing

Since the orientations, voxel sizes, and volume sizes of the original input images may differ, aBEAT first reorients and resamples each image to a standard format to facilitate further analysis. The standard orientation of aBEAT follows the RAS (Right, Anterior, Superior) coordinate system, a standard neurological convention widely used in other neuroimaging software such as MRIcro [32], SPM [13], and eConnectome [33]. The standard voxel size and volume size in aBEAT are 1×1×1 mm³ and 256×256×256, respectively. Input images whose original orientations do not follow the RAS convention are reoriented semi-automatically. First, the input image is tentatively reoriented with all valid reorientation parameters (obeying the right-hand rule). Then, the user inspects all tentatively reoriented images in the GUI and selects the one that matches the RAS coordinate system. Using the selected reorientation parameters, aBEAT reorients the input image, as well as any other images with the same original orientation, into the RAS coordinate system. After all input images are reoriented and resampled, N3 bias correction [34] is performed on each image to remove intensity inhomogeneity. Finally, for each subject, the histograms of the follow-up images are matched to the histogram of the baseline image to remove intra-subject intensity variations. Fig. 3 shows the N3 correction and histogram matching results for one subject.
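One way to read "all valid reorientation parameters (obeying the right-hand rule)" is as the axis-aligned orientations that preserve handedness: the 3×3 signed permutation matrices with determinant +1, of which there are 24. The Python/NumPy sketch below enumerates these candidates and applies one to a voxel array. That interpretation, and the helper names, are assumptions rather than aBEAT's actual code; resampling to 1×1×1 mm³ and 256×256×256 is not shown.

```python
import itertools

import numpy as np


def right_handed_orientations():
    """Return the 24 signed permutation matrices with determinant +1."""
    candidates = []
    for perm in itertools.permutations(range(3)):
        for signs in itertools.product((1, -1), repeat=3):
            m = np.zeros((3, 3), dtype=int)
            for row, (axis, sign) in enumerate(zip(perm, signs)):
                m[row, axis] = sign  # output axis `row` takes input axis `axis`
            if int(round(np.linalg.det(m))) == 1:
                candidates.append(m)
    return candidates


def apply_orientation(volume, m):
    """Permute and flip the axes of a 3D array according to matrix m."""
    axes = [int(np.flatnonzero(row)[0]) for row in m]
    out = np.transpose(volume, axes)
    for i, row in enumerate(m):
        if row[axes[i]] < 0:
            out = np.flip(out, axis=i)  # negative sign: reverse this axis
    return out


volume = np.arange(24).reshape(2, 3, 4)
candidates = right_handed_orientations()
print(len(candidates))  # 24
swap_xy = np.array([[0, 1, 0], [-1, 0, 0], [0, 0, 1]])
print(apply_orientation(volume, swap_xy).shape)  # (3, 2, 4)
```

In a GUI workflow, each candidate would be applied to the image and displayed, and the user's choice would then be reused for all images sharing the same original orientation.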

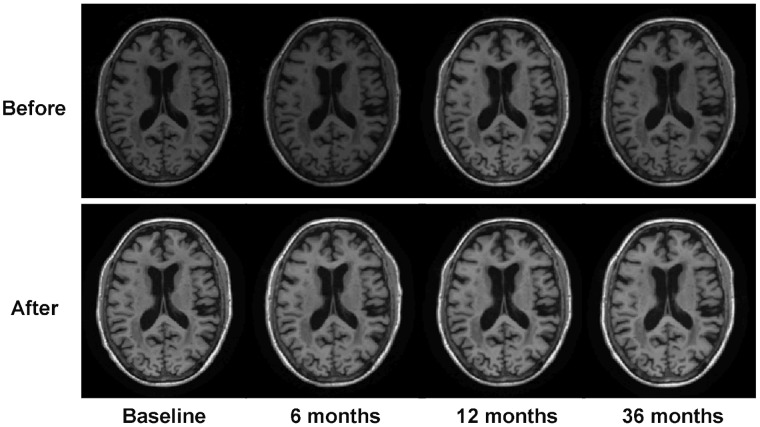

Figure 3. Illustration of N3 correction and histogram matching on serial images of one subject at 4 time points.

Axial slices of the serial images before and after processing are shown. The intensity inhomogeneity and the intensity inconsistency across the serial images are clearly removed.
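The histogram matching step (follow-up images matched to the baseline) can be illustrated with a standard quantile-mapping sketch in Python/NumPy: each source intensity is mapped to the reference intensity at the same position of the cumulative histogram. The text does not specify aBEAT's exact matching procedure (for instance, whether background voxels are excluded or how many histogram bins are used), so this global, mask-free version is an assumption.

```python
import numpy as np


def match_histogram(source, reference):
    """Quantile-map source intensities onto the reference intensity distribution."""
    src = np.asarray(source, dtype=float).ravel()
    ref = np.asarray(reference, dtype=float).ravel()
    src_values, src_inverse, src_counts = np.unique(
        src, return_inverse=True, return_counts=True
    )
    ref_values, ref_counts = np.unique(ref, return_counts=True)
    # Empirical cumulative distribution functions of both images.
    src_cdf = np.cumsum(src_counts) / src.size
    ref_cdf = np.cumsum(ref_counts) / ref.size
    # For each distinct source value, take the reference value at the same quantile.
    mapped = np.interp(src_cdf, ref_cdf, ref_values)
    return mapped[src_inverse].reshape(np.shape(source))


baseline = np.array([[10.0, 20.0], [30.0, 40.0]])
follow_up = np.array([[0.0, 1.0], [2.0, 3.0]])
matched = match_histogram(follow_up, baseline)
```

Here the follow-up values 0 to 3 are mapped onto the baseline values 10 to 40 while their ordering is preserved, which is the property that removes global intensity shifts between time points.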

The reorientation, resampling, and N3 bias correction functions were implemented based on the FSL library (Analysis Group, FMRIB, Oxford, UK), ITK toolkit (Kitware Inc.), and MINC package (McConnell Brain Imaging Centre of the Montreal Neurological Institute, McGill University), respectively. In addition to the image preprocessing functions, a variety of functions were also implemented in aBEAT to support interactive inspection of MR images, including display of image slices, mouse-driven image slicing, zooming, translation, and rotation.

4. 4D Brain Extraction

A 4D deformable-surface-based brain extraction algorithm [21] was implemented in aBEAT to remove non-brain tissues (such as scalp, skull, and dura) simultaneously from the preprocessed images and produce consistent brain images for the subsequent tissue segmentation step.

4.1 4D Deformable-surface-based brain extraction

The 4D brain extraction algorithm, which was extended from our 3D deformable-surface-based brain extraction algorithm [22], consists of two steps: initialization of deformable surfaces, and consistent brain extraction with the deformable surfaces.

1: Initialization of Deformable Surfaces.

The initial deformable surfaces, which roughly represent the brain boundaries in the longitudinal images of a subject, are obtained as follows. First, the preprocessed longitudinal brain MR images (with skull) are affine-aligned to their common space using a groupwise affine registration algorithm [35], which avoids any potential bias due to template selection. Second, a brain probability map associated with the MNI (Montreal Neurological Institute) brain atlas [36] is warped onto the affine-aligned image of each time point by linear registration via FLIRT [37], followed by nonlinear registration via Demons [28]. Note that the brain probability map in the MNI atlas space was obtained by aligning and averaging a population of brain MR images with manually delineated brain masks [22]. Third, the warped brain probability map is used to remove most non-brain voxels (scalp, skull, and dura) from the image of each time point. Fourth, a spherical volume, represented by its center of gravity (COG) and radius, is estimated for each brain-extracted image according to the intensity and spatial distributions of its brain voxels (WM, GM, and CSF). Finally, the COGs and radii of the spherical volumes estimated at all time points are averaged to construct a common spherical surface, which is then imposed on each time-point image as its initial brain surface.
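The last two initialization steps (a sphere per time point, then an averaged common sphere) can be sketched in Python/NumPy. As an assumption, the sketch estimates each sphere purely from the binary brain mask, taking the COG as the mean voxel position and the radius as that of a sphere with the same volume as the mask; the text states that aBEAT also uses intensity information, which is omitted here. The Fibonacci-lattice vertex placement in `sphere_vertices` is likewise a hypothetical stand-in for whatever surface mesh aBEAT actually uses.

```python
import numpy as np


def estimate_sphere(brain_mask, voxel_size_mm=1.0):
    """Return (COG in mm, radius in mm) of a sphere with the mask's volume."""
    coords = np.argwhere(brain_mask) * voxel_size_mm
    cog = coords.mean(axis=0)
    volume = coords.shape[0] * voxel_size_mm ** 3
    radius = (3.0 * volume / (4.0 * np.pi)) ** (1.0 / 3.0)
    return cog, radius


def common_initial_sphere(masks, voxel_size_mm=1.0):
    """Average the COGs and radii estimated at all time points."""
    spheres = [estimate_sphere(m, voxel_size_mm) for m in masks]
    cog = np.mean([c for c, _ in spheres], axis=0)
    radius = float(np.mean([r for _, r in spheres]))
    return cog, radius


def sphere_vertices(cog, radius, n=642):
    """Place n roughly uniform vertices on the sphere (Fibonacci lattice)."""
    i = np.arange(n) + 0.5
    z = 1.0 - 2.0 * i / n
    r_xy = np.sqrt(1.0 - z ** 2)
    theta = np.pi * (1.0 + np.sqrt(5.0)) * i
    unit = np.stack([r_xy * np.cos(theta), r_xy * np.sin(theta), z], axis=1)
    return np.asarray(cog) + radius * unit


# Two toy "time points": the same 3x3x3 brain block, shifted by 2 voxels in x.
masks = []
for shift in (0, 2):
    m = np.zeros((12, 12, 12), dtype=bool)
    m[4 + shift:7 + shift, 4:7, 4:7] = True
    masks.append(m)
cog, radius = common_initial_sphere(masks)
vertices = sphere_vertices(cog, radius)
```

Because the same averaged sphere seeds every time point, all deformable surfaces start from an identical configuration, which is what makes vertex-wise correspondence across time straightforward in the next step.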

2: Consistent Brain Extraction with Deformable Surfaces.

The initial brain surfaces obtained above are deformed to achieve consistent brain extraction, typically with 1000 iterations [16]. During the evolution of the deformable surface for each time-point image, four forces act on each vertex to drive the surface deformation: (1) a spatial-smoothness force that smooths the surface and keeps the vertices evenly spaced; (2) an image-intensity-based force that separates brain voxels from non-brain voxels; (3) a brain-probability-map-guided force that drives the vertices toward the true brain boundary; and (4) a temporal-smoothness force that drives each vertex toward the center of its corresponding vertices at the neighboring time points. In particular, the temporal-smoothness force yields more accurate and temporally consistent brain extraction results for longitudinal brain images than the 3D deformable-surface-based brain extraction method [22].
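Force (4) can be written directly from its description: for each vertex at time t, the force points from the vertex to the mean of its corresponding vertices at the neighboring time points t-1 and t+1 (only one neighbor exists at the first and last time points). The Python/NumPy sketch below assumes that vertex correspondence across time points is given by a shared vertex index. The weight `w_temporal` and the `other_forces` argument (standing in for forces 1 to 3) are hypothetical, and the actual force formulation and weighting in [21] may differ.

```python
import numpy as np


def temporal_smoothness_force(surfaces):
    """Temporal force for corresponding vertices.

    surfaces: array of shape (T, V, 3); vertex v at every time point is
    assumed to correspond to vertex v at the other time points.
    """
    surfaces = np.asarray(surfaces, dtype=float)
    n_times = surfaces.shape[0]
    forces = np.zeros_like(surfaces)
    for t in range(n_times):
        neighbors = [k for k in (t - 1, t + 1) if 0 <= k < n_times]
        if not neighbors:
            continue  # a single time point has no temporal neighbors
        center = surfaces[neighbors].mean(axis=0)
        forces[t] = center - surfaces[t]  # pull toward the neighbors' center
    return forces


def deform_step(surfaces, other_forces, w_temporal=0.1):
    """One explicit update combining precomputed forces with the temporal one."""
    return surfaces + other_forces + w_temporal * temporal_smoothness_force(surfaces)


# One vertex at three time points; the middle one bulges out along x.
surfaces = np.array([[[0.0, 0.0, 0.0]], [[3.0, 0.0, 0.0]], [[0.0, 0.0, 0.0]]])
forces = temporal_smoothness_force(surfaces)
```

In this toy case the middle vertex is pulled back by 3 along x while the two end vertices are pulled toward it by 3, so repeated updates shrink temporal jitter in the surfaces rather than any real, smooth trend shared by neighboring time points.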

4.2 4D Cerebellum removal and manual delineation

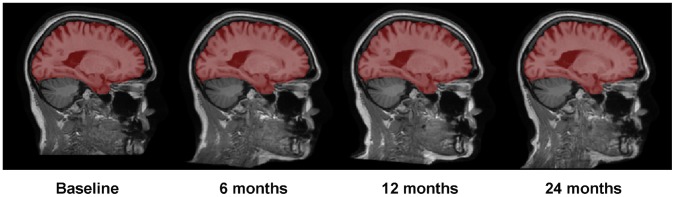

Automatic 4D cerebellum removal is then performed on the above brain extraction results so that only the cerebrum is kept in the final images, as follows. First, as described above, the brain-extracted longitudinal images are simultaneously registered with a groupwise affine registration algorithm [35] to obtain their group-mean image. Then, the MNI brain atlas [36] is registered to this group-mean image using FLIRT [37], followed by Demons registration [28]. Finally, the cerebellum is removed simultaneously from all brain-extracted longitudinal images using the cerebellum mask warped from the MNI brain atlas. Fig. 4 shows the 4D brain extraction and cerebellum removal results on the preprocessed images at four time points of a subject.

Figure 4. Demonstration of 4D brain extraction and cerebellum removal on four time-point images of a subject.

The cerebrum is extracted consistently across time points.

If needed, the automatic 4D brain extraction results can be refined with the manual editor provided in aBEAT. In this manual editing step, a colored brain mask (as shown in Fig. 4) representing the automatically extracted brain at each time point is overlaid on the corresponding preprocessed brain image. A 3D (or 2D) paint or erase tool can then be used to edit each brain mask interactively in the three orthogonal views (axial, coronal, and sagittal). Mouse-driven image inspection functions, such as slicing, zooming, and translation, are also available in the manual editor for convenient editing. The edited brain masks are then used to generate the final brain extraction results for the longitudinal images.

5. 4D Tissue Segmentation

The 4D tissue segmentation algorithm [23], which integrates local intensity distributions, spatial cortical-thickness constraint, and temporal cortical-thickness consistency constraint into a level-sets framework, was implemented in aBEAT to achieve consistent tissue segmentation for the longitudinal images.

Specifically, three level-set functions are used to separate the WM, GM, CSF, and background of each time-point image; their zero-level surfaces are the WM/GM, GM/CSF, and CSF/background interfaces, respectively. Three terms (a data fitting energy, a spatial cortical-thickness constraint, and a temporal cortical-thickness consistency constraint) are integrated into the level-sets framework, as briefly described below:

1: The data fitting term combines the local intensity distributions of the current image with tissue probabilities derived from population data. The local intensity distributions of WM, GM, and CSF are each modeled by Gaussian distributions with spatially varying means and covariance matrices.

2: The spatial cortical-thickness constraint guides the surface evolution during segmentation by keeping the cortical thickness (i.e., the distance between the WM/GM and GM/CSF surfaces) within a biologically reasonable range (1–6.5 mm according to the literature) [38].

3: The temporal cortical-thickness consistency constraint enforces consistent cortical segmentation of longitudinal images by requiring the estimated cortical thickness at the current time point to lie between the thicknesses at its immediate temporal neighbors [23].
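To make constraints 2 and 3 concrete, they can be expressed as penalties on a per-vertex thickness estimate. The Python/NumPy sketch below uses squared hinge penalties that vanish inside the allowed interval: [1, 6.5] mm for the spatial constraint, and the interval spanned by the two temporal neighbors for the temporal constraint. The penalty form, and leaving the first and last time points unconstrained by the temporal term, are assumptions for illustration; the actual level-set energy terms in [23] and [38] may be formulated differently.

```python
import numpy as np

T_MIN_MM, T_MAX_MM = 1.0, 6.5  # thickness range quoted in the text


def spatial_thickness_penalty(thickness):
    """Squared distance of each thickness value to [T_MIN_MM, T_MAX_MM] (0 inside)."""
    thickness = np.asarray(thickness, dtype=float)
    below = np.clip(T_MIN_MM - thickness, 0.0, None)
    above = np.clip(thickness - T_MAX_MM, 0.0, None)
    return below ** 2 + above ** 2


def temporal_consistency_penalty(thickness_series):
    """thickness_series: (T, V) thickness per time point and vertex.

    Penalizes thickness at time t lying outside the interval spanned by its
    values at t-1 and t+1; the first and last time points are not penalized.
    """
    th = np.asarray(thickness_series, dtype=float)
    penalty = np.zeros_like(th)
    for t in range(1, th.shape[0] - 1):
        lower = np.minimum(th[t - 1], th[t + 1])
        upper = np.maximum(th[t - 1], th[t + 1])
        penalty[t] = (np.clip(lower - th[t], 0.0, None) ** 2
                      + np.clip(th[t] - upper, 0.0, None) ** 2)
    return penalty


spatial = spatial_thickness_penalty([0.5, 3.0, 7.5])
series = [[2.0, 2.0], [3.5, 2.5], [3.0, 3.0]]  # 3 time points, 2 vertices
temporal = temporal_consistency_penalty(series)
```

In the toy series, the first vertex overshoots both neighbors at the middle time point (3.5 mm versus 2.0 and 3.0 mm) and is penalized, while the second vertex lies between its neighbors and is not.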

The 4D tissue segmentation is then achieved by optimizing the above level-sets framework. First, an initial 3D segmentation using only the data fitting term and the spatial cortical-thickness constraint (the coupled level sets [26]) is performed separately at each time point. Second, 4D registration [39] is performed on the current segmentation results to obtain the cortical thickness differences between neighboring time points. Third, the 4D segmentation using the data fitting term, the spatial cortical-thickness constraint, and the temporal cortical-thickness consistency constraint [23] is performed on each time-point image for joint segmentation. The second and third steps alternate until convergence. Good initialization of the three level-set functions is important. We adopted the initialization method in [26], which employs convex optimization using both global image statistics and an atlas spatial prior, with the related parameters chosen by cross-validation. By exploiting both global statistics and the atlas prior, this method has been shown to be robust; see [26] for details. Fig. 5 shows the 4D tissue segmentation result for the longitudinal images of a normal control subject.

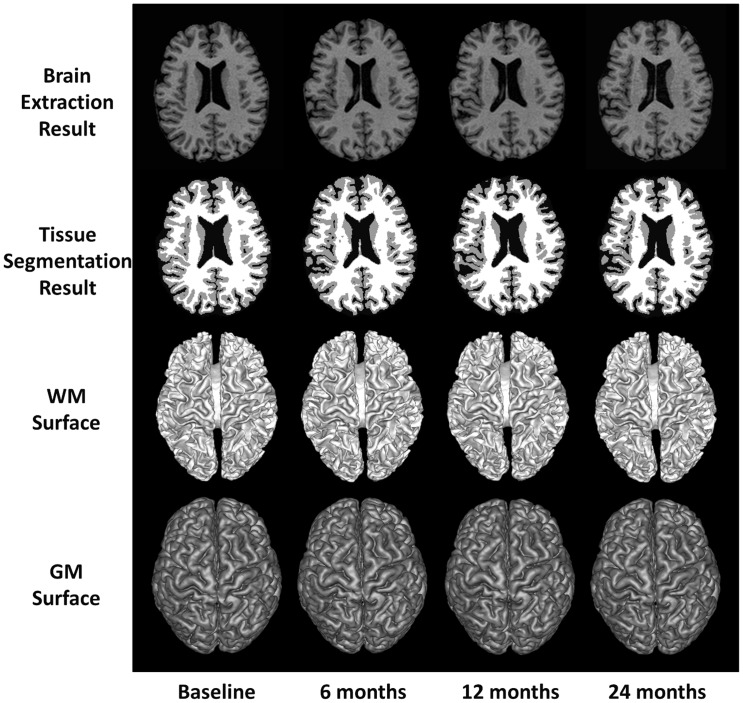

Figure 5. Demonstration of 4D tissue segmentation result.

WM, GM, and CSF tissues are segmented from the brain-extracted longitudinal images of a normal control subject at 4 time points. Both WM and GM surfaces are also displayed to show their consistency across different time-points.

6. 4D Brain Labeling

A novel longitudinal ROI labeling framework was developed in aBEAT to consistently label brain ROIs in the longitudinal images of a subject. The MNI brain atlas [36] is used to label each longitudinal image into 45 ROIs per hemisphere. Customized brain atlases can also be used in aBEAT for brain labeling.

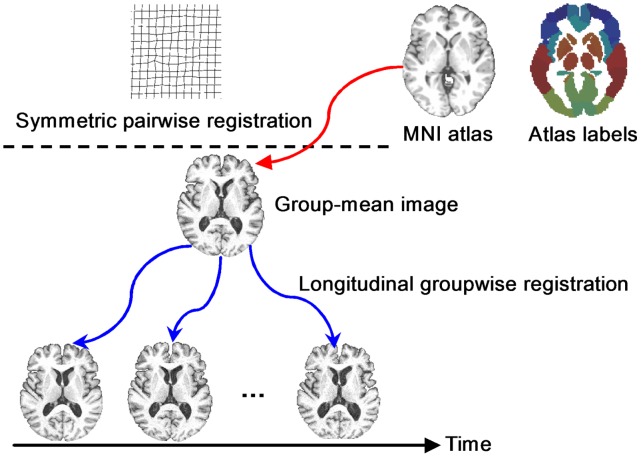

The general framework of our longitudinal ROI labeling, shown in Fig. 6, consists of two steps. In the first step, all longitudinal images of a subject are simultaneously registered to their group-mean image in the common space by our longitudinal groupwise image registration algorithm [27]. Specifically, we hierarchically select a set of key points with distinctive features to guide, by robust feature matching, the registration between the tentatively estimated group-mean image (middle of Fig. 6) and the different time-point images. Since the key points lie in distinctive regions, their correspondences can be identified more reliably, and they serve as driving points that steer the whole registration. Meanwhile, by mapping the group-mean image onto the domain of each time point, every key point in the group-mean image obtains one warped point per time point; these points are assembled into a time sequence that forms a temporal trajectory. Temporal coherence within the longitudinal images is then ensured by kernel smoothing along all these temporal trajectories. Next, thin-plate splines (TPS) are used to interpolate the dense deformation field for each time-point image, with all key points serving as TPS control points. The average of these tentatively estimated spatiotemporal deformations is then used to update the group-mean image. By repeating this procedure (correspondence detection, kernel smoothing, dense deformation interpolation, and group-mean image updating), we finally obtain the spatiotemporal deformation fields (blue curves in Fig. 6) from all images to the group-mean image in the common space.

Figure 6. Illustration of our longitudinal ROI labeling framework.

The labels in the MNI atlas are consistently warped, via the group-mean image, onto all time-point images of the subject.
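The kernel-smoothing step of the first labeling stage can be sketched as Gaussian-weighted averaging (Nadaraya-Watson smoothing) of each key point's temporal trajectory. In this Python/NumPy sketch, the acquisition times and the bandwidth `sigma` are hypothetical inputs, and the exact kernel used in [27] is not specified here. For the subsequent TPS step, SciPy's `scipy.interpolate.RBFInterpolator` with `kernel="thin_plate_spline"` is one readily available option.

```python
import numpy as np


def smooth_trajectories(times, trajectories, sigma):
    """Gaussian kernel smoothing of key-point trajectories over time.

    times: (T,) acquisition times (e.g., months from baseline).
    trajectories: (T, K, 3) positions of K key points warped to T time points.
    Returns an array with the same shape as trajectories.
    """
    times = np.asarray(times, dtype=float)
    traj = np.asarray(trajectories, dtype=float)
    diff = times[:, None] - times[None, :]
    weights = np.exp(-0.5 * (diff / sigma) ** 2)
    weights /= weights.sum(axis=1, keepdims=True)  # each row sums to 1
    # Smoothed position at time t = weighted average over all time points s.
    return np.einsum("ts,skd->tkd", weights, traj)


times = [0.0, 6.0, 12.0, 24.0]
rng = np.random.default_rng(0)
noisy = np.cumsum(rng.normal(size=(4, 5, 3)), axis=0)  # 5 jittery key points
smoothed = smooth_trajectories(times, noisy, sigma=6.0)
```

Using the actual acquisition times (rather than time-point indices) lets unevenly spaced scans receive appropriately different weights; a very small `sigma` leaves the trajectories unchanged, and a very large one collapses each trajectory to its mean.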

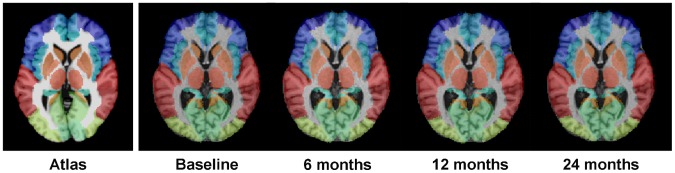

In the second step, a symmetric feature-based pairwise registration [30] is performed to estimate the deformation field (red curve in Fig. 6) from the MNI atlas image to the group-mean image of the subject. Finally, the deformation pathway from each longitudinal image to the MNI atlas is obtained by composing its deformation field to the group-mean image (from the first step) with the deformation field from the group-mean image to the MNI atlas image (from the second step). Following this combined deformation pathway, all 45×2 labels are mapped onto each time-point image. Since temporal coherence is well preserved in the first step, the labeling results are consistent across all time-point images, as shown in Fig. 7. The second to fifth columns of Fig. 7 show the 4D ROI labeling result on the longitudinal brain images of a normal control subject, and the first column shows the MNI brain atlas.

Figure 7. Demonstration of 4D brain labeling result on longitudinal brain images of a normal control subject at four time-points.

The MNI brain atlas is shown in the first column, and different ROIs are shown with different colors.
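Composing the two deformations and carrying the atlas labels onto a time-point image can be sketched with displacement fields in Python/NumPy. Assumptions made here: every map is stored as a displacement u with φ(x) = x + u(x) on a common voxel grid (aBEAT resamples all images to 256×256×256); the hypothetical field `u_tg` maps time-point image coordinates into the group-mean image and `u_ga` maps group-mean coordinates into the atlas; and labels are pulled back with nearest-neighbor lookup so that they remain integers. Nearest-neighbor sampling of the displacement field is a simplification; a practical implementation would interpolate it (for example with `scipy.ndimage.map_coordinates`).

```python
import numpy as np


def sample_nearest(field, points):
    """Sample field of shape (C, *grid) at points of shape (D, *out) by nearest neighbor.

    Points outside the grid are clamped to the border.
    """
    grid_shape = field.shape[1:]
    index = tuple(
        np.clip(np.rint(points[d]).astype(int), 0, grid_shape[d] - 1)
        for d in range(len(grid_shape))
    )
    return field[(slice(None),) + index]


def compose(u_first, u_second):
    """Displacement of x -> phi_second(phi_first(x)), where phi(x) = x + u(x)."""
    grid = np.indices(u_first.shape[1:]).astype(float)
    after_first = grid + u_first
    return after_first + sample_nearest(u_second, after_first) - grid


def warp_labels(atlas_labels, u_total):
    """Label each target voxel x with the atlas label at x + u_total(x)."""
    grid = np.indices(atlas_labels.shape).astype(float)
    return sample_nearest(atlas_labels[np.newaxis], grid + u_total)[0]


# 1D toy example: both maps shift by one voxel, so labels move by two.
atlas = np.array([0, 0, 1, 1, 2, 2])
u_tg = np.ones((1, 6))  # time-point image -> group mean
u_ga = np.ones((1, 6))  # group mean -> atlas
labels_t = warp_labels(atlas, compose(u_tg, u_ga))
```

Because every time point shares the same group-mean-to-atlas field and differs only in its temporally smoothed field to the group mean, the warped labels inherit the temporal coherence of the first step.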

7. ROI Analysis

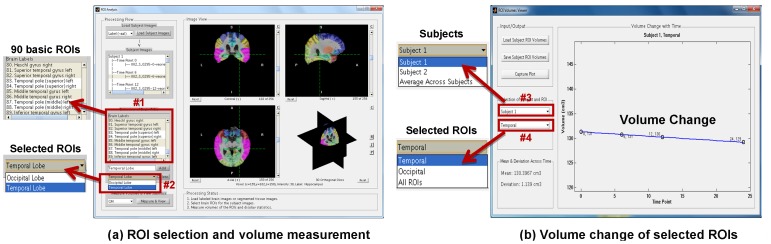

After 4D tissue segmentation and 4D brain labeling of the longitudinal brain MR images of a group of subjects, we obtain their serial tissue-segmented and brain-labeled images, as well as ROI volumes that can be used to analyze longitudinal ROI volume changes. Specifically, the labeled ROI maps can be overlaid on the respective brain-extracted images (Fig. 8(a)), where the user can interactively select a set of ROIs (such as the temporal lobe and hippocampus). The volumes of the selected ROIs are then measured automatically for the longitudinal brain images of all subjects. Finally, aBEAT can display the volume change over time for each ROI (or all ROIs) of each subject (or averaged across all subjects). Longitudinal ROI volumes of all subjects can also be exported as a MATLAB ‘.mat’ file for subsequent statistical analysis. Fig. 8(a) shows the interface for ROI selection and volume measurement, and Fig. 8(b) shows the interface for displaying the volume change of selected ROIs.

Figure 8. ROI Analysis.

(a) The interface for ROI selection and volume measurement. When a brain-extracted image of a subject is selected, its labeled ROI map is overlaid on it. A set of ROIs (panel #2) can then be created, where each ROI may combine multiple basic ROIs from the 90 basic ROIs (panel #1; Section 2.6 defines the 90 basic ROIs). Note that the basic ROIs selected in panel #1 are highlighted (in pink) in the labeled ROI map. The volumes of the selected ROIs (panel #2) for the longitudinal brain images of all subjects can then be measured automatically and displayed. (b) The interface for displaying the volume change of selected ROIs. The volume change over time for each ROI (or all ROIs, #4) of each subject (or the average volume across all subjects, #3) can be displayed.
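The volume measurement behind this interface reduces to counting labeled voxels. In the Python/NumPy sketch below, a user-defined ROI is a list of basic label IDs (mirroring how panel #2 ROIs combine basic ROIs from panel #1), each voxel is 1 mm³ after aBEAT's resampling, and percent change is taken relative to the baseline. The function names and label IDs are hypothetical, and counting all labeled voxels (rather than, e.g., GM voxels only) is an assumption, since the text does not say which tissues an ROI volume includes.

```python
import numpy as np


def roi_volumes(label_map, roi_groups, voxel_volume_mm3=1.0):
    """Return {ROI name: volume in mm^3} for ROIs defined as lists of label IDs."""
    counts = np.bincount(np.asarray(label_map, dtype=int).ravel())
    volumes = {}
    for name, label_ids in roi_groups.items():
        present = [i for i in label_ids if 0 <= i < counts.size]
        volumes[name] = float(counts[present].sum()) * voxel_volume_mm3
    return volumes


def percent_change_from_baseline(volume_series):
    """Percent change of each time point's volume relative to the first one."""
    baseline = volume_series[0]
    return [100.0 * (v - baseline) / baseline for v in volume_series]


label_map = np.array([[0, 1, 1], [2, 2, 2]])  # 0 = background
groups = {"roi_a": [1], "roi_a_plus_b": [1, 2], "absent": [7]}
print(roi_volumes(label_map, groups))
# {'roi_a': 2.0, 'roi_a_plus_b': 5.0, 'absent': 0.0}
print(percent_change_from_baseline([100.0, 95.0, 90.0]))
# [0.0, -5.0, -10.0]
```

If the results are needed outside Python, `scipy.io.savemat` can write such values to a `.mat` file; aBEAT itself performs this export from MATLAB.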

Results

The performance of aBEAT in analyzing longitudinal brain MR images is evaluated qualitatively and quantitatively with a large number of longitudinal images from the ADNI database. Representative evaluation results for 4D brain extraction, 4D tissue segmentation, 4D brain labeling, and computation time are presented below.

1. 4D Brain Extraction

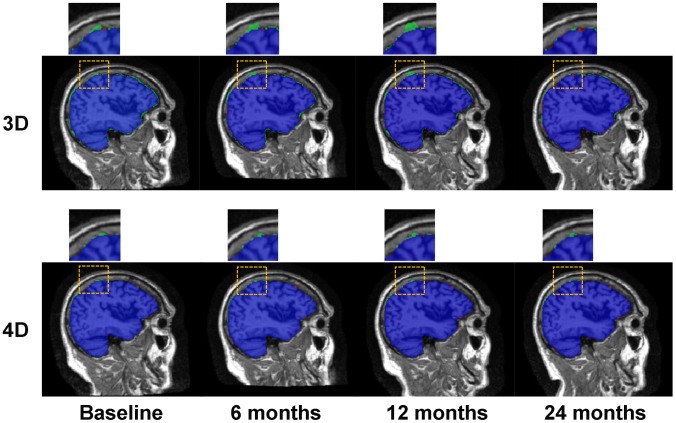

Thirty subjects (each with 4 time points), including 10 normal controls (NC), 10 mild cognitive impairment (MCI) patients, and 10 Alzheimer’s disease (AD) patients, were used to evaluate 4D brain extraction. The longitudinal brain images of all these subjects were preprocessed (including bias correction and histogram matching) by aBEAT before brain extraction. The brain extraction results of aBEAT were compared with those of the 3D deformable-surface-based brain extraction method, which outperformed the classic BET and BSE methods in [22]. Fig. 9 shows typical brain extraction results by the 3D deformable-surface-based method (top) and aBEAT (bottom). The small red regions in Fig. 9 indicate false negative voxels (brain regions wrongly removed w.r.t. the manual ground truth), and the green regions denote false positive voxels (residual non-brain tissues w.r.t. the manual ground truth). The 4D brain extraction in aBEAT clearly performs better than the 3D deformable-surface-based method.

Figure 9. Brain extraction results by the 3D deformable-surface-based method and the 4D method in aBEAT.

Sagittal slices are shown. Blue voxels are labeled as brain by both the automated method and the manual ground truth. Green voxels are residual non-brain tissue (false positives), and red voxels are wrongly removed brain regions (false negatives). The regions in the yellow dotted squares are magnified, showing that the 4D method is more accurate and consistent than the 3D method.

Furthermore, the 4D brain extraction was evaluated quantitatively. Specifically, for each time-point image of every subject, the overlap between the manual ground truth and the automated brain extraction result of either the 3D deformable-surface-based method or the 4D method in aBEAT was measured with the Jaccard index. Note that the manual ground truth was delineated semi-automatically (similar to [40]): automated brain extraction was performed first, followed by manual delineation by experienced human raters using ITK-SNAP [41]. The average Jaccard indices (across all subjects and all time points) for the 3D deformable-surface-based method and aBEAT are 0.96±0.02 and 0.98±0.005, respectively, quantitatively confirming the better performance of the 4D brain extraction in aBEAT.
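For reference, the Jaccard index of an automated mask A and a manual mask M is |A ∩ M| / |A ∪ M|, and the false-positive and false-negative voxels shown in Fig. 9 are A \ M and M \ A. A minimal NumPy sketch, assuming both masks are binary arrays on the same voxel grid (function names are illustrative, not aBEAT's):

```python
import numpy as np


def jaccard_index(auto_mask, manual_mask):
    """Jaccard index |A ∩ M| / |A ∪ M| of two binary masks."""
    a = np.asarray(auto_mask, dtype=bool)
    m = np.asarray(manual_mask, dtype=bool)
    union = np.count_nonzero(a | m)
    if union == 0:
        return 1.0  # both masks empty; treating this as perfect agreement is a convention
    return np.count_nonzero(a & m) / union


def error_maps(auto_mask, manual_mask):
    """False positives (retained non-brain) and false negatives (removed brain)."""
    a = np.asarray(auto_mask, dtype=bool)
    m = np.asarray(manual_mask, dtype=bool)
    return a & ~m, ~a & m


auto = np.array([[1, 1, 0], [0, 1, 0]])
manual = np.array([[1, 0, 0], [0, 1, 1]])
print(jaccard_index(auto, manual))  # 0.5
```

Averaging this score over all subjects and time points yields summary values of the kind reported above.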

2. 4D Tissue Segmentation

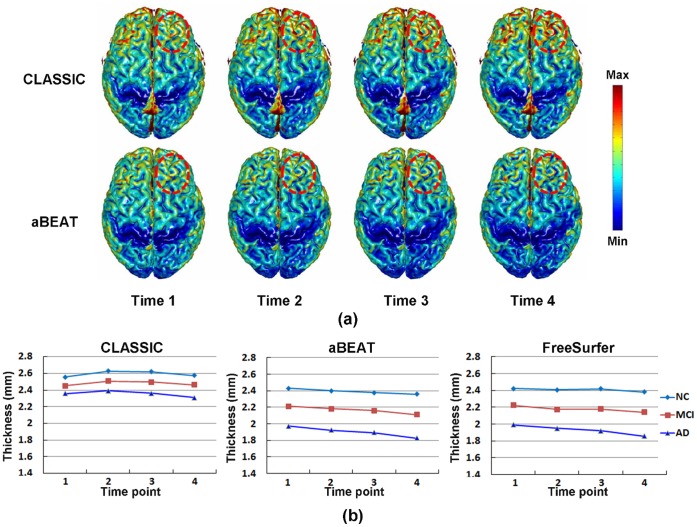

Ninety subjects (each with 4 time points over 24 months), including 30 NC, 30 MCI, and 30 AD subjects, were used to evaluate 4D tissue segmentation in aBEAT (performed after brain extraction). To demonstrate the advantage of aBEAT in 4D tissue segmentation, we compared its results with those obtained by CLASSIC [25]. Specifically, cortical thickness maps were constructed from the tissue-segmented images generated by CLASSIC and by aBEAT. For each cortical thickness map, the cortical surface was reconstructed using the MATLAB function “isosurface”, and the surface vertices were colored by cortical thickness using the MATLAB function “isocolors”. Typical cortical thickness maps obtained from an NC subject by the two methods are shown in Fig. 10(a). In the frontal lobe, for example, the cortical thickness by CLASSIC changes dramatically over time, whereas it is much more consistent by aBEAT.
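The coloring step assigns each surface vertex a thickness value sampled from the voxel-wise thickness map. A rough Python analogue is plain trilinear interpolation at the vertex positions; the sketch below is not claimed to reproduce MATLAB's isocolors exactly, and it assumes the vertices (e.g., from a marching-cubes routine) are already expressed in voxel coordinates. The function name is hypothetical.

```python
import numpy as np


def sample_trilinear(volume, points):
    """Trilinearly interpolate a 3D scalar volume at continuous voxel coordinates.

    volume: array of shape (X, Y, Z), e.g., a voxel-wise cortical-thickness map
            (every dimension must be at least 2).
    points: array of shape (N, 3) holding (x, y, z) vertex positions in voxel units.
    Points outside the volume are clamped to its border.
    """
    vol = np.asarray(volume, dtype=float)
    p = np.asarray(points, dtype=float)
    shape = np.array(vol.shape)
    p = np.clip(p, 0, shape - 1)
    # Lower corner of the enclosing cell; keep the upper neighbour inside the volume.
    i0 = np.minimum(np.floor(p).astype(int), shape - 2)
    f = p - i0  # fractional offsets in [0, 1]
    out = np.zeros(len(p))
    # Weighted sum over the 8 corners of each enclosing cell.
    for dx in (0, 1):
        wx = f[:, 0] if dx else 1 - f[:, 0]
        for dy in (0, 1):
            wy = f[:, 1] if dy else 1 - f[:, 1]
            for dz in (0, 1):
                wz = f[:, 2] if dz else 1 - f[:, 2]
                out += wx * wy * wz * vol[i0[:, 0] + dx, i0[:, 1] + dy, i0[:, 2] + dz]
    return out
```

Trilinear interpolation reproduces any field that is linear in x, y, and z exactly, which makes such a field a convenient sanity check.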

Figure 10. Tissue segmentation results.

(a) Cortical thickness maps derived by CLASSIC (upper row) and aBEAT (lower row) from a normal control subject. Circles indicate regions where CLASSIC produces dramatic thickness changes over time while aBEAT yields consistent measurements. (b) Changes of mean cortical thickness derived by CLASSIC (left), aBEAT (middle), and FreeSurfer (right) for the NC, MCI, and AD groups.

Furthermore, we measured the average cortical-thickness change for each group (NC, MCI, and AD) using the cortical-thickness maps derived by CLASSIC and by aBEAT. Specifically, we first calculated the mean cortical thickness for each time-point image of each subject, and then averaged these longitudinal mean cortical thicknesses over all subjects in each group. Fig. 10(b) shows the resulting longitudinal changes of mean cortical thickness. The mean cortical thickness by aBEAT declines clearly and smoothly over time, whereas no clear decline is seen with CLASSIC. The AD group has both the lowest mean cortical thickness and the largest decrease in mean cortical thickness, which agrees with previous findings in the literature [42], [43]. We also measured the longitudinal changes of mean cortical thickness for each group using the longitudinal processing pipeline recently added to FreeSurfer [44]; the curves by aBEAT are smoother than those by FreeSurfer, especially for the NC and MCI groups.
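The two-step averaging described above (per-image mean, then group mean per time point) can be sketched as follows. The storage convention, with NaN marking non-cortical positions in each thickness map, and the function names are assumptions for illustration:

```python
import numpy as np


def subject_mean_thickness(thickness_maps):
    """Mean cortical thickness at each time point of one subject.

    thickness_maps: list over time points of arrays; NaN marks positions
    outside the cortex (an assumed convention), which are ignored.
    """
    return np.array([np.nanmean(m) for m in thickness_maps])


def group_trajectory(subjects):
    """Group-mean thickness at each time point.

    subjects: list of per-subject lists of thickness maps, all subjects
    having the same number of time points.
    """
    per_subject = np.stack([subject_mean_thickness(s) for s in subjects])  # (subjects, time points)
    return per_subject.mean(axis=0)
```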

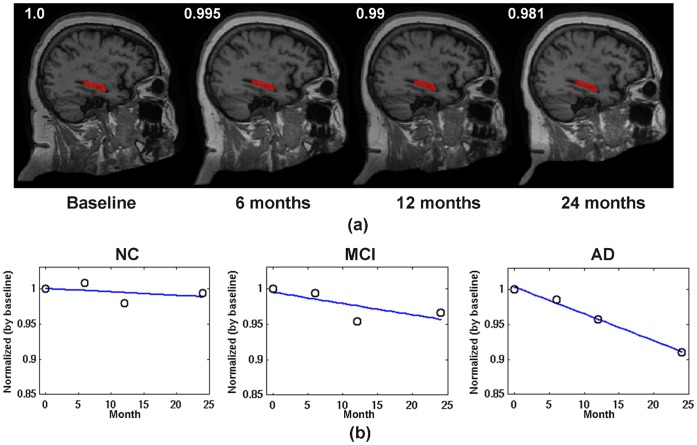

3. 4D Brain Labeling

Fifteen subjects (5 NC, 5 MCI, and 5 AD, each scanned at 4 time points: baseline and 6, 12, and 24 months) were used to evaluate automatic 4D ROI labeling with aBEAT. For the longitudinal images of each subject, WM, GM, and CSF were first segmented from the brain-extracted images using the 4D tissue segmentation module evaluated above. Then, the ROIs in the MNI atlas were mapped simultaneously onto all time-point images by the 4D brain labeling module in aBEAT to obtain the labeling maps. Fig. 11(a) shows the automatically labeled hippocampus (in red) on sagittal slices for a typical normal control subject; the hippocampus is accurately and consistently labeled at the different time points. To detect subtle neuronal changes in the hippocampus sensitively [45], the hippocampal GM of each time-point image of each subject was further obtained by masking the hippocampus ROI label with the GM map from the tissue segmentation result. The temporal trends of hippocampal GM volume (normalized by the baseline volume) are shown in Fig. 11(b) for all groups (NC, MCI, and AD). For each group, the trend was estimated from the average change of hippocampal GM volume across all subjects in the group. The decrease of hippocampal GM is subtle for NC but pronounced for MCI and AD, with the AD group showing the largest reduction. These results agree with previous findings by Kitayama et al. [45], Chetelat et al. [46], Colliot et al. [47], and Schuff et al. [9].
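The masking and normalization steps can be sketched in a few lines of NumPy. The hippocampus label value(s), the integer code for GM in the tissue map, and the function names are assumptions; the text also does not say whether subjects are normalized before or after averaging, so the sketch takes one plausible reading (normalize each subject by its own baseline, then average):

```python
import numpy as np


def hippocampal_gm_volumes(label_maps, tissue_maps, hippo_labels, gm_value, voxel_volume_mm3=1.0):
    """Hippocampal GM volume at each time point of one subject.

    label_maps / tissue_maps: per-time-point ROI label maps and tissue maps.
    hippo_labels: label value(s) of the hippocampus ROI (assumed known).
    gm_value: value that marks GM in the tissue map (assumed encoding).
    """
    volumes = []
    for labels, tissue in zip(label_maps, tissue_maps):
        # Mask the hippocampus ROI with the GM map from tissue segmentation.
        mask = np.isin(labels, hippo_labels) & (np.asarray(tissue) == gm_value)
        volumes.append(np.count_nonzero(mask) * voxel_volume_mm3)
    return np.array(volumes, dtype=float)


def normalize_to_baseline(volumes):
    """Divide each volume by the baseline (first) volume."""
    v = np.asarray(volumes, dtype=float)
    return v / v[0]


def group_trend(subject_volumes):
    """Average the baseline-normalized trajectories of all subjects in a group."""
    return np.mean([normalize_to_baseline(v) for v in subject_volumes], axis=0)
```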

Figure 11. Brain labeling results.

(a) Automated 4D labeling results of the hippocampus (in red) for a typical normal control subject, with four example slices. The hippocampal volume, normalized by its baseline value, decreases slightly from 1 (baseline) to 0.995 (6 months), 0.99 (12 months), and 0.981 (24 months). (b) Temporal trends of hippocampal GM volume (also normalized by baseline) for the NC, MCI, and AD groups. The blue line in each plot is a linear fit to the mean hippocampal GM volumes measured at the different time points.
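The caption does not state how the blue line was fitted; an ordinary least-squares line is the usual choice and is what this sketch assumes. For illustration it uses the single-subject values quoted in panel (a); in panel (b) the same kind of fit would be applied to each group's mean values.

```python
import numpy as np


def linear_trend(months, values):
    """Least-squares line: values ≈ slope * months + intercept."""
    slope, intercept = np.polyfit(np.asarray(months, float), np.asarray(values, float), deg=1)
    return slope, intercept


# Normalized hippocampal volumes of the example subject in Fig. 11(a).
months = [0, 6, 12, 24]
normalized_volume = [1.0, 0.995, 0.99, 0.981]
slope, intercept = linear_trend(months, normalized_volume)
print(f"slope = {slope:.6f} per month, intercept = {intercept:.4f}")
# slope = -0.000790 per month, intercept = 0.9998
```

A negative slope corresponds to the slight decline described in the caption, roughly 1% per year for this subject.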

4. Computation Time

The computation time of aBEAT was estimated on a longitudinal dataset with 4 time points (i.e., 4 images acquired from one subject at 4 different time points) and on a cross-sectional dataset with 4 single-time-point images (i.e., 4 images acquired from 4 different subjects at a single time point). Each image has a voxel size of 1×1×1 mm³ and a volume size of 256×256×256 voxels. Experiments were performed on a Linux server with 8 CPU cores (Intel Xeon, 2.4 GHz) and 16 GB of memory. The longitudinal and cross-sectional datasets were each processed independently by the aBEAT pipeline (from image preprocessing to brain labeling, as shown in Fig. 1); we refer to these runs as 4D analysis and 3D analysis, respectively. The computation time of each step of the 4D and 3D analyses is given in Table 1. The overall processing times for the 4D and 3D analyses were 6.7 hours and 2.3 hours, respectively. The 4D analysis took longer because it had to process all longitudinal images in a single thread, whereas the 3D analysis processed the cross-sectional images in parallel using multiple threads. In the future, we will accelerate the 4D/3D analysis in aBEAT with more advanced technology, such as parallel computing on Graphics Processing Units (GPU).
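The difference between the two runs can be pictured as a scheduling difference. A minimal sketch, assuming hypothetical `process_image` and `process_series` callables (for example, wrappers that launch the actual processing tools as external programs, which is why a thread pool suffices; pure-Python CPU-bound work would call for a process pool instead). This illustrates the idea only and is not aBEAT's actual scheduler.

```python
from concurrent.futures import ThreadPoolExecutor


def run_3d_analysis(images, process_image, max_workers=8):
    """Cross-sectional (3D) mode: images of different subjects are independent,
    so each one can be handed to its own worker; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(process_image, images))


def run_4d_analysis(series, process_series):
    """Longitudinal (4D) mode: all time points of one subject are processed
    together as one unit of work, so they are not split across workers here."""
    return process_series(list(series))


# Toy stand-in for a real per-image processing step (hypothetical).
print(run_3d_analysis(["s1.img", "s2.img"], lambda path: path.upper()))
```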

Table 1. Computation time taken in each major module for the 4 longitudinal or cross-sectional images.

| | Image Preprocessing | Brain Extraction with Cerebellum Removal | Tissue Segmentation | Brain Labeling |
|---|---|---|---|---|
| 4D Analysis (Longitudinal) | 1.64 minutes | 18.4 minutes | 4 hours | 2.38 hours |
| 3D Analysis (Cross-sectional) | 1.43 minutes | 16.2 minutes | 1.15 hours | 0.85 hours |
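As a quick consistency check, the per-module times in Table 1 add up to the overall times stated in the text:

```python
# Per-module times from Table 1, converted to hours.
times_4d_h = {
    "preprocessing": 1.64 / 60,
    "brain_extraction": 18.4 / 60,
    "tissue_segmentation": 4.0,
    "brain_labeling": 2.38,
}
times_3d_h = {
    "preprocessing": 1.43 / 60,
    "brain_extraction": 16.2 / 60,
    "tissue_segmentation": 1.15,
    "brain_labeling": 0.85,
}

total_4d = sum(times_4d_h.values())
total_3d = sum(times_3d_h.values())
print(f"4D: {total_4d:.1f} h, 3D: {total_3d:.1f} h")  # 4D: 6.7 h, 3D: 2.3 h
```

Tissue segmentation and brain labeling account for roughly 95% of the 4D total, in line with the speed limitations discussed in the Conclusion and Discussion.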

Conclusion and Discussion

We have developed the aBEAT software, with GUIs, for 4D analysis of longitudinal brain MR images. The most significant feature of aBEAT is that it integrates a group of 4D image analysis algorithms and provides a user-friendly platform for various 4D brain image analysis tasks, such as brain tissue segmentation and ROI labeling. Specifically, the integration of advanced 4D brain extraction, 4D tissue segmentation, and 4D brain labeling algorithms ensures accurate and consistent measurement and analysis of longitudinal brain MR images. In addition, aBEAT can also be applied to 3D images for cross-sectional studies, using a 3D deformable-surface-based method for brain extraction [22], a coupled level-sets algorithm for 3D tissue segmentation [26], and a symmetric diffeomorphic registration method for 3D brain labeling [30]. A Linux-based standalone software package for aBEAT has been released on the website of the Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC). A computer with at least 8 GB of memory is recommended for analyzing longitudinal images with the software package.

The five major modules in aBEAT (shown in Fig. 1) interact seamlessly, as explained below. The image preprocessing module corrects the intensity bias field of the input images and further normalizes their intensities to match those of the baseline image, which benefits the subsequent processing steps. The brain extraction module removes non-brain tissue and produces brain-extracted images, which facilitates the segmentation of WM, GM, and CSF by the tissue segmentation module. The brain-extracted and tissue-segmented images are then used by the brain labeling module to label brain ROIs, which can be analyzed statistically in the ROI analysis module. These five modules work sequentially to complete the processing and analysis of brain images. Importantly, each module can also perform its task independently; for example, brain extraction can be performed using only the brain extraction module.
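The text says only that each image's intensities are normalized to match the baseline image, without naming the algorithm. A common choice is CDF (quantile) histogram matching, sketched below in NumPy as an illustrative assumption rather than aBEAT's actual implementation; in practice the matching would usually be restricted to brain or foreground voxels. The function name is hypothetical.

```python
import numpy as np


def match_histogram(source, reference):
    """Map intensities of `source` so that their distribution matches `reference`.

    Each distinct source intensity is replaced by the reference intensity found at
    the same cumulative-probability level (linear interpolation between levels).
    """
    src = np.asarray(source, dtype=float)
    ref = np.asarray(reference, dtype=float)
    s_values, s_inverse, s_counts = np.unique(src.ravel(), return_inverse=True, return_counts=True)
    r_values, r_counts = np.unique(ref.ravel(), return_counts=True)
    s_cdf = np.cumsum(s_counts) / src.size   # empirical CDF of the source
    r_cdf = np.cumsum(r_counts) / ref.size   # empirical CDF of the reference (baseline)
    matched = np.interp(s_cdf, r_cdf, r_values)
    return matched[s_inverse].reshape(src.shape)


source = np.array([[4.0, 1.0], [3.0, 2.0]])
reference = np.array([10.0, 20.0, 30.0, 40.0])
print(match_histogram(source, reference))  # maps 1->10, 2->20, 3->30, 4->40
```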

aBEAT can be applied in many medical studies. For example, it can be used to segment brain tissues (GM, WM, and CSF) and brain ROIs (e.g., the hippocampus) from the longitudinal brain MR images of a subject, and then to analyze temporal changes of these tissues and ROIs to determine whether the subject has a brain disease such as AD [2] or schizophrenia [3]. It can also be used to analyze cross-sectional brain MR images, e.g., to classify subjects into different groups (such as whether or not they are at high risk of psychosis [48]) according to the measured brain tissues and ROI labels. Beyond these examples of volume-based analysis, the brain tissues and ROI labels obtained by aBEAT can also be used for cortical surface reconstruction and the analysis of cortical ROIs [43].

Our current software package has several limitations, which also indicate the directions of our future work. (1) Although volume-based ROI analysis is available in aBEAT, surface-based ROI analysis is not yet included. Therefore, 4D/3D surface reconstruction tools [43] are still required to reconstruct cortical surfaces from the segmented brain tissue maps, as are measurement tools (e.g., a cortical thickness estimator) and visualization tools (e.g., for rendering cortical-thickness maps) [43]. We will integrate these tools in a future version of aBEAT. (2) The parallel computing strategy used in aBEAT (described in Section 2.2) can take advantage of multiple processor cores to accelerate image analysis. However, because tissue segmentation is implemented in MATLAB (which is less efficient than C/C++) and brain labeling is not fully parallelized, the computational speed of aBEAT is still limited. In the future, we will use C/C++ and GPGPU (General-Purpose computation on Graphics Processing Units) to speed up these algorithms and make our software more computationally efficient. (3) Currently, only a Linux version of our software is available; in the future, we will make aBEAT cross-platform. (4) Although the Analyze file format is one of the most popular formats, it is currently the only file format supported by aBEAT, so users must use other programs to convert image files, e.g., between DICOM and Analyze formats. In the future, we will support more file formats to make the software easier to use. (5) Currently, aBEAT is used for analysis of MR T1 images (the most widely used type of MR image for the adult brain). In the future, we will extend the software to other types of MR images, such as T2 and FA images.

aBEAT is free software for academic use. The Linux-based standalone software package and tutorial are available at http://www.nitrc.org/projects/abeat. For convenience, two NC datasets (each with 4 time points) from the ADNI database are included in the package. The tutorial describes how to install and use aBEAT correctly. In addition, frequently asked questions (FAQ) from users and their answers are provided with the tutorial to address questions that new users may have when using this software package. User feedback is very welcome for further improving this software package.

Acknowledgments

We thank colleagues for helping test software before release, including Ziwen Peng, Lan Zhou, Hanbo Chen, Tuo Zhang, Yeqin Shao, Kimhan Thung, Feng Liu, Fei Dai, Evren Arslan, and Mohan Boddu.

Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.ucla.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.ucla.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf. The following statements from ADNI on the ADNI database are cited: “Data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: Abbott; Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Amorfix Life Sciences Ltd.; AstraZeneca; Bayer HealthCare; BioClinica, Inc.; Biogen Idec Inc.; Bristol-Myers Squibb Company; Eisai Inc.; Elan Pharmaceuticals Inc.; Eli Lilly and Company; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; GE Healthcare; Innogenetics, N.V.; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Medpace, Inc.; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Servier; Synarc Inc.; and Takeda Pharmaceutical Company. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Disease Cooperative Study at the University of California, San Diego. 
ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of California, Los Angeles.”

Funding Statement

This work was supported in part by National Institutes of Health grants EB006733, EB008374, EB009634, and AG041721, and also by The National Basic Research Program of China (973 Program) grant number 2010CB732505. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. No additional external funding was received for this study.

References

- 1. Toga AW, Thompson PM (2003) Temporal dynamics of brain anatomy. Annual Review of Biomedical Engineering 5: 119–145.

- 2. Chetelat G, Landeau B, Eustache F, Mezenge F, Viader F, et al. (2005) Using voxel-based morphometry to map the structural changes associated with rapid conversion in MCI: a longitudinal MRI study. Neuroimage 27: 934–946.

- 3. Nakamura M, Salisbury DF, Hirayasu Y, Bouix S, Pohl KM, et al. (2007) Neocortical gray matter volume in first-episode schizophrenia and first-episode affective psychosis: a cross-sectional and longitudinal MRI study. Biol Psychiatry 62: 773–783.

- 4. Thambisetty M, Wan J, Carass A, An Y, Prince JL, et al. (2010) Longitudinal changes in cortical thickness associated with normal aging. Neuroimage 52: 1215–1223.

- 5. Li Y, Wang Y, Xue Z, Shi F, Lin W, et al. (2010) Consistent 4D cortical thickness measurement for longitudinal neuroimaging study. Med Image Comput Comput Assist Interv 13: 133–142.

- 6. Li Y, Wang YP, Wu GR, Shi F, Zhou LP, et al. (2012) Discriminant analysis of longitudinal cortical thickness changes in Alzheimer’s disease using dynamic and network features. Neurobiology of Aging 33.

- 7. Eskildsen SF, Coupe P, Fonov V, Manjon JV, Leung KK, et al. (2012) BEaST: Brain extraction based on nonlocal segmentation technique. Neuroimage 59: 2362–2373.

- 8. Bonne O, Brandes D, Gilboa A, Gomori JM, Shenton ME, et al. (2001) Longitudinal MRI study of hippocampal volume in trauma survivors with PTSD. The American Journal of Psychiatry 158: 1248–1251.

- 9. Schuff N, Woerner N, Boreta L, Kornfield T, Shaw LM, et al. (2009) MRI of hippocampal volume loss in early Alzheimer’s disease in relation to ApoE genotype and biomarkers. Brain 132: 1067–1077.

- 10. Ibanez L, Schroeder W, Ng L, JC (2003) The ITK Software Guide: The Insight Segmentation and Registration Toolkit (version 1.4). Kitware, Inc.

- 11. Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, et al. (2004) Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage 23: S208–S219.

- 12. Fischl B, Salat DH, Busa E, Albert M, Dieterich M, et al. (2002) Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron 33: 341–355.

- 13. Friston K, Ashburner J, Kiebel S, Nichols T, Penny W (2007) Statistical Parametric Mapping: The Analysis of Functional Brain Images. Academic Press.

- 14. Thompson PM, Hayashi KM, de Zubicaray G, Janke AL, Rose SE, et al. (2003) Dynamics of gray matter loss in Alzheimer’s disease. J Neurosci 23: 994–1005.

- 15. Shattuck DW, Leahy RM (2001) Automated graph-based analysis and correction of cortical volume topology. IEEE Transactions on Medical Imaging 20: 1167–1177.

- 16. Smith SM (2002) Fast robust automated brain extraction. Hum Brain Mapp 17: 143–155.

- 17. Sadananthan SA, Zheng WL, Chee MWL, Zagorodnov V (2010) Skull stripping using graph cuts. Neuroimage 49: 225–237.

- 18. Iglesias JE, Liu CY, Thompson PM, Tu ZW (2011) Robust Brain Extraction Across Datasets and Comparison With Publicly Available Methods. IEEE Transactions on Medical Imaging 30: 1617–1634.

- 19. Shi F, Wang L, Gilmore JH, Lin W, Shen D (2011) Learning-based meta-algorithm for MRI brain extraction. Med Image Comput Comput Assist Interv 14: 313–321.

- 20. Leung KK, Barnes J, Modat M, Ridgway GR, Bartlett JW, et al. (2011) Brain MAPS: An automated, accurate and robust brain extraction technique using a template library. Neuroimage 55: 1091–1108.

- 21. Wang Y, Li G, Nie J, Yap PT, Guo L, et al. (2013) Consistent 4D Brain Extraction of Serial Brain MR Images. SPIE Medical Imaging.

- 22. Wang Y, Nie J, Yap PT, Shi F, Guo L, et al. (2011) Robust deformable-surface-based skull-stripping for large-scale studies. Med Image Comput Comput Assist Interv 14: 635–642.

- 23. Wang L, Shi F, Li G, Shen D (2012) 4D Segmentation of Longitudinal Brain MR Images with Consistent Cortical Thickness Measurement. MICCAI Workshop on Spatiotemporal Image Analysis for Longitudinal and Time-Series Image Data. Nice, France.

- 24. Zhang Y, Brady M, Smith S (2001) Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Transactions on Medical Imaging 20: 45–57.

- 25. Xue Z, Shen D, Davatzikos C (2006) CLASSIC: consistent longitudinal alignment and segmentation for serial image computing. Neuroimage 30: 388–399.

- 26. Wang L, Shi F, Lin W, Gilmore JH, Shen D (2011) Automatic segmentation of neonatal images using convex optimization and coupled level sets. Neuroimage 58: 805–817.

- 27. Wu G, Wang Q, Shen D (2012) Registration of longitudinal brain image sequences with implicit template and spatial-temporal heuristics. Neuroimage 59: 404–421.

- 28. Thirion JP (1998) Image matching as a diffusion process: an analogy with Maxwell’s demons. Med Image Anal 2: 243–260.

- 29. Shen DG, Davatzikos C (2002) HAMMER: Hierarchical attribute matching mechanism for elastic registration. IEEE Transactions on Medical Imaging 21: 1421–1439.

- 30. Wu G, Kim M, Wang Q, Shen D (2012) Hierarchical Attribute-Guided Symmetric Diffeomorphic Registration for MR Brain Images. MICCAI 2012. Nice, France.

- 31. Dai Y, Shi F, Wang L, Wu G, Shen D (2012) iBEAT: A Toolbox for Infant Brain Magnetic Resonance Image Processing. Neuroinformatics.

- 32. Rorden C, Brett M (2000) Stereotaxic display of brain lesions. Behavioural Neurology 12: 191–200.

- 33. He B, Dai Y, Astolfi L, Babiloni F, Yuan H, et al. (2011) eConnectome: A MATLAB toolbox for mapping and imaging of brain functional connectivity. J Neurosci Methods 195: 261–269.

- 34. Sled JG, Zijdenbos AP, Evans AC (1998) A nonparametric method for automatic correction of intensity nonuniformity in MRI data. IEEE Trans Med Imaging 17: 87–97.

- 35. Balci SK, Golland P, Wells W (2007) Non-rigid groupwise registration using B-Spline deformation model. Int Conf Med Image Comput Comput Assist Interv. 105–121.

- 36. Holmes CJ, Hoge R, Collins L, Woods R, Toga AW, et al. (1998) Enhancement of MR images using registration for signal averaging. Journal of Computer Assisted Tomography 22: 324–333.

- 37. Jenkinson M, Smith S (2001) A global optimisation method for robust affine registration of brain images. Medical Image Analysis 5: 143–156.

- 38. Zeng X, Staib LH, Schultz RT, Duncan JS (1999) Segmentation and measurement of the cortex from 3D MR images using coupled surfaces propagation. IEEE Transactions on Medical Imaging 18: 100–111.

- 39. Shen DG, Davatzikos C (2004) Measuring temporal morphological changes robustly in brain MR images via 4-dimensional template warping. Neuroimage 21: 1508–1517.

- 40. Shi F, Fan Y, Tang SY, Gilmore JH, Lin WL, et al. (2010) Neonatal brain image segmentation in longitudinal MRI studies. Neuroimage 49: 391–400.

- 41. Yushkevich PA, Piven J, Hazlett HC, Smith RG, Ho S, et al. (2006) User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage 31: 1116–1128.

- 42. Holland D, Brewer JB, Hagler DJ, Fennema-Notestine C, et al. (2009) Subregional neuroanatomical change as a biomarker for Alzheimer’s disease. Proceedings of the National Academy of Sciences of the United States of America 106: 20954–20959.

- 43. Li G, Nie J, Wu G, Wang Y, Shen D (2012) Consistent reconstruction of cortical surfaces from longitudinal brain MR images. Neuroimage 59: 3805–3820.

- 44. Reuter M, Fischl B (2011) Avoiding asymmetry-induced bias in longitudinal image processing. Neuroimage 57: 19–21.

- 45. Kitayama N, Matsuda H, Ohnishi T, Kogure D, Asada T, et al. (2001) Measurements of both hippocampal blood flow and hippocampal gray matter volume in the same individuals with Alzheimer’s disease. Nuclear Medicine Communications 22: 473–477.

- 46. Chetelat G, Desgranges B, Landeau B, Mezenge F, Poline JB, et al. (2008) Direct voxel-based comparison between grey matter hypometabolism and atrophy in Alzheimer’s disease. Brain 131: 60–71.

- 47. Colliot O, Chetelat G, Chupin M, Desgranges B, Magnin B, et al. (2008) Discrimination between Alzheimer disease, mild cognitive impairment, and normal aging by using automated segmentation of the hippocampus. Radiology 248: 194–201.

- 48. Pantelis C, Velakoulis D, McGorry PD, Wood SJ, Suckling J, et al. (2003) Neuroanatomical abnormalities before and after onset of psychosis: a cross-sectional and longitudinal MRI comparison. Lancet 361: 281–288.