Abstract

In this paper we review our research on the effect and computational role of dynamical synapses in feed-forward and recurrent neural networks. Among other results, we report on the appearance of a new class of dynamical memories that result from the destabilization of learned memory attractors. This has important consequences for dynamic information processing, allowing the system to access sequentially the information stored in the memories under changing stimuli. Although the storage capacity of stable memories decreases, our study demonstrated the positive effect of synaptic facilitation in recovering the maximum storage capacity and in enlarging the capacity of the system for memory recall in noisy conditions. The new dynamical behavior may be associated with the voltage transitions between up and down states observed in cortical areas of the brain. We investigated the conditions under which the permanence times in the up state are power-law distributed, which is a signature of criticality, and concluded that the experimentally observed large variability of permanence times could be explained as the result of noisy dynamic synapses with large recovery times. Finally, we report how short-term synaptic processes can transmit weak signals throughout more than one frequency range in noisy neural networks, displaying a kind of stochastic multi-resonance. This effect is due to the competition between activity-dependent synaptic fluctuations (due to dynamic synapses) and the existence of a neuron firing threshold that adapts to the incoming mean synaptic input.

Keywords: short-term synaptic plasticity, emergence of dynamic memories, memory storage capacity, criticality in up–down cortical transitions, neural stochastic multiresonances

1. Introduction

Over the last decades, many experimental studies have reported that the transmission of information through synapses is strongly influenced by recent presynaptic activity, in such a way that the postsynaptic response can decrease (synaptic depression) or increase (synaptic facilitation) at short time scales under repeated stimulation (Abbott et al., 1997; Tsodyks and Markram, 1997). In cortical synapses it was found that, after induction of long-term potentiation (LTP), the temporal synaptic response was not uniformly increased. Instead, the amplitude of the initial postsynaptic potential was potentiated, whereas the steady-state synaptic response was unaffected by LTP (Markram and Tsodyks, 1996).

From a biophysical point of view, it is well accepted that short-term synaptic plasticity, including synaptic depression and facilitation, has its origin in the complex dynamics of release, transmission and recycling of neurotransmitter vesicles at the synaptic boutons (Pieribone et al., 1995). Synaptic depression occurs when presynaptic action potentials (APs) arrive at such a high frequency that the pool of neurotransmitter vesicles available for release near the cell membrane cannot recover efficiently between them (Zucker, 1989; Pieribone et al., 1995). This causes a decrease of the postsynaptic response to successive APs. Other possible mechanisms responsible for synaptic depression have been described, including feedback activation of presynaptic receptors and postsynaptic processes such as receptor desensitization (Zucker and Regehr, 2002). Synaptic facilitation, on the other hand, is a consequence of the residual cytosolic calcium that remains inside the synaptic boutons after the arrival of the first APs, which favors the release of more neurotransmitter vesicles for the next arriving AP (Bertram et al., 1996). This increase in released neurotransmitter potentiates the postsynaptic response, that is, it produces synaptic facilitation. Note, however, that strong facilitation also causes fast depletion of the available vesicles, so that ultimately it induces a strong depressing effect as well. Other possible mechanisms responsible for short-term synaptic plasticity include, for instance, glial-neuronal interactions (Zucker and Regehr, 2002).

In two seminal papers (Tsodyks and Markram, 1997; Abbott et al., 1997), a simple phenomenological model based on these biophysical principles was proposed that closely fits the evoked postsynaptic responses observed in cortical neurons. The model is characterized by three variables xj(t), yj(t), zj(t) that follow the dynamics

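$$
\begin{aligned}
\frac{dx_j(t)}{dt} &= \frac{z_j(t)}{\tau_{rec}} - U_j(t)\,x_j(t)\,\delta(t - t_j^{sp}),\\
\frac{dy_j(t)}{dt} &= -\frac{y_j(t)}{\tau_{in}} + U_j(t)\,x_j(t)\,\delta(t - t_j^{sp}),\\
\frac{dz_j(t)}{dt} &= \frac{y_j(t)}{\tau_{in}} - \frac{z_j(t)}{\tau_{rec}},
\end{aligned}
\tag{1}
$$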

where yj(t) is the fraction of neurotransmitter released into the synaptic cleft after the arrival of an AP at time tjsp, xj(t) is the fraction of neurotransmitter that has recovered near the cell membrane after the previous AP and is available for release, and zj(t) is the fraction of inactive neurotransmitter. The model assumes that the total amount of neurotransmitter resources is conserved in time, so that xj(t) + yj(t) + zj(t) = 1. The released neurotransmitter inactivates with time constant τin, and the inactive neurotransmitter recovers with time constant τrec. The synaptic current received by a postsynaptic neuron i from its neighbors is then defined as Ii(t) = ∑jAijyj(t), where Aij represents the maximum synaptic current evoked in the postsynaptic neuron i by an AP from presynaptic neuron j, which in cortical neurons is around 40 pA (Tsodyks et al., 1998).

For a constant release probability Uj, the model describes the basic mechanism of synaptic depression. The model is extended to account for synaptic facilitation by letting Uj(t) increase transiently after each AP, as a consequence of the residual cytosolic calcium that remains after the arrival of closely spaced APs, according to the dynamics

$$
\frac{dU_j(t)}{dt} = \frac{U - U_j(t)}{\tau_{fac}} + U\,[1 - U_j(t)]\,\delta(t - t_j^{sp}).
\tag{2}
$$
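A minimal event-driven sketch of Equations (1, 2) can make the depression and facilitation regimes concrete. It assumes τin ≪ τrec (released neurotransmitter inactivates instantly, the same simplification used in section 2), that Uj relaxes to U between spikes and jumps by U(1 − Uj) at each spike, and that release uses the value of Uj just before the spike; other conventions update Uj first. The function name `tm_responses`, the time unit (ms) and all parameter values except the amplitude of about 40 pA quoted above are illustrative choices, not values from the original studies.

```python
import math

def tm_responses(spike_times, A=40.0, U=0.5, tau_rec=800.0, tau_fac=0.0):
    """Peak synaptic current A * U_j * x_j evoked by each spike (times in ms).

    Assumes tau_in << tau_rec: released transmitter inactivates instantly, so
    only x_j (available resources) and U_j (release probability) are tracked.
    tau_fac = 0 means no facilitation (U_j stays equal to U).
    """
    x, u = 1.0, U
    amplitudes = []
    last_t = None
    for t in spike_times:
        if last_t is not None:
            dt = t - last_t
            # Between spikes, resources recover toward 1 with time constant tau_rec.
            x = 1.0 - (1.0 - x) * math.exp(-dt / tau_rec)
            # Between spikes, U_j relaxes back to its baseline U.
            if tau_fac > 0:
                u = U + (u - U) * math.exp(-dt / tau_fac)
        released = u * x                  # fraction of resources released by this AP
        amplitudes.append(A * released)
        x -= released                     # depletion (depression)
        if tau_fac > 0:
            u += U * (1.0 - u)            # residual-calcium increment (facilitation)
        last_t = t
    return amplitudes

# Depressing synapse: large U, slow recovery, 20 Hz train.
print([round(a, 2) for a in tm_responses([0, 50, 100, 150], U=0.5, tau_rec=800.0)])
# Facilitating synapse: small U, fast recovery, slowly decaying facilitation, 50 Hz train.
print([round(a, 2) for a in tm_responses([0, 20, 40, 60], U=0.1, tau_rec=100.0, tau_fac=1000.0)])
```

With large U and slow recovery the successive amplitudes shrink, while with small U and a long τfac they grow over the first spikes, which is the qualitative difference between depressing and facilitating synapses described above.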

Short-term synaptic plasticity has profound consequences for information transmission by individual neurons, as well as for network functioning and behavior. Previous work has demonstrated this in both feed-forward and recurrent networks. For instance, in feed-forward networks, activity-dependent synapses act as non-linear filters in supervised learning paradigms (Natschläger et al., 2001) and are able to extract statistically significant features from noisy and variable temporal patterns (Liaw and Berger, 1996).

For recurrent networks, several studies revealed that populations of excitatory neurons with depressing synapses exhibit complex regimes of activity (Senn et al., 1996; Tsodyks et al., 1998, 2000; Bressloff, 1999; Kistler and van Hemmen, 1999), such as short intervals of highly synchronous activity (population bursts) interspersed with long periods of asynchronous activity, as observed in neurons throughout the cortex (Tsodyks et al., 2000). Related to this, it was proposed (Senn et al., 1996, 1998) that synaptic depression may serve as a mechanism for rhythmic activity and central pattern generation. Also, recent studies on rate models have reported the importance of dynamic synapses in the emergence of persistent activity after removal of the stimulus, which is the basis of so-called working memory (Barak and Tsodyks, 2007); in particular, synaptic facilitation mediated by residual calcium has been proposed as the main mechanism responsible for working memory (Mongillo et al., 2008).

All these phenomena have stimulated much research to elucidate the effect and possible functional role of short-term synaptic plasticity. In this paper we review our own efforts in this field over the last decade. In particular, we have demonstrated both theoretically and numerically the appearance of different non-equilibrium phases in attractor networks as a consequence of the underlying noisy activity in the network and of the existence of synaptic plasticity (see section 2). The emergent phenomenology in such networks includes a high sensitivity of the network to changing stimuli and a new phase in which dynamical attractors, or dynamical memories, appear, with the possibility of regular and chaotic behavior and rapid “switching” between different memories (Pantic et al., 2002; Cortes et al., 2004, 2006; Torres et al., 2005, 2008; Marro et al., 2007). The origin of these new phases, and of the extraordinary sensitivity of the system to varying inputs even in the memory phase, is precisely the “fatigue” of synapses under heavy presynaptic activity which, competing with different sources of noise, destabilizes the regular stable memory attractors. One of the main consequences of this behavior is the strong influence of short-term synaptic plasticity on the storage capacity of such networks (Torres et al., 2002; Mejias and Torres, 2009), as we explain in section 3.

The switching behavior has a characteristic time scale during which a memory is retained. The distribution of this time scale depends in a complex way on the parameters of the dynamical synapse model and is the result of a phase transition. We have investigated the conditions for the appearance of power-law behavior in the probability distribution of the permanence times in the Up state, which is a signature of criticality (see section 4). This dynamical behavior has been associated (Holcman and Tsodyks, 2006) with the empirically observed transitions between states of high activity (Up states) and low activity (Down states) in the mammalian cortex (Steriade et al., 1993a,b).

The enhanced sensitivity of neural networks with dynamic synapses to external stimuli could provide a mechanism to detect relevant information in weak, noisy external signals. This can be viewed as a form of stochastic resonance (SR), the general phenomenon whereby noise enhances the detection of weak signals by a non-linear dynamical system. Recent experiments in auditory cortex have shown that synaptic depression improves the detection of weak signals through SR over a larger noise range (Yasuda et al., 2008). We have modeled these experimental findings in a feed-forward network model of spiking neurons (Mejias and Torres, 2011; Torres et al., 2011). We demonstrated theoretically and numerically that short-term synaptic plasticity, together with non-linear neuron excitability, induces a new type of SR in which there are multiple noise levels at which weak signals can be detected by the neuron. We called this novel phenomenon bimodal stochastic resonance or stochastic multiresonance (see section 5) and, very recently, we have shown that it occurs not only in feed-forward neural networks but also in recurrent attractor networks (Pinamonti et al., 2012).

2. Appearance of dynamical memories

In this section we review our work on the appearance of dynamical memories in attractor neural networks with dynamical synapses as originally reported in (Pantic et al., 2002; Torres et al., 2002, 2008; Mejias and Torres, 2009). For simplicity and in order to obtain straightforward mean-field derivations we have considered the case of a network of N binary neurons (Hopfield, 1982; Amit, 1989). However, we emphasize that the same qualitative behavior emerges in networks of integrate and fire (IF) neurons (Pantic et al., 2002).

Each neuron in the network, whose state is si = 1 or 0 depending on whether or not it fires an AP, receives at time t from its neighbor neurons a total synaptic current, or local field, given by

$$
h_i(t) = \sum_{j} \omega_{ij}(t)\, s_j(t),
\tag{3}
$$

where ωij(t) is the synaptic current received by the postsynaptic neuron i from the presynaptic neuron j when the latter fires an AP (sj(t) = 1). Neuron i tends to fire an AP when hi(t) exceeds a threshold value θi, with a probability that depends on the intrinsic noise present in the network. The noise is commonly modeled as a thermal bath at temperature T. We assume parallel (Little) dynamics, using the probabilistic rule

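$$
\mathrm{Prob}\{s_i(t+1) = \sigma \mid \mathbf{s}(t)\} = \frac{1}{2}\Big\{1 + (2\sigma - 1)\tanh\big[\beta\,(h_i(t) - \theta_i)\big]\Big\}, \qquad \beta \equiv 1/T,
\tag{4}
$$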

with σ = 1,0.
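The parallel update defined by Equations (3) and (4) can be sketched in a few lines of NumPy. This sketch assumes the Glauber-type form Prob{si(t+1) = 1} = ½[1 + tanh((hi(t) − θi)/T)], treats T = 0 as a deterministic threshold, and takes `w` to be the current matrix of synaptic currents ωij(t); the function name `little_step` and the toy weights are hypothetical.

```python
import numpy as np

def little_step(s, w, theta, T, rng):
    """One parallel (Little) update of all binary neurons s_i in {0, 1}.

    Assumes Prob{s_i(t+1) = 1} = 0.5 * (1 + tanh((h_i - theta_i) / T)).
    """
    h = w @ s                                   # local fields h_i(t) = sum_j w_ij s_j
    if T == 0:
        return (h > theta).astype(int)          # noiseless limit: deterministic threshold
    p_fire = 0.5 * (1.0 + np.tanh((h - theta) / T))
    return (rng.random(s.shape[0]) < p_fire).astype(int)

rng = np.random.default_rng(0)
s = np.array([1, 0, 1, 0])
w = 5.0 * np.eye(4)                             # toy weights: each neuron excites itself
print(little_step(s, w, theta=2.5, T=0.5, rng=rng))
```

All neurons are updated simultaneously from the same state s(t), which is what distinguishes Little dynamics from sequential (Glauber) updating.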

To account for short-term synaptic plasticity in the network we consider

$$
\omega_{ij}(t) = \bar{\omega}_{ij}\, D_j(t)\, F_j(t),
\tag{5}
$$

where Dj(t) and Fj(t) are dynamical variables representing the synaptic depression and synaptic facilitation mechanisms. The constants ω̄ij denote static maximal synaptic conductances that contain information about a number P of random patterns of neural activity, or memories, ξμ ≡ {ξμi = 1,0; i = 1,…, N, μ = 1, …, P}, previously learned and stored in the network. Such static memories can be achieved in actual neural systems by LTP or long-term depression of the synapses due to network stimulation with these memories. For concreteness, we assume here that these weights are the result of a Hebbian-like learning process that takes place on a time scale that is long compared to the dynamical time scales of the neurons and the dynamical synapses. The Hebbian learning takes the form

$$
\bar{\omega}_{ij} = \frac{1}{N a (1 - a)} \sum_{\mu=1}^{P} (\xi_i^\mu - a)(\xi_j^\mu - a),
\tag{6}
$$

also known as the covariance learning rule, with a = 〈ξμi〉 representing the mean level of activity in the patterns. It is well known that a recurrent neural network with the synapses of Equation (6) acts as an associative memory (Amit, 1989). That is, the stored patterns ξμ become local minima of the free energy and, within the basin of attraction of each memory, the neural dynamics (Equation 4) drives the network activity toward that memory. Thus, appropriate stimulation of (a subset of) neurons that are active in a stored pattern initiates a memory recall process in which the network converges to the memory state.
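As an illustration of associative recall with the covariance rule, the following sketch builds static weights of the form ω̄ij = Σμ (ξμi − a)(ξμj − a)/[N a(1 − a)] and runs noiseless parallel dynamics from a corrupted cue. It assumes static synapses, no self-couplings, a = 0.5 and θi = 0 (the threshold used in Figure 1); the helper names, network size, number of patterns and corruption level are hypothetical illustrative choices.

```python
import numpy as np

def covariance_weights(patterns, a):
    """Static weights from the covariance rule; patterns has shape (P, N), entries 0/1."""
    n = patterns.shape[1]
    c = patterns - a                        # xi_i^mu - a
    w = c.T @ c / (n * a * (1.0 - a))       # sum over mu of outer products
    np.fill_diagonal(w, 0.0)                # no self-coupling (an assumption)
    return w

def recall(cue, w, theta=0.0, steps=10):
    """Noiseless (T = 0) parallel dynamics started from a cue."""
    s = cue.copy()
    for _ in range(steps):
        s = (w @ s > theta).astype(int)
    return s

rng = np.random.default_rng(1)
N, P, a = 200, 3, 0.5
patterns = (rng.random((P, N)) < a).astype(int)
w = covariance_weights(patterns, a)
cue = patterns[0].copy()
flip = rng.choice(N, size=40, replace=False)   # corrupt 20% of the neurons
cue[flip] = 1 - cue[flip]
s = recall(cue, w)
print("fraction of neurons matching pattern 0:", np.mean(s == patterns[0]))
```

With so few patterns (α = P/N = 0.015) the corrupted cue lies well inside the basin of attraction of pattern 0, so the dynamics should restore the stored pattern almost perfectly.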

To model the dynamics of the synaptic depression Dj(t) and facilitation Fj(t), we simplify the phenomenological model of dynamic synapses described by Equations (1, 2), taking into account that in actual neural systems such as the cortex τin ≪ τrec. This implies that yj(t) = 0 most of the time, and it takes a non-zero value yj(tjsp) = xj(tjsp) Uj(tjsp) only at the exact time at which the AP arrives. Thus, the synaptic current evoked in the postsynaptic neuron i by a presynaptic neuron j every time the latter fires is approximately Iij(t) = Aij xj(tjsp) Uj(tjsp), which has the form given by Equation (5) with ω̄ij ≡ Aij, Dj(t) ≡ xj(t) and Fj(t) ≡ Uj(t). We set U = 1 without loss of generality, so that Dj(t) = Fj(t) = 1 for all j and t when τrec, τfac ≪ 1, which corresponds to the well-known limit of static synapses without depressing or facilitating mechanisms. In this limit one in fact recovers the classical Amari–Hopfield model of associative memory (Amari, 1972; Hopfield, 1982) when the neuron thresholds are chosen as

$$
\theta_i = \frac{1}{2} \sum_{j} \bar{\omega}_{ij}.
\tag{7}
$$

It is important to point out that, due to the discrete nature of the probabilistic neuron dynamics (Equation 4) together with the approximation τin ≪ τrec, only discrete versions of the dynamics for xj(t) and Uj(t) [see for instance (Tsodyks et al., 1998)] are needed here, namely

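$$
\begin{aligned}
x_j(t+1) &= x_j(t) + \frac{1 - x_j(t)}{\tau_{rec}} - U_j(t)\,x_j(t)\,s_j(t),\\
U_j(t+1) &= U_j(t) + \frac{U - U_j(t)}{\tau_{fac}} + U\,[1 - U_j(t)]\,s_j(t).
\end{aligned}
\tag{8}
$$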

Equations (4–8) completely define the dynamics of the network. Note that in the limit τrec, τfac → 0 the model reduces to the standard Amari–Hopfield model with static synapses.
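The discrete synaptic updates, combined with the dynamic weights ωij(t) = ω̄ij xj(t) Uj(t), can be sketched as follows. This assumes the updates xj(t+1) = xj(t) + [1 − xj(t)]/τrec − Uj(t)xj(t)sj(t) and Uj(t+1) = Uj(t) + [U − Uj(t)]/τfac + U[1 − Uj(t)]sj(t); the helper names are hypothetical and τfac = 0 is treated as the no-facilitation case Uj = U. The example checks that a neuron firing at every step depletes its synapses toward x* = 1/(1 + Uτrec), which follows from setting xj(t+1) = xj(t) with Uj = U and sj = 1.

```python
import numpy as np

def synapse_step(x, u, s, U=1.0, tau_rec=50.0, tau_fac=0.0):
    """One discrete step of depression x_j and facilitation U_j for all neurons j.

    x, u, s are arrays; tau_rec, tau_fac are in MCS. For 0 <= u <= 1,
    tau_rec >= 1 keeps x in [0, 1]. tau_fac = 0 means U_j is held at U.
    """
    x_new = x + (1.0 - x) / tau_rec - u * x * s            # recovery minus release
    if tau_fac > 0:
        u_new = u + (U - u) / tau_fac + U * (1.0 - u) * s  # relaxation plus increment
    else:
        u_new = np.full_like(u, U)
    return x_new, u_new

def local_field(w_bar, x, u, s):
    """h_i = sum_j w_bar_ij * x_j * U_j * s_j, i.e. dynamic weights D_j = x_j, F_j = U_j."""
    return w_bar @ (x * u * s)

# A neuron firing at every step depletes toward x* = 1 / (1 + U * tau_rec) = 1/51.
x, u = np.ones(1), np.ones(1)
for _ in range(200):
    x, u = synapse_step(x, u, np.ones(1), U=1.0, tau_rec=50.0)
print(x[0], 1.0 / 51.0)
```

A strongly depleted synapse (small xj) barely contributes to the local field, which is the “fatigue” that destabilizes the memory attractors.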

To numerically and analytically study the emergent behavior of this attractor neural network with dynamical synapses, it is useful to measure the degree of correlation between the current network state s ≡ {si; i = 1, …, N} and each one of the stored patterns ξμ by means of the overlap function

$$
m^\mu(\mathbf{s}) \equiv \frac{1}{N a (1 - a)} \sum_{i=1}^{N} (\xi_i^\mu - a)(s_i - a).
\tag{9}
$$
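A direct transcription of the overlap, assuming the normalized form mμ(s) = [N a(1 − a)]⁻¹ Σi (ξμi − a)(si − a), shows why pattern and anti-pattern configurations give overlaps of opposite sign; the switching in Figure 1 appears as sign flips of mμ. The function name is hypothetical.

```python
import numpy as np

def overlap(s, xi, a):
    """Overlap m^mu(s) between a 0/1 network state s and a 0/1 pattern xi."""
    s, xi = np.asarray(s, dtype=float), np.asarray(xi, dtype=float)
    return float(np.sum((xi - a) * (s - a)) / (len(xi) * a * (1.0 - a)))

xi = np.array([1, 0, 1, 0])
print(overlap(xi, xi, 0.5))      # → 1.0  (network in the pattern)
print(overlap(1 - xi, xi, 0.5))  # → -1.0 (network in the anti-pattern)
```

The overlap equals exactly ±1 only when a matches the actual fraction of active neurons in the pattern; for random patterns it is close to ±1.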

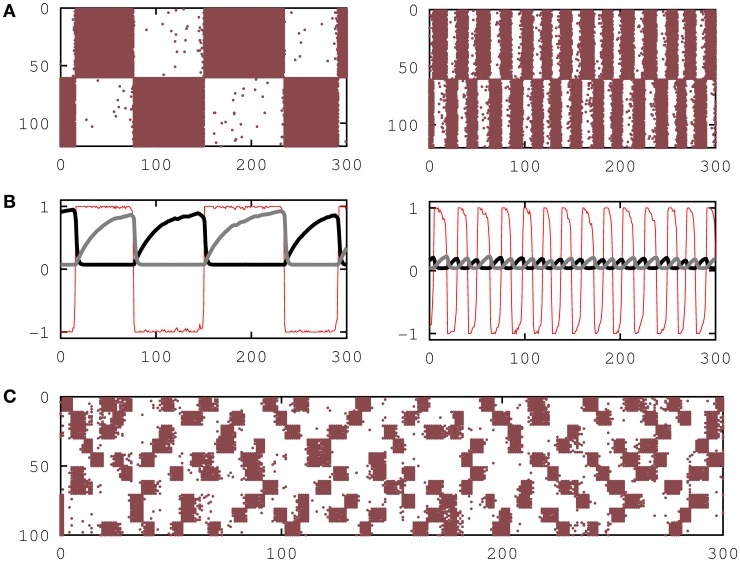

Monte Carlo simulations of the network storing a small number of random patterns (loading parameter α ≡ P/N → 0), each pattern having 50% active neurons (a = 0.5), with no facilitation (Uj(t) = 1) and an intermediate value of τrec, are shown in Figures 1A,B. They reveal a new phase in which dynamical memories appear, characterized by quasi-periodic switching of the network activity between pattern (ξμ) and anti-pattern (1 − ξμ) configurations. For lower values of τrec the network behaves as the attractor network with static synapses and shows the traditional ferromagnetic, or associative memory, phase at relatively low T, where the network activity reaches a steady state that is highly correlated with one of the stored patterns, and a paramagnetic, or no-memory, phase at high T, where the network activity reaches a highly fluctuating disordered steady state.

Figure 1.

Emergence of dynamic memories in attractor neural networks. (A) Raster plots showing the switching behavior of the network activity between an activity pattern and its anti-pattern for τrec = 26 and T = 0.025 (left) and for τrec = 50 and T = 0.025 (right). (B) The overlap function mμ(s) (thin red line), the mean recovery variable xμ+ of neurons active in the pattern (thick black line) and the mean recovery variable xμ− of neurons inactive in the pattern (thick gray line), plotted for the two cases depicted in (A). (C) Raster plot showing the emergence of dynamic memories when 10 activity patterns are stored in the synapses for τrec = 50. In all panels the firing threshold was set to θi = 0, and the network size was N = 120 in (A) and (B) and N = 100 in (C).

Figure 1C shows simulation results for a network with P = 10 patterns and a = 0.1, demonstrating that switching behavior is also obtained for a relatively large number of patterns and sparse network activity. Figure 2B shows that the switching behavior is not an artifact of the binary neuron dynamics, as it is also obtained in more realistic networks of spiking integrate-and-fire neurons.

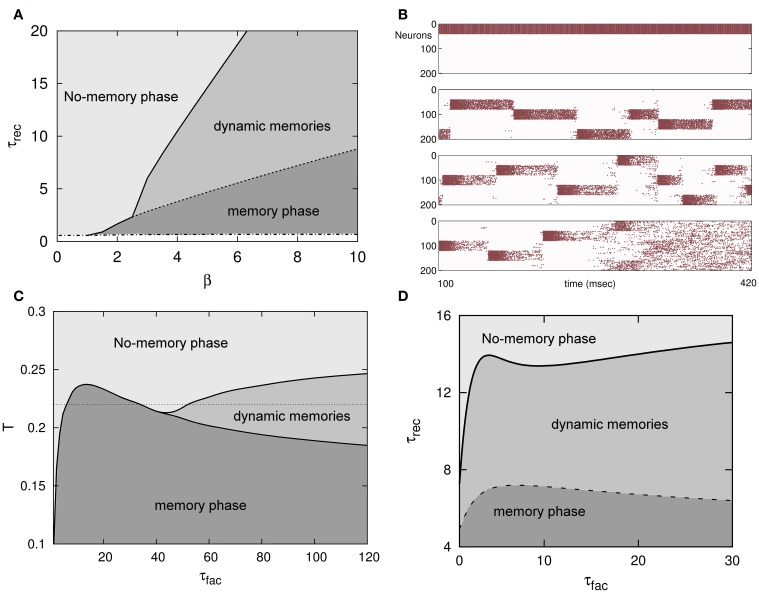

Figure 2.

(A) Phase diagram (τrec, β ≡ T−1) of an attractor binary neural network with depressing synapses for α = 0. A new phase in which dynamical memories appear, with the network activity switching between the different memory attractors, emerges between the traditional memory and no-memory phases that characterize the behavior of attractor neural networks with static synapses. (B) The emergent behavior depicted in (A) is robust when a more realistic attractor network of IF neurons and more stored patterns (5 in this simulation) are considered. From top to bottom, the behavior of the network activity for τrec = 0, 300, 800 and 3000 ms is depicted. For some level of noise, the network activity passes from the memory phase to the dynamical phase, and from this to the no-memory phase, when τrec is increased. (C) Phase diagram (T, τfac) for τrec = 3 and U = 0.1 of an attractor binary neural network with short-term depression and facilitation mechanisms in the synapses and α = 0. (D) Phase diagram (τrec, τfac) for T = 0.1 and U = 0.1 in the same system as in (C). Both (C) and (D) show the same memory, oscillatory and no-memory phases as in the case of depressing synapses. The transition lines between different phases, however, show a clear non-linear and non-monotonic dependence on the relevant parameters, a consequence of the non-trivial competition between depression and facilitation mechanisms. This is particularly striking in (C) where, for a given level of noise, namely T = 0.22 (horizontal dotted line), increasing the facilitation time constant τfac drives the network activity from a no-memory state to a memory state, from this to a no-memory state again, and finally to an oscillatory regime.

All time constants, such as τrec or τfac, are given in units of Monte Carlo steps (MCS), a temporal unit that in actual systems can be associated, for instance, with the duration of the refractory period and is therefore of the order of 5 ms.

In the limit N → ∞ (thermodynamic limit) and α → 0 (finite number of patterns), the emergent behavior of the model can be studied analytically within a standard mean-field approach (see Pantic et al., 2002; Torres et al., 2008 for details). The dynamics of the system is then described by a 6P-dimensional discrete map

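$$
\big\{m_\pm^\mu(t+1),\, x_\pm^\mu(t+1),\, u_\pm^\mu(t+1)\big\}_{\mu=1}^{P} = \mathcal{F}\Big[\big\{m_\pm^\mu(t),\, x_\pm^\mu(t),\, u_\pm^\mu(t)\big\}_{\mu=1}^{P}\Big],
\tag{10}
$$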

where ℱ is a 6P-dimensional non-linear function of the order parameters

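$$
\big\{m_+^\mu(t),\, m_-^\mu(t),\, x_+^\mu(t),\, x_-^\mu(t),\, u_+^\mu(t),\, u_-^\mu(t)\big\}, \qquad \mu = 1, \dots, P,
\tag{11}
$$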

that are averages of the microscopic dynamical variables over the sites that are active and quiescent, respectively, in a given pattern μ, that is

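$$
c_+^\mu(t) \equiv \frac{1}{N a} \sum_{i:\, \xi_i^\mu = 1} c_i(t), \qquad
c_-^\mu(t) \equiv \frac{1}{N (1 - a)} \sum_{i:\, \xi_i^\mu = 0} c_i(t),
\tag{12}
$$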

with ci(t) being mi(t), xi(t), and Ui(t), respectively.
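The sublattice averages can be computed from a network state as sketched below. The function name and the (P, 6) output layout are illustrative choices; the sketch uses the instantaneous activity si in place of the mean activity mi, divides by the actual number of active and quiescent neurons rather than by Na and N(1 − a), and assumes every pattern has both active and quiescent neurons.

```python
import numpy as np

def order_parameters(s, x, u, patterns):
    """Sublattice averages for each stored pattern, as an array of shape (P, 6).

    Columns are (m+, m-, x+, x-, u+, u-): '+' averages over neurons active in the
    pattern (xi_i = 1) and '-' over quiescent ones (xi_i = 0).
    """
    rows = []
    for xi in patterns:
        active = xi == 1
        row = []
        for c in (s, x, u):                      # activity, depression, facilitation
            row += [c[active].mean(), c[~active].mean()]
        rows.append(row)
    return np.array(rows)

patterns = np.array([[1, 0, 1, 0]])
s = np.array([1, 0, 1, 1])
x = np.array([0.2, 1.0, 0.4, 0.8])
u = np.ones(4)
print(order_parameters(s, x, u, patterns))       # one row of six numbers per pattern
```

Flattening the result gives the 6P-dimensional vector on which the mean-field map acts.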

Local stability analysis of the fixed-point solutions of the dynamics (Equation 10) shows that, as in the standard Amari–Hopfield model and in agreement with the Monte Carlo simulations described above, the stored memories ξμ are stable attractors in some regions of the space of relevant parameters, such as T, U, τrec, and τfac. When these parameters are varied, however, there are critical values at which the memories destabilize and an oscillatory regime, in which the network visits different memories, can emerge. These critical values are depicted in Figures 2A,C,D as transition lines between phases or dynamical behaviors of the system. For instance, for purely depressing synapses (τfac = 0, Uj(t) = 1), there is a critical monotonic line τ*rec(T−1), as in a second-order phase transition, separating the no-memory phase and the oscillatory phase (solid line in Figure 2A), where oscillations start to appear with small amplitude, as in a supercritical Hopf bifurcation. There is also a transition line τ**rec(T−1), also monotonic, between the oscillatory phase and the memory phase, which occurs sharply, as in a first-order phase transition (dashed line in Figure 2A). When facilitation is included the picture is more complex, although similar critical and sharp transition lines appear separating the same phases. Now, however, the lines separating different phases are non-monotonic and highly non-linear, which reflects the competition between the a priori opposing mechanisms of depression and facilitation, as depicted in Figures 2C,D. Among other features, synaptic depression induces fatigue at the synapses, which destabilizes the attractors, whereas synaptic facilitation allows fast access to the memory attractors, where the network then stays for a shorter period of time (Torres et al., 2008).
As in Figure 1, in all phase diagrams of Figure 2, τrec and τfac are given in MCS units (see above), one MCS corresponding roughly to the typical duration of the refractory period in actual neurons (~5 ms).

The attractor behavior of the recurrent neural network has the important property of completing a memory on the basis of partial or noisy stimulus information. In this section we have seen that memories that are stable with static synapses become meta-stable with dynamical synapses, inducing a switching behavior among memory patterns in the presence of noise. In this manner, dynamic synapses provide the associative memory with a natural mechanism to dissociate from a memory in order to associate with a new memory pattern. In contrast, with static synapses the network would stay in the stable memory state forever, preventing the recall of new memories. Thus, dynamic synapses change stable memories into meta-stable memories for certain ranges of the parameters.

3. Storage capacity

It is important to analyze how short-term synaptic plasticity affects the maximum number of patterns of neural activity that the system is able to store and efficiently recall, the so-called maximum storage capacity. In a recent paper (Mejias and Torres, 2009) we addressed this issue using a standard mean-field approach for the model described by Equations (3–8) storing P = αN random activity patterns, with α > 0, N → ∞, a = 1/2 and in the absence of noise (T = 0). For very low temperature (T ≪ 1), redefining the overlaps as mμ ≡ (1/N) Σi (2ξμi − 1)(2si − 1) and assuming steady-state conditions in which only one pattern (the condensed pattern, μ = 1) has an overlap m1 = M of order one while the remaining patterns have overlaps mμ = O(1/√N), it is straightforward (Hertz et al., 1991) to see that the steady state of the system is described by the set of mean-field equations

| (13) |

where γ ≡ Uτrec; the remaining quantities in Equation (13) are the spin-glass order parameter, the pattern interference parameter, and the interference (crosstalk) noise produced by the non-condensed patterns, which in the limit N → ∞ becomes a Gaussian variable proportional to z, where z is a random normally distributed variable N[0,1] (see details in Mejias and Torres, 2009). The site average appearing in Equation (13) then becomes an average over z. Using standard techniques in the limit T = 0 (Hertz et al., 1991), the resulting set of three mean-field equations reduces to a single mean-field equation that gives the maximum number of patterns the system is able to store and retrieve, namely (see mathematical details in Mejias and Torres, 2009)

| (14) |

where y is a rescaled variable defined in terms of M, the overlap of the current network state with the pattern that is being retrieved. Equation (14) has a trivial solution y = 0 (M = 0). Non-zero solutions (with non-zero overlap M) exist only for α below a critical value αc, which defines the maximum storage capacity of the system.

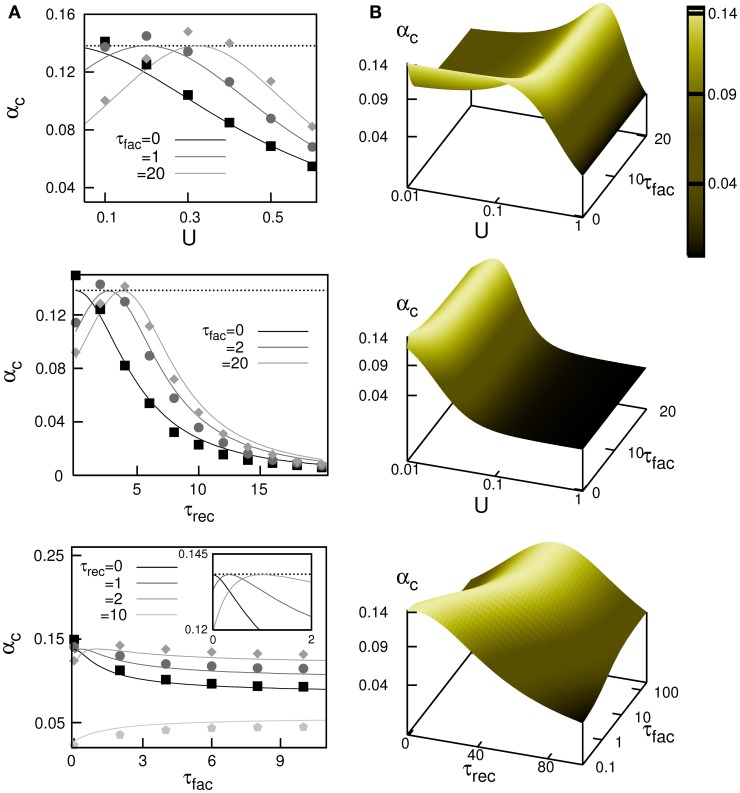

Monte Carlo simulations of a network with N = 3000 neurons confirm this mean-field result, as depicted in Figure 3A. The figure shows the behavior of αc obtained from Equation (14) (solid lines) when some relevant parameters of the synapse dynamics are varied, compared with the maximum storage capacity obtained from the Monte Carlo simulations (symbols). The most remarkable feature is that, in the absence of facilitation, the storage capacity decreases as the level of depression increases (that is, for a large release probability U or a long recovery time τrec); see the black curves in the top and middle panels of Figure 3A. This decrease is caused by the loss of stability of the memory fixed points of the network due to depression. Facilitation (dark and light gray curves) allows the network to recover the maximal storage capacity of static synapses, the well-known value αc ≈ 0.14 (dotted horizontal line), in the presence of some degree of synaptic depression. In general, the competition between synaptic depression and facilitation induces a complex non-linear and non-monotonic behavior of αc as a function of the synaptic parameters, as shown in the different panels of Figure 3B. Large values of αc generally appear for moderate values of U and τrec and large values of τfac. These values qualitatively agree with those described for facilitating synapses in some cortical areas, where U is lower than in depressing synapses and τrec is several times shorter than τfac (Markram et al., 1998). Note that neither facilitation nor depression ever increases the storage capacity of the network above the static value αc ≈ 0.14.

Figure 3.

Maximum storage capacity obtained in attractor neural networks with dynamic synapses with both depressing and facilitating mechanisms. (A) Behavior of αc as a function of U, τrec and τfac. The solid lines correspond to the theoretical prediction from the mean-field Equation (14), and symbols are obtained from Monte Carlo simulations. From top to bottom, the panels show αc(U) for τrec = 2 and different values of τfac, αc(τrec) for U = 0.2 and different values of τfac, and αc(τfac) for U = 0.2 and different values of τrec. The horizontal dotted lines correspond to the static-synapse limit αc ≈ 0.138. (B) Mean-field results from Equation (14) for the dependence of αc on different combinations of relevant parameters. From top to bottom, the panels show the surfaces αc(U, τfac) for τrec = 2, αc(U, τfac) for τrec = 50 and αc(τrec, τfac) for U = 0.02. In all panels, τrec and τfac are given in MCS units, each of which can be associated with about 5 ms if one assumes that one MCS corresponds to the duration of the refractory period in actual neurons.
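The dynamic-synapse terms of Equation (14) are not restated here, but its static-synapse limit can be checked numerically. The sketch below assumes the classical zero-temperature Amari–Hopfield result (Hertz et al., 1991), written with y ≡ M/√(2αr) (r being the pattern interference parameter, a standard choice that need not coincide with the y of Equation (14)): retrieval requires y√(2α) = erf(y) − (2/√π) y e^(−y²) with M = erf(y), and αc is the largest α for which a non-zero solution exists. It reproduces only the αc ≈ 0.138 baseline of Figure 3, not the effect of depression or facilitation; the function names are hypothetical.

```python
import math

def alpha_of_y(y):
    """Load alpha compatible with the T = 0 retrieval condition for a given y > 0.

    Static Amari-Hopfield network, y = M / sqrt(2 * alpha * r), M = erf(y):
    sqrt(2 * alpha) = erf(y) / y - (2 / sqrt(pi)) * exp(-y**2).
    """
    g = math.erf(y) / y - (2.0 / math.sqrt(math.pi)) * math.exp(-y * y)
    return 0.5 * g * g if g > 0 else 0.0

def static_alpha_c(y_min=0.5, y_max=3.0, steps=25000):
    """Largest alpha admitting a non-zero retrieval solution, via a grid scan in y."""
    best_alpha, best_y = 0.0, y_min
    for k in range(steps + 1):
        y = y_min + (y_max - y_min) * k / steps
        alpha = alpha_of_y(y)
        if alpha > best_alpha:
            best_alpha, best_y = alpha, y
    return best_alpha, best_y

alpha_c, y_star = static_alpha_c()
print(f"alpha_c = {alpha_c:.4f}, retrieval overlap M = {math.erf(y_star):.3f}")
```

A grid scan is used instead of a root finder because αc is defined by the maximum of α(y), where the retrieval solution disappears; just below αc the retrieved overlap is still close to 1 (M ≈ 0.97).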

4. Criticality in up–down transitions

In a recent paper (Holcman and Tsodyks, 2006), the dynamic memories described in section 2, which emerge from short-term plasticity, have been related to the voltage transitions observed in cortex between a high-activity state (the Up state) and a low-activity state (the Down state). These transitions have been observed in simultaneous single-neuron recordings as well as in local field measurements.

Using a simple but biologically plausible neuron and synapse model, similar to the models described in sections 1 and 2, we have theoretically studied the conditions for the emergence of this intriguing behavior, as well as its temporal features (Mejias et al., 2010). The model is a simple stochastic bistable rate model that mimics the average dynamics of a population of interconnected excitatory neurons. The neural activity is summarized by a single activity variable ν(t), whose dynamics follows the stochastic mean-field equation

| (15) |

where τν is the time constant of the neuron dynamics, νm is the maximum synaptic input to the neuron population, J is the (static) synaptic strength and θ is the neuron threshold. The gain function in Equation (15) is a sigmoidal function that models the excitability of neurons in the population.

The synaptic input from other neurons is modulated by a short-term dynamic synaptic process x(t) which satisfies the stochastic mean field equation

| (16) |

The parameters τr, U and D are, respectively, the recovery time constant of the stochastic short-term synaptic plasticity mechanism, a parameter related to the reliability of synaptic transmission (the average release probability in the population), and the amplitude of the synaptic noise. The terms on the right-hand side of Equation (16) are interpreted as follows: the first term accounts for the slow recovery of neurotransmitter resources, the second represents the decrease of available neurotransmitter due to the level of activity in the population, and the third is a noise term that accounts for all possible sources of noise affecting the transmission of information at the synapses of the population that survive at the mesoscopic level.

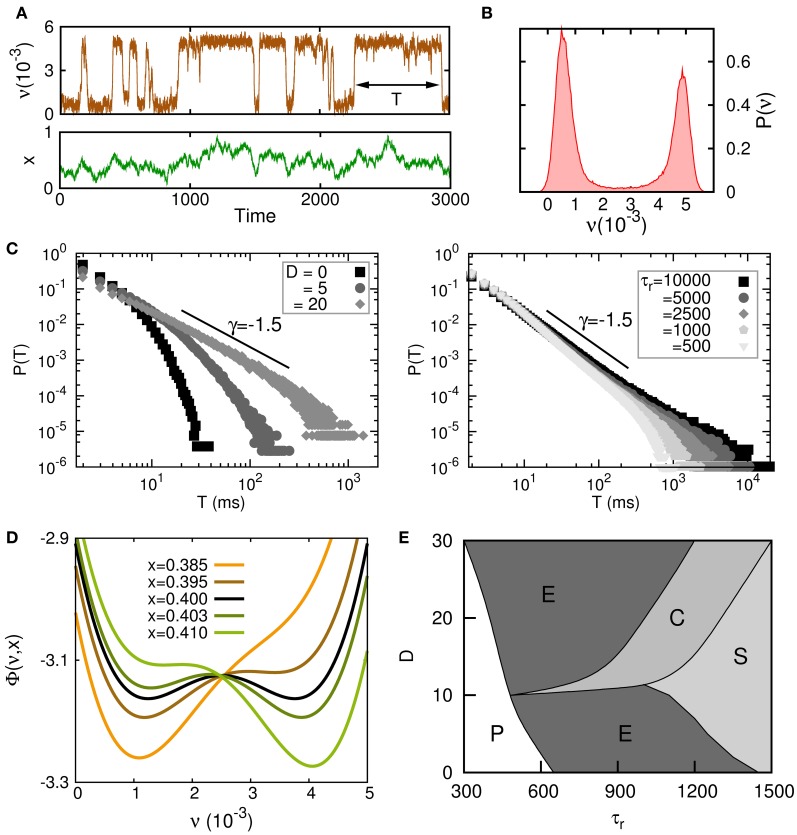

A complete analysis of this model, both theoretical and by numerical simulations, shows the appearance of complex transitions between high (up) and low (down) neural activity states driven by the noisy synaptic variable x(t), with permanence times in the up state distributed according to a power law for some range of the synaptic parameters. The main results of this study are summarized in Figure 4. Figure 4A depicts a typical time series of the mean neural activity ν(t) in the regime in which irregular up–down transitions occur. In Figure 4B, the histogram of ν(t) for this time series shows a clear bimodal shape, corresponding to the only two states available to ν(t). Figure 4C shows how the parameters τr and D, which control the stochastic dynamics of x(t), are also relevant for the appearance of power-law distributions P(T) of the permanence time T in the up or down state. As outlined in (Mejias et al., 2010), the dynamics can be approximately described within an adiabatic approximation, in which the neuron dynamics is subject to an effective potential Φ. Figure 4D shows how Φ changes for different values of the mean synaptic depression x. For relatively small x (orange and brown lines), all synapses in the population are strongly depressed and the population has a low level of activity, that is, the global minimum of the potential function is the low-activity state (the down state). On the other hand, when synapses are weakly depressed and x takes relatively large values (dark and light green lines), the neuron activity level is high and the potential function has its global minimum at a high-activity state (the up state). For intermediate values of x (black line) the potential becomes bistable. Figure 4E shows the complete phase diagram of the system and illustrates the regions in the parameter space (D, τr) where different behaviors emerge.
In phase (P), no transitions between the high-activity and low-activity states occur. In phase (E), the permanence times in the up and down states are exponentially distributed. Phase (C) is characterized by the emergence of power-law distributions P(T) and is therefore the most intriguing phase, since it could be associated with a critical state. Finally, phase (S) is characterized by a highly fluctuating behavior of both ν(t) and x(t). In this phase ν(t) behaves as a slave variable of x(t) and therefore inherits the features of the dynamics (Equation 16), which for small U has some similarities with colored noise. Indeed, for U = 0 the change of variable z(t) = x(t) − 1 transforms the dynamics (Equation 16) into that of an Ornstein–Uhlenbeck (OU) process (van Kampen, 1990).

Figure 4.

Criticality in up–down transitions. (A) Typical time series for the neuron population rate variable ν(t) and the mean depression variable x(t) in the neuron population when irregular up–down transitions emerge. Parameter values were J = 1.2 V, τr = 1000 τν, U = 0.6, D = 0, δ = 0.3, and νm = 5 · 10⁻³. (B) Histogram of the same time series for ν(t), which presents bimodal features corresponding to two different levels of activity. (C) Transition from exponential to power-law behavior of the probability distribution P(T) of the permanence time in the up or down state when the parameters D (left panel) and τr (right panel) are varied. Model parameters were the same as in panel (A), except that J = 1.1 V in the left panel and U = 0.04 and D/τr = 0.02/τν in the right panel. (D) A variation of x(t) changes the shape of the potential function Φ (which drives the dynamics of the rate variable ν(t)), causing transitions between the up and down states. Parameters were the same as in panel (A), except that J = 1.1 V. (E) Complete phase diagram (D, τr), for the same set of parameters as in panel (D), where different phases correspond to different dynamics of ν(t) and x(t) (see main text for an explanation).

From these studies, we can conclude that the experimentally observed large fluctuations in up and down permanence times in the cortex can be explained as the result of sufficiently noisy dynamical synapses (large D) with sufficiently long recovery times (large τr). Static synapses (τr = 0) or dynamical synapses in the absence of noise (D = 0) cannot account for this behavior, and only exponential distributions for P(T) emerge in these cases.
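Permanence-time distributions such as P(T) in Figure 4C are built from the durations of consecutive up and down episodes. A minimal Python sketch of that bookkeeping is shown below. It assumes that ν(t) is sampled at a fixed step dt and that up and down states are separated by a single threshold placed between the two modes of the bimodal histogram (Figure 4B). The threshold choice and the helper names `permanence_times` and `survival` are illustrative assumptions, not the procedure of Mejias et al. (2010).

```python
from bisect import bisect_left


def permanence_times(nu, dt, threshold):
    """Return (up_times, down_times) from a sampled population rate nu(t).

    Samples with nu > threshold are labelled 'up', all others 'down'.
    The first and last episodes are discarded because the recording window
    truncates them, so their true durations are unknown.
    """
    runs = []  # each entry: [is_up, number_of_samples]
    for value in nu:
        is_up = value > threshold
        if runs and runs[-1][0] == is_up:
            runs[-1][1] += 1
        else:
            runs.append([is_up, 1])
    complete = runs[1:-1]
    up_times = [count * dt for is_up, count in complete if is_up]
    down_times = [count * dt for is_up, count in complete if not is_up]
    return up_times, down_times


def survival(times):
    """Empirical survival function P(T' >= T) at each distinct observed T."""
    ts = sorted(times)
    n = len(ts)
    return [(t, (n - bisect_left(ts, t)) / n) for t in sorted(set(ts))]


nu = [0.1, 0.1, 0.9, 0.9, 0.9, 0.1, 0.9, 0.9, 0.1, 0.1]
up, down = permanence_times(nu, dt=0.5, threshold=0.5)
print(up, down)  # [1.5, 1.0] [0.5]
```

On log–log axes a power-law tail of the survival function appears as a straight line, whereas an exponential distribution appears straight on semi-logarithmic axes; this is only a quick visual check, not a substitute for a proper fit.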

5. Stochastic multiresonance

In section 2 we mentioned that short-term synaptic plasticity induces dynamic memories as a consequence of the destabilization of memory attractors due to synaptic fatigue. Synaptic fatigue, in turn, results from strong depletion of neurotransmitter vesicles caused by high-frequency presynaptic activity and long neurotransmitter recovery times. We also concluded that this destabilization makes the system highly sensitive to external stimuli, even when the stimulus is very weak and noise is present. The noise can originate in the neural dynamics as well as in synaptic transmission. It is the combination of non-linear dynamics and noise that causes the enhanced sensitivity to external stimuli. This general phenomenon is the so-called stochastic resonance (SR) (Benzi et al., 1981; Longtin et al., 1991).

In a set of recent papers we have studied the emergence of SR in feed-forward neural networks with dynamic synapses (Mejias and Torres, 2011; Torres et al., 2011). We considered a postsynaptic neuron that receives signals from a population of N presynaptic neurons through dynamic synapses modeled by Equations (1, 2). Each presynaptic neuron fires a Poisson-distributed train of APs with a given frequency fn. The postsynaptic neuron also receives a weak signal S(t), which we take to be sinusoidal. We further assume a stationary regime, in which the dynamic synapses have reached their asymptotic values u∞ and x∞. If all presynaptic neurons fire independently, the total synaptic current is a noisy quantity with mean ĪN and variance σ²N given by

| (17) |

where Ip = A u∞x∞ and A is the synaptic strength. To explore the possibility of SR, we vary the firing frequency fn of the presynaptic population. We choose this parameter because varying fn changes the variance σ²N of the synaptic current, and fn is also relatively easy to control in an experiment.
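To get a concrete feel for how Ip depends on fn, the Python sketch below evaluates u∞ and x∞ under two assumptions of our own: that Equations (1, 2) are a Tsodyks–Markram-type model with recovery time τrec and facilitation time τfac, and that the stationary values follow the usual rate-based (mean-field) approximation u∞ = U(1 + fn τfac)/(1 + U fn τfac) and x∞ = 1/(1 + u∞ fn τrec). The function names are hypothetical.

```python
def stationary_synapse(fn, U, tau_rec, tau_fac):
    """Rate-based steady state (u_inf, x_inf) of a depressing/facilitating synapse.

    fn is the presynaptic Poisson rate (Hz); tau_rec and tau_fac are in seconds.
    With tau_fac = 0 the release probability stays at U (pure depression).
    """
    u_inf = U * (1.0 + fn * tau_fac) / (1.0 + U * fn * tau_fac)
    x_inf = 1.0 / (1.0 + u_inf * fn * tau_rec)
    return u_inf, x_inf


def mean_psc_amplitude(A, fn, U, tau_rec, tau_fac):
    """Ip = A * u_inf * x_inf, the quantity defined after Equation (17)."""
    u_inf, x_inf = stationary_synapse(fn, U, tau_rec, tau_fac)
    return A * u_inf * x_inf


# Pure depression: Ip shrinks as the presynaptic rate grows.
for fn in (1.0, 10.0, 50.0, 100.0):
    print(fn, mean_psc_amplitude(A=1.0, fn=fn, U=0.5, tau_rec=0.5, tau_fac=0.0))
```

For pure depression (τfac = 0), the rate-weighted amplitude fn Ip = A U fn/(1 + U fn τrec) saturates at A/τrec, which is one way to see why dynamic synapses change the fn dependence of the summed input compared with static synapses.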

To quantify the amount of signal present in the output rate, we use the standard input–output cross-correlation, or power norm (Collins et al., 1995), computed over a time interval Δt and defined as:

| (18) |

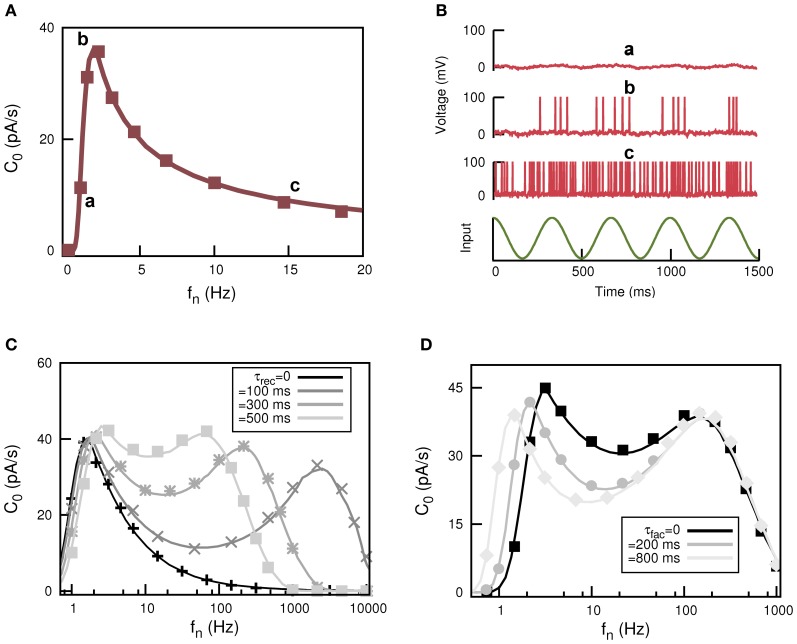

where ν(t) is the firing rate of the postsynaptic neuron. The behavior of C0 as a function of fn for static synapses is depicted in Figure 5A, which shows a clear resonance peak at a non-zero input frequency fn. The output of the postsynaptic neuron at the points labeled “a,” “b,” and “c” is illustrated in Figure 5B and compared with the weak input signal, showing how stochastic resonance emerges in this system. At low presynaptic firing frequency (case “a”), the generated current is so small that the postsynaptic neuron shows only sub-threshold behavior, weakly correlated with S(t). For very large fn (case “c”), both ĪN and σ²N are large and the postsynaptic neuron fires all the time, so it cannot detect the temporal features of S(t). At an optimal intermediate value of fn, however, the postsynaptic neuron fires strongly correlated with S(t); in fact, it fires several APs each time S(t) reaches a maximum (case “b”).
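The three regimes a, b, and c can be reproduced qualitatively with a deliberately crude toy model, sketched below in Python. It replaces the spiking postsynaptic neuron by an instantaneous firing probability: the summed input is treated as a Gaussian current whose mean a·fn and variance b·fn both grow linearly with fn (as expected for independent Poisson inputs through static synapses), and the neuron fires when that current plus S(t) exceeds a fixed threshold θ. C0 is computed as the mean-subtracted zero-lag cross-covariance of S(t) and the firing probability, in the spirit of the power norm of Collins et al. (1995); the exact normalization of Equation (18) may differ. The values of a, b, θ, and the signal amplitude ε, and all function names, are hypothetical.

```python
import math


def firing_prob(z):
    """Standard normal CDF: chance that a Gaussian current exceeds the threshold."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def power_norm(signal, response):
    """Zero-lag input-output cross-covariance over the sampled window."""
    n = len(signal)
    ms = sum(signal) / n
    mr = sum(response) / n
    return sum((s - ms) * (r - mr) for s, r in zip(signal, response)) / n


def c0_static(fn, a=0.1, b=0.01, theta=1.0, eps=0.2, samples=200):
    """Toy C0 with static synapses: current mean a*fn, variance b*fn, fixed threshold."""
    mu, sigma = a * fn, math.sqrt(b * fn)
    signal = [eps * math.sin(2.0 * math.pi * k / samples) for k in range(samples)]
    rate = [firing_prob((mu + s - theta) / sigma) for s in signal]
    return power_norm(signal, rate)


freqs = [10 ** (k / 20) for k in range(-20, 61)]  # 0.1 Hz ... 1000 Hz
curve = [c0_static(f) for f in freqs]
best = max(range(len(freqs)), key=curve.__getitem__)
print(f"resonance near fn = {freqs[best]:.1f} Hz")
```

In this toy, low fn leaves the threshold out of reach (case a), high fn saturates the response (case c), and C0 peaks in between (case b), close to the frequency at which the mean current reaches θ.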

Figure 5.

Appearance of stochastic multiresonances in feed-forward neural networks of spiking neurons with dynamic synapses. (A) Behavior of C0 (defined in Equation (18)) as a function of fn for static synapses, showing the phenomenon of stochastic resonance. (B) Temporal behavior of the response of the postsynaptic neuron at each labeled position of the resonance curve in panel (A). (C) Resonance curve for C0 when dynamic synapses are included. The most remarkable feature is the appearance of a two-peak resonance in the frequency domain, with the position of the high-frequency peak controlled by the value of τrec. (D) Another feature of the two-peak resonance curve for C0: the position of the low-frequency peak is controlled by τfac.

This behavior changes dramatically when dynamic synapses are considered, as depicted in Figures 5C,D. For dynamic synapses there are two frequencies at which resonance occurs; that is, short-term synaptic plasticity induces stochastic multi-resonances (SMR). Interestingly, the positions of the peaks are controlled by the parameters that govern the synapse dynamics. For instance, Figure 5C shows that, for a fixed level of facilitation and increasing depression (increasing τrec), the second resonance peak moves toward lower values of fn while the position of the first resonance peak remains unchanged. Conversely, for a given level of depression, increasing the facilitation time constant τfac moves the first resonance peak while the position of the second one is unaltered (see Figure 5D). This indicates that, in real neural systems, synapses with different levels of depression and facilitation could control signal processing at different frequencies.

The appearance of SMR in neural media with dynamic synapses is quite robust: SMR also appears when the postsynaptic neuron is modeled with different spiking mechanisms, such as the FitzHugh–Nagumo (FHN) model or the integrate-and-fire (IF) model with adaptive threshold dynamics (Mejias and Torres, 2011). SMR also appears with more realistic stochastic dynamic synapses and with more realistic weak signals, such as a train of small-amplitude, short-duration inputs distributed in time according to a rate-modulated Poisson process (Mejias and Torres, 2011).

The physical mechanism behind SMR is the non-monotonic dependence of the synaptic current fluctuations on fn, caused by the dynamic synapses, together with a firing threshold in the postsynaptic neuron that adapts to the incoming synaptic current. Because of this adaptation, the voltage distance between the mean postsynaptic sub-threshold voltage and the firing threshold remains constant, or decreases very slowly, as the presynaptic frequency increases. Since the current fluctuations first grow and then shrink with fn, there are two values of fn at which they are just sufficient to induce firing in the postsynaptic neuron [see Mejias and Torres (2011) for more details].
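This mechanism can be isolated in a small numerical toy, sketched below in Python under explicit assumptions of our own. The adaptive threshold is idealized as keeping a fixed gap d between the mean current and the threshold; synapses are purely depressing (u = U, no facilitation); and the current standard deviation is taken as σ(fn) = √(K fn) x∞ with x∞ = 1/(1 + U fn τrec), a shot-noise-style scaling chosen for illustration. The firing probability is Gaussian, and C0 is a zero-lag cross-covariance. All names and parameter values are hypothetical. Because σ(fn) rises and then falls, it crosses the noise level that best transmits S(t) twice, producing two C0 peaks; with τrec = 0 (static synapses) σ grows monotonically and only one peak remains.

```python
import math


def firing_prob(z):
    """Standard normal CDF."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def power_norm(signal, response):
    """Zero-lag input-output cross-covariance."""
    n = len(signal)
    ms, mr = sum(signal) / n, sum(response) / n
    return sum((s - ms) * (r - mr) for s, r in zip(signal, response)) / n


def current_sd(fn, tau_rec, K=1.0, U=0.5):
    """Toy current SD: sqrt(K*fn) times the depression factor 1/(1 + U*fn*tau_rec)."""
    return math.sqrt(K * fn) / (1.0 + U * fn * tau_rec)


def c0_adaptive(fn, tau_rec, d=1.0, eps=0.2, samples=200):
    """Adaptive threshold: the gap d between mean current and threshold stays fixed."""
    sigma = current_sd(fn, tau_rec)
    signal = [eps * math.sin(2.0 * math.pi * k / samples) for k in range(samples)]
    rate = [firing_prob((s - d) / sigma) for s in signal]
    return power_norm(signal, rate)


def peak_freqs(freqs, values):
    """Frequencies at strict local maxima of a sampled curve."""
    return [freqs[k] for k in range(1, len(values) - 1)
            if values[k] > values[k - 1] and values[k] > values[k + 1]]


freqs = [10 ** (k / 40) for k in range(-40, 161)]  # 0.1 Hz ... 10 kHz
for tau_rec in (0.0, 0.1, 0.2):  # tau_rec = 0.0 means static synapses
    curve = [c0_adaptive(f, tau_rec) for f in freqs]
    print(tau_rec, [round(f, 1) for f in peak_freqs(freqs, curve)])
```

In this toy, increasing τrec moves the high-frequency peak to lower fn while the low-frequency peak moves much less, qualitatively like the τrec dependence in Figure 5C; facilitation, which controls the low-frequency peak in Figure 5D, is not modeled here.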

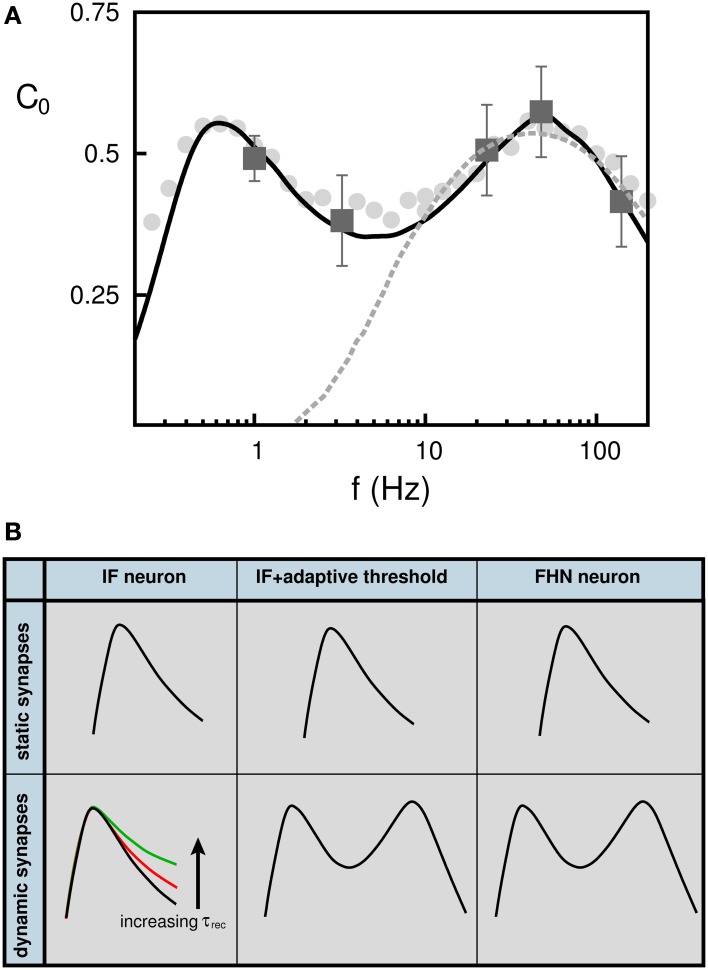

In light of these findings, we have reinterpreted recent SR data from psychophysical experiments on the human blink reflex (Yasuda et al., 2008). In these experiments, the neurons responsible for the blink reflex receive inputs from neurons in the auditory cortex, which are assumed to be uncorrelated owing to an external source of white noise. The subject also received a weak signal in the form of small periodic air puffs to the eye. The authors measured the correlation between the air-puff signal and the blink reflex; their results are plotted in Figure 6A (dark gray square symbols with error bars). To interpret their findings, they used a feed-forward neural network whose postsynaptic neuron has IF dynamics with a fixed threshold (light gray dashed line). With this model, only the high-frequency correlation points can be fitted. Using instead an FHN model or an IF model with adaptive threshold dynamics, we were able to fit all experimental data points (black solid line). SMR is also observed with more realistic rate-modulated weak Poisson pulses (light gray filled circles) instead of the sinusoidal input. Both model predictions are consistent with the SMR observed in these experiments. Figure 6B summarizes the conditions that neurons and synapses must satisfy for SMR to emerge in a feed-forward neural network.

Figure 6.

(A) Appearance of stochastic multi-resonance in experiments in the brain. Dark gray square symbols represent the values of C0 obtained in the experiments performed in the human auditory cortex. The dashed light gray line corresponds to the best model prediction using a neuron with a fixed threshold (Yasuda et al., 2008). The solid black line corresponds to our model consisting of an FHN neuron and depressing synapses. Gray filled circles show C0 when the weak signal is a train of (uncorrelated) Poisson pulses instead of the sinusoidal input (solid line). (B) Schematic overview of the neuron and synapse mechanisms needed for the appearance of stochastic multi-resonances in feed-forward neural networks (see Torres et al., 2011, for more details).

6. Relation with other works

The occurrence of non-fixed-point behavior in recurrent neural networks due to dynamic synapses has also been reported by others (Senn et al., 1996; Tsodyks et al., 1998; Dror and Tsodyks, 2000). These studies differ from our work in that they assume continuous deterministic neuron dynamics (instead of binary and stochastic dynamics, as in our work). The oscillations observed in these networks do not show the rapid switching behavior that we observe and seem unrelated to the metastability that we have found.

In addition, it has been reported that oscillations in the firing rate can be chaotic (Senn et al., 1996; Dror and Tsodyks, 2000) and can show intermittent behavior that resembles observed EEG patterns. The chaotic regime in these continuous models seems unrelated to the existence of fixed-point behavior and is most likely a generic feature of non-linear dynamical systems.

It is worth noting that, for each neuron, the effect of dynamic synapses is modeled through a single variable xi that multiplies the synaptic strength wij for all synapses that connect to i. There is one depression variable per neuron and not one per connection. As a result, the same network behavior can be obtained by interpreting xi as a dynamic firing threshold (Horn and Usher, 1989) instead of a dynamic synapse.
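A per-neuron depression variable multiplying Hebbian couplings can be sketched as follows in Python. This is a toy under our own assumptions, not necessarily the update rule used in section 1: ±1 units with asynchronous updates; couplings w_ij = (1/N) Σμ ξi^μ ξj^μ; a presynaptic reading in which x_j scales every weight w_ij through which neuron j acts on other neurons (one possible reading of the text); and a discrete recovery/depletion rule x_j ← x_j + (1 − x_j)/τrec − U x_j, with the depletion term applied only when neuron j is active, evaluated once per sweep with τrec measured in sweeps. Function names are hypothetical. With U = 0 the factors stay at 1 and the network reduces to a standard Hopfield network.

```python
import random


def hebbian_weights(patterns):
    """Hebbian couplings w[i][j] = (1/N) * sum_mu xi_i * xi_j, no self-coupling."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for xi in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += xi[i] * xi[j] / n
    return w


def run(w, s, x, sweeps, U, tau_rec, rng):
    """Asynchronous dynamics with one (hypothetical) depression factor x[j] per neuron.

    The local field of neuron i is h_i = sum_j w[i][j] * x[j] * s[j].
    After every sweep, x[j] recovers towards 1 with time constant tau_rec
    and is depleted by a fraction U when neuron j is active (s[j] = +1).
    s and x are modified in place and also returned.
    """
    n = len(s)
    for _ in range(sweeps):
        for i in rng.sample(range(n), n):  # random sequential update order
            h = sum(w[i][j] * x[j] * s[j] for j in range(n))
            s[i] = 1 if h >= 0 else -1
        for j in range(n):
            active = 1.0 if s[j] == 1 else 0.0
            x[j] += (1.0 - x[j]) / tau_rec - U * x[j] * active
    return s, x


def overlap(s, xi):
    """Overlap m = (1/N) * sum_i s_i * xi_i between network state and a pattern."""
    return sum(a * b for a, b in zip(s, xi)) / len(s)


rng = random.Random(1)
N, P = 100, 3
patterns = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(P)]
w = hebbian_weights(patterns)
cue = [-v if rng.random() < 0.15 else v for v in patterns[0]]  # 15% flipped units

# U = 0: x stays at 1 (static synapses); the cue should relax onto pattern 0.
s, _ = run(w, cue[:], [1.0] * N, sweeps=5, U=0.0, tau_rec=10.0, rng=rng)
print("static overlap:", overlap(s, patterns[0]))

# U > 0: active neurons lose efficacy; inspect the overlaps to look for switching.
s, x = run(w, cue[:], [1.0] * N, sweeps=20, U=0.5, tau_rec=10.0, rng=rng)
print("depressing overlaps:", [round(overlap(s, p), 2) for p in patterns])
```

Whether the depressing run actually switches between memories depends on U, τrec, and the load P/N; the sketch only provides the ingredients to explore this, and its printed overlaps should not be read as a reproduction of the published results.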

The switching behavior described in this paper is somewhat similar to that of neural networks with chaotic neurons, which display self-organized chaotic transitions between memories (Tsuda et al., 1987; Tsuda, 1992).

The interpretation of the switching behavior as up/down cortical transitions is controversial, because similar cortical oscillations can be generated without synaptic dynamics, with the up state terminated by hyperpolarizing potassium currents (Compte et al., 2003). However, a very recent study of the interplay between synaptic depression and these potassium currents concludes that synaptic depression is relevant for maintaining the up state (Benita et al., 2012). The reason for this counterintuitive behavior is that synaptic depression lowers the firing rate in the up state, which weakens the hyperpolarizing potassium currents and thereby prolongs the up state.

Also related is a recent study on the effect of dynamic synapses on the emergence of a coherent periodic rhythm within the up state, which results in the phenomenon of stochastic amplification (Hidalgo et al., 2012). This rhythm was shown to be an emergent, collective phenomenon, since individual neurons in the up state are not locked to it.

The relation between dynamic synapses and storage capacity has also been studied by others. For very sparse stored patterns (a ≪ 1), it has been shown that storage capacity decreases with synaptic depression (Bibitchkov et al., 2002), in agreement with our findings. On the other hand, it has been reported that the basins of attraction of the memories are enlarged by synaptic depression (Matsumoto et al., 2007), and that they are enlarged even further when synaptic facilitation is taken into account (Mejias and Torres, 2009).

Otsubo et al. (2011) reported a theoretical and numerical study on the role of short-term depression in memory storage capacity in the presence of noise, showing that noise reduces the storage capacity (as is also the case for static synapses). Mejias et al. (2012) showed the important role of facilitation in enlarging the regions of memory retrieval even in the presence of high noise.

In the last decade there has been some discussion about whether neural systems, or even the brain as a whole, can work in a critical state, using the notion of self-organized criticality (Beggs and Plenz, 2003; Tagliazucchi et al., 2012). As stated in section 4, the combination of colored synaptic noise and short-term depression can cause power-law distributed permanence times in the up and down states, which is a signature of criticality. The emergence of critical phenomena as a consequence of dynamic synapses has also been explored by others (Levina et al., 2007, 2009; Bonachela et al., 2010; Millman et al., 2010).

Finally, it is worth mentioning a recent work that has investigated the formation of spatio-temporal structures in an excitatory neural network with depressing synapses (Kilpatrick and Bressloff, 2010). As a result of dynamic synapses, robust complex spatio-temporal structures, including different types of travelling waves, appear in such a system.

7. Conclusions

It is well known that synaptic transmission of information is highly variable, with diverse origins such as the stochastic release and transmission of neurotransmitter vesicles, variations in glutamate concentration across synapses, and the spatial heterogeneity of the synaptic response in the dendritic tree (Franks et al., 2003). The cooperative effect of all these mechanisms is a noisy postsynaptic response that depends on past presynaptic activity. The strength of the postsynaptic response can decrease or increase, which can be modeled with dynamical synapses.

In a series of papers, we have studied the effect of dynamical synapses in recurrent and feed-forward networks, and we have summarized the results in this paper. The main findings are the following:

Dynamic memories: Classical neural networks of the Hopfield type, with symmetric connectivity, display attractor dynamics, which means that these networks act as memories. A specific set of memories can be stored as attractors by Hebbian learning; the attractors are asymptotically stable states. The effect of synaptic depression in these networks is to make the attractors lose stability. Oscillatory modes appear in which the network rapidly switches between memories. The permanence time in a memory can take any positive value, and it becomes infinite in the regime where memories are stable. Thus, the recurrent network with dynamical synapses implements a form of dynamical memory.

Input sensitivity: The classical Hopfield network is relatively insensitive to external stimuli, once it has converged into one of its stable memories. Synaptic depression improves the sensitivity to external stimuli, because it destabilizes the memories. In addition, synaptic facilitation further improves the sensitivity of the attractor network to external stimuli.

Storage capacity: The storage capacity of the classical attractor neural network with static synapses, i.e., the maximum number of memories that can be stored, is proportional to the number of neurons N and scales as Pmax = αN with α ≈ 0.138. Synaptic depression decreases the maximum storage capacity, but facilitation allows the network, under some conditions, to recover the capacity it has with static synapses.

Up and down states: The emergence of dynamic memories has been related to the well-known up–down transitions observed in local-field recordings in the cortex. We demonstrated that the observed distributions of permanence times can be explained by stochastic synaptic dynamics. Scale-free permanence-time distributions could signal a critical state in the brain.

Stochastic multiresonance: Whereas static synapses in a stochastic network give rise to a single stochastic resonance peak, dynamical synapses produce a double resonance. This phenomenon is robust for different types of neurons and input signals. Thus, dynamic synapses may explain the SMR recently observed in psychophysical experiments. SMR also seems to occur in recurrent neural networks with dynamic synapses, as recently reported by Pinamonti et al. (2012). That work demonstrates the relevant role of short-term synaptic plasticity for the appearance of SMR in recurrent networks, although the underlying mechanism differs slightly from the one described here for feed-forward neural networks.

It is important to point out that, although the phenomenology reported in this review has been obtained using different models, all the reported phenomena can also be derived in a single model consisting of a network of binary neurons with dynamic synapses, as described in section 1. The phenomena reported in sections 2 and 3 were in fact obtained using this model, and stochastic multiresonance (section 5) has recently been reported in such a model by Pinamonti et al. (2012). The results on critical up and down states reported in section 4 were obtained in a mean-field model that can be derived from the same binary model by additionally assuming sparse neural activity and sparse connectivity, which increases the stochasticity of synaptic transmission throughout the network.

In addition, our studies show that the reported phenomena are robust to detailed changes in the model, such as replacing the binary neurons by graded-response neurons or integrate-and-fire neurons.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Joaquin J. Torres acknowledges support from Junta de Andalucia (project FQM-01505) and the MICINN-FEDER (project FIS2009-08451).

References

- Abbott L. F., Varela J. A., Sen K., Nelson S. B. (1997). Synaptic depression and cortical gain control. Science 275, 220–224.

- Amari S. (1972). Learning patterns and pattern sequences by self-organizing nets of threshold elements. IEEE Trans. Comput. 21, 1197–1206.

- Amit D. J. (1989). Modeling Brain Function: The World of Attractor Neural Networks. Cambridge, UK: Cambridge University Press.

- Barak O., Tsodyks M. (2007). Persistent activity in neural networks with dynamic synapses. PLoS Comput. Biol. 3:e35. doi: 10.1371/journal.pcbi.0030035

- Beggs J. M., Plenz D. (2003). Neuronal avalanches in neocortical circuits. J. Neurosci. 23, 11167–11177.

- Benita J. M., Guillamon A., Deco G., Sanchez-Vives M. V. (2012). Synaptic depression and slow oscillatory activity in a biophysical network model of the cerebral cortex. Front. Comput. Neurosci. 6:64. doi: 10.3389/fncom.2012.00064

- Benzi R., Sutera A., Vulpiani A. (1981). The mechanism of stochastic resonance. J. Phys. A Math. Gen. 14, L453.

- Bertram R., Sherman A., Stanley E. F. (1996). Single-domain/bound calcium hypothesis of transmitter release and facilitation. J. Neurophysiol. 75, 1919–1931.

- Bibitchkov D., Herrmann J. M., Geisel T. (2002). Pattern storage and processing in attractor networks with short-time synaptic dynamics. Netw. Comput. Neural Syst. 13, 115–129.

- Bonachela J. A., de Franciscis S., Torres J. J., Muñoz M. A. (2010). Self-organization without conservation: are neuronal avalanches generically critical? J. Stat. Mech. Theor. Exp. 2010:P02015. doi: 10.1088/1742-5468/2010/02/P02015

- Bressloff P. C. (1999). Mean-field theory of globally coupled integrate-and-fire neural oscillators with dynamic synapses. Phys. Rev. E 60, 2160–2170. doi: 10.1103/PhysRevE.60.2160

- Collins J. J., Chow C. C., Imhoff T. T. (1995). Aperiodic stochastic resonance in excitable systems. Phys. Rev. E 52, R3321–R3324. doi: 10.1103/PhysRevE.52.R3321

- Compte A., Sanchez-Vives M. V., McCormick D. A., Wang X.-J. (2003). Cellular and network mechanisms of slow oscillatory activity (<1 Hz) and wave propagations in a cortical network model. J. Neurophysiol. 89, 2707–2725. doi: 10.1152/jn.00845.2002

- Cortes J. M., Garrido P. L., Marro J., Torres J. J. (2004). Switching between memories in neural automata with synaptic noise. Neurocomputing 58–60, 67–71.

- Cortes J. M., Torres J. J., Marro J., Garrido P. L., Kappen H. J. (2006). Effects of fast presynaptic noise in attractor neural networks. Neural Comput. 18, 614–633. doi: 10.1162/089976606775623342

- Dror G., Tsodyks M. (2000). Chaos in neural networks with dynamic synapses. Neurocomputing 32–33, 365–370.

- Franks K. M., Stevens C. F., Sejnowski T. J. (2003). Independent sources of quantal variability at single glutamatergic synapses. J. Neurosci. 23, 3186–3195.

- Hertz J., Krogh A., Palmer R. G. (1991). Introduction to the Theory of Neural Computation. Redwood City, CA: Addison-Wesley.

- Hidalgo J., Seoane L. F., Cortés J. M., Muñoz M. A. (2012). Stochastic amplification of fluctuations in cortical up-states. PLoS ONE 7:e40710. doi: 10.1371/journal.pone.0040710

- Holcman D., Tsodyks M. (2006). The emergence of up and down states in cortical networks. PLoS Comput. Biol. 2, 174–181. doi: 10.1371/journal.pcbi.0020023

- Hopfield J. J. (1982). Neural networks and physical systems with emergent collective computational abilities. Proc. Natl. Acad. Sci. U.S.A. 79, 2554–2558.

- Horn D., Usher M. (1989). Neural networks with dynamical thresholds. Phys. Rev. A 40, 1036–1044. doi: 10.1103/PhysRevA.40.1036

- Kilpatrick Z. P., Bressloff P. C. (2010). Spatially structured oscillations in a two-dimensional excitatory neuronal network with synaptic depression. J. Comput. Neurosci. 28, 193–209. doi: 10.1007/s10827-009-0199-6

- Kistler W. M., van Hemmen J. L. (1999). Short-term synaptic plasticity and network behavior. Neural Comput. 11, 1579–1594.

- Levina A., Herrmann J. M., Geisel T. (2007). Dynamical synapses causing self-organized criticality in neural networks. Nat. Phys. 3, 857–860.

- Levina A., Herrmann J. M., Geisel T. (2009). Phase transitions towards criticality in a neural system with adaptive interactions. Phys. Rev. Lett. 102, 118110. doi: 10.1103/PhysRevLett.102.118110

- Liaw J. S., Berger T. W. (1996). Dynamic synapse: a new concept of neural representation and computation. Hippocampus 6, 591–600.

- Longtin A., Bulsara A., Moss F. (1991). Time-interval sequences in bistable systems and the noise-induced transmission of information by sensory neurons. Phys. Rev. Lett. 67, 656–659. doi: 10.1103/PhysRevLett.67.656

- Markram H., Tsodyks M. (1996). Redistribution of synaptic efficacy between neocortical pyramidal neurons. Nature 382, 759–760. doi: 10.1038/382807a0

- Markram H., Wang Y., Tsodyks M. (1998). Differential signaling via the same axon of neocortical pyramidal neurons. Proc. Natl. Acad. Sci. U.S.A. 95, 5323–5328.

- Marro J., Torres J. J., Cortes J. M. (2007). Chaotic hopping between attractors in neural networks. Neural Netw. 20, 230–235. doi: 10.1016/j.neunet.2006.11.005

- Matsumoto N., Ide D., Watanabe M., Okada M. (2007). Retrieval property of attractor network with synaptic depression. J. Phys. Soc. Jpn. 76, 084005.

- Mejias J. F., Hernandez-Gomez B., Torres J. J. (2012). Short-term synaptic facilitation improves information retrieval in noisy neural networks. EPL (Europhys. Lett.) 97, 48008.

- Mejias J. F., Kappen H. J., Torres J. J. (2010). Irregular dynamics in up and down cortical states. PLoS ONE 5:e13651. doi: 10.1371/journal.pone.0013651

- Mejias J. F., Torres J. J. (2009). Maximum memory capacity on neural networks with short-term depression and facilitation. Neural Comput. 21, 851–871. doi: 10.1162/neco.2008.02-08-719

- Mejias J. F., Torres J. J. (2011). Emergence of resonances in neural systems: the interplay between adaptive threshold and short-term synaptic plasticity. PLoS ONE 6:e17255. doi: 10.1371/journal.pone.0017255

- Millman D., Mihalas S., Kirkwood A., Niebur E. (2010). Self-organized criticality occurs in non-conservative neuronal networks during up states. Nat. Phys. 6, 801–805. doi: 10.1038/nphys1757

- Mongillo G., Barak O., Tsodyks M. (2008). Synaptic theory of working memory. Science 319, 1543–1546. doi: 10.1126/science.1150769

- Natschläger T., Maass W., Zador A. (2001). Efficient temporal processing with biologically realistic dynamic synapses. Netw. Comput. Neural Syst. 12, 75–87.

- Otsubo Y., Nagata K., Oizumi M., Okada M. (2011). Influence of synaptic depression on memory storage capacity. J. Phys. Soc. Jpn. 80, 084004.

- Pantic L., Torres J. J., Kappen H. J., Gielen S. C. A. M. (2002). Associative memory with dynamic synapses. Neural Comput. 14, 2903–2923. doi: 10.1162/089976602760805331

- Pieribone V. A., Shupliakov O., Brodin L., Hilfiker-Rothenfluh S., Zernik A. J., Greengard P. (1995). Distinct pools of synaptic vesicles in neurotransmitter release. Nature 375, 493–497. doi: 10.1038/375493a0

- Pinamonti G., Marro J., Torres J. J. (2012). Stochastic resonance crossovers in complex networks. PLoS ONE 7:e51170. doi: 10.1371/journal.pone.0051170

- Senn W., Wyler K., Streit J., Larkum M., Lüscher H.-R., Merz F., et al. (1996). Dynamics of random neural network with synaptic depression. Neural Netw. 9, 575–588.

- Senn W., Segev I., Tsodyks M. (1998). Reading neuronal synchrony with depressing synapses. Neural Comput. 10, 815–819.

- Steriade M., McCormick D. A., Sejnowski T. J. (1993a). Thalamocortical oscillations in the sleeping and aroused brain. Science 262, 679–685. doi: 10.1126/science.8235588

- Steriade M., Nunez A., Amzica F. (1993b). A novel slow (<1 Hz) oscillation of neocortical neurons in vivo: depolarizing and hyperpolarizing components. J. Neurosci. 13, 3252–3265.

- Tagliazucchi E., Balenzuela P., Fraiman D., Chialvo D. R. (2012). Criticality in large-scale brain fMRI dynamics unveiled by a novel point process analysis. Front. Physiol. 3:15. doi: 10.3389/fphys.2012.00015

- Torres J. J., Cortes J. M., Marro J. (2005). Instability of attractors in auto-associative networks with bio-inspired fast synaptic noise. LNCS 3512, 161–167.

- Torres J. J., Cortes J. M., Marro J., Kappen H. J. (2008). Competition between synaptic depression and facilitation in attractor neural networks. Neural Comput. 19, 2739–2755. doi: 10.1162/neco.2007.19.10.2739

- Torres J. J., Marro J., Mejias J. F. (2011). Can intrinsic noise induce various resonant peaks? New J. Phys. 13:053014. doi: 10.1088/1367-2630/13/5/053014

- Torres J. J., Pantic L., Kappen H. J. (2002). Storage capacity of attractor neural networks with depressing synapses. Phys. Rev. E 66:061910. doi: 10.1103/PhysRevE.66.061910

- Tsodyks M., Uziel A., Markram H. (2000). Synchrony generation in recurrent networks with frequency-dependent synapses. J. Neurosci. 20, RC50 (1–5).

- Tsodyks M. V., Markram H. (1997). The neural code between neocortical pyramidal neurons depends on neurotransmitter release probability. Proc. Natl. Acad. Sci. U.S.A. 94, 719–723.

- Tsodyks M. V., Pawelzik K., Markram H. (1998). Neural networks with dynamic synapses. Neural Comput. 10, 821–835.

- Tsuda I. (1992). Dynamic link of memory–chaotic memory map in nonequilibrium neural networks. Neural Netw. 5, 313–326.

- Tsuda I., Koerner E., Shimizu H. (1987). Memory dynamics in asynchronous neural networks. Prog. Theor. Phys. 78, 51–71.

- van Kampen N. G. (1990). Stochastic Processes in Physics and Chemistry. Amsterdam: North-Holland Personal Library (Elsevier).

- Yasuda H., Miyaoka T., Horiguchi J., Yasuda A., Hänggi P., Yamamoto Y. (2008). Novel class of neural stochastic resonance and error-free information transfer. Phys. Rev. Lett. 100:118103. doi: 10.1103/PhysRevLett.100.118103

- Zucker R. S. (1989). Short-term synaptic plasticity. Annu. Rev. Neurosci. 12, 13–31. doi: 10.1146/annurev.ne.12.030189.000305

- Zucker R. S., Regehr W. G. (2002). Short-term synaptic plasticity. Annu. Rev. Physiol. 64, 355–405. doi: 10.1146/annurev.physiol.64.092501.114547