Abstract

At perceptual threshold, some stimuli are available for conscious access whereas others are not. Such threshold inputs are useful tools for investigating the events that separate conscious awareness from unconscious stimulus processing. Here, viewing unmasked, threshold-duration images was combined with recording magnetoencephalography to quantify differences among perceptual states, ranging from no awareness to ambiguity to robust perception. A four-choice scale was used to assess awareness: “didn’t see” (no awareness), “couldn’t identify” (awareness without identification), “unsure” (awareness with low certainty identification), and “sure” (awareness with high certainty identification). Stimulus-evoked neuromagnetic signals were grouped according to behavioral response choices. Three main cortical responses were elicited. The earliest response, peaking at ∼100 ms after stimulus presentation, showed no significant correlation with stimulus perception. A late response (∼290 ms) showed moderate correlation with stimulus awareness but could not adequately differentiate conscious access from its absence. By contrast, an intermediate response peaking at ∼240 ms was observed only for trials in which stimuli were consciously detected. That this signal was similar for all conditions in which awareness was reported is consistent with the hypothesis that conscious visual access is relatively sharply demarcated.

Keywords: cognition, vision

Conscious visual representations must be generated rapidly enough to affect behavior advantageously. Thus, vision must be fast. However, the network activation that supports visual perception is complex and involves many spatially segregated brain areas (1). As a result, certain integration delays must ensue for successful perceptual analysis to be achieved. An experimental paradigm was thus designed to address the temporal and neuronal conditions required for a rapid, high-level visual response.

Several previous studies suggest that visual perception relies primarily on the early activation of occipital cortices (2–4). Others show that it results from the late activation (5, 6) of temporal (7), parietal, and frontal areas (8). Finally, others indicate that perception is associated with a midlatency evoked response (9), the timing of which can be delayed when stimulus energy is decreased to near-threshold levels (10, 11).

These conflicting findings demonstrate the unresolved nature of the timing underlying visual perception. Moreover, varied interpretations of the term “perception” may contribute to varied interpretations of the neuronal processes being studied, making it more difficult to understand the timing of cognitive events generated by the brain. To avoid such errors in communication, we define the term perception to mean the conscious awareness or detection of a presented visual stimulus: a phenomenon not to be confused with related processes such as visual identification and recognition. Although our definition is limited to the event in which a visual stimulus achieves conscious access, we concede that perception may be associated with various levels of certainty and clarity. To this effect, we used a single visual stimulus presentation combined with magnetoencephalography (MEG) to characterize the cortical events associated with subjective experiences ranging from absent to ambiguous to robust perception.

Threshold duration stimuli (numbered images from 0 to 9) were delivered by using light emitting diode (LED) displays (Fig. 1). Resulting stimulus identification, degree of perceptual certainty, and neuromagnetic responses were measured. This method allowed quantification of cortical activation related to the perception of unmasked target stimuli, with high resolution. Four awareness report choices were used to describe perceptual certainty: “didn’t see,” “couldn’t identify,” “unsure,” and “sure.” Because identical threshold-duration stimuli were delivered on all trials, differences in brain activity correlated with different awareness reports directly reflected changes in perceptual states rather than in stimulus features. Such conditionalization of brain responses according to trial outcome is a sensitive method for studying the neuronal correlates of conscious experience (12). We found the earliest evoked response correlated with perception to be an almost “all-or-none” midlatency signal peaking at ∼240 ms after the stimulus. This event, early enough to inform action yet late enough to allow substantial integrative analysis, offers important insights into the temporal requirements placed on our visual machinery.

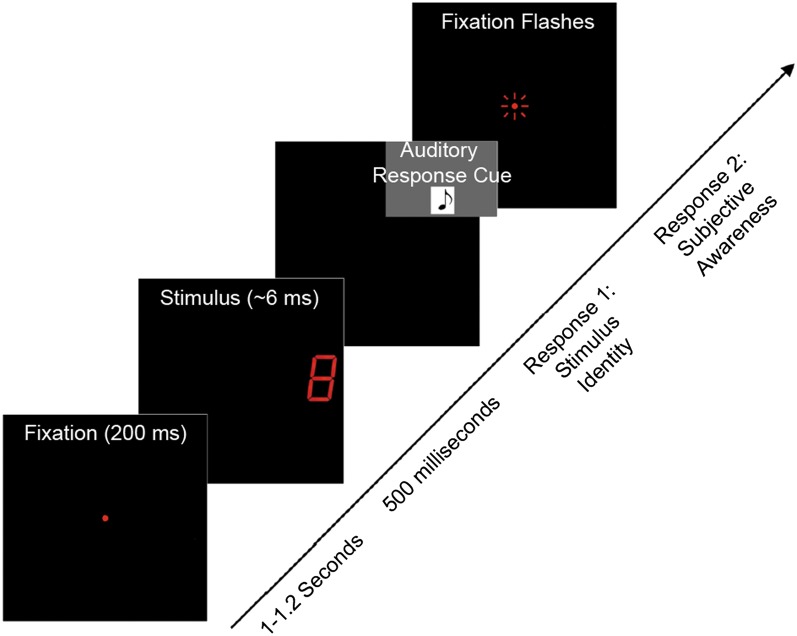

Fig. 1.

Experimental protocol. On each trial, a fixation was displayed for 200 ms and then turned off. After a delay, a threshold-duration stimulus (digits 0–9) was delivered, followed by a response tone (500 ms after the stimulus was turned off) cueing subjects to report the number they saw. When this initial response had been registered, the fixation was flickered rapidly, cueing subjects to report their awareness of the stimulus (“didn’t see,” “couldn’t identify,” “unsure,” or “sure”).

Results

Behavioral Results.

Measured threshold stimulus durations ranged from 5.3 ± 1.7 ms to 9.4 ± 4.1 ms for different digits (average thresholds per stimulus) and from 1.9 ± 0.1 ms to 36.3 ± 4.6 ms for different subjects (averaged threshold per subject). The mean threshold duration, averaged across all stimuli and subjects, was 6.7 ± 0.5 ms (mean of the average thresholds per stimulus).

The mean percentage of correct stimulus identification was 57 ± 4% (mean ± SEM). The number of subjects with response accuracy values within 1 SD of the mean was 14; the number with response accuracy values within 2 SDs was 18 (18 subjects total). Thus, stimulus identification accuracy was comparable among subjects.

Subjective awareness reports of “didn’t see” and “couldn’t identify” were associated with significantly more incorrect than correct stimulus identifications. This would be expected given that stimuli that were not consciously detected or detected but not identified were catalogued as unrecognized. Subjective awareness reports of “unsure” and “sure” were associated with significantly more correct than incorrect stimulus identifications. This, too, is expected given that detected and recognized stimuli were presumably more likely to be correctly identified. Taken together, stimulus identification accuracy and subjective awareness reports were congruent for our study.

MEG Results.

Event-related fields.

Timing of evoked responses.

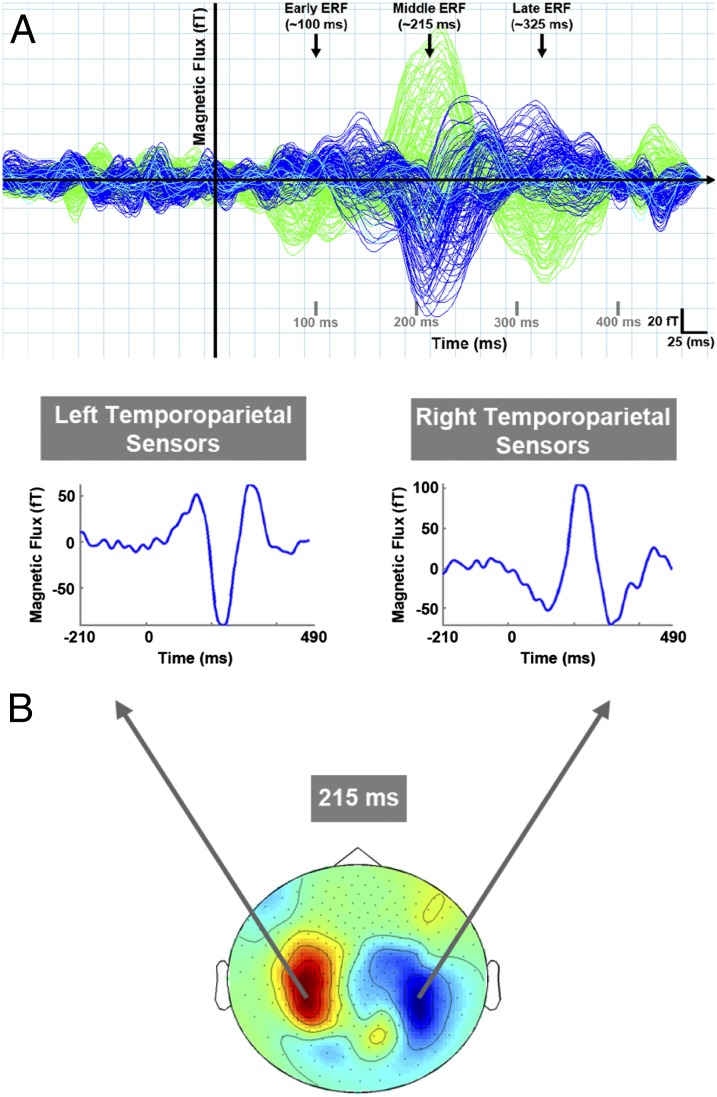

Three robust event-related fields (ERFs) were seen in the time-series data averaged across all trial types for each MEG channel: an early (40–140 ms poststimulus), a middle (140–290 ms poststimulus), and a late (190–390 ms poststimulus) response (Fig. 2A shows data collected from one subject). Of these, the middle ERF had the largest peak amplitude.

Fig. 2.

(A) ERFs of interest. Representative data from one subject showing three main ERFs in the MEG data averaged across all trial types. Traces represent the averaged magnetic response for each sensor as a function of time for one recording run (150 trials). Blue and green traces represent sensors over the right and left cortical hemispheres, respectively. Aqua traces represent midline sensors. The earliest evoked response peaked between 40 and 140 ms after stimulus presentation (early ERF). A second response peaked between 140 and 290 ms after stimulus (middle ERF) and was followed by a third response peaking between 190 and 390 ms after stimulus. (B) Sensor groups of interest. Left and right temporoparietal sensors were robustly activated at the selected time point (peak of the middle response). Both sensor groups showed all three ERFs of interest. Each time series trace is from one MEG sensor that is representative of the sensor group from which it was selected. Data are a topographical representation of grand-averaged ERFs.

Mean peak latencies for early, middle, and late ERFs were 101 ± 9 ms, 238 ± 21 ms, and 293 ± 25 ms, respectively (averaged across all experimental conditions and sensor groups per ERF).

Global field pattern and sensor selection.

Grand averaged ERFs at the peak of the middle response (215–216 ms poststimulus) showed two main clusters of MEG sensors being robustly activated: left and right temporoparietal sensors. Although frontal and occipital sensors were also activated at this time, previous work that used the same experimental methods showed neuromagnetic responses recorded in temporoparietal channels to be robustly correlated with stimulus perception (13). Thus, sensor-level data analysis was restricted to left and right temporoparietal channels only. The main deflection in the ERF waveforms for this time point had opposite directionality in left vs. right temporoparietal sensors, reflecting the orientation of underlying sources. Time-series data for representative channels from each sensor group of interest also showed strong ERFs during the early and late time windows of interest (as detailed above). Fig. 2B illustrates a topographical representation of activated sensor groups and example time series data from one representative sensor per group.

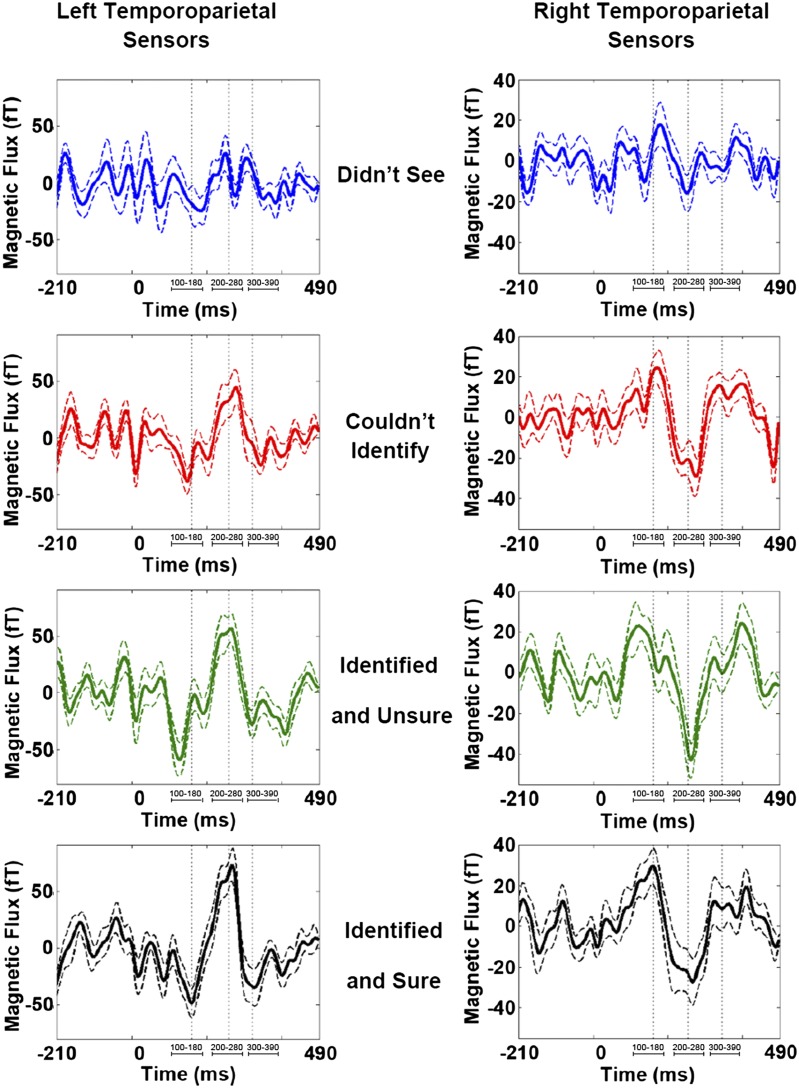

Visualization of grand-averaged ERFs grouped by subjective awareness.

For left and right temporoparietal sensor groups, ERFs recorded for trials in which subjects reported not perceiving the presented stimulus (awareness report “didn’t see”) showed little signal deflection from baseline (Fig. 3, Top). However, a low-amplitude response during the time window of the early ERF was visible for this trial type. In contrast, middle and late ERFs were largely absent.

Fig. 3.

Sensor recordings for each subjective awareness report. Grand-averaged ERFs for left (Left) and right temporoparietal (Right) sensor groups for “didn’t see” (blue trace), “couldn’t identify” (red trace), “unsure” (green trace), and “sure” (black trace) conditions. Dashed lines indicate SEM for each time point. Vertical dotted lines approximate ERF peak latencies for early, middle, and late ERFs. Relevant time intervals of interest are identified below each ERF. For both sensor groups, an early ERF was observable for all trial types (regardless of whether subjects reported perception). By contrast, a middle evoked response was observed only for trials in which stimuli were perceived (“couldn’t identify,” “unsure,” and “sure” conditions). A late ERF was also mainly present for trials in which stimuli were perceived.

For trials in which subjects reported seeing but not recognizing a stimulus (awareness report “couldn’t identify”), a notable middle evoked response was visible (Fig. 3, second row). In fact, for this condition, all three ERFs of interest were present, and the time series data resembled those recorded for the remaining two trial types.

For trials in which subjects reported seeing and being able to identify a stimulus with low or high certainty (i.e., awareness reports of “unsure” and “sure”), early, middle, and late ERFs were present and comparable to responses observed for trials in which stimuli were perceived but not identified (Fig. 3, bottom two rows).

In sum, although the early ERF (peak latency ∼100 ms) was present for all conditions (i.e., trials in which stimuli were not perceived or perceived with differing levels of certainty), the middle and late ERFs (peak latency ∼240 and ∼290 ms, respectively) were not. Instead, these later responses were only seen for trials in which perception occurred regardless of (i) whether stimuli could or could not be identified and (ii) how certain subjects were of stimulus identifications that were made.

Amplitude and latency analysis.

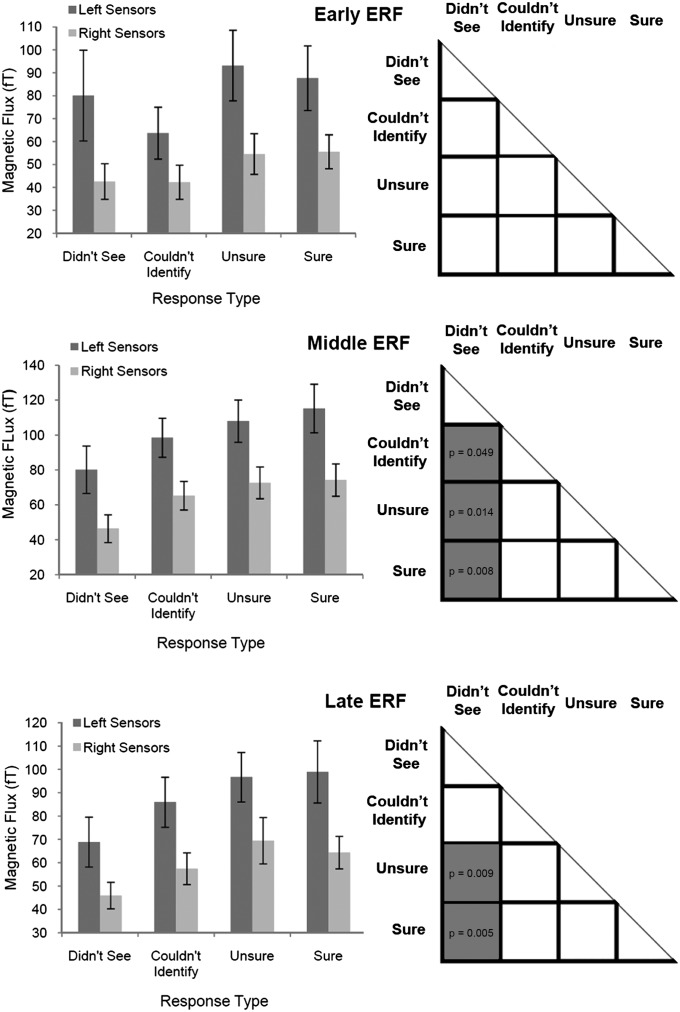

For the early ERF, although averaged peak amplitudes differed between limited (i.e., “didn’t see” and “couldn’t identify”) and more robust perception conditions (i.e., “unsure” and “sure”), there were no significant differences between any pair of experimental conditions (i.e., this response could not distinguish perception from its absence; Fig. 4). P values for all pairwise comparisons were ≥0.106.

Fig. 4.

Mean ERF peak amplitudes and statistical differences between conditions. (Left) Mean ERF peak amplitudes for each subjective awareness choice. Error bars are SEMs. For the early ERF, limited perception conditions (“didn’t see” and “couldn’t identify”) showed lower mean peak amplitudes than robust perception conditions (“sure” and “unsure”). For middle and late ERFs, mean peak amplitudes increased steadily with reported clarity of perception. (Right) Statistically significant differences among the various trial types (gray squares depict significantly different findings of pairwise comparisons; P values ≤ 0.05 are depicted in respective squares). For the early ERF, no significant differences in peak amplitudes were seen across conditions. For the middle ERF, the no-perception condition (i.e., “didn’t see”) was lower in amplitude and statistically different vs. all other perception-related conditions. There were no significant differences in peak amplitudes for all conditions in which perception occurred (“couldn’t identify,” “unsure,” and “sure”). For the late ERF, a similar pattern was seen; however, only perception with identification conditions (“unsure” and “sure”) were significantly different vs. the no-perception condition. Again, all conditions in which perception occurred had similar peak amplitudes.

For the middle ERF, mean peak amplitudes gradually increased as perceptual reports became more robust (Fig. 4, Middle Left). However, this parameter was significantly different for the “didn’t see” (i.e., no perception) condition compared with the remaining three perception-related conditions: (i) “couldn’t identify,” or perception without identification (P = 0.049); (ii) “unsure,” or perception with low certainty identification (P = 0.014); and (iii) “sure,” or perception with high certainty identification (P = 0.008). Finally, and crucially, for all three successful perception conditions, ERF peak amplitudes were similar (all pairwise comparisons, P ≥ 0.361), suggesting a threshold-dependent all-or-none–type response pattern (Fig. 4).

For the late ERF, mean peak amplitudes again gradually increased as perceptual reports became more robust (Fig. 4, Bottom Left) and were different for the “didn’t see” condition compared with the “unsure” (P = 0.009) and “sure” (P = 0.005) conditions (Fig. 4). Although late ERF peak amplitude was lower for the “didn’t see” than the “couldn’t identify” condition, this result did not reach the significance threshold (P = 0.065). Finally, for all three perception-related conditions, ERF peak amplitudes were statistically similar (all pairwise comparisons, P ≥ 0.255).

Discussion

Threshold inputs tap the boundaries of our perceptual capabilities and are tools for studying brain events that separate perception from unconscious stimulus processing. We used unmasked, threshold-duration visual digits and measured the neuromagnetic signals elicited by the ensuing perceptual events. MEG signals, directly reflecting dendritic current flow in cortical neurons (14), allow for brain events to be recorded over the entire cortical surface with millisecond resolution. We found that the earliest neuromagnetic correlate of stimulus perception was a response with amplitude maxima ∼240 ms after stimulus onset. This response could distinguish between perception and no-perception conditions and was similar in amplitude for all conditions in which perception was reported, regardless of the associated level of certainty. These results suggest that visual perception correlates with a high-amplitude, all-or-none event in the brain with timing in the 200- to 250-ms range.

Earlier work from our laboratory suggested that a midlatency, perception-related brain response was elicited in inferior temporal cortices when visual stimuli were correctly identified. The temporal precision of this response increased as stimulus duration was lengthened, and, as a result, perceptual experience was presumably more robust (13). The present data more clearly demonstrate the correlation between such a response and perceptual experience, as only threshold duration stimuli were delivered and subjects reported the quality of their subjective experience in addition to stimulus identity.

The earliest evoked response measured peaked at ∼100 ms poststimulus and was observed for all experimental conditions. Peak amplitudes for this response were similar across conditions, suggesting that such early activity may not reliably indicate conscious visual experience. This result is puzzling considering the expectation that successful perception trials are more likely when chance variations in early sensory responses are larger in amplitude (15–17). Indeed, earlier findings have demonstrated a correlation between the amplitude of the 100-ms response and stimulus categorization (18), whereas others have shown its absence for unperceived stimuli (2). In the case of one earlier study (18), higher-amplitude responses within this time window indicated stronger unconscious feature encoding, leading to more accurate stimulus categorization later on. In another study (2), differences between these findings and our own could be attributable to methodological differences between EEG and MEG techniques [however, the result from ref. 2 could not be confirmed by more recent attempts with use of the same experimental methods (9)]. Furthermore, it is possible that our experimental design resulted in inadequate signal-to-noise ratio (caused by limited trial number) for appropriate resolution of amplitude variation in such a small and highly variable early response. In terms of function, such early activity may indicate the unconscious encoding of stimulus features that is necessary for later perception- and recognition-related processes to function appropriately (19, 20).

A late response, peaking at ∼300 ms after stimulus presentation, was also observed. This activity pattern, although similar to the midlatency response in tracking the perceptual metric we defined, demonstrated statistically similar peak values for the no-perception and perception-without-identification conditions. Hence, this later activity was less reliable than the midlatency response in signaling conscious visual awareness. The timing of the late response falls within the window of the highly studied P300 evoked potential that is known to reflect attention and working memory processes (21). A similar, late response can also be seen after the visual awareness negativity (described below) and is absent for perceived stimuli when attentional focus is on local rather than global stimulus features (22), ruling it out as a correlate of visual consciousness.

Although our results are consistent with previous findings indicating that no electrophysiological correlate of visual consciousness occurs until ∼200 ms after stimulus presentation (22–26), the fact that the perceptual event is potentially categorical and supported by an all-or-none model had not been emphasized. A previously described evoked response termed visual awareness negativity (11, 24, 27) had been correlated with visual perception in numerous paradigms (11, 23, 24, 27) and shown to be identical for perceived stimuli regardless of attentional manipulations (22). Similarly, the previously described N170/M170 (28) and the subdurally recorded N200 (29) also occur with similar latency and are highly specific for the perception (30) and identification of faces (18) and words (31). Cortical responses to visual stimuli that peak ∼200 ms after stimulus may therefore be the earliest events to signal conscious perception in a stimulus- and paradigm-independent manner. The midlatency evoked response we characterized peaked slightly later, at ∼240 ms after stimulus, possibly because of the extremely short exposure durations used.

The most interesting aspect of our findings is the fact that a detectable difference in response amplitude could not be identified when threshold duration stimuli were perceived, regardless of associated certainty. Therefore, it can be inferred that any variation in the midlatency evoked response for perception-related conditions is weak or absent. Moreover, this response was undetected for trials in which perception did not occur. This finding may result in part from inadequate resolution of any response as a result of a limited number of trials. However, a large increase in signal amplitude was already present for perception-related conditions with the number of trials we used.

Although our results demonstrate an abrupt transition in brain activity for perceived vs. not-perceived inputs, it is possible that a more graded, visual identification process is also occurring but was not identified in this work. Indeed, the fact that subjects used a graded response system of “didn’t see,” “couldn’t identify,” “unsure,” and “sure” suggests that a cortical correlate must exist. Additionally, the perceptual response we describe here did not track with the behavioral choices subjects used. As the behavioral metric itself was associated with stimulus identification, it follows that identification and perception may be related but separate processes.

One explanation for this finding is that the perceptual signal we describe may in fact represent the rapid spatial localization of salient visual targets—a process likely to involve the “dorsal stream” components of visual analyses that localize to areas of parietal cortex (32). However, this is less likely because earlier work from our laboratory localized the perceptual correlate of interest to bilateral inferior temporal cortices (13), areas more traditionally associated with the “ventral visual stream” (32). Additionally, activation of dorsal stream areas has also been associated with unconscious, visually guided behavior (33). As the midlatency signal in this work was correlated with conscious visual awareness, it is less likely that the perceptual event we have characterized overlaps in function with dorsal stream networks.

A second explanation may be that, although perception involves the binding of sensory information into the context of one’s internal brain state (34), identification may result from subsequent levels of processing achieved thereafter. That is, threshold duration inputs that were not perceived may not have become properly enmeshed within the context of the subject’s endogenous brain activity. Conversely, the threshold stimuli that were perceived did. It is conceivable that the perceptual event, which, in this case, involves the binding of external sensory information with ongoing neuronal activation patterns, may result in an explosive all-or-none process capable of broadcasting information to relevant, distant brain locations. Inputs that reach deeper stages of processing at various brain sites may subsequently be associated with more localized activity that parses the identity and significance of relevant inputs (events that may generate electrophysiological changes varying over finer gradients). As this later activity would potentially involve smaller subsets of spatially separated neurons, the present study may not have sufficient resolution to quantify such events.

Within the context of the model described here, the advantage of an all-or-none type of perceptual event is substantial. Because the all-or-none signal exists in one of two dramatically differing amplitudes (essentially “on” vs. “off”), when it is rapidly communicated over distances, noise added at each relay point will not significantly alter the information carried within the signal. As a result, an all-or-none type event, such as the perceptual correlate we have described here, would be an ideal candidate for broadcasting the presence of a potentially salient visual stimulus to multiple brain areas in a reliable and rapid manner. When this information has been successfully transmitted, more detailed sensory analyses and behavioral responses may or may not be initiated depending on the relevance of the input to the needs of the subject at that time. Such a model offers a possible explanation for how certain external sensory events gain internal significance within the rich and complex communication patterns sustained by the nervous system.

Methods

Participants.

Twenty-three volunteers (10 male; 22 right-handed; mean age, 28 y) provided informed consent to participate in this study, approved by the institutional review board (IRB) at NYU School of Medicine. All participants had normal or corrected-to-normal vision. Data from five subjects were excluded as a result of inadequate number of trials for analysis (<10 trials per experimental condition).

Stimuli.

A stimulation device was designed and built that displays unmasked visual signals at submillisecond duration. The apparatus consists of eight seven-segment LED displays (digit height, 14 mm; digit width, 8 mm; color, red) arranged in a circle with a radius of 30 mm. Each display is equidistant from the center, which contains a small, circular LED (5 mm; red) that served as a fixation. The entire circuit is covered with black fiberglass so internal LEDs were invisible until turned on. The device was placed 1,770 mm from the subject’s nasion, giving a stimulus visual angle of 0.45°.

Only the two horizontally aligned display units were used during experimentation. With the subject fixating centrally, stimuli were pseudorandomly delivered to the left or right visual fields during each trial, perifoveally (1° eccentricity). This was done to avoid expectation of stimulation at a single location. Stimuli were digits 0 through 9, randomly interleaved.
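The stated visual angle and eccentricity follow from the device geometry. The sketch below recomputes both from the digit height (14 mm), the circle radius (30 mm), and the viewing distance (1,770 mm); the function name is illustrative, and the eccentricity calculation assumes that the 30-mm radius is the distance from fixation to the center of each display.

```python
import math

def visual_angle_deg(size_mm: float, distance_mm: float) -> float:
    """Visual angle (degrees) subtended by an object centered on the line of sight."""
    return math.degrees(2 * math.atan(size_mm / (2 * distance_mm)))

VIEWING_DISTANCE_MM = 1770
DIGIT_HEIGHT_MM = 14
DISPLAY_RADIUS_MM = 30  # assumed: fixation-to-display-center distance

# Angular size of one digit at the subject's nasion.
digit_angle = visual_angle_deg(DIGIT_HEIGHT_MM, VIEWING_DISTANCE_MM)

# Eccentricity is measured from fixation outward, so a single arctangent is used.
eccentricity = math.degrees(math.atan(DISPLAY_RADIUS_MM / VIEWING_DISTANCE_MM))

print(f"digit angle: {digit_angle:.2f} deg, eccentricity: {eccentricity:.2f} deg")
```

Under these assumptions the values round to the reported 0.45° and approximately 1°.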

All stimulus durations were oscilloscopically calibrated to be accurate to 100 μs. During experimentation, only threshold-duration stimuli were delivered (∼6 ms long). The device had a separate output port that sent a pulse to the MEG acquisition computer 10 ms before stimulus onset, allowing MEG data acquisition and analysis to be precisely aligned with stimulus onset.

Stimulus luminance was measured by using an optometer (model 61 CRT; United Detector Technology). Average luminance for all threshold duration stimuli was 5.4 ± 0.4 cd/m2.

Our stimulation apparatus received input via a serial port connected to a PC running Presentation software (version 12.2; Neurobehavioral Systems). This program sent output parameters for each trial (stimulus identity and location) to the device. The device controlled the exact timing of stimulation and eliminated extraneous jitter in event timing. All responses were collected by the Presentation program and logged into a separate text file for later MEG data analysis by trial type.

Auditory stimuli for the first response cue (50 ms, 500 Hz sinusoid) were delivered via a speaker system placed outside the magnetically shielded room (MSR) adjacent to a passthrough that allowed access into the room. Sound volume was adjusted to be comfortable for each subject.

Task.

Dark adaptation and threshold determination.

Before stimulus exposure, subjects were seated in the MSR and dark-adapted for 30 min (35). Given that stimuli were brief (≤100 ms) and small (<1°), delivering them in the dark optimized the signal-to-noise ratio of elicited MEG activity.

The accelerated stochastic approximation method (36) was used to determine threshold durations (50% accurate identification) per number stimulus (i.e., 0–9) and subject. The stimulus sequence was terminated when the step size remained at 0.1 ms for 20 iterations of each digit. For a given subject, the times recorded for these 20 iterations per number were averaged to yield unique threshold durations for digits 0 through 9. In this way, thresholds were perceptually matched across stimuli and subjects.
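As an illustration only, one update of an accelerated stochastic approximation staircase can be sketched as follows. The rule shown is the form commonly given for this method in the psychophysics literature (step size c/n for the first two trials, then c/(2 + number of response reversals)); the starting constant `c_ms`, the 0.1-ms step floor, and the function name are assumptions and are not taken from the procedure used here.

```python
def asa_next_duration(duration_ms, trial_index, n_reversals, correct,
                      c_ms=4.0, target=0.5, min_step_ms=0.1):
    """Return the stimulus duration for the next trial.

    trial_index is 1-based; n_reversals counts how often the response
    switched between correct and incorrect so far. c_ms and min_step_ms
    are hypothetical values chosen for illustration.
    """
    if trial_index <= 2:
        step = c_ms / trial_index          # plain stochastic approximation
    else:
        step = c_ms / (2 + n_reversals)    # shrink only after reversals
    step = max(step, min_step_ms)          # assumed floor on the step size
    response = 1.0 if correct else 0.0
    # Correct responses shorten the stimulus; incorrect ones lengthen it.
    return max(0.0, duration_ms - step * (response - target))

# Example: a correct first trial at 10 ms, then an incorrect third trial.
after_first = asa_next_duration(10.0, 1, 0, True)
after_third = asa_next_duration(8.0, 3, 2, False)
```

With a 50% target, equal numbers of correct and incorrect responses leave the duration unchanged on average, which is why the sequence settles near the 50% identification threshold.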

Experimental paradigm.

On each trial (Fig. 1), a fixation LED was displayed for 200 ms and then turned off. Subjects were instructed (i) to maintain fixation and (ii) not to saccade toward the stimulus that would appear in the periphery.

After a delay (jittered between 1 and 1.2 s), subjects were shown a threshold-duration stimulus (digits 0–9) in one of two locations (left or right visual field). A total of 750 trials were delivered (half each in the left and right visual fields). A previous study from our laboratory that used the same experimental setup showed no significant differences in stimulus recognition and evoked responses as a result of the visual field of stimulation. Thus, trials were collapsed across visual fields for data analysis to increase the total trials of each trial type and signal-to-noise ratio of MEG signals. To minimize fatigue, trials were divided into five runs (150 trials each) taking ∼10 min per run. Subjects were given short breaks between runs while remaining in the MSR. The five runs were later concatenated for analysis. Fig. 1 summarizes the relevant event timing.

Subjects reported the identity of the number they saw (via button press) when cued with the auditory beep (500 ms poststimulus). There were 10 button choices in total: one for each digit from 0 through 9. When the subject saw a given digit, he/she would press the button corresponding to that specific stimulus. If the subject was unable to identify a stimulus, he/she was instructed to press any one of the available buttons at random and initiate the next trial.

When the first response had been registered, a second cue (rapid flickering of the fixation LED) was sent. In response, subjects reported their awareness of the presented stimulus for that particular trial. Choices included button 1 (“didn’t see”), button 2 (“couldn’t identify”), button 3 (“unsure”; i.e., saw and identified with low certainty), and button 4 (“sure”; i.e., saw and identified with high certainty).

Behavioral data were collected by using a fiberoptic button press (LUMItouch; Photon Control) that registered all responses to the acquisition computer as input from an additional channel.

Data Acquisition.

MEG data were recorded in 1-s epochs by using a 275-channel, whole-head system (VSM Med Tech) at a sampling rate of 600 Hz. Epochs contained 500 ms each of pre- and poststimulus periods. Data were baseline-corrected by using the prestimulus window and band pass-filtered between 2.5 and 30 Hz for the analysis of the ERFs. At the start and end of each run, the subject’s head position, relative to the sensor array, was recorded by using digitized coils placed at the nasion and left and right preauricular points. This ensured that even minimal head movements occurring during recordings would be noted. Filtered data for all subjects, and for each MEG recording run per subject, were realigned so that head positions matched that measured for the first recording run for subject 1 by using the ft_megrealign function from the open-source FieldTrip toolbox (Fieldtrip Toolbox for MEG/EEG Analysis; F. C. Donders Centre, Radboud University Nijmegen, Nijmegen, The Netherlands) for MATLAB (MathWorks). This subroutine interpolates MEG signals onto standard gradiometer locations by projecting individual, time-locked data toward a coarse source reconstruction; resulting signals are then recalculated for standard gradiometer locations. In this manner, changes in head position between MEG recordings for each subject were corrected, and activity originating from similar brain regions was recorded in nearby MEG sensors for all participants.
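The epoch layout and baseline correction described above can be sketched in a few lines. This is a minimal illustration in plain Python rather than the FieldTrip/MATLAB pipeline that was actually used; the function names are hypothetical, the band-pass filter and head realignment are not reproduced, and the rounding convention for converting latencies to sample indices is an assumption.

```python
SAMPLING_RATE_HZ = 600
PRESTIM_MS = 500
POSTSTIM_MS = 500

def n_samples(duration_ms, rate_hz=SAMPLING_RATE_HZ):
    """Number of samples spanning duration_ms at the given sampling rate."""
    return round(duration_ms * rate_hz / 1000)

def latency_to_index(latency_ms):
    """Sample index within an epoch for a poststimulus latency (assumed rounding)."""
    return n_samples(PRESTIM_MS) + n_samples(latency_ms)

def baseline_correct(epoch, n_prestim):
    """Subtract the mean of the prestimulus samples from every sample."""
    baseline = sum(epoch[:n_prestim]) / n_prestim
    return [x - baseline for x in epoch]

# A 1-s epoch holds 600 samples: 300 before and 300 after stimulus onset.
epoch_length = n_samples(PRESTIM_MS + POSTSTIM_MS)

# Toy four-sample "epoch" whose first two samples form the baseline.
corrected = baseline_correct([1.0, 3.0, 5.0, 7.0], n_prestim=2)
```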

Data Analysis.

Psychophysics.

Recognition performance was measured for each subject during experimentation. Chance-level accuracy was 0.1 (i.e., 10 digits used). Data were analyzed to yield (i) the average percentage of correct identifications, (ii) the number of individuals with response accuracy levels within 1 to 2 SDs of the mean, and (iii) the total number of correct/incorrect responses associated with each subjective awareness choice. Statistically significant differences in psychophysical data were identified by using a two-tailed t test. Variance was reported as the SEM.
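The summary statistics listed above can be computed as in the following sketch. The accuracy values are invented purely to exercise the functions and are not data from this study; the function names are likewise illustrative.

```python
import math
import statistics

def sem(values):
    """Standard error of the mean, using the sample standard deviation."""
    return statistics.stdev(values) / math.sqrt(len(values))

def count_within_sd(values, k):
    """Number of values lying within k sample SDs of the mean."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return sum(1 for v in values if abs(v - mean) <= k * sd)

# Hypothetical per-subject accuracy (percent correct); not real data.
accuracy = [50, 60, 70, 100]

mean_accuracy = statistics.mean(accuracy)
accuracy_sem = sem(accuracy)
within_1_sd = count_within_sd(accuracy, 1)
within_2_sd = count_within_sd(accuracy, 2)
```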

MEG and ERFs.

The open-source MATLAB FieldTrip toolbox (FieldTrip Toolbox for MEG/EEG Analysis; F. C. Donders Centre, Radboud University Nijmegen, Nijmegen, The Netherlands) was used to analyze MEG data. Stimulus-evoked changes in the magnetic field over time were analyzed as averaged ERFs of epochs grouped by trial type (i.e., awareness report choices). ERFs were calculated for each MEG sensor by averaging the time-series data for all single trials corresponding to a particular trial type for that sensor. For analysis methods not supported by this toolbox, software was written in-house by using MATLAB.
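
The per-sensor averaging by trial type can be sketched as follows. This is an illustrative Python version under simplifying assumptions (one sensor, three samples per epoch, hypothetical values), not the FieldTrip routine used in the study; the name `average_erfs` is invented for this example.

```python
from collections import defaultdict


def average_erfs(epochs):
    """Average single-trial time series per trial type for one sensor.

    `epochs` is a list of (trial_type, samples) pairs with equal-length samples.
    """
    grouped = defaultdict(list)
    for trial_type, samples in epochs:
        grouped[trial_type].append(samples)
    # zip(*trials) walks the epochs sample by sample, so each output value is
    # the across-trial mean at one time point.
    return {
        trial_type: [sum(column) / len(column) for column in zip(*trials)]
        for trial_type, trials in grouped.items()
    }


epochs = [
    ("sure", [0.0, 1.0, 4.0]),
    ("sure", [0.0, 3.0, 2.0]),
    ("didn't see", [0.0, 1.0, 0.0]),
]
erfs = average_erfs(epochs)   # erfs["sure"] == [0.0, 2.0, 3.0]
```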

For each subject, time-series data collected for 150 trials (one run) were averaged for each MEG sensor (after collapsing across all experimental conditions). These data were plotted to observe the timing of all salient ERFs evoked by the general paradigm used (Fig. 3). By using these results, relevant time windows of interest were calculated for each salient ERF.

The time point associated with the peak of the largest-amplitude ERF was chosen after inspection of data averaged across all trial types (as detailed above). To visualize the associated global field pattern of MEG activity, grand-average data were topographically plotted for this time point, and maximally activated sensor groups were identified (Fig. 4). Identified sensor groups were used to select channels for further analysis (as detailed below).

As response maxima varied slightly in sensor location across subjects, the set of sensors used for further ERF analyses was selected individually by using the following criteria: (i) 10 sensors were selected for each MEG sensor group showing strong ERFs in the topographical plot of grand-averaged data (described above); (ii) channels selected for each group had to display large deflections from baseline (during the peak of the most salient ERF) that were statistically different from the prestimulus period [Kolmogorov–Smirnov test, corrected for multiple comparisons with a false discovery rate of 0.05 (37); this statistic was used to ensure that selected channels showed poststimulus signal distributions that were significantly different from the prestimulus baseline]; and (iii) time-series data for selected channels had to be representative of those recorded in surrounding sensors.
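
Criterion (ii) involves a false discovery rate correction across channels. The Python sketch below illustrates one common form of that correction, assuming the standard Benjamini-Hochberg step-up rule and assuming that a Kolmogorov–Smirnov p value has already been computed for each channel. The channel labels, p values, and function names are hypothetical, and the exact variant used in the study is not specified in the text.

```python
def fdr_threshold(p_values, q=0.05):
    """Return the Benjamini-Hochberg p-value cutoff, or None if no test passes."""
    ordered = sorted(p_values)
    m = len(ordered)
    cutoff = None
    for rank, p in enumerate(ordered, start=1):
        # Step-up rule: keep the largest p(rank) with p(rank) <= q * rank / m.
        if p <= q * rank / m:
            cutoff = p
    return cutoff


def select_channels(channel_p, q=0.05):
    """Return the channels whose p value survives the FDR correction."""
    cutoff = fdr_threshold(list(channel_p.values()), q)
    if cutoff is None:
        return []
    return sorted(ch for ch, p in channel_p.items() if p <= cutoff)


# Hypothetical per-channel p values (prestimulus vs. poststimulus distributions).
channel_p = {"ch_a": 0.001, "ch_b": 0.012, "ch_c": 0.025, "ch_d": 0.200, "ch_e": 0.700}
selected = select_channels(channel_p)   # ["ch_a", "ch_b", "ch_c"]
```

With five tests and q = 0.05, the rank-dependent limits are 0.01, 0.02, 0.03, 0.04, and 0.05, so the three smallest p values pass in this example.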

For each individual, MEG data were grouped according to the four experimental trial types [i.e., awareness reports of (i) “didn’t see,” (ii) “couldn’t identify,” (iii) “unsure,” and (iv) “sure”]. To ensure that ERF signal-to-noise ratios were matched across all conditions per subject, the number of trials of each trial type was kept the same for each individual. The number of epochs chosen per subject corresponded to the number of epochs recorded for the trial type with the fewest trials. For conditions in which a subset of total trials was used, epochs were randomly ordered before the relevant number was chosen, thereby ensuring that selected epochs were not restricted to the start or end of a recording run (i.e., fatigue would not bias these data). The mean number of epochs per trial type was 45 ± 8.
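
The trial-count matching described above can be sketched in Python as follows. The function name, the fixed random seed, and the epoch counts are assumptions for illustration only; integers stand in for epochs.

```python
import random


def equalize_trial_counts(epochs_by_type, seed=0):
    """Randomly subsample every trial type down to the smallest trial count."""
    rng = random.Random(seed)   # seeded only to make the sketch reproducible
    n_keep = min(len(epochs) for epochs in epochs_by_type.values())
    balanced = {}
    for trial_type, epochs in epochs_by_type.items():
        shuffled = list(epochs)   # copy so the caller's list is left untouched
        rng.shuffle(shuffled)     # random order avoids start/end-of-run bias
        balanced[trial_type] = shuffled[:n_keep]
    return balanced


# Hypothetical epoch indices for one subject.
epochs_by_type = {
    "didn't see": list(range(60)),
    "couldn't identify": list(range(38)),
    "unsure": list(range(45)),
    "sure": list(range(52)),
}
balanced = equalize_trial_counts(epochs_by_type)
```

Here every trial type is reduced to 38 epochs, the count of the least frequent report in this made-up example.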

For each trial type, ERFs were calculated for each MEG sensor. To generalize data across spatially localized sensor areas, ERFs for the 10 selected channels of each identified sensor group were averaged to yield a region-specific ERF for each sensor group and experimental condition. Amplitude and latency measures were then calculated (as detailed below).

For each sensor group and experimental condition (i.e., trial type), ERF data were averaged across all subjects. Resulting grand-averaged signals were plotted separately per condition to visualize differences in evoked responses correlated with each subjective awareness report. Variance measures for each data point were plotted ± SEM.
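
A grand average with a per-sample SEM across subjects can be sketched as follows. This Python example is illustrative only; `grand_average` is a hypothetical helper and the input values are synthetic.

```python
from math import sqrt
from statistics import fmean, stdev


def grand_average(subject_erfs):
    """Return (mean, SEM) per time point across subjects.

    `subject_erfs` holds one equal-length ERF per subject for a single
    sensor group and condition; at least two subjects are required.
    """
    mean, sem = [], []
    for samples in zip(*subject_erfs):   # values of all subjects at one time point
        mean.append(fmean(samples))
        sem.append(stdev(samples) / sqrt(len(samples)))
    return mean, sem


# Three hypothetical subjects, two time points each.
subject_erfs = [[0.0, 1.0], [0.0, 2.0], [0.0, 3.0]]
mean, sem = grand_average(subject_erfs)   # mean == [0.0, 2.0]
```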

For each individual, the peak amplitude and latency of each salient ERF were measured per subjective awareness response choice and sensor group of interest (as detailed above).

ERF peak amplitudes were measured as the absolute value of the maximal signal deflection from baseline for each identified time window of interest (i.e., corresponding to each salient ERF). Latency measures were the time point of this maximal deflection.
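
The peak measures defined above can be sketched as a short Python function. It assumes a baseline-corrected ERF (so that the baseline is zero) and a time axis in milliseconds; the function name, window limits, and sample values are hypothetical.

```python
def peak_in_window(erf, times_ms, window_ms):
    """Return (peak amplitude, latency in ms) within a time window.

    Amplitude is the absolute value of the maximal deflection from a zero
    baseline; latency is the time point of that deflection.
    """
    start, stop = window_ms
    candidates = [
        (abs(value), t) for value, t in zip(erf, times_ms) if start <= t <= stop
    ]
    # max() raises ValueError if no sample falls inside the window.
    return max(candidates, key=lambda pair: pair[0])


times_ms = [0, 50, 100, 150, 200, 250, 300]
erf = [0.0, 0.5, -2.0, 0.3, 1.0, 1.5, 0.2]

early = peak_in_window(erf, times_ms, (50, 150))    # (2.0, 100)
late = peak_in_window(erf, times_ms, (200, 300))    # (1.5, 250)
```

Taking the absolute value means that a negative-going deflection, such as the one at 100 ms here, is measured by its magnitude.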

Amplitude differences were first compared within a given sensor group. Pairwise comparisons of each experimental condition yielded similar trends in significant differences for all identified sensor groups of interest. Hence, the values for each experimental condition were combined across sensor groups. Statistical analysis was then repeated to yield a more general pattern of differences common to all identified sensor groups. Statistical significance was determined by using a two-tailed t test. Variance measures were reported as SEMs.

Mean latencies were calculated for each ERF of interest by averaging across all conditions and subjects for early, middle, and late time windows of interest. Variance was reported as SEM.

Footnotes

The authors declare no conflict of interest.

References

- 1. Zeki S. Parallel processing, asynchronous perception, and a distributed system of consciousness in vision. Neuroscientist. 1998;4(5):365–372.

- 2. Pins D, Ffytche D. The neural correlates of conscious vision. Cereb Cortex. 2003;13(5):461–474. doi: 10.1093/cercor/13.5.461.

- 3. Corthout E, Uttl B, Walsh V, Hallett M, Cowey A. Timing of activity in early visual cortex as revealed by transcranial magnetic stimulation. Neuroreport. 1999;10(13):2631–2634. doi: 10.1097/00001756-199908200-00035.

- 4. Tong F. Primary visual cortex and visual awareness. Nat Rev Neurosci. 2003;4(3):219–229. doi: 10.1038/nrn1055.

- 5. Ergenoglu T, et al. Alpha rhythm of the EEG modulates visual detection performance in humans. Brain Res Cogn Brain Res. 2004;20(3):376–383. doi: 10.1016/j.cogbrainres.2004.03.009.

- 6. Babiloni C, Vecchio F, Bultrini A, Luca Romani G, Rossini PM. Pre- and poststimulus alpha rhythms are related to conscious visual perception: A high-resolution EEG study. Cereb Cortex. 2006;16(12):1690–1700. doi: 10.1093/cercor/bhj104.

- 7. Ishai A, Ungerleider LG, Martin A, Schouten JL, Haxby JV. Distributed representation of objects in the human ventral visual pathway. Proc Natl Acad Sci USA. 1999;96(16):9379–9384. doi: 10.1073/pnas.96.16.9379.

- 8. Del Cul A, Baillet S, Dehaene S. Brain dynamics underlying the nonlinear threshold for access to consciousness. PLoS Biol. 2007;5(10):e260. doi: 10.1371/journal.pbio.0050260.

- 9. Koivisto M, et al. The earliest electrophysiological correlate of visual awareness? Brain Cogn. 2008;66(1):91–103. doi: 10.1016/j.bandc.2007.05.010.

- 10. Wilenius ME, Revonsuo AT. Timing of the earliest ERP correlate of visual awareness. Psychophysiology. 2007;44(5):703–710. doi: 10.1111/j.1469-8986.2007.00546.x.

- 11. Ojanen V, Revonsuo A, Sams M. Visual awareness of low-contrast stimuli is reflected in event-related brain potentials. Psychophysiology. 2003;40(2):192–197. doi: 10.1111/1469-8986.00021.

- 12. Campbell FW, Kulikowski JJ. The visual evoked potential as a function of contrast of a grating pattern. J Physiol. 1972;222(2):345–356. doi: 10.1113/jphysiol.1972.sp009801.

- 13. Sekar K, Findley WM, Llinás RR. Evidence for an all-or-none perceptual response: Single-trial analyses of magnetoencephalography signals indicate an abrupt transition between visual perception and its absence. Neuroscience. 2012;206:167–182. doi: 10.1016/j.neuroscience.2011.09.060.

- 14. Hämäläinen MS. Basic principles of magnetoencephalography. Acta Radiol Suppl. 1991;377:58–62.

- 15. Vallbo AB, Johansson R. Skin mechanoreceptors in the human hand: Neural and psychophysical thresholds. In: Zotterman Y, editor. Sensory Functions of the Skin in Primates, with Special Reference to Man. Oxford: Pergamon; 1976. pp. 185–199.

- 16. Britten KH, Shadlen MN, Newsome WT, Movshon JA. Responses of neurons in macaque MT to stochastic motion signals. Vis Neurosci. 1993;10(6):1157–1169. doi: 10.1017/s0952523800010269.

- 17. Nienborg H, Cumming B. Correlations between the activity of sensory neurons and behavior: how much do they tell us about a neuron’s causality? Curr Opin Neurobiol. 2010;20(3):376–381. doi: 10.1016/j.conb.2010.05.002.

- 18. Liu J, Harris A, Kanwisher N. Stages of processing in face perception: an MEG study. Nat Neurosci. 2002;5(9):910–916. doi: 10.1038/nn909.

- 19. Tanskanen T, Näsänen R, Montez T, Päällysaho J, Hari R. Face recognition and cortical responses show similar sensitivity to noise spatial frequency. Cereb Cortex. 2005;15(5):526–534. doi: 10.1093/cercor/bhh152.

- 20. Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG. A general mechanism for perceptual decision-making in the human brain. Nature. 2004;431(7010):859–862. doi: 10.1038/nature02966.

- 21. Polich J. Updating P300: an integrative theory of P3a and P3b. Clin Neurophysiol. 2007;118(10):2128–2148. doi: 10.1016/j.clinph.2007.04.019.

- 22. Koivisto M, Revonsuo A, Lehtonen M. Independence of visual awareness from the scope of attention: an electrophysiological study. Cereb Cortex. 2006;16(3):415–424. doi: 10.1093/cercor/bhi121.

- 23. Kaernbach C, Schröger E, Jacobsen T, Roeber U. Effects of consciousness on human brain waves following binocular rivalry. Neuroreport. 1999;10(4):713–716. doi: 10.1097/00001756-199903170-00010.

- 24. Wilenius-Emet M, Revonsuo A, Ojanen V. An electrophysiological correlate of human visual awareness. Neurosci Lett. 2004;354(1):38–41. doi: 10.1016/j.neulet.2003.09.060.

- 25. Ojanen V, Revonsuo A, Sams M. Visual awareness of low-contrast stimuli is reflected in event-related brain potentials. Psychophysiology. 2003;40(2):192–197. doi: 10.1111/1469-8986.00021.

- 26. Koivisto M, Revonsuo A, Salminen N. Independence of visual awareness from attention at early processing stages. Neuroreport. 2005;16(8):817–821. doi: 10.1097/00001756-200505310-00008.

- 27. Koivisto M, Revonsuo A. An ERP study of change detection, change blindness, and visual awareness. Psychophysiology. 2003;40(3):423–429. doi: 10.1111/1469-8986.00044.

- 28. Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. J Cogn Neurosci. 1996;8(6):551–565. doi: 10.1162/jocn.1996.8.6.551.

- 29. Allison T, Puce A, Spencer DD, McCarthy G. Electrophysiological studies of human face perception. I: Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cereb Cortex. 1999;9(5):415–430. doi: 10.1093/cercor/9.5.415.

- 30. Rossion B, Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage. 2008;39(4):1959–1979. doi: 10.1016/j.neuroimage.2007.10.011.

- 31. Bentin S, Mouchetant-Rostaing Y, Giard MH, Echallier JF, Pernier J. ERP manifestations of processing printed words at different psycholinguistic levels: time course and scalp distribution. J Cogn Neurosci. 1999;11(3):235–260. doi: 10.1162/089892999563373.

- 32. Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of visual behavior. Cambridge: MIT Press; 1982. pp. 549–586.

- 33. Milner DA, Goodale MA. The Visual Brain in Action. Oxford: Oxford Univ Press; 2001.

- 34. Llinas RR, Ribary U, Joliot M, Wang X-J. Content and context in temporal thalamocortical binding. In: Buzsaki G, Llinas R, Singer W, Berthoz A, editors. Temporal Coding in the Brain. Berlin: Springer-Verlag; 1994. pp. 251–272.

- 35. Barlow HB, Kohn HI, Walsh EG. The effect of dark adaptation and of light upon the electric threshold of the human eye. Am J Physiol. 1947;148(2):376–381. doi: 10.1152/ajplegacy.1947.148.2.376.

- 36. Kesten H. Accelerated stochastic-approximation. Ann Math Stat. 1958;29(1):41–59.

- 37. Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15(4):870–878. doi: 10.1006/nimg.2001.1037.