Abstract

Context

The Centers for Medicare & Medicaid Services publicly reports hospital 30-day all-cause risk-standardized mortality rates (RSMRs) and 30-day all-cause risk-standardized readmission rates (RSRRs) for acute myocardial infarction (AMI), heart failure (HF), and pneumonia. The relationship between hospital performance as measured by RSMRs and RSRRs has not been well characterized.

Objective

We determined the relationship between hospital RSMRs and RSRRs overall, and within subgroups defined by hospital characteristics.

Design, Setting, and Participants

We studied Medicare fee-for-service beneficiaries discharged with AMI, HF, or pneumonia between July 1, 2005 and June 30, 2008. We quantified the correlation between hospital RSMRs and RSRRs using weighted linear correlation; evaluated correlations in groups defined by hospital characteristics; and determined the proportion of hospitals with better and worse performance on both measures.

Main Outcome Measures

Hospital 30-day RSMRs and RSRRs.

Results

The analyses included 4506 hospitals for AMI; 4767 hospitals for HF; and 4811 hospitals for pneumonia. The mean RSMRs and RSRRs were 16.60% and 19.94% for AMI; 11.17% and 24.56% for HF; and 11.64% and 18.22% for pneumonia. The correlations between RSMRs and RSRRs were 0.03 (95% confidence interval [CI]: −0.002, 0.06) for AMI, −0.17 (95% CI: −0.20, −0.14) for HF, and 0.002 (95% CI: −0.03, 0.03) for pneumonia. The results were similar for subgroups defined by hospital characteristics. Although there was a significant negative linear relationship between RSMRs and RSRRs for HF, the shared variance between them was only 2.90% (r² = 0.029).

Conclusions

Our findings do not support concerns that hospitals with lower RSMRs will necessarily have higher RSRRs. The rates are not associated for patients admitted with AMI or pneumonia, and are only weakly associated for patients admitted with HF, and then only within a limited range of RSMRs.

Introduction

Measuring and improving hospital quality of care, particularly outcomes of care, is an important focus for clinicians and policymakers. The Centers for Medicare & Medicaid Services (CMS) began publicly reporting hospital 30-day all-cause risk-standardized mortality rates (RSMRs) for patients with acute myocardial infarction (AMI) and heart failure (HF) in June 2007 and for pneumonia in 2008. In June 2009, CMS expanded public reporting to include hospital 30-day all-cause risk-standardized readmission rates (RSRRs) for patients hospitalized with these 3 conditions.1–8 The National Quality Forum approved these measures and an independent committee of statisticians nominated by the Committee of Presidents of Statistical Societies endorsed the validity of the methodology.9 The mortality and readmission measures have been proposed for use in federal programs to modify hospital payments based on performance.10,11

Some researchers have raised concerns that hospital mortality rates and readmission rates have an inverse relationship, such that hospitals with lower mortality rates are more likely to have higher readmission rates.12,13 Such a relationship would suggest that interventions that reduce mortality might also increase readmission rates, because more high-risk patients would survive to discharge. Conversely, the 2 measures could provide redundant information: if they had a strong positive association, we could infer that they reflect similar processes and that it may not be necessary to measure both. Little is known about this relationship, although understanding it is critical to quality measurement,12 and questions about a possible inverse relationship have already led to public concerns about the measures.14

In this study, we investigated the association between hospital-level 30-day RSMRs and RSRRs for Medicare fee-for-service beneficiaries admitted with AMI, HF, or pneumonia, which are the measures that are publicly reported. We further determined the relationships between these measures within subgroups of hospitals to evaluate whether they varied systematically by hospital type (e.g., teaching status, geographic location). Finally, we used top and bottom performance quartiles to examine the percentage of hospitals that had similar performance on both measures for each condition. We hypothesized that these measures convey distinct information and are not strongly correlated, and that many hospitals perform well on both measures or poorly on both, indicating that performance on one measure does not dictate performance on the other.

METHODS

Study Cohort

The study cohorts included hospitalizations of Medicare beneficiaries aged 65 years and older with a principal discharge diagnosis of AMI, HF, or pneumonia as potential index hospitalizations. We used International Classification of Diseases, 9th Revision, Clinical Modification (ICD-9-CM) codes to identify AMI, HF, and pneumonia discharges between July 1, 2005 and June 30, 2008.15 We used Medicare hospital inpatient, outpatient, and physician Standard Analytical Files to identify admissions, readmissions, and inpatient and outpatient diagnosis codes, and assigned each hospitalization to a disease cohort based on the principal discharge diagnosis. We determined mortality and enrollment status from the Medicare Enrollment Database.

We defined the study samples in accordance with CMS methods.2,4–8 We restricted the samples to patients enrolled in fee-for-service Medicare Parts A and B for 12 months before their index hospitalizations in order to maximize our ability to risk-adjust. We excluded patients who left the hospital against medical advice and those with a length of stay longer than 1 year. The mortality cohorts included 1 randomly selected admission per patient annually. In the mortality cohorts, patients transferred to another acute-care hospital were excluded if their principal discharge diagnosis was not the same at both hospitals, as were admissions of individuals enrolled in hospice at admission or at any time in the previous 12 months.

To construct the cohort for the analyses of RSRRs, we included hospitalizations for patients who were discharged alive and who continued in fee-for-service for at least 30 days following discharge. Multiple index hospitalizations per patient were included if another index hospitalization occurred at least 30 days after discharge from the prior index hospitalization. The readmission and mortality samples thus, by design, include different, partially overlapping subsets of Medicare patients.
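The index-selection rule for the readmission cohort can be sketched for a single patient as follows. This is a minimal illustration, not the CMS implementation: it assumes the stays have already been restricted to eligible discharges (discharged alive, qualifying principal diagnosis, continued fee-for-service enrollment), it reads "at least 30 days" literally as a gap of 30 or more days, and the function name and data layout are hypothetical.

```python
from datetime import date
from typing import List, Tuple

# Hypothetical layout: one (admission_date, discharge_date) pair per eligible stay.
Stay = Tuple[date, date]


def select_index_stays(stays: List[Stay], gap_days: int = 30) -> List[Stay]:
    """Return the stays that can serve as index hospitalizations for one patient.

    A stay becomes a new index stay only if it starts at least `gap_days` days
    after the discharge date of the previous *index* stay; stays that start
    inside that window are candidate readmissions, not new index stays.
    """
    index_stays: List[Stay] = []
    for admit, discharge in sorted(stays):
        if not index_stays or (admit - index_stays[-1][1]).days >= gap_days:
            index_stays.append((admit, discharge))
    return index_stays


stays = [
    (date(2006, 1, 1), date(2006, 1, 5)),
    (date(2006, 1, 20), date(2006, 1, 25)),  # 15 days after index discharge: not an index stay
    (date(2006, 3, 1), date(2006, 3, 4)),    # 55 days after index discharge: new index stay
]
print(select_index_stays(stays))
```

Measuring the gap from the last index discharge, rather than from any intervening readmission, follows the wording of the cohort definition above.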

Hospital 30-Day RSMRs and RSRRs

We estimated hospital 30-day all-cause RSMRs and RSRRs for Medicare patients hospitalized with AMI, HF, and pneumonia at all non-federal acute-care hospitals during 2005 to 2008, using methods endorsed by the National Quality Forum and used by CMS in public reporting. We defined 30-day mortality as death from any cause within 30 days of the date of admission, and readmission as 1 or more hospitalizations for any cause, within 30 days of discharge from an index hospitalization, at any U.S. acute-care hospital participating in fee-for-service Medicare. In the mortality analysis, we linked transfers into a single episode of care, with outcomes attributed to the first (transfer-out) hospital. In the readmission analysis, we attributed readmissions to the hospital that discharged the patient to a non-acute setting.
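The two outcome windows are anchored differently: mortality is counted from admission, readmission from discharge. A minimal sketch of both definitions follows, assuming that `discharge` is the final discharge of the episode (after any transfers have been linked) and that `later_admissions` already contains only admissions to U.S. acute-care hospitals participating in fee-for-service Medicare. Treating day 30 as inclusive is our assumption; the function names are hypothetical.

```python
from datetime import date
from typing import Iterable, Optional

WINDOW_DAYS = 30  # outcome window from the text; inclusive upper bound is an assumption


def died_within_30_days(admission: date, death: Optional[date]) -> bool:
    """30-day mortality: death from any cause within 30 days of the index *admission* date."""
    return death is not None and 0 <= (death - admission).days <= WINDOW_DAYS


def readmitted_within_30_days(discharge: date, later_admissions: Iterable[date]) -> bool:
    """30-day readmission: any later acute-care admission within 30 days of index *discharge*."""
    return any(0 <= (admit - discharge).days <= WINDOW_DAYS for admit in later_admissions)


# A death 30 days after admission counts; an admission 31 days after discharge does not.
print(died_within_30_days(date(2006, 1, 1), date(2006, 1, 31)))
print(readmitted_within_30_days(date(2006, 1, 10), [date(2006, 2, 10)]))
```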

Hospital Characteristics

To examine whether the relationship between RSMR and RSRR was consistent across subgroups of hospitals, we stratified the sample by teaching status, ownership, safety-net status, and urban/rural location. We used Annual Survey data from the American Hospital Association to classify public or private hospitals as safety-net hospitals if their Medicaid caseload was more than 1 standard deviation above the mean Medicaid caseload in their state, as in previous analyses of access and quality in safety-net hospitals.16,17 We used hospital ZIP codes to classify hospitals as urban or rural.18,19
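The safety-net rule compares each hospital with the other hospitals in its own state. A minimal sketch, assuming each hospital's Medicaid caseload is already a single number (for example, a hypothetical Medicaid share of inpatient days) and that the state mean and sample standard deviation are unweighted across hospitals; neither detail is specified in the text.

```python
import statistics
from collections import defaultdict
from typing import Dict, List, Tuple


def flag_safety_net(hospitals: List[Tuple[str, str, float]]) -> Dict[str, bool]:
    """Flag hospitals whose Medicaid caseload is more than 1 SD above their state's mean.

    hospitals: (hospital_id, state, medicaid_caseload) triples. The caseload
    metric itself is a hypothetical choice for illustration.
    """
    caseloads_by_state: Dict[str, List[float]] = defaultdict(list)
    for _, state, caseload in hospitals:
        caseloads_by_state[state].append(caseload)

    threshold: Dict[str, float] = {}
    for state, values in caseloads_by_state.items():
        # Sample SD; a state with a single hospital gets SD = 0 (assumption).
        sd = statistics.stdev(values) if len(values) > 1 else 0.0
        threshold[state] = statistics.mean(values) + sd

    return {hid: caseload > threshold[state] for hid, state, caseload in hospitals}


example = [
    ("A", "CT", 0.10), ("B", "CT", 0.10), ("C", "CT", 0.10),
    ("D", "CT", 0.10), ("E", "CT", 0.50), ("F", "RI", 0.20),
]
print(flag_safety_net(example))
```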

Statistical Analysis

We used hierarchical logistic regression models to estimate RSMRs and RSRRs for each hospital. The RSMR models were estimated using a logit link, with the first level adjusted for age, sex, and 25 clinical covariates for AMI, 21 clinical covariates for HF, and 28 clinical covariates for pneumonia. In a similar procedure, the RSRR models were adjusted at the first level for age, sex, and 29 clinical covariates for AMI, 35 clinical covariates for HF, and 38 clinical covariates for pneumonia. We coded covariates from inpatient and outpatient claims during the 12 months before the index admission. The second level of the mortality and readmission models permitted hospital-level random intercepts to vary in order to identify hospital-specific random effects and account for the clustering of patients within the same hospital.20 With this approach, we separated within-hospital from between-hospital variation after adjusting for patient characteristics.
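The text does not spell out how a hospital's rate is derived from the fitted models. As we understand the CMS methodology described in the measure development papers (refs 2,4–8), each RSMR or RSRR is the ratio of the hospital's "predicted" outcomes (using its own random intercept) to its "expected" outcomes (using the average hospital intercept), multiplied by the national unadjusted rate. The sketch below assumes that formulation and takes already-fitted parameters as inputs; model fitting is not shown, and all names and example values are hypothetical.

```python
import numpy as np


def inv_logit(z: np.ndarray) -> np.ndarray:
    """Inverse of the logit link."""
    return 1.0 / (1.0 + np.exp(-z))


def risk_standardized_rate(X, beta, hospital_intercept, mean_intercept, national_rate):
    """Risk-standardized rate for one hospital from a fitted hierarchical logistic model.

    X                  : (n_patients, p) covariates for this hospital's patients
    beta               : (p,) patient-level (first-level) coefficients
    hospital_intercept : this hospital's estimated (shrunken) random intercept
    mean_intercept     : average intercept across all hospitals
    national_rate      : unadjusted national outcome rate, as a proportion
    """
    linear = np.asarray(X, dtype=float) @ np.asarray(beta, dtype=float)
    predicted = inv_logit(hospital_intercept + linear).sum()  # outcomes expected at this hospital
    expected = inv_logit(mean_intercept + linear).sum()       # outcomes expected at an average hospital
    return predicted / expected * national_rate


rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))        # hypothetical case mix for 50 patients
beta = np.array([0.4, -0.2, 0.1])   # hypothetical coefficients
print(risk_standardized_rate(X, beta, -1.4, -1.6, 0.166))
```

A hospital whose intercept equals the average intercept receives exactly the national rate, whatever its case mix; this is how the second-level random effect, rather than patient characteristics, drives differences between hospitals.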

We calculated means and distributions of hospital RSMRs and RSRRs. We quantified the linear and non-linear relationships between the 2 estimators. To do so, we determined the Pearson correlation between the estimated RSMRs and RSRRs, weighting each hospital by the average of its mortality and readmission case volumes. We weighted the estimates because each has its own uncertainty, even after shrinkage, which reflects both the number of cases on which the estimate is based and the degree of within-hospital clustering. To identify potential nonlinear relationships between RSMRs and RSRRs for the 3 conditions, we also fitted generalized additive models with RSRR as the dependent variable and a cubic spline smoother of RSMR as the independent variable. We also stratified correlations by the hospital characteristics described above.
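A minimal sketch of the weighted Pearson correlation, with each hospital weighted by the average of its mortality and readmission case counts. The weighted-moment formula is the standard one; the function name and example hospitals are hypothetical, and the authors' SAS implementation may differ in detail.

```python
import numpy as np


def weighted_pearson(x, y, w) -> float:
    """Pearson correlation of x and y with non-negative observation weights w."""
    x, y, w = (np.asarray(a, dtype=float) for a in (x, y, w))
    w = w / w.sum()                         # normalize so the weights sum to 1
    mx, my = np.sum(w * x), np.sum(w * y)   # weighted means
    cov = np.sum(w * (x - mx) * (y - my))
    var_x = np.sum(w * (x - mx) ** 2)
    var_y = np.sum(w * (y - my) ** 2)
    return float(cov / np.sqrt(var_x * var_y))


# Hypothetical hospitals: RSMR (%), RSRR (%), and case volumes for each measure.
rsmr = [16.1, 17.0, 15.4, 18.2]
rsrr = [20.3, 19.8, 19.9, 21.0]
n_mortality = [120, 40, 300, 75]
n_readmission = [110, 35, 290, 70]
weights = [(a + b) / 2 for a, b in zip(n_mortality, n_readmission)]
r = weighted_pearson(rsmr, rsrr, weights)
print(round(r, 3))
```

With equal weights the function reduces to the ordinary Pearson correlation, which makes it easy to check against `numpy.corrcoef`.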

For each condition, we also classified all hospitals by quartile of RSMR and quartile of RSRR. We considered hospitals to be higher performers if they were in the lowest quartile of both RSMR and RSRR, and lower performers if they were in the highest quartile of both.
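A minimal sketch of the quartile cross-classification, assuming cut points at the 25th, 50th, and 75th percentiles of each measure, with hospitals exactly at a cut point assigned to the lower quartile (the text does not say how ties were handled). Quartile 1 is the lowest, i.e. best-performing, rate; the function names are hypothetical.

```python
import numpy as np


def quartiles(values) -> np.ndarray:
    """Quartile 1 (lowest rate) to 4 (highest rate) for each hospital."""
    values = np.asarray(values, dtype=float)
    cuts = np.percentile(values, [25, 50, 75])
    # right=True sends a value equal to a cut point into the lower quartile
    return np.digitize(values, cuts, right=True) + 1


def cross_classify(rsmr, rsrr) -> np.ndarray:
    """4 x 4 table of hospital counts: rows = RSMR quartile, columns = RSRR quartile."""
    table = np.zeros((4, 4), dtype=int)
    np.add.at(table, (quartiles(rsmr) - 1, quartiles(rsrr) - 1), 1)
    return table


table = cross_classify(rsmr=range(1, 9), rsrr=range(1, 9))
higher_performers = table[0, 0]  # lowest quartile on both measures
lower_performers = table[3, 3]   # highest quartile on both measures
print(table)
```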

We conducted correlation analyses and calculated means and performance categories using SAS 9.1 (SAS Institute, Cary, NC). We used the mgcv package in R to fit generalized additive models. We obtained Institutional Review Board approval through the Yale University Human Investigation Committee.

RESULTS

Study Sample

For AMI, the sample for final analysis consisted of 4506 hospitals with 590,809 admissions for mortality and 586,027 admissions for readmission; for HF, 4767 hospitals with 1,161,179 admissions for mortality and 1,430,030 admissions for readmission; and for pneumonia, 4811 hospitals with 1,225,366 admissions for mortality and 1,297,031 admissions for readmission (Table 1).

Table 1.

Distribution of RSMRs and RSRRs, and estimated between-hospital variance.

| Measure | N patients | N hospitals | Mean (SD) | Minimum | 25th percentile | 50th percentile | 75th percentile | Maximum | Estimated between-hospital variance |
|---|---|---|---|---|---|---|---|---|---|
| Acute myocardial infarction | | | | | | | | | |
| RSMR (%) | 590,809 | 4506 | 16.60 (1.55) | 10.90 | 15.74 | 16.57 | 17.43 | 24.90 | 0.048 |
| RSRR (%) | 586,027 | 4506 | 19.94 (1.05) | 15.26 | 19.45 | 19.87 | 20.37 | 29.40 | 0.022 |
| Heart failure | | | | | | | | | |
| RSMR (%) | 1,161,179 | 4767 | 11.17 (1.46) | 6.60 | 10.26 | 11.06 | 11.96 | 19.85 | 0.055 |
| RSRR (%) | 1,430,030 | 4767 | 24.56 (1.95) | 15.94 | 23.38 | 24.42 | 25.63 | 34.35 | 0.026 |
| Pneumonia | | | | | | | | | |
| RSMR (%) | 1,225,366 | 4811 | 11.64 (1.84) | 6.36 | 10.39 | 11.46 | 12.68 | 21.58 | 0.069 |
| RSRR (%) | 1,297,031 | 4811 | 18.22 (1.63) | 13.05 | 17.16 | 18.09 | 19.14 | 27.57 | 0.029 |

Abbreviations: RSMR, risk-standardized mortality rate; RSRR, risk-standardized readmission rate; SD, standard deviation

RSMRs and RSRRs

The median RSMR was 16.57% for AMI, 11.06% for HF, and 11.46% for pneumonia. The RSMRs ranged from 10.90% to 24.90% for AMI, from 6.60% to 19.85% for HF, and from 6.36% to 21.58% for pneumonia (Table 1). The interquartile ranges spanned 1.69 percentage points for AMI, 1.70 for HF, and 2.29 for pneumonia. The median RSRR was 19.87% for AMI, 24.42% for HF, and 18.09% for pneumonia. The RSRRs ranged from 15.26% to 29.40% for AMI, from 15.94% to 34.35% for HF, and from 13.05% to 27.57% for pneumonia. The interquartile ranges spanned 0.92 percentage points for AMI, 2.25 for HF, and 1.98 for pneumonia.
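The interquartile ranges reported here are the 75th minus the 25th percentile in Table 1, in percentage points. A short check of that arithmetic, using values transcribed from Table 1:

```python
# 25th and 75th percentiles (%) transcribed from Table 1.
quartile_bounds = {
    ("AMI", "RSMR"): (15.74, 17.43),
    ("HF", "RSMR"): (10.26, 11.96),
    ("Pneumonia", "RSMR"): (10.39, 12.68),
    ("AMI", "RSRR"): (19.45, 20.37),
    ("HF", "RSRR"): (23.38, 25.63),
    ("Pneumonia", "RSRR"): (17.16, 19.14),
}

# Interquartile range in percentage points = 75th percentile - 25th percentile.
iqr = {key: round(q75 - q25, 2) for key, (q25, q75) in quartile_bounds.items()}
for (condition, measure), width in iqr.items():
    print(f"{condition:<10} {measure}: {width:.2f} percentage points")
```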

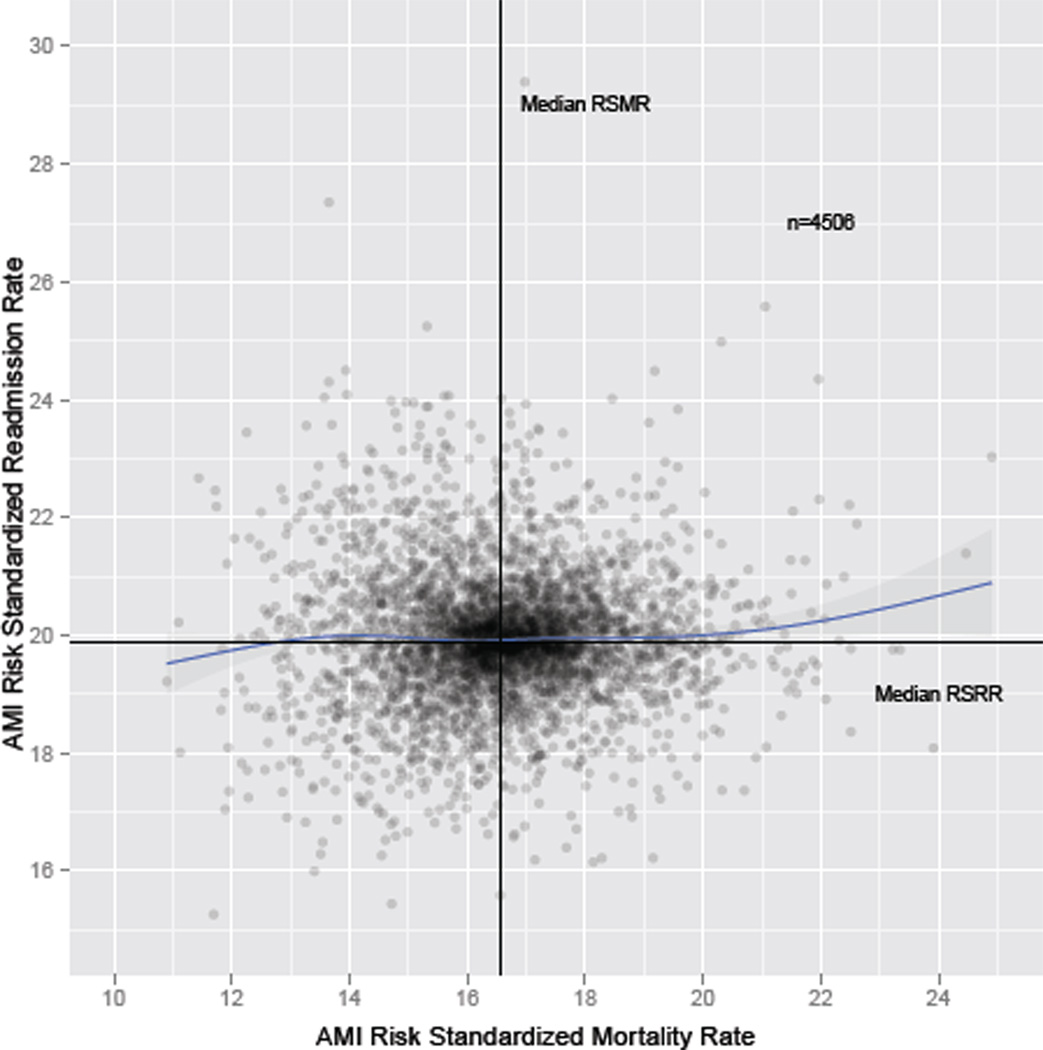

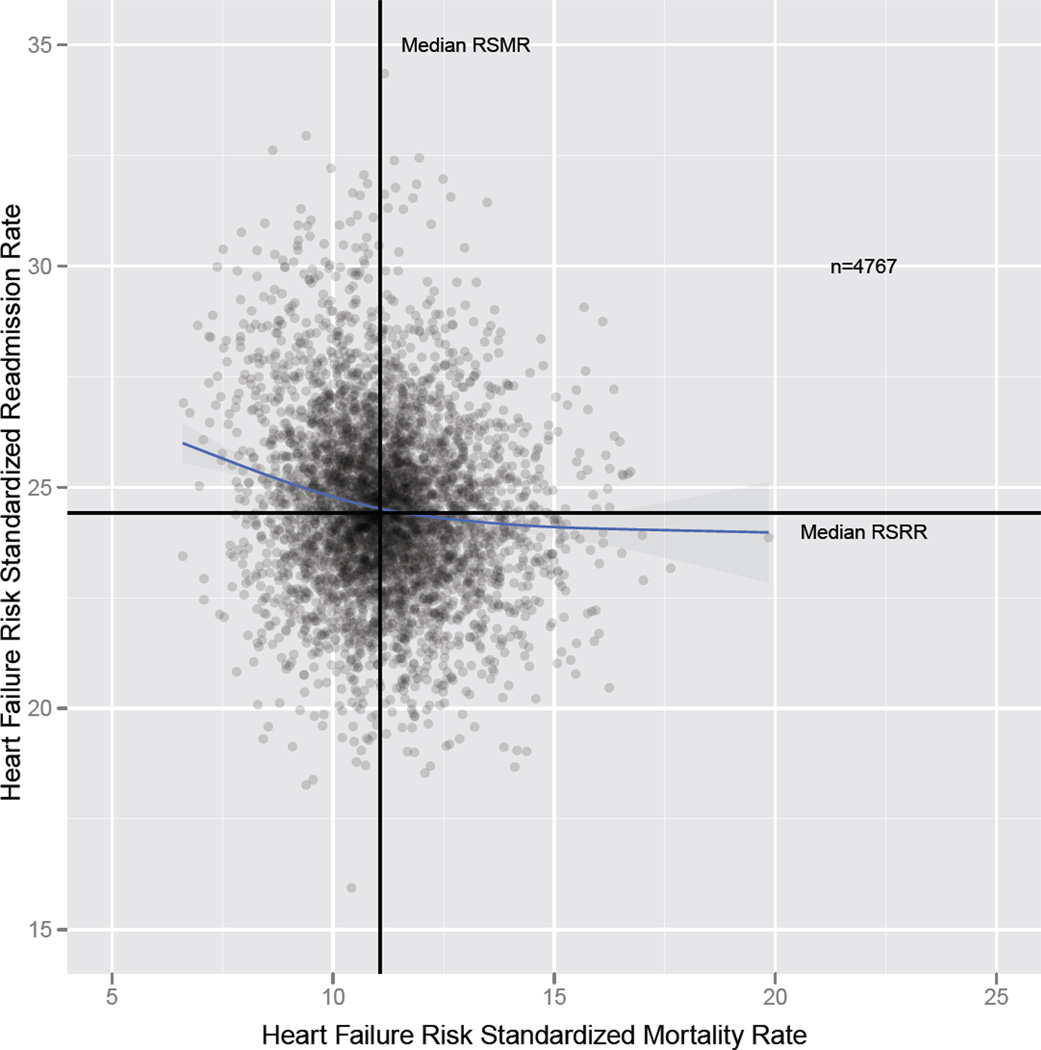

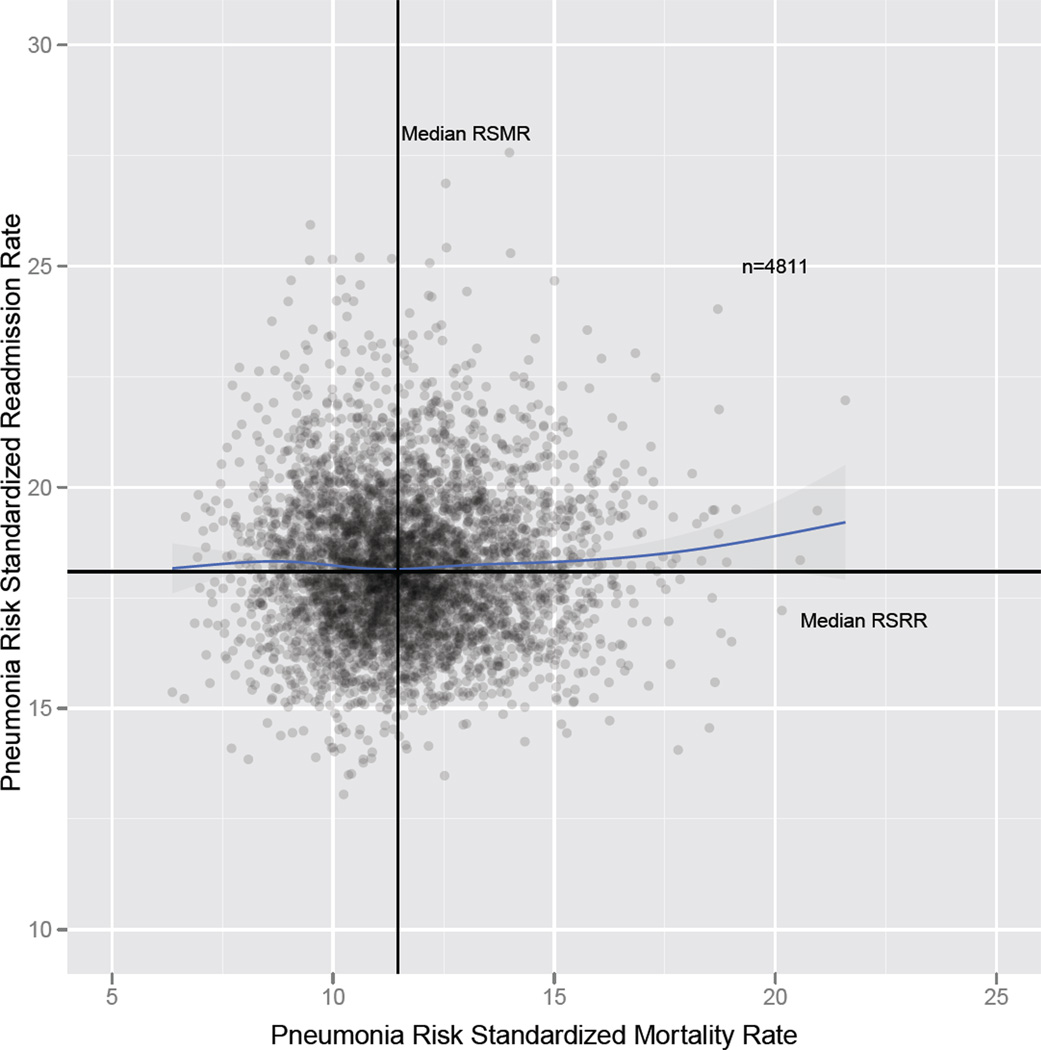

The Pearson correlation (95% confidence interval [CI]) between RSMRs and RSRRs was 0.03 (−0.002, 0.06) for AMI, −0.17 (−0.20, −0.14) for HF, and 0.002 (−0.03, 0.03) for pneumonia (Figures 1a, 1b, 1c; Table 2). The linear association was statistically significant only for HF. Results from the generalized additive models were consistent with these findings, showing no apparent relationship between RSMRs and RSRRs for AMI or pneumonia. Although we observed a significant negative linear relationship between RSMRs and RSRRs for HF, the shared variance between them was only 2.9% (r² = 0.029). For HF, the relationship was most prominent in the lower range of RSMRs (e.g., hospitals with an RSMR <11%).
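As a rough check of the HF interval and shared variance, the sketch below applies the Fisher z transformation to an unweighted correlation of −0.17 with n = 4767 hospitals. The text does not state how the CIs were computed, and the published correlations are weighted, so this is an approximation rather than a reproduction of the authors' method.

```python
import math


def fisher_ci(r: float, n: int, z_crit: float = 1.96) -> tuple:
    """Approximate 95% CI for a Pearson correlation via the Fisher z transformation."""
    z = math.atanh(r)              # transform r to an approximately normal scale
    se = 1.0 / math.sqrt(n - 3)    # standard error of z for n observations
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


r_hf, n_hf = -0.17, 4767
lo, hi = fisher_ci(r_hf, n_hf)
shared_variance = r_hf ** 2        # proportion of variance shared by RSMR and RSRR
print(f"95% CI: ({lo:.3f}, {hi:.3f}); r^2 = {shared_variance:.4f}")
```

This gives approximately (−0.197, −0.142), close to the reported (−0.20, −0.14), and r² ≈ 0.029, i.e. about 2.9% shared variance.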

Figure 1.

a. Scatterplot of hospital-level RSMRs and RSRRs for AMI.

Axes show the mean RSMRs and RSRRs. The Pearson correlation coefficient is 0.03 (−0.002, 0.056). The blue line is the cubic spline smooth regression line with RSRR as the dependent variable and RSMR as the independent variable. The tinted area around the cubic spline regression line indicates a 95% confidence band.

b. Scatterplot of hospital-level RSMRs and RSRRs for HF.

Axes show the mean RSMRs and RSRRs. The Pearson correlation coefficient is −0.17 (−0.200, −0.145). The blue line is the cubic spline smooth regression line with RSRR as the dependent variable and RSMR as the independent variable. The tinted area around the cubic spline regression line indicates a 95% confidence band.

c. Scatterplot of hospital-level RSMRs and RSRRs for pneumonia.

Axes show the mean RSMRs and RSRRs. The Pearson correlation coefficient is 0.002 (−0.026, 0.031). The blue line is the cubic spline smooth regression line with RSRR as the dependent variable and RSMR as the independent variable. The tinted area around the cubic spline regression line indicates a 95% confidence band.

Abbreviations: AMI, acute myocardial infarction; HF, heart failure; RSMR, risk-standardized mortality rate; RSRR, risk-standardized readmission rate

Table 2.

Pearson correlation of RSMR and RSRR by hospital characteristics.*

| Hospital characteristic | AMI N | AMI correlation | AMI 95% CI | HF N | HF correlation | HF 95% CI | Pneumonia N | Pneumonia correlation | Pneumonia 95% CI |
|---|---|---|---|---|---|---|---|---|---|
| Overall | 4506 | 0.03 | −0.002, 0.06 | 4767 | −0.17 | −0.20, −0.14 | 4811 | 0.002 | −0.03, 0.03 |
| Teaching status | | | | | | | | | |
| COTH | 287 | 0.01 | −0.11, 0.12 | 290 | −0.21 | −0.32, −0.10 | 291 | 0.08 | −0.04, 0.19 |
| Teaching | 460 | 0.06 | −0.03, 0.15 | 465 | −0.26 | −0.34, −0.17 | 469 | −0.01 | −0.09, 0.09 |
| Non-teaching | 3427 | 0.08 | 0.05, 0.12 | 3628 | −0.13 | −0.17, −0.10 | 3651 | 0.01 | −0.02, 0.05 |
| Ownership status | | | | | | | | | |
| Private for-profit | 624 | 0.16 | 0.09, 0.24 | 650 | −0.09 | −0.17, −0.01 | 655 | 0.12 | 0.04, 0.19 |
| Private not-for-profit | 2576 | −0.004 | −0.04, 0.04 | 2647 | −0.22 | −0.26, −0.19 | 2657 | −0.03 | −0.07, 0.01 |
| Public | 974 | 0.18 | 0.12, 0.24 | 1086 | −0.01 | −0.07, 0.05 | 1099 | 0.08 | 0.02, 0.14 |
| Safety-net status | | | | | | | | | |
| Safety-net hospital | 1271 | 0.06 | 0.005, 0.11 | 1393 | −0.13 | −0.18, −0.08 | 1410 | 0.04 | −0.02, 0.09 |
| Non-safety-net hospital | 2903 | 0.01 | −0.02, 0.05 | 2990 | −0.19 | −0.22, −0.15 | 3001 | −0.01 | −0.04, 0.03 |
| Geographic location | | | | | | | | | |
| Urban | 2165 | 0.001 | −0.04, 0.04 | 2208 | −0.22 | −0.26, −0.18 | 2221 | −0.00 | −0.04, 0.04 |
| Rural | 1533 | 0.18 | 0.13, 0.23 | 1594 | −0.10 | −0.16, −0.06 | 1601 | −0.01 | −0.06, 0.04 |

Abbreviations: AMI, acute myocardial infarction; CI, confidence interval; COTH, Council of Teaching Hospitals; HF, heart failure; RSMR, risk-standardized mortality rate; RSRR, risk-standardized readmission rate

*Information on hospital bed size, ownership, teaching status, and urban status was obtained by linking to American Hospital Association 2004 data. Linkage was possible for 93% of acute myocardial infarction measure hospitals, 92% of heart failure measure hospitals, and 92% of pneumonia measure hospitals.

In subgroup analyses, the correlations between RSMRs and RSRRs did not differ substantially across any of the subgroups of hospitals, including those defined by teaching status, ownership, safety-net status, and urban/rural location (Table 2).

Top Performers

For AMI, 381 hospitals (8.5%) were in the top-performing quartile of both measures, with lower RSMRs and RSRRs; for HF, 259 hospitals (5.4%) were in the top-performing quartile of both; and for pneumonia, 307 hospitals (6.4%) were in the top-performing quartile of both. For AMI, 302 hospitals (6.7%) were in the bottom-performing quartile of both measures, with higher RSMRs and RSRRs; for HF, 252 hospitals (5.3%) were in the bottom-performing quartile of both; and for pneumonia, 344 hospitals (7.2%) were in the bottom-performing quartile of both (Tables 3a, 3b, 3c).
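Each percentage above is the count divided by the number of hospitals for that condition. As an illustrative reference point that is not part of the original analysis: if a hospital's RSMR quartile and RSRR quartile were independent, each of the 16 cells of the cross-classification would be expected to hold about 1/16, or 6.25%, of hospitals.

```python
# (hospitals in lowest quartile of both, hospitals in highest quartile of both, total hospitals)
counts = {
    "AMI": (381, 302, 4506),
    "HF": (259, 252, 4767),
    "Pneumonia": (307, 344, 4811),
}

INDEPENDENCE_SHARE = 100 / 16  # % per cell if quartile placements were independent

shares = {
    condition: (round(100 * best / n, 1), round(100 * worst / n, 1))
    for condition, (best, worst, n) in counts.items()
}
for condition, (best_pct, worst_pct) in shares.items():
    print(f"{condition}: both best {best_pct}%, both worst {worst_pct}% "
          f"(independence benchmark {INDEPENDENCE_SHARE}%)")
```

Comparing each share with the benchmark gives a quick sense of whether concordant performance is more or less common than chance alone would produce.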

Table 3.

a. Hospitals by mortality and readmission performance quartiles for AMI.

| RSMR quartile for AMI | RSRR quartile 1 | RSRR quartile 2 | RSRR quartile 3 | RSRR quartile 4 | RSMR mean (range), % |
|---|---|---|---|---|---|
| 1 | 381 (8.5%) | 174 (3.9%) | 185 (4.1%) | 387 (8.6%) | 14.7 (10.9–15.7) |
| 2 | 251 (5.6%) | 341 (7.6%) | 332 (7.4%) | 202 (4.5%) | 16.2 (15.7–16.6) |
| 3 | 215 (4.8%) | 354 (7.9%) | 323 (7.2%) | 235 (5.2%) | 17.0 (16.6–17.4) |
| 4 | 278 (6.2%) | 259 (5.8%) | 287 (6.4%) | 302 (6.7%) | 18.5 (17.4–24.9) |
| RSRR mean (range), % | 18.7 (15.3–19.5) | 19.7 (19.5–19.9) | 20.1 (19.9–20.4) | 21.2 (20.4–29.4) | |

b. Hospitals by mortality and readmission performance quartiles for HF.

| RSMR quartile for HF | RSRR quartile 1 | RSRR quartile 2 | RSRR quartile 3 | RSRR quartile 4 | RSMR mean (range), % |
|---|---|---|---|---|---|
| 1 | 259 (5.4%) | 225 (4.7%) | 284 (6.0%) | 424 (8.9%) | 9.4 (6.6–10.3) |
| 2 | 278 (5.8%) | 339 (7.1%) | 300 (6.3%) | 274 (5.8%) | 10.7 (10.3–11.1) |
| 3 | 286 (6.0%) | 314 (6.6%) | 351 (7.4%) | 242 (5.1%) | 11.5 (11.1–12.0) |
| 4 | 368 (7.7%) | 314 (6.6%) | 257 (5.4%) | 252 (5.3%) | 13.1 (12.0–19.8) |
| RSRR mean (range), % | 22.3 (15.9–23.4) | 23.9 (23.4–24.4) | 25.0 (24.4–25.6) | 27.1 (25.6–34.4) | |

c. Hospitals by mortality and readmission performance quartiles for pneumonia.

| RSMR quartile for pneumonia | RSRR quartile 1 | RSRR quartile 2 | RSRR quartile 3 | RSRR quartile 4 | RSMR mean (range), % |
|---|---|---|---|---|---|
| 1 | 307 (6.4%) | 278 (5.8%) | 289 (6.0%) | 328 (6.8%) | 9.5 (6.4–10.4) |
| 2 | 288 (6.0%) | 332 (6.9%) | 310 (6.4%) | 273 (5.7%) | 10.9 (10.4–11.5) |
| 3 | 304 (6.3%) | 327 (6.8%) | 316 (6.6%) | 257 (5.3%) | 12.0 (11.5–12.7) |
| 4 | 303 (6.3%) | 268 (5.6%) | 287 (6.0%) | 344 (7.2%) | 14.1 (12.7–21.6) |
| RSRR mean (range), % | 16.3 (13.0–17.2) | 17.6 (17.2–18.1) | 18.6 (18.1–19.1) | 20.1 (19.1–27.6) | |

Abbreviations: AMI, acute myocardial infarction; HF, heart failure; RSMR, risk-standardized mortality rate; RSRR, risk-standardized readmission rate

COMMENT

In a national study of the CMS publicly reported outcomes measures, we failed to find evidence that performance on the measure for 30-day RSMR is strongly associated with a hospital’s performance on 30-day RSRR. These findings should allay concerns that institutions with good performance on RSMRs will necessarily be identified as poor performers on their RSRRs. For AMI and pneumonia, there was no discernible relationship and for HF, the relationship was only modest and not throughout the entire range of performance. At all levels of performance on the mortality measures, we found both high and low performers on the readmission measures.

This study represents the first comprehensive examination of the relationship between these measures within hospitals. A letter to the editor in a major medical journal identified a potential concern about the relationship between the 2 measures for patients with HF.12 Our analysis is consistent with that report, extends it to all 3 publicly reported conditions, and places the HF finding in context. The association between mortality and readmission rates was present only for the HF measure; for this condition it is modest and exists only within a limited range of each measure. Moreover, we show that hospitals can do well on both measures, with many hospitals having low RSMRs and RSRRs.

Some studies have produced findings that might be interpreted as implying an inverse relationship between the measures, but they are not truly discordant with our results. For example, Heidenreich and colleagues, in a study of hospitals within the Veterans Affairs health care system, reported that, at the patient level, mortality after an admission for HF declined from 2002 to 2006 while readmission increased. They did not, however, examine changes in individual hospital performance, nor did they investigate the relationship between RSMRs and RSRRs at the hospital level or for other conditions.

There are several plausible reasons to expect a relationship between the measures, and these motivated our study. Hospitals with lower mortality rates may discharge patients with greater severity of illness than hospitals with higher mortality rates, in ways that are not accounted for in the risk models. Also, at hospitals with higher mortality rates, some patients might die before they could be readmitted, so that high mortality rates would lead to lower readmission rates. However, our empirical analysis did not support this concern. If higher mortality rates did lead to a healthier cohort of survivors and a lower risk of readmission, we would have expected a strong inverse relationship between 30-day RSMR and RSRR across all 3 conditions, because such an effect would not be specific to a single diagnosis.

Only for HF was there an association between the 2 measures, and the shared variance was small; many hospitals performed well on both HF measures, and many others performed poorly on both. Moreover, the relationship was most pronounced among hospitals in the lower range of RSMRs. That a relationship appeared for only 1 condition suggests that it is not a robust finding applicable across conditions. For HF, there may be factors that contribute to the finding, but they were not apparent in this analysis.

The findings are broadly consistent across types of hospitals. For AMI and pneumonia, correlations remained weak (all |r| ≤ 0.18) across categories of hospitals defined by teaching status, ownership, safety-net status, and rural or urban location. For HF, the correlation was negative in every subgroup, and the inverse relationship was somewhat stronger for teaching, private not-for-profit, and urban hospitals.

These findings also suggest that mortality and readmission measures convey distinct information. This observation has face validity because the factors that may be important for mortality, including rapid triage as well as early intervention and coordination in the hospital, may not be those that dominate readmission risk. For readmission, factors related to the transition from inpatient to outpatient care, patient education and support, the availability of outpatient support, and admission thresholds might play a more important role. In addition, the time periods for the 2 measures differ, which may contribute to their differences. Although both measures cover 30 days, their outcome periods start at different times. The period for the mortality measure begins at admission, and more than half of the outcomes occur before discharge. The period for the readmission measure begins at hospital discharge, so all the events occur after the index hospitalization.

Our results are also consistent with research on predictors of mortality and readmission. Factors that are strong predictors of mortality tend to be weak predictors of readmission, if there is any relationship at all.2,4–8,21,22 Mortality risk models with medical record information or claims data have good discrimination and indicate that, in total, clinical factors have a dominant influence on mortality risk. For readmission, models using medical record information or administrative claims have much weaker predictive ability and discrimination, suggesting that readmission risk is not simply the inverse of mortality risk. Some of those unmeasured factors may relate to quality of care. We note that the discrimination and predictive measures characterize model performance at the patient level, whereas our findings are focused on the hospital level – the correlation of the estimated hospital-specific RSMRs and RSRRs. The patient-level risk of readmission is higher than that for mortality but the interquartile ranges are similar in size.

This study has several limitations. First, we assessed overall patterns and cannot exclude the possibility that, in some hospitals, performance on one of the measures influences performance on the other. Second, there may be a concern that hierarchical modeling obscures a relationship because many hospitals have low volumes. However, because the hierarchical approach is best at minimizing the average error in the hospital-specific estimates over all the hospitals under consideration, it is less likely to identify spurious results. Moreover, our findings were consistent in large as well as small hospitals, as designated by bed size. In addition, we sought to evaluate the measures in use in order to address a policy-relevant question. Third, our study did not investigate the validity of the measures. We designed the mortality and readmission measures examined here as measures of quality; the National Quality Forum, which has a rigorous and thorough vetting process with many levels of evaluation, approved them for that purpose; CMS publicly reports them as quality measures; and the Affordable Care Act incorporates them into incentive programs as quality measures. Nevertheless, some critics may not consider the measures to reflect quality of care, and our study was designed to determine the relationship between the mortality and readmission measures, not to further evaluate their validity.

From a policy perspective, the distinctiveness of the measures is important. A strong inverse relationship might have implied that institutions would need to choose which measure to address. These findings indicate that many institutions do well on mortality and readmission, and that performance on one does not dictate the performance on the other.

ACKNOWLEDGMENT

Funding/Support: Dr. Krumholz is supported by grant U01 HL105270-03 (Center for Cardiovascular Outcomes Research at Yale University) from the National Heart, Lung, and Blood Institute in Bethesda, Maryland. Dr. Chen is supported by Career Development Award K08 HS018781-03 from the Agency for Healthcare Research and Quality in Rockville, Maryland. Dr. Ross is supported by grant K08 AG032886-05 from the National Institute on Aging in Bethesda, Maryland and by the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program in New York, New York.

The analyses upon which this publication is based were performed under Contract Number HHSM-500-2008-0025I/HHSM-500-T0001, Modification No. 000007, entitled “Measure Instrument Development and Support,” funded by the Centers for Medicare & Medicaid Services, an agency of the U.S. Department of Health and Human Services. The content of this publication does not necessarily reflect the views or policies of the Department of Health and Human Services, nor does mention of trade names, commercial products, or organizations imply endorsement by the U.S. Government. The authors assume full responsibility for the accuracy and completeness of the ideas presented.

Role of the Sponsors: The funding sponsors had no role in the design and conduct of the study; in the collection, management, analysis, and interpretation of the data; or in the preparation, review, or approval of the manuscript.

Footnotes

Author Contributions:

Study concept and design: Krumholz

Acquisition of data: Krumholz

Analysis and interpretation of data: Krumholz, Lin, Keenan, Chen, Ross, Drye, Bernheim, Wang, Bradley, Han, Normand (Dr. Lin had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis.)

Drafting of the manuscript: Krumholz

Critical revision of the manuscript for important intellectual content: Krumholz, Lin, Keenan, Chen, Ross, Drye, Bernheim, Wang, Bradley, Han, Normand

Statistical analysis: Lin, Normand

Obtained funding: Krumholz

Administrative, technical, or material support: Krumholz

Study supervision: Krumholz

Conflict of Interest Disclosures: Drs. Krumholz and Ross report that they are recipients of a research grant from Medtronic through Yale University. Dr. Krumholz chairs a cardiac scientific advisory board for UnitedHealth, and Dr. Ross is a member of a scientific advisory board for FAIR Health. Dr. Normand is a member of the Board of Directors of Frontier Science and Technology. The remaining authors report no conflicts of interest.

REFERENCES

- 1.Bernheim SM, Grady JN, Lin Z, et al. National patterns of risk-standardized mortality and readmission for acute myocardial infarction and heart failure. Update on publicly reported outcomes measures based on the 2010 release. Circ Cardiovasc Qual Outcomes. 2010 Sep;3(5):459–467. doi: 10.1161/CIRCOUTCOMES.110.957613. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Keenan PS, Normand S-LT, Lin Z, et al. An administrative claims measure suitable for profiling hospital performance on the basis of 30-day all-cause readmission rates among patients with heart failure. Circulation. 2008;1(1):29–37. doi: 10.1161/CIRCOUTCOMES.108.802686. [DOI] [PubMed] [Google Scholar]

- 3.Krumholz HM, Merrill AR, Schone EM, et al. Patterns of hospital performance in acute myocardial infarction and heart failure 30-day mortality and readmission. Circ Cardiovasc Qual Outcomes. 2009 Sep;2(5):407–413. doi: 10.1161/CIRCOUTCOMES.109.883256. [DOI] [PubMed] [Google Scholar]

- 4.Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with heart failure. Circulation. 2006 Apr 4;113(13):1693–1701. doi: 10.1161/CIRCULATIONAHA.105.611194. [DOI] [PubMed] [Google Scholar]

- 5.Krumholz HM, Wang Y, Mattera JA, et al. An administrative claims model suitable for profiling hospital performance based on 30-day mortality rates among patients with an acute myocardial infarction. Circulation. 2006 Apr 4;113(13):1683–1692. doi: 10.1161/CIRCULATIONAHA.105.611186. [DOI] [PubMed] [Google Scholar]

- 6.Lindenauer PK, Bernheim SM, Grady JN, et al. The performance of US hospitals as reflected in risk-standardized 30-day mortality and readmission rates for Medicare beneficiaries with pneumonia. J Hosp Med. 2010 Jul-Aug;5(6):E12–E18. doi: 10.1002/jhm.822. [DOI] [PubMed] [Google Scholar]

- 7.Bratzler DW, Normand SL, Wang Y, et al. An administrative claims model for profiling hospital 30-day mortality rates for pneumonia patients. PLoS One. 2011;6(4):e17401. doi: 10.1371/journal.pone.0017401. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Krumholz HM, Lin Z, Drye EE, et al. An administrative claims measure suitable for profiling hospital performance based on 30-day all-cause readmission rates among patients with acute myocardial infarction. Circ Cardiovasc Qual Outcomes. 2011 Mar 1;4(2):243–252. doi: 10.1161/CIRCOUTCOMES.110.957498.

- 9. Ash A, Fienberg SE, Louis TA, Normand SL, Stukel TA, Utts J; The COPSS-CMS White Paper Committee. Statistical issues in assessing hospital performance. http://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/HospitalQualityInits/Downloads/Statistical-Issues-in-Assessing-Hospital-Performance.pdf. Accessed December 14, 2012.

- 10. Department of Health and Human Services. Medicare program; hospital inpatient value-based purchasing program. Final rule. Fed Regist. 2011 May 6;76(88):26490–26547.

- 11. Department of Health and Human Services. Medicare program; hospital inpatient prospective payment system for acute care hospitals and the long-term care hospital prospective payment system and FY2012 rates; hospitals' FTE resident caps for graduate medical education payment. Final rule. Fed Regist. 2011 Aug 18;76(160):51476–51846.

- 12. Gorodeski EZ, Starling RC, Blackstone EH. Are all readmissions bad readmissions? (Letter to the Editor) N Engl J Med. 2010;363(3):297–298. doi: 10.1056/NEJMc1001882.

- 13. Ong MK, Mangione CM, Romano PS, et al. Looking forward, looking back: assessing variations in hospital resource use and outcomes for elderly patients with heart failure. Circ Cardiovasc Qual Outcomes. 2009 Nov;2(6):548–557. doi: 10.1161/CIRCOUTCOMES.108.825612.

- 14. Serrie J. More than 2,200 hospitals face penalties under ObamaCare rules. foxnews.com. 2012 Aug 23. http://www.foxnews.com/politics/2012/08/23/more-than-2200-hospitals-face-penalties-for-high-readmissions/. Accessed December 14, 2012.

- 15. Desai MM, Lin Z, Schreiner GC, et al. 2009 measures maintenance technical report: acute myocardial infarction, heart failure, and pneumonia 30-day risk-standardized readmission measures. 2009 Apr 7. http://qualitynet.org/dcs/ContentServer?c=Page&pagename=QnetPublic%2FPage%2FQnetTier3&cid=1219069855841. Accessed December 14, 2012.

- 16. Ross JS, Cha SS, Epstein AJ, et al. Quality of care for acute myocardial infarction at urban safety-net hospitals. Health Affairs. 2007;26(1):238–248. doi: 10.1377/hlthaff.26.1.238.

- 17. Hadley J, Cunningham P. Availability of safety net providers and access to care of uninsured persons. Health Serv Res. 2004;39(5):1527–1546. doi: 10.1111/j.1475-6773.2004.00302.x.

- 18. Hart LG, Larson EH, Lishner DM. Rural definitions for health policy and research. Am J Public Health. 2005 Jul;95(7):1149–1155. doi: 10.2105/AJPH.2004.042432.

- 19. Morrill R, Cromartie J, Hart G. Metropolitan, urban, and rural commuting areas: toward a better depiction of the United States Settlement System. Urban Geography. 1999;20(8):727–748.

- 20. Normand SL, Zou KH. Sample size considerations in observational health care quality studies. Stat Med. 2002 Feb 15;21(3):331–345. doi: 10.1002/sim.1020.

- 21. Krumholz HM, Chen J, Wang Y, Radford MJ, Chen YT, Marciniak TA. Comparing AMI mortality among hospitals in patients 65 years of age and older: evaluating methods of risk adjustment. Circulation. 1999 Jun 15;99(23):2986–2992. doi: 10.1161/01.cir.99.23.2986.

- 22. Krumholz HM, Chen YT, Wang Y, Vaccarino V, Radford MJ, Horwitz RI. Predictors of readmission among elderly survivors of admission with heart failure. Am Heart J. 2000 Jan;139(1 Pt 1):72–77. doi: 10.1016/s0002-8703(00)90311-9.