Abstract

We implemented a direct-observer hand hygiene audit program that used trained observers, wireless data entry devices, and an intranet portal. We improved the reliability and utility of the data by standardizing audit processes, regularly retraining auditors, developing an audit guidance tool, and reporting weighted composite hand hygiene compliance scores.

Monitoring hand hygiene (HH) performance and providing feedback to healthcare personnel (HCP) are essential for the success of an HH promotion program. Observation by trained observers remains the gold standard for measuring compliance.1 However, direct observation is frequently limited by high cost, small numbers of observations, inadequate staffing, and delayed data feedback.2 Furthermore, the Hawthorne effect, ascertainment biases, and unbalanced data reporting often reduce the accuracy and usefulness of data collected by observers.3-5

Duke University Hospital (DUH) is a 950-bed hospital in North Carolina. Before 2009, HH was monitored by infection preventionists who made unannounced visits. However, these audits were infrequent and inefficient, captured few observations, and occurred only between 9:00 am and 4:00 pm on weekdays. We describe the implementation, observations, and refinements of a novel electronic-assisted directly observed HH audit (DOHA) program that DUH launched in 2009.

Methods

In spring 2009, auditors were trained to perform HH audits according to modified criteria based on guidelines from the Centers for Disease Control and Prevention (CDC) and the World Health Organization (WHO).6,7 HH was defined as cleaning ungloved hands with soap and water or with a hospital-approved sanitizer, gel, or foam. HH performance was measured at 2 moments of patient care: before entering and after leaving a patient care area. Auditors wore identification badges and made observations in 40 inpatient and outpatient clinical areas; they did not enter patient or clinic rooms and did not confront noncompliant staff members.

Auditors were trained to use personal digital assistants (PDAs) that used in-house recording software to collect data on HH compliance, type of HH opportunity, disinfectant used, and category of HCP. The PDAs securely and wirelessly transmitted data to a central server.
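The data fields listed above can be pictured as one small record per observed opportunity. The following is a minimal, hypothetical Python sketch of such a record and a JSON payload a handheld device could send to a server; the class name `HHObservation`, its field names, and the use of JSON are assumptions for illustration, not the schema of the in-house software, and transport security is not shown.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class HHObservation:
    """One directly observed HH opportunity (hypothetical schema)."""

    unit: str                    # clinical area where the observation was made
    auditor_id: str              # auditor who recorded the observation
    hcp_category: str            # eg, "physician", "nurse", "food personnel"
    opportunity: str             # type of HH opportunity, eg, "entry" or "exit"
    compliant: bool              # True if HH was performed at this opportunity
    agent: Optional[str] = None  # product used, eg, "sanitizer"; None if no HH

    def to_json(self) -> str:
        # Serialize to a JSON payload the handheld could send to the server.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, payload: str) -> "HHObservation":
        # Rebuild the same record on the server side from the payload.
        return cls(**json.loads(payload))
```

A frozen dataclass keeps each recorded observation immutable once captured, which suits an audit trail; any real deployment would also need authentication and encryption on the wireless link.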

HH data were analyzed and viewable in real time via an intranet Web site accessible to hospital leadership, unit managers, and directors. The Web site showed 30-day HH compliance from 3 perspectives: (1) compliance among all clinical HCP, (2) compliance by clinical service area, and (3) compliance by HCP type. The Web site also displayed time trends and interunit comparisons and used a traffic-light color-coding scheme to show HH performance relative to predefined targets.8
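The 3 reporting perspectives differ only in how observations are grouped before compliance is computed. The sketch below illustrates this under stated assumptions: observations are hypothetical `(date, unit, hcp_category, compliant)` tuples, the 30-day window ends on a chosen report date, and the green/yellow/red thresholds (`GREEN_AT`, `YELLOW_AT`) are placeholders, because the program's actual targets are not given here.

```python
from collections import defaultdict
from datetime import date, timedelta

# Hypothetical color thresholds; the program's actual targets are not given here.
GREEN_AT = 0.90
YELLOW_AT = 0.80


def compliance_by(observations, key, as_of, window_days=30):
    """Compliance rate per group over the trailing window ending on `as_of`.

    observations: iterable of (obs_date, unit, hcp_category, compliant) tuples.
    key: function mapping one observation tuple to its group label.
    """
    start = as_of - timedelta(days=window_days)
    tallies = defaultdict(lambda: [0, 0])  # group -> [compliant, total]
    for obs in observations:
        obs_date, compliant = obs[0], obs[3]
        if start < obs_date <= as_of:      # keep only the trailing window
            tally = tallies[key(obs)]
            tally[0] += int(compliant)
            tally[1] += 1
    return {group: ok / n for group, (ok, n) in tallies.items()}


def traffic_light(rate):
    """Map a compliance rate to a traffic-light color."""
    if rate >= GREEN_AT:
        return "green"
    if rate >= YELLOW_AT:
        return "yellow"
    return "red"


# The three reporting perspectives, expressed as grouping keys.
PERSPECTIVES = {
    "all clinical HCP": lambda obs: "all",
    "clinical service area": lambda obs: obs[1],
    "HCP type": lambda obs: obs[2],
}

if __name__ == "__main__":
    sample = [
        (date(2010, 5, 1), "ED", "physician", True),
        (date(2010, 5, 2), "ED", "nurse", False),
        (date(2010, 5, 3), "NICU", "nurse", True),
    ]
    for name, key in PERSPECTIVES.items():
        rates = compliance_by(sample, key, as_of=date(2010, 5, 10))
        print(name, {g: (round(r, 2), traffic_light(r)) for g, r in rates.items()})
```

Keeping the grouping key separate from the rate calculation means a new perspective (eg, by shift) needs only one more entry in `PERSPECTIVES`.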

We analyzed HH data collected during 2009 and 2010 and implemented several improvements to the DOHA program in 2011. We used the Student t test and the F test for hypothesis testing; a P value of .05 or less was considered significant.

Results

The DOHA program started in April 2009 and made 30,397 HH observations that year, with a compliance rate of 88%. In 2010, the program expanded from 5 to 9 observers and made 72,456 observations, again with a compliance rate of 88%.

Physical therapy personnel (95%), respiratory therapy personnel (93%), and nurse practitioners (90%) had the highest HH compliance. Electrocardiogram technicians (79%), physicians (78%), and food personnel (77%) had the lowest compliance.

Stratified by unit type, HH compliance was highest in the neonatal intensive care unit (97%), the pediatric bone marrow transplant unit (96%), and the surgical intensive care unit (94%) and lowest in the emergency department (77%), the preoperative admission department of the ambulatory surgical center (77%), and the postoperative recovery unit (79%).

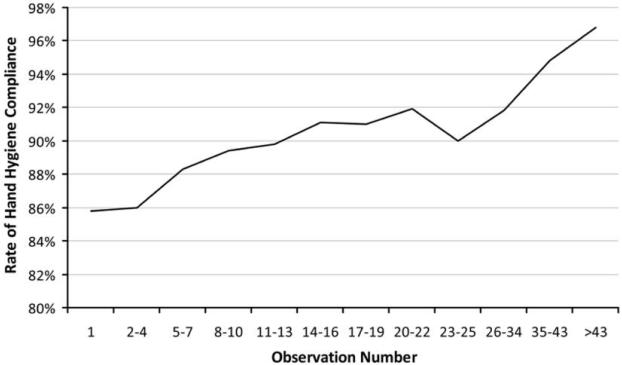

We also analyzed HH compliance by the order in which observations were made during audit sessions; that is, we calculated the pooled compliance of all first observations, all second observations, all third observations, and so on. Compliance for the first 5 observations was less than 86%, and compliance rose the longer audit sessions lasted: the pooled compliance of all thirty-fifth through forty-third observations was 95% (Figure 1).
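The order-based analysis pools observations by their position within each audit session. A minimal Python sketch of that calculation follows; representing each session as a hypothetical list of booleans in observation order is an assumption made for illustration.

```python
from collections import defaultdict


def compliance_by_order(sessions):
    """Pooled compliance by observation position across audit sessions.

    sessions: list of sessions, each a list of booleans (True = compliant)
    in the order the observations were made. Position 1 pools every
    session's first observation, position 2 every second one, and so on.
    """
    tallies = defaultdict(lambda: [0, 0])  # position -> [compliant, total]
    for session in sessions:
        for position, compliant in enumerate(session, start=1):
            tallies[position][0] += int(compliant)
            tallies[position][1] += 1
    return {pos: ok / n for pos, (ok, n) in sorted(tallies.items())}


def pooled_rate(sessions, first, last):
    """Pooled compliance of positions first..last inclusive (eg, 35 through 43).

    Returns None when no session reached that range of positions.
    """
    ok = total = 0
    for session in sessions:
        for compliant in session[first - 1:last]:
            ok += int(compliant)
            total += 1
    return ok / total if total else None
```

Later positions draw only on longer sessions, so the denominators shrink as position increases; pooling a range such as positions 35 to 43 is one way to keep those late estimates from resting on very few observations.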

Figure 1.

Hand hygiene compliance as a function of the number of observations made on a ward, demonstrating that the impact of the Hawthorne effect is lowest at the start of an observation session.

We analyzed unit-specific HH compliance and noted substantial month-to-month fluctuations even though no major changes in HH practice had occurred. A review of the auditing procedures revealed several contributing factors: the number of HH observations per unit per month varied, as did the number of observations per auditor; units with few observations could not be identified and targeted for audits; and auditors followed their own preferences and biases when choosing which units or types of HCP to audit.

In 2011, we implemented changes to the DOHA program on the basis of issues identified in 2010 (Table 1). First, we expanded audits to include evenings and weekends. We also created a standard operating procedure (SOP) to standardize the auditing process and minimize interobserver variation, and we retrained auditors on the SOP twice a year to improve the reliability of the data.

Table 1.

Audit-Related Factors, Potential Influence on Hand Hygiene (HH) Compliance Data, and Strategies to Address Each Factor

| Category, factor | Potential impact | Strategy to address factor |
|---|---|---|
| Observer (Hawthorne) effect | Erroneously high HH compliance results | Adoption of a quota-based audit system (10 observations or a maximum of 10 minutes per area), which minimizes the time spent in each clinical area and thus the impact of the Hawthorne effect on HH audit results |
| Variations in auditing process | | |
| Difference in number of observations made by auditors (eg, some auditors made few observations per audit session, whereas others made numerous observations) | Erroneous fluctuations in HH performance; reduced ability to draw conclusions about HH performance in clinical areas with few observations | Audit guidance tool prompts auditors to make at least 10 observations in each audit area before moving to the next area; areas with few observations appear higher on the priority list for additional observations |
| Difference in the type of units and clinical areas surveyed (eg, some units had few observations, whereas others had numerous observations) | Inability to draw conclusions about HH performance in clinical areas with few observations; a small number of observations in a clinical area may produce exaggeratedly high or low compliance rates | Audit guidance tool analyzes the clinical areas where the auditor has recently made observations; the software automatically directs the auditor to prioritize areas with few HH observations |
| Difference in the type of healthcare personnel observed during audits (eg, HH audits conducted during regular daytime hours on surgical wards consisted mostly of observations of nursing staff) | Unit-level HH reporting should include a balanced representation of the healthcare personnel who pose a risk of pathogen transmission to patients; overrepresentation of a specific healthcare personnel group can inadvertently overestimate or underestimate overall HH performance in that clinical area | Unit-level HH compliance is now a weighted composite score: 40% is based on physician data, 40% on nursing data, and 20% on the mean of other HCP categories |
| Consistency of applying criteria for rating HH compliance | | |
| Difference in how criteria for rating HH compliance were applied | Reduced internal consistency and validity | Standardization of criteria for rating HH compliance; a standard operating procedure was written and approved; all auditors now undergo twice-yearly validation with a master certified infection preventionist using the standardized criteria |

Second, we built an audit guidance tool (AGT) to minimize unbalanced auditing among units.9 Under this system, each auditor made a quota of 10 observations in an area or moved on within 10 minutes. The AGT analyzed the observations an auditor had already made and guided the auditor to wards or HCP types that required additional observations, to keep auditing balanced.
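The AGT's internal logic is not described beyond this summary, so the following is only a hypothetical Python sketch of its two rules: the 10-observation/10-minute quota, and a ranking that sends auditors to the areas (or HCP types) with the fewest observations so far. The function names, the `recently_visited` exclusion, and the alphabetical tie-break are assumptions modeled on Table 1.

```python
from datetime import timedelta

QUOTA = 10                        # observations per area before moving on
MAX_TIME = timedelta(minutes=10)  # maximum time to spend in one area


def should_move_on(observations_here, time_in_area):
    """Quota rule: leave after 10 observations or 10 minutes, whichever comes first."""
    return observations_here >= QUOTA or time_in_area >= MAX_TIME


def prioritize(counts, recently_visited=()):
    """Rank candidates (areas or HCP types) with the fewest observations first.

    counts: {area_or_hcp_type: observations recorded so far in the period}.
    recently_visited: areas to skip because the auditor was just there.
    Ties are broken alphabetically so the ranking is deterministic.
    """
    candidates = [c for c in counts if c not in recently_visited]
    return sorted(candidates, key=lambda c: (counts[c], c))
```

For example, with counts of 120 (ED), 15 (NICU), 15 (PACU), and 40 (SICU) and SICU just visited, this sketch would direct the auditor to NICU, then PACU, then ED. Ranking purely by count is the simplest balancing rule; a real tool might also weight by unit size or HCP mix.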

Finally, we changed from reporting overall monthly HH compliance to reporting a weighted, composite score. This change was designed to minimize variations in HH performance caused by inclusion of different proportions of HCP in each report. We reported a composite score in which 40% was based on physician score, 40% on nursing staff score, and 20% on the scores for all remaining categories of HCP.
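Written out, the composite is 0.4 × physician compliance + 0.4 × nursing compliance + 0.2 × the mean compliance of the other HCP categories (per Table 1). The Python sketch below computes it alongside the raw pooled rate to show why the composite is steadier; treating the "other" categories as an unweighted mean of their rates follows Table 1, while raising an error when no other category was observed is an assumption.

```python
from statistics import mean


def composite_score(rates):
    """Weighted composite HH score for one unit and period.

    rates: {hcp_category: compliance rate}; must include "physician" and
    "nurse" plus at least one other category. Other categories are averaged
    without weighting and contribute the remaining 20%.
    """
    others = [r for cat, r in rates.items() if cat not in ("physician", "nurse")]
    if not others:
        raise ValueError("at least one other HCP category is required")
    return 0.4 * rates["physician"] + 0.4 * rates["nurse"] + 0.2 * mean(others)


def raw_rate(tallies):
    """Pooled (unweighted) compliance; shifts with the mix of HCP observed.

    tallies: {hcp_category: (compliant, total)}.
    """
    ok = sum(c for c, _ in tallies.values())
    total = sum(t for _, t in tallies.values())
    return ok / total


def rates_from(tallies):
    """Convert (compliant, total) tallies to per-category compliance rates."""
    return {cat: c / t for cat, (c, t) in tallies.items()}
```

As an illustration with hypothetical numbers, take 2 months in which physicians comply 80% of the time, nurses 90%, and 2 other groups 90% and 100%. If month A records 10 physician and 100 nurse observations and month B records 50 of each (with 10 observations per other group both months), the raw rate moves from 0.90 to about 0.87 purely because of the mix, while the composite stays at 0.87.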

After these changes, the volume of HH audits in 2011 increased to 90,538 observations, with an HH compliance of 86%. Standardization of HH audits, use of the AGT, and reporting of composite HH scores also reduced fluctuations in monthly HH data. For example, the variance in monthly compliance in a general surgical unit decreased from 42.7 in 2010 to 16.0 in 2011, although this decrease did not reach statistical significance (P = .11).
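Comparing 2 years' monthly variances is the kind of question the F test in the Methods addresses: the statistic is the ratio of the 2 sample variances. The Python sketch below computes that ratio and its degrees of freedom from raw monthly values; it is a generic illustration, not the authors' analysis code. Applied to the reported variances, F = 42.7 / 16.0 ≈ 2.67; if each year contributed 12 monthly values (an assumption), the degrees of freedom would be (11, 11), and the P value would come from the F distribution (eg, `scipy.stats.f.sf` in SciPy), which is not computed here.

```python
from statistics import variance


def variance_ratio_f(sample_a, sample_b):
    """F statistic for comparing two sample variances.

    Puts the larger variance in the numerator and returns
    (F, (df_numerator, df_denominator)) with df = n - 1 for each sample.
    """
    var_a, var_b = variance(sample_a), variance(sample_b)
    if min(var_a, var_b) == 0:
        raise ValueError("F is undefined when a sample has zero variance")
    if var_a >= var_b:
        return var_a / var_b, (len(sample_a) - 1, len(sample_b) - 1)
    return var_b / var_a, (len(sample_b) - 1, len(sample_a) - 1)


# Applied directly to the reported variances for the general surgical unit.
f_reported = 42.7 / 16.0  # about 2.67
```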

Discussion

We implemented a large-scale DOHA program based on a team of trained auditors recording observations directly into wireless handheld devices that immediately transmitted data for analysis and review on a Web site. Before this program, 3 infection preventionists made only 75–100 observations each month and found HH compliance of approximately 50%. In 2011, the program made 90,538 observations with an HH compliance of 86%. As a result of improvements to the auditing and reporting process, we believe that data from this program were reliable, representative, and accurate.

We observed the impact of the Hawthorne effect, which describes the modification of behavior when subjects become aware that they are being studied.3 The longer auditors remained on a unit, the higher the HH compliance. This time-dependent pattern might be attributable to improved HH once an auditor is detected and news of the auditor's presence spreads through the unit. We therefore hypothesized that early observations in an audit would be less affected by the Hawthorne effect, and in 2011 we implemented quota-based audits instructing auditors to make 10 observations or stay a maximum of 10 minutes before moving to another area.

Other innovative features improved the validity and consistency of HH data. First, auditors were trained and revalidated according to an SOP, which improved interrater and interobservational reliability. Second, we built an AGT that analyzed existing HH observations to help auditors focus on specific areas or types of HCP. The AGT also reduced bias caused by auditors' personal preferences for sampling certain units.

Third, we developed a weighted composite HH score rather than reporting the raw HH compliance rate. The composite score helped minimize fluctuations in HH results caused by the inclusion of different mixes of HCP each month. The combination of the AGT and reporting a composite score substantially improved the consistency of our monthly HH data.

Finally, the data from our DOHA program have high utility. Audit results were available in real time and were easily accessible to unit leaders charged with improving their teams' HH performance. HH data were presented in a graphical interface with analytical tools that readily showed current performance relative to a priori goals, historical performance, and comparable peers. Armed with reliable and accurate HH data, hospital leadership decided to tie each unit's overall HH compliance to the performance measures of that unit's leadership. This level of administrative endorsement attests to the utility and success of the DOHA program.

Our study had limitations. First, this was a single-center study, which limits the generalizability of our findings; however, we are conducting trials of this program at other hospitals. Second, our criteria for HH compliance were adapted from those of the CDC and the World Health Organization to accommodate the practical limitations of our hospital. Third, this study did not examine cost-effectiveness and did not compare its findings with those of other HH monitoring strategies. However, HH compliance documented in this project was similar to compliance determined by other means, including video surveillance,10 which suggests good external validity of our program.

In summary, we implemented a large-scale electronic-assisted DOHA program throughout a tertiary care hospital. As anticipated, we detected the Hawthorne effect, which altered the HH behavior of healthcare staff. We also discovered that poorly coordinated HH auditing and reporting can lead to misleading and inaccurate HH compliance data. We improved the accuracy and validity of HH data by implementing standardized auditing protocols, quota-based audits, an AGT, and a composite HH score. We believe that this type of regulated and coordinated DOHA program could be implemented in most hospitals to provide highly useful data to motivate HH behavior and to improve patient safety.

Acknowledgments

We thank the Duke University Hospital President’s Office for funding support of the directly observed hand hygiene audit.

Financial support. This work was supported by dedicated funding from the senior administration from Duke University Hospital.

Footnotes

Presented in part: Society for Healthcare Epidemiology of America 21st Annual Meeting; Dallas, Texas; April 1–4, 2011.

Potential conflicts of interest. All authors report no conflicts of interest relevant to this article. All authors submitted the ICMJE Form for Disclosure of Potential Conflicts of Interest, and the conflicts that the editors consider relevant to this article are disclosed here.

References

1. Haas JP, Larson EL. Measurement of compliance with hand hygiene. J Hosp Infect. 2007;66(1):6–14. doi: 10.1016/j.jhin.2006.11.013.

2. Marra AR, Moura DF Jr, Paes AT, dos Santos OF, Edmond MB. Measuring rates of hand hygiene adherence in the intensive care setting: a comparative study of direct observation, product usage, and electronic counting devices. Infect Control Hosp Epidemiol. 2010;31(8):796–801. doi: 10.1086/653999.

3. Parsons HM. What happened at Hawthorne?: new evidence suggests the Hawthorne effect resulted from operant reinforcement contingencies. Science. 1974;183(4128):922–932. doi: 10.1126/science.183.4128.922.

4. Eckmanns T, Bessert J, Behnke M, Gastmeier P, Ruden H. Compliance with antiseptic hand rub use in intensive care units: the Hawthorne effect. Infect Control Hosp Epidemiol. 2006;27(9):931–934. doi: 10.1086/507294.

5. Dhar S, Tansek R, Toftey EA, et al. Observer bias in hand hygiene compliance reporting. Infect Control Hosp Epidemiol. 2010;31(8):869–870. doi: 10.1086/655441.

6. Boyce JM, Pittet D. Guideline for hand hygiene in health-care settings: recommendations of the Healthcare Infection Control Practices Advisory Committee and the HICPAC/SHEA/APIC/IDSA Hand Hygiene Task Force. Infect Control Hosp Epidemiol. 2002;23(Suppl 12):S3–S40. doi: 10.1086/503164.

7. Pittet D, Allegranzi B, Boyce J. The World Health Organization guidelines on hand hygiene in health care and their consensus recommendations. Infect Control Hosp Epidemiol. 2009;30(7):611–622. doi: 10.1086/600379.

8. Maurer K, Maurer J, Peltier E, Savo P. Are industry-based safety initiatives relevant to medicine? Focus Patient Safety. 2001;4(4):1–3.

9. The Joint Commission. Measuring hand hygiene adherence: overcoming the challenges. Oakbrook Terrace, IL: Joint Commission; 2009.

10. Armellino D, Hussain E, Schilling ME, et al. Using high-technology to enforce low-technology safety measures: the use of third-party remote video auditing and real-time feedback in healthcare. Clin Infect Dis. 2012;54(1):1–7. doi: 10.1093/cid/cis201.