Abstract

During an early epoch of development, the brain is highly adaptive to the stimulus environment. Exposing young animals to a particular tone, for example, leads to an enlarged representation of that tone in primary auditory cortex. While the neural effects of simple tonal environments are well characterized, the principles that guide plasticity in more complex acoustic environments remain unclear. In addition, very little is known about the perceptual consequences of early experience-induced plasticity. To address these questions, we reared juvenile rats in complex multitone environments that differed in terms of the higher-order conditional probabilities between sounds. We found that the development of primary cortical acoustic representations, as well as frequency discrimination ability in adult animals, were shaped by the higher-order stimulus statistics of the early acoustic environment. Our results suggest that early experience-dependent cortical reorganization may mediate perceptual changes through statistical learning of the sensory input.

Introduction

During a “critical period” of heightened plasticity in early postnatal life, the brain and behavior are shaped by sensory experience (Zhang et al., 2001; Hensch, 2004; Sanes and Bao, 2009). Early exposure to language and music, for example, leads to long-lasting changes in perception and neuronal representation (Kuhl et al., 1992; Näätänen et al., 1997; Pantev et al., 1998; Werker and Tees, 2005; Parbery-Clark et al., 2009; Kuhl, 2010). Perceptual changes in infants depend not only on the frequency of encountered stimuli (Maye et al., 2002), but also on the higher-order statistical relationships between stimuli (Saffran et al., 1996; Schön and Francois, 2011). These statistical learning capabilities extend beyond language and music to visual (Fiser and Aslin, 2002), auditory (Saffran et al., 1999), object (Wu et al., 2011), and social learning (Kushnir et al., 2010), and may represent a general mechanism by which infants form meaningful representations of the environment (Xu and Griffiths, 2011).

In juvenile animals, exposure to sensory stimuli also leads to changes in cortical sensory representations (Zhang et al., 2001; Noreña et al., 2006; de Villers-Sidani et al., 2007; Zhou and Merzenich, 2008; Barkat et al., 2011), indicating that the early environment plays a role in shaping neural circuits in animals as well. Most studies investigating this form of plasticity have focused on simple stimulus environments that lack the kind of systematic statistical regularities found in natural environments (Zhang et al., 2001; Singh and Theunissen, 2003; Han et al., 2007). In addition, very few studies have investigated and found evidence for perceptual consequences of early experience in animals (Han et al., 2007; Prather et al., 2009; Sanes and Bao, 2009; Sarro and Sanes, 2011).

Animal vocalizations, like human speech and music, exhibit complex statistical structure (Holy and Guo, 2005; Takahashi et al., 2010). For example, rodent pup and adult calls are repeated in bouts (Liu et al., 2003; Holy and Guo, 2005; Kim and Bao, 2009), resulting in high sequential conditional probabilities for calls of the same type, and low sequential conditional probabilities for calls of different types (Holy and Guo, 2005). This higher-order statistical structure may provide information for classifying the calls into distinct, categorically perceived groups of sounds (Ehret and Haack, 1981). Although adult rats are sensitive to the statistical structure of the sensory environment (Toro and Trobalon, 2005), it is not known whether the statistical regularities of sounds experienced during early life have long-term effects on perception or cortical acoustic representation.

To investigate the effect of the statistical structure of the early acoustic environment on perception and neuronal representations, we exposed litters of rat pups to sequences of tone pips that differed only in terms of the higher-order conditional probabilities between tones. We then performed neurophysiological recordings to assess changes in neuronal response properties, as well as behavioral testing to measure changes in perceptual ability. We found that both the development of neuronal receptive fields in primary auditory cortex, as well as frequency discrimination ability in adult rats, were affected by the higher-order stimulus statistics of the early acoustic environment. Our results suggest that early experience-dependent neural changes could provide a mechanism for perceptual changes related to statistical learning.

Materials and Methods

Acoustic rearing of young rat pups.

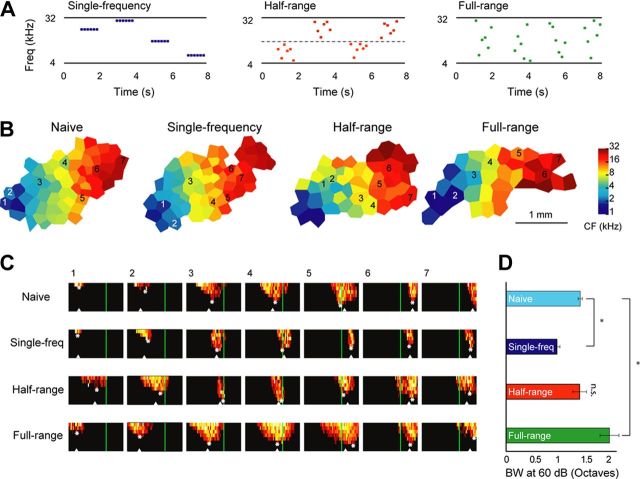

All procedures used in this study were approved by the University of California Berkeley Animal Care and Use Committee. Female Sprague Dawley rats were used in the present study. Three groups of rat pups (single-frequency, half-range, full-range) were placed with their mothers in an anechoic sound-attenuation chamber from postnatal day (P)9 to P35. This time period comprises the critical period for experience-dependent plasticity in primary auditory cortex, including changes in frequency tuning, neuronal tuning bandwidth, and complex sound selectivity. All groups heard 1-s-long trains of six tone pips (100 ms, 60 dB SPL), with one train occurring every 2 s (Fig. 1A). For all groups, tones were drawn from a logarithmically uniform frequency distribution spanning 4–32 kHz, with constraints placed only on the sequential conditional probabilities of sounds within a sequence. For the full-range group, tones within a single sequence were drawn from the entire breadth of the distribution (Fig. 1A). For the single-frequency group, sequences were made up of the same tone repeated six times (Fig. 1A). For the half-range group, tones within a sequence were either all higher or all lower than the “boundary frequency” of 11.314 kHz (Fig. 1A). After sound exposure, rats were moved to a regular animal room environment until they were mapped. A control litter was reared in a regular animal room environment.
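The three exposure conditions can be illustrated with a minimal sketch. It is not the authors' stimulus code: the function names, the equal probability of choosing the low or high half in the half-range condition, and the use of Python's `random` module are all assumptions made for illustration. The boundary is taken as the geometric midpoint of the 4–32 kHz range, which equals the 11.314 kHz value given above.

```python
import math
import random

F_LOW, F_HIGH = 4.0, 32.0                 # kHz, exposure range from the text
BOUNDARY = math.sqrt(F_LOW * F_HIGH)      # geometric midpoint, ~11.314 kHz
N_TONES = 6                               # tone pips per train

def log_uniform(lo, hi, rng):
    """Draw one frequency (kHz) uniformly on a log2 axis between lo and hi."""
    return 2.0 ** rng.uniform(math.log2(lo), math.log2(hi))

def make_sequence(group, rng):
    """Return one six-tone train (kHz) for the named exposure group."""
    if group == "full-range":
        # Every tone is drawn independently from the whole distribution.
        return [log_uniform(F_LOW, F_HIGH, rng) for _ in range(N_TONES)]
    if group == "single-frequency":
        # One draw, repeated six times.
        return [log_uniform(F_LOW, F_HIGH, rng)] * N_TONES
    if group == "half-range":
        # Assumption: low and high halves are chosen with equal probability;
        # all six tones then come from the chosen half only.
        lo, hi = (F_LOW, BOUNDARY) if rng.random() < 0.5 else (BOUNDARY, F_HIGH)
        return [log_uniform(lo, hi, rng) for _ in range(N_TONES)]
    raise ValueError(f"unknown group: {group}")

rng = random.Random(0)
for group in ("single-frequency", "half-range", "full-range"):
    print(group, [round(f, 2) for f in make_sequence(group, rng)])
```

Pooled over many trains, all three generators sample the same log-uniform 4–32 kHz distribution; only the within-train conditional structure differs.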

Figure 1.

Influences of higher-order stimulus statistics on spectral selectivity of primary auditory cortical neurons. A, Schematics of the acoustic environments that the animals experienced. The three acoustic environments had the same logarithmically uniform frequency distribution from 4 to 32 kHz and the same temporal presentation rates, but differed in the conditional probabilities of the tonal frequencies within sequences. B, Representative cortical maps. The sound exposure did not alter the overall tonotopic characteristic frequency distribution. C, Representative frequency–intensity receptive fields. The corresponding locations are marked on the tonotopic maps in B. The green vertical lines mark the low conditional probability boundary experienced by the half-range group. Stars denote the characteristic frequency or CF, and triangles denote the center-of-mass frequency. Horizontal axis depicts frequency logarithmically from 1 to 32 kHz and vertical axis depicts intensity from 10 to 80 dB SPL. D, Tuning bandwidth at 60 dB SPL. Cyan, Naive control; dark blue, single-frequency; red, half-range; green, full-range. Frequency tuning bandwidth became narrower in the single-frequency group and broader for the full-range group compared to control. *p < 0.05, determined by an ANOVA with post hoc Tukey–Kramer test.

Electrophysiological recording procedure.

The primary auditory cortex of sound-reared (single-frequency, n = 5; half-range, n = 4; full-range, n = 6) and naive control rats (n = 7) was mapped at comparable ages from P35–P52. There were no significant age differences between groups (one-way ANOVA, F(3,18) = 0.48, n.s.), and none of the reported neurophysiological parameters showed a significant correlation with age. Rats were preanesthetized with buprenorphine (0.05 mg/kg, s.c.) a half hour before they were anesthetized with sodium pentobarbital (50 mg/kg, followed by 10–20 mg/kg supplements as needed). Atropine sulfate (0.1 mg/kg) and dexamethasone (1 mg/kg) were administered once every 6 h. The head was secured in a custom head holder that left the ears unobstructed, and the cisterna magna was drained of CSF. The right auditory cortex was exposed through a craniotomy and durotomy and was kept under a layer of silicone oil to prevent desiccation. Sound stimuli were delivered to the left ear through a custom-made speaker that had been calibrated to have <3% harmonic distortion and flat output in the entire frequency range.

Cortical responses were recorded by repeatedly inserting two pairs of tungsten microelectrodes (240 μm spacing; manufactured by FHC, catalog no. MX211EW) orthogonally into the cortex to a depth of 500–600 μm, where responses to noise bursts could be found. Recording sites were chosen to evenly and densely map primary auditory cortex while avoiding surface blood vessels and were marked on an amplified digital image of the cortex. The sampling density between groups was not significantly different (one-way ANOVA for electrode spacing between groups, F(3,18) = 0.97, n.s.). Multiunit responses to 25 ms tone pips of 51 frequencies (1–32 kHz, 0.1 octave spacing, 5 ms cosine-squared ramps) and eight sound pressure levels (0–70 dB SPL, 10 dB steps) were recorded to reconstruct the frequency-intensity receptive field. The total number of multiunit receptive fields recorded in each group was n = 333 (naive control), n = 248 (single-frequency), n = 202 (half-range) and n = 291 (full-range).

Electrophysiological data analysis.

The characteristic frequency (CF), center-of-mass frequency, tuning bandwidth, and threshold were determined for each receptive field (RF) using an automated algorithm. The MATLAB function medfilt2.m was used to perform two-dimensional median filtering of the RF, such that each output pixel contained the median value in the 3 × 3 neighborhood of the corresponding input pixel. Each receptive field was then thresholded at 30% of the maximum response to define the response area of the receptive field. The CF was defined from this thresholded receptive field as the frequency at which responses are evoked at threshold, the lowest sound pressure level that evokes a response. The center-of-mass frequency was calculated as a weighted average of the RF frequencies, where each frequency was weighted by the magnitude of the response it elicited. Bandwidth was defined as the width of the thresholded RF and was measured both at an absolute intensity level of 60 dB (bandwidth at 60 dB) and relative to threshold (bandwidth 20 dB above threshold).
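The receptive-field pipeline described above might look roughly as follows. This is a pure-Python sketch rather than the MATLAB routine used in the study, and several details are assumptions: cells outside the RF are treated as zero during median filtering, the CF is taken as the response-weighted mean frequency of the lowest responsive intensity row, the center of mass is computed over the thresholded response area, and bandwidth is the distance between the outermost responsive frequencies. All function names are hypothetical.

```python
from statistics import median

def median_filter_3x3(rf):
    """3 x 3 median filter; cells outside the RF count as 0 (assumed padding)."""
    n_rows, n_cols = len(rf), len(rf[0])
    def cell(r, c):
        return rf[r][c] if 0 <= r < n_rows and 0 <= c < n_cols else 0.0
    return [[median(cell(r + dr, c + dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1))
             for c in range(n_cols)] for r in range(n_rows)]

def rf_parameters(rf, freqs_oct, levels_db, frac=0.30):
    """rf[i][j]: response at levels_db[i] (ascending) and freqs_oct[j] (octaves).

    Assumes the RF contains at least one nonzero response after filtering.
    """
    smooth = median_filter_3x3(rf)
    cutoff = frac * max(max(row) for row in smooth)
    # Response area: filtered cells above 30% of the maximum response.
    area = [[v if v > cutoff else 0.0 for v in row] for row in smooth]
    # Threshold: lowest intensity row that contains any response.
    thr_row = next(i for i, row in enumerate(area) if any(row))

    def centroid(rows):
        total = sum(v for row in rows for v in row)
        return sum(f * v for row in rows for f, v in zip(freqs_oct, row)) / total

    def bandwidth(level_db):
        if level_db not in levels_db:
            return None
        cols = [j for j, v in enumerate(area[levels_db.index(level_db)]) if v]
        return freqs_oct[cols[-1]] - freqs_oct[cols[0]] if cols else 0.0

    threshold_db = levels_db[thr_row]
    return {
        "threshold_db": threshold_db,
        "cf": centroid([area[thr_row]]),          # tip of the response area
        "center_of_mass": centroid(area),         # whole response area
        "bw_60": bandwidth(60),
        "bw_20_above_thr": bandwidth(threshold_db + 20),
    }
```

Because the CF depends only on the tip of the response area while the center of mass integrates the whole area, the two measures can dissociate when the flanks of a receptive field shift.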

“Category-symmetric” receptive fields were defined as sites where the response rate to tones in the low-frequency category was within 20% of the response rate to tones in the high-frequency category. This was calculated for each receptive field by dividing the total response (number of spikes) to frequencies higher than the boundary frequency (11.314 kHz) by the total response to all frequencies to obtain a category response index (CRI) ranging from 0 to 1, and then defining receptive fields with a CRI between 0.4 and 0.6 as category symmetric. For each animal, the number of category-symmetric sites was divided by the total number of sites recorded in that animal to obtain the percentage of category-symmetric sites.
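As a worked illustration of the category response index, the sketch below computes the CRI for each site and the percentage of category-symmetric sites for one animal. The function names and the data layout (per-frequency spike counts already summed across intensity levels) are assumptions, as is the inclusive treatment of the 0.4 and 0.6 limits.

```python
BOUNDARY_KHZ = 11.314  # boundary frequency from the text

def category_response_index(freqs_khz, spikes):
    """Fraction of the total response evoked by tones above the boundary (0 to 1)."""
    total = sum(spikes)
    high = sum(s for f, s in zip(freqs_khz, spikes) if f > BOUNDARY_KHZ)
    return high / total

def percent_category_symmetric(sites, lo=0.4, hi=0.6):
    """sites: list of (freqs_khz, spikes) pairs recorded in one animal."""
    cris = [category_response_index(f, s) for f, s in sites]
    n_symmetric = sum(lo <= cri <= hi for cri in cris)
    return 100.0 * n_symmetric / len(sites)

freqs = [4.0, 8.0, 16.0, 32.0]
balanced = (freqs, [10, 10, 10, 10])   # CRI = 0.5, category symmetric
low_pref = (freqs, [30, 10, 5, 5])     # CRI = 0.2, prefers the low category
print(percent_category_symmetric([balanced, low_pref]))  # 50.0
```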

To test the hypothesis that RF centers of mass were shifted away from the boundary in the half-range group compared to all other groups, we assigned RFs with a center of mass within 0.1 octaves of the boundary a value of 1, and RFs with a center of mass >0.1 octaves from the boundary a value of 0. Logistic regression (using the function glmfit.m in MATLAB, MathWorks) was used to determine the relationship between group and probability of membership in the boundary versus nonboundary region. For this analysis, the coefficients (β) and p values are reported in the text for all statistically significant comparisons.
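The binary coding used for this analysis can be sketched as below. The sketch does not reproduce the glmfit.m fit; instead it relies on the fact that, for a logistic model with an intercept and a single two-level group indicator, the fitted coefficient equals the log odds ratio between the two groups. Whether the study's model had exactly this form is an assumption, and the function names and example counts are hypothetical.

```python
import math

BOUNDARY_KHZ = 11.314  # boundary frequency from the text

def near_boundary(com_khz, tol_oct=0.1):
    """1 if the center of mass lies within tol_oct octaves of the boundary, else 0."""
    return int(abs(math.log2(com_khz / BOUNDARY_KHZ)) <= tol_oct)

def log_odds_ratio(indicators_group, indicators_reference):
    """Log odds ratio of boundary membership, group relative to reference.

    Matches the logistic-regression coefficient only for a two-group model
    with an intercept (assumption); both groups need 0 < proportion < 1.
    """
    def odds(indicators):
        p = sum(indicators) / len(indicators)
        return p / (1.0 - p)
    return math.log(odds(indicators_group) / odds(indicators_reference))

# Hypothetical counts: 20 of 100 control sites and 10 of 100 half-range sites
# have a center of mass in the boundary region.
control = [1] * 20 + [0] * 80
half_range = [1] * 10 + [0] * 90
print(log_odds_ratio(half_range, control))  # negative: fewer sites near the boundary
```

A negative coefficient therefore indicates that receptive fields in the compared group are less likely than control receptive fields to be centered in the boundary region.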

To quantify changes in tuning curve slope at the boundary frequency (11.314 kHz), frequency tuning curves at 60 dB were fitted with a cubic spline interpolation (MATLAB, MathWorks). The squared sum of errors of the fitting averaged <0.01. Receptive fields were divided into bins based on the difference between their characteristic frequency and the boundary frequency (up to 1.5 octaves), and the absolute value of the tuning curve derivatives was plotted against CF, as described previously (Schoups et al., 2001). Because the number of neurons in each CF bin for each animal was very small, for this analysis only, neurons were pooled across subjects and then compared across groups.

Behavioral testing.

Behavioral training and testing were conducted using the littermates of the mapped animals (or in some cases identically exposed animals from other litters) and started when the pups were 2 months old. Animals were food deprived to reach a 10% body weight reduction before training was started. Training took place in a wire cage located in an anechoic sound-attenuation chamber. On automatic initiation of a trial, tone pips of 100 ms duration and a standard frequency were played 5 times/s through a calibrated speaker (Fig. 4A). After a random duration of 5–35 s, tone pips of a target frequency were played in the place of every other standard tone pip. Rats were trained to detect the frequency difference and make a nose poke in a nosing hole within 3 s after the first target tone, which was scored as a hit and rewarded with a food pellet. The randomly varying time frame (5–35 s) before onset of the alternating frequencies ensured that rats could not adopt a timing-based strategy (e.g., by making a nose poke at a set time interval after tone onset); rather, the only cue for a successful nose poke was a perceived difference between the standard and target frequencies. The 3 s response window after onset of the alternating frequency sequence allowed the animal to hear up to 7 repetitions of the standard target pair before making a nose poke, thus increasing the probability of a nose poke for very small frequency differences. In 10% of trials, the target frequency was the same as the standard frequency, and the false alarm rate was determined by calculating the percentage of these “same” trials that resulted in a nose poke. On each training day, animals were allowed to achieve 250–300 hits. All animals underwent 3 d of initial training with a large difference between the target and standard frequencies (Δf = 1 octave). Rapid procedure learning occurred in this phase of training. 
To minimize frequency-specific effects of procedure training on tone representation and perception, tone frequencies during the training phase were randomly chosen to be between 2.8 and 45.3 kHz (0.5 octaves below 4 kHz and above 32 kHz). In the subsequent testing phase (3 d), we tested perceptual discrimination of smaller Δfs of 0.5, 0.3, and 0.1 octaves at 9 test frequencies equally spaced (on a logarithmic scale) across the 4–32 kHz range. Delta f levels and frequencies were randomly intermixed within each block. The larger delta f values were included to motivate the animals and often led to saturated performance levels of >85%. Consequently, we performed an additional analysis in which we included only the delta f level that led to performance between 60 and 85% for each animal. This range was chosen to closely match percent-correct performance levels typically used in psychophysical staircase procedures in which task difficulty is adjusted to individual performance (Garcia-Perez, 1998). Animals that did not achieve performance within this range or had a false alarm rate of >30% were excluded. A total of eight naive controls, eight single-frequency exposed animals, eight half-range exposed animals and seven full-range exposed animals reached the criteria and were included in the final data analysis. To quantify elevated frequency discrimination ability at the boundary using a within-subjects test, we computed a boundary contrast metric (BCM) as the difference between discrimination ability at the boundary frequency and average discrimination ability at four neighboring frequencies (highlighted in yellow in Fig. 4D–G), and compared BCM values across groups.
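The boundary contrast metric can be illustrated with a short sketch. Nine frequencies equally spaced on a logarithmic scale from 4 to 32 kHz are 0.375 octaves apart, so the fifth one falls at the 11.314 kHz boundary. The choice of the two test frequencies on either side of the boundary as the "four neighboring frequencies" is an assumption, as are the function names and the example performance values.

```python
import math

def probe_frequencies(n=9, lo=4.0, hi=32.0):
    """n test frequencies (kHz) equally spaced on a log2 axis from lo to hi."""
    step = math.log2(hi / lo) / (n - 1)
    return [lo * 2 ** (i * step) for i in range(n)]

def boundary_contrast(performance, boundary_idx=4, neighbors=(2, 3, 5, 6)):
    """Performance at the boundary minus mean performance at neighboring frequencies.

    performance: percent correct at each probe frequency, for the single
    delta-f level at which the animal performed in the 60-85% range.
    neighbors: assumed to be the two test frequencies on each side.
    """
    neighbor_mean = sum(performance[i] for i in neighbors) / len(neighbors)
    return performance[boundary_idx] - neighbor_mean

freqs = probe_frequencies()
print(round(freqs[4], 3))                                     # 11.314
print(boundary_contrast([70, 70, 70, 72, 85, 68, 70, 70, 70]))  # 15.0
```

Because the metric is a within-animal difference, it can be compared across animals that were analyzed at different Δf levels.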

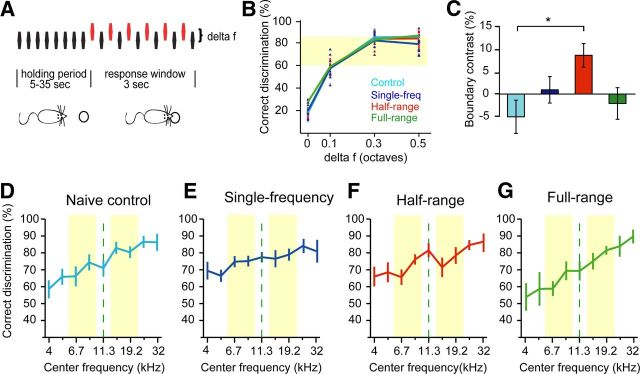

Figure 4.

Perceptual boundary shaped by conditional stimulus probabilities. A, Schematic paradigm of frequency discrimination task. Animals were required to make a nose poke when they detected a frequency difference between a standard frequency fs (black) and a target frequency ft (red). B, When we compared performance across groups for delta f levels of 0, 0.1, 0.3 and 0.5, there were no significant differences between groups. C, However, when we focused our analysis only on delta f levels for which animals performed within the 60–85% range (highlighted in yellow in B), animals in the half-range group (red) showed elevated frequency sensitivity at the boundary (boundary contrast) compared to the control group. *p < 0.05, ANOVA with post hoc Tukey–Kramer test. D–G, Individually adjusted performance curves for the 60–85% performance range, on which part C is based. The boundary contrast was calculated by subtracting the average performance at frequencies highlighted in yellow from performance at the boundary (dashed line) to achieve a within-subjects comparison.

Statistical testing.

Statistical significance was determined using ANOVA and post hoc Tukey–Kramer tests at a significance level of p < 0.05 (anovan.m and multcompare.m in MATLAB, MathWorks) in all cases except for the center-of-mass analysis, for which we used logistic regression (described above, Electrophysiological data analysis).

Results

To probe the sensitivity of the developing auditory cortex to higher-order stimulus probabilities, we exposed three groups of rat pups to sequences of tone pips that differed only in terms of the conditional probabilities between tone pips within a sequence (Fig. 1A). All groups experienced sequences of six tones repeated at an ethological rate (Kim and Bao, 2009) of 6 Hz, with 1 s intervals between sequences. The temporal structure of sequences approximated rat vocalization patterns, which are characterized by bouts of calls repeated at around 6 Hz (Kim and Bao, 2009). The overall spectral distribution was the same for all groups; all tones were drawn from a logarithmically uniform frequency distribution between 4 and 32 kHz (Fig. 1A). Across groups, however, different constraints were placed on the spectral composition of individual sequences. For the single-frequency group, sequences were made up of the same tone repeated six times. For the full-range group, tones within a sequence were drawn from the entire breadth of the tone distribution, resulting in a broad spectral range (3 octaves). For the half-range group, tones within a sequence were drawn from either the higher or lower half of the frequency range, resulting in two categories of sounds with high conditional probabilities and a low conditional probability “boundary” at 11.314 kHz (Fig. 1A). The half-range group sequences had an intermediate spectral range (1.5 octaves). Animals were continuously exposed to the tone sequences from P9 to P35 and subsequently mapped between P35 and P52. Naive control animals were maintained in a normal animal husbandry room and mapped at matching ages.

Sound exposure did not lead to gross changes in the tonotopic organization of primary auditory cortex (Fig. 1B). The distribution of characteristic frequencies (CFs; white stars in Fig. 1C) was not different between groups (data not shown). However, inspection of individual receptive fields revealed changes in receptive field bandwidth and shape (Fig. 1C). The receptive field bandwidth at 60 dB was significantly different between the groups (one-way ANOVA, F(3,18) = 13.02; p < 9.2 × 10⁻⁵). A post hoc Tukey–Kramer test at the 0.05 significance level showed that receptive fields in the full-range condition were significantly broader than those in the control group, whereas those in the single-frequency condition were significantly narrower (Fig. 1D). The bandwidth differences were present regardless of whether we measured tuning bandwidth at a fixed intensity level (e.g., 60 dB as shown in Fig. 1D) or relative to threshold (e.g., bandwidth 20 dB above threshold, one-way ANOVA, F(3,18) = 13.21; p = 8.5 × 10⁻⁵, data not shown; a post hoc Tukey–Kramer test yielded the same results as those for bandwidth at 60 dB). These results indicate that receptive field bandwidth is shaped by the spectral range of tones with high conditional probabilities within sequences, but not the overall spectral distribution of the stimulus ensembles.

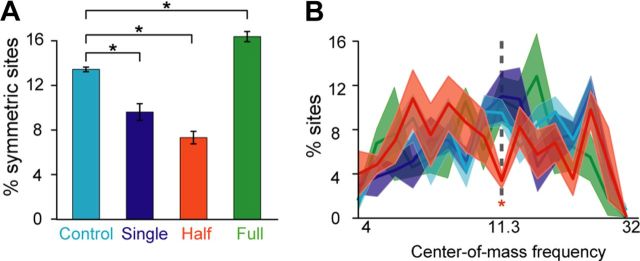

Receptive field bandwidth was not different between the half-range condition and the control condition (Tukey–Kramer post hoc test at significance level of 0.05, n.s.). Since the spectral range of the half-range sequences (1.5 octaves) approximately matched the tuning bandwidth of naive auditory cortical receptive fields (mean ± 95% confidence interval = 1.47 ± 0.13 octaves), adaptation to the spectral range should lead to shifting rather than broadening or narrowing of receptive fields. We examined the distribution of the frequency-intensity response area on each side of the low conditional probability boundary frequency (11.314 kHz). Receptive fields in the half-range condition shifted to preferentially respond to the lower or higher portion of the frequency range, with fewer multiunit sites responding equally well to both sides (Fig. 1C). We quantified this effect by comparing the percentage of sites in each animal that responded approximately equally (or symmetrically) to tones in the low and high categories (see Materials and Methods). A one-way ANOVA showed a significant effect of group on the percentage of category-symmetric receptive fields: F(3,18) = 64.13, p < 8.3 × 10⁻¹⁰ (Fig. 2A). A post hoc Tukey–Kramer test at a significance level of 0.05 showed that the percentage of category-symmetric receptive fields was significantly different between all groups. Compared to the control, the single-frequency and full-range groups had significantly lower and higher percentages of category-symmetric sites, respectively: these effects can be directly predicted from their respectively narrower and broader receptive field bandwidths, and therefore do not provide indication of receptive field shifting. 
Interestingly, however, the percentages of category-symmetric sites in the half-range group were the lowest compared to all other groups; since receptive field bandwidths in this group were not different from the control, this effect must be due to shifting of the receptive fields to preferentially encode either the low-frequency category or the high-frequency category. Together with the narrower tuning bandwidth seen in the single-frequency group, these results suggest that pairs of sounds with low conditional probabilities within sequences tend to be represented by separate populations of cortical neurons.

Figure 2.

Selective representation of low and high tones in the half-range condition. A, Percentage of category-symmetric sites in each animal. Category-symmetric sites are defined as sites that respond approximately equally to frequencies in the low and high categories (see Materials and Methods). *p < 0.05, ANOVA with post hoc Tukey–Kramer test. B, Distributions of center-of-mass frequency. Significantly fewer neurons in the half-range group had center-of-mass frequency within 0.1 octaves of the low conditional probability boundary (dashed line) compared to the control group. Shown are group mean and SEM; *p < 0.05, logistic regression.

The shift in receptive fields but not in CFs in the half-range group suggests that changes in frequency selectivity occurred at the flanks but not at the threshold of receptive fields. The center-of-mass frequency of a receptive field (white triangles in Fig. 1C, compiled data in Fig. 2B) is sensitive to shifts in the flanks. Comparison of center-of-mass frequencies confirmed that, compared to control, significantly fewer multiunits in the half-range group, but not in the single-frequency or full-range groups, had receptive fields centered within 0.1 octaves of the boundary frequency (logistic regression: control vs half-range, β = −0.9699, p < 0.05; all other comparisons n.s.).

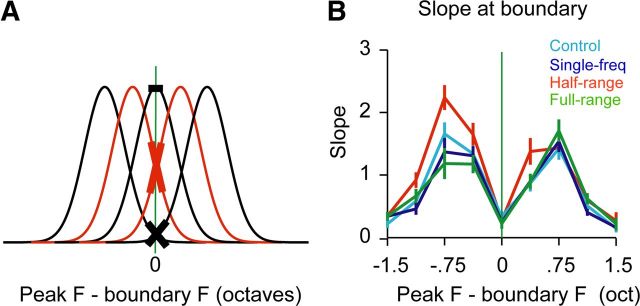

Previous studies (Schoups et al., 2001; Han et al., 2007) have shown that sensory experience can lead to asymmetric changes in the stimulus–response functions of neuronal tuning curves, and in particular a steepening of tuning curve slopes. We therefore hypothesized that the shift in RF center of mass and altered distribution of category-symmetric receptive fields in the half-range group could be due to steeper tuning curve slopes, specifically at the boundary frequency. Because tuning curve slopes at any given frequency vary depending on a particular neuron's best frequency (Fig. 3A), any effects will likely be washed out by this peak-dependent variability. Therefore, we grouped recording sites by the peaks of their tuning curves. Sites tuned to the boundary frequency (Fig. 3B; peak F − boundary F = 0) and sites tuned to frequencies far away from the boundary frequency showed minimal changes in slope. Sites tuned to frequencies flanking the boundary frequency had visibly steeper slopes at the boundary frequency in the half-range group relative to all other groups (Fig. 3B). A four-group by nine-CF bin ANOVA showed a significant main effect of group (F(3,820) = 4.36, p < 0.05), a significant main effect of bin (F(8,820) = 20.22, p < 0.05), and a nonsignificant interaction (F(24,820) = 1.34, n.s.). A post hoc Tukey–Kramer test across groups showed that tuning curve slopes at the boundary were significantly steeper in the half-range group compared to all other groups.

Figure 3.

Steeper tuning curve slopes in the half-range group at the low conditional probability boundary. A, Schematic of the dependency of tuning curve slope on tuning curve peak. Neurons with tuning curve peaks flanking the low conditional probability boundary (green) have the steepest slope at the boundary. B, Absolute values of tuning curve slopes as a function of neuronal tuning curve peaks relative to the boundary. Tuning curve slopes in the half-range group were steeper than tuning curve slopes in the other groups, especially for neurons tuned to flanking frequencies. An ANOVA with post hoc Tukey–Kramer test showed that receptive fields in the half-range group (red) had significantly higher tuning curve slopes at the boundary compared to all other groups, p < 0.05.

Neurons are most sensitive to stimulus differences at the slopes of tuning curves, and experimental (Schoups et al., 2001; Han et al., 2007) and theoretical (Butts and Goldman, 2006) evidence suggests that the steepness of tuning curves may be related to performance in tasks involving fine stimulus discriminations. We therefore examined how the steeper tuning curve slopes observed in the half-range group impacted perception using a frequency discrimination task. Behavioral testing was performed in the littermates (or other identically sound-exposed animals) of the animals for which we mapped primary auditory cortex. Animals were food deprived to attain a 10% reduction in body weight and trained for 3 d on a perceptually easy frequency discrimination task that required them to make a nose poke when they perceived a difference between a standard frequency (fs) and target frequency (ft) that were 1 octave apart (Fig. 4A and Materials and Methods). On the fourth day, all animals underwent the second phase of difficult perceptual testing in which their perceptual discrimination ability was examined with smaller frequency differences of 0.5, 0.3, and 0.1 octaves.

When we compared discrimination performance for all frequency differences, we saw no significant differences between groups (Fig. 4B; four-group by four-frequency differences ANOVA, F(3,136) = 2.3, n.s.). However, we noted a high degree of variance in performance levels between animals, likely due to the fact that training length was identical regardless of individual progress. To avoid floor or ceiling effects resulting from the task being too easy or too hard for individual animals, we chose the frequency difference that gave a mean performance level between 60 and 85% for each animal for further analysis. This range was chosen to most closely match discrimination thresholds commonly used in psychophysical staircase procedures (Garcia-Perez, 1998) that also adapt task difficulty to each individual based on performance. The average frequency difference levels that led to 60–85% performance did not differ across groups (F(3,27) = 0.58, n.s.).

Figure 4, D–G, shows the individually adjusted discrimination curves for the four groups of animals. Since data for each animal reflect performance at a different difficulty level, we focused our analysis on within-subject comparisons only and specifically tested the hypothesis that frequency discrimination at the boundary frequency was superior to frequency discrimination at nearby frequencies in half-range group animals. To this end, we calculated a boundary contrast metric (BCM) for each animal by subtracting the average performance for the four frequencies nearest to the boundary (highlighted in yellow in Fig. 4D–G) from performance at the boundary (dashed line). The BCM was significantly different from the control group for the half-range group only (Fig. 4C; one-way ANOVA, F(3,27) = 3.43, p < 0.05; post hoc Tukey–Kramer test at a 0.05 significance level).

Discussion

The study provides evidence that cortical representation and perception in a nonhuman animal are shaped by the higher-order conditional probabilities of sounds in the early acoustic environment. Specifically, we found that tonal stimuli with high conditional probabilities within 6 Hz sequences tended to be represented together in the primary auditory cortex of developing rats, whereas tones with low conditional probabilities were represented separately. This was manifested by broader frequency tuning in rats exposed to spectrally broad (full-range) tone sequences, narrower tuning in rats exposed to single-frequency tone sequences, and preferentially selective receptive fields in rats exposed to randomly alternating half-range tone sequences. Furthermore, in the half-range group the low-probability stimulus boundary was represented by steeper tuning curve slopes and correspondingly elevated perceptual sensitivity compared to neighboring frequencies, forming both a neuronal and perceptual boundary.

The neural mechanisms underlying the preferential corepresentation of stimuli with high conditional probabilities are not known. Broadening and narrowing of receptive fields can be the result of plasticity at both thalamocortical and recurrent intracortical synaptic connections (Liu et al., 2007), and the present study does not distinguish between the two. However, our results do suggest that multiunits in primary auditory cortex are able to compute and encode joint stimulus probabilities over hundreds of milliseconds. A previous study found that critical period plasticity in rats is enhanced for sounds repeated at 6 Hz relative to other modulation rates (Kim and Bao, 2009). This result, together with our finding that sounds within 6 Hz sequences are represented together, suggests that the prolonged temporal integration window of cortical neurons compared to subcortical neurons (Bao et al., 2004; Chang et al., 2005; Wang, 2007) may serve the purpose of allowing spectrotemporal dependencies to be encoded in primary auditory cortex.

Sensory training can rapidly alter cortical stimulus representations (Edeline et al., 1993; Ohl and Scheich, 1996; Reed et al., 2011). Since our electrophysiological data were collected from littermates of the behaviorally tested animals, they likely do not precisely reflect the state of sensory representation in the behaviorally tested animals. Therefore, it was not possible, nor did we attempt, to fully predict the observed behavioral performance from the collected neuronal data. Our goal was limited to testing the theoretically predicted and previously reported effects of tuning curve slopes on perceptual discrimination performance (Schoups et al., 2001; Butts and Goldman, 2006; Han et al., 2007). The frequency-specific increase in perceptual sensitivity at the probability boundary for the half-range group is likely not caused by this frequency-nonspecific training procedure. The correspondence between the increased perceptual sensitivity and the elevated tuning curve slopes at the boundary in the half-range group, even though observed in different animals, lends support to the link between the steepness of primary cortical tuning curve slopes and perceptual discrimination ability (Schoups et al., 2001; Butts and Goldman, 2006; Han et al., 2007).

Whether perceptual learning depends on plastic changes in the auditory cortex is a matter of current debate. Some studies have found correlations between altered cortical stimulus representations and perceptual behaviors (Recanzone et al., 1992; Recanzone et al., 1993; Polley et al., 2004; Polley et al., 2006; Han et al., 2007), but others have failed to observe such relationships (Talwar and Gerstein, 2001; Brown et al., 2004; Reed et al., 2011). As evidence supporting both views accumulates, it is increasingly evident that the conclusion depends on the specifics of the tasks used to measure behavioral performance as well as the types of plasticity effects that are considered (Berlau and Weinberger, 2008). A particular perceptual change may be mediated by different forms of cortical plasticity in different contexts. For example, stimulus discrimination performance could be altered by changes in neuronal characteristic frequency, tuning bandwidth, spontaneous firing rate, or response magnitude (Gilbert et al., 2001; Kim and Bao, 2008). At the same time, seemingly similar plasticity effects could have different perceptual consequences and subserve different aspects of the learning process. For example, a transient increase in stimulus representation may serve to enhance stimulus salience and facilitate early procedural learning in an early phase of perceptual training (Reed et al., 2011), while long-lasting representational enlargement may serve a different, perceptual as opposed to procedural, function, for example by improving or impairing stimulus discrimination performance (Recanzone et al., 1993; Han et al., 2007). In the latter case, the perceptual effects of the enlarged representation may be mediated by secondary effects of the altered stimulus representation, such as changes in neuronal bandwidths, alignment of tuning curve slopes, etc. (see Han et al., 2007, their supplementary Fig. 4). Our current finding of correlated increases of perceptual sensitivity and tuning curve slopes supports a role of cortical plasticity in perceptual learning.

Perceptual boundaries at ethologically relevant stimulus transitions have been documented across a wide range of nonhuman animals (May et al., 1989; Nelson and Marler, 1989; Wyttenbach et al., 1996; Baugh et al., 2008), including rodents (Ehret and Haack, 1981). However, unlike in humans, where the existence of language-specific differences in speech sound perception (Fox et al., 1995; Iverson and Kuhl, 1996) has long pointed to a role for early experience in shaping perception, perceptual boundaries in animals have often been assumed to be innate (Kuhl and Miller, 1978; Mesgarani et al., 2008; but see Prather et al., 2009). By contrast, the results of the present study suggest that auditory perceptual boundaries in rodents can emerge as a direct result of sensory experience. More specifically, neuronal encoding of stimulus statistics may provide a basic mechanism whereby sensory stimuli (including animal vocalizations) are segmented into behaviorally relevant categories (Prather et al., 2009).

It is unknown whether categorical perception for rodent vocalizations (Ehret and Haack, 1981) arises by the same mechanisms as those documented in the present study. Rodent vocalizations occur in bouts on similar time scales and with similar spectral bandwidths as the tone sequences used in this study (Holy and Guo, 2005; Kim and Bao, 2009). Early experience of those vocalizations could thus theoretically lead to similar cortical representations and reduced perceptual contrast of the individual calls despite their substantial trial-by-trial variability (Holy and Guo, 2005). By contrast, functionally different call types that do not occur in the same bout may be represented by distinct populations of neurons, resulting in perceptual boundaries and categorical perception of the calls. Further work involving manipulation of mother and pup vocalizations during the critical period will be necessary to examine this question.

Theoretical considerations and empirical observations have suggested that perception is a process of statistical inference in which incoming information conveyed by our sensory receptors is combined with expectations drawn from experience (Knill and Richards, 1996; Körding and Wolpert, 2004). Representing probability structures is essential for statistical inference. Previous studies have suggested that simple prior probability distributions of sensory stimuli may be stored in the size of their representations in the sensory cortex (Simoncelli, 2009; Köver and Bao, 2010). Here we show that higher-order probability distributions may also be stored in the sensory cortex, where they can be integrated with sensory information to shape sensory perception in more complex ways.
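For concreteness, the combination of prior expectation and sensory evidence can be written out for the simplest case, in which both the prior and the sensory likelihood are Gaussian. The sketch below is a textbook illustration under that assumption; the function name and the numerical values are hypothetical and do not describe a model fitted in this study.

```python
def gaussian_posterior(prior_mean, prior_sd, observation, observation_sd):
    """Combine a Gaussian prior with a Gaussian likelihood.

    Returns the posterior mean and standard deviation. Each source is
    weighted by its precision (inverse variance).
    """
    prior_precision = 1.0 / prior_sd ** 2
    observation_precision = 1.0 / observation_sd ** 2
    posterior_variance = 1.0 / (prior_precision + observation_precision)
    posterior_mean = posterior_variance * (
        prior_precision * prior_mean + observation_precision * observation
    )
    return posterior_mean, posterior_variance ** 0.5

# Hypothetical values: a prior centered at 0 octaves and a noisy observation
# at 1 octave, with equal uncertainty in both.
mean, sd = gaussian_posterior(prior_mean=0.0, prior_sd=1.0,
                              observation=1.0, observation_sd=1.0)
```

With equal uncertainties the estimate falls midway between the prior mean and the observation, and a narrower prior would pull the estimate further toward the expected stimulus.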

Footnotes

This work was supported by the National Institute on Deafness and Other Communicative Disorders. H.K. was supported by a predoctoral fellowship from Boehringer Ingelheim Fonds, Germany (http://www.bifonds.de). We thank Chris Rodgers and Heesoo Kim for their comments on the manuscript, and Milan Shen and Julia Viladomat for their discussion of statistical analysis procedures.

The authors declare no competing financial interests.

References

- Bao S, Chang EF, Woods J, Merzenich MM. Temporal plasticity in the primary auditory cortex induced by operant perceptual learning. Nat Neurosci. 2004;7:974–981. doi: 10.1038/nn1293.

- Barkat TR, Polley DB, Hensch TK. A critical period for auditory thalamocortical connectivity. Nat Neurosci. 2011;14:1189–1194. doi: 10.1038/nn.2882.

- Baugh AT, Akre KL, Ryan MJ. Categorical perception of a natural, multivariate signal: mating call recognition in túngara frogs. Proc Natl Acad Sci U S A. 2008;105:8985–8988. doi: 10.1073/pnas.0802201105.

- Berlau KM, Weinberger NM. Learning strategy determines auditory cortical plasticity. Neurobiol Learn Mem. 2008;89:153–166. doi: 10.1016/j.nlm.2007.07.004.

- Brown M, Irvine DR, Park VN. Perceptual learning on an auditory frequency discrimination task by cats: association with changes in primary auditory cortex. Cereb Cortex. 2004;14:952–965. doi: 10.1093/cercor/bhh056.

- Butts DA, Goldman MS. Tuning curves, neuronal variability, and sensory coding. PLoS Biol. 2006;4:e92. doi: 10.1371/journal.pbio.0040092.

- Chang EF, Bao S, Imaizumi K, Schreiner CE, Merzenich MM. Development of spectral and temporal response selectivity in the auditory cortex. Proc Natl Acad Sci U S A. 2005;102:16460–16465. doi: 10.1073/pnas.0508239102.

- de Villers-Sidani E, Chang EF, Bao S, Merzenich MM. Critical period window for spectral tuning defined in the primary auditory cortex (A1) in the rat. J Neurosci. 2007;27:180–189. doi: 10.1523/JNEUROSCI.3227-06.2007.

- Edeline JM, Pham P, Weinberger NM. Rapid development of learning-induced receptive field plasticity in the auditory cortex. Behav Neurosci. 1993;107:539–551. doi: 10.1037//0735-7044.107.4.539.

- Ehret G, Haack B. Categorical perception of mouse pup ultrasound by lactating females. Naturwissenschaften. 1981;68:208–209. doi: 10.1007/BF01047208.

- Fiser J, Aslin RN. Statistical learning of new visual feature combinations by infants. Proc Natl Acad Sci U S A. 2002;99:15822–15826. doi: 10.1073/pnas.232472899.

- Fox RA, Flege JE, Munro MJ. The perception of English and Spanish vowels by native English and Spanish listeners: a multidimensional scaling analysis. J Acoust Soc Am. 1995;97:2540–2551. doi: 10.1121/1.411974.

- García-Pérez MA. Forced-choice staircases with fixed step sizes: asymptotic and small-sample properties. Vision Res. 1998;38:1861–1881. doi: 10.1016/s0042-6989(97)00340-4.

- Gilbert CD, Sigman M, Crist RE. The neural basis of perceptual learning. Neuron. 2001;31:681–697. doi: 10.1016/s0896-6273(01)00424-x.

- Han YK, Köver H, Insanally MN, Semerdjian JH, Bao S. Early experience impairs perceptual discrimination. Nat Neurosci. 2007;10:1191–1197. doi: 10.1038/nn1941.

- Hensch TK. Critical period regulation. Annu Rev Neurosci. 2004;27:549–579. doi: 10.1146/annurev.neuro.27.070203.144327.

- Holy TE, Guo Z. Ultrasonic songs of male mice. PLoS Biol. 2005;3:e386. doi: 10.1371/journal.pbio.0030386.

- Iverson P, Kuhl PK. Influences of phonetic identification and category goodness on American listeners' perception of /r/ and /l/. J Acoust Soc Am. 1996;99:1130–1140. doi: 10.1121/1.415234.

- Kim H, Bao S. Distributed representation of perceptual categories in the auditory cortex. J Comput Neurosci. 2008;24:277–290. doi: 10.1007/s10827-007-0055-5.

- Kim H, Bao S. Selective increase in representations of sounds repeated at an ethological rate. J Neurosci. 2009;29:5163–5169. doi: 10.1523/JNEUROSCI.0365-09.2009.

- Knill DC, Richards W. Perception as Bayesian inference. New York: Cambridge UP; 1996.

- Körding KP, Wolpert DM. Bayesian integration in sensorimotor learning. Nature. 2004;427:244–247. doi: 10.1038/nature02169.

- Köver H, Bao S. Cortical plasticity as a mechanism for storing Bayesian priors in sensory perception. PLoS One. 2010;5:e10497. doi: 10.1371/journal.pone.0010497.

- Kuhl PK. Brain mechanisms in early language acquisition. Neuron. 2010;67:713–727. doi: 10.1016/j.neuron.2010.08.038.

- Kuhl PK, Miller JD. Speech perception by the chinchilla: identification function for synthetic VOT stimuli. J Acoust Soc Am. 1978;63:905–917. doi: 10.1121/1.381770.

- Kuhl PK, Williams KA, Lacerda F, Stevens KN, Lindblom B. Linguistic experience alters phonetic perception in infants by 6 months of age. Science. 1992;255:606–608. doi: 10.1126/science.1736364.

- Kushnir T, Xu F, Wellman HM. Young children use statistical sampling to infer the preferences of other people. Psychol Sci. 2010;21:1134–1140. doi: 10.1177/0956797610376652.

- Liu BH, Wu GK, Arbuckle R, Tao HW, Zhang LI. Defining cortical frequency tuning with recurrent excitatory circuitry. Nat Neurosci. 2007;10:1594–1600. doi: 10.1038/nn2012.

- Liu RC, Miller KD, Merzenich MM, Schreiner CE. Acoustic variability and distinguishability among mouse ultrasound vocalizations. J Acoust Soc Am. 2003;114:3412–3422. doi: 10.1121/1.1623787.

- May B, Moody DB, Stebbins WC. Categorical perception of conspecific communication sounds by Japanese macaques, Macaca fuscata. J Acoust Soc Am. 1989;85:837–847. doi: 10.1121/1.397555.

- Maye J, Werker JF, Gerken L. Infant sensitivity to distributional information can affect phonetic discrimination. Cognition. 2002;82:B101–111. doi: 10.1016/s0010-0277(01)00157-3.

- Mesgarani N, David SV, Fritz JB, Shamma SA. Phoneme representation and classification in primary auditory cortex. J Acoust Soc Am. 2008;123:899–909. doi: 10.1121/1.2816572.

- Näätänen R, Lehtokoski A, Lennes M, Cheour M, Huotilainen M, Iivonen A, Vainio M, Alku P, Ilmoniemi RJ, Luuk A, Allik J, Sinkkonen J, Alho K. Language-specific phoneme representations revealed by electric and magnetic brain responses. Nature. 1997;385:432–434. doi: 10.1038/385432a0.

- Nelson DA, Marler P. Categorical perception of a natural stimulus continuum: birdsong. Science. 1989;244:976–978. doi: 10.1126/science.2727689.

- Noreña AJ, Gourévitch B, Aizawa N, Eggermont JJ. Spectrally enhanced acoustic environment disrupts frequency representation in cat auditory cortex. Nat Neurosci. 2006;9:932–939. doi: 10.1038/nn1720.

- Ohl FW, Scheich H. Differential frequency conditioning enhances spectral contrast sensitivity of units in auditory cortex (field AI) of the alert Mongolian gerbil. Eur J Neurosci. 1996;8:1001–1017. doi: 10.1111/j.1460-9568.1996.tb01587.x.

- Pantev C, Oostenveld R, Engelien A, Ross B, Roberts LE, Hoke M. Increased auditory cortical representation in musicians. Nature. 1998;392:811–814. doi: 10.1038/33918.

- Parbery-Clark A, Skoe E, Kraus N. Musical experience limits the degradative effects of background noise on the neural processing of sound. J Neurosci. 2009;29:14100–14107. doi: 10.1523/JNEUROSCI.3256-09.2009.

- Polley DB, Heiser MA, Blake DT, Schreiner CE, Merzenich MM. Associative learning shapes the neural code for stimulus magnitude in primary auditory cortex. Proc Natl Acad Sci U S A. 2004;101:16351–16356. doi: 10.1073/pnas.0407586101.

- Polley DB, Steinberg EE, Merzenich MM. Perceptual learning directs auditory cortical map reorganization through top-down influences. J Neurosci. 2006;26:4970–4982. doi: 10.1523/JNEUROSCI.3771-05.2006.

- Prather JF, Nowicki S, Anderson RC, Peters S, Mooney R. Neural correlates of categorical perception in learned vocal communication. Nat Neurosci. 2009;12:221–228. doi: 10.1038/nn.2246.

- Recanzone GH, Merzenich MM, Jenkins WM, Grajski KA, Dinse HR. Topographic reorganization of the hand representation in cortical area 3b owl monkeys trained in a frequency-discrimination task. J Neurophysiol. 1992;67:1031–1056. doi: 10.1152/jn.1992.67.5.1031.

- Recanzone GH, Schreiner CE, Merzenich MM. Plasticity in the frequency representation of primary auditory cortex following discrimination training in adult owl monkeys. J Neurosci. 1993;13:87–103. doi: 10.1523/JNEUROSCI.13-01-00087.1993.

- Reed A, Riley J, Carraway R, Carrasco A, Perez C, Jakkamsetti V, Kilgard MP. Cortical map plasticity improves learning but is not necessary for improved performance. Neuron. 2011;70:121–131. doi: 10.1016/j.neuron.2011.02.038.

- Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926.

- Saffran JR, Johnson EK, Aslin RN, Newport EL. Statistical learning of tone sequences by human infants and adults. Cognition. 1999;70:27–52. doi: 10.1016/s0010-0277(98)00075-4.

- Sanes DH, Bao S. Tuning up the developing auditory CNS. Curr Opin Neurobiol. 2009;19:188–199. doi: 10.1016/j.conb.2009.05.014.

- Sarro EC, Sanes DH. The cost and benefit of juvenile training on adult perceptual skill. J Neurosci. 2011;31:5383–5391. doi: 10.1523/JNEUROSCI.6137-10.2011.

- Schön D, François C. Musical expertise and statistical learning of musical and linguistic structures. Front Psychol. 2011;2:167. doi: 10.3389/fpsyg.2011.00167.

- Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- Simoncelli EP. Optimal estimation in sensory systems. In: Gazzaniga M, editor. The Cognitive Neurosciences. Ed 4. 2009:525–535.

- Singh NC, Theunissen FE. Modulation spectra of natural sounds and ethological theories of auditory processing. J Acoust Soc Am. 2003;114:3394–3411. doi: 10.1121/1.1624067.

- Takahashi N, Kashino M, Hironaka N. Structure of rat ultrasonic vocalizations and its relevance to behavior. PLoS One. 2010;5:e14115. doi: 10.1371/journal.pone.0014115.

- Talwar SK, Gerstein GL. Reorganization in awake rat auditory cortex by local microstimulation and its effect on frequency-discrimination behavior. J Neurophysiol. 2001;86:1555–1572. doi: 10.1152/jn.2001.86.4.1555.

- Toro JM, Trobalón JB. Statistical computations over a speech stream in a rodent. Percept Psychophys. 2005;67:867–875. doi: 10.3758/bf03193539.

- Wang X. Neural coding strategies in auditory cortex. Hear Res. 2007;229:81–93. doi: 10.1016/j.heares.2007.01.019.

- Werker JF, Tees RC. Speech perception as a window for understanding plasticity and commitment in language systems of the brain. Dev Psychobiol. 2005;46:233–251. doi: 10.1002/dev.20060.

- Wu R, Gopnik A, Richardson DC, Kirkham NZ. Infants learn about objects from statistics and people. Dev Psychol. 2011;47:1220–1229. doi: 10.1037/a0024023.

- Wyttenbach RA, May ML, Hoy RR. Categorical perception of sound frequency by crickets. Science. 1996;273:1542–1544. doi: 10.1126/science.273.5281.1542.

- Xu F, Griffiths TL. Probabilistic models of cognitive development: towards a rational constructivist approach to the study of learning and development. Cognition. 2011;120:299–301. doi: 10.1016/j.cognition.2011.06.008.

- Zhang LI, Bao S, Merzenich MM. Persistent and specific influences of early acoustic environments on primary auditory cortex. Nat Neurosci. 2001;4:1123–1130. doi: 10.1038/nn745.

- Zhou X, Merzenich MM. Enduring effects of early structured noise exposure on temporal modulation in the primary auditory cortex. Proc Natl Acad Sci U S A. 2008;105:4423–4428. doi: 10.1073/pnas.0800009105.