Abstract

This methodological paper reports an initial attempt to evaluate the feasibility and utility of a nonverbal task for assessing generalized same/different judgments of auditory stimuli in individuals who have intellectual disabilities. Study 1 asked whether participants could readily acquire a baseline of auditory same/different, go-left/go-right performance with minimal prompting. Sample stimuli consisted of pairs of successively presented sine-wave tones. If the tones were identical, participants were reinforced for selections of a visual stimulus on the left side of the computer screen; if the two stimuli were different, selections of the visual stimulus on the right were reinforced. Two of five participants readily acquired the task, generalized performance to other stimuli and completed a rudimentary protocol for examining auditory discriminations that are potentially more difficult than those used to establish the initial task. In Study 2, two participants who could not perform the go-left/go-right task with tone stimuli, but could do so with spoken-word stimuli, successfully transferred control by spoken words to tones via an auditory superimposition-and-fading procedure. The findings support the feasibility of using the task as a general-purpose auditory discrimination assessment.

Keywords: auditory discrimination, go-left/go-right, intellectual disabilities, matching to sample, superimposition and fading

Limited and defective auditory learning skills are widely reported in individuals with intellectual disabilities (Abbeduto, Furman, & Davies, 1989; Chapman, Schwartz, & Kay-Raining Bird, 1991; Miller, 1987), particularly in those who also have autism (Klinger, Dawson, & Renner, 2002). These problems limit a wide range of learning opportunities, including communication, social skills training, and academic instruction. Such opportunities are often the primary focus of intervention programs, for instance those based on the principles of applied behavior analysis (ABA). Children who fail to discriminate words spoken to them lack critical prerequisites for acquiring effective listening and speaking repertoires. Language training, whether vocal or alternative/augmentative, cannot succeed unless the child can distinguish one word from another. Indeed, Barker-Collo et al. (1995) and Vause, Martin, and Yu (2000) have shown that auditory discrimination performance correlates highly with communication ability in individuals with moderate to profound intellectual disabilities.

From a behavior analytic perspective, auditory learning problems can be characterized as a failure of auditory stimulus control: a failure to discriminate one auditory stimulus from another. Although the establishment of auditory stimulus control has been addressed intermittently for many years (Green, 1990; McIlvane & Stoddard, 1981, 1985; Meyerson & Kerr, 1977; Schreibman, Charlop, & Koegel, 1982; Schreibman, Kohlenberg, & Britten, 1986; Serna, Stoddard, & McIlvane, 1992; Stoddard, 1982; Stoddard & McIlvane, 1989), universal success has been elusive, particularly for individuals whose intellectual functioning is very low. One reason may be that many of these studies used spoken words as auditory stimuli, and spoken words are relatively complex, given their many prosodic features (e.g., pitch, duration, rise and fall, rhythm). As is known from studies of restricted stimulus control (e.g., “stimulus overselectivity,” Dube et al., 2003) and of feature classes of visual stimuli (e.g., Serna, Wilkinson, & McIlvane, 1998), individuals with intellectual disabilities may come under the control of only a single feature of a stimulus, which can result in stimulus control that is incompatible with that intended by the experimenter or teacher (stimulus control topography coherence theory; McIlvane & Dube, 2003; McIlvane, Serna, Dube, & Stromer, 2000). For example, “high functioning” individuals with autism show superior pitch discrimination compared with typically developing individuals (Bonnel et al., 2003); one could envision stimulus-control failures if the intended basis of an auditory discrimination (e.g., spoken words) involved more than just pitch.

To begin to address problems of auditory stimulus control in individuals with intellectual disabilities, a reliable method for assessing existing auditory discrimination abilities is needed. Auditory assessment studies with verbally capable individuals (e.g., Bonnel, Mottron, Peretz, Trudel, Gallun, & Bonnel, 2003; Jones et al., 2009; Moore, 1973; Serna, Jeffery, & Stoddard, 1996) often use extensive verbal instructions to establish the task, and participants are sometimes also instructed to respond vocally. Unfortunately, the language requirements of such tasks preclude their use with individuals who have limited or no verbal skills, the population that would benefit most from such an assessment. To extend auditory discrimination assessment to individuals with intellectual disabilities who have limited language skills, then, the method must rely on neither verbal instruction nor a verbal response.

In stimulus control terms, an auditory discrimination assessment task would essentially be an auditory version of generalized conditional stimulus-stimulus relational performance. That is, once an initial baseline performance is established, other auditory discriminations would be made on the basis of the relation of sameness or identity. But what might the task itself look like? One task in which individuals might report nonverbally whether two successively presented auditory stimuli are the same or different is the go/no-go task (e.g., D'Amato & Colombo, 1985): if the stimuli are the same, respond; if they are different, refrain from responding. Although the go/no-go task has not yet been used to assess pairs of successive auditory stimuli, it has been used with auditory stimuli for individuals with intellectual disabilities. For example, Serna, Stoddard, and McIlvane (1992) showed that two individuals with severe intellectual disabilities could learn the task when the S+ (the “go” stimulus) was the word “touch” and the S− (the “no-go” stimulus) was the word “wait.” However, subsequent unpublished tests of the go/no-go task yielded far less success with several additional participants with severe intellectual disabilities, despite the use of stimulus-control shaping procedures (see McIlvane & Dube, 1992, for a discussion of stimulus-control shaping). The primary pattern for these additional participants was to respond regardless of whether the S+ or S− was present; they had difficulty refraining from responding.
Capitalizing on this propensity of individuals with intellectual disabilities to respond in the presence of auditory stimuli, Serna, Jeffery, and Stoddard (1996) established auditory discrimination of two digitized spoken words with a go-left/go-right (yes/no) procedure (e.g., D'Amato & Worsham, 1974), in which a response is required on every trial: if Word 1, respond to a visual stimulus on the lower left side of a computer screen; if Word 2, respond to the one on the right. The Serna et al. (1996) study suggests that the go-left/go-right task might be adapted for use with pairs of successively presented auditory stimuli for individuals with intellectual disabilities.

The present methodological paper reports an initial attempt to evaluate the feasibility and utility of a nonverbal assessment task for generalized same/different judgments of auditory stimuli by individuals who have intellectual disabilities. More specifically, the first of two studies asked whether a go-left/go-right task could be adapted such that participants would respond to one side of a computer screen if two successively presented auditory stimuli were identical and to the other side if they were not. If such performance could be established, would it generalize to new auditory stimuli? The first study also explored a rudimentary protocol for examining auditory discriminations that are potentially more difficult than those used to establish the initial task. The second, follow-up study examined a stimulus-control shaping procedure for transferring go-left/go-right stimulus control from one auditory stimulus category (digitized spoken words) to another (tones). Importantly, the present studies represent the beginning of a larger programmatic effort to explore discrimination of individual features (e.g., pitch, duration) of auditory stimuli; hence, the present studies focused on discrimination of different tone frequencies.

General Method

Apparatus, Stimuli and Setting

Experimental sessions were controlled by custom in-house software (MTS: Dube, 1991; Dube & Hiris, 1999) running on a Macintosh desktop computer equipped with either a touch-sensitive monitor or a mouse. All stimulus presentations, response consequences, and shaping procedures were fully automated, and all responses were recorded to disk. Two types of auditory stimuli were used: sine-wave tones and recorded spoken words. The pure tones were constructed with SoundEdit-16® software (Macromedia) on a Macintosh computer. The spoken words were recorded in a male voice into a digital audio workstation. All stimuli were created and presented as 16-bit, 44.1-kHz WAV files. Auditory stimuli were presented either via two external speakers positioned to the left and right of the computer monitor or through stereo headphones, at a comfortable volume determined by the experimenter and participant in an initial volume test. All experimental sessions were conducted in a quiet room away from the participants' classrooms. An experimenter, seated behind and to the participant's right, monitored all sessions, initiated trials, and delivered reinforcers.
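
The original tones were built in SoundEdit-16, which is no longer available. For readers who want to construct comparable stimuli, the following Python sketch writes a sine-wave tone as a 16-bit, 44.1-kHz mono WAV file using only the standard library. It is an illustration under stated assumptions, not the authors' procedure: the function names, the 0.5 peak amplitude, and the 5-ms linear onset/offset ramps are hypothetical choices, because the stimuli's amplitude and envelope are not reported.

```python
import math
import struct
import wave

SAMPLE_RATE = 44_100  # 44.1 kHz, as reported for the stimuli
PEAK = 0.5            # assumed peak amplitude, as a fraction of full scale


def tone_samples(freq_hz, dur_ms, ramp_ms=5.0, rate=SAMPLE_RATE):
    """Return 16-bit integer samples of a sine-wave tone.

    The linear onset/offset ramp is an assumption (not reported in the
    source); it prevents audible clicks at the tone boundaries.
    """
    n = int(rate * dur_ms / 1000)
    ramp = max(1, int(rate * ramp_ms / 1000))
    samples = []
    for i in range(n):
        gain = min(1.0, i / ramp, (n - 1 - i) / ramp)
        value = PEAK * gain * math.sin(2 * math.pi * freq_hz * i / rate)
        samples.append(int(round(value * 32767)))
    return samples


def write_wav(path, samples, rate=SAMPLE_RATE):
    """Write mono 16-bit PCM samples to a WAV file (path or file object)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16 bits
        wav.setframerate(rate)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
```

For example, `write_wav("tone_500Hz.wav", tone_samples(500, 500))` would write a 500Hz tone; the 500ms duration is illustrative only, since tone durations for Study 1 are not reported.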

Procedure

Participants were trained by various procedures to “report” whether two successively presented auditory stimuli were the same or different, using a same/different, go-left/go-right task. The task is illustrated in Figure 1. Each trial began with the presentation of a pair of auditory stimuli separated by 500ms of silence. The two stimuli in a pair were either identical or different. The pair was repeated every 1000ms until the participant made a response. Simultaneously with the onset of the stimuli, a white square appeared in the center of the computer screen. A response to this center square produced two additional squares (hereafter, “keys”), each displaying a distinct non-representational form, one in the lower left corner and the other in the lower right corner of the screen (see Figure 1). If the auditory stimuli were the same, a response to one key was designated as correct and a reinforcer was delivered; if they were different, a response to the other key was designated as correct. A response to either key immediately terminated the auditory stimulus complex, and the visual stimuli disappeared. Correct responses were followed immediately by a 1-sec flashing visual display and a series of melodic tones, as well as the delivery of a token and an intertrial interval (ITI) in which the screen was blank. Incorrect responses resulted in a blank screen and an ITI. The experimenter initiated each trial by pressing the keyboard spacebar once the participant had finished placing the token in a small receptacle beside the computer screen; thus, ITIs generally ranged from about 3 to 5 sec. Trials were presented in random order, with equal numbers of same and different stimulus pairs. Each session generally consisted of 60 trials.
At the conclusion of a session, tokens were traded in for money or edibles such as raisins. Sessions were usually conducted 3–5 times per week, depending on the availability of each participant.
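
The trial structure described above can be summarized in a short Python sketch. It assumes, following the Abstract, that “same” pairs are correct on the left key; that a simple unconstrained shuffle produces the random order (the paper does not say whether runs of one trial type were limited); and that the 1000ms repetition interval is silence between the end of one pair and the start of the next. All names are hypothetical.

```python
import random

# Phase 1 sample-stimulus complexes (Hz): identical pairs are "same" trials,
# mixed pairs are "different" trials.
COMPLEXES = [(750, 750), (500, 500), (750, 500), (500, 750)]

WITHIN_PAIR_GAP_MS = 500   # silence between the two stimuli of a pair
BETWEEN_REPEATS_MS = 1000  # assumed: silence before the pair repeats


def correct_key(pair):
    """Left key for identical stimuli, right key otherwise (per the Abstract)."""
    return "left" if pair[0] == pair[1] else "right"


def build_session(complexes=COMPLEXES, n_trials=60, seed=None):
    """Return a shuffled session in which each complex occurs equally often."""
    if n_trials % len(complexes):
        raise ValueError("n_trials must be a multiple of the number of complexes")
    reps = n_trials // len(complexes)
    pairs = [pair for pair in complexes for _ in range(reps)]
    random.Random(seed).shuffle(pairs)
    return [{"pair": pair, "correct": correct_key(pair)} for pair in pairs]


def stimulus_onsets(dur1_ms, dur2_ms, repeats):
    """Onset times (ms) of each stimulus while an unanswered pair repeats."""
    onsets, t = [], 0
    for _ in range(repeats):
        onsets.append(t)                  # first stimulus of the pair
        t += dur1_ms + WITHIN_PAIR_GAP_MS
        onsets.append(t)                  # second stimulus of the pair
        t += dur2_ms + BETWEEN_REPEATS_MS
    return onsets
```

Here `build_session(seed=1)` returns 60 trials, 15 of each complex; the seed only makes the order reproducible for checking, and a live session would omit it.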

Figure 1.

Baseline frequency discrimination task with the same/different, go-left/go-right procedure. The left panel illustrates “same” trials, with either 750Hz or 500Hz tones. The right panel illustrates “different” trials.

Study 1: Same/Different, Go-Left/Go-Right Frequency Discrimination

Study 1 asked whether participants with intellectual disabilities could readily acquire same/different, go-left/go-right performance via minimal prompting and differential reinforcement (“trial and error”) in a frequency discrimination task involving two sine-wave tones. As noted earlier, such performance could serve as a baseline from which further assessments of auditory discrimination could be conducted. The next step in such an assessment would be to present pairs of auditory stimuli that differ from those used to establish the baseline, thereby testing for generalized auditory same/different judgments. Therefore, a second question of Study 1 asked whether participants who acquired the baseline frequency discrimination could also generalize their performance to new auditory stimuli, recorded spoken words. Finally, for participants who generalized their same/different performance, Study 1 examined the feasibility of a procedure for nonverbally testing unreinforced performance with pairs of tones separated by relatively large and relatively small frequency disparities. Performance across disparities could ultimately indicate the limits of participants' discrimination abilities as auditory stimuli become more similar along a given dimension. These three questions were addressed in three separate experimental phases, as described below.

Method

Participants

Five participants, all with intellectual disabilities, were recruited from a local residential school for individuals with developmental disabilities. Each was assigned a code to conceal their identity. Table 1 shows their chronological ages (CAs) and estimated mental ages (eMAs). CAs ranged from 14-3 to 21-0 (years-months; mean 17-10; median 19-5). eMAs, as assessed by the Peabody Picture Vocabulary Test (IIIA and 4A; Dunn & Dunn, 1997, 2007), ranged from 6-1 to 9-11 (years-months; mean 7-8; median 6-8). All participants had normal hearing (±5dB HL), as assessed with a pure-tone audiogram prior to their participation. Four of the five participants had served in prior studies involving visual discrimination, but none had experienced the present procedures.

Table 1.

Chronological Ages (CA) and Estimated Mental Ages (eMA) for Study-1 Participants

| Participant code | Gender | CA (years-months) | eMA (years-months) |
|---|---|---|---|
| RSE | F | 19-10 | 6-01 |
| MIB | M | 21-0 | 6-07 |
| EBG | M | 15-0 | 9-02 |
| JAR | M | 14-3 | 6-08 |
| MES | M | 19-5 | 9-11 |
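
The summary statistics reported for Table 1 can be checked by converting each years-months age to months. In this Python sketch (helper names are hypothetical), partial months are truncated when converting back. Truncation, rather than rounding, reproduces the reported CA mean of 17-10, because the exact mean is 214.8 months.

```python
from statistics import mean, median


def to_months(ym):
    """Convert a 'years-months' string such as '19-10' to total months."""
    years, months = (int(part) for part in ym.split("-"))
    return 12 * years + months


def to_ym(total_months):
    """Convert months to 'years-months', truncating any partial month."""
    whole = int(total_months)
    return f"{whole // 12}-{whole % 12}"


# Values from Table 1.
CA = {"RSE": "19-10", "MIB": "21-0", "EBG": "15-0", "JAR": "14-3", "MES": "19-5"}
EMA = {"RSE": "6-01", "MIB": "6-07", "EBG": "9-02", "JAR": "6-08", "MES": "9-11"}

for label, ages in (("CA", CA), ("eMA", EMA)):
    months = [to_months(age) for age in ages.values()]
    print(label, "mean", to_ym(mean(months)), "median", to_ym(median(months)))
# CA mean 17-10 median 19-5
# eMA mean 7-8 median 6-8
```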

Procedure

Phase 1: Baseline same/different, go-left/go-right training

Each of the five participants was exposed to baseline training of the experimental task with minimal prompting and differential reinforcement. Stimuli consisted of sine-wave tones of 750Hz and 500Hz. Four two-stimulus complexes were used: 750Hz-750Hz and 500Hz-500Hz (“same” trials), and 750Hz-500Hz and 500Hz-750Hz (“different” trials). Each complex was presented an equal number of times in each 60-trial session. For all participants, the auditory stimuli were presented through speakers, and responses were made to a touch-sensitive computer screen. The first session for each participant included a minimal prompting procedure on the first eight trials: after a touch to the center key, only the correct response key was presented. On all subsequent trials of the first session and in all later sessions, both response keys were presented. All correct responses were reinforced. Successful task acquisition was defined as three 60-trial sessions with accuracy of 90% or greater. Failure to acquire the task was defined as three sessions in which there was no upward or downward trend in accuracy.
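
The prompting rule and the acquisition criteria can be expressed as small Python functions. Two details are assumptions rather than statements from the text: that the three 90% sessions had to be consecutive, and how a “trend” was judged. The paper most likely relied on visual inspection, so the least-squares slope threshold in the hypothetical `flat_trend` helper is purely illustrative.

```python
MASTERY_ACCURACY = 0.90  # criterion accuracy per 60-trial session
MASTERY_SESSIONS = 3     # sessions required at criterion
PROMPTED_TRIALS = 8      # first-session trials that show only the correct key


def keys_shown(session_index, trial_index, correct):
    """Response keys displayed after the center-key touch (0-based indices)."""
    if session_index == 0 and trial_index < PROMPTED_TRIALS:
        return [correct]        # minimal prompt: only the correct key appears
    return ["left", "right"]    # both keys thereafter


def met_mastery(accuracies):
    """True once three sessions at >= 90% occur in a row (consecutive: assumed)."""
    run = 0
    for acc in accuracies:
        run = run + 1 if acc >= MASTERY_ACCURACY else 0
        if run >= MASTERY_SESSIONS:
            return True
    return False


def flat_trend(last_three, max_slope=0.02):
    """Hypothetical 'no trend' rule for three sessions.

    For equally spaced sessions, the least-squares slope through three points
    is (y3 - y1) / 2; the 0.02-per-session threshold is an arbitrary example.
    """
    first, _, last = last_three
    return abs(last - first) / 2 <= max_slope
```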

Phase 2: generalization to new auditory stimuli

Participants who acquired the baseline tone discrimination were then exposed to reinforced sessions with new auditory stimuli, the spoken words “cat” and “dog.” As in the previous phase, four two-stimulus complexes were used: “cat”-“cat” and “dog”-“dog” (“same” trials), and “cat”-“dog” and “dog”-“cat” (“different” trials). Successful task acquisition was defined as three 60-trial sessions with overall accuracy of 90% or greater.

Phase 3: auditory stimulus disparity assessment

In Phase 3, participants who demonstrated generalization in Phase 2 were exposed to a probe assessment of their performance with tone pairs whose frequency disparities differed from those used in the baseline trials of Phase 1. The assessment protocol consisted of three steps: (a) three review sessions of the reinforced baseline same/different, go-left/go-right task with 500Hz and 750Hz stimuli; (b) three sessions of the same baseline task without reinforcement, in preparation for (c) assessment sessions consisting of a random mix of unreinforced baseline stimulus pairs and unreinforced, untrained probe pairs. Table 2 shows the baseline and probe pairs used in step (c). The EBG and MIB (first assessment) columns show all the baseline and probe pairs for EBG's assessment and for MIB's first assessment, respectively. Each pair was presented four times per session, and the full assessment consisted of three sessions; thus, participants were exposed to 12 instances of each pair. Based on his performance, a second assessment was conducted with MIB. It was similar to his first, except that some of the frequency values were altered; the altered pairs appear in bold (see the MIB (second assessment) column of Table 2).
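
Step (c) of the assessment can be sketched as follows. The pairs are those listed for EBG and MIB's first assessment in Table 2; with four baseline and 11 probe pairs, each presented four times, a session comprises 60 trials, matching the usual session length. The function and field names are hypothetical, and the simple shuffle is an assumption about how the random mix was generated.

```python
import random

# Baseline pairs (Hz) and the probe pairs for EBG and MIB's first assessment,
# as listed in Table 2.
BASELINE = [(500, 500), (750, 750), (500, 750), (750, 500)]
PROBES = [
    (500, 520), (520, 500), (520, 520),
    (500, 625), (625, 500), (625, 625),
    (750, 730), (730, 750), (730, 730),
    (750, 625), (625, 750),
]
PRESENTATIONS_PER_SESSION = 4
N_SESSIONS = 3


def assessment_session(seed=None):
    """One probe session: every pair four times, in random order, unreinforced."""
    pairs = [p for p in BASELINE + PROBES for _ in range(PRESENTATIONS_PER_SESSION)]
    random.Random(seed).shuffle(pairs)
    return [
        {
            "pair": p,
            "probe": p in PROBES,
            "correct": "left" if p[0] == p[1] else "right",  # scored only
            "reinforced": False,  # no feedback on baseline or probe trials
        }
        for p in pairs
    ]


sessions = [assessment_session(seed=s) for s in range(N_SESSIONS)]
```

Across the three sessions, each pair therefore occurs 12 times, which is the denominator behind every percentage in Table 2.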

Table 2.

Baseline and Probe-Trial Auditory Stimulus Pairs and Results for EBG and MIB, Study 1, Phase 3.

| Trial type | EBG: tone pair | Accuracy | MIB (first assessment): tone pair | Accuracy | MIB (second assessment): tone pair | Accuracy |
|---|---|---|---|---|---|---|
| Baseline, same | 500Hz - 500Hz | 100% | 500Hz - 500Hz | 100% | 500Hz - 500Hz | 100% |
| Baseline, same | 750Hz - 750Hz | 100% | 750Hz - 750Hz | 92% | 750Hz - 750Hz | 100% |
| Baseline, different | 500Hz - 750Hz | 83% | 500Hz - 750Hz | 100% | 500Hz - 750Hz | 100% |
| Baseline, different | 750Hz - 500Hz | 100% | 750Hz - 500Hz | 100% | 750Hz - 500Hz | 100% |
| Probe, different | 500Hz - 520Hz | 92% | 500Hz - 520Hz | 83% | **500Hz - 510Hz** | 75% |
| Probe, different | 520Hz - 500Hz | 92% | 520Hz - 500Hz | 92% | **510Hz - 500Hz** | 83% |
| Probe, same | 520Hz - 520Hz | 92% | 520Hz - 520Hz | 100% | **510Hz - 510Hz** | 100% |
| Probe, different | 500Hz - 625Hz | 100% | 500Hz - 625Hz | 100% | 500Hz - 625Hz | 100% |
| Probe, different | 625Hz - 500Hz | 100% | 625Hz - 500Hz | 100% | 625Hz - 500Hz | 100% |
| Probe, same | 625Hz - 625Hz | 100% | 625Hz - 625Hz | 100% | 625Hz - 625Hz | 92% |
| Probe, different | 750Hz - 730Hz | 92% | 750Hz - 730Hz | 8% | **750Hz - 720Hz** | 67% |
| Probe, different | 730Hz - 750Hz | 83% | 730Hz - 750Hz | 0% | **720Hz - 750Hz** | 75% |
| Probe, same | 730Hz - 730Hz | 92% | 730Hz - 730Hz | 100% | **720Hz - 720Hz** | 100% |
| Probe, different | 750Hz - 625Hz | 100% | 750Hz - 625Hz | 92% | 750Hz - 625Hz | 100% |
| Probe, different | 625Hz - 750Hz | 100% | 625Hz - 750Hz | 92% | 625Hz - 750Hz | 83% |

Results

Phase 1

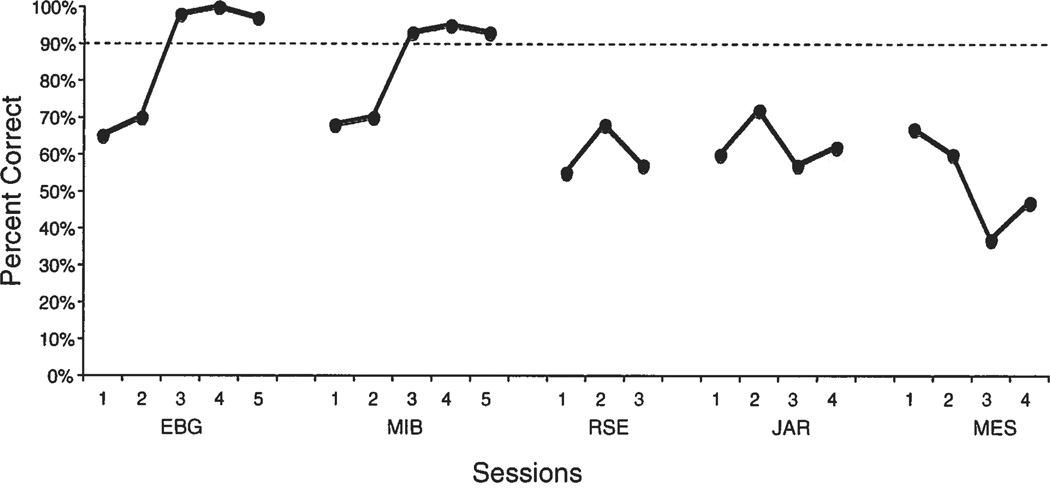

Figure 2 shows the results of Study 1, Phase 1. Two participants, EBG and MIB, successfully mastered the frequency discrimination task. EBG and MIB met the learning criterion in five sessions, respectively. JAR, RSE, and MES did not meet the mastery criterion. EBG and MIB both went on to participate in Phases 2 and 3.

Figure 2.

Percent correct across sessions in Study 1, Phase 1, for all five participants. The criterion performance level, 90% accuracy, is indicated by the dotted line.

Phase 2

EBG and MIB demonstrated immediate criterion performance with the spoken-word stimuli. Across their three sessions, their accuracy was 100%, 100% and 98% (EBG) and 98%, 98% and 97% (MIB).

Phase 3

In the first step, reintroduction of the reinforced tone baseline, both participants maintained criterion (≥90%) accuracy across three sessions. Both participants also maintained criterion accuracy in the second step, unreinforced baseline sessions. The third step consisted of one 3-session assessment for EBG and two 3-session assessments for MIB. Table 2 shows the results. For each participant, each of the four baseline and 11 probe types was presented 12 times across three sessions. As shown in the upper portion of the table, both participants demonstrated high accuracy (83% to 100%) on each of the baseline trial types. The lower left portion of the table shows the results of each probe type for EBG. Three of the probe types were “same” pairs, consisting of two identical tones (520Hz-520Hz, 625Hz-625Hz, and 730Hz-730Hz). The remaining “different” pairs represented two tone disparities: a relatively small, 20Hz disparity (500Hz vs. 520Hz and 750Hz vs. 730Hz) and a relatively large, 125Hz disparity (500Hz vs. 625Hz and 750Hz vs. 625Hz). EBG showed perfect accuracy when the disparity was large and somewhat lower accuracy when it was small.

During his first assessment (Table 2, MIB first-assessment columns), MIB showed nearly perfect accuracy with the large disparity and somewhat lower accuracy with the small disparity programmed at 500Hz. However, his accuracy with the small disparity at 750Hz was extremely low (8% and 0%), suggesting that he judged those pairs of stimuli as the same. To test this hypothesis, MIB received a second 3-session assessment (second-assessment columns, pairs in bold) in which the small disparity at 750Hz was increased to 30Hz (750Hz vs. 720Hz) in an attempt to make it easier to discriminate. At the same time, the small disparity at 500Hz, on which he had performed fairly well, was reduced to 10Hz (500Hz vs. 510Hz), a presumably more difficult discrimination. The large disparities remained the same as in his first assessment. As expected, compared with his first assessment, MIB showed greater accuracy with the 30Hz disparity and somewhat lower accuracy with the 10Hz disparity.
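
Because every pair was presented 12 times, the percentages in Table 2 correspond to whole numbers of correct responses (e.g., 92% = 11/12, 8% = 1/12). The following Python sketch recovers those counts for the “different” probes and pools the two presentation orders of each disparity, which makes the contrast between large and small disparities, and MIB's pattern at 750Hz, easy to see. The pooling by disparity and baseline tone is an illustrative summary, not an analysis reported by the authors, and all names are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction

N_PER_PAIR = 12  # 4 presentations per session x 3 sessions

# (assessment, first tone Hz, second tone Hz, correct responses out of 12),
# recovered from the "different" probe percentages in Table 2.
DIFFERENT_PROBES = [
    ("EBG", 500, 520, 11), ("EBG", 520, 500, 11),
    ("EBG", 750, 730, 11), ("EBG", 730, 750, 10),
    ("EBG", 500, 625, 12), ("EBG", 625, 500, 12),
    ("EBG", 750, 625, 12), ("EBG", 625, 750, 12),
    ("MIB-1", 500, 520, 10), ("MIB-1", 520, 500, 11),
    ("MIB-1", 750, 730, 1), ("MIB-1", 730, 750, 0),
    ("MIB-1", 500, 625, 12), ("MIB-1", 625, 500, 12),
    ("MIB-1", 750, 625, 11), ("MIB-1", 625, 750, 11),
    ("MIB-2", 500, 510, 9), ("MIB-2", 510, 500, 10),
    ("MIB-2", 750, 720, 8), ("MIB-2", 720, 750, 9),
    ("MIB-2", 500, 625, 12), ("MIB-2", 625, 500, 12),
    ("MIB-2", 750, 625, 12), ("MIB-2", 625, 750, 10),
]


def summarize(probes):
    """Pool both orders: (assessment, disparity, baseline tone) -> accuracy."""
    totals = defaultdict(lambda: [0, 0])
    for who, f1, f2, correct in probes:
        ref = f1 if f1 in (500, 750) else f2  # the baseline tone in the pair
        key = (who, abs(f1 - f2), ref)
        totals[key][0] += correct
        totals[key][1] += N_PER_PAIR
    return {key: Fraction(c, n) for key, (c, n) in totals.items()}


for (who, disparity, ref), acc in sorted(summarize(DIFFERENT_PROBES).items()):
    print(f"{who}: {disparity}Hz disparity at {ref}Hz -> {float(acc):.0%}")
```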

Discussion

In Phase 1, two of the five participants with developmental disabilities readily acquired the auditory same/different, go-left/go-right task with minimal prompting, demonstrating auditory same/different judgments of sine-wave tones. Importantly, their performance did not depend on the particular stimuli used to train the task; both participants immediately demonstrated highly accurate performance with new stimuli, recorded spoken words, in Phase 2. Thus, across Phases 1 and 2, the participants demonstrated generalized auditory same/different judgments, an important prerequisite for using the auditory same/different, go-left/go-right task in further assessments.

Phase 3 demonstrated the essential features of a nonverbal auditory discrimination assessment: establish a baseline with auditory stimuli from the same dimension as the one to be assessed; maintain that baseline without explicit feedback; and then present unreinforced probe trials mixed with the original baseline trials. Both participants readily maintained their baseline performance in the absence of reinforcement. Moreover, several aspects of their performance in the final step of the assessment support the face validity of the procedure. Specifically, participants maintained high baseline accuracy throughout the probe sessions, indicating that stimulus control by the same/different task remained intact. High accuracy was also maintained on probe trials in which the two stimuli in the pair were identical. In one sense, these identical probe pairs (see Table 2, “same” probes) functioned as control trials: not only would high accuracy be expected (they are relatively easy discriminations), but in combination with the “different” probes, they helped to rule out alternative sources of stimulus control, such as a position bias. In addition, performance was fairly consistent within a probe value; accuracy was similar whether a given stimulus was presented first or second in the pair. Lastly, both participants' performance with “different” probes bolstered the face validity of the procedure. Each demonstrated high accuracy with relatively large disparities but somewhat lower accuracy with relatively small disparities. This was particularly true of MIB's first assessment, in which he responded to the probes with a 20Hz disparity at 750Hz as if they were the same (i.e., he apparently could not tell the stimuli apart). The increased disparity in the second assessment, and MIB's improved performance with it, lends further credence to the face validity of the assessment.
Curiously, in the first assessment, the 20Hz disparity at 500Hz did not produce the low accuracy that MIB showed with the 20Hz disparity at 750Hz. It is unclear why this was the case. Nevertheless, given his lowered accuracy when the disparity at 500Hz was reduced to 10Hz in the second assessment, as well as his maintenance of high accuracy on baseline and “same” probe trials, there is no reason to suspect that any general feature of the assessment was responsible for this performance, other than, perhaps, the stimulus frequency values themselves.

Taken together, the results of the two participants who completed all three phases of Study 1 appear to support the feasibility of using the auditory go-left/go-right task as a general-purpose, nonverbal assessment of auditory same/different judgments in individuals with developmental disabilities.

Study 2: Stimulus-Control Shaping Methods for Establishing a Tone Discrimination

Though successful in many respects, Study 1 was limited in another: only two of the five participants readily acquired the tone-discrimination task. Given the few participants available, one cannot estimate from Study 1 what percentage of a larger population with similar levels of disability would readily acquire the task with minimal prompting. It is therefore important to explore alternative methods for establishing the task with participants who do not readily acquire it. This was the focus of Study 2, in which the target performance was same/different judgments of tones differing in frequency.

Via informal follow-up investigation, two of the three participants who did not acquire the tone discrimination in Study 1, RSE and JAR, eventually demonstrated the auditory same/different, go-left/go-right performance with the spoken words “dog” and “cat.” Further, each generalized the performance to new words, “tree” and “house.” However, their performance with words was not a sufficient prerequisite for subsequent same/different judgments of tone stimuli, even when the disparity between the tones was very large: 1,250Hz. Therefore, Study 2 asked whether a superimposition-and-fading method (Etzel & LeBlanc, 1979; Terrace, 1963), known to be successful with many individuals with intellectual disabilities who are learning visual discriminations, would prove feasible for transferring stimulus control from spoken words to tones. Unfortunately, JAR was unable to continue participation due to behavior problems. Hence, Study 2 examined stimulus-control transfer with RSE and a naïve participant with intellectual disabilities.

Method

Participants, Apparatus, Setting

Two participants served: RSE from Study 1 and a naïve participant, RBW. RBW was a female with a CA of 18-9 and an eMA of 6-8 who attended a day school program for individuals with intellectual disabilities. The apparatus was the same as in Study 1, except that RSE's responses were recorded by a touch-sensitive monitor, whereas RBW responded using a mouse. In addition, stimuli were presented through speakers for RSE and through headphones for RBW. The setting was the same as in Study 1.

Stimuli

The baseline stimuli for RSE were the recorded spoken words “tree” and “house”; her target-discrimination stimuli were sine-wave tones of 250Hz and 1,500Hz. During superimposition (see below), the 250Hz tone was superimposed on “tree” and the 1,500Hz tone on “house.” Each tone was matched in duration to its respective word (250Hz = 592ms; 1,500Hz = 772ms). The baseline stimuli for RBW were the recorded spoken words “dog” and “cat”; her target-discrimination stimuli were sine-wave tones of 2,500Hz (superimposed on “dog,” 577ms) and 3,500Hz (superimposed on “cat,” 477ms).
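
Superimposition amounts to summing a word and its duration-matched tone, each scaled by its current level. The Python sketch below assumes that the percentage “intensity” values in the fading protocol are linear amplitude multipliers (the paper does not say whether amplitude or power was scaled), represents signals as lists of floats in [-1, 1], and clips the sum to full scale. The names are hypothetical, and the silent placeholder stands in for a recorded word that would, in practice, be loaded from its WAV file.

```python
import math

RATE = 44_100  # samples per second, as in the General Method


def sine(freq_hz, n_samples, rate=RATE):
    """Unit-amplitude sine tone as a list of floats."""
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n_samples)]


def superimpose(word, tone, word_level, tone_level):
    """Mix a word with its duration-matched tone, each scaled by a 0-1 level.

    Levels are treated as linear amplitude multipliers (an assumption), and
    the sum is clipped to [-1, 1] so it stays within full scale.
    """
    if len(word) != len(tone):
        raise ValueError("the tone must be matched in duration to the word")
    mixed = (word_level * w + tone_level * t for w, t in zip(word, tone))
    return [max(-1.0, min(1.0, x)) for x in mixed]


# Example: RSE's "tree" (592ms) with the 250Hz tone at 50% of full level.
n = int(RATE * 592 / 1000)
word = [0.0] * n  # placeholder; a real session would load the recorded word
mix = superimpose(word, sine(250, n), word_level=1.0, tone_level=0.5)
```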

Pre-Study Performance

Each participant entered Study 2 with highly accurate same/different judgments of recorded spoken words, which were established via a variety of stimulus control shaping methods. However, each participant demonstrated low-accuracy same/different judgments of their respective tone stimuli (RSE: 60% across two prompted sessions; RBW: 44% across four unprompted sessions).

Procedure

Superimposition and fading

In general, the superimposition-and-fading protocol consisted of six stages; criterion performance (≥ 90% accuracy) was required to move from one stage to the next. In the first stage, the words were presented alone. In the second stage, the words were presented at 100% intensity, while the tones were gradually faded onto the words in 8 steps of increasing intensity, from 0% to 100%. In the third stage, both words and tones were presented at equal (100%) intensity. In the fourth stage, the tones remained at 100% intensity, while words were faded out in 8 steps of decreasing intensity, from 100% to 0%. In the fifth stage, only the tones at 100% intensity were presented, but with their respective durations used during the previous fading stages. Finally, in the sixth stage, to ensure that responses were being made on the basis of frequency and not stimulus duration, all tone durations were standardized as follows: 150ms for RBW and 500ms for RSE. For both participants, fading-in or fading-out auditory stimuli followed a titrating progression. That is, correct responses resulted in a progression to the next fading step; errors resulted in the presentation of the previous intensity-fading step. Based on RSE's performance, several modifications were made to the fourth stage, fading out the words, as shown in Table 4. The number of trials per session varied from 40 to 62 for both participants.
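
The titrating progression can be sketched as a small state machine that advances one fading step after a correct response and returns to the previous step after an error. The Python class below is hypothetical; its example level list is the word-intensity sequence RSE received in Sessions 9 and 10 (Table 4), expressed as fractions. The sketch assumes that trials continue at the final step once it is reached and that an error on the first step leaves the level unchanged; the paper does not describe either boundary case.

```python
class TitratingFader:
    """Titrating fading sequence: advance one step after a correct response,
    return to the previous step after an error."""

    def __init__(self, levels):
        if not levels:
            raise ValueError("at least one fading level is required")
        self.levels = list(levels)
        self.step = 0

    @property
    def level(self):
        """Intensity (0-1) of the faded stimulus on the next trial."""
        return self.levels[self.step]

    @property
    def at_final_step(self):
        return self.step == len(self.levels) - 1

    def record(self, correct):
        """Update the step after a trial and return the next trial's level."""
        if correct:
            self.step = min(self.step + 1, len(self.levels) - 1)  # stay at final step
        else:
            self.step = max(self.step - 1, 0)  # cannot back up past the first step
        return self.level


# Word intensities RSE received in Sessions 9 and 10 (Table 4), as fractions.
fader = TitratingFader([0.13, 0.11, 0.10, 0.08, 0.07, 0.05, 0.04, 0.02, 0.0])
```

With this sequence, two correct responses from the start move the words from 13% to 10% intensity, and a subsequent error returns them to 11%.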

Table 4.

Auditory Superimposition-and-Fading Protocol Stage and Results for RSE

| Session number | Protocol stage | Session accuracy (%) |
|---|---|---|
| 1 | words only | 100 |
| 2 | fade-in tones | 100 |
| 3 | words and tones | 100 |
| 4 | fade-out words | 55ᵃ |
| 5 | words and tones | 100 |
| 6 | words and tones | 98 |
| 7 | fade-out words, 100% to 13% intensity | 100ᵃ |
| 8 | words (13% intensity) and tones (100% intensity) | 100 |
| 9 | fade-out words (13%, 11%, 10%, 8%, 7%, 5%, 4%, 2%, 0%) | 29ᵃ |
| 10 | fade-out words (13%, 11%, 10%, 8%, 7%, 5%, 4%, 2%, 0%) | 67ᵃ |
| 11 | fade-out words, 13% to 5% intensity | 100ᵃ |
| 12 | words (5% intensity) and tones (100% intensity) | 100 |
| 13 | fade-out words (5%, 4%, 3%, 2%, 1%, 0%) | 89ᵃ |
| 14 | fade-out words (5%, 4%, 3%, 2%, 1%, 0%) | 89ᵃ |
| 15 | fade-out words (5%, 4%, 3%, 2%, 1%, 0%) | 93ᵃ |
| 16 | fade-out words (3%, 2%, 1%, 0%) | 91ᵃ |
| 17 | fade-out words (3%, 2%, 1%, 0%) | 100ᵃ |
| 18 | final performance: tones only | 100 |
| 19 | final performance: tones only | 69 |
| 20 | final performance: tones only | 94 |
| 21 | final performance: tones only, standardized at 500ms | 92 |
| 22 | final performance: tones only, standardized at 500ms | 98 |
| 23 | final performance: tones only, standardized at 500ms | 90 |

ᵃ Accuracy (%) on the last fading step.

Results and Discussion

Tables 3 and 4 show the results of RBW and RSE, respectively. As shown in Table 3, RBW required only a single session to meet criterion for the first three stages. In the fourth session, the words were faded out. However, RBW showed only 86% accuracy when the words were no longer present. Nevertheless, of the 28 trials at that final step, RBW's responses were correct for the last 19 consecutive trials. Therefore, she progressed to the final stage, in which the stimulus durations were standardized at 150ms (Sessions 5 and 6), and showed 100% accuracy.

Table 3.

Auditory Superimposition-and-Fading Protocol Stage and Results for RBW

| Session Number | Protocol Stage | Session Accuracy in Percent |
|---|---|---|
| 1 | words only | 100 |
| 2 | fade-in tones | 100 |
| 3 | words and tones | 100 |
| 4 | fade-out words | 86ᵃ,ᵇ |
| 5 | final performance: tones only, standardized at 150ms | 100 |
| 6 | final performance: tones only, standardized at 150ms | 100 |

ᵃ Accuracy on the last fading step, in percent.
ᵇ 100% accuracy on the last 19 trials.

Like RBW, RSE (see Table 4) required only a single session for each of the first three stages, but she required many more sessions to complete the protocol. In Session 4, RSE did not meet criterion on the final fading step. In Sessions 5 and 6, her previously accurate word-and-tone performance was recovered. In Session 7, the fading steps were modified so that the tones remained at 100% intensity but the words were faded out only to 13% intensity; she performed with high accuracy. In Session 8, RSE maintained high accuracy with 100%-intensity tones superimposed on 13%-intensity words throughout the session. In Sessions 9 and 10, a new set of fading steps was introduced in an attempt to fade the words more gradually, from 13% to 0%. However, RSE still did not meet criterion on the last fading step, when the tones were presented alone. In Session 11, the fading steps were modified again so that the tones remained at 100% intensity but the words were faded out only from 13% to 5%; she performed with high accuracy. In Session 12, she maintained high accuracy when the entire session consisted of words at 5% intensity and tones at 100% intensity. In Sessions 13–17, the words were again faded more gradually, first from 5% to 0% and then from 3% to 0%, and RSE's accuracy on the last fading step improved from 89% to 100%. In Sessions 18–20, with tones only, RSE performed with high accuracy in two of the three sessions (100% and 94%); it is unclear why her accuracy fell to 69% in Session 19. When the durations of the tone stimuli were standardized at 500ms (Sessions 21–23), RSE met criterion in every session, indicating that she had learned the tone discrimination within the task.
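
Each mixed stage above presents a word and a tone together at stated relative intensities (for example, words at 13% and tones at 100% in Session 8). The following Python sketch shows one way such a superimposition could be computed. It assumes that the intensity percentages scale waveform amplitude linearly (the text does not say whether they refer to amplitude, power, or decibels), a 44.1 kHz sample rate, and a hypothetical 1,000 Hz tone; `sine_tone` and `superimpose` are illustrative names, and a recorded spoken word would replace the stand-in waveform.

```python
import math
from typing import List, Sequence

SAMPLE_RATE = 44100  # assumed; not reported in the text


def sine_tone(freq_hz: float, duration_ms: float, sample_rate: int = SAMPLE_RATE) -> List[float]:
    """Sine tone with peak amplitude 1.0."""
    n = int(round(sample_rate * duration_ms / 1000.0))
    return [math.sin(2.0 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def superimpose(word: Sequence[float], tone: Sequence[float],
                word_pct: float, tone_pct: float) -> List[float]:
    """Mix two waveforms after scaling each by its intensity percentage.

    Assumption: percentages scale amplitude linearly. The shorter signal is
    zero-padded, and the sum is halved so inputs in [-1, 1] stay in [-1, 1].
    """
    n = max(len(word), len(tone))
    mixed = []
    for i in range(n):
        w = word[i] if i < len(word) else 0.0
        t = tone[i] if i < len(tone) else 0.0
        mixed.append(0.5 * (w * word_pct / 100.0 + t * tone_pct / 100.0))
    return mixed


# Usage: words at 13% and tones at 100%, as in RSE's Session 8.
word = sine_tone(220.0, 500.0)   # stand-in for a recorded spoken word (hypothetical)
tone = sine_tone(1000.0, 500.0)  # hypothetical frequency; 500 ms chosen for illustration
trial_audio = superimpose(word, tone, word_pct=13.0, tone_pct=100.0)
print(len(trial_audio))  # 22050
```

Halving the sum keeps the mix within range at the cost of overall level; only the relative word-to-tone levels matter for the fading logic.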

In sum, both participants showed transfer of stimulus control within the same/different, go-left/go-right task from auditory stimuli that they could discriminate (spoken words) to auditory stimuli they initially could not discriminate (tones) via a superimposition-and-fading procedure. Although RBW progressed through the protocol rapidly, RSE required several modifications to the protocol before she demonstrated criterion performance with the tone stimuli. Nevertheless, Study 2 demonstrated a successful procedure for participants who do not readily acquire tone discriminations with minimal prompting.

General Discussion

In Study 1, two of five participants with intellectual disabilities readily acquired an auditory same/different, go-left/go-right discrimination task with sine-wave tone stimuli. These two participants then immediately generalized their performance to new auditory stimuli, recorded spoken words. Finally, the two participants successfully demonstrated face-value performance in a rudimentary auditory assessment task with tone stimuli. For the participants in Study 2, who entered with an existing discrimination of spoken words in the task, tone discrimination was established via a superimposition-and-fading method. Together, the two studies support the feasibility and potential utility of the go-left/go-right task for further development as an auditory assessment procedure. To our knowledge, this is the first demonstration of an entirely nonverbal task that can be used to evaluate same/different judgments of single pairs of successively presented auditory stimuli by individuals with intellectual disabilities.

This demonstration extends previous auditory discrimination methods in important ways. First, as noted earlier, the present go-left/go-right task requires a response on every trial. Thus, unlike a go/no-go task, it does not exclude participants with intellectual disabilities, or any other participants, who may have difficulty withholding a response. Second, consider the more traditional matching-to-sample format of the go-left/go-right task used by Serna et al. (1996): Participants were required to respond to the left key given one auditory sample stimulus and to the right key given the other. Although the two auditory stimuli were technically presented successively, a response requirement and an intertrial interval intervened between their presentations. It is reasonable to assume that the resulting separation in time between the two auditory stimuli (at least several seconds) could present difficulties for participants with intellectual disabilities. The traditional matching format would likely present other problems as well. For example, participants would require separate sample-left and sample-right training for every auditory stimulus pair of experimental interest, because the single-sample go-left/go-right format does not lend itself to generalized performance. The result would be a very cumbersome way to assess discrimination. Similar issues would arise if the auditory matching task of Dube, Green, and Serna (1993) were used for same/different assessment. In their task, each trial consisted of an auditory sample followed by one auditory comparison, and then a second presentation of the same sample followed by the second comparison. Normally capable adult participants indicated which sample/comparison presentation was related (by reflexivity, symmetry, or transitivity) by selecting one of two concurrently available gray rectangles positioned in the upper left or upper right of the computer screen. Each rectangle had been experimentally associated with either the first or the second sample/comparison set in the trial. The procedure proved excellent for examining stimulus equivalence class formation (Sidman, 1971; Sidman & Tailby, 1982) in which all stimuli were auditory. However, as with the Serna et al. (1996) procedure, its memory requirements are greater than might be reasonable for a participant with intellectual disabilities. Further, as an assessment procedure, for example to test discrimination of tone pairs of decreasing disparity, the Dube et al. (1993) procedure would present challenges. Suppose one sought to determine whether participants judged two similar frequencies, 3,000Hz and 2,920Hz, as the same or different. The 3,000Hz stimulus might be played as a sample with 2,920Hz as a comparison. But what would serve as the other comparison? One possibility would be a tone very different from the sample, such as 1,000Hz. However, should a participant select the 2,920Hz tone in this context, we would know only that s/he judges 3,000Hz and 2,920Hz as more similar to one another than 3,000Hz and 1,000Hz; we would not know whether s/he judges 3,000Hz and 2,920Hz as the same, that is, as an undetectable difference. None of these issues arises in the present procedure: the auditory stimuli are presented in relatively rapid succession, as a pair separated by only 500ms, and a single response per pair indicates a same/different judgment, which also makes the procedure relatively efficient.
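
The trial contingencies of the present task can be summarized in a short Python sketch: two tones separated by 500ms, with selection of the left-side visual stimulus reinforced when the tones are identical and of the right-side stimulus when they differ. The names `Trial`, `reinforced_side`, `pair_onsets_ms`, and `percent_correct` are hypothetical, and treating the 500ms as the gap from the offset of the first tone to the onset of the second is an assumption.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

ISI_MS = 500.0  # separation between the two tones, from the text


@dataclass(frozen=True)
class Trial:
    first_hz: float
    second_hz: float

    def reinforced_side(self) -> str:
        """'left' for an identical pair, 'right' for a different pair."""
        return "left" if self.first_hz == self.second_hz else "right"


def pair_onsets_ms(trial: Trial, tone_ms: float) -> List[Tuple[float, float]]:
    """(onset in ms, frequency in Hz) for each tone. Assumes the 500 ms runs
    from the offset of the first tone to the onset of the second."""
    return [(0.0, trial.first_hz), (tone_ms + ISI_MS, trial.second_hz)]


def percent_correct(trials: Sequence[Trial], choices: Sequence[str]) -> float:
    """Percent of trials on which the selected side was the reinforced side."""
    if len(trials) != len(choices) or not trials:
        raise ValueError("need one choice per trial and at least one trial")
    hits = sum(t.reinforced_side() == c for t, c in zip(trials, choices))
    return 100.0 * hits / len(trials)


# Usage with the frequencies from the example above.
probe = Trial(3000.0, 2920.0)
print(probe.reinforced_side())       # right
print(pair_onsets_ms(probe, 150.0))  # [(0.0, 3000.0), (650.0, 2920.0)]
```

A single selection per pair yields the same/different judgment directly; the 3,000Hz/2,920Hz pair differs by 80Hz, or about 2.7% of 3,000Hz.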

The findings from the present report also suggest questions for additional study. Most studies of auditory discrimination or perception in individuals with autism, for example (e.g., Bonnel et al., 2003), focus on populations that are “high-functioning” and therefore require participants to have enough receptive language to understand relatively complex task instructions. The present nonverbal task does not require such skills. Indeed, the participants in the present studies would be considered “lower functioning,” even though they all had some language skills. Although this was not examined here, it is unknown whether the participants could have expressed in simple verbal terms whether they regarded the pairs of stimuli as the same or different. However, in a study using a yes/no visual-discrimination task analogous to the present procedure, Serna, Oross, and Murphy (1998) found that one verbal participant with intellectual disabilities (CA 18-10; eMA 7-6; similar in functioning level to those in the present study) was unable to complete a verbal-response version of the task but had no difficulty completing a nonverbal version. This finding suggests that even for individuals whose functioning level permits verbal responses, a nonverbal task such as the present one may be more practical. Of course, a formal study of this question is needed before firm conclusions can be drawn.

The present study also raises questions about the generality of the findings to other individuals with intellectual disabilities. Only two of the initial five participants readily acquired the task with tone discriminations in Study 1. However, two additional participants (RSE and JAR) acquired word discriminations in an informal follow-up investigation. In Study 2, RSE and a naïve participant, RBW, eventually acquired the tone discrimination in the go-left/go-right task via a stimulus control shaping method. More in-depth manipulations are needed before one can assert that the present method is fully reliable. For example, the present study did not address the extent to which participants who undergo prolonged stimulus control shaping to establish a tone discrimination will generalize their performance to other stimulus pairs of experimental interest. Neither RSE nor RBW was available for extended generalization testing, and in a brief, informal follow-up investigation, their performance did not always indicate accurate generalization. A study with a larger group of participants, perhaps including several groups at graded functioning levels, would help address this question. For participants who do not acquire the task with any auditory stimuli (e.g., MES in the present study), methods for establishing the first instances of generalized conditional auditory stimulus-stimulus relations based on identity require further research.

Finally, should additional research in this area ultimately yield a reliable, general-purpose auditory assessment procedure, the task could be used in several ways. For example, the procedure may be useful for measuring psychophysical performance with a variety of auditory stimuli; one could readily envision testing a more extensive set of graded-disparity pairs from which an auditory pitch-discrimination threshold could be calculated. Other stimulus characteristics relevant to speech, such as duration, could be assessed as well. More generally, the assessment could be used to verify the discriminability of auditory stimuli before they are used in an auditory-visual matching-to-sample task. Of course, an additional procedure would be needed to verify discriminability when the stimuli are separated in time, as is the case in most auditory-visual matching tasks. Nevertheless, the assessment task could ultimately play a significant role in understanding more fully why auditory learning sometimes fails to occur.
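
As one illustration of how a pitch-discrimination threshold might be computed from graded-disparity pairs, the sketch below uses a simple method-of-constant-stimuli summary: for each frequency disparity, the proportion of "different" (go-right) selections, with the threshold taken as the disparity at which that proportion first reaches a criterion, linearly interpolated between tested disparities. The 75% criterion, the function name `interpolated_threshold`, and the input values are assumptions for illustration only, not procedures or data from this study; a fuller analysis would typically fit a psychometric function and account for "different" responses to identical pairs.

```python
from typing import Optional, Sequence


def interpolated_threshold(
    disparities_hz: Sequence[float],
    p_different: Sequence[float],
    criterion: float = 0.75,
) -> Optional[float]:
    """Smallest disparity (Hz) at which the proportion of 'different'
    responses reaches `criterion`, linearly interpolated between the two
    tested disparities that bracket it. Disparities must be ascending.
    Returns None if the criterion is never reached."""
    if len(disparities_hz) != len(p_different):
        raise ValueError("one proportion per disparity is required")
    points = list(zip(disparities_hz, p_different))
    if not points:
        return None
    if points[0][1] >= criterion:
        return points[0][0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 < criterion <= y1:  # implies y1 > y0, so no division by zero
            return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)
    return None


# Hypothetical proportions, for illustration only (not data from this study).
threshold = interpolated_threshold([10.0, 20.0, 40.0, 80.0], [0.5, 0.625, 0.875, 1.0])
print(threshold)  # 30.0
```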

Acknowledgments

This research was supported by a grant from the Eunice Kennedy Shriver National Institute of Child Health and Human Development to the University of Massachusetts Medical School, Shriver Center, HD25995.

References

- Abbeduto L, Furman L, Davies B. Relation between the receptive language and mental age of persons with mental retardation. American Journal on Mental Retardation. 1989;93:535–543.

- Barker-Collo S, Jamieson J, Boo F. Assessment of Basic Learning Abilities test: Prediction of communication ability in persons with developmental disabilities. International Journal of Practical Approaches to Disability. 1995;19:23–28.

- Bonnel A, Mottron L, Peretz I, Trudel M, Gallun E, Bonnel AM. Enhanced pitch sensitivity in individuals with autism: A signal detection analysis. Journal of Cognitive Neuroscience. 2003;15(2):226–235. doi: 10.1162/089892903321208169.

- Chapman RS, Schwartz SE, Kay-Raining Bird E. Language skills of children and adolescents with Down syndrome: I. Comprehension. Journal of Speech and Hearing Research. 1991;34:1106–1120. doi: 10.1044/jshr.3405.1106.

- D'Amato MR, Colombo M. Auditory matching-to-sample in monkeys (Cebus apella). Animal Learning and Behavior. 1985;13:375–382.

- D'Amato MR, Worsham RW. Retrieval cues and short-term memory in capuchin monkeys. Journal of Comparative and Physiological Psychology. 1974;86:274–282.

- Dube WV, Hiris EJ. MTS v 11.6.7 Documentation [Computer software manual]. Waltham, MA: E. K. Shriver Center; 1999.

- Dube WV. Computer software for stimulus control research with Macintosh computers. Experimental Analysis of Human Behavior Bulletin. 1991;9:28–30.

- Dube WV, Lombard KM, Farren KM, Flusser DS, Balsamo LM, Fowler TR, Tomanari GY. Stimulus overselectivity and observing behavior in individuals with mental retardation. In: Murata-Soraci K, Cook R, Soraci S, editors. Perspectives on Fundamental Processes in Intellectual Functioning: Vol 2. Visual information processing: Implications for understanding individual differences. Stamford, CT: Ablex; 2003. pp. 109–123.

- Dube WV, Green G, Serna RW. Auditory successive conditional discrimination and auditory stimulus equivalence classes. Journal of the Experimental Analysis of Behavior. 1993;59:103–114. doi: 10.1901/jeab.1993.59-103.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. Third Edition. Circle Pines, MN: American Guidance Service, Inc; 1997.

- Dunn LM, Dunn DM. Peabody Picture Vocabulary Test. Fourth Edition. Minneapolis, MN: NCS Pearson, Inc; 2007.

- Etzel BC, LeBlanc JM. The simplest treatment alternative: The law of parsimony applied to choosing appropriate instructional control and errorless-learning procedures for the difficult-to-teach child. Journal of Autism and Developmental Disorders. 1979;9:361–382. doi: 10.1007/BF01531445.

- Green G. Differences in development of visual and auditory-visual equivalence relations. American Journal on Mental Retardation. 1990;95:260–270.

- Jones CRG, Happé F, Baird G, Simonoff E, Marsden AJS, Tregay J, Phillips RJ, Goswami U, Thomson JM, Charman T. Auditory discrimination and auditory sensory behaviours in autism spectrum disorders. Neuropsychologia. 2009;47:2850–2858. doi: 10.1016/j.neuropsychologia.2009.06.015.

- Klinger L, Dawson G, Renner P. Autistic disorder. In: Mash E, Barkley R, editors. Child psychopathology. 2nd edn. New York: Guilford Press; 2002. pp. 409–454.

- McIlvane WJ, Dube WV. Stimulus control topography coherence theory: Foundations and extensions. The Behavior Analyst. 2003;26:195–213. doi: 10.1007/BF03392076.

- McIlvane WJ, Dube WV. Stimulus control shaping and stimulus control topographies. The Behavior Analyst. 1992;15:89–94. doi: 10.1007/BF03392591.

- McIlvane WJ, Stoddard LT. Acquisition of matching-to-sample performances in severe mental retardation: Learning by exclusion. Journal of Mental Deficiency Research. 1981;25:33–48. doi: 10.1111/j.1365-2788.1981.tb00091.x.

- McIlvane WJ, Stoddard LT. Complex stimulus relations and exclusion in mental retardation. Analysis and Intervention in Developmental Disabilities. 1985;5:307–321.

- McIlvane WJ, Serna RW, Dube WV, Stromer R. Stimulus control topography coherence and stimulus equivalence: Reconciling test outcomes with theory. In: Leslie J, Blackman DE, editors. Issues in experimental and applied analyses of human behavior. Reno: Context Press; 2000. pp. 85–110.

- Meyerson L, Kerr N. Teaching auditory discriminations to severely retarded children. Rehabilitation Psychology. 1977;24(Monograph Issue):123–128.

- Miller JF. Language and communication characteristics of children with Down syndrome. In: Crocker A, Pueschel S, Rynders J, Tingey C, editors. Down syndrome: State of the art. Baltimore: Brookes; 1987. pp. 233–262.

- Moore BCJ. Frequency difference limens for short-duration tones. The Journal of the Acoustical Society of America. 1973;54(3):610–619. doi: 10.1121/1.1913640.

- Schreibman L, Charlop MH, Koegel RL. Teaching autistic children to use extra-stimulus prompts. Journal of Experimental Child Psychology. 1982;33:475–491. doi: 10.1016/0022-0965(82)90060-1.

- Schreibman L, Kohlenberg BS, Britten KR. Differential responding to content and intonation components of a complex auditory stimulus by nonverbal and verbal autistic children. Analysis and Intervention in Developmental Disabilities. 1986;6:109–125.

- Serna RW, Jeffery JA, Stoddard LT. Establishing go-left/go-right auditory discrimination baselines in an individual with severe mental retardation. The Experimental Analysis of Human Behavior Bulletin. 1996;14:18–23.

- Serna RW, Oross S, Murphy NA. Nonverbal assessment of line-orientation in individuals with mental retardation. The Experimental Analysis of Human Behavior Bulletin. 1998;16:13–15.

- Serna RW, Wilkinson KM, McIlvane WJ. Blank-comparison assessment of stimulus-stimulus relations in individuals with mental retardation. American Journal on Mental Retardation. 1998;103:60–74. doi: 10.1352/0895-8017(1998)103<0060:BAOSRI>2.0.CO;2.

- Serna RW, Stoddard LT, McIlvane WJ. Developing auditory stimulus control: A note on methodology. Journal of Behavioral Education. 1992;2:391–403.

- Sidman M, Tailby W. Conditional discrimination vs matching-to-sample: An expansion of the testing paradigm. Journal of the Experimental Analysis of Behavior. 1982;37:5–22. doi: 10.1901/jeab.1982.37-5.

- Sidman M. Reading and auditory-visual equivalences. Journal of Speech and Hearing Research. 1971;14:5–13. doi: 10.1044/jshr.1401.05.

- Stoddard LT. An investigation of automated methods for teaching severely retarded individuals. In: Ellis NR, editor. International review of research in mental retardation. New York: Academic Press; 1982. pp. 163–207.

- Stoddard LT, McIlvane WJ. Establishing auditory stimulus control in profoundly retarded individuals. Research in Developmental Disabilities. 1989;10:141–151. doi: 10.1016/0891-4222(89)90003-6.

- Stoddard LT, McIlvane WJ. Generalization after intradimensional discrimination training in 2-year old children. Journal of Experimental Child Psychology. 1989;47:324–334. doi: 10.1016/0022-0965(89)90035-0.

- Terrace HS. Errorless transfer of a discrimination across two continua. Journal of the Experimental Analysis of Behavior. 1963;6:223–232. doi: 10.1901/jeab.1963.6-223.

- Vause T, Martin GL, Yu DCT. ABLA test performance, auditory matching, and communication ability. Journal on Developmental Disabilities. 2000;7:123–141.