Abstract

Previous studies have shown overlapping neural activations for observation and execution or imitation of emotional facial expressions. These shared representations have been assumed to provide indirect evidence for a human mirror neuron system, which is suggested to be a prerequisite of action comprehension. We aimed at clarifying whether shared representations in and beyond human mirror areas are specifically activated by affective facial expressions or whether they are activated by facial expressions independent of the emotional meaning. During neuroimaging, participants observed and executed happy and non-emotional facial expressions. Shared representations were revealed for happy facial expressions in the pars opercularis, the precentral gyrus, in the superior temporal gyrus/medial temporal gyrus (MTG), in the pre-supplementary motor area and in the right amygdala. All areas showed less pronounced activation in the non-emotional condition. When directly compared, significantly stronger neural responses emerged for happy facial expressions than for non-emotional stimuli in the pre-supplementary motor area and in the MTG. We assume that activation of shared representations depends on the affect and (social) relevance of the facial expression. The pre-supplementary motor area is a core shared-representation structure supporting observation and execution of affective contagious facial expressions and might have a modulatory role during the preparation of executing happy facial expressions.

Keywords: emotion, face, fMRI, mirror neurons

INTRODUCTION

For our daily social interactions it is essential to communicate our own emotions through facial expressions appropriately. To interact successfully, it is crucial that we understand the emotional facial expressions of our interaction partner. The neural basis of action understanding including the comprehension of emotions is still under debate.

According to the visual hypothesis, action understanding is based on the visual analysis of different action-elements (Rizzolatti et al., 2001; for an exemplary study, see Haxby et al., 1994). In contrast to this, the direct matching hypothesis suggests the understanding of an action to be partly based on the projection of the observed action onto motor and pre-motor areas (Gallese et al., 1996; Iacoboni et al., 1999; Rizzolatti et al., 2001). By this, the observer's motor system ‘resonates’ and enables the observer to understand the perceived action (Rizzolatti et al., 2001; Rizzolatti and Craighero, 2004; van der Gaag et al., 2007). This hypothesis was based on the discovery of special visuomotor neurons in macaque monkeys (di Pellegrino et al., 1992). These so-called mirror neurons (MNs) are embedded in premotor cortices (di Pellegrino et al., 1992; Gallese et al., 1996; Umilta et al., 2001; Kohler et al., 2002; Ferrari et al., 2003) and the anterior inferior parietal lobule (aIPL) (Fogassi and Luppino, 2005; Rozzi et al., 2008). They discharge during execution but also during observation of an action (Rizzolatti and Craighero, 2004). Most studies in monkeys have examined hand and arm movements (e.g. di Pellegrino et al., 1992; Gallese et al., 1996; Nelissen et al., 2005). In addition, MNs have been found for communicative mouth actions (Ferrari et al., 2003). Based on studies showing that MNs also code abstract aspects of actions (e.g. Kohler et al., 2002), they are suggested to be the basis for understanding the intention of an action (Iacoboni and Dapretto, 2006).

To identify putative MN areas in the human brain, activation patterns during observation and execution and/or imitation of hand or arm movements (e.g. Iacoboni et al., 1999; Koski et al., 2002; Kilner et al., 2006; Gazzola et al., 2007) and facial emotional expressions (Carr et al., 2003; Leslie et al., 2004; Hennenlotter et al., 2005; Montgomery and Haxby, 2008) have been examined by means of neuroimaging. In the first study in which conjoint activations for emotional facial expressions were examined in this way, a number of distributed brain regions including the inferior frontal gyrus (IFG), the posterior parietal cortex, the insula, and the amygdala were found to be activated during both imitation and pure observation of emotional facial expressions (Carr et al., 2003). A subsequent study that used videos of dynamic facial expressions instead of still photographs largely replicated these findings (Leslie et al., 2004). A further refinement of this experimental approach was introduced by Hennenlotter et al. (2005), who compared activity across observation and pure execution without observation to rule out that any overlapping activity might be due to common visual input during both experimental conditions. Later, van der Gaag and colleagues (2007, p. 81) showed that the insula, the frontal operculum, the pre-supplementary motor area (pre-SMA), and the superior temporal sulcus (STS) are more involved in observation and (delayed) imitation of emotional in contrast to non-emotional facial expressions. Activation of single cells of the SMA during both observation and execution of emotional facial expressions was shown in patients suffering from drug refractory seizure (Mukamel, 2010, p. 541). Finally, Anders et al. (2011) used an interactive setting, in which a sender and a perceiver communicated by means of a video camera during fMRI, to demonstrate that emotion-specific information is encoded by highly similar signals in a distributed ‘shared’ network in the sender's and the perceiver's brain.

Based on these findings, it has been suggested that a putative MNS for affect might comprise additional brain regions beyond the ‘classical’ frontal and parietal mirror areas. Specifically, overlapping insula activity has been interpreted as providing evidence for a role of this area as a ‘relay’ from action to emotion representation (Carr et al., 2003; for interconnections of the insula with the somatosensory cortex and their importance for simulation, see also Keysers et al., 2010). Activation of the insula has been associated with the subjective feeling of emotions (Craig, 2002; Wicker et al., 2003), understanding facial expressions (Adolphs et al., 2003), and with self-reported empathy scores (Jabbi et al., 2007). Furthermore, right insula and the adjacent frontal operculum are functionally connected with the IFG, when people observe emotional facial expressions (Jabbi and Keysers, 2008). And the insula is strongly interconnected with the amygdala (Craig, 2002).

To the best of our knowledge, ‘shared representations’ for observing and executing emotional facial expressions have never been directly compared with shared representations for non-emotional facial expressions. In fact, studies often combined visual input and execution in a (delayed) imitation condition (Carr et al., 2003; van der Gaag et al., 2007), or did not include a non-emotional facial expression (Carr et al., 2003; Hennenlotter et al., 2005). In the case of the imitation studies, it remains open whether activation during movement execution was driven by the visual input or by the executed movement. In the case of the missing non-emotional expression, it is still open whether activation is really affect-specific. We wanted to address these open questions by examining and contrasting shared execution–observation representations for happy and non-emotional dynamic facial expressions. We hypothesized that execution and observation of both happy and non-emotional facial expressions would commonly activate premotor areas including the pars opercularis of the IFG, the aIPL and superior temporal cortices. In contrast, the insula, the amygdala, and pre-SMA were hypothesized to be more strongly activated by happy than by non-emotional facial expressions.

METHODS

Subjects

Thirty-two right-handed volunteers (15 males, 17 females) participated in the study. The average age was M = 24.6 years (s.d. = 5.44) and the average school education was 12.85 years (s.d. = 0.64). Right-handedness was confirmed by the Edinburgh inventory (Oldfield, 1971). Participants had normal or corrected-to-normal vision and were excluded if they had neurological or psychiatric disorders. All participants were paid for their participation and, prior to participation, gave written informed consent to the study protocol, which was approved by the local ethics committee (according to the declaration of Helsinki).

Stimuli

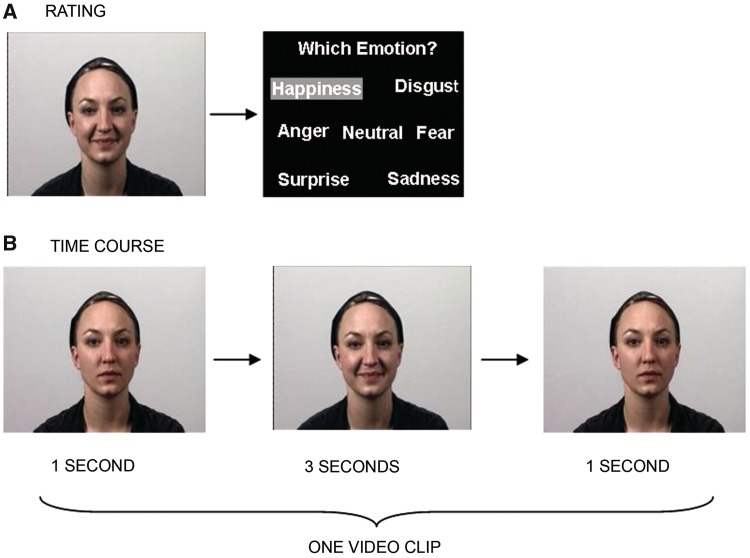

The stimulus material was produced in an in-house media center and encompassed video clips depicting actors performing different facial expressions and video clips showing scrambled facial silhouettes. Facial expressions were displayed by 24 professional actors (12 males, 12 females) aged between 19 and 39 years, who were filmed with a Sony DVX 2000® video camera. Each actor displayed a complete set of facial expressions including six basic emotions (happiness, sadness, anger, disgust, surprise and anxiety), 16 non-emotional facial expressions (among others lip protrusion, mouth opening and blowing up the cheeks), and neutral (amimic) faces. Each clip lasted for 5 s. The actor started with a neutral face (duration = 1 s); then, a facial gesture was performed (duration = 3 s). The clip ended with the actor showing a neutral face again (duration = 1 s; Figure 1B). All videos were evaluated in a behavioral pilot study (15 males, 15 females) in which raters were asked to rate and categorize the shown expressions into one of seven categories (Figure 1A). For the present fMRI study, videos of happy facial expressions [average recognition rate 99.4% (s.d. = 0.01)], non-emotional facial expressions [lip protrusion, average recognition rate 97.9% (s.d. = 0.03)] and neutral facial expressions [without deliberate movement, average recognition rate 97.9% (s.d. = 0.03)] were included.

Fig. 1.

(A) All video clips depicting actors were rated in a prior behavioral study. Participants of the rating study watched each video clip. Afterwards, they had to categorize the video to either one of the six basic emotions or to the neutral category. (B) Time course of one exemplary video clip. Each video started and ended with the actor having a neutral face without any movement.

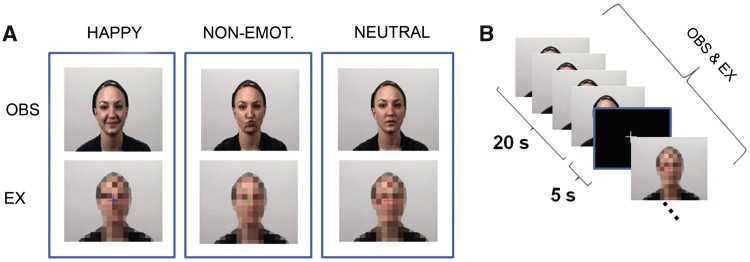

For the execution condition, video clips were created that showed facial silhouettes scrambled by moving squares (Figure 2). These scrambled videos were generated using Photoshop CS3 10.0® and Adobe Premiere Pro CS3®. One frame of the original video clips served as a reference and the actor's face was scrambled with the pixelate mosaic effect. To implement movement, the color of the squares was changed frame-by-frame. The color and figure-ground configuration were congruent with the video clips depicting the actors. The single frames were finally assembled into a video lasting 5 s. First, only the scrambled figure was presented. After 1 s, a colored fixation cross was projected onto the video for 3 s to provide a temporal cue for movement execution (see following section ‘Experimental paradigm’). Thereafter, the cross disappeared for the remaining 1 s and participants were instructed to stop performing the facial gesture. The timing of the fixation cross matched the execution time of the facial expressions (Figure 1B).

Fig. 2.

(A) Video clips were used as stimulus material in all conditions. In the observation condition, participants were presented with videos showing actors demonstrating a happy facial expression, a non-emotional facial movement, or a neutral face without any movement. Participants were asked to observe the actors without making any movement themselves. In the execution condition, scrambled video clips were presented; a colored fixation cross in the middle of the scramble indicated which movement had to be executed by the participants. (B) One block encompassed 4 video clips of 5 s each. Blocks were separated by a low-level baseline of 5 s duration.

Experimental paradigm

Before fMRI scanning, participants were familiarized with the task. They were instructed that they would see video clips depicting either actors showing a facial expression (happy, lip protrusion or neutral face without movement) or scrambled faces. For the facial expressions, participants were to observe the video clips without making any movement. For the scrambled faces, they were to execute the facial expressions (happy, lip protrusion or neutral) themselves for as long as the cross was projected onto the scrambled video. The color of the fixation cross indicated which facial gesture had to be executed by the participants. While observing or executing happy facial expressions, participants were asked to also put themselves into a happy mood.

Stimuli were presented in a block design with the 3 × 2 factors ‘facial expression’ (happy, non-emotional or neutral) and ‘task’ (observe or execute). Each of the two experimental runs comprised 18 blocks (3 of each condition).

Each block lasted 20 s and consisted of four videos of the same facial expression. The order of blocks was pseudo-randomized (three randomization sequences existed, randomly assigned to the participants). A low-level baseline (white fixation cross on black background) was presented between the blocks for 5 s. Additionally, the low-level baseline was presented 6 times per run for 20 s to allow the hemodynamic response to decline.
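The block structure described above can be checked with a short calculation. The following is a minimal sketch, not the presentation script used in the study. It assumes that exactly one 5 s baseline separates consecutive blocks and that no further pauses occur; the position of the six 20 s baselines within a run is not stated, so only totals are computed. All names are hypothetical.

```python
from itertools import product

# Values taken from the text
CLIP_S = 5                 # duration of one video clip (s)
CLIPS_PER_BLOCK = 4        # clips of the same expression per block
SHORT_BASELINE_S = 5       # fixation cross between blocks (s)
LONG_BASELINE_S = 20       # additional long baseline (s)
N_LONG_BASELINES = 6       # long baselines per run
REPEATS_PER_CONDITION = 3  # blocks per condition and run

EXPRESSIONS = ("happy", "non-emotional", "neutral")
TASKS = ("observe", "execute")


def conditions():
    """All cells of the 3 x 2 design."""
    return list(product(EXPRESSIONS, TASKS))


def blocks_per_run():
    return len(conditions()) * REPEATS_PER_CONDITION


def run_duration_s():
    """Total duration of one run in seconds.

    ASSUMPTION: one short baseline between consecutive blocks,
    none after the last block, and no other pauses.
    """
    n_blocks = blocks_per_run()
    block_s = CLIP_S * CLIPS_PER_BLOCK
    short_total = (n_blocks - 1) * SHORT_BASELINE_S
    long_total = N_LONG_BASELINES * LONG_BASELINE_S
    return n_blocks * block_s + short_total + long_total


print(blocks_per_run(), run_duration_s())  # 18 blocks, 565 s under these assumptions
```

Under these assumptions, one run lasts roughly nine and a half minutes; a different placement of the baselines would change the total only slightly.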

The stimuli were presented with MR-compatible goggles (Resonance Technology, Inc. Northridge, CA, USA) using the Presentation© software package (Neurobehavioral Systems, Inc., Albany, CA, USA).

Since there is considerable evidence for the contagiousness of emotional facial expressions (Dimberg and Petterson, 2000; Dimberg et al., 2000), it was important for us to control participants' compliance with the task using a scanner-compatible video camera. The goal of our study was to explore shared representations of observation and execution in premotor cortices and, therefore, this technique had to ensure that the participants did not overtly mimic facial expressions during the observation condition. The tapes were judged online and after the experiment by a certified rater using the ‘Facial Action Coding System’ (FACS; Ekman and Friesen, 1978). The ongoing experiment was stopped if a participant mimicked the actor's facial gesture overtly during the observation condition or executed wrong expressions during the execution condition. In this case, participants were instructed again and the experiment was restarted. One participant had to be excluded because of repeated mimicry during the observation condition.

After the fMRI recording, a post-scanning questionnaire was completed by the participants to assess their emotional experience during the experiment. Participants were asked to rate how much happiness they felt during each condition on a 7-point Likert scale (1 = ‘not at all’ to 7 = ‘very strong’). Questionnaire data were analyzed using SPSS 15.0 (Statistical Package for the Social Sciences, Version 15.0, SPSS Inc., USA). A repeated measures ANOVA and post hoc paired t-tests were calculated between self-ratings of the experimental conditions to clarify whether the participants felt happier in the emotional than in the non-emotional conditions.

FMRI acquisition parameters and analyses

Functional T2*-weighted images were obtained with a Siemens 3-Tesla MR-scanner using echo planar imaging (EPI; TR = 2200 ms, TE = 30 ms, flip angle 90°, FoV 224 mm, base resolution 64, in-plane voxel size 3.5 × 3.5 mm, 36 slices with slice thickness 3.5 mm, distance factor 10%).
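The geometry implied by these EPI parameters can be derived with simple arithmetic. The sketch below assumes a square field of view and matrix and interprets the distance factor as an inter-slice gap expressed as a fraction of the slice thickness; the function name is hypothetical.

```python
def epi_geometry(fov_mm=224.0, base_resolution=64, n_slices=36,
                 slice_mm=3.5, distance_factor=0.10):
    """Return (in-plane voxel edge, slice gap, slab coverage) in mm.

    ASSUMPTIONS: square FoV and matrix; the distance factor is the gap
    between adjacent slices as a fraction of the slice thickness.
    """
    in_plane_mm = fov_mm / base_resolution
    gap_mm = slice_mm * distance_factor
    coverage_mm = n_slices * slice_mm + (n_slices - 1) * gap_mm
    return in_plane_mm, gap_mm, coverage_mm


in_plane, gap, coverage = epi_geometry()
print(in_plane, round(gap, 2), round(coverage, 2))
```

With the reported values, this gives an in-plane voxel edge of 3.5 mm, a gap of 0.35 mm and a slab coverage of about 138 mm.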

High-resolution T1-weighted anatomical 3D Magnetization Prepared Rapid Gradient Echo (MP-RAGE) images (TR 1900 ms, TE 2.52 ms, TI 900 ms, flip angle 9°, FoV 250 mm, 256 × 256 matrix, 176 slices per slab, 1 mm isotropic resolution) were acquired at the end of the experimental runs.

Data processing and statistical analysis of the imaging data were performed using SPM5 (Wellcome Department of Imaging Neuroscience, London, UK) implemented in Matlab 7.2 (Mathworks Inc., Sherborn, MA, USA). The first five EPI volumes were discarded to allow for T1 equilibration effects. The remaining functional images were realigned to the first image to correct for head motion (Ashburner and Friston, 2003). Head motion of <4 mm and <3° was accepted. Four subjects did not meet these requirements and were excluded from further analyses. Considering also the subject with unreliable facial responses, 27 out of 32 participants could be evaluated. For each participant, the T1 image was co-registered to the mean image of the realigned functional images. The mean functional image was normalized to the MNI template (Montreal Neurological Institute; Evans et al., 1992; Collins et al., 1994) using a segmentation algorithm (Ashburner and Friston, 2005). Normalization parameters were applied to all EPI images and the T1 image. The images were resampled to 1.5 × 1.5 × 1.5 mm voxel size and spatially smoothed with an 8 mm full width half maximum (FWHM) isotropic Gaussian kernel.
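The motion criterion above can be expressed as a simple check on the six realignment parameters per volume. This is only a sketch: it assumes that translations are given in mm and rotations in radians, and that "head motion" refers to the maximum absolute deviation from the reference image; the text does not specify the exact measure. The function name and example values are hypothetical.

```python
import math


def within_motion_limits(params, max_trans_mm=4.0, max_rot_deg=3.0):
    """Check one participant's realignment parameters.

    params: iterable of (tx, ty, tz, rx, ry, rz) per volume,
            translations in mm, rotations in radians (ASSUMPTION).
    Returns True if every translation is < 4 mm and every rotation < 3 deg.
    """
    for tx, ty, tz, rx, ry, rz in params:
        if max(abs(tx), abs(ty), abs(tz)) >= max_trans_mm:
            return False
        if max(abs(math.degrees(r)) for r in (rx, ry, rz)) >= max_rot_deg:
            return False
    return True


# Made-up example values, for illustration only
ok_subject = [(0.5, -1.2, 0.3, 0.01, -0.02, 0.0)]
moving_subject = [(0.5, 4.2, 0.3, 0.01, -0.02, 0.0)]
print(within_motion_limits(ok_subject), within_motion_limits(moving_subject))
```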

Data were subsequently analyzed by a two-level approach. Using a general linear model (GLM), each experimental condition and stimulus was modeled on the single-subject level with a separate regressor convolved with a canonical hemodynamic response function and its first temporal derivative. The inclusion of the temporal derivative should minimize effects of imprecision in the modeling of the hemodynamic response. Six additional regressors were included into the GLM as covariates of no interest to model variance related to absolute head motion.
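How a block regressor and its temporal derivative arise from this model can be illustrated as follows. This is a simplified sketch and not the SPM5 implementation: it assumes a double-gamma response function with commonly used shape parameters (peak shape 6, undershoot shape 16, ratio 1/6), approximates the temporal derivative by a finite difference, and uses a single hypothetical block onset at 10 s.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0.0:
        return 0.0
    return t ** (shape - 1.0) * math.exp(-t) / math.gamma(shape)


def canonical_hrf(t):
    """Double-gamma response (ASSUMED parameters, see lead-in)."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def convolve(signal, kernel, dt):
    """Causal discrete convolution, truncated to len(signal)."""
    out = [0.0] * len(signal)
    for i, s in enumerate(signal):
        if s == 0.0:
            continue
        for j, k in enumerate(kernel):
            if i + j >= len(out):
                break
            out[i + j] += s * k * dt
    return out


def block_regressors(onsets_s, block_s, n_scans, tr_s=2.2, dt=0.1, hrf_len_s=32.0):
    """Return (main, derivative) regressors sampled once per scan."""
    n_fine = int(round(n_scans * tr_s / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + block_s) / dt)))
        for i in range(start, stop):
            boxcar[i] = 1.0
    n_hrf = int(round(hrf_len_s / dt))
    hrf = [canonical_hrf(i * dt) for i in range(n_hrf)]
    # Finite-difference approximation of the temporal derivative
    dhrf = [(hrf[i + 1] - hrf[i]) / dt for i in range(n_hrf - 1)] + [0.0]
    main_fine = convolve(boxcar, hrf, dt)
    deriv_fine = convolve(boxcar, dhrf, dt)
    scan_idx = [int(round(k * tr_s / dt)) for k in range(n_scans)]
    return [main_fine[i] for i in scan_idx], [deriv_fine[i] for i in scan_idx]


# One hypothetical 20 s block starting at 10 s, 40 scans at TR = 2.2 s
main, deriv = block_regressors([10.0], 20.0, 40)
```

The derivative regressor is positive shortly after block onset and negative after block offset, which allows the fit to absorb small shifts in response latency.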

The parameter estimates for each voxel were calculated using maximum likelihood estimation and corrected for non-sphericity. First-level contrasts were computed using baseline contrasts and afterwards fed into a flexible factorial second-level group analysis using an ANOVA (factor: condition; blocking factor: subject). The factor condition encompassed 6 levels:

Happy_Observation (H_OBS);

Non-Emotional_Observation (NE_OBS);

Neutral_Observation (N_OBS);

Happy_Execution (H_EX);

Non-Emotional_Execution (NE_EX); and

Neutral_Execution (N_EX)

To identify shared representations for observation and execution of the happy facial gesture, a conjunction null analysis was performed according to the following formula: (H_OBS > N_OBS) ∩ (H_EX > N_EX). Our analysis focused on areas that were actively involved in execution as well as observation of happy facial expressions, as opposed to areas that were merely less inhibited than in the neutral condition. Therefore, we applied inclusive masking with H_OBS and with H_EX (each T > 3.14) to this contrast. Even though inclusive masking was applied, the conjunction analysis was calculated for the whole brain and thus avoided biased statistics. Calculations for the non-emotional (NE) facial gesture were performed analogously to the happy facial condition: (NE_OBS > N_OBS) ∩ (NE_EX > N_EX), masked inclusively with NE_OBS and NE_EX (T > 3.14).

To further specify contributions from the emotional condition, the following analysis was performed: (H_OBS > NE_OBS) AND (H_EX > NE_EX) masked inclusive with H_OBS and H_EX (T > 3.14).
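The logic of the masked conjunction analyses can be sketched on toy data. A conjunction null test is commonly evaluated with the minimum statistic, that is, a voxel counts as conjointly activated only if the smaller of the two T values exceeds the critical threshold. The sketch below assumes this minimum-statistic formulation; the critical value `t_crit` stands in for the FWE-corrected threshold, which is not reported as a T value in the text, and all voxel values are made up.

```python
T_MASK = 3.14  # inclusive masking threshold from the text


def conjunction_null(t_a, t_b):
    """Minimum statistic across two contrasts, voxel by voxel."""
    return [min(a, b) for a, b in zip(t_a, t_b)]


def shared_representation(t_obs_contrast, t_ex_contrast,
                          t_obs_baseline, t_ex_baseline, t_crit):
    """Voxels surviving the conjunction and both inclusive masks.

    t_obs_contrast / t_ex_contrast: e.g. H_OBS > N_OBS and H_EX > N_EX
    t_obs_baseline / t_ex_baseline: e.g. H_OBS and H_EX versus baseline
    t_crit: critical T value (HYPOTHETICAL placeholder for the FWE threshold)
    """
    conj = conjunction_null(t_obs_contrast, t_ex_contrast)
    return [
        c > t_crit and m_obs > T_MASK and m_ex > T_MASK
        for c, m_obs, m_ex in zip(conj, t_obs_baseline, t_ex_baseline)
    ]


# Four made-up voxels, for illustration only
h_obs_gt_n = [6.1, 5.2, 2.0, 5.5]
h_ex_gt_n = [5.4, 3.0, 6.0, 5.9]
h_obs = [7.0, 6.0, 4.0, 2.5]
h_ex = [6.5, 5.0, 7.0, 6.0]

print(shared_representation(h_obs_gt_n, h_ex_gt_n, h_obs, h_ex, t_crit=4.7))
# [True, False, False, False]
```

Only the first voxel survives: the second and third fail the conjunction because one of the two contrasts is too weak, and the fourth passes the conjunction but fails the inclusive mask for observation.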

All whole brain analyses are reported significant at a threshold of P < 0.05, family-wise error (FWE) corrected. Brain structures were labeled using the Anatomy Toolbox v 1.6 (Eickhoff et al., 2005, 2007).

Regions of interest (ROIs) derived from the literature (see ‘Introduction’ section and Table 1) were subjected to a hypothesis-driven ROI analysis. Separate ROI analyses were calculated for all hypothesized regions that did not emerge in the whole brain analysis. The calculation and masking procedure was the same as in the whole brain analysis. For each ROI, a mask encompassing one ROI in one hemisphere was obtained from the AAL atlas embedded in the WFU PickAtlas software (Wake Forest University, Winston-Salem, NC, USA; Maldjian et al., 2003). Results of the ROI analyses are reported at a significance threshold of P < 0.05 (FWE-corrected).

Table 1.

Overview of conjoint activations for observation and imitation or execution of emotional facial expressions of previous studies

| Reference | Conditions | Analysis | Regions |
|---|---|---|---|
| Carr et al. (2003) | Observation, imitation; happy, sad, angry, surprise, disgust, afraid; whole face, only eyes, only mouth | Main effect for both conditions | IFG (L/R), IPL (L/R), ST (L), Insula (R), Amygdala (R) |
| Leslie et al. (2004) | Observation, imitation; smile, frown | Main effect for imitation as mask for the viewing condition | IFG (L), IPL (R), ST (R), SMA (L/R) |
| Hennenlotter et al. (2005) | Observation, execution; smile, neutral | Conjunction similar to inclusive masking (see also Wicker 2003; Keysers, 2004) | IFG (R), IPL (R), Insula (L) |
| van der Gaag et al. (2007) | Observation, delayed imitation; happy, fear, disgust, neutral (blow up cheeks) | Conjunction similar to inclusive masking (see also Wicker 2003; Keysers, 2004) | IFG (L/R), IPL (L/R), Insula (L/R), Amygdala (L/R), pre-SMA (L/R) |

IFG, inferior frontal gyrus; IPL, inferior parietal lobule; ST, superior temporal cortex; SMA, supplementary motor area; L, left; R, right.

RESULTS

Questionnaire data

To identify condition-driven differences regarding the reported subjective feeling of happiness, questionnaire data were entered as within-subjects factors in a repeated measures ANOVA with the factors ‘facial expression’ (happy, non-emotional or neutral) and ‘task’ (observation and execution). Mauchly's test indicated that the assumption of sphericity had not been violated [χ2(2) = 2.212, P = 0.331]. The results revealed a significant main effect for the factor facial expression (F(2, 50) = 52.358, P < 0.01, η2partial = 0.677). There was no interaction between facial expression and task.
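The reported effect size can be reproduced from the F statistic and its degrees of freedom using the standard relation η²partial = (F × df_effect) / (F × df_effect + df_error). The following sketch only re-derives the published value; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F test."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


eta = partial_eta_squared(52.358, 2, 50)
print(round(eta, 3))  # 0.677
```

For a one-way within-subject effect with three levels, the error degrees of freedom equal (n − 1) × 2, so df = 50 corresponds to n = 26 respondents, which matches the t(25) values of the post hoc tests.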

Post hoc t-tests indicated a higher subjective feeling of happiness during the observation of happy emotional expressions (M = 4.73, s.d. = 1.00) than during the observation of non-emotional facial expressions [M = 3.00, s.d. = 1.79; t(25) = 5.39, P < 0.0001] and during the observation of neutral facial expressions [M = 2.08, s.d. = 1.44; t(25) = 8.89, P < 0.0001]. Similarly, during the execution of facial expressions, the t-tests confirmed a greater feeling of happiness in the happy condition (M = 4.96, s.d. = 1.18) than in the non-emotional [M = 2.92, s.d. = 1.44; t(25) = 5.92, P < 0.0001] and the neutral condition [M = 2.00, s.d. = 1.17; t(25) = 9.83, P = 0.00025].

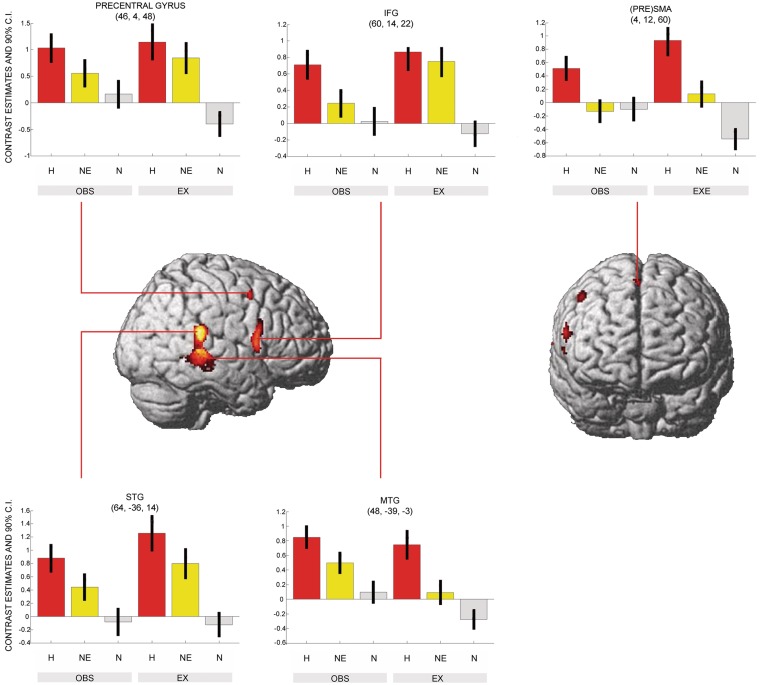

Whole-brain analysis

For happy facial expressions, a shared network for both observation and execution [(H_OBS > N_OBS) ∩ (H_EX > N_EX) masked inclusive with H_OBS and H_EX; T > 3.14] was revealed in the right superior temporal gyrus (STG) extending into the medial temporal gyrus (MTG), in the right pars opercularis (IFG, area 44), in the right precentral gyrus, in the right pre-supplementary motor area (pre-SMA), and in lobule VI of the left cerebellum (Figure 3 and Table 2).

Fig. 3.

Shared neural network underlying observation and execution of happy facial expressions. The conjunction analysis was FWE-corrected at a threshold of P < 0.05. Shared representations were found in the pars opercularis, the STG extending to the MTG, the precentral gyrus and the (PRE)SMA. This network was less activated in the non-emotional condition (see inserts). Particularly the (PRE)SMA was not activated in the non-emotional facial gesture (see direct comparison in Figure 4). H, happy; NE, non-emotional; N, neutral; OBS, observation; EX, execution.

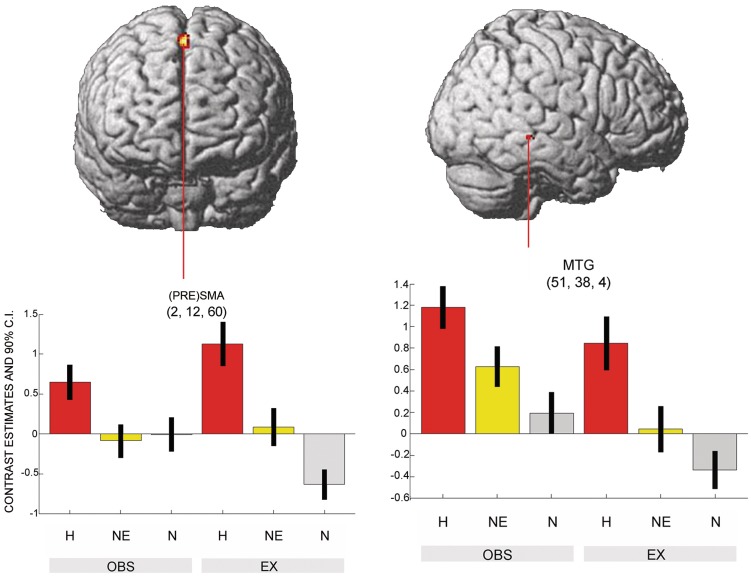

For the non-emotional facial expression, no conjoint activations of observation and execution were found [(NE_OBS > N_OBS) ∩ (NE_EX > N_EX) masked inclusive with NE_OBS and NE_EX; T > 3.14]. The comparison of shared representations of happy versus non-emotional facial expressions revealed higher neural activity in the pre-SMA and the MTG near the STS [(H_OBS > NE_OBS) AND (H_EX > NE_EX) masked inclusive with H_OBS and H_EX; T > 3.14] (Figure 4 and Table 2).

Fig. 4.

Social relevance reflected in the direct comparison of emotional- with non-emotional shared representations. The conjunction analysis was FWE-corrected at a threshold of P < 0.05. Significant differences were found in the (PRE)SMA and the MTG.

Table 2.

Activation clusters for shared neural networks (observation ∩ execution)

| Contrast | MNI X | MNI Y | MNI Z | k | T | P (FWE) | Hemisphere | Region |
|---|---|---|---|---|---|---|---|---|
| Happy > Neutral | 48 | −39 | −3 | 1445 | 7.65 | <0.001 | R | STG |
| | 60 | 14 | 22 | 302 | 6.59 | <0.001 | R | IFG (pars op.) |
| | 4 | 12 | 60 | 46 | 5.61 | 0.003 | R | Pre-SMA |
| | 46 | 4 | 48 | 49 | 5.40 | 0.008 | R | Precentral gyrus |
| | −22 | −66 | −27 | 3 | 4.87 | 0.029 | L | Cerebellum (Lobule VI) |
| Happy > Non-emotional | 2 | 12 | 60 | 108 | 5.78 | <0.001 | R | Pre-SMA |
| | 51 | −38 | −4 | 24 | 5.19 | 0.018 | R | MTG |

MNI, Montreal Neurological Institute; k, cluster size in voxels; P < 0.05 FWE corrected; STG, superior temporal gyrus; IFG, inferior frontal gyrus; pars op., pars opercularis; pre-SMA, pre-supplementary motor area; MTG, medial temporal gyrus

ROI analysis

ROI conjunction analyses for the happy facial expression were performed for the amygdala, the insula, the IPL, the left pars opercularis, and for the left STG. Of those, the amygdala revealed significant neural activity in the right (26, −3, −21; k = 33, T = 3.86, P < 0.004) and the left hemisphere (−26, 0, −21; k = 13, T = 3.40, P < 0.015). When ROI analyses were uncorrected for multiple comparisons a trend was observed in the left STG (T = 3.17, P = 0.001). The other ROIs were not activated significantly (all T < 0.84, uncorrected for multiple comparisons).

ROI analyses for the non-emotional facial expression were performed for the amygdala, the insula, the IPL, the pars opercularis, the left and right SMA and the STG. Suprathreshold activation was found in the right STG (66, −39, 14; k = 41, T = 4.61, P < 0.003). When ROI analyses were uncorrected for multiple comparisons, a trend was observed in the left amygdala (T = 2.15, P = 0.016) and in the right pars opercularis (T = 2.84, P = 0.003), whereas all other ROI analyses were not significant (all T < 0.84, uncorrected for multiple comparisons).

DISCUSSION

We examined neural correlates of shared representations for observation and execution of facial expressions as possible neural prerequisite for the understanding of these expressions. We analyzed the impact of the affect of facial expressions on the neural correlates of shared representations of observation and execution. Participants reported a significantly higher feeling of happiness in the affective conditions. Regions that were activated in response to observation and execution of the emotional facial expression yielded less activity in the non-emotional facial movement conditions.

Shared representations for happy facial expressions

Several regions were found to reflect shared representations for happy facial expressions. The activation of the pars opercularis is in line with the results of previous studies (Carr et al., 2003; Leslie et al., 2004; Hennenlotter et al., 2005; van der Gaag et al., 2007; Montgomery and Haxby, 2008). It has been suggested that the intention of the person displaying the facial expression is coded by these lateral frontal activations (Umilta et al., 2001; van der Gaag et al., 2007). This assumption was corroborated by a study of Fazio and colleagues (2009), in which lesions in the pars opercularis were accompanied by less accurate comprehension of human actions.

Activation of the superior temporal cortex as shared representation has previously been found in several studies (e.g. Hennenlotter et al., 2005; Montgomery and Haxby, 2008). It is well known that superior temporal cortices respond to observed (e.g. Allison et al., 2000) and even imagined biological motion (Grossman and Blake, 2001), thus supporting the present finding of STG/MTG activation during the observation of emotional facial expressions. While forwarding visual input to the mirror circuit (Iacoboni and Dapretto, 2006), STS activation during action execution might reflect feedback from human mirror areas (Iacoboni and Dapretto, 2006; Montgomery et al., 2007). This feedback might also provide the basis for visually guided movements.

Apart from lateral frontal and temporal activation, we found activation in the pre-SMA, a region shown to be involved in a shared representation network for observation and imitation of emotional facial expressions (van der Gaag et al., 2007).

In line with our hypothesis, we found significant activation of the amygdala. This extends the findings of previous studies reporting amygdala activation during observation and imitation of emotional facial expressions (Carr et al., 2003; van der Gaag et al., 2007) to this structure's relevance for the execution of emotional facial expressions. The amygdala might therefore be involved not only in the evaluation of observed emotional stimuli but also in their expression. We speculate that, in this study, amygdala activation during the execution of the emotional facial expression was related to an evaluation of the subjects' own emotion.

In contrast to two other studies (Carr et al., 2003; van der Gaag et al., 2007), the insula did not emerge as part of the shared representation network for emotional facial expressions. However, most studies had not included an execution condition. Thus, the insula might be more important for the imitation than for the execution of an action. A second possibility is that insula activity is influenced by the valence of the emotion, as most studies showed activation of the insula in response to disgusting or painful stimuli (for an overview, see the ‘Introduction’ section in Jabbi and Keysers, 2008). Nevertheless, Hennenlotter and colleagues (2005), who had included an execution task, reported insula activation as part of an observation–execution network for happy facial expressions. This discrepancy might be due to differences in the study protocol. The faster succession of the shorter video clips of Hennenlotter and colleagues (2005) might also have been related to an unspecific activation of the insula. Indeed, a recent magnetoencephalography (MEG) study found that early insula activity was valence-unspecific, whereas later insula activity was rather due to negative valence (Chen et al., 2009). It is therefore possible that the arousal-specific insula activity was better tapped by Hennenlotter and colleagues (2005) because of their shorter video clips.

Contrary to other studies (e.g. Hennenlotter et al., 2005), we did not find parietal areas to be part of a shared representation network, either. Results of studies exploring shared representations of emotional facial expressions were heterogeneous (Carr et al., 2003; Leslie et al., 2004) and due to this, the exact role of parietal areas in the context of shared representations and MNs remains unclear. Our data support the assumption that parietal activations are rather associated with the perception and execution of hand movements or gestures than with facial expressions. This hypothesis is supported by a study of Wheaton and colleagues (2004), who found activations in right anterior inferior parietal cortex during observation of hand movements, but not during observation of facial expressions. Furthermore, parietal activations might be more important during imitation of emotional facial expressions (Carr et al., 2003; van der Gaag et al., 2007) than during their execution.

Shared representations for non-emotional facial expressions

The ROI analyses revealed that shared representations for non-emotional facial expressions emerged in the right STG, which did not confirm previous findings of frontal premotor area activation during observation of intransitive mouth actions (chewing) (Buccino et al., 2001). As it has previously been shown that premotor mirror areas are activated during observation of non-emotional facial expressions, it is not certain that it was the affect that modulated mirror area activity in the current experiment. The engagement of premotor regions might also reflect the everyday relevance of the observed chewing movement. In contrast, a nonsense movement (lip protrusion) might neither convey the same amount of communicative information nor transport social intentions in the way that smiling does.

Direct comparison of emotional and non-emotional shared representations

The direct comparison of the emotional with the non-emotional facial expression resulted in activation in the right MTG near the STS and the right pre-SMA. Sensitivity of the STS to socially relevant stimuli has been shown previously. For instance, the right STS was more involved in emotional face processing contrasted with neutral face processing (Engell and Haxby, 2007).

The supplementary motor cortex, encompassing pre-SMA, SMA proper and the supplementary eye field, has been suggested to play a central role in a variety of tasks concerning motor preparation and movement execution (for a review, see Nachev et al., 2008). The pre-SMA seems to be more involved in motor preparation, while SMA proper was shown to contribute more to the actual movement execution (Lee et al., 1999; Nachev et al., 2008). Indeed, connectivity studies in monkeys showed that the monkey homologue to SMA proper is directly connected to the motor cortex, whereas the homologue to pre-SMA is connected to the dorsolateral prefrontal cortex (Nachev et al., 2008). It is known that the pre-SMA is interconnected with the prefrontal cortex (Nachev et al., 2008) and the striatum (Lehericy et al., 2004) and has a direct connection with the subthalamic nucleus (Nambu et al., 1996). Therefore, it could be argued that the connection of the pre-SMA with the subthalamic nucleus might interrupt ongoing activity in cortico-basal ganglia routes and inhibit a contagious response during observation. Activation of the pre-SMA might also represent an incipient contagious response, which is stopped by other control mechanisms. The activation of the pre-SMA in the comparison of happy with non-emotional facial expressions could be interpreted in relation to the higher contagiousness of the happy facial expressions in comparison with the non-emotional one and a resulting difference in the effort to inhibit imitation (Dimberg et al., 2002).

Activation of the supplementary motor cortex during affective imitation tasks was documented by a number of studies (e.g. Leslie et al., 2004; van der Gaag et al., 2007). Involvement of this structure has also been shown for spontaneous facial execution of happy affect (pre-SMA; Iwase et al., 2002) and for executing happy affect by smiling and laughing (SMA proper). The latter was also correlated with the magnitude of facial muscle reaction (Iwase et al., 2002). This confirms the results of two single-case studies investigating single cell responses of the SMA by intracranial recordings in patients suffering from drug-refractory seizures (Fried et al., 1998; Krolak-Salmon et al., 2006). Here, electrical stimulation of the anterior part of the left (pre-) SMA caused smiling, laughter and the feeling of merriment. Most interestingly, in one study, emotion-specific responses of the pre-SMA were recorded after very short latencies during the observation of emotional facial expressions. These results suggest the involvement of pre-SMA in the perception of facial expressions (Krolak-Salmon et al., 2006) in addition to movement preparation and execution (Fried et al., 1998).

Concluding remarks and limitations

We found significant differences in the shared representations for emotional compared with non-emotional facial expressions. The facial expressions were matched with respect to facial movement, but the non-emotional facial expression may be less common and provide less communicative information than the emotional facial expression, which may allow for alternative explanatory models. Notwithstanding this ambiguity, emotional facial expressions modulated activity of a shared representation circuit. The amygdala was involved during the expression of emotions and may support the evaluation of one's own emotions. In contrast, pre-SMA activity may be related to the contagiousness of the emotional facial expressions. In a similar way, positive feelings might be triggered by motor programs. In the future, studies using transcranial magnetic stimulation during observation of emotional facial expressions could examine self-reports of affect when pre-SMA activation is inhibited and shed light on this open question. During complex social interactions, the MN system is extended by affective and additional premotor areas.

Conflict of Interest

None declared.

Acknowledgments

We thank Simon Eickhoff and Thilo Kellermann for their kind and knowledgeable assistance in methodologies and statistics. Many thanks to our colleagues Martin Lemoz and Uli Heuter for lending us the camera and other technical materials and also to Sebastian Kath for helping to prepare the stimulus material. This work was funded by the Federal Ministry of Education and Research [BMBF, FKZ 01GW0751]. Funding was also provided by the Interdisciplinary Centre for Clinical Research of the Medical Faculty of the RWTH Aachen University [IZKF, N2 and N4-2].

REFERENCES

- Adolphs R. Cognitive neuroscience of human social behaviour. Nature Reviews Neuroscience. 2003;4:165–78.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences. 2000;4:267–78. doi: 10.1016/s1364-6613(00)01501-1.

- Anders S, Heinzle J, Weiskopf N, Ethofer T, Haynes JD. Flow of affective information between communicating brains. Neuroimage. 2011;54:439–46. doi: 10.1016/j.neuroimage.2010.07.004.

- Ashburner J, Friston KJ. Rigid body registration. In: Frackowiak RS, editor. Human Brain Function. 2nd edn. San Diego: Academic Press; 2003. pp. 2–18.

- Ashburner J, Friston KJ. Unified segmentation. Neuroimage. 2005;26:839–51. doi: 10.1016/j.neuroimage.2005.02.018.

- Buccino G, Binkofski F, Fink GR, et al. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. European Journal of Neuroscience. 2001;13:400–4.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences of the United States of America. 2003;100:5497–502. doi: 10.1073/pnas.0935845100.

- Chen YH, Dammers J, Boers F, et al. The temporal dynamics of insula activity to disgust and happy facial expressions: a magnetoencephalography study. Neuroimage. 2009;47:1921–8. doi: 10.1016/j.neuroimage.2009.04.093.

- Collins DL, Neelin P, Peters TM, Evans AC. Automatic 3D intersubject registration of MR volumetric data in standardized Talairach space. Journal of Computer Assisted Tomography. 1994;18:192–205.

- Craig AD. How do you feel? Interoception: the sense of the physiological condition of the body. Nature Reviews Neuroscience. 2002;3:655–76. doi: 10.1038/nrn894.

- di Pellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G. Understanding motor events: a neurophysiological study. Experimental Brain Research. 1992;91:176–80. doi: 10.1007/BF00230027.

- Dimberg U, Petterson M. Facial reactions to happy and angry facial expressions: evidence for right hemisphere dominance. Psychophysiology. 2000;37:693–6.

- Dimberg U, Thunberg M, Elmehed K. Unconscious facial reactions to emotional facial expressions. Psychological Science. 2000;11:86–9. doi: 10.1111/1467-9280.00221.

- Dimberg U, Thunberg M, Grunedal S. Facial reactions to emotional stimuli: automatically controlled emotional responses. Cognition & Emotion. 2002;16:449–71.

- Eickhoff SB, Paus T, Caspers S, et al. Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage. 2007;36:511–21. doi: 10.1016/j.neuroimage.2007.03.060.

- Eickhoff SB, Stephan KE, Mohlberg H, et al. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–35. doi: 10.1016/j.neuroimage.2004.12.034.

- Ekman P, Friesen WV. Facial action coding system. Palo Alto, CA: Consulting Psychologists Press; 1978.

- Engell AD, Haxby JV. Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia. 2007;45:3234–41. doi: 10.1016/j.neuropsychologia.2007.06.022.

- Evans AC, Marrett S, Neelin P, et al. Anatomical mapping of functional activation in stereotactic coordinate space. Neuroimage. 1992;1:43–53. doi: 10.1016/1053-8119(92)90006-9.

- Fazio P, Cantagallo A, Craighero L, et al. Encoding of human action in Broca's area. Brain. 2009;132:1980–8. doi: 10.1093/brain/awp118.

- Ferrari PF, Gallese V, Rizzolatti G, Fogassi L. Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. European Journal of Neuroscience. 2003;17:1703–14. doi: 10.1046/j.1460-9568.2003.02601.x.

- Fogassi L, Luppino G. Motor functions of the parietal lobe. Current Opinion in Neurobiology. 2005;15:626–31. doi: 10.1016/j.conb.2005.10.015.

- Fried I, Wilson CL, MacDonald KA, Behnke EJ. Electric current stimulates laughter. Nature. 1998;391:650. doi: 10.1038/35536.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119(Pt 2):593–609. doi: 10.1093/brain/119.2.593.

- Gazzola V, Rizzolatti G, Wicker B, Keysers C. The anthropomorphic brain: the mirror neuron system responds to human and robotic actions. Neuroimage. 2007;35:1674–84. doi: 10.1016/j.neuroimage.2007.02.003.

- Grossman ED, Blake R. Brain activity evoked by inverted and imagined biological motion. Vision Research. 2001;41:1475–82. doi: 10.1016/s0042-6989(00)00317-5.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. Journal of Neuroscience. 1994;14:6336–53. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- Hennenlotter A, Schroeder U, Erhard P, et al. A common neural basis for receptive and expressive communication of pleasant facial affect. Neuroimage. 2005;26:581–91. doi: 10.1016/j.neuroimage.2005.01.057.

- Iacoboni M, Dapretto M. The mirror neuron system and the consequences of its dysfunction. Nature Reviews Neuroscience. 2006;7:942–51. doi: 10.1038/nrn2024.

- Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–8. doi: 10.1126/science.286.5449.2526.

- Iwase M, Ouchi Y, Okada H, et al. Neural substrates of human facial expression of pleasant emotion induced by comic films: a PET Study. Neuroimage. 2002;17:758–68.

- Jabbi M, Keysers C. Inferior frontal gyrus activity triggers anterior insula response to emotional facial expressions. Emotion. 2008;8:775–80. doi: 10.1037/a0014194.

- Jabbi M, Swart M, Keysers C. Empathy for positive and negative emotions in the gustatory cortex. Neuroimage. 2007;34:1744–53. doi: 10.1016/j.neuroimage.2006.10.032.

- Keysers C, Kaas JH, Gazzola V. Somatosensation in social perception. Nature Reviews Neuroscience. 2010;11:417–29. doi: 10.1038/nrn2833.

- Kilner JM, Marchant JL, Frith CD. Modulation of the mirror system by social relevance. Social Cognitive and Affective Neuroscience. 2006;1:143–8. doi: 10.1093/scan/nsl017.

- Kohler E, Keysers C, Umilta MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297:846–8. doi: 10.1126/science.1070311.

- Koski L, Wohlschlager A, Bekkering H, et al. Modulation of motor and premotor activity during imitation of target-directed actions. Cerebral Cortex. 2002;12:847–55. doi: 10.1093/cercor/12.8.847.

- Krolak-Salmon P, Henaff MA, Vighetto A, et al. Experiencing and detecting happiness in humans: the role of the supplementary motor area. Annals of Neurology. 2006;59:196–9. doi: 10.1002/ana.20706.

- Lee KM, Chang KH, Roh JK. Subregions within the supplementary motor area activated at different stages of movement preparation and execution. Neuroimage. 1999;9:117–23. doi: 10.1006/nimg.1998.0393.

- Lehericy S, Ducros M, Krainik A, et al. 3-D diffusion tensor axonal tracking shows distinct SMA and pre-SMA projections to the human striatum. Cerebral Cortex. 2004;14:1302–9. doi: 10.1093/cercor/bhh091.

- Leslie KR, Johnson-Frey SH, Grafton ST. Functional imaging of face and hand imitation: towards a motor theory of empathy. Neuroimage. 2004;21:601–7. doi: 10.1016/j.neuroimage.2003.09.038.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19:1233–9. doi: 10.1016/s1053-8119(03)00169-1.

- Montgomery KJ, Haxby JV. Mirror neuron system differentially activated by facial expressions and social hand gestures: a functional magnetic resonance imaging study. Journal of Cognitive Neuroscience. 2008;20:1866–77. doi: 10.1162/jocn.2008.20127.

- Montgomery KJ, Isenberg N, Haxby JV. Communicative hand gestures and object-directed hand movements activated the mirror neuron system. Social Cognitive and Affective Neuroscience. 2007;2:114–22. doi: 10.1093/scan/nsm004.

- Mukamel R, Ekstrom AD, Kaplan J, Iacoboni M, Fried I. Single-neuron responses in humans during execution and observation of actions. Current Biology. 2010;20:1–7.

- Nachev P, Kennard C, Husain M. Functional role of the supplementary and pre-supplementary motor areas. Nature Reviews Neuroscience. 2008;9:856–69. doi: 10.1038/nrn2478.

- Nambu A, Takada M, Inase M, Tokuno H. Dual somatotopical representations in the primate subthalamic nucleus: evidence for ordered but reversed body-map transformations from the primary motor cortex and the supplementary motor area. Journal of Neuroscience. 1996;16:2671–83. doi: 10.1523/JNEUROSCI.16-08-02671.1996.

- Nelissen K, Luppino G, Vanduffel W, Rizzolatti G, Orban GA. Observing others: multiple action representation in the frontal lobe. Science. 2005;310:332–6. doi: 10.1126/science.1115593.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nature Reviews Neuroscience. 2001;2:661–70. doi: 10.1038/35090060.

- Rozzi S, Ferrari PF, Bonini L, Rizzolatti G, Fogassi L. Functional organization of inferior parietal lobule convexity in the macaque monkey: electrophysiological characterization of motor, sensory and mirror responses and their correlation with cytoarchitectonic areas. European Journal of Neuroscience. 2008;28:1569–88. doi: 10.1111/j.1460-9568.2008.06395.x.

- Umilta MA, Kohler E, Gallese V, et al. I know what you are doing. A neurophysiological study. Neuron. 2001;31:155–65. doi: 10.1016/s0896-6273(01)00337-3.

- van der Gaag C, Minderaa RB, Keysers C. Facial expressions: what the mirror neuron system can and cannot tell us. Social Neuroscience. 2007;2:179–222. doi: 10.1080/17470910701376878.

- Wheaton KJ, Thompson JC, Syngeniotis A, Abbott DF, Puce A. Viewing the motion of human body parts activates different regions of premotor, temporal, and parietal cortex. Neuroimage. 2004;22:277–88. doi: 10.1016/j.neuroimage.2003.12.043.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G. Both of us disgusted in my insula: the common neural basis of seeing and feeling disgust. Neuron. 2003;40:655–64. doi: 10.1016/s0896-6273(03)00679-2.