Abstract

Visual perception of absolute distance (between an observer and an object) is based upon multiple sources of information that must be extracted during scene viewing. The viewing duration needed to fully extract distance information, particularly in navigable real-world environments, is unknown. In a visually-directed walking task, a sensitive response to distance was observed with 9-ms glimpses when floor- and eye-level targets were employed. However, response compression occurred with eye-level targets when angular size was rendered uninformative. Performance at brief durations was characterized by underestimation, unless preceded by a block of extended-viewing trials. The results indicate a role for experience in the extraction of information during brief glimpses. Even without prior experience, the extraction of useful information is virtually immediate when the cues of angular size or angular declination are informative.

Human vision is dynamic: The eyes are directed from one location to another at a rate of around three times per second to extract visual information from the environment. As a result, there has been much interest in the time course for the availability of various information sources during the perception of scenes. For example, while acquiring visual details about multiple objects typically requires a number of gaze shifts (Nelson & Loftus, 1980; Hollingworth & Henderson, 2002), one can determine the meaning of a scene, its gist, on the basis of information extracted within the time frame of a single fixation (e.g., Potter, 1975, 1976; Potter & Levy, 1969). Recent work also suggests that we can classify specific scene properties (e.g., navigability and mean depth) based on information extracted even more quickly than gist (Greene & Oliva, 2009a). At the fastest end of the spectrum, a scene can be judged as natural (vs. man-made) after a single 19-ms glimpse. These outcomes sharpen our understanding of how visual scenes are comprehended and how we represent our immediate visual environments, particularly given the sequential sampling process evident in eye movement behavior.

One element of a scene for which the speed of extraction has not yet been determined is the absolute distance between an observer and an object. Although a large body of research has identified multiple cues that support the perception of space in humans (Cutting & Vishton, 1995; Sedgwick, 1986), surprisingly little is known about the time needed to extract distance information from real-world environments. This knowledge is crucial not only for understanding how a scene representation is built up over time, but also for establishing baselines for clinical and applied settings. Age-related performance deficits have been observed in limited-viewing-time recognition tasks (e.g., Di Lollo et al., 1982), and reductions in information processing speed are thought to result from traumatic brain injury (e.g., Ponsford & Kinsella, 1992). Deficits such as these may in turn have negative impacts on visual space perception and the control of action. Even in neurotypical individuals, high workload or highly dynamic tasks (e.g., aircraft flight control, automobile driving) could create conditions that limit the ability to fully extract the available distance information. Thus, assessing the temporal dynamics of absolute distance perception is crucial for understanding visual space perception and action in a broad range of contexts. Given the limitations imposed by perceptual processing speed in healthy young adults, how quickly can information about the absolute distance of objects be extracted from an environment? Does extraction proceed at the same rate for all absolute distance cues, or are some cue configurations processed more quickly than others?

Here, we addressed these issues by controlling the duration of viewing in a visually-directed walking task (see Loomis & Philbeck, 2008 for a review). In this method, participants view a target object and then indicate its location by attempting to walk to it without vision. While there is a memory component to the task, performance is generally thought to reflect the quality of the initial visual input. In principle, verbal reports or other behaviors could be used to indicate target distance. However, because a primary function of vision is to guide navigation through one’s immediate environment, we felt blind walking would provide a particularly compelling demonstration of the visual system’s ability to extract spatial information. Viewing duration was controlled by a liquid crystal shutter window, which could provide brief glimpses of the target object and its setting. This presentation was followed by a masking stimulus, which is customary in limited-viewing-time tasks.

EXPERIMENT 1

We first compared performance with a viewing duration too brief to allow for a saccadic eye movement (113 ms) to that with extended (5 sec) viewing. Because the perception of a scene is derived from a series of relatively discrete glimpses of the visual environment, it is of interest to know the utility of a single glimpse. In natural situations, isolated brief glimpses of an environment are rare; glimpses more typically occur in the context of information obtained from previous glimpses. Stored information from past glimpses may provide an important spatial context that could facilitate processing of information from new glimpses. To test for effects of this kind, viewing durations were administered in blocks with block order manipulated between groups.

Method

Participants

Two groups of 14 naïve observers participated in Experiment 1. All participants were 18 to 35 years of age, reported normal or corrected-to-normal vision, and were paid $10/h or participated in exchange for course credit.

Stimuli and Apparatus

Participants viewed target objects through a liquid crystal shutter window, which can switch from a translucent (completely light-scattering) state to a transparent state and back again quickly and reliably (within 1–2 ms of the intended duration). Timing accuracy was verified on each trial by monitoring the output of a phototransistor, which was exposed to an infrared laser through the shutter window only when the window became transparent (Pothier et al., 2009). The window provided a field of view of approximately 80° × 60° (horizontal × vertical). A masking stimulus was projected by an LCD projector onto a screen positioned to the observer’s left, and this image was reflected by a beamsplitter positioned in front of the shutter window. The mask was a scrambled image of segments of the room, and its presentation was controlled with a mechanical shutter (1-sec duration, ISI = 0). The shutter window and beamsplitter were mounted on a sliding stage equipped with a chinrest. The testing environment was a laboratory space that extended 7.4 m in depth from the participant’s viewing position. The visible space was mostly empty, with bookshelves located in the far periphery of the visual field. The target object was an orange cone (13.0 cm × 23.5 cm) presented at 11 distances from 3 to 5 m. The room and the targets were indirectly lit with two photo-studio flood lamps. The lighting was bright yet diffuse, and it was directional enough to produce multiple cast shadows.
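
The per-trial timing check can be illustrated with a minimal Python sketch. It assumes, hypothetically, that the phototransistor output is digitized at a fixed sampling rate and that the window counts as transparent whenever the signal exceeds a threshold. The sampling rate, threshold, voltage levels, and function names are illustrative assumptions rather than details of the actual apparatus (described in Pothier et al., 2009); only the 2-ms tolerance comes from the text above.

```python
import numpy as np

def open_duration_ms(signal, sample_rate_hz, threshold):
    """Estimate how long the shutter window was transparent, in milliseconds.

    Assumes the phototransistor output exceeds `threshold` only while the
    laser is visible through the open window, and that the trace contains a
    single opening.
    """
    is_open = np.asarray(signal) > threshold
    return 1000.0 * np.count_nonzero(is_open) / sample_rate_hz

def within_tolerance(measured_ms, intended_ms, tolerance_ms=2.0):
    """True if the measured exposure lies within +/- tolerance_ms of the intended one."""
    return abs(measured_ms - intended_ms) <= tolerance_ms

# Synthetic trace (hypothetical values): 10 kHz sampling, 500 ms recorded,
# window open for 113 ms starting 50 ms into the recording.
fs = 10_000
trace = np.zeros(fs // 2)
start, n_open = round(0.050 * fs), round(0.113 * fs)
trace[start:start + n_open] = 5.0  # arbitrary "light on" level in volts
measured = open_duration_ms(trace, fs, threshold=2.5)
print(f"measured {measured:.1f} ms, within tolerance: {within_tolerance(measured, 113.0)}")
```

Counting above-threshold samples assumes one opening per trace; a recording containing several openings would need the individual runs separated before their durations are compared with the intended exposure.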

Procedure

Great care was taken to ensure that participants saw the testing environment only through the shutter window and only during experimental trials. Before testing, the experimenter provided instructions outside the laboratory and then ushered participants into the room with their eyes closed. Participants wore earplugs and hearing protectors to minimize spatial sound cues. The experimenter then used a flashlight to guide participants into the chinrest and to show them the general region in the window where they could expect to see the target. The lighting was turned on manually just prior to the beginning of each trial. The experimenter then instructed the participant to open their eyes and announced the impending opening of the shutter window by saying “ready, here we go.” Immediately after the shutter window closed, the mask was presented via the beamsplitter directly in front of the shutter. After the mask, participants closed their eyes while the experimenter pushed the apparatus to the side, and then walked, with eyes closed, through the darkened room to the perceived location of the target.

Data Analysis

Accuracy was examined in terms of bias, the overall tendency toward under- or overestimation, and response-sensitivity, the degree to which walked distance changed systematically with changes in target distance. For each measure we applied a regression approach to the repeated-measures design. Bias was evidenced by a significant contribution of viewing duration as a predictor in the regression model, and response-sensitivity was evidenced by a significant contribution of the distance × viewing duration interaction term. The F and p values reported in the text correspond to the test for a change in R² (ΔR²) at each of these steps in the analysis.
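
The nested-model logic can be sketched in Python with NumPy. This is a minimal illustration on synthetic data, not the authors' analysis code: it assumes that the repeated-measures structure is handled by entering each participant as a dummy-coded predictor and that viewing duration is coded 0/1, and the helper names (`r_squared`, `r2_change_test`, `design`) are hypothetical. With 14 participants × 11 distances × 2 durations and one trial per cell (also an assumption), this coding gives residual degrees of freedom of 292 and 291 for the two steps, matching those reported for Experiment 1, although the text does not state how participants were modeled. The statistic is the standard test for a change in R², F = (ΔR²/Δk) / ((1 − R²_full)/(n − p_full)), where p_full counts every column of the full model, including the intercept.

```python
import numpy as np

def r_squared(X, y):
    """R^2 of an ordinary least-squares fit of y on X (X includes an intercept)."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return 1.0 - (resid @ resid) / np.sum((y - y.mean()) ** 2)

def r2_change_test(X_reduced, X_full, y):
    """Delta-R^2 and F statistic for adding columns to a nested OLS model."""
    n, p_full = X_full.shape              # p_full counts the intercept column
    df_num = p_full - X_reduced.shape[1]
    df_den = n - p_full
    r2_red, r2_full = r_squared(X_reduced, y), r_squared(X_full, y)
    delta = r2_full - r2_red
    F = (delta / df_num) / ((1.0 - r2_full) / df_den)
    return delta, F, (df_num, df_den)

def design(subject, n_subjects, *predictors):
    """Intercept, one dummy per participant (first is reference), then predictors."""
    cols = [np.ones(len(subject))]
    cols += [(subject == s).astype(float) for s in range(1, n_subjects)]
    cols += list(predictors)
    return np.column_stack(cols)

# Synthetic data: 14 participants x 11 distances x 2 durations, one trial each.
rng = np.random.default_rng(0)
n_subj = 14
subj, dist, dur = (a.ravel() for a in np.meshgrid(
    np.arange(n_subj), np.linspace(3.0, 5.0, 11), [0.0, 1.0], indexing="ij"))
slope = np.where(dur == 1.0, 1.2, 0.9)          # dur = 1 codes extended viewing
walked = (slope * dist - 0.5 * (1.0 - dur)
          + rng.normal(0.0, 0.2, n_subj)[subj]  # participant offsets
          + rng.normal(0.0, 0.3, dist.size))    # trial-level noise

X_dist = design(subj, n_subj, dist)
X_main = design(subj, n_subj, dist, dur)
X_int = design(subj, n_subj, dist, dur, dist * dur)
bias_step = r2_change_test(X_dist, X_main, walked)        # duration main effect
sensitivity_step = r2_change_test(X_main, X_int, walked)  # distance x duration
print(bias_step, sensitivity_step)
```

Entering the terms in this order means the interaction step is tested only after the main effects, so a significant ΔR² at that step reflects a change in slope rather than an overall shift in walked distance.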

Results

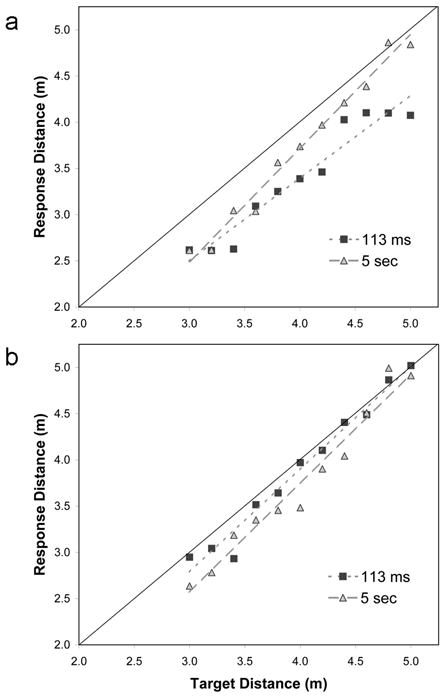

Performance was generally good, but differences between viewing durations depended on block order (Fig. 1). When the 113-ms block was first, there was a greater bias toward underestimation with brief viewing (−15%) than with extended viewing (−7%), ΔR² = .02, F(1,292) = 16.57, p < .01. Some bias towards underestimation might be expected given that the shutter apparatus occluded approximately 1 m of the immediate ground plane (He et al., 2004; Wu et al., 2004). However, the bias was roughly halved with extended viewing, despite an identical amount of ground plane occlusion. The difference in bias thus reflects differences in what can be extracted during the respective viewing times. This increase in bias was accompanied by a reduction in response-sensitivity, ΔR² = .008, F(1,291) = 6.93, p < .01: The slope of the regression line was 0.89 with brief viewing compared to 1.22 with extended viewing.
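
The two summary measures can be computed as follows, assuming (our reading of the Data Analysis section, not a documented formula) that bias is the mean signed error expressed as a percentage of target distance and that response-sensitivity is the slope of walked distance regressed on target distance. The function names and the example data are hypothetical.

```python
import numpy as np

def bias_percent(target_m, walked_m):
    """Mean signed error as a percentage of target distance (negative = underestimation)."""
    target = np.asarray(target_m, dtype=float)
    walked = np.asarray(walked_m, dtype=float)
    return 100.0 * np.mean((walked - target) / target)

def response_slope(target_m, walked_m):
    """Slope of the least-squares line relating walked distance to target distance."""
    slope, _intercept = np.polyfit(target_m, walked_m, 1)
    return slope

# Hypothetical condition: responses expand with distance (slope > 1) but still
# fall short of every target, so the bias is negative.
targets = np.linspace(3.0, 5.0, 11)
walked = 1.2 * targets - 1.2
print(f"bias = {bias_percent(targets, walked):.1f}%, "
      f"slope = {response_slope(targets, walked):.2f}")
```

The example shows why the two measures are reported separately: Within a limited range of target distances, a slope above 1 can coexist with overall underestimation, as in the extended-viewing condition above.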

Figure 1.

Mean walked distance to floor-level targets as a function of target distance and viewing duration a) when the block of 113-ms trials was first; and b) when the block of 5-sec trials was first.

In contrast, performance in the two viewing conditions was statistically indistinguishable when the 5-sec block was first: There were no differences in either bias (−4%) or sensitivity (mean slope = 1.15), all ps > .13. The results suggest that the information needed to support performance can be extracted in a single brief glimpse when longer-duration glimpses are provided beforehand. To determine whether the benefit observed when the 113-ms block was second could be attributed to practice or task familiarity, we conducted a control experiment in which participants performed two consecutive blocks using exactly the same viewing duration (100 ms). Performance in the second block could not be differentiated from that of the first on any measure. This provides additional support for the idea that the facilitation observed during limited-viewing trials is a product of information specifically acquired during extended-viewing trials. Extended viewing clearly affords the creation of a representation that is more elaborated in depth; the availability of this representation either provides a structure for integrating incoming information during brief glimpses, or guides attention more effectively toward informative parts of the scene when viewing time is limited.

EXPERIMENT 2

Our next aim was to determine the shortest viewing duration that would support a response sensitive to the distribution of target distances employed. While first-block performance was better with extended viewing in Experiment 1, response-sensitivity was high even when the brief viewing condition came first (the mean slope did not differ from 1, t(13) = −.86, p = .41). Subsequent pilot testing with 25- and 50-ms viewing durations showed little change in performance from the level observed with 100 ms of viewing. Given that a scene can be categorized after a 40-ms masked glimpse (Greene & Oliva, 2009a), presumably on the basis of global image properties (Greene & Oliva, 2009b) that can be extracted with little demand on attention (Alvarez & Oliva, 2009), and given that a liberal estimate for the speed of covert attentional deployment is around 33 ms per shift (Egeth & Yantis, 1997; Saarinen & Julesz, 1991), blind walking performance in the 25–50-ms range would have to be based on information that can be extracted in parallel across the visual field or with at most a single shift of covert attention. Can useful information about distance (i.e., information sufficient to produce systematic variation in responses) be extracted in an even shorter time frame?

Experiment 2 examined performance at an ultra-brief (9-ms) viewing duration, a duration that approaches the boundary between seeing and not seeing the target. Our aim was to test the shortest viewing duration that would support reliable detection of the target across all participants. Based on our pilot data, we chose to contrast performance at this duration with that at a moderately brief (69-ms) viewing duration.

Method

Two groups of 12 naïve observers participated in Experiment 2. One group performed the 9-ms block of trials first; a second group performed the 69-ms block first. The method and the design were the same as in Experiment 1, except that a Styrofoam ball (17.8 cm diameter) was employed as the target, presented at 9 distances in the 3–5 m range. To ensure that observers’ responses were indeed based on visual information gained from seeing the target, we also included 5 trials without a target and asked participants to report the presence or absence of a target object before initiating the walking response. It is crucial to verify that participants see the target, because performance differences across viewing durations might otherwise be attributed to non-spatial factors that govern target detectability (Watson, 1986).

Results

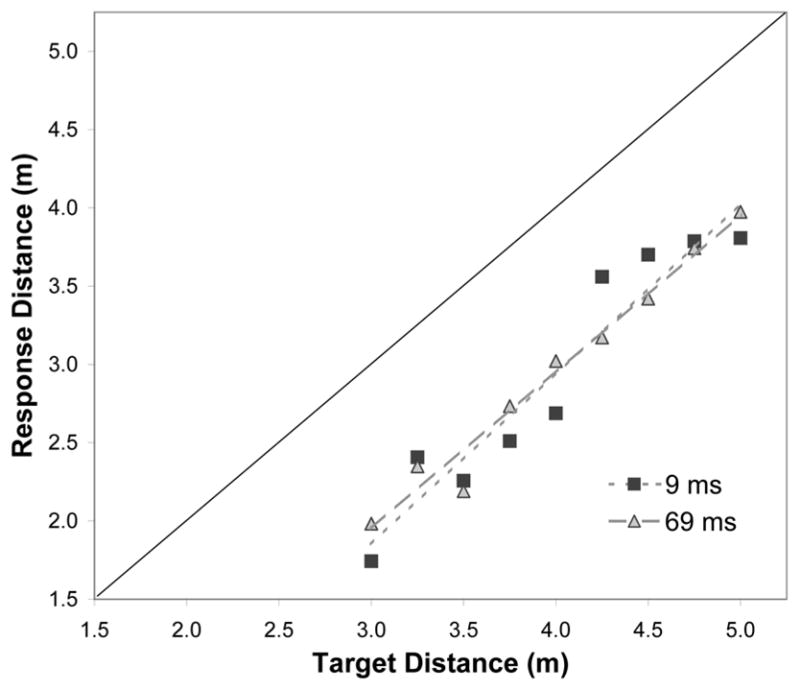

While there was a strong bias towards underestimation (−25%), performance exhibited near-perfect response-sensitivity (the mean slope was 1.05; Fig. 2). Importantly, these outcomes did not depend on viewing duration or block order (Fs < 1). Performance was thus very good even with the most fleeting of glimpses. Moreover, participants rarely missed targets that were present and rarely false-alarmed on no-target trials, confirming that target detection in the 9-ms viewing condition was highly reliable (across all participants and trials there was 1 false alarm and 2 misses).

EXPERIMENT 3

Experiments 1 and 2 suggest that all the distance information needed to support accurate walking performance can be extracted with a single brief glimpse of around 100 ms if that glimpse is preceded by longer-duration glimpses of the environment. However, even without prior exposure to the target and its context, the extraction of useful distance information appears to be virtually immediate. Does this high speed of extraction depend on the kind of distance information available in the viewing situation, or are all distance cues extracted equally quickly? To address this question, we began removing sources of distance information from the viewing situation. If distance information is generally extracted quickly, a sensitive response would be expected at the fastest viewing duration regardless of the particular cues that are available. We first removed angular declination because it has previously been shown to be a particularly potent cue within the present distance range (Ooi et al., 2001; Philbeck & Loomis, 1997). Given the range of physical cue values and the known perceptual “gain” of this cue in the tested distance range, it is reasonable to suppose that angular declination information could drive performance at ultra-brief viewing durations. Angular declination was rendered non-informative by suspending ball targets at eye level.
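
The geometry behind the angular declination cue can be made concrete with a short Python sketch. For a point on a flat floor at horizontal distance d, seen from eye height h, the declination below eye level is α = arctan(h/d), so d = h / tan α. The eye height used here is a hypothetical value (the text does not report one), the ball's own radius is ignored, and the function names are illustrative.

```python
import math

def declination_deg(distance_m, eye_height_m):
    """Angular declination below eye level of a point on the floor."""
    return math.degrees(math.atan2(eye_height_m, distance_m))

def distance_from_declination(decl_deg, eye_height_m):
    """Invert the relation: distance = eye height / tan(declination)."""
    return eye_height_m / math.tan(math.radians(decl_deg))

EYE_HEIGHT_M = 1.5  # hypothetical eye height in metres; not reported in the text
for d in (3.0, 4.0, 5.0):
    print(f"{d:.1f} m -> {declination_deg(d, EYE_HEIGHT_M):.2f} deg below eye level")

# An eye-level target has no vertical offset from the eye, so its declination
# is 0 deg at every distance and the cue carries no distance information.
print(declination_deg(4.0, 0.0))
```

With this hypothetical eye height, declination falls from about 27° at 3 m to about 17° at 5 m, a sizable range of cue values across the tested distances; suspending the target at eye level removes that range entirely.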

Method

One group of 12 naïve observers participated in Experiment 3. The method was the same as in Experiment 2, except that the target was presented at eye level and viewing durations of 9, 65, and 187 ms were administered randomly in a single block of trials. Because targets were reliably detected in Experiment 2, we opted not to include no-target trials, though participants were instructed to report if they did not see the target. Since eye-level targets always appeared at the same location within the window, we assumed that detection would be at least as reliable here as in Experiment 2.

Results

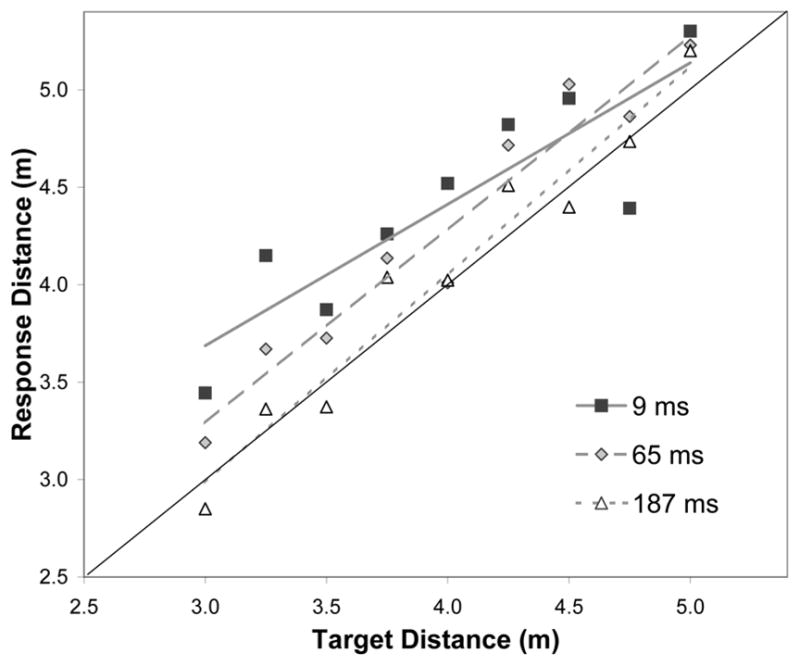

Accuracy was generally quite high, but performance did improve across the range of viewing durations (Fig. 3). In contrast to the previous experiments, here there was a bias towards overestimation that decreased with increasing viewing duration, ΔR² = .017, F(1,311) = 7.719, p < .01. Response-sensitivity also increased with increasing viewing duration, ΔR² = .005, F(1,309) = 4.053, p < .05, though the effect was driven by a modest flattening of the slope in the 9-ms condition (which did not differ from 1, t(11) = −1.67, p = .12). The slopes of the regression lines were 0.73, 1.00, and 1.07 for the 9-, 65-, and 187-ms viewing durations, respectively. Critically, despite the modest drop in sensitivity and the bias towards overestimation at the shortest viewing duration, useful information about distance could clearly be extracted within 9 ms even in this reduced-cue setting.

Figure 3.

Mean walked distance to eye-level targets as a function of target distance and viewing duration.

EXPERIMENT 4

The performance observed with eye-level targets suggests that angular declination is not the only source of distance information that can be extracted quickly. In fact, because relative angular size was informative in Experiments 1 and 2, performance with floor-level targets could be driven by the size cue as opposed to angular declination. Alternatively, angular size and declination might each be extracted quickly. Here, we examined performance with floor- and eye-level targets each with angular size rendered non-informative. This was accomplished by systematically varying physical ball size with distance so that targets always subtended the same visual angle.

Method

Two groups of 12 naïve observers participated in Experiment 4. One group performed the floor-level block first; a second group performed the eye-level block first. Targets were five Styrofoam balls (9.8, 12.2, 14.6, 17.8, and 19.8 cm in diameter), each placed at a distance at which it subtended the same visual angle (2.25°). As a result, floor-level targets ranged from 2.06 to 4.87 m, and eye-level targets ranged from 2.55 to 5.10 m. Viewing durations of 9, 65, and 113 ms were administered randomly within each block of trials.
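
The constant-angle manipulation follows from simple geometry, sketched here in Python. A sphere of diameter s whose centre lies at line-of-sight distance D subtends θ = 2 arcsin(s / 2D), so the distance needed for a given angle is D = (s/2) / sin(θ/2). For a ball resting on the floor, the horizontal distance is shorter, √(D² − (h − s/2)²), where h is eye height. The eye height is a hypothetical value and the function names are illustrative; because the text does not specify eye height or the reference point from which distances were measured, the printed values illustrate the relationship and need not match the reported ranges exactly.

```python
import math

def angular_size_deg(diameter_m, distance_m):
    """Visual angle subtended by a sphere whose centre is distance_m from the eye."""
    return math.degrees(2.0 * math.asin(diameter_m / (2.0 * distance_m)))

def distance_for_angle(diameter_m, angle_deg):
    """Eye-to-centre distance at which a sphere subtends angle_deg."""
    return (diameter_m / 2.0) / math.sin(math.radians(angle_deg) / 2.0)

def floor_distance(line_of_sight_m, eye_height_m, diameter_m):
    """Horizontal eye-to-ball distance for a ball resting on the floor."""
    drop = eye_height_m - diameter_m / 2.0  # vertical offset from eye to ball centre
    return math.sqrt(line_of_sight_m ** 2 - drop ** 2)

EYE_HEIGHT_M = 1.4  # hypothetical eye height; not reported in the text
for dia_cm in (9.8, 12.2, 14.6, 17.8, 19.8):
    dia_m = dia_cm / 100.0
    d_los = distance_for_angle(dia_m, 2.25)
    print(f"{dia_cm:4.1f} cm: {d_los:.2f} m line of sight, "
          f"{floor_distance(d_los, EYE_HEIGHT_M, dia_m):.2f} m along the floor")
```

Because distance scales linearly with diameter at a fixed angle, doubling the ball's diameter doubles the required line-of-sight distance, which is why a roughly twofold range of ball sizes yields a roughly twofold range of target distances.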

Results

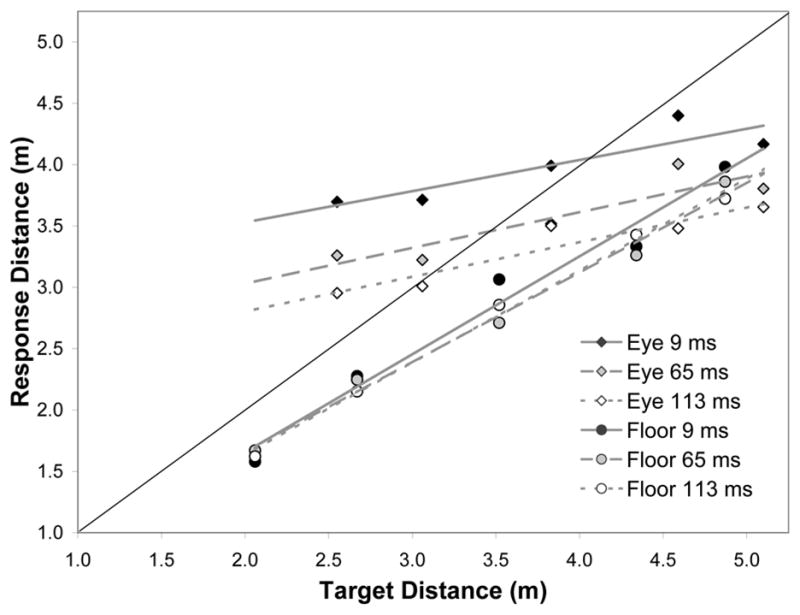

Clear performance differences were observed between the floor-level and eye-level viewing conditions. Because accuracy did not depend on block order (all ps > .16), we report the bias and response-sensitivity for the collapsed data set. Figure 4 shows that there were differences in bias, ΔR² = 1.21, F(1,695) = 121.22, p < .001, as well as in response-sensitivity, ΔR² = 0.04, F(1,693) = 58.97, p < .001. Overall, there was greater bias toward underestimation with floor-level targets (−20%) than with eye-level targets (−5%), but the response was more sensitive with floor-level targets than with eye-level targets (mean slopes of 0.76 and 0.28, respectively). Of particular interest was the effect of viewing duration in each of these two viewing conditions. Viewing duration had no effect on bias or response-sensitivity when floor-level targets were used (Fs < 1). When eye-level targets were used, response-sensitivity was also unaffected by viewing duration (F < 1), but there was a systematic change in bias (+4%, −7%, and −13% for the 9-, 65-, and 113-ms conditions, respectively), ΔR² = 0.06, F(1,335) = 36.47, p < .001. The changing bias coupled with a compressed response range suggests that the fast extraction of distance information in Experiment 3 was driven by the availability of the angular size cue. In contrast, the removal of relative angular size as a cue was not detrimental to fast extraction of distance to floor-level targets: While the slope was somewhat flattened relative to Experiment 2, performance at 9 ms was as good as it was at 113 ms. The overall pattern suggests that relative angular size and angular declination are each sources of information that can be extracted in the fastest of time frames.

Figure 4.

Mean walked distance as a function of target distance and viewing duration for eye-level and floor-level targets.

Discussion

In this paper we asked a straightforward question: What duration of viewing is required to determine the distance to objects in real environments? Our data suggest the answer depends on a number of factors, including the visual cues that are available during the glimpse itself and stored information about the environment obtained from previous glimpses. When floor-level targets were used, an accurate response appeared to require information that cannot be extracted in a single brief glimpse. A bias towards underestimation was observed when viewing time was limited (≤ 113 ms), and this bias was eliminated only when performance was preceded by a block of extended-viewing trials (5 sec). Repeated presentations of 100-ms trials did not alleviate the bias. Interestingly, responses were completely unbiased when eye-level targets of constant physical size were used and the viewing duration was somewhat longer (187 ms, still insufficient to permit an eye movement). The difference might be attributed to differences in gaze direction during the glimpse in the two viewing conditions. Participants often reported being frustrated by an inability to get a sense of the room size. The persistent underestimation with limited viewing might be attributed to the fact that participants were never given time to direct their gaze away from the floor and toward regions that might be informative about the overall scale of the space. Future research will be needed to determine the source of the extended-viewing benefit, the potential role of eye movements, and the source of bias more generally. Nevertheless, the enhanced performance after extended viewing suggests that information continues to accrue in some cases, even though the stimulus cue configuration has not changed.

Perhaps the most astonishing outcome was that useful information about distance can be extracted from glimpses as brief as 9 ms when relative angular size and/or angular declination are informative. While this outcome is striking, it is important to note that the perception of distance is not likely to be fully developed within this time frame. Indeed, studies in the domains of object recognition (Eddy et al., 2006) and stereoscopic vision (Schumer, 1979; White & Odom, 1985) indicate that processing after brief (50-ms) glimpses continues for at least several hundred milliseconds. With glimpses as brief as 9 ms, it would be fair to wonder whether one even has a perception of distance. That is, while participants clearly see the ball (detection was reliable), they might not see it as occupying a distal location. Distance could be cognitively inferred rather than perceived. This is a thorny issue; it is virtually impossible to rule out post-perceptual influences in any distance judgment. We argue that the more tractable and important issue is whether information may be extracted to control behavior within the allotted time frame. Presumably, visual input is required to support even a cognitive strategy, and any reliable change in performance as a function of viewing duration is still informative about the information extracted.

Nevertheless, the phenomenology underlying the responses is an interesting issue in its own right. In a supplementary experiment, we gave 14 participants 9-ms glimpses of floor-level ball targets that varied in physical size so that the visual angle subtended by the target did not vary with distance (four 1.95° targets 2.53 to 5.08 m away were randomly intermixed with four 2.29° targets 2.60 to 4.77 m away). Although the linkage is not always one-to-one, much research suggests a tight connection between perceived size and perceived distance (Sedgwick, 1986). To exploit this connection, we asked observers to separate their hands to indicate the ball size, in addition to blind walking; only one response was given on any trial, and the required response was not revealed until after the glimpse. Blind walking performance here showed the expected pattern of results despite the need to prepare two responses (mean slope = .82; mean bias = −18%). Of particular interest was whether participants would be sensitive to changes in physical size. Although the visual angle of the target was completely non-predictive of physical size, targets of greater visual angle were indeed judged to be of greater physical size, ΔR² = 0.03, F(1,97) = 9.63, p < .01. Importantly, however, target distance explained a significant amount of the variance in size judgments, ΔR² = 0.03, F(1,96) = 15.41, p < .001, over and above that explained by angular size. On the reasonable assumption that observers would be unlikely to deduce the linkage between angular declination and physical ball size, this variation in indicated size supports the idea that participants indeed perceived the balls to vary in distance on the basis of 9-ms glimpses.

Our findings have important implications for theories of scene and space perception. The temporal dynamics of information extraction have factored prominently into theories of scene perception (Hollingworth & Henderson, 2002; Irwin & Zelinsky, 2002; Rensink, 2000), which primarily aim to account for the representation that is built up from one fixation to the next. Developing accurate representations of observer-to-object distances is certainly an important aspect of a scene representation, yet the focus in this domain has been primarily on the directional and conceptual locations of objects as opposed to absolute distance. We show that some, but not all, of the information needed to accurately represent absolute distance can be acquired in a single brief glimpse, and that some metric information can be extracted as quickly as the global information that presumably supports judgments about a scene’s gist.

In the domain of visual space perception, much attention has been given to the strengths of various cues and the processes of cue combination, but little attention has been given to the time needed to extract these cues from the environment. We show that the time needed to extract distance information varies widely depending on the kind of information available: There is no fixed time course for the perception of distance. The primary implication is that the speed of extraction must be considered, in addition to cue strength and reliability, in any theory of dynamic cue combination. The differential availability of relative distance information over time has received some consideration in the context of planning and controlling motor interactions with objects, particularly with regard to the perception of surface slant (Greenwald et al., 2005). We show that absolute distance perception is dynamic even when the scene is static and head position is stable.

Figure 2.

Mean walked distance to floor-level targets as a function of target distance and viewing duration. Data are shown for the 9-ms-first group, though performance in no way depended on block order.

Acknowledgments

This research was supported in part by a grant from NIH (R01 NS052137) to J.W.P.

References

- Alvarez G, Oliva A. Spatial ensemble statistics are efficient codes that can be represented with reduced attention. Proceedings of the National Academy of Sciences, USA. 2009;106:7345–7350. doi: 10.1073/pnas.0808981106.

- Cutting JE, Vishton PM. Perceiving layout and knowing distances: The integration, relative potency, and contextual use of different information about depth. In: Epstein W, Rogers S, editors. Handbook of perception and cognition: Vol. 5, Perception of space and motion. San Diego: Academic Press; 1995. pp. 69–117.

- Di Lollo V, Arnett JL, Kruk RV. Age-related changes in rate of visual information processing. Journal of Experimental Psychology: Human Perception and Performance. 1982;8:225–237. doi: 10.1037/0096-1523.8.2.225.

- Eddy M, Schmid A, Holcomb PJ. Masked repetition priming and event-related brain potentials: A new approach for tracking the time-course of object perception. Psychophysiology. 2006;43:564–568. doi: 10.1111/j.1469-8986.2006.00455.x.

- Egeth HE, Yantis S. Visual attention: Control, representation, and time course. Annual Review of Psychology. 1997;48:269–297. doi: 10.1146/annurev.psych.48.1.269.

- Greene MR, Oliva A. The briefest of glances: The time course of natural scene understanding. Psychological Science. 2009a;20:464–472. doi: 10.1111/j.1467-9280.2009.02316.x.

- Greene MR, Oliva A. Recognition of natural scenes from global properties: Seeing the forest without representing the trees. Cognitive Psychology. 2009b;58:137–176. doi: 10.1016/j.cogpsych.2008.06.001.

- Greenwald HS, Knill DC, Saunders JA. Integrating visual cues for motor control: A matter of time. Vision Research. 2005;45:1975–1989. doi: 10.1016/j.visres.2005.01.025.

- He ZJ, Wu B, Ooi TL, Yarbrough G, Wu J. Judging egocentric distance on the ground: Occlusion and surface integration. Perception. 2004;33:789–806. doi: 10.1068/p5256a.

- Hollingworth A, Henderson JM. Accurate visual memory for previously attended objects in natural scenes. Journal of Experimental Psychology: Human Perception and Performance. 2002;28:113–136.

- Irwin DE, Zelinsky GJ. Eye movements and scene perception: Memory for things observed. Perception & Psychophysics. 2002;64:882–895. doi: 10.3758/bf03196793.

- Julesz B. Binocular depth perception without familiarity cues. Science. 1964;145:356–362. doi: 10.1126/science.145.3630.356.

- Loomis JM, Philbeck JM. Measuring perception with spatial updating and action. In: Klatzky RL, MacWhinney B, Behrmann M, editors. Embodiment, Ego-space, and Action. New York: Psychology Press; 2008. pp. 1–43.

- Nelson WW, Loftus GR. The functional visual field during picture viewing. Journal of Experimental Psychology: Human Learning and Memory. 1980;6:391–399.

- Ooi TL, Wu B, He ZJ. Distance determined by the angular declination below the horizon. Nature. 2001;414:197–200. doi: 10.1038/35102562.

- Philbeck JW, Loomis JM. Comparison of two indicators of perceived egocentric distance under full-cue and reduced cue conditions. Journal of Experimental Psychology: Human Perception and Performance. 1997;23:72–85. doi: 10.1037//0096-1523.23.1.72.

- Ponsford J, Kinsella G. Attentional deficits following closed-head injury. Journal of Clinical and Experimental Neuropsychology. 1992;14:822–838. doi: 10.1080/01688639208402865.

- Pothier SJ, Philbeck JW, Chichka D, Gajewski DA. Tachistoscopic exposure and masking of real three dimensional scenes. Behavior Research Methods. 2009;41:107–112. doi: 10.3758/BRM.41.1.107.

- Potter MC. Meaning in visual search. Science. 1975;187:965–966. doi: 10.1126/science.1145183.

- Potter MC. Short-term conceptual memory for pictures. Journal of Experimental Psychology: Human Learning and Memory. 1976;2:509–522.

- Potter MC, Levy EI. Recognition memory for a rapid sequence of pictures. Journal of Experimental Psychology. 1969;81:10–15. doi: 10.1037/h0027470.

- Rensink RA. The dynamic representation of scenes. Visual Cognition. 2000;7:17–42.

- Saarinen J, Julesz B. The speed of attentional shifts in the visual field. Proceedings of the National Academy of Sciences, USA. 1991;88:1812–1814. doi: 10.1073/pnas.88.5.1812.

- Schumer RA. Mechanisms in human stereopsis [doctoral dissertation]. Stanford University; 1979.

- Sedgwick HA. Space perception. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of Perception and Human Performance. New York: Wiley; 1986. pp. 21.1–21.57.

- Watson AB. Temporal sensitivity. In: Boff KR, Kaufman L, Thomas JP, editors. Handbook of Perception and Human Performance. New York: Wiley; 1986. pp. 6.1–6.43.

- White KD, Odom JV. Temporal integration in global stereopsis. Perception & Psychophysics. 1985;37:139–144. doi: 10.3758/bf03202848.

- Wu B, Ooi TL, He ZJ. Perceiving distance accurately by a directional process of integrating ground information. Nature. 2004;428:73–77. doi: 10.1038/nature02350.