Abstract

Previous evidence suggests that relatively separate neural networks underlie initial learning of rule-based and information-integration categorization tasks. With the development of automaticity, categorization behavior in both tasks becomes increasingly similar and exclusively related to activity in cortical regions. The present study uses multi-voxel pattern analysis to directly compare the development of automaticity in different categorization tasks. Each of three groups of participants received extensive training in a different categorization task: either an information-integration task, or one of two rule-based tasks. Four training sessions were performed inside an MRI scanner. Three different analyses were performed on the imaging data from a number of regions of interest (ROIs). The common patterns analysis had the goal of revealing ROIs with similar patterns of activation across tasks. The unique patterns analysis had the goal of revealing ROIs with dissimilar patterns of activation across tasks. The representational similarity analysis aimed at exploring (1) the similarity of category representations across ROIs and (2) how those patterns of similarities compared across tasks. The results showed that common patterns of activation were present in motor areas and basal ganglia early in training, but only in the former later on. Unique patterns were found in a variety of cortical and subcortical areas early in training, but they were dramatically reduced with training. Finally, patterns of representational similarity between brain regions became increasingly similar across tasks with the development of automaticity.

Keywords: fMRI, multi-voxel pattern analysis, categorization, automaticity, multiple memory systems

1. Introduction

The ability to group objects and other stimuli into classes, despite their perceptual dissimilarity, is extremely helpful for organizing the environment and adaptively responding to its demands. For this reason, categorization has attracted much attention as a subject of behavioral and neurobiological research. Research in the neuroscience of human category-learning has shown that a variety of areas are recruited during learning and performance of categorization tasks, including visual, prefrontal, parietal, medial temporal and motor cortices, as well as the basal ganglia (for reviews, see Ashby & Maddox, 2005; Seger & Miller, 2010).

1.1 Multiple systems of category learning

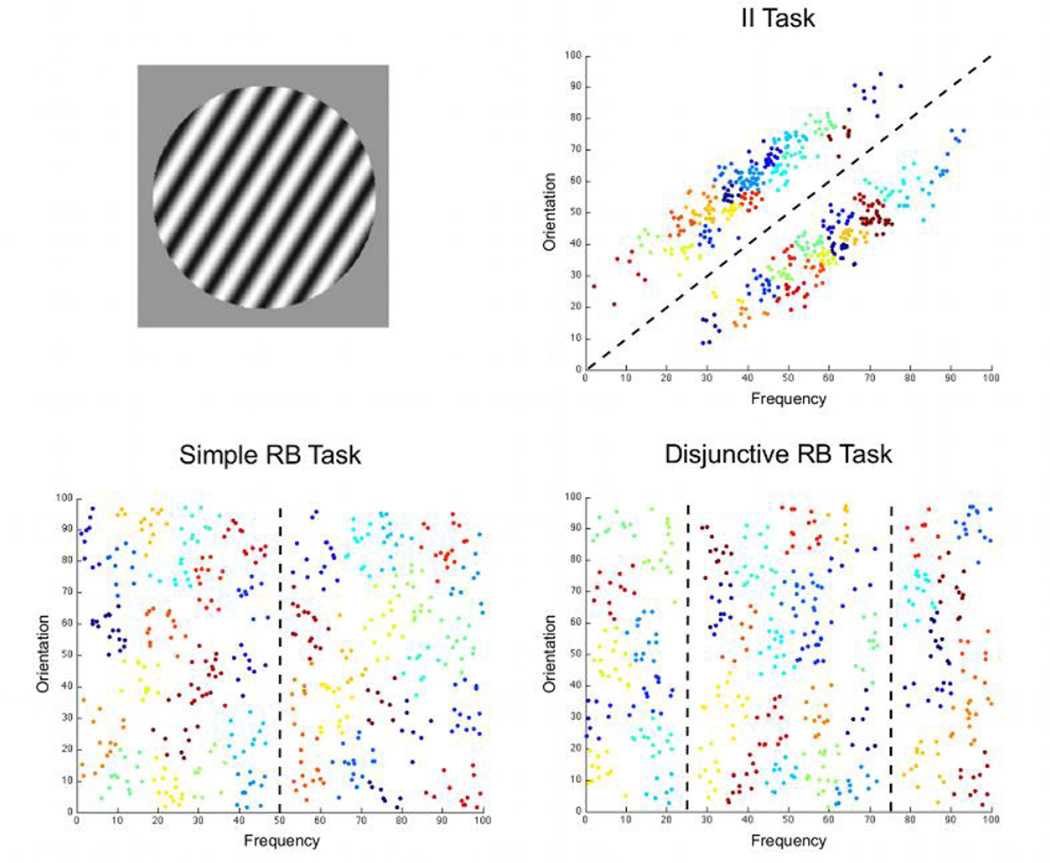

A body of behavioral and neurobiological evidence suggests that the brain areas associated with categorization are organized in relatively separate category-learning systems and that different categorization tasks engage the systems differently (Ashby & Maddox, 2005; Nomura & Reber, 2008; Poldrack & Foerde, 2008). Information-integration (II) tasks, which require the integration of information from two or more stimulus components at a pre-decisional stage, recruit a procedural-learning system that relies on feedback-based learning of associations between stimuli and responses. An example is shown in the top-right panel of Figure 1, where information about the orientation and width of stripes must be integrated to categorize the stimuli correctly. Rule-based (RB) tasks, in which the optimal strategy is easy to verbalize and can be learned through a logical reasoning process, recruit a declarative-learning system that is based on explicit reasoning and hypothesis testing. An example is shown in the bottom-left panel of Figure 1, where the simple verbal rule “respond A if the stripes are narrow and B if the stripes are wide” can solve the task.

Figure 1.

Information about the tasks and stimuli used in the present study. The top-left panel shows an example stimulus. The other three panels show the category structures in each of the tasks. Dashed lines represent optimal bounds separating the two categories and different colors represent different clusters of stimuli revealed by a k-means cluster analysis (see section 2.4).

The COVIS model of category learning (Ashby, Alfonso-Reese, Turken, & Waldron, 1998; for recent versions of the model, see Ashby, Paul, & Maddox, 2011; Ashby & Valentin, 2005) is a formal description of these two learning systems and the brain regions subserving each of them. Learning of sensory-motor associations in the COVIS procedural system is implemented in the synapses from visual sensory neurons onto medium spiny neurons in the striatum, the input structure of the basal ganglia. The output of the basal ganglia controls motor responses through its influence on premotor areas, via the ventral lateral and ventral anterior thalamic nuclei. Learning through explicit reasoning and hypothesis testing in the COVIS declarative system is implemented in a network of frontal, medial temporal and basal ganglia areas. Candidate rules are maintained in working memory representations in the lateral PFC, via a series of reverberating loops through the medial dorsal nucleus of the thalamus. These rules are selected from among all available rules via a network that includes the anterior cingulate cortex. If evidence is accumulated that a particular rule does not lead to accurate performance, the rule is switched by reducing attention to it via frontal input to the head of the caudate nucleus, which ultimately inhibits the thalamic-PFC loops in charge of working memory maintenance.

Many behavioral studies have found dissociable effects of experimental manipulations in RB and II tasks. For example, switching the locations of response keys after categorization learning interferes with performance in II tasks, but not with performance in RB tasks (Ashby, Ell, & Waldron, 2003; Maddox, Bohil, & Ing, 2004), suggesting that category learning is tied to specific motor responses only in the former. Learning in II tasks is also disrupted if feedback is absent (Ashby, Queller, & Berretty, 1999), presented before the stimulus (Ashby, Maddox, & Bohil, 2002) or delayed by a few seconds (Maddox, Ashby, & Bohil, 2003). The same manipulations have smaller or no effects in RB tasks. On the other hand, asking participants to perform a simultaneous task during category learning, which demands working memory and attention, interferes more with RB tasks than with II tasks (Waldron & Ashby, 2001). Similarly, dual-task interference has been found for declarative, but not implicit knowledge about a probabilistic categorization task (Foerde, Poldrack, & Knowlton, 2007).

Neurobiological studies also suggest that the brain areas involved in category learning differ for II and RB tasks. For example, in the only fMRI study that has directly contrasted task-related activity in II and RB tasks, Nomura et al. (2007) found that activity in the hippocampus, anterior cingulate cortex, middle frontal gyrus and body of the caudate all correlated with successful performance in the RB task, whereas only activity in the body and tail of the caudate correlated with successful performance in the II task. Direct comparison of task-related activity between the tasks in several regions of interest (ROIs) revealed higher activity for the RB than the II task in the hippocampus, and higher activity for the II than the RB task in the caudate, suggesting a dissociation between a hippocampal-based declarative system and a basal ganglia-based procedural system in category learning.

Several other studies have found results that are generally in agreement with COVIS. During the early stages of learning of RB categorization tasks, accuracy is found to be correlated with activation in the hippocampus, head of the caudate, dorsolateral prefrontal cortex, ventrolateral prefrontal cortex, and posterior parietal cortex (Filoteo et al., 2005; Helie, Roeder, & Ashby, 2010; Seger & Cincotta, 2006). On the other hand, activity during learning of II tasks increases in the body and tail of the caudate, and in the putamen (Cincotta & Seger, 2007; Waldschmidt & Ashby, 2011).

Other studies have used a “weather prediction” categorization task, in which feedback about category membership is usually probabilistic. Participants can use a variety of strategies to achieve good performance in this task (Gluck, Shohamy, & Myers, 2002) and neuroimaging studies suggest that dissociable learning systems might underlie such strategies (for a review, see Poldrack & Foerde, 2008). For example, accuracy in the weather prediction task correlates with activity in the hippocampus in normal conditions and with activity in the striatum when a secondary task must be simultaneously performed (Foerde, Knowlton, & Poldrack, 2006). In addition, activity in the hippocampus and the basal ganglia have been found to be negatively correlated across participants and across learning stages in the weather prediction task (Poldrack et al., 2001). Using a deterministic version of this task, Seger et al. (2011) found that activity in the head of the caudate was higher in trials in which participants indicated that they implicitly or automatically knew the correct response, whereas activation in hippocampus was related to trials in which participants indicated that they had an episodic memory of the stimulus and its correct response.

In sum, the results of both behavioral and neuroimaging studies are in line with the idea that dissociable systems might underlie category learning.

1.2 Automaticity in categorization

In contrast to the dissociations observed early in learning, other evidence suggests that similar mechanisms might come to control performance after categories have been overlearned and automatic categorization behavior has developed (Ashby & Crossley, 2012). For instance, behavioral studies of early category learning have found button-switch interference in II tasks, but not in RB tasks, and dual-task interference in RB tasks, but not in II tasks. After the development of automatic categorization behavior, button-switch interference is found in both types of tasks, whereas dual-task interference is found in neither (Helie, Waldschmidt, & Ashby, 2010). These results are in line with recent models of automaticity in II (SPEED: Ashby, Ennis, & Spiering, 2007) and RB (Helie & Ashby, 2009) categorization, which propose that responses are selected in automatic categorization through direct projections from sensory to motor cortex, regardless of the type of categorization task that has been trained.

This hypothesis is supported by several neuroimaging studies. First, overtraining in a face categorization task is related to an increase in coherence between the right fusiform face area and premotor cortex (DeGutis & D’Esposito, 2009). Although this study did not show differences in task-related activity between well-practiced and novel tasks in any brain region, more recent studies have reported such differences. Second, Helie et al. (2010) trained subjects in two RB tasks for almost 12,000 trials distributed over 20 sessions, with four of these sessions (1, 4, 10 and 20) performed in an MRI scanner. They found that the correlation between accuracy and task-related activity increased throughout training in ventrolateral prefrontal, motor, and premotor cortices, whereas the same correlation decreased in hippocampus, basal ganglia and thalamic nuclei. These results suggest that hippocampus and subcortical areas have a role in early RB category learning, whereas automatic RB categorization is mostly supported by premotor and prefrontal cortical areas. Finally, Waldschmidt and Ashby (2011) conducted a similar study in which participants practiced an II task for 20 sessions and were scanned on four different occasions (sessions 2, 4, 10 and 20). They found an increase in task-related activity for motor and premotor regions across training. Among subcortical areas, only the putamen showed significant task-related activity throughout training. The correlation between such activity and performance decreased with the development of automaticity.

In sum, a body of behavioral and neuroimaging data supports a model of categorization in which early learning of RB and II tasks engages different systems, each involving several cortical and subcortical areas, whereas automatic performance in both tasks engages mostly cortical motor and premotor areas.

1.3 Open questions and the current study

There are still open questions regarding the neural substrates of categorization. For example, neuroimaging studies of early category learning have not found a completely clear-cut dissociation between systems in RB and II tasks. Areas thought to be involved in declarative learning, such as the hippocampus and the head of the caudate, have been found to be active during learning of II tasks (Cincotta & Seger, 2007), whereas areas thought to be involved in procedural learning, such as the putamen and body and tail of the caudate, have been found to be active during learning of RB tasks (Seger & Cincotta, 2006; see also Ell, Marchant, & Ivry, 2006). One possibility is that these areas have a role in processes that are used across different tasks, such as feedback processing for the head of the caudate (Cincotta & Seger, 2007; Seger & Cincotta, 2005).

However, part of the problem might be that these different studies have used different procedures. Most neuroimaging studies in the literature have not directly compared brain activity during II and RB tasks, with the exception of Nomura et al. (2007). Furthermore, most studies have not controlled for the possibility that participants could use explicit strategies in II tasks or procedural strategies in RB tasks. Specifically, COVIS predicts that people show a bias to try explicit rules early in any categorization task (Ashby et al., 1998). Thus, a comparison between RB and II tasks should consider only those trials during II learning in which rules are less likely to be used by the participants.

Similarly, no previous studies have directly compared II and RB tasks across the development of automaticity. One previous behavioral study has shown similar patterns of behavior in II and RB tasks after the development of automaticity (Helie, Waldschmidt, et al., 2010), but it is not clear whether this behavioral similarity is accompanied by similarity in patterns of task-related brain activity.

Here, we directly compare brain activation patterns in II and RB tasks throughout the development of automatic categorization using multi-voxel pattern analysis (MVPA). Imaging data from three different groups of participants were analyzed. Each group was scanned while categorizing circular sine-wave gratings that varied in the width and orientation of the dark and light bars. The structure of the categorization task varied across groups. The II task (see top-right panel in Figure 1) required integration of information about width and orientation. The simple RB task (see bottom-left panel in Figure 1) required attending to a single stimulus dimension and classifying stimuli according to their position relative to a single category bound. The disjunctive RB task (see bottom-right panel in Figure 1) required attending to a single stimulus dimension as well, but classifying stimuli according to their position relative to two category bounds.

Two different RB tasks were included in this study because each matched a different feature of the II task. The simple RB task has a similar structure to that of the II task, with both involving a single category boundary. However, results from several previous studies strongly suggest that the II task should be much more difficult for people to master than the simple RB task (e.g., Ashby, Ell, & Waldron, 2003; Ashby, Maddox, & Bohil, 2002; Maddox, Ashby, & Bohil, 2003). The disjunctive RB task was included with the expectation that it would better match the II task in difficulty. However, this task had a different structure from the other two tasks, involving learning of two category boundaries instead of just one.

To summarize, previous evidence suggests that the two RB tasks are likely to be learned through an explicit rule, whereas the II task is likely to be learned through an implicit strategy. The simple RB task is expected to be easy to learn, whereas the disjunctive RB and II tasks should be more difficult to learn. After category learning is achieved, overtraining in all three tasks should ultimately lead to automatic categorization behavior.

Subjects were trained in the three tasks for 20 sessions, with 4 sessions conducted inside an MRI scanner. Importantly, the first scanning session was the first training session in both RB tasks, but it was the second training session in the II task. Thus, it is unlikely that subjects in the II task would have been trying explicit rules by the time of scanning.

Three analyses were performed. These analyses are novel in two important ways: they focus on comparisons across tasks instead of on activity in each particular task and they are multivariate instead of univariate. All analyses focused on ROIs thought to be involved in categorization on the basis of previous research and theory.

In the unique patterns analysis, activation patterns from a particular brain region were classified according to the task performed by the subject. Accurate classification of these patterns reveals information in the region that is unique to one of the tasks, thus helping to discriminate among them. In the common patterns analysis, activation patterns from a particular brain region were classified according to the category of the stimuli producing them. The classifier was trained with patterns from participants who completed one categorization task and tested with patterns from participants who completed a different task. Accurate classification of these patterns reveals category-relevant information in the region that is common across the tasks performed by the two groups. In the classification similarity analysis, activation patterns from a particular brain region were again classified according to the category of the stimuli producing them. Here, however, the classifier was trained and tested with patterns from the same group. Which patterns the classifier correctly and incorrectly classifies reveals information about the category representations present in a particular brain region. Similar representations in two different regions should lead to similar vectors of hits and errors made by the classifier. The similarity of these confusion vectors was computed for all pairs of regions considered. Because the resulting matrices of representational similarities were indexed by region, they could be compared across groups. In contrast, the original patterns cannot be compared in this way, because they are indexed by specific stimuli and categories, which differ across tasks (Kriegeskorte, Mur, & Bandettini, 2008).

2. Materials and Methods

2.1. Sample

Twenty-four healthy undergraduate students from the University of California, Santa Barbara voluntarily participated in this study in exchange for course credit or a monetary compensation. Eight participants trained in each of the three tasks depicted in Figure 1 and provided data for the analyses. All participants gave their written informed consent to participate in the study. The institutional review board of the University of California, Santa Barbara, approved all procedures in the study.

Standard GLM-based analyses of the imaging data acquired on this sample have been previously reported (Helie, Roeder, et al., 2010; Waldschmidt & Ashby, 2011). The data from three of the 11 participants in the original II group were not included here because some of the data needed to complete all analyses were missing.

2.2. Stimuli and apparatus

The stimuli were circular sine-wave gratings of constant contrast and size that varied in orientation from 20° to 110° and in spatial frequency from 0.25 to 3.58 cpd. Figure 1 shows an example stimulus together with the category structures used to train participants in each of the tasks. Stimuli were presented and responses were recorded using MATLAB augmented with the Psychophysics Toolbox (Brainard, 1997), running on a Macintosh computer. For a more detailed description of the stimuli and apparatus, see Helie, Waldschmidt, and Ashby (2010).

2.3. Neuroimaging

A rapid event-related fMRI procedure was used. Images were obtained using a 3T Siemens TIM Trio MRI scanner at the University of California, Santa Barbara Brain Imaging Center. The scanner was equipped with an 8-channel phased array head coil. Cushions were placed around the head to minimize head motion. A localizer, a GRE field mapping (3 mm thick; FOV: 192 mm; voxel: 3×3×3 mm; FA=60°), and a T1-flash (TR=15 ms; TE=4.2 ms; FA=20°; 192 sagittal slices 3-D acquisition; 0.89 mm thick; FOV: 220 mm; voxel: 0.9×0.9×0.9 mm; 256×256 matrix) were obtained at the beginning of each scanning session, and an additional GRE field-mapping scan was acquired at the end of each scanning session. Functional runs used a T2*-weighted single shot gradient echo, echo-planar sequence sensitive to BOLD contrast (TR: 2000 ms; TE: 30 ms; FA: 90°; FOV: 192 mm; voxel: 3×3×3 mm) with generalized autocalibrating partially parallel acquisitions (GRAPPA). Each scanning session lasted approximately 90 min.

2.4. Neuroimaging Analysis

Preprocessing was conducted using FEAT (fMRI Expert Analysis Tool) version 5.98, part of FSL (www.fmrib.ox.ac.uk/fsl). Volumes from all the blocks in a single session were concatenated into a single series. Preprocessing included motion correction using MCFLIRT (Jenkinson, Bannister, Brady, & Smith, 2002), slice timing correction (via Fourier time-series phase-shifting), BET brain extraction, and a high pass filter with a cutoff of 100 s. The data were not spatially smoothed during preprocessing. Each functional scan was registered to the corresponding structural scan using linear registration (FLIRT: Jenkinson et al., 2002; Jenkinson & Smith, 2001). Each structural scan was registered to the MNI152-T1-2 mm standard brain using nonlinear registration (FNIRT: Anderson et al., 2007). The resulting linear and nonlinear transformations, and their inverse transformations, were jointly used to transform volumes from subject space to standard space and vice-versa (see below). All transformations used nearest-neighbor interpolation.

The rest of the analyses were performed using MATLAB (The MathWorks, Natick, MA, USA). The first step in preparing the pre-processed data for MVPA was to generate 40 maps of t values (t-maps) for each scanning block and each subject in the experiment. Each individual t-map represented activity related to the correct classification of a small cluster of stimuli. Estimating parameters for small stimulus clusters had the advantage of combining data from several trials in a session to achieve more reliable estimates. This procedure was possible because the 480 stimuli presented to participants in each task were highly similar and all fell within a small region of the stimulus space. For each category within each task, all 240 two-dimensional vectors describing stimuli in physical space were used as input to a k-means clustering analysis. This procedure found the 20 clusters that minimized the sum of Euclidean distances from each data point to its corresponding cluster centroid. The analysis was repeated 3,000 times, each with a different randomly selected starting position for the cluster centroids, and the result with the lowest sum of Euclidean distances was retained. The resulting clusters are represented by circles of different colors in Figure 1.
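The clustering step described above can be illustrated with a minimal Python sketch. It is not the MATLAB code used in the study: the function names, the synthetic points, and the small number of restarts are all assumptions made for illustration (the study used 240 stimuli per category, 20 clusters, and 3,000 restarts). Each run uses the standard mean-update rule, and runs are scored by the summed Euclidean distance to the assigned centroid, following the description in the text.

```python
import math
import random


def kmeans(points, k, rng, n_iter=100):
    """One k-means run from a random start; returns (labels, cost)."""
    centroids = rng.sample(points, k)  # random starting centroids
    labels = None
    for _ in range(n_iter):
        # Assign each point to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points
        ]
        if new_labels == labels:  # assignments stopped changing
            break
        labels = new_labels
        # Move each centroid to the mean of its members (keep it if empty).
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))
    # Score the run by the summed distance of each point to its centroid.
    cost = sum(math.dist(p, centroids[lab]) for p, lab in zip(points, labels))
    return labels, cost


def best_of_restarts(points, k, n_restarts, seed=0):
    """Repeat k-means from different random starts and keep the lowest cost."""
    rng = random.Random(seed)
    runs = (kmeans(points, k, rng) for _ in range(n_restarts))
    return min(runs, key=lambda run: run[1])


# Synthetic two-dimensional "stimuli" (hypothetical values, not the real ones).
demo_rng = random.Random(1)
demo_points = [(demo_rng.uniform(0, 1), demo_rng.uniform(0, 1)) for _ in range(60)]
demo_labels, demo_cost = best_of_restarts(demo_points, k=5, n_restarts=10)
```

Keeping the best of many random restarts guards against the local minima that a single k-means run can settle into.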

A modification of the finite BOLD response (FBR) method proposed by Ollinger et al. (2001) was used to obtain a t-map associated with each of the stimulus clusters. The FBR method avoids assumptions about the HRF that are inherent to parametric estimation methods and it is more successful than the latter in unmixing the responses to temporally adjacent events in event-related designs (Turner, Mumford, Poldrack, & Ashby, 2012). For each stimulus cluster, a separate FBR analysis was run which included eight parameters for each of four event types: the target cluster, crosshair, feedback, and all stimulus presentations excluding those of the target stimulus cluster. This iterative estimation method has the advantage of reducing collinearity among regressors, which produces more reliable parameter estimates that outperform other estimation methods when used for MVPA (Mumford, Turner, Ashby, & Poldrack, 2012; Turner et al., 2012). A contrast was created by taking the parameter estimates representing the peak of activity related to the target cluster (parameters 2–5) and subtracting from these the estimates representing the early (parameter 1) and late (parameters 6, 7, and 8) components of the activity related to the target cluster. The resulting t-maps were then submitted to classification analyses. Such vectors of t values have been found to produce better classifier performance than vectors of raw beta estimates (Misaki, Kim, Bandettini, & Kriegeskorte, 2010).
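As an illustration of the peak-minus-baseline contrast, the sketch below applies one plausible set of weights to the eight FBR parameter estimates of the target cluster at a single voxel. The unit weights, the function name, and the example estimates are assumptions; the text specifies only which parameters enter each side of the subtraction. The sketch returns the contrast value only, whereas a t value would additionally require dividing by the standard error of the contrast.

```python
def peak_contrast(betas):
    """Peak (parameters 2-5) minus early/late (parameters 1, 6, 7, 8)."""
    # Assumed unit weights: +1 for the peak bins, -1 for early and late bins.
    weights = [-1, 1, 1, 1, 1, -1, -1, -1]
    if len(betas) != len(weights):
        raise ValueError("expected eight FBR parameter estimates")
    return sum(w * b for w, b in zip(weights, betas))


# Hypothetical parameter estimates for one voxel and one stimulus cluster.
example_betas = [0.1, 0.8, 1.2, 1.0, 0.6, 0.2, 0.0, -0.1]
contrast_value = peak_contrast(example_betas)
```

Because four estimates enter on each side, these weights sum to zero, so a constant offset across all eight time bins does not affect the contrast.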

The classification analyses focused on a set of 19 anatomical ROIs. Information about these ROIs is summarized in Table 1, including the abbreviations that will be used to refer to each ROI throughout the rest of this paper. The ROIs included in our analyses have been found to be involved in visual categorization by several previous studies (see section 1) and/or have been proposed to be involved in categorization learning and automaticity by neurobiologically detailed theories of such cognitive abilities (COVIS: Ashby et al., 1998; SPEED: Ashby et al., 2007). Table 1 includes information about the hypothesized role of each ROI in categorization, together with references containing a more in-depth discussion of such functional roles and the empirical evidence for them.

Table 1.

Information about the ROIs included in this study.

| ROI | Abbreviation | Size (2×2×2 mm voxels) | Reason to be included | References |
|---|---|---|---|---|
| Putamen | PUT | 3,939 | COVIS: Response learning and selection | Ashby, Ennis & Spiering, 2007 |
| Body and Tail of Caudate | CD-bt | 1,330 | COVIS: Response learning and selection | Ashby et al., 1998; Ashby, Paul & Maddox, 2011 |
| Head of the Caudate | CD-hd | 2,063 | COVIS: Rule switching | Ashby et al., 1998; Ashby, Paul & Maddox, 2011 |
| Globus Pallidus | GP | 2,337 | COVIS: Response selection and rule switching | Ashby et al., 2005; Ashby, Ennis & Spiering, 2007 |
| Motor Thalamus | va/vlTH | 2,870 | COVIS: Response selection | Ashby, Ennis & Spiering, 2007 |
| Frontal Thalamus | mdTH | 4,127 | COVIS: Rule maintenance in working memory | Ashby et al., 2005 |
| Primary Motor | M1 | 29,115 | Motor movement control | Dum & Strick, 2005 |
| Dorsal Premotor | dPM | 15,460 | SPEED: Motor representations | Ashby, Ennis & Spiering, 2007 |
| Ventral Premotor | vPM | 4,215 | SPEED: Motor representations | Ashby, Ennis & Spiering, 2007 |
| Supplementary Motor | SMA | 3,752 | SPEED: Motor representations | Ashby, Ennis & Spiering, 2007 |
| Posterior ACC | pACC | 2,143 | SPEED: Motor representations | Ashby, Ennis & Spiering, 2007 |
| Primary Visual | V1 | 16,378 | Visual input to cortex | Chalupa & Werner, 2004 |
| Extrastriate Cortex | ESC | 22,607 | COVIS: Visual representations | Ashby et al., 1998 |
| Inferotemporal Cortex | IT | 16,610 | COVIS: Visual representations | Ashby et al., 1998 |
| Ventrolateral PFC | vlPFC | 12,510 | COVIS: Rule maintenance in working memory | Ashby et al., 2005 |
| Dorsolateral PFC | dlPFC | 21,990 | Evidence of rule representations | Muhammad, Wallis & Miller, 2006; Seger & Cincotta, 2006 |
| Medial ACC | mACC | 2,485 | COVIS: Rule selection | Ashby et al., 1998, 2005 |
| Pre SMA | pre-SMA | 2,218 | Possible role in switching between systems | Ashby & Crossley, 2010b; Hikosaka & Isoda, 2010 |
| Hippocampus | HPC | 5,837 | COVIS: Rule storage | Ashby, Paul & Maddox, 2011 |

The anatomical boundaries of each ROI were created in MNI152-T1-2 mm standard space using the Harvard-Oxford Cortical Structural Atlas, the Harvard-Oxford Subcortical Structural Atlas, or the Oxford Thalamic Connectivity Probability Atlas (all three included in FSL). The anterior cingulate cortex was divided into mACC and pACC based on structural landmarks (Vogt, Hof, & Vogt, 2004). The premotor cortex was divided into regions (pre-SMA, SMA, vPM and dPM) as defined by Picard and Strick (2001). The dlPFC was extracted following the definition in Petrides and Pandya (2004). The CD-hd was extracted according to Nolte (2008) and the CD-bt was obtained by extracting CD-hd from the caudate mask. Table 1 includes a column with information about the size of each ROI.

Three separate classification analyses were performed on the data from each group. The goal of the common patterns analysis was to determine which ROIs responded similarly in different categorization tasks. Because this analysis required presenting data from different subjects to the classifier, the original t-maps were transformed from subject space to standard space using FLIRT and FNIRT (see above) and the analysis was performed in this common space. A support-vector machine (SVM) with a linear kernel was trained to determine the category to which a cluster belonged from the t-values in a particular ROI. The classifier was trained with all the data from one task (the t-values corresponding to each of the 40 clusters and each of the 8 subjects) and then tested with all the data from a second task. The analysis was repeated twice for each pair of tasks (i.e., RB1/RB2, RB1/II, RB2/II), switching across repetitions the data set used for training and testing. The average accuracy for both repetitions is reported. The analysis was repeated for each pair of tasks (3 in total), each ROI (19 in total), and each scanning session (4 in total).
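The train-on-one-task, test-on-the-other logic of the common patterns analysis can be sketched as follows. To keep the example self-contained, a nearest-centroid classifier stands in for the linear SVM used in the study; this substitution, the function names, and the toy two-voxel patterns are assumptions made for illustration only. In the study, each task contributed 320 vectors per ROI (40 clusters × 8 subjects).

```python
import math


def fit_centroids(vectors, labels):
    """Stand-in classifier (not an SVM): mean pattern for each category."""
    centroids = {}
    for lab in set(labels):
        members = [v for v, l in zip(vectors, labels) if l == lab]
        centroids[lab] = [sum(col) / len(members) for col in zip(*members)]
    return centroids


def predict(centroids, vector):
    """Assign the category whose mean pattern is closest to the vector."""
    return min(centroids, key=lambda lab: math.dist(vector, centroids[lab]))


def accuracy(centroids, vectors, labels):
    hits = sum(predict(centroids, v) == l for v, l in zip(vectors, labels))
    return hits / len(labels)


def cross_task_accuracy(task_a, task_b):
    """Train on one task, test on the other, swap roles, and average."""
    (xa, ya), (xb, yb) = task_a, task_b
    a_to_b = accuracy(fit_centroids(xa, ya), xb, yb)
    b_to_a = accuracy(fit_centroids(xb, yb), xa, ya)
    return (a_to_b + b_to_a) / 2


# Toy two-voxel patterns (hypothetical) with category labels "A" and "B".
toy_task_1 = ([[0, 0], [0, 1], [3, 3], [3, 4]], ["A", "A", "B", "B"])
toy_task_2 = ([[1, 0], [0, 0.5], [4, 3], [3, 3.5]], ["A", "A", "B", "B"])
toy_accuracy = cross_task_accuracy(toy_task_1, toy_task_2)
```

Above-chance accuracy in this scheme is possible only if the two tasks share category-relevant structure in the patterns, because the classifier never sees the test task during training.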

The goal of the unique patterns analysis was to determine which ROIs carry patterns of activation that are different across different categorization tasks. Because this analysis required presenting data from different subjects to the classifier, the original t-maps were transformed from subject space to standard space using FLIRT and FNIRT (see above) and the analysis was performed in this common space. An SVM with a linear kernel was trained with data from two out of the three groups (RB1 and RB2, RB1 and II, or RB2 and II), to determine the task in which a participant was engaged from a vector of t-values. A 64-fold cross-validation method was used. For each fold, all vectors from one subject of each group were selected as testing data, and the rest of the data were used to train the classifier to determine the experimental task to which each vector belonged. Mean accuracy with the test vectors is reported. The analysis was repeated for each pair of tasks (3 in total), each ROI (19 in total), and each scanning session (4 in total).
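The 64 folds follow from pairing each of the 8 subjects in one group with each of the 8 subjects in the other (8 × 8 = 64). A minimal sketch of this fold construction is given below; the subject identifiers and the function name are hypothetical, and the classifier itself is omitted.

```python
from itertools import product


def paired_subject_folds(subjects_a, subjects_b):
    """Hold out one subject per group in every fold; train on the rest."""
    folds = []
    for test_a, test_b in product(subjects_a, subjects_b):
        train = [s for s in subjects_a + subjects_b if s not in (test_a, test_b)]
        folds.append({"test": [test_a, test_b], "train": train})
    return folds


# Hypothetical subject identifiers for two of the three groups.
group_rb1 = [f"RB1-{i}" for i in range(1, 9)]
group_ii = [f"II-{i}" for i in range(1, 9)]
folds = paired_subject_folds(group_rb1, group_ii)
```

Holding out whole subjects, rather than individual vectors, ensures that the classifier is always tested on participants whose data it has not seen.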

Permutation tests were carried out to evaluate the statistical significance of each of the obtained classification accuracies. Class labels were randomly permuted 500 times and the full analysis was performed with these permuted labels. Empirical accuracy distributions were built from the results and the probability of observing a mean accuracy equal to or larger than the accuracy actually observed was computed in each case. These p-values were corrected for multiple comparisons by holding the false-discovery rate at q = 5% (Benjamini & Hochberg, 1995).
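The two steps of this significance procedure can be sketched in Python as follows. The function names and example values are assumptions; the null accuracies would come from re-running the full classification analysis with permuted class labels, which is not shown here.

```python
def permutation_p_value(observed, null_accuracies):
    """Proportion of permuted-label accuracies at or above the observed one."""
    at_or_above = sum(acc >= observed for acc in null_accuracies)
    return at_or_above / len(null_accuracies)


def benjamini_hochberg(p_values, q=0.05):
    """Flag the tests that remain significant with the FDR held at q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Find the largest rank whose p-value is below its step-up threshold.
    cutoff_rank = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            cutoff_rank = rank
    # Every test ranked at or below the cutoff is declared significant.
    significant = [False] * m
    for rank, i in enumerate(order, start=1):
        if rank <= cutoff_rank:
            significant[i] = True
    return significant


# Hypothetical p-values for four ROI-by-session tests.
example_flags = benjamini_hochberg([0.01, 0.02, 0.03, 0.5], q=0.05)
```

With 500 permutations, the smallest nonzero p-value this estimator can return is 1/500 = .002.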

Finally, the goal of the classification similarity analysis was to evaluate the similarity of category representations in different ROIs within each task. Because this analysis required training and testing the classifier with data from a single participant at a time, the original untransformed t-maps were used. The ROI masks were transformed from standard space to subject space using FNIRT and FLIRT (see above). In a separate analysis for each group, a classifier was trained to decode the category to which each cluster-related vector belonged. For each ROI and each subject, the 40 cluster-related vectors of t-values were used to train a linear SVM using a leave-one-out cross-validation procedure. This resulted in a vector of 40 binary values for each subject, representing whether the classifier could correctly classify each one of the cluster-related vectors when it was used in the test. These vectors were averaged across subjects to obtain a single confusion vector for each ROI, which represents the level to which activity patterns within that ROI could be used to accurately decode the category of a particular cluster. If, across subjects, two different ROIs encoded category information in a similar way, then a classifier trained with patterns from those ROIs should tend to correctly classify the same clusters, and their confusion vectors should be correlated. The correlation between confusion vectors of each pair of ROIs was computed and subtracted from 1 to get a representational dissimilarity matrix (RDM). Because these RDMs are indexed by ROI, it is possible to compare them across different tasks by computing their correlation, which represents how similar the patterns of confusion similarities are across tasks. Following the recommendation of Kriegeskorte et al. (2008), correlations between RDMs were tested for significance using a permutation test, in which the labels of one of the matrices were randomly re-ordered before computing their correlation. 
This process was repeated 500 times to obtain an empirical distribution of the RDM correlation under the null hypothesis.
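The steps from confusion vectors to a tested RDM correlation can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: the per-subject binary hit vectors are taken as given (the SVM step is omitted), RDMs are compared through their off-diagonal upper-triangle entries, the permutation re-orders the ROI labels of rows and columns together, and the p-value is one-sided. All function names are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation; raises ZeroDivisionError if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def confusion_vector(per_subject_hits):
    """Average binary hit vectors (one per subject) into one vector per ROI."""
    n_subjects = len(per_subject_hits)
    return [sum(col) / n_subjects for col in zip(*per_subject_hits)]


def build_rdm(confusion_vectors):
    """RDM[i][j] = 1 - correlation between the confusion vectors of ROI i and ROI j."""
    n = len(confusion_vectors)
    return [
        [0.0 if i == j else 1.0 - pearson(confusion_vectors[i], confusion_vectors[j])
         for j in range(n)]
        for i in range(n)
    ]


def upper_triangle(rdm, order=None):
    """Off-diagonal upper-triangle entries, optionally after relabeling the ROIs."""
    n = len(rdm)
    order = list(range(n)) if order is None else order
    return [rdm[order[i]][order[j]] for i in range(n) for j in range(i + 1, n)]


def rdm_permutation_test(rdm_a, rdm_b, n_perm=500, seed=0):
    """Correlate two RDMs and estimate a one-sided p-value by shuffling ROI labels."""
    rng = random.Random(seed)
    observed = pearson(upper_triangle(rdm_a), upper_triangle(rdm_b))
    n = len(rdm_a)
    count = 0
    for _ in range(n_perm):
        order = list(range(n))
        rng.shuffle(order)  # re-order the ROI labels of one matrix only
        if pearson(upper_triangle(rdm_a, order), upper_triangle(rdm_b)) >= observed:
            count += 1
    return observed, count / n_perm
```

Shuffling rows and columns together keeps each matrix a valid RDM while breaking the correspondence between ROIs across the two tasks, which is the null hypothesis of interest.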

3. Results

3.1 Behavioral Results

A detailed analysis of the behavioral data from this experiment is presented in Helie, Waldschmidt and Ashby (2010), as part of a more extensive study that included more participants and additional behavioral tests. Several results suggest that automaticity had developed by the final scanning session. Immediately after this session, subjects in all groups showed a button-switch interference effect and lack of a dual-task interference effect. These two effects indicate that the participants’ categorization behavior by the final scanning session showed two important features of automaticity: behavioral inflexibility and efficiency (Shiffrin & Schneider, 1977). Furthermore, by the final scanning session accuracy and response time (RT) had reached a near-asymptotic level and differences among groups in these two measures had disappeared.

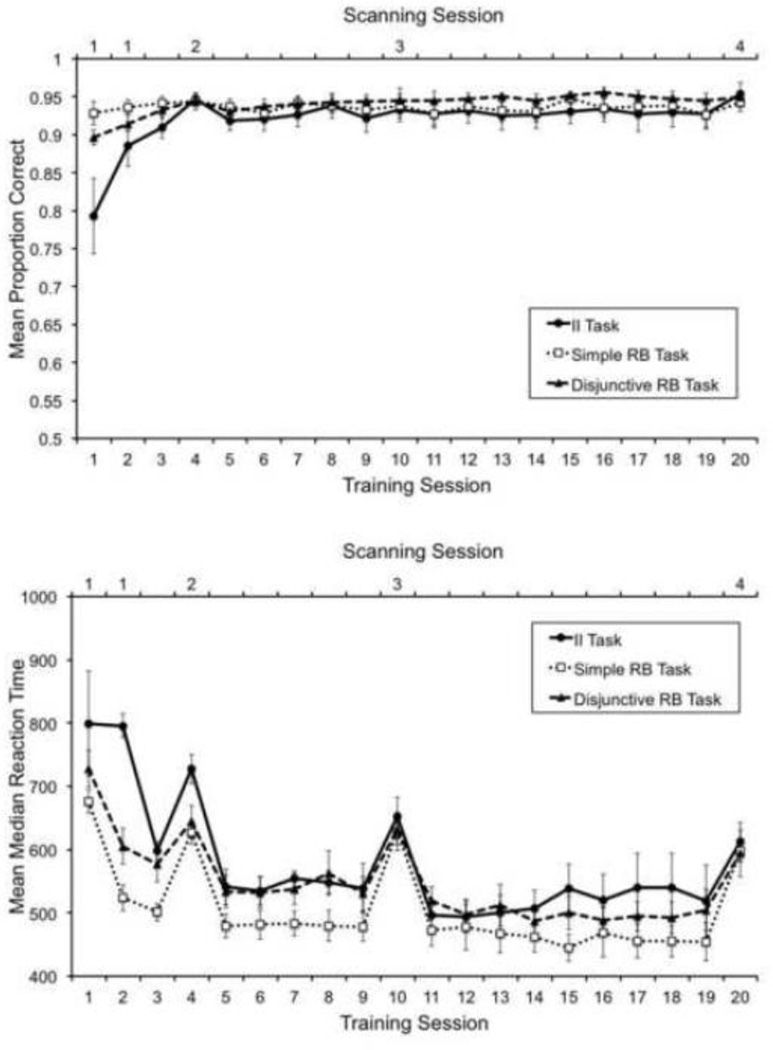

These features were also observed in the learning curves of the sub-sample of participants included in the present study. Figure 2 shows mean accuracies (top panel) and mean median correct RTs (bottom panel) for each group across training sessions. Both accuracies and RTs during the final session are very similar across groups. A one-way ANOVA comparing mean percent correct across groups in session 20 showed no statistically significant differences, F(2, 24) = 1.104, p > .1. A one-way ANOVA comparing RTs across groups in session 19 showed no statistically significant differences, F(2, 24) = .54, p > .1. Data from session 19 rather than session 20 were analyzed because RTs changed markedly during scanning sessions compared to all other training sessions.

Figure 2.

Mean accuracy (top) and mean median correct response times (bottom) across training sessions. Scanning sessions are marked at the top of each panel. Error bars represent standard errors.

To evaluate at what point in training the learning curves reached an asymptotic level, we computed an estimate of the local slope of the curve for each subject and each training session. Scanning sessions were excluded from the analysis of RTs. For each training session, we took the data from that session, the previous session and the following session and found the line that minimized mean squared deviations from these three data points. At the asymptote of the learning curve, the slope of this line should be near zero. We tested whether the mean slope deviated from zero in each session and each group through a one-sample t-test. These tests were not corrected for multiple comparisons, to avoid decreasing the power of the test to detect small changes in learning curves (i.e., a more conservative criterion for asymptotic performance).
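As a rough illustration of the local-slope estimate described above, the following sketch fits an ordinary least-squares line through the data of three sessions and computes a one-sample t statistic on the resulting per-subject slopes. The function names are hypothetical, and the session numbers are passed explicitly so that unevenly spaced sessions (for example, when scanning sessions are excluded) are handled; how the authors treated such gaps is not stated in the text.

```python
import math


def local_slope(sessions, values):
    """Slope of the least-squares line through the given (session, value) points.

    In the analysis described in the text, three points are used: the
    previous, current and following session.
    """
    n = len(sessions)
    mean_x = sum(sessions) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in sessions)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sessions, values))
    return sxy / sxx


def one_sample_t(values, mu=0.0):
    """One-sample t statistic and its degrees of freedom for H0: mean == mu."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / (n - 1)  # unbiased variance
    return (mean - mu) / math.sqrt(var / n), n - 1
```

For three equally spaced sessions the fitted slope reduces to half the difference between the following and the previous session, so the middle session affects only the intercept. The t statistic would then be compared against the critical value for the returned degrees of freedom at α = .05.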

For the accuracy learning curves, mean slopes were significantly different from zero (α=.05) up to training session 3 in the II and Disjunctive RB tasks, but stopped being significantly different from zero in session 4. Mean slopes were never significantly different from zero in the Simple RB task. For the RT learning curves, mean slopes were significantly different from zero up to training session 5 in the II and Disjunctive RB tasks, but stopped being significantly different from zero in session 6. In the Simple RB task, mean slopes were significantly different from zero up to training session 3, but stopped being significantly different from zero in session 5.

Thus, the behavioral results with our sample of participants mirrored those obtained with the full sample analyzed by Helie, Waldschmidt and Ashby (2010): by the final scanning session, accuracy and RT had reached near-asymptotic levels and differences among groups in these two measures had disappeared. These results, together with the results of the follow-up tests run by Helie et al. and summarized previously, suggest that automaticity had developed by the final scanning session.

Furthermore, our analysis of the slopes of the accuracy learning curves indicates that by the second scanning session (training session 4) category learning had reached asymptote in all groups. In sum, the behavioral results suggest that category learning was still underway during the first scanning session, but was finished by the second scanning session. Furthermore, automaticity developed at some point during the period starting at the second scanning session and ending at the final scanning session. Below, we interpret the results of neuroimaging data taking into consideration this distinction between a stage of category learning (scanning session 1) and a stage of automaticity development (scanning sessions 2 through 4).

The behavioral data were also analyzed by fitting several classification models to each subject’s responses, where each model implemented a different strategy that could be adopted to solve the categorization task. A full description of the models and the results for a larger sample of subjects can be found in Helie et al. (2010). In our case, the most important result concerns the extent to which subjects in the II task were still trying explicit rules instead of an optimal strategy by the time of their first scan. As indicated before, the main reason to scan these subjects for the first time during the second training session was that, at this point, they should have stopped trying to learn the categorization task through explicit rules. As expected, during the first scanning session, the data from all subjects in the II task were fit best by a model incorporating an optimal categorization strategy (with fit evaluated through the Bayesian Information Criterion). However, as shown in Figure 2, these subjects had not yet fully learned the categorization task and their performance was comparable to that shown by subjects in the Disjunctive RB task during their first scanning session (training session 1). A one-way ANOVA comparing accuracy in the first scanning session across groups found no significant differences, F(2, 24) = 1.57, p > .1, suggesting that groups were unlikely to be at different stages of category learning during their first scanning session.

3.2 Common and Unique Patterns Analyses

Before presenting the results of the common and unique patterns analyses, it is worth clarifying what can be learned from each. In the common patterns analysis, high classifier accuracy for a particular ROI reveals that the activation in that ROI is diagnostic about the category membership of each cluster of stimuli and also that the ROI responds similarly in the different tasks. Such a finding suggests that the ROI is mediating some basic categorization process that is not task specific. The different categorization tasks included in this analysis did not share similar category structures (see Figure 1), but they did share the same motor responses. Thus, for example, we would expect to find high common-patterns classifier accuracy in motor regions. On the other hand, high accuracy in non-motor regions would be more surprising. For example, all categorization tasks might recruit some similar attentional processes, but it seems unlikely that perceptual attention would differ for stimuli in different categories, especially since the categories differed across tasks. In fact, the only factor that was identical across tasks was the set of responses, so one might suspect that high accuracy should be found only in ROIs that hold information about motor responses.

High classifier accuracy in the unique patterns analysis suggests that activation in that ROI is diagnostic about which task an individual is performing and is consistent across stimuli, categories and subjects. Even so, it is important to note that such activation may or may not be informative about the category membership of the stimulus cluster. In fact, because the classifier is trained to decode the task from stimulus-related activation regardless of category membership, it is likely that the activation patterns that are useful for this classification are not particularly diagnostic about category membership. This is an important difference between the unique patterns analysis and the common patterns analysis.

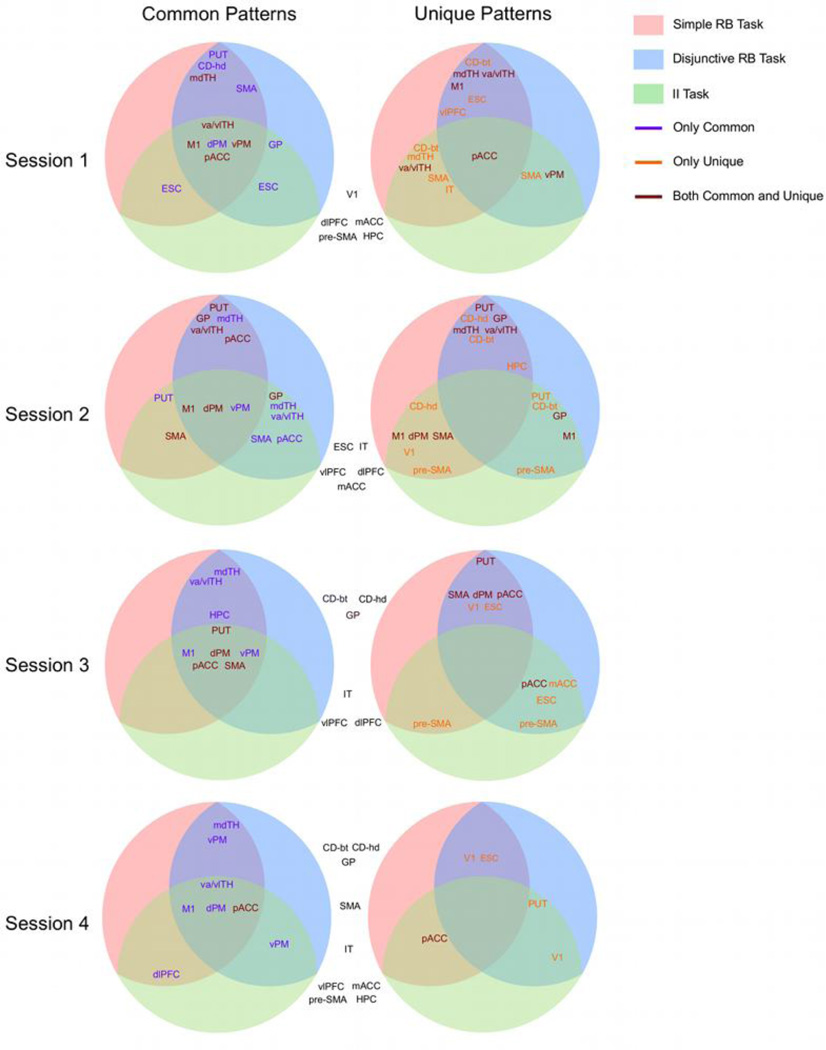

The results of the common patterns analysis and the unique patterns analysis are summarized in Figure 3, in the form of several circular diagrams, one for each combination of analysis and scanning session. In each circle, different tasks are represented by areas of different colors and classification results involving a pair of tasks are displayed at the intersection of their corresponding areas. The information displayed is a list of the ROIs for which accuracy was significantly above chance in the corresponding classification task. For example, the circle at the top-left corner of Figure 3 shows the putamen in the intersection of the two RB tasks, but not in the region representing the II task. This means that, when vectors of activation from putamen were used as input to the classifier, accuracy was significantly above chance in the analysis involving both RB tasks, but not in the analyses involving the II task and either RB task. Because this circle shows results from the common patterns analysis of data from the first session, the result suggests the presence of patterns of activation common to both RB tasks in putamen, but no evidence of patterns common to the II task and either RB task in this ROI. In the same diagram, we see primary motor cortex listed in the intersection of all tasks. This means that, when vectors of activation from primary motor cortex were input to the classifier, accuracy was significant in all three analyses.

Figure 3.

Graphical summary of the results of the common patterns and unique patterns analyses. Each circle summarizes the results of one analysis in one particular session. Tasks are represented by colored areas inside the circle and the results of an analysis involving two tasks are presented at the intersection of the two corresponding areas. The list of ROIs represents those brain regions for which the analysis resulted in significantly accurate classification. A purple font indicates significant accuracy only in the common patterns analysis, an orange font indicates significant accuracy only in the unique patterns analysis, and a brown font indicates significant accuracy in both analyses. The list of ROIs outside the circles in black font indicates those brain regions for which neither of the two analyses resulted in significantly accurate classification. Abbreviations: vlPFC, ventrolateral prefrontal cortex; dlPFC, dorsolateral prefrontal cortex; mACC and pACC, middle and posterior anterior cingulate cortex, respectively; HPC, hippocampus; SMA, supplementary motor area; dPM and vPM, dorsal and ventral premotor cortex, respectively; M1, primary motor cortex; V1, primary visual cortex; ESC, extrastriate visual cortex; IT, inferotemporal cortex; CD-hd, head of the caudate nucleus; CD-bt, body and tail of the caudate nucleus; GP, globus pallidus; PUT, putamen; mdTH, medial dorsal nucleus of the thalamus; va/vlTH, ventral anterior and ventral lateral nuclei of the thalamus.

The information displayed in the diagrams corresponding to the unique patterns analysis should be interpreted analogously. For example, the circle at the top-right of Figure 3 shows the body and tail of the caudate in the intersection of the simple RB task and the other two tasks, but not in the intersection between the II task and the disjunctive RB task. This means that, when vectors of activation from the body and tail of the caudate were used as input to the classifier, it was possible to discriminate the simple RB task from the other two tasks with significant accuracy, suggesting patterns of activation unique to the simple RB task in this region of the caudate.

Furthermore, different font colors are used to indicate which ROIs showed significant accuracy only in the common patterns analysis (purple), only in the unique patterns analysis (orange), or in both analyses (brown). For example, the top-left circle shows the putamen in purple font in the intersection between the two RB tasks. This means that activation from putamen supported high classification accuracy in the common patterns analysis, but it did not support discrimination between the tasks in the unique patterns analysis. On the other hand, the mdTH is shown in brown in the intersection between the two RB tasks, meaning that activation from this ROI led to high accuracy in both classification analyses. Finally, the top-right circle shows the body and tail of the caudate in orange font in the intersection between the two RB tasks. This means that this ROI supported discrimination between the two RB tasks in the unique patterns analysis, but not accurate performance in the common patterns analysis.

Finally, ROIs for which accuracy was not significant in either analysis for a particular session are shown outside the diagrams. Table 2 shows more detailed information about the accuracy achieved by the classifier in the common patterns analysis, whereas Table 3 shows the detailed results for the unique patterns analysis.

Table 2.

Statistically significant (permutation test, p < .05) accuracies of the MVPA classifier in the common patterns analysis. Different columns show results from different scanning sessions.

| II Task / Simple RB Task | ||||

|---|---|---|---|---|

| Session 1 | Session 2 | Session 3 | Session 4 | |

| Subcortical Areas | ||||

| Putamen | .57 | .60 | ||

| Motor Thalamus (va, vl) | .56 | .58 | ||

| Motor Cortical Areas | ||||

| Primary Motor | .74 | .71 | .74 | .74 |

| Dorsal Premotor | .66 | .66 | .66 | .57 |

| Ventral Premotor | .59 | .62 | .61 | |

| SMA | .56 | .58 | ||

| Posterior ACC | .59 | .58 | .58 | |

| Visual Cortical Areas | ||||

| Extrastriate Cortex | .57 | .54 | .53 | .55 |

| Other Cortical Areas | ||||

| Dorsolateral PFC | .55 | |||

| II Task / Disjunctive RB Task | ||||

| Session 1 | Session 2 | Session 3 | Session 4 | |

| Subcortical Areas | ||||

| Putamen | .60 | |||

| Pallidum | .56 | .56 | ||

| Motor Thalamus (va, vl) | .60 | .59 | .57 | |

| Frontal Thalamus (md) | .58 | |||

| Motor Cortical Areas | ||||

| Primary Motor | .72 | .70 | .78 | .74 |

| Dorsal Premotor | .57 | .65 | .63 | .63 |

| Ventral Premotor | .61 | .61 | .63 | .60 |

| SMA | .57 | .56 | ||

| Visual Cortical Areas | ||||

| Extrastriate Cortex | .56 | |||

| Simple RB Task / Disjunctive RB Task | ||||

| Session 1 | Session 2 | Session 3 | Session 4 | |

| Subcortical Areas | ||||

| Putamen | .56 | .56 | .57 | |

| Head of the Caudate | .56 | |||

| Pallidum | .57 | |||

| Motor Thalamus (va, vl) | .63 | .57 | .59 | .58 |

| Frontal Thalamus (md) | .60 | .56 | .58 | .57 |

| Motor Cortical Areas | ||||

| Primary Motor | .76 | .75 | .78 | .76 |

| Dorsal Premotor | .63 | .66 | .64 | .61 |

| Ventral Premotor | .61 | .61 | .60 | .63 |

| SMA | .60 | .61 | ||

| Posterior ACC | .61 | .63 | ||

| Other Cortical Areas | ||||

| Hippocampus | .56 | |||

Table 3.

Statistically significant (permutation test, p < .05) accuracies of the MVPA classifier in the unique patterns analysis. Different columns show results from different scanning sessions.

| II Task / Simple RB Task | ||||

|---|---|---|---|---|

| Session 1 | Session 2 | Session 3 | Session 4 | |

| Subcortical Areas | ||||

| Body and Tail of Caudate | .58 | |||

| Head of the Caudate | .59 | |||

| Motor Thalamus (va, vl) | .61 | |||

| Frontal Thalamus (md) | .59 | |||

| Motor Cortical Areas | ||||

| Primary Motor | .56 | |||

| Dorsal Premotor | .56 | |||

| SMA | .64 | .58 | ||

| Posterior ACC | .57 | .67 | ||

| Visual Cortical Areas | ||||

| Primary Visual | .55 | |||

| Inferotemporal Cortex | .58 | |||

| Other Cortical Areas | ||||

| Pre SMA | .61 | .57 | ||

| II Task / Disjunctive RB Task | ||||

| Session 1 | Session 2 | Session 3 | Session 4 | |

| Subcortical Areas | ||||

| Putamen | .64 | .58 | ||

| Body and Tail of Caudate | .65 | |||

| Pallidum | .60 | |||

| Motor Cortical Areas | ||||

| Primary Motor | .57 | |||

| Ventral Premotor | .65 | |||

| SMA | .57 | |||

| Posterior ACC | .62 | .65 | ||

| Visual Cortical Areas | ||||

| Primary Visual | .57 | |||

| Extrastriate Cortex | .56 | |||

| Other Cortical Areas | ||||

| Medial ACC | .68 | |||

| Pre SMA | .57 | .58 | ||

| Simple RB Task / Disjunctive RB Task | ||||

| Session 1 | Session 2 | Session 3 | Session 4 | |

| Subcortical Areas | ||||

| Putamen | .56 | .57 | ||

| Body and Tail of Caudate | .55 | .61 | ||

| Head of the Caudate | .58 | |||

| Pallidum | .57 | |||

| Motor Thalamus (va, vl) | .57 | .62 | ||

| Frontal Thalamus (md) | .66 | .66 | ||

| Motor Cortical Areas | ||||

| Primary Motor | .57 | |||

| Dorsal Premotor | .56 | |||

| SMA | .56 | |||

| Posterior ACC | .57 | .62 | ||

| Visual Cortical Areas | ||||

| Primary Visual | .67 | .59 | ||

| Extrastriate Cortex | .56 | .67 | .60 | |

| Other Cortical Areas | ||||

| Ventrolateral PFC | .60 | |||

| Hippocampus | .58 | |||

3.2.1 Category Learning

The top two circles in Figure 3 show the results from the first scanning session, representing data acquired when early category learning was still in progress. The common patterns analysis suggests that common patterns of activity across all tasks can be found in motor areas, both cortical and thalamic. This result is likely due to the fact that all categorization tasks involved the same motor responses, and it might generalize to any task involving such responses rather than being specific to the categorization tasks included here. Besides these motor areas, there is evidence suggesting common patterns of activity between the II task and the RB tasks in only two areas: ESC (both RB tasks) and GP (simple RB task only). In contrast, the results suggest patterns of activity common to both RB tasks in an additional motor ROI (SMA) and in three non-motor ROIs (PUT, CD-hd, mdTH).

The unique patterns analysis showed a much more complex pattern of results. Only one ROI, pACC, included patterns of activation that could discriminate all pairs of tasks. More ROIs could discriminate between the II task and each of the RB tasks, including thalamic (va/vlTH, mdTH), motor (SMA, vPM), visual (IT) and striatal (CD-bt) regions. Interestingly, the subcortical ROIs that could discriminate between the II task and the simple RB task could also discriminate between the two RB tasks. The areas that could discriminate only between RB tasks were the vlPFC, ESC and M1. The areas that could discriminate only the II task from either of the RB tasks were SMA, IT and vPM.

Finally, it is worth noting that classification accuracy did not reach significance in either classification analysis for some cortical areas, including visual (V1), medial temporal (HPC), and frontal (dlPFC, mACC, pre-SMA) areas.

3.2.2 Categorization automaticity

The three bottom rows in Figure 3 depict information from the last three scanning sessions, representing data acquired during the development of automaticity. The common patterns analysis suggests that motor ROIs consistently provided common patterns of activity across all tasks throughout the experiment. This is also true of the motor thalamus (va/vlTH). In the last session, the only motor ROI that is not included in the diagram is SMA.

On the other hand, basal ganglia ROIs lose their informativeness for the common patterns analyses as automaticity develops, and none of them is included in the diagram by the final session. Interestingly, the results suggest that mdTH still holds activation patterns that are common to both RB tasks by the end of training.

The most striking pattern of results from the unique patterns analysis is that the number of informative ROIs drops considerably across the development of automaticity, from 3–7 ROIs in the first two sessions, to only 1–2 in the last session. The largest drop occurred between the second and third sessions and affected all types of ROIs (i.e., subcortical, motor, visual, medial temporal and frontal) more or less equally in the discrimination between the II task and both RB tasks. On the other hand, in the discrimination between the two RB tasks, the drop in informative ROIs occurred mostly in subcortical, frontal and medial temporal areas. Motor cortical areas remained informative during the third session, but not in the final session, whereas visual areas remained informative until the final session.

The general drop in the informativeness of all ROIs within the unique patterns analysis explains why several motor areas that show both common and unique patterns early in training (brown) show only common patterns later on (purple). Note that in the last session most ROIs are either exclusively informative for the common patterns analysis (purple) or for the unique patterns analysis (orange).

A closer look at the pattern of results for each ROI in the unique patterns analysis reveals that results were much less consistent than in the common patterns analysis, in the sense that most ROIs did not reliably discriminate between tasks in more than one session. This pattern is easier to visualize by comparing Tables 2 and 3. Relatedly, the data do not show a clear dissociation between ROIs that could discriminate only between II and RB tasks and areas that could discriminate only between different RB tasks. For example, all subcortical ROIs could discriminate between the II task and at least one RB task. All subcortical ROIs could also discriminate between the two RB tasks in either of the first two sessions. The results regarding frontal and medial temporal ROIs show a clearer dissociation. Whereas pre-SMA could discriminate the II task from both RB tasks (scanning sessions 2 and 3), vlPFC and HPC could discriminate between RB tasks (scanning sessions 1 and 2).

As indicated before, the ROIs that were not informative in either analysis during the first scanning session were visual, medial temporal, and non-motor cortical regions. By the last session, basal ganglia ROIs and one motor area (SMA) joined this set.

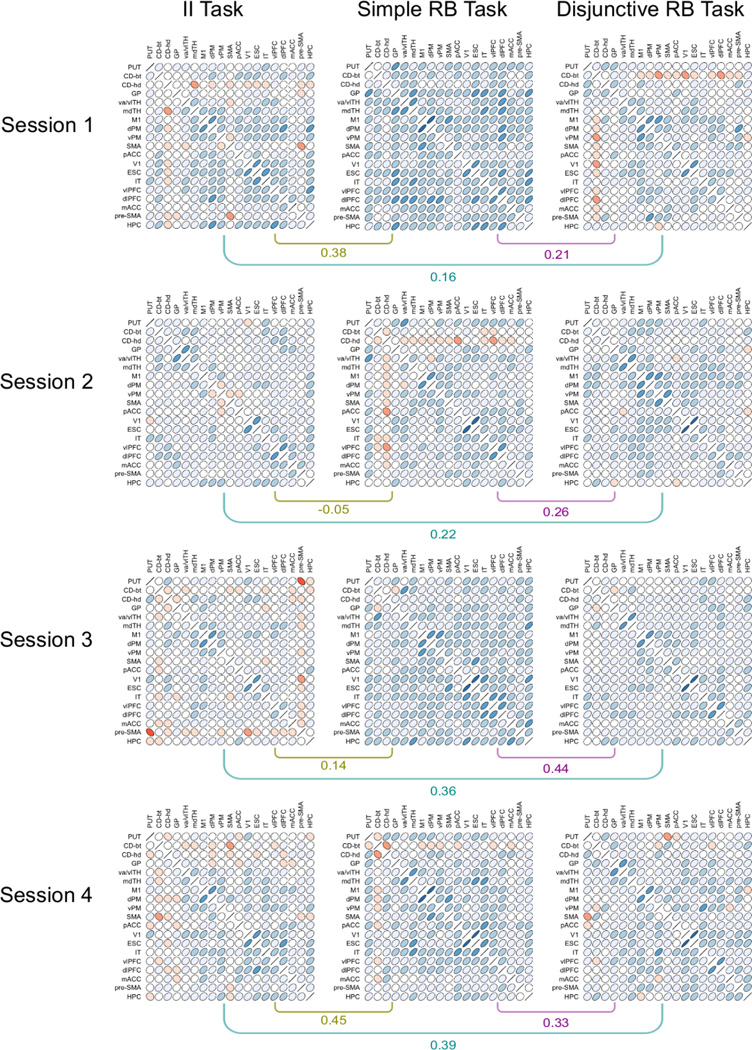

3.3 Classification Similarity Analysis

The results of the classification similarity analysis are summarized by twelve RDMs, one for each combination of task and session. Each cell in an RDM contains an estimation of how dissimilar the category representations are in a pair of ROIs. The whole RDM is thus a summary of the degree of similarity of the category representations across all ROIs. These patterns of representational similarity can themselves be similar or dissimilar for two specific tasks, which can be measured by correlating the tasks’ RDMs.

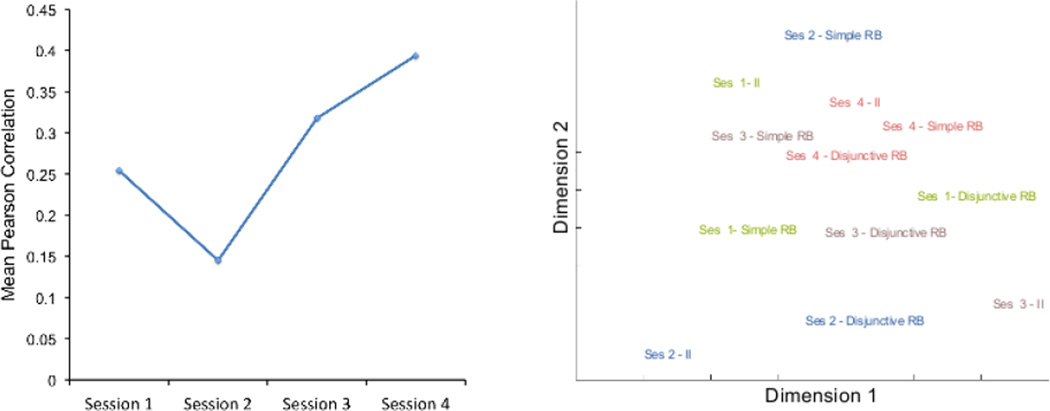

The most important result from the classification similarity analysis is summarized in Figure 4. The left panel shows the mean correlation between individual task RDMs across the four scanning sessions. The permutation test indicated that these mean correlations were significantly above zero (p < .05) in sessions 1, 3 and 4 (after Bonferroni correction for multiple comparisons). The mean correlation among RDMs increased with over-training in each task. A line fit to these data had a slope of .0591, which was significantly above zero (p < .05) according to the permutation test. This increase in correlation was observed for each pair of RDMs (see Figure 5 and its description below).

Figure 4.

Results of the classification similarity analysis. The left panel shows the mean Pearson correlation between representational similarity matrices of different tasks in each scanning session. The right panel shows the two-dimensional solution of a multidimensional scaling performed on the dissimilarities (1-Pearson correlation) between all representational similarity matrices obtained, one for each combination of task and scanning session.

Figure 5.

Matrices of correlations between ROIs obtained in the classification similarity analysis and their pairwise correlations within each session. Each cell within a matrix displays a contour line from a bivariate normal density matching the observed correlation. Red ellipses represent negative correlations and blue ellipses represent positive correlations. The shade of color represents correlation magnitude, with darker color indicating higher absolute correlations.

Dissimilarities (one minus Pearson correlation) between all pairs of RDMs (3 tasks × 4 sessions) were entered into a non-metric multidimensional scaling analysis, which positioned these RDMs in the two-dimensional configuration that best conserved their original dissimilarities. The results are shown in the right panel of Figure 4; they reveal that RDMs were more similar across tasks during the last session than to other RDMs from the same task in previous sessions.

A more detailed visualization of the results of this analysis is provided in Figure 5, which shows matrices of correlations between ROIs (1-RDM) for each task and each scanning session. Each cell in these matrices depicts a contour line from a bivariate normal density matching the observed correlation (Murdoch & Chow, 1996). To aid visualization, negative correlations are plotted in red and positive correlations in blue, with darker shades of color representing higher magnitudes. The correlations between each pair of matrices in a particular session are shown below them as well.

When the matrices from the first and last sessions are compared, the most salient pattern of results is that many ROIs decrease their correlations with other regions in the II and simple RB tasks. Regions in the basal ganglia, medial temporal and frontal lobes, which show patterns of classification accuracies similar to other brain regions early in training, show decreased similarities by the last session. In the II task, for example, correlations between regions of the basal ganglia and other regions go from being mostly positive during the first session to being mostly negative in the last session. In the simple RB task, correlations between most ROIs are positive and relatively high in the first session. By the last session, correlations have decreased considerably, especially in frontal and medial temporal regions, but also in the basal ganglia. On the other hand, in both the II task and the simple RB task a number of ROIs in the center of the matrix seem to maintain moderately positive correlations with one another. These include thalamic nuclei, motor regions, visual regions, and prefrontal cortex.

Similar changes are not apparent in the disjunctive RB task, which shows a rather sparse pattern of correlations from early on. Thus, the increase in similarity between RDMs across training might be due to a decrease in the correlations between areas in the basal ganglia, frontal and medial temporal cortex and all other ROIs, which is observed in the II task and the simple RB task, but not in the disjunctive RB task.

4. Discussion

In the first scanning session, when category learning was likely still in progress, the results suggested, as expected, that patterns of activity common to all tasks and useful for categorization were present mainly in motor regions. Only ESC and GP showed signs of patterns common to II and RB tasks. On the other hand, the two RB tasks shared common patterns of activation additionally in striatal regions (PUT and CD-hd) and the frontal thalamus (mdTH). These results are in line with the COVIS model of category learning, which proposes that both CD-hd and mdTH are part of the explicit system in charge of RB learning, but not the implicit system in charge of II learning.

The COVIS explicit system assumes that, during RB tasks, the (working) memory of the current categorization rule is maintained via a reverberating loop that includes lateral PFC and the medial thalamic nucleus (Ashby, Ell, Valentin, & Casale, 2005). The identification of the mdTH in the common patterns analysis is in line with this role, if it is further assumed that the rule representation in the mdTH includes information about the current correct category. But if so, then why was there no evidence of the same representations in lateral PFC in this analysis? The two RB tasks require different rules, so if the working memory representation of the current rule is stored in lateral PFC, then one would not expect a common patterns analysis to identify the lateral PFC. In support of this prediction, the unique patterns analysis did identify the lateral PFC as a region that differentiated between the two RB tasks. Unique patterns were also present in the mdTH, so this area might actually include both types of representation. In sum, one interpretation of these results is that while mdTH and lateral PFC are involved in holding the current categorization rule in working memory, mdTH also holds information about the result of applying such a rule in each specific trial.

On the other hand, COVIS proposes that the role of the head of the caudate in the explicit system is to switch attention away from the currently used rule when it does not lead to accurate performance. However, the present results suggest that the head of the caudate also carries information about the category of a stimulus cluster early in learning of RB tasks. Thus, the role of the caudate in RB tasks might be more complex than just to mediate an attention switch. Perhaps the head of the caudate is involved in the processing of feedback about the correct category, as suggested by previous research (Cincotta & Seger, 2007; Seger & Cincotta, 2005), and the t-maps obtained here did not completely un-mix the caudate response related to stimulus presentation from that related to feedback processing. Alternatively, the head of the caudate could be involved in predicting feedback from the presentation of a stimulus. However, neither of these hypotheses would explain why significant accuracy was found only early in training and not in the analyses involving the II task. One possibility is that initial stages of category learning in rule-based tasks involve learning of stimulus-response associations in the caudate, which is later followed by learning of more abstract aspects of the task in lateral PFC (see Antzoulatos & Miller, 2011; Helie, Roeder, & Ashby, 2010). More research seems to be necessary to better understand the role of the head of the caudate in RB tasks.

The unique patterns analysis revealed that the three tasks could be discriminated from one another using information from many different areas. During the first session, all pairs of tasks could be discriminated on the basis of patterns in cortical motor (M1, vPM, SMA, pACC), and thalamic motor (va/vlTH) regions. Thus, motor activity was consistently different across tasks despite the fact that the same motor responses were involved in all cases. Furthermore, visual regions (ESC, IT) also discriminated the simple RB task from the other two tasks, which could be due to top-down influences from category representations in other areas (Miller, Vytlacil, Fegen, Pradhan, & D’Esposito, 2010).

The CD-bt could distinguish between the simple RB task and both of the other two tasks. Each medium spiny neuron in this striatal region receives projections from thousands of neurons in visual cortex (Kincaid, Zheng, & Wilson, 1998), which makes it a candidate for visual category learning. If cells in the CD-bt came to respond to a stimulus category through learning, then this would explain why patterns of activity from this region supported high accuracy in the unique patterns analysis.

The two RB tasks could also be discriminated using patterns in the mdTH and vlPFC, the two regions involved in holding representations of rules and categories in working memory during learning in the explicit system of COVIS. This suggests that such representations were different across different RB tasks, in line with the fact that the two tasks required different explicit rules. The mdTH could also differentiate between the simple RB task and the II task, suggesting perhaps a different involvement of working memory in both. The same was not found for the discrimination of the disjunctive RB task and the II task. In this case, significant classifier accuracy was achieved using patterns from motor regions only.

Figure 3 shows that the analyses did not find either common or unique patterns of activation across tasks for a number of ROIs during the first session: V1, dlPFC, mACC, pre-SMA and HPC. COVIS does not propose any special role for most of these areas in category learning, with the exception of mACC. The role of the ACC in the COVIS explicit system is to select among different candidate rules (Ashby & Valentin, 2005). Such selection would occur only when there is evidence that the rule in current use does not produce good performance. Thus, activity in mACC would not be present on most learning trials.

COVIS proposes that explicit categorization rules are held in lateral PFC during RB category learning. Here it was found that RB tasks could be discriminated in the first session on the basis of vlPFC activation, but not dlPFC activation. Previous studies have shown that vlPFC is specifically involved in the maintenance of information in working memory, whereas dlPFC is involved in the preparation of planned actions (e.g., Yoon, Hoffman, & D’Esposito, 2007). The specific rules and categories that should be kept in working memory were different in the two RB tasks, but the actions involved in both tasks were not, which could explain why they were differentiated in vlPFC but not in dlPFC.

Currently, the role of the HPC in categorization is unclear (for a review, see Ashby & Crossley, 2010a). Although a few imaging studies have reported HPC activation in categorization tasks (Helie, Roeder, et al., 2010; Poldrack et al., 2001; Seger et al., 2011), many others have not (e.g., Lopez-Paniagua & Seger, 2011; Seger & Cincotta, 2005; Seger, Peterson, Cincotta, Lopez-Paniagua, & Anderson, 2010). Similarly, a few studies have reported category-learning deficits in amnesic patients (Kolodny, 1994; Zaki, Nosofsky, Jessup, & Unversagt, 2003), but many have reported near-normal performance (e.g., Filoteo, Maddox, & Davis, 2001; Janowsky, Shimamura, Kritchevsky, & Squire, 1989; Leng & Parkin, 1988; Knowlton & Squire, 1993). COVIS predicts that the main role of the HPC is to encode the long-term memory of specific explicit categorization rules (Ashby & Valentin, 2005; see also Nomura & Reber, 2008). In support of this prediction, we found that accurate discrimination of the two RB tasks was possible using HPC activation only during the second scanning session, when subjects might have been able to recall the correct rules that they discovered during previous training sessions. After the development of automaticity in later sessions, explicit recall would not be necessary for correct categorization performance.

An important question is whether the size of different ROIs (see Table 1) might have an influence on classifier accuracy and could explain some of our results. Usually only a proportion of all voxels in an ROI will carry useful information for MVPA and all other voxels will carry only noise, which is why in most applications only a subset of informative voxels is selected to be included in the analysis. Larger ROIs would include a larger number of voxels contributing only noise to the classification task, potentially leading to lower accuracy for test patterns (due to over-fitting to the training data). To explore this possibility, we computed the Pearson correlation between ROI size and classifier accuracy for each of the analyses reported here. The average correlation (Corey, Dunlap & Burke, 1998) was equal to .264 and was statistically significant, z = 6.89, p < .001. However, this correlation was positive, which is the opposite of what would be expected if larger ROIs were just adding noisy voxels. Furthermore, closer inspection of the data revealed that the correlation between ROI size and classifier accuracy was driven by a single outlier, M1, which was both the largest ROI (see Table 1) and the ROI with the highest classification accuracy in most analyses. When M1 was excluded from the correlation analysis, the average correlation dropped to .004, which was not statistically significant, z = .09, p > .1. In sum, it is unlikely that ROI size substantially affected our results.
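The logic of this control analysis can be illustrated with a minimal sketch. It assumes that correlations are averaged through Fisher's z transformation, one of the procedures evaluated by Corey, Dunlap and Burke (1998), and that the correlations being averaged are independent; the exact procedure used in the present analyses may differ. All function names and all numbers below are hypothetical and serve only to show how a single large, accurate ROI can produce a strong positive correlation.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def average_correlation(rs):
    """Average correlations via Fisher's z (assumed averaging method)."""
    zs = [math.atanh(r) for r in rs]
    return math.tanh(sum(zs) / len(zs))

def z_test_mean_correlation(rs, n):
    """z statistic for the mean Fisher z of k correlations, each based on
    n pairs, assuming independence (an assumption of this sketch)."""
    k = len(rs)
    mean_z = sum(math.atanh(r) for r in rs) / k
    return mean_z * math.sqrt(k * (n - 3))

# Hypothetical ROI sizes (voxels) and accuracies; the last ROI is an outlier
# that is both the largest and the most accurate.
sizes = [100, 120, 140, 160, 1000]
accuracy = [0.55, 0.52, 0.56, 0.53, 0.80]

r_all = pearson_r(sizes, accuracy)
r_without_outlier = pearson_r(sizes[:-1], accuracy[:-1])
print(f"r with outlier: {r_all:.3f}; without: {r_without_outlier:.3f}")
```

In this toy example the correlation is close to 1 when the outlying ROI is included and close to zero when it is excluded, which is the qualitative pattern described above for M1.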

Note also that variations in ROI sizes could only explain differences in accuracy across different ROIs, whereas our analyses focused on the comparison of accuracy across sessions and groups within each ROI. Only the classification similarity analysis compared different ROIs for the same subject, and in that case the similarity between the confusion vectors associated with each pair of ROIs was computed using a correlation coefficient, which is invariant to overall changes in accuracy.
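The invariance property invoked here can be checked with a short sketch. The Pearson correlation does not change when a constant is added to one of the vectors or when it is multiplied by a positive constant, so a uniform change in the overall error level of one ROI leaves the similarity measure untouched. The vectors below are hypothetical stand-ins for confusion vectors and do not reproduce the definition used in the classification similarity analysis.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical confusion vectors for two ROIs (illustrative values only).
confusion_a = [0.10, 0.30, 0.20, 0.40]
confusion_b = [0.15, 0.25, 0.30, 0.35]

# Simulate an overall change in error level in the second ROI.
shifted_b = [v + 0.20 for v in confusion_b]   # additive change
scaled_b = [0.50 * v for v in confusion_b]    # multiplicative change

r_original = pearson_r(confusion_a, confusion_b)
r_shifted = pearson_r(confusion_a, shifted_b)
r_scaled = pearson_r(confusion_a, scaled_b)
print(r_original, r_shifted, r_scaled)
```

The three printed values agree up to floating-point error, because only the pattern of errors across entries enters the correlation, not their overall level.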

Our analyses revealed three important changes in the information held by different ROIs as automaticity developed with overtraining, from scanning sessions two to four. First, the number of ROIs that were informative for discrimination of different tasks dropped precipitously as training progressed, suggesting that patterns of activation unique to each task became rarer across all brain regions. Thus, the previously reported behavioral similarity between RB and II tasks after the development of automaticity (Helie, Waldschmidt, & Ashby, 2010) is mirrored by increasingly similar activity patterns across a variety of brain regions.

Second, common patterns of activation remained present in motor regions, but they mostly disappeared from basal ganglia regions and from SMA. The basal ganglia result is in line with the results of previous univariate analyses of the present dataset by Helie et al. (2010) and Waldschmidt and Ashby (2011), which revealed that basal ganglia activity that correlated with successful performance in II and RB tasks decreased with overtraining. A general reduction in the informativeness of SMA activation patterns for categorization is in line with its hypothesized role in motor control. It has been proposed that SMA might be involved in the production of voluntary movements, whereas other premotor areas would mediate the production of automatic movements (Haggard, 2008). From this perspective, SMA would be the only motor ROI expected to reduce its participation in categorization behavior with the development of automaticity, which was the observed result. The SMA has also been linked to more “cognitive” functions than other premotor areas, such as inhibiting conflicting responses or switching between rules or movement plans (Nachev, Kennard, & Husain, 2008). Both of these candidate functions are also consistent with a decrease in the involvement of SMA as automaticity develops. The exploration of different response alternatives and the active inhibition of responses might be necessary in the early stages of category learning, but not with well-learned tasks.

Third, patterns of representational similarity between brain regions became more similar across tasks with the development of automaticity, as revealed by the classification similarity analysis.

These three results are in line with the general proposal advanced by SPEED and the model proposed by Helie and Ashby (2009), that overtraining in RB and II tasks should lead to progressively more similar networks in charge of successful classification behavior, resulting from a decrease in the role of subcortical areas in such behavior. The present results suggest that, in addition, the development of automaticity leads to more similar patterns of stimulus-related activation across brain areas, and more similar patterns of representational similarity. Thus, our results support the proposal of Ashby and Crossley (2012) that although humans have multiple category learning systems, they may have only one system for mediating automatic categorization responses. Further computational and empirical work will be necessary to better understand whether and how changes in the patterns of connectivity among brain areas could give rise to the changes in category representations suggested by the present results.

Acknowledgements

Preparation of this article was supported in part by Award Number P01NS044393 from the National Institute of Neurological Disorders and Stroke and by support from the US Army Research Office through the Institute for Collaborative Biotechnologies under grant W911NF-07-1-0072. The U.S. government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright annotation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either expressed or implied, of the U.S. Government.

References

- Anderson JLR, Jenkinson M, Smith SM. Non-linear registration, aka spatial normalization. FMRIB technical report TR07JA2. 2007.

- Antzoulatos EG, Miller EK. Differences between neural activity in prefrontal cortex and striatum during learning of novel abstract categories. Neuron. 2011;71:243–249. doi: 10.1016/j.neuron.2011.05.040.

- Ashby FG, Alfonso-Reese LA, Turken AU, Waldron EM. A neuropsychological theory of multiple systems in category learning. Psychological Review. 1998;105(3):442–481. doi: 10.1037/0033-295x.105.3.442.

- Ashby FG, Crossley MJ. The neurobiology of categorization. In: Mareschal D, Quinn PC, Lea SEG, editors. The making of human concepts. New York: Oxford University Press; 2010a. pp. 75–98.

- Ashby FG, Crossley MJ. Interactions between declarative and procedural-learning categorization systems. Neurobiology of Learning and Memory. 2010b;94(1):1–12. doi: 10.1016/j.nlm.2010.03.001.

- Ashby FG, Crossley MJ. Automaticity and multiple memory systems. Wires Cognitive Science. 2012;3:363–376. doi: 10.1002/wcs.1172.

- Ashby FG, Ell SW, Valentin VV, Casale MB. FROST: A distributed neurocomputational model of working memory maintenance. Journal of Cognitive Neuroscience. 2005;17(11):1728–1743. doi: 10.1162/089892905774589271.

- Ashby FG, Ell SW, Waldron EM. Procedural learning in perceptual categorization. Memory & Cognition. 2003;31(7):1114–1125. doi: 10.3758/bf03196132.

- Ashby FG, Ennis JM, Spiering BJ. A neurobiological theory of automaticity in perceptual categorization. Psychological Review. 2007;114(3):632–656. doi: 10.1037/0033-295X.114.3.632.

- Ashby FG, Maddox WT. Human category learning. Annual Review of Psychology. 2005;56:149–178. doi: 10.1146/annurev.psych.56.091103.070217.

- Ashby FG, Maddox WT, Bohil CJ. Observational versus feedback training in rule-based and information-integration category learning. Memory & Cognition. 2002;30(5):666–677. doi: 10.3758/bf03196423.

- Ashby FG, Paul E, Maddox WT. COVIS. In: Pothos EM, Wills AJ, editors. Formal Approaches in Categorization. New York, NY: Cambridge University Press; 2011. pp. 65–87.

- Ashby FG, Queller S, Berretty PM. On the dominance of unidimensional rules in unsupervised categorization. Attention, Perception, & Psychophysics. 1999;61(6):1178–1199. doi: 10.3758/bf03207622.

- Ashby FG, Valentin VV. Multiple systems of perceptual category learning: Theory and cognitive tests. In: Cohen H, Lefebvre C, editors. Categorization in Cognitive Science. New York: Elsevier; 2005. pp. 548–572.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B. Methodological. 1995;57(1):289–300.

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10(4):433–436.

- Chalupa LM, Werner JS. The visual neurosciences. Cambridge, MA: MIT Press; 2004.

- Cincotta CM, Seger CA. Dissociation between striatal regions while learning to categorize via feedback and via observation. Journal of Cognitive Neuroscience. 2007;19(2):249–265. doi: 10.1162/jocn.2007.19.2.249.