Abstract

Modeling of multivariate unordered categorical (nominal) data is a challenging problem, particularly in high dimensions and cases in which one wishes to avoid strong assumptions about the dependence structure. Commonly used approaches rely on the incorporation of latent Gaussian random variables or parametric latent class models. The goal of this article is to develop a nonparametric Bayes approach, which defines a prior with full support on the space of distributions for multiple unordered categorical variables. This support condition ensures that we are not restricting the dependence structure a priori. We show this can be accomplished through a Dirichlet process mixture of product multinomial distributions, which is also a convenient form for posterior computation. Methods for nonparametric testing of violations of independence are proposed, and the methods are applied to model positional dependence within transcription factor binding motifs.

Keywords: Bayes factor, Dirichlet process, Goodness-of-fit test, Latent class, Mixture model, Motif data, Product multinomial, Unordered categorical

1. INTRODUCTION

Our goal is to develop methods for Bayesian nonparametric modeling of multivariate unordered categorical data. The application that motivated this work is the modeling of dependence of nucleotides within a transcription factor binding motif, which is a short DNA sequence involved in gene regulation. Let xi = (xi1, …, xip)′ denote a sequence of nucleotides within positions 1, …, p of a known motif of interest. We let xij ∈ {1, …, dj}, for dj = d = 4, with values 1, 2, 3, 4 corresponding to the A, C, G, T nucleotides, respectively. In the motif application, the most common model for xi is the product-multinomial distribution, which characterizes the elements of xi as independent draws from multinomial distributions having a position-specific weight matrix (Lawrence and Reilly 1990; Liu 1994; Liu, Neuwald, and Lawrence 1995). However, experimental data suggest independence is often violated (Bulyk, Johnson, and Church 2002; Tomovic and Oakeley 2007).

Barash et al. (2003) proposed using a Bayesian network (BN). Unless p is small, the number of possible BNs is so large that it is only feasible to visit a small proportion of the models. Even computationally expensive search algorithms can miss the best models. In large model spaces, there are typically many BNs that are consistent with the data, so the best BN according to any criteria is very unlikely to be the true model. In addition, the selected BN will be sensitive to the criteria chosen for inclusion of an edge, and there is a tendency for overfitting in large networks due to the massive number of potential edges. For a recent article developing an efficient recursive method for BN search, refer to Xie and Geng (2008). Zhou and Liu (2004) noted that the Barash et al. (2003) approach is very time consuming to implement and is subject to problems in prior choice and overfitting, motivating a simpler model based on pairs of correlated positions. Nikolajewa et al. (2007) focused on tree-augmented BNs to reduce computational time and problems with poor performance of BNs in small datasets.

Ideally, it would be possible to accommodate the fact that we have no idea what the true dependence structure is, while favoring a sparse structure that allows efficient computation without facing problems with overfitting. The dependence is of substantial interest biologically, and more realistic models of variability within motifs open the door for improved approaches for motif discovery. With this motivation in mind, our goal is to develop a nonparametric Bayes approach to allow the distribution of multiple unordered categorical variables to be unknown. We show that mixtures of product multinomial distributions have full support, with Dirichlet process (DP) (Ferguson 1973, 1974) mixtures providing a convenient and flexible framework. For posterior computation, we can rely on efficient and simple-to-implement methods developed for conjugate DP mixture models. Hence, we bypass the complexities involved in building a Bayes network for unordered categorical data without inducing any restrictions, while also favoring a sparse formulation of the heterogeneity structure. A formal test of lack of fit for the product multinomial model against a nonparametric alternative can be constructed directly from the Gibbs sampling output.

Although our initial motivation was the motif application, our methodology provides a general approach for nonparametric Bayes modeling of multivariate unordered categorical data. The current literature on parametric models focuses primarily on underlying continuous variable specifications. For example, the multinomial probit model (Ashford and Sowden 1970; Ochi and Prentice 1984; Chib and Greenberg 1998) incorporates a latent multivariate Gaussian random variable, which is linked to the categorical observations through thresholding. Zhang, Boscardin, and Belin (2008) developed a Bayes approach to posterior computation for the multivariate multinomial probit model. Such models are quite flexible, but also tend to be difficult to implement due to the need to estimate a correlation matrix in underlying variables that have a complex relationship with the measured outcomes. An alternative approach is to use a finite mixture model. Such an approach has historically been referred to as latent structure analysis (Lazarsfeld and Henry 1968), though the term latent class modeling is more commonly used in recent years. Refer to Formann (2007) for a recent article applying latent class modeling to multivariate categorical data with missing values.

The nonparametric Bayes literature on multivariate unordered categorical data analysis for more than two categories is essentially nonexistent. A number of articles have focused on nonparametric Bayes methods for multiple binary outcomes (Quintana and Newton 2000; Hoff 2005; Jara, Garcia-Zattera, and Lesaffre 2007). In addition, Kottas, Müller, and Quintana (2005) propose a nonparametric Bayes modification of the multivariate probit model for ordinal data, with the normal distribution on the underlying variables replaced with a Dirichlet process mixture (DPM) of normals. Ansari and Iyengar (2006) proposed a semiparametric Bayes approach to repeated unordered categorical choice data, again following the approach of using DPMs to relax normality assumptions on latent variables. Quintana (1998) instead considered a nonparametric Bayes approach to assess homogeneity in contingency tables having fixed right marginals. His approach borrowed information across the rows of the contingency table by assuming the row vectors of probabilities are drawn from a common distribution, which is assigned a DP prior. This approach is appropriate for univariate unordered categorical data collected for subjects in different groups.

Section 2 describes the proposed nonparametric Bayes mixture model, providing theoretical support. Section 3 describes a simple approach for posterior computation and goodness-of-fit testing. Section 4 contains a simulation study. Section 5 applies the method to assess dependence within the p53 motif, and Section 6 contains a discussion.

2. PRODUCT MULTINOMIAL MIXTURE MODELS

2.1 Latent Structure Analysis and Properties

We focus initially on the case in which p = 2, so that data for subject i consist of a pair of categorical variables, xi = (xi1,xi2)′, resulting in a d1 × d2 contingency table with cell (c1, c2) containing the count , for c1 = 1, …, d1 and c2 = 1, …, d2. Our focus is on sparse non-parametric modeling of the cell probabilities, π = {πc1c2} with πc1c2 = Pr(xi1 = c1, xi2 = c2).

One simple and parsimonious model for π can be obtained by assuming and with xi1 and xi2 independent. In this case, we obtain , so that instead of d1d2 − 1 free parameters characterizing π in the saturated case, we have d1 + d2 − 2 free parameters. In practice, this model may be overly simple, motivating latent structure analysis (Lazarsfeld and Henry 1968; Goodman 1974), which instead relies on the finite mixture specification

| (1) |

where ν = (ν1, …, νk)′ is a vector of mixture probabilities, zi ∈ {1, …, k} denotes a latent class index, is the probability of xi1 = c1 in class h, is the probability of xi2 = c2 in class h, and xi1 and xi2 are assumed to be conditionally independent given zi.

There is a rich literature focusing on issues in latent structure modeling, including identifiability of the ν and parameters, methods for selection of k, extensions to multiple group settings, etc. However, our goal is to build Bayesian non-parametric models that favor sparse formulations, while including all joint distributions for xi1 and xi2 in the support of the prior. With this goal in mind, it is important to verify the following theorem in order for formulation (1) to be sufficiently flexible.

Theorem 1

Any π ∈ Πd1d2 can be characterized as in (1) for some k, with Πd1d2 containing all d1 × d2 probability matrices with elements 0 ≤ πc1c2 ≤ 1 and .

It follows from Theorem 1 that the joint distribution of two categorical random variables can always be expressed as a finite mixture of product-multinomial distributions. Good (1969) previously noted the similarity between the singular value decomposition (SVD) and the latent structure model without providing a proof that any probability matrix, π, can be decomposed using formulation (1). Gilula (1979) instead used the SVD to provide conditions for identifying the distribution of latent variables inducing dependence in two categorical variables.

Focusing on the generalization to the multivariate case, let

denote a higher order tensor, with Πd1···dp denoting the set of all probability tensors of size d1 × d2 × · · · × dp, where probability tensors have nonnegative elements and

Using a tensor generalization of the matrix SVD (Kolda 2001), one can let

| (2) |

where λ1 ≥ · · · ≥ λk > 0, Uh is a decomposed tensor, , and ⊗ denotes the outer product, so that . If k is chosen to correspond to the minimum value such that π can be expressed as in (2), one obtains a rank decomposition. Rank decompositions are not necessarily unique, but uniqueness is not necessary for our purposes in developing a Bayesian nonparametric procedure.

Corollary 1

For any π ∈ Πd1··· dp decomposed as in (2), we can obtain an equivalent decomposition

where ν = (ν1, …, νk)′ is a probability vector, Ψh ∈ Πd1··· dp, and is a dj × 1 probability vector, for h = 1, …, k and j = 1, …, p.

From Corollary 1 it is clear that any multivariate categorical data distribution can be expressed as a latent structure model,

| (3) |

where ν is a vector of component probabilities, zi ∈ {1, …, k} is a latent class index, xi = (xi1, …, xip)′ are conditionally independent given zi and is the probability of xij = cj given allocation of individual i to class h.

2.2 Infinite Mixture of Product Multinomials

Although any multivariate categorical data distribution can be expressed as in (3) for a sufficiently large k, a number of practical issues arise in the implementation. Firstly, it is not straightforward to obtain a well-justified approach for estimation of k. Because the data are often very sparse with most of the cells in the d1 × · · · × dp contingency table being empty, a unique maximum likelihood estimate of the parameters in (3) often does not exist even when a modest k is chosen. Such problems may lead one to choose a very small k, which may be in-sufficiently large to provide an adequate approximation to the true multivariate distribution. Hence, inferences on the dependence structure in the observations may be severely biased.

These issues provide motivation for a Bayesian nonparametric approach, which avoids selection of a single finite k, allowing the number of components that are occupied by individuals in the sample to grow with sample size, while also allowing model averaging over the posterior distribution for the number of components. Such model averaging is preferable to approaches that conduct inferences conditional on a selected k, and hence ignore the (often substantial) uncertainty in estimation of k.

We propose to induce a prior, π ~ P, through the following specification

| (4) |

where P0j is a probability measure on the dj-dimensional probability simplex,

, and Q is a probability measure on the countably infinite probability simplex,

, and Q is a probability measure on the countably infinite probability simplex,

. For example, P0j may correspond to a Dirichlet measure, while Q corresponds to a Dirichlet process or more broadly to a GEM or Poisson–Dirichlet measure. In the Dirichlet process case, the stick-breaking representation of Sethuraman (1994) implies that νh = Vh Πl<h (1 − Vl) with Vh ~ beta(1, α) independently for h = 1, …, ∞, where α > 0 is a precision parameter characterizing Q. For small values of α, νh decreases towards zero rapidly with increases in the index h, so that the prior favors a sparse representation with most of the weight on few components. By choosing a hyperprior for α, one can allow the data to inform about an appropriate degree of sparsity, with the intrinsic Bayes penalty for model complexity protecting against overfitting.

. For example, P0j may correspond to a Dirichlet measure, while Q corresponds to a Dirichlet process or more broadly to a GEM or Poisson–Dirichlet measure. In the Dirichlet process case, the stick-breaking representation of Sethuraman (1994) implies that νh = Vh Πl<h (1 − Vl) with Vh ~ beta(1, α) independently for h = 1, …, ∞, where α > 0 is a precision parameter characterizing Q. For small values of α, νh decreases towards zero rapidly with increases in the index h, so that the prior favors a sparse representation with most of the weight on few components. By choosing a hyperprior for α, one can allow the data to inform about an appropriate degree of sparsity, with the intrinsic Bayes penalty for model complexity protecting against overfitting.

From Section 2.1, it is clear that any π0 ∈ Πd1···dp can be characterized using the infinite mixture representation in the first line of (4). However, in placing prior distributions on the components characterizing (4), it is not immediately clear that this flexibility is maintained. Certainly, this is not true for any choice of P0 = P01 ⊗ · · · ⊗ P0p and Q. For this reason, it is necessary to place conditions on P0 and Q so that P has full support on Πd1···dp with respect to some appropriate topology.

Theorem 2

Letting

(π0) = {π : ||π − π0||1 < ε} denote an L1 neighborhood around an arbitrary π0 ∈ Πd1···dp, the probability P{

(π0) = {π : ||π − π0||1 < ε} denote an L1 neighborhood around an arbitrary π0 ∈ Πd1···dp, the probability P{

(π0)} > 0 for any ε > 0 under the following conditions:

(π0)} > 0 for any ε > 0 under the following conditions:

for any and ε > 0, j = 1, …, p;

Q{

(ν0)} > 0 for any ε > 0 and ν0 ∈

(ν0)} > 0 for any ε > 0 and ν0 ∈

such that ν0h = 0 for h > r with r the (finite) maximum possible rank of π.

such that ν0h = 0 for h > r with r the (finite) maximum possible rank of π.

For P0j chosen as a finite Dirichlet and Q a Dirichlet process, it is straightforward to verify that the conditions of Theorem 2 are satisfied, so the resulting prior P has L1 support on Πd1···dp. Because there are finitely many parameters in π, this condition is sufficient for posterior consistency, with

where π0 is the true value of π, xi = (xi1, …, xip)′ is the data for subject i, and X = {x1, …, xn} is the data for all n subjects.

3. POSTERIOR COMPUTATION

3.1 Slice Sampling Algorithm

In the special case in which P0j are finite Dirichlet and Q is a Dirichlet process, posterior computation under prior (4) can proceed via a simple-to-implement and efficient Gibbs sampling algorithm, which modifies the slice sampling approach of Walker (2007). To clarify, the model can be expressed in the following hierarchical form in this case:

| (5) |

where the xij’s are sampled independently conditional on the latent class zi and the stick-breaking random variables V = {Vh} and probability vectors are mutually independent. We introduce a vector of latent variables, u = (u1, …, un)′, and define the joint likelihood of u and X given V and ψ as

| (6) |

where νh = Vh Πl<h(1 − Vl), for h = 1, …, ∞. In marginalizing out u, it is clear that (6) is consistent with (5). The augmented joint posterior distribution is proportional to the prior on V, ψ and α multiplied by the augmented data likelihood (6). Conditional posterior distributions for each of the unknowns can be derived using standard algebra, and a data augmentation Gibbs sampler is then used for posterior computation.

This Gibbs sampler iterates through the following sampling steps:

Update ui, for i = 1, …, n, by sampling from the conditional posterior, Uniform(0, νzi).

- For h = 1, …, k*, with k* = max{z1, …, zn}, update from the full conditional posterior distribution obtained in updating the Dirichlet prior with the likelihood of the jth response for subjects in component h,

- For h = 1, …, k*, sample Vh from the full conditional posterior, which is beta(1, α) truncated to fall into the interval

-

To update zi, for i = 1, …, n, sample from the multinomial full conditional with

where Ai = {h : νh > ui}. To identify the elements in A1, …, An, first update Vh, for h = 1, …, k̃, where k̃ is the smallest value satisfying .

- Finally, assuming a gamma(aα, bα) hyperprior for α, with the gamma parameterized to have mean aα/bα and variance , the conditional posterior is

These steps are quite similar to those presented in Walker (2007), though he considered Dirichlet process mixtures of normals. Each step involves sampling from a standard distribution, so should be very simple and efficient to implement. Note that in using the slice sampler we avoid the need to approximate (4) through truncation, and only update those components that are needed. This is conceptually related to the retrospective sampling approach of Papaspiliopoulos and Roberts (2008), though the slice sampler is simpler to implement. One can potentially gain efficiency while maintaining simple sampling steps by combining the slice sampler with the retrospective sampler using the approach described by Papaspiliopoulos (2008). Such an algorithm is similar to our proposed algorithm, but updates the Vhs from a beta conditional posterior distribution obtained in marginalizing out the latent variables u.

3.2 Testing and Inferences

It is often of interest to test for independence of the elements of xi = (xi1, …, xip)′. For example, in the transcription factor binding motif application, there has been considerable debate in the literature regarding the appropriateness of the independence assumption implicit in the product multinomial model. Under our proposed formulation, the null hypothesis of independence is nested within a nonparametric alternative that accommodates a sequence of models of increasing complexity including the saturated model. In particular, the independence model corresponds to H0 : ν1 = 1. As noted in Berger and Sellke (1987), interval null hypotheses are often preferred to point null hypotheses. Motivated by this reasoning and by computational considerations, we focus instead on the interval null H0 : ν* > 1 − ε, with ν* = max{νh, h = 1, …, k*} and ε a prespecified small positive constant. In particular, ε is chosen to permit deviations from independence that are so small as to be nonsignificant in the subject matter area. We find that ε = 0.05 provides a good default value based on simulations from the prior in a variety of cases. For very small ε, the interval null provides an approximation to the point null.

Under the prior proposed in Section 3.1, the probability allocated to H0 is approximately

which can easily be calculated numerically. From this expression, it is clear that the hyperparameters aα and bα control the prior probability allocated to H0. In order to assign approximately equal probabilities to H0 and H1, one should choose values of aα, bα to produce Pr(H0) ≈ 0.5. Noting that aα + bα is often interpreted as a prior sample size, we recommend letting aα + bα = 1/2 as a default corresponding to a vague prior. As ν1 is a parameter in each of the models under consideration, it is acceptable to choose a vague prior. We then choose aα = 1/4 to obtain Pr(H0 : ν* > 1 − ε) ≈ 0.5. To complete a default prior specification, we let aj1 = · · · = ajcj = 1, for j = 1, …, p, which corresponds to choosing uniform priors for the category probabilities in each class for each outcome type.

To conduct a Bayesian test of independence, we can rely on the Bayes factor in favor of the alternative hypothesis, which corresponds to

| (7) |

which can easily be estimated based on the output of the Gibbs sampler proposed in Section 3.1, with equal to the proportion of samples for which ν* ≤ 1 − ε and . The performance of the Bayes factor-based test is assessed in a simulation study in Section 4.

In addition to testing for independence, it may be of interest to estimate the marginal distribution for each xij and to conduct inferences on the dependence structure in the different elements of xi. To obtain approximate draws from the posterior distribution for πjl = Pr(xij = l), which can be used to obtain point and interval estimates, we suggest using the approximation

where k* is the maximum class index. Clearly, unless the sample size is quite small, the error in this approximation is neglible, with providing an easily calculated upper bound on this error. In small samples, one can obtain an approximation that is as accurate as desired by drawing νh’s and for h = k* + 1, …, km, with km chosen so that the error bound is below a prespecified constant. This idea is conceptually related to that proposed by Muliere and Tardella (1998).

To conduct inferences on the dependence between xij and xij′ for all j, j′ ∈ {1, …, p}, it is necessary to first choose a measure of association for two nominal variables. Most of the symmetric measures of association that are commonly used are based on the Pearson χ2, with various adjustments proposed to attempt to remove the dependence on sample and table size. For example, Cramer’s , with k = min{dj, dj′}, is a commonly used measure that ranges from 0 to 1. From a Bayesian perspective, the measure of association should not depend on the data directly, but should be a function of the parameters characterizing the multivariate distribution. Hence, we recommend

| (8) |

where ρjj′ is a model-based version of Cramer’s V and ranges from 0 to 1, with ρjj′ ≈ 0 when xij and xij′ are independent.

Our Bayesian approach has the nice feature that we can estimate the posterior distribution of ρjj′ for all j, j′ pairs based on the output of the Gibbs sampler from Section 3.1. To conduct inference on the dependence structure between each of the pairs, we recommend reporting a p × p association matrix, with the elements corresponding to posterior means for each ρjj′. In addition, we can calculate posterior probabilities and Bayes factors for local null hypotheses, H1jj′ : ρjj′ > ε from the Gibbs output.

Using posterior probabilities of H1jj′ for each j, j′ pair as a basis for inferences on the dependence structure has substantial advantages over model selection-based approaches. In particular, in a standard Bayesian network analysis, one would estimate a directed acyclic graph (DAG) characterizing the dependence structure in the elements of xi. Unless p is small, this estimation involves a massive-dimensional model selection problem, as the number of possible DAGs is enormous. In very large model spaces, the available data are typically consistent with a large number of different models, and it is extremely unlikely that the estimated DAG will correspond to the true model.

Although we focus on testing of global dependence and dependence between pairs of variables, since these are the hypotheses most often of interest, our approach can be adapted for comparing competing dependence structure models. For example, one may have two or more alternative graphical models of dependence representing different biological hypotheses. In this case, it is likely that none of the graphical models in the list of those being compared is exactly true. In this setting, one can represent the true model using our proposed Dirichlet process mixture of product multinomial models. The graphical model is then selected that produces a predictive distribution closest to that for the nonparametric model in KL distance using the methods of Walker and Gutiérrez-Peña (2007).

4. SIMULATION STUDIES

To assess the performance of the proposed approach, we conducted a simulation study. Simulated data consisted of A, C, G, T nucleotides (dj = d = 4) at p = 20 positions for n = 100 sequences. We considered two simulation scenarios, generating the nucleotides (1) independently, and (2) assuming dependence in locations 2, 4, 12, and 14. One mechanism by which positional dependence can be induced is the existence of multiple subpopulations, with each subpopulation having different A, C, G, T probabilities at certain locations. Within a subpopulation, nucleotides are positionally independent. However, marginalizing out the latent subpopulation indicator, one obtains dependence in those locations that have different nucleotide frequencies across the subpopulations. The presence of such sub-populations is biologically motivated, with different subpopulations potentially having a different combination of dominant (i.e., high probability) nucleotides at certain locations. Presumably there is more than one combination that can lead to a functioning motif, so that different working combinations can arise through evolution in isolated groups of individuals. We assumed 80% of individuals fall into the first subpopulation, with the remaining individuals in a second subpopulation. Nucleotides were generated from a discrete uniform except at locations 2, 4, 12, and 14. For these locations, we used a product multinomial, with the probabilities of A, C, G, or T differing between the subpopulations in case 2 but not in case 1.

For each case, we generate 100 simulated datasets, analyzing each separately using the default prior of Section 3.2 and the Gibbs sampler of Section 3.1 run for 100,000 iterations with a 20,000 iteration burn-in. Mixing and convergence rates were good based on examination of trace plots. We also implemented the Bayes network search method of Xie and Geng (2008) for comparison, using a threshold of 0.05 on the p-values for inclusion of an edge.

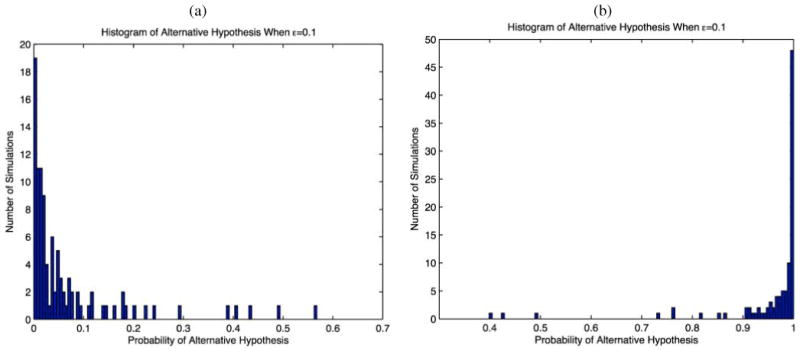

For simulation case 1 (no positional dependence, H0 is true), we obtained good results, with the estimated values of ρjj′ close to zero for each j, j′ pair and all of the simulation replicates. Figure 1(a) provides a histogram showing the estimated posterior probabilities of H1 across the 100 simulated datasets using ε = 0.10. The method appropriately assigns a value close to zero to Pr(H1|data) when H0 is true in most cases, with only 1/100 having an estimated Pr(H1|data)>0.5. In contrast, the Xie and Geng (2008) approach badly over-fit the data, including dependence in 26.7 pairs of variables on average (range = [5, 171] out of 190 possible). These results improved somewhat using a threshold of 0.01 for inclusion of an edge, but the average number of false positives was still 3.5 (range = [0, 25]).

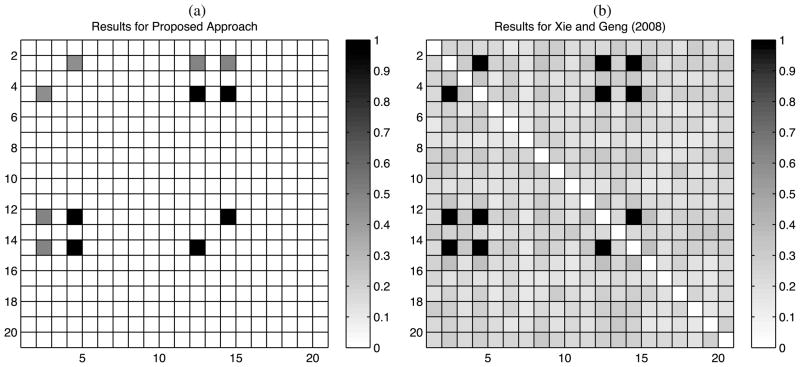

Figure 1.

Histograms of estimated posterior probabilities of H1 in each of the 100 simulations under (a) case 1 (no positional dependence—H0 is true) and (b) case 2 (positional dependence—H1 is true).

Figure 1(b) provides results in simulation case 2. The posterior probability assigned to H1 was close to one in the majority of the simulations. The left panel of Figure 2 shows the proportions of simulations having Pr(H1jj′|X) > 0.95. These proportions are 0/100 for all position pairs that are truly uncorrelated and ranged from 53/100 to 97/100 for position pairs that were dependent. The right panel of Figure 2 shows results for the Xie and Geng (2008) approach, plotting the proportion of simulations in which each position pair is flagged as correlated. The Xie and Geng (2008) approach had slightly higher power, but a substantially higher Type I error rate. Their method detected 5.7 of the 6 truly dependent pairs of variables on average (range = [3, 6]), while also reporting 36.7 false positives on average (range = [10, 105]). For a threshold of 0.01 for edge inclusion, the average number of true and false positives detected were 5.36 and 5.6, respectively.

Figure 2.

Results of simulation case 2—percentages of simulations for which (a) Pr(H1jj′|X)>0.95, and (b) the Xie and Geng (2008) method estimated an association between positions j, j′. The true model has dependence in positions 2, 4, 12, and 14.

The relatively poor performance of the Xie and Geng (2008) method does not reflect on their approach for BN search, but instead on the general drawbacks of relying on model selection in very large model spaces. For comparison, we conducted a simple frequentist alternative, which avoids BN search by focusing on pairwise testing, while adjusting for multiplicity. In particular, we implemented separate chi-square tests for each position pair, flagging those positions having p-values below the Benjamini and Hochberg (1995) threshold to maintain a false discovery rate ≤ 0.05. We find that dependence in (4, 12) and (12, 14) is detected in all of the simulations, but dependence in positions (2, 4), (2, 12), (2, 14), and (4, 14) was missed in all 100 simulations.

Hence, based on these simulations, the proposed Bayesian nonparametric approach has better performance than Bayes network-based methods and frequentist pairwise testing. In addition to bypassing the difficulties involved in graphical model search, our approach has the conceptual advantage of providing a weight of evidence that two variables are correlated accounting for uncertainty in the dependence in other variables and automatically adjusting for dependence in the different hypothesis tests. Accounting for dependence in hypotheses induces an automatic Bayes adjustment for multiple testing, as discussed in the setting of simpler parametric models by Scott and Berger (2006).

5. APPLICATION TO MODELING POSITIONAL DEPENDENCE WITHIN MOTIFS

We applied the method to data for the p53 transcription factor binding motif. The data consisted of A, C, G, T nucleotides (dj = d = 4) with p = 20 positions for n = 574 sequences obtained from 542 high-confidence p53 binding loci identified by ChIP-PET experiments (Wei et al. 2006). The p53 tumor suppressor is a sequence-specific DNA binding transcription factor. It regulates the expression of genes involved in a variety of cellular functions, including cell-cycle arrest, DNA repair, and apoptosis (Vogelstein, Lane, and Levine 2000). Hence, p53 provides a natural starting application for our methodology for flexibly characterizing and testing for positional dependence within gene sequences.

We applied the same approach implemented in the simulation study examples. The null hypothesis test for p53 data gave that , with equal to the proportion of samples from which max{νh|h = 1,2, …, k*} ≤ 1 − ε, where ε is set to be 0.10 (identical results were obtained for 0.01 and 0.05). To estimate the pairwise positional dependence structure, we used the ρjj′ correlation measure defined in expression (8). Figure 3(a) shows the posterior means of ρjj′ for each pair j, j′ ∈ {1, …, 20}, while Figure 3(b) shows the frequentist Cramer’s V estimates applied separately to each pair of locations. Our estimates are consistent with the Cramer’s V estimates in that we assign relatively high correlations to similar pairs of locations. However, as expected in using our Bayes sparseness-favor approach, the smaller correlations are shrunk closer to zero.

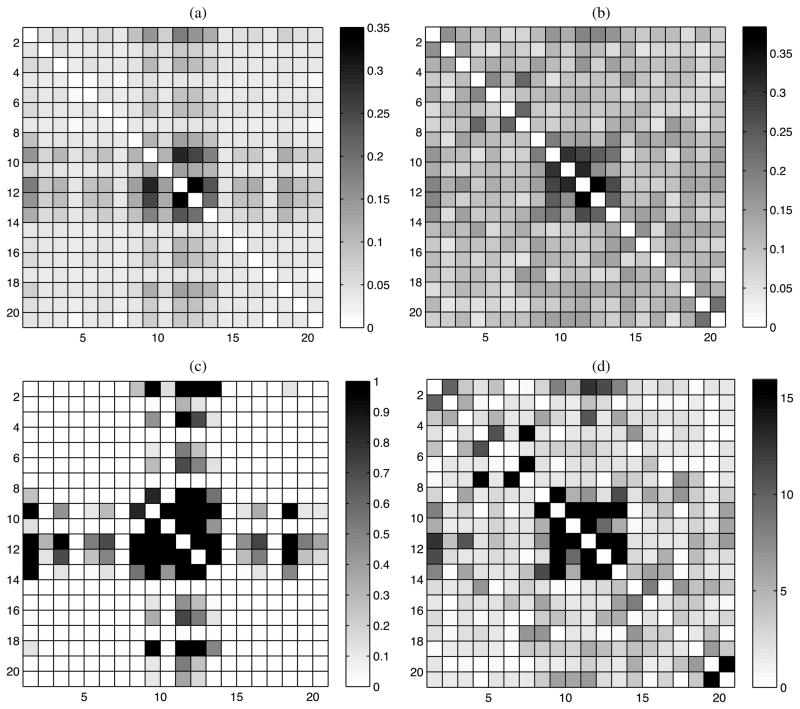

Figure 3.

Results for p53 data. (a) Posterior mean of ρjj. (b) Pairwise Cramer’s V values. (c) Pairwise posterior probabilities, Pr(H1jj′|X). (d) − log10 p-values from pairwise χ2 tests.

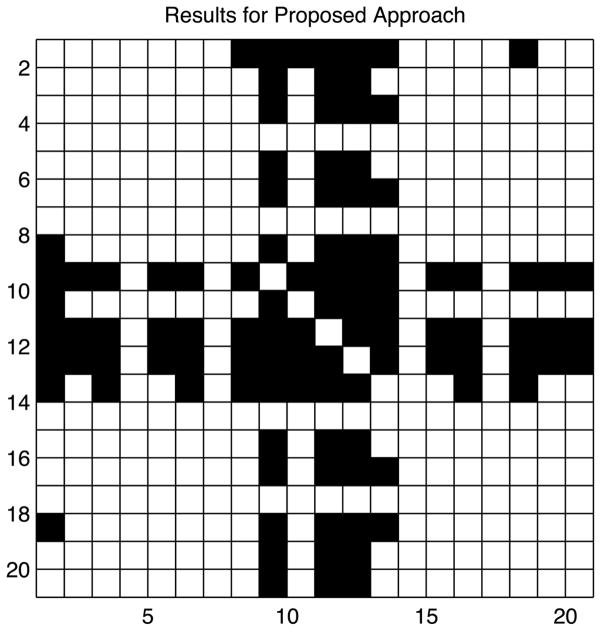

Figure 3(c) shows the estimated posterior probabilities of H1jj′ : ρjj′ > 0.1, while Figure 3(d) shows − log10 p-values from pairwise χ2 tests. Given that − log10 0.05 = 1.3, it is apparent in examining Figure 3(d) that there is a large number of very small p-values. In fact, choosing those position pairs that satisfy the Benjamini and Hochberg (1995) threshold to maintain a false discovery rate (FDR) ≤ 0.05, we obtain 126 pairs, with a large number flagged even for FDR ≤ 0.01. The Xie and Geng (2008) approach selected all 190 pairs as dependent when using a p-value threshold of 0.05 or 0.01 for edge inclusion. In contrast, our Bayesian procedure selects only 16 pairs of positions having Pr(H1jj′|X) > 0.95. The pairs of positions selected by our method are shown in Figure 4. Given the much lower Type I error rate of our Bayes nonparametric approach in the simulation study, we expect our results are closer to the truth.

Figure 4.

Pairs of positions flagged as dependent for the p53 data using the proposed Bayesian nonparametric approach with Pr(H1jj′|X) > 0.95.

6. DISCUSSION

This article has proposed a Bayesian nonparametric approach for inference in sparse contingency tables constructed for multivariate nominal data. We focused in particular on Dirichlet process mixtures of product multinomial models, which can be shown to be highly flexible and computationally convenient. In fact, we find the proposed Gibbs sampler for posterior computation to be quite efficient, which is promising in terms of scaling up the approach to deal with several problems that are of substantial interest. The first is flexible modeling of dependence in large numbers of single nucleotide polymorphisms. As long as the dependence structure can be characterized using a sparse mixture, with most of the weight on few components in the latent structure decomposition, then scaling up to much larger p should be straightforward. In addition to inferences on dependence across the genome, one important application is to accommodating missing genotype data, which is straightforward within the proposed framework. In fact, following the argument of Formann (2007), one can even allow violations of the missing at random assumption.

A common focus of statistical analyses of sequence data is searching for new motifs in long nucleotide sequences, with such searches attempting to identify words (sequences of nucleotides) that are conserved across sequences collected for different subjects. Most search algorithms make the assumption of independence across the positions within a motif. However, the Gibbs sampling approach of Liu, Neuwald, and Lawrence (1995) can potentially be modified to allow dependence through our proposed approach, so that one can allow for dependence in searching for motifs. Jensen and Liu (2008) recently used a Dirichlet process-based approach for clustering of different transcription factor binding motifs allowing for different lengths.

Another interesting extension of our proposed approach would be to include predictors, wi = (wi1, …, wiq)′, that potentially impact the joint distribution of xi. This can be accomplished in a sparse manner by allowing the weights to be predictor dependent, so that νh is replaced by νih = νh(wi). A predictor-dependent stick-breaking process, which generalizes the Dirichlet process, can then be defined for these weight functions. For example, the kernel stick-breaking process of Dunson and Park (2008) can be used directly.

APPENDIX A: PROOF OF THEOREM 1

To prove Theorem 1, first note that the singular value decomposition (SVD) expresses π as

| (A.1) |

where λh ≥ 0 are singular values, are the nonzero eigenvalues of the matrix ππ′, ξh is a d1 × 1 vector, κh is a d2 × 1 vector, and and are orthonormal bases for the spaces spanned by the rows of ππ′ and π′π, respectively. The SVD decomposition in (A.1) is unique up to multiplication of ξh and κh by −1. As A = ππ′ is nonnegative, the rows of A (A′) can be expressed as linear combinations of nonnegative orthonormal bases ξ(κ). We remove sign ambiguity in (A.1) by restricting ξ and κ to be nonnegative. The spectral radius of the positive definite matrix A is defined as . It is known that ρ(A) ≤ ||A||, where ||·|| denotes an induced norm, such as . Because the elements of π are nonnegative probabilities summing to one, it is clear that for all j, so that ρ(A) ≤ 1. From the Perron–Frobenius theorem, , and hence 0 < λh ≤ 1 for h = 1, …, k.

Using the known restrictions on , ξ and κ, we can equivalently express (A.1) as

| (A.2) |

where ξh = cξ,h ξ̃h, , κh = cκ,h κ̃h, , and λ̃h = cξ,hcκ,hλh. In the reparameterization, ξ̃h and κ̃h are probability vectors, with the elements in summing to one. Because the elements of π sum to one, it is straightforward to show that , and hence λ̃ = (λ̃1, …, λ̃k)′ is a probability, vector. Noting that (1) can be expressed as , Theorem 1 follows directly.

APPENDIX B: PROOF OF COROLLARY 1

The approach used in proving Theorem 1 can be applied directly after showing that expression (2) holds under the constraints (i) 0 < λh ≤ 1 for h = 1, …, k; and (ii) the elements of are nonnegative for h = 1, …, k and j = 1, …, p. Letting π[jj′] denote the dj × dj′ matrix containing Pr(xij = cj, xij′ = cj′) in element cj, cj′, it follows from (2) that

where the rows of can be expressed as linear combinations of . Hence, forms a basis for the union of the row spaces of . Because π is a nonnegative tensor, the rows of are nonnegative and can be expressed as linear combinations of nonnegative vectors having positive weights. Hence one can choose each of the vectors to be nonnegative, which satisfies property (ii). Because (2) is not uniquely defined in that λhUh can be replaced by λhCC−1Uh for any C ∈ ℜ+, the restriction 0 < λh ≤ 1 can be included trivially.

APPENDIX C: PROOF OF THEOREM 2

Using Corollary 1, π0 can be expressed as

where ν0 = (ν01, …, ν0k0, 0, …, 0)′, k0 is the rank of the tensor, π0, ν0h = 0 for h = k0 + 1, …, ∞, and are probability vectors with respective dimensions d1, …, dp. The L1 distance between π0 and an arbitrary π ∈ Πd1···dp can be defined as

| (C.1) |

which is a function of the probability vectors characterizing the tensor SVD decompositions. Holding π0 fixed, the probability allocated to

ε (π0)can then be defined as

ε (π0)can then be defined as

| (C.2) |

For any ε >0, it is straightforward to show that there exist infinitely many values ε̃ >0 and ε* > 0 such that

implies that ||π − π0||1 < ε. Hence, to show that (C.2) is strictly positive, it suffices to show

which follows directly from conditions (i) and (ii) of the Theorem 2 due to independence.

Contributor Information

David B. Dunson, Email: dunson@stat.duke.edu, Department of Statistical Science, Duke University, Durham, NC 27705.

Chuanhua Xing, Email: chuanhua.xing@duke.edu, Department of Biology, Duke University, Durham, NC 27705.

References

- Ansari A, Iyengar R. Semiparametric Thurstonian Models for Recurrent Choices: A Bayesian Analysis. Psychometrika. 2006;71:631–657. [Google Scholar]

- Ashford JR, Sowden RR. Multivariate Probit Analysis. Biometrics. 1970;26:535–546. [PubMed] [Google Scholar]

- Barash Y, Elidan G, Friedman M, Kaplan T. Modeling Dependence in Protein-DNA Binding Sites. RECOMB 2003; Berlin, Germany. April 2003.2003. [Google Scholar]

- Benjamini Y, Hochberg Y. Controlling the False Discovery Rate: A Practical and Powerful Approach to Multiple Testing. Journal of the Royal Statistical Society, Ser B. 1995;57:289–300. [Google Scholar]

- Berger JO, Sellke T. Testing a Point Null Hypothesis—The Irreconcilability of p-Values and Evidence. Journal of the American Statistical Association. 1987;82:112–122. [Google Scholar]

- Bulyk ML, Johnson PLF, Church GM. Nucleotides of Transcription Factor Binding Sites Exert Interdependent Effects on the Binding Affinities of Transcription Factors. Nucleic Acids Research. 2002;30:1255–1261. doi: 10.1093/nar/30.5.1255. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chib S, Greenberg E. Analysis of Multivariate Probit Models. Biometrika. 1998;85:347–361. [Google Scholar]

- Dunson DB, Park J-H. Kernel Stick-Breaking Processes. Biometrika. 2008;95:307–323. doi: 10.1093/biomet/asn012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ferguson TS. A Bayesian Analysis of Some Nonparametric Problems. The Annals of Statistics. 1973;1:209–230. [Google Scholar]

- Ferguson TS. Prior Distributions on Spaces of Probability Measures. The Annals of Statistics. 1974;2:615–629. [Google Scholar]

- Formann AK. Mixture Analysis of Multivariate Categorical Data With Covariates and Missing Entries. Computational Statistics & Data Analysis. 2007;51:5236–5246. [Google Scholar]

- Gilula Z. Singular Value Decomposition of Probability Matrices: Probabilistic Aspects of Latent Dichotomous Variables. Biometrika. 1979;66:339–344. [Google Scholar]

- Good IJ. Some Applications of the Singular Decomposition of a Matrix. Technometrics. 1969;11:823–831. [Google Scholar]

- Goodman LA. Explanatory Latent Structure Assigning Both Identifiable and Unidentifiable Models. Biometrika. 1974;61:215–231. [Google Scholar]

- Hoff PD. Subset Clustering of Binary Sequences, With an Application to Genomic Abnormalities. Biometrics. 2005;61:1027–1036. doi: 10.1111/j.1541-0420.2005.00381.x. [DOI] [PubMed] [Google Scholar]

- Jara A, Garcia-Zattera MJ, Lesaffre E. A Dirichlet Process Mixture Model for the Analysis of Correlated Binary Responses. Computational Statistics & Data Analysis. 2007;51:5402–5415. [Google Scholar]

- Jensen ST, Liu JS. Bayesian Clustering of Transcription Factor Binding Motifs. Journal of the American Statistical Association. 2008;103:188–200. [Google Scholar]

- Kolda TG. Orthogonal Tensor Decompositions. SIAM Journal on Matrix Analysis and Applications. 2001;23:243–255. [Google Scholar]

- Kottas A, Müller P, Quintana F. Nonparametric Bayesian Modeling for Multivariate Ordinal Data. Journal of Computational and Graphical Statistics. 2005;14:610–625. [Google Scholar]

- Lawrence CE, Reilly AA. An Expectation Maximization (EM) Algorithm for the Identification and Characterization of Common Sites in Unaligned Biopolymer Sequences. Proteins. 1990;7:41–51. doi: 10.1002/prot.340070105. [DOI] [PubMed] [Google Scholar]

- Lazarsfeld PF, Henry NW. Latent Structure Analysis. Boston, MA: Houghton Mifflin; 1968. [Google Scholar]

- Liu JS. The Collapsed Gibbs Sampler in Bayesian Computations With Applications to a Gene Regulation Problem. Journal of the American Statistical Association. 1994;89:958–966. [Google Scholar]

- Liu JS, Neuwald AN, Lawrence CE. Bayesian Models for Multiple Local Sequence Alignment and Gibbs Sampling Strategies. Journal of the American Statistical Association. 1995;90:1156–1170. [Google Scholar]

- Muliere P, Tardella L. Approximating Distributions of Random Functionals of Ferguson–Dirichlet Priors. Canadian Journal of Statistics. 1998;26:283–297. [Google Scholar]

- Nikolajewa S, Pudimat R, Hiller M, Platzer M, Backofen R. BioBayesNet: A Web Server for Feature Extraction and Bayesian Network Modeling of Biological Sequence Data. Nucleic Acids Research. 2007;35:W688–W693. doi: 10.1093/nar/gkm292. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ochi Y, Prentice RL. Likelihood Inference in a Correlated Probit Regression Model. Biometrika. 1984;71:531–544. [Google Scholar]

- Papaspiliopoulos O. Working Paper 08-20. Centre for Research in Statistical Methodology, University Warwick; Coventry, U.K: 2008. A Note on Posterior Sampling From Dirichlet Process Mixture Models. [Google Scholar]

- Papaspiliopoulos O, Roberts GO. Retrospective Markov Chain Monte Carlo Methods for Dirichlet Process Hierarchical Models. Biometrika. 2008;95:169–186. [Google Scholar]

- Quintana FA. Nonparametric Bayesian Analysis for Assessing Homogeneity in k × I Contingency Tables With Fixed Right Margin Totals. Journal of the American Statistical Association. 1998;93:1140–1149. [Google Scholar]

- Quintana FA, Newton MA. Computational Aspects of Non-parametric Bayesian Analysis With Applications to the Modeling of Multiple Binary Sequences. Journal of Computational and Graphical Statistics. 2000;9:711–737. [Google Scholar]

- Scott JG, Berger JO. An Exploration of Aspects of Bayesian Multiple Testing. Journal of Statistical Planning and Inference. 2006;136:2144–2162. [Google Scholar]

- Sethuraman J. A Constructive Definition of Dirichlet Priors. Statistica Sinica. 1994;4:639–650. [Google Scholar]

- Tomovic A, Oakeley EJ. Position Dependencies in Transcription Factor Binding Sites. Bioinformatics. 2007;23:933–941. doi: 10.1093/bioinformatics/btm055. [DOI] [PubMed] [Google Scholar]

- Vogelstein B, Lane D, Levine AJ. Surfing the p53 Network. Nature. 2000;408:307–310. doi: 10.1038/35042675. [DOI] [PubMed] [Google Scholar]

- Walker SG. Sampling the Dirichlet Mixture Model With Slices. Communications in Statistics—Simulation and Computation. 2007;36:45–54. [Google Scholar]

- Walker SG, Gutiérrez-Peña E. Bayesian Parametric Inference in a Nonparametric Framework. TEST. 2007;16:188–197. [Google Scholar]

- Wei CL, et al. A Global Map of p53 Transcription-Factor Binding Sites in the Human Genome. Cell. 2006;124:207–219. doi: 10.1016/j.cell.2005.10.043. [DOI] [PubMed] [Google Scholar]

- Xie X, Geng Z. A Recursive Method for Structural Learning of Directed Acyclic Graphs. Journal of Machine Learning Research. 2008;9:459–483. [PMC free article] [PubMed] [Google Scholar]

- Zhang X, Boscardin WJ, Belin TR. Bayesian Analysis of Multivariate Nominal Measures Using Multivariate Multinomial Probit Models. Computational Statistics & Data Analysis. 2008;52:3297–3708. doi: 10.1016/j.csda.2007.12.012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhou Q, Liu JS. Modeling Within-Motif Dependence for Transcription Factor Binding Site Predictions. Bioinformatics. 2004;20:909–916. doi: 10.1093/bioinformatics/bth006. [DOI] [PubMed] [Google Scholar]