Abstract

Background

Computer-based clinical decision support (CDS) systems have been shown to improve quality of care and workflow efficiency, and health care reform legislation relies on electronic health records and CDS systems to improve the cost and quality of health care in the United States; however, the heterogeneity of CDS content and infrastructure of CDS systems across sites is not well known.

Objective

We aimed to determine the scope of CDS content in diabetes care at six sites, assess the capabilities of CDS in use at these sites, characterize the scope of CDS infrastructure at these sites, and determine how the sites use CDS beyond individual patient care in order to identify characteristics of CDS systems and content that have been successfully implemented in diabetes care.

Methods

We compared CDS systems in six collaborating sites of the Clinical Decision Support Consortium. We gathered CDS content on care for patients with diabetes mellitus and surveyed institutions on characteristics of their site, the infrastructure of CDS at these sites, and the capabilities of CDS at these sites.

Results

The approach to CDS and the characteristics of CDS content varied among sites. Some commonalities included providing customizability by role or user, applying sophisticated exclusion criteria, and using CDS automatically at the time of decision-making. Many messages were actionable recommendations. Most sites had monitoring rules (e.g. assessing hemoglobin A1c), but few had rules to diagnose diabetes or suggest specific treatments. All sites had numerous prevention rules including reminders for providing eye examinations, influenza vaccines, lipid screenings, nephropathy screenings, and pneumococcal vaccines.

Conclusion

Computer-based CDS systems vary widely across sites in content and scope, but both institution-created and purchased systems had many similar features and functionality, such as integration of alerts and reminders into the decision-making workflow of the provider and providing messages that are actionable recommendations.

Keywords: Computer assisted decision making, hospital information system, clinical decision support systems, computerized medical record system, clinical decision support, medicine clinical information system, clinical care, disease management, specific conditions, diabetes mellitus

1. Background

Electronic health records (EHRs) and computerized clinical information systems have been touted by many politicians and academics as part of the cure for the unacceptably high costs and inconsistent quality that characterize health care in the United States. Indeed, electronic health records are a cornerstone of health reform in the American Recovery and Reinvestment Act (ARRA) of 2009 [1]. Moreover, the Health Information Technology for Economic and Clinical Health Act (HITECH) offers incentive payments to eligible health care providers and hospitals to implement “meaningful use” of EHRs, which includes using clinical decision support systems alongside EHRs to improve quality and safety [2]. As the United States moves forward with the widespread implementation of health information technology, it is crucial to understand how clinical decision support systems have been designed and used and how they differ from site to site and among different EHR systems.

Clinical decision support (CDS) systems are manual or computer-based information systems that assist in clinical decision-making. Broadly, clinical decision support “provides clinicians, staff, patients, or other individuals with knowledge and person-specific information, intelligently filtered or presented at appropriate times, to enhance health and health care” [3]. CDS systems have numerous uses, including aiding in diagnosis, prevention, and treatment; checking for drug-drug or drug-allergy interactions; and assisting in the management of patients with chronic medical conditions [4]. CDS systems are often implemented with the goal of increasing efficiency, improving quality of care, and enhancing patient safety.

Studies have shown many benefits of CDS use, including increasing health system efficiency, for instance, by reducing unnecessary utilization; streamlining workflow, such as by offering preformatted orders; improving quality of care, for instance, by increasing preventive care measures and adherence to national care guidelines; and enhancing patient safety, such as by reducing medication errors [5, 6]. Recent systematic reviews have shown that most but not all (64% in one review and 68% in another) studies evaluating CDS systems demonstrate improvements in clinical practice [7, 8]. However, studies have also demonstrated that the impact of a CDS system is dependent on attributes such as design, features, automaticity, and usability [8]. In diabetes care, CDS systems have been shown to improve adherence rates for preventive care measures such as influenza and pneumococcal vaccine utilization, blood glucose control, blood pressure control, and cholesterol control, as well as measures of diabetes control such as hemoglobin A1c (HbA1c), microalbuminuria, retinal examinations, and foot examinations [9, 10]. However, other randomized studies have shown mixed results, in some cases showing improvement on process measures without improvement on outcomes including HbA1c [11], or improvement on certain measures (glycemic control and blood pressure) but not others (cholesterol) [12]. In addition, one small cross-sectional study showed lower performance on diabetes measures in practices that adopted electronic medical records, though it did not look at CDS specifically [13].

Although CDS holds great promise, CDS systems have not been widely adopted in the United States. There is no gold standard CDS system, and many of the institutions that have implemented CDS have forged their own way with little collaboration or advice from others who have trodden the path [3]. Moreover, because CDS systems are often integrated with electronic health record systems or electronic order entry systems, they are compatible with only certain systems and are difficult to share [14]. Although the percentage of physicians in small group or solo practices in the United States has declined in the past decade, over 40% of physicians still practice in groups of 5 physicians or fewer [15]. It is particularly burdensome for these small practices to gather the information technology knowledge necessary to implement CDS and to allocate resources for regular knowledge management, CDS content updating, and trouble-shooting. Many institutions that wish to employ CDS lack the resources or infrastructure to design and launch their own CDS systems and instead purchase CDS content from a vendor, often the vendor of the EHR used by the institution. Thus, much of the literature on CDS systems stems from a small number of academic medical centers and integrated delivery networks with internally developed CDS systems, although a few studies have evaluated commercial systems [3].

In this paper, we conducted an analysis of clinical decision support content in use at six diverse hospitals and health systems throughout the United States in order to gain a better understanding of the similarities and differences in decision support in use in the United States. The six sites are members of the Clinical Decision Support Consortium (CDSC), a group of collaborating institutions with CDS systems whose goal is to “assess, define, demonstrate, and evaluate best practices for knowledge management and clinical decision support in healthcare information technology at scale – across multiple ambulatory care settings and EHR technology platforms” [16]. We focused specifically on CDS content in use for care of patients with diabetes mellitus.

2. Objectives

Computer-based clinical decision support (CDS) systems have been shown to improve quality of care and workflow efficiency in a variety of settings; however, the heterogeneity of CDS content across sites is not well known, the adoption of CDS has been far from universal, and not all institutions that have attempted CDS have been successful. A variety of factors can affect the success (or failure) of CDS [17], but one of the most critical factors is the clinical content that underlies the CDS. At present, most institutions developing CDS also develop the content that underlies their CDS. Though the content is usually based on clinical guidelines and measures, many local decisions are made in the process of translating guidelines into actionable CDS, and the extent to which these local decisions make content differ across sites has not been characterized.

In this study, we aimed to assess the heterogeneity of CDS content across six sites in the domain of diabetes mellitus management in the outpatient setting. We focused on diabetes because it is common in the United States, involves frequent monitoring of both clinical symptoms and laboratory tests, has a treatment, and has a course that can be affected by preventive health measures. Over the course of our analysis, we determined the scope of clinical decision support content in use at the six sites for care of patients with diabetes mellitus, assessed the decision support capabilities in use at these six sites, characterized the CDS infrastructure at the six sites, and determined how the six sites use CDS beyond individual patient care in order to identify characteristics of CDS systems and content that have been successfully implemented in diabetes care.

Understanding CDS heterogeneity is important for two primary reasons. First, such heterogeneity may account, at least in part, for the observation that different institutions have had more and less success with CDS. Second, one potential solution for achieving scalability in clinical decision support is content sharing [18]. The extent and importance of CDS content heterogeneity across institutions will affect how effectively such content can be shared as well as the amount of local customization required [19]. More broadly, understanding such heterogeneity can also inform the work of clinical guideline and measure developers, clinical information system vendors, policymakers, and researchers, all of whom facilitate or influence CDS adoption and use.

3. Methods

We began by collecting decision support content for diabetes mellitus and preventive care from six collaborating CDSC member sites: Kaiser Permanente Northwest (KPNW), the Mid-Valley Independent Physicians Association (MVIPA), Partners HealthCare (Partners), the Regenstrief Institute (Regenstrief), the University of Medicine and Dentistry of New Jersey (UMDNJ) Robert Wood Johnson Medical School (RWJMS), and the Veterans Health Administration (VHA). These sites were chosen as they were the CDSC sites at the time the survey was conducted and agreed to share their CDS content; all are located within the United States. Specifically, we requested all CDS content that applied to patients with diabetes mellitus, and we requested all CDS content for preventive care that included patients with diabetes mellitus. Content that was submitted was current in 2008–2009 when the survey was completed. When available, screen shots were used to supplement data provided in the survey or to provide examples of data provided in the survey. Site representatives submitting content included Clinical Decision Support and Knowledge Management Lead (KPNW); Medical Director of Information Systems (MVIPA); Principal Medical Informatician for Knowledge Management (Partners); Research Scientists, Knowledge Engineer, and Assistant Professor of Clinical Medicine (Regenstrief); Medical Director of Clinical Information Systems (RWJMS); and Research Scientists (VHA). We also surveyed sites on characteristics of their clinical information system and sites, including:

1. Whether the site purchased or developed its CDS system and, if purchased, from whom.
2. Whether sites used guidelines as the basis for creating clinical decision support rules.
3. Whether the inputs to CDS rules were clinical data, such as from an EHR, or billing data.
4. Whether CDS was used at the site in the care of inpatients or outpatients.
5. Which providers at the site used CDS.
6. Whether users of CDS could customize CDS rules and whether certain rules were used only by certain sets of providers.
7. Whether use of CDS was the default at the site or whether users had to activate CDS in order to use it.
8. Whether rules were actionable, such as allowing providers to order medications or tests directly.
9. Whether the default of the rule was to follow the rule.
10. Whether CDS was integrated with an order entry system.
11. Whether CDS was used at time of clinical decision making.
12. Whether CDS was used by the site for pay-for-performance.
13. Whether the site used adherence to clinical decision support prompts as a quality measurement.
14. Whether certain rules were used to warn against ordering unnecessary testing or treatments (such as duplicate orders).
15. Whether sites used CDS as a tool to control costs.

CDS content was submitted by each site in human-readable format (although some was also executable) and a representative from each site assisted in interpreting the content when it was unclear. Sample logic and screen shots of rules from three sites are included in ►Figures 1–3. CDS content applicable to care for patients with diabetes mellitus was requested, which also included content that applied to a broader population of patients including those with diabetes mellitus (for instance, hypertension screening rules that apply to all patients, including those with diabetes mellitus) or content with specific rule adjustments for patients with diabetes mellitus (for example, cholesterol screening rules that apply to all patients but set a different target value for diabetic patients).

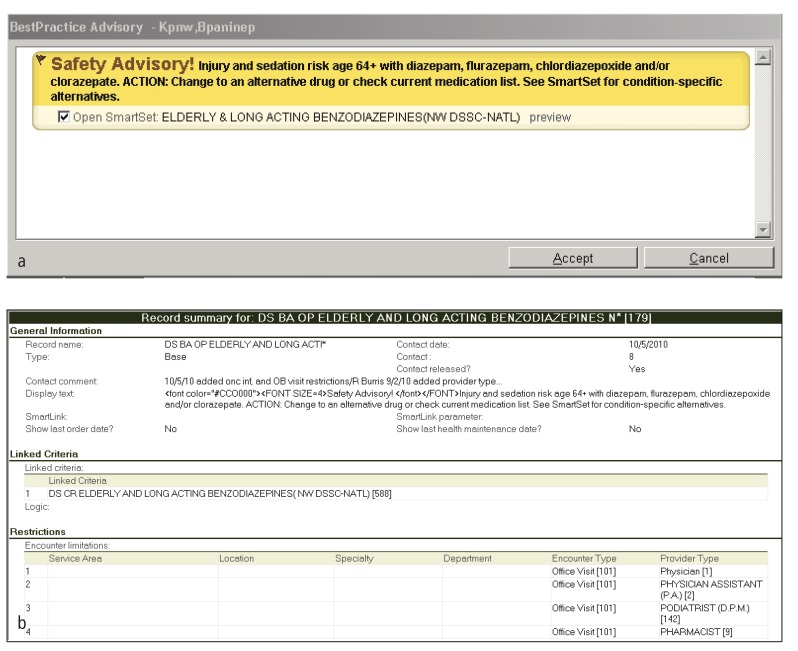

Fig. 1.

Sample CDS content from KPNW. (a) Representative alert from Kaiser Permanente Northwest and (b) representative screen shots of corresponding rule logic. This rule is applicable for patients with and without diabetes who are over 64 years old.

We developed a framework for organizing and evaluating individual rules in our study. The elements of the framework included clinical objective, clinical category (disease assessment, treatment, or prevention), inclusion criteria, exclusion criteria, value threshold(s), message(s) displayed, and recommended treatment(s) or action(s). This framework is demonstrated in ►Table 1 using a sample rule. This framework facilitated across-site comparison of rules with similar clinical objectives as well as comparison of clinical objectives met by rules at different sites.

Table 1.

A sample rule from Partners HealthCare Systems illustrating the parameters compared for submitted CDS content.

| Parameter | Definition | Example Rule (Partners HealthCare) |
|---|---|---|
| Clinical Objective | Goal to be met by rule | • Diabetic patients with evidence of renal disease should be prescribed an ACE inhibitor (ACEI) or angiotensin II receptor blocker (ARB). |
| Clinical Category | Disease Assessment, Treatment, or Prevention | • Prevention (diabetic nephropathy) |
| Inclusion Criteria | Criteria that qualify an individual patient for a particular CDS rule | Include the patient if: • Patient has history of diabetes mellitus OR diabetes mellitus type 1 OR diabetes mellitus type 2 OR diabetic ketoacidosis • Patient >18 years old • Microalbumin/creatinine ratio > 30 • No ACEI and ARB on medication list • ACEI and ARB not on allergy list |
| Exclusion Criteria | Criteria that disqualify an individual patient for a particular CDS rule | Exclude patients with: • Type I Diabetes mellitus • Problem list entry contains a family history, “rule out,” “risk of,” OR “negative” modifier |
| Value Threshold | Cut-off point in measured value for laboratory or other tests, or for time of overdue tests or examinations | Include patients with: • a microalbumin/creatinine ratio <30 |
| Message Displayed | Message displayed to a user when a particular CDS rule is activated | • Display the text: “Patient with DM, microalbumin/creatinine ratio ≥30 and not on ACEI, ARB. Recommend ACEI or ARB.” |
| Recommended Treatment or Action | Action recommended by a particular CDS rule | • Suggest an ACEI or ARB |
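The framework in Table 1 maps naturally onto a small data structure plus an evaluation function. The Python sketch below is illustrative only and is not the Partners implementation. The `Patient` fields and the `evaluate_acei_arb_rule` function are hypothetical names. It also assumes that "No ACEI and ARB on medication list" means neither drug class is listed, and it takes the threshold from the inclusion criterion as printed (ratio > 30). The problem-list modifier exclusion is omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical patient record; field names are illustrative assumptions.
@dataclass
class Patient:
    age: int
    problems: Set[str] = field(default_factory=set)
    medications: Set[str] = field(default_factory=set)  # drug classes
    allergies: Set[str] = field(default_factory=set)    # drug classes
    microalbumin_creatinine_ratio: Optional[float] = None

DIABETES_PROBLEMS = {
    "diabetes mellitus",
    "diabetes mellitus type 1",
    "diabetes mellitus type 2",
    "diabetic ketoacidosis",
}
DRUG_CLASSES = {"ACEI", "ARB"}
MESSAGE = ("Patient with DM, microalbumin/creatinine ratio ≥30 "
           "and not on ACEI, ARB. Recommend ACEI or ARB.")

def evaluate_acei_arb_rule(p: Patient) -> Optional[str]:
    """Return the reminder text if the rule fires, otherwise None."""
    ratio = p.microalbumin_creatinine_ratio
    included = (
        bool(p.problems & DIABETES_PROBLEMS)        # diabetes on problem list
        and p.age > 18
        and ratio is not None and ratio > 30        # assumed value threshold
        and not (p.medications & DRUG_CLASSES)      # on neither drug class
        and not (p.allergies & DRUG_CLASSES)        # allergic to neither class
    )
    # Exclusion as printed in Table 1: type 1 diabetes.
    excluded = "diabetes mellitus type 1" in p.problems
    return MESSAGE if included and not excluded else None
```

Keeping inclusion and exclusion as separate expressions mirrors the framework's columns, which is what makes rules with the same clinical objective comparable across sites.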

4. Results

4.1 Sites

The Clinical Decision Support Consortium is a group of collaborating institutions with CDS systems whose goal is to “assess, define, demonstrate, and evaluate best practices for knowledge management and clinical decision support in healthcare information technology at scale – across multiple ambulatory care settings and EHR technology platforms” [16]. The Clinical Decision Support Consortium (CDSC) collaborating members include Partners HealthCare, Regenstrief Institute, Kaiser Permanente Northwest, Veterans Health Administration, UMDNJ Robert Wood Johnson Medical School, and Mid-Valley Independent Physicians Association. The sites are compared in ►Table 2, and detailed descriptions of the sites are included in the Appendix.

Table 2.

Comparison of the six sites by characteristics of the site and history of use of EHRs and CDS.

| | KPNW | MVIPA | Partners | Regenstrief* | RWJMS | VHA |
|---|---|---|---|---|---|---|
| Location | Pacific Northwest | Salem, OR | Boston, MA | Indianapolis, IN | New Jersey | National |
| Type | Group practice HMO | Community outpatient | Academic | Academic-affiliated | Academic | Government, academic-affiliated |
| Size | 30+ outpatient clinics; 199-bed hospital; multispecialty medical practice of 800 providers | 500+ practitioners; 200+ providers use community EHR | 5000+ providers; 2 academic hospitals, community hospitals, a psychiatric hospital, rehabilitation hospitals | 264-bed county hospital; 8 outpatient community health centers* | 500 physicians in 39 specialty practices | 1,400+ sites of care; 14,700 practitioners |
| Clinical Volume | 472,000 members; 1.5 million outpatient visits/year | 500,000+ ambulatory visits/year | 1.2 million outpatient visits/year; 100,000 admissions/year | 1.2 million outpatient visits/year; 15,000 adult admissions/year* | 400,000 patient visits/year | 60 million outpatient visits/year; 5.5+ million patients |
| Status | Private (Nonprofit) | Independent Physicians’ Association | Private (Nonprofit) | Public | Public | Public |
| Began EHR | 1989 | 2006 | 1969 | 1972 | 2000 | mid-1970s |
| Began CDS | 1997 | 2006 | 1969 | 1974 | 2008 | mid-1990s |

* Regenstrief Institute, which is a research organization, provides computerized physician order entry (CPOE) and CDS for Wishard Health Services. The size data provided are that of Wishard Health Services.

The sites are located across the country, from New England to the Pacific Northwest, and range in size as well as clinical volume. They include both public and private sites as well as academic-affiliated sites, a community outpatient site, a group practice HMO, and a government site. The sites are also diverse in their date of implementation of EHR and CDS, as some sites began to use EHRs four decades ago and others began only in the past 5–10 years.

4.2 General Characteristics of CDS systems

A comparison of the general characteristics of the EHR and CDS systems at each site is presented in tabular format in ►Table 3. Like the sites themselves, there is great variety in the decision support systems implemented, which include both internally developed and purchased or leased vendor systems; systems which focus on inpatients, outpatients, or all patients; and decision support which is actionable or not.

Table 3.

General Characteristics: A comparison of a selection of the general characteristics of CDS infrastructure at the six sites.

| | KPNW | MVIPA | Partners | Regenstrief | RWJMS | VHA |
|---|---|---|---|---|---|---|
| CDS Development Process | | | | | | |
| System developed or purchased | Purchased (Epic Systems Corporation) | Purchased (NextGen) | Mainly developed; some sites purchase | Developed | Purchased (GE Healthcare) | Developed |
| Based on guidelines | Yes | Yes | Yes | Yes | Yes | Yes |
| Applicability | | | | | | |
| Input source (clinical or billing) | Clinical | Clinical | Clinical | Clinical | Clinical | Clinical and Billing (ICD-9) |
| Inpatients, outpatients, or both | Both | Outpatients | Both; different systems for each | Both; certain rules for each | Outpatients | Both |
| Message recipients | All care providers; patients have access to some reminders via a patient portal | All care providers | All care providers; all patients via patient portal | All care providers who use the order entry system | Certain care providers | All care providers; there is also a patient portal with reminders |
| CDS Usability | | | | | | |
| CDS is automatic | Both solicited and unsolicited CDS | Yes | Yes | Yes | Optional | Yes |
| Message is actionable | Yes; can order tests, referrals, patient instructions, and perform other functions | Usually | Only at a few sites | Yes; can order on the page | Some | Yes; can order test directly from the clinical reminder dialog box (preferred method of ordering) |
| Default is to follow rule | No Default | Yes | No | Yes | No | No Default |
| CDS integrated with EHR or order entry | Yes | Yes | Yes | Yes | Yes | Yes |
| CDS used at time and location of decision-making | Yes | Yes | Yes | Yes | Yes | Yes |

4.2.1 Development

The six sites use a mix of self-developed and commercial systems. Partners, Regenstrief, and VHA all developed their own EHRs, while KPNW purchased software from Epic, MVIPA purchased software from NextGen, and RWJMS purchased GE’s Centricity system. These systems represent a broad cross-section of the commercial EHR market: Epic is a leader in the market for large practices such as hospitals and integrated health care networks, NextGen is positioned as a system for smaller practices such as small ambulatory practices and group practices, and Centricity is used across practice settings – small, medium, and large.

Each site reported that it uses guidelines as the basis for its decision support content. Partners uses published guidelines, with local adjustments made by Partners experts; the VHA bases its content on national performance measures; and MVIPA mainly uses content supplied by NextGen, which is, in turn, guidelines-based. KPNW develops all of its content, drawing on a variety of internal and external experts and resources.

4.2.2 Applicability and Usability

All sites use clinical data, including data stored in an EHR, as the source of inputs for CDS rules; only the VHA system additionally uses billing data within its rules. KPNW, Partners, Regenstrief, and VHA apply CDS to both inpatients and outpatients, although Partners uses different systems for each and Regenstrief applies different rules to each. MVIPA and RWJMS use CDS exclusively in the outpatient setting.

The sites vary in the forms of decision support they provide to users; for example, some sites use documentation templates while others provide order sets. However, one commonality is that all sites provide alert or reminder messages to care providers in some form, and all sites except RWJMS show CDS to all care providers. KPNW and Partners also present some CDS content to patients in the form of reminders via an online patient portal. All sites provide role-based customizability, allowing the site to tailor which users see the message, such as providers in certain specialties. However, only MVIPA provides individual customizability, allowing individual users to customize their own reminders. At VHA and KPNW, providers cannot turn off a base set of reminders but can activate optional reminders if they are not automatically generated (VHA) or change reminder settings to see reminders more frequently than the minimum (KPNW). At RWJMS, providers choose whether to use CDS in their clinical practice and which alerts to use.

At KPNW, MVIPA, Partners, Regenstrief, and VHA, CDS is generated automatically (unsolicited), meaning that users do not have to activate the system in order to view the message. Most sites use simple or single-step rules, but Regenstrief and Partners allow a complex chaining of rules, where the output of one rule can serve as the input for another rule. At most sites, the CDS messages are actionable some or all of the time – allowing providers to order tests, medications, or appointments directly through the clinical reminder – but there is heterogeneity among the sites in whether such rules default to performing the recommendation or not. All sites use CDS prospectively at the time of decision-making and integrate CDS with the EHR’s charting or order entry system. Some of the sites also provide asynchronous decision support in the form of registries and reports.

Across sites, rules differ considerably more in their exclusion criteria than in their inclusion criteria, and several sites developed sophisticated exclusion criteria for CDS rules which help to increase the specificity of the alerts. For example, certain KPNW rules exclude hospice patients; some Regenstrief rules exclude patients who have Do Not Resuscitate orders, who are in the intensive care unit, or who are older than 80; and several VHA rules exclude patients whose physicians record an estimated life expectancy of less than 6 months. Such rules avoid prompting providers to order tests or preventive care measures for patients unlikely to benefit from them. Other examples include suppressing reminders for actions that have been ordered recently but are not yet complete (such as pending test results), suppressing reminders that no longer apply to patients (such as eye exams for legally blind patients, or foot exams for patients with bilateral lower extremity amputations), and showing reminders only during restricted times (such as for flu vaccinations).
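The exclusion and suppression patterns described above can be expressed as a single filter applied before a reminder is shown. The following Python sketch merges examples from several sites into one hypothetical function purely for illustration. No site is known to implement it this way. The flag names, the `should_suppress` signature, and the months in `FLU_SEASON_MONTHS` are all assumptions.

```python
from datetime import date
from typing import Set

# Assumed vaccination season (September through March); not taken from any site.
FLU_SEASON_MONTHS = {9, 10, 11, 12, 1, 2, 3}

def should_suppress(reminder: str, patient_flags: Set[str],
                    pending_orders: Set[str], today: date) -> bool:
    """Return True if the reminder should not be shown to the provider."""
    # Patients unlikely to benefit (e.g. hospice) are excluded outright.
    if "hospice" in patient_flags:
        return True
    # The action was ordered recently and the result is still pending.
    if reminder in pending_orders:
        return True
    # The reminder no longer applies to this patient.
    if reminder == "eye_exam" and "legally_blind" in patient_flags:
        return True
    if (reminder == "foot_exam"
            and "bilateral_lower_extremity_amputation" in patient_flags):
        return True
    # The reminder is shown only during a restricted time window.
    if reminder == "influenza_vaccine" and today.month not in FLU_SEASON_MONTHS:
        return True
    return False
```

Each check narrows the population that sees the alert, which is the sense in which exclusion criteria increase alert specificity.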

4.2.3 Message Attributes

At most sites, the message displayed as part of the CDS rule contains a recommendation and is phrased prescriptively, such as “DISEASE MANAGEMENT: This patient is due or due soon for a lipid screening test. ACTION: Use the SmartSet to order” (KPNW). Some of the messages used by Regenstrief are statements followed by an orderable item, such as “Diabetics with proteinuria benefit from therapy with an ACE inhibitor,” followed by the option to place an order, “Benazepril 10 mg po qAM.”

The types of messages presented to clinicians differ widely. For example, KPNW and Partners use messages with commands, such as “Order” or “Refer,” while Regenstrief and VHA messages vary from commands to statements (e.g. “Use of ACE inhibitors has been shown to preserve renal function in diabetics”), and RWJMS messages are suggestions, such as “Would you like to order now?” Notably, messages to avoid or refrain from a course of action were more indirectly phrased than messages that suggested action. For instance, at Regenstrief, a rule for discouraging the use of contrast dye displays the message: “Last Creatinine = (VALUE) and diabetic. Pt at very high risk for CONTRAST-INDUCED ACUTE RENAL FAILURE (unless already on dialysis). Consider potential interventions – E.g., alternative imaging (MRI, Ultrasound), avoiding NSAIDs, IVFs, Acetylcysteine.” Sites vary on whether specific drugs are recommended in messages, with KPNW, Partners, Regenstrief, and VHA providing specific drug names in at least some messages and RWJMS providing drug class suggestions in messages.

Nearly every site offers explanations for or provides supporting evidence for some or all messages. Most sites do not consistently require users to document a reason for not following the recommendation; however, some sites allow users to document contraindications. Whereas most sites use CDS to recommend an action, only Partners also displays messages when no action needs to be taken (e.g. when tests are up to date) as positive feedback to providers and patients.

4.2.4 Quality and Performance Applications

The reported applications of CDS beyond individual patient care vary by site. Partners, RWJMS, and VHA tie CDS explicitly to pay-for-performance measures, and VHA uses reminder completion rates as a factor in bonuses. KPNW does not explicitly link its content to pay-for-performance; however, both its content and pay-for-performance efforts focus on some of the same clinical areas. Most of the sites also enmesh CDS use with quality measurement efforts: KPNW, Partners, RWJMS and VHA do this explicitly, and VHA relies heavily on quality reports and generates reminder reports at the provider, clinic, hospital, regional, and national level. MVIPA links CDS to quality measures upon request by individual practices. Regenstrief participates in a large quality effort called “Quality Health First” but measures quality using database queries and does not explicitly use decision support to drive or measure performance on these quality measures.

CDS was also used for cost control at some sites. For example, Regenstrief emphasizes the costs of expensive drugs in its ordering systems, and KPNW uses order sets and other tools to promote evidence-based and cost-effective care. Regenstrief and KPNW also discourage ordering of unnecessary tests and treatments – for example, alerting the user to reconsider ordering a microalbumin if the patient had a recent microalbumin or their last urinalysis demonstrated proteinuria.

4.3 Clinical Care Rules

Clinical care rules for disease assessment, treatment, and prevention in patients with diabetes mellitus are compared in tabular format in ►Table 4.

Table 4.

Clinical Characteristics: A comparison of CDS content for diabetes mellitus care highlighting the similarities in rules for diabetes disease assessment and preventive care.

| | KPNW | MVIPA | Partners | Regenstrief | RWJMS | VHA |
|---|---|---|---|---|---|---|
| Disease Assessment | | | | | | |
| Diagnose Diabetes | No | No | No | Yes | No | No |
| Assess HbA1c | Yes | Yes | Yes | Yes | Yes | Yes |
| HbA1c Due (Time) | 1 year (fires at 11 months) | 3 months | 3 months (if HbA1c >7%); 6 months (if HbA1c <7%; fires at 5 months) | 6 months | 90 days | 1 year (fires at 9 months) |
| Prevention | | | | | | |
| Aspirin Prophylaxis | Yes | Yes | Yes | Yes | Yes | No |
| Block Use of Contrast in Patients with Renal Failure | No | No | No | Yes | No | No |
| Eye Exam | Yes | Yes | Yes | Yes | Yes | Yes |
| Foot Exam | Yes | Yes | Yes | No | No | Yes |
| Hypertension Screening | Yes | Yes | No | No | No | No |
| Hypertension Treatment | No | No | Yes | Yes | Yes | No |
| Influenza Vaccine | Yes | Yes | Yes | Yes | Yes | Yes |
| Lipid Screening | Yes | Yes | Yes | Yes | Yes | Yes |
| Nephropathy Screening | Yes | Yes | Yes | Yes | Yes | Yes |
| Pneumococcal Vaccine | Yes | Yes | Yes | Yes | Yes | Yes |
| Thyroid Function | No | No | No | Yes | Yes | No |

4.3.1 Disease Assessment

Only one of the sites (Regenstrief) has a rule aiding physicians in the diagnosis of diabetes. In contrast, all sites have a rule to remind providers to assess a patient’s hemoglobin A1c (HbA1c) on an ongoing basis once a diagnosis of diabetes is established, although sites differ on the time interval at which reminders fire. Some remind providers to assess a patient’s HbA1c annually, with the rule firing at one to three months beforehand (KPNW, VHA), while other sites (MVIPA, RWJMS) remind providers to assess HbA1c every 3 months. Partners and Regenstrief have different reminders depending on the patient’s prior HbA1c level; for instance, at Partners, providers are reminded to assess HbA1c every 3 months if a patient’s prior HbA1c is greater than 7% and every 6 months if a patient’s prior HbA1c is less than 7%. Additionally, three of the sites have complex rules involving different courses of action depending on laboratory values, such as blood pressure (Regenstrief), creatinine (Regenstrief), HbA1c (Partners, Regenstrief), LDL cholesterol (Partners, RWJMS), and microalbumin (Regenstrief).
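The value-dependent reminder interval described for Partners can be written as a short function. This is a minimal sketch under stated assumptions, not the site's actual logic. The function names are hypothetical. The source does not say how a prior HbA1c of exactly 7% is handled, so it is treated here as the 6-month case. The 3-month reminder is assumed to fire at 3 months, because Table 4 gives an early firing time only for the 6-month interval.

```python
from datetime import date
from typing import Tuple

def partners_hba1c_schedule(prior_hba1c_percent: float) -> Tuple[int, int]:
    """Return (interval_months, fires_at_months) for the next HbA1c test."""
    if prior_hba1c_percent > 7.0:
        return (3, 3)   # retest every 3 months; firing time assumed equal
    return (6, 5)       # retest every 6 months; reminder fires at 5 months

def months_between(earlier: date, later: date) -> int:
    """Whole calendar months elapsed from `earlier` to `later`."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1     # the final month is not yet complete
    return months

def reminder_fires(prior_hba1c_percent: float,
                   last_test: date, today: date) -> bool:
    """True once enough months have passed since the last HbA1c test."""
    _, fires_at = partners_hba1c_schedule(prior_hba1c_percent)
    return months_between(last_test, today) >= fires_at
```

For example, `reminder_fires(6.5, date(2009, 1, 15), date(2009, 6, 20))` evaluates to `True`, because five whole months have elapsed and the 6-month rule fires at 5 months.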

4.3.2 Treatment

Most sites do not offer treatment rules for diabetes mellitus; only Partners and Regenstrief offer rules suggesting or discouraging treatment options. At both sites, suggested treatments are orderable. Regenstrief rules suggest specific drugs with doses, route, and frequency specified, while most Partners treatment rules suggest treatments at the level of drug class, such as ACE inhibitor, statin, or oral hypoglycemic agent. Both Partners and Regenstrief also have rules to suppress reminders that would suggest a contraindicated treatment or to discourage providers from ordering a contraindicated treatment, such as because of an allergy (Partners) or other condition – for instance, metformin in patients with renal or hepatic impairment, congestive heart failure, or alcoholism (Regenstrief). In addition, CDS at Regenstrief allows for fine-tuning of patient care – for example, by recommending alternative drugs in patients with uncontrolled hypertension who have contraindications to certain medications or are already using other anti-hypertensive medications.
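The suppression behavior described above can be sketched as a simple filter over candidate suggestions. This is a hypothetical illustration rather than either site's implementation: the lookup table, the function name, and the string labels are assumptions, and only the metformin entry is drawn from the conditions named in the text. It is not clinical guidance.

```python
from typing import Dict, List, Set

# Hypothetical lookup table. The metformin entry mirrors the conditions
# named in the text; a real system would draw on a curated knowledge base.
CONTRAINDICATING_CONDITIONS: Dict[str, Set[str]] = {
    "metformin": {
        "renal impairment",
        "hepatic impairment",
        "congestive heart failure",
        "alcoholism",
    },
}


def filter_suggestions(suggested: List[str],
                       patient_conditions: Set[str],
                       patient_allergies: Set[str]) -> List[str]:
    """Drop suggestions the patient is allergic to or has a contraindication for."""
    allowed = []
    for drug in suggested:
        if drug in patient_allergies:
            continue  # suppress because of a documented allergy
        if CONTRAINDICATING_CONDITIONS.get(drug, set()) & patient_conditions:
            continue  # suppress because of a contraindicating condition
        allowed.append(drug)
    return allowed
```

For a patient with congestive heart failure and no allergies, `filter_suggestions(["metformin", "statin"], {"congestive heart failure"}, set())` would leave only the statin suggestion.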

4.3.3 Prevention

A wide variety and depth of preventive care rules are used across sites. These prevention rules apply either specifically to patients with diabetes mellitus or to a cohort of patients that includes patients with diabetes mellitus. All sites have rules for influenza vaccine reminders, lipid screening, pneumococcal vaccine, and nephropathy screening (for instance, microalbumin urine test or creatinine blood or urine test) reminders. Five sites have rules for aspirin prophylaxis and four have rules reminding providers to perform foot exams on diabetic patients. While five sites have rules for hypertension screening or hypertension treatment, none of the sites has rules for both hypertension screening and hypertension treatment. While Regenstrief has a variety of rules to suggest medications and actions based on the blood pressure measurement, Regenstrief reported no rule for hypertension screening because all of the outpatient clinics routinely measure blood pressure as part of the pre-visit registration process.

All sites have a rule to remind providers to order an eye exam in diabetic patients, although the specific rule differs across sites. Most sites use a rule reminding providers to order an eye exam annually. However, KPNW has two separate rules: one rule applies to patients with retinopathy (recommending annual eye exams), while the second rule applies to patients without retinopathy (recommending eye exams every two years). KPNW’s rule is based on evidence that a two-year interval is sufficient for eye exams in diabetic patients with documented absence of retinopathy.
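The two KPNW eye exam rules can be read as a single interval selection keyed on retinopathy status. The Python sketch below illustrates that reading under stated assumptions; the function names and the representation of elapsed time in whole months are hypothetical and do not reflect KPNW's actual rule format.

```python
def eye_exam_interval_months(has_retinopathy: bool) -> int:
    """Annual exams with retinopathy; a two-year interval when it is documented absent."""
    return 12 if has_retinopathy else 24


def eye_exam_due(months_since_last_exam: int, has_retinopathy: bool) -> bool:
    """True once the applicable interval has elapsed since the last exam."""
    return months_since_last_exam >= eye_exam_interval_months(has_retinopathy)
```

Under this sketch, a patient examined 14 months ago is due if retinopathy is documented and not yet due otherwise.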

Several sites have additional unique preventive rules not found at other sites; for example, RWJMS has a rule for annual thyroid function testing and Regenstrief has a rule to block the use of imaging contrast in diabetic patients with creatinine >1.5. All sites have at least six preventive care rules.

5. Discussion

Although CDS systems can improve the quality of care and increase workflow efficiency, they have not been widely adopted in the United States, in part because such systems are difficult and expensive to create and are not easily shareable. In this study, we compared clinical decision support systems in six collaborating sites of the Clinical Decision Support Consortium located throughout the United States. We gathered clinical decision support content within the area of diabetes mellitus disease management as well as preventive care and surveyed institutions on characteristics of their electronic health record (EHR) system and the infrastructure of CDS at their site. We found that the details of CDS systems vary widely, and each site had a unique CDS system with unique rules. However, some commonalities existed between sites that can inform CDS development at institutions that lack CDS or are looking to enhance an existing CDS system.

These commonalities may provide a useful starting point for institutions looking to add CDS to their EHR. For example, such institutions might begin by implementing a CDS system that runs automatically on clinical data at the time of decision-making and that delivers recommendations for action in an actionable form, as these were commonalities shared by most or all of the CDS systems studied. Fortunately for novice institutions that can choose either to buy or build their CDS system, our study showed that purchased and internally developed systems share many of the same features. Purchasing first may be easier and/or more cost-effective; however, internally developed content tended to be more sophisticated and complex, reflecting development and optimization over decades.

All of the sites implemented rules for preventive medicine and all developed content for common clinical problems such as diabetes. This is likely due to multiple factors, including greater availability of practice guidelines, greater penetration of electronic health record utilization among primary care providers versus specialists, more availability of relevant clinical data to author rules, and stronger pay-for-performance and reimbursement pressures to meet primary-care-oriented metrics. Therefore, implementers of CDS in a new site may choose to focus on preventive care first, since many sites in the CDSC have found prevention rules feasible to implement and generally less controversial. The most common prevention rules used by the six sites studied were reminders for lipid screening, eye exam for diabetic patients, influenza vaccination, hypertension screening or treatment, pneumococcal vaccination, nephropathy screening in diabetic patients, foot exam in diabetic patients, and aspirin prophylaxis in patients with coronary artery disease risk factors.

Even in the content areas where sites shared similar rules, differences could be found in the details of the content, such as how conditions were defined (inclusion and exclusion criteria), or the threshold for generating an alert (such as duration between reminders or threshold lab values). This probably reflects local practice patterns or workflows, or areas not precisely defined by literature and evidence. It may also presage significant challenges in expanding CDS nationally or to the care of more complex or nuanced conditions or rules. This variation took two forms: clinical variation (i.e. differences in clinical practice or opinion across the sites) and EHR-related variation. Tools to reduce (inappropriate) clinical variation may include expanded and more detailed clinical practice guidelines as well as performance measurement. EHR-related variation occurred in part because each site used a different EHR system. If collaborators are to share information and knowledge gathered in their EHR systems, it must be known whether the EHR systems are compatible from a data exchange perspective and whether the systems support similar CDS functionality and rule formats [20]. If widespread adoption of CDS systems is to occur nationally, it must be determined which common content elements will be used and what, if any, degree of local variation will be permissible. In addition, the variation among sites included some practices that are not consistent with current practice guidelines; this highlights the need to increase standardization and implementation of evidence-based guidelines and incorporation of guidelines into development of CDS content [21]. Although the development of consistent guidelines is a crucial step, some have argued that much of guideline implementation is necessarily local [19]; thus, determining the correct balance between evidence-based standardization and local tailoring is a key challenge for those developing clinical decision support tools.

Mature forms of decision support differed between sites and were more commonly used at sites that have been using CDS for longer periods of time. These included making CDS messages available to patients via an online portal, using CDS for both inpatients and outpatients, allowing customizability on either a role-based or individual level, excluding inapplicable patients, preventing rules from firing for initiated but incomplete actions (e.g. pending tests), and developing rules suggesting different courses of action depending on thresholds of laboratory values.

One strength of this study is the diversity of sites surveyed. Sites were located across the country and included academic, community-based, government-run, and HMO institutions with annual clinical volume ranging from 400,000 to 60,000,000 outpatient visits. An additional strength was the close collaboration among the sites to share CDS rules and information about their sites. A final strength of this study is the wide variety of metrics examined as related to diabetes care, including rules for disease assessment, management, and complication prevention.

Our study also has some important limitations. First, we relied on self-report and focused primarily on a single condition (diabetes mellitus) and related CDS. It is possible that the degree of similarity and difference observed for diabetes-related content would have differed had we focused on another content area. Also, our report is descriptive in nature, and we have not correlated the characteristics of CDS with clinical outcomes, costs of care, provider compliance, ease of use, or minimum time necessary to operate. Thus, we can report only the most and least common features or rules among sites but not which were associated with the best patient outcomes or the greatest provider compliance.

In the future, we hope to correlate attributes of CDS systems with clinical outcomes, costs, user acceptance of CDS, patient and provider satisfaction, and provider compliance. Moreover, future studies could explore the differences in individual rules and determine the best approach for implementing individual rules in a common format. Finally, we are currently undertaking efforts within the CDSC to develop standard, Consortium-wide CDS content which could be deployed at multiple sites. We hope to learn more about the feasibility of this standardization as well as the clinical and technical complexities entailed.

6. Conclusions

Computer-based CDS systems vary widely across sites in content and scope as well as infrastructure. However, despite the differences in infrastructure and the ways in which CDS was used across sites, both institution-created and purchased systems had similar features and functionality, such as integration of alerts and reminders into the decision-making workflow of the provider and providing messages that are actionable recommendations. In order to achieve the promise of continued improvement in patient care, clinical decision support must address the variation in rule logic and functionality that currently exists across sites so that future content can be easily shared and standards can be developed. Future guidelines about the management of clinical entities should consider definitive and unambiguous recommendations that can be incorporated into clinical decision support, especially for common, treatable diseases and preventive health measures. As health care practitioners in the United States move towards implementing electronic health records and clinical decision support, it is imperative to ensure that decision support relies on evidence-based guidelines but allows for local variation and institution standards.

Clinical Relevance

This study provides information to physician practices and clinical sites that are looking to begin implementation of CDS but lack the resources to build their own system, or that wish to enhance their existing system, as we have shown that purchased systems have clinical uses similar to those of institution-created systems. Practices looking to implement CDS may choose to look for systems that run automatically on clinical data at the time of decision-making, provide messages that are recommendations for action, and make these messages actionable, as these were commonalities shared by most or all of the CDS systems studied. This study also provides insight into ways in which meaningful use of EHRs can be applied in the care of patients with diabetes mellitus, such as providing preventive care reminders and reminders to order hemoglobin A1c testing.

Conflicts of Interest

The authors declare that they have no conflicts of interest in the research presented.

Protection of Human and Animal Subjects

No human or animal subjects were used in this study.

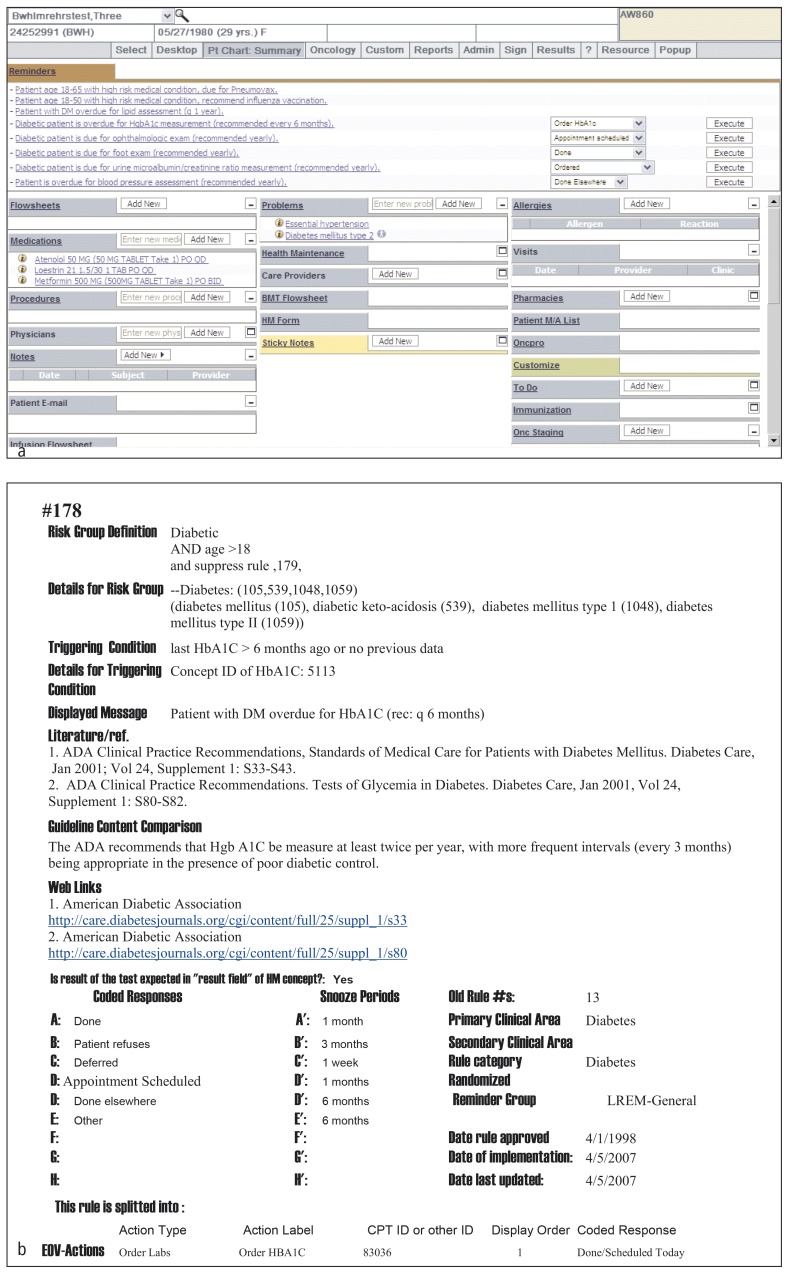

Fig. 2.

Sample CDS content from Partners. (a) Diabetes and other reminder screen shot from Partners and (b) sample diabetes rule logic from Partners.

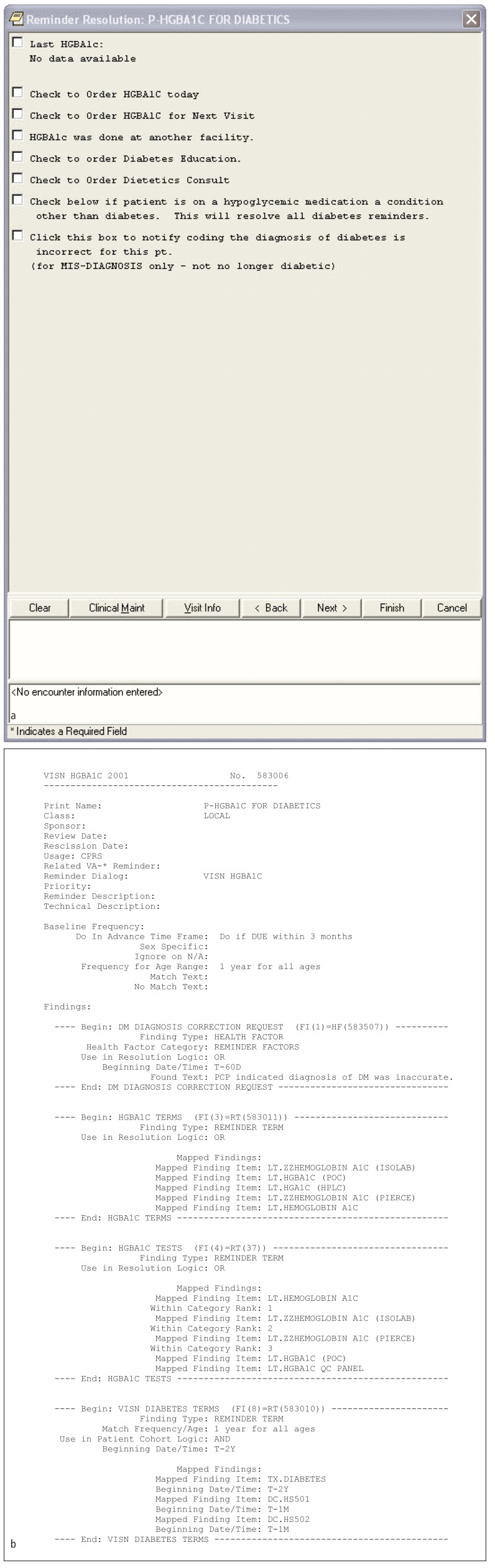

Fig. 3.

Sample CDS content from VHA. (a) Diabetes reminder screen shot from VHA and (b) corresponding rule logic.

Acknowledgments

We thank Christie Brandt, Jason Miller, Jason Saleem, and Ben Tucker for contributing to the work. This project was funded under contract HHSA290200810010 from the Agency for Healthcare Research and Quality (AHRQ), U.S. Department of Health and Human Services. The opinions expressed in this document are those of the authors and do not reflect the official position of AHRQ or the U.S. Department of Health and Human Services. Funding was also received from the Office of Enrichment Programs, Harvard Medical School.

References

- 1. American Recovery and Reinvestment Act of 2009, Pub. L. No. 111–5

- 2. Blumenthal D, Tavenner M. The "meaningful use" regulation for electronic health records. N Engl J Med 2010; 363(6): 501–504

- 3. Osheroff JA, Teich JM, Middleton B, Steen EB, Wright A, Detmer DE. A roadmap for national action on clinical decision support. J Am Med Inform Assoc 2007; 14(2): 141–145

- 4. Zai AH, Grant RW, Estey G, Lester WT, Andrews CT, Yee R, et al. Lessons from implementing a combined workflow-informatics system for diabetes management. J Am Med Inform Assoc 2008; 15(4): 524–533

- 5. Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Annals of internal medicine 2006; 144(10): 742–752

- 6. Goud R, de Keizer NF, ter Riet G, Wyatt JC, Hasman A, Hellemans IM, et al. Effect of guideline based computerised decision support on decision making of multidisciplinary teams: cluster randomised trial in cardiac rehabilitation. BMJ (Clinical research ed.) 2009; 338: b1440

- 7. Garg AX, Adhikari NK, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005; 293(10): 1223–1238

- 8. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ (Clinical research ed.) 2005; 330(7494): 765

- 9. Weber V, Bloom F, Pierdon S, Wood C. Employing the electronic health record to improve diabetes care: a multifaceted intervention in an integrated delivery system. Journal of general internal medicine 2008; 23(4): 379–382

- 10. Montori VM, Dinneen SF, Gorman CA, et al. The impact of planned care and a diabetes electronic management system on community-based diabetes care: the Mayo Health System Diabetes Translation Project. Diabetes care 2002; 25(11): 1952–1957

- 11. Mullan RJ, Montori VM, Shah ND, et al. The diabetes mellitus medication choice decision aid: a randomized trial. Archives of internal medicine 2009; 169(17): 1560–1568

- 12. O’Connor PJ, Sperl-Hillen JM, Rush WA, et al. Impact of electronic health record clinical decision support on diabetes care: a randomized trial. Annals of family medicine 2011; 9(1): 12–21

- 13. Crosson JC, Ohman-Strickland PA, Hahn KA, et al. Electronic medical records and diabetes quality of care: results from a sample of family medicine practices. Annals of family medicine 2007; 5(3): 209–215

- 14. Sittig DF, Wright A, Osheroff JA, Middleton B, Teich JM, Ash JS, et al. Grand challenges in clinical decision support. Journal of biomedical informatics 2008; 41(2): 387–392

- 15. Center for Studying Health System Change: Tracking Report No 18: Results from the Community Tracking Study [Internet]. c2007 [cited 2009 Oct 30]. Available from: http://www.hschange.com/CONTENT/941/

- 16. CDS Consortium: CDSC Overview Document [Internet]. c2008 [cited 2009 Jul 1]. Available from: http://www.allhealth.org/chcrep/1024docs_ww/CDSCOverview.pdf

- 17. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003; 10(6): 523–530

- 18. Wright A, Sittig DF. A four-phase model of the evolution of clinical decision support architectures. International journal of medical informatics 2008; 77(10): 641–649

- 19. Waitman LR, Miller RA. Pragmatics of implementing guidelines on the front lines. J Am Med Inform Assoc 2004; 11(5): 436–438

- 20. Wright A, Sittig DF, Ash JS, Sharma S, Pang JE, Middleton B. Clinical decision support capabilities of commercially-available clinical information systems. J Am Med Inform Assoc 2009; 16(5): 637–644

- 21. Graham R, Mancher M, Wolman DM, Greenfield S, Steinberg E; Committee on Standards for Developing Trustworthy Clinical Practice Guidelines; Institute of Medicine. "Clinical Practice Guidelines We Can Trust." Washington DC: National Academy of Sciences, 2011