Abstract

Objective

The purpose of this research was to determine whether negative effects of hearing loss on recall accuracy for spoken narratives can be mitigated by allowing listeners to control the rate of speech input.

Design

Paragraph-length narratives were presented for recall under two listening conditions in a within-participants design: presentation without interruption (continuous) at an average speech rate of 150 words per minute; and presentation interrupted at periodic intervals at which participants were allowed to pause before initiating the next segment (self-paced).

Study sample

Participants were 24 adults ranging from 21 to 33 years of age. Half had age-normal hearing acuity and half had mild-to-moderate hearing loss. The two groups were comparable for age, years of formal education, and vocabulary.

Results

When narrative passages were presented continuously, without interruption, participants with hearing loss recalled significantly fewer story elements, both main ideas and narrative details, than those with age-normal hearing. The recall difference was eliminated when the two groups were allowed to self-pace the speech input.

Conclusion

Results support the hypothesis that the listening effort associated with reduced hearing acuity can slow processing operations and increase demands on working memory, with consequent negative effects on accuracy of narrative recall.

Keywords: Narrative recall, hearing acuity, self-paced listening

There is no question that even a mild-to-moderate hearing loss can cause a listener to miss or mishear words in fluent discourse, with consequent effects on comprehension or recall of what has been heard. Some years ago, Rabbitt (1968, 1991) suggested a potentially more subtle effect of hearing loss: even when speech is audible, the effort required for its recognition may draw on cognitive resources that might otherwise be available for encoding what has been heard in memory. One might thus see poorer recall for a degraded speech signal relative to clear speech, even when it can be demonstrated that in both cases the to-be-recalled words could be correctly identified.

This so-called effortfulness hypothesis has attracted considerable attention in aging research because of the concern that effortful processing attendant to age-related hearing loss may multiply effects of older adults’ reduced working memory and executive resources to the detriment of effective memory for speech input (Baldwin & Ash, 2011; McCoy et al, 2005; Murphy et al, 2000; Pichora-Fuller, 2003; Rabbitt, 1991; Stewart & Wingfield, 2009; Surprenant, 2007; Wingfield et al, 2005).

Although this issue is clearly important for understanding the special problems of older adults with hearing loss, one may ask whether there are equally subtle effects on recall of speech content in healthy young adults with mild-to-moderate hearing loss. Specifically, one may ask whether reduced hearing acuity can turn what is ordinarily an automatic and rapid process of speech comprehension and memory encoding in young adults into an effortful process that slows processing time, with negative effects on recall of what has been heard. This question takes on special importance because of reports of a significant increase in the incidence of hearing loss among young adults (e.g. Shargorodsky et al, 2010). Added to this concern is the finding that university students with hearing loss are not always aware of their loss, and hence of its cognitive consequences (Le Prell et al, 2011; Widen et al, 2009).

In the present paper we examine recall of spoken discourse by young adult listeners with normal hearing acuity and by a second group with mild-to-moderate hearing loss. In reporting participants’ recall accuracy we took advantage of a formalized representational system for prose that organizes narrative elements into a hierarchical array indexing the relative importance of different elements to the text as a whole (Kintsch & van Dijk, 1978, 1983; Meyer, 1985). This class of formal models has been validated by demonstrations of a “level-of-detail effect” in text recall, whereby narrative elements that are higher in the hierarchy (the main ideas represented in the text) are better remembered than units lower in the hierarchy (details that embellish or add subsidiary specifics to the main ideas). Although the effect may be moderated by, for example, unexpectedly good recall of details that are salient for particular individuals (Anderson & Pichert, 1978; Mandel & Johnson, 1984), one typically sees better recall for main ideas expressed in a narrative than for more minor detail (e.g. Dixon et al, 1982; Mandel & Johnson, 1984; Stine & Wingfield, 1987, 1990; Zelinski et al, 1984). Thus, so long as the speech is audible to the listener, one would expect to see this effect of narrative structure on participants’ recall. Our question, however, is whether effects of reduced hearing acuity will be revealed in poorer recall for speech materials, even when it can be shown that the speech itself is loud enough for successful identification of the stimulus words, albeit potentially supported by linguistic (top-down) context supplementing reduced sensory (bottom-up) evidence (Benichov et al, 2012; Rönnberg et al, 2011; Wingfield et al, 2006).

To the extent that such a recall difference is due to slowed processing for those with hearing loss, one would predict that allowing more time for the listener to process what has been heard would improve recall success to a differentially greater degree for those with hearing loss relative to those with normal acuity. To test this possibility, listeners with normal hearing acuity and those with mild-to-moderate hearing loss heard recorded passages either without interruption at a normal speech rate, or with intermittent interruptions where the listener was allowed to control the initiation of the next segment of the speech input. In this way, listeners were able to pace themselves through a recorded passage on a segment-by-segment basis, calling for each segment with a keypress only when they felt ready to hear the next segment. This method, sometimes referred to as an auditory moving window technique (Ferreira et al, 1996), would thus allow a direct test of whether perceptual slowing can be an accompaniment of hearing loss, with concomitant effects on speech recall, and, as a corollary, whether allowing additional processing time can ameliorate this negative effect of hearing loss on recall.

Method

Participants

Twenty-four participants, six men and 18 women, with ages ranging from 21–33 years (M = 27.2 years, SD = 3.4) took part in this study. Of these, 12 participants had clinically normal hearing, with a mean pure-tone average (PTA; 500, 1000, 2000 Hz) of 6.0 dB HL (SD = 3.7), and 12 had a mild-to-moderate hearing loss, with a mean PTA of 41.0 dB HL (SD = 10.0) in the better ear (Hall & Mueller, 1997). Left and right ear acuity was symmetrical for both groups (mean difference = 0.6 dB for the normal-hearing group, and 1.1 dB for the hearing-loss group).

The two groups were similar in age, years of formal education, and vocabulary. The normal-hearing group ranged in age from 21 to 32 years (M = 26.4 years, SD = 3.3) and the hearing-loss group from 22 to 33 years (M = 28.0 years, SD = 3.5), t(22) = 1.14, p = 0.27. The normal-hearing group had an average of 18.8 years of formal education (SD = 2.0), and the hearing-loss group 18.2 years (SD = 2.2), t(22) = 0.68, p = 0.51. Mean scores on the vocabulary test of the Wechsler Adult Intelligence Scale (WAIS-III; Wechsler, 1997) were 59.3 (SD = 8.0) for the normal-hearing group and 61.9 (SD = 7.0) for the hearing-loss group, t(22) = 0.87, p = 0.39. All participants reported themselves to be in good health, and all spoke English as their first language. None of the participants reported regular use of a hearing aid; one participant reported irregular use.

Stimulus materials

The stimuli were 10 spoken narrative passages, averaging 166 words in length, taken from Dixon et al (1989). Each passage contained 13 sentences and an average of 86 propositions. A proposition represents the relationship between two or more words in a text, typically consisting of a predicate and an argument or, in some cases, a single modifier. As defined by Kintsch and van Dijk (1978), the proposition is thus the smallest unit of text meaning. The propositions in each narrative were organized into the hierarchical structure described above to represent the relative importance of each proposition to the narrative as a whole. For example, to understand the sentence “Tom was exhausted, but proud to have finished the longest race of his life”, the listener must represent ideas at three levels: the main idea is the proposition Tom finished a race; an intermediate level is represented by the qualifying propositions exhausted, proud, and longest; and the most specific details are represented by the modifier of his life and the conjunction but.
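To make the hierarchy concrete, the example sentence can be encoded as a small data structure. This is an illustrative sketch only: the predicate-argument tuple notation and the names `PROPOSITIONS` and `group_by_level` are our own, and the level assignments simply follow the three-level grouping given in the text rather than the full Dixon et al (1989) scoring template.

```python
from collections import defaultdict

# Hypothetical encoding of "Tom was exhausted, but proud to have finished the
# longest race of his life". Level I = main idea, Level II = qualifying
# propositions, Level III = finer detail, as grouped in the text above.
PROPOSITIONS = [
    ("I", ("FINISH", "Tom", "race")),
    ("II", ("EXHAUSTED", "Tom")),
    ("II", ("PROUD", "Tom")),
    ("II", ("LONGEST", "race")),
    ("III", ("OF-HIS-LIFE", "race")),
    ("III", ("BUT", "EXHAUSTED", "PROUD")),
]


def group_by_level(propositions):
    """Return a dict mapping each hierarchy level to its propositions."""
    levels = defaultdict(list)
    for level, prop in propositions:
        levels[level].append(prop)
    return dict(levels)
```

Grouping the example in this way yields one Level I proposition, three Level II propositions, and two Level III propositions.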

All passages were recorded by a female speaker of American English at an average speaking rate of 150 words per minute (wpm) and digitized (16-bit resolution, 44,100 Hz sampling rate) to computer sound files using SoundEdit 16 (Macromedia, Inc., San Francisco, USA) on a Macintosh computer (Apple, Cupertino, USA). Sound level was normalized to ensure equivalent mean amplitude across all stimulus passages.

For the self-paced condition computer speech-editing was used to divide each passage into segments that would interrupt the speech input after major syntactic clause and sentence boundaries. The mean length of segments was five words (SD = 1.8). The scripting software stopped the speech passages at the end of each segment, with each subsequent segment initiated by a keypress.

Procedure

Each participant heard all 10 passages: five in a continuous, uninterrupted fashion, and five presented in a segment-by-segment fashion for self-pacing. Participants were told that they would hear spoken passages and, when each passage was finished, they were to recall aloud as much of the narrative as they could, as accurately as possible. They were told that some of the passages would be presented to allow them to self-pace through the passage. In this condition the passage would stop periodically, and they were to press an indicated key on the computer keyboard to initiate the next segment. Participants were told that they were free to continue through the passage at their own rate, with the goal being to recall as much of the passage as possible when it was finished.

Participants were told that their recall responses were being recorded for later scoring. No mention was made that in the self-paced condition the computer would also be recording the duration of their pauses between the end of each segment and their keypress to initiate the next segment. Stimuli were presented using PsyScope scripting software (Cohen et al, 1993).
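The self-paced (auditory moving window) procedure can be summarized as a simple presentation loop. The sketch below is not PsyScope code; `play` and `wait_for_keypress` are hypothetical callbacks standing in for audio playback and keyboard input, and it assumes, as described above, that a pause is timed from the end of a segment to the keypress requesting the next one, with no pause recorded after the final segment.

```python
import time
from typing import Callable, List, Sequence


def run_self_paced(
    segments: Sequence[str],
    play: Callable[[str], None],
    wait_for_keypress: Callable[[], None],
    clock: Callable[[], float] = time.monotonic,
) -> List[float]:
    """Present segments one at a time and return pause durations in ms.

    `play` is assumed to block until the segment has finished playing.
    A pause runs from the end of one segment to the keypress that
    initiates the next; none is recorded after the last segment.
    """
    pauses_ms = []
    for i, segment in enumerate(segments):
        play(segment)
        if i == len(segments) - 1:
            break  # passage finished; recall would begin here
        segment_end = clock()
        wait_for_keypress()
        pauses_ms.append((clock() - segment_end) * 1000.0)
    return pauses_ms
```

Injecting the clock as a parameter lets the loop be exercised with a simulated clock instead of real audio and keyboard events.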

Participants were tested individually in a sound-isolated testing room with stimuli presented binaurally at 68 dB HL via Eartone 3A (E-A-R Auditory Systems, Aero Company, Indianapolis, USA) insert earphones coupled to a GSI 61 (Grason-Stadler, Inc., Madison, USA) clinical audiometer. Continuous and self-paced conditions were presented in blocks, with block order counterbalanced within participant groups so that, by the end of the experiment, each condition order had been presented an equal number of times. The particular passages heard in each condition were also varied across participants so that, by the end of the experiment, each passage had been heard an equal number of times in the continuous and self-paced conditions.

Audibility of stimuli

An audibility control was conducted to ensure that the speech materials could be correctly identified by both participant groups at the presentation level used in the experiment. For this purpose participants heard three 9- to 12-word sentences recorded by the same speaker and at the same sound level used for the main experiment. The listener’s task was to “shadow” the speech, repeating aloud each word as it was heard, thus minimizing a memory component. All participants in both groups achieved 100% shadowing accuracy.

It is important to emphasize that successful identification of target words does not imply that participants with hearing loss had as full access to the information carried by the words’ sound patterns as those with normal hearing acuity. Indeed, it is the thesis of this work that, although perceptual success was attained by those with reduced hearing acuity, this was accomplished at the cost of greater cognitive effort for perceptual resolution than for those with normal acuity (McCoy et al, 2005; Pichora-Fuller, 2003; Stewart & Wingfield, 2009; Surprenant, 2007).

Results

Recall accuracy

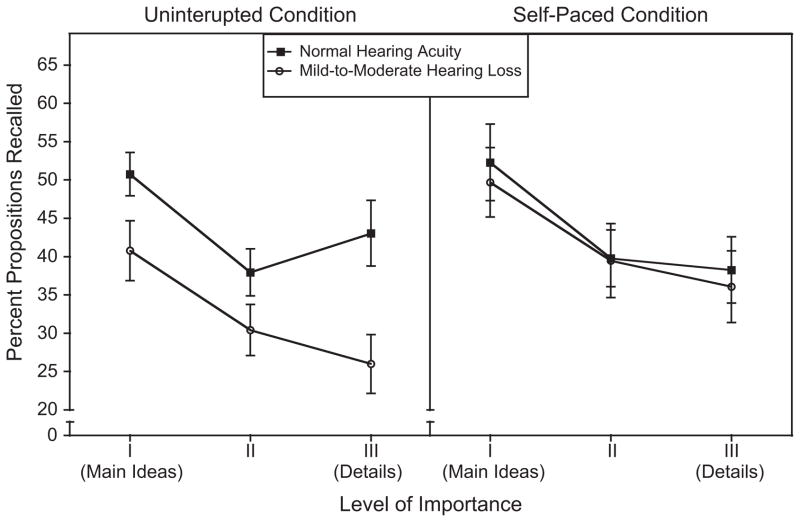

The left panel of Figure 1 shows recall accuracy for the two hearing acuity groups when passages were heard in the standard uninterrupted condition. Data are plotted as the percentage of propositions recalled for each of three levels of importance of the propositions to the narrative as a whole. Propositional representations, as previously defined (e.g. Kintsch, 1988; Kintsch & van Dijk, 1978, 1983), were constructed for each narrative. Although the propositions in these passages may be divided into as many as six detail levels ranging from main ideas to very fine detail, as described in Dixon et al (1989), for clarity we combined these finer gradations into three general levels: (I) main ideas, (II) mid-level information, and (III) finer detail. The right panel of Figure 1 shows recall performance for the two participant groups when speech was presented in the self-paced condition.

Figure 1.

Percentage of main ideas (I), middle level information (II), and details (III) recalled when passages were heard continuously without interruption (left panel) and when presented in a self-paced condition (right panel). Data are shown for participants with normal hearing acuity and those with a mild-to-moderate hearing loss. Error bars are one standard error.

A proposition was scored as correct if the participant’s recall accurately contained the core meaning of the proposition (Stine et al, 1986; Turner & Green, 1978). For each proposition recalled, the participant received either full or half credit depending on the completeness of the idea unit recalled (Turner & Green, 1978). All scoring was conducted on written transcripts of audio recordings of participants’ responses without the scorer’s knowledge of either the presentation condition or the hearing acuity of the participant. A sample of passages was scored independently by a second scorer, with good scoring agreement.
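The full- and half-credit rule can be expressed as a per-level percentage score. This is a minimal sketch assuming that full credit is worth 1, half credit 0.5, and that the percentage for a level is the credit earned divided by the number of propositions at that level; the names `CREDIT` and `percent_recalled_by_level` are hypothetical.

```python
from collections import defaultdict

# Assumed credit values for each scoring judgment.
CREDIT = {"full": 1.0, "half": 0.5, "none": 0.0}


def percent_recalled_by_level(scored):
    """scored: iterable of (level, judgment) pairs, one per proposition.

    Returns {level: percentage of the available credit that was earned}.
    """
    earned = defaultdict(float)
    available = defaultdict(int)
    for level, judgment in scored:
        earned[level] += CREDIT[judgment]
        available[level] += 1
    return {lvl: 100.0 * earned[lvl] / available[lvl] for lvl in available}
```

For instance, a hypothetical recall with one fully recalled Level I proposition and Level II judgments of full, half, and none would score 100% at Level I and 50% at Level II.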

The data shown in the two panels of Figure 1 were submitted to a 2 (Hearing acuity: Normal hearing, Hearing loss) × 2 (Presentation condition: Continuous, Self-paced) × 3 (Level of detail: Main ideas, Mid-level information, Details) mixed-design analysis of covariance (ANCOVA). In this analysis, presentation condition and level of detail were within-participant variables, and hearing acuity was a between-participants variable.

As indicated previously, although neither mean age nor mean WAIS vocabulary scores differed significantly between the two hearing acuity groups, there was some within-group variability in both factors. An examination of the data showed no overall significant relationship between participant age and recall performance. As we note in detail below, however, there was a positive relationship between WAIS vocabulary and recall performance. For this reason, WAIS vocabulary was entered into the analysis as a covariate, a conventional method for increasing the sensitivity of the statistical tests. WAIS vocabulary scores were centered (i.e. the mean was subtracted from individual scores) to support their use as a covariate in a repeated-measures design (Delaney & Maxwell, 1981).
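Centering is a one-line operation. In this sketch (the function name `center` is hypothetical), each score has the sample mean subtracted. Because both groups contained 12 participants, the grand mean of the individual WAIS scores equals the average of the two group means (60.6), so centering implies group means of about −1.3 (normal hearing) and +1.3 (hearing loss).

```python
from statistics import fmean


def center(scores):
    """Subtract the sample mean from every score (grand-mean centering)."""
    m = fmean(scores)
    return [s - m for s in scores]
```

Applied to the two group means, `center([59.3, 61.9])` returns values of approximately −1.3 and 1.3, which sum to zero, as centered scores always do.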

Visual inspection of Figure 1 shows a striking interaction between presentation condition and hearing acuity: hearing acuity had a major effect on recall in the continuous presentation condition that virtually disappeared in the self-paced condition. Although the ANCOVA did not yield a significant main effect of presentation condition, F(1,21) = 2.35, p = 0.14, ηp² = 0.10, there was a significant main effect of hearing acuity group, F(1,21) = 4.53, p = 0.05, ηp² = 0.18. This pattern was moderated by a marginal Hearing acuity × Presentation condition interaction, F(1,21) = 3.37, p = 0.08, ηp² = 0.14. We probed this suggested interaction with simple-effects tests conducted without covariates. The normal-hearing group’s overall level of recall was similar for the continuous (M = 43.9%, SD = 10.8) and self-paced (M = 43.4%, SD = 14.0) conditions, t(11) = 0.11, p = 0.92. In contrast, the hearing-loss group’s recall was significantly better in the self-paced condition (M = 41.7%, SD = 15.5) than in the continuous presentation condition (M = 32.4%, SD = 11.7), t(11) = 2.60, p = 0.03. Most important, although the normal-hearing and hearing-loss groups differed significantly in the continuous presentation condition, t(22) = 2.51, p = 0.02, this difference was absent in the self-paced condition, t(22) = 0.28, p = 0.78.
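The between-group comparisons can be approximately reproduced from the reported summary statistics. This sketch assumes a pooled-variance independent-samples t-test (the text does not state which variant was used), and small discrepancies are expected because the means and SDs are rounded. The within-group t(11) values cannot be recovered this way, since they depend on the unreported correlation between conditions.

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Independent-samples t statistic (pooled variance) from summary stats.

    Returns (t, df).
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df
```

With the continuous-condition values (43.9, 10.8 vs. 32.4, 11.7; n = 12 each) this gives t(22) ≈ 2.50, and with the self-paced values it gives t(22) ≈ 0.28, close to the reported 2.51 and 0.28.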

The level of detail factor (main ideas, mid-level information, details) produced a strong main effect in the ANCOVA, as would be expected from the narrative recall literature (e.g. Rubin, 1978; Stine & Wingfield, 1987, 1990), with participants generally recalling main ideas better than mid-level information or details, F(2,42) = 48.10, p < 0.01, ηp² = 0.70. Neither the Hearing acuity group × Level of detail interaction, F(2,42) = 2.23, p = 0.12, ηp² = 0.10, nor the Level of detail × Presentation condition interaction, F(2,42) = 0.86, p = 0.43, ηp² = 0.04, was significant. There was, however, a marginal three-way Hearing acuity × Level of detail × Presentation condition interaction, F(2,42) = 2.47, p = 0.10, ηp² = 0.11. To explore this possible three-way interaction, we conducted separate ANCOVAs on the data for the continuous and the self-paced conditions.
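The partial eta-squared values reported with each F ratio follow directly from the F statistic and its degrees of freedom, via ηp² = F·df_effect / (F·df_effect + df_error). The sketch below (function name hypothetical) can serve as a consistency check on the reported effect sizes.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)
```

For example, F(1,21) = 4.53 gives ηp² ≈ 0.18 and F(2,42) = 48.10 gives ηp² ≈ 0.70, matching the values above.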

For the continuous presentation condition (left panel of Figure 1), this analysis confirmed a significant main effect of hearing acuity on recall performance, F(1,21) = 14.02, p < 0.01, ηp² = 0.40, a significant main effect of level of detail, F(2,42) = 26.98, p < 0.01, ηp² = 0.56, and a significant Level of detail × Hearing acuity interaction, F(2,42) = 4.66, p = 0.02, ηp² = 0.18. The source of this interaction was that, while the hearing-loss group showed a progressive decline in recall from main ideas to details, as typically found in studies of narrative recall (e.g. Rubin, 1978; Stine & Wingfield, 1987, 1990), the normal-hearing group showed unexpectedly good recall of the Level III details relative to the mid-level information. That is, while the linear contrast within the interaction was only marginal, F(1,21) = 3.22, p = 0.09, ηp² = 0.13, the quadratic contrast was significant, F(1,21) = 11.62, p < 0.01, ηp² = 0.36. The reason for the absence of a monotonic decline across level of detail in the uninterrupted condition for participants with normal hearing acuity is not clear. This pattern was not due to effects of specific passages, as the counterbalanced design ensured that the same passages were employed in all conditions.
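The linear and quadratic contrasts can be illustrated with the standard orthogonal polynomial weights for three equally spaced levels. The text does not give the coefficients used; treating the three detail levels as equally spaced, with weights (−1, 0, 1) and (1, −2, 1), is our assumption. A steady decline yields a negative linear score and zero quadratic score, whereas a dip at Level II (as in the normal-hearing group's continuous-condition recall) yields a positive quadratic score.

```python
# Assumed orthogonal polynomial weights for levels (I, II, III).
LINEAR = (-1, 0, 1)
QUADRATIC = (1, -2, 1)


def contrast_score(means, weights):
    """Weighted sum of the three level means (I, II, III)."""
    if len(means) != len(weights):
        raise ValueError("one mean per level is required")
    return sum(w * m for w, m in zip(weights, means))
```

With hypothetical means of (60, 40, 20), the linear score is −40 and the quadratic score is 0; with hypothetical means of (60, 30, 40), the quadratic score is 40.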

The recall data for the self-paced condition also showed a significant main effect of level of detail, F(2,42) = 30.15, p < 0.01, ηp² = 0.59. A linear trend was present, F(1,21) = 33.82, p < 0.01, ηp² = 0.62, reflecting a progressive decrease in recall from main ideas to finer detail. A quadratic trend was also present, F(1,21) = 16.88, p < 0.01, ηp² = 0.45, reflecting a smaller decrease in accuracy from mid-level information to finer detail than from main ideas to mid-level information. Most important, however, in the self-paced condition there was no longer a significant main effect of hearing acuity on recall, F(1,21) = 0.29, p = 0.60, ηp² = 0.01, nor was there a Hearing acuity × Level of detail interaction, F(2,42) = 0.24, p = 0.79, ηp² = 0.01.

Effect of vocabulary on recall accuracy

In addition to their use as a covariate, WAIS vocabulary scores provided a useful perspective on the role of hearing acuity. For the continuous presentation condition, there was a significant positive correlation between WAIS vocabulary and participants’ recall accuracy collapsed across level of detail, r = 0.45, df = 22, p = 0.03. A multiple regression analysis with hearing acuity group and WAIS vocabulary scores entered as independent variables showed that hearing acuity (beta = −0.57, t = 3., p < 0.01) and WAIS vocabulary (beta = 0.56, t = 3.65, p < 0.01) each had an independent significant association with recall performance in the continuous presentation condition (R² = 0.52; F(2,21) = 11.55, p < 0.01). A preliminary regression analysis including hearing acuity group, WAIS vocabulary, and the Group × WAIS product vector as independent variables showed that the association between WAIS scores and recall in the continuous condition did not differ significantly between the two groups: the product vector’s beta weight was −0.043, t = −0.28, df = 20, p = 0.79. Consistent with this, bivariate correlations showed a similar strength of association between WAIS vocabulary scores and recall performance for the two acuity groups: r = 0.74, df = 10, p < 0.01, for the normal-hearing group, and r = 0.51, df = 10, p = 0.09, for the hearing-loss group.
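The significance of each correlation rests on the conversion t = r·√df / √(1 − r²), with df = n − 2, evaluated against the t distribution. This sketch (function name hypothetical) computes only the t statistic; the comparisons below use the standard two-tailed .05 critical values of 2.074 (df = 22) and 2.228 (df = 10).

```python
import math


def r_to_t(r, df):
    """t statistic for testing a Pearson correlation against zero (df = n - 2)."""
    return r * math.sqrt(df) / math.sqrt(1 - r**2)
```

For the full sample, r = 0.45 with df = 22 gives t ≈ 2.36, above 2.074; for the hearing-loss group alone, r = 0.51 with df = 10 gives t ≈ 1.88, below 2.228. This illustrates why a moderately large correlation can fall short of significance in a group of 12.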

By contrast with recall in the continuous presentation condition, when participants were allowed to self-pace the passages, the data failed to show a significant correlation between WAIS vocabulary and recall accuracy for either the normal (r = 0.22, df = 10, p = 0.501) or impaired hearing acuity group (r = 0.37, df = 10, p = 0.234) separately, or for both groups considered together, r = 0.27, df = 22, p = 0.195. Consistent with this, the comparable multiple regression analysis for the self-pacing condition failed to show a significant contribution of WAIS vocabulary to recall performance, beta = 0.295, t = 1.39, p = 0.179. Nor, as implied by the right panel of Figure 1 and the previous ANCOVA, was there a significant effect of hearing acuity on recall accuracy, beta = −0.114, t = 0.54, p = 0.598 (R² = 0.088, F(2,21) = 1.01, p = 0.382).

Pause durations

Individuals’ pause durations in the auditory moving window technique typically show that listeners pause longer at sentence boundaries than at within-sentence clause boundaries, reflecting their sensitivity to the syntactic structure of the speech input (Fallon et al, 2006; Ferreira et al, 1996; Titone et al, 2000; Waters & Caplan, 2001). This general pattern was replicated in the present study: the normal-hearing group paused for a mean of 993 ms (SD = 498 ms) following clause boundaries and 1034 ms (SD = 565 ms) following sentence boundaries, and the hearing-loss group paused for a mean of 1316 ms (SD = 850 ms) following clause boundaries and 1532 ms (SD = 1003 ms) following sentence boundaries. A 2 (Hearing acuity: Normal hearing, Hearing loss) × 2 (Boundary type: Sentence boundary, Clause boundary) ANOVA confirmed a significant main effect of boundary type, F(1,22) = 6.30, p = 0.02, ηp² = 0.22. The two hearing acuity groups did not differ reliably in overall pause times, F(1,22) = 1.81, p = 0.19, ηp² = 0.08, nor was there a significant Boundary type × Hearing acuity interaction, F(1,22) = 2.90, p = 0.10, ηp² = 0.12. Examination of the data showed no significant relationship of either participants’ ages or WAIS vocabulary scores to pause times.
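The structure of the 2 × 2 pause-time design can be made explicit from the reported cell means. This descriptive sketch uses only the means given above (names hypothetical). Because each group had 12 participants and every participant contributed to both boundary types, unweighted marginal means equal weighted ones. The difference between the two groups' boundary effects (175 ms) is the quantity tested by the interaction term, which was not significant.

```python
# Cell means (ms) from the text, keyed by (group, boundary type).
PAUSE_MS = {
    ("normal", "clause"): 993, ("normal", "sentence"): 1034,
    ("loss", "clause"): 1316, ("loss", "sentence"): 1532,
}


def boundary_effect(group):
    """Sentence-minus-clause pause difference for one group (ms)."""
    return PAUSE_MS[(group, "sentence")] - PAUSE_MS[(group, "clause")]


def marginal_mean(key_index, value):
    """Unweighted mean over cells whose key matches `value` at `key_index`."""
    cells = [ms for key, ms in PAUSE_MS.items() if key[key_index] == value]
    return sum(cells) / len(cells)
```

The boundary effect is 41 ms for the normal-hearing group and 216 ms for the hearing-loss group; the boundary-type marginal means are 1154.5 ms (clause) and 1283.0 ms (sentence).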

Discussion

Beginning with Rabbitt’s (1968) seminal paper on degraded sensory information and recall of verbal materials, there have been numerous suggestions in the literature that the need for perceptual effort in the face of hearing loss or poor listening conditions may come at the cost of cognitive resources that might otherwise be available for encoding what has been heard in memory (McCoy et al, 2005; Murphy et al, 2000; Pichora-Fuller, 2003; Rabbitt, 1991; Surprenant, 1999, 2007; Wingfield et al, 2005). This effect is not limited to memory tasks: it also appears in comprehension accuracy for spoken sentences, where it is differentially greater for sentences whose meaning is expressed with complex syntax (Stewart & Wingfield, 2009; Wingfield et al, 2006), and in performance on non-language cognitive tasks presented in an auditory mode (Baldwin & Ash, 2011). Indeed, a similar argument has been made for effects of degraded vision on reading times for written texts and on performance in visually presented cognitive tasks, suggesting that the effect generalizes beyond audition (Amick et al, 2003; Cronin-Golomb et al, 2007; Dickinson & Rabbitt, 1991; Gao et al, 2011).

Subjective reports of cognitive effort associated with speech comprehension in the face of hearing loss are common, from school-age children in the classroom (Hicks & Tharpe, 2002) to older adults in everyday listening (Fellinger et al, 2007; Pichora-Fuller, 2003). In spite of the universality of this experience and a long history in cognitive psychology (Kahneman, 1973; Titchener, 1908), the concept of effort remains poorly defined in the cognitive literature. It continues to serve primarily as a descriptive term, often defined only operationally in terms of its effects. Such effects appear, for example, as declines in secondary-task performance while attending to speech degraded by noise (Fraser et al, 2010; Mackersie et al, 2000; Sarampalis et al, 2009) or by hearing loss (Hicks & Tharpe, 2002; Tun et al, 2009), and in physiological responses such as increased pupil dilation as a reflection of perceptual or cognitive effort (Kramer et al, 1997; Piquado et al, 2010; Zekveld et al, 2010). In the following sections we consider potential mechanisms that may underlie the effect of hearing loss on recall performance in the continuous (uninterrupted) and self-paced presentation conditions.

Narrative recall with a continuous presentation

There were two key findings in the present study. The first is that young, otherwise healthy adults with a mild-to-moderate hearing loss can show significant negative effects of listening effort on recall for meaningful speech, even when an audibility check confirmed participants’ ability to correctly identify words recorded by the same speaker, and presented at the same intensity level. That is, when a narrative passage was presented at 150 wpm, a very natural speech rate in daily conversation (Wingfield, 1996), those with reduced hearing acuity recalled less information, both in terms of detail and in terms of the main ideas represented in the passages, than did a group of young adults with normal hearing acuity who were similar in age, years of formal education and general vocabulary knowledge.

A notable feature of speech comprehension is the speed with which it is accomplished, necessitated by speech rates that average between 140 and 180 wpm in ordinary conversation and can be faster still, as with a television or radio newsreader working from a prepared script (Stine et al, 1990). This rapid processing is made possible in part by the way in which context-driven (“top-down”) information interacts with “bottom-up” information extracted from the sensory input as the speech unfolds in time (e.g. Marslen-Wilson & Welsh, 1978; Marslen-Wilson & Zwitserlood, 1989). For example, words heard within a linguistic context can often be recognized within 200 ms of their onset, or when less than half of their full acoustic duration has been heard. Words heard out of context require, on average, only 130 ms more (Grosjean, 1980, 1985; Marslen-Wilson, 1984; Tyler, 1984; Wingfield et al, 1997), owing to the rapid reduction in the number of words that share the same onset phonology and stress pattern with the target word as the amount of onset information progressively increases (Lindfield et al, 1999; Tyler, 1984; Wayland et al, 1989). This cohort model of word recognition is similar to the neighborhood activation model of word recognition (Luce & Pisoni, 1998; Luce et al, 1990), except for its emphasis on word onsets.
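The shrinking of the word-initial cohort can be illustrated with a toy lexicon. This sketch makes simplifying assumptions: letters stand in for phonemes, stress pattern and context are ignored, and the six-word lexicon and function names are hypothetical. The recognition point is taken to be the number of onset segments needed before the target is the only remaining candidate.

```python
# Toy lexicon; letters are a stand-in for phonemes.
LEXICON = ("captain", "capital", "captive", "candle", "cat", "dog")


def cohort(prefix, lexicon):
    """Words in the lexicon consistent with the onset heard so far."""
    return [w for w in lexicon if w.startswith(prefix)]


def recognition_point(word, lexicon):
    """Onset length at which `word` is the only candidate left.

    Returns None if the word is still ambiguous when fully heard.
    """
    for k in range(1, len(word) + 1):
        if cohort(word[:k], lexicon) == [word]:
            return k
    return None
```

For "captain", the cohort shrinks from five candidates after "c" to three after "cap", two after "capt", and one after "capta", giving a recognition point of 5 segments, before the word is complete.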

It is important to emphasize that the ability of the participants with hearing loss to repeat back words with 100% accuracy in the audibility check should not imply that these participants had as full access to the information carried by the sound patterns of the speech as those with normal hearing acuity. Even when word recognition is successful, it is known that the word-onset duration necessary for correct word identification increases with decreasing acoustic clarity of the speech (Nooteboom & Doodeman, 1984). This would, in turn, slow the rate of lexical identification and require, in many cases, retrospective identification based on the context that follows the ambiguous acoustic signal, putting additional demands on working memory (Connine et al, 1991; Grosjean, 1985; Wingfield et al, 1994).
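The claim that reduced acoustic clarity lengthens the onset needed for identification can be illustrated by extending the toy cohort example. Here a degraded segment is marked "?" and treated as compatible with any segment. This masking scheme is purely our illustrative assumption and is not a model of any particular hearing loss; the lexicon and function names are again hypothetical.

```python
LEXICON = ("captain", "capital", "captive", "candle", "cat", "dog")


def matches(heard, word):
    """True if `word` is consistent with the heard onset.

    A '?' marks a segment too degraded to identify and matches anything.
    """
    return len(word) >= len(heard) and all(
        h == "?" or h == c for h, c in zip(heard, word)
    )


def recognition_point(word, lexicon, masked=frozenset()):
    """Onset length at which only `word` remains; positions in `masked` are degraded."""
    for k in range(1, len(word) + 1):
        heard = "".join("?" if i in masked else word[i] for i in range(k))
        if [w for w in lexicon if matches(heard, w)] == [word]:
            return k
    return None
```

With a clear input, "captain" is identified after 5 segments. If the fifth segment is degraded, "captive" remains a candidate, and identification is delayed to the sixth segment.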

Even for those with normal hearing acuity, the ordinarily rapid and seemingly effortless process of speech comprehension is presumed to shift toward increased reliance on controlled processing and top-down contextual information when the quality of the speech input is degraded (Alain et al, 2004; Morton, 1969; Wingfield et al, 2006). This general principle has been formalized in the Ease of Language Understanding (ELU) model of Rönnberg and colleagues (Rönnberg, 2003; Rönnberg et al, 2008, 2010, 2011). This model proposes that with clear speech, a successful match between the phonological input and the stored phonological representation of the stimulus word in the mental lexicon results in automatic (implicit) lexical activation. In the case of a degraded input, whether due to hearing loss or noisy listening conditions, the resulting mismatch leads to a shift from automatic to explicit controlled processing, in which phonological, lexical, and semantic representations retrieved from long-term memory interact in working memory with the information extracted from the sensory input. The effect is a priming of phonological processing and a facilitation of speech comprehension (Rönnberg et al, 2010). Such a process explains why recognition accuracy for words heard in isolation by listeners with hearing loss tends to underestimate their recall of sentential material (Stewart & Wingfield, 2009). Consistent with the ELU model, these cognitive operations might be expected to take a toll on working memory, and in the present experiment they may underlie the poorer recall performance of the hearing-loss group relative to the normal-hearing group.

An unqualified postulate that linguistic context and information retrieved from long-term semantic memory are engaged only in the absence of a clear acoustic signal, however, may be overly restrictive, because a perfect match between the sound patterns of spoken words and phonological representations in the mental lexicon is rare. In part this is due to allophonic variation resulting from coarticulation that depends on the surrounding syllabic context, compounded by the under-articulation common in natural speech. Because of these factors, speech perception is most often, if not invariably, context-dependent (cf. Hunnicutt, 1985; Lieberman, 1963; Lindblom et al, 1992; Pollack & Pickett, 1963), even when speakers are attempting to speak artificially clearly (Cox et al, 1987; Picheny et al, 1986).

The detrimental effect of hearing loss on narrative recall in this experiment is consistent with other findings in the literature, such as the demonstration that changing the presentation level can affect working memory span in young adults, and especially in older adults with already limited working memory capacity (Baldwin & Ash, 2011). It has been suggested that reducing perceptual effort and its cognitive demands through the use of hearing aids, speech perception training, and communication strategies may have a positive effect on cognitive performance in older adults with hearing impairment (Kuchinsky et al, 2011). Randomized controlled trials of hearing aid intervention on cognitive function have begun to appear in the literature, although to date with mixed outcomes (cf. Mulrow et al, 1990; van Hooren et al, 2005).

Narrative recall with a self-paced presentation

The second key finding in the present study was that the effect of reduced hearing acuity on recall for both main ideas and narrative details disappeared when listeners were allowed to slow the rate of the speech input by self-pacing through the recorded passages. Whereas the normal-hearing participants showed virtually no change in recall performance, the hearing-loss group improved their recall significantly when allowed more processing time. This “temporal rescue” of recall accuracy is consistent with the argument that at least one effect of the listening effort associated with hearing loss is to slow the processing of the speech input, with negative consequences for encoding what has been heard in memory. Indeed, computational modeling, supported by behavioral simulations, has identified biologically plausible neural mechanisms that could account for slowed activation of a target representation when reduced sensory evidence allows greater competition from other, partially activated, lexical possibilities (Miller & Wingfield, 2010).
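The competition account above can be illustrated with a toy simulation. The sketch below is not the Miller and Wingfield (2010) model; it is a generic leaky, mutually inhibitory accumulator network, and every parameter value (input strengths, leak, inhibition, threshold, number of competitors) is a hypothetical choice made for illustration only. A target word unit and several partially activated competitor units each integrate their input over time, and reduced sensory evidence is modeled simply as a weaker input to the target unit.

```python
def time_to_threshold(target_input, competitor_input, n_competitors=3,
                      leak=1.0, inhibition=0.2, threshold=1.0,
                      dt=0.001, max_time=50.0):
    """Euler-integrate a toy leaky, mutually inhibitory accumulator network.

    The target unit stands for the word actually spoken; the competitor
    units stand for partially activated lexical neighbors. All parameter
    values are hypothetical. Returns the simulated time at which the target
    first reaches `threshold`, or None if it never does within `max_time`.
    """
    target = 0.0
    competitors = [0.0] * n_competitors
    t = 0.0
    while t < max_time:
        total = target + sum(competitors)
        # Each unit leaks toward zero and is inhibited by the summed
        # activity of every *other* unit in the network.
        d_target = target_input - leak * target - inhibition * (total - target)
        d_comps = [competitor_input - leak * c - inhibition * (total - c)
                   for c in competitors]
        # Activations are clipped at zero (no negative activation).
        target = max(0.0, target + dt * d_target)
        competitors = [max(0.0, c + dt * d) for c, d in zip(competitors, d_comps)]
        t += dt
        if target >= threshold:
            return t
    return None


if __name__ == "__main__":
    clear = time_to_threshold(target_input=2.0, competitor_input=0.5)
    degraded = time_to_threshold(target_input=1.4, competitor_input=0.5)
    print(f"clear input:    target reaches threshold at t = {clear:.3f}")
    print(f"degraded input: target reaches threshold at t = {degraded:.3f}")
```

With these illustrative settings the degraded target takes longer to reach threshold than the clear one, both because it receives less input and because a weaker target leaves its competitors more active, so it receives more inhibition on the way up. If the target input is too weak for its equilibrium activation to reach threshold (for example, `time_to_threshold(0.5, 0.5)`), the function returns `None`.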

Within this general framework, one can entertain several non-exclusive reasons why slowing the rate of speech input improved recall performance for the listeners with hearing loss to a differentially greater degree than for the listeners with normal hearing acuity.

The first possibility is that self-pacing allowed more time for perceptual encoding and for a best-fit mapping of the degraded input against the cohort of lexical possibilities sharing overlapping phonology with the target word (cf. Luce & Pisoni, 1998; Marslen-Wilson, 1990; Morton, 1969). The second possibility is that self-pacing allowed more time for inferential processes to operate within the cognitive system (Rönnberg et al, 2010), whether this involved drawing on semantic memory so that linguistic context could facilitate word recognition, carrying out syntactic operations and determining thematic roles within sentences, or forming deductive inferences at the discourse level. We cannot say with certainty how much each of these possibilities contributed to the ameliorating effect of self-pacing; it is likely that both played a part in eliminating the recall difference between the normal-hearing and hearing-impaired participants in the self-paced condition.

It could be argued that participants’ WAIS vocabulary scores serve as a rough index of an individual’s ability to rapidly access information in long-term semantic memory to support on-line processing. Some support for this suggestion came from the multiple regression analysis conducted on the recall data for the continuous presentation condition, which revealed a significant contribution of WAIS vocabulary scores to recall performance independent of the effects of hearing acuity. The absence of an independent contribution of vocabulary scores to recall in the self-paced condition is not inconsistent with this possibility: allowing participants to self-pace the input would give individuals with poor hearing acuity, as well as those with normal acuity, more than adequate time to retrieve and use information from semantic memory to facilitate processing and recall.
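One way to express the “independent contribution” logic described above is a hierarchical regression: enter hearing acuity first, then add vocabulary, and ask how much additional variance in recall the second step explains (ΔR²). The sketch below is a minimal illustration under stated assumptions, not a reconstruction of the analysis reported in this study: it assumes NumPy, one hearing-acuity score per participant (e.g., a PTA) and one WAIS vocabulary score per participant, and the function names `r_squared` and `r2_change` are hypothetical.

```python
import numpy as np


def r_squared(predictors, y):
    """Proportion of variance in y explained by an OLS fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), predictors])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residuals = y - design @ beta
    ss_res = residuals @ residuals
    ss_tot = ((y - y.mean()) ** 2).sum()
    return 1.0 - ss_res / ss_tot


def r2_change(recall, step1, step2):
    """Hierarchical regression: fit the step-1 predictors, then add step 2.

    Returns (R² for step 1, R² for steps 1 + 2, ΔR², F statistic for the
    change). Assumes the full model does not fit perfectly (R² < 1).
    """
    y = np.asarray(recall, dtype=float)
    x1 = np.asarray(step1, dtype=float).reshape(len(y), -1)  # e.g., hearing acuity
    x2 = np.asarray(step2, dtype=float).reshape(len(y), -1)  # e.g., vocabulary
    full = np.column_stack([x1, x2])
    r2_reduced = r_squared(x1, y)
    r2_full = r_squared(full, y)
    n, k_full, k_added = len(y), full.shape[1], x2.shape[1]
    # F test for the increment in R² due to the added predictors.
    f_change = ((r2_full - r2_reduced) / k_added) / ((1.0 - r2_full) / (n - k_full - 1))
    return r2_reduced, r2_full, r2_full - r2_reduced, f_change
```

Given per-participant arrays (hypothetically named `recall`, `pta`, and `vocab`), `r2_change(recall, pta, vocab)` returns the variance added by vocabulary over and above hearing acuity. With one predictor at each step, the F for the change has 1 and n − 3 degrees of freedom, and a p-value can be obtained with `scipy.stats.f.sf`.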

Assuming that participants with reduced hearing acuity rely to a greater extent on top-down information drawn from semantic memory, one might have expected a stronger correlation between WAIS vocabulary scores and recall performance in the continuous presentation condition for the hearing-loss group than for those with normal hearing acuity. As we saw, this was not the case, and the question must await further research.

A final, and seemingly less likely, account of the effectiveness of self-pacing is that the additional time between input segments simply allowed more time for rehearsal. Although rehearsing a speech segment during the interval between its end and the initiation of the next segment might be beneficial, there is no reason to expect rehearsal to be less beneficial for participants with normal hearing than for those with hearing loss, yet only the hearing-loss group showed a recall benefit from self-pacing.

Conclusions

It is important to note that the temporal amelioration of the negative effect of hearing loss on memory for speech materials was obtained for listeners with mild-to-moderate hearing loss. With more severe hearing impairment, the reduced availability of bottom-up information might be expected to set upper limits on the extent to which additional time and/or cognitive effort could overcome the recall deficit. The present results nevertheless indicate that slight, mild, or more severe hearing loss among university-age young adults, whose incidence may be increasing (Shargorodsky et al, 2010), can have subtle but significant effects on recall performance for verbal materials, even when those materials pass a screen for audibility. The terms “cognitive hearing science” (Arlinger et al, 2009) and “cognitive audiology” (Jerger, cited in Fabry, 2011, p. 20) have begun to appear in the literature to emphasize the importance of taking into account how cognitive processes interact with hearing acuity in communicative behavior and, hence, in potential remediation strategies. The present results fall within this rubric.

Acknowledgments

This research was supported by NIH Grant AG019714 from the National Institute on Aging. We also acknowledge support from the W.M. Keck Foundation. We thank Nicole Amichetti for help in the preparation of this manuscript. Tepring Piquado is now at the Department of Cognitive Sciences, University of California at Irvine. Jonathan I. Benichov is now at the Department of Biology, City College of the City University of New York.

Abbreviations

- ANCOVA: Analysis of covariance

- PTA: Pure-tone average

- WAIS: Wechsler Adult Intelligence Scale

- wpm: Words per minute

Footnotes

Declaration of interest: The authors report no conflicts of interest. The authors alone are responsible for the content and writing of the paper.

References

- Alain C, McDonald KL, Ostroff JM, Schneider B. Aging: A switch from automatic to controlled processing of sounds? Psychol Aging. 2004;19:125–133. doi: 10.1037/0882-7974.19.1.125.

- Amick MM, Cronin-Golomb A, Gilmore GC. Visual processing of rapidly presented stimuli is normalized in Parkinson’s disease when proximal stimulus strength is enhanced. Vision Res. 2003;43:2827–2835. doi: 10.1016/s0042-6989(03)00476-0.

- Anderson RC, Pichert JW. Recall of previously unrecallable information following a shift in perspective. J Verbal Learn Verbal Behav. 1978;17:1–12.

- Arlinger S, Lunner T, Lyxell B, Pichora-Fuller MK. The emergence of cognitive hearing science. Scand J Psychol. 2009;50:371–384. doi: 10.1111/j.1467-9450.2009.00753.x.

- Baldwin CL, Ash IK. Impact of sensory acuity on auditory working memory span in young and older adults. Psychol Aging. 2011;26:85–91. doi: 10.1037/a0020360.

- Benichov J, Cox LC, Tun PA, Wingfield A. Word recognition within a linguistic context: Effects of age, hearing acuity, verbal ability, and cognitive function. Ear Hear. 2012;33:250–256. doi: 10.1097/AUD.0b013e31822f680f.

- Cohen J, MacWhinney B, Flatt M, Provost J. PsyScope: A new graphic interactive environment for designing psychology experiments. Behav Res Methods Instrum Comput. 1993;25:257–271.

- Connine CM, Blasko DG, Hall M. Effects of subsequent sentence context in auditory word recognition: Temporal and linguistic constraints. J Mem Lang. 1991;30:234–250.

- Cox RM, Alexander GC, Gilmore C. Intelligibility of average talkers in typical listening environments. J Acoust Soc Am. 1987;81:1598–1608. doi: 10.1121/1.394512.

- Cronin-Golomb A, Gilmore GC, Neargarder S, Morrison SR, Laudate TM. Enhanced stimulus strength improves visual cognition in aging and Alzheimer’s disease. Cortex. 2007;43:952–966. doi: 10.1016/s0010-9452(08)70693-2.

- Delaney HD, Maxwell SE. On using analysis of covariance in repeated measures designs. Multivariate Behav Res. 1981;16:105–123. doi: 10.1207/s15327906mbr1601_6.

- Dickinson CVM, Rabbitt PMA. Simulated visual impairment: Effects on text comprehension and reading speed. Clin Vis Sci. 1991;6:301–308.

- Dixon RA, Hultsch DF, Hertzog C. A Manual of 25 Three-tiered Structurally Equivalent Texts for Use in Aging Research. CRGCA Tech. Rep. No. 2. Victoria, Canada: University of Victoria, Department of Psychology; 1989.

- Dixon RA, Simon EW, Nowak CA, Hultsch DF. Text recall in adulthood as a function of information, input modality, and delay interval. J Gerontol. 1982;37:358–364. doi: 10.1093/geronj/37.3.358.

- Fabry D. Jim Jerger by the letters. Audiol Today. 2011 Jan–Feb:19–29.

- Fallon M, Peelle JE, Wingfield A. Spoken sentence processing in young and older adults modulated by task demands: Evidence from self-paced listening. J Gerontol Psychol Sci. 2006;61B:P10–P17. doi: 10.1093/geronb/61.1.p10.

- Fellinger J, Holzinger D, Gerich J, Goldberg D. Mental distress and quality of life in the hard of hearing. Acta Psychiatr Scand. 2007;115:243–245. doi: 10.1111/j.1600-0447.2006.00976.x.

- Ferreira F, Henderson JM, Anes MD, Weeks PA, McFarlane MD. Effects of lexical frequency and syntactic complexity on spoken-language comprehension: Evidence from the auditory moving window technique. J Exp Psychol Learn Mem Cogn. 1996;22:324–335.

- Fraser S, Gagne JP, Alepins M, Dubois P. Evaluating the effort expended to understand speech in noise using a dual-task paradigm: The effects of providing visual speech cues. J Speech Lang Hear Res. 2010;53:18–33. doi: 10.1044/1092-4388(2009/08-0140).

- Gao X, Stine-Morrow EAL, Noh SR, Eskew RT Jr. Visual noise disrupts conceptual integration in reading. Psychon Bull Rev. 2011;18:83–88. doi: 10.3758/s13423-010-0014-4.

- Grosjean F. Spoken word recognition processes and the gating paradigm. Percept Psychophys. 1980;28:267–283. doi: 10.3758/bf03204386.

- Grosjean F. The recognition of words after their acoustic offset: Evidence and implications. Percept Psychophys. 1985;38:299–310. doi: 10.3758/bf03207159.

- Hall J, Mueller G. Audiologist Desk Reference. San Diego, USA: Singular; 1997.

- Hicks CB, Tharpe AM. Listening effort and fatigue in school-age children with and without hearing loss. J Speech Lang Hear Res. 2002;45:573–584. doi: 10.1044/1092-4388(2002/046).

- Hunnicutt S. Intelligibility versus redundancy: Conditions of dependency. Lang Speech. 1985;28:47–56.

- Kahneman D. Attention and Effort. Englewood Cliffs, USA: Prentice-Hall; 1973.

- Kintsch W. The role of knowledge in discourse comprehension: A construction-integration model. Psychol Rev. 1988;95:163–182. doi: 10.1037/0033-295x.95.2.163.

- Kintsch W, van Dijk TA. Toward a model of text comprehension and production. Psychol Rev. 1978;85:363–394.

- Kramer SE, Kapteyn TS, Festen JM, Kuik DJ. Assessing aspects of auditory handicap by means of pupil dilation. Audiology. 1997;36:155–164. doi: 10.3109/00206099709071969.

- Kuchinsky SE, Eckert MA, Dubno JR. The eyes are the windows to the ears: Pupil size reflects listening effort. Audiol Today. 2011 Jan–Feb:56–59.

- Le Prell CG, Hensley BN, Campbell KCM, Hall JW, Guire K. Evidence of hearing loss in a ‘normally-hearing’ college-student population. Int J Audiol. 2011;50:S21–S31. doi: 10.3109/14992027.2010.540722.

- Lieberman P. Some effects of semantic and grammatical context on the production and perception of speech. Lang Speech. 1963;6:172–187.

- Lindblom B, Brownlee S, Davis B, Moon SJ. Speech transforms. Speech Commun. 1992;11:357–368.

- Lindfield KC, Wingfield A, Goodglass H. The contribution of prosody to spoken word recognition. Appl Psycholinguist. 1999;20:395–405.

- Luce PA, Pisoni DB. Recognizing spoken words: The neighborhood activation model. Ear Hear. 1998;19:1–36. doi: 10.1097/00003446-199802000-00001.

- Luce PA, Pisoni DB, Goldinger SD. Similarity neighborhoods of spoken words. In: Altmann GTM, editor. Cognitive Models of Speech Processing: Psycholinguistic and Computational Perspectives. Cambridge, USA: MIT Press; 1990. pp. 122–147.

- Mackersie CL, Boothroyd A, Proda T. Use of a simultaneous sentence perception test to enhance sensitivity to ease of listening. J Speech Lang Hear Res. 2000;43:675–682. doi: 10.1044/jslhr.4303.675.

- Mandel RG, Johnson NS. A developmental analysis of story recall and comprehension in adulthood. J Verbal Learn Verbal Behav. 1984;23:643–659.

- Mandler JM. A code in the node: The use of story schema in retrieval. Discourse Process. 1978;1:14–35.

- Marslen-Wilson WD. Function and process in spoken word recognition. In: Bouma H, Bouwhuis D, editors. Attention and Performance X: Control of Language Processes. Hillsdale, USA: Erlbaum; 1984. pp. 125–148.

- Marslen-Wilson WD. Activation, competition, and frequency in lexical access. In: Altmann GTM, editor. Cognitive Models of Speech Processing. Cambridge, USA: MIT Press; 1990. pp. 148–172.

- Marslen-Wilson WD, Welsh A. Processing interactions and lexical access during word recognition in continuous speech. Cogn Psychol. 1978;10:29–63.

- Marslen-Wilson WD, Zwitserlood P. Accessing spoken words: The importance of word onsets. J Exp Psychol Hum Percept Perform. 1989;15:576–585.

- McCoy SL, Tun PA, Cox LC, Colangelo M, Stewart RA, et al. Hearing loss and perceptual effort: Downstream effects on older adults’ memory for speech. Q J Exp Psychol. 2005;58A:22–33. doi: 10.1080/02724980443000151.

- Meyer BJF. Prose analysis: Purposes, procedures, and problems. In: Britton BK, Black JB, editors. Understanding Expository Texts. Hillsdale, USA: Erlbaum; 1985. pp. 11–65.

- Miller P, Wingfield A. Distinct effects of perceptual quality on auditory word recognition, memory formation, and recall in a neural model of sequential memory. Front Syst Neurosci. 2010;4:14. doi: 10.3389/fnsys.2010.00014.

- Morton J. Interaction of information in word recognition. Psychol Rev. 1969;76:165–178.

- Mulrow CD, Aguilar C, Endicott JE, Tuley MR, Velez R, et al. Quality-of-life changes and hearing impairment: A randomized trial. Ann Intern Med. 1990;113:188–194. doi: 10.7326/0003-4819-113-3-188.

- Murphy DR, Craik FIM, Li KZH, Schneider BA. Comparing the effects of aging and background noise on short-term memory performance. Psychol Aging. 2000;15:323–334. doi: 10.1037/0882-7974.15.2.323.

- Picheny MA, Durlach NI, Braida LD. Speaking clearly for the hard of hearing II: Acoustic characteristics of clear and conversational speech. J Speech Hear Res. 1986;29:434–446. doi: 10.1044/jshr.2904.434.

- Pichora-Fuller MK. Cognitive aging and auditory information processing. Int J Audiol. 2003;42:2S26–2S32.

- Piquado T, Isaacowitz D, Wingfield A. Pupillometry as a measure of cognitive effort in younger and older adults. Psychophysiology. 2010;47:560–569. doi: 10.1111/j.1469-8986.2009.00947.x.

- Pollack I, Pickett JM. The intelligibility of excerpts from conversation. Lang Speech. 1963;6:165–171.

- Rabbitt PMA. Channel capacity, intelligibility and immediate memory. Q J Exp Psychol. 1968;20:241–248. doi: 10.1080/14640746808400158.

- Rabbitt PMA. Mild hearing loss can cause apparent memory failures which increase with age and reduce with IQ. Acta Otolaryngol Suppl. 1991;476:167–176. doi: 10.3109/00016489109127274.

- Rönnberg J. Cognition in the hearing impaired and deaf as a bridge between signal and dialogue: A framework and a model. Int J Audiol. 2003;42:S68–S76. doi: 10.3109/14992020309074626.

- Rönnberg J, Danielsson H, Rudner M, Arlinger S, Sternäng O, et al. Hearing loss is negatively related to episodic and semantic long-term memory but not to short-term memory. J Speech Lang Hear Res. 2011;54:705–726. doi: 10.1044/1092-4388(2010/09-0088).

- Rönnberg J, Rudner M, Foo C, Lunner T. Cognition counts: A working memory system for ease of language understanding (ELU). Int J Audiol. 2008;47:S99–S105. doi: 10.1080/14992020802301167.

- Rönnberg J, Rudner M, Lunner T. Cognitive hearing science: The legacy of Stuart Gatehouse. Trends Amplif. 2011;20:1–9. doi: 10.1177/1084713811409762.

- Rönnberg J, Rudner M, Lunner T, Zekveld AA. When cognition kicks in: Working memory and speech understanding in noise. Noise Health. 2010;12:263–269. doi: 10.4103/1463-1741.70505.

- Rubin DC. A unit analysis of prose memory. J Verbal Learn Verbal Behav. 1978;17:599–620.

- Sarampalis A, Kalluri S, Edwards B, Hafter E. Objective measures of listening effort: Effects of background noise and noise reduction. J Speech Lang Hear Res. 2009;52:1230–1240. doi: 10.1044/1092-4388(2009/08-0111).

- Shargorodsky J, Curhan SG, Curhan GC, Eavey R. Change in prevalence of hearing loss in US adolescents. JAMA. 2010;304:772–778. doi: 10.1001/jama.2010.1124.

- Stewart R, Wingfield A. Hearing loss and cognitive effort in older adults’ report accuracy for verbal materials. J Am Acad Audiol. 2009;20:147–154. doi: 10.3766/jaaa.20.2.7.

- Stine EL, Wingfield A. Levels upon levels: Predicting age differences in text recall. Exp Aging Res. 1987;13:179–183. doi: 10.1080/03610738708259322.

- Stine EAL, Wingfield A. The assessment of qualitative age differences in discourse processing. In: Hess TM, editor. Aging and Cognition: Knowledge Organization and Utilization. Amsterdam: North-Holland; 1990. pp. 33–92.

- Stine EAL, Wingfield A, Myers SD. Age differences in processing information from television news: The effects of bisensory augmentation. J Gerontol Psychol Sci. 1990;45:P1–P8. doi: 10.1093/geronj/45.1.p1.

- Stine EAL, Wingfield A, Poon LW. How much and how fast: Rapid processing of spoken language in later adulthood. Psychol Aging. 1986;1:303–311. doi: 10.1037//0882-7974.1.4.303.

- Surprenant AM. The effect of noise on memory for spoken syllables. Int J Psychol. 1999;34:328–333.

- Surprenant AM. Effects of noise on identification and serial recall of nonsense syllables in older and younger adults. Aging Neuropsychol Cogn. 2007;14:126–143. doi: 10.1080/13825580701217710.

- Titchener EB. Lectures on the Elementary Psychology of Feeling and Attention. New York: Macmillan; 1908.

- Titone D, Prentice KJ, Wingfield A. Resource allocation during spoken discourse processing: Effects of age and passage difficulty as revealed by self-paced listening. Mem Cogn. 2000;28:1029–1040. doi: 10.3758/bf03209351.

- Tun PA, McCoy S, Wingfield A. Aging, hearing acuity, and the attentional costs of effortful listening. Psychol Aging. 2009;24:761–766. doi: 10.1037/a0014802.

- Turner A, Greene F. Construction and use of a propositional text base. JSAS Catalog of Selected Documents in Psychology. 1978;8:3 (Ms. No. 1713).

- Tyler L. The structure of the initial cohort: Evidence from gating. Percept Psychophys. 1984;36:417–427. doi: 10.3758/bf03207496.

- van Dijk TA, Kintsch W. Strategies of Discourse Comprehension. San Diego, USA: Academic Press; 1983.

- van Hooren SAH, Anteunis LJC, Valentijn SAM, Bosma H, Ponds RWHM, et al. Does cognitive function in older adults with hearing impairment improve by hearing aid use? Int J Audiol. 2005;44:265–271. doi: 10.1080/14992020500060370.

- Waters GS, Caplan D. Age, working memory, and on-line syntactic processing in sentence comprehension. Psychol Aging. 2001;16:128–144. doi: 10.1037/0882-7974.16.1.128.

- Wayland SC, Wingfield A, Goodglass H. Recognition of isolated words: The dynamics of cohort reduction. Appl Psycholinguist. 1989;10:475–487.

- Wechsler D. Wechsler Adult Intelligence Scale. 3rd ed. San Antonio, USA: The Psychological Corporation; 1997.

- Widen SE, Holmes AE, Johnson T, Bohlin M, Erlandsson SI. Hearing, use of hearing protection, and attitudes towards noise among young American adults. Int J Audiol. 2009;48:537–545. doi: 10.1080/14992020902894541.

- Wingfield A. Cognitive factors in auditory performance: Context, speed of processing and constraints of memory. J Am Acad Audiol. 1996;7:175–182.

- Wingfield A, Alexander AH, Cavigelli S. Does memory constrain utilization of top-down information in spoken word recognition? Evidence from normal aging. Lang Speech. 1994;37:221–235. doi: 10.1177/002383099403700301.

- Wingfield A, Goodglass H, Lindfield KC. Word recognition from acoustic onsets and acoustic offsets: Effects of cohort size and syllabic stress. Appl Psycholinguist. 1997;18:85–100.

- Wingfield A, McCoy SL, Peelle JE, Tun PA, Cox LC. Effects of adult aging and hearing loss on comprehension of rapid speech varying in syntactic complexity. J Am Acad Audiol. 2006;17:487–497. doi: 10.3766/jaaa.17.7.4.

- Wingfield A, Tun PA, McCoy SL. Hearing loss in adulthood: What it is and how it interacts with cognitive performance. Curr Dir Psychol Sci. 2005;14:144–148.

- Wingfield A, Tun PA, McCoy SL, Stewart RA, Cox LC. Sensory and cognitive constraints in comprehension of spoken language in adult aging. Semin Hear. 2006;27:273–283.

- Zekveld A, Kramer SE, Festen JM. Pupil response as an indication of effortful listening: The influence of sentence intelligibility. Ear Hear. 2010;31:480–490. doi: 10.1097/AUD.0b013e3181d4f251.

- Zelinski EM, Light LL, Gilewski MJ. Adult age differences in memory for prose: The question of sensitivity to passage structure. Dev Psychol. 1984;20:1181–1192.