Abstract

The use of a Classroom Response System (CRS) was evaluated in two sections, A and B, of a large lecture microbiology course. In Section B the instructor used the CRS technology at the beginning of the class period by posing a question on content from the previous class. Students could earn extra credit if they answered the question correctly. In Section A, the class also began with an extra credit CRS question. In addition, CRS questions were integrated into the lecture throughout the class period. We compared the two classes to see if augmenting lectures with this technology increased student learning, confidence, attendance, and the instructor’s ability to respond to students’ misconceptions, over simply using the CRS as a quizzing tool. Student performance was compared using shared examination questions. The questions were categorized by how the content had been presented in class. All questions came from the instructors’ common lecture content; some covered content presented without CRS use, and some covered content for which Instructor A used both lecture and CRS questions. Although Section A students scored significantly better on both types of examination questions, there was no demonstrable difference in learning based on CRS question participation. However, student survey data showed that students in Section A expressed higher confidence levels in their learning and knowledge and indicated that they interacted more with other students than did the students in Section B. In addition, Instructor A recorded more modifications to lecture content and recorded more student interaction in the course than did Instructor B.

Because of increasing enrollments and tighter budgets, colleges are offering larger classes as a way to save money (5, 12). Consequently, faculty-student ratios in many introductory courses range from 1:100 to 1:500. While studies show mixed results in determining what effect class size has on student performance, a trend does emerge—new students or students with lower achievement levels have trouble in larger classes (3, 9).

The current theory of learning, constructivism, is one where learning is an interpretive, recursive, building process whereby learners actively participate in the learning process in order to make meaning of new information and constructs so as to internalize this understanding as part of their own knowledge base (10). Therefore, attention to the limits of large class sizes is essential in order to maximize student achievement. Large classes inhibit types of instructional methods, strategies, and activities that are consistent with constructivism in four ways. First, learners need to make meaning of the new information in order to assimilate it into their existing knowledge base (19). Without meaning, information is often forgotten after a short period of time. As a result, learners need to interact with this new information in some way. Large classes limit this interaction, and the brief time allotted for instruction constrains the types of instructional strategies and activities that can be used to allow students to interact with new information.

Second, research on learning shows that individuals make meaning in a variety of ways and that these ways may not be the same for each individual (6). Using instructional methods in addition to the lecture method helps to meet the needs of all learners. Yet large classes are not conducive to instructional methods other than lecture.

Third, learning takes place in social settings through interaction with others (19). In other words, learners need to interact with others in order to make sense of new information prior to internalizing it (19). Again, large classes are not organized so that interaction between the instructor and students or among students can take place easily.

Finally, constructivism has expanded the role of assessment from primarily summative forms, assessments used to evaluate student learning at the end of a period of time or unit of study, to include formative forms, assessments that are conducted during instruction to inform ongoing instruction (1, 15, 16, 17). By using formative assessment during instruction, instructors gain information about students’ understanding of the course material and then use that information to adapt and modify instruction to correct misunderstandings and gaps before they are internalized by the student. However, the number of students in large classes makes it virtually impossible to gauge the learning of more than a few students during the class period. Therefore, until recently, meaningful formative assessment has been difficult in large classes.

Classroom Response System (CRS) technology holds promise for remedying these weaknesses. (CRS is also known as Audience Response System, Interactive Response System, Personal Response System, and Student Response System.) In order to understand how CRS allows the transformation of large classes into learning settings congruent with constructivism, one must first understand how these systems operate.

CRS technology consists of an instructor’s computer, wall-mounted receivers, and a handheld personal data transmitter (PDT) registered to each student. Classroom wall-mounted receivers are connected to the instructor’s computer (as is each student’s PDT). During instruction, the instructor poses a multiple-choice question, and students respond by aiming their PDT toward a wall receiver and pressing a button that corresponds to their answer choice. The wall receivers transmit the information to the instructor’s computer where the results are tallied by answer chosen and displayed on a screen for the entire class to view. In addition, the data are stored. The PDT is registered in each student’s name so that the results can be broken out by student.

The transformation of large classes into more interactive learning communities can be accomplished with CRS technology in the following ways. First, the PDT requires participation on the part of the student. Because the PDT is registered to each student, the student becomes accountable for his/her learning and communicating that learning by answering all the questions that are posed throughout the class period. Second, the instructor can facilitate paired discussion by asking students to discuss the question with a peer and then answer using the PDT. One successful method is for the instructor to pose a selected response question to which the students individually respond using their PDTs. Based on the results, the instructor then asks students to discuss the answers with a peer and decide if they want to change their answer. The instructor poses the question again, and students have another opportunity to respond. Based on the responses, the instructor can choose to move forward, reteach the information, and/or go over each question explaining the reasons for each answer choice. These instructional strategies allow students to actively participate in instruction and interact with peers to make meaning of new information. The instructor uses the real-time information gained through the use of the CRS to make teaching modifications. As such, the traditional teacher-centered classes are made over into more interactive, student-centered classes and an environment that accommodates more learning styles.

Current research has documented a variety of benefits as a result of using CRS. For example, studies showed that use of CRS increased students’ attendance, advance preparation for class (4, 14), attentiveness and enthusiasm (2, 4, 8, 9, 14, 20), and in-class participation (4, 8, 14, 20). Studies have also noted students reporting more enjoyment in classes because of CRS (4, 8, 9, 14, 20). To illustrate, research on CRS technology in physics indicated that attendance in CRS courses and students’ satisfaction with the courses was higher. This same research showed that students’ confidence in their own learning increased (8).

However, research into the effects of CRS technology on student learning is mixed. Slain, Abate, Hodges, Stamatakis, and Wolak (18) studied students in a doctoral pharmacy program over a 2-year period. They found that students’ scores on examinations increased with the use of CRS technology. Judson and Sawada’s (11) review of research on CRS technology in higher education noted that student learning increased when the CRS technology was paired with constructivist-oriented pedagogy such as actively engaging students with new information, but did not increase when paired with behaviorist-oriented pedagogy, where students passively received information. Paschal (13) compared two sections of a systems physiology course, one that used CRS technology and one that did not. She found that student learning was at least as good or better in the sections that used CRS technology than in the sections that did not.

With all this in mind, we looked to evaluate our own implementation of the CRS technology in a general microbiology course over a period of two semesters. We wanted to know if the ways we were employing the CRS system (i) increased opportunities for formative assessment of student learning that led to changes in instruction, (ii) increased student attendance, (iii) increased student learning, and (iv) increased student confidence in their own learning.

METHODS

Class characteristics. General Microbiology is an introductory microbiology course taught at the junior level with 80% of the class being either juniors or seniors. Students enrolled represented approximately 30 different majors of which only 10% were microbiology majors. Course prerequisites included general biology and second semester chemistry. Two sections, A and B, were taught each semester with one senior faculty member responsible for each section. Enrollment in Section A was 143 students and in Section B was 84 students. There is no required laboratory component to this course.

Course instruction. The course consisted of three 1-hour lectures per week for a total of 40 days of classroom instruction. Students were involved in group learning projects throughout the semester; they also took four examinations, including a comprehensive 2-hour final. There were 700 total possible points during the semester, and the students’ grades were calculated using the percentage of the total points earned, so that an A equaled 90 to 100%, a B equaled 80 to 89%, and so forth.

The classroom response system. The instructors in both sections used the eInstruction system provided by the book publisher McGraw Hill Higher Education. Students could opt to purchase a personal data transmitter (PDT) for use during the semester, with each transmitter assigned a unique PDT number so that students could be tracked after registering their PDT. During class, each instructor asked a multiple-choice question, and students responded by aiming their PDT towards a receiver and pressing the button that corresponded with their answer. The receiver recorded the responses and transmitted the information to the instructor’s computer, which then projected a compilation of the results onto a large screen for the entire class to see. These results were summarized by percentage of response for each answer. To strengthen academic integrity and reduce the sharing of answers, instructors gave students 1 minute or less to respond to questions.

Use in the classrooms. In Section B the instructor used the CRS technology at the beginning of the class period by posing a question on content from the previous class. Fifteen times during the semester questions were selected, and students who answered correctly received one point of extra credit (for a total of 15 possible points throughout the semester). Students were not told which days would be counted for credit. This section was taught Monday, Wednesday, and Friday 12:10 to 1:00 p.m.

In Section A, the class also began with an extra credit CRS question. In addition, the instructor posed CRS questions throughout the lecture. Nineteen times during the semester students answered a question, then had a formal opportunity to discuss the question with other students and were then asked to reanswer the question. The percentage of students giving each possible answer to the question the first time it was asked and then after discussion was captured by the CRS program. These questions were not worth any credit. This section was taught Monday, Wednesday, and Friday 9:00 to 9:50 a.m.

Even though students could attain extra credit for CRS questions only through purchasing a PDT, a small number of students did not purchase one. To our knowledge, the reasonable $15 cost of the PDT was not a barrier toward student purchase. Neither instructor has ever had a student ask for an alternative to purchasing the PDT. Moreover, those students not purchasing a PDT still benefited from participating in the CRS interactive student discussions and associated active learning exercises. Additional extra credit opportunities that do not require PDT purchase, such as receiving credit for summarizing microbiology stories in the news, exist in both sections.

Data sources and analysis. One source of data came from a daily instructor’s journal, where each instructor recorded the number of CRS questions used in class that day, the number of times students were instructed to formally interact with each other, and the number of times the instructor modified instruction based on the answers to the CRS questions. The captured data from the CRS program comprised the second data source. A third data source was an analysis of student examination performance on the four formal examinations. Each examination contained 10 to 14 questions about the course content that were identical in both sections. Approximately five to seven of these identical questions were based on content presented using the same instructional delivery method (lecture) for both classes. The remaining questions were also identical; however, the instructional delivery in the two sections differed. In Section B, these questions came from information presented only during the lecture. However, in Section A the information was supplemented using CRS questions, which were sometimes followed by student-peer discussions and a reanswering opportunity. The individual instructors wrote the remaining examination questions differently for each section. For the first two formal examinations, the Section A instructor wrote the shared exam questions, and for the last two examinations the Section B instructor wrote the shared exam questions. In both cases instructors reviewed the questions and agreed that they matched the content from their course.

Statistical analysis of student examination performance. A multifactor analysis of variance (ANOVA) with section, exam, and question type as fixed effects and questions with type and exam as a random effect was performed. Question types were questions that were either covered by lecture only in both classes or by CRS questions only in Section A. The differences were considered to be significant if P ≤ 0.05.

Analysis of student perceptions of learning, confidence, and study habits. During the last lecture, a survey was given to each class which asked students to rate each statement about their experiences with the CRS on a scale of 1 to 5, with 5 being strongly agree, 4 somewhat agree, 3 somewhat disagree, 2 strongly disagree, and 1 not applicable. Mantel-Haenszel chi-square analysis of differences between survey results for each question in the two sections was performed. The differences were considered to be significant if P ≤ 0.05. The Mantel-Haenszel chi-square test analyzes correlation between rows and columns of a table and is appropriate when rows and columns are ordinal scales or one is dichotomous. In our study the question responses are ordinal, while the rater scale is dichotomous.
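To make the test concrete, the following is a minimal sketch of the Mantel-Haenszel chi-square in its linear-by-linear association form, M² = (N − 1)r², with 1 degree of freedom. It is an illustration under stated assumptions rather than the analysis actually run for this study: the function name and the 0/1 coding of the sections are hypothetical, the ratings 5 through 1 are used directly as scores, and the counts are the Question 2 responses reported in Table 4.

```python
import math


def mantel_haenszel_ordinal(scores, counts_a, counts_b):
    """Return (M^2, P) for a 2 x k table with ordinal column scores.

    M^2 = (N - 1) * r^2, where r is the Pearson correlation between
    group membership (coded 0/1, an assumption of this sketch) and score.
    """
    # One (group, score, count) triple per table cell.
    cells = [(0, s, n) for s, n in zip(scores, counts_a)]
    cells += [(1, s, n) for s, n in zip(scores, counts_b)]

    total = sum(n for _, _, n in cells)
    mean_g = sum(g * n for g, _, n in cells) / total
    mean_s = sum(s * n for _, s, n in cells) / total

    # Count-weighted sums of squares and cross-products.
    cov = sum(n * (g - mean_g) * (s - mean_s) for g, s, n in cells)
    var_g = sum(n * (g - mean_g) ** 2 for g, _, n in cells)
    var_s = sum(n * (s - mean_s) ** 2 for _, s, n in cells)

    m2 = (total - 1) * cov ** 2 / (var_g * var_s)
    # Upper-tail probability of a chi-square variable with 1 df.
    p_value = math.erfc(math.sqrt(m2 / 2))
    return m2, p_value


scores = [5, 4, 3, 2, 1]
section_a = [19, 27, 6, 3, 0]  # Question 2 counts, Section A (Table 4)
section_b = [4, 11, 17, 3, 0]  # Question 2 counts, Section B (Table 4)

m2, p = mantel_haenszel_ordinal(scores, section_a, section_b)
print(f"M^2 = {m2:.2f}, P = {p:.4f}")
```

With these counts the sketch gives M² of about 12.5 and P of about 0.0004, which is in line with the value reported for Question 2.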

RESULTS

Faculty restructuring of instruction based on CRS technology use. To determine if integrating CRS questions throughout the lectures changed instruction or how students interacted with each other, each instructor kept a daily journal where he/she recorded how many times students were asked CRS questions, how many times students were instructed to interact with other students in a formal manner, and the number of changes the instructor made during the lecture due to feedback from CRS questions. The results are summarized in Table 1. The Section B instructor indicated modifying the planned lecture only once due to responses on the CRS questions, whereas the Section A instructor indicated modifying the planned lecture 84 times in response to the information gained from the CRS questions.

TABLE 1.

Instructor record of interactions with students and between students

| Section | Total no. of CRS questions asked during semester^a | No. of formal student interactions with other students | No. of changes in planned lecture due to CRS questions |
|---|---|---|---|
| A | 112 | 27 | 84 |
| B | 28 | 7 | 1 |

^a 40 lectures total.

Student interaction varied with how CRS technology was used. First, the number of students who opted to purchase the PDT differed based on how the CRS technology was used. In Section A, where CRS questions were integrated throughout the lecture, 95.3% of students chose to purchase the optional PDT. However, in Section B, where the PDT was used only for extra credit quizzes at the beginning of class, only 87.2% of students chose to purchase the PDT. Second, in Section A students participated in class via CRS 84 more times than students in Section B. Furthermore, Section A students were provided formal time to discuss a topic with other students 20 more times than those in Section B (Table 1).

Instructors also monitored student attendance through students’ use of the CRS technology. Of students purchasing the optional PDT, the average percentage of students attending class was approximately equal for each section each day (72.8 versus 74.8%).

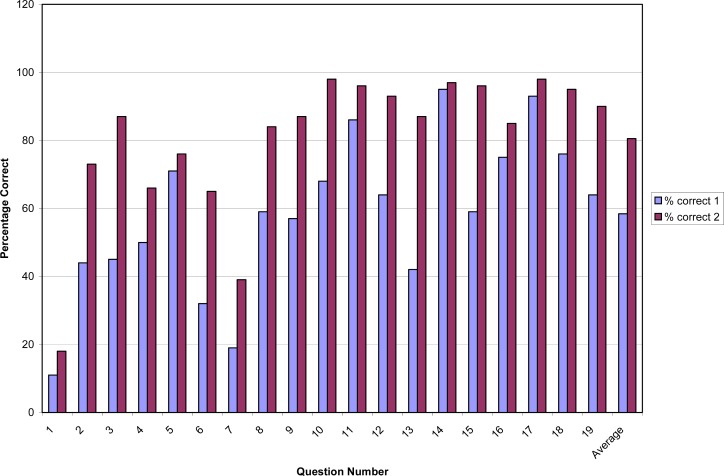

Student performance during instruction. The percentage of students answering the questions correctly at the beginning of the class in both sections was comparable (78.2 versus 82.0% average or 86.0 versus 88.4% median). In Section A, student scores on CRS questions improved dramatically when they were allowed to discuss a question and then reanswer it, an option unavailable in Section B where questions were asked only at the beginning of class (Fig. 1). In fact, every time this technique was used, student scores improved, with an average gain of 22%.

FIG. 1.

Comparison of scores of students answering CRS questions during lecture. Score 1 (light bar) is the percentage of correct scores when students answered the question after being given a brief lecture on the topic by the instructor. Students were then instructed to discuss the question and reanswer. Score 2 (dark bar) is the percent correct upon reanswering.

Student performance on examinations. Student performance was analyzed using common examination items. When students in both classes were asked identical questions that were either covered by lecture only in both classes (non-CRSQ) or by CRS questions in only Section A where CRS questions were used to augment lecture (CRSQ), we theorized that we would see an improvement in performance on CRSQ versus non-CRSQ only in Section A. However, what we found was that the students in Section A performed better on both types of questions than students in Section B (Fig. 2).

FIG. 2.

The average student performance on six or seven exam questions that were identical in each section and were either covered by lecture only in both classes (non-CRSQ) or by clicker questions in only Section A (CRSQ). Section A (light bar) is the section that used CRS questions during lecture; Section B (darker bar) used CRS questions only as quizzes at the beginning of class. Standard deviation of scores is indicated by error bars.

Statistical analysis of what factors were significant in differences in performance. When statistical analysis of the factors accounting for this variance was performed, it was found that only the section and the exam were statistically significant, as indicated by a P value of less than 0.05 (Tables 2 and 3). No statistical significance was found between question types (CRSQ versus non-CRSQ), even when analyzed with section, exam, or both. Students in Section A performed better on all question types, and this difference was statistically significant (P < 0.0001). Because they did not perform better specifically on CRSQ, however, we cannot say that this improvement is a result of participating with CRS.

TABLE 2.

Statistical analysis of variance

| Fixed effect tested | F value | P value |
|---|---|---|
| Section | 33.02 | <0.0001^a |
| Exam | 3.73 | 0.0182^a |
| Section × Exam | 1.27 | 0.2967 |
| Question type | 0.00 | 0.9971 |
| Section × Question type | 0.04 | 0.8430 |
| Exam × Question type | 0.06 | 0.9823 |
| Section × Exam × Question type | 1.35 | 0.2711 |

^a The differences were considered to be significant if P ≤ 0.05.

TABLE 3.

Least square means for statistically significant variables

| Effect tested | Estimate | Standard error |
|---|---|---|
| Section A | 83.69 | 1.99 |
| Section B | 72.78 | 1.22 |
| Exam 1 | 88.80 | 3.83 |
| Exam 2 | 77.00 | 3.83 |
| Exam 3 | 72.38 | 3.57 |
| Exam 4 | 74.76 | 3.84 |

Students in the CRS-integrated course expressed more confidence in their knowledge and felt more engaged than students in the nonintegrated course. At the end of the semester students were asked to fill out a student learning survey (Fig. 3); responses were tallied and a statistical analysis was performed. On five of the eight survey questions, students in the CRS-integrated section gave a statistically significantly more favorable response about their confidence, participation, and ability to give feedback than students in the course where CRS questions were asked only at the beginning of class (P ≤ 0.05) (Tables 4 and 5). Three questions showed a high significance (P = 0.0003–0.0004) when the responses from Section A (CRS-augmented lectures) were compared to the responses from Section B (CRS nonaugmented lectures). In Section A, 72.7% of students responded that they Strongly Agree or Somewhat Agree that they interacted more with other students due to CRS use (Question 2) versus 31.4% in Section B. In Section A, 80% of students responded that they Strongly Agree or Somewhat Agree that they had a higher than normal ability to give the instructor feedback about their level of understanding due to CRS use (Question 4) versus 42.9% in Section B. In Section A, 80.3% responded Strongly Agree or Somewhat Agree that they felt CRS use had increased their confidence in forming relationships amongst concepts (Question 6) versus 42.9% in Section B.

FIG. 3.

End of the semester student learning questionnaire.

TABLE 4.

End of semester survey results

| Question no. | 5 (strongly agree), A^a | 5 (strongly agree), B^b | 4 (somewhat agree), A | 4 (somewhat agree), B | 3 (somewhat disagree), A | 3 (somewhat disagree), B | 2 (strongly disagree), A | 2 (strongly disagree), B | 1 (not applicable), A | 1 (not applicable), B | Avg. score, A | Avg. score, B |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 1 | 30 | 19 | 11 | 12 | 4 | 2 | 0 | 1 | 3.84 | 3.49 |
| 2 | 19 | 4 | 27 | 11 | 6 | 17 | 3 | 3 | 0 | 0 | 4.13 | 3.46 |
| 3 | 9 | 2 | 35 | 19 | 11 | 14 | 0 | 0 | 0 | 0 | 3.96 | 3.66 |
| 4 | 15 | 1 | 29 | 14 | 7 | 16 | 4 | 4 | 0 | 0 | 4.00 | 3.34 |
| 5 | 8 | 3 | 19 | 11 | 17 | 11 | 8 | 5 | 3 | 5 | 3.38 | 3.06 |
| 6 | 14 | 1 | 27 | 14 | 12 | 15 | 2 | 3 | 0 | 2 | 3.96 | 3.26 |
| 7 | 5 | 2 | 15 | 8 | 19 | 14 | 9 | 10 | 7 | 1 | 3.04 | 3.00 |
| 8 | 5 | 3 | 13 | 6 | 17 | 17 | 15 | 7 | 4 | 1 | 2.95 | 3.00 |

^a Section A: n = 55/143 (38.5%).

^b Section B: n = 35/84 (41.7%).
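As a check on how the average scores in Table 4 relate to the response counts, the short sketch below recomputes them as count-weighted means of the ratings. The helper name is hypothetical, and the sketch assumes that "not applicable" responses enter the mean with a score of 1, which is what reproduces the tabulated values for the rows shown.

```python
def average_score(counts):
    """Mean rating for counts listed in the order 5, 4, 3, 2, 1.

    Assumption of this sketch: "not applicable" responses are scored as 1.
    """
    ratings = [5, 4, 3, 2, 1]
    return sum(r * n for r, n in zip(ratings, counts)) / sum(counts)


# Response counts copied from Table 4 (Questions 1 and 5).
q1_a = [10, 30, 11, 4, 0]
q1_b = [1, 19, 12, 2, 1]
q5_a = [8, 19, 17, 8, 3]
q5_b = [3, 11, 11, 5, 5]

for label, counts in [("Q1 A", q1_a), ("Q1 B", q1_b), ("Q5 A", q5_a), ("Q5 B", q5_b)]:
    print(label, round(average_score(counts), 2))
```

For these rows the recomputed means round to 3.84, 3.49, 3.38, and 3.06, matching the table.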

TABLE 5.

Mantel-Haenszel chi-square analysis of differences between survey results in the two sections

The differences were considered to be significant if P ≤ 0.05.

A somewhat less striking but still significant difference existed between the two sections for two questions pertaining to students’ confidence about their learning and knowledge levels. In Section A, 72.7% responded Strongly Agree or Somewhat Agree that they felt CRS use had decreased their doubt about their learning progress (Question 1) versus 43.5% in Section B (P = 0.0462). In Section A, 83.5% responded Strongly Agree or Somewhat Agree that they felt CRS use had increased their confidence in the knowledge level they had obtained (Question 3) versus 60% in Section B (P = 0.0215). Students in Section A did not give a statistically significantly more favorable response on the question pertaining to the instructor making instructional changes based on CRS responses or on the two questions about the effect of CRS questions on their study habits.

DISCUSSION

The current literature on CRS technology has reported the following outcomes: increased student attentiveness and enthusiasm for the course, increased student engagement in class, and increased student understanding of subject matter (4, 8, 14, 18, 20). Our study shows that instructors who actively use CRS technology as an instructional tool to involve students and as a formative assessment tool to gauge understanding throughout a class session do seem to have a greater awareness of student difficulties, as indicated by the increased number of changes made to instruction during a class over the course of the semester (84 lecture modifications). Therefore, the frequency of CRS use during a lecture appears to impact formative course improvements. Interestingly, although the Section A instructor indicated making 84 changes in the course due to student responses to CRS questions during instruction and the Section B instructor indicated making only a single change, students in both classes responded similarly to the survey item, “At least once, I noticed that the instructor quickly adjusted or changed the way course information was presented to the class in response to how we students answered questions using the PDT.” Section A’s response level stood at 3.38 compared to 3.06 for Section B, a difference that was not statistically significant. However, students may not recognize an instructor going over the answer to a question on which the class did poorly as a change to the course, even though the instructor recorded it as one.

Students did, however, notice the increased interaction with other students in the course, as indicated by the large (4.13 versus 3.46) and most statistically significant (P = 0.0004) difference in survey responses for the two sections on question 2 of the survey, “I experienced greater interaction and participated in more debate with my class peers as a result of using the PDT in this class than I experienced in other large classes where this instructional method is not used.”

Most interestingly, on all three survey questions that dealt with student confidence in their learning, knowledge, and ability to make connections among the course’s central concepts, the students in Section A (where the CRS questions were actively used throughout the lectures) responded more favorably at a statistically significant level. This is congruent with the research and literature on teaching and learning that states that learners learn in a variety of ways, need to be actively involved in the learning process, and need to engage with others to make meaning of new information.

Even though increased attendance in courses using CRS is well documented, there is little information about whether the type of use affects student attendance. Of students purchasing the optional PDT, the average percentage of students attending class was approximately equal for each section each day (72.8 versus 74.8%). However, 8% more students chose to purchase the optional PDT in Section A where CRS questions were used to augment the lectures, so attendance may have actually been slightly higher in this section. We do not have any data on the attendance habits of the 4.7% of students in Section A and 12.8% of students in Section B who chose not to purchase the PDT.
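The reasoning about purchase rates can be made explicit with a small worked calculation. The sketch below multiplies each section's PDT purchase rate by the attendance rate among purchasers to get the share of all enrolled students who were recorded as attending. It rests on two assumptions that are not stated in the text: that 72.8% refers to Section A and 74.8% to Section B, and that students without a PDT are counted as absent, which makes each result a lower bound. The function name is hypothetical.

```python
def tracked_attendance_share(purchase_rate, attendance_among_purchasers):
    """Share of enrolled students recorded as attending via the CRS.

    Students without a PDT cannot be recorded, so this is a lower bound
    on true attendance (an assumption of this sketch).
    """
    return purchase_rate * attendance_among_purchasers


# Assumed ordering: Section A = 72.8%, Section B = 74.8%.
section_a = tracked_attendance_share(0.953, 0.728)
section_b = tracked_attendance_share(0.872, 0.748)

print(f"Section A: {section_a:.1%} of enrolled students recorded per class")
print(f"Section B: {section_b:.1%} of enrolled students recorded per class")
```

Under these assumptions, roughly 69.4% of enrolled students in Section A and 65.2% in Section B were recorded as attending on an average day; the true figures depend on the unknown attendance of students who did not purchase a PDT.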

Though it is a tenuous link at best, we had hoped that the use of CRS technology would motivate students to study more. However, students in both sections responded similarly that they somewhat disagreed that the use of CRS motivated them to study more or interact more outside of class with other students.

Our hypothesis, based on the literature (14), was that students in Section A would outperform those in Section B on exam questions pertaining to information that was augmented with CRS questions (CRSQ) in Section A only. We predicted that students in Section A would perform better on CRSQ and that the scores from both sections would be the same for questions covered only by lecture in both sections (non-CRSQ). Instead we found that the students in Section A, where CRS questions were integrated throughout the course, performed better on both types of questions, and that this was statistically significant (P < 0.0001). However, they did not perform better on CRSQ than on non-CRSQ. Therefore, we cannot demonstrate an increase in learning based on the use of CRS questions as has been suggested.

However, the fact that students who used the CRS technology throughout a class, rather than only at the beginning of class, performed better on all questions suggests that using this technology as more than just a quizzing tool may translate to greater learning of those topics not covered by CRS questions. This may be due to students participating in activities during class that show them the level of understanding the instructor expects. The authors realize that many other factors could also account for these differences, such as time of day, instructor, or class demographics. However, the fact that the percentage of students answering the questions at the beginning of the class correctly in both sections was comparable suggests that these two classes should have been expected to perform equivalently on exams.

We found that student scores on CRS questions improve dramatically (22% average, range 2 to 45%) when students are allowed to talk about a question and then reanswer it, an option unavailable in the section where questions were asked merely at the beginning of class each day. This is consistent with the prevailing theory of learning—that knowledge is actively constructed rather than passively absorbed and that learning takes place in social settings. To confirm this it would be interesting to compare the number of correct responses on questions posed during a lecture when there is no option for discussion with the number of correct responses on questions posed during a lecture when there is an option for discussion and match them to responses on exam questions that cover the same material.

Even though this study did not demonstrate value-added gains in student learning attributable to CRS questions, it is important to recognize that Section A CRS students also did not suffer a diminished performance in student learning. Some argue that the extra time taken to actively engage students in large classes using CRS detracts from the time that could be used to cover more course material during lectures. This study contradicts this position: students in Section A, where more class time was devoted to CRS activities, performed at least as well on the shared exam questions as students in Section B. We believe that our data support expanding use of CRS technology beyond quizzing activity for increasing students’ learning and confidence in their learning.

Acknowledgments

We thank James ZumBrunnen, Associate Director of the Statistics Lab, for his assistance in carrying out and interpreting the statistical analyses.

REFERENCES

- 1. Black P, Wiliam D. Assessment and classroom learning. Assessment in Educ. Principles Policy Practice. 1998;5:7–74. doi: 10.1080/0969595980050102.

- 2. Blackman MS, Dooley P, Kuchinski B, Chapman D. It worked a different way. Coll Teaching. 2002;50:27–28. doi: 10.1080/87567550209595868.

- 3. Borden VMH, Burton KL. The impact of class size on student performance in introductory courses: AIR 1999 annual forum paper 21. Association for Institutional Research; Tallahassee, Fla: 1999.

- 4. Bullock DW, LaBella VP, Clingan T, Ding Z, Stewart G, Thibado PM. Enhancing the student-instructor interaction frequency. Phys Teacher. 2002;40:535–541. doi: 10.1119/1.1534821.

- 5. Cooper JL, Robinson P. The argument for making large classes seem small. New Directions for Teaching Learning. 2000;2000:5–16. doi: 10.1002/tl.8101.

- 6. Denig SJ. Multiple intelligences and learning styles: two complementary dimensions. Teachers Coll Rec. 2004;106:96–111. doi: 10.1111/j.1467-9620.2004.00322.x.

- 7. Dillon M, Kokkelenberg EC. The effects of class size on student achievement in higher education: applying an earnings function. 42nd Annual AIR Forum; Ontario, Canada. Tallahassee, Fla: Association for Institutional Research; 2002.

- 8. Duncan D. Clickers in the classroom. Pearson, Addison Wesley; Boston, Mass: 2005.

- 9. Elliott C. Using a personal response system in economics teaching. Int Rev Economics Educ. 2003;1:80–86.

- 10. Fostnot CT. Constructivism: theory, perspectives, and practice. Teachers College Press; New York, N.Y: 1996. p. 228.

- 11. Judson E, Sawada D. Learning from past and present: electronic response systems in college lecture halls. J. Computers in Math. Sci. Teaching. 2002;21:167–181.

- 12. Menges RJ, Austin AE. Teaching in higher education. In: Richardson V, editor. Handbook of research on teaching. American Educational Research Association; Washington D.C.: 2001. pp. 1122–1156.

- 13. Paschal CB. Formative assessment in physiology teaching using a wireless classroom communication system. Adv Physiol Educ. 2002;26:299–308. doi: 10.1152/advan.00030.2002.

- 14. Roschelle J, Penuel WR, Abrahamson L. AERA 2004 paper proposal, San Diego, California. American Educational Research Association; Washington D.C.: 2004. Classroom response and communication systems: research review and theory; p. 8.

- 15. Sadler DR. Formative assessment and the design of instructional systems. Instructional Sci. 1989;18:119–144. doi: 10.1007/BF00117714.

- 16. Sadler DR. Formative assessment: revisiting the territory. Assessment in Educ. Principles Policy Practice. 1998;5:77–84. doi: 10.1080/0969595980050104.

- 17. Shepard LA. The role of classroom assessment in teaching and learning. In: Richardson V, editor. Handbook of research on teaching. American Educational Research Association; Washington D.C.: 2001. pp. 1066–1101.

- 18. Slain D, Abate M, Hodges BM, Stamatakis MK, Wolak S. An interactive response system to promote active learning in the doctor of pharmacy curriculum. Am J Pharm Educ. 2004;68:1–9. doi: 10.5688/aj6805117.

- 19. Vygotsky LS. Mind in society: the development of higher psychological processes. Harvard University Press; Cambridge, Mass: 1978. p. 131.

- 20. Wit E. Who wants to be...the use of a personal response system in statistics teaching. MSOR Connections. 2003;3:14–20.