Abstract

Objective:

Convincing evidence of the effectiveness of health information outreach projects is essential to ensure their continuity. This paper reviews the current state of health information outreach evaluation, characterizes strengths and weaknesses in projects' ability to measure their impact, and assesses enablers of and barriers to projects' success. It also relates the projects' characteristics to evaluation practices. The paper then makes recommendations for strengthening evaluation.

Methods:

Through a literature search, the authors identified thirty-three articles describing consumer health information outreach programs, published between 2000 and 2010. The authors then analyzed the outreach projects with respect to their goals and characteristics, evaluation methods and measures, and reported outcomes.

Results:

The results uncovered great variation in the quality of evaluation methods, outcome measures, and reporting. Outcome measures did not always match project objectives; few quantitative measures employed pretests or reported statistical significance; and institutional change was not measured in a structured way. While papers reported successful outcomes, greater rigor in measuring and documenting outcomes would be helpful.

Conclusion:

Planning outcome evaluation carefully and conducting research into mediators between health information and behavior will strengthen the ability to identify best practices and develop a theoretical framework and practical guidance for health information outreach.

Highlights.

The quality of outreach evaluation and the reporting of results vary tremendously. These limitations make it impossible to develop a description of best practices for successful outreach.

Few studies are theory based, and few qualitative studies employ systematic methods of analysis.

Few quantitative studies employ pretest-posttest design, test for statistical significance, and measure long-term outcomes or impact.

Implications.

Community organizations must carefully plan outcome evaluation to ensure continued funding for these types of projects. In addition, it is preferable that evaluations be grounded in a theoretical framework and community needs assessment.

Funding agencies can help improve evaluations by providing clearer reporting requirements and additional training and logistical support.

Outreach projects should move toward improving overall health literacy by targeting related skills and assessing their development in the course of outreach and training.

Academic researchers can help community organizations by isolating health information–related factors that contribute to long-lasting behavioral change.

INTRODUCTION

One of the key missions of health sciences librarianship is connecting the public to medical and health information. Activities are grounded in the conviction that health information enables individuals to make better health-related decisions and stay healthier. This mission is supported by research that links lower health literacy—defined as the ability to “obtain, process, and understand the basic health information”—to poorer health status and outcomes 1. The National Library of Medicine's (NLM's) Long Range Plan 2006–2016 includes a recommendation to “advance new outreach programs by NLM and [the National Network of Libraries of Medicine (NN/LM)] for underserved populations at home and abroad,” suggesting that improved information access contributes to reducing health disparities 2. NLM's concept of health information outreach involves improving the public's access to quality, reliable health information. This improved access is achieved via improving individuals' and communities' awareness of quality resources (primarily, digital, but also print) and providing information and training in their use. The focus is not on delivering specific content or health messages, but on equipping individuals with flexible knowledge and skills, thus enabling them to locate quality health information when they need it.

As part of its efforts to improve health information access for the underserved, NLM sponsors and conducts a number of outreach programs to the public, focusing on racial and ethnic minorities, older adults, children and adolescents, and residents of rural communities. As health information practices and health behaviors are usually grounded in social, community-level factors, small-to-medium community-based organizations (such as nonprofit and faith-based organizations, local libraries and health departments, and educational institutions primarily serving local residents) are often in the best position to assess their local information needs and conduct outreach. Community-based organizations are therefore at the heart of many NLM-sponsored programs, and small-to-medium-scale community health information outreach is the focus of this paper. Typical programs support community-based organizations by providing individual grant or contract awards of $20,000–$50,000 for a period of 1 or 2 years. NLM encourages organizations to develop projects that best suit their local health information needs, while building on existing community resources. Explicit review criteria state that projects need to be clear in goals and scope, as well as sustainable past the period of the award.

Convincing evidence of the effectiveness of health information outreach projects is essential to ensure their continued funding and support, and project evaluation is the method for obtaining the evidence. NLM's Long Range Plan postulates a goal of “develop[ing] new methodologies to evaluate the impact of health information on patient care and health outcomes.” To support outreach projects in their evaluation efforts, NLM created Measuring the Difference, a seminal health information outreach evaluation guide 3. In addition to the guide, the Outreach Evaluation Resource Center offers several workshops on outreach planning and evaluation 4. Requests for proposals (RFPs) for NLM-supported outreach programs typically ask applicants to address evaluation plans in their applications, although these requests are usually not prescriptive, leaving the selection of outcome measures and methods to the applicants. For example, the 2011 RFP for a program aiming to improve health information access on campuses of historically black colleges and universities (HBCU) encouraged applicants to describe a specific evaluation plan, complete with outcome variables and measures, and to include copies of proposed pre-activity and post-activity assessment instruments. This level of evaluation requirements reflects NLM's recognition that evaluations should match the unique programmatic approaches of local communities, as well as awareness that meeting precisely defined evaluation standards may present organizations with insurmountable financial and technical hardship.

Despite NLM's efforts to support outreach evaluation, communications with grantees and contract recipients suggest that evaluation remains a challenging task, especially for small-scale community programs. The objective of this paper is to review the current state of health information outreach evaluation, characterizing strengths and weaknesses in projects' ability to measure their impact on the recipients, as well as to assess enablers of and barriers to the projects' success. As project evaluation practices are inextricably tied to projects' objectives and design, our ancillary objective is to catalog the projects' characteristics, attempting to relate them to evaluation practices. The authors also aim to develop recommendations for strengthening the evaluation of health information outreach. The paper focuses on evaluating the outcomes of the outreach, rather than the process of conducting the projects.

BACKGROUND

What are desired characteristics of health information outreach evaluations?

Measuring the Difference echoes general evaluation theory in describing many best practices of program evaluation. It also stresses points that are unique to health information outreach. Several of these are related to planning the projects themselves, as good evaluation is closely related to the projects' objectives. The suggestions include: (1) grounding the project's activities in existing theories of behavioral change; (2) conducting a community assessment prior to finalizing the program's specific objectives; (3) identifying measurable objectives and ensuring that evaluation measures reflect those objectives; and (4) developing an evaluation plan with a well-justified choice of evaluation methods (e.g., quantitative versus qualitative) and design (e.g., experimental versus nonexperimental). In addition, while health information outreach projects aim to enable individuals, they usually take place in community organizations. Many institutional and community factors can contribute to or hinder success. It is therefore important for evaluations to address complex environmental factors and include measures of community sustainability and institutional change in the outcomes 5. To ensure that the results of evaluation are useful, it is also important that evaluations be “participatory,” drawing on on-the-ground organizations and community members to determine criteria for project success and failure and providing in-depth description of success enablers and barriers 6.

What effects of health information outreach can be measured?

The greatest challenge of health information outreach is the gap between what researchers would like to measure and what can be realistically measured. Health information outreach is predicated on the assumption that providing individuals with health information improves their health behaviors and outcomes. At the same time, capturing actual behavioral and health-status outcomes resulting from small-scale projects is often neither realistic nor logistically feasible. For this reason, measurement must focus on important mediating variables.

Although health librarianship does not provide a model, a number of social and behavioral theories allow hypotheses about what these variables might be. Health information outreach projects typically aim to make individuals aware of health information and equip them with information-seeking skills. It is reasonable to expect that greater awareness and skills will lead to more instances of online health information seeking, thus contributing to health knowledge. Research in health literacy suggests that knowledge (which is a component of health literacy) indeed contributes to more desirable actions and outcomes. For example, studies found that diabetes patients with higher levels of health literacy had better glycemic control and were less likely to suffer complications such as retinopathy 7. Another study found that greater health literacy decreased the risk of hospitalization among seniors 8. The effect of health literacy on health holds even when various social, demographic, and health status factors are taken into account 9. Health literacy research suggests that health knowledge is a valuable mediating factor, worth attempting to increase and to measure in evaluations.

While knowledge is important, it alone does not determine health behaviors. A number of health behavior theories attempt to identify cognitive, social, and emotional factors that influence individuals' actions. For example, the “Health Belief Model” views health behaviors as the outcome of four factors: (1) perceived susceptibility to a condition, (2) perceived severity of that condition, (3) perceived benefits of a health-related behavior for preventing or affecting the condition, and (4) perceived barriers to the behavior 10. While these four factors are predicated on health knowledge (e.g., perceived severity of a condition is related to the understanding of its disease mechanism), they are also shaped by emotions and social norms. Other health behavior theories similarly emphasize that the impact of knowledge on behavior is complicated by many mediating factors. One commonly cited mediator is self-efficacy, or one's confidence in the ability to succeed in performing an action (e.g., plan a healthy meal, exercise) 11. Jointly, these theories suggest that self-efficacy and beliefs about the role of health information are important targets for programmatic intervention and assessment.

In summary, although measuring the impact of health information on health behaviors and outcomes is desirable, it is challenging; measuring mediating factors such as awareness, information-seeking skills, information use, attitudes, and self-efficacy can nevertheless provide valuable information about the effectiveness of health information outreach efforts. Since outreach projects are typically conducted in community organizations that can amplify or stifle their effects, it is also essential to measure the institutional impact of projects. Carefully designed evaluations can help build the evidence base for best practices in health information outreach and contribute to theories about how information access impacts health behaviors and outcomes.

METHODS

Strategies for article selection

For the purpose of this project, we chose to focus our analysis on publicly available resources, thus restricting our scope to journal publications and excluding unpublished documents, such as grantees' reports to funding agencies. We performed literature searches in PubMed; CINAHL; Library, Information Science & Technology Abstracts (LISTA); and Communication & Mass Media Complete to identify articles published between 2000 and 2010 that described consumer health information outreach projects, using the strategies described below:

PubMed: (health education[majr] OR health promotion[majr] OR consumer health information[majr] OR outreach[tw] OR information dissemination[majr]) AND (internet[majr] OR computer-assisted instruction[majr]) AND (health knowledge, attitudes, practice[mh] OR outcome assessment OR program evaluation[mh] OR outcome*) AND 2000 : 2010[dp]

CINAHL, LISTA, and Communication & Mass Media Complete (separate searches in each)†: 1-(“health information” OR “consumer health information” OR “computer-assisted instruction”) and (“health promotion” OR outreach OR “health education” OR “patient education”) and “best practice*” (Limiters - Publication Date: 20000101-20101231)

2- health information AND outreach (Limiters - Publication Date: 20000101-20101231)
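For readers who wish to rerun the PubMed strategy programmatically, the following is a minimal sketch. It assumes the use of NCBI's E-utilities `esearch` endpoint, which the authors do not report using; the function name `build_esearch_url` and the `retmax` value are illustrative choices. The sketch only constructs the request URL and does not contact the server.

```python
from urllib.parse import urlencode

# NCBI E-utilities search endpoint (assumed here; the original searches
# were presumably run through the PubMed web interface).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"

# PubMed strategy copied from the text above.
PUBMED_QUERY = (
    "(health education[majr] OR health promotion[majr] OR "
    "consumer health information[majr] OR outreach[tw] OR "
    "information dissemination[majr]) AND "
    "(internet[majr] OR computer-assisted instruction[majr]) AND "
    "(health knowledge, attitudes, practice[mh] OR outcome assessment OR "
    "program evaluation[mh] OR outcome*) AND 2000 : 2010[dp]"
)


def build_esearch_url(term: str, retmax: int = 1000) -> str:
    """Return an esearch URL for `term` (hypothetical helper)."""
    params = {
        "db": "pubmed",      # search the PubMed database
        "term": term,        # the query string, URL-encoded by urlencode
        "retmax": retmax,    # maximum number of record IDs to return
        "retmode": "json",   # ask for a JSON response
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


url = build_esearch_url(PUBMED_QUERY)
print(url)
```

Because PubMed indexing changes over time, a rerun today would not necessarily retrieve the same set of records as the original search.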

In reviewing the results, we excluded programs aimed at reducing or promoting specific behaviors (e.g., smoking cessation programs) and included programs that focused on providing access to health information resources, raising awareness of authoritative health information resources on the Internet, or teaching how to find and evaluate health information. We excluded programs that did not include some type of program evaluation. Finally, we excluded papers that described large national or regional efforts that combined many individual projects (e.g., “Tribal Connections Health Information Outreach: Results, Evaluation, and Challenges” by Wood et al. 12), because the unit of our analysis was the individual project and because we wanted to focus on the efforts and activities of small-to-medium-sized organizations. While culling through the resulting articles and their reference lists, we also noticed that many came from the Journal of the Medical Library Association (JMLA) and the Journal of Consumer Health on the Internet, so we complemented database searching with hand-searching of these two journals. Since the Journal of Consumer Health on the Internet is not indexed for MEDLINE, manual searching of this publication turned out to be crucial. We also checked the reference lists of articles found in both the database and journal searches to identify additional papers.

We narrowed the search from the more than 700 retrieved initially to 33 articles (Appendix, online only) that fit our inclusion criteria with 70% of them coming from the JMLA (9 articles) and the Journal of Consumer Health on the Internet (14 articles). The remaining 10 articles were published in a variety of library, biomedical, psychological, and informatics journals.

Coding scheme development and inter-rater reliability

To develop a coding scheme, two authors (Whitney and Keselman) engaged in reading and iterative discussions of six randomly selected articles. In doing so, the two authors postulated and refined codes that pertained to (1) design of outreach activities and (2) project evaluation. Some coding categories were imposed in a top-down fashion and stemmed from the evaluation theory (e.g., types of posttest: immediate versus delayed) and NLM outreach goals (e.g., sustainability indicators). Others emerged from the data (e.g., match between stated objectives and evaluation measures). Once the preliminary scheme was developed, the two authors tested its feasibility by applying it to three articles that used different evaluation approaches, which resulted in a slight modification of the coding scheme. Table 1 (online only) presents the final coding scheme.

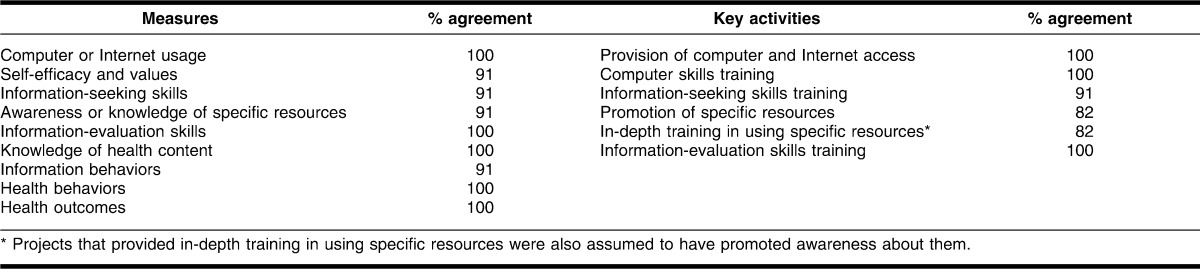

Once the scheme was developed, the two authors applied it to eleven articles in order to assess inter-rater reliability for two coding categories, “Key Activities” and “Measures.” These categories were selected because of their centrality to the study and their straightforward quantitative nature. As Cohen's kappa would not be a reliable measure of inter-rater reliability due to low variability in the outcomes (e.g., few studies attempted to measure health behaviors), the two authors used simple percent agreement (Table 2). Further research to confirm sufficient inter-rater reliability may be conducted with a different dataset with known variability in the outcome (not available at present).

Table 2.

Percent agreement between two raters on measures and key activities categories

Once the agreement level was judged satisfactory, one author (Whitney) coded the remaining articles. Finally, coding in the categories that required subjective judgment (e.g., match between stated objectives and reported outcomes) was confirmed in discussions.
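The choice of percent agreement over Cohen's kappa can be illustrated with a short sketch. The ratings below are hypothetical and are not the study's data; they assume a binary code (e.g., "measured health behavior") applied by two raters to eleven articles, where the code is rarely present.

```python
from collections import Counter


def percent_agreement(a, b):
    """Share of items on which two raters assigned the same code."""
    if len(a) != len(b) or not a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a, b):
    """Cohen's kappa: agreement corrected for chance agreement."""
    n = len(a)
    p_o = percent_agreement(a, b)               # observed agreement
    count_a, count_b = Counter(a), Counter(b)   # marginal code frequencies
    # Chance agreement: sum over codes of the product of marginal proportions.
    p_e = sum(count_a[k] * count_b[k] for k in set(a) | set(b)) / (n * n)
    if p_e == 1.0:
        return float("nan")                     # undefined when no variability
    return (p_o - p_e) / (1 - p_e)


# Hypothetical ratings: each rater marks the code as present (1) once,
# but on different articles.
rater_a = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
rater_b = [0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

agreement = percent_agreement(rater_a, rater_b)
kappa = cohens_kappa(rater_a, rater_b)
print(f"percent agreement = {agreement:.2f}, kappa = {kappa:.2f}")
```

In this hypothetical case the raters agree on nine of eleven articles, yet kappa is slightly negative because nearly all of that agreement is expected by chance when a code is rare. This is the behavior that makes kappa hard to interpret for low-variability categories.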

RESULTS

Format of existing programs

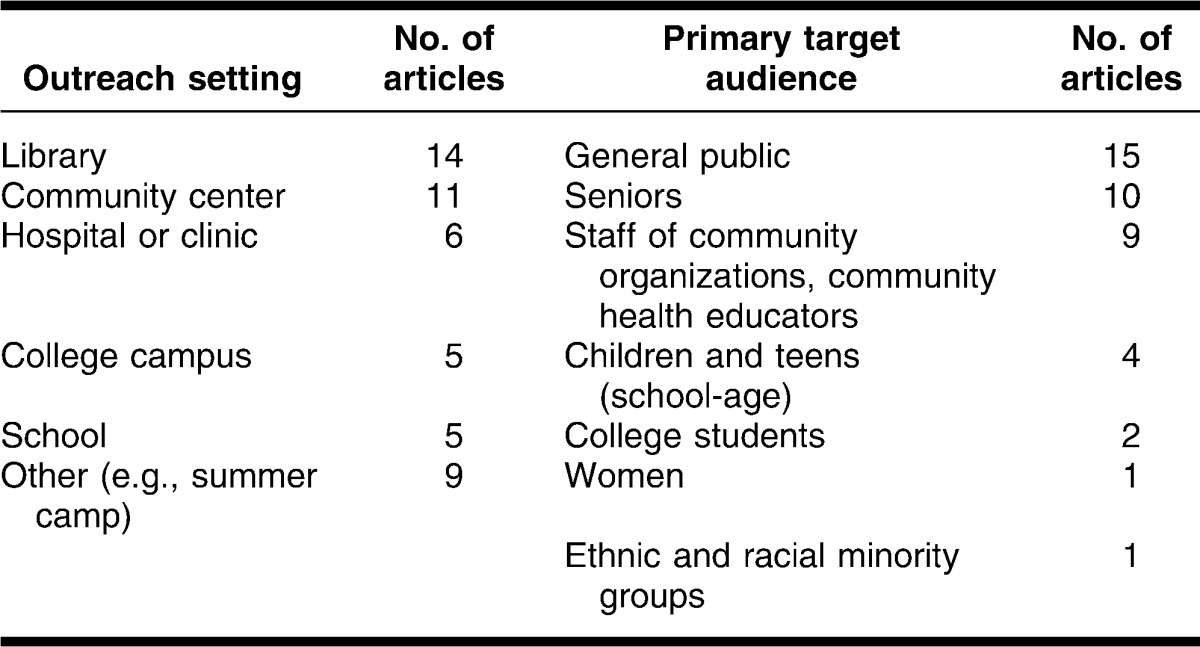

Outreach programs described in the literature spanned a range of formats. Programs' settings and targeted populations are presented in Table 3. Twenty out of thirty-three programs were funded by NLM, usually through subcontracts awarded by a Regional Medical Library (e.g., Houston Academy of Medicine Library).

Table 3.

Settings and primary target audiences

Many projects utilized multiple settings and reached out to more than one primary audience. Seventeen projects conducted needs assessment among target audience members in their community prior to the outreach activities and based their activities on the results of needs assessment. These assessments ranged from focus groups and community interviews to review of public library reference statistics. Many projects also based their activities and approaches on a literature review of information needs and health statistics reported for their target audience.

Eighteen of the thirty-three projects involved multi-session training, ranging between two and eight sessions, for at least some of the participants. Formats of training activities varied, and most programs included multiple activities. All but one involved a hands-on component where participants worked with health information on the web during training. Twenty-four programs included a lecture component; twenty-one included a demonstration of a resource by an instructor or trainer. Sixteen projects included some other activity types, such as exhibits, role playing exercises, or group discussions.

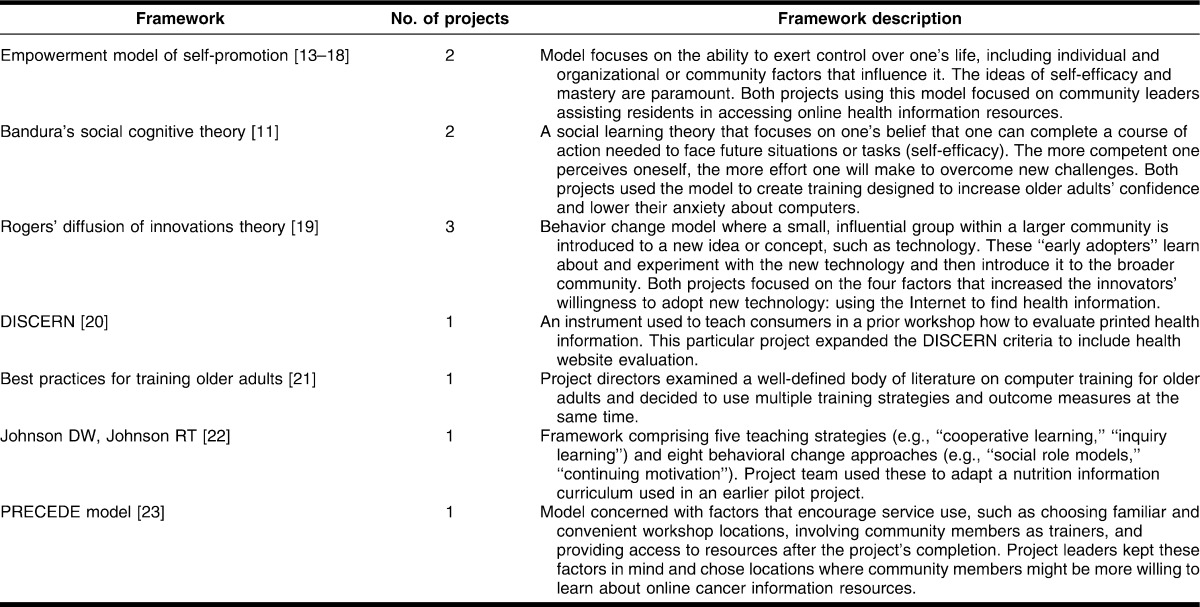

Theoretical frameworks

Only nine of thirty-three projects were grounded in explicit theoretical frameworks or models. Table 4 provides a brief description of these frameworks and their use in the projects.

Table 4.

Theoretical frameworks at the foundation of outreach efforts

Stated broad goals and objectives of the projects

Measurable objectives are the key to successful evaluation and, ultimately, successful programs. Specificity of goal statements varied from easily measurable (e.g., “improving health information-evaluation skills among minority adolescents”) to rhetorical (e.g., “contribute to better health outcomes”). Overall, all goal statements could be classified into three formats. The first, evident in two of the thirty-three papers, presented the goals in terms of conducting an activity (e.g., “to deliver series of workshops to individuals living with HIV/AIDS in rural areas”‡). The second format, evident in twelve of thirty-three papers, presented the goals in terms of conducting an activity in order to affect some intermediate variable or set of variables, such as attitude, skills, or knowledge (e.g., “to conduct training sessions increasing information-evaluation skills among minority adolescents”). The expectation that this change would lead to a change in health behavior and outcomes was implicit. The third format, evident in nineteen of thirty-three papers, presented the goals in terms of conducting an activity to affect intermediate variables and made an explicit statement about the expected positive effect on behaviors and health outcomes (e.g., “to deliver workshops teaching residents to recognize authoritative health information, thus improving their information-evaluation skills and ultimately contributing to better health outcomes”).

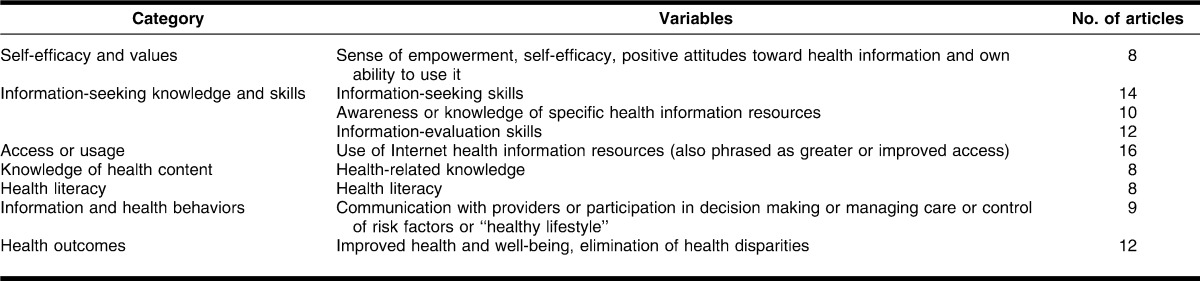

Unlike the first format, the latter two include specific desired outcomes and are therefore potentially measurable. Clarity of actual outcome variables ranged from highly specific (e.g., “increase awareness of MedlinePlus”) to vague and difficult to translate into measurable language (e.g., “facilitate information support”). Yet some others were clear but likely difficult to measure because of the complexity of the concept (e.g., health literacy). Table 5 presents immediate and ultimate goal variables, extracted from the goal statements and divided into seven categories. Although the articles were not always clear in distinguishing between immediate and ultimate goals, the latter typically pertained to the last three categories: health literacy, behaviors, and health outcomes. Correspondence between goals or objectives and actual outcome variables is discussed in a separate section.

Table 5.

Variables extracted from goals or objectives statements

Key activities

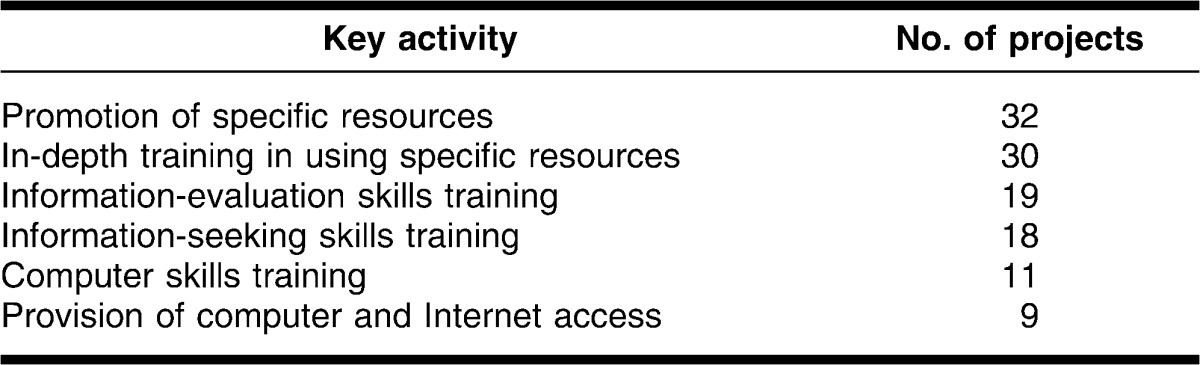

Most projects used a broad scope of activities (Table 6). The average number of key activities in a project was 3.6, ranging between 2 and 5.

Table 6.

Key activities

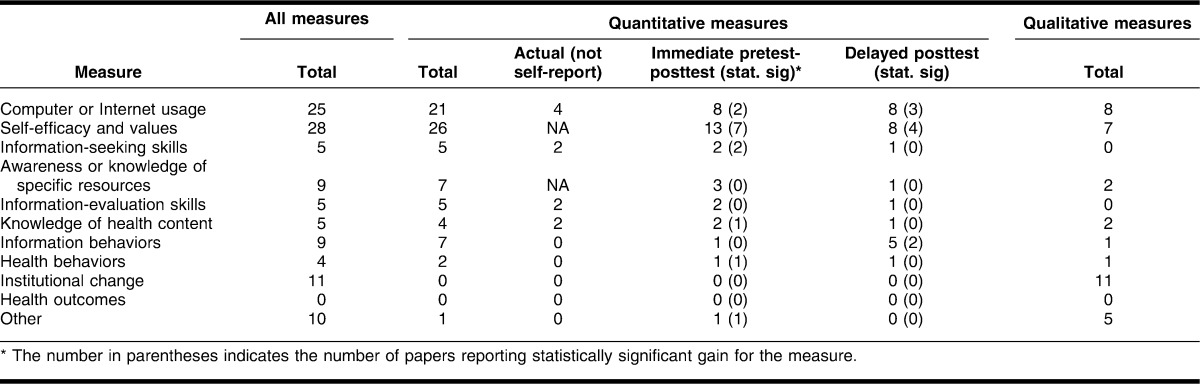

Evaluation and reporting of outcomes

The projects reported assessing a wide range of quantitative and qualitative outcome measures (Table 7). The rigor of assessment varied: Five studies treated quantitative variables as complex constructs and measured them by using structured instruments. Four studies used commercial web analytics software tools. Others assessed quantitative measures via a simple question or two. Qualitative measures also differed in their depth, as described below. The level of detail in reporting the results (e.g., inclusion of tables or figures, description of the type of survey instrument used, provision of copies of survey instruments, or reporting of formal techniques for analyzing qualitative data) also varied widely.

Table 7.

Number of studies reporting outcome measures

The average number of measures per article was 3.33, ranging from 0 to 6. The match between specific objectives (not long-term goals) and reported outcome measures was deemed “perfect” in only 10 of 33 studies. Seventeen studies were coded as having partial matches. Six studies had no match between specific objectives and reported outcomes.

Quantitative measures

Quantitative measures were more prevalent than qualitative ones for most variables. Most commonly, obtaining these measures involved administering questionnaires and computing scores; as noted earlier, a few studies collected web use data. Few studies presented participants with objective tests of knowledge and skills; most relied on self-report.

Table 7 shows that pretest-posttest design was rare, with most projects presenting questionnaires at the end of outreach activities only. This could in part be attributed to situations in which a pretest appeared irrelevant because of an assumed zero baseline. For example, project leaders might have assumed that very few high school students or community participants had any knowledge of NLM resources prior to training, so any knowledge demonstrated during the posttest would be interpreted as gained in the course of the project. When a pretest-posttest design was employed, it was not always clear whether a test of statistical significance was performed. Reported statistically significant gains were uncommon for all variables. With the exception of efficacy and values, delayed posttest measures were almost as frequent as immediate posttest measures. The variable most commonly measured some weeks or months after the intervention was information behavior (measured in five projects).
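As an illustration of what testing a pretest-posttest gain for statistical significance can involve, the sketch below applies an exact paired sign-flip permutation test. This technique was chosen here because it needs only the Python standard library; it is not a method reported by any of the reviewed projects, and the scores are hypothetical.

```python
from itertools import product


def paired_sign_flip_test(pre, post):
    """Exact two-sided sign-flip permutation test for paired scores.

    Returns (mean gain, p-value). Under the null hypothesis of no effect,
    each participant's gain is equally likely to be positive or negative,
    so every assignment of signs to the gains is enumerated.
    Enumeration is feasible only for small samples (2**n sign patterns).
    """
    if len(pre) != len(post) or not pre:
        raise ValueError("pre and post must be non-empty and of equal length")
    diffs = [b - a for a, b in zip(pre, post)]
    observed = abs(sum(diffs))
    extreme = 0
    total = 0
    for signs in product((1, -1), repeat=len(diffs)):
        total += 1
        # Count sign patterns at least as extreme as the observed total gain.
        if abs(sum(s * d for s, d in zip(signs, diffs))) >= observed:
            extreme += 1
    return sum(diffs) / len(diffs), extreme / total


# Hypothetical knowledge-test scores for eight workshop participants.
pre_scores = [2, 3, 1, 4, 2, 3, 2, 1]
post_scores = [4, 5, 3, 4, 5, 4, 4, 3]

mean_gain, p_value = paired_sign_flip_test(pre_scores, post_scores)
print(f"mean gain = {mean_gain}, p = {p_value}")
```

For these hypothetical scores the mean gain is 1.75 points and the two-sided p-value is 4/256, or about 0.016. Reporting both the gain and the p-value, together with the name of the test, would remove the ambiguity about whether significance was tested at all.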

Qualitative measures

Of the thirty-three projects analyzed, sixteen included qualitative data from focus group sessions, interviews, and journal entries (Table 7). Most qualitative data were gathered immediately after the intervention. Three projects, however, included a delayed data collection. Only two of the projects collecting qualitative data reported using formal analytic techniques involving the coding and thematic categorization of responses. Qualitative evaluations measured institutional change (eleven projects); usage, with participants providing examples of their information use (eight projects); and self-efficacy (seven projects). In addition, five projects measured variables that we placed in the “Other” category, such as trainees' satisfaction with the training. Although variables such as usage and self-efficacy can be measured quantitatively, using qualitative methods yielded descriptive examples and explanations, which enriched the quantitative data.

Institutional change

Eleven project evaluations made mention of change that happened at the organizational or institutional level as a result of the intervention. These usually did not constitute in-depth qualitative measures but rather brief statements made during focus groups or interviews. The majority of the organizations where institutional change was reported were either libraries (six projects) or educational institutions (five projects). Community health organizations constituted the rest (three projects). The most frequently cited change had to do with the integration of the resources introduced in the project into existing curricula (five projects), followed closely by the routine use of project resources by library or community health agency staff when answering customers' health information questions (four projects). Another significant change involved the increased role of library staff in the larger organization and recognition of their value in delivering services to the organizations' stakeholders (two projects).

Reported enablers of and barriers to success and sustainability indicators

One of the objectives of this review was to characterize the projects' ability to assess enablers of and barriers to their successes. While this assessment was not done via formal methods, the vast majority of papers reported at least partial success for their projects and included a discussion of enablers of and barriers to success. They also often mentioned some program elements that lasted beyond the intervention.

Enablers

The most commonly mentioned success enablers included collaborating with community-based organizations, conducting community assessments prior to the intervention, and using behavior change theories or established research in the training of specific populations (e.g., older adults). Other enablers included involving the target population in the planning and execution of the intervention, demonstrating cultural sensitivity toward the target population, engaging participants with previous computer and Internet experience, and, in projects with multiple sessions, revising project activities that participants found confusing.

Barriers

The most significant barriers to project success mentioned by investigators were high attrition rates from one session to another in multi-session interventions or low attendance in single-session interventions, time or scheduling constraints among the participants, problems with recruitment or participant transportation to the training site, lack of previous computer or Internet experience, and funding issues.

Sustainability indicators

Twenty-five articles described some elements of the program that lasted beyond the period of the intervention. Eleven of these were reports of institutional change, described in an earlier section. Other indicators included training materials in the form of electronic presentation handouts, workbooks with additional exercises, or project websites. One library renewed subscriptions to some of the databases introduced in the original project in order to retain access to the resources used in the original training. Finally, some project leaders counted the maintenance of computer workstations and Internet service as evidence of sustainability beyond the original project.

DISCUSSION

Evaluations in the existing outreach projects

Our analysis found tremendous variation in the quality of evaluation methods, outcome measures, and reporting among outreach projects. Projects sometimes did not define exactly what they intended to teach (other than introduce specific resources) and measured what seemed easiest to measure, such as workshop satisfaction, instead of measuring variables related to the projects' objectives. The quality of reporting also varied widely. For example, a few project evaluations with a pretest-posttest design included information about statistical significance testing, while others reported pretest-to-posttest improvement without mentioning significance testing. It was therefore not clear whether a significance test was not conducted; the test was conducted and the results were not found significant; or the results were statistically significant, but their significance was not reported in the paper. In addition, two projects utilizing qualitative data performed a formal analysis and thematic coding to enrich the quantitative data collected for variables such as self-efficacy and resource usage; however, most projects providing information about qualitative data did not report using any formal analytic techniques. This type of variation in reporting makes it impossible to develop a theory about best practices for successful health information outreach.

The impact of the projects

While many projects demonstrated success for variables such as improving computer or Internet usage and self-efficacy, only one-third described the long-term impact of the projects on health information-seeking behavior at the individual or institutional level. In many cases, the project was planned as a single session workshop with no long-term follow-up, which prevented project staff from determining the long-term effects of the intervention on the participants or the broader community. Projects involving multiple sessions over a longer period of time with a delayed post-evaluation were better able to demonstrate long-term impact.

Recommendations for community organizations

Project leaders must carefully plan outcome evaluation, with objectives and measures determined beforehand. Basing the project on a theoretical framework and conducting a community needs assessment are helpful here. Theories such as Rogers' diffusion of innovations 19 or Bandura's social cognitive theory 11, together with a community assessment, can help planners focus on project activities designed to meet objectives that may lead to lasting behavior change. As mentioned earlier, NLM's Measuring the Difference and the Outreach Evaluation Resource Center offer evaluation guidance to project planners 3,4. Using these tools can help planners decide on appropriate project activities and outcome measures. It also seems desirable to strive for institutional change, rather than just providing information to one cohort of participants at a single point in time, and to decide in advance how to document this change.

While most projects that we analyzed demonstrated short-term success, those of us in health information outreach need to move toward measuring long-term goals. It is unlikely that small community organizations will ever be able to measure the impact on behavioral change and health outcomes in one community project. However, we should look at ways to improve and measure overall health literacy, which has been shown to correlate with improved health behavior and outcomes. If we believe that the dimensions of health literacy include information literacy, critical evaluation skills, and content knowledge, then we need to specifically target related skills and then assess their development in the course of outreach and training.

Recommendations for funding agencies

Suggestions need to be realistic. Many health information outreach projects are carried out under time and financial constraints, so developing elaborate plans is a significant hardship. On the other hand, when funding is tight, accountability is especially important. Funding agencies, including NLM, can support community organizations in their evaluation efforts by providing training and logistical support and streamlining reporting requirements. For example, most funding agencies require that organizations complete project evaluations and describe outcomes in their final reports. However, given the substantial variability in outcomes reporting, funders could make reporting instructions more explicit so that project planners have a clearer idea of what they must include in their evaluations and final reports. Huber et al. offer a similar suggestion in their outreach impact study published in October 2011 24. Funders may also want to offer easy-to-adopt guides and instruments, provide consultations to emergent and active projects, and deliver education about evaluation practices. Guidelines for improving evaluation, including report templates and lists of variables to include, would let project leaders know what funders expect to see in the final report. Funding organizations should also be mindful of their awardees' funding cycles so that awardees have adequate time to complete evaluations as well as project activities.

Recommendations for basic research

Although helpful, the data from community projects are necessarily somewhat limited and will not provide a complete understanding of the relationship between health information outreach and behavioral change. In a position paper about evaluation of community-based information outreach, field leader Friedman suggests that measuring behavioral change should not be the task of community-based organizations: instead, they should engage in “smallball” evaluation that provides valuable in-depth information about intermediate variables at critical points in project implementation 25. However, the field of community outreach should also fund and encourage research interventions where the main goal is not to obtain maximum possible gain in a specific sample, but to isolate causal factors that contribute to long-lasting behavioral change. While measuring lasting behavioral change at the community level is beyond the scope of most small community projects, research and outreach studies carried out by larger academic institutions can aim to gauge this change and attempt to rely on actual, rather than self-reported, outcomes. They should also aim to develop comprehensive measurable dimensions of institutional change and sustainability, both qualitative and quantitative. This type of research should then help future project planners develop activities for long-term community impact.

CONCLUSIONS

This review suggests that while most community organizations involved in health information outreach accomplish their goals, their approach to evaluation does not allow conclusions to be drawn about the impact of various characteristics of health information outreach on attitudes, knowledge, behaviors, and outcomes. Improving the rigor of evaluation studies and assembling an informative body of knowledge requires a joint effort by funding agencies, community organizations, and academic research institutions.

Footnotes

This research is supported by the Intramural Research Program of the National Institutes of Health, National Library of Medicine.

This article has been approved for the Medical Library Association's Independent Reading Program <http://www.mlanet.org/education/irp/>.

Supplemental Table 1 and a supplemental appendix are available with the online version of this journal.

All examples are hypothetical.

REFERENCES

- 1. Committee on Health Literacy, Board on Neuroscience and Behavioral Health. Health literacy: a prescription to end confusion [Internet]. Washington, DC: The National Academies Press; 2004 [cited 10 Apr 2012]. p. 346. <http://www.nap.edu/openbook.php?record_id=10883>.

- 2. National Library of Medicine. Charting the course for the 21st century: NLM's long range plan 2006–2016 [Internet]. Bethesda (MD): The Library, National Institutes of Health; 2007 May 14 [updated 14 May 2007; cited 27 Mar 2012]. <http://www.nlm.nih.gov/pubs/plan/lrp06/report/default.html>.

- 3. Burroughs CM, Wood FB, editors. Measuring the difference: guide to planning and evaluating health information outreach [Internet]. Bethesda, MD: National Network of Libraries of Medicine, National Library of Medicine; 2000 Sep [cited 27 Mar 2012]. p. 130. <http://www.nnlm.gov/evaluation/guide/>.

- 4. National Network of Libraries of Medicine. Outreach evaluation resource center [Internet]. Bethesda (MD): National Library of Medicine [updated 3 Jan 2012; cited 27 Mar 2012]. <http://www.nnlm.gov/evaluation/>.

- 5. Olney CA. Using evaluation to adapt health information outreach to the complex environments of community-based organizations. J Med Lib Assoc. 2005 Oct;93(4 suppl):S57–S67.

- 6. Ottoson JM, Green LW. Community outreach: from measuring the difference to making a difference with health information. J Med Lib Assoc. 2005 Oct;93(4 suppl):S49–S56.

- 7. Schillinger D, Grumbach K, Piette J, Wang F, Osmond D, Daher C, Palacios J, Sullivan GD, Bindman AB. Association of health literacy with diabetes outcomes. JAMA. 2002 Jul 24;288(4):475–82. doi: 10.1001/jama.288.4.475.

- 8. Baker DW, Gazmararian JA, Williams MV, Scott T, Parker RM, Green D, Ren J, Peel J. Functional health literacy and the risk of hospital admission among Medicare managed care enrollees. Am J Public Health. 2002 Aug;92(8):1278–83. doi: 10.2105/ajph.92.8.1278.

- 9. Wolf MS, Gazmararian JA, Baker DW. Health literacy and functional health status among older adults. Arch Intern Med. 2005 Sep 26;165(17):1946–52. doi: 10.1001/archinte.165.17.1946.

- 10. Rosenstock IM. Health belief model. Encyclopedia of Psychology [Internet]. Washington (DC): American Psychological Association; 2000. 4 [cited 28 Mar 2012]. [about 78–80 p.]. <http://content.apa.org/books/10519-035>. doi: 10.1037/10519-035.

- 11. Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977 Mar;84(2):191–215. doi: 10.1037//0033-295x.84.2.191.

- 12. Wood FB, Sahali R, Press N, Burroughs C, Mala TA, Siegel ER, Fuller SS, Rambo N. Tribal connections health information outreach: results, evaluation, and challenges. J Med Lib Assoc. 2003 Jan;91(1):57–66.

- 13. Laverack G, Labonte R. A planning framework for community empowerment goals within health promotion. Health Policy Plan. 2000 Sep;15(3):255–62. doi: 10.1093/heapol/15.3.255.

- 14. Conger JA, Kanungo RN. The empowerment process: integrating theory and practice. Acad Manag Rev. 1988 Jul:471–82.

- 15. Israel BA, Checkoway B, Schulz A, Zimmerman M. Health education and community empowerment: conceptualizing and measuring perceptions of individual, organizational, and community control. Health Educ Q. 1994 Summer;21(2):149–70. doi: 10.1177/109019819402100203.

- 16. Menon ST. Psychological empowerment: definition, measurement, and validation. Can J Behav Sci/Revue canadienne des sciences du comportement. 1999 Jul;31(3):161–4. doi: 10.1037/h0087084.

- 17. Thomas KW, Velthouse BA. Cognitive elements of empowerment: an “interpretive” model of intrinsic task motivation. Acad Manag Rev. 1990 Oct:666–81.

- 18. Zimmerman MA. Handbook of community psychology. Dordrecht, Netherlands: Kluwer Academic Publishers; 2000. Empowerment theory: psychological, organizational, and community levels of analysis; pp. 43–63.

- 19. Rogers EM. Diffusion of innovations. 4th ed. New York, NY: Free Press; 2003.

- 20. Charnock D, Shepperd S, Needham G, Gann R. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health. 1999 Feb;53(2):105–11. doi: 10.1136/jech.53.2.105.

- 21. Xie B, Bugg JM. Public library computer training for older adults to access high-quality Internet health information. Lib Inf Sci Res. 2009 Sep 1;31(3):155–62. doi: 10.1016/j.lisr.2009.03.004.

- 22. Johnson DW, Johnson RT. The process of nutrition education: a model for effectiveness. J Nutr Educ. 1985 Jun;17(2 suppl):S1–S7.

- 23. Green LW. Health education models. In: Matarazzo JD, Weiss SM, Herd JA, Miller NE, editors. Behavioral health: a handbook of health enhancement and disease prevention. New York, NY: John Wiley & Sons; 1984. pp. 181–98.

- 24. Huber JT, Kean EB, Fitzgerald PD, Altman TA, Young ZG, Dupin KM, Leskovec J, Holst R. Outreach impact study: the case of the Greater Midwest Region. J Med Lib Assoc. 2011 Oct;99(4):297–303. doi: 10.3163/1536-5050.99.4.007.

- 25. Friedman CP. “Smallball” evaluation: a prescription for studying community-based information interventions. J Med Lib Assoc. 2005 Oct;93(4 suppl):S43–S48.
