Abstract

We propose a new residual for regression models of ordinal outcomes, defined as E{sign(y,Y)}, where y is the observed outcome and Y is a random variable from the fitted distribution. This new residual is a single value per subject irrespective of the number of categories of the ordinal outcome, contains directional information between the observed value and the fitted distribution, and does not require the assignment of arbitrary numbers to categories. We study its properties, describe its connections with other residuals, ranks and ridits, and demonstrate its use in model diagnostics.

Keywords: Model diagnostics, Ordinal outcome, Ordinal regression, Residual

1. Introduction

Residuals are an important component of regression analysis. The most basic residual, from linear regression, has many nice features. Specifically, the residual

- (a) results in only one value per subject;
- (b) reflects the overall direction of the observed value compared with the fitted value;
- (c) is monotonic with respect to the observed value for those with the same covariates;
- (d) has a range of possible values that is symmetric about zero; and
- (e) has expectation zero.

This list is by no means comprehensive, and some of these features are more important than others. But these combined features make the linear regression residual popular and useful for diagnostics and tests of conditional independence.

Residuals exist for ordinal outcomes, notably Pearson, cumulative Pearson and deviance residuals (McCullagh & Nelder, 1989). Although these residuals have some use for checking model assumptions and fit, they do not satisfy (a)–(e). Liu et al. (2009) proposed some new residuals; their residual based on the sum of cumulative residuals satisfies (a)–(e), but it implicitly assigns equal-distance scores to the categories; see the Supplementary Material. For ordinal outcomes, in addition to (a)–(e), the ideal residual

- (f) should preserve order without assigning arbitrary scores to the categories.

A residual satisfying (a)–(f) could permit the application of diagnostic tools developed for linear regression to ordinal models, and could provide a framework for testing conditional independence with ordinal variables. Here we study a new residual for ordinal data. It was introduced as a tool for constructing tests of association between ordinal variables (Li & Shepherd, 2010). However, its properties and other uses have not been studied.

2. New residual for ordinal outcomes

2.1. Definition

Consider a set of s ordered categories, S = {1, . . . , s}, with order 1 < ⋯ < s. For a category y in S and a distribution F over S, we define a residual

$$r(y, F) = E\{\mathrm{sign}(y, Y)\} = \mathrm{pr}(y > Y) - \mathrm{pr}(y < Y),$$

where Y is a random variable with distribution F, and sign(a, b) is −1, 0 and 1 for a < b, a = b and a > b, respectively.

In ordinal regression models, we fit an ordinal outcome variable Y on covariates Z. Some common models include continuation ratio, proportional odds and other cumulative link models (Agresti, 2002). For subject i, let Yi be the outcome and let FZi;θ be the distribution of Yi given covariates Zi under a model with parameters θ. We define

$$R_i = r(Y_i, F_{Z_i;\theta}). \tag{1}$$

Given data (yi, zi) and a fitted model with parameter estimates θ̂, the residual for subject i is r̂i = r(yi, Fzi;θ̂). Notice that r̂i is not a realization of Ri, but of the random variable R̂i = r(Yi, FZi;θ̂). If θ̂ → θ in probability, then FZi;θ̂ → FZi;θ and R̂i → Ri in distribution. Therefore, moment properties of Ri are applicable to R̂i asymptotically.

Our residual has all the desirable features listed in § 1; (a), (b) and (f) are obvious and (c)–(e) will result from Properties 1 and 8 below. Proofs of all the properties are given in the Appendix.

2.2. Properties of r(y, F), Ri and r̂i

First consider a distribution F = (p1, . . . , ps) over S. The corresponding cumulative probabilities are γj = ∑k⩽jpk, with γs = 1. For convenience, we define γ0 = 0. Then for category j ∈ S, r(j, F) = γj−1 − (1 − γj) = γj−1 + γj − 1. The following properties hold:

Property 1. −1 ⩽ r(1, F) ⩽ ⋯ ⩽ r(s, F) ⩽ 1;

Property 2. when s = 1, r(1, F) = r(1, 1) = 0, where F = (1) is a point mass;

Property 3. r(j, F) = −r(s − j + 1, G), where G = (q1, . . . , qs) = (ps, . . . , p1) with qj = ps−j+1;

Property 4. as functions of (γ1, . . . ,γs−1), ∂r(j, F)/∂γj = ∂r(j + 1, F)/∂γj.

Property 1 implies (c) and (d). Property 3 means that when the order of the categories is reversed the residual has the same magnitude but the opposite sign. Property 4 implies that when all probabilities are fixed except for pj and pj+1, the residuals for categories j and j + 1 change at the same rate as γj, or equivalently, pj, changes.
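The closed form r(j, F) = γj−1 + γj − 1 is simple to evaluate. The following Python sketch computes the residual for every category and checks Properties 1 and 3 numerically; the function name `residuals` and the probabilities are illustrative assumptions and do not come from the paper.

```python
from itertools import accumulate


def residuals(p):
    """Return [r(1, F), ..., r(s, F)] for F = (p_1, ..., p_s).

    Uses r(j, F) = gamma_{j-1} + gamma_j - 1 with gamma_0 = 0.
    """
    gamma = [0.0] + list(accumulate(p))  # gamma[j] = p_1 + ... + p_j
    return [gamma[j - 1] + gamma[j] - 1.0 for j in range(1, len(p) + 1)]


F = [0.1, 0.2, 0.3, 0.4]  # illustrative probabilities (assumption)
r = residuals(F)

# Property 1: residuals are nondecreasing in j and lie in [-1, 1].
monotone = all(-1.0 <= a <= b <= 1.0 for a, b in zip(r, r[1:]))

# Property 3: reversing the category order flips the sign of the residual.
r_rev = residuals(F[::-1])
s = len(F)
antisymmetric = all(abs(r[j] + r_rev[s - 1 - j]) < 1e-12 for j in range(s))

print(r, monotone, antisymmetric)
```

Only cumulative probabilities enter the computation, so no numerical scores are attached to the categories.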

If two adjacent categories t and t + 1 are merged, with distribution G = (q1, . . . , qs−1), where qj = pj for j < t, qt = pt + pt+1 and qj = pj+1 for j > t, then the branching property (Brockett & Levine, 1977),

Property 5. r(j, G) = r(j, F) for j < t, r(t, G) = {pt r(t, F) + pt+1 r(t + 1, F)}/(pt + pt+1), and r(j, G) = r(j + 1, F) for j > t,

ensures robustness to the number of categories; that is, the residual for the new category is a weighted average of those of the merged categories with weights proportional to their probabilities, and the residual remains the same for the other categories.
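The branching property can be checked on a small example. In the sketch below, the helper `merge_adjacent` and the probabilities are hypothetical; the code merges categories t and t + 1 and compares the residual of the merged category with the probability-weighted average of the two original residuals.

```python
from itertools import accumulate


def residuals(p):
    """r(j, F) = gamma_{j-1} + gamma_j - 1 for j = 1, ..., s."""
    gamma = [0.0] + list(accumulate(p))
    return [gamma[j - 1] + gamma[j] - 1.0 for j in range(1, len(p) + 1)]


def merge_adjacent(p, t):
    """Merge categories t and t + 1 (1-indexed) of the distribution p."""
    return p[:t - 1] + [p[t - 1] + p[t]] + p[t + 1:]


F = [0.1, 0.2, 0.3, 0.4]  # illustrative probabilities (assumption)
t = 2
G = merge_adjacent(F, t)
rF, rG = residuals(F), residuals(G)

# Weighted average of the residuals of the two merged categories.
weighted = (F[t - 1] * rF[t - 1] + F[t] * rF[t]) / (F[t - 1] + F[t])

print(rG[t - 1], weighted)  # the two values should agree
print(rG[0], rF[0])         # unchanged category below t
print(rG[2], rF[3])         # unchanged category above t + 1
```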

Properties 1–5 are sensible for a residual measure for ordinal outcomes. Our residual is the only measure, up to a constant factor, that satisfies Properties 1–5. In fact, Properties 2, 5, and simpler versions of Properties 3 and 4, are sufficient for deriving our residual. Specifically, when s = 2, consider:

Property 3′. r{1, (p, 1 − p)} = −r{2, (1 − p, p)};

Property 4′. dr{1, (p, 1 − p)}/dp = dr{2, (p, 1 − p)}/dp.

Then the following uniqueness property holds.

Property 6. The function r(j, F) satisfies Properties 2, 3′, 4′ and 5 if and only if r(j, F) = c{γj−1 −(1 − γj)}, where c is an arbitrary constant.

A similar uniqueness property was proved by Brockett & Levine (1977) while studying the properties of ridits (Bross, 1958; Agresti, 1984). In fact, our residual is closely related to ridits, which have been used for scoring levels of an ordinal variable. The ridit for level j is riditj = γj−1 + pj/2 = (γj−1 + γj)/2, and the mean ridit is 1/2. The following property holds:

Property 7. r(j, F)/2 = riditj − 1/2.

Now consider a random variable Y over S, with distribution F = (p1, . . . , ps). Then R = r(Y, F) is a random variable, for which the following hold:

Property 8. E(R) = 0;

Property 9. var(R) = (1 − ∑j pj³)/3, or alternatively, var(R) = ∑a<b papb − ∑a<b<c papbpc;

Property 10. when p1 = ⋯ = ps = 1/s, var(R) reaches its maximum, (1 − 1/s²)/3.

Property 9 provides alternative ways of calculating var(R) and implies that var(R) does not depend on the order of the probabilities. The maximum of var(R) is an increasing function of s and it approaches the cap 1/3 fairly quickly, being 0.25, 0.30, 0.32, 0.33, for s = 2, 3, 5, 10, respectively.
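The variance results can be verified numerically. The sketch below assumes that the two closed forms in Property 9 are var(R) = (1 − ∑j pj³)/3 and var(R) = ∑a<b papb − ∑a<b<c papbpc, as the proof in the Appendix indicates, and compares both with a direct evaluation of E(R²). It also recomputes the maxima quoted for s = 2, 3, 5, 10. Function names and probabilities are illustrative.

```python
from itertools import accumulate, combinations


def residuals(p):
    """r(j, F) = gamma_{j-1} + gamma_j - 1 for j = 1, ..., s."""
    gamma = [0.0] + list(accumulate(p))
    return [gamma[j - 1] + gamma[j] - 1.0 for j in range(1, len(p) + 1)]


def var_direct(p):
    """var(R) = E(R^2), because E(R) = 0 (Property 8)."""
    return sum(pj * rj ** 2 for pj, rj in zip(p, residuals(p)))


def var_cubes(p):
    """Assumed first form of Property 9: (1 - sum_j p_j^3) / 3."""
    return (1.0 - sum(pj ** 3 for pj in p)) / 3.0


def var_pairs_triples(p):
    """Assumed second form: sum over pairs minus sum over triples."""
    pairs = sum(a * b for a, b in combinations(p, 2))
    triples = sum(a * b * c for a, b, c in combinations(p, 3))
    return pairs - triples


F = [0.1, 0.2, 0.3, 0.4]  # illustrative probabilities (assumption)
print(var_direct(F), var_cubes(F), var_pairs_triples(F))

# Maximum variance under the uniform distribution (Property 10).
max_var = {s: round(var_cubes([1.0 / s] * s), 2) for s in (2, 3, 5, 10)}
print(max_var)
```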

Now consider a random sample of n subjects, with nj subjects in category j (j = 1, . . . , s). Their empirical distribution is F̂ = (p̂1, . . . , p̂s), where p̂j = nj/n, with cumulative probabilities γ̂j = ∑k⩽j nk/n. Since there are no covariates, we can think of a constant predictor for all subjects. Then F̂ is the fitted distribution and, for subjects in category j, the residual is rj = r(j, F̂) = γ̂j−1 + γ̂j − 1 = (2∑k<j nk + nj − n)/n. If we rank these subjects, their midrank is rankj = ∑k<j nk + (nj + 1)/2, and

Property 11. rankj = T (rj), where T (r) = (n/2)r + (n + 1)/2.

The function T (r) can be viewed as a translation from the residual scale to the rank scale. Li & Shepherd (2010) presented statistics for testing the association between two ordinal variables, X and Y, while adjusting for covariates Z. One statistic was the correlation coefficient between the residuals from models for X | Z and Y | Z. Property 11 implies that when there are no covariates, our statistic is Spearman’s rank correlation coefficient between X and Y. When covariates exist, T (r) will yield adjusted ranks of the subjects, and our statistic can be interpreted as an adjusted rank correlation.
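The translation between residuals and midranks in Property 11 can be illustrated with hypothetical category counts. The sketch below computes the residuals from the empirical distribution, the midranks from the counts, and the transformed residuals T(rj); the function names and counts are assumptions made for illustration.

```python
from itertools import accumulate


def empirical_residuals(counts):
    """r_j = gammahat_{j-1} + gammahat_j - 1 for each category j."""
    n = sum(counts)
    cum = [0] + list(accumulate(counts))  # cum[j] = n_1 + ... + n_j
    return [(cum[j - 1] + cum[j] - n) / n for j in range(1, len(counts) + 1)]


def midranks(counts):
    """Midrank shared by the subjects in each category when all n are ranked."""
    cum = [0] + list(accumulate(counts))
    return [cum[j - 1] + (counts[j - 1] + 1) / 2 for j in range(1, len(counts) + 1)]


counts = [3, 5, 2]  # hypothetical n_j
n = sum(counts)
r = empirical_residuals(counts)

# T(r) = (n / 2) r + (n + 1) / 2 maps residuals to the rank scale.
T = [(n / 2) * rj + (n + 1) / 2 for rj in r]

print(T)
print(midranks(counts))
```

Because T is an increasing affine map, a Pearson correlation computed on residuals equals the one computed on midranks, which is why the statistic reduces to Spearman's coefficient when there are no covariates.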

We now focus on models for an ordinal outcome Y on covariates Z with parameters θ. For subject i (i = 1, . . . , n) and category j (j = 1, . . . , s), let γi,j = pr(Yi ⩽ j | Zi; θ); for convenience, we define γi,0 = 0. Let pi,j = pr(Yi = j | Zi) = γi,j − γi,j−1. The following moment properties hold:

Property 12. E(Ri | Zi; θ) = 0;

Property 13. var(Ri | Zi; θ) = (1 − ∑j pi,j³)/3.

Let p̂i,j and γ̂i,j be the maximum likelihood estimates of pi,j and γi,j. Then r̂i = γ̂i,yi−1 + γ̂i,yi − 1. The variance of Ri can be consistently estimated by inserting these estimates into Property 13, vâr(Ri) = (1 − ∑j p̂i,j³)/3. One could therefore calculate a standardized residual as r̂i/{vâr(Ri)}^{1/2}, which for binary Y is the Pearson residual (I{Yi=2} − p̂i,2)(p̂i,1p̂i,2)^{−1/2} (McCullagh & Nelder, 1989).
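As a minimal sketch of the standardized residual, the code below takes one subject's fitted probabilities as given, with no model fitting, and assumes the variance estimate (1 − ∑j p̂i,j³)/3. It then checks the binary case against the Pearson residual. The function names and the fitted probabilities are hypothetical.

```python
from itertools import accumulate
from math import sqrt


def residual_and_variance(y, p):
    """Residual r(y, F) and var(R) for one subject.

    y is the observed category (1, ..., s); p holds the fitted probabilities.
    The variance formula (1 - sum_j p_j^3) / 3 is an assumption of this sketch.
    """
    gamma = [0.0] + list(accumulate(p))
    r = gamma[y - 1] + gamma[y] - 1.0
    v = (1.0 - sum(pj ** 3 for pj in p)) / 3.0
    return r, v


def standardized_residual(y, p):
    r, v = residual_and_variance(y, p)
    return r / sqrt(v)


# Binary outcome: compare with the Pearson residual.
p_hat = [0.7, 0.3]  # hypothetical fitted probabilities for categories 1 and 2
standardized = [standardized_residual(y, p_hat) for y in (1, 2)]
pearson = [((y == 2) - p_hat[1]) / sqrt(p_hat[0] * p_hat[1]) for y in (1, 2)]

print(standardized)
print(pearson)
```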

Now consider a proportional odds model (McCullagh, 1980), logit{pr(Y ⩽ j | Z)} = αj + ZTβ (j = 1, . . . , s − 1), with parameters θ = (α1, . . . , αs−1, β). Under this model, our residuals are related to score residuals (Therneau et al., 1990). Let li be the loglikelihood for subject i, and Ui = Ui (θ) = ∂li/∂θ. Because Eθ (Ui) = 0, Ui is called the score residual. For proportional odds models, the following properties hold:

Property 14. r̂i = −∑j=1,…,s−1 ∂li/∂αj evaluated at θ = θ̂, where θ̂ is the maximum likelihood estimate;

Property 15. ∑i r̂i = 0.

Property 14 implies that our residual is a partially aggregated score residual over the α components of Ui (θ̂), and Property 15 is analogous to that of linear regression residuals.
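The link between the residual and the α components of the score can be examined numerically. The sketch below uses the parameterization logit{pr(Y ⩽ j | Z)} = αj + ZTβ stated above and approximates ∑j ∂li/∂αj by central differences. Under this parameterization the sum works out to 1 − γi,yi−1 − γi,yi, that is, the residual with its sign reversed. The cut-points and linear predictor are hypothetical values, not estimates from the paper.

```python
from math import exp, log


def expit(x):
    return 1.0 / (1.0 + exp(-x))


def cumulative_probs(alpha, eta):
    """gamma_0, ..., gamma_s under logit(gamma_j) = alpha_j + eta."""
    return [0.0] + [expit(a + eta) for a in alpha] + [1.0]


def loglik(y, alpha, eta):
    """Loglikelihood contribution l_i = log(gamma_y - gamma_{y-1})."""
    g = cumulative_probs(alpha, eta)
    return log(g[y] - g[y - 1])


def residual(y, alpha, eta):
    g = cumulative_probs(alpha, eta)
    return g[y - 1] + g[y] - 1.0


def summed_alpha_score(y, alpha, eta, h=1e-6):
    """Central-difference approximation to sum_j d l_i / d alpha_j."""
    total = 0.0
    for j in range(len(alpha)):
        up = alpha[:j] + [alpha[j] + h] + alpha[j + 1:]
        dn = alpha[:j] + [alpha[j] - h] + alpha[j + 1:]
        total += (loglik(y, up, eta) - loglik(y, dn, eta)) / (2 * h)
    return total


alpha = [-1.0, 0.2, 1.5]  # hypothetical cut-points, s = 4
eta = 0.4                 # hypothetical value of the linear predictor
checks = [(residual(y, alpha, eta), summed_alpha_score(y, alpha, eta))
          for y in range(1, 5)]
for r, score in checks:
    print(r, score)  # score should be close to -r
```

At the maximum likelihood estimate the α components of the total score vanish, which is what makes the fitted residuals sum to zero across subjects.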

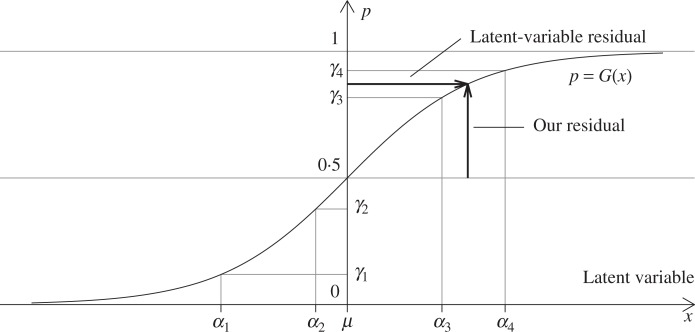

2.3. Connection to residuals on a latent variable scale

In cumulative link regression models, the cumulative probability γi,j is modelled through a link function G⁻¹(γi,j) = αj + β, where G is a cumulative distribution function over the real line. The ordinal outcome Yi can be viewed as the result of applying thresholds α1, . . . , αs−1 to a latent random variable Ui that has cumulative distribution function Gi(u) = G(u + β). Then E(Ui) = μ − β, where μ is the mean of G. For convenience, let α0 = −∞ and αs = +∞.

If we observed Ui, the usual residual on the latent variable scale would be Ui,res = Ui − E(Ui) = Ui + β − μ. Since Ui + β ∼ G, Ui,res follow the same distribution for all subjects and E(Ui,res) = 0. As Ui is latent, we do not know its value but only that αj−1 < Ui < αj if Yi = j. One may want to replace Ui with

$$E(U_i \mid Y_i = j) = E(U_i \mid \alpha_{j-1} < U_i < \alpha_j)$$

and define a residual on the latent variable scale as

$$L_{i,\mathrm{res}} = E(U_{i,\mathrm{res}} \mid Y_i) = E(U_i \mid Y_i) + \beta - \mu,$$

where E(Li,res) = E{E(Ui,res | Yi)} = E(Ui,res) = 0.

If the mean of G is its median, which is true for logit and probit link functions, then μ = G⁻¹(1/2). And if the interval (γi,j−1, γi,j) is small, then

$$L_{i,\mathrm{res}} \approx G^{-1}(m_{i,j}) - G^{-1}(1/2) \quad \text{when } Y_i = j,$$

where mi,j = (γi,j−1 + γi,j)/2. Since r(j, FZi;θ) = γi,j−1 + γi,j − 1 = 2(mi,j − 1/2), our residual is equivalent to comparing mi,j with 1/2 on the probability scale. Therefore, our residual captures information similar to that of a latent-variable residual, but on a probability scale irrespective of the choice of link function; see Fig. 1.
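For the logit link the latent-variable residual has a closed form, which allows a direct comparison with the midpoint approximation. The sketch below assumes G is the standard logistic distribution, so that μ = 0, and uses the fact that v log v + (1 − v) log(1 − v) is an antiderivative of logit(v). The function names and the intervals are illustrative assumptions.

```python
from math import log


def logit(v):
    return log(v / (1.0 - v))


def h(v):
    """Antiderivative of logit(v), with limiting value 0 at v = 0 and v = 1."""
    if v <= 0.0 or v >= 1.0:
        return 0.0
    return v * log(v) + (1.0 - v) * log(1.0 - v)


def latent_residual(g_lo, g_hi):
    """E(U + beta - mu | Y = j) for standard logistic G, where
    (g_lo, g_hi) = (gamma_{j-1}, gamma_j): the mean of logit(V) with
    V uniform on that interval."""
    return (h(g_hi) - h(g_lo)) / (g_hi - g_lo)


def approx_latent_residual(g_lo, g_hi):
    """Midpoint approximation G^{-1}(m) - G^{-1}(1/2)."""
    m = (g_lo + g_hi) / 2.0
    return logit(m) - logit(0.5)


def probability_scale_residual(g_lo, g_hi):
    """r = gamma_{j-1} + gamma_j - 1 = 2 (m - 1/2)."""
    return g_lo + g_hi - 1.0


narrow = (0.70, 0.72)  # small interval: approximation should be close
wide = (0.0, 0.3)      # lowest category with a wide interval
for interval in (narrow, wide):
    print(latent_residual(*interval),
          approx_latent_residual(*interval),
          probability_scale_residual(*interval))
```

For the narrow interval the exact and approximate latent residuals nearly coincide; for the wide interval they differ in size but share the sign of the probability-scale residual.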

Fig. 1. Connection between our residual and a latent-variable residual.

3. Use of the residual in model diagnostics

3.1. Residual-by-predictor plots

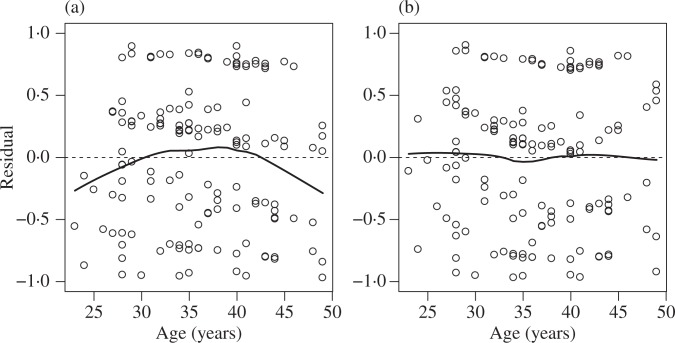

When an ordinal regression model is correct, E(Li,res) = 0 and Li,res = E(Ui,res | Yi) is a categorization of Ui,res, which follows the same distribution across all subjects. Therefore, Li,res may have similar distributions across subjects. In addition, we have shown that E(Ri) = 0, the range of Ri is symmetric about zero, and Ri and Li,res are closely related. Therefore, a plot of r̂, or its latent-variable version l̂res, versus a predictor can be useful for visually detecting if there is any additional effect of that predictor, such as nonlinearity, on the outcome. This plot is referred to as a residual-by-predictor plot.

We use data from a study of HIV-infected women in Zambia (Parham et al., 2006) to demonstrate an application of residual-by-predictor plots. Cervical specimens from 145 women were examined using cytology and categorized into five ordered stages. We fit proportional odds models to assess the association between stage of cervical lesions and age after adjusting for CD4 count. Under the assumption of a linear relationship of age with the log-odds of severity of lesions, the model tends to overpredict severity of lesions at low and high ages, as shown in Fig. 2. If age is put into the model with linear and quadratic terms, the residuals are much more uniform across ages. The plots are similar using standardized or latent-variable residuals, shown in the Supplementary Material.

Fig. 2. Residual-by-predictor plots with age included in proportional odds models with a linear term (a) and with linear and quadratic terms (b). Lowess curves (solid) are added. A horizontal line (dashed) at zero is included for reference.
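What the smoothed curve in a residual-by-predictor plot estimates can be illustrated without fitting a model. The sketch below computes the expected residual E{r(Y, F)} at several ages when Y follows a proportional odds model with a quadratic age effect but the working distribution F omits the curvature. All parameter values are hypothetical and are not estimates from the Zambian data; in practice the working model would be fitted by maximum likelihood.

```python
from itertools import accumulate
from math import exp


def expit(x):
    return 1.0 / (1.0 + exp(-x))


def po_probs(alpha, eta):
    """Category probabilities under logit{pr(Y <= j)} = alpha_j + eta."""
    gamma = [0.0] + [expit(a + eta) for a in alpha] + [1.0]
    return [gamma[j] - gamma[j - 1] for j in range(1, len(gamma))]


def residuals(p):
    """r(j, F) = gamma_{j-1} + gamma_j - 1 for j = 1, ..., s."""
    gamma = [0.0] + list(accumulate(p))
    return [gamma[j - 1] + gamma[j] - 1.0 for j in range(1, len(p) + 1)]


def expected_residual(p_true, p_work):
    """E{r(Y, F_work)} when Y actually follows p_true."""
    return sum(pt * r for pt, r in zip(p_true, residuals(p_work)))


alpha = [-1.0, 0.0, 1.0, 2.0]  # hypothetical cut-points, five categories
ages = [20, 30, 40, 50, 60]


def eta_true(age):
    """Hypothetical quadratic age effect, centred at 40."""
    return 0.002 * (age - 40) ** 2 - 0.5


def eta_work(age):
    """Hypothetical working model that omits the curvature."""
    return 0.0


curve_misspecified = [
    expected_residual(po_probs(alpha, eta_true(a)), po_probs(alpha, eta_work(a)))
    for a in ages
]
curve_correct = [
    expected_residual(po_probs(alpha, eta_true(a)), po_probs(alpha, eta_true(a)))
    for a in ages
]
print(curve_misspecified)  # systematic pattern across ages
print(curve_correct)       # zero at every age
```

In this hypothetical setting the expected residual is negative at the extreme ages and positive in the middle, the kind of pattern a lowess curve would reveal, and it is identically zero when the working model matches the truth.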

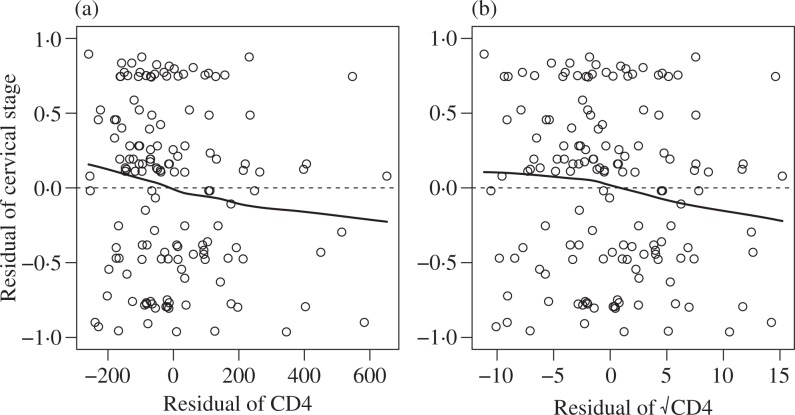

3.2. Partial regression plots

When fitting a regression model of an ordinal outcome Y on a covariate X and other covariates Z, we may want to examine whether X is associated with Y after adjusting for the effects of Z. To do this, we first fit an ordinal regression model of Y on Z to obtain residuals r̂y, then fit an appropriate regression model of X on Z to obtain residuals r̂x, and plot r̂y versus r̂x. This plot is called a partial regression plot. Let RY be the random variable as defined in (1) and RX be the residual random variable for the model for X | Z, of which r̂x is a realization. When the model for Y | Z is correct and Y and X are independent conditional on Z, RY and RX are independent given Z. Thus, E (RYRX) = E{E(RYRX | Z)} = E{E(RY | Z)E(RX | Z)} = 0 by Property 12, E(RY) = E{E(RY | Z)} = 0 and cov(RY, RX) = E(RYRX) − E(RY) E(RX) = 0; that is, RY and RX are uncorrelated. Hence, the partial regression plot can provide a visual inspection on whether X remains a useful predictor of Y after the effects of Z have been adjusted for. Although the validity of this plot does not depend on the type of residual for X, its effectiveness might.
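The argument that RY and RX are uncorrelated under conditional independence can be checked by exact enumeration in a small discrete population. In the sketch below, Z is binary, Y has three ordered categories and X has two, and X and Y are independent given Z; all distributions are hypothetical. The expected product of residuals is computed once with distributions that condition on Z and once with marginal distributions that ignore Z.

```python
from itertools import accumulate


def residuals(p):
    """r(j, F) = gamma_{j-1} + gamma_j - 1 for j = 1, ..., s."""
    gamma = [0.0] + list(accumulate(p))
    return [gamma[j - 1] + gamma[j] - 1.0 for j in range(1, len(p) + 1)]


# Hypothetical population: X and Y are independent given Z.
pz = {0: 0.5, 1: 0.5}
py_given_z = {0: [0.5, 0.3, 0.2], 1: [0.2, 0.3, 0.5]}
px_given_z = {0: [0.6, 0.4], 1: [0.3, 0.7]}


def expected_product(fy, fx):
    """E{r(Y, fy(Z)) r(X, fx(Z))} by enumeration over (z, y, x).

    fy and fx map z to the distribution used to define each residual.
    """
    total = 0.0
    for z, wz in pz.items():
        ry, rx = residuals(fy(z)), residuals(fx(z))
        for j, pyj in enumerate(py_given_z[z]):
            for k, pxk in enumerate(px_given_z[z]):
                total += wz * pyj * pxk * ry[j] * rx[k]
    return total


def marginal(cond):
    """Marginal distribution obtained by averaging over Z."""
    s = len(cond[0])
    return [sum(pz[z] * cond[z][j] for z in pz) for j in range(s)]


# Residuals defined from the correct conditional models given Z.
adjusted = expected_product(lambda z: py_given_z[z], lambda z: px_given_z[z])

# Residuals defined from marginal distributions that ignore Z.
py_marg, px_marg = marginal(py_given_z), marginal(px_given_z)
unadjusted = expected_product(lambda z: py_marg, lambda z: px_marg)

print(adjusted)    # zero up to rounding
print(unadjusted)  # nonzero: association induced by Z
```

Both sets of residuals have mean zero, so each expected product is a covariance; only the residuals that adjust for Z are uncorrelated.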

We use the same dataset as that in § 3.1 to demonstrate partial regression plots. Figure 3(a) is a partial regression plot for the association between cervical lesions and CD4 T-cell count. Residuals from a proportional odds regression of cervical lesions on age and squared age are plotted against residuals from a linear regression of CD4 count on age and squared age. There appears to be correlation between these residuals, so it may be useful to include CD4 count in the model for cervical lesions. Partial regression plots may also be useful for detecting outliers: no single observation appears to be overly influential in this analysis, although the skewed nature of the CD4 residuals suggests that a square root transformation of this variable may lead to a better fit, as suggested by Fig. 3(b). Additional examples of partial regression plots using our residuals are in Li & Shepherd (2010).

Fig. 3. Partial regression plots looking at the residual association of cervical lesions with (a) CD4 and (b) square-root transformed CD4 after adjusting for age, with both linear and quadratic terms. Lowess curves (solid) are added. A horizontal line (dashed) at zero is included for reference.

4. Discussion

Our residual is effectively defined on the probability scale of the fitted distribution, and can be extended to any regression analysis in which fitted distributions are calculated. One potential advantage of probability-scale residuals is that they can be defined for regression analyses in which the fitted distributions are not completely determined, such as in models of censored data or quantile regression.

Our new residual offers a general solution for how to include an ordinal predictor in a regression model. Traditional approaches treat an ordinal predictor as either numerical, enforcing a linearity assumption, or categorical, ignoring order information. An alternative approach would be to fit an appropriate regression model of the outcome on other covariates Z and an ordinal regression model of the ordinal predictor on Z, and test for correlation between the residuals of the models. The partial regression plot is the graphical counterpart of this approach. We have shown this approach to be robust and powerful when the outcome variable is also ordinal (Li & Shepherd, 2010), and we are studying this approach for other outcome types.

One limitation of our residual is that it does not seem useful for checking the proportional odds assumption in proportional odds models, as the cell-wise information necessary for investigating this assumption is collapsed into a single value.

Acknowledgments

This work was supported in part by the National Institutes of Health, U.S.A. We thank Dr Vikrant Sahasrabuddhe for providing data on cervical lesions.

Appendix. Proofs of properties

Properties 2, 4, 7, 11–13 are obvious.

Proof of Property 1. Since r(j + 1, F) − r(j, F) = γj+1 − γj−1 ⩾ 0, we have r(j, F) ⩽ r(j + 1, F). Since γ1 ⩾ 0 and γs−1 ⩽ 1, r(1, F) = γ1 − 1 ⩾ −1 and r(s, F) = γs−1 ⩽ 1.

Proof of Property 3. Let γj|F and γj|G be the cumulative probabilities for distributions F and G, respectively. Let t = s − j + 1. Then γt−1|G = 1 − γj|F and γt|G = 1 − γj−1|F. Therefore, r (t, G) = γt−1|G +γt|G − 1 = 1 − γj|F − γj−1|F = −r(j, F).

Proof of Property 5. The results for j < t and j > t are obvious. For j = t, since r(t + 1, F) = r(t, F) + (pt + pt+1) and r(t, F) = 2γt−1 + pt − 1, we have {ptr (t, F) + pt+1r (t + 1, F)}/(pt + pt+1) = r(t, F) + pt+1 = 2γt−1 + (pt + pt+1) − 1 = r(t, G).

Proof of Property 6. Let f (p) = r{2, (p, 1 − p)} for 0 < p < 1. We first show that f (p)/ p is a constant. By Property 3′, r{1, (p, 1 − p)} = − f (1 − p). By Property 4′, f′(1 − p) = f′(p) and thus f (1 − p) + f (p) ≡ c is a constant as its derivative is always zero. By Properties 2 and 5, 0 = r(1, p + 1 −p) = − p f (1 − p) + (1 − p) f (p), and thus f (1 − p) = f (p)(1 − p)/ p. Then, c ≡ f (1 − p) + f (p) = f (p)/ p and f (p) = cp.

By Property 5, r(j, F) = r{2, (a, pj, b)}, where a = γj−1 and b = 1 − γj. By Properties 2 and 5,

$$0 = a\, r\{1, (a, p_j, b)\} + p_j\, r\{2, (a, p_j, b)\} + b\, r\{3, (a, p_j, b)\} = -a f(1 - a) + p_j\, r(j, F) + b f(1 - b).$$

Thus, r(j, F) = {af(1 − a) − bf(1 − b)}/pj = c{a(1 − a) − b(1 − b)}/pj = c{a(pj + b) − b(a + pj)}/pj = c(a − b) = c{γj−1 − (1 − γj)}. It is easy to show the reverse.

Proof of Property 8. Since ∑j pjγj−1 = ∑j pj(∑k<j pk) = ∑j1<j2 pj1pj2 and ∑j pj(1 − γj) = ∑j pj(∑k>j pk) = ∑j1<j2 pj1pj2, we have E(R) = ∑j pj{γj−1 − (1 − γj)} = 0.

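Proof of Property 9. Since E(R) = 0 and γa−1 − (1 − γa) = ∑b:b≠a pb × sign(a − b),

$$\mathrm{var}(R) = E(R^2) = \sum_a p_a \Big\{\sum_{b \ne a} p_b\, \mathrm{sign}(a - b)\Big\}^2 = \sum_{a \ne b} p_a^2 p_b + 2 \sum_{a<b<c} p_a p_b p_c.$$

Since 1 = (∑a pa)³ = ∑a pa³ + 3∑a≠b pa²pb + 6∑a<b<c papbpc, var(R) = (1 − ∑a pa³)/3.

For the alternative expression, since ∑a<b papb = ∑a<b papb ∑c pc = ∑a<b papb(pa + pb + ∑c≠a,b pc) = ∑a≠b pa²pb + 3∑a<b<c papbpc,

$$\mathrm{var}(R) = \sum_{a<b} p_a p_b - \sum_{a<b<c} p_a p_b p_c.$$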

Proof of Property 10. To maximize var(R) subject to the constraint ∑pj = 1, we employ a Lagrange multiplier in the function

$$f(p_1, \ldots, p_s, \lambda) = \Big(1 - \sum_j p_j^3\Big)/3 - \lambda \Big(\sum_j p_j - 1\Big).$$

Setting ∂f/∂λ = 0 gives the constraint ∑pj = 1. Setting ∂f/∂pj = −pj² − λ = 0 for all j leads to p1 = ⋯ = ps = 1/s and var(R) = (1 − 1/s²)/3.

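Proof of Properties 14 and 15. For proportional odds models, it can be shown that

$$\partial \gamma_{i,j} / \partial \alpha_k = \gamma_{i,j}(1 - \gamma_{i,j})\, I(j = k) \quad (j, k = 1, \ldots, s - 1).$$

For convenience, let γi,0 = 0, and recall that γi,s = 1. For subject i, let li = log(pi,yi) = log(γi,yi − γi,yi−1) be the loglikelihood. Let l = ∑i li and θ̂ be the maximum likelihood estimate. Then

$$\sum_{j=1}^{s-1} \frac{\partial l_i}{\partial \alpha_j} = \frac{\gamma_{i,y_i}(1 - \gamma_{i,y_i}) - \gamma_{i,y_i-1}(1 - \gamma_{i,y_i-1})}{\gamma_{i,y_i} - \gamma_{i,y_i-1}} = 1 - \gamma_{i,y_i-1} - \gamma_{i,y_i} = -r(y_i, F_{z_i;\theta}),$$

and

$$\sum_i \hat r_i = -\sum_{j=1}^{s-1} \frac{\partial l}{\partial \alpha_j}\Big|_{\hat\theta} = 0.$$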

Supplementary material

Supplementary material available at Biometrika online includes additional figures and a discussion of residuals in Liu et al. (2009).

References

- Agresti A. Analysis of Ordinal Categorical Data. New York: Wiley; 1984.

- Agresti A. Categorical Data Analysis. 2nd edn. Hoboken, NJ: Wiley; 2002.

- Brockett PL, Levine A. On a characterization of ridits. Ann Statist. 1977;5:1245–8.

- Bross IDJ. How to use ridit analysis. Biometrics. 1958;14:18–38.

- Li C, Shepherd BE. Test of association between two ordinal variables while adjusting for covariates. J Am Statist Assoc. 2010;105:612–20. doi: 10.1198/jasa.2010.tm09386.

- Liu I, Mukherjee B, Suesse T, Sparrow D, Park SK. Graphical diagnostics to check model misspecification for the proportional odds regression model. Statist Med. 2009;28:412–29. doi: 10.1002/sim.3386.

- McCullagh P. Regression models for ordinal data (with discussion). J R Statist Soc B. 1980;42:109–42.

- McCullagh P, Nelder JA. Generalized Linear Models. 2nd edn. London: Chapman & Hall; 1989.

- Parham GP, Sahasrabuddhe VV, Mwanahamuntu MH, Shepherd BE, Hicks ML, Stringer EM, Vermund SH. Prevalence and predictors of squamous intraepithelial lesions of the cervix in HIV-infected women in Lusaka, Zambia. Gynecol Oncol. 2006;103:1017–22. doi: 10.1016/j.ygyno.2006.06.015.

- Therneau TM, Grambsch PM, Fleming TR. Martingale-based residuals for survival models. Biometrika. 1990;77:147–60.
