Abstract

We study estimation in quantile regression when covariates are measured with errors. Existing methods require stringent assumptions, such as spherically symmetric joint distribution of the regression and measurement error variables, or linearity of all quantile functions, which restrict model flexibility and complicate computation. In this paper, we develop a new estimation approach based on corrected scores to account for a class of covariate measurement errors in quantile regression. The proposed method is simple to implement. Its validity requires only linearity of the particular quantile function of interest, and it requires no parametric assumptions on the regression error distributions. Finite-sample results demonstrate that the proposed estimators are more efficient than the existing methods in various models considered.

Keywords: Corrected loss function, Laplace distribution, Measurement error, Normal distribution, Quantile regression, Smoothing

1. Introduction

In problems relating to econometrics, epidemiology and finance, the covariates of interest are often measured with errors. The measurement error, if ignored, often leads to bias in estimating the mean and quantile functions (Carroll et al., 2006; Wei & Carroll, 2009).

Less attention has been paid to quantile regression than to mean regression with a covariate measurement error. There are two main difficulties for correcting the bias in quantile regression caused by measurement error. First, a parametric regression-error likelihood is usually not specified in quantile regression. Second, unlike the mean, quantiles do not enjoy the additivity property, that is, the quantile of the sum of two random variables is not necessarily the sum of the two marginal quantiles. He & Liang (2000) proposed an estimation procedure that minimizes the quantile loss function of orthogonal residuals. This method assumes that the random errors in the response variable y and the measurement errors in the covariate x are independent and follow the same symmetric distribution. Assuming the existence of an instrumental variable, Hu & Schennach (2008) and Schennach (2008) proposed methods that require nonparametric modelling of densities such as that of y given x, and that of x given the instrumental variable. Wei & Carroll (2009) developed an iterative estimation procedure that requires estimating the conditional density of y given x via modelling the entire quantile process, and this complicates the computation. In addition, Wei & Carroll’s method relies on a strong global assumption, that is, estimation of the τth conditional quantile of y given x depends on the assumption that all the conditional quantiles below the τth are linear. In this paper, we propose a simple and consistent estimation procedure assuming a class of measurement error distributions. The proposed method avoids the symmetry assumption used in He & Liang (2000), and requires estimation only at the quantile of interest.

Whatever the approach taken, one must resolve the identifiability issue in measurement error models. In the proposed method, it is resolved by assuming a parametric form for the measurement error distribution whose parameters such as variance can be estimated. However, we leave the quantile regression error distribution completely unspecified.

We consider the linear quantile regression model

$$Q_\tau(y_j \mid x_j) = x_j^{T}\beta_0(\tau), \quad j = 1, \ldots, n, \tag{1}$$

where Qτ(yj | xj) denotes the τth conditional quantile of the response variable yj given the covariate xj, β0(τ) ∈ ℝp is the coefficient vector and τ ∈ (0, 1) is the quantile level of interest. Our main interest is in estimating β0(τ) when xj is measured with error. We assume an additive measurement error model, wj = xj + uj, relating the surrogate wj and xj, where the uj ∈ ℝp are independent and identically distributed measurement errors. Throughout, we assume that uj is independent of xj and yj, and we drop τ in β0(τ) for notational simplicity.

2. Proposed methods

2.1. Corrected-loss estimator

When xj is measured without an error, β0 can be estimated consistently by

$$\hat\beta_x = \arg\min_{\beta} \sum_{j=1}^{n} \rho(y_j, x_j, \beta), \tag{2}$$

where ρ(y, x, β) = ρτ(y – xTβ), ρτ(∊) = ∊{τ – I(∊ < 0)} is the quantile loss function and I(·) is the indicator function. The estimator β̂x also satisfies

$$n^{-1}\sum_{j=1}^{n} \psi(y_j, x_j, \hat\beta_x) \approx 0, \tag{3}$$

where ψ(y, x, β) = x{I(y – xTβ < 0) – τ}. Under model (1), pr(y < xTβ0 | x) = τ. Therefore, E{ψ(y, x, β0)} = 0, and ψ(y, x, β) is an unbiased estimating function for β0.
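
The unbiasedness of ψ at β0, and its failure when the surrogate is substituted naively, can be checked by simulation. The following is a minimal sketch rather than part of the original analysis: the data-generating model (yj = 1 + xj + ej with normal ej, uniform xj and normal measurement error), the function names, the sample size and the seed are all illustrative assumptions.

```python
import random

def rho_tau(eps, tau):
    """Quantile loss rho_tau(eps) = eps * {tau - I(eps < 0)}."""
    return eps * (tau - (1.0 if eps < 0 else 0.0))

def psi(y, x, beta, tau):
    """Estimating function psi(y, x, beta) = x * {I(y - x'beta < 0) - tau}."""
    resid = y - sum(xk * bk for xk, bk in zip(x, beta))
    indicator = 1.0 if resid < 0 else 0.0
    return [xk * (indicator - tau) for xk in x]

def mean_scores(n=20000, tau=0.5, sigma_e=0.5, sigma_u=0.5, seed=1):
    """Average psi at the true beta0, using the true x and then the surrogate w."""
    rng = random.Random(seed)
    beta0 = [1.0, 1.0]  # assumed true median-regression coefficients
    true_sum, naive_sum = [0.0, 0.0], [0.0, 0.0]
    for _ in range(n):
        x = rng.uniform(0.0, 2.0)              # assumed covariate distribution
        y = 1.0 + x + rng.gauss(0.0, sigma_e)  # median of y given x is 1 + x
        w = x + rng.gauss(0.0, sigma_u)        # error-prone surrogate
        for acc, cov in ((true_sum, x), (naive_sum, w)):
            s = psi(y, [1.0, cov], beta0, tau)
            acc[0] += s[0]
            acc[1] += s[1]
    return [v / n for v in true_sum], [v / n for v in naive_sum]

score_x, score_w = mean_scores()
print("quantile loss at +2 and -2:", rho_tau(2.0, 0.75), rho_tau(-2.0, 0.75))
print("mean psi using x:", score_x)
print("mean psi using w:", score_w)
```

In this assumed set-up the average score based on x is close to zero in both components, whereas the slope component of the score based on w is not, which is the bias that the corrected scores are designed to remove.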

When xj is subject to error and we observe only a surrogate wj, naively replacing xj with wj in (2) or (3) usually leads to inconsistent estimators, because E{ψ(y, w, β0)} = 0 may not hold. To account for the measurement error, we construct corrected score functions of w that are unbiased for β0 (Stefanski, 1989; Nakamura, 1990). However, in practice, it is challenging to determine the corrected scores, especially in quantile regression, as the quantile loss function ρτ(∊) is not differentiable at ∊ = 0. To overcome this difficulty, we approximate ρτ(∊) by a smooth function ρ(∊, h) depending on a positive smoothing parameter h.

Let E* denote the expectation with respect to w given y and x. Unless otherwise specified, we use E to denote the global expectation. We aim to find a corrected loss function ρ*(y, w, β, h) such that E*{ρ*(y, w, β, h)} = ρ(y, x, β, h) → ρ(y, x, β) pointwise in (y, x, β) as h → 0. Under some regularity conditions, β0 is the unique minimizer of E{ρ(y, x, β)}. Therefore, minimizing the sample analog of E{ρ*(y, w, β, h)} leads to a consistent estimator of β0, if h goes to zero at a suitable rate. Motivated by this idea, we define the corrected-loss quantile estimator as

$$\hat\beta = \arg\min_{\beta} \sum_{j=1}^{n} \rho^{*}(y_j, w_j, \beta, h).$$

In the next two subsections, we develop corrected-loss estimators for two measurement error models, normal and Laplace, because these two measurement error distributions provide reasonable error models in many applications. Our simulation study in § 3 suggests that the proposed estimators are robust against misspecification of the measurement error distribution. The extension to a wider class of distribution families is discussed in § 5.

2.2. Normal measurement error

Assume that {(yj, wj) : j = 1, . . . , n} is a random sample with wj = xj + uj, where uj ∼ N(0, Σ) is a p-dimensional normal random vector that is independent of yj and xj; see Fuller (1987) and Carroll et al. (2006) for reviews on normal measurement errors in mean regression models.

We first review a useful result for normal random variables. Suppose that ∊ ∼ N(μ, σ2) and that g(·) is a sufficiently smooth function. Let u ∼ N(0, 1) be independent of ∊. Stefanski & Cook (1995) showed that E[E{g(∊ + iσu) | ∊}] = g(μ), where i = √–1, the outer expectation is with respect to ∊ and the inner one is with respect to u given ∊.
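
As a quick check of this identity, consider the illustrative choice g(∊) = ∊² (chosen here only for concreteness; it is not taken from the cited work). Using E(u) = 0 and E(u²) = 1, the complex perturbation removes exactly the variance inflation caused by ∊:

```latex
\begin{align*}
E\{g(\epsilon + i\sigma u) \mid \epsilon\}
  &= E\{\epsilon^2 + 2i\sigma\epsilon u - \sigma^2 u^2 \mid \epsilon\}
   = \epsilon^2 - \sigma^2, \\
E(\epsilon^2 - \sigma^2)
  &= (\mu^2 + \sigma^2) - \sigma^2 = \mu^2 = g(\mu).
\end{align*}
```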

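Motivated by the above result, we propose to approximate the quantile loss function ρτ(∊) by an infinitely smooth function

$$\rho_N(\epsilon, h) = \epsilon\{\tau - 1/2 + \pi^{-1}\,\mathrm{Si}(\epsilon/h)\},$$

where $\mathrm{Si}(x) = \int_0^x t^{-1}\sin(t)\,dt$ is the sine integral function, which satisfies $\lim_{x\to\infty}\mathrm{Si}(x) = \pi/2$ and $\lim_{x\to-\infty}\mathrm{Si}(x) = -\pi/2$. By such an approximation, we have the following theorem.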

Theorem 1. Suppose that ∊ ∼ N(μ, σ2). Define A(∊, σ2, h) = E{ρN(∊ + iσu, h) | ∊}, where u ∼ N(0, 1) is independent of ∊. Then

(i) $A(\epsilon, \sigma^2, h) = \epsilon(\tau - 1/2) + \pi^{-1}\int_0^{1/h}\{y^{-1}\epsilon\sin(y\epsilon) - \sigma^2\cos(y\epsilon)\}\exp(y^2\sigma^2/2)\,dy$;

(ii) $E\{A(\epsilon, \sigma^2, h)\} = \rho_N(\mu, h)$.
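
The second part of Theorem 1 can be checked numerically. The sketch below is illustrative only: it writes the smoothed loss as ∊(τ − 1/2) + π−1∊∫ y−1 sin(y∊) dy over 0 < y ≤ 1/h, evaluates the integrals with a composite Simpson rule, and averages A over a normal density on a truncated grid. The function names, grid sizes and parameter values are assumptions made for this example.

```python
import math

def simpson(f, a, b, n=200):
    """Composite Simpson rule with n (even) subintervals."""
    if n % 2:
        n += 1
    step = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * step)
    return total * step / 3.0

def rho_N(eps, tau, h):
    """Smoothed loss eps*(tau - 1/2) + (eps/pi) * int_0^{1/h} sin(y*eps)/y dy."""
    def integrand(y):
        # the limit of eps*sin(y*eps)/y as y -> 0 is eps**2
        return eps * eps if y == 0.0 else eps * math.sin(y * eps) / y
    return eps * (tau - 0.5) + simpson(integrand, 0.0, 1.0 / h) / math.pi

def corrected_A(eps, sigma2, tau, h):
    """Corrected loss A(eps, sigma2, h) in the integral form of Theorem 1(i)."""
    def integrand(y):
        lead = eps * eps if y == 0.0 else eps * math.sin(y * eps) / y
        return (lead - sigma2 * math.cos(y * eps)) * math.exp(0.5 * y * y * sigma2)
    return eps * (tau - 0.5) + simpson(integrand, 0.0, 1.0 / h) / math.pi

def expected_A(mu, sigma2, tau, h, width=8.0, n=400):
    """E{A(eps, sigma2, h)} for eps ~ N(mu, sigma2), by numerical integration."""
    sigma = math.sqrt(sigma2)
    def integrand(e):
        dens = math.exp(-0.5 * ((e - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))
        return corrected_A(e, sigma2, tau, h) * dens
    return simpson(integrand, mu - width * sigma, mu + width * sigma, n)

lhs = expected_A(mu=0.3, sigma2=0.25, tau=0.75, h=0.5)
rhs = rho_N(0.3, tau=0.75, h=0.5)
print(lhs, rhs)  # the two values should agree up to integration error
```

The factor exp(y2σ2/2) grows quickly as h decreases, so small smoothing parameters combined with large σ2 make this integral numerically delicate; the values above were chosen to keep it well behaved.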

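Since (y – wTβ) | (y, x) ∼ N(y – xTβ, βTΣβ), we define the corrected quantile loss function as

$$\rho_N^{*}(y, w, \beta, h) = A(y - w^{T}\beta,\ \beta^{T}\Sigma\beta,\ h).$$

By Theorem 1, E*{ρ*N(y, w, β, h)} = ρN(y – xTβ, h) ≐ ρN(y, x, β, h) → ρ(y, x, β) pointwise in (y, x, β) as h → 0. Let ℬ denote a compact subset of ℝp that contains β0. The corrected quantile estimator is then defined as

$$\hat\beta_N = \arg\min_{\beta \in \mathcal{B}} \sum_{j=1}^{n} \rho_N^{*}(y_j, w_j, \beta, h).$$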

In applications, often only one or two covariates are measured with error. Our proposed method accommodates such scenarios as special cases. Throughout the paper, we let the first component of x be 1, corresponding to the intercept, so there is no measurement error in the first component. For example, if we assume that only the pth component of x is subject to measurement error u ∼ N(0, σ2), then we have

$$\Sigma = \begin{pmatrix} 0_{(p-1)\times(p-1)} & 0_{p-1} \\ 0_{p-1}^{T} & \sigma^2 \end{pmatrix},$$

where 0q and 0q×q denote a q-dimensional vector and a q × q matrix with zero elements, respectively. Consequently, the corrected quantile loss function becomes $\rho_N^{*}(y, w, \beta, h) = A(y - w^{T}\beta,\ \beta_p^2\sigma^2,\ h)$, where βp is the pth element of β. The same parameterization applies to the correction for a Laplace measurement error described in § 2.3.

2.3. Laplace measurement error

We consider the situation where the measurement error follows a multivariate Laplace distribution. The Laplace distribution is often used for modelling data with tails heavier than normal. We refer to Stefanski & Carroll (1990), Hong & Tamer (2003), Richardson & Hollinger (2005), Purdom & Holmes (2005), Visscher (2006) and McKenzie et al. (2008) for discussions of Laplace measurement errors. We first introduce a multivariate Laplace distribution adopted from Kotz et al. (2001, Ch. 6), and give a lemma stating some related properties.

Definition 1. A random vector X ∈ ℝp has a multivariate asymmetric Laplace distribution if its characteristic function is Ψ(t) = (1 + tTΣt/2 − iμTt)−1 for t ∈ ℝp, where μ ∈ ℝp and Σ is a p × p nonnegative definite symmetric matrix. In the following, we write X ∼ ALp(μ, Σ). If μ = 0, then ALp(0, Σ) corresponds to a symmetric multivariate Laplace distribution. In addition, AL1(μ, σ2) is the classical univariate Laplace (1774) distribution L(0, σ2) if and only if μ = 0.

Lemma 1. Let X ∼ ALp(μ, Σ). Then

(i) the mean and covariance matrix of X are E(X) = μ and cov(X) = Σ + μμT;

(ii) if μ = 0, then for any constant a and vector b ∈ ℝp, the random variable a + bTX ∼ L(a, σ2), where σ2 = bTΣb, and L(a, σ2) is the classical univariate Laplace distribution with mean a and variance σ2.
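
Symmetric Laplace errors of this kind can be simulated through a normal scale mixture: if W is a standard exponential variable and Z ∼ N(0, σ2) independently of W, then W1/2Z has characteristic function (1 + σ2t2/2)−1, which matches Definition 1 with p = 1 and μ = 0. The sketch below uses this representation in the univariate case; the function name, sample size and seed are illustrative assumptions.

```python
import math
import random

def sample_laplace(sigma2, n, seed=0):
    """Draw n values from the symmetric Laplace law with mean 0 and variance sigma2."""
    rng = random.Random(seed)
    sigma = math.sqrt(sigma2)
    draws = []
    for _ in range(n):
        w = rng.expovariate(1.0)   # standard exponential mixing variable
        z = rng.gauss(0.0, sigma)  # N(0, sigma2) component
        draws.append(math.sqrt(w) * z)
    return draws

u = sample_laplace(sigma2=0.25, n=100000)
mean_u = sum(u) / len(u)
var_u = sum((v - mean_u) ** 2 for v in u) / (len(u) - 1)
print(round(mean_u, 3), round(var_u, 3))  # sample mean and variance of the draws
```

The sample mean and variance should be close to 0 and σ2, in line with part (i) of Lemma 1.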

Suppose that the measured covariates are wj = xj + uj, where uj ∼ ALp(0, Σ), independent of xj and yj . Our corrected loss function is based on the following theorem.

Theorem 2. Suppose that the random variable ∊ follows the univariate Laplace distribution L(μ, σ2). If g(∊) is a twice-differentiable function of ∊, then

$$E\{g(\epsilon) - \tfrac{1}{2}\sigma^2 g^{(2)}(\epsilon)\} = g(\mu),$$

where g(2)(∊) is the second derivative of g(∊).

Let K (·) denote a kernel density function and define GL (x) = ∫u<x K (u) du. In our numerical studies, we choose K (·) as the probability density function of N(0, 1). We consider the smoothed quantile loss function ρL (∊, h) = ∊{τ − 1 + GL (∊ / h)}. For Laplace measurement error, by Lemma 1, (y − wTβ) | (y, x) ∼ L(y − xT β, σ2), where σ2 = βTΣβ. Let ∊ = y − wTβ. Define the corrected quantile loss function as

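$$\rho_L^{*}(y, w, \beta, h) = \rho_L(\epsilon, h) - \frac{\sigma^2}{2}\left\{\frac{2}{h}K\!\left(\frac{\epsilon}{h}\right) + \frac{\epsilon}{h^2}K^{(1)}\!\left(\frac{\epsilon}{h}\right)\right\}, \tag{4}$$

where K(1)(·) denotes the first derivative of K(·), so that the term in brackets is the second derivative of ρL(∊, h) with respect to ∊. By Theorem 2, $E^{*}\{\rho_L^{*}(y, w, \beta, h)\} = \rho_L(y - x^{T}\beta, h) \to \rho(y, x, \beta)$ pointwise in (y, x, β) as h → 0.

The corrected quantile estimator is therefore defined as

$$\hat\beta_L = \arg\min_{\beta \in \mathcal{B}} \sum_{j=1}^{n} \rho_L^{*}(y_j, w_j, \beta, h).$$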
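
To illustrate the Laplace correction, the sketch below applies the map g ↦ g − 0.5σ2g(2) of Theorem 2 to the smoothed loss ρL(∊, h) = ∊{τ − 1 + GL(∊/h)} with a standard normal kernel, and checks numerically that its mean under a Laplace law centred at μ recovers ρL(μ, h). It is a sketch under stated assumptions, not the authors' implementation: the function names, the Simpson integration and the parameter values are choices made for this example.

```python
import math

def norm_pdf(t):
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)

def norm_cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))

def rho_L(eps, tau, h):
    """Smoothed loss eps * {tau - 1 + G_L(eps / h)} with a normal kernel."""
    return eps * (tau - 1.0 + norm_cdf(eps / h))

def rho_L_second(eps, h):
    """Second derivative of rho_L in eps: (2/h) K(t) + (eps/h^2) K'(t), t = eps/h."""
    t = eps / h
    return norm_pdf(t) * (2.0 - t * t) / h  # uses K'(t) = -t K(t) for the normal kernel

def rho_L_star(eps, sigma2, tau, h):
    """Corrected loss: rho_L minus half of sigma2 times its second derivative."""
    return rho_L(eps, tau, h) - 0.5 * sigma2 * rho_L_second(eps, h)

def simpson(f, a, b, n):
    """Composite Simpson rule with n (even) subintervals."""
    step = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * step)
    return total * step / 3.0

def laplace_mean(func, mu, sigma2, width=40.0, n=4000):
    """E{func(eps)} for eps ~ L(mu, sigma2); the range is split at the kink at mu."""
    b = math.sqrt(sigma2 / 2.0)  # Laplace scale, so that the variance is 2 b^2
    def weighted(e):
        return func(e) * math.exp(-abs(e - mu) / b) / (2.0 * b)
    return (simpson(weighted, mu - width * b, mu, n)
            + simpson(weighted, mu, mu + width * b, n))

mu, sigma2, tau, h = 0.3, 0.25, 0.75, 0.3
lhs = laplace_mean(lambda e: rho_L_star(e, sigma2, tau, h), mu, sigma2)
rhs = rho_L(mu, tau, h)
print(lhs, rhs)  # should agree up to integration error
```

Unlike the normal case, the corrected loss here is available in closed form, which is consistent with the much shorter computing times reported for the Laplace corrected-loss estimator in § 3.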

2.4. Large sample properties

To establish the asymptotic results in this paper, we make the following assumptions.

Assumption 1. The samples {(yj, xj) : j = 1, . . . , n} are independent and identically distributed.

Assumption 2. The vector β0 is an interior point of the parameter space ℬ, a compact subset of ℝp.

Assumption 3. The expectation E(‖xj‖2) is bounded, and is a positive definite p × p matrix.

Assumption 4. Let $e_j = y_j - x_j^{T}\beta_0$. The conditional density of ej, fj(ej | xj), is bounded, and it is bounded away from zero and has a bounded first derivative in a neighbourhood of zero.

Assumption 5. For each j, is bounded as a function of τ.

Assumption 6. The kernel function K (u) is a bounded probability density function having finite fourth moment and is symmetric about the origin. In addition, K (u) is twice-differentiable, and its second derivative K(2)(u) is bounded and Lipschitz continuous on (− ∞, ∞).

Theorem 3 states the strong consistency of the proposed estimators for normal and Laplace measurement errors, respectively.

Theorem 3. (i) Suppose that the measurement error uj ∼ N(0, Σ), that Assumptions 1–5 hold, and that h → 0 and h = c(log n)−δ, where δ < 1/2 and c is some positive constant. Then β̂N → β0 almost surely as n → ∞. (ii) If the measurement error uj ∼ ALp(0, Σ) and Assumptions 1–4 and 6 hold, h → 0, and (nh)−1/2 log n → 0, then β̂L → β0 almost surely as n → ∞.

Assumption 2 ensures the existence of β̂N and β̂L, and the uniformity of the convergence of the minimand over ℬ, as required to prove the consistency. Assumptions 3 and 4 ensure that β0 is the unique minimizer of E{ρ(y, x, β)}. With normal measurement error, because the corrected quantile loss function is complicated, Assumption 5 is used in the Appendix to bound the first-order expansion of uniformly over ej. Assumption 5 is not needed for the Laplace measurement error. Assumption 6 specifies the conditions on the kernel function used in β̂L for the Laplace measurement error. In Theorem 3, the rate of h differs for normal and Laplace measurement errors. This difference is related to the smoothness of the measurement error distribution. It is well known in the deconvolution literature that the rates of convergence are lower for smoother error distributions (Carroll & Hall, 1988; Fan, 1992).

We next establish asymptotic normality of the proposed estimators. For notational simplicity, let β̂ denote the proposed corrected estimator, and ρ*(y, w, β, h) denote the corrected quantile loss function, for either normal or Laplace measurement errors. We make the following additional assumption.

Assumption 7. Let and . As n → ∞ and h → 0, there exist positive definite matrices D and A such that and .

Theorem 4. Suppose that Assumptions 1–7 hold, and β̂ is the consistent estimator of β0, either β̂N or β̂L defined in §§ 2.2 and 2.3. Then n1/2(β̂ − β0) → N(0, A−1D A−1) in distribution, as n → ∞.

2.5. Estimated measurement error covariance matrix

Thus far we have described our method under the assumption that the covariance matrix Σ is known. Applications where Σ is known exist, but are rare. The more common scenario is one in which an unbiased estimate, Σ̂, is available. Analysis then proceeds using Σ̂ as a plug-in estimator of Σ. A common design where this strategy is used is when each wj is itself the average of m replicate measurements wj,k (k = 1, . . . , m), each having variance Γ = mΣ. A consistent and unbiased estimator of Σ is Σ̂ = Γ̂/m, where

$$\hat\Gamma = \frac{1}{n(m-1)} \sum_{j=1}^{n} \sum_{k=1}^{m} (w_{j,k} - w_j)(w_{j,k} - w_j)^{T}$$

is based on n(m − 1) degrees of freedom; see Liang et al. (2007). The application data in § 4 have this structure with m = 6 in which case Σ̂ is estimated on 5n degrees of freedom. In the Monte Carlo study in § 3, we simulate this situation with m = 2.
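
For a single error-prone covariate, the replicate-based estimate described above amounts to pooling the within-subject sums of squares. The following is a minimal sketch for that scalar case; the function name and the toy replicate values are hypothetical and serve only to show the arithmetic.

```python
def estimate_error_variance(replicates):
    """Estimate the variance of the averaged surrogate w_j from replicates.

    replicates[j] holds the m replicate measurements w_{j,k} for subject j.
    The pooled within-subject variance (on n(m - 1) degrees of freedom)
    estimates the variance of one replicate; dividing by m gives the
    variance of the average w_j.
    """
    n = len(replicates)
    m = len(replicates[0])
    within_ss = 0.0
    for reps in replicates:
        mean_j = sum(reps) / m  # w_j, the average of the replicates
        within_ss += sum((r - mean_j) ** 2 for r in reps)
    gamma_hat = within_ss / (n * (m - 1))
    return gamma_hat / m

# Hypothetical toy data: n = 3 subjects with m = 2 replicates each.
replicates = [[1.0, 3.0], [2.0, 2.0], [0.0, 4.0]]
sigma2_hat = estimate_error_variance(replicates)
print(sigma2_hat)
```

In the vector case the squared deviations become outer products, but the degrees of freedom and the division by m are unchanged.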

Let σ be a q-dimensional vector consisting of the elements of the upper triangle of Σ including the diagonals, where q = p(p + 1)/2. To reflect the dependence on σ, we let ρ*(y, w, β, h, σ) denote the corrected quantile loss function, for either normal or Laplace measurement errors. We next establish the asymptotic properties of the corrected estimator,

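$$\hat\beta = \arg\min_{\beta \in \mathcal{B}} \sum_{j=1}^{n} \rho^{*}(y_j, w_j, \beta, h, \hat\sigma), \tag{5}$$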

where . Let Sj be a q-vector consisting of the elements of the upper triangle of the matrix including the diagonals. Define , and . We replace Assumption 7 with the following Assumption 7′.

Assumption 7′. As n → ∞ and h → 0, , , , , where A and D are p × p-positive definite matrices, and B and C are p × q matrices.

Theorem 5. Under the conditions of Theorem 3 and Assumption 7′, the estimator β̂ given in (5) is consistent and asymptotically normal with covariance matrix A−1D* A−1, where D* = D + {m(m − 1)}−2B E[{Sj − m(m − 1)σ}⊗2]BT + {m(m − 1)}−1(C BT + BCT).

Remark 1. Compared with Theorem 4, the covariance of β̂ has three additional terms due to the variation in the estimated measurement error variance. For normal measurement error, (yj, wj) are independent of Γ̂, so the last two terms of D* reduce to zero.

2.6. Some computational issues

Motivated by the method of Delaigle & Hall (2008), we propose a modified simulation-extrapolation-type strategy to choose the smoothing parameter h. The simulation and extrapolation method was introduced by Stefanski & Cook (1995) for estimation in a parametric setting; see also Stefanski (2000), Luo et al. (2006) among others. Delaigle & Hall (2008) showed how this strategy can be adapted to choose the smoothing parameter in nonparametric modelling.

Let β̂(h) be the corrected-loss estimator associated with smoothing parameter h. Define M(h) = E[{β̂(h) − β0}TΩ−1{β̂(h) − β0}] as the mean squared error of β̂(h), where Ω = cov{β̂(h)}. Ideally, we would like to find the optimal smoothing parameter h0 = argmin M(h). However, since M(h) depends on the unknown xj, the minimization of M(h) cannot be executed in practice. Instead, we develop two versions of M(h) by simulating higher levels of measurement errors. Let and denote independent and identically distributed random vectors from N(0, Σ) for the normal measurement error or from ALp(0, Σ) for Laplace measurement error depending on the error model assumed. Let , , and as the corrected-loss estimators based on samples (yj, ) and (yj, ), respectively. Define

where S* and S** are the sample covariance matrices of and , respectively. Let ĥ1 = argminh M1(h) and ĥ2 = argminh M2(h). Since measures in the same way that measures wj, it is reasonable to expect that the relationship between ĥ2 and ĥ1 is similar to that between ĥ1 and h0. Therefore, back extrapolation can be used to approximate h0. In our implementation, we use the linear extrapolation from the pair (log ĥ1, log ĥ2) and define the second-order approximation to h0 as .
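
The back-extrapolation step can be sketched as follows, assuming that the three error levels (original, once contaminated, twice contaminated) are treated as equally spaced, so that a straight line through (log ĥ1, log ĥ2) is extended one step back. Under that assumption, which is ours, the extrapolated value is ĥ1²/ĥ2; the function name is hypothetical.

```python
import math

def extrapolate_bandwidth(h1, h2):
    """Linear back-extrapolation on the log scale from (log h1, log h2).

    Assumes equal spacing between error levels, so that
    log h0 = log h1 - (log h2 - log h1), i.e. h0 = h1**2 / h2.
    """
    log_h0 = 2.0 * math.log(h1) - math.log(h2)
    return math.exp(log_h0)

print(extrapolate_bandwidth(0.5, 1.0))
```

If ĥ1 = ĥ2, the extrapolation leaves the bandwidth unchanged, which is the behaviour one would expect when added measurement error has no effect on the selected smoothing parameter.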

For corrected-loss approaches, one computational complication is that, in finite samples, the corrected objective function may not be globally convex in β; see also Stefanski (1989), Stefanski & Carroll (1985, 1987) and Nakamura (1990) for similar observations. If xj is measured with a Laplace error, then the corrected loss function in (4) tends to −∞ or +∞ as σ2 = βTΣβ → ∞, depending on the sign of the last term in brackets in (4). In such a case, the corrected objective function has no global minimum. However, it is locally convex around a local minimizer β̂ that is the desired corrected-loss estimate. In our work, when solving the minimization problem for β̂, we adopted the common strategy of starting from the naive estimator obtained by regressing yj on wj, and then searched using the R (R Development Core Team, 2012) function optim with default options. This algorithm worked well in our numerical studies.

3. Simulation study

We conduct a simulation study to investigate the performance of the proposed corrected-loss approaches. The data were generated from the model

$$y_j = 1 + x_j + (1 + \eta x_j)e_j, \quad j = 1, \ldots, n,$$

where ej ∼ N(0, σe2). Under the above model, the τth conditional quantile of y given x is β01(τ) + β02(τ)x with β01(τ) = 1 + σeΘ−1(τ) and β02(τ) = 1 + ησeΘ−1(τ), and Θ(·) is the cumulative distribution function of N(0, 1). We further assume that the xj are subject to measurement error following the model

$$w_j = x_j + u_j.$$

We consider four different cases. The measurement errors uj are generated from in Cases 1–2, from in Case 3, and from the normalized with mean zero and variance in Case 4. We set η = 0 in Case 1, corresponding to a homoscedastic model, and η = 0.2 in Cases 2–4, corresponding to heteroscedastic models. We give ej and uj standard deviations σe = σu = 0.5, so the assumption required by He & Liang’s method is satisfied in Case 1. In Cases 2–4 with heteroscedasticity, the variances of the regression errors depend on xj and thus are on a different scale from the measurement error variance.

For each case, 100 simulations are performed. Focusing on τ = 0.5 and τ = 0.75, we compare five estimators, including the naive estimator obtained from regressing yj on wj, He & Liang’s estimator, the proposed corrected-loss estimator for normal measurement error, the proposed corrected-loss estimator for Laplace measurement error and Wei & Carroll’s estimator obtained using the R program developed by Wei and Carroll with 20 iterations.

To make a fair comparison, in the implementation of He & Liang’s method, we first transform yi to with λ = [E{(1 + ηxj)2}]1/2σe/σu to match the marginal variance of regression error with the measurement error variance. The resulting coefficient estimates are then transformed back to the original scale. For the proposed corrected-loss estimators and Wei & Carroll’s approach, we generated an independent estimate of based on n degrees of freedom as explained in § 2.5 for each dataset. This simulates the situation in which each wj is the average of two replicate measurements or with .

In the implementation of the two proposed methods, we choose the smoothing parameter h following the simulation and extrapolation procedure in § 2.6 with Nb = 20. In Case 1, the mean ĥ for the corrected-loss estimators for normal and Laplace errors are 2.82 and 2.29 at τ = 0.5, and 1.04 and 1.09 at τ = 0.75, respectively.

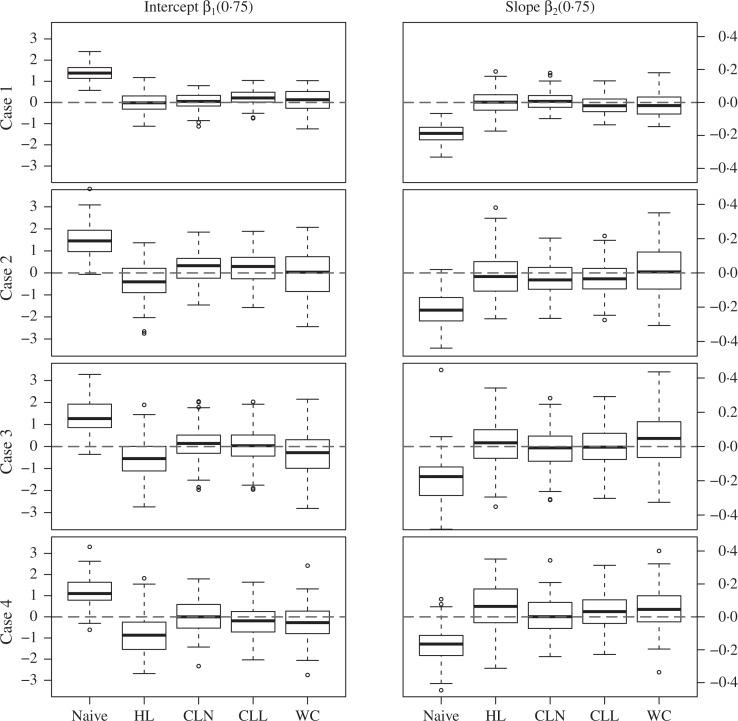

Figure 1 presents boxplots of β̂k(τ) − β0k(τ) (k = 1, 2) at τ = 0.75 from the five approaches. We omit the boxplots at τ = 0.5 since the main observations are similar to those at τ = 0.75. As expected, the naive estimator is seriously biased under all scenarios. He & Liang’s estimator performs well in Case 1 when ej and uj have the same distribution, but has considerable bias in Cases 2–4 with heteroscedastic regression errors for estimation at τ = 0.75. The two proposed estimators and Wei & Carroll’s estimator successfully correct the bias for both homoscedastic and heteroscedastic models. Even though the proposed methods require parametric assumptions on the measurement error distribution, they are quite robust against model misspecification. The two methods perform very well not only in Cases 1–3 when the normal error assumption is used for the Laplace measurement error and vice versa, but also in Case 4 when the measurement error distribution is substantially right skewed.

Fig. 1.

Boxplots of β̂k (τ) − β0k (τ), k = 1, 2 for different methods in Cases 1–4 at τ = 0.75. Naive, the naive method by regressing yj on wj; HL, He & Liang’s method; CLN, corrected-loss estimator for normal measurement error; CLL, corrected-loss estimator for Laplace measurement error; WC, Wei & Carroll’s method.

For detailed comparison, Table 1 summarizes the mean squared errors of the different estimators. The two proposed corrected-loss estimators are more efficient than Wei & Carroll’s estimator in all cases. In addition, since Wei & Carroll’s estimator requires estimation of the whole quantile process simultaneously, it is computationally much more expensive than the proposed estimators when the focus is on one or a few quantile levels. The normal corrected-loss estimator is slower than the Laplace corrected-loss estimator, as the corrected loss function involves an integral that has no closed form and thus requires numerical integration. For one simulated dataset in Case 2 with n = 200, using R version 2.8.1 on a 3 GHz Dell computer, estimation at the median required 9.7 seconds for the Laplace corrected-loss estimator, 496 seconds for the normal corrected-loss estimator, and it took 1020 seconds for Wei & Carroll’s estimator to obtain estimates at 39 quantile levels. The number of quantile levels required by Wei & Carroll’s estimator grows with the sample size n, and thus the computation is even more challenging for larger datasets.

Table 1.

Mean squared errors of different estimators of the intercept β1(τ) and slope β2(τ) parameters. The values in the parentheses are the Monte Carlo standard deviations

Entries are 100 × MSE{β̂1(τ)} in the first five method columns and 100 × MSE{β̂2(τ)} in the last five.

| Case | τ | β̂1(τ): Naive | β̂1(τ): HL | β̂1(τ): CLN | β̂1(τ): CLL | β̂1(τ): WC | β̂2(τ): Naive | β̂2(τ): HL | β̂2(τ): CLN | β̂2(τ): CLL | β̂2(τ): WC |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Case 1 | 0.5 | 179 (8) | 20 (4) | 15 (2) | 15 (2) | 18 (3) | 4.0 (0.2) | 0.4 (0.1) | 0.3 (0.1) | 0.3 (0.0) | 0.4 (0.1) |
| Case 1 | 0.75 | 201 (10) | 23 (3) | 16 (2) | 19 (2) | 28 (4) | 3.8 (0.2) | 0.5 (0.1) | 0.3 (0.1) | 0.3 (0.0) | 0.6 (0.1) |
| Case 2 | 0.5 | 192 (16) | 64 (9) | 47 (6) | 46 (6) | 67 (9) | 4.4 (0.4) | 1.5 (0.2) | 1.1 (0.1) | 1.1 (0.1) | 1.6 (0.2) |
| Case 2 | 0.75 | 275 (24) | 78 (12) | 58 (7) | 56 (7) | 105 (12) | 5.8 (0.5) | 1.5 (0.2) | 1.1 (0.1) | 1.2 (0.1) | 2.3 (0.3) |
| Case 3 | 0.5 | 192 (19) | 76 (11) | 74 (12) | 65 (10) | 104 (19) | 4.3 (0.4) | 1.8 (0.3) | 1.7 (0.3) | 1.5 (0.2) | 2.4 (0.5) |
| Case 3 | 0.75 | 250 (24) | 101 (14) | 63 (10) | 63 (9) | 132 (21) | 5.2 (0.5) | 1.7 (0.3) | 1.3 (0.2) | 1.3 (0.2) | 2.9 (0.5) |
| Case 4 | 0.5 | 205 (19) | 69 (10) | 56 (7) | 53 (8) | 87 (14) | 4.6 (0.4) | 1.6 (0.2) | 1.3 (0.2) | 1.2 (0.2) | 1.9 (0.3) |
| Case 4 | 0.75 | 194 (19) | 157 (17) | 59 (8) | 58 (8) | 75 (13) | 4.0 (0.4) | 2.5 (0.3) | 1.2 (0.2) | 1.2 (0.2) | 1.8 (0.3) |

Naive, the naive method by regressing y on w; HL, He & Liang’s method; CLN, corrected-loss estimator for normal measurement error; CLL, corrected-loss estimator for Laplace measurement error; WC, Wei & Carroll’s method.

In quantile regression, it is challenging to estimate the asymptotic covariance of the quantile coefficients directly, as the covariance matrix involves unknown density functions that are difficult to estimate in finite samples. For practical implementation, we adopt a simple bootstrap approach through resampling (yj , wj) with replacement. To accommodate the variation in the estimation of σu, for each bootstrap, we obtain the proposed estimators by using the estimated σu calculated with the bootstrap sample of the internal replicates wj,k. Bootstrap confidence intervals can be constructed by using the bootstrap standard error and the asymptotic normality of the proposed estimators. In each simulation run, 200 bootstrap samples are used to obtain the confidence intervals. Table 2 summarizes the coverage probabilities of 95% confidence intervals from the two proposed estimators. The bootstrap approach performs reasonably well. The confidence intervals of the proposed methods have empirical coverage probabilities close to the nominal level 95% even in cases where the parametric measurement error distribution is misspecified.
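
The resampling scheme above can be sketched generically. In the sketch below each subject record carries its response together with its replicate measurements, so that any estimator passed in can recompute the measurement error variance within each bootstrap sample. The function names and the toy data are hypothetical, and the sample median of y is used purely as a stand-in for a corrected-loss fit.

```python
import random
import statistics

def bootstrap_interval(subjects, estimator, n_boot=200, z=1.96, seed=0):
    """Normal-approximation interval from a bootstrap standard error.

    subjects  : list of per-subject records, resampled with replacement
    estimator : callable mapping a list of records to a scalar estimate
    """
    rng = random.Random(seed)
    n = len(subjects)
    point = estimator(subjects)
    boot_estimates = []
    for _ in range(n_boot):
        resample = [subjects[rng.randrange(n)] for _ in range(n)]
        boot_estimates.append(estimator(resample))
    se = statistics.stdev(boot_estimates)
    return point, se, (point - z * se, point + z * se)

# Hypothetical toy data: each record is (y_j, [w_{j,1}, w_{j,2}]).
rng = random.Random(42)
subjects = []
for _ in range(100):
    x = rng.gauss(0.0, 1.0)
    reps = [x + rng.gauss(0.0, 0.5) for _ in range(2)]
    subjects.append((1.0 + x + rng.gauss(0.0, 0.5), reps))

def placeholder_estimator(sample):
    # Stand-in for a corrected-loss fit; a real estimator would also use the replicates.
    return statistics.median([y for y, _ in sample])

point, se, interval = bootstrap_interval(subjects, placeholder_estimator)
print(point, se, interval)
```

Resampling whole subject records, rather than the averaged wj alone, is what allows the variation in the estimated σu to be carried through to the standard error.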

Table 2.

Coverage probabilities, %, of bootstrap confidence intervals with a nominal level of 95%

| Case | β1(0.5): CLN | β1(0.5): CLL | β2(0.5): CLN | β2(0.5): CLL | β1(0.75): CLN | β1(0.75): CLL | β2(0.75): CLN | β2(0.75): CLL |
|---|---|---|---|---|---|---|---|---|
| Case 1 | 97 | 95 | 97 | 95 | 96 | 92 | 96 | 92 |
| Case 2 | 95 | 96 | 95 | 96 | 91 | 94 | 91 | 94 |
| Case 3 | 91 | 94 | 91 | 94 | 91 | 92 | 91 | 92 |
| Case 4 | 98 | 95 | 98 | 95 | 92 | 92 | 92 | 92 |

CLN, the corrected-loss estimator for normal measurement error; CLL, the corrected-loss estimator for Laplace measurement error.

4. Application to dietary data

For illustration, we analyse a dietary dataset from the Women’s Interview Survey of Health. These data are from 271 subjects, each completing a food frequency questionnaire and six 24-hour food recalls on randomly selected days. The food frequency questionnaire is a commonly used dietary assessment instrument in epidemiology studies; see Carroll et al. (1997) or Liang & Wang (2005), among others. We focus on studying the impacts of long-term usual intake, body mass index and age, on the food frequency questionnaire intake, measured as percent calories from fat. As the long-term intake cannot be observed due to measurement errors and other sources of variability, the 24-hour recalls were used to obtain error-prone measurements of intake.

We consider the following linear quantile regression and measurement error models:

$$Q_\tau(y_j \mid x_j, z_{j1}, z_{j2}) = \beta_1(\tau) + \beta_2(\tau)x_j + \beta_3(\tau)z_{j1} + \beta_4(\tau)z_{j2},$$

$$w_{j,k} = x_j + u_{j,k}, \quad k = 1, \ldots, 6,$$

where yj, xj, zj1 and zj2 are the food frequency questionnaire intake, the long-term usual intake, body mass index and age of the jth subject, wj,k is the kth food recall intake of the jth subject uj,k is the measurement error with mean zero and variance , and the intake measurements are on the log scale. For illustration, we study quantile levels τ = 0.2, 0.5 and 0.8. For this dataset, each subject j has six internal replicates of food recall intake, wj,k (k =1, . . . , 6). Therefore, we estimate by , where . Thus the estimated variance of wj as a measurement of xj is . The attenuation factor, , is estimated as 0.737. Using the simulation and extrapolation method, we chose h as 0.3, 0.55 and 0.35 for the normal corrected-loss estimator, and 0.36, 0.45 and 0.21 for the Laplace corrected-loss estimator, at τ = 0.2, 0.5 and 0.8, respectively.

Table 3 summarizes the coefficient estimates β̂(τ) from the naive method, He & Liang’s method, Wei & Carroll’s method, the normal corrected-loss and the Laplace corrected-loss methods at three quantile levels. The values in parentheses are the corresponding bootstrap standard errors, based on 200 bootstrap samples. In the implementation of He & Liang’s method, we first transform yj to to put the variances of measurement and regression errors on the same scale, where s is the standard deviation of the estimated residuals obtained from the naive method at the median. The resulting coefficient estimates are then transformed back to the original scale. According to He & Liang (2000, Theorem 2.1), their estimator β̂1(τ) of the intercept converges to some quantity depending on βk(τ) (k = 2, 3, 4) and the unknown τ th quantile of the regression error. Therefore, we omit β̂1(τ) in Table 3.

Table 3.

Estimates (standard errors) of the quantile coefficients from different methods for the Women’s Interview Survey of Health data: β2(τ), β3(τ) and β4(τ) correspond to the effects of long-term usual intake, body mass index and age on the τth quantile of the food frequency questionnaire intake, respectively

| τ | Method | β2(τ) | β3(τ) | 10 × β4(τ) |
|---|---|---|---|---|
| 0.2 | Naive | 0.65 (0.12) | −0.11 (0.18) | 0.28 (0.42) |
| 0.2 | HL | 0.81 (0.18) | −0.11 (0.28) | 0.35 (0.38) |
| 0.2 | WC | 0.95 (0.18) | −0.19 (0.25) | 0.29 (0.39) |
| 0.2 | CLN | 0.82 (0.20) | −0.01 (0.17) | 0.10 (0.28) |
| 0.2 | CLL | 0.81 (0.16) | −0.05 (0.13) | 0.16 (0.26) |
| 0.5 | Naive | 0.51 (0.10) | 0.22 (0.16) | −0.01 (0.29) |
| 0.5 | HL | 0.71 (0.13) | 0.49 (0.27) | 0.00 (0.30) |
| 0.5 | WC | 0.70 (0.14) | 0.24 (0.16) | −0.13 (0.33) |
| 0.5 | CLN | 0.73 (0.14) | 0.31 (0.13) | 0.04 (0.27) |
| 0.5 | CLL | 0.71 (0.13) | 0.29 (0.15) | −0.00 (0.27) |
| 0.8 | Naive | 0.4 (0.17) | 0.5 (0.18) | −0.06 (0.37) |
| 0.8 | HL | 0.38 (0.26) | 0.75 (0.44) | 0.15 (0.41) |
| 0.8 | WC | 0.62 (0.15) | 0.51 (0.21) | −0.18 (0.36) |
| 0.8 | CLN | 0.70 (0.16) | 0.47 (0.15) | −0.05 (0.28) |
| 0.8 | CLL | 0.78 (0.24) | 0.71 (0.16) | −0.09 (0.33) |

Naive, the naive method; HL, He & Liang’s method; CLN, corrected-loss estimator for normal measurement error; CLL, corrected-loss estimator for Laplace measurement error; WC, Wei & Carroll’s method.

By accounting for the measurement error, both normal and Laplace corrected-loss methods identify a stronger association between food frequency questionnaire intake and the long-term intake at all three quantiles than the naive method. For instance, the normal corrected-loss estimates of β2(τ) increase by 26, 44 and 74% at τ = 0.2, 0.5, and 0.8, respectively, compared with the naive estimates. In contrast, He & Liang’s method gives a β2(τ) estimate smaller than the naive estimate at τ = 0.8. Both normal and Laplace corrected-loss methods suggest that body mass index has a significantly positive effect at τ = 0.8, but He & Liang’s method gives a larger β3(τ) estimate associated with a large standard error, which leads to insignificance. All methods show that age has no significant effect at any of the three quantiles. The effect of body mass index increases with the quantile level, and the effect of the long-term intake decreases with the quantile level, which indicates some form of heteroscedasticity. Our simulation demonstrated that He & Liang’s method gives biased estimates for such heteroscedastic data. Wei & Carroll’s method yields the same significance results as the Laplace corrected-loss method, but it is computationally much more expensive. Using the same computer, it took 218 hours to obtain the bootstrap standard error of Wei & Carroll’s estimates with 20 iterations for each of the 200 bootstrap samples, while it required only 35 minutes for the Laplace corrected-loss method.

5. Discussion

Our proposed estimation procedure has the following general structure. Since the quantile loss function cannot be corrected in the manner of Stefanski (1989) and Nakamura (1990), we projected the function into a class of suitably smooth functions via kernel smoothing. The corrected-loss method was then applied to the smoothed quantile objective function. We balanced the bias and variance by choosing the smoothing parameter using the simulation and extrapolation method of Delaigle & Hall (2008). This strategy is general and can be used in other problems where correction is possible after some smoothing of the objective functions.

We assumed a class of measurement errors, including normal and Laplace, for identification purposes. The two proposed estimators both showed robustness against misspecification of the measurement error distribution in the simulation study. Considering the finite-sample performance and the computational efficiency, we recommend the Laplace corrected-loss estimator for practical use. The corrected-loss methods developed herein can be extended to a wider class of distribution families, as long as their characteristic functions are proportional to the inverse of a polynomial; see Hong & Tamer (2003) for related discussions. The degree of the polynomial puts constraints on the smoothness of the objective function. Such an extension is beyond the scope of this paper.
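To indicate why an inverse-polynomial characteristic function makes such a correction possible, the following formal sketch may help. The polynomial Q, its coefficients c_k and the test function e^{isx} are notation introduced here for illustration only; regularity conditions are ignored, and the Laplace case is the only one that corresponds to a result stated in this paper.

```latex
% Assumed notation: u is a mean-zero measurement error, independent of everything else,
% with characteristic function \varphi_u(t) = E(e^{itu}) = 1/Q(t), Q(t) = \sum_{k=0}^{m} c_k t^k, c_0 = 1.
\begin{align*}
\bar g(x) &:= \sum_{k=0}^{m} c_k (-i)^k g^{(k)}(x), \\
g(x) = e^{isx} \;&\Longrightarrow\; \bar g(x) = Q(s)\, e^{isx}, \qquad
E\{\bar g(\mu + u)\} = Q(s)\, e^{is\mu}\, \varphi_u(s) = e^{is\mu} = g(\mu), \\
\text{Laplace: } Q(t) = 1 + \tfrac{1}{2}\sigma^2 t^2 \;&\Longrightarrow\; \bar g(x) = g(x) - \tfrac{1}{2}\sigma^2 g^{(2)}(x).
\end{align*}
```

The corrected function involves derivatives of g up to the degree m of Q, which is one way to read the remark that the degree of the polynomial constrains the smoothness required of the objective function.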

Acknowledgments

The authors would like to thank two anonymous reviewers, the associate editor and editor for constructive comments and helpful suggestions. This research was supported by the National Science Foundation, U.S.A., the National Institutes of Health, U.S.A. and the National Natural Science Foundation of China.

Appendix

Proof of Theorem 1. We first prove (i). By the definitions of $A(\epsilon, \sigma^2, h)$ and $\rho_N(\epsilon, h)$, we get $A(\epsilon, \sigma^2, h) = \epsilon(\tau - 1/2 + I_1/\pi) + I_2/\pi$, where

Recall that $\sin(x) = (e^{ix} - e^{-ix})/(2i)$ and $\cos(x) = (e^{ix} + e^{-ix})/2$. Then

Applying similar arguments, we have

We next show (ii). For any U ∼ N(0, 1), it is easy to show that

| (A1) |
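For reference, Gaussian identities of the following standard form are sufficient for the calculation that follows. This is a sketch of the kind of result needed, with a and b denoting generic real constants introduced here, and it is not claimed to reproduce the exact statement of (A1).

```latex
% U ~ N(0,1); a and b are generic real constants (notation assumed for this sketch).
\begin{align*}
E\{e^{i(a+bU)}\} = e^{ia - b^{2}/2}
  \;&\Longrightarrow\;
  E\{\sin(a+bU)\} = e^{-b^{2}/2}\sin(a), \quad
  E\{\cos(a+bU)\} = e^{-b^{2}/2}\cos(a), \\
E\{U\sin(a+bU)\} &= -\frac{\partial}{\partial b}\, E\{\cos(a+bU)\}
  = b\, e^{-b^{2}/2}\cos(a).
\end{align*}
```

Setting a = yμ and b = yσ gives the two terms μ sin(yμ) and yσ² cos(yμ), each multiplied by exp(−y²σ²/2).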

By (A1), $E\{\epsilon \sin(y\epsilon)\} = E\{(\mu + \sigma U)\sin(y\mu + y\sigma U)\} = e^{-y^{2}\sigma^{2}/2}\{\mu \sin(y\mu) + y\sigma^{2}\cos(y\mu)\}$. Therefore, we have

Proof of Lemma 1. Assertion (i) can be obtained from representation (6.3.4) in Kotz et al. (2001), and (ii) is a direct conclusion of Proposition 6.8.1 in Kotz et al. (2001).

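Proof of Theorem 2. Suppose that there exists a function $\bar g(\epsilon)$ such that $E\{\bar g(\epsilon)\} = g(\mu)$. We shall show that $\bar g(\epsilon) = g(\epsilon) - 0.5\sigma^{2} g^{(2)}(\epsilon)$. First recall that if $\epsilon \sim L(\mu, \sigma^{2})$, then $f(\epsilon) = (\sqrt{2}\sigma)^{-1} e^{-\sqrt{2}|\epsilon - \mu|/\sigma}$. Denote $\sigma = \sqrt{2}\,b$. Therefore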

| (A2) |

Differentiating both sides of the equation (A2) with respect to μ gives

| (A3) |

Differentiating (A3) again with respect to μ, we get $b^{-2}\{e^{-\mu/b} I_1(\mu) + e^{\mu/b} I_2(\mu)\} - b^{-2}\bar g(\mu) = g^{(2)}(\mu)$. Thus, we have $\bar g(\mu) = g(\mu) - b^{2} g^{(2)}(\mu) = g(\mu) - 0.5\sigma^{2} g^{(2)}(\mu)$.
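A quick sanity check of this correction, given as an illustrative example and not as part of the original proof, is the quadratic case, where only the mean and variance of ∊ are needed.

```latex
% Illustrative choice g(x) = x^2, so that g^{(2)}(x) = 2.
\begin{align*}
\bar g(\epsilon) &= \epsilon^{2} - 0.5\,\sigma^{2}\cdot 2 = \epsilon^{2} - \sigma^{2}, \\
E\{\bar g(\epsilon)\} &= E(\epsilon^{2}) - \sigma^{2} = (\mu^{2} + \sigma^{2}) - \sigma^{2} = \mu^{2} = g(\mu).
\end{align*}
```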

Proof of Theorem 3. For easy demonstration, we first show (ii). Define

By Theorem 2, E{ (w, β, h)} = E{ML (x, β, h)}. Therefore,

| (A4) |

Following the arguments used for proving Horowitz (1998, Lemma 1), we can show that the following relations hold almost surely as n → ∞:

| (A5) |

Since the corrected loss function (·) involves the second derivative of ρL(·), similar to Horowitz (1998, Lemma 3(b)), we obtain

| (A6) |

almost surely. Furthermore, under Assumption 6, $\sup_{\epsilon}|\rho_L(\epsilon, h) - \rho_\tau(\epsilon)| = \sup_{\epsilon}|\epsilon\{G_L(\epsilon/h) - I(\epsilon > 0)\}| = \sup_{t}|h t\, G_L(-t)| \le hE|Z| = O(h)$, where $t = |\epsilon/h|$ and $Z \sim G_L(\cdot)$. Therefore,

| (A7) |

almost surely. Combining (A4)–(A7), we have that as $h \to 0$ and $(nh)^{-1/2}\log n \to 0$,

almost surely. By Assumptions 3 and 4, β0 uniquely minimizes E {MN (x, β)} over ℬ. By White (1980, Lemma 2.2), β̂L → β0 almost surely. To prove (i), we define

By Theorem 1, E{ (w, β, h)} = E{MN (x, β, h)}. Therefore,

| (A8) |

Denote , where KN (u) = sin(u)/(uπ). For any t > 0, there exists an integer number k ⩾ 0 such that t ∈ (kπ, (k + 1)π], and

Therefore, we have almost surely

| (A9) |

and

| (A10) |

By arguments similar to Horowitz (1998, Lemma 3(a)), it is easy to see that

| (A11) |

almost surely. Let $\epsilon = y - w^{T}\beta$ and $\sigma^{2} = \beta^{T}\Gamma\beta$. By Assumption 5, the compactness of ℬ and the fact that $|\sin(t)/t| \le 1$, we have

where C1 and C2 are some positive constants. By Nolan & Pollard (1987, Lemma 22) and Pollard (1984, Theorem 2.37),

| (A12) |

almost surely, which is o(1) if $h = C(\log n)^{-\delta}$, where δ < 1/2 and C is some positive constant. The above equation together with (A5), (A8) and (A9)–(A11) gives supβ∈ℬ | (w, β, h) − E{M(x, β)}| = o(1) almost surely. The rest of the proof follows the same lines as that for Theorem 3(ii).
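The O(h) bound on the gap between the smoothed and unsmoothed quantile loss, used in the proof of Theorem 3(ii), can be checked numerically. The sketch below is illustrative only: it assumes a standard normal distribution function in place of the paper's G_L, so that E|Z| = √(2/π), and the function names and grid settings are hypothetical.

```python
import math


def norm_cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def check_loss(eps, tau):
    # Unsmoothed quantile loss eps * {tau - I(eps < 0)}.
    return eps * (tau - (1.0 if eps < 0 else 0.0))


def smoothed_loss(eps, tau, h):
    # ASSUMPTION: standard normal cdf used as the smoother G.
    return eps * (tau - 1.0 + norm_cdf(eps / h))


def sup_gap(tau, h, n=20001, span=10.0):
    # Largest |smoothed - unsmoothed| over a grid of eps in [-span * h, span * h].
    worst = 0.0
    for i in range(n):
        eps = (-span + 2.0 * span * i / (n - 1)) * h
        worst = max(worst, abs(smoothed_loss(eps, tau, h) - check_loss(eps, tau)))
    return worst


bound_const = math.sqrt(2.0 / math.pi)  # E|Z| for Z ~ N(0, 1)
ratios = [sup_gap(0.8, h) / h for h in (0.5, 0.1, 0.02)]
print(ratios, bound_const)
```

With this choice of smoother the ratio of the supremum to h is the same for every bandwidth (about 0.17) and stays below E|Z|, in line with the linear-in-h bound.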

Proof of Theorem 4. Let ρ*(y, w, β, h) denote the corrected quantile loss function for either normal or Laplace measurement errors. Define , . Furthermore, let , and . Under the conditions of Theorems 2 and 3, similar to (A6) and (A12), we have . Taylor expansion gives . By Assumption 7, we have . On the other hand, . By using the results of Theorems 1–2 and methods like those used to obtain the asymptotic means and variances of kernel density estimators, we have as n → ∞ and h → 0, where ψ1(y, x, β, h) = ∂ρ(y, x, β, h)/∂β and ρ(y, x, β, h) is the smoothed quantile loss function. Therefore, , which together with the central limit theorem gives in distribution.

Proof of Theorem 5. As in the proof of Theorem 3, the consistency of β̂ can be proven by using the fact that Σ̂ − Σ = Op(n−1/2). In addition, note that minimizing the objective function in (5) with the estimated covariance matrix Σ∊ is equivalent to solving the following stacked estimating equation , . The asymptotic normality can be proven by following the same arguments as in the proof of Theorem 4 and by expanding the stacked estimating function.

References

- Carroll RJ, Hall P. Optimal rates of convergence for deconvolving a density. J Am Statist Assoc. 1988;83:1184–6.

- Carroll RJ, Freedman L, Pee D. Design aspects of calibration studies in nutrition, with analysis of missing data in linear measurement error models. Biometrics. 1997;53:1440–57.

- Carroll RJ, Ruppert D, Stefanski LA, Crainiceanu C. Measurement Error in Nonlinear Models: A Modern Perspective. New York: Chapman and Hall; 2006.

- Delaigle A, Hall P. Using SIMEX for smoothing-parameter choice in errors-in-variables problems. J Am Statist Assoc. 2008;103:280–7.

- Fan J. Deconvolution with supersmooth distributions. Can J Statist. 1992;20:155–69.

- Fuller WA. Measurement Error Models. New York: Wiley; 1987.

- He X, Liang H. Quantile regression estimates for a class of linear and partially linear errors-in-variables models. Statist. Sinica. 2000;10:129–40.

- Hong H, Tamer E. A simple estimator for nonlinear error in variable models. J Economet. 2003;117:1–19.

- Horowitz JL. Bootstrap methods for median regression models. Econometrica. 1998;66:1327–52.

- Hu Y, Schennach SM. Identification and estimation of nonclassical nonlinear errors-in-variables models with continuous distributions using instruments. Econometrica. 2008;76:195–216.

- Kotz S, Kozubowski TJ, Podgórski K. The Laplace Distribution and Generalizations. Boston: Birkhäuser; 2001.

- Laplace PS. Memoir on the probability of causes of events. Mém. Acad. R. Sci. 1774;6:621–56. (Translated in Statist. Sci. 1, 359–78)

- Liang H, Wang N. Partially linear single-index measurement error models. Statist. Sinica. 2005;15:99–116.

- Liang H, Wang S, Carroll RJ. Partially linear models with missing response variables and error-prone covariates. Biometrika. 2007;94:185–98. doi: 10.1093/biomet/asm010.

- Luo XH, Stefanski LA, Boos DD. Tuning variable selection procedures by adding noise. Technometrics. 2006;48:165–75.

- McKenzie H, Jerde C, Visscher D, Merrill E, Lewis M. Inferring linear feature use in the presence of GPS measurement error. J Envir Ecol Statist. 2008;16:531–46.

- Nakamura T. Corrected score function for errors-in-variables models: methodology and application to generalized linear models. Biometrika. 1990;77:127–37.

- Nolan D, Pollard D. U-processes: rates of convergence. Ann Statist. 1987;15:780–99.

- Pollard D. Convergence of Stochastic Processes. New York: Springer; 1984.

- Purdom E, Holmes SP. Error distribution for gene expression data. Statist Appl Genet Mol Biol. 2005;4: Article 16. doi: 10.2202/1544-6115.1070.

- R Development Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2012.

- Richardson AD, Hollinger DY. Statistical modeling of ecosystem respiration using eddy covariance data: Maximum likelihood parameter estimation, and Monte Carlo simulation of model and parameter uncertainty, applied to three simple models. Agric Forest Meteorol. 2005;131:191–208.

- Schennach SM. Quantile regression with mismeasured covariates. Economet. Theory. 2008;24:1010–43.

- Stefanski LA. Unbiased estimation of a nonlinear function of a normal-mean with application to measurement error models. Commun. Statist. A. 1989;18:4335–58.

- Stefanski LA. Measurement error models. J Am Statist Assoc. 2000;95:1353–8.

- Stefanski LA, Carroll RJ. Covariate measurement error in logistic regression. Ann Statist. 1985;13:1335–51.

- Stefanski LA, Carroll RJ. Conditional scores and optimal scores for generalized linear measurement-error models. Biometrika. 1987;74:703–16.

- Stefanski LA, Carroll RJ. Deconvoluting kernel density estimators. Statistics. 1990;21:165–84.

- Stefanski LA, Cook JR. Simulation-extrapolation: the measurement error jackknife. J Am Statist Assoc. 1995;90:1247–56.

- Visscher DR. GPS measurement error and resource selection functions in a fragmented landscape. Ecography. 2006;29:458–64.

- Wei Y, Carroll RJ. Quantile regression with measurement error. J Am Statist Assoc. 2009;104:1129–43. doi: 10.1198/jasa.2009.tm08420.

- White H. Nonlinear regression on cross-sectional data. Econometrica. 1980;48:721–46.