Abstract

This article examines two issues: the role of gesture in the communication of spatial information and the relation between communication and mental representation. Children (8–10 years) and adults walked through a space to learn the locations of six hidden toy animals and then explained the space to another person. In Study 1, older children and adults typically gestured when describing the space and rarely provided spatial information in speech without also providing the information in gesture. However, few 8-year-olds communicated spatial information in speech or gesture. Studies 2 and 3 showed that 8-year-olds did understand the spatial arrangement of the animals and could communicate spatial information if prompted to use their hands. Taken together, these results indicate that gesture is important for conveying spatial relations at all ages and, as such, provides us with a more complete picture of what children do and do not know about communicating spatial relations.

Keywords: Spatial cognition, Communication, Gesture, Child development, Spatial representation, Learning

Introduction

When people talk, they gesture. Gesture and speech serve complementary roles, and effective communication often requires both modalities. In some cases, gesture highlights or emphasizes information conveyed in speech (Cassell & McNeill, 1991). For example, speakers can use their fingers to count when describing a list of items in order of importance. In other cases, gesture provides unique information (McNeill, 2005; Rauscher, Krauss, & Chen, 1996), including information that would be difficult, if not impossible, to communicate in words (Goldin-Meadow, 2003).

The communication of spatial information is a good example of a domain in which gesture has the potential to play a particularly important role. For example, when talking about locations in space, speakers can use deictic gestures to indicate specific locations. The speech component of this communicative act can be simple and spatially vague (e.g., “here” or “there”; McNeill, 2005), leaving gesture to do most of the communicative work in specifying the locations.

One of the roles gesture can play in communicating spatial information is to help us overcome an inherent limitation of language—the linearization problem (Levelt, 1981, 1982). Spatial relations must be communicated serially in language (Newcombe & Huttenlocher, 2000). Only one spatial relation can be described at a time. Some words encode direction but not distance (e.g., “right” and “left”), and some words encode distance but not direction (e.g., “near” and “far”). Of course, people may be able to mentally integrate the serial descriptions given in speech into some form of mental map, but the number of relations that must be spoken, recalled, and integrated can become mentally taxing (e.g., Brunye, Rapp, & Taylor, 2008).

In contrast, gesture can be used to convey multiple pieces of information simultaneously. Thus, gesture can help speakers to deal with some of the challenges of the linearization problem. For example, speakers can use their hands to set up locations in space and then refer back to those locations throughout the communication. Using gesture in this manner allows the structure of a physical space (or a metaphorical space such as a diagram) to emerge through the depiction of multiple relations (Enfield, 2005; So, Coppola, Licciardello, & Goldin-Meadow, 2005). Thus, a particularly important use of gesture is to communicate relations among locations, which we refer to here as relational information. For example, Emmorey, Tversky, and Taylor (2000) asked English-speaking adults to describe the layout of several large-scale spaces (e.g., a town and a convention center). Although the participants were not instructed to gesture, many did gesture. Some participants used gestures to form models of the space, which were particularly useful in conveying spatial relations. One participant conveyed the spatial relations among the school, the town hall, and the store by holding his left hand (which he identified as standing for the school) in one location and positioning his right hand in relation to the left in order to locate the town hall and store. With just a few hand movements, speakers were able to convey important features of a complex environment that would have taken many words to express and much mental effort to understand. Because gesture afforded speakers the ability to visually depict relations and reference points, its use greatly facilitated the communication of spatial relations.

Although earlier research on spatial communication focused primarily on speech, more recent research has begun to take seriously the unique and complementary role gesture can play in communicating spatial information that would be difficult to convey in speech. However, relatively little research has taken a developmental approach to using gesture to communicate spatial information, particularly spatial relations. Our goal in this research was to consider the role of gesture in the development of spatial communication, with a focus on the communication of spatial information that can be difficult to express in words.

Gesture and the development of spatial communication

Our focus here is on how gesture is used over development to communicate spatial relational information. There are two reasons to investigate this issue. First, at a general level, gesture has been shown to be both an important influence on and an important indicator of cognitive development and learning. Children’s gestures when solving problems sometimes reveal that they know more about the underlying concept than their words alone reveal. For example, when solving Piagetian conservation problems (Church & Goldin-Meadow, 1986) and mathematics equivalence problems (Alibali & Goldin-Meadow, 1993; Garber, Alibali, & Goldin-Meadow, 1998; Perry, Church, & Goldin-Meadow, 1988), children often use gestures that indicate they are beginning to reach a new stage of understanding even when their words suggest otherwise. Moreover, gesture can be used as a vehicle for influencing learning. For example, teaching children to use certain gestures (Goldin-Meadow, Cook, & Mitchell, 2009), and even just telling children to move their hands as they explained how they solved a set of math problems (Broaders, Cook, Mitchell, & Goldin-Meadow, 2007), led to an improved understanding of the concept of mathematical equivalence. Taken together, these studies suggest that analyzing children’s gestures (and encouraging gesture use) can shed significant light on cognitive development and mechanisms.

There is also a more specific reason to include gesture in work on the development of spatial communication. Communicating spatial relational information in speech has been found to be challenging for young children. At 3 years of age, children can describe the spatial location of an object when the location can be specified with respect to a single salient landmark. However, when asked to describe locations that require specifying a spatial relation between two landmarks, children have trouble in disambiguating the information (Plumert & Hawkins, 2001; Weist, Atanassova, Wysocka, & Pawlak, 1999). At 6 years of age, children have a better lexicon for describing spatial relations but still have difficulty in organizing and accurately describing these relations (Allen, Kirasic, & Beard, 1989; Plumert, Pick, Kintsch, & Wegestin, 1994). For example, Plumert and colleagues (1994) asked children and adults to verbally describe the relations among important locations in the environment, starting from the largest spatial unit (e.g., the correct floor) and moving to the smallest spatial unit (e.g., the hiding space). Adults were able to organize their descriptions from largest to smallest spatial unit, but 6-year-olds were not able to do so unless they were prompted during the task (e.g., “Where do you go first? What comes next?”). Even children as old as 10 years continued to have difficulty in organizing their descriptions in a spatial manner (Plumert et al., 1994).

However, most studies of children’s spatial communication have focused exclusively on verbal communication. Only a few studies have investigated the role of co-speech gesture in children’s spatial communication (e.g., Iverson, 1999; Iverson & Goldin-Meadow, 1997; Sekine, 2009). Iverson (1999) asked blind and sighted children (9–18 years) to give route descriptions and found that both groups expressed information about location and direction in gesture that was not expressed in speech. However, this study did not examine developmental differences in gesture use and focused primarily on route descriptions. More recently, Sekine (2009) asked 4-, 5-, and 6-year-olds to describe their route to school. The 4-year-olds’ gestures were often piecemeal; for example, pointing in the direction of their house or toward the nursery school gate. By age 6, the gestures were more abstract and depended less on the environment as a reference frame (i.e., they began to look more model-like). Sekine’s work clearly shows that children can use gesture in abstract ways; whereas the 4-year-olds produced gestures with perspectives tied to specific points along the route, the 6-year-olds often used gestures that were less perspective dependent (and therefore more survey-like). However, this work focused on perspective taking rather than the communication of relational information.

Our primary goal in this study was to examine how children use gesture to communicate spatial relational information. As noted above, gesture is critically important in adults’ communication of spatial information. But most of the previous studies that have demonstrated weaknesses in children’s communication of spatial relations have not examined gesture. We investigated how gesture and speech work together to convey information about spatial locations and whether the relation between gesture and speech changes with age. We predicted that including gesture in the analysis of children’s descriptions of spatial relational information would reveal greater competence than focusing on speech alone.

Our second goal was to examine sources of difficulty in children’s communication of relational information. One possible source of difficulty is that children might not know the relations or might represent them differently than adults would. That is, children could have qualitatively different mental representations of spaces than adults, which could then lead to differences in communication. Traditionally, researchers have assumed that developmental differences in mental representations stem from the difficulty children have in integrating the multiple relations among objects in space (Hazen, Lockman, & Pick, 1978; Piaget & Inhelder, 1956; Siegel & White, 1975). According to this developmental view, as children get older, their representations of space progress from loose groupings of landmarks to more integrated map-like representations. For example, when asked to make models of the layout of a space they previously navigated, 5-year-olds often misrepresent turns and create a model that looks very different from the actual layout (Hazen et al., 1978). Even by 8 years of age, children continue to have some difficulty in integrating relations. Uttal, Fisher, and Taylor (2006) found that 8-year-olds were less likely to integrate spatial relations learned through verbal descriptions than 10-year-olds and adults; some 8-year-olds constructed models of the space that preserved the order of the landmarks heard in the description but did not preserve the overall spatial configuration.

A second possible source of difficulty is that children may be able to represent the spatial relations but may have more difficulty than adults in communicating what they know about those relations. Recent studies have shown that, at least under some circumstances, children can form integrated survey-like representations of spaces (Davies & Uttal, 2007; Spelke, Gilmore, & McCarthy, 2011; Uttal, Fisher, et al., 2006; Uttal & Wellman, 1989). If children know the relations but it is not apparent to them how to communicate this information, we may see important changes in their communication if we encourage gesture as a tool for conveying relations. Because relational information is comparatively easy to express using gesture, encouraging children to gesture has the potential to bring out spatial relational knowledge that they might not convey in speech.

We tested 8-, 9-, and 10-year-olds and adults. We focused on these ages because, by age 8, children are able to form mental representations of space, although these representations sometimes differ from older children’s and adults’ representations; by age 10, most children are capable of forming mental representations of spaces that include information about the relations among locations, at least for simple spaces (Siegel & White, 1975; Uttal, Fisher, et al., 2006). Thus, by including these age groups, we were able to ask whether, and how, the communication of spatial relations develops in relation to participants’ mental representation of these relations.

Participants in Study 1 first learned the locations of six toy animals hidden in a room. They were then asked to describe the space to someone who had never seen the room before. Their descriptions were videotaped and then transcribed and coded for speech and gesture. We examined the children’s ability to communicate the spatial relations among the locations, first using the lens through which spatial communication is typically assessed—speech—and then widening our lens to include gesture. We investigated whether including gesture in the analyses would provide a different, and more complete, picture of children’s understanding of space than focusing on speech alone. We also examined participants’ performance on a model construction task in which they were asked to re-create the space using photographs of the animals. The model construction task allowed us to examine whether any developmental differences we find in spatial communication are possibly related to developmental differences in spatial representations of the space.

Study 1

Method

Participants

Participants were 46 children and 23 adults from the greater Chicago area. The children were recruited through direct mailings to their parents. There were 15 8-year-olds (M = 102 months, range = 96–108), 15 9-year-olds (M = 114 months, range = 109–119), and 16 10-year-olds (M = 128 months, range = 122–132). The adults were undergraduate students in an introductory psychology course, and they received class credit for their participation.

Materials

The materials were six large wardrobe boxes, six toy animals, and a video camera. The wardrobe boxes were arranged in two rows of three boxes in a 4.27 by 4.27-m room. Each box measured 35.56 cm in width by 30.48 cm in length by 1.22 m in height. The distance between rows was 1.07 m, and the distance between the boxes in each row was 0.6 m. There was a different toy animal in each box. Participants opened the boxes by pulling down a flap on the upper front. The openings of all boxes (and the animals within) faced the entrance to the room.

Procedure

The experimenter told the children that they needed to learn the locations of six animals and then describe the locations to their parents so that the parents could find the six animals in the room. Adults were told that they would tell a fellow undergraduate student (a lab assistant) the locations of the animals so that the student could later find the animals.¹ All participants were tested individually.

Learning phase

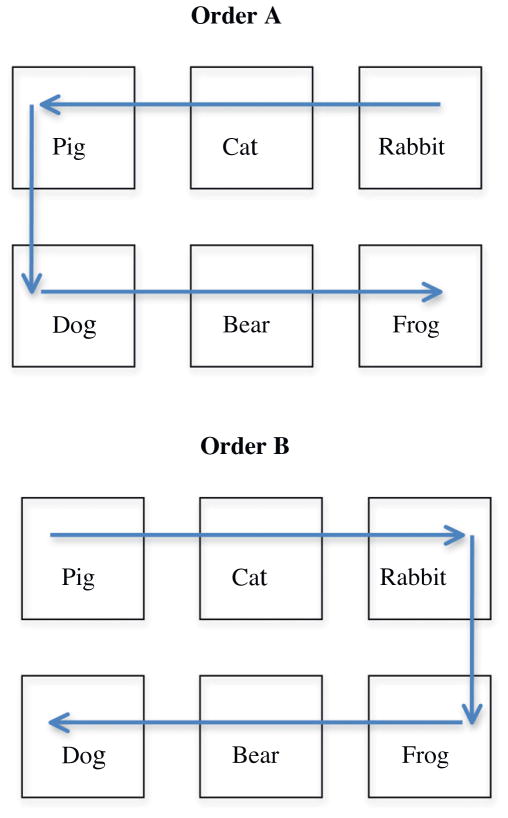

In a small testing room, the experimenter showed the participant the six stuffed animals, one at a time, and asked him or her to identify each. After each animal was correctly identified, the experimenter moved the animals to the large room and placed them in their designated boxes. The experimenter then brought the participant to the large room to learn where each animal was located. All of the boxes were closed when the participant first entered the room, and there was never more than one box open at a time. The participant learned the animals in one of two orders: either clockwise starting at the pig or counterclockwise starting at the rabbit (see Fig. 1). The experimenter did not use spatial language during the learning phase and instead used neutral language such as “Let’s see which animal is in this box.” The participant walked in either the clockwise or counterclockwise route two times and each time looked in each animal’s box. When the participant walked along the route a third time, the experimenter asked him or her to name the animals inside before opening the box (to make sure the participant had learned the locations). At each box, the experimenter asked the participant, “Do you remember which animal was here?” and then opened the box to show the participant whether he or she was correct. Once all boxes had been correctly identified, the experimenter touched the top of each box in the same order and asked the participant to identify the animal inside. This time, the experimenter did not open the boxes. The participant did not proceed to the description task until he or she could correctly identify each animal in each box. All participants, including the 8-year-olds, remembered the locations of all the animals by the end of this procedure.

Fig. 1.

Path orders during the learning phase.

Description task

The description task took place in a small nearby room. Before leaving the room with the boxes, the participant was given the following instructions for the description task: “Do you feel confident that you know where all the animals are? Okay, now we’re going to return to the other room where you will be able to describe this space to your [mom/dad/friend]. Your goal is to help [him/her] learn where all the animals are so that [he/she] could come in here and know where all the animals are without having to open the boxes.” If the participant said that he or she was not confident, the participant had the option of seeing the animals one last time. Once in the small room for the description task, the participant sat facing in the same direction as when he or she first entered the large testing room. The interlocutor sat directly across from the participant. The interlocutor was allowed to ask clarification questions after the participant had finished describing the space. The description task was videotaped. Only the description prior to the interlocutor probes was used for analysis because of the inconsistency in interlocutor probes.²

Coding the description task

For the description task, we first assessed participants’ ability to communicate the locations of the animals in speech alone (the traditional measure) and then included gesture to see whether children revealed knowledge in their hands that was not found in their speech. To assess the information conveyed in speech, we transcribed the speech of participants and examined whether and how they communicated the spatial information. To assess the information conveyed in gesture, we watched the videos and coded the content of the gestures participants produced during their descriptions. Two kinds of spatial information were coded: overall layout information and relative locations.

Overall layout information

Layout information specifies the overall structure or outline of the space. Participants could communicate layout information in speech, gesture, or both. We first coded the quality of the layout information in speech or gesture and then coded whether, taken together, gesture augmented speech to provide a more detailed layout of the space. We had parallel coding schemes for speech and gesture. Participants could fall into one of four categories for each modality, listed from lowest to highest quality of layout information: No layout, Basic layout, Partial layout, and Full layout.

In speech, participants achieved a Basic layout by mentioning rows or columns but specifying neither the number of rows/columns nor the number of boxes per row/column. For example, a participant who says, “There were boxes in lines,” would be conveying a Basic layout. Participants achieved a Partial layout by mentioning either the number of rows/columns or the number of boxes per row/column but not both pieces of information. For example, a participant who says, “There were two rows of boxes,” would be conveying a Partial layout because he did not specify the number of boxes in each row. Participants achieved a Full layout by mentioning both the number of rows/columns and the number of boxes per row/column such that a 2 × 3 layout emerges. For example, a participant who says, “The boxes were set up in two rows of three boxes each,” would be conveying a Full layout. Participants who did not explicitly mention any layout information were classified as having produced No layout.

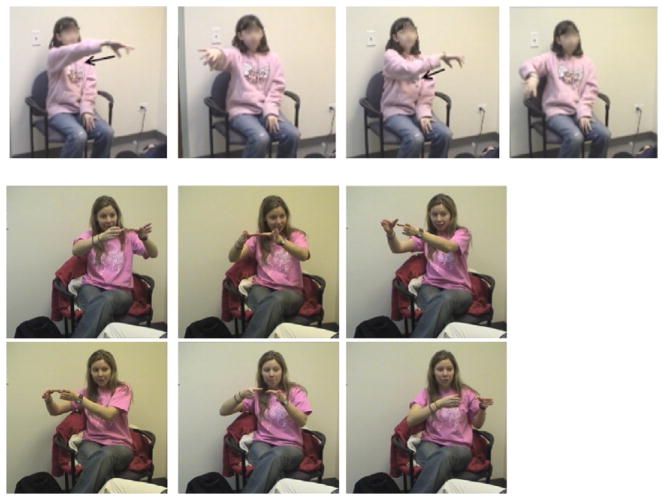

For gesture, we coded layout in a similar way. We begin by describing how participants used gesture to convey spatial information, which (unlike spatial information in speech) was often conveyed simultaneously (e.g., indicating rows by placing their arms or hands parallel to each other, mirroring the relative positions of the rows). Participants also indicated rows or columns by tracing them in the air (see Fig. 2, top). Participants indicated the boxes by using placing or pointing gestures; they used three adjacent gestures to match the relative positions of three boxes in a row or used two adjacent gestures to match the relative positions of two boxes in a column. Participants indicated the 2 × 3 structure of the space using several methods. Some participants combined the previously described methods. For instance, when tracing the two lines to indicate rows, some participants punctuated the line three times to indicate the locations of the boxes in the row. Other participants created new gestures to indicate the 2 × 3 structure. These participants extended three fingers of each hand and placed one hand in front of the other. Each hand indicated a row, and each finger indicated a box.

Fig. 2.

Examples of how gesture was used to convey the overall layout of the space (top) and the locations of the boxes in relation to one another (bottom).

As in speech, participants achieved a Basic layout in gesture by indicating rows or columns without specifying either the number of rows/columns or the number of boxes per row/column. Participants achieved a Partial layout in gesture by indicating either the number of rows/columns or the number of boxes per row/column but not both pieces of information. Participants achieved a Full layout in gesture by indicating both the number of rows/columns and the number of boxes per row/column such that a 2 × 3 layout emerges. Participants who did not gesture any layout information were classified as having produced No layout in gesture. When coding layout gestures, we attended to the semantic unit conveyed (e.g., rows, 2 × 3) rather than the number of gestures used to make up the semantic unit. Therefore, we coded for the total amount of information conveyed by the gestures. For example, a participant could convey the 2 × 3 Full layout simultaneously by extending two fingers representing the rows overlaid by three fingers representing the columns. But if a participant gestured “two rows” and then “three columns,” she would also be counted as giving a Full layout. This procedure is parallel to our coding for speech; if a participant said “two rows” and “three columns,” this response was similarly counted as a Full layout.

Finally, we combined our codes for speech and gesture to see how much information was provided when both modalities were taken into account. The goal of this coding was to see whether including gesture in the analysis would give a richer picture of participants’ spatial layout information than speech alone. For example, consider an adult who says, “There are two rows of boxes,” to describe the layout. Her speech gives us a general idea of the configuration but does not indicate how many boxes were in each row. She would be credited with a Partial layout in the speech analysis. When we look at her gestures, however, we see that she traces two lines with her hand and punctuates three times per line, indicating the positions of the three boxes in each row. Therefore, she would be credited with a Full layout in the analysis taking both speech and gesture into account.
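
The layout categories and the combined speech-and-gesture coding can be illustrated with a minimal Python sketch. The class and function names are hypothetical, and the pooling rule (a feature counts as conveyed if either modality conveys it) is an assumption inferred from the example above rather than a documented coding procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayoutInfo:
    """Layout features conveyed in one modality (speech or gesture)."""
    indicates_lines: bool = False       # rows or columns indicated at all
    gives_line_count: bool = False      # number of rows/columns specified
    gives_boxes_per_line: bool = False  # number of boxes per row/column specified


def layout_level(info: LayoutInfo) -> str:
    """Map the conveyed features onto the four layout categories."""
    if info.gives_line_count and info.gives_boxes_per_line:
        return "Full layout"
    if info.gives_line_count or info.gives_boxes_per_line:
        return "Partial layout"
    if info.indicates_lines:
        return "Basic layout"
    return "No layout"


def pool(speech: LayoutInfo, gesture: LayoutInfo) -> LayoutInfo:
    """Pool features across modalities (assumed rule: logical OR per feature)."""
    return LayoutInfo(
        indicates_lines=speech.indicates_lines or gesture.indicates_lines,
        gives_line_count=speech.gives_line_count or gesture.gives_line_count,
        gives_boxes_per_line=(
            speech.gives_boxes_per_line or gesture.gives_boxes_per_line
        ),
    )


# The adult from the example: "There are two rows of boxes" in speech,
# two traced lines punctuated three times each in gesture.
speech = LayoutInfo(indicates_lines=True, gives_line_count=True)
gesture = LayoutInfo(
    indicates_lines=True, gives_line_count=True, gives_boxes_per_line=True
)

print(layout_level(speech))                 # Partial layout
print(layout_level(pool(speech, gesture)))  # Full layout
```

Coding features rather than counting individual gestures mirrors the decision to attend to the semantic unit conveyed.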

Relative locations

Communicating layout information (i.e., describing the space in gesture and/or speech in terms of two rows and three columns) does not, in itself, provide specific information about the locations of the animals (i.e., which animal was next to, above, or below another animal). Therefore, we also coded whether participants conveyed the specific relative locations of the animals.

Relative location information informs the listener (in speech or gesture) about the position of individual animals in relation to other animals. In coding relative location, we counted how many correct locations were conveyed in speech, how many were conveyed in gesture, and how many were conveyed in both speech and gesture. As with the layout information, the content of the coding scheme was parallel for speech and gesture.

To communicate the relative location of an animal, the participant needed to give enough information about the animal’s location that another person could locate the animal without being familiar with the room or the layout of the boxes. Participants received 1 point for every animal that was successfully located, for a maximum score of 6. Coders operated under the guideline that another person should be able to draw the location of an animal from the information given; if not, the communication was considered as ambiguous and participants received no points for it.

First, we examined how many locations participants communicated in speech. To convey relative location information in speech, participants used relational phrases such as “to the right,” “to the left,” “between,” “in front of,” and “behind” (e.g., “The pig is to the left of the cat”). Participants also described locations in relation to the overall structure such as “front right corner” and “back left corner” (e.g., “The rabbit is in the back right corner”). To receive credit for a location, the participant needed to fully specify the animal’s location; a nonspecific term such as “next to,” “near,” “here,” or “there” was not sufficient to convey a specific location.

Next, we examined how many locations participants communicated in gesture. To convey relational information in gesture, participants could use their hands to indicate the locations of the animals by pointing to the imagined locations or by using a placing hand to indicate the locations on a horizontal or vertical plane (see Fig. 2, bottom). These locations, taken together, formed a model of the space. Participants could create partial models of the space as well (e.g., fewer than six locations). In coding partial models, we looked at whether the locations specified were in the correct positions relative to one another. For example, if the participant conveyed only “pig” and “cat,” these locations would need to be next to each other in order to be scored as two locations.

Finally, we combined our codes for speech and gesture to discover how many relative spatial locations were communicated using both modalities and how many were communicated uniquely in one modality. For example, consider a 9-year-old boy who described the relations in speech as follows: “First one was the rabbit, the second one was the cat, and the third one was the pig, and the fourth one was the dog, the fifth one was the bear, and the sixth one was the frog.” The boy gave a sequence, but he did not explicitly give any spatial relations in speech. Therefore, he would receive no points for relations in speech. When we included his gesture, however, we found that he used a pointing gesture to indicate each animal’s relative location as he named the animal in speech. He did this for all six animals and, therefore, received 6 points for relations in gesture.

At times, the gestures conveying spatial locations were accompanied by specific spatial terms; the locations indicated would then be classified as having been conveyed in both speech and gesture. At other times, the gestures were accompanied by nonspatial speech (as in the preceding example where the child simply named the animals while pointing to a location in the gesture space); the locations indicated would then be classified as having been conveyed only in gesture.
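
The modality classification for relative locations amounts to simple set bookkeeping. The following Python sketch uses hypothetical names and assumes that each animal's location has already been judged as correctly conveyed (or not) in each modality.

```python
from __future__ import annotations

ANIMALS = ("pig", "cat", "rabbit", "frog", "bear", "dog")


def tally_locations(speech: set[str], gesture: set[str]) -> dict[str, int]:
    """Count correctly conveyed locations by modality.

    `speech` and `gesture` hold the animals whose locations were judged
    as fully specified in that modality (hypothetical input format).
    """
    return {
        "speech": len(speech),
        "gesture": len(gesture),
        "both": len(speech & gesture),
        "speech_only": len(speech - gesture),
        "gesture_only": len(gesture - speech),
    }


# The 9-year-old from the example: animals named in sequence (no spatial
# terms in speech), each accompanied by a pointing gesture to its location.
boy = tally_locations(speech=set(), gesture=set(ANIMALS))
print(boy["speech"], boy["gesture"], boy["gesture_only"])  # 0 6 6
```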

A second coder transcribed the speech and gesture of a subset of the videos (n = 23, 28% of participants). Within this sample, 52% produced information in speech and gesture, 9% produced information in gesture only, 4% produced information in speech only, and 35% produced no spatial information. Agreement between coders was 100% (kappa = 1) for identifying layout descriptions in speech and 91% (kappa = .82) for identifying layout descriptions in gesture. Agreement between coders was 93% (kappa = .86) for the number of relations in speech and 96% (kappa = .91) for the number of relations in gesture. The coders revisited the videos and resolved disagreements through discussion.
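
For readers unfamiliar with the agreement statistic, the sketch below computes percentage agreement and kappa for two coders. It assumes the reported kappa is Cohen's kappa, which the text does not state explicitly, and the codes in the example are invented for illustration; they are not data from the study.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Proportion of items on which the two coders assigned the same code."""
    return sum(a == b for a, b in zip(coder_a, coder_b)) / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: agreement corrected for chance agreement."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("Both coders must rate the same non-empty item set.")
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of the coders' marginal proportions per code.
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical code throughout
    return (observed - expected) / (1 - expected)


# Hypothetical codes for four videos (illustration only).
coder_1 = ["layout", "layout", "none", "none"]
coder_2 = ["layout", "none", "none", "none"]
print(percent_agreement(coder_1, coder_2))  # 0.75
print(cohens_kappa(coder_1, coder_2))       # 0.5
```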

Model construction task

To assess participants’ memory of the space, we asked them to create a model of the locations of the animals using cards with photos of the animals pasted on them. This task assessed whether any developmental differences found in spatial communication could be explained by limitations in children’s memory. That is, even though all participants knew the locations of the animals at the end of the learning phase, some may have forgotten the locations and the spatial arrangement.

The experimenter handed participants randomly sorted cards with photos of the animals on them. Participants were asked to place the cards on the table “like how the boxes were in the room.” Participants received 1 point for each card that they placed on the table in the correct position, with a maximum score of 6. A fully correct model, worth 6 points, would be a 2 × 3 grid with each animal in its correct relative location. If participants placed the cards in incorrect relative locations, they would receive fewer points (or no points at all). For example, if a participant switched the cards for two of the locations (e.g., putting the dog where the frog belonged and vice versa) but all of the other cards were in the correct relative locations, she would receive 4 points.
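
The scoring rule for the model construction task can be sketched in Python as follows. The grid arrangement is taken from the participants' descriptions quoted in this article, and the function name is hypothetical. Comparing absolute grid cells is a simplification of "correct relative location" adopted here for illustration.

```python
# Assumed arrangement, as seen from the room entrance (left to right).
CORRECT_MODEL = (
    ("pig", "cat", "rabbit"),  # back row
    ("dog", "bear", "frog"),   # front row
)


def score_model(placed):
    """Award 1 point per card placed in its correct grid cell (maximum 6)."""
    return sum(
        card == target
        for placed_row, correct_row in zip(placed, CORRECT_MODEL)
        for card, target in zip(placed_row, correct_row)
    )


# The example from the text: dog and frog switched, all other cards correct.
swapped = (
    ("pig", "cat", "rabbit"),
    ("frog", "bear", "dog"),
)
print(score_model(CORRECT_MODEL))  # 6
print(score_model(swapped))        # 4
```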

Results

Spatial understanding conveyed in talk

We first assessed participants’ descriptions of the spaces they had walked through using the traditional measure—speech alone. The typical 8-year-old mentioned the animals in sequential order without conveying much relational information or information about the layout. For example, one 8-year-old said, “First there was a pig, then there was a cat, then there was a rabbit, then there was a frog, then there was a bear, and then there was a dog.” The older children incorporated more spatial information into their descriptions, and by adulthood speakers often gave highly spatial descriptions of the room. For example, they would first describe the layout of the room and then describe the relations of the animals in the boxes. A typical adult described the space as follows: “So you walk into the room, and there are three boxes in the first row and three boxes in the second row. So in the first row, going left to right when you enter the room, first, there’s a dog. In the next box, there’s a bear, and in the last box, all the way on the right, there is a frog. And then, the second row, left to right, in the first box, on the left, there is a pig and in the middle there is a cat, and in the last box, all the way on the right, there is a rabbit.”

Overall layout information

We first separated participants in each age group according to whether or not they mentioned any layout information in speech and compared the groups using a chi-square test. We found a reliable difference across groups, χ²(3, N = 69) = 25.46, p < .001 (see Table 1). We then compared groups using individual chi-squares and found significant differences between the numbers of adults and 8-year-olds who gave layout information, χ²(1, N = 37) = 19.71, p < .01, between the numbers of adults and 9-year-olds who gave layout information, χ²(1, N = 37) = 11.09, p < .01, and between the numbers of adults and 10-year-olds who gave layout information, χ²(1, N = 38) = 9.84, p < .01. There were no other significant differences between age groups.

Table 1.

Layout information by age and modality.

| Analysis | 8 years | 9 years | 10 years | Adults |
|---|---|---|---|---|
| Layout in speech | 7% (n = 1) | 27% (n = 4) | 31% (n = 5) | 83% (n = 19) |
| Layout in speech or gesture | 14% (n = 2) | 40% (n = 6) | 44% (n = 7) | 92% (n = 21) |
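
For readers who want to check the omnibus test against Table 1, the following is a minimal sketch of a Pearson chi-square computed from the counts. The function name `pearson_chi_square` is our own, and the group sizes (15, 15, 16, and 23) are taken from the per-group counts reported elsewhere in the Results; this is an illustration, not the analysis script used in the study.

```python
def pearson_chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_totals) - 1)
    return chi2, dof


GROUP_SIZES = [15, 15, 16, 23]      # 8, 9, and 10 years, then adults
LAYOUT_IN_SPEECH = [1, 4, 5, 19]    # "Layout in speech" counts from Table 1

# One row per age group: [gave layout information, did not].
table = [[yes, size - yes] for yes, size in zip(LAYOUT_IN_SPEECH, GROUP_SIZES)]
chi2, dof = pearson_chi_square(table)
print(f"chi2({dof}, N = {sum(GROUP_SIZES)}) = {chi2:.2f}")
```

With the speech counts, the statistic rounds to the 25.46 reported in the text. Substituting the "Layout in speech or gesture" counts gives the corresponding test for the combined modalities.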

We then examined the quality of the layout information participants gave in speech. The only 8-year-old to give layout information mentioned “rows” without specifying the number of rows, which qualified as a Basic layout; this was the only Basic layout produced in the study. All other participants who gave layout information produced either a Full or Partial layout. We gave each participant a layout quality score as follows: 3 points for a Full layout, 2 points for a Partial layout, 1 point for a Basic layout, and no points for No layout. We then performed an analysis of variance (ANOVA) using layout quality score as the dependent measure and found a main effect of age, F(3,65) = 15.97, p < .001. Post hoc tests using Tukey’s HSD revealed that the spatial quality of the adults’ layout in speech (M = 2.30, SD = 1.14) was higher than that of the 8-year-olds (M = 0.07, SD = 0.26, p < .001), 9-year-olds (M = 0.60, SD = 1.06, p < .001), and 10-year-olds (M = 0.88, SD = 1.36, p < .01). There were no other significant differences between age groups.

Relative locations

There were also developmental differences in the number of locations participants gave in speech. We performed an ANOVA on the number of locations in speech and found a main effect of age, F(3,65) = 6.09, p < .01. Post hoc tests using Tukey’s HSD revealed that adults (M = 3.83, SD = 2.46) conveyed significantly more locations in speech than 8-year-olds (M = 0.93, SD = 1.87, p < .01) and 10-year-olds (M = 1.94, SD = 2.05, p < .05). There were no other significant differences between age groups for locations conveyed in speech.

What did the children say when they did not unambiguously indicate the locations of the animals? Many of the 8- and 9-year-olds (and a few 10-year-olds and adults) listed the animals without specifying the spatial relations among them (e.g., they listed the animals in sequence using words such as “and then,” which gave the order of the animals along the route but not their spatial arrangement). Within the sample, 67% of the 8-year-olds (n = 10), 60% of the 9-year-olds (n = 9), 25% of the 10-year-olds (n = 4), and 9% of the adults (n = 2) conveyed only sequential information, with no information at all about the spatial arrangement of the animals, in their speech. The proportions of participants conveying no spatial relational information differed according to age, χ²(3, N = 69) = 18.10, p < .001. The 8- and 9-year-olds were particularly prone to communicating only sequential information. Significantly more 8-year-olds (10 of 15) communicated nonspatially (i.e., only sequential information) than 10-year-olds (4 of 16), χ²(1, N = 31) = 3.88, p < .05, and adults (2 of 23), χ²(1, N = 31) = 11.57, p < .001. In addition, significantly more 9-year-olds (9 of 15) communicated nonspatially than adults, χ²(1, N = 37) = 9.26, p < .01.

Gesturing and talking about space

Many participants gestured as they described the space. Within the sample, 5 8-year-olds (33%), 10 9-year-olds (67%), 12 10-year-olds (75%), and 22 adults (96%) gestured during their descriptions. We describe how children and adults used their gestures to convey spatial relations, first, with respect to overall layout and, second, with respect to the relative locations of the animals. We then describe individual differences between those who did gesture and those who did not gesture.

Overall layout information

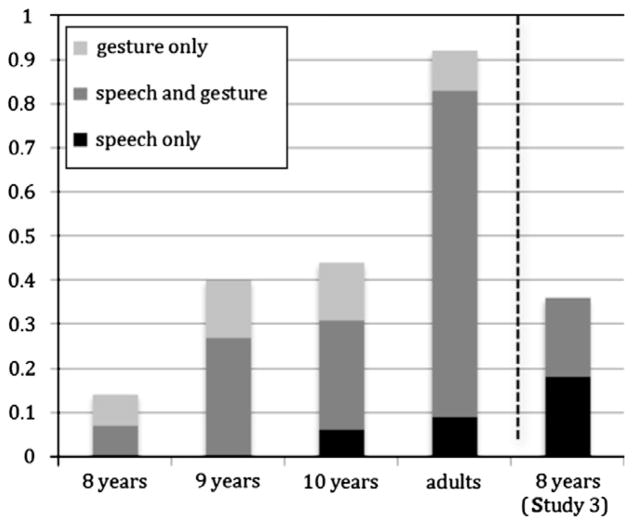

We first calculated the number of participants conveying any type of layout information at all (regardless of quality), taking both speech and gesture into account. Participants who produced all of their layout information in speech are classified in the “speech only” group, and those who produced all of their layout information in gesture are classified in the “gesture only” group. Any participants who produced some layout information in speech and some in gesture were classified in the “speech and gesture” group. Some of these participants produced all of the layout information they gave in both modalities, for example, saying that “there were two rows of cardboard boxes” while tracing two rows in the air with the hands. Others produced some layout information in speech and some in gesture; the information conveyed in one modality was always a subset of the information conveyed in the other, for example, saying that there were two rows of three boxes while gesturing two rows or gesturing a 2 × 3 matrix while saying there were two rows of boxes.
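
The classification rule just described can be sketched as follows. The function name `classify_modality` and the string labels for pieces of layout information are hypothetical; the actual coding was done by hand from the recorded descriptions.

```python
def classify_modality(speech_info, gesture_info):
    """Assign a participant to a modality group from the layout information
    coded in speech and in gesture (each given as a collection of labels)."""
    speech_info, gesture_info = set(speech_info), set(gesture_info)
    if not speech_info and not gesture_info:
        return "none"
    if not gesture_info:
        return "speech only"
    if not speech_info:
        return "gesture only"
    # Some information in each modality, whether identical or a subset.
    return "speech and gesture"


# Hypothetical coded descriptions.
print(classify_modality({"two rows"}, set()))                             # speech only
print(classify_modality({"two rows"}, {"two rows"}))                      # speech and gesture
print(classify_modality({"two rows", "three per row"}, {"two rows"}))     # speech and gesture
print(classify_modality(set(), {"two rows", "three per row"}))            # gesture only
```

Note that a participant counts as "speech and gesture" whenever both modalities carry some layout information, even if one modality conveys only a subset of what the other conveys.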

We first examined the proportion of participants who gave layout information in each age group in either speech or gesture. We separated participants in each age group according to whether or not they gave layout information (in either speech or gesture). As in our first analysis, which focused entirely on speech, we found a reliable difference across groups, χ²(3, N = 69) = 24.53, p < .001 (see Table 1). There were significant differences between adults and each of the younger age groups: 8-year-olds, χ²(1, N = 37) = 23.10, p < .01, 9-year-olds, χ²(1, N = 37) = 11.62, p < .01, and 10-year-olds, χ²(1, N = 38) = 10.54, p < .01. There were no other significant differences between age groups.

Fig. 3 illustrates which modality (or combination of modalities) the participants used to convey layout information. The most striking result in Fig. 3 is how rarely participants of any age conveyed layout information in speech alone or in gesture alone. Most participants who mentioned the layout of the space did so using both gesture and speech. What this means is that including gesture has little effect on how many participants we classify as conveying layout information (without regard to quality); the number of participants over all age groups who conveyed layout information was 29 (42%) when we look only at speech compared with 36 (52%) when we look at both speech and gesture.

Fig. 3.

Proportion of participants in each age group in Study 1 who conveyed layout information of any quality (bars to the left of the vertical line). Participants are classified according to whether they conveyed the layout information only in speech, in both speech and gesture, or only in gesture. The rightmost bar displays comparable data for 8-year-old participants in Study 3 who were encouraged to gesture.

However, including gesture in our analysis does give us a different picture of layout quality. Using a repeated measures ANOVA (with layout quality score as the dependent measure) to compare the first analysis (which focused only on speech) with the second analysis (which included gesture), we found that quality of layout was significantly better when we took gesture into account than when we did not take gesture into account, F(1,65) = 8.98, p < .01. The mean layout quality score was 2.61 (SD = 0.89) with gesture versus 2.30 (SD = 1.15) without gesture for adults; comparable scores were 1.25 (SD = 1.48) versus 0.88 (SD = 1.36) for 10-year-olds, 0.87 (SD = 1.25) versus 0.60 (SD = 1.10) for 9-year-olds, and 0.33 (SD = 0.90) versus 0.07 (SD = 0.26) for 8-year-olds. Planned post hoc comparisons revealed that the layout quality score calculated with gesture was significantly different from the score calculated without gesture for 9-year-olds (p < .05) and 10-year-olds (p < .01) but did not reach significance for adults (p = .11) or 8-year-olds (p = .16). There was also a main effect of age, F(3,65) = 17.59, p < .001. Planned post hoc comparisons revealed significant differences in layout quality between adults and children of all ages when gesture and speech are considered (mean difference > 1.39, p < .001).

Relative locations

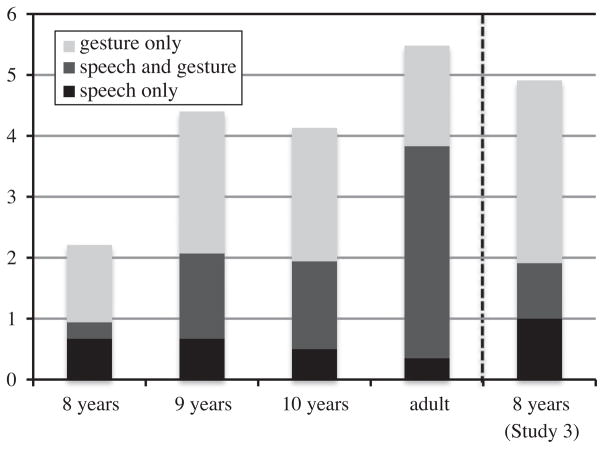

We turn next to the number of locations that participants in each age group conveyed in gesture (with or without the same locations in speech). Using an ANOVA on the number of locations, we found a significant effect of age, F(3,65) = 6.50, p < .01, driven primarily by the 8-year-olds, who produced few locations in gesture. The adults (M = 5.13, SD = 1.91) conveyed significantly more locations in gesture than the 8-year-olds (M = 1.53, SD = 2.47, p < .001). The 9-year-olds (M = 3.73, SD = 2.91) and 10-year-olds (M = 3.65, SD = 2.70) conveyed marginally more locations in gesture than the 8-year-olds (p = .079 and p = .095, respectively). The 9-year-olds, 10-year-olds, and adults did not differ from one another.

We then explored the number of locations conveyed taking both speech and gesture into account. The mean number of locations conveyed was 5.48 (SD = 1.73) with gesture versus 3.83 (SD = 2.46) without gesture for adults; comparable scores were 4.13 (SD = 2.42) versus 1.94 (SD = 2.05) for 10-year-olds, 4.40 (SD = 2.75) versus 2.07 (SD = 1.95) for 9-year-olds, and 2.20 (SD = 2.68) versus 0.93 (SD = 1.87) for 8-year-olds. Using a repeated measures ANOVA (with number of locations as the dependent measure) to compare our earlier analysis (which focused only on speech) with this second analysis (which included gesture), we found that significantly more locations were conveyed when we took gesture into account than when we looked only at speech, F(1,65) = 38.36, p < .001. There was a main effect of age, F(3,65) = 7.28, p < .001, but no interaction, F(3,65) = 0.63, ns. We performed post hoc comparisons to examine the age effect and found that 8-year-olds and 10-year-olds differed significantly from adults (p < .05). Planned post hoc comparisons also revealed that the mean numbers of locations calculated with gesture were significantly different from the mean numbers calculated without gesture for 9-year-olds, 10-year-olds, and adults (p < .01) and were marginally different for 8-year-olds (p = .055).

Fig. 4 presents the mean number of locations conveyed in speech only, speech and gesture, and gesture only by each age group. Note that, as in Fig. 3, relatively little information was conveyed in speech alone by any age group (the dark bars in Fig. 4). However, in contrast to the results shown in Fig. 3, a substantial amount of information was conveyed in gesture alone (the light gray bars in Fig. 4), with 1.65 (30%) of the locations mentioned being conveyed uniquely in gesture for the adults, 2.19 (53%) for the 10-year-olds, 2.33 (53%) for the 9-year-olds, and 1.27 (57%) for the 8-year-olds. What this means is that, unlike layout information (most of which was accessible whether or not we looked at participants’ hands), a substantial amount of the information about the relative spatial locations of the animals could be accessed only if we looked at participants’ gestures.
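
The gesture-only figures follow from the group means reported for the analyses with and without gesture: the difference between the two means is the number of locations conveyed uniquely in gesture, and dividing by the mean with gesture gives its share. The sketch below works through that arithmetic; the names are our own, and because the inputs are already rounded means, a computed percentage may differ by a point from the value reported in the text.

```python
# (mean locations in speech or gesture, mean locations in speech) per group
MEAN_LOCATIONS = {
    "adults": (5.48, 3.83),
    "10-year-olds": (4.13, 1.94),
    "9-year-olds": (4.40, 2.07),
    "8-year-olds": (2.20, 0.93),
}


def gesture_only(with_gesture, in_speech):
    """Return locations conveyed uniquely in gesture and their share of all
    locations conveyed in either modality."""
    extra = with_gesture - in_speech
    return extra, extra / with_gesture


for group, (with_gesture, in_speech) in MEAN_LOCATIONS.items():
    extra, share = gesture_only(with_gesture, in_speech)
    print(f"{group}: {extra:.2f} locations only in gesture ({share:.0%})")
```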

Fig. 4.

Mean number of spatial locations given only in speech, in both speech and gesture, or only in gesture by each age group in Study 1 (bars to the left of the vertical line) and by 8-year-olds who were encouraged to gesture in Study 3 (bar to the right of the vertical line).

Individual differences

As described earlier, many participants gestured while describing the space: 5 (of 15) 8-year-olds (33%), 10 (of 15) 9-year-olds (67%), 12 (of 16) 10-year-olds (75%), and 22 (of 23) adults (96%). We examined whether participants who gestured used speech differently than those who did not gesture.

We performed two ANOVAs: one on the layout score in speech and one on the number of relative locations communicated in speech (with age and gesture use as independent variables). Regardless of age, participants who gestured gave higher quality layout information in their speech, F(1,61) = 7.74, p < .01. On average, those who did not gesture had a speech layout score of 0.10 (SD = 0.45), whereas those who gestured had a speech layout score of 1.53 (SD = 1.40). Similarly, participants who gestured communicated more relative locations in their speech than those who did not gesture, F(1,61) = 4.27, p < .05; there was again no effect of age. On average, those who did not gesture communicated 0.90 locations in speech (SD = 1.98), whereas those who gestured communicated 2.92 locations in speech (SD = 2.55). Overall, those participants who gestured gave substantially more spatial information in their speech than those who did not gesture.

Model construction task

We also asked participants to construct a model of the space using photographs of the animals. This task allowed us to determine whether participants who failed to convey the locations of the animals in their descriptions did so because they did not know the relative locations. With the exception of one 9-year-old who switched two cards, all participants placed all six cards in the correct relative positions. Using an ANOVA, we found no significant differences across ages on this task, F(3,65) = 1.21, ns.

We performed two analyses to examine how closely the card sorting task reflected participants’ experience in moving through the space. We first examined how likely participants were to place the cards down in the same order that they had seen the animals in the space. Overall, 17% of participants placed the cards down following the order in which they had experienced them: 27% of 8-year-olds (n = 4), 13% of 9-year-olds (n = 2), 25% of 10-year-olds (n = 4), and 9% of adults (n = 2). Using a chi-square, we found no differences in these proportions by age, χ²(3, N = 69) = 2.93, ns.

We also examined how often participants shuffled the cards, which could be taken as a sign that the task was too difficult to accomplish when the cards were given in random order. We performed a chi-square on the proportion of participants who shuffled cards and found a difference by age, χ²(3, N = 69) = 15.54, p < .01. Within the sample, 67% of 8-year-olds (n = 10), 67% of 9-year-olds (n = 10), 38% of 10-year-olds (n = 3), and 15% of adults (n = 3) shuffled the cards while completing the task. The adults differed from both the 8- and 9-year-olds (ps < .01). There were no other differences by age.

Discussion

Our analyses of children’s speech replicate previous work on children’s descriptions of space (Blades & Medlicott, 1992; Gauvain & Rogoff, 1989), showing that 8- and 9-year-olds, and even some 10-year-olds, do not typically convey spatial relations in their speech. However, examining children’s gestures leads to a different interpretation: Many of the older children (and the adults) exhibit a richer understanding of space when both gesture and speech are taken into account.

Participants often used gesture to communicate spatial information. They rarely expressed information about spatial layout in speech without also expressing the information in gesture. Moreover, speech and gesture together conveyed higher quality information about layout than speech on its own, highlighting the important interplay between speech and gesture for this type of spatial information. Similarly, when conveying information about the relative locations of the objects in space, participants conveyed more information in gesture and speech than in speech alone. A substantial amount of this type of spatial information appeared uniquely in gesture and not in speech for all participants; across age groups, 30% to 57% of the spatial locations conveyed appeared only in gesture. There were also individual differences between participants who gestured and those who did not gesture; namely, those who gestured conveyed more spatial information in speech than those who did not gesture. Thus, it seems that those who communicate spatial information do so in both modalities.

However, there were developmental differences in how children and adults described the space, even considering both modalities. The 8-year-olds, in particular, described the layout less often than the other participants and provided fewer spatial locations for the animals. They also often described the space sequentially using speech, and conveyed fewer relations in gesture, than the other age groups. The model construction results are important in helping us to constrain possible explanations for this developmental difference. One possible explanation for the fact that the 8-year-olds conveyed few spatial locations in speech or gesture compared with the older age groups is that they simply did not know the relations among the locations. However, their success on the model task indicates that the 8-year-olds had some knowledge of the space: although they shuffled the cards more than the older age groups, they were still able to place cards of all the animals in the correct relative positions.

This interpretation rests on an important assumption—that the model task provides a valid measure of children’s knowledge about the space. However, one could argue that the task was not a sufficiently rigorous assessment of the children’s knowledge because they had access to all of the cards and could shuffle them so that they could place the cards following the order in which they had experienced the animals. The majority of 8- and 9-year-olds shuffled the cards, which could reflect a search to put the cards in the order experienced. Thus, a child might be able to perform well on the model construction task even if her knowledge of the space included only the route she had followed through the space. We addressed this concern with respect to the youngest children, the 8-year-olds, in Study 2.

Study 2

We used a more demanding version of the model construction task in the second study. We handed the cards to participants one at a time and in random order. If participants knew the relations among the animals in the space, they should be able to place any card down in relation to the other cards on the table. In other words, they should be able to reconstruct the space even if the cards representing animals at the beginning of the route had not yet been placed on the table.

Method

Participants

Participants were 20 8-year-olds (M = 102.6 months, range = 98–108) and 32 adults. Children were recruited as in Study 1. Adults were undergraduate students who received class credit for their participation.

Materials

Materials were the same as those used in Study 1.

Procedure

The procedure for the learning phase was identical to the procedure used in Study 1, with the exception that participants were handed each animal’s card one at a time in a random order and were asked to place each card (representing an animal’s box) in its location in a space on the table representing the room. Half of the participants in each age group were randomly assigned to each of two model construction groups. In the “all cards” group, participants were allowed to keep the cards on the table where they were placed. Eventually, all of the cards were on the table at the same time. In the “single card only” group, the experimenter took away each card after the participant placed it on the table. Therefore, only one card was on the table at a time.

Model construction performance was coded for accuracy. Participants received 1 point for each card that they placed on the table in the correct relative position, with a maximum score of 6.

Results and discussion

Participants again performed nearly perfectly. The 8-year-olds (mean score = 5.85, SD = 0.37) and the adults (mean score = 5.95, SD = 0.21) in both the all cards and single card only groups placed the cards directly in their correct relative positions on the table. There was no significant effect of either model construction task type, F(1,48) = 0.086, ns, or age, F(1,48) = 0.019, ns. Fully 90% of the 8-year-olds and 94% of the adults placed all of the cards in the correct relative positions on the table.

Study 2 used a stringent test of participants’ understanding of spatial relations. To succeed on the model construction tasks in this study, participants needed to have simultaneous mental access to each location within the playhouse. The 8-year-olds’ successful performance on these tasks indicated that they had formed an integrated, highly relational knowledge of the space.

These findings raise an important question: Why did the 8-year-olds in Study 1 provide so little spatial information in their descriptions in either speech or gesture? The results of Study 2 allowed us to rule out the possibility that the children simply did not know the spatial relations. In Study 3, we examined the possibility that 8-year-olds who are prompted to use gesture when communicating spatial information would provide more complete descriptions than when not prompted to use gesture. Given that gesture is well-suited to conveying spatial information and that it often reflects learners’ first insight into solving a problem (e.g., Alibali & Goldin-Meadow, 1993; Church & Goldin-Meadow, 1986; Perry et al., 1988), we predicted that encouraging children to gesture could help them to communicate more of what they know about the space than they otherwise would.

Study 3

Studies 1 and 2 showed that 8-year-olds had knowledge of spatial relations but did not spontaneously communicate this information when asked to do so. In the third study, we explicitly asked children to use their hands while describing the space. Although both gesture and speech can be used to convey spatial relations, we predicted that using the hands could be easier for children because it does not require a full lexicon of relational phrases and avoids the linearization problem discussed earlier. The fact that participants in Study 1 conveyed many of the animals’ locations in gesture and not in speech (the light gray bars in Fig. 4) suggests that gesture is well-suited to the task of conveying individual locations to an interlocutor.

We asked whether telling 8-year-olds to use their hands when describing the space would lead them to provide more spatial information than they would otherwise have provided. We examined how much information children conveyed in speech and gesture, again concentrating on two types of spatial information: layout information and information about the relations among the animals’ locations.

Method

Participants

Participants were 11 8-year-olds (5 girls and 6 boys, mean age = 101.32 months, range = 96–106). All participants were recruited through direct mailings to their parents.

Procedure

The procedure was the same as in Study 1, with one important modification: Children were encouraged to gesture during the test phase. The experimenter told participants to “use your hands to show where the animals were” when describing the animals’ locations to their parents.

Results and discussion

All 11 children followed the instruction to gesture. Whereas 9 used their gestures to convey spatial information, 2 used their hands only to count the animals while naming them.

Overall layout information

We first conducted an analysis looking only at speech and found that children who were encouraged to gesture tended to be more likely to convey spatial layout information in speech. Within the sample in Study 3, 4 of 11 children (36%) conveyed layout information in speech compared with 1 of 15 children (7%) in Study 1, although the difference was only marginally significant (p = .08, Fisher exact, one-tailed). When we included gesture in the analysis, we found no difference between the analyses focusing on speech alone versus both speech and gesture (see the rightmost bar in Fig. 3); all 4 of the children in Study 3 who produced layout information when asked to gesture produced that information in speech (2 with gesture and 2 without gesture). Thus, if our invitation to gesture had an effect on the children’s production of layout information, the effect was on speech as much as gesture.
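
The one-tailed Fisher exact probability for this comparison can be recomputed directly from the hypergeometric distribution. The sketch below is a minimal illustration using only the Python standard library; the function name `fisher_one_tailed` is our own, and the counts are those given above (4 of 11 children in Study 3 versus 1 of 15 in Study 1).

```python
from math import comb


def fisher_one_tailed(a, b, c, d):
    """One-tailed Fisher exact p-value for the 2 x 2 table [[a, b], [c, d]]:
    the probability, with margins fixed, of a count in cell `a` at least as
    large as the one observed."""
    row1, col1, n = a + b, a + c, a + b + c + d
    upper = min(row1, col1)
    favourable = sum(
        comb(row1, k) * comb(n - row1, col1 - k) for k in range(a, upper + 1)
    )
    return favourable / comb(n, col1)


# Study 3: 4 gave layout in speech, 7 did not; Study 1: 1 did, 14 did not.
p = fisher_one_tailed(4, 7, 1, 14)
print(f"p = {p:.3f}")
```

The result is approximately .08, in line with the marginal difference reported above.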

In terms of quality of layout information, we first looked at speech alone and found that the children in Study 3 produced significantly higher quality layout descriptions in speech (M = 0.73, SD = 1.01) than the children in Study 1 (M = 0.07, SD = 0.26), F(1,24) = 2.77, p < .05. This difference was no longer significant when we took gesture into account; the children in Study 3 had a mean layout score of 0.81 (SD = 1.17) when both speech and gesture were coded compared with the children in Study 1 whose layout score in speech and gesture was 0.33 (SD = 0.90), F(1,24) = 1.49, ns.

Relative locations

We next examined the number of spatial locations children produced in speech and found that the children in Study 3 mentioned more locations in speech (M = 1.91, SD = 2.07) than the children in Study 1 (M = 0.93, SD = 1.87), but the difference was not significant, F(1,24) = 6.04, p = .221.

However, when we included gesture, we found that the 8-year-olds in Study 3 communicated significantly more relative spatial locations in speech and/or gesture (M = 4.91, SD = 1.92) than the 8-year-olds in Study 1, who were not prompted to gesture (M = 2.20, SD = 2.68), F(1,24) = 8.14, p < .01. Focusing on the children in Study 3, we found that the mean number of locations children conveyed with gesture was 3.91 (SD = 2.51) compared with 1.00 (SD = 1.50) without gesture, F(1,24) = 6.80, p < .05. In fact, when we included gesture in our analysis, we found that the 8-year-olds in Study 3, who were encouraged to gesture, mentioned nearly as many spatial locations in speech and/or gesture as the adults in Study 1 (M = 4.91 for the children in Study 3 [see the rightmost bar in Fig. 4] vs. M = 5.13 for the adults in Study 1).
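
A between-groups F statistic of this kind can be recovered from group sizes, means, and standard deviations alone. The following sketch, with a function name of our own choosing, applies the standard one-way ANOVA formulas to the Study 3 versus Study 1 comparison of locations in speech and/or gesture. The Study 1 values (n = 15, M = 2.20, SD = 2.68) are taken from the Study 1 results; small discrepancies from published values are expected because the inputs are rounded.

```python
def f_from_summary(groups):
    """One-way ANOVA F statistic from (n, mean, sd) tuples, one per group.

    Returns (F, df_between, df_within).
    """
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * mean for n, mean, _ in groups) / n_total
    ss_between = sum(n * (mean - grand_mean) ** 2 for n, mean, _ in groups)
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


study3 = (11, 4.91, 1.92)   # 8-year-olds encouraged to gesture
study1 = (15, 2.20, 2.68)   # 8-year-olds not prompted to gesture

f, df1, df2 = f_from_summary([study3, study1])
print(f"F({df1},{df2}) = {f:.2f}")
```

With these inputs the statistic comes out at about 8.14 on 1 and 24 degrees of freedom.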

General discussion

When speakers communicated spatial information, they often did so using gesture. Speakers rarely conveyed information in speech without also communicating this information in gesture. The converse, however, was not true. Speakers conveyed a great deal of information in gesture that they did not communicate in speech, particularly information about relations among locations, although not spatial layout.

Our findings also indicate an important relation between spatial gesture and spatial speech. Speakers who used gesture tended to give more spatial information in their speech than those who did not use gesture. Moreover, the 8-year-olds in Study 3 who were encouraged to gesture conveyed higher quality layout information in speech than the 8-year-olds in Study 1 who were not encouraged to gesture. Taken together, these results suggest that using gesture may lead to more and better spatial speech. Therefore, gesturing may encourage the development of spatial communication (in speech as well as gesture), prompting children to convey more spatial information than when they use speech alone.

Although we found that gesture was important for spatial communication, not all speakers used it. There were developmental differences in how speakers communicated spatial information. In particular, 8-year-olds were significantly less likely than adults to convey information about spatial layout and object locations in speech or gesture, with 9- and 10-year-olds falling in between. There are at least two possible sources of the developmental differences we found in spatial communication: knowing how to communicate about spatial relations and knowing when to communicate about spatial relations. We discuss each of these potential explanations in turn.

Knowing how to communicate about spatial relations

We began our study with the following question: Does widening the lens to include gesture as well as speech shed light on how children and adults communicate spatial information? The answer to this question is yes. Gesture can combine with speech to create higher quality information (as in spatial layouts), or it can carry a great deal of the information on its own (as in the relative spatial locations). For both children and adults, taking gesture and speech into account revealed knowledge of more spatial information than focusing on speech alone revealed. This spatial information is there for listeners, assuming that listeners pay attention to speakers’ gestures, an assumption that has a great deal of support (e.g., Goldin-Meadow & Sandhofer, 1999; Kendon, 1994). In fact, in the descriptions produced in our study, very little spatial information was expressed uniquely in speech (i.e., the black bars in Figs. 3 and 4 are relatively short). Most of the information was conveyed either in both gesture and speech (the dark gray bars) or uniquely in gesture (the light gray bars). Thus, a good strategy for listeners interested in the spatial information speakers convey is to pay attention to speakers’ hands.

The youngest children in our study, for the most part, did not provide their listeners with essential spatial information even though it was clear (from the model construction task) that they themselves knew the information. However, they were able to convey more information (particularly about spatial locations) when they were asked to gesture than when they were not prompted to gesture. Previous work has shown that telling children to gesture when explaining a task can change their understanding of the task. For example, children who are told to gesture on a math task, whether to produce whatever gestures they like (Broaders et al., 2007) or to produce particular gestures taught to them by the experimenter (Cook, Mitchell, & Goldin-Meadow, 2008; Goldin-Meadow et al., 2009), are more likely to learn how to solve the math problems correctly than children who are not instructed to gesture. These findings suggest that the act of gesturing itself may play a role in bringing about change.

In our study, the change that occurred when we encouraged children to gesture was not a change in their understanding of spatial relations (8-year-olds understood how the animals were arranged) but rather a change in how they conveyed that understanding. Encouraging them to gesture improved their ability to communicate; when told to gesture, the 8-year-olds in Study 3 conveyed significantly more information about spatial locations than the 8-year-olds in Study 1. Much of the additional information about the relative locations of the animals was conveyed only in gesture and not in speech. Gesture provided a means by which children could communicate what they knew about the space. However, why did 8-year-olds rarely use gesture unless prompted? It is possible that children knew how they could communicate about space but did not know when it was necessary to do so.

Knowing when it is necessary to communicate about spatial relations

On this account, children knew how to communicate the spatial relations but did not understand that the situation called for doing so. Both children and adults knew the spatial layout of the animals (as evidenced by their performance on the model construction task) as well as the sequence of locations they experienced. For adults, the request to tell someone about the space brought to mind the spatial layout; therefore, they described the spatial relations among the animals in speech and gesture to their listener. In contrast, for children, the request to tell someone about the space may have been interpreted as a request to recount the sequential ordering in which the animals were experienced. It might not have occurred to the 8-year-olds that the spatial layout would have been useful information for their listeners.

Young children often are not good at providing the information another person needs to adequately solve a task (e.g., Flavell, Botkin, Fry, Wright, & Jarvis, 1968; Flavell, Speer, Green, & August, 1981; Krauss & Glucksberg, 1969). Communicating about space, like many other communicative tasks, requires matching the information that the speaker has about the space to the listener’s needs. Although perspective taking often improves with age (see Krauss & Glucksberg, 1969), even 8- to 10-year-olds have some difficulty with spatial perspective taking (Presson, 1980; Roberts & Aman, 1993). For example, children have some difficulty in differentiating “right” and “left” from another person’s perspective and in imagining what an array would look like from a different angle. Doing these tasks well may require a level of metacognitive or metarepresentational knowledge that develops during the elementary school years (Chandler & Sokol, 1999; Kuhn, Amsel, & O’Loughlin, 1988). Consequently, having encoded or inferred information about the relations among locations is not enough to ensure that children will spontaneously provide this information when describing a space to another person. The adults in our study spontaneously thought to communicate the layout of the space more often than the children did. Doing so provides information that is particularly useful to a listener who has not navigated the space. In contrast, many of the youngest children simply communicated the information as they had experienced it, as an ordered series of locations, with no apparent thought about the listener’s perspective.

This possibility is consistent with an emerging theoretical perspective regarding the development of spatial cognition. Earlier theoretical perspectives (e.g., Piaget, Inhelder, & Szeminska, 1960; Siegel & White, 1975) suggested that development involves replacing a relatively simple spatial representation with a more complex or sophisticated representation. In contrast, more recent theories (e.g., Gouteux & Spelke, 2000; Newcombe & Huttenlocher, 2000; Uttal, Sandstrom, & Newcombe, 2006) suggest that children may possess multiple forms of spatial representations at an early age and that development instead involves learning when and how to use these representations. For example, Uttal, Sandstrom, et al. (2006) found that children as young as 4 years represented the location of a hidden toy both in terms of the distance and angle to a single landmark and in terms of the relation between two landmarks.

In the current case, our results suggest that 8-year-olds are capable of representing survey-based information (as reflected in their responses to the model construction task) but that, when asked to talk about the space, they represent mainly route-based information (see also Sekine, 2009). In the model construction task, children were asked to use the cards to stand for the animals (and, implicitly, to use the table to stand for the room). This external support may have prompted children to access (or even form) a survey representation. In contrast, the communication task did not provide an obvious external support other than gesture, which could explain why more children conveyed survey (layout) information in their communications when encouraged to gesture. Thus, our findings suggest that gesture could be used as a way to prompt children to use a more spatially integrated representation than they would have otherwise thought to use.

In summary, we suggest that the youngest children (a) might not have known to spontaneously use their hands when describing spatial information and (b) may have accessed a linear (rather than a survey) representation during the description task because no external supports prompted them to do otherwise. Encouraging them to gesture led them to use their hands, which in turn provided support for accessing a more spatially integrated representation. As a result, children who were encouraged to gesture communicated more spatial information (including information in speech). Taken together with the finding that participants of all ages in our study communicated more spatial information in speech when they gestured, these results suggest that gesture can serve as a mechanism for improving spatial communication.

Conclusions

Our results demonstrate that gesture looms large in spatial descriptions. Gesture can serve either a synergistic or unique function; speech produced along with gesture conveyed higher quality descriptions of spatial layout than speech produced without gesture, and gesture contributed unique information about spatial locations not found in the accompanying speech. One of the striking results of our study is how rarely spatial information was conveyed (by children or adults) in speech alone; speakers conveyed spatial information either in both speech and gesture (for spatial layouts and spatial locations) or in gesture and not in speech (for spatial locations).

Our results also reveal that 8-year-olds are capable of using spatial gestures in adult-like ways but that they do so infrequently. We suggest that they do not communicate spatial information that they have represented because they do not know when it is necessary to do so. This problem can be solved by telling children to gesture because gesture naturally provides support for accessing the more sophisticated spatial representations that young children appear to have. Thus, encouraging gesture can improve the overall quality of spatial communication in children and perhaps in adults.

Acknowledgments

This research was funded by the Spatial Intelligence and Learning Center (National Science Foundation [NSF], SBE0541957). We thank Bridget O’Brien for her assistance in coding and Dedre Gentner for her thoughts and comments throughout the preparation of this manuscript. We also thank the students, children, and parents who participated.

Footnotes

1. The simple instructions used with the children were also used with the adults with this addition: “This study has been conducted with children. We are asking adults to participate in order to compare them to children, but we need to keep the procedures as similar as possible. Therefore, some aspects of this study may seem simple or childish to you, but I ask that you bear with us. Then again, just because this study was designed for children does not mean that it is easy.”

2. Among the interlocutors, 62% did not ask any questions, 7% asked nonspatial questions (e.g., “What color were the boxes?”), 14% asked the speaker to repeat the information, and 16% asked spatial clarification questions (e.g., clarifying whether “next to” meant right or left).

3. The linearization problem endemic to speech might be more severe in a more complex layout. If so, we might find that participants do indeed convey more layout information uniquely in gesture in these spatially complex environments.

4. Some of the difficulty that the young children had in conveying layout information may have come from the fact that they were too short to have a good view of the layout (the boxes were 1.22 m tall). Note, however, that encouraging the children to gesture did lead to an increase in layout information in speech, suggesting that speaker height was not the sole factor in determining whether layout information is conveyed.

References

- Alibali MW, Goldin-Meadow S. Gesture–speech mismatch and mechanisms of learning: What hands reveal about the mind. Cognitive Psychology. 1993;25:486–523. doi: 10.1006/cogp.1993.1012.

- Allen GL, Kirasic KC, Beard RL. Children’s expressions of spatial knowledge. Journal of Experimental Child Psychology. 1989;48:114–130. doi: 10.1016/0022-0965(89)90043-x.

- Blades M, Medlicott L. Developmental differences in the ability to give route directions from a map. Journal of Environmental Psychology. 1992;12:175–185.

- Broaders S, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture reveals implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136:539–550. doi: 10.1037/0096-3445.136.4.539.

- Brunye TT, Rapp DN, Taylor HA. Representational flexibility and specificity following spatial descriptions of real-world environments. Cognition. 2008;108:418–443. doi: 10.1016/j.cognition.2008.03.005.

- Cassell J, McNeill D. Gesture and the poetics of prose. Poetics Today. 1991;12:375–404.

- Chandler MJ, Sokol BW. Representation once removed: Children’s developing conceptions of representational life. In: Sigel IE, editor. Development of mental representation: Theories and applications. Mahwah, NJ: Lawrence Erlbaum; 1999. pp. 201–230.

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23:43–71. doi: 10.1016/0010-0277(86)90053-3.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesture makes learning last. Cognition. 2008;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.

- Davies C, Uttal DH. Map use and the development of spatial cognition. In: Plumert JM, Spencer JP, editors. The emerging spatial mind. New York: Oxford University Press; 2007. pp. 219–247.

- Emmorey K, Tversky B, Taylor HA. Using space to describe space: Perspective in speech, sign, and gesture. Spatial Cognition and Computation. 2000;2:157–180.

- Enfield NJ. The body as a cognitive artifact in kinship representations: Hand gesture diagrams by speakers of Lao. Current Anthropology. 2005;46:51–81.

- Flavell JH, Botkin PT, Fry CL, Wright JC, Jarvis PE. The development of role-taking and communication skills in children. New York: John Wiley; 1968.

- Flavell JH, Speer JR, Green FL, August DL. The development of comprehension monitoring and knowledge about communication. Monographs of the Society for Research in Child Development. 1981;46(5) Serial No. 192.

- Garber P, Alibali MW, Goldin-Meadow S. Knowledge conveyed in gesture is not tied to the hands. Child Development. 1998;69:75–84.

- Gauvain M, Rogoff B. Ways of speaking about space: The development of children’s skill in communicating spatial knowledge. Cognitive Development. 1989;4:295–307.

- Goldin-Meadow S. Hearing gesture: How our hands help us think. Cambridge, MA: Harvard University Press; 2003.

- Goldin-Meadow S, Cook SW, Mitchell ZA. Gesturing gives children new ideas about math. Psychological Science. 2009;20:267–272. doi: 10.1111/j.1467-9280.2009.02297.x.

- Goldin-Meadow S, Sandhofer CM. Gesture conveys substantive information about a child’s thoughts to ordinary listeners. Developmental Science. 1999;2:67–74.

- Gouteux S, Spelke E. Children’s use of geometry and landmarks to reorient in an open space. Cognition. 2000;81:119–148. doi: 10.1016/s0010-0277(01)00128-7.

- Hazen NL, Lockman JJ, Pick HL. The development of children’s representations of large-scale environments. Child Development. 1978;49:623–636.

- Iverson JM. How to get to the cafeteria: Gesture and speech in blind and sighted children’s spatial descriptions. Developmental Psychology. 1999;35:1132–1142. doi: 10.1037//0012-1649.35.4.1132.

- Iverson JM, Goldin-Meadow S. What’s communication got to do with it? Gesture in children blind from birth. Developmental Psychology. 1997;33:453–467. doi: 10.1037//0012-1649.33.3.453.

- Kendon A. Do gestures communicate? A review. Research on Language and Social Interaction. 1994;27:175–200.

- Krauss RM, Glucksberg S. The development of communication: Competence as a function of age. Child Development. 1969;40:255–266.

- Kuhn D, Amsel E, O’Loughlin M. The development of scientific thinking skills. San Diego: Academic Press; 1988.

- Levelt WJM. The speaker’s linearization problem. Philosophical Transactions of the Royal Society of London B: Biological Sciences. 1981;295:305–314.

- Levelt WJM. Linearization in describing spatial networks. In: Peters S, Saarinen E, editors. Processes, beliefs, and questions: Essays on formal semantics of natural language and natural language processing. Dordrecht, Netherlands: Reidel; 1982. pp. 199–220.

- McNeill D. Gesture and thought. Chicago: University of Chicago Press; 2005.

- Newcombe NS, Huttenlocher J. Making space: The development of spatial representation and reasoning. Cambridge, MA: MIT Press; 2000.

- Perry M, Church RB, Goldin-Meadow S. Transitional knowledge in the acquisition of concepts. Cognitive Development. 1988;3:359–400.

- Piaget J, Inhelder B. The child’s conception of space. London: Routledge; 1956.

- Piaget J, Inhelder B, Szeminska A. The child’s conception of geometry. New York: Norton; 1960.

- Plumert JM, Hawkins AM. Biases in young children’s communication about spatial relations: Containment versus proximity. Child Development. 2001;72:22–36. doi: 10.1111/1467-8624.00263.

- Plumert JM, Pick HL, Kintsch AS, Wegestin D. Locating objects and communicating about locations: Organizational differences in children’s searching and direction-giving. Developmental Psychology. 1994;30:443–453.

- Presson CC. Spatial egocentrism and the effect of an alternate frame of reference. Journal of Experimental Child Psychology. 1980;29:391–402. doi: 10.1016/0022-0965(80)90102-2.

- Rauscher FH, Krauss RM, Chen Y. Gesture, speech, and lexical access: The role of lexical movements in speech production. Psychological Science. 1996;7:226–231.

- Roberts RJ, Aman CJ. Developmental differences in giving directions: Spatial frames of reference and mental rotation. Child Development. 1993;64:1258–1270.

- Siegel AW, White SH. The development of spatial representations of large-scale environments. In: Reese HW, editor. Advances in child development and behavior. Vol. 10. New York: Academic Press; 1975. pp. 9–55.